Abstract

This paper presents a modification of the q-BFGS method for nonlinear unconstrained optimization problems. For this modification, we use a simple symmetric positive definite matrix and propose a new q-quasi-Newton equation, which is close to the ordinary q-quasi-Newton equation in the limiting case. The method uses only first-order q-derivatives to build an approximate q-Hessian over a number of iterations. The step length is computed with the q-Armijo–Wolfe line search conditions, which guarantee that the objective function value decreases. The modified q-BFGS method preserves the global convergence properties of the q-BFGS method without the convexity assumption on the objective function. Numerical results on a set of test problems show an improvement over the q-BFGS and BFGS methods in terms of iterations, function evaluations, and gradient evaluations. Moreover, we depict the numerical results through performance profiles.

MSC:

65K10; 05A30; 90C26

1. Introduction

There are many methods for solving nonlinear unconstrained minimization problems [1,2,3,4,5], most of which are variants of the Newton and quasi-Newton methods. Newton’s method requires the Hessian matrix, which is sometimes difficult to calculate, whereas quasi-Newton methods use an approximation of the Hessian. Over time, several attempts have been made to improve the effectiveness of quasi-Newton methods. The BFGS (Broyden–Fletcher–Goldfarb–Shanno) method is a quasi-Newton method for solving nonlinear unconstrained optimization problems, developed by Fletcher [6], Goldfarb [7], Shanno [8], and Broyden [9]. Since the 1970s, the BFGS method has become popular and is considered an effective quasi-Newton method. Some researchers have established that the BFGS method achieves global convergence under the assumption that the objective function is convex. Mascarenhas [10] showed with an example that the standard BFGS method with exact line search can fail for non-convex functions. Using an inexact line search, Li and Fukushima [11,12] established that modified BFGS methods achieve global convergence without the convexity assumption on the objective function.

Quantum calculus (q-calculus) is a branch of mathematics that does not require limits to define q-derivatives; therefore, it is also known as calculus without limits. In quantum calculus, we can obtain the q-derivative of a non-differentiable function by replacing the classical derivative with the q-difference operator, and in the limit q → 1 the q-derivative reduces to the classical derivative [13]. Since the 20th century, quantum calculus has linked physics [14] and mathematics [15], with applications ranging from statistical mechanics [16] and quantum theory [17] to hypergeometric functions and number theory [14]. Quantum analysis dates back to the 1740s, when Euler wrote in Latin about the theory of partitions, also known as additive analytic number theory.

At the beginning of the 20th century, Jackson generalized the concept of the classical derivative in the context of q-calculus; the result is known as Jackson’s derivative, the q-derivative operator, the q-difference operator, or simply the q-derivative [18]. He systematically developed quantum calculus based on the pioneering work of Euler and Heine. Subsequent work introduced q-analogues of functions, mean value theorems [19], Taylor’s formula and its remainder [20,21], fractional integrals [22], integral inequalities, and generalizations of series in the context of q-calculus [23]. Soterroni et al. [24] first introduced the q-gradient vector, which is obtained by replacing the classical first-order partial derivatives with the first-order partial q-derivatives given by the q-difference operator.

Soterroni et al. [24] were the first to use the q-derivative in unconstrained optimization, establishing a q-variant of the steepest descent method. Later, the q-gradient method for global optimization was introduced [25]. In recent years, several authors have developed numerical techniques based on q-calculus for solving nonlinear unconstrained optimization problems [26,27,28]. These methods use the q-gradient instead of the classical gradient because it allows the descent direction to be chosen from a broader set of directions, which can help the method converge rapidly.

Optimization also plays a crucial role in chemical science. In this field, optimization methods have been used to minimize energy consumption in plants, design optimal fluid flow systems, optimize product concentration and reaction time, and optimize separation processes in plants [29,30,31]. Some authors [32,33] have shown that the BFGS method is systematically superior in obtaining stable molecular geometries, reducing the gradient norm in a monotonic fashion. Similarly, the modified q-BFGS algorithm could be used to find stable molecular geometries of large molecules.

In this paper, we modify the q-BFGS method for nonlinear unconstrained optimization problems. For this modification, we propose a new q-quasi-Newton equation with the help of a positive definite matrix; in the limiting case, the new q-quasi-Newton equation is close to the ordinary q-quasi-Newton equation. Instead of calculating the q-Hessian matrices, we approximate them using only the first-order q-derivative of the function. We use an independent parameter and the quantum-calculus-based q-Armijo–Wolfe line search [34] to ensure that the objective function value decreases. The use of the q-gradient in this line search helps the iterates escape from local minima and move toward the global minimum. The proposed method is globally convergent without the convexity assumption on the objective function. We then present numerical results on a set of test problems to compare the new method with existing approaches, and we depict these results through performance profiles.

The organization of this paper is as follows. In Section 2, we recall essential preliminaries related to q-calculus and the BFGS method. In Section 3, we present a modified q-quasi-Newton equation, use it to derive a modified q-BFGS algorithm, and discuss its properties. In Section 4, we establish the global convergence of the modified q-BFGS method. Section 5 presents numerical results, and Section 6 concludes the paper.

2. Preliminaries

In this section, we review some important definitions and other prerequisites from q-calculus and nonlinear unconstrained optimization.

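Let $q \in (0, 1)$. A q-complex number is denoted by $[a]_q$ and defined as follows [14]:

$$[a]_q = \frac{1 - q^a}{1 - q}, \quad a \in \mathbb{C}.$$

A q-natural number is defined as follows [13]:

$$[n]_q = 1 + q + q^2 + \cdots + q^{n-1}, \quad n \in \mathbb{N}.$$

In q-calculus, the q-factorial [14] of a number $n$ is denoted by $[n]_q!$ and defined as follows:

$$[n]_q! = [1]_q\,[2]_q \cdots [n]_q, \quad n \in \mathbb{N},$$

and

$$[0]_q! = 1.$$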
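These definitions can be checked numerically. The following minimal Python sketch assumes the standard forms $[a]_q = (1 - q^a)/(1 - q)$, $[n]_q = 1 + q + \cdots + q^{n-1}$ and $[n]_q! = [1]_q \cdots [n]_q$; the function names `q_number`, `q_natural` and `q_factorial` are our own and purely illustrative. It shows that the closed form and the finite sum agree, and that both quantities approach $n$ and $n!$ as $q \to 1$.

```python
def q_number(a, q):
    """q-number [a]_q = (1 - q**a) / (1 - q), valid for q != 1."""
    return (1.0 - q ** a) / (1.0 - q)


def q_natural(n, q):
    """q-natural number [n]_q = 1 + q + ... + q**(n - 1)."""
    return sum(q ** k for k in range(n))


def q_factorial(n, q):
    """q-factorial [n]_q! = [1]_q [2]_q ... [n]_q, with [0]_q! = 1."""
    result = 1.0
    for k in range(1, n + 1):
        result *= q_natural(k, q)
    return result


print(q_number(4, 0.5), q_natural(4, 0.5))  # 1.875 1.875
print(q_natural(5, 0.999999), q_factorial(5, 0.999999))  # close to 5 and 120
```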

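The q-derivative [18] of a real-valued continuous function $f: \mathbb{R} \to \mathbb{R}$, provided that f is differentiable at 0, is denoted by $D_q f$ and defined as follows:

$$D_q f(x) = \begin{cases} \dfrac{f(x) - f(qx)}{(1 - q)\,x}, & x \neq 0, \\[2mm] f'(0), & x = 0. \end{cases}$$

If f is differentiable on $\mathbb{R}$, then in the limiting case ($q \to 1$) the q-derivative equals the classical derivative, i.e., $\lim_{q \to 1} D_q f(x) = f'(x)$.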
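A minimal sketch of the Jackson q-derivative follows, assuming the piecewise definition $D_q f(x) = (f(x) - f(qx))/((1-q)x)$ for $x \neq 0$ and $D_q f(0) = f'(0)$. The name `q_derivative` is hypothetical, and approximating $f'(0)$ by a central difference is our own illustrative choice. For $f(x) = x^3$ the q-derivative is $[3]_q x^2 = (1 + q + q^2)x^2$, which tends to $3x^2$ as $q \to 1$.

```python
def q_derivative(f, x, q, h=1e-6):
    """Jackson q-derivative D_q f(x) = (f(x) - f(q x)) / ((1 - q) x) for x != 0.

    At x = 0 the definition uses the classical derivative f'(0); here it is
    approximated by a central difference with step h (illustrative choice).
    """
    if x != 0:
        return (f(x) - f(q * x)) / ((1.0 - q) * x)
    return (f(h) - f(-h)) / (2.0 * h)


def cube(t):
    return t ** 3


# D_q x^3 = (1 + q + q^2) x^2: at x = 2 and q = 0.5 this is 1.75 * 4 = 7.
print(q_derivative(cube, 2.0, 0.5))     # 7.0
print(q_derivative(cube, 2.0, 0.9999))  # close to the classical value 12
```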

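Let $h: \mathbb{R}^n \to \mathbb{R}$ be a real continuous function; then, for $i \in \{1, \ldots, n\}$, consider the operator $\varepsilon_{q,i}$ on $h$ given by

$$(\varepsilon_{q,i} h)(x) = h(x_1, \ldots, x_{i-1}, q x_i, x_{i+1}, \ldots, x_n).$$

The partial q-derivative [22] of $f$ at $x$ with respect to $x_i$ is denoted by $D_{q,x_i} f$ and defined as follows:

$$D_{q,x_i} f(x) = \begin{cases} \dfrac{f(x) - (\varepsilon_{q,i} f)(x)}{(1 - q)\,x_i}, & x_i \neq 0, \\[2mm] \dfrac{\partial f}{\partial x_i}(x), & x_i = 0. \end{cases}$$

In the same way, higher order partial q-derivatives are defined as follows:

$$D^0_{q,x_i} f = f, \qquad D^m_{q,x_i} f = D_{q,x_i}\big(D^{m-1}_{q,x_i} f\big), \quad m = 1, 2, \ldots$$

Then, the q-gradient [24] of f is

$$\nabla_q f(x) = \big(D_{q,x_1} f(x), \ldots, D_{q,x_n} f(x)\big)^T.$$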
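The q-gradient can be assembled coordinate by coordinate: for each $i$, only $x_i$ is dilated to $q x_i$ (the operator $\varepsilon_{q,i}$). The sketch below is illustrative; the name `q_gradient` is hypothetical, points are plain Python lists, and the classical partial derivative used when $x_i = 0$ is approximated by a central difference, which is our own choice.

```python
def q_gradient(f, x, q, h=1e-6):
    """q-gradient of f: R^n -> R at x, built from partial q-derivatives.

    For each coordinate i only x_i is replaced by q * x_i. If x_i == 0 the
    classical partial derivative is used, approximated by a central difference.
    """
    fx = f(x)
    grad = []
    for i, xi in enumerate(x):
        if xi != 0:
            xq = list(x)
            xq[i] = q * xi
            grad.append((fx - f(xq)) / ((1.0 - q) * xi))
        else:
            xp, xm = list(x), list(x)
            xp[i], xm[i] = h, -h
            grad.append((f(xp) - f(xm)) / (2.0 * h))
    return grad


def example(x):
    return x[0] ** 2 + 3.0 * x[1]


# D_{q,x1}(x1^2) = (1 + q) x1 = 3.0 at x1 = 2, q = 0.5; x2 = 0 uses the classical slope 3.
print(q_gradient(example, [2.0, 0.0], 0.5))
```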

To simplify the presentation, throughout this paper we use $A \succ 0$ ($A \succeq 0$) to denote a symmetric positive definite (semi-definite) matrix $A$, $f: \mathbb{R}^n \to \mathbb{R}$ to denote a real-valued function, $\nabla_q f(x)$ to denote the q-gradient of f at x, $\|x\|$ to denote the Euclidean norm of a vector $x \in \mathbb{R}^n$, and $B_k$ to denote the q-quasi-Newton update Hessian at $x_k$.

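Let $f: \mathbb{R}^n \to \mathbb{R}$ be continuously q-differentiable, and consider the following unconstrained optimization problem:

$$\min_{x \in \mathbb{R}^n} f(x).$$

The q-BFGS method [34] generates a sequence $\{x_k\}$ by the following iterative scheme:

$$x_{k+1} = x_k + \alpha_k d_k, \quad k = 0, 1, 2, \ldots,$$

where $\alpha_k > 0$ and $d_k$ are the step length and the q-BFGS descent direction, respectively.

The q-BFGS descent direction is obtained by solving the following linear equation:

$$B_k d_k = -\nabla_q f(x_k),$$

where $B_k$ is the q-quasi-Newton update Hessian. The sequence $\{B_k\}$ satisfies the following equation:

$$B_{k+1} s_k = y_k,$$

where $s_k = x_{k+1} - x_k$ and $y_k = \nabla_q f(x_{k+1}) - \nabla_q f(x_k)$. In the context of q-calculus, we refer to the Broyden–Fletcher–Goldfarb–Shanno (BFGS) update formula as the q-BFGS update formula. Thus, the Hessian approximation is updated by the following q-BFGS formula:

$$B_{k+1} = B_k - \frac{B_k s_k s_k^T B_k}{s_k^T B_k s_k} + \frac{y_k y_k^T}{y_k^T s_k}.$$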
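The q-BFGS update can be sketched in plain Python, assuming it is the standard BFGS formula $B_{k+1} = B_k - \frac{B_k s_k s_k^T B_k}{s_k^T B_k s_k} + \frac{y_k y_k^T}{y_k^T s_k}$ with $y_k$ formed from q-gradient differences. The function name `bfgs_update` and the sample vectors are hypothetical. The usage lines check that the updated matrix satisfies the q-quasi-Newton (secant) equation $B_{k+1} s_k = y_k$; the explicit check of $y_k^T s_k > 0$ reflects the condition under which positive definiteness is inherited.

```python
def bfgs_update(B, s, y):
    """Return B_{k+1} from the BFGS formula, with y built from q-gradients.

    B is a symmetric positive definite matrix (list of rows); s and y are
    lists. The curvature condition y^T s > 0 keeps B_{k+1} positive definite.
    """
    n = len(s)
    Bs = [sum(B[i][j] * s[j] for j in range(n)) for i in range(n)]
    sBs = sum(s[i] * Bs[i] for i in range(n))
    ys = sum(y[i] * s[i] for i in range(n))
    if ys <= 0.0:
        raise ValueError("curvature condition y^T s > 0 is violated")
    # B s s^T B equals (B s)(B s)^T because B is symmetric.
    return [[B[i][j] - Bs[i] * Bs[j] / sBs + y[i] * y[j] / ys
             for j in range(n)] for i in range(n)]


s, y = [1.0, 0.5], [2.0, 0.3]
B_next = bfgs_update([[1.0, 0.0], [0.0, 1.0]], s, y)
# Secant check: B_{k+1} s_k reproduces y_k up to rounding.
print([sum(B_next[i][j] * s[j] for j in range(2)) for i in range(2)])
```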

3. Modified q-BFGS Algorithm

We modify the q-BFGS algorithm using the following function [35]:

where is a positive definite symmetric matrix. By applying this function to the q-quasi-Newton method at the kth iteration, we obtain the following new q-quasi-Newton equation:

where If we take k and , our new q-quasi-Newton equation is similar to the ordinary q-quasi-Newton equation. Using the above modification of the q-BFGS formula, we obtain the new one as follows:

where

To obtain a better formula, the primary task of this research is to determine how to select a suitable matrix in the new q-quasi-Newton equation. We look for a matrix with a simple structure that carries some second-order information about the objective function. In this part, we discuss a new choice of this matrix and assume that f is sufficiently smooth.

Using the following quadratic model for the objective function [36,37], we have

where denotes a Hessian matrix at point

Hence,

Therefore,

where denotes the value of f at

By using (5), we have

Combining Equations (9) and (10) shows that a reasonable choice of the matrix should satisfy the following new q-quasi-Newton equation:

Proof.

The conclusion follows immediately using Equations (10) and (11). □

Equation (12) holds for f without any convexity assumption, whereas formulas derived from the original quasi-Newton equation do not, in general, satisfy Equation (12). From Equation (11), a choice of the matrix can be defined as follows:

In Equation (13) above, is some vector such that .

By Equations (2) and (3), we know that if then . Therefore, for all k, we can assume that ; otherwise, the algorithm terminates at that iteration. Hence, we can choose . Taking in Equation (10), we obtain the following choice of :

where the norm is the Euclidean norm and

Remark 1.

The structure of is very simple, so it can be constructed and analyzed easily. We only need the value of to calculate the modified from the modified q-quasi-Newton Equation (5). Thus, once is fixed, different choices of that satisfy (13) give the same .
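The modified difference vector can be sketched as follows. Because the exact scalar in the choice above is not recoverable from this text, we assume the form common in modified BFGS methods of this type: $\vartheta_k = 2[f(x_k) - f(x_{k+1})] + (g_{k+1} + g_k)^T s_k$ with $g$ the q-gradient, and $y_k^{*} = y_k + (\vartheta_k / \|s_k\|^2)\, s_k$, i.e., the matrix is a multiple of the identity. The function `modified_y` is hypothetical. For a quadratic with exact gradients, $\vartheta_k = 0$, so the modified vector reduces to the ordinary $y_k$, which matches the remark that the new equation is close to the ordinary one.

```python
def modified_y(f_old, f_new, g_old, g_new, s):
    """Assumed modified difference y* = y + (theta / ||s||^2) s.

    theta = 2 (f_old - f_new) + (g_new + g_old)^T s vanishes for a quadratic
    with exact gradients, so y* then reduces to the ordinary y.
    """
    y = [gn - go for gn, go in zip(g_new, g_old)]
    theta = 2.0 * (f_old - f_new) + sum((gn + go) * si
                                        for gn, go, si in zip(g_new, g_old, s))
    ss = sum(si * si for si in s)
    return [yi + theta / ss * si for yi, si in zip(y, s)], theta


# Quadratic f = x1^2 + 2 x2^2 + x1 x2 from (1, 1) to (0.5, -0.25): theta = 0.
print(modified_y(4.0, 0.25, [3.0, 5.0], [0.75, -0.5], [-0.5, -1.25]))
# Quartic f = x^4 from 1 to 0.5: theta is nonzero, so y* differs from y.
print(modified_y(1.0, 0.0625, [4.0], [0.5], [-0.5]))
```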

To compute the step length, the following q-gradient-based modified Armijo–Wolfe line search conditions [34] are used:

and

where . Additionally, if = 1 satisfies (16), we take = 1. In this line search, (15) ensures a sufficient reduction in the objective function, and (16) prevents the acceptance of excessively short steps.
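A trial step can be tested against both conditions with a few lines of code. This sketch assumes that the q-gradient-based conditions take the usual Armijo–Wolfe form with the classical gradient replaced by the q-gradient, namely $f(x_k + \alpha d_k) \le f(x_k) + \sigma_1 \alpha \nabla_q f(x_k)^T d_k$ and $\nabla_q f(x_k + \alpha d_k)^T d_k \ge \sigma_2 \nabla_q f(x_k)^T d_k$ with $0 < \sigma_1 < \sigma_2 < 1$. The function name and the default values of `sigma1` and `sigma2` are illustrative, not taken from the paper.

```python
def q_armijo_wolfe(f, qgrad, x, d, alpha, sigma1=1e-4, sigma2=0.9):
    """Return (armijo_ok, wolfe_ok) for trial step alpha along direction d.

    qgrad(x) returns the q-gradient at x; 0 < sigma1 < sigma2 < 1.
    """
    slope = sum(gi * di for gi, di in zip(qgrad(x), d))  # q-slope at x_k
    x_new = [xi + alpha * di for xi, di in zip(x, d)]
    armijo_ok = f(x_new) <= f(x) + sigma1 * alpha * slope  # sufficient decrease
    slope_new = sum(gi * di for gi, di in zip(qgrad(x_new), d))
    wolfe_ok = slope_new >= sigma2 * slope  # rejects steps that are too short
    return armijo_ok, wolfe_ok


Q = 0.9


def square(x):
    return x[0] ** 2


def square_qgrad(x):
    return [(1.0 + Q) * x[0]]  # D_q(x^2) = (1 + q) x


for alpha in (0.5, 1e-3, 2.0):
    print(alpha, q_armijo_wolfe(square, square_qgrad, [1.0], [-1.0], alpha))
```

A moderate step passes both tests, a tiny step passes only the sufficient-decrease test, and an overly long step fails it.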

A useful property of Formula (6) is that the updated matrix inherits the positive definiteness of the current one as long as the curvature condition holds; this is guaranteed when f is convex and the step length is computed by the above line search. However, when f is non-convex, the above line search does not ensure this condition. Hence, in this case, the updated matrix is not necessarily positive definite even if the current one is. Therefore, a cautious update is introduced as follows:

Define the index set K as follows:

where , with and are positive constants. We determine by the following rule:

Proof.

Without loss of generality, let We use mathematical induction on k to prove this corollary. Since is chosen as a positive definite symmetric matrix, the result holds for . Assume that the result holds for k = n, and consider the case . If , then from Equations (17) and (18), holds. Hence, for , the result also holds. If , then by our assumption, is also positive definite. This completes the proof. □

From the above modifications, we introduce the following Algorithm 1:

Algorithm 1.

Modified q-BFGS algorithm.
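Because Algorithm 1 combines several ingredients described above, a compact sketch may help. The following Python code is a hypothetical, simplified rendering under stated assumptions, not the authors' implementation: (i) it stores the inverse $H_k = B_k^{-1}$ (starting from $H_0 = I$), which gives the same direction as solving $B_k d_k = -\nabla_q f(x_k)$; (ii) it uses the assumed modified vector $y_k^{*} = y_k + (\vartheta_k/\|s_k\|^2)\, s_k$; (iii) it skips the update unless $y_k^{*T} s_k / \|s_k\|^2 \ge \epsilon \|\nabla_q f(x_k)\|^{\gamma}$, our assumed form of the index set $K$; (iv) it enforces only the q-Armijo condition by backtracking, without the curvature (Wolfe) test; and (v) it drives $q_k$ toward 1 with a schedule chosen purely for illustration. All parameter names and defaults are our own.

```python
import math


def q_gradient(f, x, q, h=1e-7):
    """q-gradient from partial q-derivatives (central difference where x_i == 0)."""
    fx, g = f(x), []
    for i, xi in enumerate(x):
        if xi != 0.0:
            xq = list(x)
            xq[i] = q * xi
            g.append((fx - f(xq)) / ((1.0 - q) * xi))
        else:
            xp, xm = list(x), list(x)
            xp[i], xm[i] = h, -h
            g.append((f(xp) - f(xm)) / (2.0 * h))
    return g


def dot(a, b):
    return sum(ai * bi for ai, bi in zip(a, b))


def modified_q_bfgs(f, x0, q0=0.99, sigma1=1e-4, shrink=0.5,
                    eps=1e-6, gamma=1.0, tol=1e-6, max_iter=500):
    """Hypothetical sketch of a modified q-BFGS loop; returns (x, iterations)."""
    n = len(x0)
    x = [float(v) for v in x0]
    H = [[float(i == j) for j in range(n)] for i in range(n)]  # H_0 = B_0^{-1} = I
    for k in range(max_iter):
        q = 1.0 - max((1.0 - q0) * 0.5 ** k, 1e-8)  # assumed schedule, q_k -> 1
        g = q_gradient(f, x, q)
        g_norm = math.sqrt(dot(g, g))
        if g_norm <= tol:
            return x, k
        d = [-dot(row, g) for row in H]  # same direction as solving B_k d_k = -g_k
        fx, slope, alpha = f(x), dot(g, d), 1.0
        # Backtracking on the q-Armijo (sufficient decrease) condition only.
        while f([xi + alpha * di for xi, di in zip(x, d)]) > fx + sigma1 * alpha * slope:
            alpha *= shrink
            if alpha < 1e-12:
                break
        if alpha < 1e-12:
            continue  # no acceptable step: keep x and retry with q closer to 1
        x_new = [xi + alpha * di for xi, di in zip(x, d)]
        g_new = q_gradient(f, x_new, q)
        s = [a - b for a, b in zip(x_new, x)]
        ss = dot(s, s)
        theta = 2.0 * (fx - f(x_new)) + dot([a + b for a, b in zip(g_new, g)], s)
        y_star = [(gn - go) + theta / ss * si for gn, go, si in zip(g_new, g, s)]
        ys = dot(y_star, s)
        # Cautious rule (assumed form of K): update only if y*^T s / ||s||^2 >= eps * ||g||^gamma.
        if ys / ss >= eps * g_norm ** gamma:
            r = 1.0 / ys
            Hy = [dot(row, y_star) for row in H]
            c = r * r * dot(y_star, Hy) + r
            # Inverse BFGS update: (I - r s y^T) H (I - r y s^T) + r s s^T.
            H = [[H[i][j] - r * (s[i] * Hy[j] + Hy[i] * s[j]) + c * s[i] * s[j]
                  for j in range(n)] for i in range(n)]
        x = x_new
    return x, max_iter


def quadratic(x):
    return (x[0] - 1.0) ** 2 + 2.0 * (x[1] + 0.5) ** 2


x_opt, iters = modified_q_bfgs(quadratic, [-1.2, 1.0])
print(x_opt, iters)
```

Storing the inverse avoids solving a linear system at every iteration; the same function can also be called on the Rosenbrock function, although the iteration counts it produces depend on these assumed parameters and are not comparable with the tables below.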

4. Analysis of the Convergence

In this section, we establish the global convergence [11] of the modified q-BFGS algorithm under the following two assumptions.

Assumption 1.

The level set

$$\Omega = \{x \in \mathbb{R}^n : f(x) \le f(x_0)\}$$

is bounded.

Assumption 2.

The function f is continuously q-differentiable on Ω, and there exists a constant (Lipschitz constant) $L > 0$ such that

$$\|\nabla_q f(x) - \nabla_q f(y)\| \le L\,\|x - y\|, \quad \forall\, x, y \in \Omega.$$

Since $\{f(x_k)\}$ is a decreasing sequence, the sequence $\{x_k\}$ generated by the modified q-BFGS algorithm is contained in Ω.

To establish the global convergence of the modified q-BFGS algorithm in the context of q-calculus, first, we show the following lemma:

Lemma 1.

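Let Assumptions 1 and 2 hold for f, and let $\{x_k\}$ be generated by Algorithm 1. If there exist positive constants $a_1$ and $a_2$ such that the inequalities

$$\|B_k s_k\| \le a_1 \|s_k\|, \qquad s_k^T B_k s_k \ge a_2 \|s_k\|^2 \tag{20}$$

hold for infinitely many k, then

$$\liminf_{k \to \infty} \|\nabla_q f(x_k)\| = 0. \tag{21}$$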

Proof.

We first consider the case in which the q-Armijo-type line search (15) with backtracking parameter is used. If , then we have

By the q-mean value theorem [19], there is a such that

that is,

From Assumption 2, we obtain

Since

Using (22) in the above inequality, we obtain

Next, we consider the case in which line search (16) is used. Then, from Assumption 2 and inequality (16), we obtain the following:

The above inequality implies that

Since ,

Since

Inequalities (25) and (26) together show that is bounded away from zero whenever line search (15) or (16) is used. Moreover,

That is,

This gives the following result

The above inequality, together with (15) gives,

Since

By Lemma 1, to establish the global convergence of Algorithm 1, it suffices to show that there exist positive constants such that (20) holds for infinitely many k. To prove this, we need the following lemma [34]:

Lemma 2.

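Let $B_0$ be a positive definite and symmetric matrix, and let $B_k$ be updated by (18). Suppose that there exist positive constants $m < M$ such that, for each $k \ge 0$, $s_k$ and $y_k$ satisfy

$$\frac{y_k^T s_k}{\|s_k\|^2} \ge m, \qquad \frac{\|y_k\|^2}{y_k^T s_k} \le M.$$

Then, there exist constants $a_1, a_2 > 0$ such that, for any positive integer $t$, (20) holds for at least $\lceil t/2 \rceil$ values of $k \in \{1, 2, \ldots, t\}$.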

By using Lemma 2 and Lemma 1, we can prove the following global convergence theorem for Algorithm 1.

Theorem 2.

Let f satisfy Assumptions 1 and 2, and let $\{x_k\}$ be generated by the modified q-BFGS Algorithm 1. Then Equation (21) holds.

Proof.

By Lemma 1, it suffices to show that there are infinitely many k that satisfy (20).

If the set K is finite, then the q-Hessian approximation remains constant after a finite number of iterations. Since this matrix is symmetric and positive definite for each k, there clearly exist positive constants such that Equation (20) holds for all sufficiently large k.

Now, consider the case in which K is an infinite set. We proceed by contradiction and assume that (21) does not hold. Then, there exists a positive constant α such that

From (19), we know that Thus, combining it with the above inequality, we obtain

Let then

Applying Lemma 2 to the matrix subsequence , we conclude that there exist constants such that Equation (20) holds for infinitely many k. This completes the proof. □

The above Theorem 2 shows that the modified q-BFGS algorithm is globally convergent even if convexity is not assumed for f [34].

5. Numerical Results

This section compares the numerical results obtained with the modified q-BFGS Algorithm 1, the q-BFGS algorithm [34], and the BFGS algorithm [38] on a collection of unconstrained optimization problems taken from [39]. For each test problem, we chose the identity matrix as the initial matrix, i.e., $B_0 = I$. All computations were performed in Python 3.7 on Google Colab. Throughout this section, ‘NI’, ‘NF’, and ‘NG’ denote the total number of iterations, function evaluations, and gradient evaluations, respectively. For each test problem, the modified q-BFGS, q-BFGS, and BFGS algorithms use the same parameters. We set , , and , and used the condition as the stopping criterion. Moreover, we set the parameter , when otherwise we take . In general, we take and . When , the q-gradient can make any angle with the classical gradient, and the search direction can point in any direction.
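The observation that the q-gradient can make a large angle with the classical gradient can be illustrated on the Rosenbrock function of Example 1, assuming its standard form $f(x) = 100(x_2 - x_1^2)^2 + (1 - x_1)^2$ and the common starting point $(-1.2, 1)$, chosen here only for illustration. At this point, $q = 0.5$ gives the q-gradient $(32.2, -138)$ while the classical gradient is $(-215.6, -88)$, an angle of roughly 81 degrees; as $q \to 1$ the cosine of the angle approaches 1. The helper names are hypothetical.

```python
import math


def rosenbrock(x):
    return 100.0 * (x[1] - x[0] ** 2) ** 2 + (1.0 - x[0]) ** 2


def rosenbrock_grad(x):
    """Classical gradient of the Rosenbrock function."""
    return [-400.0 * x[0] * (x[1] - x[0] ** 2) - 2.0 * (1.0 - x[0]),
            200.0 * (x[1] - x[0] ** 2)]


def q_gradient(f, x, q):
    """Partial q-derivatives; this sketch assumes every x_i is nonzero."""
    fx, g = f(x), []
    for i, xi in enumerate(x):
        xq = list(x)
        xq[i] = q * xi
        g.append((fx - f(xq)) / ((1.0 - q) * xi))
    return g


def cosine(a, b):
    """Cosine of the angle between vectors a and b."""
    dot = sum(ai * bi for ai, bi in zip(a, b))
    return dot / (math.sqrt(sum(ai * ai for ai in a)) * math.sqrt(sum(bi * bi for bi in b)))


x0 = [-1.2, 1.0]
for q in (0.5, 0.9, 0.999999):
    print(q, cosine(q_gradient(rosenbrock, x0, q), rosenbrock_grad(x0)))
```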

We use performance profiles to evaluate and compare the algorithms graphically on a given set of test problems. Dolan and Moré [40] introduced performance profiles, a statistical tool for benchmarking optimization software, and we use them here as our evaluation tool. The performance measures are the number of iterations, function evaluations, and q-gradient evaluations. The performance ratio is defined as

$$r_{p,s} = \frac{t_{p,s}}{\min\{t_{p,s} : s \in S\}}.$$

Here, $t_{p,s}$ refers to the number of iterations, function evaluations, or q-gradient evaluations required to solve problem $p$ by solver $s$, $S$ is the set of solvers, and $n_p$ refers to the number of problems in the test set. The cumulative distribution function is expressed as

$$\rho_s(\tau) = \frac{1}{n_p}\left|\{p : r_{p,s} \le \tau\}\right|,$$

where $\rho_s(\tau)$ is the probability that the performance ratio $r_{p,s}$ is within a factor $\tau \in \mathbb{R}$ of the best possible ratio. That is, for each method being analyzed, we plot the fraction of problems on which it is within a factor $\tau$ of the best method. We now present the following examples to show the computational results:
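The performance profile can be computed directly from a table of costs. This minimal sketch follows the Dolan–Moré definitions of $r_{p,s}$ and $\rho_s(\tau)$; the function name is hypothetical, a failure would be encoded as `float('inf')`, and the data are the NI values reported in Table 5 for the large dimensional Rosenbrock function.

```python
def performance_profile(costs, tau):
    """Dolan-More profile rho_s(tau) for each solver s.

    costs[s][p] is the cost (e.g. NI) of solver s on problem p;
    use float('inf') to mark a failure.
    """
    n_solvers, n_problems = len(costs), len(costs[0])
    best = [min(costs[s][p] for s in range(n_solvers)) for p in range(n_problems)]
    return [sum(1 for p in range(n_problems) if costs[s][p] / best[p] <= tau) / n_problems
            for s in range(n_solvers)]


# NI from Table 5 (Rosenbrock, dimensions 10, 50, 100, 200).
ni = [[58, 242, 466, 904],   # modified q-BFGS
      [63, 253, 486, 978],   # q-BFGS
      [61, 253, 479, 956]]   # BFGS
print(performance_profile(ni, 1.0))   # [1.0, 0.0, 0.0]
print(performance_profile(ni, 1.05))  # [1.0, 0.5, 0.5]
```

Evaluating the profile on a grid of $\tau$ values and plotting $\rho_s(\tau)$ against $\tau$ gives curves of the kind shown in the performance profile figures.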

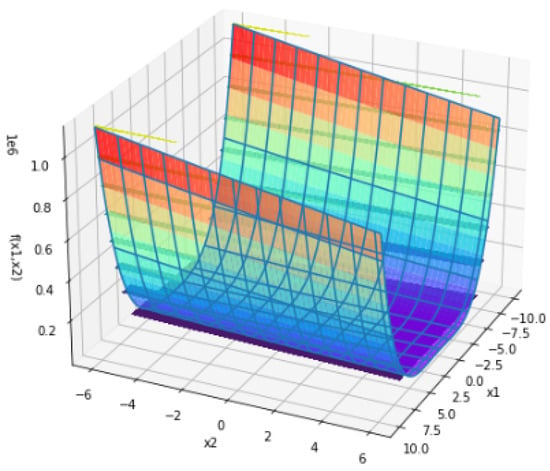

Example 1.

Consider the non-convex Rosenbrock function $f: \mathbb{R}^2 \to \mathbb{R}$ such that

$$f(x) = 100\,(x_2 - x_1^2)^2 + (1 - x_1)^2.$$

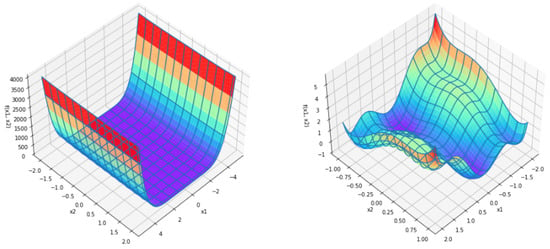

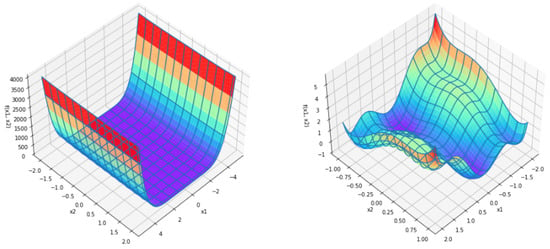

Figure 1 shows the surface plot of the Rosenbrock function.

Figure 1.

Surface plot of Rosenbrock function.

The Rosenbrock function was introduced by Rosenbrock in 1960. We tested the modified q-BFGS, q-BFGS, and BFGS algorithms from 10 different initial points. The numerical results for the Rosenbrock function are given in Table 1.

Table 1.

Comparison of numerical results of Modified q-BFGS, q-BFGS, and BFGS algorithms for the Rosenbrock function.

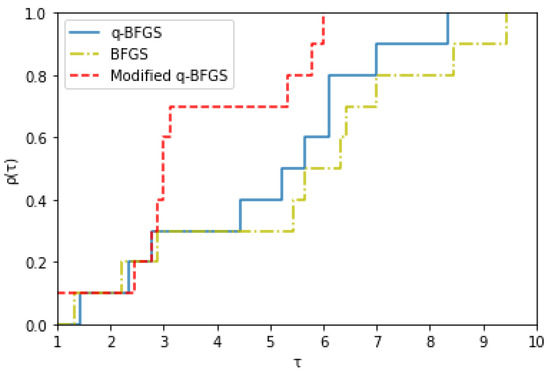

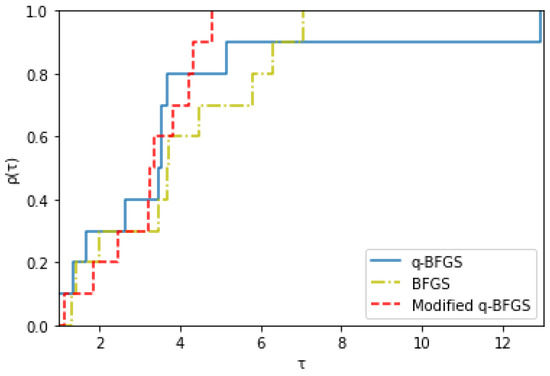

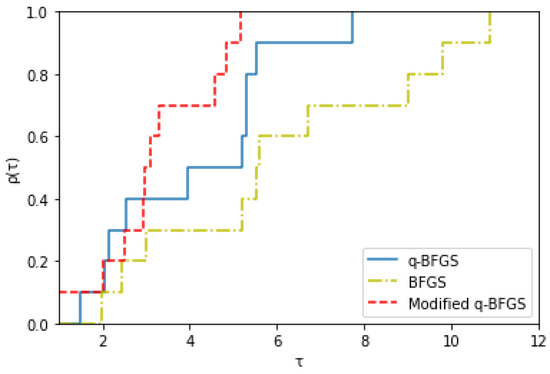

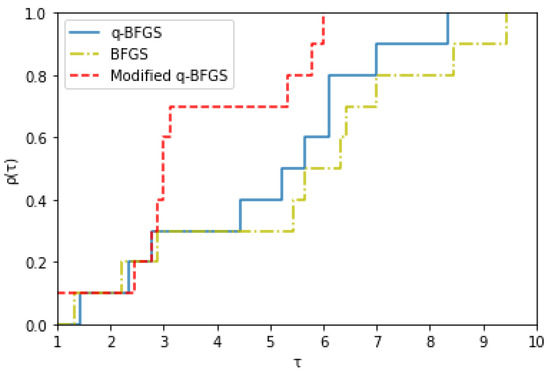

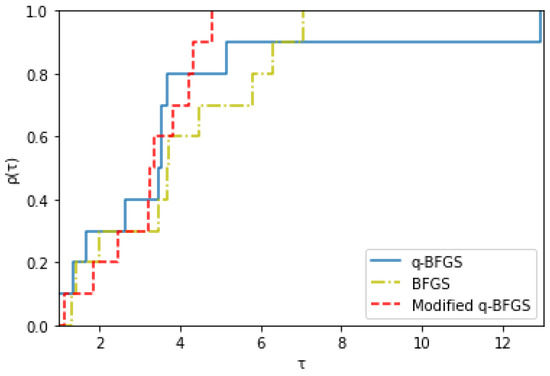

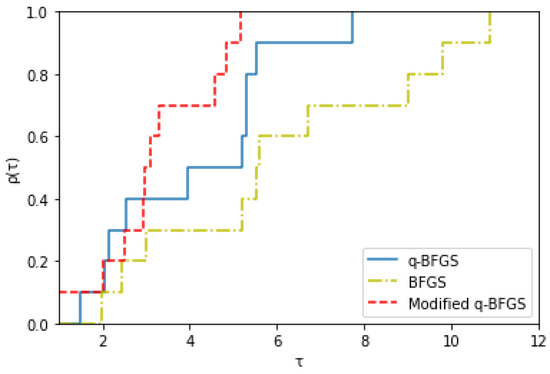

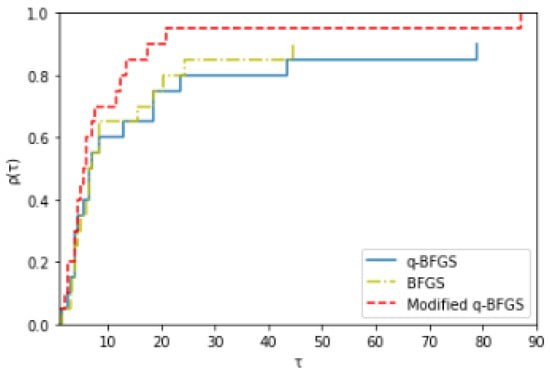

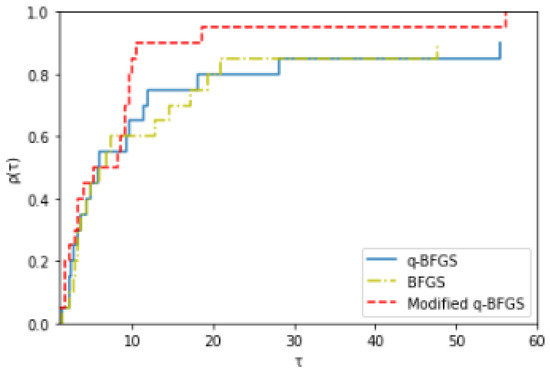

For the above starting points, the iterates converge to the global minimizer $x^* = (1, 1)^T$, where $f(x^*) = 0$. Figures 2–4 show the Dolan and Moré performance profiles of the modified q-BFGS, q-BFGS, and BFGS algorithms for the Rosenbrock function, based on the number of iterations, gradient evaluations, and function evaluations, respectively.

Figure 2.

Performance profile based on number of iterations.

Figure 3.

Performance profile based on number of gradient evaluations.

Figure 4.

Performance profile based on number of function evaluations.

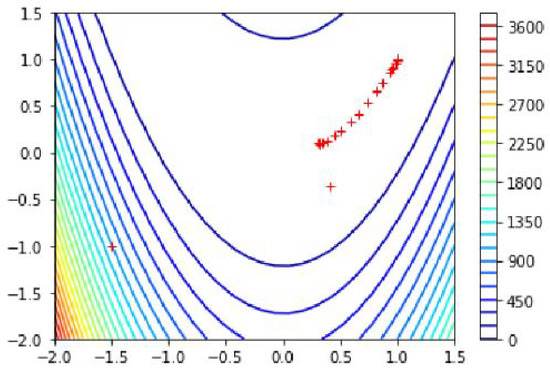

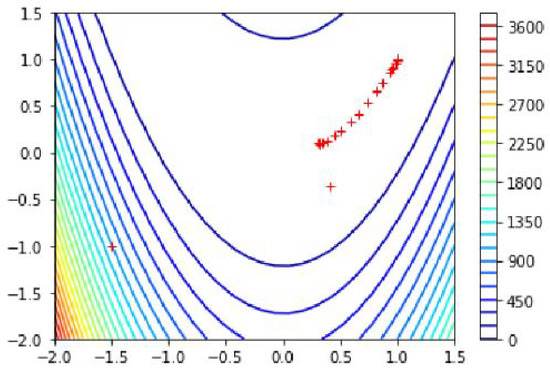

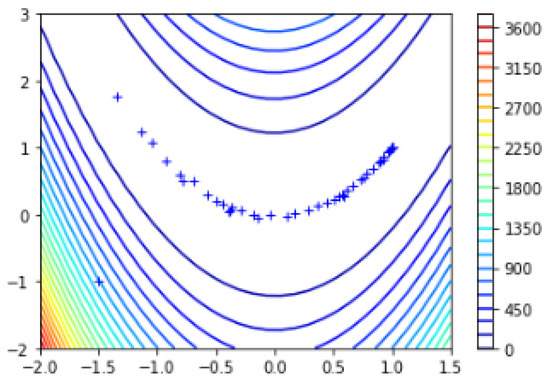

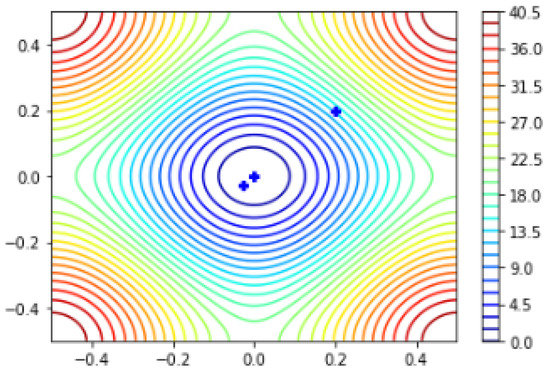

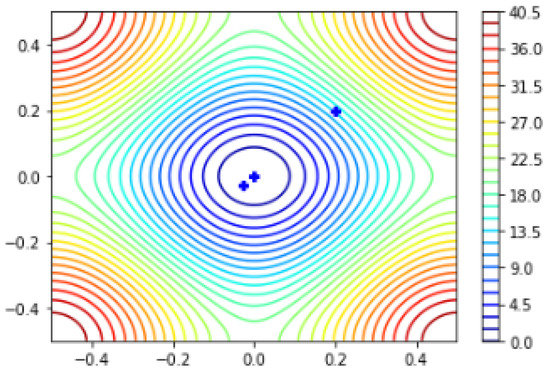

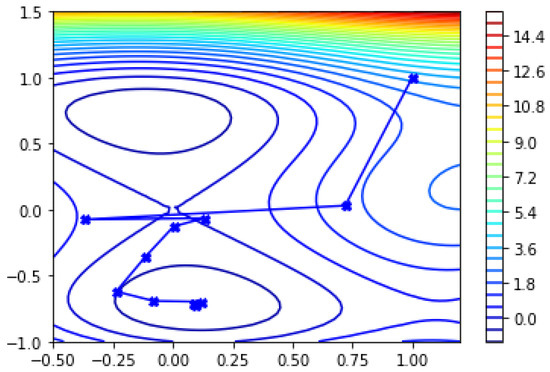

The global minimum of the Rosenbrock function and the iterates generated by the modified q-BFGS algorithm are shown in Figure 5.

Figure 5.

Global minimum of the Rosenbrock function using the modified q-BFGS algorithm.

For the starting point , the modified q-BFGS algorithm converges to

in 25 iterations.

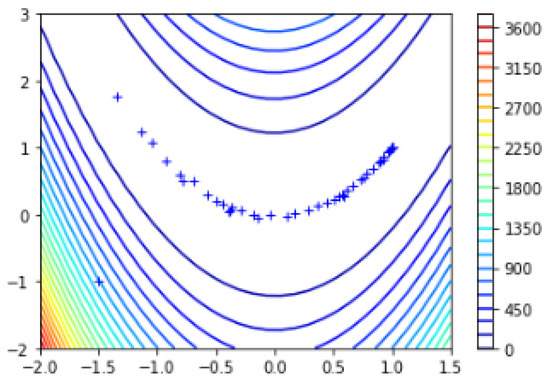

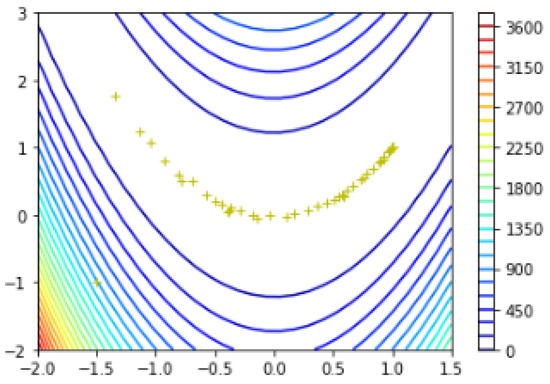

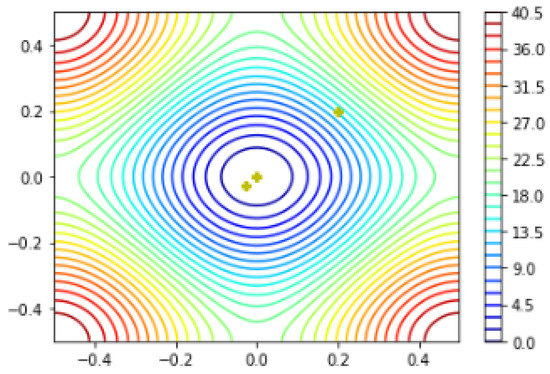

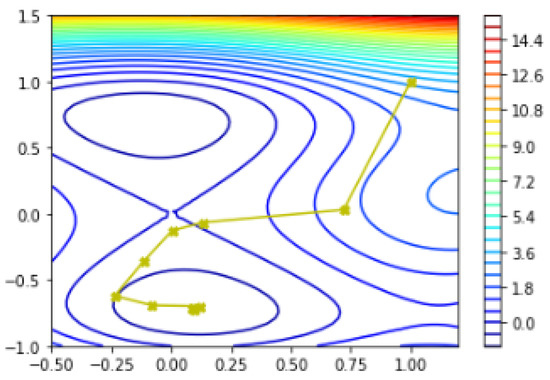

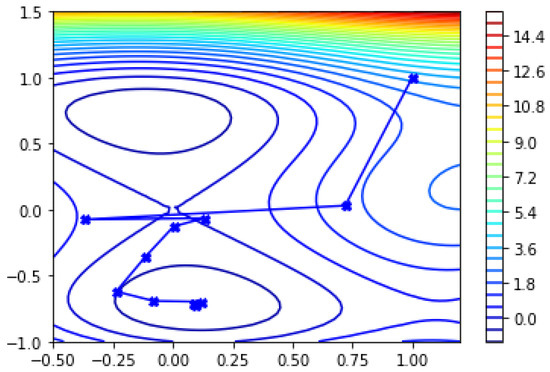

The global minimum of the Rosenbrock function and the iterates generated by the q-BFGS algorithm are shown in Figure 6.

Figure 6.

Global minimum of the Rosenbrock function using the q-BFGS algorithm [34].

For the starting point , the q-BFGS algorithm converges to

in 47 iterations.

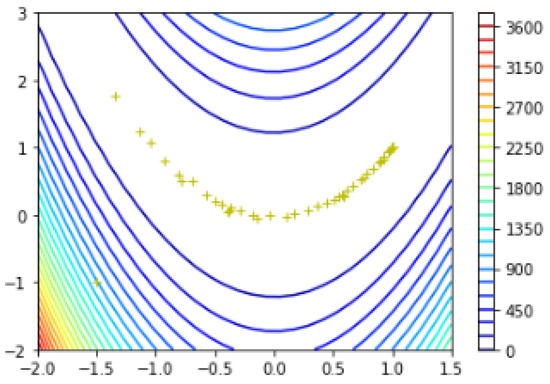

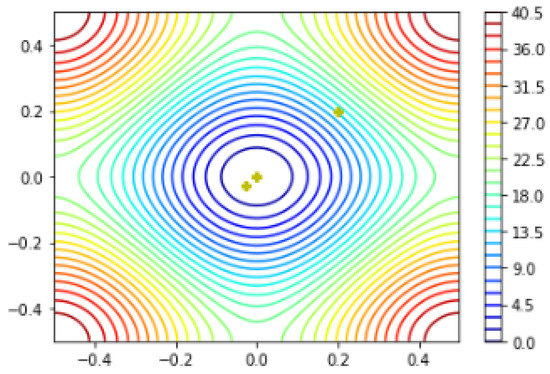

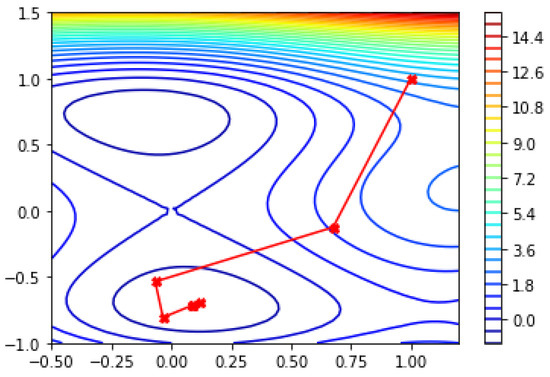

The global minimum of the Rosenbrock function and the iterates generated by the BFGS algorithm are shown in Figure 7.

Figure 7.

Global minimum of the Rosenbrock function using the BFGS algorithm.

For the starting point , the BFGS algorithm converges to

in 49 iterations.

Example 2.

We consider

which is non-differentiable at . For the initial point , the modified q-BFGS algorithm reaches the minimum at in 4 iterations, 10 function evaluations, and 5 gradient evaluations.

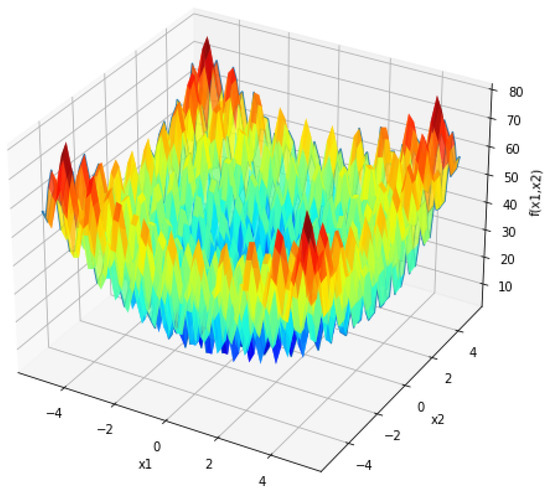

Example 3.

Consider the non-convex Rastrigin function f such that

$$f(x) = 10n + \sum_{i=1}^{n} \left[x_i^2 - 10\cos(2\pi x_i)\right].$$

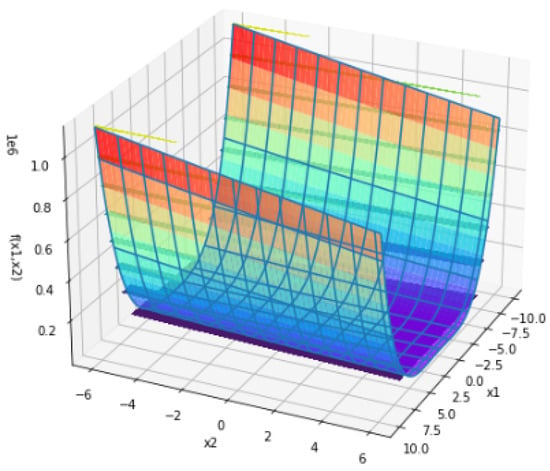

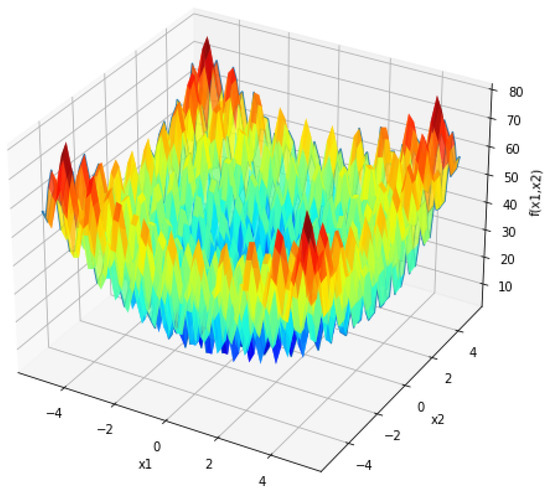

Figure 8 shows the surface plot of the Rastrigin function.

Figure 8.

Surface plot of the Rastrigin function.

The Rastrigin function f has a global minimum at $x^* = (0, \ldots, 0)^T$ with value $f(x^*) = 0$.
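The multimodal structure that makes this example demanding can be seen by evaluating the function at a few points. The sketch below assumes the standard Rastrigin form $f(x) = 10n + \sum_i [x_i^2 - 10\cos(2\pi x_i)]$; the function name is ours. The value is 0 at the origin, close to 1 near the neighbouring local minimum around $(1, 0)$, and 20.25 at $(0.5, 0)$, on the barrier between them.

```python
import math


def rastrigin(x):
    """Rastrigin function f(x) = 10 n + sum(x_i^2 - 10 cos(2 pi x_i))."""
    return 10.0 * len(x) + sum(xi * xi - 10.0 * math.cos(2.0 * math.pi * xi) for xi in x)


print(rastrigin([0.0, 0.0]))  # global minimum value 0
print(rastrigin([1.0, 0.0]))  # about 1, near a local minimum
print(rastrigin([0.5, 0.0]))  # about 20.25, on the barrier between them
```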

We tested the modified q-BFGS, q-BFGS, and BFGS algorithms from the initial point .

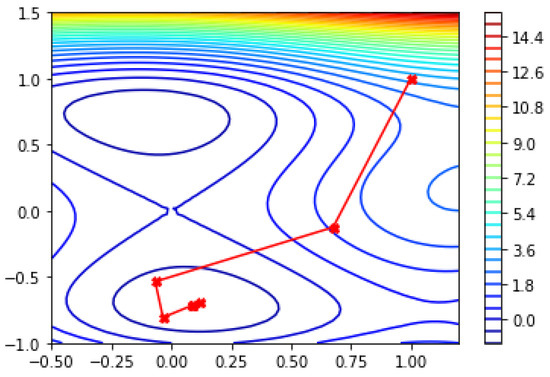

The global minimum of the Rastrigin function and the iterates generated by the modified q-BFGS algorithm are shown in Figure 9.

Figure 9.

Global minimum of the Rastrigin function using the modified q-BFGS algorithm.

The numerical results for the Rastrigin function using the modified q-BFGS algorithm are as follows:

For the starting point , the modified q-BFGS algorithm converges to

NI/NF/NG = .

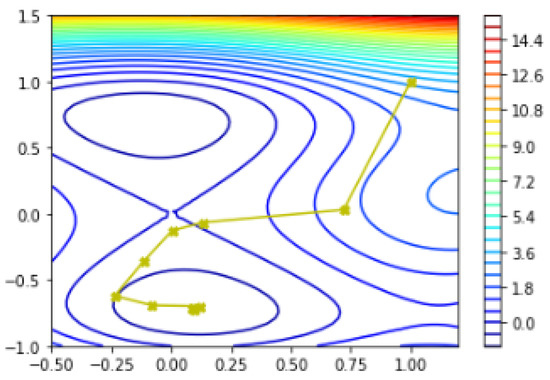

The global minimum of the Rastrigin function and the iterates generated by the q-BFGS algorithm are shown in Figure 10.

Figure 10.

Global minimum of the Rastrigin function using the q-BFGS algorithm.

The numerical results for the Rastrigin function using the q-BFGS algorithm are as follows:

For the starting point , the q-BFGS algorithm converges to

NI/NF/NG = .

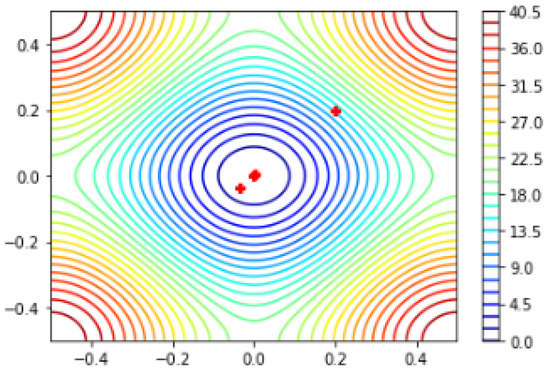

The global minimum of the Rastrigin function and the iterates generated by the BFGS algorithm are shown in Figure 11.

Figure 11.

Global minimum of the Rastrigin function using the BFGS algorithm.

The numerical results for the Rastrigin function using the BFGS algorithm are as follows:

For the starting point , the BFGS algorithm converges to

NI/NF/NG = .

These results show that, for this starting point, the modified q-BFGS algorithm reaches the critical point in the fewest iterations.

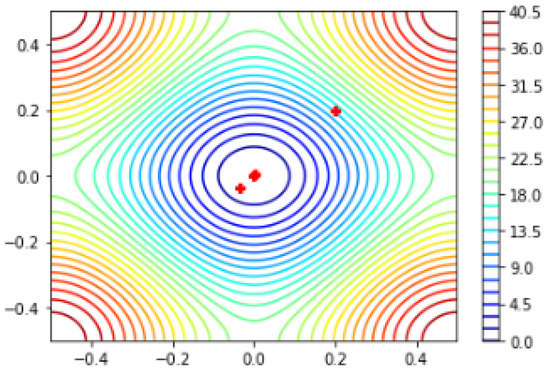

Example 4.

Consider the SIX-HUMP CAMEL function such that

$$f(x) = \left(4 - 2.1x_1^2 + \frac{x_1^4}{3}\right)x_1^2 + x_1x_2 + \left(-4 + 4x_2^2\right)x_2^2.$$

Figure 12 (left) shows the SIX-HUMP CAMEL function on its recommended input domain, and Figure 12 (right) shows only a portion of this domain for an easier view of the function’s key characteristics. The function f has six local minima, two of which are global.

Figure 12.

Surface plot of the SIX-HUMP CAMEL function.

Input Domain: The function is usually evaluated on the rectangle $x_1 \in [-3, 3]$, $x_2 \in [-2, 2]$.

This function has two global minima, at $x^* = (0.0898, -0.7126)^T$ and $x^* = (-0.0898, 0.7126)^T$, with value $f(x^*) = -1.0316$.
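The reported minimum value can be checked by evaluating the function, assuming the standard six-hump camel form $f(x) = (4 - 2.1x_1^2 + x_1^4/3)x_1^2 + x_1x_2 + (-4 + 4x_2^2)x_2^2$. The function name is ours. The function is symmetric under $x \mapsto -x$, which is why the two global minimizers are mirror images of each other.

```python
def six_hump_camel(x1, x2):
    """Six-hump camel function on R^2."""
    return (4.0 - 2.1 * x1 ** 2 + x1 ** 4 / 3.0) * x1 ** 2 + x1 * x2 + (-4.0 + 4.0 * x2 ** 2) * x2 ** 2


for point in [(0.0898, -0.7126), (-0.0898, 0.7126)]:
    print(point, six_hump_camel(*point))  # both close to -1.0316
```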

For the starting point , the modified q-BFGS algorithm converges to in eight iterations, whereas the q-BFGS and BFGS algorithms each take 13 iterations. Tables 2–4 give the numerical results, and Figures 13–15 show the global minima and the sequences of iterates generated by the modified q-BFGS, q-BFGS, and BFGS algorithms, respectively.

Table 2.

Numerical results for Modified q-BFGS algorithm.


| S.N. | f(x) |
|---|---|
| 1 | 3.23333333333333 |
| 2 | 1.27218227259399 |
| 3 | −0.764057148938559 |
| 4 | −0.876032180235736 |
| 5 | −1.02498975962408 |
| 6 | −1.03153652682667 |
| 7 | −1.03162840874522 |
| 8 | −1.03162845348855 |

Here, we obtain

Figure 13.

Global minima of SIX-HUMP CAMEL function using modified q-BFGS algorithm.

Table 3.

Numerical results for q-BFGS algorithm [34].


| S.N. | f(x) |
|---|---|
| 1 | 3.23333333333333 |
| 2 | 1.57257718494530 |
| 3 | 0.505072874807150 |
| 4 | 0.0413558838831559 |
| 5 | −0.0649165012454617 |
| 6 | −0.351034437749229 |
| 7 | −0.581320173229039 |
| 8 | −0.915418626192531 |
| 9 | −1.02633316281778 |
| 10 | −1.03082558867975 |
| 11 | −1.03159319420704 |
| 12 | −1.03162824822151 |
| 13 | −1.03162845277086 |

Here, we obtain

The q-BFGS algorithm reaches the critical point in 13 iterations.

Figure 14.

Global minima of SIX-HUMP CAMEL function using q-BFGS algorithm.

Table 4.

Numerical results for BFGS algorithm.


| S.N. | f(x) |
|---|---|
| 1 | 3.23333333333333 |
| 2 | 1.5725772653791203 |
| 3 | 0.041355907444434827 |
| 4 | −0.06491646830150141 |
| 5 | −0.35103445558228663 |
| 6 | −0.5813202779118049 |
| 7 | −0.9154186025665918 |
| 8 | −1.0263331977999757 |
| 9 | −1.0308255945761227 |
| 10 | −1.031593194593363 |
| 11 | −1.031628248214576 |
| 12 | −1.0316284527721356 |
| 13 | −1.0316284534898297 |

Here, we obtain

The BFGS algorithm reaches the critical point in 13 iterations.

Figure 15.

Global minima of SIX-HUMP CAMEL function using BFGS algorithm.

For this example, the modified q-BFGS algorithm reaches the critical point in the fewest iterations. The performance results and the iterates plotted for the multimodal functions suggest that the q-descent direction can help the iterates escape from local minima and move toward the global minimum.

We now compare the performance of the algorithms on the large dimensional Rosenbrock and Wood functions. The numerical results for these functions are given in Tables 5 and 6.

Numerical results for the large dimensional Rosenbrock function for

Table 5.

Comparison of numerical results of Modified q-BFGS, q-BFGS, and BFGS algorithm for the large dimensional Rosenbrock function.


| S.No. | Dimension | Modified q-BFGS (NI/NF/NG) | q-BFGS (NI/NF/NG) | BFGS (NI/NF/NG) |
|---|---|---|---|---|
| 1 | 10 | 58/889/132 | 63/972/81 | 61/1365/123 |
| 2 | 50 | 242/15,432/300 | 253/17,316/324 | 253/17,199/337 |
| 3 | 100 | 466/63,088/604 | 486/64,056/636 | 479/64,741/641 |
| 4 | 200 | 904/209,912/1175 | 978/248,056/1228 | 956/253,674/1262 |

Numerical results for large dimensional WOOD function [39] for

Table 6.

Comparison of numerical results of Modified q-BFGS, q-BFGS, and BFGS algorithm for Large Dimensional Wood function.


| S.No. | Dimension | Modified q-BFGS (NI/NF/NG) | q-BFGS (NI/NF/NG) | BFGS (NI/NF/NG) |
|---|---|---|---|---|
| 1 | 20 | 85/1976/98 | 91/2872/130 | 103/2478/118 |
| 2 | 80 | 162/19,745/198 | 193/21,250/259 | 209/22,366/276 |
| 3 | 100 | 197/19,965/255 | 240/30,714/301 | 254/29,290/290 |
| 4 | 200 | 296/75,686/397 | 370/93,538/463 | 378/93,678/466 |
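For reproducing experiments of this kind, the large dimensional Wood function can be written compactly. We assume here that [39] uses the usual extension of the four-variable Wood function to blocks of four consecutive variables (every dimension in Table 6 is a multiple of 4); the function name is ours. The minimum value 0 is attained at the vector of ones, and the classical starting point $(-3, -1, -3, -1)$ gives 19192 per block.

```python
def wood_extended(x):
    """Extended Wood function: the 4-variable Wood function summed over blocks of 4."""
    if len(x) % 4:
        raise ValueError("dimension must be a multiple of 4")
    total = 0.0
    for i in range(0, len(x), 4):
        a, b, c, d = x[i:i + 4]
        total += (100.0 * (b - a * a) ** 2 + (1.0 - a) ** 2
                  + 90.0 * (d - c * c) ** 2 + (1.0 - c) ** 2
                  + 10.1 * ((b - 1.0) ** 2 + (d - 1.0) ** 2)
                  + 19.8 * (b - 1.0) * (d - 1.0))
    return total


print(wood_extended([1.0] * 20))                  # 0.0 at the minimizer
print(wood_extended([-3.0, -1.0, -3.0, -1.0]))    # about 19192
```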

We used 20 test problems to demonstrate the efficiency of the proposed method. The tolerance is taken as . The numerical results are shown in Tables 7–9, which list the problem number (S.N.), problem name, dimension (DIM), starting point, total number of iterations (NI), total number of function evaluations (NF), and total number of gradient evaluations (NG).

Table 7.

Numerical results for Modified q-BFGS algorithm.

Table 8.

Numerical results for q-BFGS algorithm.

Table 9.

Numerical results for BFGS algorithm.

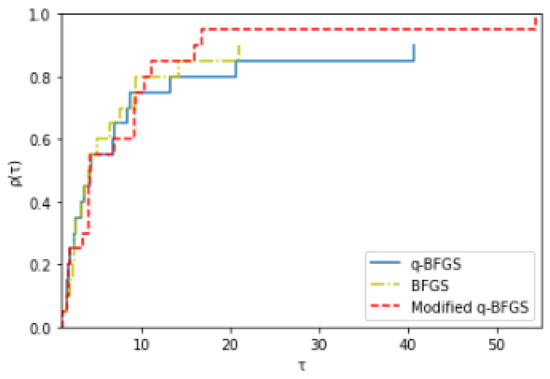

Tables 5–9 show that the modified q-BFGS algorithm solves about 86% of the test problems with the fewest iterations, 82% with the fewest function evaluations, and 52% with the fewest gradient evaluations. Together with the performance profiles in Figures 16–18, these results indicate that, on this test set, the modified q-BFGS algorithm performs better than the other two algorithms, requiring fewer iterations, function evaluations, and gradient evaluations.

Figure 16.

Performance Profile based on number of iterations.

Figure 17.

Performance Profile based on number of function evaluations.

Figure 18.

Performance Profile based on number of gradient evaluations.

6. Conclusions and Future Directions

We have given a new q-quasi-Newton equation and proposed a modified q-BFGS method for unconstrained minimization based on it. The method converges globally with a q-gradient-based Armijo–Wolfe line search. The q-gradient allows the search direction to be chosen from a diverse set of directions and permits large steps toward convergence. The performance results and the iterates plotted for the multimodal functions suggest that the q-descent direction and the q-gradient-based line search can help the iterates escape from local minima and move toward the global minimum. Under Assumptions 1 and 2, first-order q-differentiability of the function is sufficient to prove the global convergence of the proposed method. The convergence analysis and numerical results show that the proposed algorithm is effective on the tested problems. In future work, other q-quasi-Newton methods could also be studied using the q-derivative.

Author Contributions

Formal analysis, K.K.L., S.K.M., R.S., M.S. and B.R.; funding acquisition, K.K.L.; investigation, S.K.M.; methodology, S.K.M., R.S., M.S. and B.R.; supervision, S.K.M.; validation, R.S.; writing—original draft, R.S.; writing—review and editing, K.K.L., R.S., M.S. and B.R. All authors have read and agreed to the published version of the manuscript.

Funding

The second author is financially supported by Research Grant for Faculty (IoE Scheme) under Dev. Scheme No. 6031 and the third author is financially supported by the BHU-UGC Non-NET fellowship /R/Dev./ix-Sch.(BHU Res.Sch.)/2022-23/46476. The fifth author is financially supported by the Centre for Digital Transformation, Indian Institute of Management, Ahmedabad.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

No data were used to support this study.

Acknowledgments

The authors are indebted to the anonymous reviewers for their valuable comments and remarks that helped to improve the presentation and quality of the manuscript.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Mishra, S.K.; Ram, B. Conjugate gradient methods. In Introduction to Unconstrained Optimization with R; Springer Nature: Singapore, 2019; pp. 211–244.

- Fletcher, R. Practical Methods of Optimization; John Wiley & Sons: Chichester, NY, USA, 2013.

- Gill, P.; Murray, W.; Wright, M. Practical Optimization; Academic Press: Cambridge, MA, USA, 1981.

- Mishra, S.K.; Ram, B. Newton’s method. In Introduction to Unconstrained Optimization with R; Springer Nature: Singapore, 2019; pp. 175–209.

- Mishra, S.K.; Ram, B. Quasi-Newton methods. In Introduction to Unconstrained Optimization with R; Springer Nature: Singapore, 2019; pp. 245–289.

- Fletcher, R. A new approach to variable metric algorithms. Comput. J. 1970, 13, 317–322.

- Goldfarb, D. A family of variable-metric methods derived by variational means. Math. Comput. 1970, 24, 23–26.

- Shanno, D.F. Conditioning of quasi-Newton methods for function minimization. Math. Comput. 1970, 24, 647–656.

- Broyden, C.G. The convergence of a class of double-rank minimization algorithms: 2. The new algorithm. IMA J. Appl. Math. 1970, 6, 222–231.

- Mascarenhas, W.F. The BFGS method with exact line searches fails for non-convex objective functions. Math. Program. 2004, 99, 49.

- Li, D.H.; Fukushima, M. On the global convergence of the BFGS method for nonconvex unconstrained optimization problems. SIAM J. Optim. 2001, 11, 1054–1064.

- Li, D.; Fukushima, M. A globally and superlinearly convergent Gauss–Newton-based BFGS method for symmetric nonlinear equations. SIAM J. Numer. Anal. 1999, 37, 152–172.

- Kac, V.G.; Cheung, P. Quantum Calculus; Springer: Berlin, Germany, 2002; Volume 113.

- Ernst, T. A Comprehensive Treatment of q-Calculus; Springer: Basel, Switzerland, 2012.

- Cieśliński, J.L. Improved q-exponential and q-trigonometric functions. Appl. Math. Lett. 2011, 24, 2110–2114.

- Borges, E.P. A possible deformed algebra and calculus inspired in nonextensive thermostatistics. Phys. A Stat. Mech. Its Appl. 2004, 340, 95–101.

- Tariboon, J.; Ntouyas, S.K. Quantum calculus on finite intervals and applications to impulsive difference equations. Adv. Differ. Equations 2013, 2013, 282.

- Jackson, F.H. XI.—On q-functions and a certain difference operator. Earth Environ. Sci. Trans. R. Soc. Edinb. 1909, 46, 253–281.

- Rajković, P.; Stanković, M.; Marinković, D.S. Mean value theorems in q-calculus. Mat. Vesn. 2002, 54, 171–178.

- Ismail, M.E.; Stanton, D. Applications of q-Taylor theorems. J. Comput. Appl. Math. 2003, 153, 259–272.

- Jing, S.C.; Fan, H.Y. q-Taylor’s Formula with Its q-Remainder. Commun. Theor. Phys. 1995, 23, 117.

- Rajković, P.M.; Marinković, S.D.; Stanković, M.S. Fractional integrals and derivatives in q-calculus. Appl. Anal. Disc. Math. 2007, 1, 311–323.

- Jackson, D.O.; Fukuda, T.; Dunn, O.; Majors, E. On q-definite integrals. Q. J. Pure Appl. Math. 1910, 41, 193–203.

- Soterroni, A.C.; Galski, R.L.; Ramos, F.M. The q-gradient vector for unconstrained continuous optimization problems. In Operations Research Proceedings 2010; Springer: Heidelberg, Germany, 2011; pp. 365–370.

- Gouvêa, É.J.; Regis, R.G.; Soterroni, A.C.; Scarabello, M.C.; Ramos, F.M. Global optimization using q-gradients. Eur. J. Oper. Res. 2016, 251, 727–738.

- Lai, K.K.; Mishra, S.K.; Ram, B. On q-quasi-Newton’s method for unconstrained multiobjective optimization problems. Mathematics 2020, 8, 616.

- Mishra, S.K.; Samei, M.E.; Chakraborty, S.K.; Ram, B. On q-variant of Dai–Yuan conjugate gradient algorithm for unconstrained optimization problems. Nonlinear Dyn. 2021, 104, 2471–2496.

- Lai, K.K.; Mishra, S.K.; Ram, B. A q-conjugate gradient algorithm for unconstrained optimization problems. Pac. J. Optim. 2021, 17, 57–76.

- Van Voorhis, T.; Head-Gordon, M. A geometric approach to direct minimization. Mol. Phys. 2002, 100, 1713–1721.

- Dominic, S.; Shardt, Y.; Ding, S. Economic performance indicator based optimization for the air separation unit compressor trains. IFAC-PapersOnLine 2015, 48, 858–863.

- Dutta, S. Optimization in Chemical Engineering; Cambridge University Press: Cambridge, UK, 2016.

- Head, J.D.; Zerner, M.C. A Broyden—Fletcher—Goldfarb—Shanno optimization procedure for molecular geometries. Chem. Phys. Lett. 1985, 122, 264–270.

- Ahuja, K.; Green, W.H.; Li, Y.P. Learning to optimize molecular geometries using reinforcement learning. J. Chem. Theory Comput. 2021, 17, 818–825.

- Mishra, S.K.; Panda, G.; Chakraborty, S.K.; Samei, M.E.; Ram, B. On q-BFGS algorithm for unconstrained optimization problems. Adv. Differ. Equ. 2020, 2020, 638.

- Wei, Z.; Li, G.; Qi, L. New quasi-Newton methods for unconstrained optimization problems. Appl. Math. Comput. 2006, 175, 1156–1188.

- Yuan, Y. A modified BFGS algorithm for unconstrained optimization. IMA J. Numer. Anal. 1991, 11, 325–332.

- Deng, N.; Li, Z. Some global convergence properties of a conic-variable metric algorithm for minimization with inexact line searches. Optim. Methods Softw. 1995, 5, 105–122.

- Li, D.H.; Fukushima, M. A modified BFGS method and its global convergence in nonconvex minimization. J. Comput. Appl. Math. 2001, 129, 15–35.

- Moré, J.J.; Garbow, B.S.; Hillstrom, K.E. Testing unconstrained optimization software. ACM Trans. Math. Softw. (TOMS) 1981, 7, 17–41.

- Dolan, E.D.; Moré, J.J. Benchmarking optimization software with performance profiles. Math. Program. 2002, 91, 201–213.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).