Abstract

With the rapid growth of the Internet, a wealth of movie resources is readily available through the major search engines. Even so, users often cannot quickly find the movies that interest them most. Traditional recommendation algorithms, such as collaborative filtering, use only users’ ratings of movies and ignore the attribute information of users and movies, which leads to inaccurate recommendations. To achieve accurate, personalized movie recommendations, this paper proposes a movie recommendation algorithm based on a multi-feature attention mechanism with deep neural networks and convolutional neural networks. To make the predicted ratings more accurate, user and movie attribute information is added; a user network and a movie network are designed to learn user features and movie features, respectively; and a feature attention mechanism is proposed so that different features contribute differently to the predicted rating. Text features are extracted using a convolutional neural network with an added attention mechanism, which makes the extracted text features more accurate. The experimental results verify the effectiveness of the algorithm: the user and movie attribute features improve rating prediction, the feature attention mechanism distinguishes how important each feature is to the rating, and the convolutional neural network with attention extracts more effective text features. The algorithm achieves high accuracy on the MSE, MAE, MAPE, R², and RMSE metrics.

Keywords:

movie recommendation; attention mechanism; deep neural networks; convolutional neural networks; user network; movie network

MSC:

68T07

1. Introduction

In recent years, with the rapid development of the Internet, mobile smart devices, and other technologies, a large amount of information has flooded the network [1]. Along with the continuous improvement of people’s living standards and the rapid popularization of mobile Internet, the Internet and smart mobile devices have gradually become the main way for people to obtain information resources. Today, with the rapid development of mobile Internet, the information in mobile Internet is increasing geometrically and the number of mobile users is soaring, making the contradiction between limited network resources and users’ increasing demand for network more and more acute [2]. When we enter the era of big data, modern technology has revolutionized the quantity, type, and speed of data generation, and the massive amount of data brings about information overload [3]. Information overload can be defined as a state in which information exceeds what people can receive, process, or use effectively. As human society enters the era of information explosion, it becomes more and more difficult to find the required information from the vast amount of data. Since different users have different hobbies, interests, and personal experiences, it is difficult for them to sift through the vast amount of data to find the information they are interested in. Search and recommendation technologies help people to acquire and process relevant data, and can better address information overload [4,5].

Due to the rapid development of the Internet, the film industry has ridden the wave of technology and grown quickly: the number of films has increased significantly, their variety has become more diverse, and their quality has improved [6]. A wealth of movie resources is readily available on major search engines. However, because of this wide variety, users cannot quickly find the movies that interest them most. It is therefore essential to explore users’ potential needs based on their hobbies and behaviours and to make personalized movie recommendations accordingly [7,8,9]. To help users find movies of interest efficiently, recommendation systems have attracted the attention of many researchers. A movie recommendation system helps users overcome the obstacle of finding movies that meet their needs among a large number of movies [10] and recommends only the movies that suit them, thereby achieving personalized recommendation. With limited resources, better algorithms need to be explored that accurately and efficiently extract user features and movie features, analyse user interests and needs, and achieve targeted, personalized movie recommendations [11].

In recent years, recommender systems have been studied extensively. Ref. [12] investigated a hybrid recommendation system for heart disease diagnosis. Ref. [13] studied personalized mobile news recommendation systems. Ref. [14] mined practical features through statistical analysis of users’ shopping behaviour in e-commerce and predicted their shopping behaviour in the coming month by constructing random forest, logistic regression, and support vector machine models. Ref. [15] proposed a “state-behaviour” model that systematically describes and accurately models users’ information behaviour in e-commerce, which is vital for guiding platform recommendation strategies and marketing programs. Ref. [16] described how to build a microservice recommendation system for online news, which provides an initial menu of topics for users to choose from in order to determine their initial preferences. Ref. [17] presented a deep-learning-based personalized product recommendation model for automated question-answering bots that is better adapted to their practical needs. Ref. [18] proposed a personalized music recommendation method based on big data analysis that integrates user behaviour, behavioural context, user information, and music work information; it improves a collaborative filtering recommendation algorithm based on users’ behaviour and calculates the semantic similarity between lyrics and the co-occurrence similarity of songs from users’ music download history. Ref. [19] proposed a Chinese news text classification method based on a combined convolutional neural network (combined-CNN) and applied it to implement a personalized recommendation system. Ref. [20] analysed the connections between users, items, and tags in music social networks and implemented a personalized music recommendation algorithm. Ref. [21] proposed a personalized recommendation algorithm based on deep learning, using a gated recurrent unit (GRU) network as the main model in order to reduce the overfitting of multilayer networks, and introduced an attention mechanism into the GRU network so that the model obtains more accurate feature information from user data and reduces the influence of irrelevant data. These studies explore sub-domains of recommendation systems and provide a useful reference for research on personalized movie recommendation systems.

Traditional recommendation algorithms, such as collaborative filtering, use only users’ ratings of movies: they calculate user similarity or movie similarity from the ratings alone and then make recommendations, without considering user characteristics such as gender, age, and occupation, or movie characteristics such as title and release time [4,22]. Both user and movie characteristics affect users’ interest preferences, which in turn influence their ratings of movies. Most current improvements to traditional collaborative filtering still consider only the user rating. Jie Zhang et al. [23] proposed a fusion collaborative filtering recommendation algorithm based on the entropy of rating information, which improved the calculation of similarity between users and made the calculated similarity more accurate, thus making the recommendation more effective. Ziyan Zhang et al. [24] proposed an improved collaborative filtering recommendation algorithm that adds user preferences for categories, and their experimental results showed an enhanced recommendation effect. Honglin Chu et al. [25] proposed the JSD-AC similarity calculation method, which improved the traditional adjusted cosine similarity and fused an improved Jensen-Shannon (JS) divergence into the similarity calculation. Jinming Yu et al. [26] proposed a new similarity measure between items, IPSS, which addresses the cold start problem. In summary, traditional methods such as collaborative filtering and matrix factorization use only user rating information and leave user and item attribute information unused, so their user and item feature representations are not accurate enough. Therefore, this work fuses user and movie features to predict movie ratings and then recommends movies to users.

To achieve personalized movie recommendations, this paper proposes a movie recommendation algorithm based on a multi-feature attention mechanism with deep neural networks and convolutional neural networks. The innovative points and contributions of this paper are as follows. (1) In order to better learn the user and movie feature representation, this paper adds user and movie features by considering the information of the user’s and movie’s own attributes. (2) In order to make the movie rating prediction more accurate, this paper first obtains the importance of features and then proposes a feature attention mechanism to give different weights to each feature so that different features can contribute differently to the movie rating prediction. (3) In order to make the extracted text features more accurate, this paper proposes a convolutional neural network plus an attention mechanism fusion model to extract text features. (4) Two deep neural networks, one learning user features, named the user network, and one learning movie features, named the movie network, are designed to learn user and movie features, respectively. The experimental results show that the algorithm is effective and achieves good results in terms of accuracy.

2. Related Work

A convolutional neural network, a representative model structure for deep learning, is a deep neural network inspired by the biological brain and simulates the activity of the visual nerve centre, commonly used to process images, text, and some unstructured data, among others [27]. The structural feature of convolutional neural networks with shared weights makes the computational scale during training much lower, and it has been applied to image recognition, semantic segmentation, and non-image aspects, such as natural language processing. Convolutional neural networks have also been applied to tasks such as text classification and language recognition [28]. The convolutional neural network mainly includes an input layer, hidden layer, and an output layer, where the hidden layer is divided into a convolutional layer, pooling layer, and fully connected layer.

The core of a convolutional neural network is the convolutional layer, which applies the convolution operation to its input. This simulates the response of visual neurons in the brain to visual stimuli, and the computed results play a role similar to that of neurotransmitters in information transfer [27]. The convolutional layer consists of a set of convolutional kernels, also called filters. Each kernel generally covers only a small region of the input, and the size of this region is the receptive field of the kernel. The concept of the receptive field derives from visual neurons, which respond only to stimuli within the area they control. By sliding the kernel with a given stride and padding the input, the receptive field is moved across the entire input; adjacent positions may overlap, and each kernel extends through all channels of the input in depth.

The pooling layer performs a sampling-based discretization: it non-linearly downsamples the input, which contains the features of local regions, in order to reduce its dimensionality and thus the number of parameters and the computational complexity [29]. Pooling has no parameters to update, and average pooling and maximum pooling are the two most common types. The role of the pooling layer is to aggregate information. For example, a pooling window moved with a stride of 2 halves the height and width of the data by aggregating the values within the window, usually by taking their maximum or their average.
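The halving effect described above can be illustrated with a minimal NumPy sketch. It assumes a 2 × 2 window with a stride of 2 on a single-channel feature map with even height and width; the function names are illustrative and do not come from the paper.

```python
import numpy as np

def max_pool_2x2(feature_map: np.ndarray) -> np.ndarray:
    """Max pooling with a 2x2 window and stride 2 on an (H, W) array (H, W even)."""
    h, w = feature_map.shape
    # Split the map into non-overlapping 2x2 blocks, then reduce each block.
    blocks = feature_map.reshape(h // 2, 2, w // 2, 2)
    return blocks.max(axis=(1, 3))

def avg_pool_2x2(feature_map: np.ndarray) -> np.ndarray:
    """Average pooling with the same window and stride."""
    h, w = feature_map.shape
    blocks = feature_map.reshape(h // 2, 2, w // 2, 2)
    return blocks.mean(axis=(1, 3))

feature_map = np.arange(16, dtype=float).reshape(4, 4)
pooled_max = max_pool_2x2(feature_map)  # shape (2, 2): height and width are halved
pooled_avg = avg_pool_2x2(feature_map)
```

Neither function has trainable parameters, which matches the statement that pooling takes no part in parameter updates.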

The fully connected layer dimensionally transforms the data that has passed through the convolutional and pooling layers. The output data of the convolutional and pooling layers represent the high-level features of the original data, and the individual dimensional metrics represent the depth features of the original data. This layer has a large number of parameters to learn the non-linear way of combining these features [28].

The attention mechanism was initially derived from the brain’s attention and is a method based on human intuition [30]. People’s attention tends to focus more on what interests them, and what makes an item memorable is that it has characteristics that set it apart from other items. The attention mechanism is usually used between the input and output layers of a neural network, which simulates the changes in visual attention when humans observe objects. The main idea of the attention mechanism is to filter out useless or unimportant feature information as much as possible when processing many features and focus on some features that are most critical to the target task, and different weights can be assigned to each feature so that the critical feature information can be more prominent [22,29,31,32,33].

Attentional mechanisms have been shown to be powerful in the field of NLP. The role of the attention mechanism is to assign weights to the parts of the input being processed, with the more important parts being assigned greater weights. The attention mechanism, which first appeared in machine translation tasks, functions in the analysis of utterance components, and the output is an estimate of the weight of each element of the utterance [31,34].

In the movie recommendation scenario, different features of users and movies affect users’ ratings to different degrees. Weights can therefore be assigned to the user and movie features to represent their importance, with high weights indicating high importance and low weights indicating low importance [22]. For example, younger users may rate animated movies higher, in which case the age feature matters more to the rating than the gender or occupation feature. Similarly, for users who like horror movies, the movie subject matter is more critical to the rating. Introducing an attention mechanism therefore makes it possible to focus on the features that matter most to users’ ratings by assigning them higher weights, while giving less attention to features of low importance [34].

The collaborative filtering recommendation algorithm is one of the most widely used and successful techniques in recommender systems [35,36,37,38]. It assumes that users with similar preferences will like similar items, or that similar items will be liked by similar users. The core idea is to make recommendations based on nearest neighbours [39]: historical behavioural data are used to calculate the similarity between users or between items, and items are then recommended to target users accordingly. This technique can be implemented completely independently of item attributes; it is a memory-based approach that relies on users’ historical behaviour data and uses collective intelligence to solve the problem [40]. It can identify potential but undiscovered interests of users and provide them with novel items, thus enhancing their experience. The algorithm derives associations between users or between items from users’ interest preferences and then fuses this association information to predict users’ interaction behaviour. Collaborative filtering recommendation algorithms include user-, item-, and model-based recommendations.

User-based recommendations rest on the fundamental assumption that users in the same user group tend to choose similar items. The similarity between users can be measured in terms of demographic characteristics, such as age, gender, and occupation, or in terms of historical behavioural records, such as clicking or following the same type of items, or even giving the same rating to certain items. Item-based recommendations rest on the fundamental assumption that items of the same class tend to be interacted with by similar users; the similarity between items is calculated not from the items themselves but from users’ historical interactions with them. Model-based recommendations broadly refer to the many recommendation algorithms that use theories and tools from machine learning and data mining and can model a variety of recommendation scenarios [27].
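As a rough illustration of the user-based variant, the sketch below predicts a missing rating as a similarity-weighted mean over the nearest neighbours. The cosine similarity over co-rated items, the toy rating matrix, and the convention that 0 means "unrated" are assumptions made for this example, not choices taken from the paper.

```python
import numpy as np

def cosine_similarity(a: np.ndarray, b: np.ndarray) -> float:
    """Cosine similarity over the items both users have rated (0 = unrated)."""
    mask = (a > 0) & (b > 0)
    if not mask.any():
        return 0.0
    a, b = a[mask], b[mask]
    return float(a @ b / (np.linalg.norm(a) * np.linalg.norm(b)))

def predict_rating(ratings: np.ndarray, user: int, item: int, k: int = 2) -> float:
    """Similarity-weighted mean of the ratings given to `item` by the k nearest users."""
    candidates = [u for u in range(ratings.shape[0])
                  if u != user and ratings[u, item] > 0]
    if not candidates:
        return 0.0  # fallback used in this sketch when nobody has rated the item
    sims = np.array([cosine_similarity(ratings[user], ratings[u]) for u in candidates])
    top = np.argsort(sims)[::-1][:k]          # indices of the k most similar users
    neighbours = np.array(candidates)[top]
    return float(sims[top] @ ratings[neighbours, item] / sims[top].sum())

# Rows are users, columns are movies.
ratings = np.array([
    [5, 4, 0, 1],
    [4, 5, 3, 1],
    [1, 1, 5, 4],
], dtype=float)

predicted = predict_rating(ratings, user=0, item=2, k=2)
```

User 0 rates much like user 1, so the prediction is pulled towards user 1’s rating of 3 rather than user 2’s rating of 5. Only the rating matrix is used, which is exactly the limitation the paper sets out to address.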

A deep neural network is a feed-forward neural network [41] trained with the error backpropagation (BP) algorithm. Because it has multiple hidden layers and uses non-linear activation functions, it has a strong capability to fit and learn complex functions. Deep neural networks use the BP algorithm to continuously adjust the weight parameters in the network so that the loss function reaches a minimum [42]. Training includes forward propagation of the signal and backpropagation of the error. In forward propagation, the input signal passes from the input layer through the hidden layers to the output layer, and the state of the neurons in each layer affects only the state of the neurons in the next layer, not that of other neurons in the same layer. If the output layer does not produce the desired output, the network backpropagates the error: the error signal is propagated backwards through the network, and the error is continuously reduced by modifying the weights between the neurons in each layer [43]. Deep neural networks generally include an input layer, hidden layers, and an output layer. Each layer comprises multiple neurons, adjacent layers are fully connected, neurons within the same layer are not connected to each other, and there are generally multiple hidden layers.
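The forward and backward passes can be sketched for a network with a single hidden layer. The synthetic data, layer sizes, learning rate, and mean squared error loss below are assumptions chosen for illustration and are not the configuration used in the paper.

```python
import numpy as np

rng = np.random.default_rng(0)
X = rng.normal(size=(32, 4))                      # 32 samples, 4 input features
y = X @ np.array([[1.0], [-2.0], [0.5], [0.0]])   # synthetic target, shape (32, 1)

W1 = rng.normal(scale=0.5, size=(4, 8))           # input layer -> hidden layer
b1 = np.zeros(8)
W2 = rng.normal(scale=0.5, size=(8, 1))           # hidden layer -> output layer
b2 = np.zeros(1)
learning_rate = 0.02
losses = []

for step in range(300):
    # Forward propagation: input -> hidden (ReLU) -> output.
    hidden_pre = X @ W1 + b1
    hidden = np.maximum(hidden_pre, 0.0)
    y_hat = hidden @ W2 + b2
    error = y_hat - y
    losses.append(float(np.mean(error ** 2)))

    # Backpropagation: push the error signal back layer by layer.
    grad_y_hat = 2.0 * error / len(X)
    grad_W2 = hidden.T @ grad_y_hat
    grad_b2 = grad_y_hat.sum(axis=0)
    grad_hidden = (grad_y_hat @ W2.T) * (hidden_pre > 0)  # ReLU derivative
    grad_W1 = X.T @ grad_hidden
    grad_b1 = grad_hidden.sum(axis=0)

    # Gradient descent step on every weight and bias.
    W1 -= learning_rate * grad_W1
    b1 -= learning_rate * grad_b1
    W2 -= learning_rate * grad_W2
    b2 -= learning_rate * grad_b2
```

Tracking `losses` across iterations shows the error shrinking as the weights are adjusted, which is the behaviour the paragraph describes.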

3. The Proposed Algorithm in This Paper

3.1. Overall Design of the Algorithm

Traditional collaborative filtering recommendation algorithms make recommendations for users based on their historical behavioural data, which only focuses on single explicit feature information of users’ rating data and cannot learn the deeper implicit non-linear feature information in the data, such as the user’s age, user’s occupation, movie’s subject matter, and other feature information [4]. The collaborative filtering recommendation algorithm has a significant head effect on the recommendation results and is weak in handling sparse vectors [44]. The collaborative filtering recommendation algorithm only utilizes information about the interaction between the user and the movie, i.e., the movie rating, and does not utilize information about the user’s own attributes, such as the user’s age, occupation, etc., or the movie’s own attributes, such as the movie’s title or subject matter, which undoubtedly results in the omission of valid information [22]. Although the function of recommendation can also be achieved by using a single dominant feature information, the result of recommendation is often less accurate and cannot meet the personalized needs of users. Therefore, this paper uses the attribute information of users, movies, and the movie rating information to design two neural networks, one for learning user features, named the user network, and one for learning movie features, named the movie network.

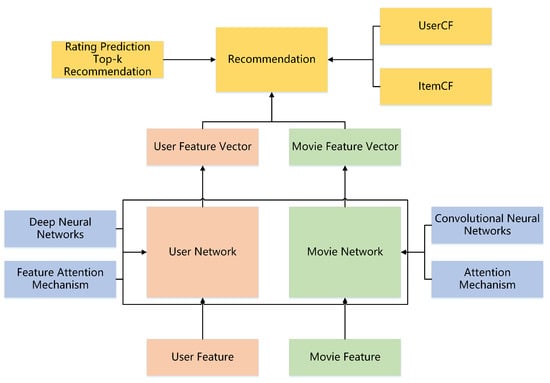

The user network uses a deep neural network to learn user features: its input is the attribute features of the user, and its output is the user feature vector. To focus on the critical user attribute information, a feature attention mechanism reinforces the important features. The movie network uses a deep neural network and a convolutional neural network to learn movie features: its input is the attribute features of the movie, and its output is the movie feature vector. The convolutional neural network processes the movie title, and an attention mechanism is added to it to make the extracted title features more accurate. As in the user network, a feature attention mechanism strengthens the important movie attribute information. After the user and movie feature vectors are obtained, the movie rating is fitted using the dot product of the two vectors. During training, the predicted ratings are compared with the real user ratings, the errors are backpropagated, and the model parameters are continuously adjusted and optimized, finally yielding a user feature vector that characterizes the user and a movie feature vector that characterizes the movie. With these feature vectors, three movie recommendation schemes are given: a top-k recommendation based on predicted ratings, a user-based collaborative filtering recommendation, and a movie-based collaborative filtering recommendation. The overall design structure of the algorithm is shown in Figure 1.

Figure 1.

General algorithm design structure diagram. User and movie features are passed through the user and movie networks to generate user and movie feature vectors, respectively, and then the recommendation strategy is applied.
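The rating prediction and the top-k scheme can be sketched as follows, assuming the user and movie feature vectors have already been learned. The two-dimensional toy vectors and the function names are hypothetical, and the product of the vectors is taken to be the ordinary dot product.

```python
import numpy as np

def predict_ratings(user_vec: np.ndarray, movie_vecs: np.ndarray) -> np.ndarray:
    """Predicted rating of every movie: dot product of user and movie vectors."""
    return movie_vecs @ user_vec

def top_k(user_vec: np.ndarray, movie_vecs: np.ndarray, k: int, seen=()) -> list:
    """Indices of the k movies with the highest predicted rating, skipping seen ones."""
    scores = predict_ratings(user_vec, movie_vecs).astype(float)
    if seen:
        scores[list(seen)] = -np.inf   # never recommend movies already rated
    return np.argsort(-scores)[:k].tolist()

user_vec = np.array([1.0, 0.5])
movie_vecs = np.array([
    [4.0, 0.0],
    [1.0, 2.0],
    [0.0, 1.0],
    [3.0, 3.0],
])

recommended = top_k(user_vec, movie_vecs, k=2, seen=[3])
```

The same vectors could also feed the two collaborative filtering schemes, for example by ranking users or movies by the similarity of their feature vectors.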

3.2. User Network Architecture Design

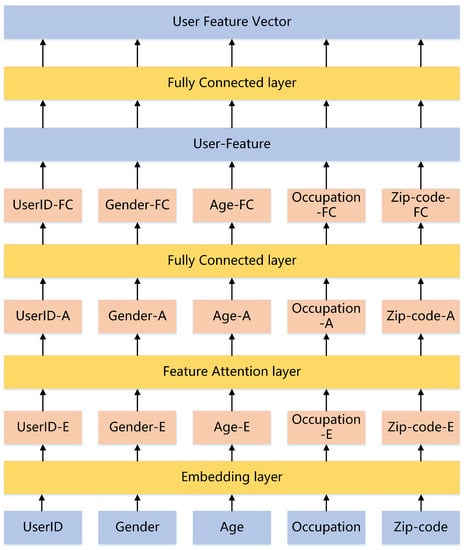

The inputs to the user network are user attribute features such as user ID, gender, age, occupation, and zip code. Each categorical input feature first passes through an embedding layer to become a dense feature vector. The features then pass through the feature attention layer, which dynamically learns the importance of each feature and assigns it a weight, amplifying important user features with large weights and suppressing uninformative ones with small weights. Next, each feature passes through a fully connected layer to obtain a highly abstract representation. Finally, the features are concatenated into a single vector, which passes through another fully connected layer to form the user feature vector. The user network structure diagram is shown in Figure 2.

Figure 2.

User network structure diagram. User features are sequentially passed through the embedding layer, feature attention layer, and fully connected layer to generate user feature vectors.

The feature attention layer first finds the weight of each user feature and then multiplies each weight by the corresponding feature vector, thus completing the weighting of the features by importance. Finding the weights can be divided into two steps. Step one is the compression of the feature vectors, whose dimensionalities may differ: the mean of each feature vector is computed, which compresses each user feature vector to a single value and aggregates its information. These values are then concatenated into one vector whose dimensionality equals the number of user attribute features, which is five here. Step two passes this vector through two fully connected layers. The first layer has three neurons, deliberately fewer than the five input features. It is mainly used for feature crossover: through it, the user attribute features interact fully with one another, so that which features are important is determined dynamically from their interrelations. The second fully connected layer mainly ensures that the output has the same dimension as the number of user attribute features, so it has five neurons. After the mapping of the two fully connected layers, a weight vector with the same number of dimensions as the number of user attribute features is generated.

Suppose the user’s ith attribute feature vector is $u_i = (u_{i,1}, \dots, u_{i,m})$, where $m$ is the dimension of the vector. The compressed information value $z_i$ of the user’s ith attribute feature is the mean of its elements, as shown in Equation (1):

$$z_i = \frac{1}{m}\sum_{j=1}^{m} u_{i,j} \tag{1}$$

Let $x = [z_1, z_2, \dots, z_5]$ be the vector stitched from the compressed information values of the user’s attribute features. The feature weight vector $a$ is calculated as shown in Equation (2):

$$a = \mathrm{Softmax}\left(W_2\,\mathrm{ReLU}\left(W_1 x\right)\right) \tag{2}$$

where $a = [a_1, a_2, \dots, a_5]$, $a_i$ denotes the weight of the user’s ith attribute feature, and $W_1$ and $W_2$ are the weight matrices of the first and second fully connected layers (bias terms are omitted for brevity). The first fully connected layer uses the ReLU activation function, and the second fully connected layer uses the Softmax activation function.

The user feature vector with attention, $\tilde{u}_i$, is obtained by multiplying the ith attribute feature weight $a_i$ by the ith attribute feature vector $u_i$, as shown in Equation (3):

$$\tilde{u}_i = a_i \cdot u_i \tag{3}$$
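The three steps of the feature attention layer (mean compression, two fully connected layers, reweighting) can be sketched in NumPy. The embedding sizes, the random weight matrices standing in for trained parameters, and the omission of bias terms are assumptions made for this illustration.

```python
import numpy as np

def softmax(z: np.ndarray) -> np.ndarray:
    e = np.exp(z - z.max())            # subtract the max for numerical stability
    return e / e.sum()

def feature_attention(features, W1, W2):
    """Return the attention weights and the reweighted feature vectors."""
    # Step 1: compress each feature vector to its mean and stitch the means together.
    x = np.array([f.mean() for f in features])
    # Step 2: a fully connected layer with ReLU, then one with Softmax.
    hidden = np.maximum(W1 @ x, 0.0)
    weights = softmax(W2 @ hidden)
    # Step 3: multiply every feature vector by its own scalar weight.
    weighted = [w * f for w, f in zip(weights, features)]
    return weights, weighted

rng = np.random.default_rng(0)
dims = [32, 16, 16, 16, 32]            # hypothetical embedding sizes of the 5 user features
features = [rng.normal(size=d) for d in dims]
W1 = rng.normal(size=(3, 5))           # first layer: 5 inputs -> 3 neurons
W2 = rng.normal(size=(5, 3))           # second layer: 3 inputs -> 5 neurons

weights, weighted = feature_attention(features, W1, W2)
```

Because the second layer ends in a Softmax, the five weights are positive and sum to one, so the layer redistributes emphasis among the features rather than rescaling them all at once. The same function would serve the movie network with four features and a two-neuron first layer.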

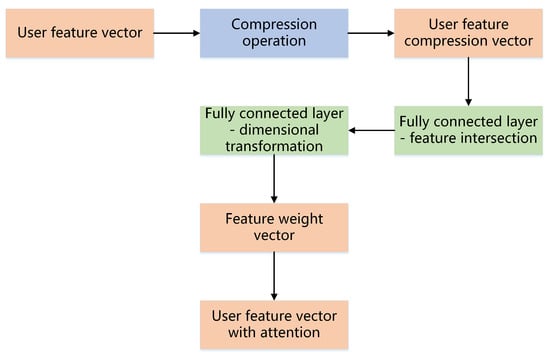

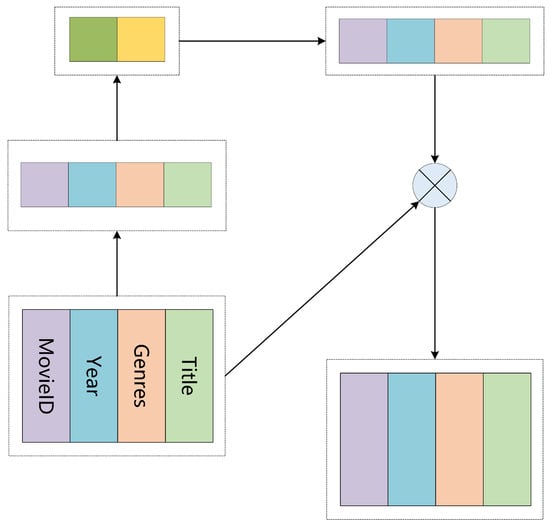

The flow chart of user network feature attention layer processing is shown in Figure 3, and the schematic diagram of user network attention calculation is shown in Figure 4.

Figure 3.

User network feature attention layer processing flow chart. The user feature vectors are first compressed into a user feature compression vector, which is then mapped to a feature weight vector; the weights are finally applied to obtain the user feature vectors with attention.

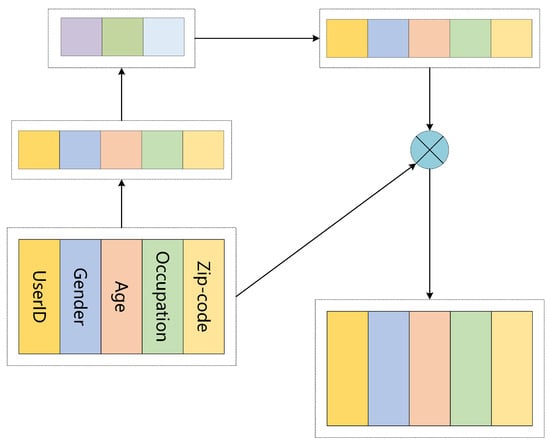

Figure 4.

User network attention calculation schematic. Attention scores are first computed from the user features and then multiplied element-wise with the user features to obtain the user feature vectors with attention.

3.3. Movie Network Architecture Design

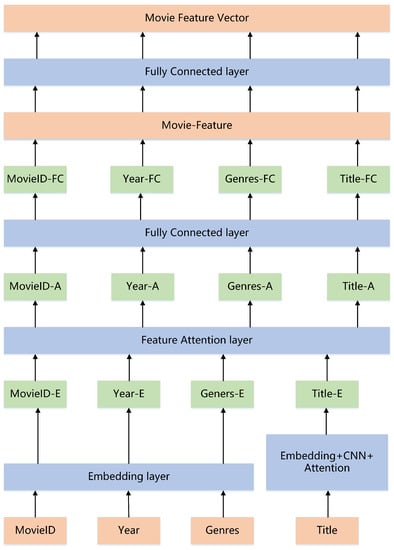

The inputs to the movie network are movie attribute features such as movie ID, title, and release date. First, the different types of input features are processed separately. Categorical features are transformed into dense feature vectors through the embedding layer; text features are transformed into dense feature matrices through word segmentation and encoding and then passed through the convolutional neural network with attention to obtain text feature vectors; and multi-category features are transformed into feature vectors through multi-hot encoding. Second, the processed features go through the feature attention layer, which dynamically learns the importance of each feature and assigns it a weight, amplifying important movie features with large weights and suppressing uninformative ones with small weights; each feature then goes through a fully connected layer to obtain a highly abstract representation. Finally, the features are concatenated into a single vector, which goes through a fully connected layer to form the movie feature vector. The structure diagram of the movie network is shown in Figure 5.

Figure 5.

Movie network structure diagram. The title feature in movie features goes through the CNN layer, other features go through the embedding layer, and then through the feature attention and fully connected layers in turn to generate a movie feature vector.
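Multi-hot encoding of a multi-category feature can be illustrated with a short sketch. The four-genre vocabulary below is hypothetical; a real dataset would supply its own category list.

```python
import numpy as np

GENRES = ["Action", "Comedy", "Drama", "Horror"]   # hypothetical category vocabulary
GENRE_INDEX = {genre: i for i, genre in enumerate(GENRES)}

def multi_hot(genres) -> np.ndarray:
    """Encode a set of categories as a 0/1 vector with one position per category."""
    vec = np.zeros(len(GENRES), dtype=np.float32)
    for genre in genres:
        vec[GENRE_INDEX[genre]] = 1.0
    return vec

encoded = multi_hot(["Comedy", "Horror"])
```

Unlike one-hot encoding, several positions can be set at once, which suits a movie that belongs to more than one category.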

The feature attention layer first finds the weight of each movie feature and then multiplies each weight by the corresponding feature vector, thus completing the weighting of the features by importance. Finding the weights can be divided into two steps. Step one is the compression of the feature vectors, whose dimensionalities may differ: the mean of each feature vector is computed, which compresses each movie feature vector to a single value and aggregates its information. These values are then concatenated into one vector whose dimensionality equals the number of movie attribute features, which is four here. Step two passes this vector through two fully connected layers. The first layer has two neurons, deliberately fewer than the four input features. It is mainly used for feature crossover: through it, the movie attribute features interact fully with one another, so that which features are important is determined dynamically from their interrelations. The second fully connected layer mainly ensures that the output has the same dimension as the number of movie attribute features, so it has four neurons. After the mapping of the two fully connected layers, a weight vector with the same number of dimensions as the number of movie attribute features is generated.

Suppose the ith attribute vector of the movie is $v_i = (v_{i,1}, \dots, v_{i,m})$, where $m$ is the dimension of the vector. The compressed information value $z_i$ of the ith attribute of the movie is the mean of its elements, as shown in Equation (4):

$$z_i = \frac{1}{m}\sum_{j=1}^{m} v_{i,j} \tag{4}$$

Let $x = [z_1, z_2, z_3, z_4]$ be the vector formed by the compressed information values of the movie’s attribute features. The feature weight vector $a$ is calculated as shown in Equation (5):

$$a = \mathrm{Softmax}\left(W_2\,\mathrm{ReLU}\left(W_1 x\right)\right) \tag{5}$$

where $a = [a_1, a_2, a_3, a_4]$, $a_i$ denotes the weight of the ith attribute of the movie, and $W_1$ and $W_2$ are the weight matrices of the first and second fully connected layers (bias terms are omitted for brevity). The first fully connected layer uses the ReLU activation function and the second fully connected layer uses the Softmax activation function.

The movie feature vector with attention, $\tilde{v}_i$, is obtained by multiplying the ith attribute feature weight $a_i$ by the ith attribute feature vector $v_i$, as shown in Equation (6):

$$\tilde{v}_i = a_i \cdot v_i \tag{6}$$

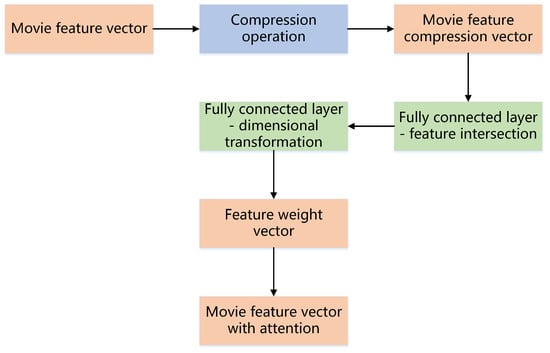

The flowchart of movie network feature attention layer processing is shown in Figure 6, and the schematic diagram of movie network attention calculation is shown in Figure 7.

Figure 6.

Movie network feature attention layer processing flow chart.

Figure 7.

Calculation diagram of movie network attention.

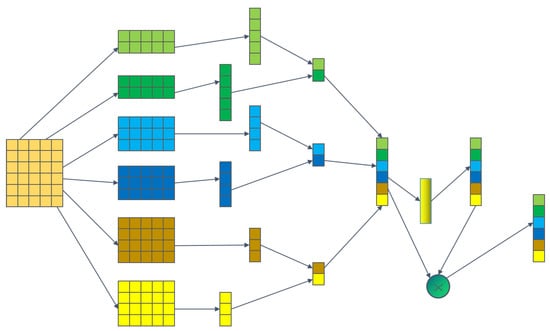

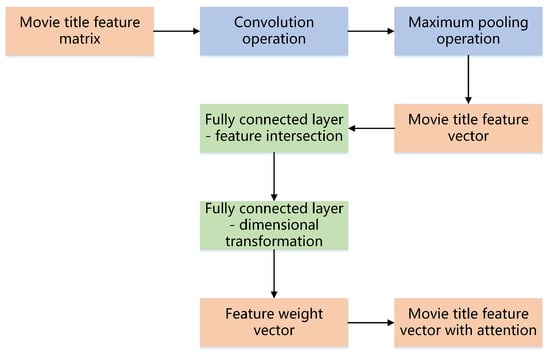

The movie title is a textual feature, and this paper extracts textual features using a convolutional neural network with an added attention mechanism [45]. The input of this module is the feature matrix of a movie title, in which each row is a word vector and the number of rows is the number of words in the title. During data processing, the number of words in the titles is unified across samples: the longest movie title is used as the benchmark, and shorter titles are padded to that length, so that the titles of all samples have the same length.
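The length unification can be sketched as follows. The whitespace tokenizer, the `<PAD>` token, and the on-the-fly vocabulary are simplifying assumptions for this example; the paper does not specify these details.

```python
PAD = "<PAD>"   # hypothetical padding token

def pad_titles(titles):
    """Tokenize titles on whitespace and pad each one to the longest title's length."""
    tokenized = [title.lower().split() for title in titles]
    max_len = max(len(tokens) for tokens in tokenized)
    return [tokens + [PAD] * (max_len - len(tokens)) for tokens in tokenized]

def encode(padded):
    """Map every token to an integer id; id 0 is reserved for padding."""
    vocab = {PAD: 0}
    ids = [[vocab.setdefault(token, len(vocab)) for token in tokens] for tokens in padded]
    return ids, vocab

padded = pad_titles(["Toy Story", "The Lion King", "Heat"])
ids, vocab = encode(padded)
```

Each row of `ids` now has the same length, so looking up a word vector for every id yields feature matrices of identical shape for all titles.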

When processing image data, the convolutional kernels used in convolutional neural networks typically have equal height and width, but in the text domain the width of the convolutional kernel must equal the dimension of the word vector. This is because each row of the feature matrix represents a word vector, and the word is the minimum granularity of the text when extracting features; a kernel narrower than the word vector would cover only part of a word and would therefore no longer operate at word granularity. The height of the convolutional kernel can be chosen freely. Since a movie title is a sentence and adjacent words in a sentence are highly correlated, convolution is well suited to it, as it takes into account not only the meaning of each word but also its context.

After the size of the convolution kernel is specified, the convolution can be performed. The result of one convolution operation is obtained by multiplying the convolution kernel element-wise with the corresponding positions of the feature matrix and then summing. As the convolution kernel window slides, the feature matrix is convolved repeatedly, and finally a feature vector of a certain dimension is obtained. Kernels of several different heights can be used, with several kernels for each height. Next, the pooling operation is performed using the maximum pooling strategy, which takes the maximum value of the feature vector obtained by each kernel's convolution, until all pooling operations are completed. Finally, the values obtained by the convolution and pooling operations under each convolution kernel are concatenated into a vector that serves as the feature representation of the movie title; its dimension is the number of convolution kernels.
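A minimal sketch of this convolution and max pooling over a title matrix is given below. The kernel width equals the word-vector dimension, so the window only slides along the word axis. The ReLU activation follows the description of the convolution later in this section; the toy sentence, the kernel values, and the function names are assumptions for illustration, and every title is assumed to be at least as long as the tallest kernel.

```python
def conv_feature_map(sentence, kernel, bias=0.0):
    """Slide an h x l kernel over a k x l sentence matrix (one row per word)."""
    k, h = len(sentence), len(kernel)
    feature_map = []
    for j in range(k - h + 1):
        window = sentence[j:j + h]  # h consecutive word vectors
        total = sum(
            w * x
            for kernel_row, word in zip(kernel, window)
            for w, x in zip(kernel_row, word)
        )
        feature_map.append(max(0.0, total + bias))  # ReLU activation
    return feature_map


def title_features(sentence, kernels):
    """Max-pool each kernel's feature map and concatenate the pooled values."""
    return [max(conv_feature_map(sentence, kernel)) for kernel in kernels]


# Toy title: 4 words, each a 2-dimensional word vector.
sentence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]
kernels = [
    [[1.0, 0.0], [0.0, 1.0]],              # height 2
    [[1.0, 1.0], [1.0, 1.0], [1.0, 1.0]],  # height 3
]
pooled = title_features(sentence, kernels)  # one value per kernel
```

With these toy values the height-2 kernel produces the feature map `[2.0, 1.0, 1.0]`, and the pooled title representation is `[2.0, 4.0]`, one entry per kernel.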

After obtaining the feature representation of the movie title, an attention mechanism layer consisting of two fully connected layers is added so that the values obtained under different convolution kernels receive different weights; the weights are then multiplied onto the movie title feature representation vector to obtain the final movie title feature representation vector. The first fully connected layer performs feature crossover: the feature values obtained under each convolutional kernel are fully crossed to learn which of them are more important. The second fully connected layer keeps the dimension of the weight vector consistent with that of the movie title feature representation vector.

The input to the convolutional layer is a feature matrix of dimension $k \times l$ representing a sentence, i.e., each sentence has k words and each word is represented by an l-dimensional word vector. A sentence of k words is represented as $x_{1:k} = x_1 \oplus x_2 \oplus \cdots \oplus x_k$, where $x_j$ is the jth word vector and $\oplus$ denotes concatenation. A convolution kernel w of height h and width l is convolved with a window of h words, $x_{j:j+h-1}$, and the feature $c_j$ is then obtained through the ReLU activation function, where $c_j$ is the result of the jth convolution operation and b is a bias term, as shown in Equation (7).

$$c_j = \mathrm{ReLU}\big(w \cdot x_{j:j+h-1} + b\big) \tag{7}$$

After all the convolution operations under one convolution kernel are completed, a $(k-h+1)$-dimensional vector f is generated, $f = (c_1, c_2, \ldots, c_{k-h+1})$, where f is the vector formed by concatenating the feature values obtained from all the convolution operations under that kernel. Next comes the pooling operation, which uses the maximum pooling strategy shown in Equation (8). This completes the convolution and pooling operations under one convolution kernel and yields that kernel's feature value $\hat{c}$.

$$\hat{c} = \max(f) = \max\{c_1, c_2, \ldots, c_{k-h+1}\} \tag{8}$$

After the convolution and pooling operations under all convolution kernels are completed, all the obtained feature values are concatenated into a vector $p = (\hat{c}_1, \hat{c}_2, \ldots, \hat{c}_n)$, where n is the number of convolution kernels. Next is the attention layer, whose weights are calculated as shown in Equation (9): first, the vector p is passed through a fully connected layer so that the features obtained under each convolutional kernel are fully crossed; second, another fully connected layer is applied so that the dimensionality of the weight vector a equals the number of convolutional kernels. Here $W_3, b_3$ and $W_4, b_4$ denote the parameters of the two fully connected layers, and $g_1$ and $g_2$ denote their activation functions.

$$a = g_2\big(W_4\, g_1(W_3\, p + b_3) + b_4\big) \tag{9}$$

Finally, the Hadamard product of the weight vector a and the feature vector p obtained by the convolution and pooling operations gives the final movie title feature representation $\tilde{p}$, as shown in Equation (10).

$$\tilde{p} = a \odot p \tag{10}$$
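The attention step over the pooled title features can be sketched as below. The text does not state which activation functions the two fully connected layers use here, so ReLU and Softmax are assumed by analogy with the feature attention layer of the movie network; the weight values and function names are likewise illustrative assumptions.

```python
import math


def dense(x, weights, bias):
    """Fully connected layer; `weights` holds one row per output neuron."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]


def softmax(z):
    m = max(z)
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


def attend(p, w1, b1, w2, b2):
    """Return the attention weights and the Hadamard product of weights and p."""
    hidden = [max(0.0, h) for h in dense(p, w1, b1)]  # assumed ReLU (feature crossover)
    a = softmax(dense(hidden, w2, b2))                # assumed Softmax, one weight per kernel
    return a, [a_i * p_i for a_i, p_i in zip(a, p)]


p = [2.0, 4.0, 1.0]                          # pooled values from three hypothetical kernels
w1 = [[0.5, -0.2, 0.1], [0.3, 0.4, -0.6]]    # 3 inputs -> 2 neurons
b1 = [0.0, 0.0]
w2 = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]    # 2 inputs -> 3 neurons
b2 = [0.0, 0.0, 0.0]
a, weighted_p = attend(p, w1, b1, w2, b2)
```

The second layer has as many neurons as there are kernels, which is what makes the element-wise product with the pooled vector well defined.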

The schematic diagram of convolution, pooling, and attention calculation is shown in Figure 8, and the flow chart of movie title feature processing is shown in Figure 9.

Figure 8.

Convolution, pooling and attention calculation schematic.

Figure 9.

Movie title feature processing flowchart.

3.4. Movie Rating Prediction and Recommendation

The user and movie feature vectors are obtained from the user and movie networks, respectively, and the rating prediction is performed next. Here, the scalar (dot) product of the user and movie feature vectors is used to fit the movie rating, as shown in Equation (11), where $\hat{y}$ is the fitted movie rating, u is the user feature vector, and v is the movie feature vector.

$$\hat{y} = u \cdot v = \sum_{i} u_i v_i \tag{11}$$
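As a small worked example of this prediction step, the sketch below computes the scalar product of a user feature vector and a movie feature vector. The three-dimensional vectors and the function name `predict_rating` are hypothetical.

```python
def predict_rating(user_vec, movie_vec):
    """Fit a rating as the scalar product of the two feature vectors."""
    if len(user_vec) != len(movie_vec):
        raise ValueError("user and movie feature vectors must have the same dimension")
    return sum(u * v for u, v in zip(user_vec, movie_vec))


rating = predict_rating([1.0, 0.5, 2.0], [2.0, 1.0, 0.5])  # 2.0 + 0.5 + 1.0 = 3.5
```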

After obtaining the user and movie feature vectors that can accurately characterize users and movies, three movie recommendation schemes are given.

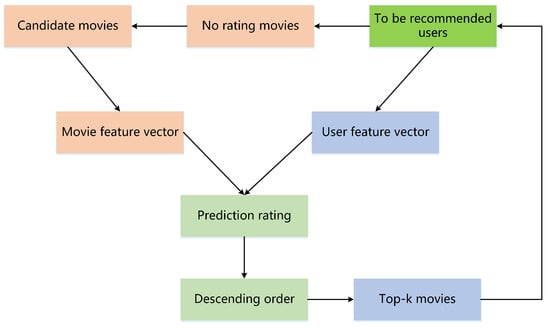

Scheme 1 is as follows. First, the candidate movies are selected: these are the movies that the user has not rated. Second, the rating of each candidate movie is predicted as the scalar product of the user's feature vector and the candidate movie's feature vector. The predicted ratings of the candidate movies are then sorted in descending order. Finally, top-k recommendations are made. The schematic diagram of the recommendation strategy of scheme 1 is shown in Figure 10.

Figure 10.

Scheme 1 recommended strategy diagram.
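Scheme 1 can be sketched in a few lines. The data structures (a dictionary of movie feature vectors and a set of already rated movie IDs), the toy vectors, and the function name are assumptions for illustration.

```python
def recommend_scheme1(user_vec, movie_vecs, rated_ids, k):
    """Predict ratings for unrated movies and return the top-k (movie_id, rating) pairs."""
    scored = [
        (movie_id, sum(u * v for u, v in zip(user_vec, vec)))
        for movie_id, vec in movie_vecs.items()
        if movie_id not in rated_ids  # candidates: movies without a rating from the user
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # descending predicted rating
    return scored[:k]


movie_vecs = {1: [5.0, 1.0], 2: [3.0, 2.0], 3: [4.0, 0.0], 4: [1.0, 9.0]}
top = recommend_scheme1([1.0, 0.0], movie_vecs, rated_ids={1}, k=2)
# Movie 1 is excluded because it is already rated; movies 3 and 2 score 4.0 and 3.0.
```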

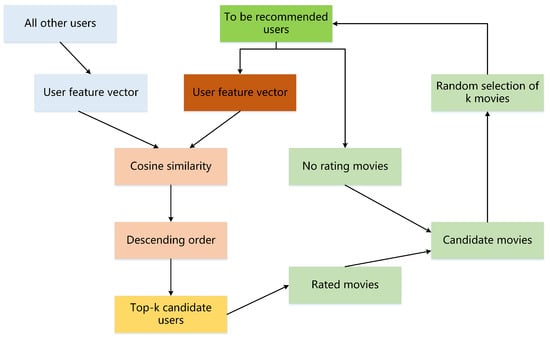

Scheme 2 is as follows. First, the similarity between the feature vector of the target user and the feature vectors of all other users is calculated, and the top-k most similar users are selected as candidate users. Then, k movies are randomly selected from those rated by the candidate users but not by the target user, and these are recommended. The schematic diagram of the recommendation strategy of scheme 2 is shown in Figure 11.

Figure 11.

Scheme 2 recommended strategy diagram.
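A minimal sketch of scheme 2 follows, using cosine similarity between user feature vectors. The user IDs, vectors, rating sets, and function names are hypothetical, and a random generator is passed in explicitly so that the random selection can be made reproducible.

```python
import math
import random


def cosine(a, b):
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


def recommend_scheme2(target, user_vecs, rated, k, rng):
    """Pick k random movies rated by the k most similar users but not by the target user."""
    ranked = sorted(
        (uid for uid in user_vecs if uid != target),
        key=lambda uid: cosine(user_vecs[target], user_vecs[uid]),
        reverse=True,
    )
    pool = set()
    for uid in ranked[:k]:  # candidate users
        pool |= rated[uid] - rated[target]
    pool = sorted(pool)
    return rng.sample(pool, min(k, len(pool)))


user_vecs = {"a": [1.0, 0.0], "b": [0.9, 0.1], "c": [0.0, 1.0], "d": [1.0, 0.2]}
rated = {"a": {1, 2}, "b": {2, 3}, "c": {4, 5}, "d": {1, 6}}
picks = recommend_scheme2("a", user_vecs, rated, k=2, rng=random.Random(0))
```

In this toy example users `b` and `d` are the two nearest neighbours of `a`, so the candidate pool is movies 3 and 6, and both are recommended.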

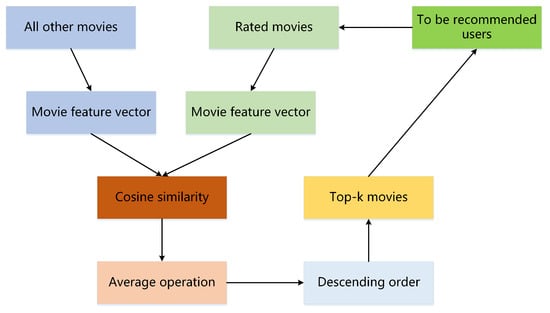

Scheme 3 is as follows. First, the similarity between each movie rated by the target user and all other movies is calculated. Second, for each of the other movies, its similarities to the movies rated by the target user are averaged. Then the top-k movies with the highest average similarity are recommended to the target user. The schematic diagram of the recommendation strategy of scheme 3 is shown in Figure 12.

Figure 12.

Scheme 3 recommended strategy diagram.
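Scheme 3 can be sketched in the same style: each unrated movie is scored by its average cosine similarity to the movies the target user has rated. The movie vectors and function names below are assumptions for illustration.

```python
import math


def cosine(a, b):
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return sum(x * y for x, y in zip(a, b)) / norm if norm else 0.0


def recommend_scheme3(rated_ids, movie_vecs, k):
    """Return the k unrated movies most similar, on average, to the user's rated movies."""
    scores = []
    for movie_id, vec in movie_vecs.items():
        if movie_id in rated_ids:
            continue
        avg = sum(cosine(vec, movie_vecs[r]) for r in rated_ids) / len(rated_ids)
        scores.append((avg, movie_id))
    scores.sort(reverse=True)  # highest average similarity first
    return [movie_id for _, movie_id in scores[:k]]


movie_vecs = {1: [1.0, 0.0], 2: [0.0, 1.0], 3: [1.0, 0.1], 4: [0.1, 1.0], 5: [1.0, 1.0]}
similar = recommend_scheme3({1}, movie_vecs, k=2)
# Movie 3 is almost parallel to the rated movie 1, followed by movie 5.
```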

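The similarity in schemes 2 and 3 is calculated using the cosine similarity, as shown in Equation (12), where u and v are the two feature vectors being compared: two user feature vectors in scheme 2 and two movie feature vectors in scheme 3.

$$\mathrm{sim}(u, v) = \frac{u \cdot v}{\lVert u \rVert\, \lVert v \rVert} \tag{12}$$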

4. Experimental Design and Analysis

4.1. Dataset Introduction

The MovieLens 1M dataset, collected by the GroupLens team at the University of Minnesota, is used for this experiment and can be found at http://files.grouplens.org/datasets/movielens/ (accessed on 13 September 2022). The MovieLens 1M dataset contains 1,000,209 ratings of 3883 movies by 6040 users, together with user attribute and movie attribute information. The user attribute information includes user ID, gender, age, occupation, postal code, etc. The movie attribute information includes movie ID, title, release time, etc. The rating information includes user ID, movie ID, movie rating, rating time, etc.
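For readers who want to inspect the rating records, the sketch below parses one line of the ratings file. It assumes the `::`-separated layout `UserID::MovieID::Rating::Timestamp` described in the dataset's README; the `Rating` type, the function name, and the sample line are illustrative only.

```python
from typing import NamedTuple


class Rating(NamedTuple):
    user_id: int
    movie_id: int
    rating: int
    timestamp: int


def parse_rating(line):
    """Parse one '::'-separated rating record (assumed layout, see lead-in)."""
    user_id, movie_id, rating, timestamp = line.strip().split("::")
    return Rating(int(user_id), int(movie_id), int(rating), int(timestamp))


sample = parse_rating("1::1193::5::978300760\n")  # illustrative sample line
```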

4.2. Experimental Environment Introduction

This experiment uses Python 3.6 as the development language and Jupyter Notebook as the development tool. The deep learning platform is TensorFlow 2, with Keras as the development library for the model. The experiments were run on a laptop with 64-bit Windows 10 as the operating system, 16.0 GB of memory, and an Intel(R) Core(TM) i5-7200U processor.

4.3. Evaluation Metrics

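In order to verify the accuracy and validity of the model predictions, five indicators, MSE (mean square error), MAE (mean absolute error), MAPE (mean absolute percentage error), $R^2$ (coefficient of determination), and RMSE (root mean square error), were selected for evaluation [46]. The smaller the values of MSE, MAE, and RMSE, the closer MAPE is to 0, and the closer $R^2$ is to 1, the better the model fits the movie ratings and the more accurately it predicts them. The MSE, MAE, MAPE, $R^2$, and RMSE evaluation indicators are calculated as shown in Equations (13)–(17), where the predicted value is $\hat{y}_i$, the true value is $y_i$, $\bar{y}$ is the mean of the true values, and the number of samples is n.

$$MSE = \frac{1}{n}\sum_{i=1}^{n} (\hat{y}_i - y_i)^2 \tag{13}$$

where its value range is $[0, +\infty)$, and the larger the value, the larger the error.

$$MAE = \frac{1}{n}\sum_{i=1}^{n} \lvert \hat{y}_i - y_i \rvert \tag{14}$$

where its value range is $[0, +\infty)$, and the larger the value, the larger the error.

$$MAPE = \frac{100\%}{n}\sum_{i=1}^{n} \left\lvert \frac{\hat{y}_i - y_i}{y_i} \right\rvert \tag{15}$$

where its value range is $[0, +\infty)$, and the larger the value, the larger the error.

$$R^2 = 1 - \frac{\sum_{i=1}^{n} (\hat{y}_i - y_i)^2}{\sum_{i=1}^{n} (\bar{y} - y_i)^2} \tag{16}$$

where its value range is $(-\infty, 1]$, and the closer the value is to 1, the smaller the error.

$$RMSE = \sqrt{\frac{1}{n}\sum_{i=1}^{n} (\hat{y}_i - y_i)^2} \tag{17}$$

where its value range is $[0, +\infty)$, and the larger the value, the larger the error.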
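The five indicators can be computed directly from their standard definitions, as in the minimal sketch below. The toy ratings and the function name are assumptions; MAPE is returned as a fraction rather than a percentage, and the true ratings are assumed to be non-zero and not all identical.

```python
import math


def regression_metrics(y_true, y_pred):
    """Compute MSE, MAE, MAPE, R2 and RMSE for paired true and predicted ratings."""
    n = len(y_true)
    errors = [p - t for t, p in zip(y_true, y_pred)]
    ss_res = sum(e * e for e in errors)
    mean_true = sum(y_true) / n
    ss_tot = sum((t - mean_true) ** 2 for t in y_true)
    mse = ss_res / n
    return {
        "MSE": mse,
        "MAE": sum(abs(e) for e in errors) / n,
        "MAPE": sum(abs(e / t) for e, t in zip(errors, y_true)) / n,  # as a fraction
        "R2": 1.0 - ss_res / ss_tot,
        "RMSE": math.sqrt(mse),
    }


metrics = regression_metrics([4.0, 3.0, 5.0, 2.0], [3.5, 3.0, 4.0, 3.0])
```

For these toy values the squared errors sum to 2.25 over four samples, so MSE is 0.5625 and RMSE is 0.75.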

4.4. Performance Comparison with Classical Machine Learning Models

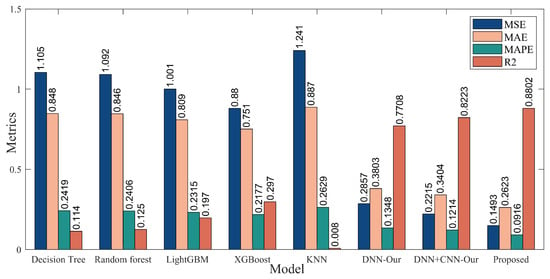

The performance of the DNN+CNN-attention+feature attention model proposed in this paper is compared with that of classical machine learning models such as decision tree, random forest, LightGBM, XGBoost, and KNN on the evaluation metrics; the results are shown in Figure 13.

Figure 13.

Performance comparison chart with classical machine learning models.

From the results in Figure 13, it can be seen that the model proposed in this paper achieves the best performance, outperforming decision tree, random forest, LightGBM, XGBoost, KNN, DNN-Our, and DNN+CNN-Our. The classical machine learning models (decision tree, random forest, LightGBM, XGBoost, and KNN) perform worse than the deep learning models on the evaluation metrics, which indicates that deep learning models can learn deep representations of features and have powerful learning capabilities. These classical models also perform poorly compared with the model proposed in this paper because they have difficulty handling textual features, which are poorly represented by label encoding; in the literature [47,48,49,50], the data used with these models do not contain textual features. In addition, the DNN+CNN model performs better than the DNN model, which indicates that the convolutional neural network learns accurate feature representations for the movie text features. The model proposed in this paper outperforms all of the above models, which shows that introducing the attention mechanism into the CNN assigns different weights to different learned features. Meanwhile, the feature attention mechanism proposed in this paper assigns different weights to the different input user and movie features, so that different features have different importance for movie ratings, verifying the effectiveness of our proposed model.

4.5. Performance Comparison with Some of the Major and Recent Models

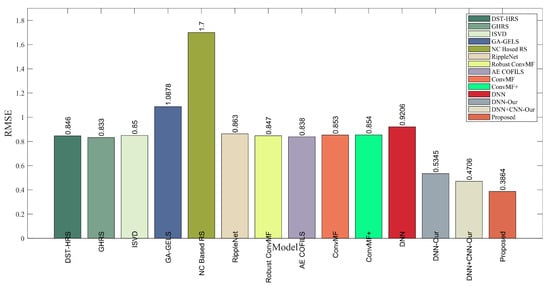

The model proposed in this paper was compared with some major approaches and some recent ones, and the performance comparison graph in terms of metrics is shown in Figure 14.

Figure 14.

Performance comparison chart with some major and recent models.

As can be seen from the results in Figure 14, the model proposed in this paper achieves the best performance, outperforming DST-HRS [51], GHRS [52], ISVD [53], GA-GELS [54], NC Based RS [55], RippleNet [56], Robust ConvMF [57], AE COFILS [58], ConvMF [59], ConvMF+ [59], DNN [60], DNN-Our, DNN+CNN-Our, and other models. DNN-Our performs better than DNN [60], indicating that the user and movie networks designed in this paper, which learn user and movie features separately to obtain the user and movie feature vectors, can effectively fit the movie ratings with the scalar product of these two vectors. DNN+CNN-Our performs better than DNN-Our, indicating that the movie title features learned by the convolutional neural network reflect the movie title information well. The model proposed in this paper also has clear advantages over DST-HRS [51], GHRS [52], ISVD [53], GA-GELS [54], NC Based RS [55], RippleNet [56], Robust ConvMF [57], AE COFILS [58], ConvMF [59], and ConvMF+ [59], which demonstrates that the user and movie networks proposed in this paper learn user and movie features effectively and that fitting movie ratings with the scalar product of the user and movie feature vectors is very effective. In addition, it shows that introducing an attention mechanism into the CNN assigns different weights to different learned features, avoiding the problem that all learned features have the same importance. Meanwhile, the feature attention mechanism proposed in this paper assigns different weights to the different input user and movie features, so that different features have different importance for movie ratings, fully validating the effectiveness of our proposed model.

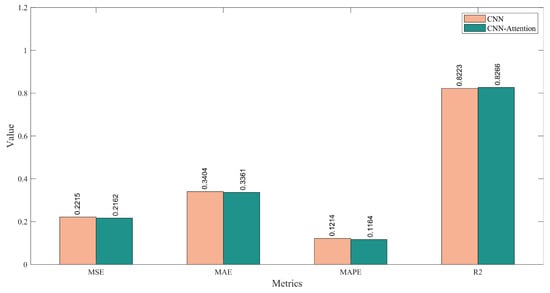

4.6. Performance Analysis of Text Feature Extraction Based on CNN-Attention

We designed experiments to further analyse the effect of the attention mechanism on the effectiveness of convolutional neural networks in extracting text features. Figure 15 compares convolutional neural networks with and without the attention mechanism on the movie rating evaluation metrics. From the experimental results in Figure 15, we can see that the convolutional neural network with the attention mechanism outperforms the plain convolutional neural network on these metrics. This shows that introducing the attention mechanism into the convolutional neural network assigns different weights to different learned text features, avoiding the problem that all text features learned by the model carry the same weight, and thus makes the learned text features more accurate.

Figure 15.

CNN-Attention and CNN performance comparison.

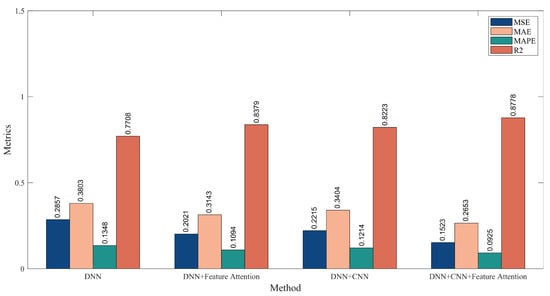

4.7. Feature Attention-Based Feature Extraction Performance Analysis

To verify the effectiveness of our proposed feature attention mechanism, we designed an experiment comparing DNN with DNN+Feature Attention, and DNN+CNN with DNN+CNN+Feature Attention, on the movie rating evaluation metrics. The results are shown in Figure 16. Compared with DNN, DNN+Feature Attention has clear advantages, improving the four evaluation metrics by 0.0836, 0.066, 0.0254, and 0.0671, respectively. This indicates that different user and movie features contribute differently to movie ratings, and that the feature attention mechanism gives each feature its own weight so that these different contributions are realized. Compared with DNN+CNN, DNN+CNN+Feature Attention improves the four metrics by 0.0692, 0.0751, 0.0289, and 0.0555, respectively, which is equally clear and shows the superiority of the feature attention mechanism: features contribute to the movie rating with different weights, which solves the problem that assigning the same weight to all features reduces the accuracy of movie ratings.

Figure 16.

Feature Attention performance results.

5. Conclusions

Traditional movie recommendation algorithms only utilize movie rating information. Considering that user and movie attribute information can affect movie ratings, this paper adds these two types of useful features to the model so that they participate in movie rating prediction. Considering that user and movie features differ in their importance to movie ratings, this paper designs a feature attention mechanism to focus on the features that matter most. As can be seen from the experimental results in Figure 16, compared with DNN, DNN+Feature Attention has clear advantages, improving the four evaluation metrics by 0.0836, 0.066, 0.0254, and 0.0671, respectively. Compared with DNN+CNN, DNN+CNN+Feature Attention also has clear advantages, improving the four evaluation metrics by 0.0692, 0.0751, 0.0289, and 0.0555, respectively. In this paper, we design user and movie networks that learn user and movie features separately, and the resulting user and movie feature vectors are used for the subsequent recommendation operations. Once obtained, these feature vectors can be used flexibly to design corresponding recommendation strategies. Three recommendation strategies were designed in this paper: the first uses the scalar product of the two vectors to obtain the movie rating for a top-k recommendation; the second uses the user feature vector to calculate similarity for user-based collaborative filtering recommendation; and the third uses the movie feature vector to calculate similarity for movie-based collaborative filtering recommendation. In text feature processing, a convolutional neural network is used to process the features, and an attention mechanism is added to better focus on key features. From the experimental results in Figure 15, we can see that the convolutional neural network with the attention mechanism outperforms the plain convolutional neural network on the evaluation metrics. On the MovieLens 1M dataset, using the MSE, MAE, MAPE, $R^2$, and RMSE evaluation metrics, the multi-feature attention mechanism movie recommendation algorithm based on a deep neural network and a convolutional neural network proposed in this paper was compared with other models, showing that the error metrics were reduced and $R^2$ was improved to a certain extent by the proposed algorithm. This shows that the movie recommendation algorithm proposed in this paper is effective.

This paper also has some limitations. It uses a convolutional neural network with an attention mechanism to extract text features; in the future, Transformer and other models with more powerful learning abilities can be used to extract text features. The algorithm proposed in this paper can be applied in many fields. When a problem in a given field is abstracted into a machine learning problem, the multi-feature attention mechanism can be used to extract features, and multiple networks can be designed to learn each feature separately and obtain multiple feature vectors according to the problem.

Author Contributions

Conceptualization, S.Y. and M.G.; methodology, S.Y.; software, S.Y.; validation, S.Y.; formal analysis, S.Y.; investigation, S.Y.; resources, S.Y.; data curation, S.Y.; writing—original draft preparation, S.Y.; writing—review and editing, M.G.; visualization, S.Y. and M.G.; supervision, M.G., X.C. and J.S.; project administration, M.G. and J.Q.; funding acquisition, M.G., X.C. and J.Q. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation of China (Grant No. 61903170, 62173175 and 61877033), the Natural Science Foundation of Shandong Province (Grant No. ZR2019BF045 and ZR2019MF021), and the Key Research and Development Project of Shandong Province of China (Grant No. 2019GGX101003).

Data Availability Statement

The dataset can be found at http://files.grouplens.org/datasets/movielens/ (accessed on 13 September 2022).

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Li, C. An Advertising Recommendation Algorithm Based on Deep Learning Fusion Model. J. Sens. 2022, 2022, 1632735.

2. Zhao, K.; Ge, L. A survey on the internet of things security. In Proceedings of the 2013 Ninth International Conference on Computational Intelligence and Security, Emeishan, China, 14–15 December 2013; pp. 663–667.

3. Wu, T.; Sun, F.; Dong, J.; Wang, Z.; Li, Y. Context-aware session recommendation based on recurrent neural networks. Comput. Electr. Eng. 2022, 100, 107916.

4. Yu, S.; Qiu, J.; Bao, X.; Guo, M.; Chen, X.; Sun, J. Movie Rating Prediction Recommendation Algorithm based on XGBoost-DNN. In Proceedings of the 2022 12th International Conference on Information Science and Technology (ICIST), Kaifeng, China, 14–16 October 2022; pp. 288–293.

5. Li, J.; Xu, W.; Wan, W.; Sun, J. Movie recommendation based on bridging movie feature and user interest. J. Comput. Sci. 2018, 26, 128–134.

6. Zhang, Z.; Qiang, C.; Duan, S. Review of personalized movie recommendation algorithms. Comput. Knowl. Technol. 2021, 17, 80–81, 84.

7. Li, D. Movie recommendation system based on multi-feature fusion. Comput. Mod. 2019, 8, 121–126.

8. Inan, E.; Tekbacak, F.; Ozturk, C. Moreopt: A goal programming based movie recommender system. J. Comput. Sci. 2018, 28, 43–50.

9. Sahu, S.; Kumar, R.; MohdShafi, P.; Shafi, J.; Kim, S.; Ijaz, M.F. A Hybrid Recommendation System of Upcoming Movies Using Sentiment Analysis of YouTube Trailer Reviews. Mathematics 2022, 10, 1568.

10. Fang, W.; Sha, Y.; Qi, M.; Sheng, V.S. Movie Recommendation Algorithm Based on Ensemble Learning. Intell. Autom. Soft Comput. 2022, 34, 609–622.

11. Jayalakshmi, S.; Ganesh, N.; Čep, R.; Senthil Murugan, J. Movie recommender systems: Concepts, methods, challenges, and future directions. Sensors 2022, 22, 4904.

12. Manogaran, G.; Varatharajan, R.; Priyan, M.K. Hybrid recommendation system for heart disease diagnosis based on multiple kernel learning with adaptive neuro-fuzzy inference system. Multimed. Tools Appl. 2018, 77, 4379–4399.

13. Yeung, K.F.; Yang, Y. A proactive personalized mobile news recommendation system. In Proceedings of the 2010 Developments in E-systems Engineering, London, UK, 6–8 September 2010; pp. 207–212.

14. Lei, M. A study of shopping behavior based on Alibaba big data. Internet Things Technol. 2016, 6, 57–60.

15. Yuan, X.; Zhang, P.; Wang, J. “State-Behavior” Modeling and Its Application in Analyzing Product Information Seeking Behavior of E-commerce Websites Users. Data Anal. Knowl. Discov. 2015, 31, 93–100.

16. Asenova, M.; Chrysoulas, C. Personalized Micro-Service Recommendation System for Online News. Procedia Comput. Sci. 2019, 160, 610–615.

17. Peng, J.; Xu, J. Personalized Product Recommendation Model of Automatic Question Answering Robot Based on Deep Learning. J. Robot. 2022, 2022, 1256083.

18. Sun, P. Music Individualization Recommendation System Based on Big Data Analysis. Comput. Intell. Neurosci. 2022, 2022, 7646000.

19. Liu, K.F.; Zhang, Y.; Zhang, Q.X.; Wang, Y.G.; Gao, K.L. Chinese News Text Classification and Its Application Based on Combined-Convolutional Neural Network. J. Comput. 2022, 33, 1–14.

20. Wang, D. Analysis of Sentiment and Personalised Recommendation in Musical Performance. Comput. Intell. Neurosci. 2022, 2022, 2778181.

21. Zeng, F.; Tang, R.; Wang, Y. User Personalized Recommendation Algorithm Based on GRU Network Model in Social Networks. Mob. Inf. Syst. 2022, 2022, 1487586.

22. Chen, Z. Research on Movie Recommendation Algorithm Based on Convolutional Block Attention Module-Convolutional Neural Networks Model. Master’s Thesis, Anhui University of Science and Technology, Huainan, China, 2021.

23. Zhang, J.; Li, G. A fusion collaborative filtering algorithm based on rating information entropy. J. Nanjing Univ. Posts Telecommun. (Nat. Sci. Ed.) 2021, 41, 71–76.

24. Zhang, Z.; Zhou, C. Collaborative filtering recommendation algorithm combined with category preference. Comput. Appl. Softw. 2021, 38, 293–296.

25. Chu, H.; Liu, Q.; Mou, C. Improved collaborative filtering recommendation algorithm for adjusted cosine similarity. J. Yantai Univ. Sci. Eng. Ed. 2021, 34, 330–336.

26. Yu, J.; Meng, J.; Wu, Q. Item collaborative filtering recommendation algorithm based on improved similarity measure. J. Comput. Appl. 2017, 37, 1387–1391, 1406.

27. Zhao, Y. Research of Recommendation Algorithms Based on Convolutional Neural Network. Master’s Thesis, University of Electronic Science and Technology of China, Chengdu, China, 2021.

28. Liu, F.; Wang, Q.; Hao, J. A Survey of Recommendation System based on Deep Neural Network. J. Shandong Norm. Univ. Nat. Sci. 2021, 36, 325–336.

29. Chen, B. Research on Recommendation Algorithm Based on Comment Text. Master’s Thesis, Nanjing University of Posts and Telecommunications, Nanjing, China, 2021.

30. Zhou, C.; Bai, J.; Song, J.; Liu, X.; Zhao, Z.; Chen, X.; Gao, J. Atrank: An attention-based user behavior modeling framework for recommendation. In Proceedings of the Thirty-Second AAAI Conference on Artificial Intelligence, New Orleans, LA, USA, 2–7 February 2018; pp. 4564–4571.

31. Liu, Z. Research on Sequence Recommendation Method Based on Hybrid Neural Network. Master’s Thesis, Kunming University of Science and Technology, Kunming, China, 2021.

32. Wang, Y.; Xu, S. Method of Multi-feature Fusion Based on Attention Mechanism in Malicious Software Detection. In Proceedings of the Artificial Intelligence and Security: 6th International Conference (ICAIS 2020), Hohhot, China, 17–20 July 2020; pp. 3–13.

33. Li, M.; Yuan, L.; Wen, X.; Wang, J.; Xie, G.; Jia, Y. Multi-Scale Attention Network Based on Multi-Feature Fusion for Person Re-Identification. In Proceedings of the 2021 International Joint Conference on Neural Networks (IJCNN), Shenzhen, China, 18–22 July 2021; pp. 1–8.

34. Niu, Z.; Zhong, G.; Yu, H. A review on the attention mechanism of deep learning. Neurocomputing 2021, 452, 48–62.

35. Zhao, J.; Zhuang, F.; Ao, X.; He, Q.; Jiang, H.; Ma, L. Survey of Collaborative Filtering Recommender Systems. J. Cyber Secur. 2021, 6, 17–34.

36. Roy, A.; Banerjee, S.; Sarkar, M.; Darwish, A.; Elhoseny, M.; Hassanien, A.E. Exploring New Vista of intelligent collaborative filtering: A restaurant recommendation paradigm. J. Comput. Sci. 2018, 27, 168–182.

37. Bhaskaran, S.; Marappan, R.; Santhi, B. Design and Analysis of a Cluster-Based Intelligent Hybrid Recommendation System for E-Learning Applications. Mathematics 2021, 9, 197.

38. Zhu, W.; Xie, Y.; Huang, Q.; Zheng, Z.; Fang, X.; Huang, Y.; Sun, W. Graph Transformer Collaborative Filtering Method for Multi-Behavior Recommendations. Mathematics 2022, 10, 2956.

39. Bobadilla, J.; Serradilla, F.; Bernal, J. A new collaborative filtering metric that improves the behavior of recommender systems. Knowl.-Based Syst. 2010, 23, 520–528.

40. Yan, H.; Tang, Y. Collaborative filtering based on Gaussian mixture model and improved Jaccard similarity. IEEE Access 2019, 7, 118690–118701.

41. Zhang, X. Aircraft pitch motion response prediction research based on DNN. Flight Dyn. 2022, 40, 53–60.

42. Zhang, H.; Yu, H.; Qiao, Y.; Xu, M. Research on college students’ abnormal behavior diagnosis model based on DNN. Mod. Electron. Tech. 2022, 45, 57–61.

43. Liu, Y. Research and Application of Deep Neural Network Prediction Fused with Variational Mode Decomposition. Master’s Thesis, Changchun University of Technology, Changchun, China, 2022.

44. Guo, G.; Zhang, J.; Thalmann, D. Merging trust in collaborative filtering to alleviate data sparsity and cold start. Knowl.-Based Syst. 2014, 57, 57–68.

45. Kim, Y. Convolutional Neural Networks for Sentence Classification. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1746–1751.

46. Li, Y. Prediction of Shanghai-Shenzhen 300 Index Based on XGBoost-LSTM Neural Network. Master’s Thesis, Shandong University of Finance and Economics, Jinan, China, 2020.

47. Pekel, E. Estimation of soil moisture using decision tree regression. Theor. Appl. Climatol. 2020, 139, 1111–1119.

48. Shehadeh, A.; Alshboul, O.; Al Mamlook, R.E.; Hamedat, O. Machine learning models for predicting the residual value of heavy construction equipment: An evaluation of modified decision tree, LightGBM, and XGBoost regression. Autom. Constr. 2021, 129, 103827.

49. Chen, T.; Guestrin, C. XGBoost: A Scalable Tree Boosting System. In Proceedings of the 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Francisco, CA, USA, 13–17 August 2016; pp. 785–794.

50. Breiman, L. Random Forests. Mach. Learn. 2001, 45, 5–32.

51. Khan, Z.; Iltaf, N.; Afzal, H.; Abbas, H. DST-HRS: A topic driven hybrid recommender system based on deep semantics. Comput. Commun. 2020, 156, 183–191.

52. Darban, Z.Z.; Valipour, M.H. GHRS: Graph-based hybrid recommendation system with application to movie recommendation. Expert Syst. Appl. 2022, 200, 116850.

53. Yuan, X.; Han, L.; Qian, S.; Xu, G.; Yan, H. Singular value decomposition based recommendation using imputed data. Knowl.-Based Syst. 2019, 163, 485–494.

54. Mohammadpour, T.; Bidgoli, A.M.; Enayatifar, R.; Javadi, H.H.S. Efficient clustering in collaborative filtering recommender system: Hybrid method based on genetic algorithm and gravitational emulation local search algorithm. Genomics 2019, 111, 1902–1912.

55. Bag, S.; Kumar, S.; Awasthi, A.; Tiwari, M.K. A noise correction-based approach to support a recommender system in a highly sparse rating environment. Decis. Support Syst. 2019, 118, 46–57.

56. Wang, H.; Zhang, F.; Wang, J.; Zhao, M.; Li, W.; Xie, X.; Guo, M. RippleNet: Propagating User Preferences on the Knowledge Graph for Recommender Systems. In Proceedings of the 27th ACM International Conference on Information and Knowledge Management, Torino, Italy, 22–26 October 2018; pp. 417–426.

57. Kim, D.; Park, C.; Oh, J.; Yu, H. Deep hybrid recommender systems via exploiting document context and statistics of items. Inf. Sci. 2017, 417, 72–87.

58. Barbieri, J.; Alvim, L.G.; Braida, F.; Zimbrão, G. Autoencoders and recommender systems: COFILS approach. Expert Syst. Appl. 2017, 89, 81–90.

59. Kim, D.; Park, C.; Oh, J.; Lee, S.; Yu, H. Convolutional Matrix Factorization for Document Context-Aware Recommendation. In Proceedings of the 10th ACM Conference on Recommender Systems, Boston, MA, USA, 15–19 September 2016; pp. 233–240.

60. Zhang, L.; Luo, T.; Zhang, F.; Wu, Y. A recommendation model based on deep neural network. IEEE Access 2018, 6, 9454–9463.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).