Abstract

In this paper, a stochastic configuration based fuzzy inference system with interpretable fuzzy rules (SCFS-IFRs) is proposed to improve the interpretability and performance of the fuzzy inference system and to determine an appropriate model structure autonomously. The proposed SCFS-IFRs first realize a fuzzy system through interpretable linguistic fuzzy rules (ILFRs), which endow the system with clear semantic interpretability. Meanwhile, using an incremental learning method based on stochastic configuration, an appropriate architecture is determined by generating ILFRs incrementally under a supervisory mechanism. In addition, the particle swarm optimization (PSO) algorithm, an intelligence search technique, is used in the incremental learning process of ILFRs to obtain better random parameters and improve approximation accuracy. The performance of SCFS-IFRs is verified on regression and classification benchmark datasets. Regression experiments show that the proposed SCFS-IFRs perform best on 10 of the 20 datasets and statistically significantly outperform eight other state-of-the-art algorithms. Classification experiments show that, compared with six other fuzzy classifiers, SCFS-IFRs achieve higher classification accuracy and better interpretability with fewer rules.

Keywords:

interpretable linguistic fuzzy rules; fuzzy system; incremental learning; stochastic configuration; particle swarm optimization

MSC:

68T05

1. Introduction

Neuro-fuzzy systems [1,2], which inherit the advantages of both fuzzy inference systems and neural networks, have attracted sustained attention. Their fuzzy rules make them interpretable, and their trainable parameters allow their accuracy to be improved through learning.

Interpretability is a very important research direction for neuro-fuzzy systems. Since interpretability is regarded as the ability to express the meaning of a model in an understandable way, it greatly helps users understand what a model does and what has been learned from it [3]. Although no mathematical measure of interpretability has yet been established, a fuzzy inference system with high interpretability should have a comprehensible fuzzy partition and simple fuzzy rules: its fuzzy sets should be distinguishable and semantically clear, and its rule base should use as few fuzzy rules as possible. In [4], a fuzzy extreme learning machine (FELM) uses interpretable linguistic fuzzy rules (ILFRs) to improve the interpretability of an extreme learning machine (ELM). ILFRs divide the input universe of discourse equally with several fuzzy sets, so that each fuzzy set can be given a semantic label, and generate random matrices to select features and combine rules. Because of these interpretability advantages, ILFRs have attracted attention in neuro-fuzzy modeling. In [5,6], two deep fuzzy classifiers that adopt ILFRs are proposed. To improve the model transparency of the fuzzy broad learning system (FBLS), a compact FBLS (CFBLS) using ILFRs has been proposed in [7]. Although many neuro-fuzzy systems have been developed to improve interpretability and approximation accuracy, there is still room for improvement. Achieving good approximation accuracy with an appropriate number of fuzzy rules, and determining that number autonomously rather than by trial and error, remains a significant research problem.

The most commonly used fuzzy system in neuro-fuzzy models is the Takagi-Sugeno-Kang (TSK) fuzzy system, which has been widely applied in fields such as regression [8], classification [9], model approximation [10], adaptive control [11], and business [12]. Neuro-fuzzy systems represent the fuzzy system in the form of a neural network, so neural-network training algorithms can extract fuzzy rules and build the fuzzy system without tedious manual intervention. The back propagation (BP) algorithm, a commonly used training algorithm for neural networks, has been used to train neuro-fuzzy systems [13]. However, as datasets grow in size and dimension, training neuro-fuzzy systems faces many difficulties, such as slow training, overfitting, and low interpretability.

Because of their effectiveness and efficiency, randomized learning techniques have great potential for developing fast learning algorithms for neuro-fuzzy systems. In [14], the proposed Bernstein fuzzy system is trained with ELM to approximate smooth functions and their derivatives simultaneously; in this generalized Bernstein fuzzy system, the premise parameters are randomly generated and the consequent parameters are determined by solving a linear optimization problem. In [15], a regularized extreme learning adaptive neuro-fuzzy inference system (R-ELANFIS) is designed to improve the generalization performance of a neuro-fuzzy system. In [16], a broad learning system (BLS) is proposed for fast learning from large volumes of data; it randomly assigns the values of the input parameters and expands the neurons in a broad manner without a retraining process. In [17], a TSK fuzzy system is combined with a broad learning system to establish a fuzzy broad learning system.

The scope of random parameters plays an important role in the training of randomized learning approaches and has been shown to affect approximation capability [18]. The stochastic configuration network (SCN) was proposed in [19] to improve randomized learning techniques. An SCN is built incrementally by assigning random parameters under a supervisory mechanism and adaptively setting their range. This supervisory mechanism helps ensure universal approximation and good performance. Various SCN models have since been developed. In [20], a deep SCN is developed that randomly assigns the weights of each layer under inequality constraints. In [21], a deep stacked SCN is established to automatically extract hidden units from data streams. In [22], a driving amount is introduced into the SCN structure to improve its generalization ability.

In this paper, a novel stochastic configuration based fuzzy inference system called SCFS-IFRs is proposed to improve the interpretability and performance of fuzzy systems. It uses an adaptive incremental learning approach based on the stochastic configuration algorithm to determine an appropriate architecture autonomously and to ensure universal approximation and continuous performance improvement. The contributions of SCFS-IFRs can be summarized as follows:

- (1)

- For high interpretability, SCFS-IFRs implement a fuzzy system through ILFRs and determine an appropriate number of fuzzy rules with an adaptive incremental learning algorithm. ILFRs endow fuzzy sets with clear semantic labels by equally dividing the input universe of discourse, and a rule combination matrix is used to select the activated fuzzy sets, which improves the semantic expression of the fuzzy rules. Moreover, the incremental learning method of SCFS-IFRs, which determines a suitable number of fuzzy rules, also helps improve interpretability and avoid the problem of “rule explosion”.

- (2)

- For good performance, a supervisory mechanism and an intelligence search process are incorporated into the adaptive incremental learning algorithm of SCFS-IFRs. Inequality constraints that the premise parameters of new fuzzy rules must satisfy are given, which ensure that the performance of SCFS-IFRs improves as rules are added. The PSO algorithm, an intelligence search technique, is used in the process of stochastic configuration to obtain better random parameters.

The rest of this article is organized as follows. In Section 2, we review the TSK fuzzy system and SCN. In Section 3, we elaborate the proposed SCFS-IFRs. In Section 4, numerical experiments verify the performance of the proposed SCFS-IFRs. Some concluding remarks are given in Section 5.

Table 1.

Table of abbreviations.

Table 2.

Table of variables.

2. Preliminaries

2.1. Takagi-Sugeno-Kang Fuzzy Inference System

The Takagi-Sugeno-Kang (TSK) fuzzy system proposed in [23] is one of the most important fuzzy systems and has been applied extensively in many different fields [24]. In a TSK rule, the consequent is a function of the input variables rather than a fuzzy set. Specifically, given an input vector $\mathbf{x} = [x_1, \dots, x_d]^{\mathrm T}$, the typical fuzzy rules are of the form

$$R^k:\ \text{IF } x_1 \text{ is } A_1^k \text{ and } \cdots \text{ and } x_d \text{ is } A_d^k,\ \text{THEN } y^k = f^k(\mathbf{x}), \qquad k = 1, \dots, K,$$

where $A_i^k$ is an antecedent fuzzy set of variable $x_i$ for rule $R^k$, $f^k$ is the consequent function of rule $R^k$, and $K$ is the number of fuzzy rules.

Although the popular first-order TSK fuzzy system uses a linear function of the input variables as the consequent, $f^k$ can take any functional form. To improve the nonlinear expressive ability of the TSK fuzzy system, [25] adopts a linear combination of a subset of Chebyshev polynomials as the rule consequent:

$$f^k(\mathbf{x}) = \tilde{\mathbf{x}}\,\boldsymbol{\beta}^k,$$

where $\tilde{\mathbf{x}} = [1, T_1(x_1), \dots, T_n(x_1), \dots, T_1(x_d), \dots, T_n(x_d)]$ is the expanded input vector, $\boldsymbol{\beta}^k$ is the weight vector of the linear combination, $T_j$ is the Chebyshev polynomial defined by $T_{j+1}(x) = 2x\,T_j(x) - T_{j-1}(x)$ with $T_0(x) = 1$ and $T_1(x) = x$, and $n$ is the highest degree of the Chebyshev polynomials used.
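The expanded input vector can be computed with the three-term recurrence. The Python sketch below is illustrative only: the term ordering (a leading 1, then $T_1, \dots, T_n$ of each feature in turn) and the function name `chebyshev_expand` are assumptions, and [25] may order or select the terms differently.

```python
import numpy as np

def chebyshev_expand(X, n):
    """Return [1, T_1(x_1), ..., T_n(x_1), ..., T_1(x_d), ..., T_n(x_d)] for every
    row of X (shape N x d). Output shape is N x (1 + n*d). Requires n >= 1."""
    X = np.atleast_2d(np.asarray(X, dtype=float))
    N, d = X.shape
    blocks = [np.ones((N, 1))]                       # leading bias term T_0 = 1
    for i in range(d):
        x = X[:, i]
        t_prev, t_curr = np.ones_like(x), x.copy()   # T_0(x), T_1(x)
        cols = [t_curr]
        for _ in range(2, n + 1):                    # T_{j+1} = 2x T_j - T_{j-1}
            t_prev, t_curr = t_curr, 2.0 * x * t_curr - t_prev
            cols.append(t_curr)
        blocks.append(np.column_stack(cols))
    return np.hstack(blocks)
```

With $n = 1$ the expansion reduces to $[1, x_1, \dots, x_d]$, so the consequent becomes an ordinary first-order (linear) TSK consequent.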

For the $k$th fuzzy rule $R^k$, the activation level is

$$\mu^k(\mathbf{x}) = \prod_{i=1}^{d} \mu_{A_i^k}(x_i),$$

where $\mu_{A_i^k}$ is the membership function of $A_i^k$.

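As pointed out in [26,27,28], the output of the system can be calculated as

$$y(\mathbf{x}) = \frac{\sum_{k=1}^{K} \mu^k(\mathbf{x})\, f^k(\mathbf{x})}{\sum_{k=1}^{K} \mu^k(\mathbf{x})}.$$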

In the above TSK fuzzy system, the parameters of the membership functions and the consequent weights need to be determined. Traditional gradient descent algorithms can identify these parameters, but they are usually time-consuming on large datasets. Many learning algorithms [29,30,31] have been proposed to accelerate parameter identification and improve approximation performance. For example, the premise parameters can be generated randomly and the consequent parameters can then be computed quickly using a pseudoinverse.
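As a sketch of this last idea (random premises, one-shot consequents), the Python code below builds a first-order TSK model with Gaussian antecedents, a product for the activation level, and the normalized weighted-average output, then solves for all consequent weights with `numpy.linalg.pinv`. The Gaussian form $\exp(-(x-c)^2/(2\sigma^2))$, the sampling ranges for centers and widths, and all function names are hypothetical choices for illustration, not the procedure of a particular cited method.

```python
import numpy as np

def firing_strengths(X, centers, widths):
    """Rule activation levels: product over inputs of Gaussian memberships.
    X: N x d inputs; centers, widths: K x d (one Gaussian per rule and input)."""
    diff = X[:, None, :] - centers[None, :, :]                  # N x K x d
    memb = np.exp(-diff ** 2 / (2.0 * widths[None, :, :] ** 2))
    return memb.prod(axis=2)                                     # N x K

def design_matrix(X, centers, widths):
    """Normalized activations times the first-order input [1, x]: N x K(d + 1)."""
    mu = firing_strengths(X, centers, widths)
    s = mu.sum(axis=1, keepdims=True)
    mu_bar = mu / np.where(s > 0, s, 1.0)                        # weighted-average output
    Xe = np.hstack([np.ones((X.shape[0], 1)), X])
    # block k of each row is mu_bar[:, k] * [1, x]
    return (mu_bar[:, :, None] * Xe[:, None, :]).reshape(X.shape[0], -1)

def fit_tsk(X, y, K, rng):
    """Random premise parameters; consequent weights from the pseudoinverse."""
    d = X.shape[1]
    centers = rng.uniform(X.min(axis=0), X.max(axis=0), size=(K, d))
    widths = rng.uniform(0.2, 1.0, size=(K, d))                  # hypothetical width range
    beta = np.linalg.pinv(design_matrix(X, centers, widths)) @ y
    return centers, widths, beta

def predict_tsk(X, centers, widths, beta):
    return design_matrix(X, centers, widths) @ beta
```

Because the normalized activations sum to one for every sample, any linear target can be reproduced exactly by giving all rules the same consequent, which makes a convenient sanity check for the least-squares step.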

2.2. Stochastic Configuration Network

The stochastic configuration network (SCN) [19], a randomized learner, generates random parameters under a supervisory mechanism. It can guarantee approximation capability and determine an appropriate architecture.

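For a given target function $f$, an SCN with $L$ hidden nodes is denoted as

$$f_L(\mathbf{x}) = \sum_{j=1}^{L} \beta_j\, g_j(\mathbf{x}), \qquad g_j(\mathbf{x}) = g(\mathbf{w}_j^{\mathrm T}\mathbf{x} + b_j),$$

where $g$ is the activation function with random parameters $\mathbf{w}_j$ and $b_j$, and $\beta_j$ is the output weight of the $j$th hidden node. The residual error is denoted as

$$e_L = f - f_L.$$

If $\|e_L\|$ is larger than a predefined error tolerance $\varepsilon$, the SCN framework incrementally adds hidden nodes until $\|e_L\| \le \varepsilon$. The supervisory mechanism is the inequality constraint

$$\langle e_{L-1}, g_L \rangle^2 \ge b_g^2\,(1 - r - \mu_L)\,\|e_{L-1}\|^2,$$

where $0 < r < 1$, $b_g$ is a constant with $0 < \|g\| < b_g$ for every basis function $g$, $\{\mu_L\}$ is a nonnegative sequence with $\lim_{L\to\infty}\mu_L = 0$ and $\mu_L \le 1 - r$, and $g_L$ is the random basis function of the new hidden node.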

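The output weights are calculated by

$$[\beta_1, \dots, \beta_L] = \arg\min_{\beta_1, \dots, \beta_L} \Bigl\| f - \sum_{j=1}^{L} \beta_j g_j \Bigr\|.$$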

The continuous performance improvement of the resulting model and the universal approximation property of SCN have been proved in [19].
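A compact Python sketch of this construction follows. It uses the computable form of the supervisory inequality, $\xi_L = \langle e_{L-1}, g\rangle^2/\langle g, g\rangle - (1 - r - \mu_L)\|e_{L-1}\|^2 \ge 0$, evaluated on the training samples in the style of the algorithmic description in [19]; it samples candidates from progressively wider ranges $[-\lambda, \lambda]$, relaxes $r$ toward 1 when no candidate qualifies, and refits all output weights by least squares after each new node. The sigmoid activation, the $\lambda$ and $r$ grids, $\mu_L = (1 - r)/(L + 1)$, and the function names are illustrative assumptions.

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

def scn_fit(X, y, L_max=30, tol=1e-2, T_max=50,
            lambdas=(0.5, 1, 5, 10, 30, 50),
            rs=(0.9, 0.99, 0.999, 0.9999, 0.99999, 0.999999), seed=0):
    """Grow hidden nodes one at a time; a random node is admissible only if
    xi >= 0, and all output weights are refit by least squares afterwards."""
    rng = np.random.default_rng(seed)
    N, d = X.shape
    W, B = [], []
    H = np.empty((N, 0))
    beta = np.empty(0)
    e = y.astype(float).copy()                       # e_0 = f on the training set
    history = [np.linalg.norm(e)]
    while len(W) < L_max and np.linalg.norm(e) / np.sqrt(N) > tol:
        L = len(W) + 1
        best = None
        for r in rs:                                 # relax r if nothing is admissible
            mu_L = (1.0 - r) / (L + 1)
            for lam in lambdas:                      # widen the sampling scope [-lam, lam]
                w = rng.uniform(-lam, lam, size=(T_max, d))
                b = rng.uniform(-lam, lam, size=T_max)
                G = sigmoid(X @ w.T + b)             # N x T_max candidate outputs
                gg = np.sum(G * G, axis=0) + 1e-12
                xi = (e @ G) ** 2 / gg - (1.0 - r - mu_L) * (e @ e)
                j = int(np.argmax(xi))
                if xi[j] >= 0:                       # supervisory inequality holds
                    best = (w[j], b[j], G[:, j])
                    break
            if best is not None:
                break
        if best is None:                             # no admissible node was found
            break
        W.append(best[0])
        B.append(best[1])
        H = np.column_stack([H, best[2]])
        beta, *_ = np.linalg.lstsq(H, y, rcond=None)     # global least squares
        e = y - H @ beta
        history.append(np.linalg.norm(e))
    return np.array(W), np.array(B), beta, history

def scn_predict(X, W, B, beta):
    return sigmoid(X @ W.T + B) @ beta
```

Since each refit minimizes the squared error over a larger set of columns, the recorded residual norms cannot increase, which the returned `history` makes easy to check.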

Although the existence of random parameters satisfying the constraints has been proved theoretically, how to find better parameters among those that satisfy the constraints is still a meaningful problem. In this paper, the PSO algorithm is applied to find better parameters and further improve the performance of the system.

3. Stochastic Configuration Based Fuzzy Inference System with Interpretable Fuzzy Rules and Intelligence Search Process

The SCFS-IFRs model establishes a TSK fuzzy system of form (1) by adopting the following new strategies.

- (1)

- The ILFRs with the rule combination matrix are used to construct the TSK fuzzy system to guarantee model interpretability and avoid the “rule explosion” problem.

- (2)

- An adaptive incremental learning algorithm based on stochastic configuration is proposed to train the SCFS-IFR model and determine a suitable number of fuzzy rules.

- (3)

- The PSO algorithm is adopted to search for better parameters to improve the performance of the system in the process of stochastic configuration.

This section elaborates the proposed SCFS-IFRs. Firstly, the general structure of the SCFS-IFRs is introduced and then their adaptive learning algorithm is presented.

3.1. General Structure of SCFS-IFRs

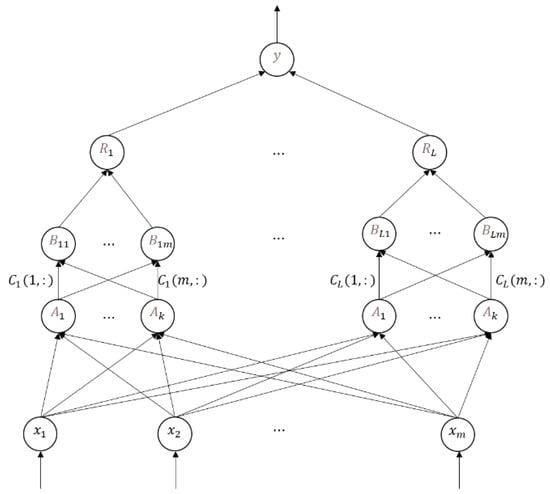

The details of SCFS-IFRs shown in Figure 1 are as follows. Consider a dataset of $N$ samples with inputs $\mathbf{x}_s = [x_{s1}, \dots, x_{sd}]^{\mathrm T}$ and corresponding outputs, and suppose that all input features are normalized to . We divide this interval equally into $M - 1$ parts, and correspondingly, each feature is transformed into $M$ fuzzy sets defined as

$$\mu_{ij}(x_i) = \exp\!\left(-\frac{(x_i - c_j)^2}{2\sigma^2}\right), \qquad i = 1, \dots, d,\quad j = 1, \dots, M,$$

where $\mu_{ij}$ is the Gaussian membership function of the $j$th fuzzy set of the $i$th feature, $x_i$ is the $i$th dimension of the input $\mathbf{x}$, $c_j$ is the center of the $j$th fuzzy set (the $M$ centers are equally spaced over the normalized range), and $\sigma$ is the randomly generated width. Here, the fuzzy sets can be assigned explicit linguistic labels. For instance, when $M = 5$, the five fuzzy sets can be assigned the five linguistic labels very low, low, medium, high, and very high. Compared with randomly allocated centers, the traditional equidistant partition makes the fuzzy sets more interpretable but may lead to “rule explosion”.
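For illustration, the partition can be sketched in NumPy as below, assuming features normalized to $[0, 1]$ and the Gaussian form $\exp(-(x - c_j)^2/(2\sigma^2))$; the function names and the `LABELS_5` tuple are hypothetical.

```python
import numpy as np

LABELS_5 = ("very low", "low", "medium", "high", "very high")

def equidistant_centers(M):
    """M centers that split the assumed range [0, 1] into M - 1 equal parts."""
    return np.linspace(0.0, 1.0, M)

def gaussian_memberships(x, centers, sigma):
    """Membership of each value of x (length N) in each fuzzy set: shape N x M."""
    x = np.asarray(x, dtype=float)
    return np.exp(-(x[:, None] - centers[None, :]) ** 2 / (2.0 * sigma ** 2))

def best_label(value, centers, sigma, labels=LABELS_5):
    """Linguistic label of the fuzzy set with the highest membership."""
    return labels[int(np.argmax(gaussian_memberships([value], centers, sigma)[0]))]
```

Under these assumptions, $M = 5$ gives centers at 0, 0.25, 0.5, 0.75 and 1, and a value of 0.3 is best described by the label “low”.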

Figure 1.

Illustration of the SCFS-IFRs.

Motivated by [6], a rule combination matrix $\mathbf{Z}^k = [z_{ij}^k] \in \{0, 1\}^{d \times M}$ is used to determine which fuzzy sets of the input features are used to generate the $k$th fuzzy rule, where $z_{ij}^k = 1$ means that the $j$th fuzzy set $F_{ij}$ of the $i$th feature is activated in the $k$th fuzzy rule; conversely, $z_{ij}^k = 0$ means that it is not activated. To ensure that at least one fuzzy set is activated for each feature in each rule, we require $\sum_{j=1}^{M} z_{ij}^k \ge 1$. Let $\tilde{A}_i^k$ denote the union of all activated fuzzy sets of the $i$th feature in the $k$th fuzzy rule, i.e.,

$$\tilde{A}_i^k = \bigcup_{j:\, z_{ij}^k = 1} F_{ij}.$$

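The SCFS-IFRs with ILFRs take the following form:

$$R^k:\ \text{IF } x_1 \text{ is } \tilde{A}_1^k \text{ and } \cdots \text{ and } x_d \text{ is } \tilde{A}_d^k,\ \text{THEN } y^k = \tilde{\mathbf{x}}\,\boldsymbol{\beta}^k, \qquad k = 1, \dots, K.$$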

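We adopt the algebraic sum operator to calculate the membership function of $\tilde{A}_i^k$ as

$$\mu_{\tilde{A}_i^k}(x_i) = 1 - \prod_{j=1}^{M} \bigl(1 - z_{ij}^k\, \mu_{ij}(x_i)\bigr).$$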
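The union and the resulting rule activation can be sketched as follows. The code assumes a $d \times M$ layout for the rule combination matrix (one row per feature), Gaussian memberships $\exp(-(x - c_j)^2/(2\sigma^2))$ with equally spaced centers, and a product over features for the rule's activation level, as in the TSK activation of Section 2.1; the function names are hypothetical.

```python
import numpy as np

def union_membership(memb, z_row):
    """Algebraic-sum union of the activated fuzzy sets of one feature.
    memb: N x M memberships of that feature; z_row: length-M 0/1 mask.
    Computes 1 - prod_j (1 - z_j * mu_j); for two sets this is a + b - a*b."""
    z_row = np.asarray(z_row, dtype=float)
    return 1.0 - np.prod(1.0 - memb * z_row[None, :], axis=1)

def rule_activation(X, Z, centers, sigma):
    """Activation of one rule: product over features of the union memberships.
    X: N x d inputs; Z: d x M rule combination matrix (at least one 1 per row)."""
    Z = np.asarray(Z)
    if np.any(Z.sum(axis=1) < 1):
        raise ValueError("each feature needs at least one activated fuzzy set")
    act = np.ones(X.shape[0])
    for i in range(X.shape[1]):
        memb = np.exp(-(X[:, [i]] - centers[None, :]) ** 2 / (2.0 * sigma ** 2))  # N x M
        act *= union_membership(memb, Z[i])
    return act
```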

Therefore, the output and residual error of the SCFS-IFRs on dataset can be calculated as

where is the activation level of the th fuzzy rule, is the weight of the linear combination of the expanded input vector and denotes

Remark 1.

An SCFS-IFRs model is implemented in the framework of the TSK fuzzy system. The semantically clear fuzzy sets generated by equidistant partition and the rule combination matrix used to generate fuzzy rules contribute to the high interpretability of SCFS-IFRs.

For instance, let us have fuzzy rules, features for each input sample , fuzzy sets with linguistic labels: very low, low, medium, high, and very high. Given

The two ILFRs can be expressed as

In addition, the rule combination matrix defined in this paper allows multiple fuzzy sets corresponding to each feature in a fuzzy rule to be activated, which improves the semantic expression of fuzzy rules and makes it possible to express more complex relations with fewer rules.
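To make the linguistic reading concrete, the hypothetical helper below renders the IF-part of a rule from a $d \times M$ 0/1 rule combination matrix (the orientation is an assumption), joining several activated sets of one feature with “or”.

```python
LABELS_5 = ("very low", "low", "medium", "high", "very high")

def describe_rule(Z, labels=LABELS_5, names=None):
    """Render the IF-part of a rule from a d x M 0/1 rule combination matrix Z."""
    parts = []
    for i, row in enumerate(Z):
        name = names[i] if names else f"x{i + 1}"
        active = [labels[j] for j, z in enumerate(row) if z]
        text = " or ".join(active)
        # parenthesize when several fuzzy sets of one feature are activated
        parts.append(f"{name} is ({text})" if len(active) > 1 else f"{name} is {text}")
    return "IF " + " and ".join(parts)
```

Used this way, a single rule such as “IF x1 is (low or medium) and x2 is very high” covers the region that would otherwise take two single-label rules.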

3.2. Adaptive Incremental Learning for SCFS-IFRs

We propose an adaptive incremental learning algorithm to train the proposed SCFS-IFRs, which helps determine an appropriate model structure autonomously and ensures the approximation capability of SCFS-IFRs. To further improve the approximation accuracy, the PSO algorithm is adopted to search for better random parameters during stochastic configuration.

The adaptive incremental learning algorithm starts with an initial system, which can be either an empty system or a system with rules generated by other traditional methods. Given the training dataset, new fuzzy rules are added incrementally under the supervisory mechanism of stochastic configuration to improve the approximation performance until a predefined tolerance level is met.

Suppose that an SCFS-IFRs model with $K$ fuzzy rules has already been constructed. Its output and residual error on the dataset are expressed as

If the residual error $e_K$ of the current model does not reach the predefined tolerance level $\varepsilon$, i.e., $\|e_K\| > \varepsilon$, a new fuzzy rule $R^{K+1}$ should be generated to construct a new SCFS-IFRs model with $K + 1$ fuzzy rules. Three parameters must be determined to generate rule $R^{K+1}$: the rule combination matrix (denoted by $\mathbf{Z}^{K+1}$), the width of the Gaussian membership function (denoted by $\sigma^{K+1}$), and the weight of the linear combination in the consequent part (denoted by $\boldsymbol{\beta}^{K+1}$).

The premise parameters $\mathbf{Z}^{K+1}$ and $\sigma^{K+1}$ are randomly generated to satisfy the following inequalities

and the consequent parameter $\boldsymbol{\beta}^{K+1}$ can be evaluated by

where is the regularization coefficient, is the identity matrix, is of form and and is a sequence with and .

The output and the residual error of the can be represented as

and the improvement brought about from the new rule can be calculated as

The improvement serves as the objective function for choosing the parameters $\mathbf{Z}^{K+1}$ and $\sigma^{K+1}$. Many values of $\mathbf{Z}^{K+1}$ and $\sigma^{K+1}$ may satisfy the inequality constraint. We adopt the PSO algorithm to search for better random parameters $\mathbf{Z}^{K+1}$ and $\sigma^{K+1}$ that satisfy the inequality constraint and have smaller values of the improvement measure, in order to improve system performance.

In the process of stochastic configuration using the PSO algorithm, pairs of parameters $(\mathbf{Z}^{K+1}, \sigma^{K+1})$, one per particle, are first randomly initialized within their admissible ranges as the positions of the initial population; correspondingly, pairs of parameters are randomly initialized within a given range as the velocity vectors of the initial population. The fitness value of each particle is calculated from the improvement defined above.

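At each iteration, the positions and velocity vectors are updated as

$$\mathbf{v}_s^{t+1} = \omega\, \mathbf{v}_s^{t} + c_1 r_1 \bigl(\mathbf{p}_s^{\mathrm{best}} - \mathbf{p}_s^{t}\bigr) + c_2 r_2 \bigl(\mathbf{g}^{\mathrm{best}} - \mathbf{p}_s^{t}\bigr),$$

$$\mathbf{p}_s^{t+1} = \mathbf{p}_s^{t} + \mathbf{v}_s^{t+1},$$

where $\mathbf{p}_s^{t}$ and $\mathbf{v}_s^{t}$ are the position and velocity of particle $s$ at iteration $t$, $\omega$ is the inertia weight factor, $c_1$ and $c_2$ are positive acceleration constants, $r_1$ and $r_2$ are random factors sampled from the uniform distribution $U(0, 1)$, and $\mathbf{p}_s^{\mathrm{best}}$ and $\mathbf{g}^{\mathrm{best}}$ represent the best positions found so far by particle $s$ and by the whole population, respectively. In this paper, we set the parameters of PSO as . After the PSO iterations, we obtain better parameters for generating the new fuzzy rule, which improves the performance of the system.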
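A generic inertia-weight PSO minimizer is sketched below in Python. It follows the standard velocity and position updates, draws $r_1$ and $r_2$ independently for each particle and dimension, and clips positions to a search box. The defaults $\omega = 0.7$ and $c_1 = c_2 = 1.5$ are hypothetical placeholders rather than the settings used in this paper, and because the sketch searches a continuous box, encoding the 0/1 rule combination matrix as a particle position (for example by thresholding) would be an additional assumption.

```python
import numpy as np

def pso_minimize(fitness, lower, upper, n_particles=50, n_iter=10,
                 w=0.7, c1=1.5, c2=1.5, seed=0):
    """Inertia-weight PSO over the box [lower, upper]; returns (best position, best value).
    w, c1 and c2 are illustrative defaults, not values taken from the paper."""
    rng = np.random.default_rng(seed)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    dim, span = lower.size, upper - lower
    pos = rng.uniform(lower, upper, size=(n_particles, dim))   # initial positions
    vel = rng.uniform(-span, span, size=(n_particles, dim))    # initial velocities
    pbest = pos.copy()
    pbest_val = np.array([fitness(p) for p in pos])
    gbest = pbest[np.argmin(pbest_val)].copy()
    for _ in range(n_iter):
        r1 = rng.random((n_particles, dim))                    # per particle and dimension
        r2 = rng.random((n_particles, dim))
        vel = w * vel + c1 * r1 * (pbest - pos) + c2 * r2 * (gbest - pos)
        pos = np.clip(pos + vel, lower, upper)                 # stay inside the box
        vals = np.array([fitness(p) for p in pos])
        better = vals < pbest_val                              # update personal bests
        pbest[better] = pos[better]
        pbest_val[better] = vals[better]
        gbest = pbest[np.argmin(pbest_val)].copy()             # update global best
    return gbest, float(pbest_val.min())
```

In the setting described above, `fitness` would evaluate the improvement of a candidate rule and would also have to account for the inequality constraint, for instance by penalizing candidates that violate it; that coupling is not shown here.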

In this way, new fuzzy rules are generated one by one and added to the system until the residual error reaches the predefined error tolerance or the number of rules reaches the given maximum number of rules. The adaptive incremental learning algorithm can be summarized as follows.

Adaptive incremental learning algorithm of SCFS-IFRs:

Given dataset with inputs and outputs , the predefined maximum number of rules , the error tolerance , the population size , the maximum number of iterations of the PSO , the number of fuzzy sets for each feature, the upper bound of the width parameter and the regularization parameter .

- Initialize , ;

- Set the centers $c_1, \dots, c_M$ of the Gaussian membership functions, equally spaced over the normalized input range;

- While $K < K_{\max}$ and $\|e_K\| > \varepsilon$, Do

- Set ;

- Randomly initialize particles with position and velocity vectors as and

- For to do

- Calculate the fitness value according to Equation ;

- Update the positions and velocity vectors using Equations (19) and (20);

- End for

- Set $(\mathbf{Z}^{K+1}, \sigma^{K+1})$ to the best position $\mathbf{g}^{\mathrm{best}}$;

- Calculate according to Equation ;

- if Calculate according to Equations and respectively;

- Else randomly take , renew return to Step 4;

- End if

- Update ;

- End while

- Return the resulting SCFS-IFRs model.
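The loop above can be compressed into a short, self-contained Python sketch. It is a simplification under explicit assumptions: inputs in $[0, 1]$, Gaussian memberships $\exp(-(x - c_j)^2/(2\sigma^2))$, degree-1 consequents, an unnormalized sum of rule outputs so that each new rule can be fitted to the current residual alone, a ridge-regression consequent, and an SCN-style acceptance test requiring the squared-residual reduction to be at least $(1 - r - \mu)\|e\|^2$. A best-of-n random search stands in for PSO, the acceptance test is not the exact inequality of SCFS-IFRs, and every name is hypothetical.

```python
import numpy as np

def rule_basis(X, Z, sigma, centers):
    """Columns act(x) * [1, x] of one rule (degree-1 consequent); act is the product
    over features of the algebraic-sum union of the activated fuzzy sets."""
    memb = np.exp(-(X[:, :, None] - centers[None, None, :]) ** 2 / (2 * sigma ** 2))  # N x d x M
    union = 1.0 - np.prod(1.0 - memb * Z[None, :, :], axis=2)                          # N x d
    act = union.prod(axis=1)                                                           # N
    return act[:, None] * np.hstack([np.ones((X.shape[0], 1)), X])

def fit_incremental(X, y, M=5, K_max=25, tol=1e-2, n_cand=100, sigma_max=1.0,
                    lam=1e-3, rs=(0.9, 0.99, 0.999, 0.9999), seed=0):
    rng = np.random.default_rng(seed)
    N, d = X.shape
    centers = np.linspace(0.0, 1.0, M)                 # equidistant centers on [0, 1]
    rules, e = [], y.astype(float).copy()
    history = [float(np.sqrt(np.mean(e ** 2)))]
    while len(rules) < K_max and history[-1] > tol:
        K1 = len(rules) + 1
        chosen = None
        for r in rs:                                   # relax r if nothing is admissible
            mu = (1.0 - r) / (K1 + 1)
            best_gain = -np.inf
            for _ in range(n_cand):                    # random search stands in for PSO
                Z = (rng.random((d, M)) < 0.4).astype(float)
                Z[np.arange(d), rng.integers(0, M, d)] = 1.0   # >= 1 active set per feature
                sigma = rng.uniform(0.05, sigma_max)
                Phi = rule_basis(X, Z, sigma, centers)
                beta = np.linalg.solve(Phi.T @ Phi + lam * np.eye(d + 1), Phi.T @ e)
                gain = e @ e - np.sum((e - Phi @ beta) ** 2)   # drop in squared residual
                if gain >= (1.0 - r - mu) * (e @ e) and gain > best_gain:
                    best_gain, chosen = gain, (Z, sigma, beta)
            if chosen is not None:
                break
        if chosen is None:
            break
        Z, sigma, beta = chosen
        rules.append(chosen)
        e = e - rule_basis(X, Z, sigma, centers) @ beta    # only the new rule is fitted
        history.append(float(np.sqrt(np.mean(e ** 2))))
    return rules, centers, history

def predict(X, rules, centers):
    out = np.zeros(X.shape[0])
    for Z, sigma, beta in rules:
        out += rule_basis(X, Z, sigma, centers) @ beta
    return out
```

Because the ridge solution never does worse than $\boldsymbol{\beta} = \mathbf{0}$ on its penalized objective, each accepted rule can only lower the training residual, which mirrors the monotone behavior discussed in Remark 2.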

In this way, the adaptive incremental learning algorithm determines both the structure and the parameters of SCFS-IFRs: the random parameters are adaptively determined according to the inequality constraint, and an appropriate architecture is obtained. While the random parameters are optimized, the number of fuzzy sets for each feature, the regularization parameter, and the degree of the Chebyshev polynomial are predefined and are not optimized by the algorithm; in Section 4 they are selected by grid search.

Remark 2.

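The approximation capability of SCFS-IFRs can be analyzed as follows. If the random parameters $\mathbf{Z}^{K+1}$ and $\sigma^{K+1}$ of the new fuzzy rule satisfy the inequality constraint and the consequent parameter $\boldsymbol{\beta}^{K+1}$ is evaluated by Equation (17), we can find that $\|e_{K+1}\|^2 \le (r + \mu_{K+1})\|e_K\|^2$ according to Equation (18). This means that $\|e_{K+1}\| \le \|e_K\|$, i.e., the residual error never increases as rules are added. Since $0 < r < 1$ and $\lim_{K\to\infty}\mu_K = 0$, it is obvious that $\lim_{K\to\infty}\|e_K\| = 0$, which proves the approximation capability of SCFS-IFRs.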

4. Performance Evaluation

In this section, the performance of the proposed SCFS-IFRs is investigated. The proposed SCFS-IFRs is compared with some representative models such as RVFLN [32], ELM [33], RVFL-RS [34], HPFNN [35], SCN [19], SCBLS [36], BLS [16], FBLS [7], TSK-FC [37], L2TSK-FC [37], HID-TSK [6], CFBLS [7], FELM [4] and BL-DFIS [38] through numerical experiments on 20 regression datasets and 10 classification datasets.

For a fair comparison, we use the same training strategy for SCFS-IFRs as in [6,7], i.e., ten-fold cross-validation. Every experiment is repeated 30 times and the average result is reported. The optimal parameter settings are obtained by grid search. The number of fuzzy sets for each feature is chosen from {3,5,7}, the regularization parameter from {}, and the degree of the Chebyshev polynomial from {1,2,3,4}. The predefined maximum number of rules is set to 25, the population size to 50, and the maximum number of PSO iterations to 10.

4.1. Regression

Twenty regression datasets selected from the UCI [39] and KEEL [40] repositories are employed to evaluate the performance of SCFS-IFRs on regression tasks. Their details are summarized in Table 3.

Table 3.

Details of 20 regression datasets from UCI and KEEL.

Table 4 compares the testing RMSE of the proposed model with that of eight other algorithms: RVFLN [32], ELM [33], RVFL-RS [34], HPFNN [35], SCN [19], SCBLS [36], BLS [16] and FBLS [7]. The results of RVFLN, ELM, RVFL-RS and HPFNN are taken from [35], and the results of SCN, SCBLS, BLS and FBLS are obtained by running the source code provided by the cited authors. Note that, to be consistent with the results in [35], only the input features are normalized in this experiment. In Table 4, bold entries mark the smallest testing RMSE. SCFS-IFRs achieve the smallest testing RMSE on 10 of the 20 datasets, whereas HPFNN does so on five. These results indicate that SCFS-IFRs generalize better than the other eight algorithms on these benchmarks.

Table 4.

Comparisons of testing RMSE on 20 datasets.

To assess the statistical differences between SCFS-IFRs and the other eight algorithms on the 20 datasets, the Friedman test and the Holm post-hoc test are applied. The result of the Friedman test is displayed in Table 5. SCFS-IFRs have the top rank, and the p-value is less than the significance level of 0.1, which implies that there are significant differences in the generalization-performance rankings of these algorithms.

Table 5.

Result of Friedman tests (significance level of 0.10).

We then compare SCFS-IFRs with the other models using the Holm post-hoc test; the result is shown in Table 6. The null hypothesis of the Holm test is that the rankings of the two compared methods do not differ significantly, and the significance level is set to 0.1. If the adjusted p-value of the Holm test is less than the significance level, the null hypothesis is rejected and the two compared methods differ significantly. The Holm test results in Table 6 show that SCFS-IFRs are significantly better than RVFLN, ELM, RVFL-RS, HPFNN, SCN, BLS, FBLS and SCBLS.
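For readers who want to reproduce this kind of analysis, the sketch below computes average ranks, the Friedman statistic $\chi_F^2 = \frac{12N}{k(k+1)}\bigl[\sum_j R_j^2 - \frac{k(k+1)^2}{4}\bigr]$ for $k$ methods on $N$ datasets, and Holm-adjusted p-values for comparisons against a control method using the usual rank-based statistic $z = (R_j - R_c)/\sqrt{k(k+1)/(6N)}$. This is the standard textbook procedure; whether it matches the exact variant behind Tables 5 and 6 is an assumption, and the function names are hypothetical. Only the Python standard library is used.

```python
import math

def average_ranks(scores):
    """scores[i][j] is the error of method j on dataset i (lower is better).
    Returns each method's average rank; tied methods share the mean of their ranks."""
    n, k = len(scores), len(scores[0])
    totals = [0.0] * k
    for row in scores:
        order = sorted(range(k), key=lambda j: row[j])
        pos = 0
        while pos < k:
            end = pos
            while end + 1 < k and row[order[end + 1]] == row[order[pos]]:
                end += 1
            tied_rank = (pos + end) / 2 + 1              # ranks are 1-based
            for t in range(pos, end + 1):
                totals[order[t]] += tied_rank
            pos = end + 1
    return [t / n for t in totals]

def friedman_statistic(ranks, n):
    """Friedman chi-square statistic from k average ranks over n datasets."""
    k = len(ranks)
    return 12 * n / (k * (k + 1)) * (sum(r * r for r in ranks) - k * (k + 1) ** 2 / 4)

def holm_vs_control(ranks, n, control=0, alpha=0.10):
    """Holm step-down comparison of every method against a control method.
    Returns {method index: (adjusted p-value, reject null hypothesis?)}."""
    k = len(ranks)
    se = math.sqrt(k * (k + 1) / (6 * n))
    pvals = {j: math.erfc(abs(ranks[j] - ranks[control]) / se / math.sqrt(2))
             for j in range(k) if j != control}          # two-sided normal p-values
    m, running, out = len(pvals), 0.0, {}
    for i, (j, p) in enumerate(sorted(pvals.items(), key=lambda kv: kv[1])):
        running = max(running, min(1.0, (m - i) * p))    # keep adjusted p monotone
        out[j] = (running, running < alpha)
    return out
```

With three methods ranked 1, 2 and 3 on each of 10 datasets, `friedman_statistic` returns 20.0 and both comparisons against the control are rejected at the 0.10 level.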

Table 6.

Result of Holm post-hoc tests (significance level of 0.10).

4.2. Classification

We select the following 10 datasets to evaluate the performance of the proposed SCFS-IFRs in classification tasks. The relevant details of the 10 classification datasets are summarized in Table 7.

Table 7.

Details of 10 classification datasets from UCI and KEEL.

The average classification accuracies of the proposed SCFS-IFRs and six other fuzzy models, TSK-FC [37], L2TSK-FC [37], HID-TSK [6], CFBLS [7], FELM [4] and BL-DFIS [38], on the 10 classification datasets are reported in Table 8. The results of TSK-FC, L2TSK-FC, HID-TSK and CFBLS are taken from [7], and those of FELM and BL-DFIS are taken from [38]. Bold entries mark the highest testing classification accuracy. As Table 8 shows, SCFS-IFRs have the highest average classification accuracy. On individual datasets, SCFS-IFRs achieve the highest accuracy on six of the 10 datasets, and their accuracy on the remaining four is comparable.

Table 8.

Comparisons of classification accuracies on 10 datasets.

The incremental learning algorithm is another advantage of SCFS-IFRs, as it helps achieve a compact architecture while maintaining good performance. Table 9 compares the average number of fuzzy rules that each of the seven models uses to reach the accuracy shown in Table 8; bold entries mark the minimum number of rules. Table 9 shows that SCFS-IFRs achieve good classification accuracy with few rules.

Table 9.

Comparisons of average number of fuzzy rules on 10 datasets.

Finally, as in Section 4.1, we use the Friedman test and the Holm post-hoc test to assess the statistical differences between SCFS-IFRs and the other six algorithms on the 10 classification datasets. The result of the Friedman test is displayed in Table 10. SCFS-IFRs have the highest ranking and the p-value is 1.339 × . This implies that there are significant differences in the rankings of these algorithms.

Table 10.

Result of Friedman tests (significance level of 0.10).

The result of the Holm post-hoc test is listed in Table 11. The null hypothesis in the Holm test is that the rankings of the two comparative methods have no significant difference. From Table 11, we can see that the proposed SCFS-IFRs is significantly better than TSK-FC, L2TSK-FC, HID-TSK and FELM, but there is no significant difference in the comparison results between SCFS-IFRs, BL-DFIS and CFBLS.

Table 11.

Result of Holm post-hoc tests (significance level of 0.10).

However, SCFS-IFRs have an advantage over BL-DFIS and CFBLS in terms of interpretability. First, Table 9 shows that the average number of rules of SCFS-IFRs is 4.67, far fewer than the 11.56 rules of CFBLS, and fewer rules generally mean better interpretability. Second, BL-DFIS uses features mapped by an ELM-AE as the premise variables of its fuzzy rules, which hinders the interpretation of those rules.

4.3. The Interpretability of SCFS-IFRs

Although a mathematical measure of interpretability has not been found, a fuzzy inference system with high interpretability should have clear semantic expression and simple fuzzy rules. We discuss the interpretability of SCFS-IFRs through one experimental result on the Monk2 task.

In this experiment, the input universe is divided into four equal parts, which yields five fuzzy subsets with the five linguistic labels given in Section 3.1. The five fuzzy subsets have five fixed centers which are . Two fuzzy rules generated by the proposed SCFS-IFRs are presented in Table 12. The widths of the fuzzy subsets and the rule combination matrices are randomly assigned under the supervisory mechanism and optimized by the proposed adaptive incremental learning algorithm of SCFS-IFRs. The widths of the two fuzzy rules are 0.8139 and 0.4826, and their rule combination matrices are:

and

where $z_{ij}^k = 1$ indicates that the $j$th fuzzy set of the $i$th input variable is activated in the $k$th fuzzy rule; otherwise, it is not activated.

Table 12.

Rule description for the Monk2 dataset.

The activated fuzzy sets and the polynomial consequent functions are listed in the two parts of Table 12, respectively. Since the highest degree of the used Chebyshev polynomial is set as 1, the polynomial consequent functions are linear functions.

In SCFS-IFRs, the fixed linguistic labels of the antecedents make an intuitive interpretation of the antecedents possible, and the rule combination matrices allow more than one fuzzy set of each feature to be activated in a fuzzy rule, which improves the semantic expression of the fuzzy rules. This shortens the fuzzy rules while maintaining model performance and avoids the “rule explosion” problem.

5. Conclusions

By incorporating stochastic configuration and ILFRs into a TSK fuzzy system, a novel neuro-fuzzy system named SCFS-IFRs is established for classification and regression data modeling. The SCFS-IFRs uses a stochastic configuration based incremental learning approach to determine the system architecture and the random parameters. Interpretable fuzzy rules are incrementally generated and the premise parameters are randomly selected based on stochastic configuration strategy. In addition, the PSO algorithm, an intelligence search technique, is used in the process of stochastic configuration to obtain better random parameters and further improve the approximation accuracy.

Hence, the proposed SCFS-IFRs improve both the interpretability and the approximation accuracy of the neuro-fuzzy system. We tested the model’s performance on both regression and classification tasks. In the regression experiments on 20 datasets selected from the UCI [39] and KEEL [40] repositories, SCFS-IFRs performed best on 10 of the 20 datasets and statistically significantly outperformed the other eight state-of-the-art algorithms. In the classification experiments, SCFS-IFRs achieved higher classification accuracy and better interpretability with fewer rules than the other six fuzzy classifiers. In future work, SCFS-IFRs could be combined with a broad learning system to improve approximation performance and reduce training time. In addition, a theoretical analysis of the approximation bound of SCFS-IFRs would be an interesting problem.

Author Contributions

Conceptualization, W.Z. and H.L.; methodology, W.Z.; software, W.Z.; validation, W.Z. and H.L. and M.B.; formal analysis, W.Z.; investigation, W.Z.; resources, M.B.; data curation, W.Z.; writing—original draft preparation, W.Z.; writing—review and editing, H.L.; visualization, W.Z.; supervision, W.Z.; project administration, W.Z.; funding acquisition, H.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the National Natural Science Foundation (NNSF) of China under Grant (61773088, 12071056).

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jang, J.-S.R. ANFIS: Adaptive-Network-Based Fuzzy Inference System. IEEE Trans. Syst. Man Cybern. 1993, 23, 665–685.

- Kar, S.; Das, S.; Ghosh, P.K. Applications of neuro fuzzy systems: A brief review and future outline. Appl. Soft Comput. 2015, 15, 243–259.

- Pedrycz, W.; Chen, S.-M. (Eds.) Interpretable Artificial Intelligence: A Perspective of Granular Computing; Springer: Basingstoke, UK, 2021.

- Wong, S.Y.; Yap, K.S.; Yap, H.J.; Tan, S.C.; Chang, S.W. On Equivalence of FIS and ELM for Interpretable Rule-Based Knowledge Representation. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 1417–1430.

- Zhou, T.; Ishibuchi, H.; Wang, S. Stacked Blockwise Combination of Interpretable TSK Fuzzy Classifiers by Negative Correlation Learning. IEEE Trans. Fuzzy Syst. 2018, 26, 3327–3341.

- Zhang, Y.; Ishibuchi, H.; Wang, S. Deep Takagi–Sugeno–Kang Fuzzy Classifier with Shared Linguistic Fuzzy Rules. IEEE Trans. Fuzzy Syst. 2018, 26, 1535–1549.

- Feng, S.; Chen, C.L.P.; Xu, L.; Liu, Z. On the Accuracy–Complexity Tradeoff of Fuzzy Broad Learning System. IEEE Trans. Fuzzy Syst. 2021, 29, 2963–2974.

- Siminski, K. Prototype based granular neuro-fuzzy system for regression task. Fuzzy Sets Syst. 2022, 449, 5.

- Feng, S.; Chen, C.L.P.; Zhang, C.-Y. A Fuzzy Deep Model Based on Fuzzy Restricted Boltzmann Machines for High-dimensional Data Classification. IEEE Trans. Fuzzy Syst. 2020, 28, 1344–1355.

- Sadjadi, E.N.; Herrero, J.G.; Molina, J.M.; Moghaddam, Z.H. On Approximation Properties of Smooth Fuzzy Models. Int. J. Fuzzy Syst. 2018, 20, 2657–2667.

- Mahmoud, T.A.; Abdo, M.I.; Elsheikh, E.A.; Elshenawy, L.M. Direct adaptive control for nonlinear systems using a TSK fuzzy echo state network based on fractional-order learning algorithm. J. Frankl. Inst. 2021, 358, 9034–9060.

- Rajab, S.; Sharma, V. A review on the applications of neuro-fuzzy systems in business. Artif. Intell. Rev. 2018, 49, 481–510.

- Gaxiola, F.; Melin, P.; Valdez, F.; Castillo, O. Interval type-2 fuzzy weight adjustment for backpropagation neural networks with application in time series prediction. Inf. Sci. 2014, 260, 1–14.

- Wang, D.-G.; Song, W.-Y.; Li, H.-X. Approximation properties of ELM-fuzzy systems for smooth functions and their derivatives. Neurocomputing 2015, 149, 265–274.

- Kv, S.; Pillai, G. Regularized extreme learning adaptive neuro-fuzzy algorithm for regression and classification. Knowl.-Based Syst. 2017, 127, 100–113.

- Chen, C.L.P.; Liu, Z. Broad Learning System: An Effective and Efficient Incremental Learning System Without the Need for Deep Architecture. IEEE Trans. Neural Netw. Learn. Syst. 2018, 29, 10–24.

- Feng, S.; Chen, C.L.P. Fuzzy Broad Learning System: A Novel Neuro-Fuzzy Model for Regression and Classification. IEEE Trans. Cybern. 2020, 50, 414–424.

- Li, M.; Wang, D. Insights into randomized algorithms for neural networks: Practical issues and common pitfalls. Inf. Sci. 2017, 382–383, 170–178.

- Wang, D.; Li, M. Stochastic Configuration Networks: Fundamentals and Algorithms. IEEE Trans. Cybern. 2017, 47, 3466–3479.

- Wang, D.; Li, M. Deep Stochastic Configuration Networks with Universal Approximation Property. In Proceedings of the 2018 International Joint Conference on Neural Networks (IJCNN), Rio de Janeiro, Brazil, 8–13 July 2018; pp. 1–8.

- Pratama, M.; Wang, D. Deep stacked stochastic configuration networks for lifelong learning of non-stationary data streams. Inf. Sci. 2018, 495, 150–174. [Google Scholar] [CrossRef]

- Wang, Q.; Dai, W.; Ma, X.; Shang, Z. Driving amount based stochastic configuration network for industrial process modeling. Neurocomputing 2020, 394, 61–69. [Google Scholar] [CrossRef]

- Sugeno, M.; Kang, G. Structure identification of fuzzy model. Fuzzy Sets Syst. 1988, 28, 15–33. [Google Scholar] [CrossRef]

- Ojha, V.; Abraham, A.; Snášel, V. Heuristic design of fuzzy inference systems: A review of three decades of research. Eng. Appl. Artif. Intell. 2019, 85, 845–864. [Google Scholar] [CrossRef]

- Pratama, M.; Lu, J.; Anavatti, S.; Lughofer, E.; Lim, C.-P. An incremental meta-cognitive-based scaffolding fuzzy neural network. Neurocomputing 2016, 171, 89–105. [Google Scholar] [CrossRef]

- Wang, S.; Chung, F.; HongBin, S.; Dewen, H. Cascaded centralized TSK fuzzy system: Universal approximator and high interpretation. Appl. Soft Comput. 2005, 5, 131–145. [Google Scholar] [CrossRef]

- Zhou, T.; Chung, F.-L.; Wang, S. Deep TSK Fuzzy Classifier with Stacked Generalization and Triplely Concise Interpretability Guarantee for Large Data. IEEE Trans. Fuzzy Syst. 2017, 25, 1207–1221. [Google Scholar] [CrossRef]

- Buckley, J. Sugeno type controllers are universal controllers. Fuzzy Sets Syst. 1993, 53, 299–303. [Google Scholar] [CrossRef]

- Zou, W.; Xia, Y.; Dai, L. Fuzzy Broad Learning System Based on Accelerating Amount. IEEE Trans. Fuzzy Syst. 2022, 30, 4017–4024. [Google Scholar] [CrossRef]

- Yousefi, J. A modified NEFCLASS classifier with enhanced accuracy-interpretability trade-off for datasets with skewed feature values. Fuzzy Sets Syst. 2021, 413, 99–113. [Google Scholar] [CrossRef]

- Han, H.-G.; Sun, C.; Wu, X.; Yang, H.; Qiao, J. Training Fuzzy Neural Network via Multi-Objective Optimization for Non-linear Systems Identification. IEEE Trans. Fuzzy Syst. 2022, 30, 3574–3588. [Google Scholar] [CrossRef]

- Pao, Y.-H.; Takefuji, Y. Functional-link net computing: Theory, system architecture, and functionalities. Computer 1992, 25, 76–79. [Google Scholar] [CrossRef]

- Huang, G.-B.; Zhou, H.; Ding, X.; Zhang, R. Extreme Learning Machine for Regression and Multiclass Classification. IEEE Trans. Syst. Man Cybern. Part B Cybern. 2012, 42, 513–529. [Google Scholar] [CrossRef] [PubMed]

- Vuković, N.; Petrović, M.; Miljković, Z. A comprehensive experimental evaluation of orthogonal polynomial expanded random vector functional link neural networks for regression. Appl. Soft Comput. 2018, 70, 1083–1096. [Google Scholar] [CrossRef]

- Zhang, C.; Oh, S.-K.; Fu, Z. Hierarchical polynomial-based fuzzy neural networks driven with the aid of hybrid network architecture and ranking-based neuron selection strategies. Appl. Soft Comput. 2021, 113, 107865. [Google Scholar] [CrossRef]

- Zhou, W.; Wang, D.; Li, H.; Bao, M. Stochastic configuration broad learning system and its approximation capability analysis. Int. J. Mach. Learn. Cybern. 2022, 13, 797–810. [Google Scholar] [CrossRef]

- Deng, Z.; Choi, K.-S.; Chung, F.-L.; Wang, S. Scalable TSK Fuzzy Modeling for Very Large Datasets Using Mini-mal-Enclosing-Ball Approximation. IEEE Trans. Fuzzy Syst. 2011, 19, 210–226. [Google Scholar] [CrossRef]

- Bai, K.; Zhu, X.; Wen, S.; Zhang, R.; Zhang, W. Broad Learning Based Dynamic Fuzzy Inference System with Adaptive Structure and Interpretable Fuzzy Rules. IEEE Trans. Fuzzy Syst. 2022, 30, 3270–3283. [Google Scholar] [CrossRef]

- Dua, D.; Graff, C. UCI Machine Learning Repository. University of California, Irvine, School of Information and Computer Sciences. 2017. Available online: http://archive.ics.uci.edu/ml (accessed on 26 February 2022).

- Triguero, I.; Gonzalez, S.; Moyano, J.M.; Garcıa, S.; Fernandez, A. KEEL 3.0: An Open Source Software for Multi-Stage Analysis in Data Mining. Int. J. Comput. Intell. Syst. 2017, 10, 1238–1249. [Google Scholar] [CrossRef]

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).