Abstract

Commonsense causal reasoning is the process of understanding the causal dependency between common events or actions. Traditionally, it has been framed as a selection problem. However, in many scenarios, such as causality-based question answering, sufficient candidates are not available and more flexible causes (or effects) are needed, so the ability to generate causes (or effects) is important. In this paper, we propose a causal attention mechanism that leverages external knowledge from CausalNet, followed by a novel fusion mechanism that combines the global causal dependency guidance from the causal attention with the local causal dependency obtained through multi-layer soft attention within the CNN seq2seq architecture. Experimental results consistently demonstrate the superiority of the proposed framework, which achieves BLEU-1 scores of 20.06 and 36.94, BLEU-2 scores of 9.98 and 27.78, and human-evaluated accuracy rates of 35% and 52% on the two evaluation datasets, outperforming the other baselines on nearly all metrics on both datasets.

Keywords: deep learning; sequence to sequence; convolutional networks; commonsense causal reasoning; fine-tuning

MSC: 68T50

1. Introduction

Commonsense causal reasoning entails inferring causal relations between everyday events or actions. For example, the statement “Amanda feels hot” can reasonably cause the effect “Amanda turns on the fan”, indicating a causal link between the two events. The task has traditionally been posed as choosing the most plausible alternative for a premise from a set of candidates, and previous works [1,2] have proposed various causal strength metrics to rank these candidates. However, such ranking models rely on human-labeled candidates, which are unavailable in many natural language processing (NLP) generation scenarios, such as question answering and dialog completion. To address this limitation, we reframe commonsense causal reasoning as a generation problem: the model is given a cause (or effect) sentence as the premise and must generate reasonable effect (or cause) sentences as targets. We refer to cause-to-effect inference as “forward reasoning”. For instance, given the cause sentence “Amanda feels hot”, potential effect sentences include “Amanda turns on the fan” and “Amanda takes off her coat”. In contrast, “backward reasoning” treats the input sentence as an effect and infers its cause. An example cause for the effect “Amanda feels hot” is “The air-conditioner stops working”.

Existing approaches for causal reasoning [1,2,3,4,5] have predominantly been selection-based. These methods can be adapted to generation-based causal reasoning by automatically generating a candidate set for each premise and then applying the selection-based method to choose among the candidates. However, such adapted methods suffer from two limitations. First, the additional online step of constructing an appropriate candidate set for each premise imposes a considerable computational burden on the reasoning process. Second, the adapted model can only select targets from the predefined candidates, which limits its flexibility in handling diverse causal reasoning tasks. In recent years, sequence-to-sequence (seq2seq) learning has achieved tremendous success in various text generation applications, such as machine translation [6,7,8,9,10], summarization [11,12,13,14,15,16], language modeling [17,18,19], story generation [20,21], and dialogue [22,23,24]. Building on this progress, this paper introduces a novel model based on the convolutional sequence-to-sequence framework [25], equipped with a causal attention fusion mechanism for generation-based causal reasoning.

Within the encoder-decoder sequence-to-sequence architecture, the attention mechanism is intentionally designed to capture the semantic dependencies between the current decoding hidden state and each of the encoding hidden states. These semantic dependencies, commonly interpreted as alignments in machine translation and summarization, also play an important role in causal reasoning by serving as causal dependencies. However, the sparsity and ambiguity of commonsense causalities embedded in texts raise concerns about whether the seq2seq model could learn a robust causal dependency model for causality generation. To address this challenge, we first introduce syntactic causal patterns to automatically harvest causalities from the text corpus. Then, we present a causal attention fusion method to augment the seq2seq model with global causal dependency guidance for causality generation. Specifically, we propose a causal attention mechanism that leverages external causal knowledge from CausalNet [2] and a novel attention fusion mechanism that combines the global causal dependency guidance from the causal attention with the local causal dependency obtained from the soft attention mechanism at decoding time steps.

In summary, this paper makes the following contributions:

- We define a new problem, commonsense causality generation, which benefits many NLP applications such as question answering.

- We propose a method to automatically create a corpus of cause-effect pairs that facilitates training for commonsense causality generation.

- We propose a novel causal attention fusion mechanism which introduces the global causal dependencies observed from an external knowledge source. Extensive experiments show that our approach outperforms multiple strong baselines by a substantial margin.

2. Related Work

Commonsense causal reasoning involves capturing and understanding the causal dependencies among events and actions. The most commonly used dataset for this task is the Choice of Plausible Alternatives (COPA), in which each question consists of a premise and two alternatives, along with a prompt specifying the relation (cause or effect) between them. Previous studies can be broadly categorized into three lines: feature-based methods, co-occurrence-based methods, and neural-based methods. All of these approaches are applied to COPA to determine which alternative is the more plausible cause or effect of the premise, as specified by the prompt.

As for feature-based methods, Goodwin [3] proposes the COPACETIC system developed at the University of Texas at Dallas (UTD) for COPA, which treats COPA as a classification problem and uses features derived from varied datasets. For co-occurrence-based methods, Roemmele [1] and Gordon [26] rely on lexical co-occurrence statistics gathered from story corpora. They use the Pointwise Mutual Information (PMI) statistic [27] to measure how often two words co-occur within the same context relative to their overall frequencies. Notably, this co-occurrence measure is order-sensitive. In contrast, Luo [2] proposes a framework that automatically harvests a network of cause-effect terms, referred to as CausalNet, from a large web corpus by identifying sequences that match lexical templates indicative of causality.
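To make the order sensitivity concrete, the following Python sketch computes an ordered PMI, in which a pair (w1, w2) is counted only when w1 precedes w2 within a fixed token window. The window size and the toy story corpus are illustrative assumptions; this is not the exact formulation used in [1] or [26].

```python
import math
from collections import Counter

def ordered_pmi(stories, window=5):
    """Build an order-sensitive PMI scorer from tokenized stories.

    A pair (w1, w2) is counted only if w1 occurs before w2 within `window` tokens,
    so pmi(w1, w2) and pmi(w2, w1) generally differ.
    """
    unigram, pair = Counter(), Counter()
    for tokens in stories:
        unigram.update(tokens)
        for i, w1 in enumerate(tokens):
            for w2 in tokens[i + 1:i + 1 + window]:
                pair[(w1, w2)] += 1
    total_uni = sum(unigram.values())
    total_pair = sum(pair.values())

    def pmi(w1, w2):
        joint = pair[(w1, w2)] / total_pair
        if joint == 0:
            return float("-inf")  # the ordered pair was never observed
        p1, p2 = unigram[w1] / total_uni, unigram[w2] / total_uni
        return math.log(joint / (p1 * p2))

    return pmi

stories = [["hot", "fan"], ["hot", "fan"], ["cold", "coat"]]
pmi = ordered_pmi(stories)
print(pmi("hot", "fan"), pmi("fan", "hot"))  # log(6) ≈ 1.79, and -inf for the reversed order
```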

In neural-based methods, Roemmele [28] introduces a neural encoder-decoder model capable of learning to predict relations between adjacent sequences in stories as a means of modeling causality. Dasgupta [29] adopts meta-reinforcement learning to solve a range of problems, each containing causal structure, using an RNN-based agent trained with model-free reinforcement learning (RL). Additionally, Yeo [30] addresses the problem of multilingual causal reasoning in resource-poor languages, constructing a new causality network (PSG) of cause-effect terms, targeting machine-translated English without any language-specific knowledge of resource-poor languages.

Recently, neural sequence-to-sequence models have achieved great success in machine translation and summarization [31,32,33,34,35], especially CNN seq2seq models [12,25,36,37], which are much faster than other seq2seq architectures. These results show that CNN seq2seq models can capture the latent relations between encoder inputs and decoder outputs. Inspired by this, we formulate the causality generation problem. The proposed model employs a CNN seq2seq architecture combined with a causal attention mechanism. To facilitate training, we introduce a causality dataset consisting of cause-effect sequence pairs. The causal attention mechanism is a hybrid approach that incorporates both traditional attention and the causal strength computed from CausalNet [2]. We further introduce an explicit switch probability to balance the traditional attention distribution and the causal strength distribution. This fusion attention mechanism enables the model to capture causality between texts, allowing it to generate causal sentences from input sentences.

To facilitate a comprehensive comparison among the aforementioned methods, we summarize the main objectives and pros and cons of each method in Table 1.

Table 1.

Comparison of methods for commonsense causal reasoning.

3. Our Approach

In this section, we first describe our methodology for cause-effect pairs extraction. Then we illustrate the causal attention mechanism and its fusion with the soft attention within the CNN seq2seq model for generating commonsense causality.

3.1. Cause-Effect Pairs Extraction

Sentences serve as fundamental semantic units that comprehensively describe events, encompassing both agents and actions. We build a new dataset of cause-effect sentence pairs from novels (described in Section 4). The extraction process involves identifying main and subordinate clauses linked by causal conjunctions, specifically “because” and “so”. To be more specific, we carry out the extraction as follows:

- Cue Sentences Collection. We begin by collecting sentences containing either “because” or “so” through regular expression matching. These sentences are then processed using the Stanford CoreNLP [38] tools for tokenization, POS tagging, constituent parsing, and dependency parsing.

- Negation Detection. During the analysis, if the causal conjunction node in the dependency tree has a negation word sibling, such as “not” or “n’t”, we exclude this sentence from further extraction.

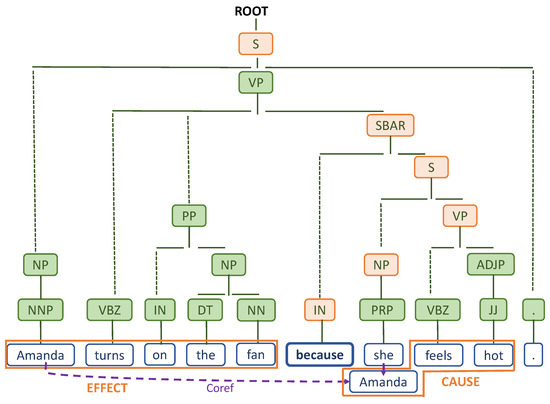

- Clause Detection. To extract cause-effect sentence pairs, we devise syntactic pattern-matching rules. Specifically, we require that “because” act as a subordinating conjunction introducing a subordinate clause (tagged ‘SBAR’), which in turn contains a declarative clause (‘S’) with a subject (‘NP’) and a predicate (‘VP’). We design analogous syntactic rules for the “so that” pattern. Figure 1 further illustrates these syntactic rules.

Figure 1. Cause and effect sentences extracted from a sample sentence after co-reference resolution, presented with their respective constituent parse trees. Nodes colored in light orange indicate their significance for syntactical checking in our extraction process.

- Spans Extraction. Next, we remove redundant punctuation and unwanted sentence constituents from both the main clause and the subordinate clause subtrees. The text spans within these subtrees are then extracted as cause-effect sentence pairs.
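The clause detection and span extraction steps can be sketched in plain Python over a Penn Treebank-style bracketed parse, such as the constituency output of CoreNLP. This is a minimal sketch under several assumptions: it covers only the “because” pattern (not “so that”), and it approximates the dependency-based negation check with a constituency-level one, rejecting a sentence when an `(RB not)` or `(RB n't)` node directly precedes the `SBAR`. All function names are hypothetical.

```python
import re

NEGATIONS = {"not", "n't"}
PUNCT = {",", ".", ":", "``", "''"}
CUE = re.compile(r"\b(because|so)\b", re.IGNORECASE)

def is_cue_sentence(sentence):
    """Cue sentence collection: keep sentences containing 'because' or 'so'."""
    return CUE.search(sentence) is not None

def parse_tree(s):
    """Parse a bracketed parse into (label, children) tuples; leaves are strings."""
    tokens = re.findall(r"\(|\)|[^\s()]+", s)
    pos = 0

    def node():
        nonlocal pos
        pos += 1  # skip "("
        label = tokens[pos]
        pos += 1
        children = []
        while tokens[pos] != ")":
            if tokens[pos] == "(":
                children.append(node())
            else:
                children.append(tokens[pos])
                pos += 1
        pos += 1  # skip ")"
        return (label, children)

    return node()

def is_clause(t):
    """A declarative clause 'S' with both a subject 'NP' and a predicate 'VP' child."""
    if isinstance(t, str) or t[0] != "S":
        return False
    labels = {c[0] for c in t[1] if not isinstance(c, str)}
    return {"NP", "VP"} <= labels

def is_because_sbar(t):
    """An 'SBAR' whose first child is (IN because) and which contains a full clause."""
    if isinstance(t, str) or t[0] != "SBAR" or len(t[1]) < 2:
        return False
    head = t[1][0]
    return (not isinstance(head, str) and head[0] == "IN"
            and isinstance(head[1][0], str) and head[1][0].lower() == "because"
            and any(is_clause(c) for c in t[1][1:]))

def find_because_sbars(t, out):
    """Collect (sbar, negated) pairs; negated if an (RB not/n't) sibling directly precedes the SBAR."""
    if isinstance(t, str):
        return out
    for k, child in enumerate(t[1]):
        if is_because_sbar(child):
            prev = t[1][k - 1] if k > 0 else None
            negated = (isinstance(prev, tuple) and prev[0] == "RB"
                       and prev[1][0].lower() in NEGATIONS)
            out.append((child, negated))
        find_because_sbars(child, out)
    return out

def leaves_except(t, skip=None):
    """Leaf words of t, dropping punctuation nodes and the subtree `skip`."""
    if isinstance(t, str):
        return [t]
    if t is skip or t[0] in PUNCT:
        return []
    return [w for c in t[1] for w in leaves_except(c, skip)]

def extract_pair(parse):
    """Return (cause, effect), or None if no valid 'because' clause exists or one is negated."""
    tree = parse_tree(parse)
    found = find_because_sbars(tree, [])
    if not found or any(neg for _, neg in found):
        return None
    sbar = found[0][0]
    clause = next(c for c in sbar[1][1:] if is_clause(c))
    return " ".join(leaves_except(clause)), " ".join(leaves_except(tree, sbar))

parse = ("(ROOT (S (NP (NNP Amanda)) (VP (VBD turned) (PRT (RP on)) (NP (DT the) (NN fan)) "
         "(SBAR (IN because) (S (NP (PRP she)) (VP (VBD felt) (ADJP (JJ hot)))))) (. .)))")
print(extract_pair(parse))  # ('she felt hot', 'Amanda turned on the fan')
```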

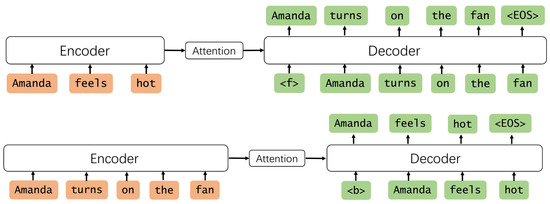

Input Transformations. We generate two data examples from each cause-effect sentence pair, one for forward causal reasoning and one for backward causal reasoning. During training, we prepend one of two special tokens to the target span to indicate whether it is a forward or a backward reasoning example. At test time, we initialize the first token of the generated sequence with the corresponding special token to perform forward or backward reasoning. Because each clause serves as a standalone source or target sequence in these examples, we also perform co-reference resolution on the cue sentences. Finally, as shown in Figure 2, we generate two source-target pairs from the example sentence in Figure 1.
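The input transformation can be sketched as follows. The marker strings `<forward>` and `<backward>` are hypothetical placeholders, since the special tokens are not named here.

```python
# Hypothetical marker tokens; the actual special tokens may differ.
FORWARD, BACKWARD = "<forward>", "<backward>"

def make_examples(cause, effect):
    """Build one forward (cause -> effect) and one backward (effect -> cause) example."""
    return [
        (cause, f"{FORWARD} {effect}"),   # forward reasoning: predict the effect
        (effect, f"{BACKWARD} {cause}"),  # backward reasoning: predict the cause
    ]

def decoder_prefix(direction):
    """At test time, decoding starts from the marker token of the desired direction."""
    return [FORWARD if direction == "forward" else BACKWARD]

for src, tgt in make_examples("Amanda felt hot", "Amanda turned on the fan"):
    print(src, "=>", tgt)
```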

Figure 2.

The cause-effect pair for forward and backward causal reasoning.

3.2. Background: CNN Seq2seq Model

In this section, we briefly introduce the convolutional sequence-to-sequence (CNN seq2seq, https://github.com/facebookresearch/fairseq, accessed on 22 November 2023) model for causality generation. In Section 3.3, we enhance this framework with our proposed causal attention mechanism and the corresponding fusion approach.

In the CNN seq2seq model, given the input elements $\mathbf{x} = (x_1, \dots, x_m)$ and output elements $\mathbf{y} = (y_1, \dots, y_n)$, we obtain the input representations $\mathbf{e} = (e_1, \dots, e_m)$ and output representations $\mathbf{g} = (g_1, \dots, g_n)$ by adding position embedding vectors to the word embeddings. The encoder and decoder of the CNN seq2seq framework are each composed of $L$ stacked convolutional layers ($l = 1, \dots, L$). The output of the $l$-th convolutional layer is denoted as $\mathbf{z}^l = (z_1^l, \dots, z_m^l)$ in the encoder and $\mathbf{h}^l = (h_1^l, \dots, h_n^l)$ in the decoder. Each convolutional layer in both the encoder and decoder uses Gated Linear Units (GLU) [39] and residual connections [40], which facilitate progressive information propagation across layers. The output state at the $i$-th position of the $l$-th layer, $h_i^l$, can be expressed as follows:

$$h_i^l = v\left(W^l \left[h_{i-k/2}^{l-1}, \dots, h_{i+k/2}^{l-1}\right] + b_w^l\right) + h_i^{l-1} \tag{1}$$

where $v$ denotes the GLU, $W^l$ and $b_w^l$ are the convolution parameters, and $k$ is the kernel width.

During decoding, a cross-attention mechanism is incorporated into each decoding layer to introduce adaptable, dynamic weights that quantify the semantic relationships between individual input elements and the current output element. To compute the attention scores (i.e., the dynamic weights), the current decoding state $h_i^l$ is combined with the embedded representation of the previously generated element, $g_i$, to construct a decoding state summary $d_i^l$:

$$d_i^l = W_d^l h_i^l + b_d^l + g_i \tag{2}$$

where $W_d^l$ and $b_d^l$ denote the parameters of the $l$-th linear transformation layer. Within the $l$-th decoding layer, the attention score $a_{ij}^l$ is computed from the dot product between $d_i^l$ and the encoder output state $z_j^L$ for the $j$-th input element, taken from the last (i.e., $L$-th) encoder layer, normalized with a softmax over the input positions:

$$a_{ij}^l = \frac{\exp\left(d_i^l \cdot z_j^L\right)}{\sum_{t=1}^{m} \exp\left(d_i^l \cdot z_t^L\right)} \tag{3}$$

where $i \in \{1, \dots, n\}$, $j \in \{1, \dots, m\}$, and $a_{ij}^l$ takes values between 0 and 1. The conditional input $c_i^l$ to the current decoder layer is then a weighted sum of the encoder states $z_j^L$ and the input element representations $e_j$, using the attention scores from Equation (3):

$$c_i^l = \sum_{j=1}^{m} a_{ij}^l \left(z_j^L + e_j\right) \tag{4}$$

Moreover, $c_i^l$ is added to $h_i^l$ to form the input to the next decoder layer. In the decoding phase, to generate the next element $y_{i+1}$, a linear transformation followed by a softmax is applied to the uppermost decoder output state $h_i^L$ to compute the probability distribution:

$$p\left(y_{i+1} \mid y_1, \dots, y_i, \mathbf{x}\right) = \operatorname{softmax}\left(W_o h_i^L + b_o\right) \tag{5}$$

where $W_o$ and $b_o$ are the parameters of the linear transformation layer.
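The following NumPy sketch computes the decoding state summary, the attention scores of Equation (3), and the conditional input for a single decoder layer. Shapes and random parameters are illustrative assumptions, and implementation details of the fairseq model (such as output scaling and multi-layer stacking) are omitted.

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    ex = np.exp(x)
    return ex / ex.sum(axis=axis, keepdims=True)

def convs2s_attention(h, g, z, e, W_d, b_d):
    """Attention for one decoder layer l.

    h: (n, dim) decoder states h_i^l      g: (n, dim) target embeddings g_i
    z: (m, dim) last-layer encoder z_j^L  e: (m, dim) source embeddings e_j
    """
    d = h @ W_d.T + b_d + g       # decoding state summaries d_i^l
    a = softmax(d @ z.T, axis=1)  # a_ij^l: each row sums to 1 over input positions j
    c = a @ (z + e)               # conditional inputs c_i^l
    return a, c

rng = np.random.default_rng(0)
n, m, dim = 3, 4, 8
h, g = rng.normal(size=(n, dim)), rng.normal(size=(n, dim))
z, e = rng.normal(size=(m, dim)), rng.normal(size=(m, dim))
W_d, b_d = rng.normal(size=(dim, dim)), np.zeros(dim)

a, c = convs2s_attention(h, g, z, e, W_d, b_d)
next_input = h + c  # c_i^l is added to h_i^l before the next decoder layer
```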

3.3. Causal Attention Fusion Mechanism

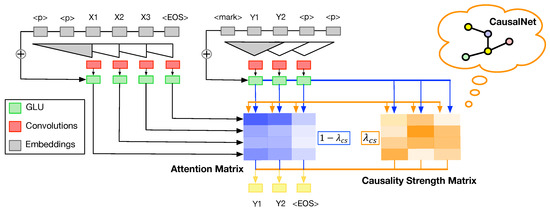

The CNN seq2seq model for causality generation is trained on the extracted cause-effect pairs described in Section 3.1. However, the scarcity and noise of these training pairs raise concerns about whether the model can learn a robust causal dependency model for causality generation. To address this limitation, we propose a causal attention fusion mechanism that incorporates external causal knowledge from CausalNet [2]. The mechanism aggregates causal information from both global and local causal dependencies to refine decoding at every time step. Figure 3 illustrates the proposed attention fusion mechanism.

Figure 3.

Causality Fused attention.

To be more specific, CausalNet [2] is a web-scale network of word-level causal relationships harvested from a large corpus, together with a causal strength metric that quantifies the causal relationships between short texts. In seq2seq models, the attention mechanism governs the semantic connections between input and output elements, dynamically assigning weights, called attention scores, that measure the strength of these connections. In causality generation, such semantic links can be interpreted as causal dependencies. Using CausalNet and its causal strength metric, we compute global causal attention scores. These scores are then integrated with the original attention scores to enrich the semantic representations with causal knowledge, which improves the effectiveness of the causality generation model. Given the multiplicative nature of this metric, we compute causal attention scores from word-level causal strengths in log space. To further illustrate our model design, we consider forward reasoning, in which the input is the cause and the generated target is the effect. Let $x_j$ denote the $j$-th word of the input (cause) sequence $\mathbf{x}$, and $y_i$ the $i$-th word of the target (effect) sequence $\mathbf{y}$. The logarithmic causal strength between $x_j$ and $y_i$ is computed as follows:

Here, the minimum causal strength over all causal pairs is defined as:

where $V$ denotes the vocabulary. Similarly, during backward reasoning, we treat the input sequence as the effect and generate the output sequence as the cause. As described in Section 3.1, we transform the input data format to distinguish between forward and backward reasoning.

To compute the causal attention score from the word-level causal strengths, we first introduce weights for each decoder layer to preserve the hierarchical semantic attention information. Then, we compute the weighted average of the causal strengths between the source word and all target candidate words as the causal attention score:

Here, the causal strength vector collects the causal strengths between the source word and all vocabulary terms, with its $i$-th element corresponding to the $i$-th vocabulary term. Substituting the causal attention score of Equation (9) for the soft attention score of Equation (3) yields the causal attention baseline model, denoted as CAttn in our experiments.
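The following NumPy sketch illustrates one possible reading of this computation; it is not the authors' implementation and rests on several assumptions: word pairs missing from the causal strength table fall back to the minimum causal strength, the per-layer weights are a hypothetical nonnegative matrix over candidate target words (one row per decoding step), and the resulting scores are softmax-normalized over source positions so that they can stand in for soft attention scores. The toy strength values are invented for illustration and are not CausalNet data.

```python
import numpy as np

def log_causal_strength(src_words, vocab, cs_table):
    """S[j, v] = log CS(src_words[j], vocab[v]).

    Assumption: pairs absent from `cs_table` fall back to the minimum
    causal strength observed in the table.
    """
    cs_min = min(cs_table.values())
    return np.array([[np.log(cs_table.get((x, v), cs_min)) for v in vocab]
                     for x in src_words])

def causal_attention(S, layer_weights):
    """Hypothetical causal attention for one decoder layer.

    S: (m, |V|) log causal strengths, one row per source word.
    layer_weights: (n, |V|) nonnegative weights over candidate target words,
        one row per decoding step (an assumed parameterization).
    Returns an (n, m) matrix whose rows sum to 1 over source positions.
    """
    w = layer_weights / layer_weights.sum(axis=1, keepdims=True)  # weighted average over candidates
    scores = w @ S.T                                              # (n, m) averaged log strengths
    scores = scores - scores.max(axis=1, keepdims=True)           # numerical stability
    expd = np.exp(scores)
    return expd / expd.sum(axis=1, keepdims=True)

# Toy, invented strengths (not CausalNet values).
table = {("hot", "fan"): 8.0, ("hot", "coat"): 4.0, ("tired", "sleep"): 6.0}
S = log_causal_strength(["hot", "tired"], ["fan", "coat", "rain"], table)
ca = causal_attention(S, np.array([[1.0, 0.0, 0.0]]))  # all weight on the candidate "fan"
print(ca)  # approximately [[0.667 0.333]]
```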

The original cross-attention mechanism learns local semantic dependencies and propagates them layer by layer. In contrast, the causal attention derived from CausalNet [2] carries global causal dependencies. We therefore propose an attention fusion mechanism that fuses the causal attention with the stacked soft attention, equipping the sequence-to-sequence model with global causal information. Specifically, we combine the global and local causal dependencies through a weighting scheme that makes the model more expressive, weighing the causal attention scores against the soft attention scores with a causal fusion weight. The fused attention score is computed as follows:

where the causal fusion weight is the weight of the causal attention score and controls the influence of global causal information in the model. Equations (3) and (9) give the soft attention score and the causal attention score, respectively. To make the weighting scheme more flexible, we define the causal fusion weight as a normalized trainable parameter, computed as:

where the sigmoid function normalizes the learnable parameter to a value between 0 and 1.
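A minimal sketch of the fusion step, assuming that the fused score is a convex combination of the causal and soft attention scores weighted by the sigmoid-normalized parameter (the exact form of the fusion equation is an assumption here). In a real model the parameter `omega` would be trained with the rest of the network; here it is a plain number.

```python
import numpy as np

def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))

def fuse_attention(soft_attn, causal_attn, omega):
    """Assumed fusion: lam * causal + (1 - lam) * soft, with lam = sigmoid(omega).

    Because both inputs are row-normalized and lam lies in (0, 1),
    the fused rows also sum to 1.
    """
    lam = sigmoid(omega)
    return lam * causal_attn + (1.0 - lam) * soft_attn

soft = np.array([[0.2, 0.8]])
causal = np.array([[0.6, 0.4]])
fused = fuse_attention(soft, causal, omega=0.0)  # lam = 0.5, giving [[0.4, 0.6]]
```

A large positive `omega` lets the global causal attention dominate, while a large negative value recovers the soft attention baseline.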

4. Experiments

In this section, we first present statistics of our corpus and evaluate the quality of the extracted cause-effect training pairs. We then introduce two evaluation datasets and the evaluation metrics adopted in our experiments. Finally, we compare our results on the commonsense causality generation task against a number of baselines.

4.1. Dataset Description

We first describe the text corpus we used for cause-effect pairs extraction, and then introduce two evaluation datasets for the following experiments.

4.1.1. Cause-Effect Pairs Corpus

Causal reasoning research has been hindered by the lack of extensive labeled resources, owing to the complexity of data annotation, substantial costs, and inadequate standardization. Previous works [26,41] show that story texts from novels and books benefit commonsense reasoning tasks more than other sources. We therefore use a large collection of novels, a rich source of commonsense causal information, as the text corpus for cause-effect pair extraction.

Specifically, we first crawl the raw novel corpus from Library Genesis (libgen.is), a search engine that provides free access to articles and books. To maintain the quality of our corpus, we collect a group of prize-winning and top-x novel lists, such as the Nobel Prize in Literature and Time Magazine's All-Time 100 Novels, and limit our crawler to them. The resulting 19,000 novels are split into around 100 million sentences, which serve as our data source after filtering and text cleaning. We then perform the cause-effect pair extraction process described in Section 3.1 on this corpus. Each pair is transformed into two examples following the input transformation shown in Figure 2. In total, we obtain 539,930 cause-effect example pairs, which we split into a training split (431,944 examples, 80%), a validation split (53,993 examples, 10%), and a test split (53,993 examples, 10%).
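The split sizes follow from integer arithmetic on the 539,930 examples. A sketch of a shuffled 80/10/10 split, where the random seed and the choice to divide the remainder equally between validation and test are assumptions:

```python
import random

def split_80_10_10(examples, seed=0):
    """Shuffle, then split 80/10/10; integer arithmetic avoids float rounding."""
    items = list(examples)
    random.Random(seed).shuffle(items)
    n_train = len(items) * 8 // 10
    n_valid = (len(items) - n_train) // 2
    return (items[:n_train],
            items[n_train:n_train + n_valid],
            items[n_train + n_valid:])

train_split, valid_split, test_split = split_80_10_10(range(539_930))
print(len(train_split), len(valid_split), len(test_split))  # 431944 53993 53993
```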

4.1.2. Evaluation Datasets

We conduct experiments on two evaluation datasets for generation-based causal reasoning. The first, denoted NOVTest, is the test split from Section 4.1.1; it contains 53,993 examples and shares the same data distribution as the training data. Example pairs are illustrated in Figure 2.

To compare our model with related research, we also use the Choice of Plausible Alternatives (COPA), a widely used causal reasoning benchmark, as the second evaluation dataset. COPA consists of one thousand multiple-choice questions, each composed of a premise and two alternatives; the task is to select the alternative that is the more plausible cause (or effect) of the premise. We apply the input transformation to each question, prepending the corresponding special token to the premise or alternative to form a cause-effect sequence pair. We use the test split of [26], which contains 500 questions. Because each question yields one pair per alternative, the resulting COPATest evaluation dataset consists of 1000 cause-effect pairs.

4.2. Baselines

There are five baselines in the evaluation experiments, divided into two categories: selection-based methods and generation-based methods. In this section, we describe these baselines in detail.

4.2.1. Selection-Based Methods

To perform the causality generation task, selection-based models first automatically generate a set of target candidates for the given source s and then select the best answer from those candidates. Taking forward reasoning as an example, when a cause s is given as the source, we match s against all cause events extracted from the corpus and select the 10 most semantically similar cause events. The effect events paired with these cause events form the target candidate set, with each cause-effect pair drawn from the training data. We then compute the causal strength between s and each candidate target t and output the candidate with the highest causal strength as the target. We design three selection-based baselines that differ mainly in the matching approach used to build the candidate set for s. The first method, OUniMat (Original Unigram Matches), counts the matches between s and the unigrams in each cause event from the training data (normalized by event length) and selects the best-matching events. A variant, PUniMat (Processed Unigram Matches), removes stopwords from the events before matching. Finally, BiMat (Bigram Matches) uses bigrams instead of unigrams for matching.
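The three matching strategies can be sketched as follows. The stopword list and the `causal_strength` callable passed to `select_target` are hypothetical stand-ins (for example, a CausalNet-based scorer could be plugged in); the sketch only illustrates how OUniMat (`n=1`), PUniMat (`n=1, drop_stopwords=True`), and BiMat (`n=2`) differ.

```python
STOPWORDS = {"a", "an", "the", "is", "was", "to", "of", "and"}  # illustrative only

def ngrams(tokens, n):
    return [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]

def match_score(source, event, n=1, drop_stopwords=False):
    """Number of source n-grams that also occur in the event, normalized by event length."""
    if drop_stopwords:
        source = [w for w in source if w not in STOPWORDS]
        event = [w for w in event if w not in STOPWORDS]
    if not event:
        return 0.0
    event_grams = set(ngrams(event, n))
    matches = sum(1 for g in ngrams(source, n) if g in event_grams)
    return matches / len(event)

def candidate_targets(source, pairs, k=10, **match_kw):
    """pairs: (cause_tokens, effect_tokens) from training data; effects of the k best-matching causes."""
    ranked = sorted(pairs, key=lambda p: match_score(source, p[0], **match_kw), reverse=True)
    return [effect for _, effect in ranked[:k]]

def select_target(source, pairs, causal_strength, k=10, **match_kw):
    """Return the candidate with the highest (hypothetical) causal strength to the source."""
    candidates = candidate_targets(source, pairs, k, **match_kw)
    return max(candidates, key=lambda t: causal_strength(source, t))

pairs = [(["she", "felt", "hot"], ["she", "turned", "on", "the", "fan"]),
         (["it", "was", "cold"], ["he", "wore", "a", "coat"])]
source = ["amanda", "felt", "hot"]
print(candidate_targets(source, pairs, k=1))  # [['she', 'turned', 'on', 'the', 'fan']]
```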

4.2.2. Generation-Based Methods

Although the Transformer seq2seq model can capture long-range dependencies and performs well in text generation tasks, our experiments revealed notable advantages of the CNN seq2seq model in terms of fast convergence and ease of training. We found the Transformer seq2seq model to be data-hungry and sensitive to noise, which makes it less suitable for our weakly supervised causality generation task. Therefore, all generation-based baselines for causality generation are based on the CNN encoder-decoder sequence-to-sequence model. We denote the model using multi-layer soft attention as MultiSAttn (Multi-step Soft Attention) and the model using causal attention as CAttn (Causal Attention). Finally, our proposed FAttn (Fused Attention) model uses the fusion mechanism to combine both attention types. These models generate causalities directly, without the selection step of the selection-based baselines.

4.3. Evaluation Metrics

We evaluate the performance of our methods by automatic metrics and human evaluation.

Automatic Metrics. We use two automatic metrics for evaluation: BLEU scores and Accuracy.

- BLEU scores [42], including BLEU-1, BLEU-2, and BLEU-3, combine the modified n-gram precision with a brevity penalty: $\mathrm{BLEU} = \mathrm{BP} \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right)$, where $\mathrm{BP} = 1$ if $c > r$ and $\mathrm{BP} = e^{1 - r/c}$ otherwise. Here, r is the sum of the best match lengths between each generated text and its corresponding reference texts in the corpus, and c is the total length of the predicted corpus. The weights $w_n = 1/N$ are uniform, and N depends on the BLEU variant (e.g., N = 2 for BLEU-2). $p_n$ is the modified n-gram precision: for each n-gram, one first counts the maximum number of times it occurs in any single reference text, then clips the count of each predicted n-gram by this maximum reference count, sums the clipped counts, and divides by the total number of predicted n-grams.
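For reference, a minimal corpus-level BLEU-N sketch with uniform weights, assuming a single reference per generated sequence (so the best match length is simply the reference length). Established toolkits such as NLTK or sacreBLEU are preferable for reported scores, since tokenization and smoothing choices affect the numbers.

```python
import math
from collections import Counter

def ngram_counts(tokens, n):
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))

def corpus_bleu(hypotheses, references, N=2):
    """Corpus BLEU-N with one reference per hypothesis and uniform weights w_n = 1/N."""
    log_p_sum = 0.0
    for n in range(1, N + 1):
        clipped, total = 0, 0
        for hyp, ref in zip(hypotheses, references):
            h, r = ngram_counts(hyp, n), ngram_counts(ref, n)
            clipped += sum(min(cnt, r[g]) for g, cnt in h.items())  # clip by reference count
            total += sum(h.values())
        if clipped == 0 or total == 0:
            return 0.0  # no smoothing: any zero precision gives BLEU = 0
        log_p_sum += math.log(clipped / total) / N
    c = sum(len(h) for h in hypotheses)     # total predicted length
    r = sum(len(ref) for ref in references)  # best match length with a single reference
    bp = 1.0 if c > r else math.exp(1 - r / c)
    return bp * math.exp(log_p_sum)

print(corpus_bleu([["the", "fan", "is", "on"]], [["the", "fan", "is", "on"]], N=2))  # 1.0
```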

- Accuracy (Acc) is the evaluation metric for COPA, computed as $\mathrm{Acc} = N_{\mathrm{correct}} / N_{\mathrm{all}}$, where $N_{\mathrm{correct}}$ and $N_{\mathrm{all}}$ denote the number of questions with a correct selection and the total number of questions in COPA, respectively. We generate a cause (or effect) from the premise of each COPA question and select the alternative that is more similar to the generated sequence, using BLEU-1 as the similarity score because it is well suited to measuring the alignment between short sequences.
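The COPA selection step can be sketched as follows: sentence-level BLEU-1 (clipped unigram precision times the brevity penalty) scores each alternative against the generated sequence, and accuracy is the fraction of correct choices. Breaking ties in favor of the first alternative is an assumption not specified in the text.

```python
import math
from collections import Counter

def bleu1(hyp, ref):
    """Sentence-level BLEU-1: clipped unigram precision times the brevity penalty."""
    if not hyp:
        return 0.0
    ref_counts = Counter(ref)
    clipped = sum(min(cnt, ref_counts[w]) for w, cnt in Counter(hyp).items())
    bp = 1.0 if len(hyp) > len(ref) else math.exp(1 - len(ref) / len(hyp))
    return bp * clipped / len(hyp)

def choose_alternative(generated, alt1, alt2):
    """Index of the alternative more similar to the generated sequence (ties go to alt1)."""
    return 0 if bleu1(generated, alt1) >= bleu1(generated, alt2) else 1

def accuracy(predictions, gold):
    return sum(p == g for p, g in zip(predictions, gold)) / len(gold)

generated = "the man turned on the fan".split()
choice = choose_alternative(generated, "he turned on the fan".split(), "he went outside".split())
print(choice)  # 0
```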

Human Evaluation. Because the relationship between causes and effects is inherently many-to-many, we supplement the automatic evaluation with human-evaluated accuracy. To this end, we randomly sample generated cause-effect pairs and have them evaluated by three human annotators. For each sample, each annotator manually inspects whether there is a correct causal relation between the source sequence and the generated sequence, and the final judgment is the one agreed on by at least two annotators. We then calculate, for each model, the percentage of generated cause-effect pairs with correct causality, which estimates the model's ability to generate correct causes (or effects) from the given premises.
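The majority-vote rule and the resulting percentage can be sketched as follows, assuming each sample receives exactly three binary judgments.

```python
def majority_label(votes):
    """votes: three booleans; True if at least two annotators judge the causality correct."""
    return sum(votes) >= 2

def human_accuracy(all_votes):
    """Percentage of samples whose majority label is 'correct causality'."""
    labels = [majority_label(v) for v in all_votes]
    return 100.0 * sum(labels) / len(labels)

votes = [[True, True, False], [False, False, True], [True, True, True], [False, True, False]]
print(human_accuracy(votes))  # 50.0
```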

4.4. Experiment Results and Analysis

In this section, we compare our proposed model with all competing models described above both quantitatively and qualitatively.

4.4.1. Comparison Results

We evaluate our model as well as baselines (Section 4.2) on two evaluation datasets (Section 4.1) using both automatic metrics and human evaluation metrics (Section 4.3). The comparison results are shown in Table 2 and Table 3.

Table 2.

Comparisons of results on NOVTest dataset.

Table 3.

Comparisons of results on COPATest dataset.

To be more specific, Table 2 and Table 3 compare the BLEU scores and human-evaluated accuracies of all competing models on the NOVTest and COPATest datasets. Notably, because stop words are removed for the PUniMat model, its bigrams and trigrams cannot be computed, so BLEU-2 and BLEU-3 scores are not reported for it. Except for a slightly lower BLEU-1 score than the MultiSAttn model, the causal attention fusion approach, FAttn, outperforms all other baselines on all metrics on both evaluation datasets. Built on the CNN seq2seq architecture, FAttn achieves BLEU-1 scores of 20.06 and 36.94, BLEU-2 scores of 9.98 and 27.78, and human-evaluated accuracy rates of 35% and 52% on NOVTest and COPATest, respectively.

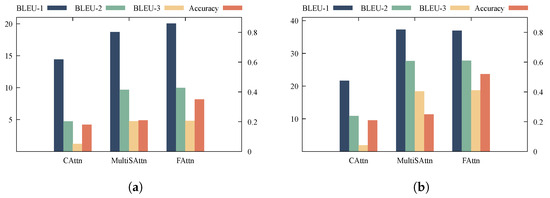

Furthermore, Figure 4 compares the neural-based models, namely CAttn, MultiSAttn, and FAttn, on the NOVTest and COPATest datasets. The CAttn model underperforms among the generation-based models because its attention scores are derived solely from global causal knowledge, which is confined to word-level causal associations. As a result, at each decoding step the causal strength vectors are identical across decoding layers, which hinders the transfer of lower-level learned distributional features to higher layers and prevents the multi-layer convolutional network from combining features effectively. In contrast, the MultiSAttn model, which employs multi-step soft attention, effectively propagates the semantic mapping information represented by attention across layers and achieves slightly better performance. Finally, the FAttn model, using the proposed causal attention fusion mechanism, combines the causal dependency information acquired through multi-step soft attention with global causal attention drawn from external knowledge sources and achieves superior results.

Figure 4.

Comparisons of neural-based models on NOVTest and COPATest datasets. (a) Comparisons on NOVTest dataset; (b) comparisons on COPATest dataset.

4.4.2. Case Studies

We have observed that, compared to the selection-based approach, the generation-based approach is more flexible and effective, performing better in end-to-end experiments. Next, we perform case studies to examine the impact of various attention mechanisms on the generation-based models. The case studies utilize running examples from the NOVTest and COPATest datasets, which are presented in Table 4.

Table 4.

The influence of attention mechanism for causality generation.

Table 4 presents case studies from the NOVTest and COPATest datasets, covering both forward and backward causal reasoning. Because the CAttn model uses the same global causal attention for all decoding layers, it loses hierarchical semantic alignment information. As a result, CAttn tends to generate less informative outputs and also tends to produce out-of-vocabulary words. In contrast, the MultiSAttn and FAttn models, which incorporate multi-layer attention mechanisms, perform considerably better.

For example, in forward reasoning (Table 4), given the cause “his wife was having an affair”, FAttn generates the coherent effect “the husband was disappointed”, because its integration of global causal knowledge allows it to recognize the causal link between “have an affair” and “disappoint”. In contrast, the MultiSAttn model infers the much less plausible effect “the husband was not in the least concerned”. However, because the fused causal attention is word-based, it may fail to fully capture the causal dependencies between events, which can result in contextual semantic incongruity during inference.

5. Implications

Our main aim in this study is to address generation-based commonsense causal reasoning, which has widespread implications for real-life applications such as question answering. While existing methods are effective at causality prediction, they struggle in generation scenarios. To overcome these challenges, we propose a framework based on convolutional sequence-to-sequence models. First, we introduce syntactic causal patterns to automatically extract causalities from a large text corpus. Next, we present a causal attention fusion method that augments the seq2seq model with global causal dependency guidance for causality generation. In Section 4.4, we demonstrate the effectiveness of the components integrated within our framework.

A key insight is that generating causal relations requires both global and local causal knowledge. To capture global knowledge, we employ a causal attention mechanism that draws on external causal knowledge from CausalNet. We then introduce a novel attention fusion mechanism that combines this global causal dependency guidance with the local causal dependency captured by the soft attention mechanism at each decoding step.
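The general idea of fusing global and local attention can be illustrated with a small NumPy sketch. It does not reproduce the architecture described in this paper; it assumes (i) local soft attention computed as a softmax over dot products between one decoder state and the encoder states, (ii) global causal attention obtained by normalizing word-level causal strengths from each source word to a target-side query word, with a hypothetical dictionary `causal_strength` standing in for CausalNet lookups, and (iii) fusion by linear interpolation with an assumed fixed weight `lam`. All function names, strengths, and vectors are illustrative.

```python
import numpy as np


def softmax(x: np.ndarray) -> np.ndarray:
    """Numerically stable softmax over a 1-D score vector."""
    e = np.exp(x - x.max())
    return e / e.sum()


def soft_attention(dec_state: np.ndarray, enc_states: np.ndarray) -> np.ndarray:
    """Local attention: dot-product scores between one decoder state and each encoder state."""
    return softmax(enc_states @ dec_state)


def causal_attention(src_tokens, query_word, causal_strength):
    """Global attention: word-level causal strength from each source word to a
    target-side query word, normalized to sum to 1. `causal_strength` is a
    hypothetical dict standing in for CausalNet lookups; unseen pairs score 0."""
    scores = np.array([causal_strength.get((w, query_word), 0.0) for w in src_tokens])
    total = scores.sum()
    if total == 0.0:
        # No causal evidence for this query word: fall back to uniform weights.
        return np.full(len(src_tokens), 1.0 / len(src_tokens))
    return scores / total


def fused_context(dec_state, enc_states, src_tokens, query_word, causal_strength, lam=0.5):
    """Interpolate global (causal) and local (soft) attention, then form a context vector.
    `lam` is an assumed fixed mixing weight, not a value taken from the paper."""
    a_soft = soft_attention(dec_state, enc_states)
    a_causal = causal_attention(src_tokens, query_word, causal_strength)
    a_fused = lam * a_causal + (1.0 - lam) * a_soft
    return a_fused, a_fused @ enc_states


# Toy usage: random states and made-up causal strengths, for illustration only.
rng = np.random.default_rng(0)
src = ["his", "wife", "was", "having", "an", "affair"]
enc = rng.normal(size=(len(src), 8))  # encoder states, hidden size 8
dec = rng.normal(size=8)              # current decoder state
cs = {("affair", "disappointed"): 0.8, ("having", "disappointed"): 0.1}

weights, ctx = fused_context(dec, enc, src, "disappointed", cs, lam=0.5)
print(src[int(np.argmax(causal_attention(src, "disappointed", cs)))])  # affair
```

With `lam=1.0` the sketch relies entirely on the causal prior, and with `lam=0.0` it reduces to ordinary soft attention; a learned, per-step gate would be a natural alternative to the fixed weight used here.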

6. Conclusions

In this paper, we focus on generation-based commonsense causal reasoning. We introduce a novel causal attention mechanism that harnesses external knowledge from CausalNet, together with a fusion mechanism that integrates global causal dependencies from the causal attention with local causal dependencies obtained through multi-layer soft attention in the CNN seq2seq architecture. Experimental results show that, within this causal attention fusion framework, the FAttn model performs best: it attains BLEU-1 scores of 20.06 and 36.94, BLEU-2 scores of 9.98 and 27.78, and human-evaluated accuracy rates of 35% and 52% on the two evaluation datasets, respectively, outperforming all baselines on every metric for both datasets. However, because both the causal attention and the fusion mechanism operate at the word level, they are limited in capturing intricate causal relationships that arise from events involving multiple agents and complex structures. Consequently, our model occasionally produces contextually or semantically incoherent output when generating causal relations. In future work, it will be important to explore sources of causal knowledge beyond CausalNet that can mitigate the constraints of word-based mechanisms. One promising direction is to incorporate higher-level representations, such as phrase- or sentence-level information, to better capture complex causal relationships.
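For readers less familiar with the metric, the sketch below computes sentence-level BLEU-n with a single reference, following the standard definition of Papineni et al. (clipped n-gram precision, geometric mean of the precisions, brevity penalty). It is an illustration only: this section does not state whether the reported BLEU-1 and BLEU-2 are cumulative or individual n-gram scores, or whether they are corpus- or sentence-level, and the reported scores are on a 0 to 100 scale whereas this function returns values in [0, 1]. The function names `ngrams`, `modified_precision`, and `bleu` are our own.

```python
import math
from collections import Counter


def ngrams(tokens, n):
    """Multiset of the n-grams in a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    """Clipped n-gram precision: each candidate n-gram counts at most as often as in the reference."""
    cand_counts = ngrams(candidate, n)
    ref_counts = ngrams(reference, n)
    total = sum(cand_counts.values())
    if total == 0:
        return 0.0
    clipped = sum(min(count, ref_counts[gram]) for gram, count in cand_counts.items())
    return clipped / total


def bleu(candidate, reference, max_n):
    """Sentence-level cumulative BLEU-max_n with uniform weights and one reference, in [0, 1]."""
    precisions = [modified_precision(candidate, reference, n) for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # any zero precision makes the geometric mean zero
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1.0 - r / c)
    return brevity_penalty * math.exp(sum(math.log(p) for p in precisions) / max_n)


reference = "the husband was disappointed".split()
candidate = "the husband was angry".split()
print(round(bleu(candidate, reference, 1), 4))  # 0.75
print(round(bleu(candidate, reference, 2), 4))  # 0.7071
```

Here three of the four candidate unigrams and two of the three candidate bigrams appear in the reference, so BLEU-1 is 3/4 and BLEU-2 is the geometric mean of 3/4 and 2/3; the lengths are equal, so no brevity penalty applies.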

Author Contributions

Conceptualization, Z.L.; methodology, Z.L. and Y.L.; data curation, Z.L. and Y.L.; writing—original draft preparation, Z.L.; writing—review and editing, S.L.; supervision, S.L.; funding acquisition, S.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was supported by the Natural Science Foundation of Zhejiang Province, China (Grant No. LQ22F020027) and the Key Research and Development Program of Zhejiang Province, China (2023C01041).

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Roemmele, M.; Bejan, C.A.; Gordon, A.S. Choice of plausible alternatives: An evaluation of commonsense causal reasoning. In Proceedings of the AAAI Spring Symposium: Logical Formalizations of Commonsense Reasoning, Stanford, CA, USA, 21–23 March 2011; AAAI Press: Washington, DC, USA, 2011.

- Luo, Z.; Sha, Y.; Zhu, K.Q.; Hwang, S.; Wang, Z. Commonsense causal reasoning between short texts. In Principles of Knowledge Representation and Reasoning: Proceedings of the 15th International Conference (KR-16), Cape Town, South Africa, 25–29 April 2016; AAAI Press: Washington, DC, USA, 2016; pp. 421–431.

- Goodwin, T.; Rink, B.; Roberts, K.; Harabagiu, S.M. UTDHLT: COPACETIC system for choosing plausible alternatives. In Proceedings of the 1st Joint Conference on Lexical and Computational Semantics, Stroudsburg, PA, USA, 7–8 June 2012; pp. 461–466.

- Jabeen, S.; Gao, X.; Andreae, P. Using asymmetric associations for commonsense causality detection. In Proceedings of the Pacific Rim International Conference on Artificial Intelligence, Gold Coast, Australia, 1–5 December 2014; Springer: Berlin/Heidelberg, Germany, 2014; pp. 877–883.

- Lester, B.; Al-Rfou, R.; Constant, N. The power of scale for parameter-efficient prompt tuning. arXiv 2021, arXiv:2104.08691.

- Wang, J.; Hou, Y.; Liu, J.; Cao, Y.; Lin, C.Y. A statistical framework for product description generation. In Proceedings of the 8th International Joint Conference on Natural Language Processing, Taipei, Taiwan, 8 November 2017; Short Papers. Asian Federation of Natural Language Processing: Taipei, Taiwan, 2017; Volume 2, pp. 187–192.

- Chen, Y.; Li, V.O.; Cho, K.; Bowman, S.R. A Stable and Effective Learning Strategy for Trainable Greedy Decoding. arXiv 2018, arXiv:1804.07915.

- Song, K.; Tan, X.; He, D.; Lu, J.; Qin, T.; Liu, T.Y. Double path networks for sequence to sequence learning. arXiv 2018, arXiv:1806.04856.

- Wu, F.; Fan, A.; Baevski, A.; Dauphin, Y.N.; Auli, M. Pay Less Attention with Lightweight and Dynamic Convolutions. arXiv 2019, arXiv:1901.10430.

- Wang, W.; Jiao, W.; Hao, Y.; Wang, X.; Shi, S.; Tu, Z.; Lyu, M. Understanding and improving sequence-to-sequence pretraining for neural machine translation. arXiv 2022, arXiv:2203.08442.

- Fan, A.; Grangier, D.; Auli, M. Controllable abstractive summarization. arXiv 2017, arXiv:1711.05217.

- Liu, Y.; Luo, Z.; Zhu, K. Controlling length in abstractive summarization using a convolutional neural network. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 4110–4119.

- Narayan, S.; Cohen, S.B.; Lapata, M. Don’t Give Me the Details, Just the Summary! Topic-Aware Convolutional Neural Networks for Extreme Summarization. arXiv 2018, arXiv:1808.08745.

- Guo, J.; Xu, L.; Chen, E. Jointly masked sequence-to-sequence model for non-autoregressive neural machine translation. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Virtual, 5–10 July 2020; pp. 376–385.

- Kouris, P.; Alexandridis, G.; Stafylopatis, A. Abstractive text summarization: Enhancing sequence-to-sequence models using word sense disambiguation and semantic content generalization. Comput. Linguist. 2021, 47, 813–859.

- Joshi, A.; Fidalgo, E.; Alegre, E.; Fernández-Robles, L. DeepSumm: Exploiting topic models and sequence to sequence networks for extractive text summarization. Expert Syst. Appl. 2023, 211, 118442.

- Baevski, A.; Auli, M. Adaptive input representations for neural language modeling. arXiv 2018, arXiv:1809.10853.

- Song, K.; Tan, X.; Qin, T.; Lu, J.; Liu, T.Y. MASS: Masked sequence to sequence pre-training for language generation. arXiv 2019, arXiv:1905.02450.

- Lin, S.C.; Yang, J.H.; Nogueira, R.; Tsai, M.F.; Wang, C.J.; Lin, J. Conversational question reformulation via sequence-to-sequence architectures and pretrained language models. arXiv 2020, arXiv:2004.01909.

- Fan, A.; Lewis, M.; Dauphin, Y. Hierarchical neural story generation. arXiv 2018, arXiv:1805.04833.

- Fan, A.; Lewis, M.; Dauphin, Y. Strategies for Structuring Story Generation. arXiv 2019, arXiv:1902.01109.

- Miller, A.H.; Feng, W.; Fisch, A.; Lu, J.; Batra, D.; Bordes, A.; Parikh, D.; Weston, J. ParlAI: A dialog research software platform. arXiv 2017, arXiv:1705.06476.

- Dinan, E.; Roller, S.; Shuster, K.; Fan, A.; Auli, M.; Weston, J. Wizard of Wikipedia: Knowledge-powered conversational agents. arXiv 2018, arXiv:1811.01241.

- Zhao, J.; Mahdieh, M.; Zhang, Y.; Cao, Y.; Wu, Y. Effective sequence-to-sequence dialogue state tracking. arXiv 2021, arXiv:2108.13990.

- Ott, M.; Edunov, S.; Baevski, A.; Fan, A.; Gross, S.; Ng, N.; Grangier, D.; Auli, M. fairseq: A Fast, Extensible Toolkit for Sequence Modeling. In Proceedings of the NAACL-HLT 2019: Demonstrations, Minneapolis, MN, USA, 2–7 June 2019.

- Gordon, A.S.; Bejan, C.A.; Sagae, K. Commonsense causal reasoning using millions of personal stories. In Proceedings of the Association for the Advancement of Artificial Intelligence, San Francisco, CA, USA, 7–11 August 2011; AAAI Press: Washington, DC, USA, 2011.

- Church, K.W.; Hanks, P. Word association norms, mutual information, and lexicography. Comput. Linguist. 1990, 16, 22–29.

- Roemmele, M.; Gordon, A. An encoder-decoder approach to predicting causal relations in stories. In Proceedings of the 1st Workshop on Storytelling, New Orleans, LA, USA, 7 June 2018; Association for Computational Linguistics: New Orleans, LA, USA, 2018; pp. 50–59.

- Dasgupta, I.; Wang, J.X.; Chiappa, S.; Mitrovic, J.; Ortega, P.A.; Raposo, D.; Hughes, E.; Battaglia, P.; Botvinick, M.; Kurth-Nelson, Z. Causal Reasoning from Meta-reinforcement Learning. arXiv 2019, arXiv:1901.08162v1.

- Yeo, J.; Wang, G.; Cho, H.; Choi, S.; Hwang, S. Machine-Translated Knowledge Transfer for Commonsense Causal Reasoning. In Proceedings of the 32nd AAAI Conference on Artificial Intelligence, (AAAI-18), the 30th innovative Applications of Artificial Intelligence (IAAI-18), and the 8th AAAI Symposium on Educational Advances in Artificial Intelligence (EAAI-18), New Orleans, LA, USA, 2–7 February 2018; pp. 2021–2028.

- Bahdanau, D.; Cho, K.; Bengio, Y. Neural Machine Translation by Jointly Learning to Align and Translate. arXiv 2014, arXiv:1409.0473.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Proceedings of the Advances in Neural Information Processing Systems 27: Annual Conference on Neural Information Processing Systems 2014, Montreal, QC, Canada, 8–13 December 2014; pp. 3104–3112.

- Paulus, R.; Xiong, C.; Socher, R. A Deep Reinforced Model for Abstractive Summarization. arXiv 2017, arXiv:1705.04304.

- See, A.; Liu, P.J.; Manning, C.D. Get to the point: Summarization with pointer-generator networks. In Proceedings of the 55th Annual Meeting of the Association for Computational Linguistics, ACL 2017, Vancouver, BC, Canada, 30 July–4 August 2017; Volume 1: Long Papers, pp. 1073–1083.

- Nallapati, R.; Zhou, B.; dos Santos, C.N.; Gülçehre, Ç.; Xiang, B. Abstractive text summarization using sequence-to-sequence RNNs and beyond. In Proceedings of the 20th SIGNLL Conference on Computational Natural Language Learning, CoNLL 2016, Berlin, Germany, 11–12 August 2016; pp. 280–290.

- Gehring, J.; Auli, M.; Grangier, D.; Yarats, D.; Dauphin, Y.N. Convolutional sequence to sequence learning. In Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, Australia, 6–11 August 2017; pp. 1243–1252.

- Fan, A.; Grangier, D.; Auli, M. Controllable abstractive summarization. In Proceedings of the 2nd Workshop on Neural Machine Translation and Generation, NMT@ACL 2018, Melbourne, Australia, 20 July 2018; pp. 45–54.

- Manning, C.D.; Surdeanu, M.; Bauer, J.; Finkel, J.; Bethard, S.J.; McClosky, D. The Stanford CoreNLP Natural Language Processing Toolkit. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics: System Demonstrations, Baltimore, MD, USA, 23–24 June 2014; pp. 55–60.

- Dauphin, Y.N.; Fan, A.; Auli, M.; Grangier, D. Language modeling with gated convolutional networks. In Proceedings of the 34th International Conference on Machine Learning, ICML 2017, Sydney, NSW, Australia, 6–11 August 2017; pp. 933–941.

- He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition, CVPR 2016, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

- Trinh, T.H.; Le, Q.V. A simple method for commonsense reasoning. arXiv 2018, arXiv:1806.02847.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.-J. BLEU: A Method for Automatic Evaluation of Machine Translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 6–12 July 2002; pp. 311–318.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).