Abstract

In this work, we introduce an extended gradient method that uses the gradients of the preceding two iterates to construct the search direction for minimizing smooth and strongly convex functions in both centralized and decentralized settings. We establish linear convergence of the iterate sequences in both settings. Numerical experiments show that the centralized extended gradient method converges faster than the compared algorithms, and that the proposed search direction also improves the convergence of existing algorithms in both settings.

Keywords: gradient method; decentralized optimization; strongly convex optimization; acceleration; convergence analysis

MSC: 90C25

1. Introduction

In this work, we consider the unconstrained optimization problem

$$\min_{x} f(x), \tag{1}$$

where the cost function $f$ is differentiable and strongly convex, and its gradient is L-Lipschitz continuous. Starting from a given initial point, the extended gradient method updates the iterates by

$$x_{k+1} = x_k - \alpha\left(\nabla f(x_k) + \nabla f(x_{k-1})\right), \tag{2}$$

where the step size $\alpha > 0$ is sufficiently small; its admissible range is analyzed below to ensure the convergence of the extended gradient algorithm.
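As an illustration, the update of the extended gradient method (a step along the sum of the gradients at the two most recent iterates) can be sketched in a few lines of Python. This is a minimal sketch under stated assumptions, not the authors' implementation: the function name `extended_gradient`, the test quadratic, the choice of starting the preceding iterate at the initial point, and the step size 0.04 (picked by hand, not taken from the bound analyzed in Section 2) are all illustrative.

```python
def extended_gradient(grad, x0, alpha, iters=200):
    """Iterate x_{k+1} = x_k - alpha * (grad(x_k) + grad(x_{k-1}))."""
    x = list(x0)
    # Assumption: the "preceding" iterate is initialized to x0 itself.
    g_prev = grad(x)
    for _ in range(iters):
        g = grad(x)
        x = [xi - alpha * (gi + gpi) for xi, gi, gpi in zip(x, g, g_prev)]
        g_prev = g  # the gradient just used becomes the "previous" one
    return x


def grad_quadratic(x):
    # Gradient of the hypothetical test function f(x) = 0.5 * (x1^2 + 10 * x2^2),
    # which is strongly convex with mu = 1 and has a 10-Lipschitz gradient.
    return [1.0 * x[0], 10.0 * x[1]]


x_final = extended_gradient(grad_quadratic, [5.0, -3.0], alpha=0.04)
print(x_final)  # both coordinates should be close to the minimizer (0, 0)
```

Only one extra gradient vector has to be stored between iterations, so the memory cost relative to plain gradient descent is a single additional vector.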

We first briefly discuss accelerated gradient schemes in which the update uses information from the preceding two iterates. One example is the gradient method with extrapolation, which combines a gradient step, scaled by a step size, with an extrapolation term weighted by a scalar. When the step size and the extrapolation scalar are both held constant, the method is equivalent to the heavy ball method [1]. In [2], a linear convergence rate was established for the heavy ball method applied to smooth, twice continuously differentiable, and strongly convex functions. Further, the authors of [3] constructed an example showing that, even if the objective function is strongly convex, the heavy ball method can fail to converge when the function is not twice continuously differentiable. Thus, twice continuous differentiability of the objective function is a necessary condition in the convergence guarantees of the heavy ball method. Based on this fact, for strictly convex quadratic functions, the recent work [4] obtained the iteration complexity of the heavy ball method under the assumption that appropriate upper and lower bounds on the eigenvalues of the Hessian matrix are known. Several accelerated gradient descent methods with a structure similar to that of the heavy ball method have also been proposed in [5]; they converge linearly to the minimizer of smooth and strongly convex functions with the optimal iteration complexity. Compared to the heavy ball method, these accelerated methods make fuller use of the difference between the preceding two iterates. Moreover, the main practical advantage of accelerated gradient descent methods for convex problems is that they reach the optimum more quickly [6].
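For comparison, the heavy ball iteration with constant parameters can be sketched as follows. The code assumes the standard form $x_{k+1} = x_k - \alpha \nabla f(x_k) + \beta (x_k - x_{k-1})$ from [1]; the function name, the test quadratic, and the parameter choice (Polyak's classical tuning for a quadratic with known $\mu$ and $L$) are illustrative assumptions rather than settings used in this paper.

```python
import math


def heavy_ball(grad, x0, alpha, beta, iters=200):
    """Iterate x_{k+1} = x_k - alpha * grad(x_k) + beta * (x_k - x_{k-1})."""
    x_prev = list(x0)  # assumption: x_{-1} = x_0, so the first momentum term is zero
    x = list(x0)
    for _ in range(iters):
        g = grad(x)
        x_next = [xi - alpha * gi + beta * (xi - xpi)
                  for xi, gi, xpi in zip(x, g, x_prev)]
        x_prev, x = x, x_next
    return x


def grad_quadratic(x):
    # Gradient of the hypothetical test function f(x) = 0.5 * (x1^2 + 10 * x2^2).
    return [1.0 * x[0], 10.0 * x[1]]


mu, L = 1.0, 10.0
alpha = 4.0 / (math.sqrt(L) + math.sqrt(mu)) ** 2
beta = ((math.sqrt(L) - math.sqrt(mu)) / (math.sqrt(L) + math.sqrt(mu))) ** 2
x_hb = heavy_ball(grad_quadratic, [5.0, -3.0], alpha, beta)
print(x_hb)  # both coordinates should be close to the minimizer (0, 0)
```

Here the memory of the method enters through the difference of iterates, whereas the extended gradient method studied in this paper carries its memory through the previous gradient.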

On the other hand, an accelerated scheme can also update the variables using the gradients of several preceding iterates. As mentioned in [7,8], the optimistic gradient descent-ascent (OGDA) method and the inertial gradient algorithm with Hessian damping (IGAHD) update the variable via the difference of the gradients of the preceding two iterates. For the least mean squares (LMS) estimation of graph signals, the extended LMS algorithm in [9] and the proportionate-type graph LMS algorithm in [10] update the variable via a weighted sum of the gradients of several preceding iterates. Further, the Anderson acceleration gradient descent method (AA-PGA) in [11] updates the variable using a convex combination of the gradients of several preceding iterates. However, AA-PGA converges linearly to the optimal solution under an additional assumption. Inspired by these works, we introduce an extended gradient method in which the variables are updated along the direction of the sum of the gradients of the preceding two iterates. The main purpose of this work is to analyze the convergence of the extended gradient method for finding the optimal solution of problem (1). We show that the linear convergence of the extended gradient method is guaranteed when the step size is less than a given upper bound.

Next, we consider a class of smooth and strongly convex functions on a real vector space equipped with the Euclidean norm $\|\cdot\|$ and inner product $\langle \cdot, \cdot \rangle$.

Definition 1.

A function $f$ is L-smooth if its gradient is L-Lipschitz continuous, that is,

$$\|\nabla f(x) - \nabla f(y)\| \le L \|x - y\| \quad \text{for all } x, y.$$

If f is convex and L-smooth it also holds that [12].

Definition 2.

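A function $f$ is μ-strongly convex if there exists $\mu > 0$ such that

$$f(y) \ge f(x) + \langle \nabla f(x), y - x \rangle + \frac{\mu}{2}\|y - x\|^2 \quad \text{for all } x, y.$$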
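As a worked example of Definitions 1 and 2 (an illustration added here, with the matrix $Q$ and vector $b$ as hypothetical data), consider a quadratic function with a symmetric positive definite matrix $Q$. The standard gradient-Lipschitz and first-order strong convexity inequalities can be verified directly:

```latex
\begin{aligned}
f(x) &= \tfrac{1}{2} x^{\top} Q x - b^{\top} x, \qquad \nabla f(x) = Qx - b, \\
\|\nabla f(x) - \nabla f(y)\| &= \|Q(x - y)\| \le \lambda_{\max}(Q)\,\|x - y\|, \\
f(y) - f(x) - \langle \nabla f(x), y - x \rangle
  &= \tfrac{1}{2}(y - x)^{\top} Q (y - x) \ge \tfrac{\lambda_{\min}(Q)}{2}\,\|y - x\|^{2}.
\end{aligned}
```

Hence such a quadratic is L-smooth with $L = \lambda_{\max}(Q)$ and μ-strongly convex with $\mu = \lambda_{\min}(Q)$.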

2. Analysis of the Extended Gradient Method

In this section, to establish the convergence of the extended gradient method, we first obtain an important inequality in Theorem 1, where the upper bound on will be given in terms of the linear combinations of , and . This inequality is vital and will be used in the following analysis.

Theorem 1.

Assume that f is μ-strongly convex, and the gradient is L-Lipschitz continuous. Let the step size α satisfy the condition , and be generated by the extended gradient method (2). Then, the following hold.

(i) The iterates satisfy the following linear inequality

where is the optimal solution.

(ii) converges to the optimal value at the sub-linear rate of .

Proof.

(i) According to the update equation in (2), for any , we have

where , , and .

Now, substituting , and noting that , we first bound the term ,

Secondly, we provide a lower bound of with the strongly convex property of ,

Finally, we bound the term by employing and . Based on the smoothness of f, we can obtain that

Add and subtract the inner product to the right-hand side of (6) and rearrange the terms to have

Then by the bound of (5) (k is replaced by ), (7) can be rewritten as

wherein, in terms of the update Equation (2) and the bound of (4), the term in (8) can be further expressed as

Therefore, the bound of can be yielded as

Considering that in (11), we complete the proof of (i).

(ii) Rearranging the terms in (11), it holds

For , we obtain and thus

Summing over , we have

Noticed that , then . Therefore, the following inequality holds

which means that converges to the optimal value at the sub-linear rate of . □

The result in (ii) of Theorem 1 shows that the function values generated by the extended gradient method converge to the optimal value of problem (1) at a sublinear rate. To derive the linear convergence of the extended gradient method, we next give two important lemmas.

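Lemma 1

([13], Corollary 8.1.29). Let $A$ be a nonnegative matrix and $x$ be a positive vector. If $Ax < \beta x$ for a scalar $\beta$, then $\rho(A) < \beta$, where $\rho(A)$ denotes the spectral radius of $A$.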

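Lemma 2

([14], Lemma 1 in Section 2.1). It holds that $\lim_{k \to \infty} \|A^k\|^{1/k} = \rho(A)$, i.e., the spectral radius of $A$ gives the asymptotic growth rate of $\|A^k\|$: for every $\epsilon > 0$ there is $c(\epsilon)$ such that $\|A^k\| \le c(\epsilon)\,(\rho(A) + \epsilon)^k$ for all $k$.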
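The two lemmas can be checked numerically on a small example. The sketch below uses a hypothetical nonnegative 2×2 matrix and the positive vector $z = (1, 1)$; it verifies the componentwise condition $Az < z$, computes the spectral radius from the trace and determinant, and compares it with the growth rate $\|A^k\|^{1/k}$ for $k = 50$ in the Frobenius norm. The matrix, the vector, and the helper names are assumptions chosen for illustration and are not the matrix $A$ of Theorem 2.

```python
import math


def mat_mul(A, B):
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)]
            for i in range(n)]


def spectral_radius_2x2(A):
    # Eigenvalues of a real 2x2 matrix solve t^2 - tr * t + det = 0.
    tr = A[0][0] + A[1][1]
    det = A[0][0] * A[1][1] - A[0][1] * A[1][0]
    disc = tr * tr - 4.0 * det
    if disc >= 0.0:
        root = math.sqrt(disc)
        return max(abs(tr + root), abs(tr - root)) / 2.0
    return math.sqrt(det)  # complex conjugate pair: |lambda|^2 = det


def frobenius_norm(A):
    return math.sqrt(sum(v * v for row in A for v in row))


A = [[0.5, 0.3], [0.1, 0.6]]   # hypothetical nonnegative matrix
z = [1.0, 1.0]                 # hypothetical positive vector
Az = [sum(a * zi for a, zi in zip(row, z)) for row in A]
lemma1_condition = all(azi < zi for azi, zi in zip(Az, z))  # A z < z componentwise
rho = spectral_radius_2x2(A)

k = 50
P = [[1.0, 0.0], [0.0, 1.0]]
for _ in range(k):
    P = mat_mul(P, A)          # P = A^k after the loop
growth = frobenius_norm(P) ** (1.0 / k)

print(lemma1_condition, rho, growth)
```

In this example the certificate $Az < z$ holds and the computed spectral radius is indeed below 1, while $\|A^k\|^{1/k}$ is already close to it at $k = 50$.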

Together with (i) of Theorem 1, Lemma 1 and Lemma 2, we give the following Theorem 2. For notational convenience, we define .

Theorem 2.

The same assumptions hold as in Theorem 1. Let the sequence generated by the extended gradient method and be the optimal solution. Then for every , there is such that , for all k, where is a constant.

Proof.

By the result in (i) of Theorem 1, we first readily find that , here

.

Under the assumption that the spectral radius of matrix A is less than 1 (i.e., ), the statement of convergence can be obtained via Lemma 2. Thus, it remains to analyze the spectral radius of the matrix A and show that the spectral radius of A is less than 1. Find a positive vector satisfying , then the following inequalities hold

To ensure that the first inequality in (16) holds, the necessary condition is that , which means . Then, according to the sign of , we further deduce the bounds of by considering the following two cases.

(i) If , we can derive the first inequality of (16) as

due to . Then, since the left-hand side of (17) is less than 0, we have

due to . Further, it follows from (18) that , namely, , which leads to a contradiction to the assumption.

(ii) If , the first inequality of (16) can be written as

due to . Then, based on the fact that the left-hand side of (19) is less than 0, we have

due to . On rearranging the terms of (20), we obtain that , which is consistent with the assumption. Therefore, the upper bound of step size can be reduced as

Finally, we have demonstrated that there exists a positive vector such that , then it is clear according to Lemma 1 for . □

Remark 1.

Choose and assume that for some fixed k. It follows that

which means that . By induction and by the selection of the initial points, it can be ensured that for all . As a consequence, by defining , we obtain . This is similar to the inexact condition considered in [14] (Theorem 2 in Section 4.2.3) and in [15,16]. Note that [14] considers methods of the form

and where for convex functions with Lipschitz continuous gradients, whereas [15,16] consider methods of type for smooth functions without convexity. The constant 2 in the extended gradient method can relax the inexact condition.

3. Analysis of the Decentralized Extended Gradient Method

Traditional (centralized) optimization methods are founded on the strong assumption that all data samples are stored and processed at a single computing unit [17]. In networked multi-agent systems, however, the data and the function information related to the optimization problem are spread across the agents, and distributed schemes are introduced into the optimization framework [18]. In the distributed fashion, all data are transmitted to a fusion center that carries out the optimization and sends the updated information back to all agents. Nevertheless, distributed operation is gradually being replaced by the decentralized manner for reasons of privacy and communication cost. Decentralized optimization is designed for networked systems; it can fully utilize the resources of multiple computing units and improve the reliability of the network.

In this section, we focus on distributed gradient methods. The earlier distributed gradient methods [19,20,21] converge slowly to the optimal solution because they use a diminishing step size. With a constant step size, these methods converge only to a neighborhood of the optimal solution. Recently, distributed gradient methods [22,23,24] that incorporate additional techniques have been shown to converge linearly to the optimal solution. These techniques include multi-step consensus, the difference between two consecutive iterates, and gradient tracking, which can increase the computation and communication burden. Thus, we investigate the behavior of the decentralized extended gradient method, which works with a simple update mechanism.

3.1. Decentralized Extended Gradient Method

This section develops a decentralized version of the extended gradient method for the following problem:

where each local cost function is L-smooth and μ-strongly convex, and the problem is defined over a network consisting of n agents. To apply the extended gradient method to problem (22) in the decentralized setting, all agents carry out the extended gradient method in parallel.

A set of local variables is introduced, and is assigned to agent i. Denote the aggregate variable X and the aggregate gradient as and . The decentralized extended gradient method updates the iterates :

where the weight matrix W is symmetric and doubly stochastic, i.e., , and , herein . The weight matrix W is generated based on the network. When agents i and j are neighbors or , . In the decentralized setting, the local function is accessed only by agent i, . All agents cooperate to optimize the global function f via local intra-node computation and inter-node communication. The local computation is carried out on the individual agent, i.e., the local variable of agent i is updated using the gradient information of its local function . The communication is carried out over the network, i.e., the local variable is updated by combining the weighted average of its neighbors' local variables, which enforces the consensus of all local variables.
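The interplay of communication and local computation can be sketched on a toy network. The sketch assumes that each agent first averages its neighbors' variables through W and then steps along the sum of its two most recent local gradients, i.e., $X_{k+1} = W X_k - \alpha(\nabla F(X_k) + \nabla F(X_{k-1}))$; this specific form, the three-agent path network, the scalar local costs $f_i(x) = \tfrac{1}{2}(x - b_i)^2$, and the step size are assumptions made for illustration and may differ from (23).

```python
def decentralized_extended_gradient(W, local_grads, x0, alpha, iters=400):
    """Each agent i holds a scalar x[i]; W mixes neighbors, gradients stay local."""
    n = len(W)
    x = list(x0)
    # Assumption: the preceding iterate equals the initial one.
    g_prev = [local_grads[i](x[i]) for i in range(n)]
    for _ in range(iters):
        g = [local_grads[i](x[i]) for i in range(n)]
        # Communication: weighted average over neighbors (zero weight elsewhere).
        mixed = [sum(W[i][j] * x[j] for j in range(n)) for i in range(n)]
        # Computation: step along the sum of the two latest local gradients.
        x = [mixed[i] - alpha * (g[i] + g_prev[i]) for i in range(n)]
        g_prev = g
    return x


# Hypothetical local costs f_i(x) = 0.5 * (x - b_i)^2; the sum is minimized at mean(b) = 3.
b = [1.0, 2.0, 6.0]
local_grads = [lambda v, bi=bi: v - bi for bi in b]

# Symmetric, doubly stochastic weights for the path graph 1 - 2 - 3.
W = [[2 / 3, 1 / 3, 0.0],
     [1 / 3, 1 / 3, 1 / 3],
     [0.0, 1 / 3, 2 / 3]]

x_agents = decentralized_extended_gradient(W, local_grads, [0.0, 0.0, 0.0], alpha=0.05)
average = sum(x_agents) / len(x_agents)
print(x_agents, average)
```

Agent 1 and agent 3 never exchange information directly (their weight is zero), so any agreement between them is reached only through agent 2.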

3.2. Convergence Result

We need to define the average vector: . Then, we give the upper bounds on and by using the linear combinations of the values of the preceding two iterates.

Lemma 3.

Let be the iterates generated by the updates in (23). If , , then the following relation holds:

where and , here in and .

If and , where , , , and , then and thus and converge to zero at the linear rate of .

Proof.

First, we establish a linear system by using the upper bounds on and . Next, we can find the range of such that . From the update in (23), we derive

Step 1. Bound .

From the above equation, it follows that

Notice that and . Therefore, it follows

where . For the term , we can further bound as

where choosing such that . So

Similarly, we have

Step 2. Bound .

Consider the first term in (31), we have

where . For the second term and third term in (31), we bound them as

and

due to the smoothness of global function f. Substituting (32)–(34) into (31), it holds

Next, according to Lemma 1, we give the range of and and a positive vector such that , which is equivalent to the following inequalities

If , according to the definition that , we have that . Since satisfies (37), we require

4. Numerical Experiments

In this section, we perform computational experiments on both the centralized and the decentralized problems to demonstrate the efficiency of the extended gradient method. We compare our proposed method with several well-known algorithms. All algorithms are programmed in MATLAB (R2018b, MathWorks, Natick, MA, USA) and run on a PC (Lenovo-M430, Beijing, China) with a 2.90 GHz Intel Core i5-10400 CPU and 8 GB of memory.

4.1. Centralized Problem

We consider the performance of the extended gradient method when it is applied to the LASSO problem:

where the random matrix is generated by the uniform distribution , and x also follows a uniform distribution with sparsity r. In particular, we set the parameters , , , and the initial condition . The algorithm terminates when it reaches the maximum number of iterations or when . When the regularization parameter is zero, the LASSO problem reduces to the least squares problem. Otherwise, the objective function is nonsmooth. Since the nonsmooth term is the sum of the absolute values of the components of x, we employ the proximal gradient method and an approximation method based on the Huber function, respectively, to solve the LASSO problem. The Huber function becomes closer to the absolute value function as its parameter approaches 0.
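The two ways of handling the nonsmooth term can be made concrete with a short sketch. It shows the soft-thresholding operator, which is the proximal map of a scaled absolute value applied componentwise, and one common form of the Huber function (quadratic near zero, linear elsewhere) together with its derivative. The function names and the particular Huber scaling are assumptions; the exact definition used in the experiments is not reproduced here.

```python
def soft_threshold(v, t):
    """Proximal operator of t * ||.||_1: shrink each component toward zero by t."""
    out = []
    for vi in v:
        if vi > t:
            out.append(vi - t)
        elif vi < -t:
            out.append(vi + t)
        else:
            out.append(0.0)
    return out


def huber(z, delta):
    """Smooth surrogate of |z| (assumed form): z^2 / (2 delta) if |z| <= delta, else |z| - delta / 2."""
    a = abs(z)
    return z * z / (2.0 * delta) if a <= delta else a - delta / 2.0


def huber_grad(z, delta):
    """Derivative of the Huber surrogate; it is continuous and bounded by 1 in magnitude."""
    if abs(z) <= delta:
        return z / delta
    return 1.0 if z > 0 else -1.0


shrunk = soft_threshold([3.0, -0.5, -2.0], 1.0)
gap = abs(huber(1.0, 0.02) - abs(1.0))  # distance to |z| is at most delta / 2
print(shrunk, gap)
```

With this scaling the surrogate differs from the absolute value by at most half the parameter, which is why a smaller parameter gives a closer approximation at the price of a larger Lipschitz constant of the derivative.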

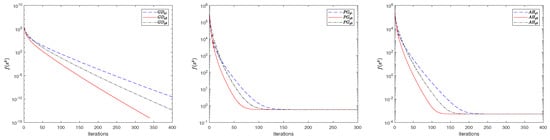

To study the influence of previous gradients on the search direction of the optimization algorithm, we use the gradient (denoted as ), the sum of the gradients of the preceding two iterates (denoted as ), and the sum of the gradients of the preceding three iterates (denoted as ) to construct search directions, respectively. Figure 1 shows the performance of the gradient descent method (GD) for the least squares problem, and of the proximal gradient method (PG) and the approximation method based on the Huber function (AH) for the LASSO problem (), when the above three search directions are employed. We set the parameter as 0.02. For these algorithms with the three search directions, we set the step sizes as , and , respectively. We observe that when the sum of the gradients of the preceding two iterates () is used to construct the search direction, the optimization algorithms achieve better acceleration than with the other two search directions ( and ).

Figure 1.

Performance of optimization algorithms with three search directions.

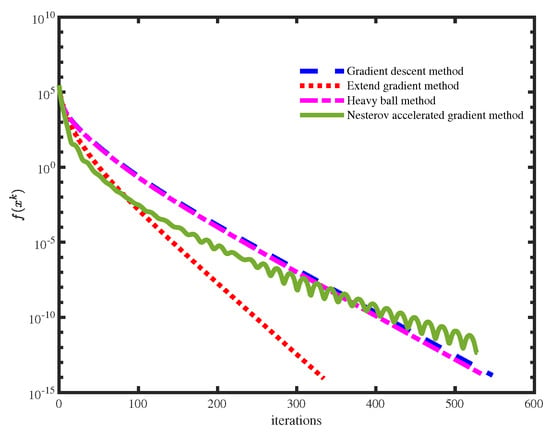

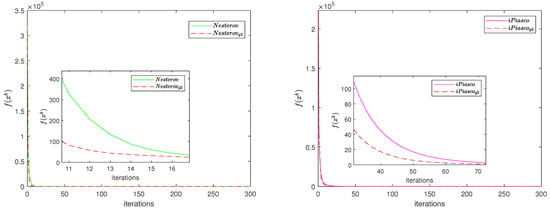

On the other hand, we compare the extended gradient method (our algorithm) with the gradient descent method, heavy ball method (iPiasco) [2] and Nesterov accelerated gradient descent method [5]. Specifically, for the gradient descent method, the step size is . For the extended gradient method, the step size is . For the heavy ball method, the step size is and the extrapolation parameter is . For the Nesterov accelerated gradient descent method, the extrapolation parameter is and step size is . Figure 2 depicts the comparison result of all compared methods for the least squares problem. We notice that the extended gradient method outperforms the other algorithms in terms of the number of iterations. Moreover, in order to show that the sum of two gradients () can accelerate the convergence of algorithms, we use it to construct the new search direction of the Nesterov accelerated gradient descent method and iPiasco. We set step sizes and as and . From Figure 3, we observe that when using the sum of two gradients to construct the new search direction, both the proximal Nesterov accelerated gradient descent method and the proximal iPiasco can achieve better acceleration performance.

Figure 2.

Comparison of different algorithms for the least squares problem.

Figure 3.

Performance of Nesterov accelerated gradient descent method and iPiasco with two search directions.

4.2. Decentralized Problem

We illustrate the performance of the decentralized extended gradient method () on the following problem

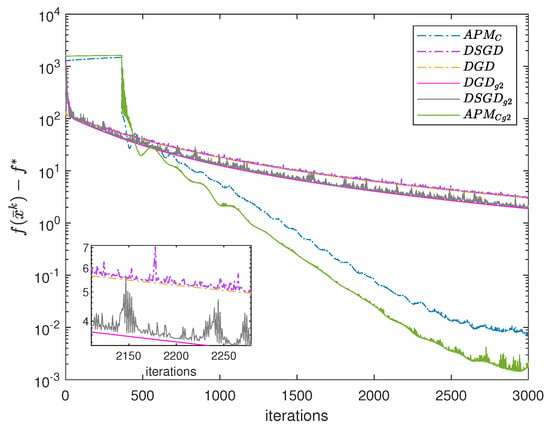

where the random matrix is generated by the uniform distribution , and each column of is normalized to be 1. We set the parameters , , , , and where x also follows the uniform distribution with sparsity r. The undirected graph is generated by the Erdős–Rényi model [25], where each pair of nodes has the connection probability , and the weight matrix , where M is generated with the Metropolis constant edge rules [26]. To demonstrate the effect of the previous gradient on the search direction of decentralized optimization algorithms, we use the sum of the gradients of the preceding two iterates () to construct the search direction in the accelerated penalty method with consensus () [27], the decentralized gradient descent (DGD) [21], and the decentralized stochastic gradient descent (DSGD) [28]. For DGD, DSGD, and , we tune the step sizes by hand, obtaining , , and , respectively. For and , the step sizes are and . The initial condition is . Figure 4 shows that the algorithms that use the gradients of the preceding two iterates () to construct the search direction outperform the original , DGD, and DSGD, which use the current negative gradient as the search direction.
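The network setup can be sketched as follows. The code draws an Erdős–Rényi graph and builds a symmetric, doubly stochastic matrix with the widely used Metropolis-Hastings rule $w_{ij} = 1/(1 + \max(d_i, d_j))$ for neighbors, where $d_i$ is the degree of node $i$. This rule, the function names, the graph size, the connection probability, and the seed are assumptions for illustration; the exact edge-weight rule and the final weight matrix used in the experiments may differ, and the sketch does not check that the sampled graph is connected.

```python
import random


def erdos_renyi(n, p, seed=0):
    """Undirected random graph: each pair of nodes is linked with probability p."""
    rng = random.Random(seed)
    adj = [[False] * n for _ in range(n)]
    for i in range(n):
        for j in range(i + 1, n):
            if rng.random() < p:
                adj[i][j] = adj[j][i] = True
    return adj


def metropolis_weights(adj):
    """Symmetric, doubly stochastic weights from node degrees (assumed rule)."""
    n = len(adj)
    deg = [sum(row) for row in adj]
    W = [[0.0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            if i != j and adj[i][j]:
                W[i][j] = 1.0 / (1 + max(deg[i], deg[j]))
        # The diagonal entry absorbs the remaining mass so that each row sums to 1.
        W[i][i] = 1.0 - sum(W[i])
    return W


adj = erdos_renyi(n=8, p=0.5, seed=1)
W = metropolis_weights(adj)
row_sums = [sum(row) for row in W]
print(row_sums)
```

Because the off-diagonal weights depend only on the two endpoint degrees, each agent can compute its own row of W from information exchanged with its neighbors.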

Figure 4.

Comparison of different algorithms for the LASSO problem.

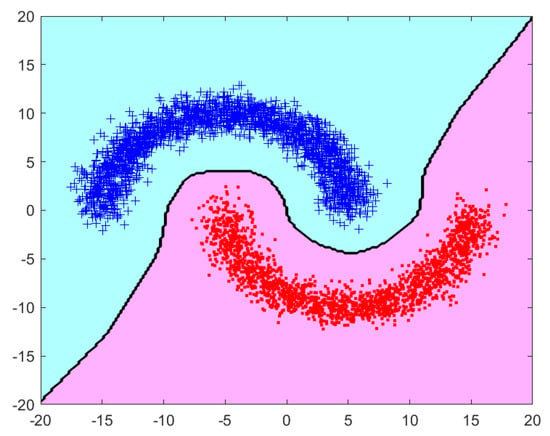

Finally, we extend the proposed method to a classification problem. First, we introduce the Radial Basis Function Network (RBFN), which uses a radial basis function as the activation function, where s is a sample and c is a center. The output of the RBFN is a linear combination of radial basis functions weighted by the neuron weight parameters x. The training error that evaluates the performance of the RBFN can be written as (44). The training samples are . The centers are randomly chosen from the training samples. The radial basis function is the Gaussian function . The matrix represents , and is the corresponding sample label vector. We set , and . Each node holds the sample set . For the decentralized extended gradient method, the step size is set as . Figure 5 shows the classification result; the classification accuracy on the samples is 1, which further indicates the efficiency of our method.
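The structure of the RBFN output can be sketched in a few lines. The code assumes the common Gaussian form $\exp(-\|s - c\|^2 / (2\sigma^2))$ with a width parameter $\sigma$; this width, the function names, and the toy samples are hypothetical and are not the settings of the experiment above.

```python
import math


def gaussian_rbf(s, c, sigma=1.0):
    """Gaussian radial basis function of sample s around center c (assumed form)."""
    sq_dist = sum((si - ci) ** 2 for si, ci in zip(s, c))
    return math.exp(-sq_dist / (2.0 * sigma ** 2))


def rbf_design_matrix(samples, centers, sigma=1.0):
    """Row i holds the activations of sample i with respect to every center."""
    return [[gaussian_rbf(s, c, sigma) for c in centers] for s in samples]


def rbfn_output(Phi, x):
    """Network output: linear combination of activations with weights x."""
    return [sum(p * w for p, w in zip(row, x)) for row in Phi]


samples = [[0.0, 0.0], [1.0, 0.0], [0.0, 2.0]]  # toy data
centers = [[0.0, 0.0], [0.0, 2.0]]              # centers picked among the samples
Phi = rbf_design_matrix(samples, centers)
outputs = rbfn_output(Phi, [1.0, -1.0])
print(Phi, outputs)
```

Once the design matrix is fixed, the output is linear in the weights x, so fitting the weights to the labels is a smooth optimization problem in x to which gradient-type methods apply.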

Figure 5.

Classification result of the extended gradient method.

5. Conclusions

Gradient methods have been widely employed to solve optimization problems. In this paper, we first introduce an extended gradient method for centralized and decentralized smooth and strongly convex problems. Second, we establish the linear convergence of the extended gradient method in the centralized and decentralized settings, respectively. Finally, numerical examples demonstrate the convergence and validate the efficiency of the method compared with classical methods. In the future, the acceleration technique used in this work may be applied to more optimization problems.

Author Contributions

Conceptualization, X.Z. and S.L.; methodology, X.Z.; software, X.Z. and N.Z.; validation, N.Z. and S.L.; investigation, X.Z.; Writing—Original draft preparation, X.Z.; Writing—Review and editing, S.L. and N.Z.; supervision, S.L. and N.Z.; funding acquisition, S.L. and N.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China (Grant Nos. 12271419 and 12302033), and the Natural Science Basic Research Program of Shaanxi Province, China (Grant No. 2023-JC-QN-0009).

Data Availability Statement

No new data were created or analyzed in this study. Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare that there are no conflicts of interest regarding the publication of this paper.

References

- Polyak, B.T. Some methods of speeding up the convergence of iteration methods. USSR Comput. Math. Math. Phys. 1964, 4, 1–17.

- Ochs, P.; Brox, T.; Pock, T. iPiasco: Inertial Proximal Algorithm for Strongly Convex Optimization. J. Math. Imaging Vis. 2015, 53, 171–181.

- Lessard, L.; Recht, B.; Packard, A. Analysis and design of optimization algorithms via integral quadratic constraints. SIAM J. Optim. 2016, 26, 57–95.

- Hagedorn, M.; Jarre, F. Iteration Complexity of Fixed-Step-Momentum Methods for Convex Quadratic Functions. arXiv 2022, arXiv:2211.10234.

- Nesterov, Y. Introductory Lectures on Convex Optimization: A Basic Course; Springer: New York, NY, USA, 2003; Volume 87.

- Bertsekas, D. Convex Optimization Algorithms; Athena Scientific: Nashua, NH, USA, 2015.

- Popov, L.D. A modification of the Arrow-Hurwicz method for search of saddle points. Math. Notes Acad. Sci. USSR 1980, 28, 845–848.

- Attouch, H.; Chbani, Z.; Fadili, J.; Riahi, H. First-order optimization algorithms via inertial systems with Hessian driven damping. Math. Program. 2022, 193, 113–155.

- Ahmadi, M.J.; Arablouei, R.; Abdolee, R. Efficient estimation of graph signals with adaptive sampling. IEEE Trans. Signal Process. 2020, 68, 3808–3823.

- Torkamani, R.; Zayyani, H.; Korki, M. Proportionate Adaptive Graph Signal Recovery. IEEE Trans. Signal Inf. Process. Netw. 2023, 9, 386–396.

- Mai, V.; Johansson, M. Anderson acceleration of proximal gradient methods. International Conference on Machine Learning. PMLR 2020, 119, 6620–6629.

- Devolder, O.; Glineur, F.; Nesterov, Y. First-order methods of smooth convex optimization with inexact oracle. Math. Program. 2014, 146, 37–75.

- Horn, R.A.; Johnson, C.R. Matrix Analysis; Cambridge University Press: Cambridge, UK, 2012.

- Poljak, B.T. Introduction to Optimization; Optimization Software, Inc.: New York, NY, USA, 1987.

- Khanh, P.D.; Mordukhovich, B.S.; Tran, D.B. Inexact reduced gradient methods in nonconvex optimization. J. Optim. Theory Appl. 2023.

- Khanh, P.D.; Mordukhovich, B.S.; Tran, D.B. A New Inexact Gradient Descent Method with Applications to Nonsmooth Convex Optimization. arXiv 2023, arXiv:2303.08785.

- Schmidt, M.; Le Roux, N.; Bach, F. Minimizing finite sums with the stochastic average gradient. Math. Program. 2017, 162, 83–112.

- Lee, J.D.; Lin, Q.; Ma, T.; Yang, T. Distributed stochastic variance reduced gradient methods by sampling extra data with replacement. J. Mach. Learn. Res. 2017, 18, 4404–4446.

- Jakovetić, D.; Xavier, J.; Moura, J.M.F. Fast distributed gradient methods. IEEE Trans. Autom. Control 2014, 59, 1131–1146.

- Yuan, K.; Ling, Q.; Yin, W. On the convergence of decentralized gradient descent. SIAM J. Optim. 2016, 26, 1835–1854.

- Nedic, A.; Ozdaglar, A. Distributed Subgradient Methods for Multi-Agent Optimization. IEEE Trans. Autom. Control 2009, 54, 48–61.

- Berahas, A.S.; Bollapragada, R.; Keskar, N.S.; Wei, E. Balancing communication and computation in distributed optimization. IEEE Trans. Autom. Control 2018, 64, 3141–3155.

- Shi, W.; Ling, Q.; Wu, G.; Yin, W. Extra: An exact first-order algorithm for decentralized consensus optimization. SIAM J. Optim. 2015, 25, 944–966.

- Nedic, A.; Olshevsky, A.; Shi, W. Achieving geometric convergence for distributed optimization over time-varying graphs. SIAM J. Optim. 2017, 27, 2597–2633.

- Erdős, P.; Rényi, A. On the evolution of random graphs. Publ. Math. Inst. Hung. Acad. Sci. 1960, 5, 17–60.

- Boyd, S.; Diaconis, P.; Xiao, L. Fastest mixing Markov chain on a graph. SIAM Rev. 2004, 46, 667–689.

- Li, H.; Fang, C.; Yin, W.; Lin, Z. Decentralized Accelerated Gradient Methods With Increasing Penalty Parameters. IEEE Trans. Signal Process. 2020, 68, 4855–4870.

- Chen, J.S.; Sayed, A.H. Diffusion Adaptation Strategies for Distributed Optimization and Learning Over Networks. IEEE Trans. Signal Process. 2012, 60, 4289–4305.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).