Abstract

This paper investigates a single-machine scheduling problem of a software test with shared common setup operations. Each job has a corresponding set of setup operations, and the job cannot be executed unless its setups are completed. If two jobs have the same supporting setups, the common setups are performed only once. No preemption of any processing is allowed. This problem is known to be computationally intractable. In this study, we propose sequence-based and position-based integer programming models and a branch-and-bound algorithm for finding optimal solutions. We also propose an ant colony optimization algorithm for finding approximate solutions, which will be used as the initial upper bound of the branch-and-bound algorithm. The computational experiments are designed and conducted to numerically appraise all of the proposed methods.

Keywords:

single-machine scheduling; shared common setups; total completion time; integer programming; branch-and-bound; ant colony optimization

MSC:

68M20; 90B35; 90C57

1. Introduction

Scheduling is the decision-making process used by many manufacturing and service industries to allocate resources to economic activities or tasks over the planning horizon [1,2]. This paper studies a scheduling model that is inspired by real-life applications, where supporting operations need to be prepared before regular jobs are processed. The specific application context is the scheduling of a software test at an IC design company, where the software system is modular and can be tested module by module and level by level. Before starting a module test, we need to install software utilities and libraries as well as adjust system parameters to shape an appropriate system environment. The setup operation corresponds to the installation of utilities and libraries, which are supporting tasks for the job. Different tests may require part of the same environment settings. If two jobs have common supporting tasks, the common setups are performed only once. The abstract model was also studied by Kononov, Lin, and Fang [3] as a single-machine scheduling problem formulated from the production scheduling of multimedia works. In the context of multimedia scheduling, before a multimedia work can be played, its content, including audio tracks, subtitles, and images, must be downloaded first; the content items and the works correspond to the setup operations and the jobs of this study, respectively. Once the setup operations of the multimedia objects are prepared, they can be embedded in upper-level objects without making multiple copies, which physical products would require. This property distinguishes the model from the manufacture of tangible products, such as vehicles and computers. Following the standard three-field notation [4], we describe the model by three fields: the first field, 1, indicates the single-machine setting; the second field indicates the bipartite precedence relation between shared setups and test jobs; and the third field is the objective of minimizing the total completion time.

This paper is organized into seven sections. In Section 2, the problem definition is presented with a numerical example for illustrations. The literature review follows. Section 3 introduces two integer programming models based on different formulation approaches. Section 4 is dedicated to the development of a branch-and-bound algorithm, including the development of upper bound, lower bound, and tree traversal methods. In Section 5, an ant colony optimization algorithm is proposed. Section 6 presents the computational experiments on the proposed methods. Finally, conclusions and suggestions for future works are given in Section 7.

2. Problem Definition and Literature Review

2.1. Problem Statements

We first present the notation that will be used in this paper. Note that all parameters are assumed to be non-negative integers.

| Symbol | Description |
| --- | --- |
| n | number of jobs |
| m | number of setup operations |
| T | set of jobs to be processed |
| S | set of setup operations |
|  | relation indicating whether a setup operation is required for a job |
|  | processing time of a job on the machine |
|  | processing time of a setup on the machine |
|  | particular sequence of the jobs |
|  | optimal schedule sequence |
|  | completion time of a job |
|  | total job completion time under a schedule |

This research studies the single-machine scheduling problem with shared common setup operations. The objective is to minimize the total completion time of the jobs, i.e., the sum of the completion times of all jobs. The problem can be described as follows:

From time zero onwards, two disjoint sets of activities S and T are to be processed on a machine. Each job has a set of setup operations, and the job can start only after all of its setups are completed. All setup operations and jobs can be performed on the machine at any time. Although every setup operation needs to be processed once, setups do not contribute to the objective function because they serve only as preparatory operations for jobs under the precedence relation. At any time, the machine can process at most one setup operation or job. No preemption of any processing is allowed. In software test scheduling, jobs represent the software to be tested, and setups refer to the preparation of a programming language or compilation environment that needs to be installed in advance so that the test software can be executed. For example, if a job is an Android application that needs to be tested and it requires two setups, then one setup could be Java and the other could be Android Studio.
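The rules above can be made concrete with a small evaluator. The following Python sketch assumes (this is not stated in the source) that a schedule is given as a permutation of jobs and that each missing setup is performed immediately before the first job that needs it. The names `job_time`, `setup_time`, and `requires`, as well as the three-job instance, are hypothetical and are not the data of Figure 1.

```python
from typing import Dict, List, Set


def total_completion_time(order: List[int],
                          job_time: Dict[int, int],
                          setup_time: Dict[int, int],
                          requires: Dict[int, Set[int]]) -> int:
    """Sum of job completion times for a given job permutation.

    Assumption: every setup runs exactly once, right before the first
    job that requires it; setups themselves add nothing to the objective.
    """
    clock = 0
    done: Set[int] = set()          # setups already performed
    total = 0
    for j in order:
        for s in sorted(requires[j] - done):
            clock += setup_time[s]  # shared setups are paid for only once
            done.add(s)
        clock += job_time[j]
        total += clock              # only job completions are counted
    return total


# Hypothetical instance: 3 jobs, 2 setups
job_time = {1: 3, 2: 2, 3: 4}
setup_time = {1: 2, 2: 1}
requires = {1: {1}, 2: {1, 2}, 3: {2}}

print(total_completion_time([1, 2, 3], job_time, setup_time, requires))  # 25
print(total_completion_time([3, 1, 2], job_time, setup_time, requires))  # 27
```

Representing a schedule by the job permutation alone is a design choice of this sketch: postponing a setup until just before its first dependent job never delays any job.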

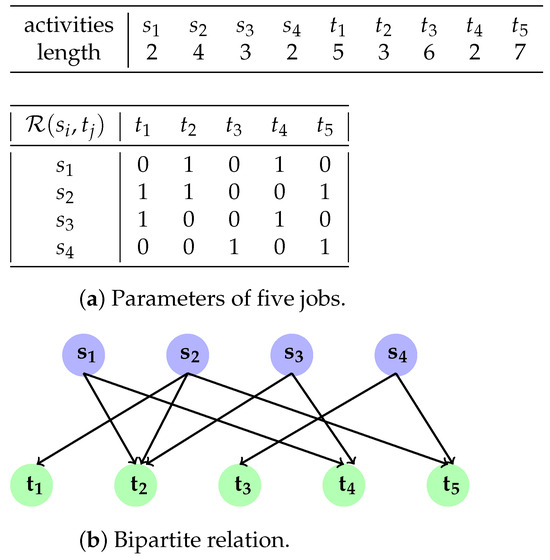

To illustrate the problem of our study, we give a numerical example with four setup operations, five test jobs, the support relation, and its corresponding graph. The parameters and relation are shown in Figure 1.

Figure 1. Example of 4 setups and 5 jobs.

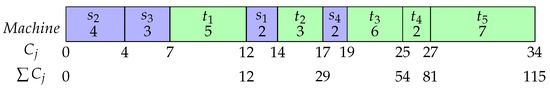

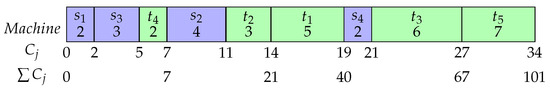

Two example schedules are shown below in the form of Gantt charts. The feasible schedule shown in Figure 2 has a total completion time of 115. In that schedule, every setup precedes the jobs it supports; the relative order of two consecutive setups that are both required by the next job is immaterial. Figure 3 shows a schedule with a total completion time of 101, which is optimal for the given instance. Both schedules are feasible, but the schedule of Figure 3 has the smaller objective value.

Figure 2. Feasible solution.

Figure 3. Optimal solution.

2.2. Literature Review

The scheduling problem studied by Kononov, Lin, and Fang [3] has a single machine that performs all setup operations and testing jobs. Referring to Brucker [5] and Leung [1], we know that precedence constraints play a crucial role in scheduling problems, especially when complexity status or categories are involved. Existing research works in the literature consider precedence relations presented in various forms. Bipartite graphs are often studied in graph theory. The graph shown in Figure 1 is bipartite because every edge joins a setup node on one side to a job node on the other. Linear allocation problems can also be visualized as bipartite graphs with a supply side and a demand side. Unfortunately, scheduling theory rarely addresses precedence constraints in bipartite graphs.

After formulation, Kononov, Lin, and Fang [3] studied two minimum sum objective functions, namely the number of late jobs and the total weighted completion time of jobs. As for the minimization of the total weighted completion time, Baker [6] is probably the first paper to address the existence of precedence constraints. Adolphson and Hu [7] proposed a polynomial time algorithm for the case in which the precedence constraints form a rooted tree. A fundamental problem with unit-execution-time jobs under precedence constraints has been proven to be strongly NP-hard by Lawler [8]. The minimum latency set cover problem studied by Hassin and Levin [9] is the most relevant. It involves several subsets of operations. A subset is complete when all of its operations are finished. The objective function is the total weighted completion time of the subsets. The minimum latency set cover problem is a special case of our problem, and the correspondence is as follows: an operation is mapped to a setup operation, and a subset is interpreted as a testing job. Furthermore, Hassin and Levin [9] showed that the minimum latency set cover problem is strongly NP-hard even if all operations require unit execution time (UET). Consequently, the corresponding problem in our setting is hard as well. By performing a pseudo-polynomial time reduction from Lawler's result, Kononov, Lin, and Fang [3] proved that the problem remains strongly NP-hard under further restrictions. In other words, it is very difficult to minimize the total weighted completion time in our model, even when all setup operations and all testing jobs require unit execution time and all weights are one.

To solve the scheduling problem, Shafransky and Strusevich [10]; Hwang, Kovalyov, and Lin [11]; and Cheng, Kravchenko, and Lin [12] studied several special cases with fixed job sequences and solved these problems in polynomial time. Moreover, the branch-and-bound algorithm is an enumeration technique that can be applied to combinatorial optimization problems. Brucker and Sievers [13] deployed branch-and-bound algorithms on the job-shop scheduling problem, and Hadjar, Marcotte, and Francois [14] did the same on the multiple-depot vehicle scheduling problem. To find approximate solutions to hard optimization problems, various meta-heuristics have been designed. Kunhare, Tiwari, and Dhar [15] used particle swarm optimization for feature selection in intrusion detection systems. Kunhare, Tiwari, and Dhar [16] further used a genetic algorithm to compose a hybrid approach to intrusion detection. For solving a worker assignment bi-level programming problem, Luo, Zhang, and Yin [17] designed a two-level algorithm, which uses simulated annealing as the upper level to minimize the worker idle time and a genetic algorithm as the lower level to minimize the production time. For more general coverage, the reader is referred to Ansari and Daxini [18] and Rachih, Mhada, and Chiheb [19]. Ant colony optimization (ACO) is a meta-heuristic algorithm that can be used to find approximate solutions to difficult optimization problems. Many research studies in the literature also use ACO to solve scheduling problems, such as Blum and Sampels [20] on group shop scheduling problems; Yang, Shi, and Marchese [21] on generalized TSP problems; and Xiang, Yin, and Lim [22] on operating room surgery scheduling problems. These studies suggest that the branch-and-bound algorithm and ACO may be effective in solving the scheduling problem in our study.

3. Integer Programming Models

In this section, to mathematically present the studied problem, we formulate two integer programming models. Since the problem is defined on permutations of jobs, we deploy two common approaches for shaping permutation-based optimization problems: sequence-based decision variables and position-based decision variables. The models are then implemented and solved with the off-the-shelf Gurobi Optimizer.

3.1. Position-Based IP

In this section, we focus on the decision that assigns activities to positions. The activities comprise the m setups and the n jobs, so an activity can be either a job or a setup operation. The model has six categories of constraints, binary variables x, and two subsets of integer variables. Index k indicates the positions. We use the binary relation to indicate whether setup i should finish before job j starts. The variables used in the model are defined in the following:

Decision variables:

Auxiliary variables:

Note that extra variables are introduced for extracting the completion times of jobs. If a position k is loaded with a setup, the extra variable of that position is released from the completion time of the position by means of M, where M is a big number.

Position-based IP:

The goal is to minimize the total completion time of jobs. Constraint (1) lets each position accommodate exactly one job or one setup. Constraint (2) lets each activity be assigned to exactly one position. Constraint (3) ensures that any job j can start only after its setup operations i are all finished. Constraint (4) lets the completion time of the first position be greater than or equal to the processing time of the activity that occupies the first position. Constraint (5) defines the completion time of position k to be greater than or equal to the completion time of position k − 1 plus the processing time of the activity that is processed in position k. Constraint (6) defines the job completion time if position k contains a job. The extra variable is needed because, if the objective function computed the completion times of jobs directly from the position completion times and the binary assignment variables x, it would become quadratic. The extra variable keeps the objective function linear.
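The constraints described above can be written in several equivalent ways. The following LaTeX sketch is one formulation consistent with the description of Constraints (1) to (6); it is a reconstruction under assumptions, not the authors' original model. All symbols are introduced here for illustration: $A = S \cup T$ is the set of activities, $p_a$ the processing time of activity $a$, $R$ the set of pairs $(i, j)$ in which setup $i$ supports job $j$, $x_{ak}$ the binary assignment of activity $a$ to position $k$, $C_k$ the completion time of position $k$, and $F_k$ the extra variable that carries $C_k$ only when a job occupies position $k$.

```latex
\begin{align}
\min \quad & \sum_{k=1}^{m+n} F_k \notag \\
\text{s.t.} \quad
& \sum_{a \in A} x_{ak} = 1, && k = 1, \dots, m+n \tag{1} \\
& \sum_{k=1}^{m+n} x_{ak} = 1, && a \in A \tag{2} \\
& \sum_{k=1}^{m+n} k \, x_{ik} + 1 \le \sum_{k=1}^{m+n} k \, x_{jk}, && (i, j) \in R \tag{3} \\
& C_1 \ge \sum_{a \in A} p_a \, x_{a1} \tag{4} \\
& C_k \ge C_{k-1} + \sum_{a \in A} p_a \, x_{ak}, && k = 2, \dots, m+n \tag{5} \\
& F_k \ge C_k - M \Bigl( 1 - \sum_{j \in T} x_{jk} \Bigr), && k = 1, \dots, m+n \tag{6} \\
& x_{ak} \in \{0, 1\}, \quad C_k \ge 0, \quad F_k \ge 0 \notag
\end{align}
```

In this sketch, when a setup occupies position $k$, the right-hand side of (6) drops to $C_k - M$, so $F_k$ can be zero and the setup adds nothing to the objective.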

3.2. Sequence-Based IP

In this section, the formulation approach is to determine the relative order of every pair of activities. The model consists of five categories of constraints, binary variables x, and two subsets of integer variables.

Decision variable:

Auxiliary variables:

Sequence-based IP:

The objective is to minimize the total completion time of jobs; setup operations are excluded. Constraint (9) restricts the precedence between every two events. Constraint (10) means that if job j needs setup i, then setup i should come before job j. Constraint (11) lets the completion time of event j be greater than or equal to the completion time of event i plus the processing time of event j if event i precedes event j. Constraint (12) defines the completion time of event i if it is a setup. Constraint (13) defines the completion time of event j if it is a job.
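As with the position-based model, the description of Constraints (9) to (13) can be turned into a disjunctive big-M formulation. The LaTeX sketch below is a reconstruction under assumptions, not the authors' original model. The symbols are introduced here for illustration: $A = S \cup T$, $p_a$ is the processing time of activity $a$, $R$ is the set of supporting pairs $(i, j)$, $x_{ab} = 1$ if activity $a$ precedes activity $b$, and $C_a$ is the completion time of activity $a$.

```latex
\begin{align}
\min \quad & \sum_{j \in T} C_j \notag \\
\text{s.t.} \quad
& x_{ab} + x_{ba} = 1, && a, b \in A, \; a \ne b \tag{9} \\
& x_{ij} = 1, && (i, j) \in R \tag{10} \\
& C_b \ge C_a + p_b - M (1 - x_{ab}), && a, b \in A, \; a \ne b \tag{11} \\
& C_i \ge p_i, && i \in S \tag{12} \\
& C_j \ge p_j, && j \in T \tag{13} \\
& x_{ab} \in \{0, 1\}, \quad C_a \ge 0 \notag
\end{align}
```

Only the job completion times enter the objective, which matches the statement that setups are excluded from it.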

4. Branch-and-Bound Algorithm

In this section, we explore a search tree that generates all permutations of jobs. The branch-and-bound algorithm maintains an upper bound that represents the best solution found so far. During the search, a lower bound is calculated once for each node; if it is not smaller than the upper bound, the subtree of the node is pruned to speed up the search. We therefore propose an upper bound as the initial solution, a lower bound for pruning non-promising nodes, and a dominance property that is checked at each node to prune further branches.

4.1. Upper Bound

First, we use an ACO algorithm coupled with local search to find an approximate solution as an upper bound; the ants' choices are guided by the settings of pheromone and visibility. Details about the ACO algorithm are given in Section 5. A tight initial upper bound helps the branch-and-bound algorithm converge faster.

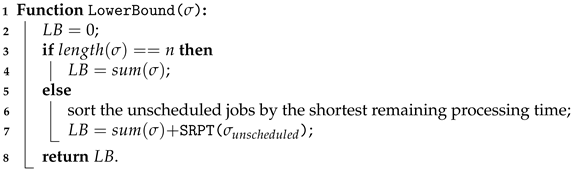

4.2. Lower Bound

Lower bounds can help cut unnecessary branches that will never lead to a solution better than the incumbent one. Different approaches can be used to derive lower bounds. In our study, we compute a lower bound by sorting the remaining processing times of unscheduled jobs. The relaxation can be expressed as the single-machine problem with release dates and preemption, $1 \mid r_j, pmtn \mid \sum C_j$. The setup operations of the scheduled jobs are already complete, so their setup times no longer delay any job. We then treat the total time of the unfinished setup operations of each unscheduled job as its release date and apply the shortest remaining processing time (SRPT) rule, which always executes the job with the least remaining processing time. Finally, the lower bound is the total completion time of the scheduled jobs plus the SRPT result. The lower bound algorithm is shown in Algorithm 1.

| Algorithm 1: LowerBound |

|
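The lower bound described in Section 4.2 can be sketched in Python as follows. This is a minimal sketch and not the authors' Algorithm 1: it assumes that the release date of an unscheduled job is the current time plus the total processing time of its unfinished setups, and it reuses the hypothetical names `job_time`, `setup_time`, and `requires`.

```python
import heapq
from typing import Dict, List, Set, Tuple


def srpt_total_completion(jobs: List[Tuple[int, int]]) -> int:
    """Total completion time of the preemptive SRPT schedule.

    `jobs` holds (release_date, processing_time) pairs.
    """
    pending = sorted(jobs)          # ordered by release date
    heap: List[int] = []            # remaining times of released jobs
    clock, total, idx = 0, 0, 0
    while idx < len(pending) or heap:
        if not heap:                # machine idles until the next release
            clock = max(clock, pending[idx][0])
        while idx < len(pending) and pending[idx][0] <= clock:
            heapq.heappush(heap, pending[idx][1])
            idx += 1
        remaining = heapq.heappop(heap)
        next_release = pending[idx][0] if idx < len(pending) else float("inf")
        run = min(remaining, next_release - clock)
        clock += run
        if run < remaining:         # preempted by a newly released job
            heapq.heappush(heap, remaining - run)
        else:
            total += clock
    return total


def lower_bound(clock: int, scheduled_total: int, unscheduled: Set[int],
                done_setups: Set[int], job_time: Dict[int, int],
                setup_time: Dict[int, int],
                requires: Dict[int, Set[int]]) -> int:
    """Scheduled part plus the SRPT value of the relaxed remainder."""
    relaxed = []
    for j in unscheduled:
        missing = sum(setup_time[s] for s in requires[j] - done_setups)
        relaxed.append((clock + missing, job_time[j]))  # assumed release date
    return scheduled_total + srpt_total_completion(relaxed)


# Hypothetical instance, evaluated at the root node
job_time = {1: 3, 2: 2, 3: 4}
setup_time = {1: 2, 2: 1}
requires = {1: {1}, 2: {1, 2}, 3: {2}}
print(lower_bound(0, 0, {1, 2, 3}, set(), job_time, setup_time, requires))  # 22
```

For this instance the best job permutation has a total completion time of 25, so the value 22 is a valid, though not tight, bound.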

4.3. Dominance Property

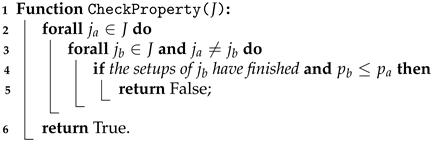

In this section, after we use the lower bound to prune nodes, we also propose a property of the branch-and-bound algorithm (Algorithm 2), which can also speed up node pruning and reduce tree traversal time. The content description and proof of the property are as follows:

Lemma 1.

Let be the unscheduled jobs at a node X in the branch-and-bound tree. For any unscheduled job , if there is another unscheduled job such that the setups of are all scheduled and , then the subtree by choosing as the next job to schedule can be pruned off because precedes in some optimal solution.

Proof.

Let be the sequence of scheduled jobs. Assume that there is an optimal solution , where L is a sequence of the unscheduled jobs. When the setups of job are not yet completed, its completion time would be , and, when the setups of job are completed, its completion time would be , where is the completion time of all jobs of L. Assuming the positions of and are swapped as , we denote their completion times as ,, and . At this point, will be , and will be . Suppose that if is less than , it makes less than , which also makes the completion time of L shorter, to the benefit of both job and L. When both and move forward, the result of will also decrease accordingly. According to the assumption, we know that the total completion time of will be smaller than that of . Therefore, we can prune off the branch of node , which will not lead to a better solution without sacrificing the optimality. □

| Algorithm 2: Check Property |

|
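A check in the spirit of Lemma 1 can be sketched as below. The exact condition of the lemma is not recoverable from the text, so this sketch assumes it compares job processing times: a candidate is dominated when another unscheduled job has all of its setups finished and a processing time that is not larger. Ties are broken by job index, which is an addition of this sketch so that two equal jobs cannot prune each other. The function name `can_prune` and the instance are hypothetical.

```python
from typing import Dict, Set


def can_prune(candidate: int, unscheduled: Set[int], done_setups: Set[int],
              job_time: Dict[int, int], requires: Dict[int, Set[int]]) -> bool:
    """True if branching on `candidate` is dominated by a ready job."""
    for other in unscheduled:
        if other == candidate:
            continue
        ready = requires[other] <= done_setups   # all setups already done
        # Assumed condition: other job is not longer; index breaks ties.
        not_longer = (job_time[other], other) < (job_time[candidate], candidate)
        if ready and not_longer:
            return True
    return False


# Hypothetical node: setup 1 is finished, no job has been scheduled yet
job_time = {1: 3, 2: 2, 3: 4}
requires = {1: {1}, 2: {1, 2}, 3: {2}}
done = {1}
print([j for j in (1, 2, 3) if can_prune(j, {1, 2, 3}, done, job_time, requires)])
```

Here job 1 is ready and shorter than job 3, so the branch that schedules job 3 next is skipped, while job 2 survives because it is shorter than job 1.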

4.4. Tree Traversal

In this section, we use three different tree traversal methods, depth-first search (DFS), breadth-first search (BFS), and best-first search (BestFS), to perform the branch-and-bound algorithm. Moreover, we also added the upper bound, lower bound, and property mentioned above into our branch-and-bound algorithm.

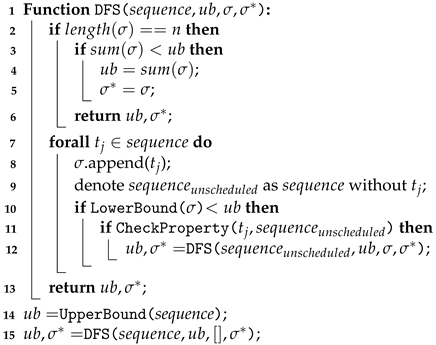

4.5. Depth-First Search (DFS)

DFS is a recursive algorithm for searching all the nodes of a tree and can generate the permutations of all the solutions. It starts at the root node and traverses along each branch before backtracking. The advantage of DFS is that its memory demand is relatively low. The disadvantage is that recursion puts a heavier load on the call stack, and it takes more time to enumerate all the solutions. The DFS algorithm is shown in Algorithm 3.

First, the algorithm will obtain the upper bound from Line 15 and call the recursive DFS function. When we encounter the deepest node or have visited all of its children, we move backward along the current path to find the unvisited node to traverse. In the search process, we use LowerBound() and CheckProperty() to test whether we should continue to search down or not. If the lower bound is greater than or equal to the upper bound, or if the property is not met, we will prune the branch because it does not yield a better solution than the current one. This method can reduce the number of search nodes.

| Algorithm 3: Depth-First Search |

|
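The recursive scheme described above can be sketched in Python. This is not the authors' Algorithm 3: to stay short, it replaces LowerBound() with a weaker stand-in bound that ignores unfinished setups and runs the remaining jobs in SPT order, and it omits CheckProperty(). All names and the instance are hypothetical.

```python
from typing import Dict, List, Optional, Set, Tuple


def simple_lower_bound(clock: int, total: int, remaining, job_time) -> int:
    """Weak stand-in bound: ignore unfinished setups, run the rest in SPT order."""
    bound, t = total, clock
    for p in sorted(job_time[j] for j in remaining):
        t += p
        bound += t
    return bound


def dfs_branch_and_bound(job_time: Dict[int, int], setup_time: Dict[int, int],
                         requires: Dict[int, Set[int]],
                         upper_bound: float = float("inf")
                         ) -> Tuple[float, Optional[List[int]], int]:
    """Return (best value, best job order, number of visited nodes)."""
    best = {"value": upper_bound, "order": None, "nodes": 0}

    def visit(order, remaining, done, clock, total):
        best["nodes"] += 1
        if not remaining:                       # complete permutation
            if total < best["value"]:
                best["value"], best["order"] = total, list(order)
            return
        for j in sorted(remaining):
            missing = requires[j] - done
            c = clock + sum(setup_time[s] for s in missing) + job_time[j]
            rest = remaining - {j}
            # Prune when the bound cannot beat the incumbent.
            if simple_lower_bound(c, total + c, rest, job_time) >= best["value"]:
                continue
            order.append(j)
            visit(order, rest, done | missing, c, total + c)
            order.pop()                         # backtrack

    visit([], frozenset(job_time), frozenset(), 0, 0)
    return best["value"], best["order"], best["nodes"]


job_time = {1: 3, 2: 2, 3: 4}
setup_time = {1: 2, 2: 1}
requires = {1: {1}, 2: {1, 2}, 3: {2}}
print(dfs_branch_and_bound(job_time, setup_time, requires))
```

If an initial upper bound already equals the optimum, the returned order stays `None` because every branch is pruned by the "greater than or equal" test; the caller would then keep the heuristic solution that produced the bound.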

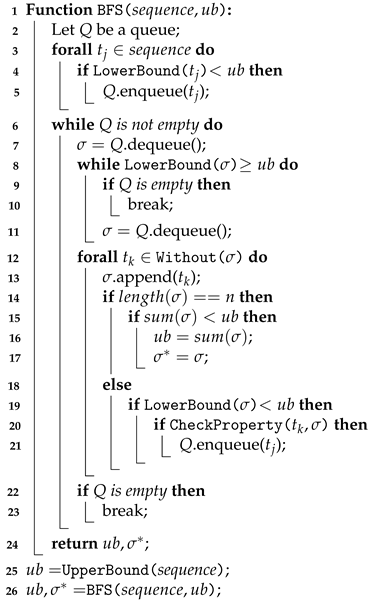

4.6. Breadth-First Search (BFS)

BFS is an algorithm for traversing a tree in search of nodes that satisfy a given property. It starts at the root of the tree, traverses all nodes at the current level, and then moves to the next depth level. Unlike DFS, which reaches a complete solution early, BFS obtains complete solutions only when the last level is searched. In particular, this method uses a queue to record the sequence of visited nodes. The advantage of BFS is that each node is reached by the shortest path, but the disadvantage is that it requires more memory to store all of the traversed nodes. It thus takes more time to search deeper trees.

The BFS algorithm is shown in Algorithm 4. First, the algorithm will obtain the upper bound by UpperBound() from Line 25. The BFS function starts from Line 2; we create a queue that uses the First-In-First-Out strategy. Lines 3 through 5 are the initial settings that we use to set a root. From Lines 6 to 24, we enqueue the root node and then dequeue the values in order. Then, we enqueue the unvisited nodes and recalculate the lower bound until there is no value in the queue. Before each enqueue, it is necessary to use LowerBound() and CheckProperty() to check whether the lower bound is smaller than the upper bound and whether it satisfies the property. It can reduce the number of visited nodes and shorten the execution time. In Line 12, we use the Without() function to obtain the nodes that have not been visited yet. The loop stops when the queue is empty, indicating that all nodes have been traversed.

| Algorithm 4: Breadth-First Search |

|

4.7. Best-First Search (BestFS)

BestFS works as a combination of the depth-first and breadth-first search algorithms. Unlike search algorithms that blindly traverse to the next node, it uses a priority queue and heuristic search, with an evaluation function determining which neighbor node is the best to move to. It is also a greedy strategy because it always chooses the most promising node at the time, whereas BFS searches exhaustively. The advantage of BestFS is that it is more efficient because it always explores the node with the smallest lower bound first. On the other hand, the disadvantage is that the heap is costly to maintain and requires more memory. Since each generated node is stored in the heap, the node with the smallest lower bound can be obtained directly from the top of the heap. When the instance is large, however, many nodes are generated at one time and occupy a relatively large memory space.

The concept of the BestFS algorithm is the same as Algorithm 4. The difference is that in the BestFS function, we change the queue to a priority queue by using a min-heap data structure, where the priority order is sorted using the calculated lower bound, instead of using the FIFO order. The smaller the lower bound is, the higher the priority. When we use a heap to pop or push values, we will perform the function of heapify at the same time to ensure the heap is in the form of a min-heap. Heapify is the process of creating a heap data structure from a binary tree. Similarly, before each element is pushed into the heap, we use LowerBound() and CheckProperty() to check whether the lower bound is smaller than the upper bound and whether it satisfies the property.
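The relationship between BFS and BestFS described above, the same loop with a FIFO queue swapped for a min-heap keyed by the lower bound, can be sketched in one Python function. This is not the authors' Algorithm 4: the bound is a weak stand-in that ignores unfinished setups, CheckProperty() is omitted, and the names and instance are hypothetical.

```python
import heapq
from collections import deque
from itertools import count


def frontier_search(job_time, setup_time, requires,
                    strategy="best", upper_bound=float("inf")):
    """Branch-and-bound with a FIFO queue ("bfs") or a min-heap ("best")."""

    def bound(clock, total, remaining):
        # Weak stand-in bound: remaining jobs in SPT order, setups ignored.
        t, b = clock, total
        for p in sorted(job_time[j] for j in remaining):
            t += p
            b += t
        return b

    tie = count()   # unique tie-breaker so the heap never compares states
    root = (0, next(tie), (), frozenset(job_time), frozenset(), 0, 0)
    frontier = [root] if strategy == "best" else deque([root])
    best_value, best_order = upper_bound, None
    while frontier:
        if strategy == "best":
            node = heapq.heappop(frontier)      # smallest lower bound first
        else:
            node = frontier.popleft()           # first in, first out
        lb, _, order, remaining, done, clock, total = node
        if lb >= best_value:
            continue                # incumbent improved after this node was stored
        if not remaining:
            best_value, best_order = total, list(order)
            continue
        for j in sorted(remaining):
            missing = requires[j] - done
            c = clock + sum(setup_time[s] for s in missing) + job_time[j]
            rest = remaining - {j}
            child_lb = bound(c, total + c, rest)
            if child_lb >= best_value:
                continue            # check the bound before storing the child
            child = (child_lb, next(tie), order + (j,), rest,
                     done | missing, c, total + c)
            if strategy == "best":
                heapq.heappush(frontier, child)
            else:
                frontier.append(child)
    return best_value, best_order


job_time = {1: 3, 2: 2, 3: 4}
setup_time = {1: 2, 2: 1}
requires = {1: {1}, 2: {1, 2}, 3: {2}}
print(frontier_search(job_time, setup_time, requires, strategy="best"))
print(frontier_search(job_time, setup_time, requires, strategy="bfs"))
```

Both strategies store whole nodes in the frontier, which illustrates why their memory demand grows much faster than that of the recursive DFS.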

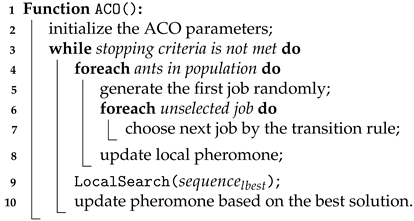

5. Ant Colony Optimization (ACO)

Ant colony optimization (ACO) was proposed by Dorigo et al. [23] and Dorigo [24]. It is a meta-heuristic algorithm based on probabilistic techniques and populations. ACO is inspired by the foraging behavior of ants, where the probability of an ant choosing a path is proportional to the pheromone concentration on the path, that is, a large number of ant colonies will give positive feedback. When ants are looking for food, they constantly modify the original path through pheromones and, finally, find the best path. Initially, Ant System (AS) was used to solve the well-known traveling salesman problem (TSP). Later, many ACO variants were produced to solve different hard combinatorial optimization problems, such as assignment problems, scheduling problems, or vehicle routing problems. In recent years, some researchers have focused on applying the ACO algorithm to multi-objective problems and dynamic or stochastic problems. In ant colony optimization, each ant constructs its foraging path (solution) node by node. When determining the next node to move on, we can use dominance properties and exclusion information to rule out the nodes that are not promising. In comparison with other meta-heuristics, this feature may save the time required for handling infeasible or inferior solutions. The pseudo-code of ACO that we adopt is shown in Algorithm 5.

| Algorithm 5: Ant Colony Optimization |

|

State transition rule: We treat each job as a node in the graph, and all nodes are connected. To choose the next edge, the ant considers the visibility of each edge available from its current location, as well as the pheromones. The visibility value from node i to node j is defined as the inverse of the sum of the processing time of job j and the processing times of its unfinished setup operations. Then, we calculate the probability of each feasible edge from the pheromone on the edge from node i to node j and its visibility, where one parameter controls the influence of the pheromone and another controls the influence of the visibility. The next node is determined by a roulette wheel selection.

Pheromone update rule: When all ants have found their solutions, the pheromone trails are updated. The existing pheromone on each edge is first reduced according to the pheromone evaporation rate, and the increments contributed by the ants are then added. The increment of the pheromone from node i to node j by the kth ant is the constant Q divided by the total completion time of the kth ant's solution if ant k traverses the edge from node i to node j, and 0 otherwise.
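The two rules above can be sketched in Python. The sketch assumes the standard Ant System forms, which match the verbal description but may differ in detail from the authors' formulas: the selection weight of job j is the pheromone raised to `alpha` times the visibility raised to `beta`, and the update is evaporation by `rho` followed by a deposit of `q / cost` on every traversed edge. All names are hypothetical, and node 0 is a dummy start node introduced for this sketch.

```python
import random
from typing import Dict, List, Tuple

Edge = Tuple[int, int]


def transition_probabilities(current: int, candidates: List[int],
                             pheromone: Dict[Edge, float],
                             visibility: Dict[int, float],
                             alpha: float, beta: float) -> Dict[int, float]:
    """Probability of moving from `current` to each candidate job.

    `visibility[j]` is assumed to be 1 / (job time + unfinished setup time).
    """
    weights = {j: (pheromone[(current, j)] ** alpha) * (visibility[j] ** beta)
               for j in candidates}
    norm = sum(weights.values())
    return {j: w / norm for j, w in weights.items()}


def roulette_select(probabilities: Dict[int, float], rng: random.Random) -> int:
    """Roulette wheel selection over the given probabilities."""
    r, acc = rng.random(), 0.0
    for j, p in probabilities.items():
        acc += p
        if r <= acc:
            return j
    return j  # guard against floating-point rounding


def update_pheromone(pheromone: Dict[Edge, float], tours: List[List[int]],
                     costs: List[float], rho: float, q: float) -> None:
    """Evaporate all trails, then deposit q / cost on each traversed edge."""
    for edge in pheromone:
        pheromone[edge] *= (1.0 - rho)
    for tour, cost in zip(tours, costs):
        for i, j in zip(tour, tour[1:]):
            pheromone[(i, j)] += q / cost


pheromone = {(0, 1): 1.0, (0, 2): 1.0, (1, 2): 1.0, (2, 1): 1.0}
probs = transition_probabilities(0, [1, 2], pheromone, {1: 0.5, 2: 0.25}, 1.0, 1.0)
print(probs, roulette_select(probs, random.Random(0)))
update_pheromone(pheromone, [[0, 1, 2]], [10.0], rho=0.5, q=5.0)
print(pheromone)
```

Because the visibility depends on which setups are already finished, the caller would recompute `visibility` after every construction step.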

Stopping criterion: We set a time limit of 1800 s for the ACO execution. Once the run reaches the time limit, the ACO algorithm stops and reports the incumbent best solution.

To close the discussion of ACO features, we note that local search can improve the ACO solution at each iteration and bring the result closer to the global optimum. At the end of each ACO generation, we apply a 2-OPT local search procedure to the best solution of that generation to help it move away from a local optimum.
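A 2-OPT step on a job permutation can be sketched as follows. The source does not say how its 2-OPT move is defined, so this sketch assumes the common variant that reverses a segment of the permutation and accepts the move when the total completion time decreases. The evaluator, the names, and the instance are hypothetical.

```python
from typing import Callable, Dict, List, Set, Tuple


def total_completion_time(order: List[int], job_time: Dict[int, int],
                          setup_time: Dict[int, int],
                          requires: Dict[int, Set[int]]) -> int:
    """Objective of a job permutation; setups run just before first use."""
    clock, total, done = 0, 0, set()
    for j in order:
        for s in requires[j] - done:
            clock += setup_time[s]
        done |= requires[j]
        clock += job_time[j]
        total += clock
    return total


def two_opt(order: List[int],
            cost: Callable[[List[int]], int]) -> Tuple[List[int], int]:
    """Reverse segments until no reversal improves the cost."""
    best, best_cost = list(order), cost(order)
    improved = True
    while improved:
        improved = False
        for a in range(len(best) - 1):
            for b in range(a + 1, len(best)):
                # Candidate: reverse the segment between positions a and b.
                cand = best[:a] + best[a:b + 1][::-1] + best[b + 1:]
                c = cost(cand)
                if c < best_cost:
                    best, best_cost, improved = cand, c, True
    return best, best_cost


job_time = {1: 3, 2: 2, 3: 4}
setup_time = {1: 2, 2: 1}
requires = {1: {1}, 2: {1, 2}, 3: {2}}
cost = lambda seq: total_completion_time(seq, job_time, setup_time, requires)
print(two_opt([3, 1, 2], cost))
```

Starting from the permutation with value 27, the search stops at a permutation with value 25, which is the optimum of this small instance.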

6. Computational Experiments

In this section, we generate test data for appraising the proposed methods. The solution algorithms were coded in Python, and the integer programming models were implemented in Gurobi 9.1.2 through its Python API. The experiments were performed on a desktop computer with an Intel Core(TM) i7-8700K CPU at 3.70 GHz and 32.0 GB of RAM, running Microsoft Windows 10. We describe the data generation design and parameter settings in detail and discuss the experimental results.

6.1. Data Generation Scheme

In the experiments, datasets were generated according to the following rules, and all parameters are integers:

- Six different numbers of jobs and different numbers of setup operations .

- A binary support relation array of a size is randomly generated. If belongs to , denoted by , then job cannot start unless setup is completed. The probability for is set to be 0.5, i.e., if a generated random number , then . Note that when for all i and j, the problem can be solved by simply arranging the job in the shortest processing time (SPT) order.

- The processing times of jobs were generated from the uniform distribution .

- The processing times of setups were generated from the uniform distribution .

- For each job number, three independent instances were generated. In total, 18 datasets will be tested, as shown in Table 1.
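The generation rules above can be sketched as a small Python function. The ranges of the uniform distributions and the instance sizes are not recoverable from the text, so `job_range`, `setup_range`, and the sizes in the usage line are placeholder assumptions; only the support probability of 0.5 comes from the source.

```python
import random
from typing import Dict, Optional, Set, Tuple


def generate_instance(n_jobs: int, n_setups: int,
                      job_range: Tuple[int, int],
                      setup_range: Tuple[int, int],
                      density: float = 0.5,
                      seed: Optional[int] = None):
    """Random instance with integer times and a random support relation."""
    rng = random.Random(seed)
    job_time: Dict[int, int] = {
        j: rng.randint(*job_range) for j in range(1, n_jobs + 1)}
    setup_time: Dict[int, int] = {
        i: rng.randint(*setup_range) for i in range(1, n_setups + 1)}
    # Job j requires setup i with probability `density` (0.5 in the paper).
    requires: Dict[int, Set[int]] = {
        j: {i for i in setup_time if rng.random() < density} for j in job_time}
    return job_time, setup_time, requires


# Placeholder sizes and ranges, chosen only for illustration
job_time, setup_time, requires = generate_instance(5, 4, (1, 10), (1, 10), seed=1)
print(job_time, setup_time, requires)
```

Passing a seed makes an instance reproducible, which is convenient when the same dataset must be fed to the IP models, the branch-and-bound variants, and the ACO.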

Table 1. Categories of datasets.


6.2. Results of Integer Programming Models

In the experiments of the integer programming models, we ran the two models on the datasets with a time limit of 1800 s. The results are shown in Table 2. If an IP model did not complete its execution on a dataset in 1800 s, its run time is denoted as "−". In the table, the gap column indicates the relative difference between the feasible solution found upon termination and the best proven lower bound. The gap values are taken from the output of Gurobi, which defines the gap as the absolute difference between the lower bound and the incumbent solution objective, divided by the absolute value of the incumbent objective. When the gap is zero, optimality has been demonstrated. A further column reports the best result of all our proposed methods on the same dataset.

Table 2. Results of different IP models.

When n is 10, the sequence-based IP takes more than 1800 s, even though both models obtain the optimal solution. The position-based IP takes less time. When n is greater than or equal to 20, neither model can prove optimality within 1800 s, although on some datasets a model still reaches the same objective value as the best solution. As n increases, the gap of the position-based IP becomes greater than that of the sequence-based IP. However, even when the objective value equals that of the best solution, the gap value can still be very large. This means that the lower bounds are not tight, i.e., they deviate significantly from the final feasible solution.

6.3. Results of Branch-and-Bound Algorithm

Table 3 shows the results of the branch-and-bound algorithm with three different tree traversal methods. We set the time limit to 1800 s. In this table, one column reports the number of visited nodes. The deviation column reports the difference between the objective value and the best solution, expressed as a percentage.
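The two quality measures used in the tables, the optimality gap of Section 6.2 and the deviation defined here, can be sketched as small Python helpers. The gap follows the relative-gap definition reported by Gurobi; for the deviation, the sketch assumes that the difference is divided by the best solution value, which the text does not state explicitly. Function names are hypothetical.

```python
def mip_gap(lower_bound: float, incumbent: float) -> float:
    """Relative gap between the best bound and a nonzero incumbent objective."""
    return abs(lower_bound - incumbent) / abs(incumbent)


def deviation_percent(objective: float, best: float) -> float:
    """Deviation from the best known value in percent (assumed denominator: best)."""
    return 100.0 * (objective - best) / best


print(mip_gap(90, 100))             # 0.1
print(deviation_percent(105, 100))  # 5.0
```

A gap of zero certifies optimality, whereas a deviation of zero only says that a method matched the best solution found by any of the compared methods.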

Table 3. Results of different tree traversal methods.

When n is less than 20, DFS and BestFS successfully find the optimal solutions, whereas the execution time and the number of visited nodes of BFS are much larger than those of the other two methods. Even when n is 20, BFS cannot find the optimal solution within the time limit. In addition, BestFS runs faster than DFS for a small number of jobs. When n is greater than or equal to 30, the three methods fail to find the optimal solution within the time limit. The number of visited nodes and the deviation of DFS are clearly lower than those of BFS and BestFS. The results indicate that DFS is more efficient than BFS and BestFS because DFS does not traverse the tree level by level but reaches complete solutions early and then backtracks. In contrast, BFS and BestFS may not be able to find any feasible solution within the time limit. To sum up, DFS performs better than BFS and BestFS, so we analyze the experimental results of DFS in detail in the next section.

6.4. Results of DFS Algorithm

In the experiment, we compare three different cases: the original DFS algorithm, DFS with the lower bound, and DFS with the dominance property. Table 4 shows the experimental results of the different cases and compares their objective values, numbers of visited nodes, execution times, and deviations from the best solution.

Table 4. Results of DFS algorithm.

We observe that when the lower bound and the dominance property are incorporated into DFS, the number of visited nodes is significantly reduced. Since these tests cut off unhelpful branches, they also speed up the traversal, making it easier to find better solutions. When n is greater than or equal to 30, none of the three cases can find the best solution within the time limit. However, compared with the original DFS, DFS with a lower bound and DFS with a dominance property attained smaller deviations, indicating the capability of finding solutions closer to the best solution.

6.5. Results of ACO Algorithm

In this section, we performed the ACO algorithm on the 18 datasets and set the time limit to 1800 s. Table 5, Table 6 and Table 7 summarize the results of the three branch-and-bound algorithms with ACO upper bounds. The results include objective values and deviation of the ACO. The execution time of the ACO algorithm is much shorter than that of the branch-and-bound algorithm. In addition, we will compare the objective value , the number of visited nodes , and the execution times, , of the original algorithm and the algorithm with ACO as the upper bound. The ACO parameters used in the experiments are shown as follows: ; ; ; ; and .

Table 5. Results of DFS with ACO upper bounds.

Table 6. Results of BFS with ACO upper bounds.

Table 7. Results of BestFS with ACO upper bounds.

As the tables show, the deviation of the ACO is small, and the ACO can even find better solutions than the IP models within 1800 s. Therefore, we can use the ACO solution as the initial upper bound to shorten the tree traversal time.

When the branch-and-bound algorithm tests whether the lower bound of a node is at least the upper bound, the ACO solution makes the upper bound smaller, cutting more unnecessary branches. According to the tables, when n is less than or equal to 20, the algorithm with an ACO upper bound finds the best solution in a shorter time and visits fewer nodes; the effect is most obvious for BFS. As n becomes larger, the ACO upper bound increases the probability of the algorithm finding the best solution within the same time limit. In summary, using the ACO solution as an upper bound makes the branch-and-bound algorithm perform better.

To summarize the computational study, the two proposed integer programming approaches and the branch-and-bound algorithm, all aimed at solving the problem to optimality, can complete their execution for instances with 20 jobs or fewer. For larger instances, these exact approaches become less effective: the solutions reported when the time limit is reached are not favorable. Another observation concerns the three traversal strategies. DFS has the advantages of easy implementation (by straightforward recursion) and a minimal memory requirement. The BFS and BestFS strategies benefit from maintaining acquired information about the quality of the unexplored nodes in a priority queue. On the other hand, they incur memory and heap-manipulation overhead for the unexplored nodes. BFS and BestFS would therefore be preferred when more memory and advanced data structures are available.
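The trade-off between recursion and a priority queue can be made concrete with a small best-first sketch in Python. It keeps every unexplored node in a heap keyed by a lower bound, which is where the extra memory and heap manipulation come from. The instance encoding (`p`, `s`, `req`), the setup-free shortest-processing-time bound, and the function names are assumptions for illustration and do not reproduce the implementation evaluated above.

```python
import heapq
from itertools import permutations
from typing import List, Set, Tuple


def schedule_cost(seq, p: List[int], s: List[int], req: List[Set[int]]) -> int:
    """Total completion time of a job sequence; shared setups run once."""
    done, t, total = set(), 0, 0
    for j in seq:
        t += sum(s[k] for k in req[j] - done) + p[j]
        done |= req[j]
        total += t
    return total


def brute_force(p: List[int], s: List[int], req: List[Set[int]]) -> int:
    """Reference optimum by full enumeration (tiny instances only)."""
    return min(schedule_cost(seq, p, s, req)
               for seq in permutations(range(len(p))))


def best_first_bnb(p: List[int], s: List[int],
                   req: List[Set[int]]) -> Tuple[int, int]:
    """Best-first search; returns (optimal value, number of expanded nodes)."""

    def lower_bound(t: int, total: int, remaining) -> int:
        # Hypothetical bound: ignore outstanding setups, use SPT order.
        for pj in sorted(p[j] for j in remaining):
            t += pj
            total += t
        return total

    jobs = frozenset(range(len(p)))
    counter = 0  # unique tie-breaker so the heap never compares frozensets
    heap = [(lower_bound(0, 0, jobs), counter, 0, 0, frozenset(), jobs)]
    expanded = 0
    while heap:
        _, _, t, total, done, remaining = heapq.heappop(heap)
        expanded += 1
        if not remaining:
            # A leaf's bound equals its cost, and every other open node has
            # a bound at least as large, so this schedule is optimal.
            return total, expanded
        for j in sorted(remaining):
            new_setups = req[j] - done
            finish = t + sum(s[k] for k in new_setups) + p[j]
            rest = remaining - {j}
            counter += 1
            heapq.heappush(heap, (lower_bound(finish, total + finish, rest),
                                  counter, finish, total + finish,
                                  done | new_setups, rest))
    raise RuntimeError("search space exhausted without a complete schedule")


if __name__ == "__main__":
    p = [4, 2, 3, 1]                  # made-up instance
    s = [3, 1, 2]
    req = [{0}, {0, 1}, {1, 2}, {2}]
    print("best-first :", best_first_bnb(p, s, req))
    print("brute force:", brute_force(p, s, req))
```

The heap can hold a large share of the search tree at once, whereas a recursive DFS only stores the current path; this is the memory cost mentioned above.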

7. Conclusions and Future Works

In this paper, we studied a single-machine scheduling problem with shared common setups to minimize the total completion time. We proposed two integer programming models and a branch-and-bound algorithm that incorporates three tree traversal strategies and initial solutions yielded by an ACO algorithm. The computational study shows that the position-based IP outperforms the sequence-based one when the problem size is small. As the problem grows larger, the gap values of the sequence-based IP are smaller than those of the position-based IP. For the branch-and-bound algorithm, DFS performs best, regardless of whether lower bounds and other properties are used. Finally, we observed that using ACO to provide an initial upper bound indeed speeds up the execution of the branch-and-bound algorithm.

For future research, developing tighter lower bounds and upper bounds could lead to better performance. More properties can be found to help the branch-and-bound algorithm curtail non-promising branches. For the integer programming models, tighter constraints can be proposed to reduce the execution time and optimality gaps. The problem could also be extended to reflect a real-world circumstance in which multiple machines or servers are available for a software test project. In this generalized scenario, a setup could be performed on several machines if the jobs that it supports are assigned to distinct machines.

Author Contributions

Conceptualization, M.-T.C. and B.M.T.L.; methodology, M.-T.C. and B.M.T.L.; software, M.-T.C.; formal analysis, M.-T.C. and B.M.T.L.; writing—original draft preparation, M.-T.C. and B.M.T.L.; writing, M.-T.C. and B.M.T.L.; supervision, M.-T.C. and B.M.T.L.; project administration, B.M.T.L.; funding acquisition, B.M.T.L. All authors have read and agreed to the published version of the manuscript.

Funding

Chao and Lin were partially supported by the Ministry of Science and Technology of Taiwan under the grant MOST-110-2221-E-A49-118.

Data Availability Statement

The datasets analyzed in this study are available upon request.

Conflicts of Interest

The authors declare no conflict of interest.


Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).