1. Introduction

The emergency control (EC) of electrical power systems (EPSs) is an important part of ensuring the reliability of power supply to consumers. For modern EPSs, emergency control is aimed at maintaining small-signal stability (SSS), transient stability (TS), and voltage stability, as well as the required level of alternating current frequency and the permissible current load of electrical network elements [1]. A separate automation device is responsible for each EPS control task: it analyzes the parameters of the electrical mode (currents, voltages, active power flows, reactive power flows, etc.) and issues control actions (CAs) (load shedding, generator shutdown, generator unloading, etc.) according to specified logical rules. The operation of automation devices is implemented in stages: the first includes the operation of devices protecting local energy areas and the last includes devices aimed at dividing EPSs.

The EC structure described above has shown high reliability and efficiency, including during the active introduction of renewable energy sources (RESs) [2,3,4,5]. The goal of introducing RESs is to reduce the impact of the electricity sector on global warming [6] by transitioning from traditional power plants running on fossil fuels to low-carbon electricity production from solar, wind, tidal, and other renewable energy sources. This goal has been partially achieved in many European countries, the USA, India, and China [7].

The key features of RESs are the stochasticity of electricity generation [8,9,10], low inertia [11], and the difficulty of predicting their operating modes. The increase in the share of RESs in EPSs is accompanied by the simultaneous dismantling of traditional fossil fuel power units. As a result, the properties of modern EPSs have changed significantly: the speed of transient processes has increased and the accuracy of predicting steady-state processes has decreased.

Modern EPSs are complex technical systems with a significant predominance of digital devices for the analysis, control, and planning of steady-state and transient processes. The digitalization of the electric power industry has led to the accumulation of large amounts of data and the possibility of processing them using machine learning (ML) algorithms [12].

Following the tightening of the rules of the electricity market, digital systems that allow intersystem active power flows to be increased are being actively implemented [13,14,15], which reduces the stability margin and increases the likelihood of an accident that ends in EPS division.

Table 1 provides an analysis of the features of modern EPSs.

The described features of modern EPSs place new demands on traditional EC systems in terms of adaptability, accuracy, and speed. Traditional EC systems are based on one of two principles:

The choice of CA is carried out based on a predetermined logic, considering the worst-case scenario for accident development;

The choice of CA is carried out based on the actual operating mode of the EPS in a cyclic mode based on a mathematical model of the protected section of the electrical network.

To implement both EC principles, a pre-prepared list of examined accidents is used. This list is compiled manually based on operating experience and EPS accident statistics. In addition, the logic of operation of traditional EC devices is based on strictly deterministic approaches that involve the numerical analysis of a system of differential-algebraic equations describing the mathematical model of the protected EPS. These features of traditional EC devices do not meet the requirements of modern EPSs in terms of speed and adaptability.

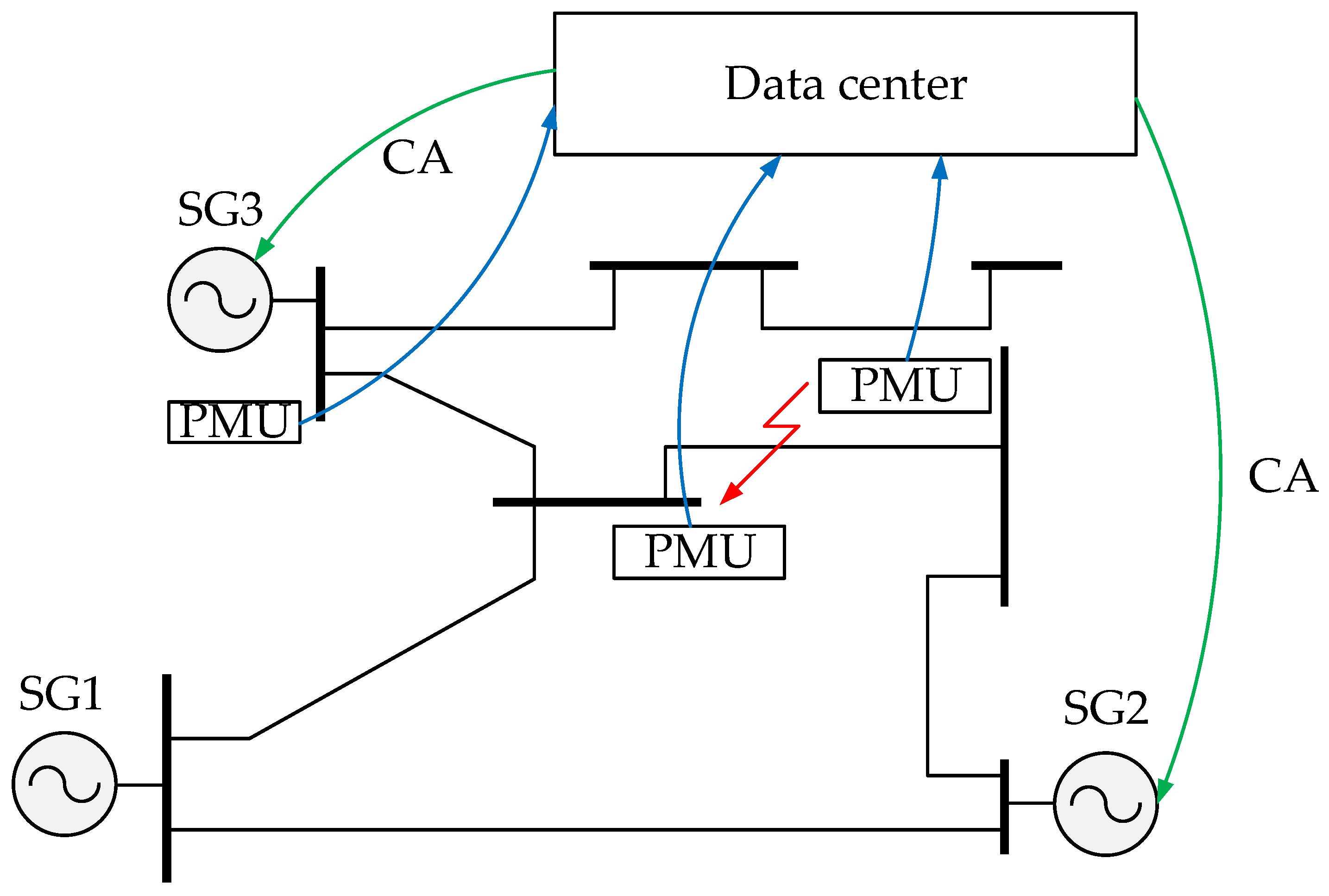

The development of computer technology, the theory of mathematical statistics, and ML methods, as well as the active implementation of phasor measurement units (PMUs), makes it possible to develop an adaptive EC EPS system based on big data classification algorithms using data obtained from PMUs during the registration of transient processes. This approach ensures the required adaptability and speed of CA selection.

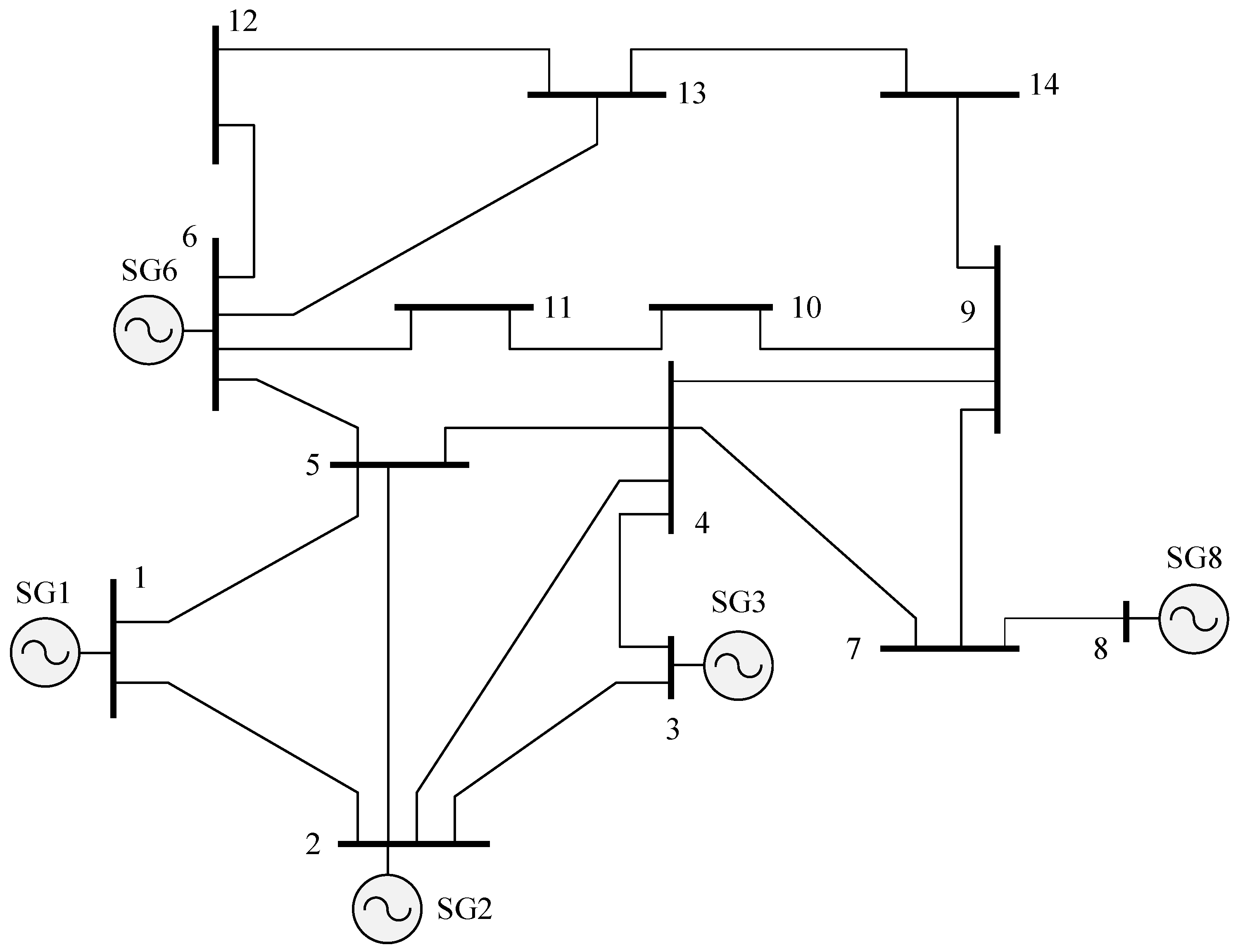

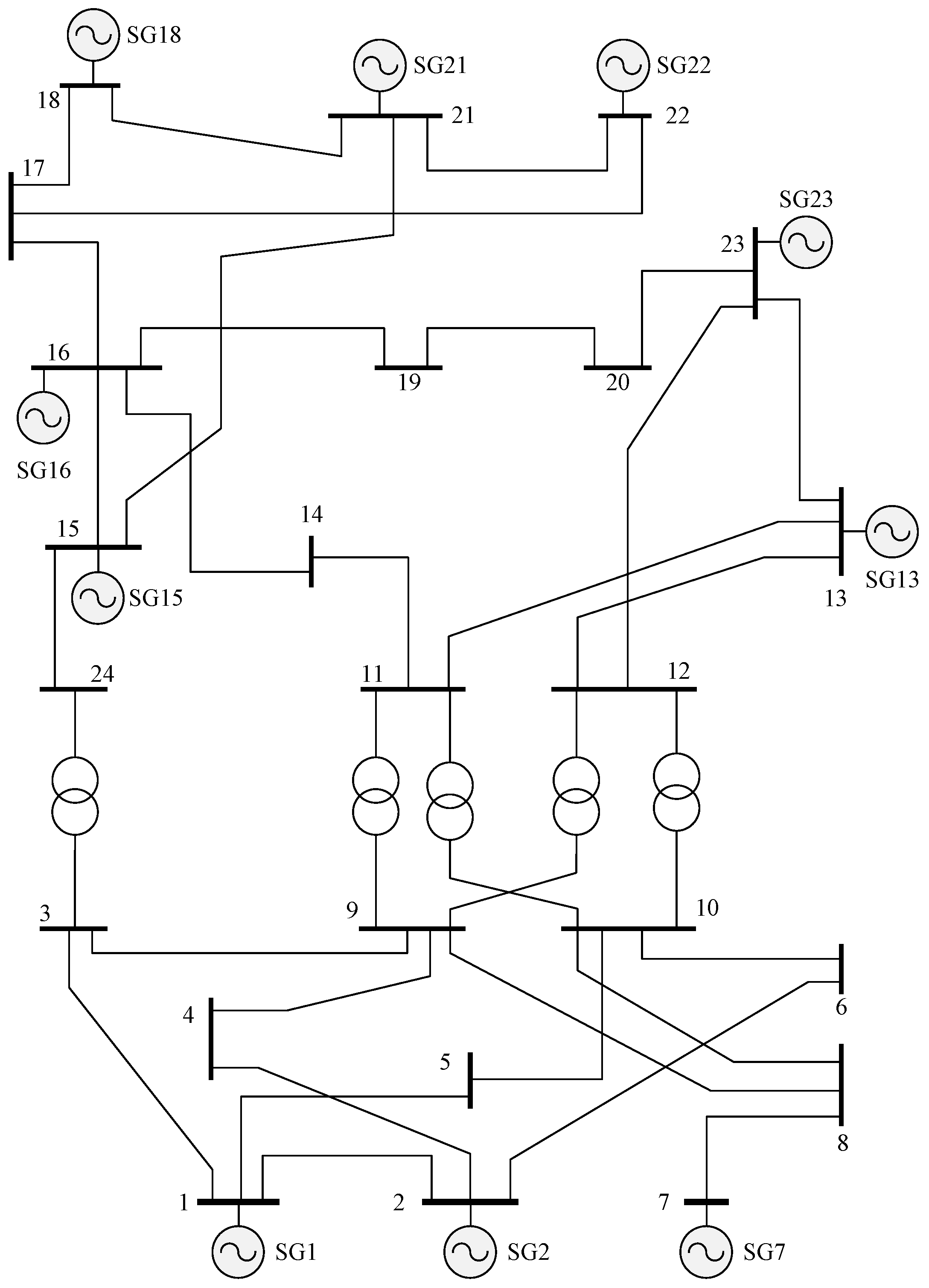

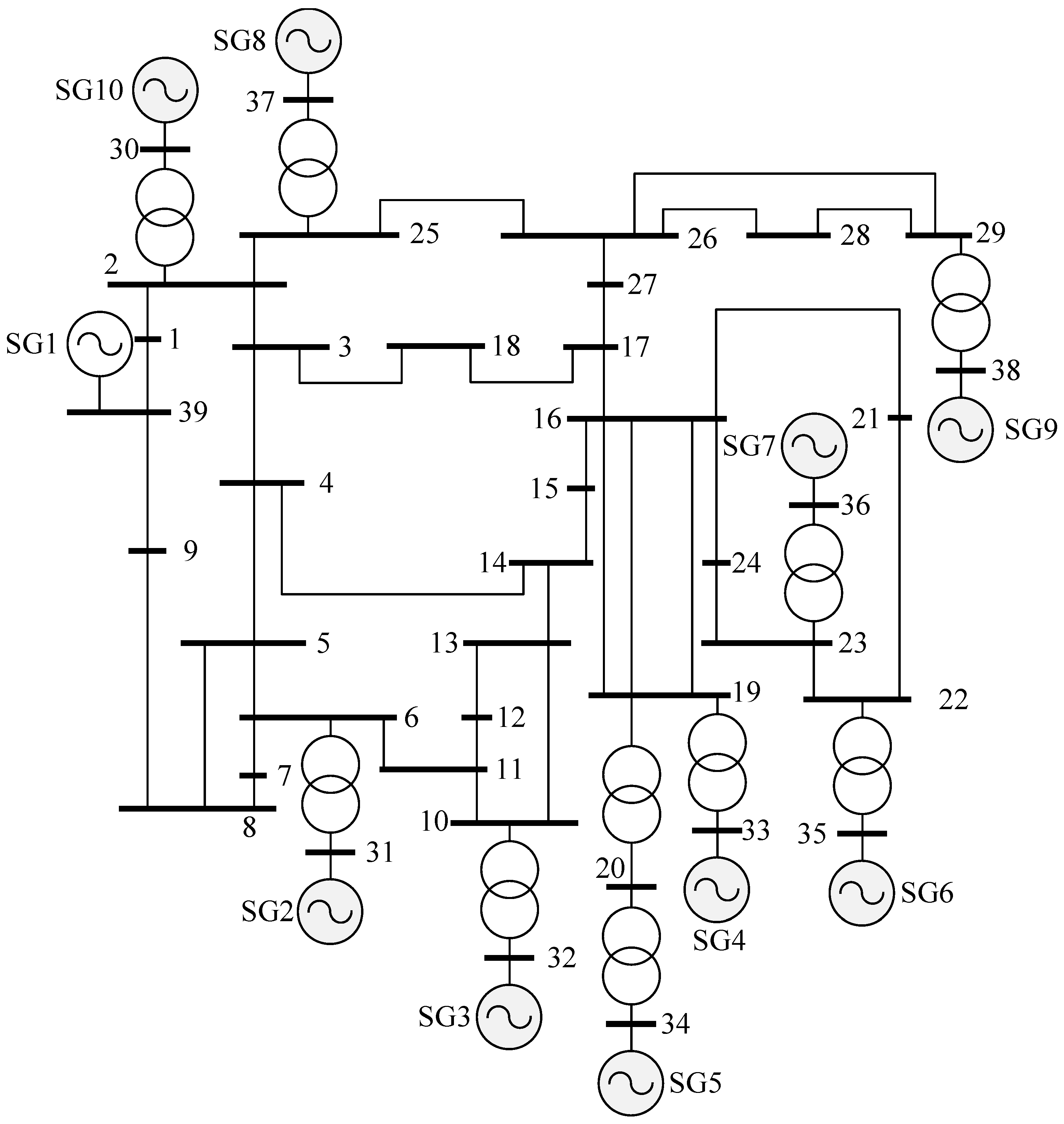

The purpose of this article is to develop and test the EC EPS methodology based on the IEEE14 [16], IEEE24 [17], and IEEE39 [18] EPS mathematical models to preserve TS and SSS, considering a classification algorithm for big data obtained from PMUs.

The scientific contribution of this paper is described as follows:

A meta-analysis of existing EC EPS methods based on ML algorithms is presented. The advantages and disadvantages of existing methods are identified.

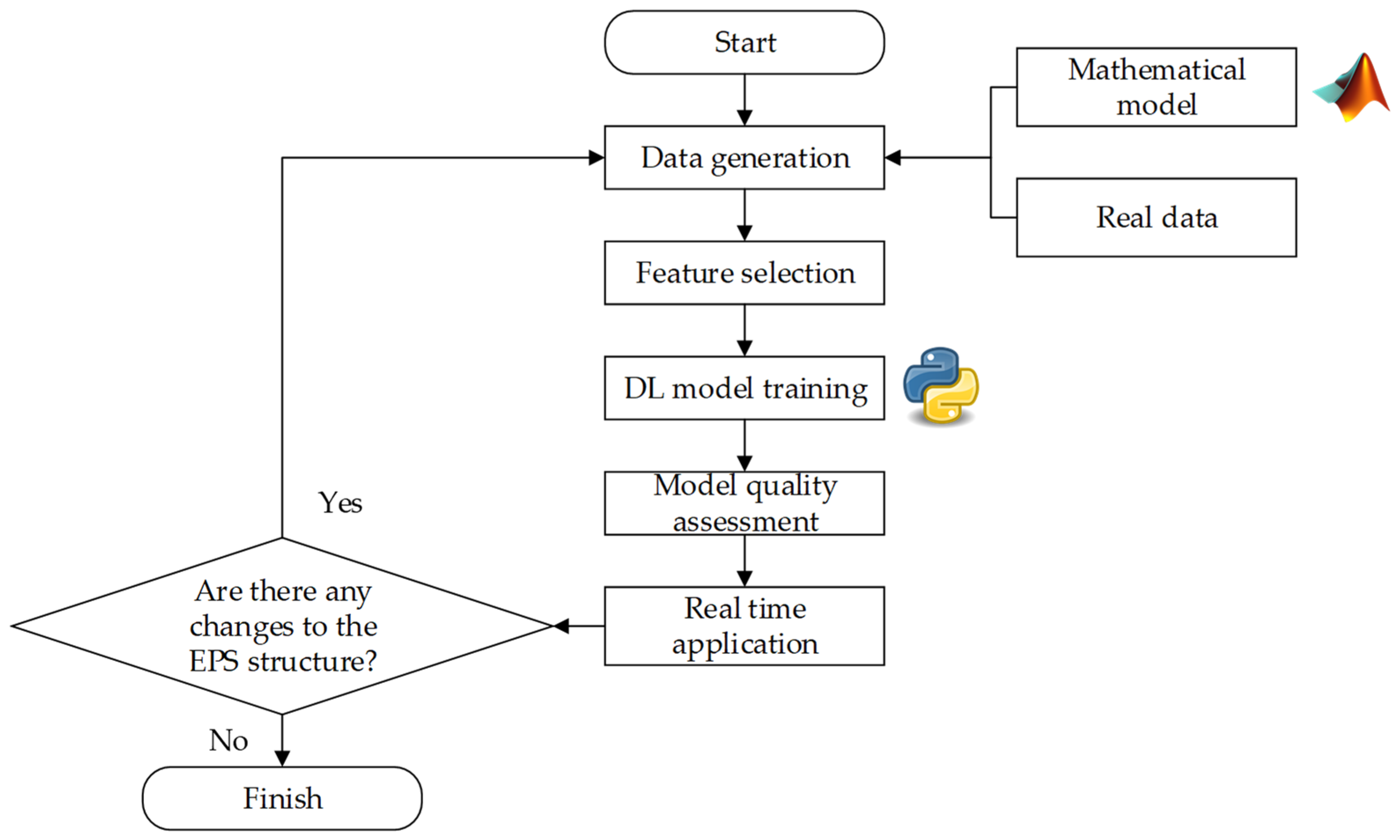

A comprehensive methodology for using ML algorithms in EC EPSs is proposed.

A study of the accuracy of EC EPS value classification based on ML algorithms is carried out.

The feasibility of using data from PMUs to form data samples for ML algorithms is proved.

The feasibility of using ML algorithms to develop a flexible approach to ensuring the SSS and TS of EPSs is proved.

2. Related Works

From the ML perspective, the task of selecting CAs for EC EPSs to provide SSS and TS can be considered a multi-class classification problem. Unlike the task of estimating TS and SSS, the EC task is more complex due to the transition from binary classification [19] to multi-class classification. The classes are sets of optimal CAs aimed at ensuring the stability of the post-accident operating mode for the accident under consideration. The features for the EC EPS problem are the pre-emergency values of electrical mode parameters: voltages in EPS nodes, active and reactive power flows across the elements of the electrical network, load angles of synchronous generators (SGs), etc. In addition, most of the considered accidents in EPSs do not require EC, i.e., the post-emergency operating mode of the EPS is stable without the use of CAs. Thus, the EC EPS problem based on ML algorithms has the following features:

A significant number of features in the data sample;

Uneven distribution of classes in the sample (most emergency processes do not require CA implementation);

Significant degree of overlap between classes (one CA can be part of many classes).

These features of the EC problem based on ML algorithms lead to the need for the pre-processing of data samples through the following actions:

Reducing the dimension of the problem by reducing the number of features;

Balancing the distribution of classes in the original data set;

Removing outliers from the original data set.

A similar algorithm is described in [20]. In addition to processing the initial data sample, an important step for the EC EPS task based on ML algorithms is the collection of new data and retraining of the model in response to changes in the protected energy region (construction or dismantling of electrical network elements and active power sources, changes in SG excitation controller algorithms, etc.).
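Two of the pre-processing actions listed above, outlier removal and class balancing, can be illustrated with a minimal sketch. It is not the procedure of [20]: the z-score rule, the threshold `z_max`, random oversampling, and the class labels in the usage note are all assumptions chosen for illustration.

```python
import random
from collections import Counter
from statistics import mean, pstdev


def remove_outliers(rows, labels, z_max=3.0):
    """Drop samples in which any feature lies more than z_max standard deviations from its mean."""
    columns = list(zip(*rows))
    mus = [mean(c) for c in columns]
    sigmas = [pstdev(c) or 1.0 for c in columns]  # guard against constant features
    kept = [
        (row, lab)
        for row, lab in zip(rows, labels)
        if all(abs(v - m) / s <= z_max for v, m, s in zip(row, mus, sigmas))
    ]
    return [r for r, _ in kept], [lab for _, lab in kept]


def oversample(rows, labels, seed=0):
    """Randomly duplicate minority-class samples until every class matches the largest one."""
    rng = random.Random(seed)
    counts = Counter(labels)
    target = max(counts.values())
    out_rows, out_labels = list(rows), list(labels)
    for cls, n in counts.items():
        pool = [r for r, lab in zip(rows, labels) if lab == cls]
        for _ in range(target - n):
            out_rows.append(rng.choice(pool))
            out_labels.append(cls)
    return out_rows, out_labels
```

For example, with hypothetical labels such as `"no_action"` and `"shed_load"`, calling `remove_outliers` and then `oversample` yields a sample in which both classes occur equally often. Oversampling should be applied to the training split only, otherwise duplicated samples leak into the test set.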

To solve the EC EPS problem based on ML algorithms, the following algorithms are used:

Decision trees (DTs) [21,22,23];

Support vector machines (SVMs) [25,26,27];

Artificial neural networks (ANNs) [28,29,30,31];

Extreme gradient boosting (XGBoost) [36,37].

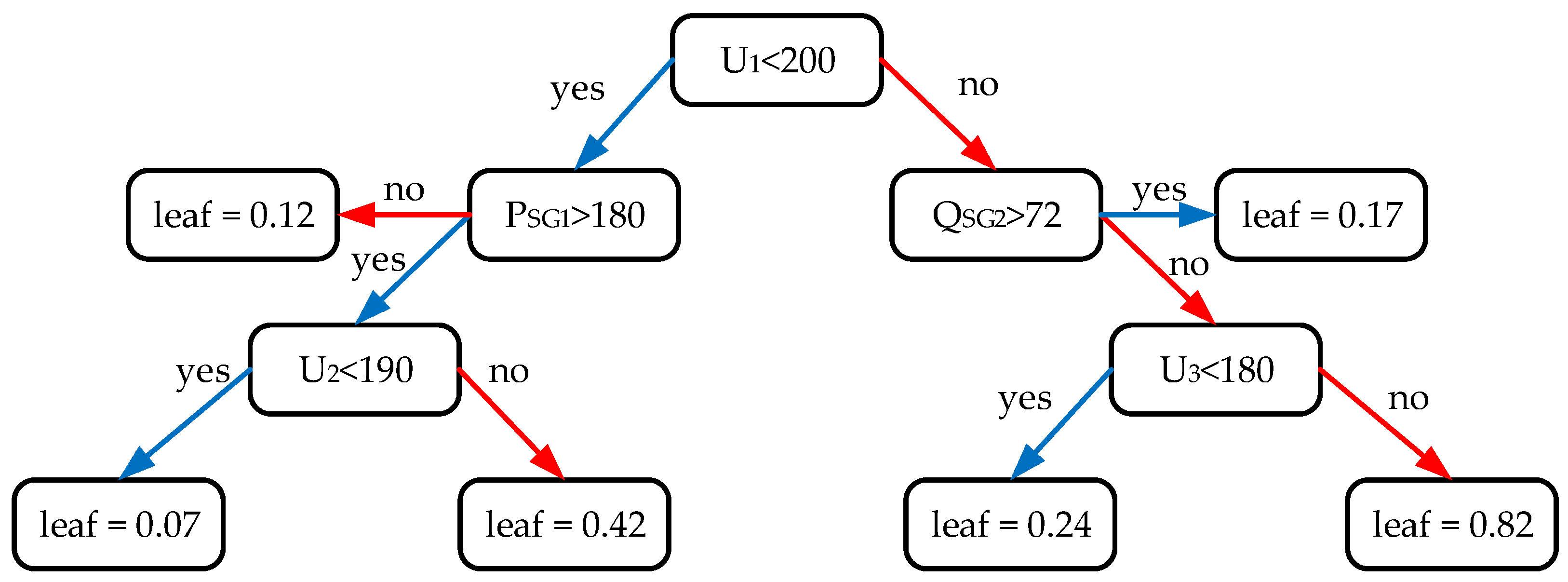

DT is a classification and regression algorithm that combines logical conditions into a tree structure [38]. The DT algorithm describes the data as a tree-like hierarchical structure with a specific classification rule at each node, which is determined during training. A DT can be constructed based on the analysis of the Gini coefficient [39]:

where $\mathrm{Gini}(m)$ is the value of the Gini coefficient, which shows the degree of heterogeneity of the data set $m$; $\Delta G$ is the increment of the Gini coefficient after splitting the data at a DT node; $n_m$ is the amount of data in the set $m$; $n_1$ is the amount of data in the set $m_1$; $n_2$ is the amount of data in the set $m_2$; and $m_1$ and $m_2$ are the data sets obtained by splitting set $m$ at a DT node.
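For orientation, the quantities defined above are commonly related as follows in textbook treatments of decision trees. This is the standard form and may differ in notation from [39]; the class count $K$ and the class share $p_k$ (the fraction of samples in $m$ that belong to class $k$) are symbols introduced here as assumptions.

```latex
\mathrm{Gini}(m) = 1 - \sum_{k=1}^{K} p_k^{2},
\qquad
\Delta G = \mathrm{Gini}(m) - \frac{n_1}{n_m}\,\mathrm{Gini}(m_1) - \frac{n_2}{n_m}\,\mathrm{Gini}(m_2)
```

A pure set gives $\mathrm{Gini}(m) = 0$, and a split is more useful the larger $\Delta G$ is.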

As a result of DT learning, a set of rules is determined at each node in such a way as to minimize the value of the Gini coefficient. During the training process, logical rules are determined for only one sample feature in one iteration, so training on data with many features is time-consuming.
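The per-feature search described above can be sketched as follows. This is a minimal illustration that assumes the standard Gini impurity (one minus the sum of squared class shares) and an exhaustive threshold scan; the function names are hypothetical and the code does not reproduce the implementation of any cited study.

```python
from collections import Counter


def gini(labels):
    """Gini impurity of a label list: 1 minus the sum of squared class shares."""
    n = len(labels)
    if n == 0:
        return 0.0
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def gini_gain(parent, left, right):
    """Decrease in impurity when `parent` is split into `left` and `right`."""
    n = len(parent)
    return gini(parent) - len(left) / n * gini(left) - len(right) / n * gini(right)


def best_threshold(values, labels):
    """Scan candidate thresholds on ONE feature and return (threshold, gain)."""
    best = (None, 0.0)
    for t in sorted(set(values))[:-1]:  # the largest value cannot split the set
        left = [lab for v, lab in zip(values, labels) if v <= t]
        right = [lab for v, lab in zip(values, labels) if v > t]
        gain = gini_gain(labels, left, right)
        if gain > best[1]:
            best = (t, gain)
    return best
```

Because `best_threshold` handles a single feature, a full tree repeats this scan for every feature at every node, which is why the training cost grows with the number of features.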

In the study [21], the DT algorithm was used to determine the CA to ensure EPS stability. To reduce the time delay for algorithm training, the authors used Fisher’s linear discriminant. When forming the DT, the following objective function was used:

where $n_G$ is the number of SGs whose disconnection can implement the CA, $n_L$ is the number of loads whose disconnection can implement the CA, $P_i^G$ is the power of SG $i$, and $P_j^L$ is the power of load $j$.

The IEEE39 EPS model [22] was used to train and test the developed algorithm. The accuracy of the DT algorithm was 99.43% on the training set and 99.66% on the test set.

In [23], an algorithm for ensuring acceptable voltage levels is proposed based on the DT algorithm and data obtained from PMU devices. Testing was performed using a mathematical model from Hong Kong.

One way to overcome the disadvantages of the DT algorithm, namely the significant time required for training and the tendency to overfit, is to combine several shallow DTs into an ensemble. This method of combining DTs is called random forest (RF) [40].
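The ensemble idea can be illustrated with a deliberately reduced sketch that shows only the voting step. It assumes depth-one trees (stumps) with thresholds given by hand; a real RF also trains each tree on a bootstrap sample with a random subset of features, which is omitted here. All names and labels are hypothetical.

```python
from collections import Counter


def make_stump(feature_index, threshold, below, above):
    """A depth-one tree: a single threshold test on a single feature."""
    return lambda x: below if x[feature_index] <= threshold else above


def forest_predict(stumps, x):
    """Majority vote over the predictions of the individual trees."""
    votes = Counter(stump(x) for stump in stumps)
    return votes.most_common(1)[0][0]
```

With three stumps, a sample is assigned the class that at least two of them agree on, so a single poorly placed threshold does not decide the outcome on its own.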

In the study [24], the RF algorithm was used to select CAs. The solution to the EC problem using EPS modes was divided into two stages:

The developed algorithm was tested based on the EPS IEEE16 model.

SVM is one of the ML algorithms that can solve the classification problem. This method is based on searching for separating hyperplanes that maximize the distance between classes in the data sample. SVM rests on the assumption that classification reliability increases with the distance between the separating hyperplanes. To construct the hyperplanes, the following objective function is used [41]:

where $\lVert w \rVert$ is the norm of the vector perpendicular to the separating hyperplane, $\xi_i$ are variables describing the classification error, $C$ is a coefficient that provides a compromise between the training error and the separation boundary, and $N$ is the number of elements in the data sample.
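For reference, the symbols defined above appear in the standard soft-margin SVM formulation, written out below. This is the common textbook form and may differ in detail from the expression in [41]; the sample vectors $x_i$, the class labels $y_i \in \{-1, +1\}$, and the bias $b$ are symbols introduced here as assumptions.

```latex
\min_{w,\,b,\,\xi}\; \frac{1}{2}\lVert w \rVert^{2} + C \sum_{i=1}^{N} \xi_i
\quad \text{subject to} \quad
y_i\,(w^{\top} x_i + b) \ge 1 - \xi_i,\qquad \xi_i \ge 0,\qquad i = 1,\dots,N
```

A small $\lVert w \rVert$ corresponds to a wide margin, while a large $C$ penalizes training errors more heavily at the expense of margin width.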

The authors of [25] used SVMs to analyze the static stability of EPSs based on data obtained from PMUs. The choice of SVMs is due to the ability to learn on small data samples, the absence of an obvious overfitting problem, and the small number of adjustable parameters compared to ANNs. In this study, voltage values at EPS nodes are used to analyze the control system. To test the proposed algorithm, the EPS IEEE39 mathematical model was used. The results of 492 simulations were used to form the data sample. The accuracy of the SVMs was 97% with a delay of 0.042 s for one emergency process.

In [26], an SVM-based algorithm for analyzing the static stability of EPSs was developed. The paper compares the classification accuracy of SVMs and ANNs on data obtained from a series of transient process calculations for a mathematical model of the Brazilian power system, consisting of 2684 nodes. Compared to ANNs, using SVMs increases the accuracy by 2.5 times and reduces the time of analyzing the static stability of EPSs by 2.5 times.

The authors of the study [27] proposed a method for ensuring acceptable voltage levels based on the SVM algorithm and data obtained from PMU devices. The proposed algorithm consists of two stages: training the SVM algorithm on historical data and running the trained algorithm in real time. The proposed algorithm was tested based on the EPS IEEE39 mathematical model.

The authors of [28] proposed an algorithm for analyzing the SSS of EPSs based on deep learning ANNs, taking into account RESs. The initial data for the algorithm are retrospective data on electricity generation from solar and wind power plants, parameters of the electrical mode of the power system, and a set of CAs for the emergency processes under consideration. The next stage is a statistical analysis of the resulting sample to reduce its dimensionality by removing features that correlate weakly with the classification target. Next, the sample is divided into test and training sets. The final stage is training the model followed by testing. The proposed algorithm was tested on the EPS IEEE68 mathematical model. The accuracy of the model was 99.89%.

The study [29] presents an EC EPS algorithm based on ANNs and the Lyapunov function, taking into account the minimization of the cost of CA implementation.

The authors of [30] used the ANN algorithm to ensure acceptable voltage levels in EPS nodes. To increase the accuracy of the ANNs, the Proximal Policy Optimization algorithm was used [31]. Testing was performed based on the EPS IEEE39 mathematical model.

In [32,33], the DL algorithm was used to develop the EC algorithm for EPS modes. This algorithm is based on the interaction of an agent with the external environment, which is modeled as a Markov decision process. At each time step of the simulation, the agent observes the state of the external environment and receives a reward signal that depends on the actions it has issued. The goal of the algorithm is to apply optimal actions to the external environment to obtain the maximum total reward.
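The agent and environment interaction described above can be sketched with a toy example. The environment below is a hypothetical stand-in, not the EPS model of [32,33]: its state is a single integer "stability margin", its two actions and its reward values are assumptions chosen only to make the loop concrete, and no learning is performed.

```python
class ToyGridEnv:
    """Hypothetical stand-in for an EPS simulator: the state is a stability margin."""

    def __init__(self, margin=-2, horizon=5):
        self.start, self.horizon = margin, horizon

    def reset(self):
        self.margin, self.t = self.start, 0
        return self.margin

    def step(self, action):
        # action 1 = apply a control action (costly, raises the margin); 0 = do nothing
        self.margin += 1 if action == 1 else 0
        self.t += 1
        reward = (-1.0 if action == 1 else 0.0) + (-5.0 if self.margin < 0 else 0.0)
        done = self.t >= self.horizon
        return self.margin, reward, done


def run_episode(env, policy):
    """Let `policy` (state -> action) interact with `env`; return the total reward."""
    state, total, done = env.reset(), 0.0, False
    while not done:
        action = policy(state)
        state, reward, done = env.step(action)
        total += reward
    return total
```

Comparing `run_episode(ToyGridEnv(), lambda s: 0)` with `run_episode(ToyGridEnv(), lambda s: 1 if s < 0 else 0)` shows that the policy acting only while the margin is negative collects a higher total reward than the policy that never acts. A learning algorithm searches for such a policy automatically instead of having it written by hand.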

In [32], to describe the EPS as an external environment for the DL algorithm, the following system of differential-algebraic equations with constraints is used:

where $x_t$ is the list of variables that determine the dynamic state of the EPS, $y_t$ is the list of variables that determine the steady state of the EPS, $a_t$ is the list of CAs participating in the EC of the EPS operating mode, $d_t$ is the magnitude of the disturbance, and the superscripts max and min denote the upper and lower limits of each variable.

To train and test an algorithm that implements EC modes of EPSs based on DL, the study [32] proposed a platform containing two modules:

Module 1 implements the DL algorithm (OpenAI Gym, Python3);

Module 2 is used for EPS modeling and EC implementation (InterPSS, Java).

Communication between modules is ensured using the Py4J wrapper function. To configure the work of Module 1, a pre-prepared training sample is used. Next, in Module 2, the EPS transient process is simulated with the transfer of selected operating parameters to Module 1, in which the CA volume is calculated. To test the proposed platform, the standard EPS IEEE39 model was used; load shedding, generation shutdown, and the dynamic braking of generators due to braking resistors were used as CAs to ensure TS. As a result of a series of mathematical experiments, a high efficiency of the proposed platform was shown. The average CA-selection time for one emergency process was 0.18 s.

To ensure the required frequency level in isolated EPSs, the authors of [34] proposed an algorithm based on the use of DL. The proposed algorithm was tested on the EPS IEEE37 mathematical model. For the considered test scenarios, the results of calculating the accuracy of CA determination and the time delay required to analyze one emergency process are not provided. The authors argue that a promising direction for the development of the proposed algorithm is to reduce its computational complexity. Work [35] discusses the use of DL to ensure the required frequency level in isolated EPSs with RESs. The work also does not provide the results of determining the accuracy and time delays of the algorithm.

The development of the RF algorithm led to the XGBoost algorithm [36], which uses gradient descent for training. Thus, it is possible to overcome the disadvantages of the RF algorithm.

The authors of the study [37] used the XGBoost algorithm to ensure the TS of EPSs. Testing was performed on the IEEE39 EPS mathematical model and on real data obtained from the South Carolina EPS. For the IEEE39 model, 478 stable modes and 362 unstable modes were considered, and the EC accuracy was 97.88%. For the South Carolina model, 483 stable modes and 405 unstable modes were considered, and the CA selection accuracy was 98.62%.

Table 2 provides an analysis of the reviewed ML algorithms used for EC EPSs.

The considered ML algorithms used to select a CA for preserving the TS of EPSs are characterized by several disadvantages associated with the suboptimal choice of CAs, significant time costs for training the model, high requirements for RAM, a tendency to overfit, and the complexity of determining hyperparameters. The XGBoost algorithm has the shortest CA-selection time for preserving the TS of EPSs compared to the DL and DT algorithms. For the RF algorithm, the study does not provide an estimate of time costs. To analyze and preserve the SSS of EPSs, two machine learning algorithms were considered: SVMs and ANNs. The highest performance of CA selection was shown for the SVM algorithm. The main disadvantage of this algorithm is its sensitivity to outliers and noise in the source data, which can lead to a significant displacement of the separating hyperplane from the optimal position. This problem can be effectively solved through the use of statistical filters at the data pre-processing stage.

One of the significant disadvantages of the considered ML algorithms is their lack of universality: each selects a CA to preserve either TS or SSS only. In addition, for the considered algorithms to work, a pre-generated list of accidents for which a CA must be selected is required. This approach significantly reduces the adaptability of EC EPSs.

The purpose of this study is to develop and test, on data from mathematical models, a universal CA-selection algorithm for preserving the TS and SSS of EPSs based on big data analysis through the use of DL algorithms. The following algorithms are under consideration: recurrent neural networks (RNNs), long short-term memory networks (LSTMs), restricted Boltzmann machines (RBMs), and self-organizing maps (SOMs). The selection of these algorithms was based on the analysis of the study [42].

The scientific novelty of this research lies in solving the problem of adaptive EC EPSs based on deep learning methods for preserving TS and SSS. The adaptability of the proposed method is ensured by the absence of the need to specify a list of accidents under consideration for which the CA is selected. To increase the accuracy of CA selection, measurements obtained from PMUs are used in the original data set.

5. Conclusions

This study presents the results of developing an EC EPS methodology based on DL algorithms and data obtained from PMUs. Due to the introduction of a significant number of RESs and the tightening of the rules governing the electricity market, the speed of transient processes in modern EPSs has changed significantly. Therefore, traditional EC EPS systems do not meet the requirements for speed and reliability. This paper proposes a methodology for selecting a CA for preserving the TS and SSS of EPSs.

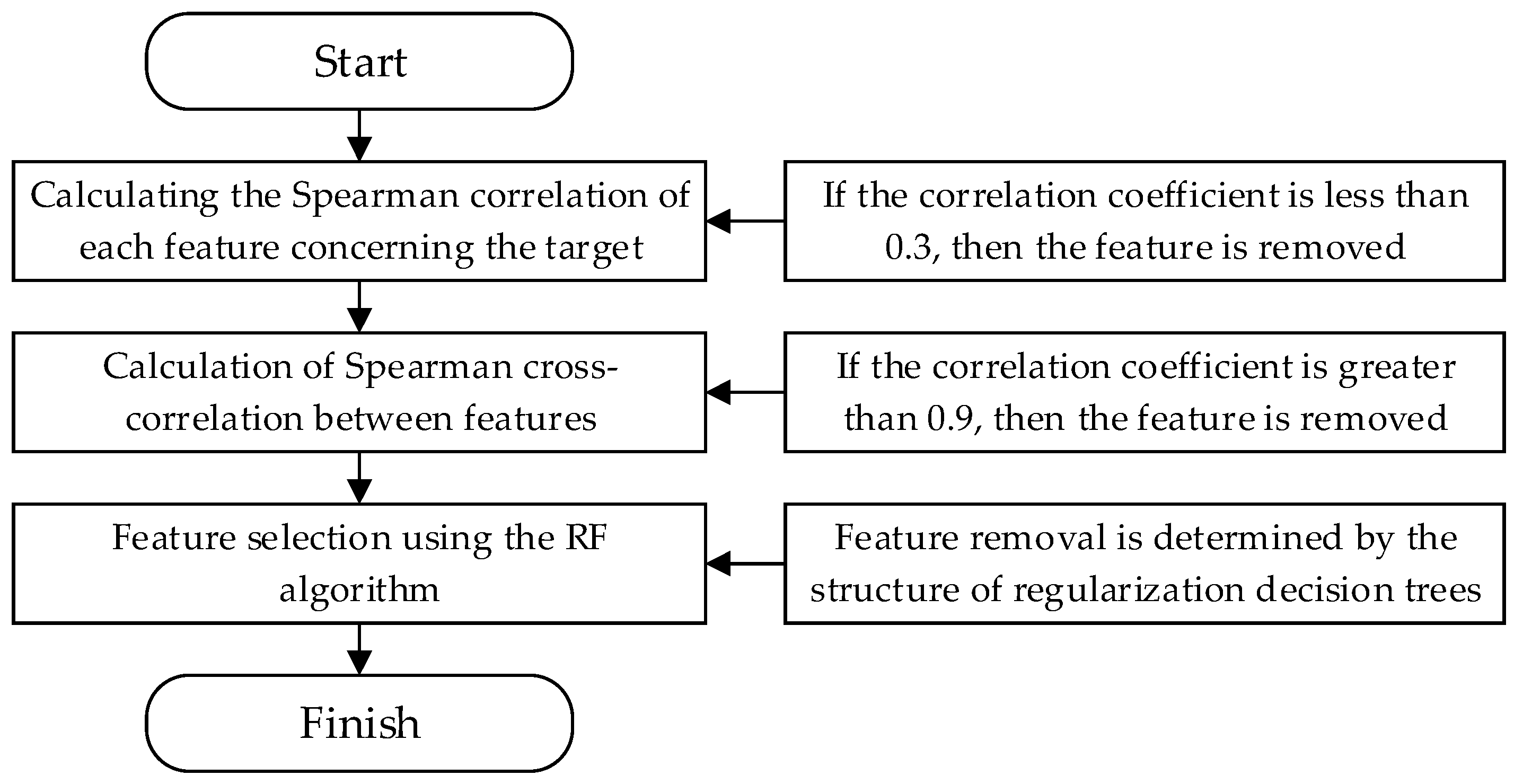

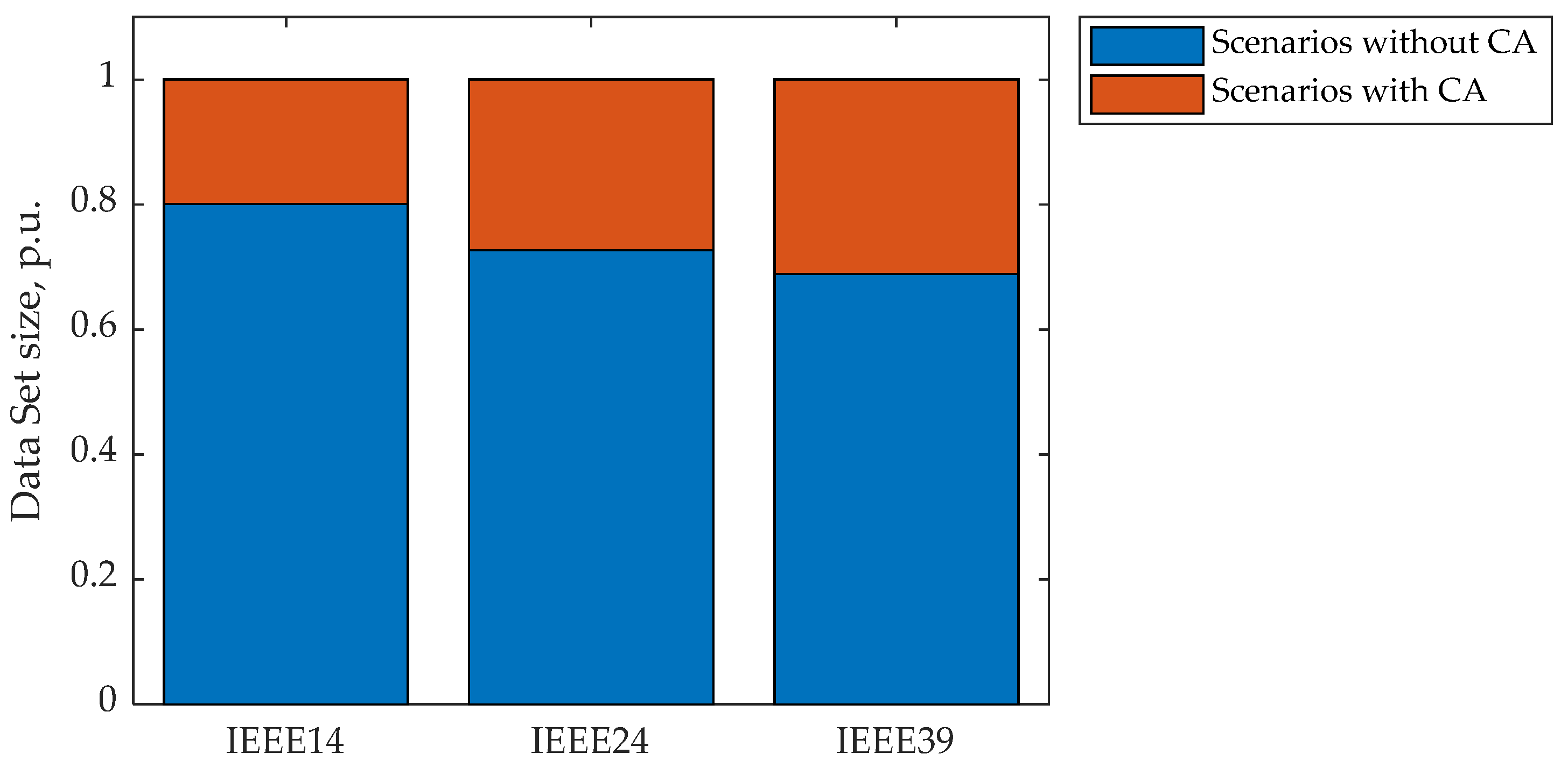

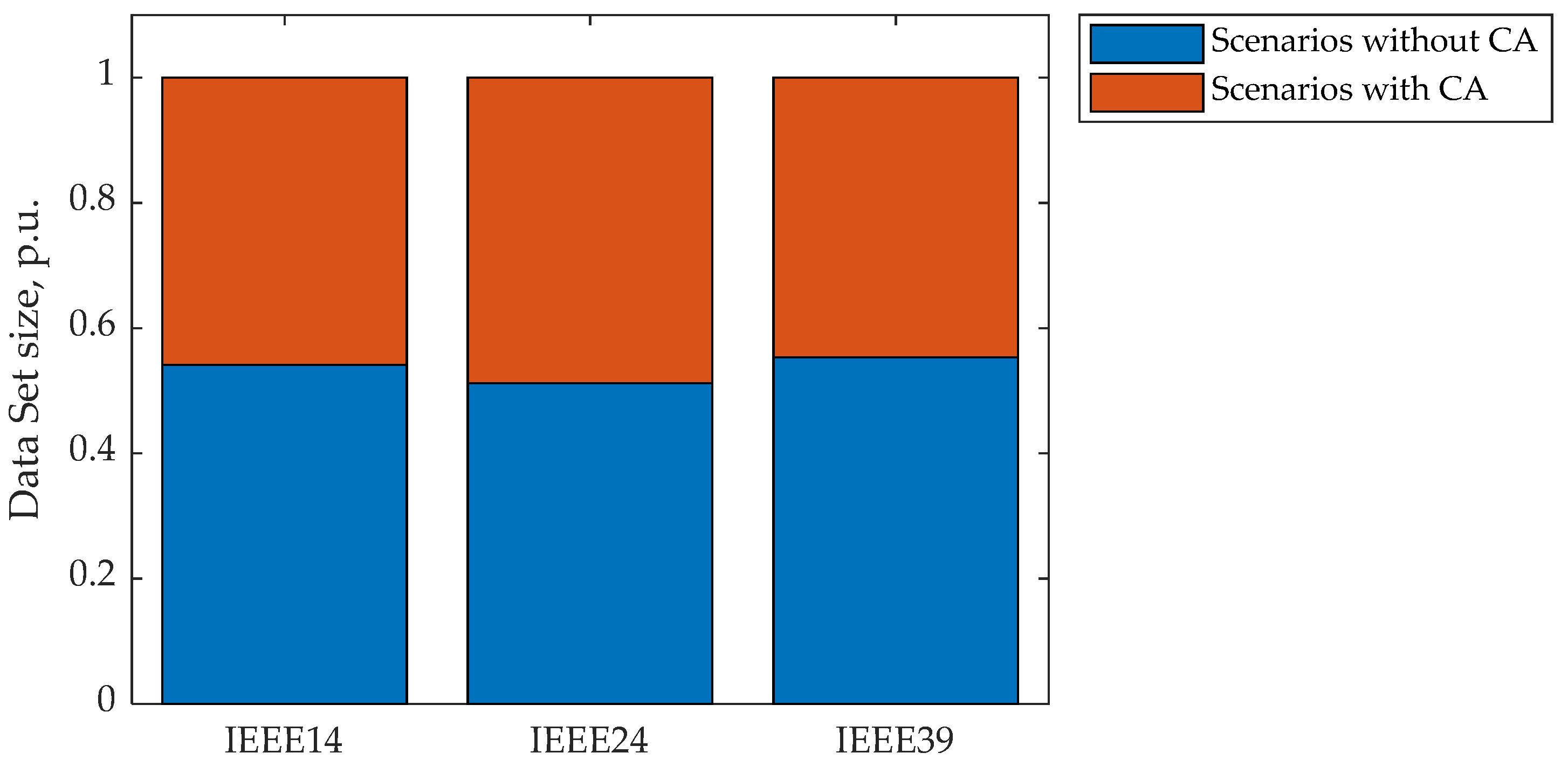

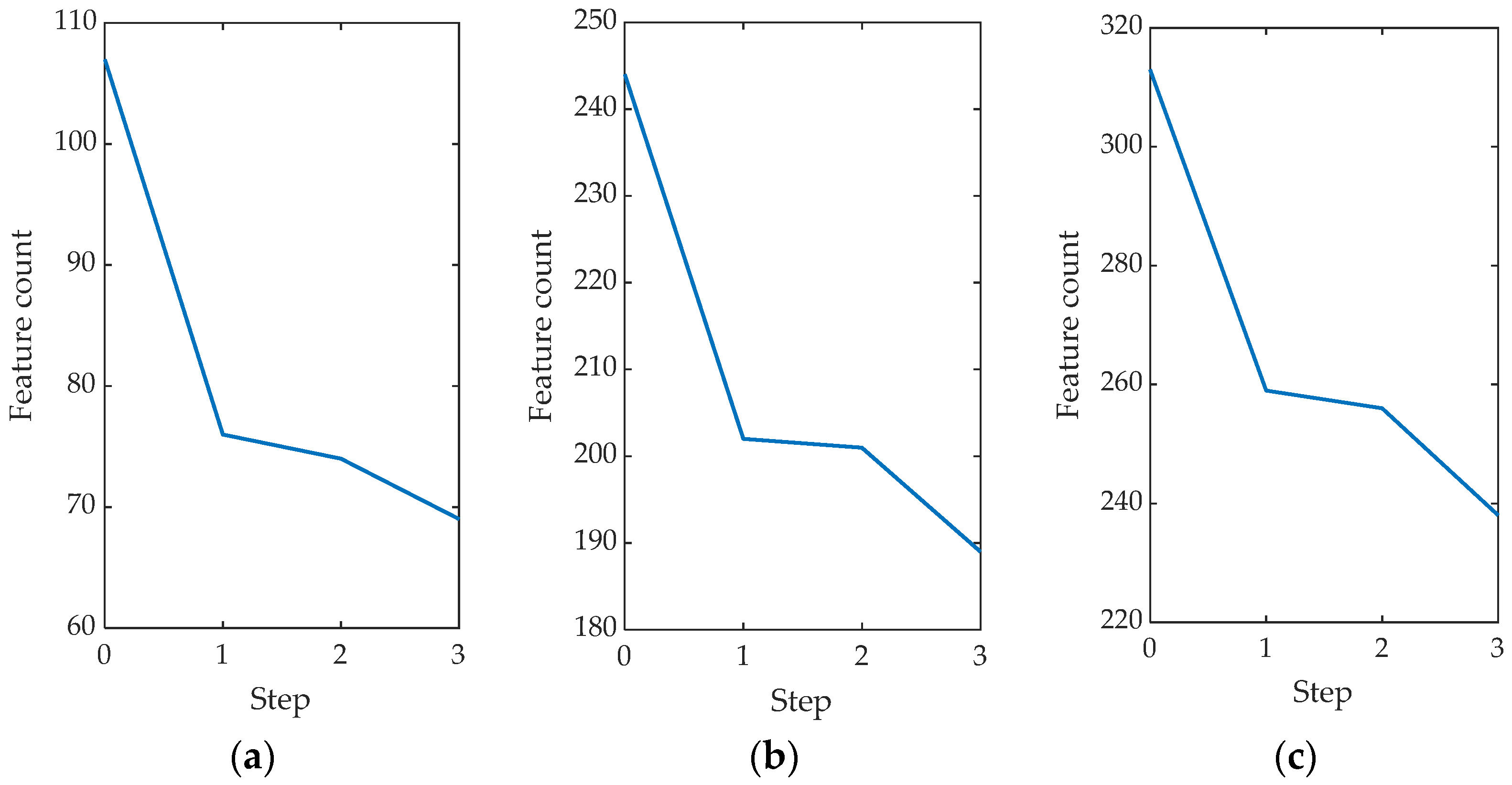

The following DL algorithms were considered: RNNs, LSTMs, RBMs, and SOMs. To form a data sample, the following EPS mathematical models were used: IEEE14, IEEE24, and IEEE39. To process the data sample, a three-step algorithm was used, which consists of a sequential analysis of the Spearman correlation coefficient between each feature and the response, the Spearman cross-correlation coefficient between features, and the importance of the features for the RF algorithm. For the IEEE14 model, the initial number of features in the data set was 107; for the IEEE24 model, it was 244; and for the IEEE39 model, it was 313. After applying the feature selection algorithm shown in Figure 2, the number of features was reduced to 69 for IEEE14, to 189 for IEEE24, and to 238 for IEEE39.
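The first two steps of the feature selection described above can be sketched as follows. This is an illustration under stated assumptions, not the algorithm of Figure 2: the thresholds `min_target` and `max_cross`, the rule of keeping the first feature of a highly correlated pair, and the feature names in the usage note are hypothetical, and the third step (RF feature importance) is omitted.

```python
def ranks(values):
    """Average ranks (1-based); tied values share the mean of their positions."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    out = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1
        for k in range(i, j + 1):
            out[order[k]] = avg
        i = j + 1
    return out


def pearson(x, y):
    """Pearson correlation; returns 0.0 if either input is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return cov / (sx * sy) if sx and sy else 0.0


def spearman(x, y):
    """Spearman coefficient computed as the Pearson correlation of the ranks."""
    return pearson(ranks(x), ranks(y))


def select_features(features, response, min_target=0.3, max_cross=0.9):
    """features: dict mapping a feature name to its list of values. Returns the names kept."""
    # Step 1: keep features sufficiently correlated with the response.
    kept = [n for n, v in features.items() if abs(spearman(v, response)) >= min_target]
    # Step 2: within a group of highly cross-correlated features, keep only the first.
    final = []
    for n in kept:
        if all(abs(spearman(features[n], features[m])) < max_cross for m in final):
            final.append(n)
    return final
```

For instance, if two hypothetical bus voltages `v_bus1` and `v_bus2` rise and fall together, only the first survives step 2, and a feature unrelated to the response is already dropped in step 1.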

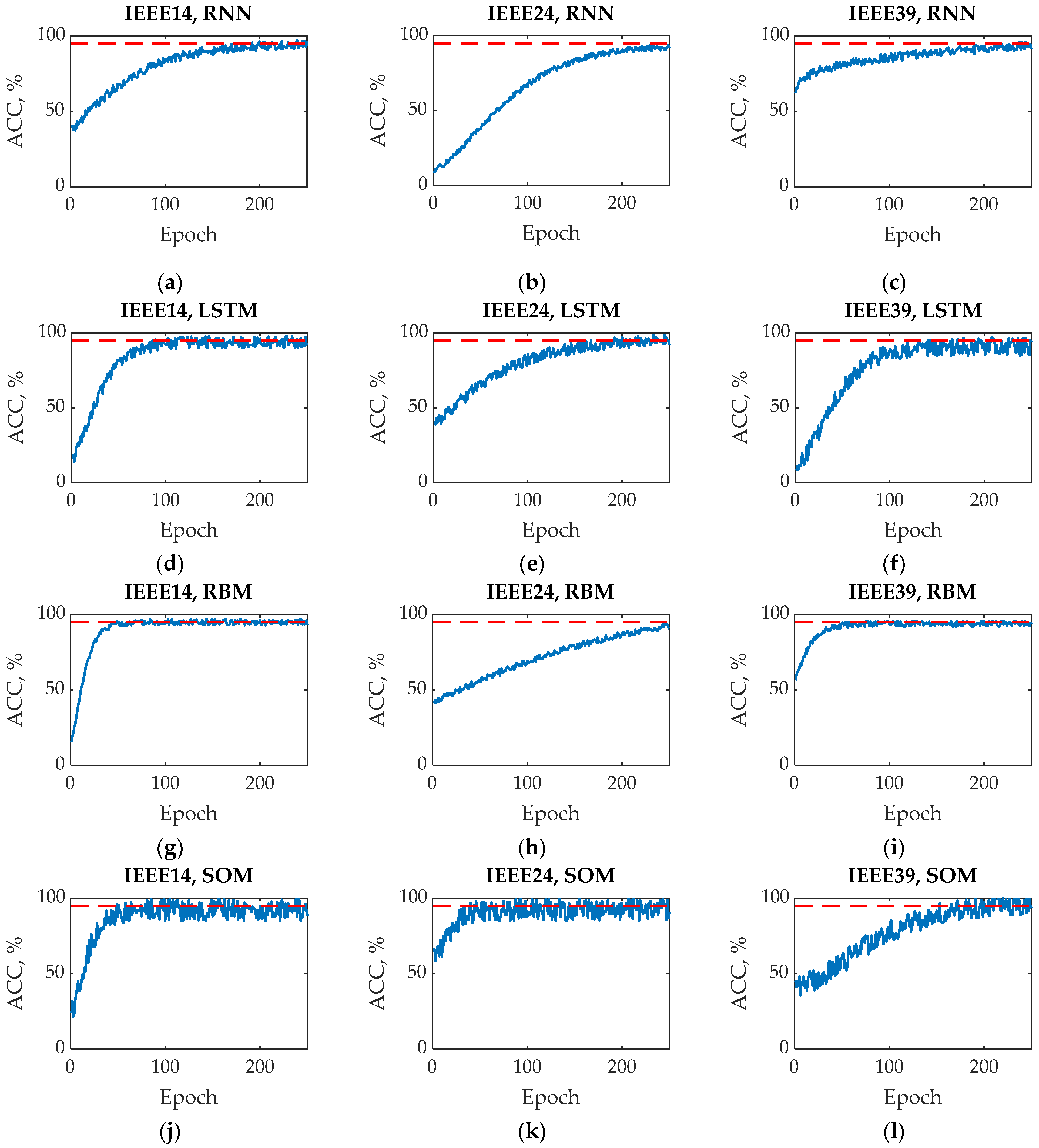

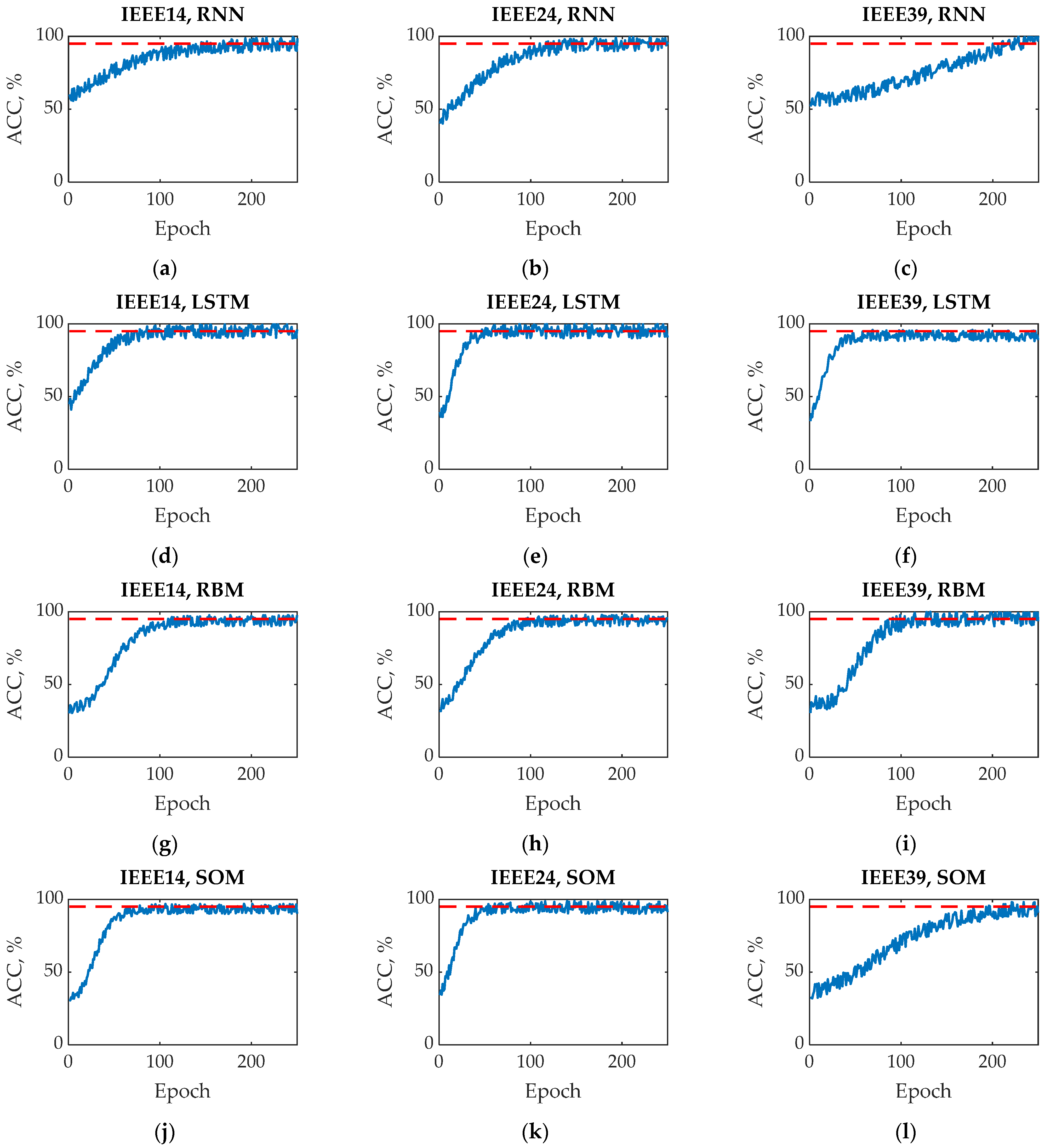

Next, the considered DL algorithms were trained and tested on a processed data sample, even without considering the voltage phase values that can be measured by PMUs. During testing, the following classification accuracy characteristics were analyzed: MDRs, FARs, ACCs, and AUCs.
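Three of the accuracy characteristics named above can be computed from a confusion matrix, as in the sketch below. It assumes the common definitions (MDR as the share of positive cases that were missed, FAR as the share of negative cases flagged as positive, ACC as the share of correct predictions) and a binary labelling; the definitions used in this study may differ, and AUC is omitted because it requires classifier scores rather than labels.

```python
def classification_metrics(y_true, y_pred, positive=1):
    """Return MDR, FAR and ACC for a binary labelling (standard definitions assumed)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(t == positive and p == positive for t, p in pairs)
    fn = sum(t == positive and p != positive for t, p in pairs)
    fp = sum(t != positive and p == positive for t, p in pairs)
    tn = sum(t != positive and p != positive for t, p in pairs)
    return {
        "MDR": fn / (tp + fn) if tp + fn else 0.0,  # missed detections among positives
        "FAR": fp / (fp + tn) if fp + tn else 0.0,  # false alarms among negatives
        "ACC": (tp + tn) / len(pairs),
    }
```

For a multi-class CA-selection task, one option is to evaluate each class against the rest with this function and average the results.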

Table 21 shows the average values for the IEEE14, IEEE24, and IEEE39 models of the ACC ratio of the RNN, LSTM, RBM, and SOM algorithms.

As a result of testing, it was found that the maximum accuracy corresponds to the LSTM algorithm. The ACC value for the LSTM algorithm is shown in bold in Table 21.

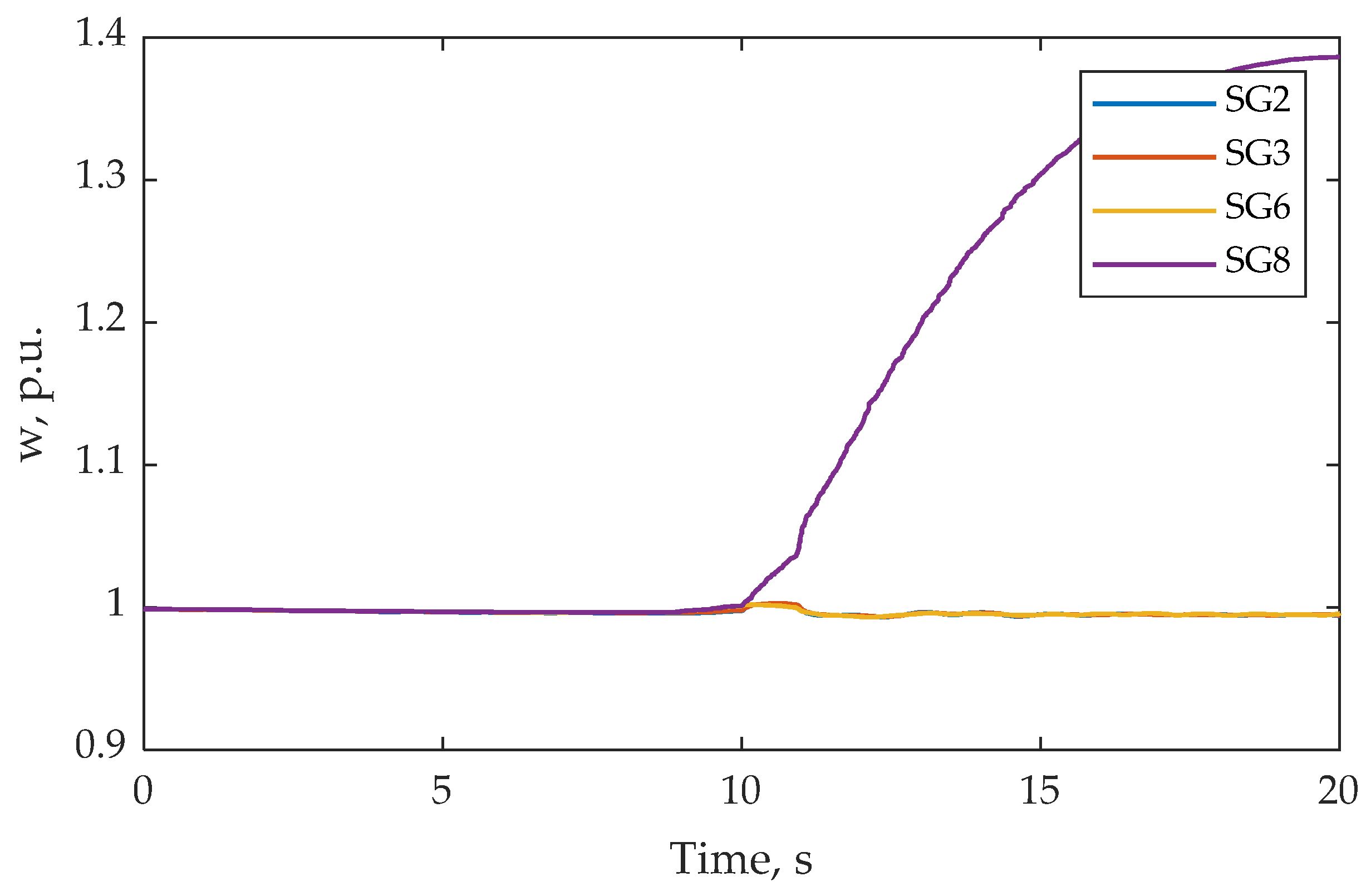

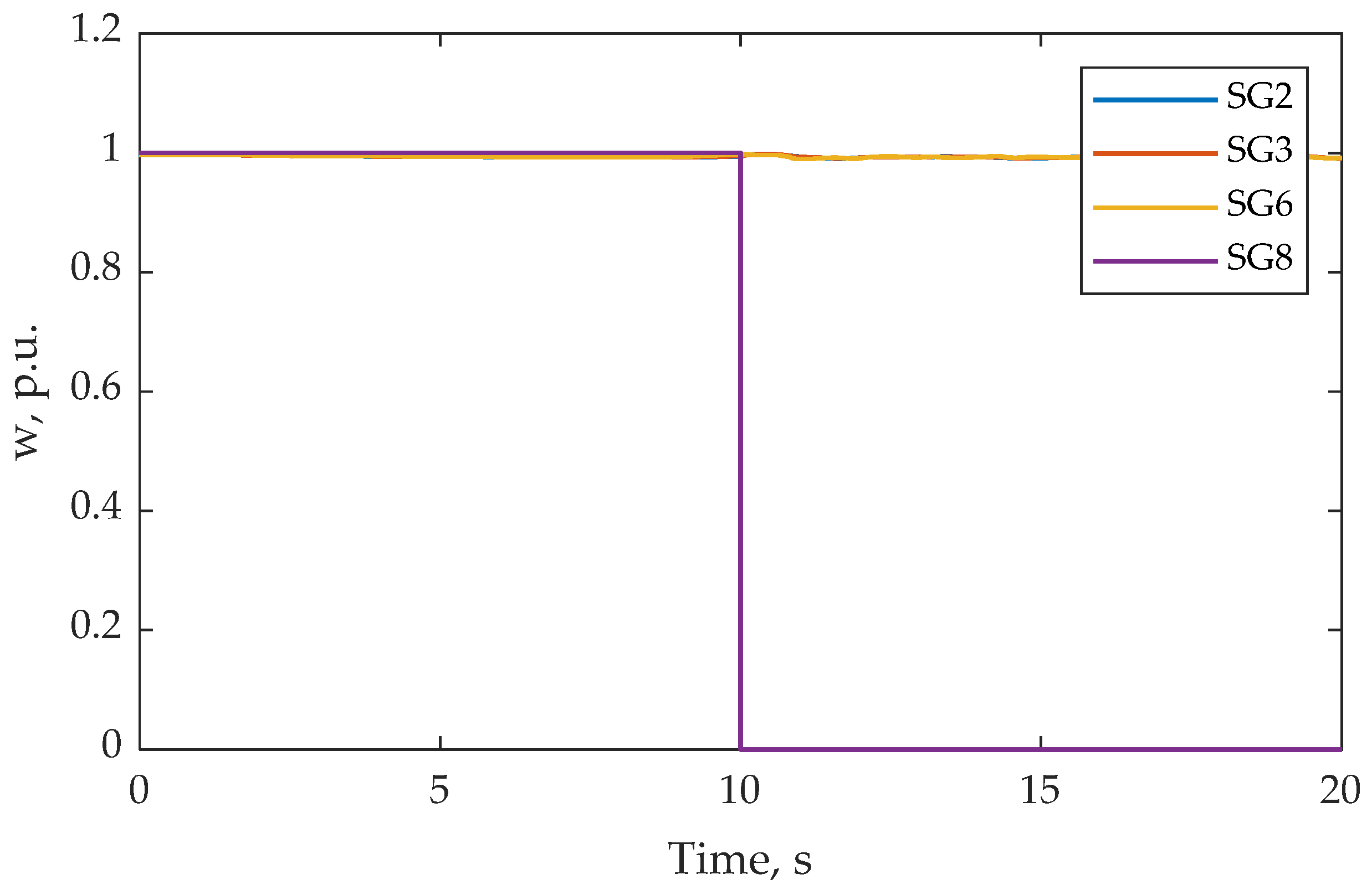

To test the possibility of selecting a CA for an accident that is missing in the data sample, for the IEEE14 model, an accident consisting of a short circuit in node 9 was considered. As a result of modeling this accident, SG8 and SG3 lose stability and operate in asynchronous mode. To provide TS, the CA was chosen in the form of SG8 shutdown. The correctness of this CA is confirmed by the results of the calculation of the transient process.

Further research will be aimed at developing an EC methodology for isolated EPSs that contain a significant proportion of RESs and energy storage devices. For such EPSs, CAs that provide the required AC voltage and frequency levels will be considered. There are other important directions for future research as well: more detailed testing of the ability of DL algorithms to select optimal CAs for accidents that are not in the training set; the determination of acceptable time delays of DL algorithms that still allow the optimal CA to be selected while the transient process develops; the determination of the minimum size of the data set; and the analysis of the influence of PMU data synchronization errors on the accuracy of CA selection.