Abstract

Parallel natural language processing systems were previously successfully tested on the tasks of part-of-speech tagging and authorship attribution through mini-language modeling, for which they achieved significantly better results than independent methods in the cases of seven European languages. The aim of this paper is to present the advantages of using composite language models in the processing and evaluation of texts written in arbitrary highly inflective and morphology-rich natural language, particularly Serbian. A perplexity-based dataset, the main asset for the methodology assessment, was created using a series of generative pre-trained transformers trained on different representations of the Serbian language corpus and a set of sentences classified into three groups (expert translations, corrupted translations, and machine translations). The paper describes a comparative analysis of calculated perplexities in order to measure the classification capability of different models on two binary classification tasks. In the course of the experiment, we tested three standalone language models (baseline) and two composite language models (which are based on perplexities outputted by all three standalone models). The presented results single out a complex stacked classifier using a multitude of features extracted from perplexity vectors as the optimal architecture of composite language models for both tasks.

Keywords:

language modeling; language models; composite structures; machine learning; Serbian language; text classification

MSC:

68T50

1. Introduction

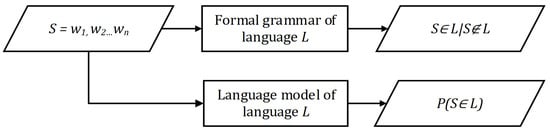

Toward the end of the twentieth century, the accelerated development of artificial intelligence (especially machine learning methods) rekindled the idea that good results could be obtained much faster in many engineering fields, including language modeling. In practice, it was established that one of the biggest disadvantages of formal grammars (the state of the art in language modeling at the time) was the high cost of their creation. The extraction of grammatical rules from a corpus of texts can, of course, be carried out simply by making a list, but this leads to over-fitting the model, where individual rules are taken for general ones and the broader picture is lost. On the other hand, the derivation of general rules from individual ones must be carried out carefully and requires an enormous amount of time. With new technological developments, however, researchers began to investigate the creation of completely new probability-based models, which emulate automata and rule-based grammars. Instead of assigning a Boolean response to input strings, these new systems, called language models, assign probabilities based on a previously observed textual (training) corpus (Figure 1).

Figure 1.

A rough comparison of the functionality of a formal grammar (top) and a language model (bottom) for some language $L$, where $S$ represents a string, and $P(S \in L)$ represents the probability that $S$ belongs to $L$.

Language models are thus defined as systems that assign probabilities to strings (based on the context in which they occur), and the models are based on the previously collected corpus. Input strings refer to sequences of tokens $w_1, w_2, \ldots, w_n$, usually representing n-grams of words or characters.

In the previous couple of decades, language modeling was developed primarily using artificial neural networks (ANNs), following the idea of Elman [1,2], who, while experimenting with time series as input data for machine learning (ML) models, constructed an artificial neural network whose goal was to predict the next element in a sequence. Although the potential of using ANNs for language modeling was recognized early on, the limitations imposed by this approach stalled its development. The large amount of training data necessary for the correct generalization of grammatical rules, as well as sufficient computing resources (especially working memory and processing power), was not available (at least not to the general public) at the time of the methodology’s development. In addition, the problem of the vanishing gradient, a consequence of backpropagation when training multi-layer and recurrent ANNs, was often observed in practice [3], especially on the task of natural language modeling.

Nevertheless, the exponential growth in the PC computing power that followed, as well as the exponential increase in the amount of data available (via the Big Data phenomenon), enabled the theory to finally be technologically supported, triggering a new wave of fresh research, based on the idea of deep learning [4], which is currently the most represented sub-field of machine learning research, and artificial intelligence in general. The use of the long short-term memory method (LSTM) [5] in language modeling solved the problem of the vanishing gradient at first glance, while also providing previously unattainable results.

1.1. State-of-the-Art

Only with the emergence of the Transformer architecture by Google [6], as an adequate alternative to LSTM models, was a new step forward made in the field of natural language modeling. The main difference between transformers and LSTM models is that transformers do not rely on recurrent structures, but have an improved model for attention, a special parameter propagated during learning, which serves to separate relevant from irrelevant information. Today, the most significant and widespread language models are built using this architecture, i.e., an encoder-decoder structure for model training, supported by pre-trained word vectorizations (word embeddings) for preprocessing.

The first outstandingly influential models of this type were BERT (bidirectional encoder representations from transformers) by Google [7] and GPT (generative pre-trained transformer) by OpenAI [8,9]. The former is an encoder-based model used primarily for text annotation and classification, and the latter is a decoder-based model used primarily for language generation (prediction of the next token for some given left context). Today, decoder-based language models are the most prominent in the field, with the OpenAI GPT models (now in the fourth generation) being especially popular for instruction tuning [10]. However, the last OpenAI model published as open source (and also the latest one available for Serbian) is still GPT-2 [11], with the efforts still being focused mainly on the development of encoder-based models both for Serbian [12] and similar Slavic languages [13,14,15,16].

1.2. Text Quality Evaluation and Perplexity

With the beginning of the twenty-first century and the emergence of the Big Data phenomenon, the necessity to separate significant, quality data from unusable or non-quality data became even more apparent. Machine-based classification methods that rely on automatically collected attributes such as user ratings or predefined expressions (e.g., [17]) are widely used today and represent the basis for web-originated data analysis.

Classical assessment methods such as evaluation by users or experts tend to be subjective, but an adequate alternative still does not exist. Evaluating the quality of a stimulus (irrespective of its nature) must be subjective because different people perceive it differently. The evaluation metrics vary depending on the natural language processing (NLP) task, the phase (model building, deployment, or production), the focus (intrinsic or extrinsic, ML or business), etc. [18]. Extrinsic metrics focus on evaluating performance on the final objective of the concrete NLP task, while intrinsic metrics focus on intermediary objectives.

Intrinsic evaluation metrics have the advantage of not relying on specific tasks or reference texts, but rather on the (language) models previously trained on reference texts, which are taken as the gold standard. A typical application of intrinsic metrics is to compare two models and analyze how likely they are to generate the same text. The most common intrinsic metric used in computational linguistics is perplexity, a measure of how much the model is surprised by seeing new input text. Another way to think about perplexity is to treat it as the weighted average branching factor of a language, i.e., the average number of possible next words that can follow any word [19].

Definition 1.

Let $M$ be a language model. Perplexity ($PP$) of a language model $M$ on a string of tokens $W = w_1 w_2 \ldots w_n$ (sentence, text) is defined as the inverse probability that the model will generate $W$, normalized by the number of tokens $n$. Accordingly, perplexity is calculated as follows:

$$PP_M(W) = P_M(W)^{-\frac{1}{n}} = \sqrt[n]{\frac{1}{P_M(W)}} \tag{1}$$

where $P_M(W)$ is the probability that the model $M$ will generate $W$. If $L_M$ represents the language generated by model $M$ and $P$ is a probability function, then

$$P_M(W) = P(W \in L_M) \tag{2}$$

This implies that the higher the value of perplexity, the poorer the fit of the tested input string and the model. If we have text that is taken as a gold standard, we can use perplexity as a measure of the quality of a model, or we can measure the quality of the generated text if we take a model as the gold standard. In both cases, we want the measure of perplexity to be as low as possible. In the worst case, if the model is completely unprepared and the probability for each token is the same, then the perplexity is equal to the size of the lexicon of tokens.
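The properties above can be checked with a minimal sketch of Equation (1). It assumes, as is usual for autoregressive models, that the probability of the whole string is the product of the per-token probabilities; the function name `perplexity` and the toy probability lists are illustrative only and are not taken from the experiment.

```python
import math


def perplexity(token_probs):
    """Perplexity of a string, given the probability the model assigned to each token.

    Assumes P(W) is the product of the per-token probabilities, so that
    P(W)^(-1/n) = exp(-(1/n) * sum(log p_i)). Logarithms avoid underflow.
    """
    n = len(token_probs)
    if n == 0:
        raise ValueError("at least one token probability is required")
    log_prob = sum(math.log(p) for p in token_probs)
    return math.exp(-log_prob / n)


# Worst case from the text: a uniform model over a lexicon of 50 tokens
# (the lexicon size is a made-up value for illustration).
lexicon_size = 50
uniform_model = [1.0 / lexicon_size] * 12

# A model that fits the string better assigns higher token probabilities.
better_model = [0.6, 0.4, 0.7, 0.5]

print(perplexity(uniform_model))  # close to the lexicon size
print(perplexity(better_model))   # much lower
```

In this sketch the uniform model yields a perplexity equal (up to rounding) to the lexicon size, while the better-fitting probabilities yield a lower value, in line with the interpretation given above.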

The aforementioned properties allow for perplexity to be used for automatically distinguishing between the high- and low-quality data [20], with one of the motives being the selection of data used to train new language models [21]. Perplexity can also be used for text classification based on language [22], the detection of harmful content [23], and fact checking [24].

1.3. Research Questions, Aims, Means, and Novelty

Recent developments in NLP (primarily statistically based language models) have brought us numerous new methods and technologies of language modeling [25], with new and arguably better language models appearing every so often. This paper constitutes an expansion of prior scholarly investigations dedicated to processing and evaluating texts written in arbitrary highly inflective and morphology-rich natural language, particularly Serbian. Two prior investigations considering (Serbian) language processing tasks are revisited, specifically, part-of-speech tagging [26] and literature authorship attribution [27], in order to inspect the advantages of using composite language models. In these papers, several feature combination techniques were tested (e.g., voting, weighted voting, bidding), but it was concluded that the trained stacked classifier is the optimal method of feature combination, with the main advantage of distinguishing between quality and noise-inducing features. Additionally, if the complexity of the trained stacked classifiers is kept low, their explicitness is reasonable and the risk of overfitting them is minimal. The specific aim of this research is to further develop the methodology for the creation of composite intelligent systems to aid in solving the task of language modeling, particularly focusing on the tasks of perplexity-based text evaluation and classification [20]. The main motivation of the experiment was to support the distinction between high-quality and low-quality text, particularly that acquired from the web, in order to secure the integrity of automatically constructed corpora.

In order to achieve this goal, a group of standalone transformer-based language models (GPT-2), previously trained on a corpus of texts in Serbian [28], were used to develop several different composite language models. The usefulness of the models will be illustrated by solving two binary classification tasks:

- C1: Detection of low-quality sentences;

- C2: Machine translation detection.

The first classification task was chosen because of its direct alignment with the goal of the research (distinguishing between high-quality and low-quality text), while the second task was chosen as an alternative, more difficult benchmark, especially given the recent advances in the field of machine translation [29]. The ability of the standalone models to classify the sentences will be tested using only the sentence perplexity value outputted by the model. The obtained results will be used as a baseline for the evaluation of the composite models. The first of the two envisioned composite models will use sentence perplexities outputted by each of the three standalone models (M1, M2, and M3, Section 2.1) as classification features. Besides these features, the second composite model will use additional features extracted from standalone models M1, M2, and M3.

This paper will address three research questions:

- RQ1: Are semantic and syntactic models justified tools to use for sentence classification tasks, e.g., low-quality sentence or machine translation detection?

- RQ2: Can composite language models based on outputted perplexities and the wisdom of crowds-based compositions improve on the accuracy of standalone models on classification tasks?

- RQ3: Can features extracted from perplexity vectors be used to further improve the classification accuracy of composite models?

The main contributions of this research are:

- Development of a perplexity-based dataset for testing and validation of composite and standalone language models using existing models and parallel language corpora;

- Development of a detailed model of the composite systems for parallel unification of created models (which can be applied to both future models and other languages);

- Creation of composite Serbian language models that can be used in natural language processing tasks, including document classification and text evaluation;

- Evaluation of created models on two well-known binary classification problems.

The developed composite model architectures are to enable a more precise calculation of fitness between models and texts (i.e., a more precise calculation of perplexity) which could also induce performance improvement for generative language models. Additionally, the knowledge gathered through the inspection of the results should enable researchers to further develop the methodology of composite intelligent systems creation.

Section 2 of this paper will present the creation of the main evaluation dataset and its merits, and Section 3 will describe the process of feature extraction and model compositions. Section 4 will present the evaluation process and the results obtained, which will be followed by the discussion and concluding remarks, together with plans for future research in Section 5.

2. Dataset

The dataset used to evaluate the proposed methodology in this experiment is envisioned as a series of matrices containing perplexity values obtained through standalone language model evaluation. In order to prepare the dataset, several standalone language models (M1, M2, and M3) that output different perplexity values for the same text were needed, as well as several series of sentences (T1, T2, and T3) not previously used for the training or fine-tuning of M1, M2, and M3. The final dataset is obtained by evaluating M1, M2, and M3 using T1, T2, and T3 as the test sets.

The textual dataset T was envisioned as a list of three separate sets:

- T1: High-quality sentences in Serbian, obtained from the expert translation of appraised novels written in other languages;

- T2: List of low-quality sentences, i.e., a list of sentences from the dataset T1 corrupted using several different methods in order to make them semantically or syntactically incorrect;

- T3: List of machine translations of the original literary sentences, as opposed to the expert translations from the dataset (T1).

The final dataset D was generated by recording the perplexity values of prepared language models against the prepared sets of sentences, and it was used to evaluate the methodology on both envisioned classification tasks. The detection of low-quality sentences (C1) is summed up as the classification between datasets T1 and T2, and the detection of machine translations (C2) as the classification between datasets T1 and T3. The complete process of the dataset generation can be summed up in three steps:

- Preparation of pre-trained language models for Serbian that tend to output different perplexity measures for textual input (M1, M2, and M3);

- Preparation of textual data T1, T2, and T3 (based on text not used for the training or fine-tuning of aforementioned language models), which will be used for the creation of evaluation dataset for both classification tasks (C1 and C2);

- Generation of the final dataset, based on perplexity outputs obtained via evaluation of the prepared sentences from the previous step (T1, T2, and T3) using prepared language models from the first step (M1, M2, and M3).

2.1. Language Models

A total of three standalone language models that were previously trained [28] on a collected corpus of Serbian texts and based on a second-generation generative pre-trained transformers architecture (GPT2, 137 million parameters) were used for this research:

- M1: Control model trained using a standard corpus of contemporary Serbian texts (1 billion tokens), and standard training configuration for GPT2-based models;

- M2: Experimental semantic model, trained on a specially prepared corpus representation, i.e., a corpus processed using latent semantic analysis methods [30], namely removal of stop words and lemmatization;

- M3: Experimental syntactic model, trained on a different corpus representation that was processed using morphological dictionaries in such a way that the content words [31] were replaced with their grammatical category.

The two experimental models were supposed to model two complementary aspects of the text in natural language (semantics and syntax) and therefore produce potentially different perplexities when faced with the same piece of input text. It should be noted that when calculating perplexity using these models, input text must be preprocessed using the same transformation that was used for the generation of the training corpus data for the respective model in order to obtain correct readings. All three of these models are available in open access on the Huggingface platform and linked in the Data Availability Statement at the end of the paper. See Appendix A for the implementation details.

2.2. Textual Data

The textual data used to build the evaluation dataset for this research are based on a parallel corpus of literary texts (novels originally written in German and Italian and their expert translations into the Serbian language). Most of the texts were drawn from the parallel Serbian–German corpus, SrpNemKor [32], where only the novels originally written in German were used. The rest of the textual data represent the parallel translation of the third part of the Naples stories series [33,34], prepared as part of the parallel Serbian–Italian corpus within the It-Sr-Ner project (supported by CLARIN ERIC “Bridging Gaps Call 2022”) [35]. A total of seven novel translations were used (Table 1).

Table 1.

A list of novels from which evaluation sentences were extracted.

The first envisioned set of sentences (a set of expert translations, $T_1$) was created by simply extracting sentences from the translations of the novels listed in Table 1. The set contains tokens (about per sentence) and has a type-token ratio of .

The second set (low-quality sentences, $T_2$) was created by taking each sentence from the first set and applying one of the following transformations at random:

- Lemmatization: Each word in the sentence is replaced with its lemma based on Serbian Morphological Dictionaries, to make the sentences prone to morphosyntactic incorrectness. Although it is possible that the lemmatized sentence is equal to the original one (in case all words in the original sentence were already lemmas), a simple equality comparison between them showed that this happens less than 0.8% of the time;

- Random mixing of word order within a sentence: A sentence was transformed into a list of words and punctuation marks, which was then randomly shuffled and put back together into text. This was also conducted to make the sentences prone to syntactical incorrectness, especially regarding the position of prepositions and adjectives. As in the previous case, this does not necessarily mean that the sentences are incorrect, but a manual evaluation of a set of 400 sentences found that this happens in less than 0.6% of the cases;

- Random replacement of words in the sentence: Each word in the sentence is replaced by another, random word of the same grammatical category from the Serbian Morphological Dictionaries, in order to make it prone to semantic incorrectness.

The application of these transformations does not affect sentence lengths, but the type-token ratio is decreased to (due to the lemmatization of one part of the sentences).
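The three transformations can be sketched as follows. This is only an illustration under stated assumptions: the toy dictionaries `LEMMA_OF` and `CATEGORY_OF` (with English placeholder words) are hypothetical stand-ins for the Serbian Morphological Dictionaries, the regular-expression tokenizer is an assumption, and joining tokens with single spaces is a simplification of "put back together into text".

```python
import random
import re

# Hypothetical toy resources standing in for the morphological dictionaries.
LEMMA_OF = {"cats": "cat", "sat": "sit", "mats": "mat"}
CATEGORY_OF = {"cats": "NOUN", "mats": "NOUN", "dogs": "NOUN",
               "sat": "VERB", "ran": "VERB"}


def tokenize(sentence):
    """Split a sentence into words and punctuation marks (assumed tokenizer)."""
    return re.findall(r"\w+|[^\w\s]", sentence)


def lemmatize(tokens, rng):
    """Replace each word with its lemma; unknown tokens are left unchanged."""
    return [LEMMA_OF.get(token, token) for token in tokens]


def shuffle(tokens, rng):
    """Randomly mix the order of words and punctuation marks."""
    shuffled = list(tokens)
    rng.shuffle(shuffled)
    return shuffled


def replace_words(tokens, rng):
    """Replace each known word with another random word of the same category."""
    result = []
    for token in tokens:
        category = CATEGORY_OF.get(token)
        candidates = [word for word, cat in CATEGORY_OF.items()
                      if cat == category and word != token]
        result.append(rng.choice(candidates) if candidates else token)
    return result


TRANSFORMATIONS = (lemmatize, shuffle, replace_words)


def corrupt(sentence, rng):
    """Apply one of the three transformations, chosen at random."""
    transform = rng.choice(TRANSFORMATIONS)
    return " ".join(transform(tokenize(sentence), rng))


rng = random.Random(0)  # fixed seed only to make the illustration repeatable
print(corrupt("cats sat , mats ran .", rng))
```

All three functions return exactly one output token per input token, which matches the observation that the transformations do not affect sentence lengths.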

The third set of sentences (machine translations, $T_3$) was obtained by running the original sentences (in German and Italian) through the Google Translate service and translating them into Serbian. Another simple equality comparison revealed that they differ from expert translations about of the time. These sentences are somewhat shorter (average of tokens per sentence and total), but the type-token ratio of is quite similar to the one of the first set.

The complete textual dataset is a sequence comprising sentences divided into three subsequences of equal size .

2.3. Sentence Perplexities and Perplexity Vectors

Definition 2.

Let $a, b \in \mathbb{Z}$, $a \le b$. Integer interval $[a..b]$ is defined as $[a..b] = \{x \in \mathbb{Z} \mid a \le x \le b\}$.

Definition 3.

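Let $n \in \mathbb{N}$ and $x = (x_1, \ldots, x_n) \in \mathbb{R}^n$, with $x_i \neq 0$ for all $i$. The vector $x^{\circ -1} = \left(\frac{1}{x_1}, \ldots, \frac{1}{x_n}\right)$ is the element-wise inverse (also called Hadamard inverse) of vector $x$.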

Definition 4.

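Perplexity vector ($PPV$) [36] of a language model $M$ on a sentence $W = w_1 w_2 \ldots w_n$ is calculated by applying Equation (1) to each N-gram of tokens within the sentence ($N$ fixed, $N \le n$):

$$PPV_M(W) = \left(PP_M(w_i w_{i+1} \ldots w_{i+N-1})\right)_{i=1}^{n-N+1} \tag{3}$$

Size is used during this experiment. The size of $PPV_M(s)$ for a given sentence $s$ is $n - N + 1$ and therefore varies depending on the number of tokens $n$ in $s$.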
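A sliding-window reading of Definition 4 can be sketched as below. The callable `ngram_probability` is a hypothetical placeholder for a language model that returns the probability of generating a given N-gram; the toy model and the window size `N = 3` are chosen only for illustration and do not reflect the setting used in the experiment.

```python
def perplexity_vector(tokens, ngram_probability, N):
    """Apply the perplexity formula to every N-gram of consecutive tokens.

    `ngram_probability` is an assumed callable mapping a list of N tokens
    to the probability that the model generates it.
    """
    n = len(tokens)
    if not 1 <= N <= n:
        raise ValueError("N must satisfy 1 <= N <= number of tokens")
    vector = []
    for i in range(n - N + 1):
        probability = ngram_probability(tokens[i:i + N])
        vector.append(probability ** (-1.0 / N))  # inverse probability, normalized by N
    return vector


def hadamard_inverse(vector):
    """Element-wise inverse of a vector with non-zero components."""
    return [1.0 / value for value in vector]


def toy_probability(ngram):
    """Hypothetical model: every token has probability 0.1, independently."""
    return 0.1 ** len(ngram)


tokens = ["t1", "t2", "t3", "t4", "t5", "t6"]
ppv = perplexity_vector(tokens, toy_probability, N=3)
print(len(ppv))               # number of 3-grams in a 6-token sentence
print(hadamard_inverse(ppv))  # inverse values, as stored in the dataset
```

The length of the resulting vector depends only on the number of tokens and the window size, which is why the vectors differ in length from sentence to sentence.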

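Let $i, j \in \{1, 2, 3\}$ and $k \in \{1, \ldots, |T_j|\}$. The final dataset $D$ consists of:

- D1: Subset containing three sequences of inverse perplexity triples, one for each dataset $T_j$, i.e.,

$$D1_j = \left(\left(pp_{1,j,k}^{-1},\ pp_{2,j,k}^{-1},\ pp_{3,j,k}^{-1}\right)\right)_{k=1}^{|T_j|} \tag{4}$$

  where $pp_{i,j,k}$ represents the perplexity of the model $M_i$ on the kth sentence in the dataset $T_j$, calculated using (1). See Appendix A for the implementation details.

- D2: Subset comprising three sequences, one for each dataset $T_j$, where every sequence element is a triple containing the Hadamard inverse of perplexity vectors, i.e.,

$$D2_j = \left(\left(ppv_{1,j,k}^{\circ -1},\ ppv_{2,j,k}^{\circ -1},\ ppv_{3,j,k}^{\circ -1}\right)\right)_{k=1}^{|T_j|} \tag{5}$$

  where $ppv_{i,j,k}$ represents the perplexity vector of the model $M_i$ on the kth sentence in the dataset $T_j$, calculated using (3), and the superscript $\circ -1$ denotes the Hadamard (element-wise) inverse.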

Values stored in sets D1 and D2 were used to measure the classification performance of (both standalone and composite) language models on the tasks of detecting low-quality sentences and machine translations (see Section 4).

Definition 5.

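Let $x = (x_1, \ldots, x_n)$ and $y = (y_1, \ldots, y_n)$ be two sequences of length $n$. The Pearson linear correlation coefficient $r$ is defined as

$$r = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sqrt{\sum_{i=1}^{n} (x_i - \bar{x})^2}\ \sqrt{\sum_{i=1}^{n} (y_i - \bar{y})^2}} \tag{6}$$

where $\bar{x}$ represents the mean of $x$ and analogously for $\bar{y}$.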
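For illustration, the coefficient from Definition 5 can be computed directly from two equally long sequences. The sketch below uses only the standard library; the function name `pearson` and the sample sequences are illustrative and are not perplexity values from the dataset.

```python
import math


def pearson(x, y):
    """Pearson linear correlation coefficient of two equally long sequences."""
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("sequences must have the same length, at least 2")
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    covariance = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    spread_x = math.sqrt(sum((a - mean_x) ** 2 for a in x))
    spread_y = math.sqrt(sum((b - mean_y) ** 2 for b in y))
    if spread_x == 0 or spread_y == 0:
        raise ValueError("the coefficient is undefined for a constant sequence")
    return covariance / (spread_x * spread_y)


# Made-up sequences: the first pair is perfectly linearly related,
# the second pair is related with the opposite sign.
print(pearson([1.0, 2.0, 3.0], [2.0, 4.0, 6.0]))
print(pearson([1.0, 2.0, 3.0], [3.0, 2.0, 1.0]))
```

Values close to 0 indicate that two perplexity sequences carry largely independent information, while values close to 1 indicate that they are nearly interchangeable.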

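Let $i, j \in \{1, 2, 3\}$ and let $pp_{i,j}$ be a sequence such that $pp_{i,j} = (pp_{i,j,k})_{k=1}^{|T_j|}$, where $pp_{i,j,k}$ is the perplexity of model $M_i$ on the kth sentence of dataset $T_j$, i.e., a sequence of perplexity values obtained for sentences of dataset $T_j$ using model $M_i$. In order to ensure that the perplexity values differ both between models and between textual datasets, the Pearson coefficients were calculated using (6), as the primary measure of linear correlation, between every two pairs $(M_i, T_j)$ and $(M_{i'}, T_{j'})$, where pairs share either a model ($i = i'$) or a dataset ($j = j'$).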

Table 2 and Table 3 contain the resulting coefficients between pairs, where pairs share the same dataset in Table 2, while pairs in Table 3 share the same model.

Table 2.

Pearson correlation coefficients between sequences of perplexities obtained using two different pairs (model, dataset) with the mutual dataset.

Table 3.

Pearson correlation coefficients between sequences of perplexities obtained using different pairs (model, dataset) with the mutual model.

The results presented in Table 2 confirm the uniqueness of perplexities outputted using the prepared models, with the highest correlation coefficient being 0.265 between the models M1 (control) and M2 (semantic) and all of the other correlation coefficients being less than 0.05. On the other hand, a much higher correlation is apparent in Table 3, averaging about 0.56, indicating that the models have trouble distinguishing between the datasets, especially model M2 between the datasets T1 and T2 (inability to distinguish the control set from the artificially corrupted, low-quality sentences), with a correlation coefficient of over 0.8. In Section 3, we introduce composite models as a way of overcoming this insufficiency.

Once the data were confirmed to be of value, all of the perplexity values in sets D1 and D2 were converted to their inverse value, which concluded the creation of the dataset D according to Equations (4) and (5). This was carried out for the sake of their easier input into the machine learning algorithms afterwards in the experiment.

3. Features and Compositions

As mentioned in Section 1.3, two composite models built on the resulting perplexities were envisioned for this experiment. The first, simpler model (Section 3.1) is based on a stacked classifier architecture which is directly derived from the previous research on the subject [26,27]. The second, more complex model (Section 3.2) was designed specially for this experiment and relies on features extracted using several different scenarios.

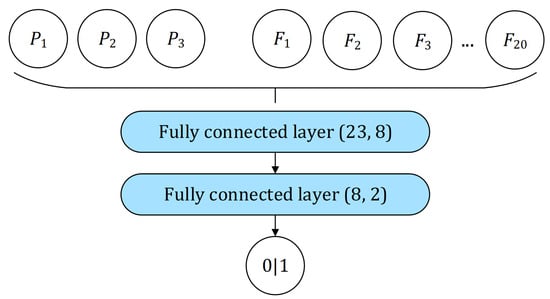

3.1. Simple Neural Network Classifier

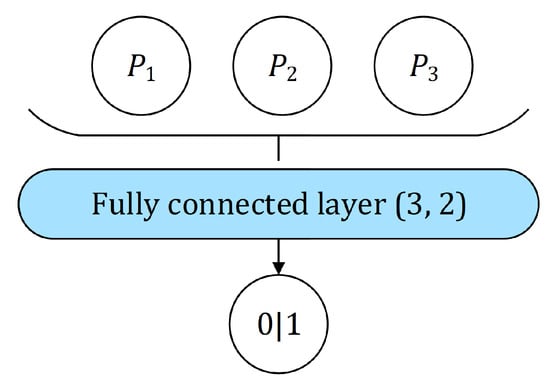

The stacked sentence classifier used in the first composition is based on a simple neural network architecture consisting of one fully connected layer: two perceptrons, one for each class, sharing a triple of input values $p = (p_1, p_2, p_3)$, an element of dataset D1. The triple $p$ corresponds to a sentence being classified and each $p_i$ is an inverse of the perplexity value of model $M_i$, $i \in \{1, 2, 3\}$ (Figure 2).

Figure 2.

A simple neural network for binary sentence classification, consisting of one fully connected layer with input values $p_i$ (perplexities of the models $M_i$ on an input sentence, $i \in \{1, 2, 3\}$).

The output $y$ is the predicted class of the sentence. The value of $y$ can be either 0, meaning expert translation, or 1, meaning an alternative class the network was trained to recognize, depending on the classification task. The calculation of $y$ can be described in the following manner:

$$y = \underset{i \in \{0, 1\}}{\arg\max}\ f\left(\sum_{j=1}^{3} w_{i,j}\, p_j + b_i\right) \tag{7}$$

where:

- $p = (p_1, p_2, p_3)$ is a triple containing the inverses of perplexities corresponding to the input sentence;

- $W = [w_{i,j}]$ is a $2 \times 3$ weight matrix. For a fixed $i$, $w_{i,1}, w_{i,2}, w_{i,3}$ are learnable weights of the ith perceptron in the classifier, $i \in \{0, 1\}$;

- $b = (b_0, b_1)$, whose components are learnable biases of the corresponding perceptrons in the classifier;

- $f$ is an activation function defined as identity, $f(x) = x$, i.e., linear activation is used.

See Appendix A for the implementation details and Section 4 for training details.
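The forward pass of such a classifier can be sketched in a few lines. The sketch assumes that the predicted class is the index of the perceptron with the larger output, which is one natural reading of "one perceptron for each class" rather than a detail confirmed here; the weights, biases, and input triples are made-up values, not trained parameters.

```python
def classify(p, weights, biases):
    """Forward pass of one fully connected layer with two linear perceptrons.

    p       -- triple of inverse perplexities for one sentence
    weights -- 2 x 3 weight matrix, one row per class
    biases  -- two biases, one per class
    Returns the index (0 or 1) of the perceptron with the larger output
    (assumed decision rule).
    """
    scores = [
        sum(w * value for w, value in zip(row, p)) + bias  # identity activation
        for row, bias in zip(weights, biases)
    ]
    return max(range(len(scores)), key=scores.__getitem__)


# Illustrative parameters only.
weights = [[1.0, 0.0, 0.0],
           [0.0, 1.0, 0.0]]
biases = [0.0, 0.0]

print(classify((0.5, 0.2, 0.1), weights, biases))
print(classify((0.2, 0.5, 0.1), weights, biases))
```

With only eight learnable parameters, a model of this size stays easy to inspect, which is in line with the low-complexity argument made for stacked classifiers in Section 1.3.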

The goal of this model was to confirm the advantages of using a stacked classifier on the perplexity outputs of transformer-based language models, as was already confirmed for using it on probabilistic outputs of part-of-speech taggers [26] and cosine similarities of documents before that [27], in order to give an answer to RQ2.

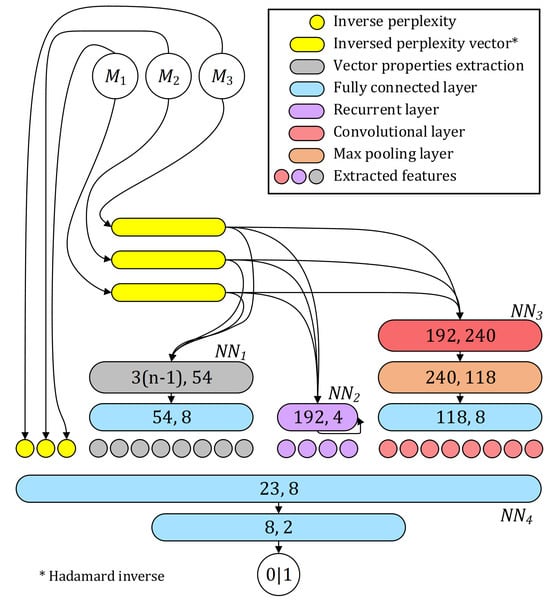

3.2. Complex Multi-Featured Neural Network

In contrast to the first composite model (Section 3.1), the second composite model was designed to maximize the volume of inputted features at the expense of simplicity. The goal of the feature extraction for this experiment was to create a large, determined, and finite list of inputs for a binary classifier; hence, all of the features are represented as numerical values in the range −1 to 1. In addition to the three features used by the first model, a multitude of additional features are extracted from subset D2 (Section 2.3), using three separate neural network components:

- NN1: The time-and-frequency-domain-based component represents a small, single-layer neural network used to extract eight features from a multitude of properties calculated using a set of prepared formulas over each vector from D2 (see Section 3.2.1);

- NN2: The recurrent neural network (RNN) [37] component represents a small neural network with a recurrent layer with four hidden states. Vector triples from dataset D2 are inputted into this layer in order to extract four additional features for each triple (see Section 3.2.2);

- NN3: The convolutional neural network (CNN) [38] component represents a small neural network with a convolutional and a pooling layer instead of a recurrent one, which is used to extract eight more features from each vector triple of dataset D2 (see Section 3.2.3).

For the purpose of training components NN2 and NN3, the vector inputs (extracted from the D2 set) for these two components were resized to the length $\ell$, employing either truncation (if the vector was longer) or zero-padding (if the vector was shorter). This was conducted for the purpose of easier batching of vector inputs during the training procedure for recurrent and convolutional layers. The component NN1 uses the original vectors. All of the mentioned components are connected to one final component:

- NN4: The classifying component represents a neural network with two fully connected layers that takes all of the aforementioned features as input and then outputs the class of the inspected sentence.

The four components are trained together as one binary classification system (for each of two envisioned classification tasks) in order to give a definite answer to RQ3.
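The resizing of vector inputs described above (truncation or zero-padding to a fixed length) can be sketched as follows. The function name `resize` and the target length used in the example are illustrative; the length used in the experiment is not assumed here.

```python
def resize(vector, length):
    """Truncate or zero-pad a vector so that it has exactly `length` elements."""
    if length < 0:
        raise ValueError("length must be non-negative")
    truncated = list(vector[:length])           # drops the tail if too long
    padding = [0.0] * (length - len(truncated))  # empty if already long enough
    return truncated + padding


# Illustrative target length of 4.
print(resize([0.3, 0.1, 0.2, 0.5, 0.4], 4))  # longer vector is truncated
print(resize([0.3, 0.1], 4))                 # shorter vector is zero-padded
```

Bringing all vectors to the same length makes it possible to stack them into rectangular batches, which is what recurrent and convolutional layers typically expect.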

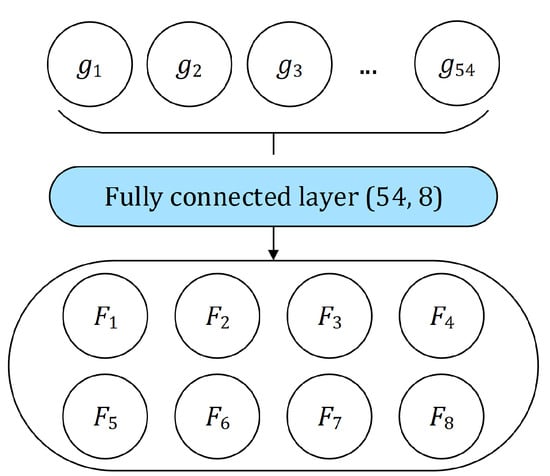

3.2.1. Time-and-Frequency-Domain-Based Component (NN1)

The first component is used to extract features from different time-domain and frequency-domain properties of the vectors from dataset D2, while treating them as either time-series (by using tokens as a unit of time and inspecting the perplexity value at each point) or signals. In the case of time-domain (TD), the twelve properties TD1–TD12 were examined using each vector as an input for twelve different formulas. Some of them are reused to examine six frequency-domain (FD) properties FD1–FD6, but the input is changed to be a power spectrum calculated using a fast Fourier transform of each vector.

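The following time-domain properties were determined for vector $x = (x_1, \ldots, x_n)$:

- TD1: Minimum value found in the inspected vector:

$$x_{\min} = \min_{1 \le i \le n} x_i \tag{8}$$

- TD2: Maximum value found in the inspected vector:

$$x_{\max} = \max_{1 \le i \le n} x_i \tag{9}$$

- TD3: Peak-to-peak, calculated as the difference between the maximum and minimum value:

$$x_{p2p} = x_{\max} - x_{\min} \tag{10}$$

- TD4: The arithmetic mean of the values in the inspected vector:

$$\bar{x} = \frac{1}{n} \sum_{i=1}^{n} x_i \tag{11}$$

- TD5: Root mean square:

$$x_{rms} = \sqrt{\frac{1}{n} \sum_{i=1}^{n} x_i^2} \tag{12}$$

- TD6: Variance, i.e., the spread of data around the mean:

$$\sigma^2 = \frac{1}{n} \sum_{i=1}^{n} (x_i - \bar{x})^2 \tag{13}$$

- TD7: The standard deviation of the inspected vector:

$$\sigma = \sqrt{\sigma^2} \tag{14}$$

- TD8: Crest factor, i.e., the quotient of the maximum value and the root mean square:

$$x_{crest} = \frac{x_{\max}}{x_{rms}} \tag{15}$$

- TD9: Form factor, i.e., the quotient of the root mean square and mean:

$$x_{form} = \frac{x_{rms}}{\bar{x}} \tag{16}$$

- TD10: Pulse indicator, i.e., the quotient of the maximum value and the mean of the vector:

$$x_{pulse} = \frac{x_{\max}}{\bar{x}} \tag{17}$$

- TD11: Vector (Pearson) kurtosis, i.e., the measure of the outlier presence in the inspected vector:

$$x_{kurt} = E\left[\left(\frac{x - \mu}{\sigma}\right)^4\right] \tag{18}$$

  where $\mu$ is the mean of the vector $x$, $\sigma$ its standard deviation, and $E$ is the expectation operator;

- TD12: Vector skewness, i.e., the measure of the data symmetry around the mean:

$$x_{skew} = E\left[\left(\frac{x - \mu}{\sigma}\right)^3\right] \tag{19}$$

  where $\mu$ is the mean of the vector $x$, $\sigma$ its standard deviation, and $E$ is the expectation operator.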
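The twelve time-domain properties can be computed together, as in the sketch below. Two choices here are assumptions rather than details taken from the experiment: the variance divides by the number of elements (population form), and kurtosis and skewness are set to 0.0 for a constant vector to avoid division by zero. The input is assumed to be non-empty with a non-zero mean, as is the case for inverse perplexities.

```python
import math


def time_domain_properties(x):
    """Compute properties TD1-TD12 for a non-empty vector with non-zero mean."""
    n = len(x)
    minimum, maximum = min(x), max(x)
    mean = sum(x) / n
    rms = math.sqrt(sum(v * v for v in x) / n)
    variance = sum((v - mean) ** 2 for v in x) / n  # population form (assumed)
    std = math.sqrt(variance)
    if std > 0:
        # Expectation taken as the average over the vector elements.
        kurtosis = sum(((v - mean) / std) ** 4 for v in x) / n
        skewness = sum(((v - mean) / std) ** 3 for v in x) / n
    else:
        kurtosis = skewness = 0.0  # choice made for this sketch only
    return {
        "min": minimum,
        "max": maximum,
        "peak_to_peak": maximum - minimum,
        "mean": mean,
        "rms": rms,
        "variance": variance,
        "std": std,
        "crest": maximum / rms,
        "form": rms / mean,
        "pulse": maximum / mean,
        "kurtosis": kurtosis,
        "skewness": skewness,
    }


# Illustrative vector, not an actual perplexity vector.
for name, value in time_domain_properties([1.0, 2.0, 3.0, 4.0]).items():
    print(name, value)
```

For the symmetric example vector the skewness is zero, while the crest, form, and pulse factors describe how strongly the largest value stands out from the typical level of the vector.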

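As the second set of properties is based in the frequency domain, each vector $x = (x_1, \ldots, x_n)$ was first subjected to the fast discrete Fourier transform, calculating a new vector $X = (X_0, \ldots, X_{n-1})$:

$$X_k = \sum_{m=0}^{n-1} x_{m+1}\, e^{-\frac{2 \pi i}{n} k m}, \quad k = 0, \ldots, n-1 \tag{20}$$

where $n$ is the length of the vector $x$ that is being transformed and $i$ is the imaginary unit, $i^2 = -1$.

Afterwards, the power spectrum vector is calculated:

$$P_k = |X_k|^2, \quad k = 0, \ldots, n-1 \tag{21}$$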

With the calculated power spectrum of the vector, the following frequency-domain properties were extracted:

- FD1: Power spectrum maximum, calculated using Equation (9), where $x$ is the power spectrum of the inspected vector;

- FD2: Power spectrum peak, calculated as the absolute maximum value found in the power spectrum:

  $$x_{peak} = \max_{1 \le i \le n} |x_i| \tag{22}$$

  where $x$ is the power spectrum of the inspected vector;

- FD3: Power spectrum mean, calculated as an arithmetic mean of the values in the power spectrum using Equation (11), where $n$ is the length of the power spectrum $x$;

- FD4: Power spectrum variance, calculated using Equation (13), where $n$ is the length of the power spectrum $x$ and $\bar{x}$ is its sample mean;

- FD5: Power spectrum kurtosis, calculated using Equation (18), where $\mu$ is the mean of the power spectrum vector $x$, $\sigma$ its standard deviation, and $E$ is the expectation operator;

- FD6: Power spectrum skewness, calculated using Equation (19), where $\mu$ is the mean of the power spectrum vector $x$, $\sigma$ its standard deviation, and $E$ is the expectation operator.
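The frequency-domain properties can be sketched in the same way. The snippet below is a minimal illustration, not the published code: the helper names are hypothetical, and the power spectrum is assumed to be the unnormalized squared magnitude of the discrete Fourier transform.

```python
import numpy as np


def power_spectrum(x):
    """Squared magnitude of the DFT (assumption: no normalization)."""
    spectrum = np.fft.fft(np.asarray(x, dtype=float))
    return np.abs(spectrum) ** 2


def frequency_domain_properties(x):
    """Return FD1-FD6 of a 1-D vector as a dict (hypothetical helper)."""
    p = power_spectrum(x)
    mean, std = p.mean(), p.std()
    z = (p - mean) / std     # needs a non-constant power spectrum
    return {
        "FD1_max": p.max(),
        "FD2_peak": np.abs(p).max(),
        "FD3_mean": mean,
        "FD4_variance": p.var(),   # assumption: population variance
        "FD5_kurtosis": np.mean(z ** 4),
        "FD6_skewness": np.mean(z ** 3),
    }


spectrum = power_spectrum([1.0, 2.0, 3.0, 4.0])
fd = frequency_domain_properties([1.0, 2.0, 3.0, 4.0])
```

Because a power spectrum is non-negative, FD1 and FD2 coincide under these assumptions.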

These 54 properties (18 for each vector in a D2 dataset triple) are used as the input for a simple fully connected layer that extracts eight final features, as depicted in Figure 3. This reduces the total number of features and retains only their most important aspects. The rectified linear unit function (ReLU) is applied to the output in order to prepare it for passing through the adjacent linear layer of the classifying component (Section 3.2.4). The calculation of the features can thus be described as follows:

$$f = \mathrm{ReLU}(W v + b) \tag{23}$$

where:

- $v \in \mathbb{R}^{54}$ is a series of time-domain and frequency-domain properties extracted from the triple containing the Hadamard inverse of perplexity vectors using TD1–TD12 and FD1–FD6, corresponding to the input sentence;

- $W \in \mathbb{R}^{8 \times 54}$ is a weight matrix; its rows $w_i$ are the learnable weights of the $i$th perceptron of the layer, $i \in \{1, \dots, 8\}$;

- Components of $b \in \mathbb{R}^{8}$ are learnable biases of the corresponding perceptrons of the layer;

- If $z = (z_1, \dots, z_8)$, $\mathrm{ReLU}$ is a rectified linear unit function defined as $\mathrm{ReLU}(z) = (\max(0, z_1), \dots, \max(0, z_8))$.

See Appendix A for the implementation details.

Figure 3. Fully connected layer with an input size of 54 (18 vector properties extracted from each of three input vectors) that is used to extract a total of eight time- and frequency-domain features.

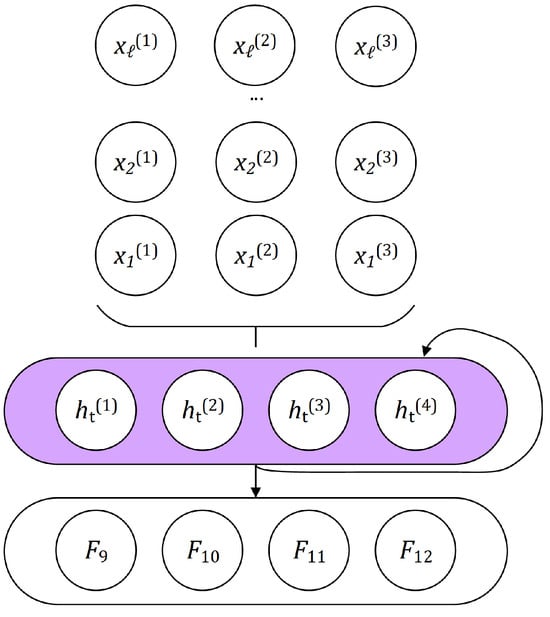
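A layer of this kind could be written in PyTorch roughly as follows. This is a sketch under stated assumptions: the class name is hypothetical, and the random input merely stands in for the 54 extracted properties of each sentence.

```python
import torch
from torch import nn


class PropertyFeatureExtractor(nn.Module):
    """Hypothetical name: 54 vector properties -> 8 features, with ReLU."""

    def __init__(self, n_properties=54, n_features=8):
        super().__init__()
        self.linear = nn.Linear(n_properties, n_features)
        self.relu = nn.ReLU()

    def forward(self, v):
        # v has shape (batch, 54); the result has shape (batch, 8)
        return self.relu(self.linear(v))


torch.manual_seed(0)
extractor = PropertyFeatureExtractor()
batch = torch.randn(4, 54)        # placeholder for real property vectors
features = extractor(batch)
```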

3.2.2. RNN Component

A second set of features was extracted using a recurrent neural network component (Figure 4). One recurrent layer with four hidden states was used to process each D2 dataset triple of vectors, where ℓ is the resized length of the input vectors, introduced at the beginning of Section 3.2. For each time point $t \in \{1, \dots, \ell\}$, a triple of values (one per language model) is processed together with the hidden states from the previous pass-through (if any), with the goal of extracting a number of recurrent features.

Figure 4. Visualization of the neural network component based on a recurrent layer with four hidden states h used to process the input values, each of which corresponds to one time point and one language model.

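The calculation of the hidden state values is performed as follows:

$$h_t = \tanh(W_{ih} x_t + b_{ih} + W_{hh} h_{t-1} + b_{hh}) \tag{24}$$

where:

- $h_t \in \mathbb{R}^{4}$ is the hidden state at time $t$. The initial hidden state at time 0 is $h_0 = 0$;

- $x_t \in \mathbb{R}^{3}$ is the input at time $t$;

- $W_{ih} \in \mathbb{R}^{4 \times 3}$ are the learnable input-hidden weights of the (only) layer (4 hidden states, 3 input values) of the component;

- $b_{ih} \in \mathbb{R}^{4}$ is the learnable input-hidden bias of the (only) layer of the component;

- $W_{hh} \in \mathbb{R}^{4 \times 4}$ are the learnable hidden-hidden weights of the (only) layer of the component;

- $b_{hh} \in \mathbb{R}^{4}$ is the learnable hidden-hidden bias of the (only) layer of the component;

- tanh is the hyperbolic tangent activation function.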

See Appendix A for the implementation details.

The recurrent layer outputs (the four hidden states after the final pass-through) are taken as the four extracted features.

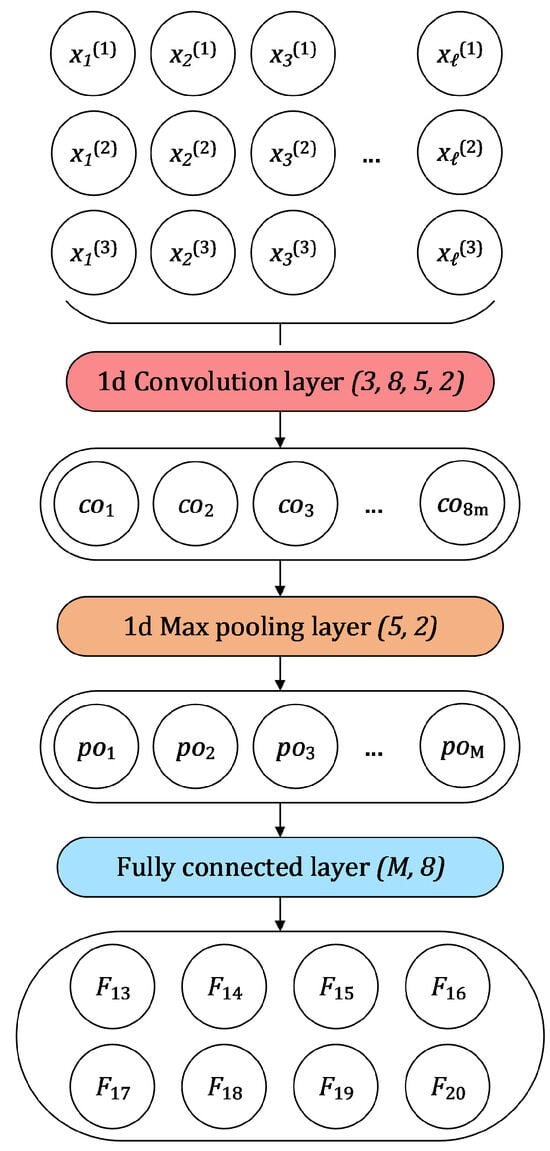
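The recurrence can be illustrated with `torch.nn.RNN`, which Appendix A names as the underlying class. The sketch below is illustrative only: the sequence length of 64 is a hypothetical stand-in for the resized length ℓ, and the input is random. It also unrolls the recurrence by hand to show that the final hidden state follows the tanh update of the layer.

```python
import torch
from torch import nn

torch.manual_seed(0)
seq_len = 64  # hypothetical resized length; the actual value is set in Section 3.2

rnn = nn.RNN(input_size=3, hidden_size=4, num_layers=1,
             nonlinearity="tanh", batch_first=True)

# Batch of 2 sentences, each a sequence of seq_len triples (one value per model).
x = torch.rand(2, seq_len, 3)

with torch.no_grad():
    _, h_n = rnn(x)
    recurrent_features = h_n[-1]   # final hidden state, shape (2, 4)

    # The same recurrence written out step by step, starting from h_0 = 0.
    h = torch.zeros(2, 4)
    for t in range(seq_len):
        h = torch.tanh(x[:, t] @ rnn.weight_ih_l0.T + rnn.bias_ih_l0
                       + h @ rnn.weight_hh_l0.T + rnn.bias_hh_l0)
```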

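3.2.3. CNN Component

Definition 6. For finite discrete functions $f, g : \{0, \dots, N-1\} \to \mathbb{R}$, the (circular) cross-correlation [39] is defined as:

$$(f \star g)[n] = \sum_{m=0}^{N-1} f[m] \, g[(m + n) \bmod N] \tag{25}$$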

The extraction of the final eight features from the triples was somewhat more involved and used a convolutional architecture comprising three layers:

- A one-dimensional convolutional layer with three input channels, eight output channels, a size-five kernel, and a stride of two;

- A one-dimensional max pooling layer [40] with a size-five kernel and a stride of two;

- A fully connected linear layer whose input size corresponds to the number of features extracted by the previous (pooling) layer and whose output size is eight. Just as for the first component (Section 3.2.1), a ReLU activation function was applied in order to prepare the features for passing through the first layer of the classifying component.

While the input is processed by the first layer, the kernel slides simultaneously across the values in all three vectors, extracting eight features for each inspection. The total number of inspections performed, $m$, is calculated as follows:

$$m = \left\lfloor \frac{\ell - K}{S} \right\rfloor + 1 \tag{26}$$

where ℓ is the resized and fixed length of the input sequences, $K$ the size of the kernel ($K = 5$), and $S$ the stride length ($S = 2$). Features outputted for each inspection are calculated in the following manner:

$$c_{i,j} = b_j + \sum_{k=1}^{3} w_{j,k} \star x_{i,k} \tag{27}$$

where:

- $i \in \{1, \dots, m\}$ is the inspection index;

- $j \in \{1, \dots, 8\}$ is the outputted feature index;

- $k \in \{1, 2, 3\}$ is the input channel index;

- Components of $b$ are learnable biases of the corresponding output channels for the convolutional layer;

- $W$ is a weight matrix; $w_{j,k}$ are learnable weights of the $j$th output channel and $k$th input channel;

- $x_{i,k}$ represents the inspected values for the $i$th inspection and for the $k$th input channel, with inspection being defined via the kernel size ($K = 5$) and stride ($S = 2$);

- $\star$ denotes the cross-correlation (Definition 6).

Outputted values are then processed using a max pooling layer, where a second kernel of the same size slides across the values in each channel, performing the inspections and extracting the maximum value for each one. This step results in $M$ new features, where $M$ is calculated as:

$$M = \left( \left\lfloor \frac{m - K}{S} \right\rfloor + 1 \right) \cdot c_{out} \tag{28}$$

where $m$ is the number of inspections of the convolutional layer (26), $K$ the size of the kernel ($K = 5$), $S$ the stride length ($S = 2$), and $c_{out} = 8$ the number of convolutional layer output channels.

Values compiled using the max pooling layer are calculated as follows:

$$p_{i,j} = \max_{q \in \{1, \dots, K\}} c_{(i-1)S + q,\, j} \tag{29}$$

where:

- $i$ is the inspection index;

- $q$ is the index of values within inspections;

- $K$ is the size of the kernel of the max pooling layer;

- $c_{(i-1)S + q,\, j}$ represents the inspected values of the $i$th inspection, i.e., outputs of the convolutional layer, with inspection being defined via the kernel size ($K = 5$), stride ($S = 2$), and the output channel $j$ of the convolutional layer being inspected.

Lastly, the values compiled using the max pooling layer are used as the input for a fully connected linear layer with input size $M$ and output size eight, which produces the final eight features extracted by this method. The feature values are calculated in the following manner:

$$f = \mathrm{ReLU}(W p + b) \tag{30}$$

where:

- $p \in \mathbb{R}^{M}$ is an array of features outputted from the max pooling layer;

- $W \in \mathbb{R}^{8 \times M}$ is a weight matrix; its rows $w_i$ are learnable weights of the $i$th perceptron in the sole linear layer of the component, $i \in \{1, \dots, 8\}$;

- Components of $b \in \mathbb{R}^{8}$ are learnable biases of the corresponding perceptrons of the sole linear layer of the component;

- If $z = (z_1, \dots, z_8)$, $\mathrm{ReLU}$ is a rectified linear unit function defined as $\mathrm{ReLU}(z) = (\max(0, z_1), \dots, \max(0, z_8))$.

See Appendix A for the implementation details.
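The three layers and the size bookkeeping can be sketched in PyTorch as follows. This is a hedged illustration, not the published code: the class and helper names are hypothetical, the sequence length of 64 is an assumed value for ℓ, and ReLU is applied after every layer following the description in Appendix A.

```python
import torch
from torch import nn


def n_inspections(length, kernel, stride):
    """Number of kernel positions without padding: floor((L - K) / S) + 1."""
    return (length - kernel) // stride + 1


class ConvFeatureExtractor(nn.Module):
    """Hypothetical name: Conv1d -> MaxPool1d -> Linear, 8 output features."""

    def __init__(self, seq_len, in_ch=3, out_ch=8, kernel=5, stride=2, n_features=8):
        super().__init__()
        m = n_inspections(seq_len, kernel, stride)    # conv output length
        pooled = n_inspections(m, kernel, stride)     # pooled length per channel
        self.flat_size = out_ch * pooled              # M, input size of the linear layer
        self.conv = nn.Conv1d(in_ch, out_ch, kernel_size=kernel, stride=stride)
        self.pool = nn.MaxPool1d(kernel_size=kernel, stride=stride)
        self.linear = nn.Linear(self.flat_size, n_features)
        self.relu = nn.ReLU()

    def forward(self, x):
        # x has shape (batch, 3, seq_len): one channel per language model
        y = self.relu(self.conv(x))
        y = self.relu(self.pool(y))
        return self.relu(self.linear(y.flatten(start_dim=1)))


torch.manual_seed(0)
seq_len = 64                                  # hypothetical resized length
model = ConvFeatureExtractor(seq_len)
conv_features = model(torch.rand(2, 3, seq_len))
```

With the assumed length of 64, the convolution performs 30 inspections, the pooling layer keeps 13 values per channel, and the linear layer therefore receives 104 inputs.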

The complete neural network component used to extract these features is visualized in Figure 5.

Figure 5. A neural network component featuring a single one-dimensional convolutional layer (with three input channels, a size-five kernel, and a stride of two) used to process the input values, each of which corresponds to one time point and one language model. The outputs of this step are inputted into a single one-dimensional max pooling layer (with a size-five kernel and a stride of two), and the outputs of the max pooling layer are used as inputs for a fully connected layer, which extracts the final features.

3.2.4. Classifying Component

The eight features extracted using the first component (Section 3.2.1), the four features extracted using the second component (Section 3.2.2), and the eight features extracted using the third component (Section 3.2.3), together with the three values used by the first composition (Section 3.1), serve as the input for one final fully connected neural network component for binary classification. This final component consists of one input layer with input size 23 (20 for extracted features and 3 for a triple of inverse perplexity values), connected to the output layer via one hidden layer with eight neurons (Figure 6).

Figure 6. A neural network component consisting of one fully connected linear size-23 input layer (20 for extracted features and 3 for a triple of inverse perplexity values) and one fully connected linear size-8 hidden layer used to perform binary classification based on the inputted features.

As with the first composition (Section 3.1), the output y of the classifying component is the predicted class of the sentence. The value of y can be either 0 (expert translation) or 1 (the alternative class the network was trained to recognize, depending on the classification task). The calculation of y can be described in the following manner:

where:

- p is a concatenation of the triple containing the inverse of perplexities corresponding to the input sentence and the twenty features extracted for that sentence by the three preceding components (Sections 3.2.1–3.2.3);

- is a weight matrix; are learnable weights of the ith perceptron in the input layer, , ;

- is a weight matrix; are learnable weights of the ith perceptron in the hidden layer, , ;

- Components of and are learnable biases of the corresponding perceptrons in the first () and second () fully connected layer;

- If , is a rectified linear unit function defined as .

A complete stacked classifier that uses transformer outputs as inputs is composed of all of the described components and is depicted in Figure 7. See Appendix A for the implementation details and Section 4 for training details.
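The classifying component can be sketched as a small feed-forward network. The sketch is an assumption-laden illustration: the class name is hypothetical, and the output layer is assumed to emit one logit per class with the prediction taken as the arg max, which the text does not specify.

```python
import torch
from torch import nn


class StackedClassifierHead(nn.Module):
    """Hypothetical name: 23 inputs -> 8 hidden neurons -> binary decision."""

    def __init__(self, n_inputs=23, n_hidden=8):
        super().__init__()
        self.hidden = nn.Linear(n_inputs, n_hidden)
        self.out = nn.Linear(n_hidden, 2)   # assumption: one logit per class
        self.relu = nn.ReLU()

    def forward(self, inv_perplexities, features):
        # Concatenate 3 inverse perplexities with 20 extracted features.
        p = torch.cat([inv_perplexities, features], dim=1)
        return self.out(self.relu(self.hidden(p)))


torch.manual_seed(0)
head = StackedClassifierHead()
logits = head(torch.rand(5, 3), torch.rand(5, 20))   # placeholder inputs
y = logits.argmax(dim=1)   # 0 = expert translation, 1 = alternative class
```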

Figure 7. A visualization of the complete architecture of the composite model, where Hadamard inverse perplexity vectors (depicted as yellow stadiums) are generated using the standalone language models (M1–M3) and are used as input for the components of the composite model. All layers are denoted with a number of input and output parameters, and stadiums of different colors: violet for the recurrent, red for the convolutional, orange for the max pooling layer, and blue for fully connected linear ones. The gray stadium represents vector properties extraction (not a trainable layer), where n is a variable sentence length. The colored circles mark the different features used for classification (yellow: inverse perplexities calculated using M1–M3; gray: time-and-frequency-based features; violet: recurrent features; red: convolutional features).

4. Results

For the evaluation, we used five-fold cross-validation over dataset D, for which both subsets were split into five (nearly) equal, class-balanced chunks. For each of the five folds, a different chunk was used for testing, while the other four were used to train ten classifiers, five for each of the two classification tasks. Three simple classifiers were based directly on the standalone models (M1, M2, and M3), while two composite classifiers were trained on top of all three standalone models. Different training procedures were deployed depending on the classifier being trained, where different levels of input data complexity influenced the complexity of the models (Table 4).

Table 4.

Five classifiers used for each classification task, input data they are using (middle) (cf. Section 2), and the description of their architecture (right).

For each training session, the Adam optimizer [41] with a learning rate of and a batch size of 64 was used, and the number of training epochs was limited to 50. In order to measure the improvements achieved using the proposed composite models, the results achieved using the standalone models (M1, M2, and M3) were marked as the baseline. More precisely, the baseline was defined as the best result achieved by any of these , for each classification task and . The experiment was conducted to explore whether the composite models would achieve a statistically significant improvement.

As already mentioned, during the preparation of the five data chunks for each of the two binary classification tests, an equal number of samples for both classes was prepared by stratifying the already balanced data according to the output class. This resulted not only in effective training but also in the accuracy always being equal to the F1 score. For that reason, we will focus on the classification accuracy metric when presenting the results of the cross-validation, or on the relative accuracy increase when depicting the improvements the composite models achieved over the baseline. The results of the evaluation are presented in Section 4.1.
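The class-balanced five-fold split described above can be sketched with the standard library alone. The function name and the toy labels are hypothetical, and the round-robin assignment is just one simple way to obtain stratified chunks, not necessarily the procedure used for dataset D.

```python
import random


def stratified_folds(labels, k=5, seed=0):
    """Split sample indices into k chunks with (nearly) equal class counts."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    by_class = {}
    for idx, label in enumerate(labels):
        by_class.setdefault(label, []).append(idx)
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal the shuffled indices of each class round-robin into the folds.
        for pos, idx in enumerate(indices):
            folds[pos % k].append(idx)
    return folds


labels = [0] * 50 + [1] * 50          # toy balanced dataset
folds = stratified_folds(labels)

splits = []
for test_fold in range(5):
    test_idx = folds[test_fold]
    train_idx = [i for f, fold in enumerate(folds) if f != test_fold for i in fold]
    splits.append((train_idx, test_idx))
```

Each of the five splits trains on four chunks and tests on the remaining one, so the ratio of test to training set size is 1/4.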

4.1. Quantitative Results

The cross-validation accuracy of all five inspected models (three standalone and two composite) on the task of low-quality sentence detection, as well as the highest achieved accuracy and mean accuracy, are presented in Table 5. The accuracy results of the same models on the task of machine translation detection are presented in the same manner in Table 6.

Table 5. Cross-validation accuracy results achieved by three simple (left) and two composite models (right) on the low-quality sentence detection task. The upper part of the table depicts the results for each of the five folds, while the lower part of the table depicts the maximum and mean accuracy. The highest accuracy among standalone models (baseline) and the best overall scores are marked in bold.

Table 6. Cross-validation accuracy results achieved by three simple (left) and two composite models (right) on the machine translation detection task. The upper part of the table depicts the results for each of the five folds, while the lower part of the table depicts the maximum and mean accuracy. The highest accuracy among standalone models (baseline) and the best overall scores are marked in bold.

The average relative accuracy increase (RAI) and average error rate reduction (ERR) compared to the baseline are calculated for both composite models on both classification tasks using the equations:

$$\mathrm{RAI} = \frac{a' - a}{a} \tag{32}$$

and

$$\mathrm{ERR} = \frac{a' - a}{1 - a} \tag{33}$$

where $a$ is the baseline accuracy and $a'$ is the alleged improved accuracy, both expressed as fractions of one.
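As a worked check, the two measures can be computed from the mean accuracies reported for low-quality sentence detection (84.88% for the baseline and 86.63% for the stacked composite model). The function names below are hypothetical; the arithmetic is a sketch of the two ratios, not the authors' evaluation script.

```python
def relative_accuracy_increase(a, a_improved):
    """Improvement relative to the baseline accuracy."""
    return (a_improved - a) / a


def error_rate_reduction(a, a_improved):
    """Improvement relative to the baseline error rate (1 - accuracy)."""
    return (a_improved - a) / (1.0 - a)


rai = relative_accuracy_increase(0.8488, 0.8663)
err = error_rate_reduction(0.8488, 0.8663)
print(f"RAI = {rai:.2%}, ERR = {err:.2%}")  # prints "RAI = 2.06%, ERR = 11.57%"
```

The same functions applied to the machine translation detection accuracies (50.9% and 54.4%) give 6.88% and 7.13%, matching the values discussed in Section 5.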

These results, aiming to give a definite answer to the research questions RQ1–RQ3, are presented in Table 7.

Table 7. Relative accuracy increase (RAI) and error rate reduction (ERR) achieved by each composite model for each classification task, relative to the baseline results (highest achieved accuracy among the standalone models M1, M2, and M3). The highest relative accuracy increase and error rate reduction for each task are marked in bold.

4.2. Qualitative Results

The improvement achieved by the second composite model over the baseline (2.06% relative accuracy increase on low-quality sentence detection and 6.88% on machine translation detection) is probably not due to mere chance, but a simple comparison cannot establish its statistical significance. In order to check the integrity of the results, we used the corrected repeated k-fold cross-validation test [42] to determine the actual statistical significance of the achieved improvements. The t-score was calculated as:

$$t = \frac{\frac{1}{k} \sum_{i=1}^{k} (a'_i - a_i)}{\sqrt{\left( \frac{1}{k} + r \right) \sigma^2}} \tag{34}$$

where $k$ is the number of cross-validation folds ($k = 5$), $a_i$ the baseline accuracy at fold $i$, $a'_i$ the improved accuracy at fold $i$, $r$ the size ratio of test and training sets ($r = 1/4$), and $\sigma^2$ the variance of the difference of $a$ and $a'$ across folds.

For each composite model and for each classification task, we calculate the t-score using Equation (34) and from it the p-value using the cumulative distribution function of Student's t-distribution [43]. These results are presented in Table 8. Here, we observe a high statistical significance of the accuracy increase in three out of four cases, with the p-values being below 0.05, in accordance with the standard confidence level of 0.95. The only outlier is the improvement that the first composite classifier achieved over the baseline for machine translation detection, in which case the null hypothesis (stating that the two classifiers do not differ in accuracy) cannot be rejected.
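The corrected t-score can be sketched with the standard library. The fold accuracies below are made-up placeholders rather than values from Table 5 or Table 6, the function name is hypothetical, and the variance is assumed to be the sample variance (division by k - 1).

```python
import math
import statistics


def corrected_t_score(baseline, improved, test_train_ratio):
    """Corrected k-fold t-score for paired per-fold accuracies."""
    diffs = [after - before for before, after in zip(baseline, improved)]
    k = len(diffs)
    mean_diff = sum(diffs) / k
    var_diff = statistics.variance(diffs)   # assumption: sample variance (k - 1)
    return mean_diff / math.sqrt((1.0 / k + test_train_ratio) * var_diff)


# Hypothetical per-fold accuracies, for illustration only.
baseline_acc = [0.84, 0.85, 0.85, 0.86, 0.84]
improved_acc = [0.86, 0.86, 0.87, 0.88, 0.87]

t_score = corrected_t_score(baseline_acc, improved_acc, test_train_ratio=1 / 4)
```

The correction term adds the test-to-training ratio to 1/k, which widens the denominator to account for the overlap between training sets across folds.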

Table 8. Calculated t-score and p-value, indicating the statistical significance of the accuracy improvements that the composite classifiers achieved over the determined baseline for each classification task.

5. Discussion

In this paper, we experiment with two separate classification tasks: low-quality sentence detection and machine translation detection. On both tasks, we test the improvements achieved using composite language models (built upon perplexity outputs of several language models) over the accuracy of standalone models, which is taken as a baseline.

From the results presented in the previous section, specifically Table 5 (cross-validation results on the low-quality sentence detection task), the following observations are made:

- Q1: The control model is the best standalone model for low-quality sentence detection (average accuracy of 84.88%), and should thus be taken as the baseline for this task;

- Q2: The first composite model outperforms this baseline on each cross-validation fold (with an average accuracy of 85.69%);

- Q3: The second composite model outperforms the first composite model across all cross-validation folds, with an average accuracy of 86.63%.

Additionally, from the results presented in Table 6 (cross-validation on the machine translation detection task), we note the following observations:

- Q4: The syntactic model is the best-performing standalone model for machine translation detection and should thus be taken as the baseline for this task, although with an accuracy of only 50.9%;

- Q5: None of the other standalone models managed to surpass the 50% accuracy score (on any fold), indicating that perplexities outputted by the control and semantic models are not indicators for machine translation detection;

- Q6: The first composite model slightly outperforms the baseline on four out of five cross-validation folds, and also on average (accuracy of 51.71%);

- Q7: The second composite model outperforms the baseline, as well as the first composite model, across all cross-validation folds, with an average accuracy of 54.4%.

Lastly, from the results presented in Table 7 (average relative accuracy increase and error rate reduction per composite model and per task) and Table 8 (statistical significance of achieved accuracy improvements per composite model and per task), the following is observed:

- Q8: The first composite model achieved an average RAI of 0.95% for low-quality sentence detection and 1.59% for machine translation detection. The former is deemed statistically significant for a confidence level of 95%, while the latter is deemed statistically insignificant;

- Q9: The second composite model achieved an average RAI of 2.06% for low-quality sentence detection and 6.88% for machine translation detection, with an error rate reduction of 11.57% and 7.13%, respectively. Both improvements are deemed statistically significant for a confidence level of 95%;

- Q10: The results achieved by all tested models, and especially the control model and the two composite models, are comparable to the state-of-the-art results achieved for low-quality sentence detection for the English language [20].

Based on the collected cues, we conclude that there is indeed a use for semantic and syntactic models in sentence classification. While the positive results achieved using composite classifiers that incorporate these models indicate their importance for the refinement of the classification, the fact that the syntactic model outperformed the control model on the machine translation detection task indicates a positive answer to the research question RQ1:

RQ1: Are semantic and syntactic models justified tools to use for sentence classification tasks, e.g., low-quality sentence or machine translation detection?

The notion that the semantic and syntactic models provide additional information despite being trained on the same text (just in a different representation) is additionally apparent from the results achieved by the first composite model. While there is no definite statistical significance in its improvement over the baseline for the machine translation detection task, it definitely improved over the baseline on the low-quality sentence detection task, confirming a positive answer to the first research question and implying a positive answer to the research question RQ2:

RQ2: Can composite language models based on outputted perplexities and the wisdom of crowds-based compositions improve on the accuracy of standalone models on classification tasks?

Finally, the improvements that the second composite model achieved over both the standalone models (M1, M2, and M3) and the first composite model undoubtedly provide a positive answer to the final research question RQ3:

RQ3: Can features extracted from perplexity vectors be used to further improve the classification accuracy of composite models?

This also furthers the indication of the value of semantic and syntactic models, but most of all, it affirms the value of perplexity vectors [36] in perplexity-based sentence classification.

If we revisit the results for the low-quality sentence detection task, we conclude that the task, while partially solvable using a standard language model with an accuracy of nearly 85%, can be solved significantly better by incorporating other language models and perplexity vectors. No statistically significant improvements over the baseline were found using the first composite model for this task, which is probably caused by the poor performance of the semantic and syntactic models (average accuracy of 55.84% and 61.75% compared to the baseline of 84.88%). Due to this fact, we must attribute the improvements achieved by the second composite model (total error rate reduction of over 11%) to the usage of perplexity vectors, indicating that low-quality sentences are detectable via features contained within them.

As for the task of machine translation detection and the observed results on it, it is apparent that its difficulty is much higher. Two out of three standalone models failed to exceed the 50% accuracy mark, i.e., they performed no better than random selection. The only standalone model that could even slightly differentiate between the expert and machine translations was the syntactic one (but with very low accuracy), which could mean that expert and machine translations differ mostly in the syntax used. However, the first composite model, which uses all three, achieved better results (average accuracy of 51.71%) and the improvements were found to be statistically significant, indicating that the combination of syntax and semantics is a better indicator. Lastly, the results achieved by the second composite model (relative accuracy increase of 6.88% and error rate reduction of 7.13%), despite the somewhat low achieved accuracy of 54.4%, indicate a high improvement through the usage of features extracted from perplexity vectors for this quite difficult task.

In conclusion, composite models are shown to improve on the accuracy of standalone models for classification tasks, with a composite language model based on a stacked classifier architecture that uses properties extracted from perplexity vectors as features being singled out as the best option for detection of both machine translations (low accuracy) and low-quality sentences (high accuracy). It should be noted that the drawback of composite models is higher training complexity and higher execution time. In future work, they should also be compared to bigger standalone models, i.e., whether the composition of a few smaller models is better than a large standalone model in terms of both training and execution speed, as well as in accuracy. If composite models are shown to be feasible, the research should focus on improving their quality through the improvement of the standalone models that they are composed of.

Perplexity vectors are shown to mitigate the main limitation of perplexity-based classification (the lack of dimensionality), but their limitations (aside from slightly higher execution time) are yet to be determined through future research. For example, features analysis should disclose the highest-value features of perplexity vectors, e.g., features extracted using RNN or features extracted from frequency-domain-based properties of perplexity vectors.

An inspection of further usages of both composite language models and perplexity vectors should be performed in order to expand on the idea of this research. Lastly, other methods should be tested for the examined tasks for the Serbian language, and a comparative study should be performed for a better understanding of both previously achieved and future results. Most prominently, BERT or a RoBERTa-based model for Serbian should be fine-tuned for the aforementioned tasks and tested on the prepared dataset.

Author Contributions

Conceptualization, M.Š. and R.S.; Data curation, M.U. and R.S.; Formal analysis, M.Š. and M.U.; Investigation, M.Š.; Methodology, M.Š. and M.U.; Project administration, R.S.; Resources, M.Š., M.U. and R.S.; Software, M.Š.; Supervision, M.U. and R.S.; Validation, M.U.; Visualization, M.Š.; Writing—original draft, M.Š.; Writing—review and editing, M.Š., M.U. and R.S. All authors have read and agreed to the published version of the manuscript.

Funding

The research is in line with the preparation for the TESLA project (Text Embeddings—Serbian Language Applications), Program PRIZMA, the Science Fund of the Republic of Serbia, grant number 7276.

Data Availability Statement

All of the data produced by this experiment as well as the complete code, which can be used to reproduce the results of the study, is publicly available as a repository at https://github.com/procesaur/composite-lang-models (accessed on 29 September 2023). All of the pre-trained models are available on the web: Baseline language model https://huggingface.co/procesaur/gpt2-srlat (accessed on 29 September 2023); Semantic language model https://huggingface.co/procesaur/gpt2-srlat-sem (accessed on 29 September 2023); Syntactic language model https://huggingface.co/procesaur/gpt2-srlat-synt (accessed on 29 September 2023).

Acknowledgments

The authors thank numerous contributors to the Serbian Corpora collection, especially the members of the Language Resources and Technologies Society JeRTeh.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript, or in the decision to publish the results.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| ANN | Artificial neural network |
| BERT | Bidirectional Encoder Representations from Transformers |
| CLARIN | Common Language Resources and Technology Infrastructure |
| CNN | Convolutional neural network |
| ERR | Error rate reduction |
| GPT | Generative Pre-trained Transformer |
| LSTM | Long short-term memory |
| ML | Machine learning |
| NLP | Natural language processing |
| PC | Personal computer |
| RAI | Relative accuracy increase |
| ReLU | Rectified linear unit function |
| RNN | Recurrent neural network |

Appendix A. Implementation

Perplexity. The calculation of sequence (4) is based on the Equation (1) and implemented using the transformers Python library (https://huggingface.co/docs/transformers, accessed on 13 October 2023).

Perplexity vector. The calculation of sequence (5) is based on the Equation (3) and implemented using the transformers Python library (https://huggingface.co/docs/transformers, accessed on 13 October 2023).

GPT2 models. The training of the language models was implemented using the transformers Python library (https://huggingface.co/docs/transformers, accessed on 13 October 2023). The training of all models was based on the GPT2 training configuration (https://huggingface.co/gpt2/raw/main/config.json, accessed on 11 November 2023), and the tokenization of the dataset was performed using the tokenizers Python library (https://huggingface.co/docs/tokenizers, accessed on 11 November 2023).

Fully connected layers. All fully connected layers for this research (used for the first composite model, as well as for the neural network components of the second composite model) are implemented using the PyTorch library and the torch.nn.Linear class (https://pytorch.org/docs/stable/generated/torch.nn.Linear.html#torch.nn.Linear, accessed on 13 October 2023).

Recurrent layer. The recurrent layer used for the RNN component of the second composite model is implemented using the PyTorch library and the torch.nn.RNN class (https://pytorch.org/docs/stable//generated/torch.nn.RNN.html#torch.nn.RNN, accessed on 13 October 2023).

Convolutional layer. The (one-dimensional) convolutional layer employed in the CNN component of the second composite model is implemented using the PyTorch library and the torch.nn.Conv1d class (https://pytorch.org/docs/stable//generated/torch.nn.Conv1d.html#torch.nn.Conv1d, accessed on 13 October 2023).

Max pooling layer. The (one-dimensional) max pooling layer employed in the CNN component of the second composite model is implemented using the PyTorch library and the torch.nn.MaxPool1d class (https://pytorch.org/docs/stable//generated/torch.nn.MaxPool1d.html#torch.nn.MaxPool1d, accessed on 13 October 2023).

Hyperbolic tangent (Tanh) function. Tanh activation is used on the output of the recurrent layer in the RNN component and implemented using the PyTorch library and the torch.nn.Tanh class (https://pytorch.org/docs/stable/generated/torch.nn.Tanh.html, accessed on 13 October 2023).

Rectified linear unit function. After each non-terminal fully connected linear layer, as well as after each convolutional and max pooling layer, a rectified linear unit (ReLU) activation is implemented using the PyTorch library and the torch.nn.ReLU class (https://pytorch.org/docs/stable//generated/torch.nn.ReLU.html#torch.nn.ReLU, accessed on 13 October 2023). The following layers use ReLU activation:

- The sole layer of the first component (Section 3.2.1);

- Each layer of the CNN component (Section 3.2.3);

- The first layer of the classifying component (Section 3.2.4).

References

- Elman, J.L. Finding Structure in Time. CRL Technical Report 9901; Technical Report, Center for Research in Language; University of California: San Diego, CA, USA, 1988. [Google Scholar]

- Elman, J.L. Finding Structure in Time. Cogn. Sci. 1990, 14, 179–211. [Google Scholar] [CrossRef]

- Hochreiter, J.S. Untersuchungen zu Dynamischen Neuronalen Netzen. Master’s Thesis, Institut für Informatik Technische Universität München, München, Germany, 1991. Available online: https://people.idsia.ch/~juergen/SeppHochreiter1991ThesisAdvisorSchmidhuber.pdf (accessed on 12 November 2023).

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep Learning. Nature 2015, 521, 436–444. [Google Scholar] [CrossRef] [PubMed]

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780. [Google Scholar] [CrossRef]

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention Is All You Need. In Proceedings of the 31st Conference on Neural Information Processing Systems (NIPS 2017), Long Beach, CA, USA, 4–9 December 2017. [Google Scholar]

- Kenton, J.D.M.W.C.; Toutanova, L.K. BERT: Pre-Training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL-HLT 2019, Minneapolis, MN, USA, 2–7 June 2019. [Google Scholar]

- Radford, A.; Narasimhan, K.; Salimans, T.; Sutskever, I. Improving Language Understanding by Generative Pre-Training. 2018. Available online: https://cdn.openai.com/research-covers/language-unsupervised/language_understanding_paper.pdf (accessed on 12 November 2023).

- Lee, M. A Mathematical Interpretation of Autoregressive Generative Pre-Trained Transformer and Self-Supervised Learning. Mathematics 2023, 11, 2451. [Google Scholar] [CrossRef]

- Peng, B.; Li, C.; He, P.; Galley, M.; Gao, J. Instruction Tuning with GPT-4. arXiv 2023, arXiv:2304.03277. [Google Scholar]

- Radford, A.; Wu, J.; Child, R.; Luan, D.; Amodei, D.; Sutskever, I. Language Models are Unsupervised Multitask Learners. OpenAI Blog 2019, 1, 9. [Google Scholar]

- Bogdanović, M.; Tošić, J. SRBerta-BERT Transformer Language Model for Serbian Legal Texts. In Proceedings of the Analysis, Approximation, Applications (AAA2023), Vrnjačka Banja, Serbia, 21–24 June 2023. [Google Scholar]

- Ljubešić, N.; Lauc, D. BERTić-The Transformer Language Model for Bosnian, Croatian, Montenegrin and Serbian. In Proceedings of the 8th Workshop on Balto-Slavic Natural Language Processing, Online, 20 April 2021; pp. 37–42. [Google Scholar]

- Dobreva, J.; Pavlov, T.; Mishev, K.; Simjanoska, M.; Tudzarski, S.; Trajanov, D.; Kocarev, L. MACEDONIZER-The Macedonian Transformer Language Model. In Proceedings of the International Conference on ICT Innovations, Skopje, North Macedonia, 29 September–1 October 2022; Springer: Cham, Switzerland, 2022; pp. 51–62. [Google Scholar]

- Šmajdek, U.; Zupanič, M.; Zirkelbach, M.; Jazbinšek, M. Adapting an English Corpus and a Question Answering System for Slovene. Slov. 2.0 Empirične Apl. Interdiscip. Raziskave 2023, 11, 247–274.

- Singh, P.; Maladry, A.; Lefever, E. Too Many Cooks Spoil the Model: Are Bilingual Models for Slovene Better than a Large Multilingual Model? In Proceedings of the 17th Conference of the European Chapter of the Association for Computational Linguistics. Association for Computational Linguistics, Dubrovnik, Croatia, 2–6 May 2023; pp. 32–39.

- Agichtein, E.; Castillo, C.; Donato, D.; Gionis, A.; Mishne, G. Finding High-Quality Content in Social Media. In Proceedings of the 2008 International Conference on Web Search and Data Mining, Palo Alto, CA, USA, 11–12 February 2008; WSDM ’08. Association for Computing Machinery: New York, NY, USA, 2008; pp. 183–194.

- Vajjala, S.; Majumder, B.; Gupta, A.; Surana, H. Practical Natural Language Processing: A Comprehensive Guide to Building Real-World NLP Systems; O’Reilly Media: Newton, MA, USA, 2020.

- Jurafsky, D.; Martin, J.H. Speech and Language Processing, 3rd ed.; Draft; Pearson; Prentice Hall: Hoboken, NJ, USA, 2023.

- Fernández-Pichel, M.; Prada-Corral, M.; Losada, D.E.; Pichel, J.C.; Gamallo, P. An Unsupervised Perplexity-Based Method for Boilerplate Removal. Nat. Lang. Eng. 2023, 1–18.

- Toral, A.; Pecina, P.; Wang, L.; Van Genabith, J. Linguistically-Augmented Perplexity-Based Data Selection for Language Models. Comput. Speech Lang. 2015, 32, 11–26.

- Gamallo, P.; Campos, J.R.P.; Alegria, I. A Perplexity-Based Method for Similar Languages Discrimination. In Proceedings of the Fourth Workshop on NLP for Similar Languages, Varieties and Dialects (VarDial), Valencia, Spain, 3 April 2017; pp. 109–114.

- Jansen, T.; Tong, Y.; Zevallos, V.; Suarez, P.O. Perplexed by Quality: A Perplexity-based Method for Adult and Harmful Content Detection in Multilingual Heterogeneous Web Data. arXiv 2022, arXiv:2212.10440.

- Lee, N.; Bang, Y.; Madotto, A.; Fung, P. Towards Few-Shot Fact-Checking via Perplexity. In Proceedings of the 2021 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Online, 6–11 June 2021; pp. 1971–1981.

- Kalchbrenner, N.; Grefenstette, E.; Blunsom, P. A Convolutional Neural Network for Modelling Sentences. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Baltimore, MD, USA, 22–27 June 2014; pp. 655–665.

- Stanković, R.; Škorić, M.; Šandrih Todorović, B. Parallel Bidirectionally Pretrained Taggers as Feature Generators. Appl. Sci. 2022, 12, 5028.

- Škorić, M.; Stanković, R.; Ikonić Nešić, M.; Byszuk, J.; Eder, M. Parallel Stylometric Document Embeddings with Deep Learning Based Language Models in Literary Authorship Attribution. Mathematics 2022, 10, 838.

- Škorić, M.D. Kompozitne Pseudogramatike Zasnovane na Paralelnim Jezičkim Modelima Srpskog Jezika. Ph.D. Thesis, University of Belgrade, Belgrade, Serbia, 12 November 2023. Available online: https://nardus.mpn.gov.rs/handle/123456789/21587 (accessed on 12 November 2023).

- Costa-jussà, M.R.; Cross, J.; Çelebi, O.; Elbayad, M.; Heafield, K.; Heffernan, K.; Kalbassi, E.; Lam, J.; Licht, D.; Maillard, J.; et al. No Language Left Behind: Scaling Human-Centered Machine Translation. arXiv 2022, arXiv:2207.04672.

- Landauer, T.K.; Dumais, S. Latent Semantic Analysis. Scholarpedia 2008, 3, 4356.

- Grace Winkler, E. Understanding Language; Continuum International: Danbury, CT, USA, 2008; pp. 84–85.

- Andonovski, J.; Šandrih, B.; Kitanović, O. Bilingual Lexical Extraction Based on Word Alignment for Improving Corpus Search. Electron. Libr. 2019, 37, 722–739.

- Perišić, O.; Stanković, R.; Ikonić Nešić, M.; Škorić, M. It-Sr-NER: CLARIN Compatible NER and Geoparsing Web Services for Italian and Serbian Parallel Text. In Proceedings of the Selected Papers from the CLARIN Annual Conference 2022, Prague, Czech Republic, 10–12 October 2022; Linköping University Electronic Press: Linköping, Sweden, 2023; pp. 99–110.

- Perišić, O.; Stanković, R.; Ikonić Nešić, M.; Škorić, M. It-Sr-NER: Web Services for Recognizing and Linking Named Entities in Text and Displaying Them on a Web Map. Infotheca—J. Digit. Humanit. 2023, 23, 61–77.

- Hinrichs, E.; Krauwer, S. The CLARIN Research Infrastructure: Resources and Tools for eHumanities Scholars. In Proceedings of the Ninth International Conference on Language Resources and Evaluation (LREC’14), Reykjavik, Iceland, 26–31 May 2014; Calzolari, N., Choukri, K., Declerck, T., Loftsson, H., Maegaard, B., Mariani, J., Moreno, A., Odijk, J., Piperidis, S., Eds.; European Language Resources Association (ELRA): Reykjavik, Iceland, 2014.

- Škorić, M. Text Vectorization via Transformer-Based Language Models and N-Gram Perplexities. arXiv 2023, arXiv:2307.09255.

- Amari, S.I. Learning Patterns and Pattern Sequences by Self-Organizing Nets of Threshold Elements. IEEE Trans. Comput. 1972, 100, 1197–1206.

- Waibel, A.; Hanazawa, T.; Hinton, G.; Shikano, K.; Lang, K.J. Phoneme Recognition Using Time-Delay Neural Networks. In Backpropagation; Lawrence Erlbaum Associates Inc.: Hillsdale, NJ, USA, 2013; pp. 35–61.

- Rabiner, L.; Gold, B.; Yuen, C. Theory and Application of Digital Signal Processing. IEEE Trans. Syst. Man, Cybern. 1978, 8, 146.

- Yamaguchi, K.; Sakamoto, K.; Akabane, T.; Fujimoto, Y. A Neural Network for Speaker-Independent Isolated Word Recognition. In Proceedings of the ICSLP, Kobe, Japan, 18–22 November 1990.

- Kingma, D.P.; Ba, J. Adam: A Method for Stochastic Optimization. arXiv 2014, arXiv:1412.6980.

- Bouckaert, R.R.; Frank, E. Evaluating the Replicability of Significance Tests for Comparing Learning Algorithms. In Proceedings of the Pacific-Asia Conference on Knowledge Discovery and Data Mining, Sydney, Australia, 26–28 May 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 3–12.

- Student. The Probable Error of a Mean. Biometrika 1908, 6, 1–25.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).