1. Introduction

Metaheuristic optimization algorithms have been used extensively in engineering applications to reduce costs, either for optimal design or for online process control. For optimal design problems, it is not a significant drawback if the optimization algorithm is complex and takes a long time to find the best design parameters. Online process control, however, requires optimization algorithms with a fast response and low computational complexity. For example, energy harvesting from solar and wind resources and optimal power flow in large electrical networks require fast online tracking of power variations, which increases system efficiency. For this reason, particle swarm optimization (PSO) has been used in most engineering applications: it is simple to code and capable of finding a local or global optimum. However, many recently proposed metaheuristic algorithms reach optimum solutions at the cost of high computational complexity and long code, which makes them suitable for optimal design problems but not for online control processes. New metaheuristic algorithms with lower computational complexity are therefore welcome for engineering applications.

Many metaheuristic optimization algorithms in the literature have been inspired by nature, biological behavior, or physical phenomena. Biologically inspired algorithms draw on different behaviors, such as social organization, reproduction, foraging, or survival instinct. In 1975, Holland invented the genetic algorithm (GA), the first metaheuristic algorithm, which uses random searches to produce a new set of offspring [1]. After that, in 1995, Kennedy and Eberhart presented a new, simple algorithm, the PSO algorithm, inspired by the swarming behavior of birds and fish [2]. Then, in 1995, Dorigo and Di Caro proposed the ant colony optimization (ACO) algorithm, which is motivated by the way ants find the shortest path between the colony and a food source [3]. After that, many optimization algorithms emerged, such as the artificial bee colony (ABC) [4], which is inspired by the swarming behavior of honeybees; the firefly algorithm (FA) [5], which is inspired by the blinking light that fireflies use for communicating and attracting prey; and the cuckoo search (CS) algorithm [6], which is inspired by the Lévy walk and the cuckoo's intrusion into the nests of other birds.

Furthermore, many recent metaheuristic algorithms have been inspired by the behavior of living creatures, such as the grey wolf optimizer (GWO), which was inspired by the leadership hierarchy and hunting of grey wolves [7]; the whale optimization algorithm (WOA), which was inspired by whales producing spiral bubbles around a school of fish [8]; the salp swarm algorithm (SSA), which was inspired by the swarming of salps to track food [9]; Harris hawk optimization (HHO), which was inspired by the cooperation of many hawks to attack prey [10]; the mantis search algorithm (MSA), which was inspired by the foraging process of mantises [11]; the nutcracker optimization algorithm (NOA), which was inspired by the seasonal behavior of nutcrackers in finding, storing, and memorizing food [12]; the Aquila optimizer (AO), which was inspired by the hunting style of the Aquila [13]; the black widow optimizer (BWO), which was inspired by the mating and cannibalism of black widow spiders [14]; and the tunicate swarm algorithm (TSA), which was inspired by the swarming behavior of tunicates in tracking food [15]. Likewise, many other algorithms are inspired by the conduct of living creatures, for example, dolphins [16], white sharks [17], vultures [18], orcas [19], starlings [20], rabbits [21], frogs [22], butterflies [23], hyenas [24], reptiles [25], coatis [26], leopards [27], and eagles [28].

Physicists such as Newton and Einstein were drawn to the physical phenomena of the universe and, after long study, formulated mathematical laws and models for them. Many algorithms have therefore been proposed based on such physical models, for example, the annealing process of metals [29]; Newton's law of gravity [30]; Henry's gas law [31]; the heat transfer between materials and their surroundings [32]; the collision of bodies [33]; the attraction and repulsion forces between atoms [34]; the laws of Coulomb and Newton for electrostatic and moving charges [35]; the passage of light between media with different intensities [36]; the transient behavior of first- and second-order electrical circuits [37]; Kepler's laws for the motion and speed of planets [38]; the electrical trees and lightning figures of Lichtenberg [39]; the electromagnetic field [40]; circle geometry [41]; and the electrical field [42]. Moreover, many metaheuristic algorithms were inspired by the effect of gravity on the motion of planets and stars [43]; the relation between centrifugal forces and gravity [44]; ion motion [45]; and the orbits of electrons around the nucleus inside atoms [46].

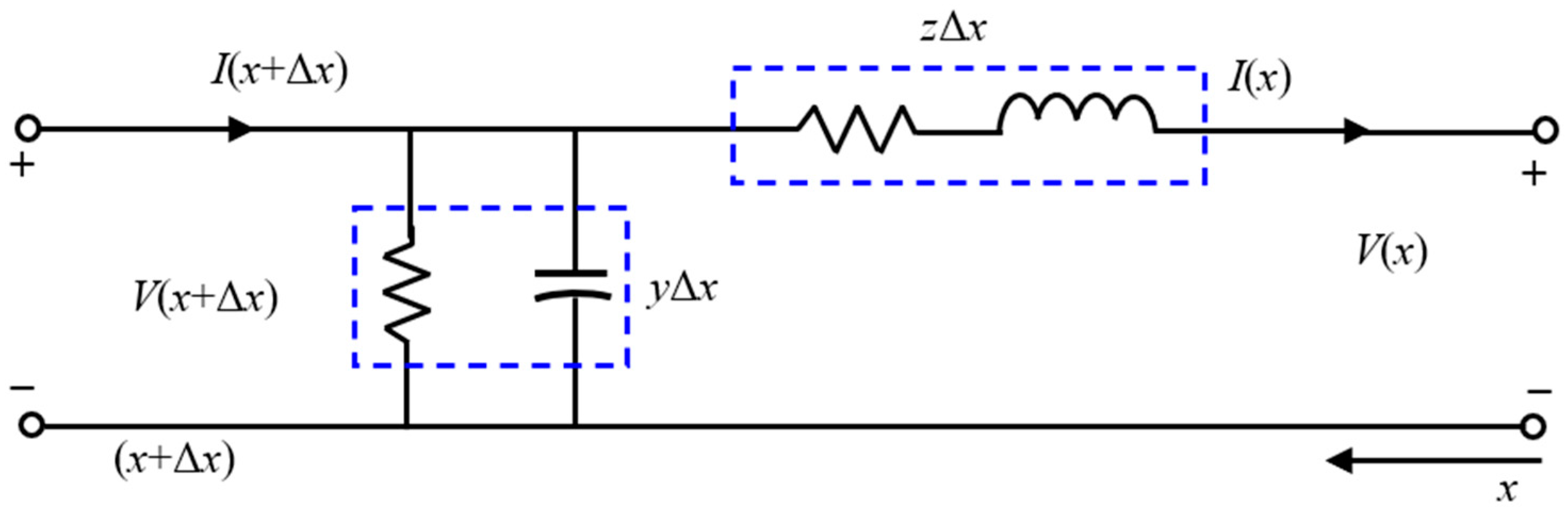

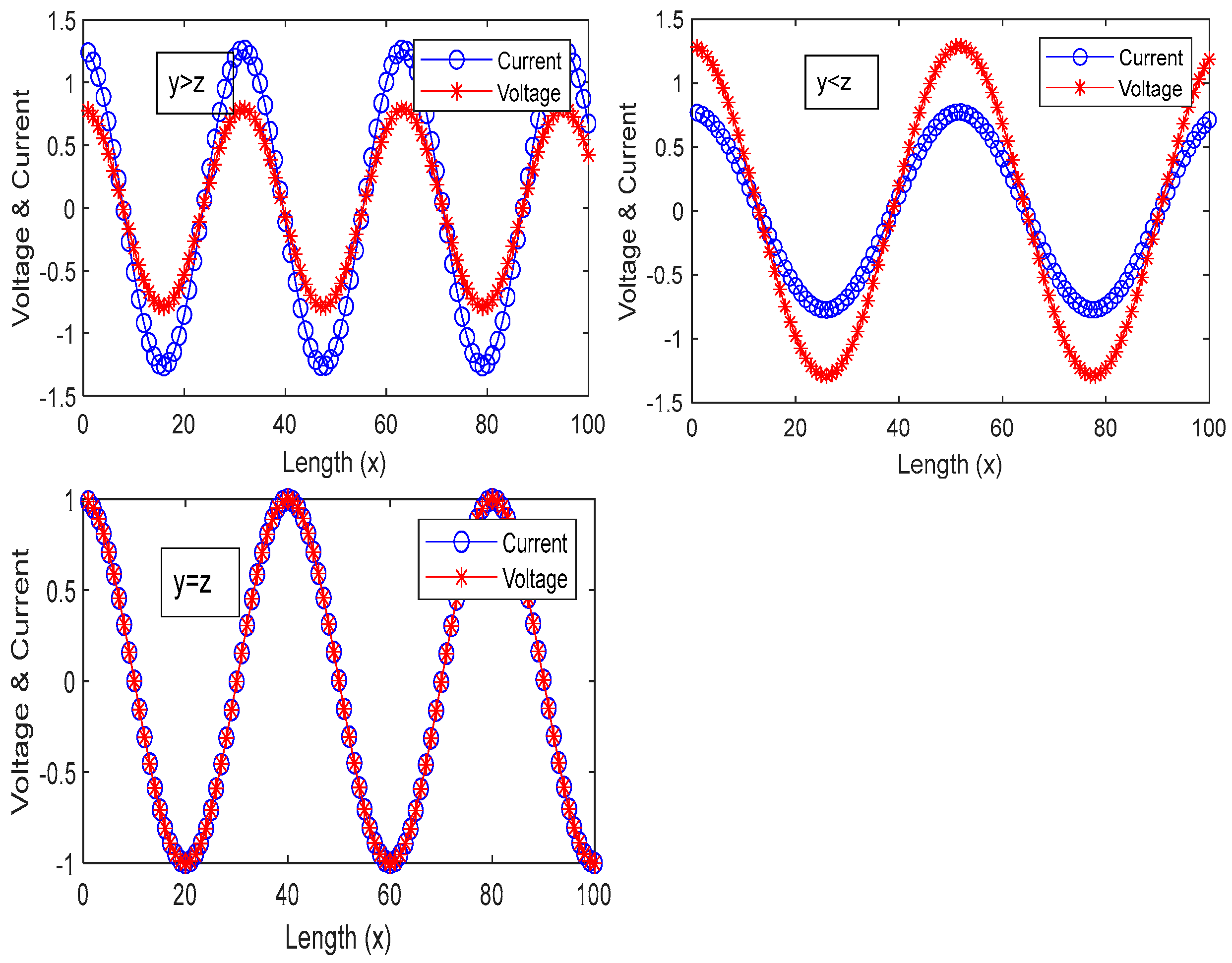

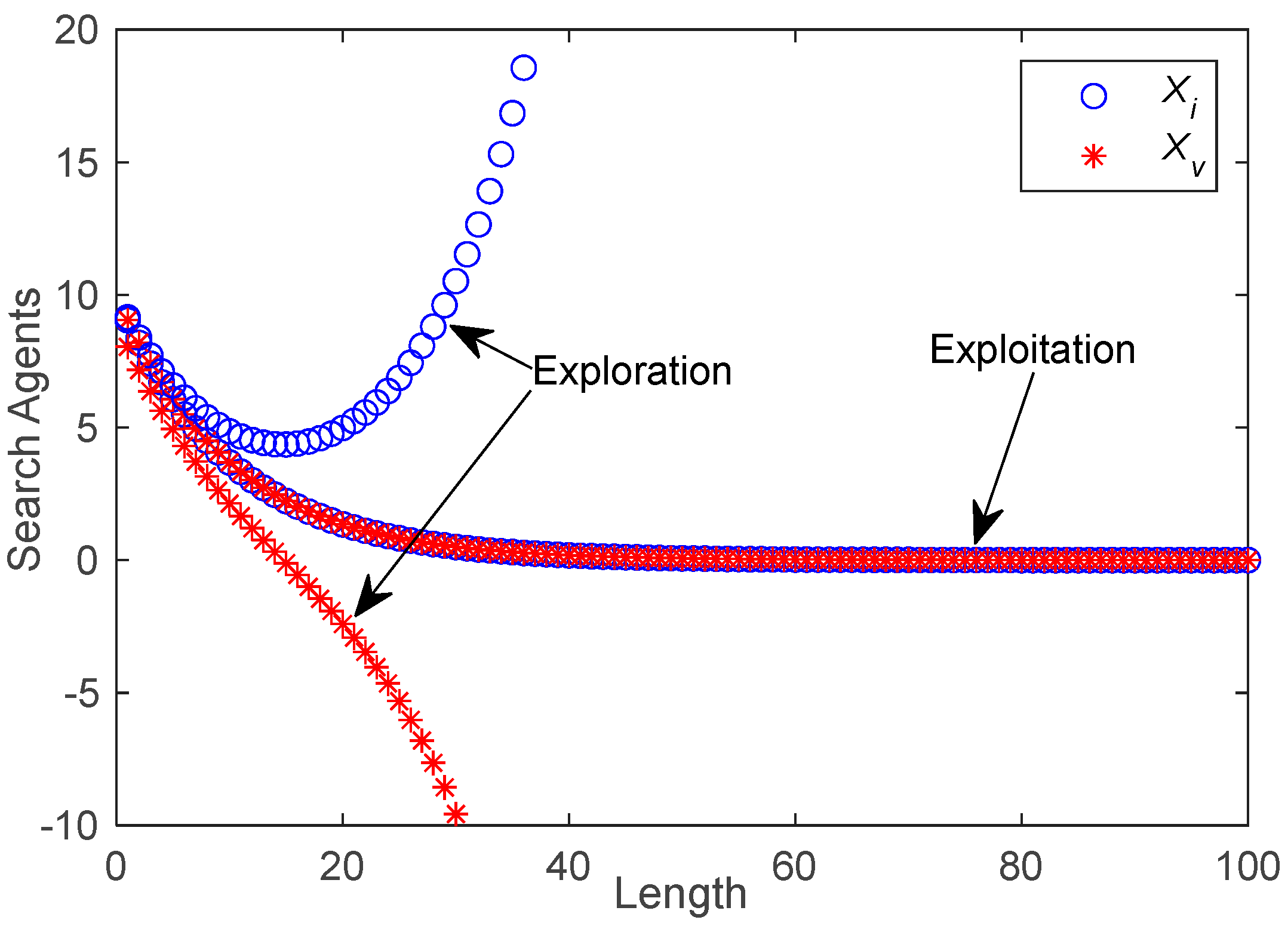

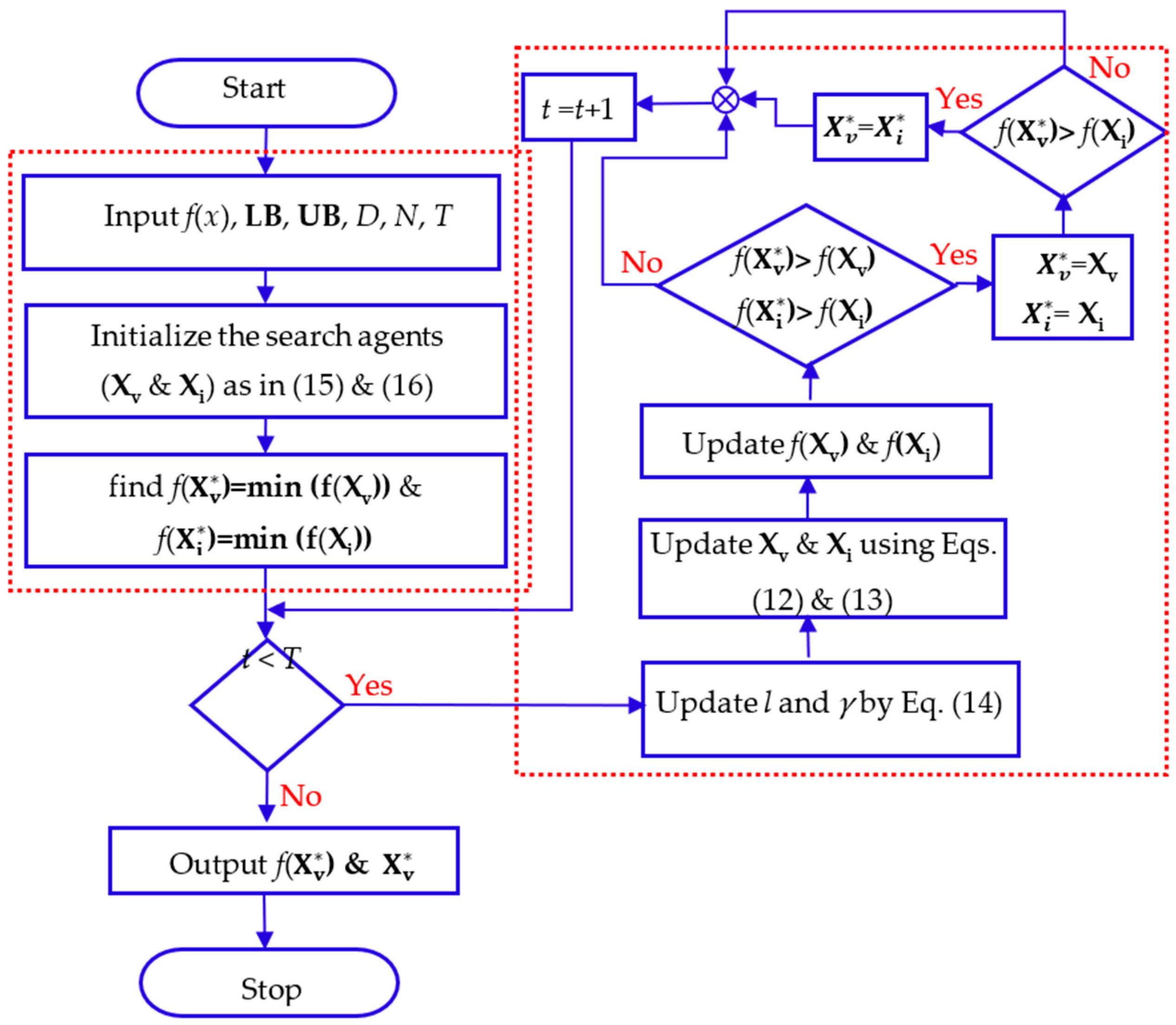

The research gap is defined by the no-free-lunch theorem, which states that no single algorithm can succeed in solving all optimization problems. In addition, the mathematical modeling and program coding of some recent metaheuristic algorithms are complex and cannot be easily applied to the online process control of engineering applications. Moreover, the models underlying some algorithms have not been studied by scientists in the related field. The need for a well-studied mathematical model, simple software code, and fast convergence therefore motivated us to propose a new metaheuristic optimizer called the propagation search algorithm (PSA), inspired by the propagation of electrical voltage and current waveforms along long transmission lines. Mathematical models of the voltage and current at any section of a transmission line are already established. We adapted these models into randomized models for random transmission lines with random impedances and admittances. The voltage and current are the search agents of the PSA, and they propagate based on their previous values and the propagation constant of the transmission line. These search agents depend on each other's values, which helps them encircle the best solution.
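For orientation, the classical long-line model that this paragraph refers to can be written as below. This is the standard textbook form, given here as background only; the randomized version used by the PSA is defined in Section 2 and may differ from it. The symbols $V_R$, $I_R$ (receiving-end voltage and current), $x$ (distance measured from the receiving end), $z$ and $y$ (series impedance and shunt admittance per unit length), and $Z_c$ (characteristic impedance) are notation assumed for this sketch; only the propagation constant $\gamma$ is named in the text.

$$
\begin{aligned}
V(x) &= V_R \cosh(\gamma x) + Z_c I_R \sinh(\gamma x), \\
I(x) &= I_R \cosh(\gamma x) + \frac{V_R}{Z_c} \sinh(\gamma x), \\
\gamma &= \sqrt{z\,y}, \qquad Z_c = \sqrt{z / y}.
\end{aligned}
$$

Each quantity at position $x$ depends on both receiving-end values, which matches the statement that the voltage and current agents rely on each other's values.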

The main contributions of this paper are summarized below:

Developing a new physics-based metaheuristic algorithm called the propagation search algorithm (PSA), inspired by voltage and current waveform propagation along long transmission lines.

Testing the PSA using the 23 well-known testing functions and comparing the outcomes with eight well-known metaheuristic algorithms.

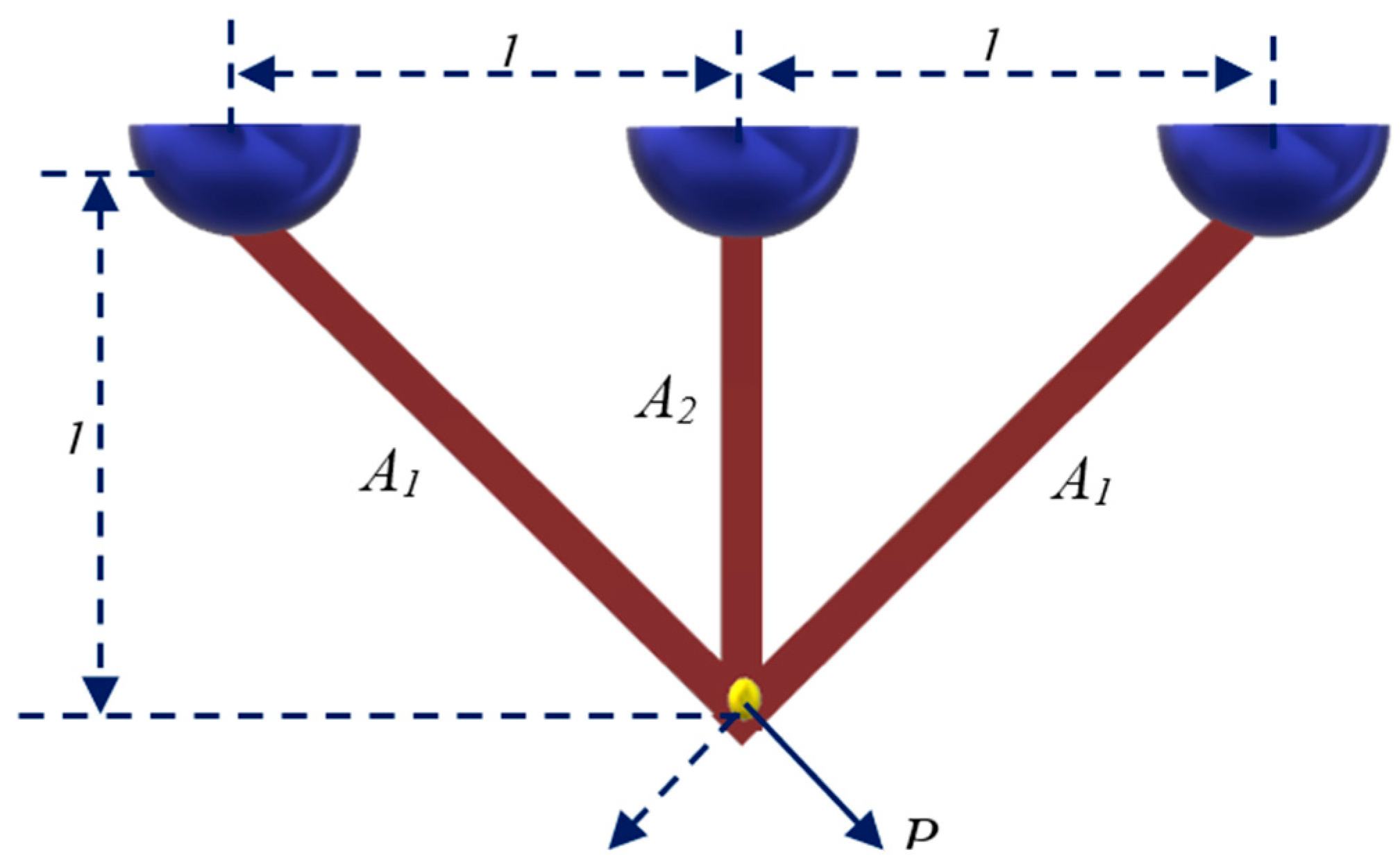

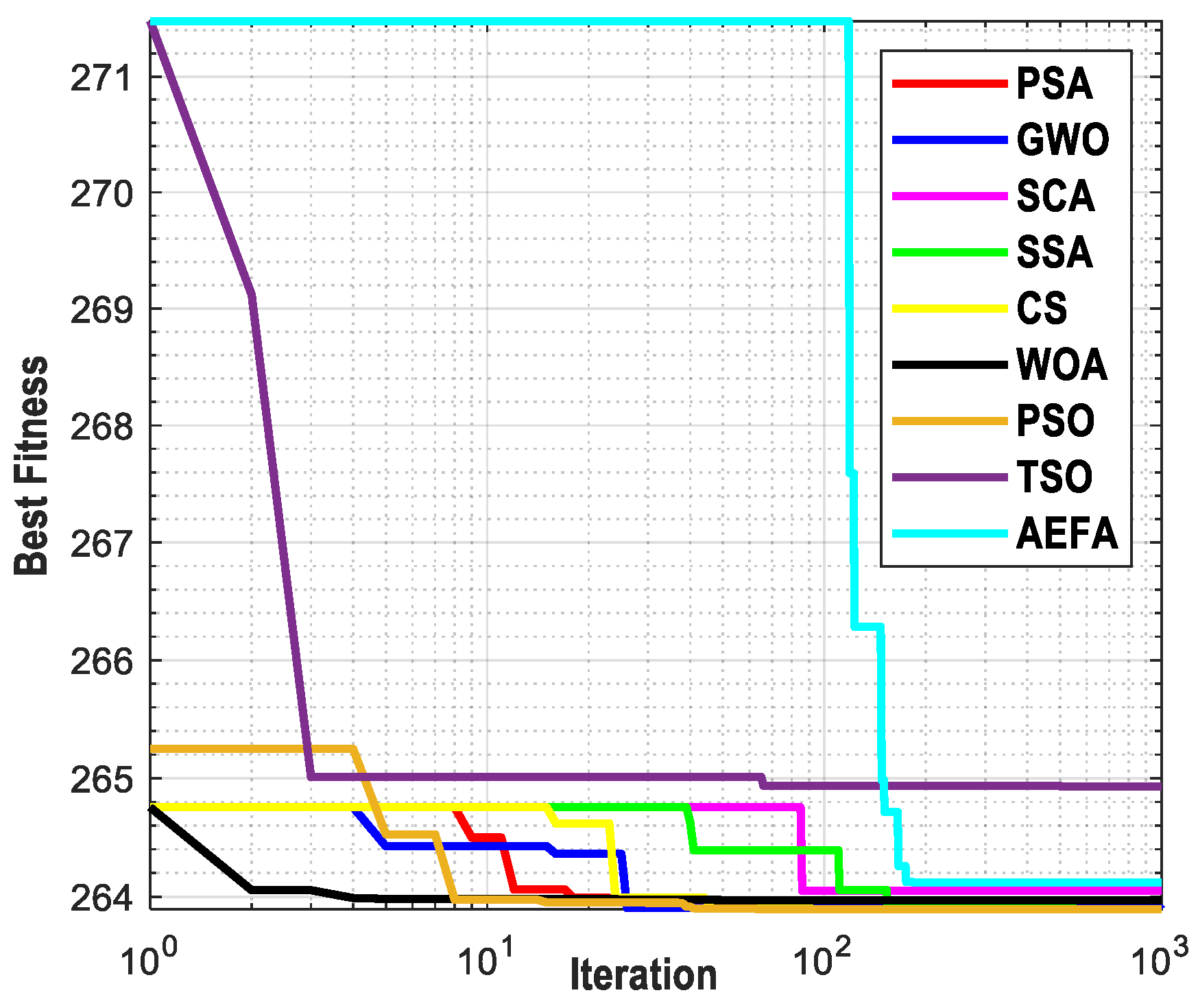

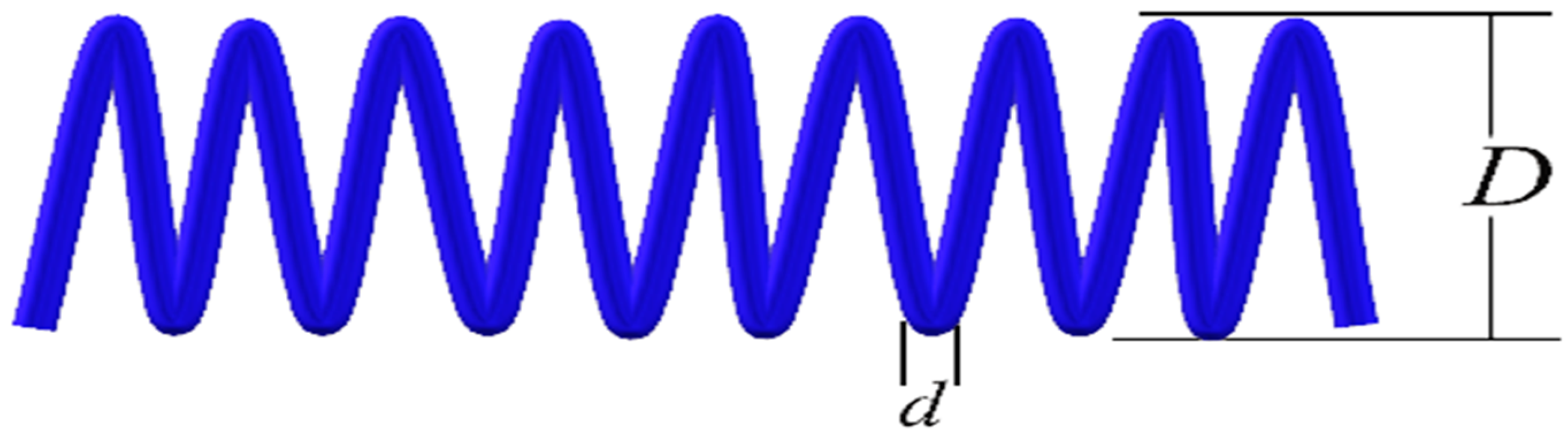

Applying the PSA to find the optimum design of four well-known engineering problems and comparing it with other metaheuristic algorithms.

The remainder of this paper is organized as follows:

Section 2 describes the background, the mathematical modeling, and the flowchart of the proposed PSA;

Section 3 describes the testing results;

Section 4 shows the application of the proposed PSA to different engineering applications; and

Section 5 presents a brief conclusion about the contribution and the results of this paper.

3. Optimization Results of Testing Functions

First, the robustness of the proposed PSA is tested using the 23 well-known testing functions. These functions are commonly used to verify the exploration and exploitation behaviors of published algorithms. Some of them test the exploitation ability of the algorithms because they have a single minimum, such as the unimodal functions (F1–F7) displayed in Table 1. Others test the exploration capability of the algorithms, that is, their ability to avoid being trapped in a local minimum, because they have several local minima in addition to the global minimum, such as the multimodal functions (F8–F23) displayed in Table 2 and Table 3. The minimum values of these functions found by the PSA are then compared with those of other renowned algorithms.
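The difference between unimodal and multimodal test functions can be illustrated with a minimal Python sketch. Rosenbrock (F5) and Rastrigin (F9) are named in this paper; the sphere function is included as a typical unimodal example, and its position in the suite is an assumption. The formulas are the commonly used textbook definitions, not copied from Tables 1–3.

```python
import math


def sphere(x):
    """Unimodal example: a single minimum, f(0, ..., 0) = 0."""
    return sum(v * v for v in x)


def rosenbrock(x):
    """Narrow curved valley; minimum f(1, ..., 1) = 0."""
    return sum(
        100.0 * (x[i + 1] - x[i] ** 2) ** 2 + (x[i] - 1.0) ** 2
        for i in range(len(x) - 1)
    )


def rastrigin(x):
    """Multimodal example: many local minima around the global minimum f(0, ..., 0) = 0."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)
```

On Rastrigin, points with integer coordinates lie close to local minima whose values are above zero, so an optimizer that only descends locally can stall there; this is what the multimodal functions are meant to expose.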

In this section, we use eight metaheuristic optimization algorithms besides the PSA: particle swarm optimization (PSO), the grey wolf optimizer (GWO), the whale optimization algorithm (WOA), the sine-cosine algorithm (SCA), transient search optimization (TSO), the salp swarm algorithm (SSA), the cuckoo search algorithm (CS), and the artificial electric field algorithm (AEFA). Some of these algorithms, such as PSO, GWO, and the WOA, are selected because they are among the most popular in engineering applications. Others, such as TSO and the AEFA, are selected because they are inspired by physical behaviors. The remaining algorithms are selected randomly to broaden the comparison. The values of the algorithms' parameters are displayed in Table 4.

In this work, the testing experiments are implemented in MATLAB R2023a on 64-bit Windows 10, running on a laptop with a Core i7 processor and 16 GB of RAM. For an unbiased evaluation, all compared algorithms use the same number of search agents, N = 30, the same total number of iterations, T = 500, and the same initialized search agents.
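One way to give every algorithm the same initialized search agents is to draw the population once from a seeded generator and hand each algorithm its own copy. The sketch below is in Python rather than MATLAB and is only illustrative: the function names, the dimension of 30, the bounds, and the seed are assumptions; N = 30 and T = 500 come from the text.

```python
import random


def initial_population(n_agents, dim, lower, upper, seed):
    """Draw one uniformly random population from a seeded generator."""
    rng = random.Random(seed)
    return [[rng.uniform(lower, upper) for _ in range(dim)] for _ in range(n_agents)]


def copy_population(population):
    """Give each algorithm its own copy so no run can modify another's start."""
    return [agent[:] for agent in population]


N, T = 30, 500  # search agents and iterations, as stated in the text
shared = initial_population(N, dim=30, lower=-100.0, upper=100.0, seed=1)

start_for_algorithm_a = copy_population(shared)
start_for_algorithm_b = copy_population(shared)
```

Copying matters because most population-based optimizers update their agents in place.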

3.1. Statistical Analysis

Because metaheuristic algorithms operate randomly, they must be tested over many independent optimization experiments. Therefore, all optimization algorithms are executed 20 times for each testing function in this paper. The robustness of each algorithm is then assessed with different statistical methods, for example, the mean (m), the standard deviation (σ), and nonparametric tests. Table 5 displays the m and σ of 20 independent runs for all 23 testing functions using the proposed PSA and the compared algorithms. The outcomes show that the proposed PSA is competitive with the other algorithms and obtained the best outcomes for 16 of the 23 functions. The second most competitive algorithm is the CS algorithm, which achieves the best outcome for 9 of the 23 functions.
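The mean and standard deviation over independent runs can be computed as in the following Python sketch. The optimizer here is a plain random search used purely as a stand-in, not the PSA or any of the compared algorithms; the test function, dimension, bounds, and seeds are also assumptions. Whether the paper reports the sample or the population standard deviation is not stated; `statistics.stdev` returns the sample version.

```python
import random
import statistics


def sphere(x):
    return sum(v * v for v in x)


def random_search(objective, dim, lower, upper, iterations, rng):
    """Stand-in optimizer (not the PSA): best value among `iterations` random points."""
    best = float("inf")
    for _ in range(iterations):
        candidate = [rng.uniform(lower, upper) for _ in range(dim)]
        best = min(best, objective(candidate))
    return best


def summarize_runs(objective, runs=20, seed=0):
    """Run the optimizer `runs` times with different seeds and summarize the final values."""
    finals = []
    for r in range(runs):
        rng = random.Random(seed + r)  # a fresh, reproducible generator per run
        finals.append(random_search(objective, 5, -10.0, 10.0, 500, rng))
    return statistics.mean(finals), statistics.stdev(finals), finals


m, sigma, finals = summarize_runs(sphere)
```

A small σ relative to m indicates that the algorithm reaches similar final values regardless of its random draws.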

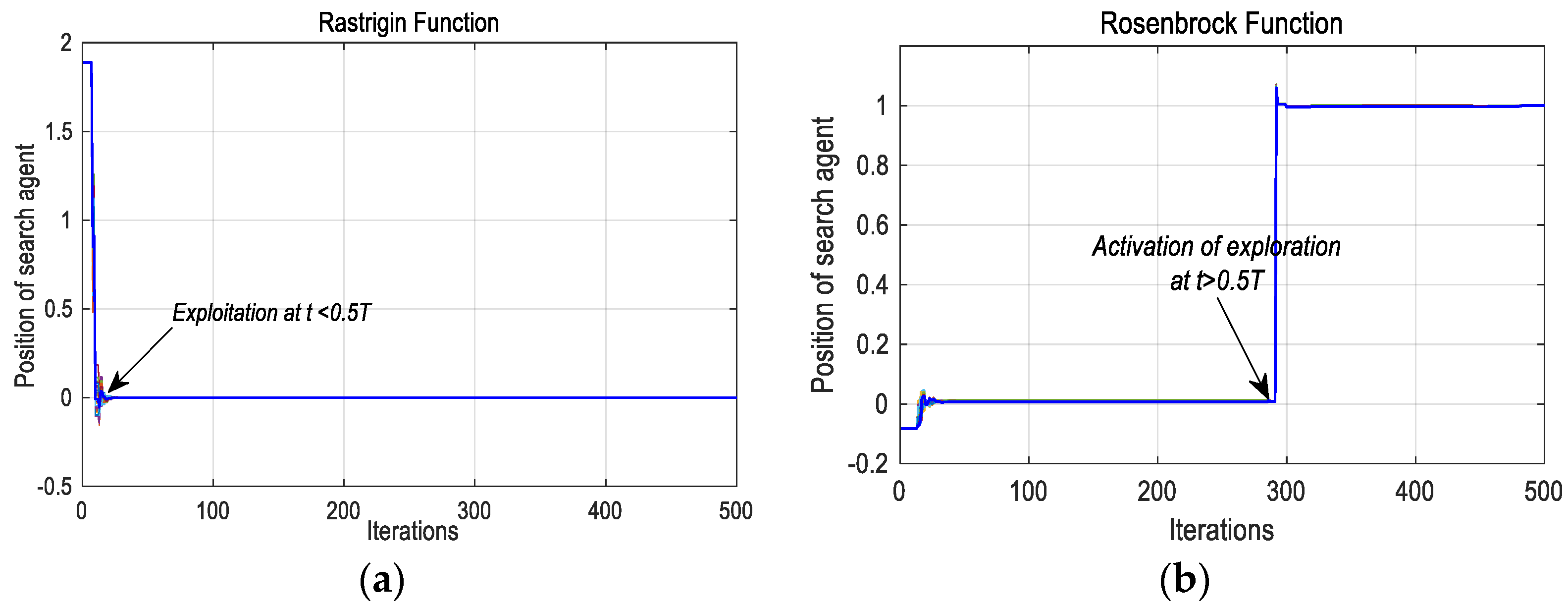

The exploitation of the algorithms is measured using unimodal functions (F1–F8), where the proposed PSA achieved the best outcomes for seven of the eight functions, which demonstrates the exploitation capability of the PSA relative to the other algorithms. The exploration capability of the PSA, in turn, is measured using the multimodal functions (F9–F23). The results show that the PSA obtained the best results for 10 of the 15 functions, whereas the CS algorithm came second with the best results for 9 of the 15. To check the balance between exploration and exploitation, Rosenbrock (F5) and Rastrigin (F9) are used because they have contrasting characteristics, which makes it difficult for one algorithm to solve both of them successfully. The results show that the PSA obtained better results than the other algorithms on both types of functions.

Additionally, the Wilcoxon signed-rank test with a 5% significance level is used to verify the significance of the PSA results relative to the compared algorithms. The null hypothesis of this test is that the results of the PSA and of the other algorithm do not differ; it is retained if the p-value is higher than 0.05 and rejected otherwise. Table 6 shows the p-values and whether the null hypothesis is rejected (h = true) or retained (h = false). The most frequent decision is h = true, meaning that in most cases a significant difference exists between the PSA and the other algorithms.
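The decision rule for h can be made concrete with a small Python sketch of a paired Wilcoxon signed-rank test. It uses the large-sample normal approximation without tie or continuity corrections, so its p-values may differ slightly from those of the statistical routine used in the paper; the function name and return convention are assumptions.

```python
import math


def wilcoxon_signed_rank(a, b, alpha=0.05):
    """Two-sided paired test; returns (p_value, h) where h is True if H0 is rejected."""
    diffs = [x - y for x, y in zip(a, b) if x != y]  # zero differences are dropped
    n = len(diffs)
    if n == 0:
        return 1.0, False
    # Rank |d| in ascending order, giving tied values their average rank.
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2.0 + 1.0
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean = n * (n + 1) / 4.0
    sd = math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    z = (w_plus - mean) / sd
    p = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided p-value under the normal approximation
    return p, p < alpha
```

Here `a` and `b` would hold the 20 final values of two algorithms on one test function, paired by run.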

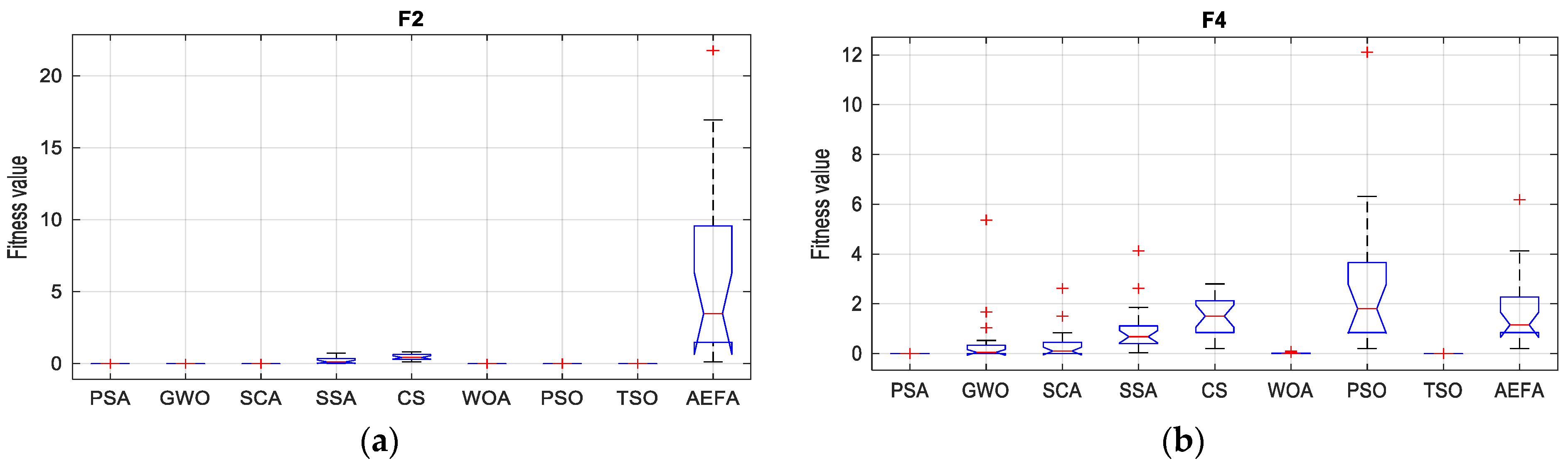

Finally, boxplots are used for further statistical analysis of the performance of the PSA over the 20 independent experiments. Figure 6a–f shows the boxplots for six benchmark functions (F2, F4, F8, F10, F12, and F21). The boxplot analysis shows that the PSA has a very small deviation between the maximum and minimum values of the 20 experiments. Moreover, outlier points, labeled (+), appear for all compared algorithms except the PSA. The boxplots therefore support the robustness of the PSA relative to the other algorithms.
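The quantities a boxplot displays can be computed directly, as in this Python sketch. It assumes the common convention that points farther than 1.5 times the interquartile range from the box are drawn as outliers; the quartile method and the function name are assumptions, and plotting software may compute quartiles slightly differently.

```python
import statistics


def boxplot_summary(values):
    """Quartiles and outliers under the 1.5 * IQR rule."""
    q1, median, q3 = statistics.quantiles(values, n=4, method="inclusive")
    iqr = q3 - q1
    low_fence = q1 - 1.5 * iqr
    high_fence = q3 + 1.5 * iqr
    outliers = [v for v in values if v < low_fence or v > high_fence]
    return {"q1": q1, "median": median, "q3": q3, "outliers": outliers}


# Hypothetical final values from ten runs; one run ended far from the rest.
summary = boxplot_summary([1, 2, 3, 4, 5, 6, 7, 8, 9, 100])
```

An algorithm with no outliers and a narrow box reaches nearly the same final value in every run.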

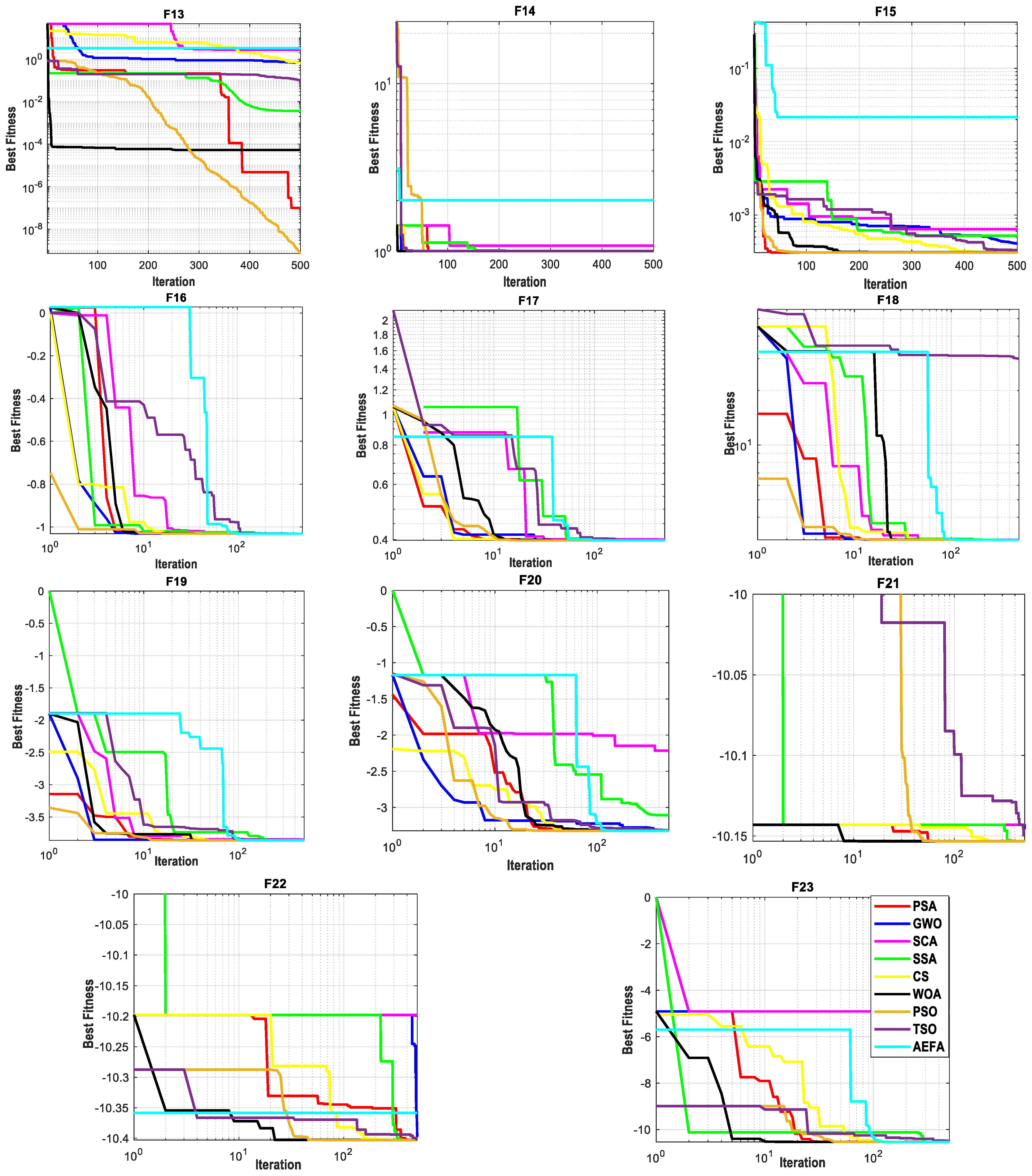

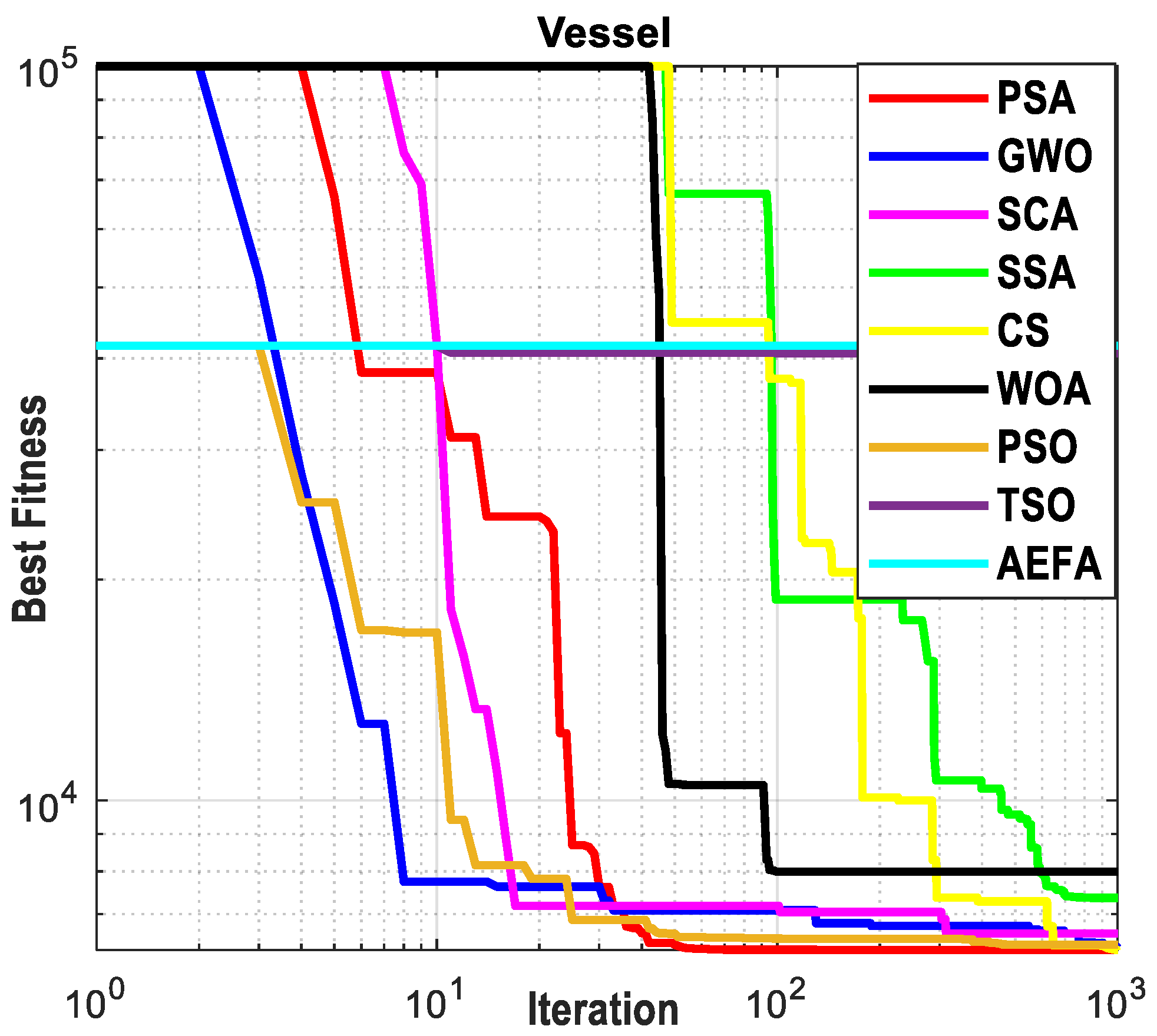

3.2. Optimization Convergence

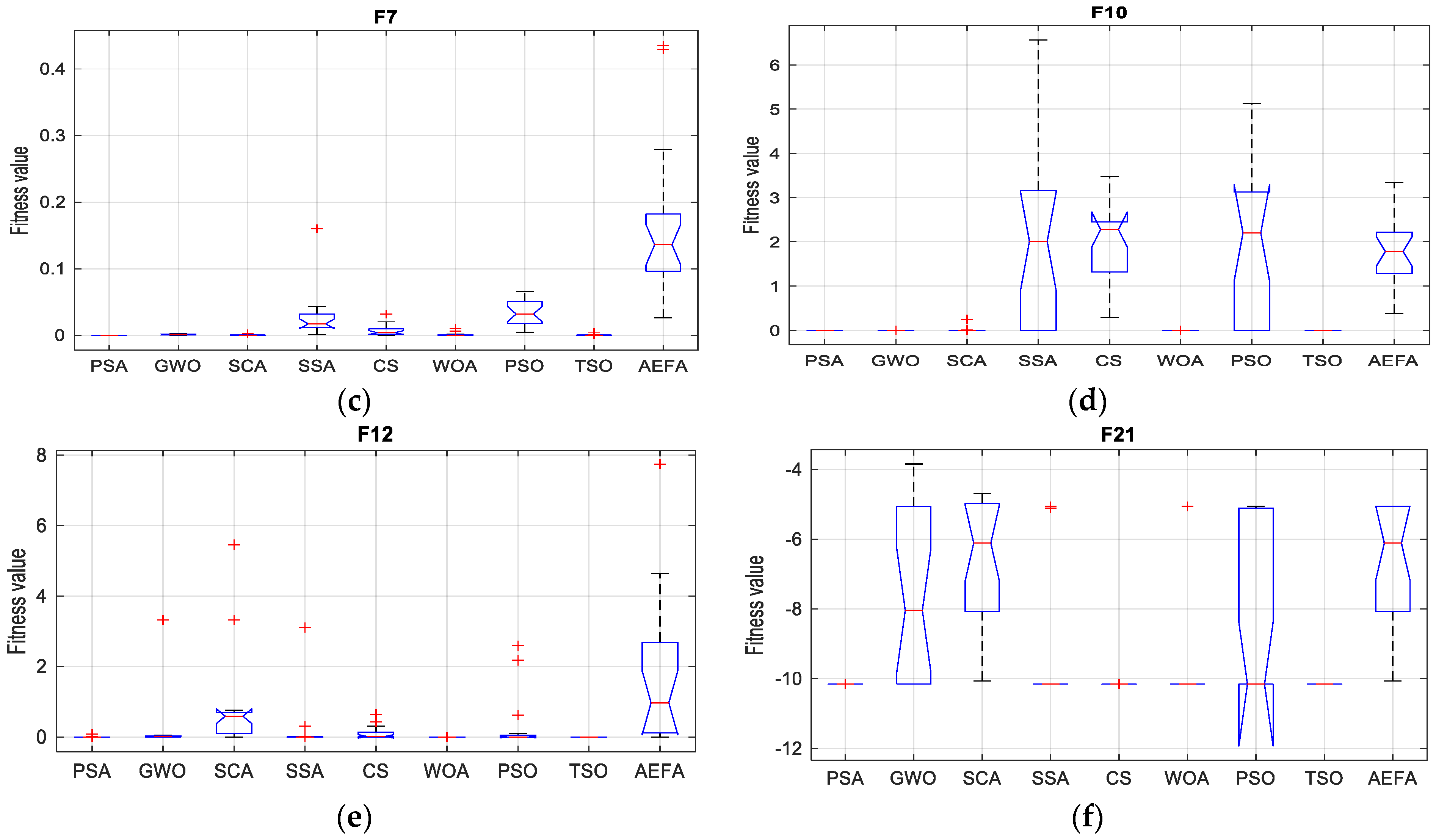

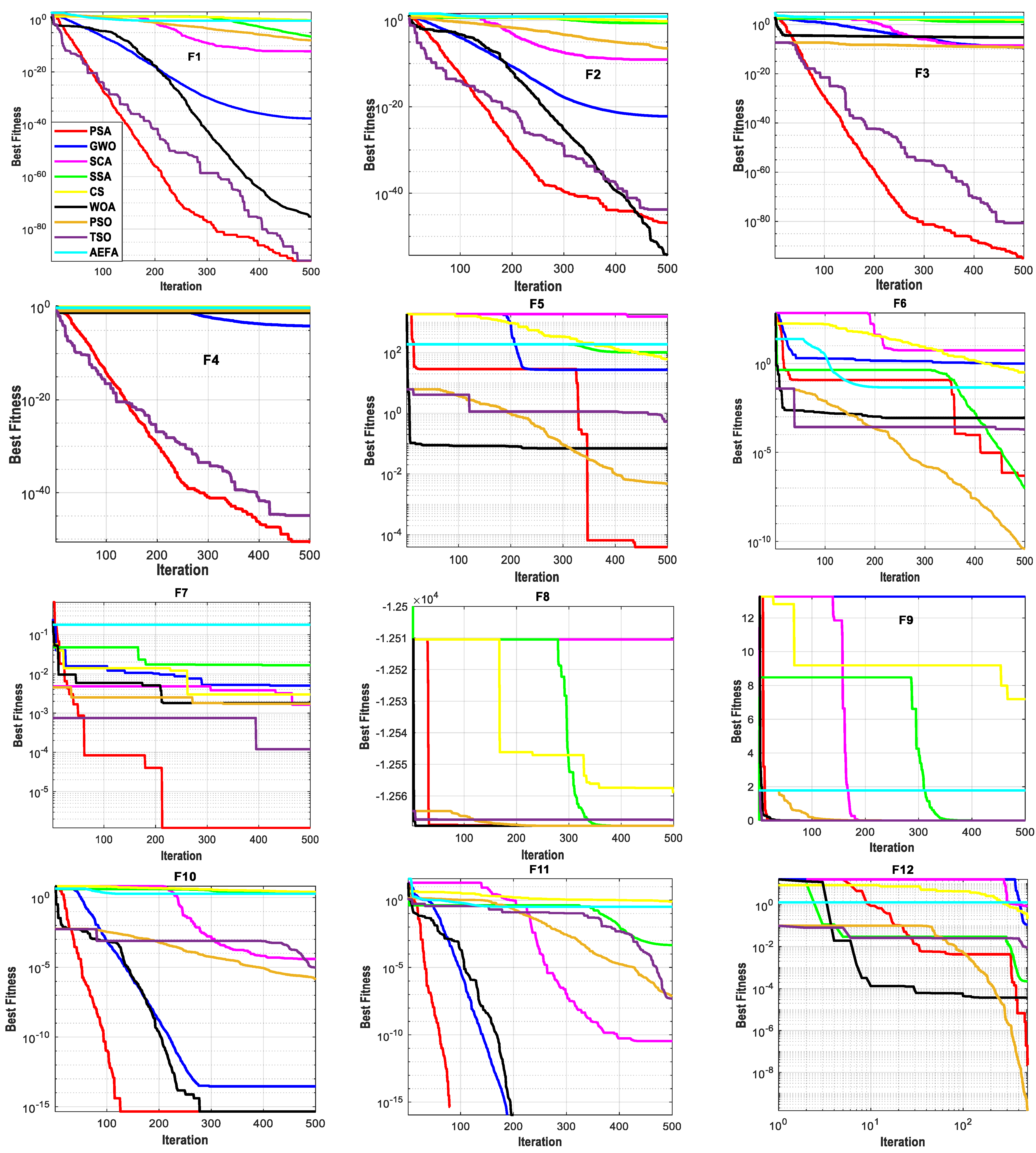

The speed of finding the best solution plays a critical role in control systems, so the behavior of the algorithms must be checked as the iterations progress. Table 7 displays the total elapsed time of all compared algorithms for each independent experiment, and Figure 7 shows the convergence behavior of all compared algorithms toward the minimum solution. All algorithms start from the same initial point for an unbiased comparison. The proposed PSA converges quickly for the testing functions F1–F8; at the midpoint of the total iterations (t = 250), it becomes slower because of changes in the value of the propagation constant (γ). For the remaining functions, the PSA competes with the other algorithms in searching for global solutions; in particular, at t = 250 the PSA escapes being trapped in a local solution.
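A convergence curve of this kind is the best objective value found so far, recorded after every iteration, and the elapsed time can be measured around the same loop. The Python sketch below uses a simple perturbation search as a stand-in optimizer, not the PSA; the test function, dimension, bounds, step schedule, and seeds are assumptions, while N = 30 and T = 500 follow the experimental setup.

```python
import random
import time


def sphere(x):
    return sum(v * v for v in x)


def run_with_history(objective, population, lower, upper, iterations, rng):
    """Stand-in search (not the PSA): perturb each agent and keep improvements."""
    best = min(objective(agent) for agent in population)
    history = [best]  # history[0] is the value of the shared starting population
    start = time.perf_counter()
    for t in range(1, iterations + 1):
        step = (upper - lower) * 0.1 * (1.0 - t / iterations)  # shrinking step size
        for i, agent in enumerate(population):
            trial = [min(upper, max(lower, v + rng.uniform(-step, step))) for v in agent]
            if objective(trial) < objective(agent):
                population[i] = trial
        best = min(best, min(objective(agent) for agent in population))
        history.append(best)
    elapsed = time.perf_counter() - start
    return history, elapsed


N, T = 30, 500
init_rng = random.Random(7)
population = [[init_rng.uniform(-10.0, 10.0) for _ in range(10)] for _ in range(N)]
initial_best = min(sphere(agent) for agent in population)
history, elapsed = run_with_history(sphere, population, -10.0, 10.0, T, random.Random(8))
```

Plotting `history` against the iteration index, usually on a logarithmic vertical axis, gives one curve per algorithm; because every curve starts at the same `history[0]`, differences between curves reflect the algorithms rather than their starting points.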