Abstract

An improved hybrid firefly algorithm with a probabilistic attraction model (IHFAPA) is proposed to address the low computational efficiency and low accuracy of the firefly algorithm on complex optimization problems. First, the square-root sequence method is used to generate the initial population, which gives the initial population better diversity. Second, an adaptive probabilistic attraction model is proposed that attracts fireflies according to their brightness ranks; it reduces the number of brightness comparisons while keeping the number of attractions moderate. Third, a new position-update method is proposed, which overcomes both the deficiency that the relative attraction of two fireflies approaches 0 when they are far apart and the deficiency that it tends to infinity when they are very close. In addition, a combined mutation operator based on selection probability is proposed to improve the exploration and exploitation ability of the firefly algorithm (FA). A similarity-removal operation is then added to maintain population diversity. Finally, experiments on the CEC 2017 constrained optimization problems and four practical engineering problems show that IHFAPA effectively improves solution quality.

Keywords: improved hybrid firefly algorithm; probability attraction model; constrained optimization problem; remove similarity operation; combined mutation

MSC: 6804

1. Introduction

Optimization problems arise widely in many fields. Traditional methods, such as Newton's method, the conjugate gradient method and the simplex method, have difficulty with large, complex problems: searching the entire space exhaustively leads to a combinatorial explosion, so the search cannot be completed in polynomial time. Given the complexity, constraints, nonlinearity and modelling difficulty of practical engineering problems, research on meta-heuristic algorithms is particularly important. Meta-heuristic algorithms have the following advantages in solving complex engineering problems: (1) simple principles, few parameters to tune and easy implementation; (2) no need for derivative information of the objective function; (3) no requirement of differentiability or convexity; (4) applicability to problems that are difficult to model mathematically and to complex optimization problems; (5) strong robustness, versatility and suitability for parallel processing. Compared with traditional optimization methods, they are more likely to escape from local optima when solving complex problems such as multimodal optimization. Therefore, meta-heuristic algorithms have clear advantages for complex optimization problems and have been widely used in many fields.

Evolutionary algorithms are a key class of meta-heuristic algorithms. They search for the best solution of an optimization problem mainly through selection, crossover, mutation and related operators. The best-known evolutionary algorithms are the genetic algorithm (GA) [1] and the differential evolution algorithm (DE) [2]. Swarm intelligence algorithms, another important class of meta-heuristic algorithms, update the positions of individuals by simulating animal behaviors such as foraging, hunting and mate finding to find the optimal solution. In the past few decades, many swarm intelligence optimization algorithms have been proposed, such as particle swarm optimization (PSO) [3], the firefly algorithm (FA) [4] and the artificial bee colony algorithm (ABC) [5]. In 2008, Yang et al. [6], inspired by the way fireflies use light to attract mates and ward off predators at night, proposed the FA. FA has the advantages of simple principles, few parameters, easy implementation, high precision and fast convergence. It has therefore been applied to problems in many domains, such as neural networks [7,8,9], scheduling [10,11,12], image processing [9,13], parameter optimization of wireless sensor networks [14,15,16] and big data processing [17,18].

Over the past ten years, many researchers have worked to improve the FA's effectiveness, with promising results. In the FA, the attraction model strongly affects the time complexity (TC), convergence speed and solution quality of the algorithm. The more attractions the model performs, the faster the algorithm converges but the worse the population diversity becomes, which makes the algorithm more likely to fall into a local optimum. In addition, different attraction models require different numbers of fitness comparisons, and the more comparisons a model needs, the higher its TC. The standard firefly algorithm (SFA) [6] uses the complete attraction model (CAM), in which each firefly can be attracted by every brighter firefly in the population, so SFA can perform too many attractions. Too many attractions can cause oscillations in the search process and degrade solution quality. In 2016, Wang et al. proposed a firefly algorithm with random attraction (RaFA) [19]. RaFA uses a random attraction model (RAM), in which each firefly is compared with one other randomly chosen firefly. If the randomly selected firefly is brighter than the current firefly, the current firefly is attracted by it; otherwise, the current firefly is not attracted. The RAM reduces the TC of the algorithm because it performs fewer attractions, but for the same reason it also slows convergence. In 2017, Wang et al. proposed a neighborhood attraction firefly algorithm (NaFA) [4], which uses a neighborhood attraction model (NAM) in which the current firefly is attracted by the brighter fireflies in its k-neighborhood. Although the NAM performs a moderate number of attractions and is thus more likely to reduce premature convergence, its large number of brightness comparisons results in high TC. In 2021, Cheng et al. proposed a hybrid firefly algorithm with group attraction (GAHFA) [20], which uses the grouping attraction model (GAM). GAHFA requires an even population size n; all fireflies are sorted by objective function value (OFV) and divided into n/2 groups. In each group, the brighter firefly attracts the less bright one, and the brightest firefly in the population attracts the brighter firefly of each group. Although GAHFA performs a moderate number of attractions and has low TC, the attraction relationships between fireflies in the GAM are fixed, so the algorithm easily becomes trapped in a local optimum. To solve this problem, this paper proposes a new firefly attraction model, the probabilistic attraction model.

The model is illustrated here for a minimization problem. First, all fireflies are sorted by fitness value in ascending order, so the first firefly after sorting is the brightest (best) one. Second, the first firefly moves randomly. Third, starting from the second firefly, the selection probability of each firefly is calculated, and a brighter firefly is selected, according to these probabilities, from the fireflies ranked in front of the current firefly; the current firefly is then attracted by the selected firefly, and so on for the remaining fireflies. The probabilistic attraction model reduces the number of attractions and brightness comparisons while ensuring that neither becomes too small. In addition, because the firefly selected by the model is chosen at random, the algorithm is less likely to be trapped in a local optimum. At the same time, the selected firefly is always brighter than the current one, which overcomes the drawback of the RAM that the randomly chosen firefly may fail to attract the current firefly.
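The steps above can be sketched in Python as follows. This is a minimal illustration, not the paper's implementation: the selection probability formula is not reproduced in this text, so the sketch assumes a hypothetical linear rank weighting in which, for the firefly at rank m, a brighter firefly at rank c < m is chosen with probability proportional to m - c. The function name `probabilistic_attraction` is also hypothetical.

```python
import random

def probabilistic_attraction(fitness, rng=random):
    """One round of a rank-based probabilistic attraction model (minimization).

    Returns the index of the best firefly (which moves randomly) and a list of
    (follower, leader) index pairs in which each leader ranks ahead of its follower.
    ASSUMPTION: the firefly at rank m picks a brighter firefly at rank c with
    probability proportional to (m - c); the paper's own formula may differ.
    """
    n = len(fitness)
    # Sort indices by fitness, ascending: order[0] is the brightest firefly.
    order = sorted(range(n), key=lambda idx: fitness[idx])
    pairs = []
    for m in range(1, n):
        # Only fireflies ranked in front of the current one are candidates,
        # so the chosen leader is never dimmer than the current firefly.
        weights = [m - c for c in range(m)]
        c = rng.choices(range(m), weights=weights, k=1)[0]
        pairs.append((order[m], order[c]))
    return order[0], pairs

fit = [3.0, 1.0, 2.0, 5.0]
best, pairs = probabilistic_attraction(fit, rng=random.Random(42))
print(best, pairs)
```

In this sketch the only brightness comparisons are those made by the sort (O(n log n)), and each of the n - 1 non-best fireflies performs exactly one attraction.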

In the position-update formulas of the FA in the existing literature, the relative attractiveness may tend to zero at the beginning of the iteration. When the relative attractiveness is 0, the algorithm degenerates into a random search, which slows convergence and lowers solution quality. To address this problem, a position-update formula with adaptively changing relative attractiveness is presented; this relative attractiveness does not approach zero during the iterations. In addition, the formula considers not only the influence of the brighter firefly on the firefly being updated but also the guiding effect of the best firefly in the population.
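The vanishing of the standard attractiveness β = β0·exp(-γr²) at initialization can be checked numerically. The sketch below assumes a hypothetical setting (20 fireflies, 30 dimensions, bounds [-100, 100], β0 = 1 and γ = 1, a common choice) and evaluates the attractiveness for every pair of randomly initialized fireflies.

```python
import math
import random

def attractiveness(xi, xj, beta0=1.0, gamma=1.0):
    """Standard FA attractiveness beta0 * exp(-gamma * r^2)."""
    r2 = sum((a - b) ** 2 for a, b in zip(xi, xj))
    return beta0 * math.exp(-gamma * r2)

# Hypothetical setting: 20 random fireflies in [-100, 100]^30, gamma = 1.
rng = random.Random(1)
D, n, lb, ub = 30, 20, -100.0, 100.0
pop = [[rng.uniform(lb, ub) for _ in range(D)] for _ in range(n)]
betas = [attractiveness(pop[i], pop[j]) for i in range(n) for j in range(n) if i != j]
print(max(betas))  # every pairwise attractiveness underflows to 0.0
```

With β = 0 the attraction term of Equation (3) disappears and only the random term step·(r - 0.5) remains, which is the random-search behavior described above.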

In the later iterations, fireflies gradually gather near the best firefly, so the fitness differences among them become very small. For this situation, a formula is proposed to compute an indicator S of the similarity among population members, where a smaller S means the individuals are closer together. The more similar the individuals, the worse the population diversity and the weaker the algorithm's exploration ability, so the algorithm is likely to be trapped in a local optimum. A strategy that updates some individuals in the population is therefore proposed to remove this similarity: when S is less than a threshold, some individuals in the population are updated.
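A hypothetical sketch of the similarity-removal step follows. The paper's formula for S, its threshold and its update rule are not given in this text, so the sketch assumes S is the mean absolute gap between each fitness value and the best one, and that the worst 20% of the population is re-sampled uniformly within the bounds; `remove_similarity`, `threshold` and `frac` are illustrative names.

```python
import random

def remove_similarity(pop, fit, objective, lb, ub, threshold=1e-6, frac=0.2, rng=random):
    """Re-sample part of the population when its members have become too similar.

    HYPOTHETICAL sketch: S is the mean absolute gap between each fitness value
    and the best one, so a small S means a crowded population. The paper's own
    similarity formula, threshold and update rule may differ.
    """
    n = len(pop)
    best = min(fit)
    s = sum(abs(f - best) for f in fit) / n
    if s >= threshold:
        return pop, fit, s  # diverse enough: leave the population unchanged
    # Replace the worst `frac` share of the population with uniform random points.
    order = sorted(range(n), key=lambda idx: fit[idx])
    n_new = max(1, int(frac * n))
    new_pop, new_fit = [x[:] for x in pop], fit[:]
    for idx in order[n - n_new:]:
        new_pop[idx] = [rng.uniform(lo, hi) for lo, hi in zip(lb, ub)]
        new_fit[idx] = objective(new_pop[idx])
    return new_pop, new_fit, s
```

Re-sampling only the worst individuals keeps the best solutions found so far while restoring some spread to the population.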

A combined mutation operator based on selection probability is proposed to dynamically select a single mutation operator with strong exploration or exploitation ability. This further improves the solution quality and convergence speed of the algorithm and better balances the FA's exploration and exploitation. In the early iterations, the combined operator is likely to select a single mutation operator with strong global search ability; in the later iterations, it is likely to select one with strong local search ability. First, the mutation operators are divided into two categories according to their exploration and exploitation ability: operators in the first category have strong exploration ability, and operators in the second category have strong exploitation ability. Second, a formula is designed to calculate the selection probabilities of the two categories based on how much each category improves the solutions of the optimization problem. A category that improves solution quality receives a larger selection probability; otherwise, its selection probability is reduced. Within each category, each mutation operator is chosen at random.
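The selection mechanism described above can be sketched as follows. Everything specific here is an assumption: the category probability is taken to be proportional to the total fitness improvement each category has produced (starting from a prior of 1 each and clamped to [p_min, 1 - p_min]), and the two operators per category are common DE-style choices rather than the paper's own list. The class name `CombinedMutation` is hypothetical.

```python
import random

class CombinedMutation:
    """Pick a mutation operator from an exploration or an exploitation pool.

    HYPOTHETICAL sketch: the category probability is proportional to the total
    fitness improvement each category produced; the operators are DE-style
    examples, not the paper's own operator list.
    """

    def __init__(self, scale=0.5, p_min=0.05, rng=random):
        self.scale, self.p_min, self.rng = scale, p_min, rng
        self.gain = [1.0, 1.0]  # cumulative improvement: [exploration, exploitation]

    def p_explore(self):
        p = self.gain[0] / (self.gain[0] + self.gain[1])
        return min(max(p, self.p_min), 1.0 - self.p_min)

    def mutate(self, x, best, pop):
        """Return (mutant, category); category 0 = exploration, 1 = exploitation."""
        cat = 0 if self.rng.random() < self.p_explore() else 1
        a, b, c = self.rng.sample(pop, 3)
        F = self.scale
        if cat == 0:  # exploration-oriented operators
            ops = [
                lambda: [ai + F * (bi - ci) for ai, bi, ci in zip(a, b, c)],  # DE/rand/1
                lambda: [xi + F * (ai - bi) for xi, ai, bi in zip(x, a, b)],  # random difference around x
            ]
        else:  # exploitation-oriented operators
            ops = [
                lambda: [gi + F * (ai - bi) for gi, ai, bi in zip(best, a, b)],  # DE/best/1
                lambda: [xi + F * (gi - xi) + F * (ai - bi)
                         for xi, gi, ai, bi in zip(x, best, a, b)],  # current-to-best/1
            ]
        # Within the chosen category, each operator is picked uniformly at random.
        return self.rng.choice(ops)(), cat

    def update(self, cat, old_fit, new_fit):
        """Credit the category with the improvement it achieved (minimization)."""
        self.gain[cat] += max(0.0, old_fit - new_fit)
```

In this sketch the early preference for exploration is not imposed directly; it emerges only if exploration operators produce larger improvements early in the run.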

The major contributions of this paper are:

- The uniformity and diversity of initial populations generated by different initialization methods are compared, and the method that yields the best uniformity and diversity is selected for population initialization.

- A probabilistic attraction model is proposed to address the problems of existing attraction models.

- A firefly position update formula with an adaptive change in relative attraction is proposed to improve the convergence speed and solution quality of FA.

- A combined mutation operator based on selection probability is proposed, which adaptively selects a single mutation operator with strong exploration or exploitation ability.

- A similarity-removal operation is added to the algorithm to enhance its exploration ability and maintain population diversity.

- The proposed IHFAPA is compared with other improved algorithms from the literature on engineering parameter-optimization problems, such as reducer design and cantilever beam design, and IHFAPA achieves better solution quality.

The rest of this paper is organized as follows: Section 2 reviews existing firefly algorithms; Section 3 presents the proposed IHFAPA; Section 4 analyzes IHFAPA experimentally on the CEC 2017 test function set and compares its performance with other algorithms; Section 5 applies IHFAPA to four classical engineering optimization problems and compares it with other algorithms; Section 6 concludes the paper.

2. Related Works

2.1. Firefly Algorithm

In 2008, Yang proposed the FA based on the luminous characteristics of fireflies and the mutual attraction between individual fireflies [6]. To construct the FA, some characteristics of firefly flashing are idealized as follows:

(1) Fireflies are unisex, so any firefly can be attracted to any other firefly regardless of sex.

(2) Attractiveness is proportional to brightness and decreases as the distance between two fireflies increases. For any two fireflies, the less bright firefly is attracted by the brighter one and moves toward it, and the brightest firefly moves randomly.

(3) The brightness of a firefly is determined by the objective function value (OFV) of the problem to be optimized. Let n be the population size, let the i-th firefly in the population be Xi = (xi1, xi2, …, xiD)T (i = 1, 2, …, n), and let D be the dimension of the variable. The distance rij between any two fireflies i and j in the population is:

$$ r_{ij} = \lVert X_i - X_j \rVert = \sqrt{\sum_{k=1}^{D} \left(x_{ik} - x_{jk}\right)^2} \qquad (1) $$

where Xi and Xj are the i-th and j-th fireflies in the population, and xik and xjk are the k-th components of fireflies i and j, respectively.

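The attractiveness βij(rij) between firefly i and firefly j is:

$$ \beta_{ij}(r_{ij}) = \beta_0 \, e^{-\gamma r_{ij}^2} \qquad (2) $$

where β0 is the maximum attractiveness, i.e., the attractiveness at distance rij = 0 (usually β0 = 1), and γ is the light-absorption coefficient.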

If firefly j is brighter than firefly i, firefly i is attracted by firefly j and moves toward it. The position-update formula of firefly i is:

$$ X_i(t+1) = X_i(t) + \beta_{ij}(r_{ij}) \left( X_j(t) - X_i(t) \right) + step \cdot (r - 0.5) \qquad (3) $$

where t is the iteration number, step ∈ [0,1] is the step factor and r is a random number uniformly distributed in [0,1].

The pseudo code of the standard firefly algorithm is shown in Algorithm 1.

| Algorithm 1: Pseudo code of FA |
|---|
| Input: population size n, variable dimension D, maximum number of iterations T |
| Output: the final population |
| Randomly generate n initial fireflies and calculate their fitness values |
| Initialize the parameters (maximum attraction β0 and light-absorption coefficient γ); let t = 0 |
| While t < T do |
| t ← t + 1 |
| for i = 1 to n do |
| for j = 1 to n do |
| If firefly j is brighter than firefly i then |
| Generate a new firefly i according to Equation (3) |
| Evaluate the new solution |
| End if |
| End for |
| End for |
| Rank the fireflies and find the current best |
| End While |
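A runnable Python sketch of Algorithm 1 follows, assuming minimization (a lower objective value means a brighter firefly) and the standard FA distance, attractiveness and position-update formulas. Clipping moved fireflies to the bounds and the parameter defaults are illustrative assumptions; as in Algorithm 1, the brightest firefly is not moved.

```python
import math
import random

def firefly_algorithm(objective, lb, ub, n=20, T=100, beta0=1.0, gamma=1.0,
                      step=0.2, seed=0):
    """Minimal standard FA (Algorithm 1) with the complete attraction model.

    Minimizes `objective`; clipping moved fireflies to [lb, ub] is an assumption.
    """
    rng = random.Random(seed)
    D = len(lb)
    pop = [[rng.uniform(lb[k], ub[k]) for k in range(D)] for _ in range(n)]
    fit = [objective(x) for x in pop]
    for _ in range(T):  # T iterations
        for i in range(n):
            for j in range(n):
                if fit[j] < fit[i]:  # firefly j is brighter than firefly i
                    r2 = sum((pop[i][k] - pop[j][k]) ** 2 for k in range(D))  # r_ij squared
                    beta = beta0 * math.exp(-gamma * r2)  # attractiveness
                    moved = [pop[i][k] + beta * (pop[j][k] - pop[i][k])
                             + step * (rng.random() - 0.5) for k in range(D)]
                    pop[i] = [min(max(v, lb[k]), ub[k]) for k, v in enumerate(moved)]
                    fit[i] = objective(pop[i])  # evaluate the new solution
    # Rank the fireflies: the current best has the lowest objective value.
    best = min(range(n), key=lambda idx: fit[idx])
    return pop[best], fit[best]

sphere = lambda x: sum(v * v for v in x)
print(firefly_algorithm(sphere, [-5.0, -5.0], [5.0, 5.0], n=15, T=30, seed=3))
```

Because a firefly only moves when some other firefly is strictly brighter, the current best never moves, so the best objective value in this sketch can only stay the same or improve.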

2.2. Brief Review of FA

FA has received wide attention because of its simple concept, few parameters and easy implementation. In the past decade, research on improving FA has mainly focused on adaptive parameter adjustment, improved position-update formulas, improved attraction models and hybrid firefly algorithms.

2.2.1. Adaptive Adjustment of Parameters

The parameters in the position-update formula significantly influence the performance of the FA, and adjusting them adaptively can markedly enhance performance. Consequently, many researchers have studied adaptive parameter adjustment and proposed numerous adjustment methods. In 2012, Leandro et al. [21] proposed a firefly algorithm (CFA) that adaptively adjusts the light-absorption coefficient γ and the step factor α. CFA uses the Tinkerbell map to tune γ, which reduces the probability of the FA falling into a local optimum, and decreases α linearly with the number of iterations, which better balances exploration and exploitation. In 2013, Rizk-Allah et al. [22] proposed decreasing the step factor non-linearly as the number of iterations increases, which prevents the random term from disturbing fireflies near the optimal position. Liang et al. [23] proposed an enhanced FA that balances exploration and exploitation by dynamically adjusting the step factor α: in the initial stage of the iteration, α is large and the algorithm's exploration ability is strong; in the later stage, α is small and its exploitation ability is strong. In 2017, Wang et al. [4] proved that the algorithm converges if the step factor α tends to 0 as the number of iterations tends to infinity, and they proposed a new method for dynamically adjusting α. In this method, α decreases rapidly as the iterations proceed and finally approaches zero, which ensures the convergence of FA. In 2018, Banerjee et al. [24] proposed a firefly algorithm (PropFA) based on a new parameter-adjustment mechanism, in which all parameters of the FA are dynamically adjusted according to the value of the objective function to balance exploration and exploitation. Experiments show that PropFA performs better than the compared algorithms. In 2019, Zhang et al. [25] proposed dynamically adjusting the step factor α to improve the firefly algorithm, and their results show better performance than other algorithms. In 2020, Amit et al. [26] proposed an improved firefly algorithm in which the initial attractiveness β0 changes dynamically according to environmental factors, achieving a better balance between exploration and exploitation. However, this algorithm does not address the case in which the attractiveness β approaches 0 when two mutually attracting fireflies are far apart, which degrades its performance.
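To make the difference between linear and non-linear step-factor reduction concrete, the sketch below compares two generic schedules. They are illustrative assumptions, not the exact formulas of CFA [21], Rizk-Allah et al. [22] or the other cited works; `linear_step`, `geometric_step`, `a0` and `a_end` are hypothetical names and values.

```python
def linear_step(t, T, a0=0.5, a_end=0.0):
    """Step factor decreasing linearly from a0 (t = 0) to a_end (t = T)."""
    return a0 - (a0 - a_end) * t / T

def geometric_step(t, T, a0=0.5, a_end=1e-4):
    """Step factor decaying geometrically from a0 to a_end: large drops early, small late."""
    return a0 * (a_end / a0) ** (t / T)

T = 100
for t in (0, 25, 50, 75, 100):
    print(t, round(linear_step(t, T), 4), round(geometric_step(t, T), 6))
```

The geometric schedule shrinks α quickly in the early iterations and then keeps it small, which matches the behavior described for the non-linear and rapidly decreasing schedules above, whereas the linear schedule keeps α relatively large for longer.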

2.2.2. Improved Location-Update Method

Position update is a crucial component of the FA and strongly affects its optimization ability; improving the position-update procedure can increase convergence speed and solution accuracy. In 2016, Wang et al. [19] proposed an improved firefly algorithm that replaces full attraction with random attraction, which greatly reduces the number of attractions and the TC of the algorithm. However, the RAM may slow convergence and lower solution quality because it performs too few attractions. In 2018, Zhan et al. [27] proposed an improved firefly algorithm that introduces accelerated attractiveness and an evading strategy into the position-update formula. Accelerated attractiveness makes the current firefly converge to the vicinity of the best firefly faster, and the evading strategy keeps the current firefly away from less bright fireflies. Together, the two operations improve convergence speed and reduce the probability of falling into a local optimum. In 2019, Wang et al. [28] proposed a firefly algorithm based on gender difference and designed separate position-update formulas for fireflies of different genders. Male fireflies are attracted by two randomly selected females for global search, and female fireflies move to the vicinity of the best male firefly for local search, which better balances exploration and exploitation. The problem with this algorithm's attractiveness formula is that when two fireflies are far apart, the relative attractiveness approaches 0, which makes the update formula ineffective. In 2020, Wu et al. [29] proposed an adaptive logarithmic spiral-Levy FA (ADIFA) with two position-update formulas: the first introduces Levy flight, which improves the algorithm's exploration ability, and the second introduces a logarithmic spiral path, which improves its exploitation ability. An adaptive switch is also designed so that the algorithm can change between exploration and exploitation modes. Experimental results show that ADIFA performs much better than three other firefly algorithms.
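Levy flight steps, such as those used in ADIFA's first update formula, are commonly generated with Mantegna's algorithm. The sketch below shows only that generator; ADIFA's actual update formula and its scaling of the step are not reproduced here, and the exponent β = 1.5 is a common but assumed choice. The function name `levy_step` is hypothetical.

```python
import math
import random

def levy_step(D, beta=1.5, rng=random):
    """Draw a D-dimensional Levy-flight step with Mantegna's algorithm."""
    sigma_u = (math.gamma(1 + beta) * math.sin(math.pi * beta / 2)
               / (math.gamma((1 + beta) / 2) * beta * 2 ** ((beta - 1) / 2))) ** (1 / beta)
    step = []
    for _ in range(D):
        u = rng.gauss(0.0, sigma_u)  # numerator ~ N(0, sigma_u^2)
        v = rng.gauss(0.0, 1.0)      # denominator ~ N(0, 1)
        # Guard against an (extremely unlikely) zero denominator.
        step.append(u / max(abs(v), 1e-12) ** (1 / beta))
    return step

print(levy_step(5, rng=random.Random(0)))
```

Most draws are small, but occasional very large steps occur because the distribution is heavy-tailed; these long jumps are what give Levy flight its exploration ability.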

2.2.3. Improvement in Attraction Model

The attraction model is an important part of the FA and has a great impact on its performance. In 2008, the standard FA proposed by Yang et al. [4] used the CAM, in which each firefly can be attracted by every brighter firefly in the population in each iteration. In 2016, Wang et al. [19] proposed a random-attraction firefly algorithm to address the tendency of the CAM to fall into local optima because of too many attractions. In each iteration of this algorithm, the i-th firefly (i = 1, 2, …, n, where n is the population size) is compared in brightness only with one randomly selected j-th firefly (j = 1, 2, …, n, j ≠ i). If firefly j is brighter than firefly i, firefly i is attracted by firefly j; otherwise, firefly i is not attracted. Compared with the CAM, the RAM performs far fewer attractions, and too few attractions slow convergence. In 2017, Wang et al. [30] proposed a firefly algorithm with neighborhood attraction, in which each firefly can be attracted by the brighter fireflies in its k-neighborhood. The NAM performs a moderate number of attractions, which can improve convergence speed, but its many brightness comparisons lead to high TC. In 2021, Cheng et al. [20] proposed the GAM, which sorts the fireflies by fitness value in ascending order and divides them into n/2 groups. In each group, the brighter firefly attracts the less bright one, and the best firefly in the population also attracts the brighter firefly of each group. Although the numbers of attractions and brightness comparisons in the GAM are moderate, each firefly is always attracted by the same fixed firefly, so the algorithm easily falls into a local optimum.
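The trade-off between attractions and brightness comparisons can be made concrete by counting both for one iteration. The sketch assumes minimization and, for the NAM, a ring-shaped k-neighborhood over firefly indices (i - k, …, i + k); the GAM is omitted. The function name `count_attractions` is hypothetical.

```python
import random

def count_attractions(fit, model, k=2, rng=random):
    """Count (comparisons, attractions) for one iteration of an attraction model.

    'CAM': complete model; 'RAM': random model; 'NAM': neighborhood model,
    assuming a ring-shaped k-neighborhood over firefly indices.
    """
    n = len(fit)
    comparisons = attractions = 0
    for i in range(n):
        if model == "CAM":
            partners = [j for j in range(n) if j != i]
        elif model == "RAM":
            partners = [rng.choice([j for j in range(n) if j != i])]
        elif model == "NAM":
            partners = [(i + d) % n for d in range(-k, k + 1) if d != 0]
        else:
            raise ValueError(f"unknown model: {model}")
        for j in partners:
            comparisons += 1
            if fit[j] < fit[i]:  # j is brighter (minimization): i is attracted by j
                attractions += 1
    return comparisons, attractions

fit = [float(v) for v in random.Random(0).sample(range(100), 10)]  # distinct values
for model in ("CAM", "RAM", "NAM"):
    print(model, count_attractions(fit, model, rng=random.Random(1)))
```

With distinct fitness values, the CAM always needs n(n - 1) comparisons and performs n(n - 1)/2 attractions, while the RAM needs only n comparisons and performs about n/2 attractions on average.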

2.2.4. Hybrid Firefly Algorithm

A single intelligent optimization method is constrained by its structure and associated conditions, so it easily falls into local optima and produces subpar solutions. To exploit the strengths of different methods, hybrid optimization algorithms combine two or more intelligent optimization algorithms or optimization ideas, whose complementary advantages can improve performance. In 2013, Huang et al. [31] proposed a hybrid firefly algorithm (HFA) combined with a local random search method, which effectively improved the local search ability of the algorithm; HFA was applied to reduce the jacket loss of downhole transmission with good results. In 2016, Verma et al. [32] proposed a hybrid firefly algorithm based on opposition-based (reverse) learning (ODFA), which uses reverse learning to optimize the positions of the initial solutions and improve the quality of the initial population. In 2017, Dash et al. [33] proposed a hybrid meta-heuristic that combines the firefly algorithm and the differential evolution algorithm, taking full advantage of the strengths of both and better balancing exploration and exploitation. In 2018, Aydilek et al. [34] proposed a hybrid of the firefly algorithm and particle swarm optimization (HFPSO), which uses the fast convergence of PSO for global search and the fine-tuning ability of FA for local search to balance exploration and exploitation. Experimental results on the CEC 2015 and CEC 2017 test function sets show that HFPSO is significantly better than the compared algorithms. In 2019, Li et al. [35] proposed a hybrid firefly algorithm that embeds the cross-entropy method into the firefly algorithm. The method uses adaptive smoothing and co-evolution to absorb the robustness, ergodicity and adaptability of the cross-entropy method, which enhances global search ability, keeps the algorithm from falling into local optima and improves convergence speed. In 2020, Wang et al. [36] proposed a hybrid firefly algorithm that introduces a learning strategy containing Cauchy mutation. In each iteration, the best firefly performs L learning steps to better balance exploration and exploitation. However, a large value of L greatly increases the TC of the algorithm and can cause it to fall into a local optimum.

3. Proposed Methods

3.1. Population Initialization

3.1.1. Initial Population Generation Method Based on Square-Root Sequence Method

The good point set theory was first proposed by Hua Luogeng and Wang Yuan in the book "The Application of Number Theory in Approximate Analysis" [37]. Point sets generated by this theory are uniformly distributed in the unit cube. Using good point sets therefore produces an initial population that is uniformly distributed in the search space, which helps ensure the diversity of the initial population and reduces the possibility of the algorithm falling into a local optimum. The steps for generating the initial population with the square-root sequence method are as follows:

Step 1: use the square-root sequence method to generate the first good point in the unit cube, r1 = ({√p1}, {√p2}, …, {√pD}), i.e., r1j = {√pj}, j = 1, 2, …, D, where p1, p2, …, pD are D distinct prime numbers in increasing order and {•} denotes the fractional part.

Step 2: based on r1, generate a good point set Pn = (r1, r2, …, rn) containing n good points according to Equation (4):

$$ r_i = \left( \{ i\sqrt{p_1} \}, \{ i\sqrt{p_2} \}, \ldots, \{ i\sqrt{p_D} \} \right), \quad i = 1, 2, \ldots, n \qquad (4) $$

where n is the population size, pj is the j-th of the D distinct primes in increasing order, D is the dimension of the problem and {•} is the fractional part.

Step 3: map the good point set generated by the square-root sequence method to the search space to obtain the initial population X. The mapping is:

$$ X_i = L_b + r_i \otimes \left( U_b - L_b \right) \qquad (5) $$

where Xi is the i-th individual in the population, Ub and Lb are the upper and lower bounds of the search space, ri is the i-th good point in the good point set Pn and ⊗ denotes the element-wise product of two vectors.

The method of initial population generation is shown in Algorithm 2.

| Algorithm 2: Initial population generated based on the square-root sequence good point set method |
|---|
| Input: population size n, variable dimension D |
| Output: the initial population |
| Produce the first good point r1 |
| Generate the good point set Pn according to Equation (4) |
| for i = 1 to n do |
| Generate the individual Xi of the initial population according to Equation (5) |
| end for |
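A Python sketch of Algorithm 2 follows. It assumes that the D primes are the first D primes (the text only says D distinct primes in increasing order) and, consistent with the standard square-root-sequence construction, that the i-th good point takes the fractional parts of i·√pj before being mapped component-wise to [Lb, Ub]. The helper names `first_primes` and `good_point_population` are hypothetical.

```python
import math

def first_primes(D):
    """Return the first D prime numbers in increasing order (trial division)."""
    primes, c = [], 2
    while len(primes) < D:
        if all(c % p for p in primes if p * p <= c):
            primes.append(c)
        c += 1
    return primes

def good_point_population(n, lb, ub):
    """Initial population from the square-root sequence good point set."""
    D = len(lb)
    roots = [math.sqrt(p) for p in first_primes(D)]
    pop = []
    for i in range(1, n + 1):
        r_i = [(i * s) % 1.0 for s in roots]  # fractional parts {i * sqrt(p_j)}
        pop.append([lo + r * (hi - lo) for lo, r, hi in zip(lb, r_i, ub)])  # map to [lb, ub]
    return pop

print(good_point_population(3, [0.0, 0.0], [1.0, 1.0]))
```

Unlike random sampling, this construction is deterministic: the same n and D always give the same population.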

3.1.2. Compare Different Population Initialization Methods

Commonly used population initialization methods include random initialization, chaotic-map-based initialization, opposition-based (reverse) learning initialization and good-point-set-based initialization. Random initialization is simple in principle and easy to implement; many studies [19,38] use it to generate the initial population with good results. Chaotic maps are random-like, ergodic and regular, and many researchers use them to generate initial populations. In 2005, Yu et al. [39] were the first to use a chaotic map to generate the initial population of a GA, which effectively improved the optimization accuracy of the algorithm. Since then, many researchers have used chaotic maps to generate initial populations [40,41,42]. There are more than ten kinds of chaotic maps; the ones commonly used for initialization are the logistic map and the tent map. The initial population generated by the logistic map is concentrated at both ends of the search space, with fewer individuals in the middle region, so if the optimal solution lies in the middle region, convergence slows. The tent map has better ergodicity and uniformity than the logistic map, so it is often used to generate the initial population. In 2005, Tizhoosh et al. [41] showed that a current solution (or an elite individual) has a 50% probability of being farther from the optimal solution than its opposite (reverse) solution. In 2008, Rahnamayan et al. [40] used opposition-based initialization to generate the initial population and achieved good results. Good point set theory is a point set generation method proposed by Professor Hua Luogeng [37], and it has been proved mathematically that the point sets it generates are uniformly distributed in the unit cube. There are three main ways to generate good point sets in the unit cube: the exponential sequence method, the cyclotomic field (split-circle domain) method and the square-root sequence method. Because a computer stores only a limited number of digits after the decimal point, when the dimension exceeds 35 the fractional part of the exponential sequence term e^k computed in floating point becomes 0, and the exponential sequence method fails. Therefore, the initial population can be generated with the cyclotomic field method or the square-root sequence method.
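The failure of the exponential sequence method can be reproduced in IEEE 754 double precision. Every double at or above 2^53 (about 9.0 × 10^15) is an integer, and e^37 ≈ 1.2 × 10^16 already exceeds this, so its computed fractional part is exactly 0; just below that, e^36 is stored with a spacing of 0.5, so its fractional part carries almost no information. The helper name `exp_sequence_fraction` is hypothetical.

```python
import math

def exp_sequence_fraction(k):
    """Fractional part {e^k} as computed in IEEE 754 double precision."""
    return math.exp(k) % 1.0

for k in (1, 10, 20, 30, 35, 37, 40):
    print(k, exp_sequence_fraction(k))
```

The square-root sequence does not suffer from this problem because √pj stays small for the primes needed in practical dimensions, so its fractional digits remain accurate.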

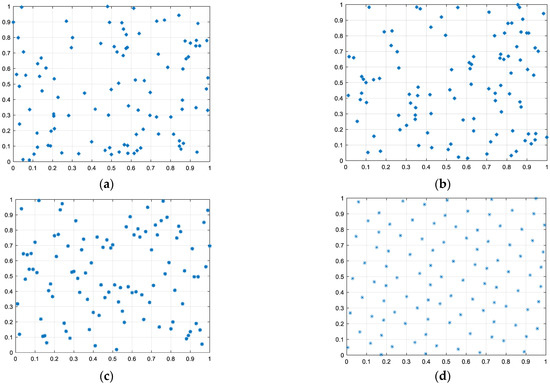

The quality of an initial population can be judged by how uniformly its individuals are distributed in the search space and by its diversity. To illustrate the uniformity of initial populations generated by different methods, populations of size 100 with variable dimension 2 are generated in the interval [0,1] using random initialization, tent-map-based initialization, opposition-based initialization and square-root-sequence-based initialization. The resulting initial populations are shown in Figure 1.

Figure 1.

Schematic diagram of initial population generated by different methods. (a) Random initialization method; (b) initialization method based on Tent mapping; (c) initialization method based on reverse learning; (d) initialization method based on square-root sequence.

Figure 1 shows that the initial populations generated by random initialization, tent-map-based initialization and opposition-based initialization are unevenly distributed and contain clusters. If the optimal solution is far from a clustered region, the algorithm converges slowly and easily falls into a local optimum. The initial population generated by the square-root sequence method is spread over the whole search space without clustering. Therefore, the square-root sequence method is used to generate the initial population.
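To reproduce a comparison like Figure 1, the three baseline initializers can be sketched as follows in [0, 1]^D. The tent-map parameter mu = 1.99 and the objective used to rank opposite points are assumptions (opposition-based initialization needs a fitness function to decide which of X and 1 - X to keep); the function names are hypothetical.

```python
import random

def random_init(n, D, rng):
    """Uniform random initialization in [0, 1]^D."""
    return [[rng.random() for _ in range(D)] for _ in range(n)]

def tent_init(n, D, rng, mu=1.99):
    """Tent-map initialization in [0, 1]^D.

    mu slightly below 2 is an assumption: with mu = 2 exactly, each step is an
    exact binary shift, so floating-point sequences collapse to 0 within
    roughly 50 iterations.
    """
    x = [rng.random() for _ in range(D)]
    pop = []
    for _ in range(n):
        x = [mu * v if v < 0.5 else mu * (1.0 - v) for v in x]
        pop.append(list(x))
    return pop

def opposition_init(n, D, rng, objective):
    """Opposition-based initialization: keep the n best of X and its opposite 1 - X."""
    base = random_init(n, D, rng)
    candidates = base + [[1.0 - v for v in x] for x in base]
    return sorted(candidates, key=objective)[:n]
```

Plotting the three outputs for n = 100 and D = 2 gives scatter plots of the kind shown in panels (a) to (c) of Figure 1; the exact pictures depend on the random seed.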

Population diversity has a significant effect on algorithm performance: a population with good diversity makes the algorithm less prone to premature convergence. To compare quantitatively the diversity of the initial populations created by the various initialization methods, population diversity is calculated as:

where n is the population size, Xi is the i-th firefly, Xj is the j-th firefly in the population and d(Xi, Xj) is the Euclidean distance between the i-th and j-th fireflies, calculated as follows:

$$d(X_i, X_j) = \sqrt{\sum_{k=1}^{D} (x_{ik} - x_{jk})^2}$$

where D is the dimension of the variable, xik is the k-th component of the i-th firefly and xjk is the k-th component of the j-th firefly.
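The distance d(Xi, Xj) and a diversity measure built from it can be sketched as follows. The exact normalization of Diversity in Equation (6) is not reproduced in this section, so the sketch assumes that Diversity is the mean Euclidean distance over all distinct pairs of fireflies; the example relies only on the stated property that Diversity grows with the pairwise distances. The function names are hypothetical.

```python
import math
from itertools import combinations

def euclidean(x_i, x_j):
    """d(X_i, X_j) = sqrt(sum_k (x_ik - x_jk)^2) over the D components."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(x_i, x_j)))

def diversity(population):
    """ASSUMED form of Diversity: mean Euclidean distance over all pairs i < j."""
    pairs = list(combinations(population, 2))
    return sum(euclidean(a, b) for a, b in pairs) / len(pairs)

# A tight cluster versus a population spread across [0, 1]^2
clustered = [[0.50, 0.50], [0.52, 0.49], [0.48, 0.51]]
spread = [[0.10, 0.10], [0.90, 0.20], [0.50, 0.90]]
```

Under this assumed normalization, `diversity(spread)` is far larger than `diversity(clustered)`, which is the ordering used to compare initialization methods in Table 1.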

In Equation (6), Diversity increases with the distances between individual fireflies: the larger these distances, the larger the value of Diversity, and the smaller the distances, the smaller the value. A larger Diversity indicates a more uniform distribution of individuals in the population and a lower risk of the algorithm falling into a local optimum; a smaller Diversity indicates that individuals are likely clustered and that the algorithm falls into a local optimum more easily.

The diversity of the initial populations generated by the four methods above is calculated according to Equation (6), and the results are given in Table 1.

Table 1.

Population diversity.

As Table 1 shows, square-root-sequence-based initialization produces the most diverse initial population, followed by tent-mapping-based and reverse-learning-based initialization, while random initialization produces the least diverse population.

In conclusion, in terms of both population diversity and uniformity in the search space, the square-root-sequence-based initialization method produces the best initial population. Therefore, it is chosen as the population initialization method.

3.2. Probability Attraction Model

3.2.1. Common Attraction Model

In the existing literature, the commonly used attraction models are the complete attraction model (CAM), random attraction model (RAM), neighborhood attraction model (NAM) and grouping attraction model (GAM). To count the attraction times and brightness comparison times of the various attraction models, let the population size of FA be n and assume that the objective function is to be minimized.

In the CAM, each firefly is attracted by every brighter firefly in the population at each iteration. Sort the individuals in the population by OFV in ascending order. Since each firefly is attracted only by brighter fireflies, the first (best) firefly is not attracted and its number of attractions is 0; the second firefly is attracted only by the first firefly, so its number of attractions is 1. By analogy, the last (worst) firefly is attracted by all other fireflies, so its number of attractions is n − 1. Therefore, the total attraction times T1 of the CAM are:

$$T_1 = 0 + 1 + \cdots + (n - 1) = \frac{n(n-1)}{2}$$

According to Equation (8), the total attraction times of the CAM are T1 = n(n − 1)/2, so the average number of attractions per firefly is t1 = T1/n = (n − 1)/2.

In an FA using the CAM, each firefly must determine whether it is attracted by each of the other fireflies, so all n fireflies take part in brightness comparisons. Each firefly compares its brightness with the other n − 1 fireflies, so the total brightness comparison times of the n fireflies are T2 = n(n − 1), and the average brightness comparison times per firefly are t2 = n − 1.
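The CAM counts can be checked by brute force. The sketch below is illustrative only: it indexes fireflies 0..n−1 in ascending OFV order (index 0 is the brightest), assumes distinct objective values, and counts one comparison per ordered pair and one attraction per brighter partner; the function name `cam_counts` is hypothetical.

```python
def cam_counts(n):
    """Return (T1, T2) for the complete attraction model with n sorted fireflies."""
    attractions = 0
    comparisons = 0
    for i in range(n):
        for j in range(n):
            if i == j:
                continue
            comparisons += 1        # firefly i checks firefly j
            if j < i:               # j has a smaller OFV, i.e. is brighter
                attractions += 1    # so firefly i is attracted by firefly j
    return attractions, comparisons

T1, T2 = cam_counts(10)
```

For n = 10 this gives T1 = 45 = n(n − 1)/2 and T2 = 90 = n(n − 1), in line with the closed forms above.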

In the NAM [30], each firefly is attracted during iteration by the brighter fireflies in its k-neighborhood. The k-neighborhood of Xi consists of 2k + 1 fireflies {Xi−k, …, Xi, …, Xi+k}, where k is an integer and 1 ≤ k ≤ (n − 1)/2; in reference [42], k is much smaller than (n − 1)/2. To analyze the attraction times in each iteration, the fireflies are first sorted by OFV in ascending order, so that the first firefly is the brightest and the n-th firefly is the darkest. Second, all fireflies are linked into a ring topology in index order 1, 2, …, n. The first k fireflies are attracted 0, 1, …, k − 1 times, the next n − 2k fireflies are each attracted k times, and the last k fireflies, whose neighborhoods wrap around to the brightest fireflies, are attracted k + 1, k + 2, …, 2k times. The total attraction times of all fireflies are therefore T1 = (0 + 1 + ⋯ + (k − 1)) + k(n − 2k) + ((k + 1) + ⋯ + 2k) = kn, and the average attraction times per firefly are t1 = T1/n = k. Each firefly compares its brightness with the other 2k fireflies in its k-neighborhood, so the total brightness comparison times are T2 = 2kn and the brightness comparison times per firefly are t2 = 2k.
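The NAM count T1 = kn can likewise be verified on a ring. This sketch assumes sorted 0-based indices (0 is the brightest), ring wraparound via the modulo operator and 1 ≤ k ≤ (n − 1)/2, so that the 2k neighbors of each firefly are distinct; `nam_counts` is a hypothetical name.

```python
def nam_counts(n, k):
    """Return (T1, T2) for the neighborhood attraction model on a ring of n fireflies."""
    if not 1 <= k <= (n - 1) // 2:
        raise ValueError("k must satisfy 1 <= k <= (n - 1) / 2")
    attractions = 0
    comparisons = 0
    for i in range(n):
        for offset in range(1, k + 1):
            # left and right neighbors at this ring distance
            for j in ((i - offset) % n, (i + offset) % n):
                comparisons += 1
                if j < i:               # neighbor j is brighter
                    attractions += 1    # firefly i is attracted by j
    return attractions, comparisons
```

Each neighboring pair on the ring contributes exactly one attraction (the darker firefly toward the brighter one), which is why the total is kn no matter where the wraparound falls.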

In the RAM [19], each firefly compares its brightness with one randomly selected firefly in each iteration. The firefly moves toward the selected firefly only if the selected firefly is brighter; otherwise, it does not move. Therefore, the maximum total attraction times of all fireflies are T1 = n, and the corresponding average attraction times per firefly are t1 = 1. Since each firefly is compared with exactly one randomly selected firefly, each firefly performs one brightness comparison; for a population of size n, the total number of brightness comparisons is T2 = n and the average number per firefly is t2 = 1.
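One RAM iteration can be simulated to confirm that the comparison count is exactly n while the attraction count never exceeds n. In this illustrative sketch, `ofv` is a hypothetical list of objective values (minimization) and `ram_counts` a hypothetical name.

```python
import random

def ram_counts(ofv, rng):
    """One RAM iteration; ofv[i] is firefly i's objective value (minimization)."""
    n = len(ofv)
    attractions = 0
    comparisons = 0
    for i in range(n):
        # pick one other firefly uniformly at random
        j = rng.choice([idx for idx in range(n) if idx != i])
        comparisons += 1
        if ofv[j] < ofv[i]:      # j is brighter, so i moves toward j
            attractions += 1
    return attractions, comparisons

gen = random.Random(0)
ofv = [gen.random() for _ in range(50)]
a, c = ram_counts(ofv, random.Random(1))
```

Unlike the CAM and NAM, the attraction count here is random from one iteration to the next; only its upper bound is fixed.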

In the GAM [20], the fireflies are divided into groups of two. In each iteration, the darker firefly in each group is attracted by the brighter one, and the brighter firefly in each group is in turn attracted by the brightest firefly in the population. Therefore, the total attraction times of all fireflies are T1 = n − 1, and the average attraction times per firefly are t1 = T1/n = (n − 1)/n. In the GAM, the brightness of the two fireflies in each group must be compared; there are n/2 groups, which require n/2 comparisons. Finding the brightest of the n/2 group winners requires a further n/2 − 1 comparisons. Therefore, a total of T2 = n − 1 comparisons is required, and the average brightness comparison times per firefly are t2 = (n − 1)/n.
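The GAM counts can be reproduced in the same way. The pairing used below (indices 0 and 1, 2 and 3, and so on) is a hypothetical choice for illustration; the totals T1 = T2 = n − 1 do not depend on how the pairs are formed, as long as n is even.

```python
def gam_counts(ofv):
    """Return (T1, T2) for one GAM iteration; ofv holds objective values (minimization)."""
    n = len(ofv)
    if n % 2:
        raise ValueError("this sketch of the GAM needs an even population size")
    attractions = 0
    comparisons = 0
    winners = []
    for a in range(0, n, 2):
        b = a + 1
        comparisons += 1                          # compare the two group members
        winners.append(a if ofv[a] < ofv[b] else b)
        attractions += 1                          # the darker member is attracted
    best = winners[0]
    for w in winners[1:]:                         # n/2 - 1 comparisons among winners
        comparisons += 1
        if ofv[w] < ofv[best]:
            best = w
    attractions += len(winners) - 1               # other winners attracted by the best
    return attractions, comparisons
```

For example, `gam_counts([0.3, 0.1, 0.7, 0.2])` performs 2 + 1 = 3 comparisons and 2 + 1 = 3 attractions, matching n − 1 for n = 4.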

The more attraction times there are, the worse the population diversity, the higher the TC and the more easily the algorithm falls into a local optimum. In addition, different attraction models require different numbers of brightness comparisons, and the algorithm's TC increases with the number of fitness comparisons. For a good attraction model, the attraction times and brightness comparison times should be moderate, and the selection of bright fireflies should be adaptive, or the selected fireflies should guide the attracted fireflies well. A new attraction model, the probability attraction model, is therefore developed to keep the attraction times and brightness comparison times moderate and to make the selection of bright attracting fireflies adaptive.

3.2.2. Probability Attraction Model

To describe the probability attraction model, suppose the population size is n and the objective function of the optimization problem is to be minimized. If the objective function is to be maximized, it can be converted into a minimization problem via max P(X,M) = −min[−P(X,M)]. To make the model adaptive in choosing attracting fireflies, and to ensure that the attracting fireflies guide the attracted fireflies well, all fireflies in the population are sorted by OFV and the selection probability of each firefly is calculated. When attracting fireflies are chosen, fireflies with a higher selection probability are more likely to be selected. In the GAM, the attracting firefly of each firefly is fixed, whereas in the probability attraction model, whether a firefly is selected as an attracting firefly is determined by its selection probability. Therefore, the probability attraction model is adaptive in choosing attracting fireflies, and the selected attracting fireflies guide the attracted fireflies well. The specific steps of the probability attraction model are as follows:

(1) Order fireflies according to the OFV from small to large.

(2) Calculate the selection probability of each firefly after sorting. The calculation formula for selection probability is:

where Pk is the selection probability of the k-th individual in the population, λ is a constant in the range [0.01, 0.3] (here λ = 0.15) and fit(k) is the fitness value of the k-th firefly.

(3) From the selection probability Pk (k = 1, 2, …, n − 1) of each firefly, calculate its cumulative probability PPk (k = 1, 2, …, n − 1) as follows:

$$PP_k = \sum_{j=1}^{k} P_j$$

(4) Select an attracting firefly for the n-th firefly in the population: generate a random number rr uniformly distributed in [0,1]; if rr satisfies $PP_{k-1} \le rr \le PP_k$ (k = 2, 3, …, n − 1), the k-th firefly is selected as the attracting firefly. Selecting an attracting firefly for the (n − 1)-th firefly likewise requires generating a random number rr, and if rr satisfies $PP_{k-1} \le rr \le PP_k$ (k = 2, 3, …, n − 1), the k-th firefly is selected as the attracting firefly. By analogy, attracting fireflies are selected for the (n − 2)-th, (n − 3)-th, …, 2nd fireflies. The first firefly is the best; it is not attracted by any firefly and only moves randomly.
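Steps (2) to (4) amount to a roulette-wheel selection over the sorted population, which can be sketched as below. The formula for Pk is not reproduced in this section, so the sketch uses a hypothetical stand-in, a rank-based geometric probability proportional to λ(1 − λ)^(k−1) with λ = 0.15, normalized to sum to 1. It also assumes that only fireflies brighter than firefly i are eligible attractors, with their cumulative probabilities rescaled accordingly. Function names are hypothetical.

```python
import random
from bisect import bisect_left
from itertools import accumulate

def selection_probabilities(n, lam=0.15):
    """HYPOTHETICAL stand-in for P_k (k = 1..n-1): geometric in rank, normalized."""
    raw = [lam * (1.0 - lam) ** (k - 1) for k in range(1, n)]
    total = sum(raw)
    return [p / total for p in raw]

def choose_attractor(i, probs, rng):
    """Roulette-wheel choice of an attracting firefly for sorted index i.

    Indices are 0-based after sorting by OFV (0 = best). Only the brighter
    fireflies 0..i-1 are eligible (an assumption); the best has no attractor.
    """
    if i == 0:
        return None
    cumulative = list(accumulate(probs[:i]))   # PP over the eligible fireflies
    rr = rng.random() * cumulative[-1]         # uniform on [0, last PP)
    return bisect_left(cumulative, rr)         # first k with rr <= PP_k

rng = random.Random(42)
probs = selection_probabilities(30)
attractors = {i: choose_attractor(i, probs, rng) for i in range(30)}
```

With this stand-in, the best firefly is chosen as attractor for the worst firefly in roughly one draw out of six, whereas the second-worst firefly is chosen only about once in several hundred draws, which illustrates the "brighter fireflies are more likely to attract" behavior described above.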

In summary, when the population size is n, the total attraction times are T1 = n − 1 and the average attraction times per firefly are t1 = (n − 1)/n. The total brightness comparison times are T2 = n(n − 1)/2, and the average brightness comparison times per firefly are t2 = (n − 1)/2. The attraction times and brightness comparison times of the five attraction models are shown in Table 2.

Table 2.

Numbers of attractions and brightness comparisons of the five attraction models.

In Table 2, the total attraction times of the probability attraction model are fewer than those of the CAM and NAM and equal to those of the GAM. Compared with the RAM, if the n randomly selected fireflies are all brighter, the probability attraction model has fewer total attraction times than the RAM; otherwise, its total attraction times are greater than or equal to those of the RAM. The average attraction times per firefly compare in the same way as the total attraction times. In addition, the brightness comparison times of the probability attraction model are equal to those of the GAM and fewer than those of the CAM, RAM and NAM.

To sum up, among the five attraction models, the probability attraction model and the GAM have moderate attraction times and the fewest brightness comparison times. In the GAM, the attraction relationships between fireflies are fixed: the last n/2 fireflies are always attracted by the first n/2 fireflies, and the 2nd to (n/2)-th fireflies are always attracted by the first firefly. In the probability attraction model, whether a darker firefly is attracted by a given brighter firefly is determined by the selection probability of the brighter firefly. The choice of attracting fireflies is therefore adaptive and favors brighter fireflies, which guide the attracted fireflies better.

Therefore, the probability attraction model avoids both the premature convergence caused by too many attractions and the slow convergence caused by too few. Because attracting fireflies are chosen according to their selection probabilities, the selection strategy is adaptive and guides the attracted fireflies well. In addition, because the probability attraction model requires fewer brightness comparisons, it reduces the TC of the algorithm.

3.3. Improved Location-Update Method

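In the firefly algorithm in the existing literature [6,43,44], the position-update formula and the relative attraction are calculated as:

$$x_j(t+1) = x_j(t) + \beta_{ij}\,\bigl(x_i(t) - x_j(t)\bigr) + \alpha\,\varepsilon_j$$

$$\beta_{ij} = \beta_0\, e^{-\gamma r_{ij}^{2}}$$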

where the brightness of xi is higher than that of xj, βij is the relative attraction between firefly i and firefly j, β0 is the maximum attraction, γ is the light-absorption coefficient, rij is the distance between firefly i and firefly j, α is a constant and εj is a random number drawn from a Gaussian distribution. For most problems, β0 = 1, γ ∈ [0.01, 100] and α ∈ [0, 1] can be used.
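The decay of the relative attraction with distance can be tabulated directly. This sketch assumes the standard firefly-algorithm form βij = β0·exp(−γ·rij²) with β0 = 1 and γ = 1, the setting used for Figure 2; `relative_attraction` is a hypothetical name.

```python
import math

def relative_attraction(r, beta0=1.0, gamma=1.0):
    """Standard FA relative attraction: beta0 * exp(-gamma * r^2)."""
    return beta0 * math.exp(-gamma * r ** 2)

for r in (0.0, 1.0, 2.0, 3.0, 10.0):
    print(f"r = {r:4.1f}  beta = {relative_attraction(r):.3e}")
```

With γ = 1, β is about 0.37 at r = 1, about 0.018 at r = 2 and about 1.2 × 10⁻⁴ at r = 3, which is the near-zero attraction described for rij ≥ 3; at r = 10 it is below 10⁻⁴³, so for wide variable ranges the attraction term is effectively switched off at initialization.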

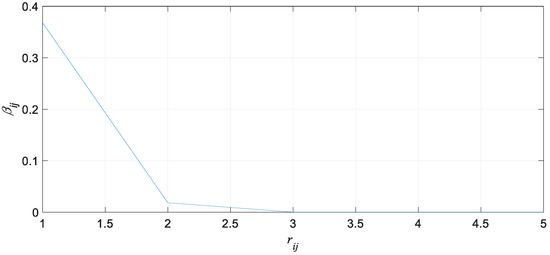

When γ = 1, the relative attraction βij varies with the distance rij as shown in Figure 2.

Figure 2.

Variation curve of relative attraction βij.

In Figure 2, the relative attraction βij decreases as the distance rij between firefly i and firefly j increases. When rij ≥ 3, βij(rij) approaches 0. At this point, the attraction term in the position-update formula approaches 0 and has no effect, so the position-update formula provides no guidance and the algorithm degenerates into a random search with slow convergence. At the same time, because the perturbation term αεj is relatively small, the global search ability of the algorithm is weak and it easily falls into a local optimum. In addition, if the variables of the problem have a large range (for example, −100 ≤ xik ≤ 100, i = 1, 2, …, n, k = 1, 2, …, D), the fireflies are relatively far apart after initialization, βij(rij) may approach 0 and the attraction term does not work. As the iterations proceed, the distances between fireflies gradually decrease and βij(rij) gradually increases. Once rij < 3, the attraction term takes effect and grows with βij(rij), so the guiding effect of the brighter firefly in the position-update formula strengthens and the convergence speed of the algorithm gradually improves. To solve the above problems, a new position-update method is proposed, which proceeds as follows:

(1) Sort the fireflies according to their OFV from small to large.

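(2) The fireflies in the population update their positions as follows:

$$X_i(t+1) = X_i(t) + \beta R\,\bigl(X_k(t) - X_i(t)\bigr) + (1 - R)\,\mathrm{rand}(1,D) \oplus \bigl(X_{best}(t) - X_i(t)\bigr) + \alpha\left(\varepsilon - \tfrac{1}{2}\right) \qquad (14)$$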

where t is the iteration number, Xi(t) and Xi(t + 1) are the positions of the i-th firefly in generations t and t + 1, Xbest(t) is the position of the best firefly in generation t, rand(1,D) is a D-dimensional random vector uniformly distributed in [0,1], Xk(t) is the position of the firefly selected by the probability attraction model in generation t, β is the attraction between firefly Xi and firefly Xk, R is the adjustment coefficient, α is the dynamic step size, ε is a D-dimensional random vector uniformly distributed in [0,1] and ⊕ denotes element-wise multiplication of two vectors.

In Equation (14), βR(Xk(t) − Xi(t)) is called the attraction term, (1 − R) rand(1, D) ⊕ (Xbest(t) − Xi(t)) is called the guiding term and α(ε − 1/2) is called the stochastic term. β and R are calculated as follows:

where βmax and βmin are the maximum and minimum attraction (both constants), γ is the light-absorption coefficient, usually taken as 1, runtime is the elapsed time at the current iteration and Maxtime is the maximum running time of the algorithm.
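The exact expressions for β and R (Equations (16) and (17)) are not reproduced in this section, so the sketch below uses stand-ins chosen only to match the behavior described for them: β is assumed to be βmin + (βmax − βmin)·exp(−γr²), which never drops below βmin and equals βmax at distance 0, and R is assumed to fall linearly from 1 to 0 as runtime approaches Maxtime. The values βmin = 0.2 and βmax = 1.0 are hypothetical defaults.

```python
import math

def improved_beta(r, beta_min=0.2, beta_max=1.0, gamma=1.0):
    """ASSUMED form of Equation (16): beta_min + (beta_max - beta_min) * exp(-gamma * r^2)."""
    return beta_min + (beta_max - beta_min) * math.exp(-gamma * r ** 2)

def adjustment_coefficient(runtime, max_time):
    """HYPOTHETICAL stand-in for Equation (17): R falls linearly from 1 to 0."""
    return max(0.0, 1.0 - runtime / max_time)

for r in (0.0, 3.0, 50.0):
    print(f"r = {r:5.1f}  beta = {improved_beta(r):.4f}")
```

The design point the sketch illustrates is the lower bound: even for fireflies 50 units apart, β stays at βmin instead of vanishing, so the attraction term keeps working at the start of the search.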

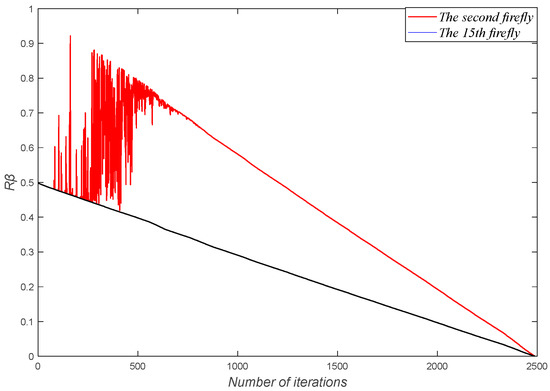

Equations (14) and (16) show that Equation (16) prevents the attraction β from being 0 at the beginning of the iteration, which would otherwise render the attraction term ineffective. In addition, Equation (16) shows that at the beginning of the iteration the fireflies are far apart, so β is small; as the iterations proceed, the distances between fireflies decrease and β increases, reaching its maximum β = βmax when the distance is 0. As the iterations proceed, β increases while R decreases, so the trend of βR cannot be determined analytically. To examine how βR changes with the number of iterations, the C01 function in the CEC 2017 function set was chosen as the test function, the βR values of the 2nd and 15th fireflies were recorded at each iteration, and the resulting curves are shown in Figure 3.

Figure 3.

Variation curve of βR.

Figure 3 illustrates that βR decreases as the number of iterations increases. At the beginning of the iteration, βR is large, the fireflies in the population are relatively far apart and Xk(t) − Xi(t) is large, so βR(Xk(t) − Xi(t)) is large. In addition, Equation (17) shows that at the beginning of the iteration R is large, so 1 − R is small, rand(1, D)(1 − R) is even smaller and rand(1, D)(1 − R)(Xbest(t) − Xi(t)) is also small. Thus, at the beginning of the iteration the term βR(Xk(t) − Xi(t)) dominates and the global search ability of the algorithm is strong. In the later stage of the iteration, the distances between fireflies are smaller than at the beginning, so Xk(t) − Xi(t) decreases, and βR also gradually decreases. Hence, βR(Xk(t) − Xi(t)) becomes smaller than in the initial stage and has a weaker influence on the change in firefly positions. Meanwhile, as R decreases in later iterations, 1 − R increases and rand(1, D)(1 − R)(Xbest(t) − Xi(t)) gradually becomes larger. Therefore, the local search capability of the algorithm is enhanced in the later stage of the iteration. In this way, the position-update formula balances the global and local search abilities of the algorithm.

The main content of the firefly population position update is given in Algorithm 3.

| Algorithm 3: Improved position update based on probability attraction model |
|---|
| Input: individual fireflies of the population; Output: the updated firefly population; |
| Calculation of the OFV for fireflies in the current population; |
| The fireflies in the population are sorted according to their OFV from small to large; |
| Recording the best firefly Xbest and its OFV fbest; |
| for i = 2 to n do |
| Update Xi(t) and Xbest according to Equation (14); |
| End for |
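Algorithm 3 can be sketched in Python as follows. This is an illustrative sketch, not the authors' implementation: the attractor picker and the β function are passed in as parameters, and the usage below plugs in a hypothetical uniform choice among brighter fireflies and an assumed β form in place of the probability attraction model and Equation (16). Because the loop in Algorithm 3 starts at i = 2, the best firefly is simply carried over.

```python
import math
import random

def position_update(x_i, x_k, x_best, beta, R, alpha, rng):
    """Equation (14), applied component by component."""
    new_x = []
    for xi, xk, xb in zip(x_i, x_k, x_best):
        attraction = beta * R * (xk - xi)                  # beta*R*(X_k - X_i)
        guiding = (1.0 - R) * rng.random() * (xb - xi)     # (1-R) rand ⊕ (X_best - X_i)
        stochastic = alpha * (rng.random() - 0.5)          # alpha*(eps - 1/2)
        new_x.append(xi + attraction + guiding + stochastic)
    return new_x

def algorithm3(pop, objective, pick_attractor, beta_of_r, R, alpha, rng):
    """Sort by OFV, record X_best, then update fireflies 2..n (0-based 1..n-1)."""
    pop = sorted(pop, key=objective)        # ascending OFV: pop[0] is the best
    x_best = pop[0]
    new_pop = [list(x_best)]                # loop starts at i = 2, so the best is kept
    for i in range(1, len(pop)):
        k = pick_attractor(i, rng)          # index of a brighter firefly, 0 <= k < i
        beta = beta_of_r(math.dist(pop[i], pop[k]))
        new_pop.append(position_update(pop[i], pop[k], x_best, beta, R, alpha, rng))
    return new_pop

def sphere(x):
    return sum(v * v for v in x)

rng = random.Random(7)
pop = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(10)]
new_pop = algorithm3(
    pop, sphere,
    pick_attractor=lambda i, r: r.randrange(i),           # hypothetical uniform choice
    beta_of_r=lambda d: 0.2 + 0.8 * math.exp(-d * d),     # assumed beta form
    R=0.7, alpha=0.1, rng=rng)
```

Passing the attractor picker as a function keeps the position update independent of the attraction model, so the roulette-wheel selection of the probability attraction model could be swapped in without touching Equation (14).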

3.4. Combined Mutation Operator Based on Selection Probability

The exploration and exploitation abilities of FA are determined by the firefly location-update formula. The location-update formulas in the existing literature have strong exploration ability in early iterations and strong exploitation ability in late iterations, which balances exploration and exploitation to some extent. However, for a multi-extremum optimization problem, even if the termination condition is a maximum number of iterations or a maximum running time, it is impossible to tell when the search is in its early stage and when it is in its late stage. Therefore, estimating the early and late stages from the number of iterations or the running time is unreliable. Determining the early and late stages from the maximum number of iterations or the maximum running time causes two problems. First, the algorithm may perform global search when local search is required and local search when global search is required. Second, not every iteration in the early stage needs global search; local search is sometimes needed. Likewise, not every iteration in the late stage needs local search; if the algorithm falls into a local optimum, global search is required. The combined mutation operator in reference [20] selects each single mutation operator with equal probability, so a mutation operator with strong exploitation ability may be selected when one with strong exploration ability is needed. To solve these problems, a combined mutation operator based on selection probability is proposed.

For convenience of description, suppose the i-th firefly participating in the mutation operation is Xi(t) (i = 1, 2, …, n) and the firefly obtained after mutation is Xi(t + 1) (i = 1, 2, …, n); b1, b2, b3, b4, b5 ∈ {1, 2, …, n} are mutually distinct indices that also differ from i, and Xbest(t) is the best firefly in the t-th generation population. The four selected single mutation operators are:

where F is the step size and the calculation formula of F is:

where r is a random number in [0,1].

The single mutation operators in Equations (18) to (21) fall into two categories: operators with strong exploration ability, namely Equations (18) and (19), and operators with strong exploitation ability, namely Equations (20) and (21). The selection probabilities of the two types of mutation operators are calculated as:

where P1 is the selection probability of the first type of mutation operator, S1 and F1 are the numbers of candidate solutions generated by the first type of mutation operator that are and are not retained for the next generation, respectively, and S2 and F2 are the corresponding numbers for the second type of mutation operator. The initial values of S1, F1, S2 and F2 are generally set to 1.

First, decide whether to use the first or the second type of mutation operator: generate a random number μ in [0,1]; if μ < P1, select the first type, and if μ ≥ P1, select the second type. Second, decide which operator within the selected type to use: generate a random number λ in [0,1]; if λ < 0.5, select the first mutation operator of that type; otherwise, select the second.
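The two-stage choice can be sketched as below. Equations (23) and (24) are not reproduced in this section, so P1 is computed with a hypothetical stand-in, P1 = S1·F2 / (S1·F2 + S2·F1), which is equivalent to 1 / (1 + (S2F1)/(S1F2)) and therefore rises whenever (S2F1)/(S1F2) falls, the monotonic behavior described for P1. Function names are hypothetical.

```python
import random

def class_one_probability(S1, F1, S2, F2):
    """HYPOTHETICAL P1 = 1 / (1 + (S2*F1)/(S1*F2)), written without nested division."""
    return (S1 * F2) / (S1 * F2 + S2 * F1)

def choose_operator(P1, rng):
    """Two-stage choice; returns the equation number (18-21) of the chosen operator."""
    mu = rng.random()
    lam = rng.random()
    if mu < P1:                       # first type: strong exploration
        return 18 if lam < 0.5 else 19
    return 20 if lam < 0.5 else 21    # second type: strong exploitation

rng = random.Random(3)
p1 = class_one_probability(1, 1, 1, 1)   # initial counters all equal to 1
op = choose_operator(p1, rng)
```

With all counters at their initial value of 1, this stand-in gives P1 = 0.5, so the two types start on an equal footing and drift apart only as their retention records diverge.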

Algorithm 4 describes the main steps of combining mutation operators based on selection probability.

| Algorithm 4: Combined mutation operation based on selection probability |
|---|
| Input: Firefly individuals in the population; Output: Firefly individuals in the population after combined mutation operation; |
| Calculate the step size F according to Equation (22); |
| for i = 1 to n |
| if rand < P1 |
| if rand ≤ 0.5 |
| Generate a new solution Xi(t + 1) according to Equation (18); |
| else |
| Generate a new solution Xi(t + 1) according to Equation (19); |
| End if |
| else |
| if rand ≤ 0.5 |
| Generate a new solution Xi(t + 1) according to Equation (20); |
| else |
| Generate a new solution Xi(t + 1) according to Equation (21); |
| End if |
| End if |
| End for |
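One pass of Algorithm 4, including the S1/F1/S2/F2 bookkeeping, can be sketched as follows. Equations (18) to (22) are not reproduced in this section, so this sketch substitutes hypothetical differential-evolution-style operators (rand/1 and rand/2 for the exploration type, best/1 and current-to-best/1 for the exploitation type), takes F as a fixed parameter, uses the same hypothetical P1 stand-in as the previous sketch and treats "retained for the next generation" as greedy replacement of the parent. It needs n ≥ 6 so that five distinct indices other than i exist.

```python
import random

def mutant(pop, i, best, op, F, rng):
    """HYPOTHETICAL DE-style stand-ins for Equations (18)-(21)."""
    b1, b2, b3, b4, b5 = rng.sample([j for j in range(len(pop)) if j != i], 5)
    x, a, b, c, d, e = pop[i], pop[b1], pop[b2], pop[b3], pop[b4], pop[b5]
    dims = range(len(x))
    if op == 18:   # rand/1 (exploration)
        return [a[k] + F * (b[k] - c[k]) for k in dims]
    if op == 19:   # rand/2 (exploration)
        return [a[k] + F * (b[k] - c[k]) + F * (d[k] - e[k]) for k in dims]
    if op == 20:   # best/1 (exploitation)
        return [best[k] + F * (a[k] - b[k]) for k in dims]
    # op == 21: current-to-best/1 (exploitation)
    return [x[k] + F * (best[k] - x[k]) + F * (a[k] - b[k]) for k in dims]

def combined_mutation(pop, objective, counts, F, rng):
    """One pass of Algorithm 4 with greedy retention and S/F bookkeeping."""
    s1, f1, s2, f2 = counts["S1"], counts["F1"], counts["S2"], counts["F2"]
    p1 = (s1 * f2) / (s1 * f2 + s2 * f1)     # hypothetical P1, fixed for this pass
    best = min(pop, key=objective)
    new_pop = []
    for i, x in enumerate(pop):
        first_type = rng.random() < p1
        if first_type:
            op = 18 if rng.random() <= 0.5 else 19
        else:
            op = 20 if rng.random() <= 0.5 else 21
        trial = mutant(pop, i, best, op, F, rng)
        kept = objective(trial) < objective(x)   # retained for the next generation?
        suffix = "1" if first_type else "2"
        counts[("S" if kept else "F") + suffix] += 1
        new_pop.append(trial if kept else x)
    return new_pop, counts

def sphere(x):
    return sum(v * v for v in x)

rng = random.Random(11)
pop = [[rng.uniform(-5, 5) for _ in range(3)] for _ in range(10)]
new_pop, counts = combined_mutation(
    pop, sphere, {"S1": 1, "F1": 1, "S2": 1, "F2": 1}, F=0.5, rng=rng)
```

Keeping P1 fixed within a pass and updating the counters as the pass proceeds mirrors the text's description: the outcome of the t-th iteration changes P1 only for the (t + 1)-th iteration.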

Equations (23) and (24) show the following. If global search is required at the t-th iteration but the second type of mutation operator is selected, few of its candidate solutions are retained, so S2 grows more slowly than F2. Since S1 and F1 remain unchanged, (S2F1)/(S1F2) decreases and P1 increases, so the first type of mutation operator is more likely to be selected in the (t + 1)-th iteration. If global search is required at the t-th iteration and the first type of mutation operator is selected, S1 grows faster than F1. Since S2 and F2 remain unchanged, (S2F1)/(S1F2) decreases and P1 increases, so the first type of mutation operator is selected with high probability in the (t + 1)-th iteration. The reverse holds when local search is required.

In conclusion, based on the historical contributions of the two types of mutation operators, the combined mutation operator based on selection probability determines which type of mutation operator performs the mutation in the next iteration. As a result, whether the algorithm is in an early or late iteration, it can flexibly select the type of mutation operator with strong exploration or exploitation ability as needed. In addition, because the first type of mutation operator has strong exploration ability, its contribution at the beginning of the iteration is greater than that of the second type, so it is more likely to be selected, which improves the exploration ability of the algorithm. At the end of the iteration, the historical contribution of the second type exceeds that of the first type, so the second type is more likely to be selected, which improves the exploitation ability of the algorithm.

3.5. Remove Similarity Operation

Similar individuals are individuals whose fitness values differ by less than a certain threshold ζ. As the number of iterations increases, a large number of similar individuals accumulate in the population; especially in later iterations, the members of the population tend to gather near the best firefly. For a multi-extremum optimization problem, if the best individual in the population is near a local extremum, the algorithm easily falls into a local optimum. Therefore, similar individuals need to be removed from the population during the iterative process to maintain diversity. To keep the population size constant, the removed individuals are replaced by individuals generated by the random initialization method. The specific steps of similarity removal are:

(1) Order the fireflies according to the OFV from small to large;

(2) Evaluate the similarity of fireflies. The formula for calculating the similarity S between fireflies in the population is:

where f(0.5n), f(1) and f(n) are the OFVs of the 0.5n-th, first and last fireflies in the sorted population, respectively, and eps = 2.2204 × 10⁻¹⁶ is a small number used to avoid a zero denominator.

(3) If S ≥ ζ, the fireflies in the population are highly similar and the population diversity is poor. In this case, the best q individuals in the population are retained, and the remaining n − q individuals are regenerated by the random initialization method.
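Steps (1) and (3) can be sketched as follows. The similarity S from step (2) is taken as an input because its formula is not reproduced in this section; ζ, q and the uniform bounds used for random re-initialization are hypothetical user-chosen values, and `remove_similarity` is a hypothetical name.

```python
import random

def remove_similarity(pop, objective, S, zeta, q, lower, upper, rng):
    """Step (3): if S >= zeta, keep the best q fireflies and re-sample the rest.

    S is computed by the caller from step (2); zeta, q and the bounds are
    user-chosen values (hypothetical here).
    """
    ranked = sorted(pop, key=objective)        # step (1): ascending OFV
    if S < zeta:
        return ranked                          # diversity acceptable: no change
    dim = len(ranked[0])
    fresh = [[rng.uniform(lower, upper) for _ in range(dim)]
             for _ in range(len(ranked) - q)]  # random initialization
    return ranked[:q] + fresh

def sphere(x):
    return sum(v * v for v in x)

rng = random.Random(5)
pop = [[rng.uniform(-5, 5) for _ in range(2)] for _ in range(10)]
kept = remove_similarity(pop, sphere, S=0.95, zeta=0.9, q=4, lower=-5, upper=5, rng=rng)
```

Retaining the best q individuals means the operation never loses the current best solution, while the n − q fresh individuals restore spread in the search space.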

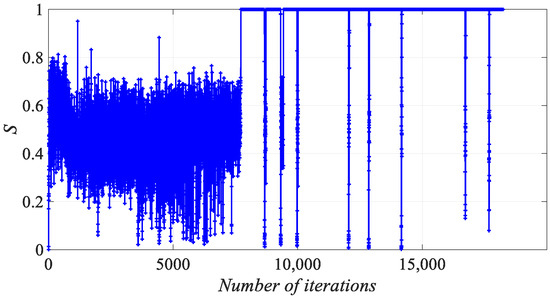

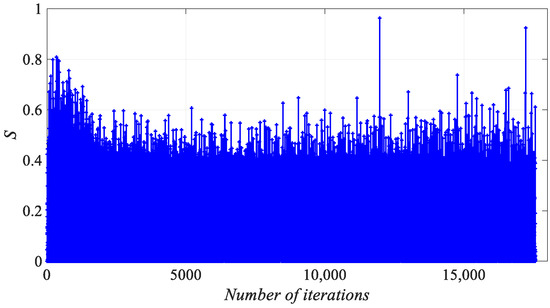

To visualize the degree of similarity in the population, a two-dimensional example is used, with population size n = 40 and variable dimension D = 2. Figure 4 shows the degree of similarity in the population during the iterations without the remove-similarity operation, and Figure 5 shows it with the remove-similarity operation.

Figure 4.

Population similarity without the remove-similarity operation.

Figure 5.

Population similarity with the remove-similarity operation.

In Figure 4, in early iterations, the difference between the OFVs of the first and last fireflies is large, and so is the difference between the OFVs of the 0.5n-th and first fireflies, so S is small. As the iterations proceed, the individuals in the population gradually gather near the best individual, and the 0.5n-th and last fireflies also cluster around it. The difference between f(0.5n) and f(n) gradually decreases and S gradually increases. Because of the combined mutation operator, S occasionally drops, but this is not enough for the population to escape the local optimum, so S quickly rises again. In Figure 5, after the remove-similarity operation is added, S is small most of the time and large only occasionally. Whenever S ≥ ζ, the remove-similarity operation is performed, S decreases rapidly and the population diversity is restored. Therefore, the remove-similarity operation improves the global search capability of the algorithm and reduces the probability of it falling into a local optimum.

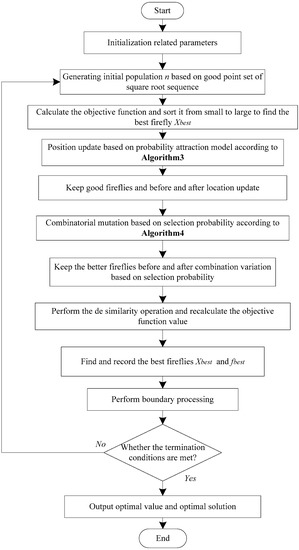

3.6. The Evolutionary Strategy of IHFAPA

The evolutionary strategy of the improved hybrid firefly algorithm with probability attraction (IHFAPA) is presented in Algorithm 5, and Figure 6 shows a flow chart of IHFAPA that displays the specific steps of the algorithm more clearly. As Figure 6 shows, the performance of IHFAPA depends on the location-update method, the probability attraction model, the combined mutation operator, the remove-similarity operation and the evolution strategy of the fireflies. IHFAPA uses a new location-update method: attracted by both the brighter fireflies and the best firefly, the darker fireflies move toward the brighter ones, which better balances the exploration and exploitation abilities of the algorithm. Meanwhile, IHFAPA uses the combined mutation operator, which adaptively selects a suitable single mutation operator according to the historical contributions of the two types of mutation operators. The combined mutation operator contains two types of single mutation operators, each with its own selection probability. If one type of operator contributed more in previous iterations, its selection probability increases; otherwise, its selection probability decreases. These selection probabilities are not static: they change as the historical contributions of the two types of operators change. Therefore, the combined mutation operator based on selection probability balances the exploration and exploitation abilities of the algorithm well. In addition, the remove-similarity operation added to IHFAPA helps the algorithm maintain population diversity during the iterations and reduces the probability of it falling into a local optimum.
IHFAPA adopts an elitist retention strategy in its iterative process. The position-update formula, the combined mutation operator and the similarity-removal operation together balance the exploration and exploitation abilities of IHFAPA and help maintain population diversity.
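A remove-similarity pass could look like the following sketch. The section does not define the similarity measure, so the sketch makes its own assumption. Two fireflies count as "similar" when their Euclidean distance, divided by the diagonal of the search box, falls below the threshold ζ (the threshold that Section 4.3 studies). When a pair is similar, the worse of the two is re-initialised uniformly at random. The function name and this replacement rule are hypothetical. Because only the worse member of a pair is ever replaced, the current best firefly always survives, which is consistent with elite retention.

```python
import math
import random


def remove_similarity(pop, fitness, lower, upper, zeta, objective, rng=random):
    """Re-initialise the worse firefly of every pair that is closer than zeta.

    Assumptions (not from the paper): similarity is the Euclidean distance
    normalised by the search-box diagonal, minimisation is used, and
    `objective` is whatever (penalised) fitness function the caller applies.
    """
    diag = math.sqrt(sum((u - l) ** 2 for l, u in zip(lower, upper)))
    n = len(pop)
    for i in range(n):
        for j in range(i + 1, n):
            if math.dist(pop[i], pop[j]) / diag < zeta:
                # Replace the worse of the two; the better one is kept.
                worse = i if fitness[i] > fitness[j] else j
                pop[worse] = [rng.uniform(l, u) for l, u in zip(lower, upper)]
                fitness[worse] = objective(pop[worse])
    return pop, fitness
```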

Figure 6.

A flow chart of IHFAPA.

| Algorithm 5: IHFAPA |
| --- |
| Input: population size n; initial values of S1, F1, S2 and F2; step size α0; maximum attraction βmax; minimum attraction βmin. |
| Output: optimal solution x and optimal value f(x). |

4. Numerical Experiment and Result Analysis

To ensure a fair comparison, all experiments were conducted on the same computer. The computer ran Windows 10 on an AMD Ryzen 9 3900 12-core processor with a clock frequency of 3.09 GHz and 32 GB of RAM. All algorithms were implemented in MATLAB R2019b.

4.1. Selection of Test Function

The widely used CEC 2017 benchmark set is selected to verify the performance of IHFAPA. Table 3 gives brief information on the CEC 2017 test functions (see reference [41] for details). All 28 test functions in CEC 2017 are constrained optimization problems, and in each one the goal is to find the minimum value of the objective function.

Table 3.

Specifications of the 28 CEC 2017 constrained optimization problems.

4.2. Evaluation Method of Algorithm Performance

4.2.1. Algorithm Performance Evaluation Indicators

To compare the performance of IHFAPA with that of the competing algorithms, five performance evaluation indicators are used: the mean value, the standard deviation, the w/t/l counts, the Friedman rank ranking [45] and Holm's procedure [46].

- Mean value

The mean value, denoted Mean, is the average of the optimal values of the test function that the algorithm obtains in R independent runs:

$$\mathrm{Mean} = \frac{1}{R}\sum_{i=1}^{R} f_i$$

where R is the total number of independent runs of the algorithm and $f_i$ is the optimal value of the test function obtained by the algorithm in the i-th independent run.

- Standard deviation

The standard deviation, denoted Std, measures how far the optimal values obtained by the algorithm in the R runs deviate from their mean value. The calculation formula for the standard deviation is:

where R is the total number of runs of the algorithm, $f_i$ is the optimal value of the test function obtained by the algorithm in the i-th independent run and Mean is the average of the R optimal values.
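The sketch below computes both statistics from the R best values of one algorithm on one function. The text does not say whether Std divides by R or by R − 1. For that reason, the hypothetical helper `run_statistics` exposes the choice through `ddof`. For comparison, MATLAB's `std` divides by R − 1 by default, and MATLAB is the language the experiments were implemented in.

```python
import math


def run_statistics(best_values, ddof=0):
    """Return (Mean, Std) of the best objective values from R runs.

    ddof=0 divides the squared deviations by R (population std);
    ddof=1 divides by R - 1 (sample std, MATLAB's default).
    """
    r = len(best_values)
    mean = sum(best_values) / r
    var = sum((f - mean) ** 2 for f in best_values) / (r - ddof)
    return mean, math.sqrt(var)
```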

- w/t/l indicators

To compare IHFAPA with each competing algorithm in more detail, the mean optimal value of the objective function obtained by IHFAPA is compared with that of the other algorithm (denoted Alg). The result is recorded as w/t/l. For a given test function:

- w is recorded as 1 if IHFAPA performs better than Alg.
- t is recorded as 1 if IHFAPA and Alg perform equally well.
- l is recorded as 1 if Alg performs better than IHFAPA.

The total w is the sum of w over all test functions, and the totals t and l are obtained in the same way.
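The sketch below shows w/t/l counting against one competitor for a minimisation problem. The `tol` argument is an assumption of this sketch. The text compares mean values directly, which corresponds to `tol=0.0`. A positive tolerance would instead treat nearly equal means as ties.

```python
def win_tie_loss(ihfapa_means, other_means, tol=0.0):
    """Count w/t/l of IHFAPA against one competitor (minimisation).

    Each pair is the mean best value of IHFAPA and of the competitor on the
    same test function; a tie is declared when they differ by at most tol.
    """
    w = t = l = 0
    for a, b in zip(ihfapa_means, other_means):
        if abs(a - b) <= tol:
            t += 1
        elif a < b:  # smaller mean is better
            w += 1
        else:
            l += 1
    return w, t, l
```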

- Friedman rank ranking

Friedman rank ranking is a nonparametric statistical method that ranks the performance of the compared algorithms. Suppose that m algorithms are compared on k test functions. The steps of Friedman rank ranking are then as follows (taking a minimization problem as an example):

- Each algorithm is run R times independently on each test function, and the optimal value of each run is retained.

- Record the optimal values obtained in the R runs and calculate their average:

  $$\mathrm{meanf}_{ij} = \frac{1}{R}\sum_{r=1}^{R} f_{ij}^{r}, \quad i = 1, 2, \ldots, m;\ j = 1, 2, \ldots, k$$

  Here m is the number of compared algorithms, k is the number of test functions, R is the number of independent runs and $f_{ij}^{r}$ is the optimal value that the i-th algorithm obtains on the j-th test function in the r-th run. $\mathrm{meanf}_{ij}$ is therefore the average of the optimal values that the i-th algorithm obtains over R independent runs on the j-th test function.

- For each test function, sort all compared algorithms by $\mathrm{meanf}_{ij}$ from small to large and assign each algorithm a rank $\mathrm{rank}_{ij}$ (i = 1, 2, …, m; j = 1, 2, …, k). If several algorithms have the same mean optimal value, each of them receives the average of the positions they occupy. For example, suppose five algorithms are compared and, on some test function, their mean optimal values are 1, 3, 3, 2 and 4, respectively. The second and third algorithms tie and occupy positions 3 and 4, so each receives the rank (3 + 4) / 2 = 3.5. The ranks of the five algorithms are therefore 1, 3.5, 3.5, 2 and 5. Table 4 shows the ranking results.

Table 4. Results of rank ranking.

- Calculate the average rank $\mathrm{Averank}_i$ of each algorithm:

  $$\mathrm{Averank}_i = \frac{1}{k}\sum_{j=1}^{k} \mathrm{rank}_{ij}, \quad i = 1, 2, \ldots, m$$

  where m is the number of compared algorithms and k is the number of test functions.

- Sort the algorithms by $\mathrm{Averank}_i$ from small to large. The resulting order is the final ranking $\mathrm{Finalrank}_i$ of the algorithms.
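The ranking steps above, including the averaging of tied positions, can be sketched in Python as follows. In this sketch, `mean_f[i][j]` plays the role of meanf_ij, and the function names are hypothetical. The sketch also averages ties when it forms the final ranking. That choice is an assumption, because the text only says to sort Averank from small to large.

```python
def average_ranks(values):
    """Rank values from small to large; tied values share the mean position."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    pos = 0
    while pos < len(order):
        # Extend `end` over the run of values equal to values[order[pos]].
        end = pos
        while end + 1 < len(order) and values[order[end + 1]] == values[order[pos]]:
            end += 1
        tied_rank = (pos + 1 + end + 1) / 2  # mean of 1-based positions
        for idx in order[pos:end + 1]:
            ranks[idx] = tied_rank
        pos = end + 1
    return ranks


def friedman_ranking(mean_f):
    """mean_f[i][j]: mean best value of algorithm i on test function j.

    Returns (averank, finalrank), both indexed by algorithm.
    """
    m, k = len(mean_f), len(mean_f[0])
    rank = [[0.0] * k for _ in range(m)]
    for j in range(k):
        column = average_ranks([mean_f[i][j] for i in range(m)])
        for i in range(m):
            rank[i][j] = column[i]
    averank = [sum(row) / k for row in rank]
    finalrank = average_ranks(averank)
    return averank, finalrank
```

With the five-algorithm example from the text, `average_ranks([1, 3, 3, 2, 4])` returns `[1.0, 3.5, 3.5, 2.0, 5.0]`.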

4.2.2. Algorithm Performance Difference Significance Test

The Friedman test is a nonparametric test first proposed by Milton Friedman in 1937. It is used to determine whether there are significant differences among the compared algorithms. Suppose there are m compared algorithms, that is, m samples, and that each sample contains the mean optimal values of the objective function that the corresponding algorithm obtains on the k test functions. The steps of the Friedman test are then as follows:

1. State the null hypothesis $H_0$, the alternative hypothesis $H_1$ and the significance level α of the Friedman test:

   $H_0$: There is no significant difference in the performance of the m compared algorithms;

   $H_1$: There are significant differences in the performance of the m compared algorithms.

2. Calculate the rank $\mathrm{rank}_{ij}$ of each algorithm on each test function (1 ≤ i ≤ m, 1 ≤ j ≤ k).

3. Calculate the sum of the ranks of each algorithm over all test functions:

   $$\mathrm{sumrank}_i = \sum_{j=1}^{k} \mathrm{rank}_{ij}, \quad i = 1, 2, \ldots, m$$

4. Calculate the Friedman test statistic $\chi^2$:

   $$\chi^2 = \frac{12}{k\,m\,(m+1)}\sum_{i=1}^{m} \mathrm{sumrank}_i^{2} - 3k(m+1)$$

5. For the pre-determined significance level α and m − 1 degrees of freedom, obtain the critical value $\chi^2_{\alpha}[m-1]$ from the table of critical values of the chi-square distribution. If

   $$\chi^2 \ge \chi^2_{\alpha}[m-1],$$

   reject the null hypothesis $H_0$. This shows that there are significant differences in the performance of the m compared algorithms. Otherwise, do not reject $H_0$. In that case, there is no evidence of a significant difference in the performance of the m compared algorithms.
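The statistic and the decision rule can be sketched as follows. The sketch uses the standard Friedman statistic without a correction for ties. The caller supplies the critical value instead of computing it, so the sketch needs only the Python standard library. For example, with m = 3 algorithms and α = 0.05, the tabulated critical value for m − 1 = 2 degrees of freedom is 5.991. The function names are hypothetical.

```python
def friedman_chi2(rank):
    """rank[i][j]: rank of algorithm i on test function j (m x k matrix).

    Returns the Friedman statistic, compared against chi2 with m - 1 dof.
    """
    m, k = len(rank), len(rank[0])
    sumrank = [sum(row) for row in rank]  # sumrank_i over the k functions
    return 12.0 / (k * m * (m + 1)) * sum(r * r for r in sumrank) - 3.0 * k * (m + 1)


def friedman_decision(rank, chi2_critical):
    """Return (statistic, reject_H0) for a tabulated critical value."""
    stat = friedman_chi2(rank)
    return stat, stat >= chi2_critical
```

Suppose all k = 4 functions rank the three algorithms in exactly the same order. The statistic then reaches its maximum k(m − 1) = 8, and H0 is rejected at α = 0.05.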

4.3. Obtain the Optimal Parameter Combination through Orthogonal Experiments

The threshold ζ and the parameter P of the similarity-removal operation have a significant impact on algorithm performance. For this reason, the best parameter combination is determined through orthogonal experiments. The orthogonal experiment has two factors: the threshold ζ for the degree of similarity between individuals in the population, and the parameter P. Three levels are designed for each factor: 0.3, 0.4 and 0.5 for ζ, and 0.95, 0.97 and 0.98 for P. The orthogonal experiment therefore involves two factors at three levels each. Tables 5 and 6 show its design.

Table 5.

Factors and levels of the orthogonal experiment.

Table 6.

Orthogonal array $L_9(3^2)$ for the orthogonal experiment.

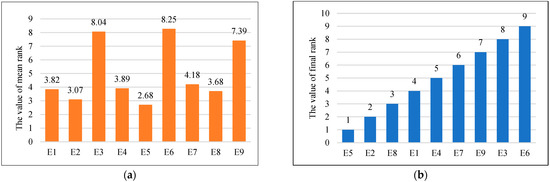

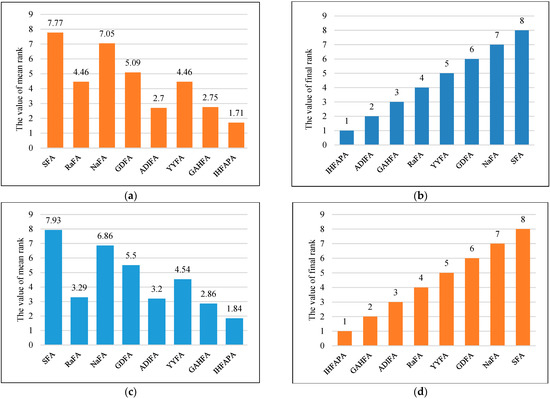

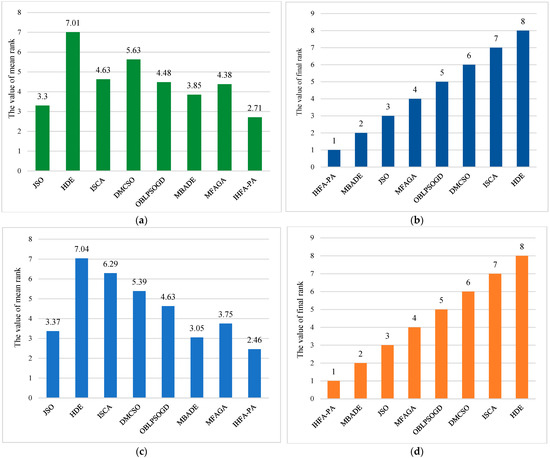

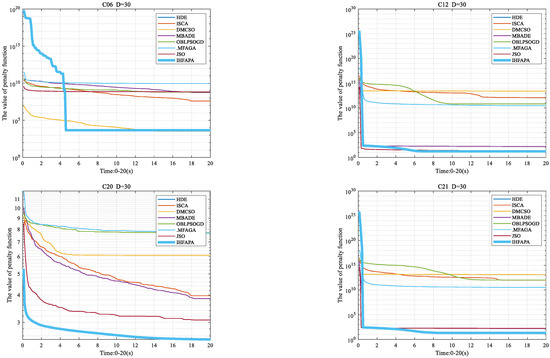

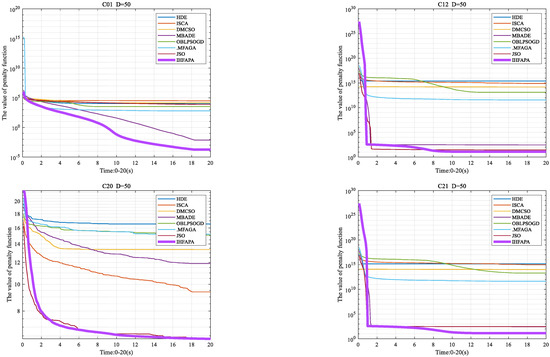
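With only two three-level factors, the $L_9(3^2)$ array covers all nine combinations of ζ and the second factor. The second factor has levels 0.95, 0.97 and 0.98 and is denoted P in the discussion of the results. The design can therefore be enumerated directly. The sketch assumes the standard row order of the L9 array, in which the first factor varies slowest. Table 6 gives the actual assignment of runs. Under the assumed ordering, run E5 corresponds to ζ = 0.4 and P = 0.97.

```python
from itertools import product

ZETA_LEVELS = (0.3, 0.4, 0.5)   # threshold ζ of the similarity-removal operation
P_LEVELS = (0.95, 0.97, 0.98)   # second factor, denoted P in the results


def l9_design(levels_a, levels_b):
    """Rows of an L9(3^2) design for two three-level factors.

    With only two factors the L9 array contains every 3 x 3 combination once.
    Rows follow the standard order in which the first factor varies slowest.
    """
    return [
        {"run": f"E{idx}", "zeta": a, "P": b}
        for idx, (a, b) in enumerate(product(levels_a, levels_b), start=1)
    ]


if __name__ == "__main__":
    for row in l9_design(ZETA_LEVELS, P_LEVELS):
        print(row)
```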

The 30-dimensional CEC 2017 test functions are used to obtain the optimal parameter combination. To ensure a fair comparison, the settings are as follows: population size n = 40, problem dimension D = 30, maximum running time Maxtime = 20 s, penalty factor $M = 10^{8}$ and number of independent runs tjcs = 20. Tables 7 and 8 and Figure 7 report the results of the orthogonal experiments. Table 7 compares the test results, Table 8 gives the Friedman test results and Figure 7 shows the Friedman mean rank and final rank of each combination.

Table 7.

Results of the orthogonal experiment.

Table 8.

Friedman test results of the orthogonal experiment.

Figure 7.

Results of the Friedman mean ranking test for the different experiment numbers. (a) Mean rank ranking; (b) final rank ranking.

In Table 8, $\chi^2 = 160.54$ and $\chi^2_{\alpha}[m-1] = 15.51$, where m = 9 is the number of tested combinations (8 degrees of freedom). Since $\chi^2 \ge \chi^2_{\alpha}[m-1]$, the null hypothesis is rejected, which shows that there are significant differences among the tested combinations. In addition, E5 ranks first among all combinations (mean rank = 2.68), so it performs best overall. Therefore, the optimal parameter combination is ζ = 0.4, P = 0.97.

4.4. Termination Condition of Algorithm Iteration

Two iteration termination conditions are commonly used at present. The first is a maximum number of iterations, and the second is a maximum number of objective function evaluations. However, both conditions can be unfair to some of the compared algorithms, for the following reasons:

- Using the maximum number of iterations as the termination condition is unfair to some of the compared algorithms. Let one iteration of algorithm A take time t1 and one iteration of algorithm B take time t2, with t1 = 1.2t2, t2 = 0.005 s and a maximum of 1000 iterations. When both algorithms reach the maximum number of iterations, algorithm A has run for 1000 × 1.2 × 0.005 s = 6 s and algorithm B for 1000 × 0.005 s = 5 s. A has therefore run 1 s longer than B. Even if A finds better solutions than B, this does not show that A performs better than B. If B ran for one more second, its solution would not necessarily be worse than that of A.

- Using the maximum number of objective function evaluations as the termination condition is also unfair to some of the compared algorithms. Some algorithms evaluate the objective function only a few times per iteration but still take a long time to run. Other algorithms evaluate the objective function many times per iteration but run quickly. Therefore, using the maximum number of evaluations as the termination condition is unfair to some algorithms.

To compare the algorithms fairly, this paper therefore uses the maximum running time Maxtime as the termination condition for algorithm iteration. When the running time of the program reaches Maxtime, the algorithm stops iterating and outputs the optimal solution and the optimal value. The advantage of this termination condition is that it treats the compared algorithms fairly, whether or not their time and space complexities are the same.
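A time-budget loop of this kind can be sketched as follows. The `step` callback, the function names and the random-search demo are hypothetical stand-ins for one iteration of any compared algorithm. The clock is checked only between iterations, so the final iteration may exceed Maxtime by at most its own duration.

```python
import random
import time


def run_with_time_budget(step, state, maxtime_s):
    """Repeat step(state) until maxtime_s seconds of wall-clock time elapse.

    step(state) must return (new_state, x, f) for the solution found in that
    iteration; the best (lowest f) solution seen so far is returned together
    with the number of iterations performed.
    """
    start = time.perf_counter()
    best_x, best_f = None, float("inf")
    iterations = 0
    # The budget is checked before each iteration, so the last iteration
    # may finish slightly after maxtime_s.
    while time.perf_counter() - start < maxtime_s:
        state, x, f = step(state)
        iterations += 1
        if f < best_f:
            best_x, best_f = x, f
    return best_x, best_f, iterations


def random_search_step(rng):
    """Hypothetical demo iteration: sample one point of the 2-D sphere problem."""
    x = [rng.uniform(-5.0, 5.0) for _ in range(2)]
    return rng, x, sum(v * v for v in x)


if __name__ == "__main__":
    x, f, n_iter = run_with_time_budget(random_search_step, random.Random(1), 0.05)
    print(f"best f = {f:.4g} after {n_iter} iterations")
```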

4.5. Performance Comparison of Different Attraction Models

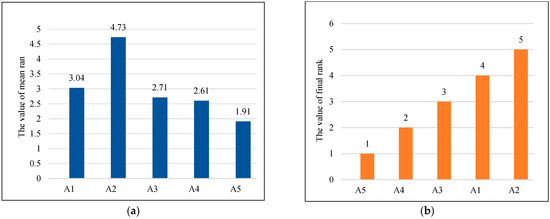

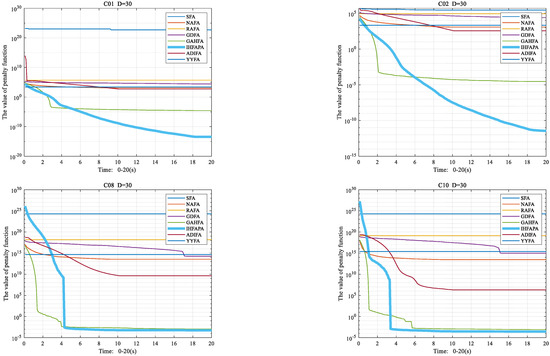

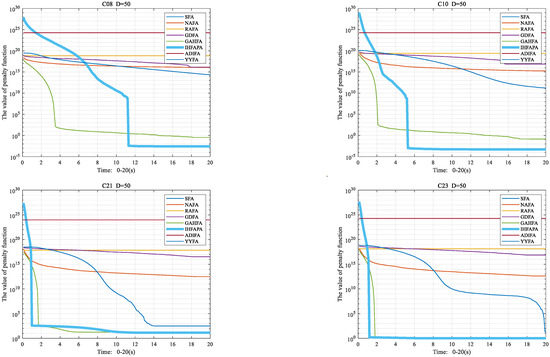

To verify that the probability attraction model performs better than other attraction models, it is compared with four alternatives: the complete attraction model (CAM), the random attraction model (RAM), the neighborhood attraction model (NAM) and the grouping attraction model (GAM). To ensure a fair comparison, the IHFAPA variants differ only in the attraction model they use, and everything else is identical. The settings are as follows: population size n = 40, problem dimension D = 30, maximum running time Maxtime = 20 s, maximum attraction βmax = 1, minimum attraction βmin = 0.5, light-absorption coefficient γ = 1, and penalty factors $M_1 = M_2 = 10^{8}$ for the equality and inequality constraints, respectively. Each IHFAPA variant was run independently 20 times on the CEC 2017 test functions to obtain the mean and standard deviation of the optimal value of each test function, as shown in Table 9. Figure 8 gives the Friedman mean rank and final rank of the different attraction models, and Table 10 gives their Friedman test results.

Table 9.

Statistical results of IHFAPA with different attraction models.

Figure 8.

Friedman rank ranking results of the attraction models (D = 30). (a) Mean rank ranking; (b) final rank ranking. A1 = complete attraction model; A2 = random attraction model; A3 = neighborhood attraction model; A4 = grouping attraction model; A5 = probability-based attraction model.

Table 10.

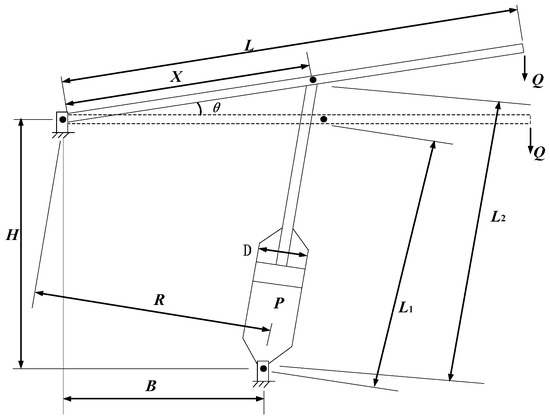

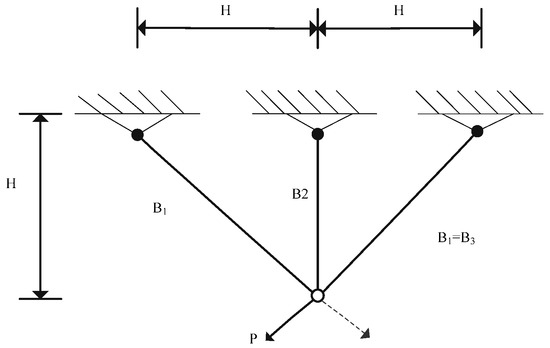

Friedman test results of various attraction models.