Abstract

Two methods for multistage adaptive robust binary optimization are investigated in this work. These methods, referred to as the binary decision rule and finite adaptability, inherently share similarities in dividing the uncertainty set into subsets. In the binary decision rule method, the uncertainty is lifted using indicator functions, which results in a nonconvex lifted uncertainty set. A linear decision rule is then applied to a convexified version of the lifted uncertainty set. In the finite adaptability method, the uncertainty set is divided into partitions and a constant decision is applied within each partition. Both methods rely on breakpoints, either to define the indicator functions in the lifting method or to partition the uncertainty set in the finite adaptability method. In this work, we propose optimizing the breakpoint locations (variable breakpoints) for both methods. An extensive computational study is conducted on an illustrating example and a larger case study, and the performance of the binary decision rule and finite adaptability methods under fixed and variable breakpoint approaches is compared.

Keywords:

adaptive optimization; decision rule; scenario tree; uncertainty set; lifting; robust optimization

MSC:

90C15; 90C17

1. Introduction

Multistage decision making under uncertainty has practical applications in many areas, such as finance, engineering, and operations management. To mention a few examples, Goulart et al. [1] applied stochastic optimization to the robust control of linear discrete-time systems. Skaf and Boyd [2] designed an affine controller for linear dynamic systems. Ben-Tal et al. [3] addressed the problem of minimizing the overall cost of a supply chain under demand uncertainty. See and Sim [4] proposed a robust optimization method for an inventory control problem where only limited demand information is available. Gounaris et al. [5] studied the robust vehicle routing problem of minimizing the cost of delivering a product to geographically dispersed customers using capacity-constrained vehicles. Calafiore [6] presented an affine control method for dynamic asset allocation. Fadda et al. [7] studied the multi-period stochastic assignment problem for social engagement.

Solving multistage adaptive robust optimization problems is challenging. As Shapiro and Nemirovski [8] pointed out, multistage adaptive robust optimization problems with real-valued and binary decision variables are computationally intractable in general. Dyer and Stougie [9] demonstrated that obtaining the optimal solution for the class of single-stage uncertain problems involving only real-valued decisions is already #P-hard. One popular solution approach is to use decision rules, in which decision variables are modeled as functions of the uncertain parameters. The application of decision rules for real-valued decisions in stochastic programming goes back to 1974 [10]. However, the decision rule-based approach received major attention only recently, with the research advances made in robust optimization [11,12]. Ben-Tal et al. [13] formulated the real-valued decisions as affine functions of the uncertain parameters. The simple structure of linear decision rules may result in some loss of optimality; however, it provides the scalability required to deal with multistage stochastic adaptive problems. Linear decision rules have also been shown to be optimal for some problem instances. For instance, Bertsimas et al. [14] showed the optimality of affine control policies for one-dimensional uncertainty in a robust optimization context. The reader can refer to [5,15] for other cases.

In order to reduce the loss of optimality due to linear decision rules, various nonlinear decision rule structures have been proposed. Motivated by the favorable scalability of linear decision rules in multistage problems, nonlinear decision rules are formulated as $x(\xi) = X L(\xi)$, where $x$ is the decision variable, $\xi$ denotes the uncertain parameters, $X$ represents the decision rule coefficients, and $L(\cdot)$ is a nonlinear operator that defines the basis terms in the decision rule. This structure considerably improves solution optimality while retaining scalability, because the rule remains linear in the coefficients $X$. Various decision rule structures have been suggested, such as linear [1,2,13,16], piecewise linear [17,18,19,20], multilinear [19], quadratic [19,21], and polynomial. Kuhn et al. [16] proposed a method to estimate the approximation error introduced by linear decision rules and argued that it is applicable to problems with random recourse and multiple decision stages. Chen et al. [17] addressed uncertain problems where only limited information about the underlying stochastic parameters is available. The authors argued that linear decision rules are inadequate for this type of problem and can result in infeasible solutions, and they suggested an alternative second-order conic optimization model that can be solved efficiently. Chen and Zhang [18] presented an extended affinely adjustable robust counterpart method to solve multistage uncertain linear problems and illustrated that it extends the capabilities of the approaches then available in the literature. Georghiou et al. [19] proposed a lifting technique that provides tighter upper and lower bounds than applying the linear decision rule directly to the original stochastic problem. Their structured lifting method gives rise to flexible piecewise linear and nonlinear decision rules.
Goh and Sim [20] developed new piecewise linear decision rules that provide a more flexible formulation of the original uncertain problem and result in better bounds on the objective. Bertsimas et al. [22] proposed a framework for tackling linear dynamic systems under uncertainty. They introduced a hierarchy of polynomial control policies that exhibited strong numerical performance at a moderate computational expense.

Although there is a wealth of literature on real-valued decision rules, the literature on binary decision rules is relatively scarce. Bertsimas and Georghiou [23] proposed a structure for binary decision rules that models binary variables as piecewise constant functions and can provide high-quality solutions; however, its computational expense is significant. Bertsimas and Caramanis [24] proposed a structure for integer decision rules formulated as $y(\xi) = \lceil Y\xi \rceil$, where $Y$ is a coefficient matrix and $\lceil \cdot \rceil$ is the ceiling function. In their work, the resulting problem is approximated and solved using a randomized algorithm [25] that provides only a limited guarantee on solution feasibility. Hanasusanto et al. [26] proposed a so-called “k-adaptable” structure, applicable only to two-stage uncertain binary problems, in which the decision maker pre-commits to k second-stage policies and implements the best one once the uncertain parameters are revealed. More recently, Bertsimas and Georghiou [27] proposed a systematic lifting method for binary decision rules that trades off scalability and optimality and can be applied to large multistage problems. They demonstrated that the method is highly scalable, provides high-quality solutions, and can readily be combined with real-valued decision rules of the general form $x(\xi) = X L(\xi)$. Postek and Den Hertog [28] proposed a method that iteratively splits the uncertainty set into subsets based on heuristics, keeping the computational complexity at the same level as that of the static robust optimization problem. Bertsimas and Dunning [29] extended the work on finite adaptability and presented a partition-and-bound method for multistage adaptive robust mixed integer optimization problems.

While there are many possible ways to lift the uncertainty and to partition the uncertainty set, we focus on grid-based methods in this work because of their simplicity of implementation. For the lifting method, this means that we lift each uncertain parameter individually rather than any aggregated combination of parameters; for the uncertainty set partition method, we divide the uncertainty set into hyper-rectangles. Under this assumption, the binary decision rule (lifting) method and the finite adaptability method (with grid partitioning) for multistage adaptive robust optimization problems inherently share similarities. The goal of this study is to compare the solution quality and computational complexity of these two methods in order to obtain insights into the advantages and limitations of each. The contributions of this work are summarized below:

- Point out the similarities and differences between the lifting method and the finite adaptability (partitioning) method. We demonstrate that, under an equivalent setting, the partitioning method in general leads to better solution quality, since the lifting method has less flexibility due to the restriction of the linear decision rule.

- Propose novel breakpoint optimization formulations for both the lifting and partitioning methods. We show that breakpoint optimization improves solution quality at the cost of solving mixed integer nonlinear problems (MINLP) instead of the mixed integer linear problems (MILP) obtained under a fixed breakpoint setting.

- Conduct an extensive computational study to investigate the advantages of the lifting and finite adaptability methods under fixed and variable breakpoint settings. We highlight the tractability of the lifting method for handling large cases with many time stages, and the limitation of the finite adaptability method caused by the rapid growth in model size.

This paper is organized as follows. Section 2 presents the general multistage adaptive robust binary optimization problem and applies the traditional scenario tree-based method to an illustrating example. Section 3 provides detailed formulations of the binary decision rule method based on the lifting technique and explains the variable breakpoint technique. Section 4 provides the detailed formulation of the finite adaptability method based on uncertainty set partitioning and presents the variable breakpoint-based formulation. Section 5 presents an extensive computational study using an inventory control problem, and Section 6 concludes the paper.

2. Multistage Adaptive Robust Binary Optimization

The multistage adaptive robust binary optimization problem to be addressed is as follows:

where , and are constant vectors/matrices, is the adaptive binary decision variable expressed as a function of uncertainty vector , based on the following notation:

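| Symbol | Description |
| --- | --- |
| $\xi_{t,q}$ | scalar, q-th uncertain parameter of stage t |
| $\xi_t$ | vector of uncertain parameters of stage t: $\xi_t = [\xi_{t,1}, \dots, \xi_{t,k_t}]^\top$, where $k_t$ is the number of uncertain parameters in stage t |
| $\xi^t$ | vector of uncertain parameters from stage 1 to t: $\xi^t = [\xi_1^\top, \dots, \xi_t^\top]^\top$ |
| $\xi$ | vector of all uncertain parameters (from stage 1 to T): $\xi = [\xi_1^\top, \dots, \xi_T^\top]^\top$, that is, $\xi = \xi^T$ |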

In this work, we consider a polyhedral uncertainty set for $\xi$, where $k$ is the size of $\xi$:

where and are the constant matrix and vector, respectively. For each uncertain parameter, we assume that it is subject to an interval .

Illustrating example

Throughout this paper, we use the following illustrating example to present the various solution methods. The problem has two stages (t = 1, 2), and each stage includes only one uncertain parameter. The first-stage decision may depend on $\xi_1$, and the second-stage decision may depend on both $\xi_1$ and $\xi_2$. The problem is formulated as:

Assume that each uncertain parameter follows an independent uniform distribution on a given interval, and that the uncertainty set is given as:

The above numerical example can be cast into the formulation (1a)–(1c) with , , , , , , , , .

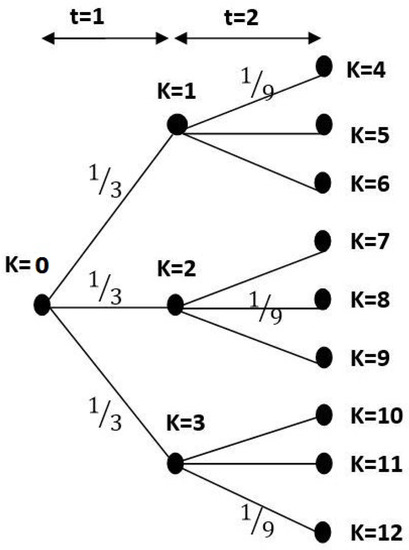

Before presenting the lifting and finite adaptability methods, we apply the traditional scenario tree-based method to the illustrating problem in order to approximate its true optimal solution. When the number of scenarios is sufficiently large, the scenario tree solution is a good approximation of the optimal solution of the adaptive optimization problem. Figure 1 illustrates the scenario tree for two time stages, where each node has three branches.

Figure 1.

Scenario tree for two time stages with 3 branches per node.

In the following scenario tree-based model, represents the decision made at node k, denotes the parent node of k, and is the unconditional probability of node k. Equations (4a)–(4d) present the nodal formulation. For the scenario tree shown in Figure 1, the sets of nodes at the first and second time steps are {1, 2, 3} and {4, ..., 12}, respectively.

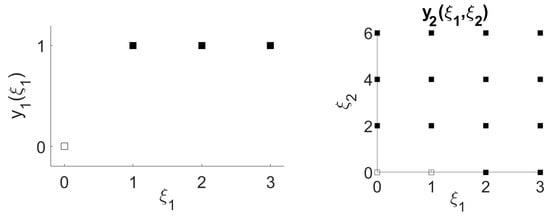

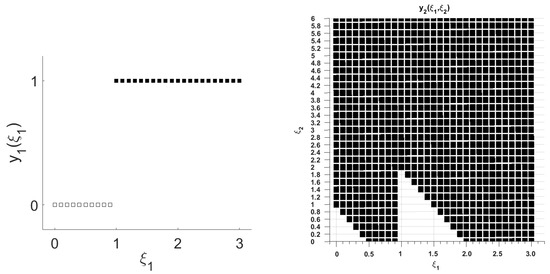

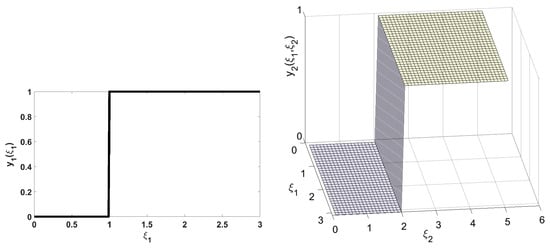
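The nodal structure used in Equations (4a)–(4d) can be generated programmatically. The following is a minimal sketch, not the authors' GAMS implementation; it assumes each branch is represented by the midpoint of one of B equal-width subintervals with conditional probability 1/B, and the function name `build_scenario_tree` and the intervals [0, 3] and [0, 6] are hypothetical choices for illustration only.

```python
from fractions import Fraction


def build_scenario_tree(bounds, branches):
    """Build a nodal scenario tree for independent, uniformly distributed stage parameters.

    bounds   -- list of (lower, upper) intervals, one per stage (hypothetical input format)
    branches -- number of branches per node
    Returns dicts keyed by node id: stage, parent, unconditional probability, realized path.
    Node 0 is a root placeholder; the nodes of each stage are numbered consecutively.
    """
    stage, parent, prob, path = {0: 0}, {0: None}, {0: Fraction(1)}, {0: ()}
    frontier, next_id = [0], 1
    for t, (lo, hi) in enumerate(bounds, start=1):
        width = Fraction(hi - lo) / branches
        # Assumption: each branch is the midpoint of one of `branches` equal-width
        # subintervals and carries conditional probability 1 / branches.
        values = [lo + width * (b + Fraction(1, 2)) for b in range(branches)]
        new_frontier = []
        for k in frontier:
            for v in values:
                stage[next_id], parent[next_id] = t, k
                prob[next_id] = prob[k] / branches  # unconditional node probability
                path[next_id] = path[k] + (v,)
                new_frontier.append(next_id)
                next_id += 1
        frontier = new_frontier
    return stage, parent, prob, path


stage, parent, prob, path = build_scenario_tree([(0, 3), (0, 6)], branches=3)
print([k for k in stage if stage[k] == 2])  # [4, 5, 6, 7, 8, 9, 10, 11, 12]
```

With three branches this reproduces the node numbering of Figure 1; the number of stage-t nodes grows as B^t, which is why the scenario tree only serves as a benchmark here.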

In this work, all mixed-integer linear optimization problems were modeled in GAMS 25 and solved using the CPLEX 12 solver on a workstation (Intel Xeon Dual 20 Core 2.0 GHz Processor, 128 GB DDR4 ECC RAM). Table 1 presents the results of the above model for 4, 11, 31, and 99 branches per node. Figure 2 and Figure 3 illustrate the binary solution for 4 and 31 branches per node, respectively; in these figures, black squares indicate a value of 1 and white squares a value of 0. Table 1 shows that, as the number of scenarios increases, the optimal objective value converges to around −1.6 (−1.594 with 99 branches per node). We use this value as a benchmark for comparing the methods discussed below.

Table 1.

Results of scenario tree method for the illustrating example.

Figure 2.

Solution under the scenario tree with 4 branches per node.

Figure 3.

Solution under the scenario tree with 31 branches per node.

Regarding the recourse decision, Figure 3 illustrates that, for , there is a change point around 1 for . For , there are three change points at around 0.5, 1, and 2 for , and two change points at around 1 and 2 for . These values serve as a basis for comparison when the lifting and partitioning methods are implemented.

3. Binary Decision Rule with Lifted Uncertainty

3.1. Uncertainty Lifting

Bertsimas and Georghiou [27] proposed a decision rule method for adaptive binary variables that enforces a linear relation between the binary variable and the lifted uncertain parameters. In this method, 0–1 indicator functions are defined based on a set of breakpoints for each uncertain parameter. The use of indicator functions results in a nonconvex lifted uncertainty set; to resolve this problem, a convex overestimation of the lifted nonconvex set is used. The accuracy of the solution can be improved by increasing the number of breakpoints in the lifted set. While traditional scenario tree methods suffer from exponential growth of model size, which restricts their application to large-scale problems, the lifting method provides the scalability and tractability required for large-scale problems.

Consider a single uncertain parameter subject to the interval , and assume that the interval is divided into subintervals using breakpoints: . To lift the uncertainty, the indicator functions of uncertain parameters are used. The following list summarizes the notation used for lifting a single uncertain parameter :

| scalar, number of breakpoints applied for | |

| scalar, value of the r-th breakpoint for , | |

| as a generalization, we denote the bounds as: , | |

| the r-th lifted uncertain parameter for (an indicator function of ), | |

| vector of all lifted parameters for : | |

| vector of all lifted parameters for : | |

| vector, all lifted parameters for : | |

| the first element 1 is used for the intercept term while implementing linear decision rule | |

| vector of overall (original + lifted) uncertainty related to parameter : | |

| vector of overall uncertainty for stage t: | |

| vector of overall uncertainty from stage 1 to t: | |

| vector of overall uncertainty from stage 1 to T: | |

| the p-th line segment of the lifted uncertainty set for , | |

| the lifted uncertainty set (nonconvex) for | |

| the lifted uncertainty set (nonconvex) for | |

| the two extreme points of , | |

| scalar, coefficient of extreme points in convex full formulation |

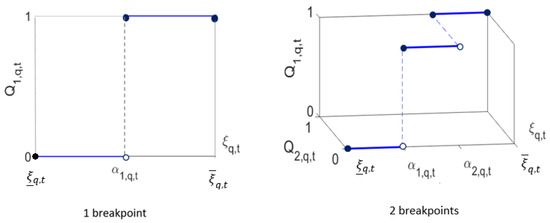

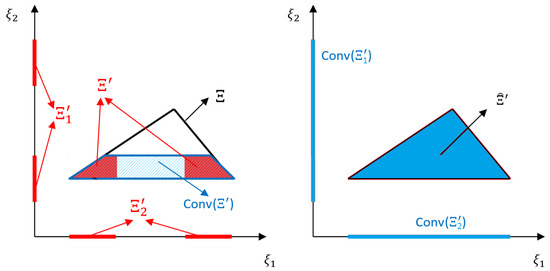

Figure 4 illustrates the lifted uncertainty set () for a single uncertain parameter based on 1 and 2 breakpoints. The lifted uncertainty set is nonconvex, since it consists of disconnected pieces () in a higher-dimensional space.

Figure 4.

Lifting scheme for 1 breakpoint (left) and 2 breakpoints (right) on a single uncertain parameter .
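The lifting of a single parameter in Figure 4 can be sketched in a few lines. This is a minimal sketch, assuming step indicators in which the r-th lifted parameter equals 1 when the parameter is at or above the r-th breakpoint and 0 otherwise; the exact indicator convention of [27] may differ, and the function names are hypothetical.

```python
def lift(xi, breakpoints):
    """Indicator (step) lifting of one scalar uncertain parameter.

    Assumption: the r-th lifted parameter is 1 if xi >= breakpoint_r, else 0.
    """
    return [1 if xi >= b else 0 for b in sorted(breakpoints)]


def lifted_vector(xis, breakpoints_per_param):
    """[1, lift(xi_1), ..., lift(xi_n)]; the leading 1 is the intercept term."""
    vec = [1]
    for xi, bps in zip(xis, breakpoints_per_param):
        vec += lift(xi, bps)
    return vec


# Sweeping xi across [0, 3] with breakpoints 1 and 2: the lifted coordinates jump
# between three constant values, so the points (xi, lift(xi)) form three
# disconnected segments in the lifted space, as in the right panel of Figure 4.
for xi in (0.5, 1.5, 2.5):
    print(xi, lift(xi, [1, 2]))
```

Because the lifted coordinates are piecewise constant in the original parameter, any set of such points with more than one segment is disconnected, which is the source of the nonconvexity discussed next.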

In addition, projection matrices and are used in order to obtain and from the overall uncertainty vector, as follows:

Based on the above notation and the original uncertainty set definition in Equation (2), the lifted nonconvex uncertainty set for can be written as:

The nonconvexity of the lifted uncertainty set poses challenges to the optimization problem. The lifted set is convexified such that the semi-infinite constraints can be addressed. Given the extreme points, the convex hull of can be constructed as:

Based on the above convex hull, we define the following convex overestimation for the overall uncertainty set defined in Equation (6):

Notice that the convex hull of is a subset of the above overestimation. Figure 5 illustrates the relation between and the overestimation . The left figure illustrates according to Equation (6): it is obtained by intersecting the original uncertainty set (the black triangle) with the nonconvex sets and , shown as discontinuous red line segments. The sets and are at least two-dimensional, with a dimension that depends on the number of breakpoints used in the definition of the lifted set; for illustration purposes, however, each is drawn as one-dimensional, since using two dimensions on each axis would make the visualization impossible. The blue shaded area shows . In the right figure, the blue triangle corresponds to the overestimation set (Equation (8)), which is obtained by intersecting the original uncertainty set and . As the figure shows, is an overestimation of .

Figure 5.

Illustration of the relation between and its convex overestimation .
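For a single parameter with one breakpoint, the convex hull of the two lifted segments has a simple closed form, which makes the overestimation concrete. The sketch below assumes closed segments {(ξ, 0) : lo ≤ ξ ≤ β} and {(ξ, 1) : β ≤ ξ ≤ hi}; their convex hull is the quadrilateral with vertices (lo, 0), (β, 0), (hi, 1), (β, 1), that is, 0 ≤ s ≤ 1 and lo + s(β − lo) ≤ ξ ≤ β + s(hi − β). The function names and the values lo = 0, hi = 3, β = 1.5 are hypothetical.

```python
def in_lifted_set(xi, s, lo, hi, beta):
    """True if (xi, s) lies on one of the two closed segments of the lifted set."""
    if not lo <= xi <= hi:
        return False
    return (s == 0 and xi <= beta) or (s == 1 and xi >= beta)


def in_convex_hull(xi, s, lo, hi, beta):
    """True if (xi, s) lies in the convex hull of the two segments.

    A hull point is (1 - s) * (a, 0) + s * (b, 1) with a in [lo, beta] and
    b in [beta, hi], which gives lo + s*(beta - lo) <= xi <= beta + s*(hi - beta).
    """
    return 0 <= s <= 1 and lo + s * (beta - lo) <= xi <= beta + s * (hi - beta)


# (1.5, 0.5) has a fractional lifted coordinate: it is in the hull but not in the
# lifted set, which is exactly the kind of point the overestimation adds.
print(in_convex_hull(1.5, 0.5, 0, 3, 1.5), in_lifted_set(1.5, 0.5, 0, 3, 1.5))
```

Every point of the lifted set is also in the hull, but not conversely; constraints enforced over the hull are therefore a conservative (safe) approximation of those over the lifted set.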

Finally, for simplicity in derivation, we project the polyhedral uncertainty set onto the space of , and compactly write it as:

where and are constant matrices calculated from the breakpoint values and the original uncertainty set parameters .

Illustrating example (cont.)

In this section, the lifting method is applied to the illustrating example. Two breakpoints are considered for each uncertain parameter (r = 1, 2):

The lifted uncertainty vector is formulated as:

The 0–1 indicator functions are defined as:

The associated projection matrices and the corresponding (original or lifted) uncertainty vectors are:

The lifted uncertainty set is defined as: , where . Next, the convex hull for each single lifted uncertain parameter is constructed. For simplicity in presentation, and are represented by and in the following equations, respectively.

The overall convex hull is a subset of the following overestimated set .

3.2. Binary Decision Rule

To approximate the optimal adaptive binary solution, a binary decision rule is employed, which enforces a linear relation with respect to the lifted uncertainty (the indicator functions). Since the binary decision at time stage t depends on the uncertainty up to stage t, it is approximated by a linear combination of the indicator functions from stage 1 to stage t, :

where the coefficients can only take the following integer values: . Furthermore, the binary restriction on the original variables needs to be enforced; hence:

where is the vector of all ones. Applying the binary decision rule to the general stochastic formulation yields a semi-infinite optimization problem with a finite number of variables but an infinite number of constraints:

In the above model, the constraints contain nonconvex indicator functions of the original uncertain parameters. Using Equations (5a) and (5b), the stochastic model can be rewritten with constraints that are linear with respect to the lifted uncertainty :

Since the lifted uncertainty set is nonconvex, the problem is still intractable. It can be conservatively approximated by using the convex overestimation of the lifted uncertainty set defined in Equation (9).
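Because the lifted vector changes only at breakpoints, a binary decision rule is piecewise constant on the grid cells defined by the breakpoints. For a small instance, the binary restriction can therefore be checked exactly by evaluating the rule at one interior point per cell. The sketch below illustrates this; it is not the approach used in the formulation (which relies on the convex overestimation and duality), it scales exponentially with the number of parameters, and its names, coefficient layout, intervals, and breakpoints are hypothetical, with step indicators assumed.

```python
from itertools import product


def lifted_vector(xis, breakpoints_per_param):
    """[1, 1(xi_1 >= b_11), ..., 1(xi_n >= b_nr)] with step indicators (assumption)."""
    vec = [1]
    for xi, bps in zip(xis, breakpoints_per_param):
        vec += [1 if xi >= b else 0 for b in sorted(bps)]
    return vec


def decision_rule(coeffs, xis, breakpoints_per_param):
    """y(xi) = coeffs . lifted_vector(xi): a linear rule in the lifted uncertainty."""
    return sum(c * z for c, z in zip(coeffs, lifted_vector(xis, breakpoints_per_param)))


def cell_midpoints(bounds, breakpoints_per_param):
    """One interior point per grid cell; the rule is constant on each cell."""
    axes = []
    for (lo, hi), bps in zip(bounds, breakpoints_per_param):
        edges = [lo] + sorted(bps) + [hi]
        axes.append([(a + b) / 2 for a, b in zip(edges, edges[1:])])
    return list(product(*axes))


def is_binary_everywhere(coeffs, bounds, breakpoints_per_param):
    """Check that y takes only the values 0 or 1 on every cell, by enumeration."""
    return all(decision_rule(coeffs, pt, breakpoints_per_param) in (0, 1)
               for pt in cell_midpoints(bounds, breakpoints_per_param))


bounds, bps = [(0, 3), (0, 6)], [[1, 2], [2, 4]]
print(is_binary_everywhere([0, 0, 1, 0, 0], bounds, bps))  # y = 1(xi_1 >= 2)
print(is_binary_everywhere([0, 1, 1, 0, 0], bounds, bps))  # y reaches 2
```

The first rule is feasible, while the second takes the value 2 on the cells where both indicators of the first parameter are active and therefore violates the binary restriction.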

Since constraints (14b) and (14c) are linear with respect to , and the uncertainty set is polyhedral, linear programming duality can be used to convert this semi-infinite problem into its deterministic robust counterpart. For example, constraint (14b) can be written as

or equivalently as in the following, based on the uncertainty set definition in Equation (9)

Its deterministic counterpart is obtained as follows after applying duality to the inner maximization problem:

Similarly, constraint (14c) can be divided into two parts: and , and the robust counterpart is derived accordingly. The overall deterministic counterpart formulation is given by

where $\operatorname{tr}(\cdot)$ is the trace operator and can be derived from the known distribution of the uncertainty.
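The duality step used for constraints (14b) and (14c) can be summarized generically. The derivation below is a sketch in hypothetical notation rather than the paper's Equation (9): a nonempty, bounded polyhedron $\Omega = \{\zeta : F\zeta \le g\}$ and a single robust constraint with coefficient vector $a$ (affine in the decision rule coefficients) and right-hand side $b$.

$$
\begin{aligned}
\max_{\zeta}\,\{a^\top \zeta : F\zeta \le g\} &= \min_{\lambda}\,\{g^\top \lambda : F^\top \lambda = a,\ \lambda \ge 0\},\\
a^\top \zeta \le b \quad \forall \zeta \in \Omega \quad &\Longleftrightarrow \quad \exists\, \lambda \ge 0 :\ F^\top \lambda = a,\ g^\top \lambda \le b .
\end{aligned}
$$

The "if" direction needs no strong duality: for any $\zeta \in \Omega$, $a^\top \zeta = \lambda^\top F \zeta \le \lambda^\top g \le b$, because $\lambda \ge 0$ and $F\zeta \le g$. The "only if" direction follows from strong linear programming duality. Since $a$ is affine in the rule coefficients and $\lambda$ enters linearly, the counterpart is linear in all variables; repeating the construction for each constraint row with its own dual vector yields a deterministic model, which is an MILP when the rule coefficients are integer and the breakpoints are fixed.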

Illustrating example (cont.)

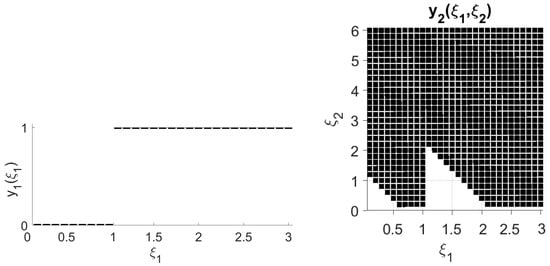

Table 2 presents the results of the above lifting method for different numbers of breakpoints. For the 1-breakpoint case, the breakpoint values are set to 1.5 for and 3 for . For the 2-breakpoint case, the breakpoint values are set to for and for . For the 9-breakpoint case, the breakpoint values are set to for and for . The objective did not improve beyond −1.333, even with nine breakpoints, which indicates that the solution quality of the lifting method may be restricted. Using the definition of the lifting method for the binary variables, we can plot the and variables. For the case of 2 breakpoints, the model solution is and , and the adaptive binary variables are expressed as:

As shown in Figure 6, the binary variable is only a function of parameter , which indicates restricted solution quality.

Table 2.

Solution from lifting method with different numbers of fixed breakpoints.

Figure 6.

solution under 2 breakpoints: for , and for .

3.3. Breakpoint Optimization for Lifting Method

In this section, we assume that the locations of the breakpoints are not fixed in advance but are optimized instead. Based on Equation (9), the breakpoint information is contained in the parameters and can be formulated as:

where are known constant matrices/vectors, is the vector involving all location information of breakpoints. The above formulated and should be substituted in the dual counterpart formulation (Equations (15a)–(15j)) to complete the variable breakpoint lifting technique.

Notice that the expectation of the lifted uncertainty also depends on the location of the variable breakpoints, so is also a function of (which can be analytically evaluated based on the distribution information). The resulting model will be a mixed integer nonlinear optimization problem, where the integer variables are the binary decision rule coefficients , and the continuous variables include the dual variables and the breakpoint locations .
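For a uniformly distributed parameter, the expectation of a lifted (indicator) parameter is available in closed form, which shows how the objective coefficients depend on the breakpoint locations. This is a minimal sketch, assuming step indicators and ξ ~ Uniform[lo, hi]; for independent parameters, cross moments are products of the single-parameter means. The function names and numeric values are hypothetical.

```python
def lifted_mean(lo, hi, breakpoints):
    """E[1(xi >= b)] = (hi - b) / (hi - lo) for xi ~ Uniform[lo, hi] (assumption).

    Breakpoints are clipped to [lo, hi] so the result is a valid probability.
    """
    return [(hi - min(max(b, lo), hi)) / (hi - lo) for b in breakpoints]


def lifted_second_moment(lo, hi, breakpoints):
    """E[1(xi >= b_i) * 1(xi >= b_j)] = P(xi >= max(b_i, b_j)) for the same parameter."""
    clipped = [min(max(b, lo), hi) for b in breakpoints]
    return [[(hi - max(bi, bj)) / (hi - lo) for bj in clipped] for bi in clipped]


print(lifted_mean(0, 6, [2, 4]))  # approximately [2/3, 1/3]
```

Each entry is affine in the breakpoint locations, but it multiplies the integer rule coefficients in the objective, producing bilinear terms; this is one source of the MINLP structure of the variable breakpoint formulation.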

In this work, all MINLP problems were solved using the ANTIGONE 1.0 solver [30] on the GAMS 25 platform on a workstation (Intel Xeon Dual 20 Core 2.0 GHz Processor, 128 GB DDR4 ECC RAM) with a time limit of 10 h. Reported solutions have zero optimality gap unless stated otherwise. Using a single variable breakpoint in the illustrating example, the obtained objective () is better than that obtained with a single fixed breakpoint (), which shows the advantage of variable breakpoint lifting over the fixed breakpoint method. However, in this example, using more than one variable breakpoint did not further improve the objective, as shown in Table 3, which again shows that the solution quality of the lifting method is restricted.

Table 3.

Solution statistics for variable breakpoint lifting.

The optimized breakpoint values are summarized below:

- For the 1-breakpoint case: 1 for , 2 for

- For the 2-breakpoint case: (0, 1) for , (0, 2) for

- For the 9-breakpoint case: (1.5, 1.5, 1.5, 1.5, 1.5, 1.5, 3, 3, 3) for , (1, 1, 1, 1, 2.52, 3.6, 4.2, 4.8, 5.4) for

Notice that the variable breakpoints are not required to be distinct, so the solution contains overlapping breakpoints as the number of breakpoints increases.

4. Finite Adaptability with Uncertainty Set Partitioning

4.1. Uncertainty Set Partitioning

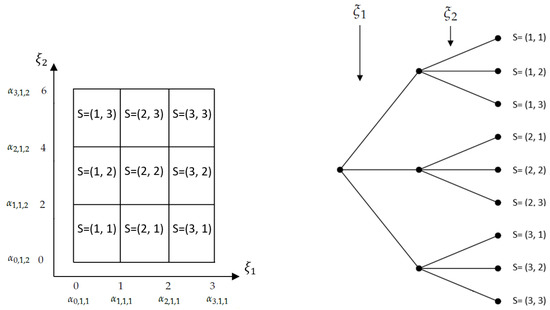

In this method, the uncertainty set is divided into small partitions and a constant binary decision is assumed within each partition. As in the lifting method, we define breakpoints for each uncertain parameter, so that each subset is a box-type uncertainty set. Figure 7 illustrates the partitioning of a two-dimensional rectangular uncertainty set and the corresponding scenario tree, in which each branch represents a subinterval of one parameter. In this figure, the interval of each uncertain parameter is divided into three segments, so there are three nodes in the first stage and nine nodes in the second stage. The following notation is used in this method:

Figure 7.

Partitioning of the two-dimensional uncertainty set (left figure) and scenario tree representation (right figure).

- s: a scenario, represented by a matrix structure (allowing empty elements) whose elements give the subinterval index of each uncertain parameter under this scenario

- : scalar, number of breakpoints for

- : scalar, upper bound value for element under scenario s

As an example, consider a two-stage problem: the first stage has uncertain parameters and the second stage has one uncertain parameter . Assume no breakpoint is applied to , one breakpoint is applied to , and two breakpoints are applied to . Then, the set is:

where “*” denotes that the corresponding element does not exist.
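The scenario set of this example can be enumerated as a Cartesian product of subinterval indices. In the minimal sketch below, the matrix representation with "*" placeholders is replaced by variable-length per-stage tuples (one entry per existing parameter); this encoding and the function name are hypothetical, and indices are 1-based as in the text.

```python
from itertools import product


def enumerate_scenarios(breakpoint_counts):
    """All grid scenarios of a multistage problem.

    breakpoint_counts -- one list per stage with the number of breakpoints of each
                         uncertain parameter in that stage (hypothetical input format).
    Each scenario is a tuple of per-stage tuples of 1-based subinterval indices;
    a parameter with r breakpoints has r + 1 subintervals.
    """
    per_stage = [list(product(*[range(1, r + 2) for r in stage]))
                 for stage in breakpoint_counts]
    return list(product(*per_stage))


# Example from the text: stage 1 has two parameters (0 and 1 breakpoints) and
# stage 2 has one parameter (2 breakpoints), giving 1 * 2 * 3 = 6 scenarios.
for s in enumerate_scenarios([[0, 1], [2]]):
    print(s)
```

The number of scenarios is the product of (r + 1) over all parameters of all stages, which is the source of the exponential growth discussed in the case study.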

For each scenario , the uncertainty set is a hyper-rectangle (a subset of the original uncertainty set):

which can be compactly written as

4.2. Finite Adaptability

Next, the finite adaptability method (also called the “partitioning method” in this work) is applied to the original problem (1a)–(1c). The idea combines a scenario tree-based stochastic formulation with robust optimization: for each scenario, constraint satisfaction is enforced over an uncertainty set, as defined in Equation (17), instead of at a single point in the uncertainty space. The resulting model can be cast as:

where the last constraint enforces non-anticipativity and is the set of all scenarios with the same path up to time t:

Reformulating the semi-infinite constraint using linear programming duality, the corresponding robust scenario-based formulation is

Illustrating example (cont.)

In this section, the partitioning method is applied to the illustrating example (3a)–(3d) and the results are presented. Figure 7 illustrates the partitioning and the corresponding scenario tree for the illustrating example.

The formulation for the partitioning of the uncertainty set is based on two equally-spaced breakpoints for each uncertainty element: for , the breakpoints are set as ; for the breakpoints are . Hence,

The non-anticipativity condition set contains the following elements:

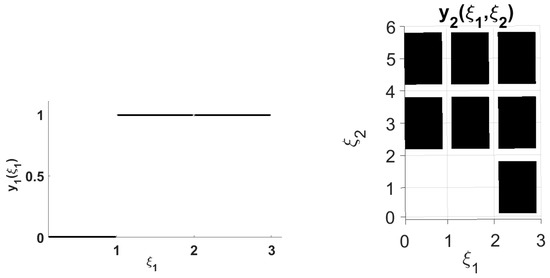
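The non-anticipativity condition groups scenarios that share the same path up to stage t, so that their stage-t decisions coincide. The sketch below shows one way to encode this for the 3 × 3 grid of the illustrating example (one parameter per stage, two breakpoints each); linking every scenario of a group to the group's first member is an assumed encoding, and the function names are hypothetical.

```python
from collections import defaultdict
from itertools import product


def nonanticipativity_groups(scenarios, t):
    """Group scenarios (tuples of per-stage cell indices) by their path up to stage t."""
    groups = defaultdict(list)
    for s in scenarios:
        groups[s[:t]].append(s)
    return [g for g in groups.values() if len(g) > 1]


def nonanticipativity_pairs(scenarios, t):
    """Pairs (s, s') whose stage-t decisions must be equal: y_t^s = y_t^s'."""
    pairs = []
    for group in nonanticipativity_groups(scenarios, t):
        first = group[0]
        pairs += [(first, other) for other in group[1:]]
    return pairs


# Scenarios (i, j): i is the subinterval of the first-stage parameter, j of the second.
scenarios = list(product(range(1, 4), repeat=2))
print(nonanticipativity_pairs(scenarios, 1))
```

At t = 1 this yields six equality pairs (two per first-stage subinterval), while at the last stage every scenario has a distinct path and no pairs are needed.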

The partitioning-based model is an MILP. Table 4 presents the results of the finite adaptability method. As the number of partitions increases, the objective improves until it comes close to the optimal objective obtained from the scenario method (−1.594 with 99 branches per node). For comparison, the branches and breakpoints are evenly distributed in the scenario and finite adaptability methods, respectively. With 29 breakpoints in the partitioning method and 31 branches in the scenario method, the optimal objectives are −1.589 and −1.605, respectively. Figure 8 and Figure 9 illustrate the partitioning solutions for 2 and 29 breakpoints; as the figures show, the partitioning and scenario solutions closely match.

Table 4.

Solution of finite adaptability method.

Figure 8.

Solution from finite adaptability method using two breakpoints for each parameter.

Figure 9.

Solution from finite adaptability method using 29 breakpoints for each parameter.

4.3. Breakpoint Optimization in Partitioning Method

In the variable breakpoint partitioning technique, the breakpoint locations are not known a priori but are optimized. They are reflected in the matrix of Equation (17), which is formulated as follows:

where and are constant matrices. The corresponding robust scenario-based formulation is

In this formulation, the probability of each scenario depends on the breakpoint locations, since the length of each dimension of each scenario depends on them. The probability of each scenario and are both functions of and can be evaluated based on the distribution of the uncertainty.
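Under independent uniform distributions, the probability of a grid scenario is the product, over parameters, of the relative lengths of its subintervals. This minimal sketch assumes that setting; the function name, intervals, and breakpoints are hypothetical and cells are indexed from 0.

```python
from itertools import product


def cell_probabilities(bounds, breakpoints_per_param):
    """Probability of every grid cell for independent Uniform[lo, hi] parameters (assumption)."""
    per_param = []
    for (lo, hi), bps in zip(bounds, breakpoints_per_param):
        edges = [lo] + sorted(bps) + [hi]
        # Relative length of each subinterval = its probability under the uniform law.
        per_param.append([(b - a) / (hi - lo) for a, b in zip(edges, edges[1:])])
    probs = {}
    for cell in product(*[range(len(p)) for p in per_param]):
        prob = 1.0
        for k, idx in enumerate(cell):
            prob *= per_param[k][idx]
        probs[cell] = prob
    return probs


probs = cell_probabilities([(0, 3), (0, 6)], [[1, 2], [2, 4]])
print(len(probs), sum(probs.values()))
```

When the breakpoints are decision variables, each cell probability becomes a product of affine functions of the breakpoints (bilinear for two parameters), which is why the variable breakpoint partitioning model is nonlinear.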

Table 5 summarizes the results of the variable partitioning technique. For the same number of partitions, the variable partitioning method provides a better objective than the fixed breakpoint method. For instance, with 2 breakpoints per uncertain parameter, the objectives of the variable and fixed methods are −1.500 and −1.444, respectively. The variable method even provides a better objective with just three breakpoints than the fixed method with nine breakpoints. However, for a large number of partitions, the fixed breakpoint method is much faster: with 29 breakpoints, it provides a better objective in less than 1 s, whereas the variable method with 5 breakpoints requires about 18 min of run time.

Table 5.

Variable breakpoint partitioning applied to the illustrating example.

4.4. Flexibility Comparison

We can observe from Table 2 that, for the illustrating problem, the lifting method's objective does not improve beyond −1.333, even with nine breakpoints, while the partitioning method provides a better objective of −1.444 with two breakpoints for each parameter. In this section, we investigate why the lifting method leads to restricted solution quality compared to the partitioning method. For this purpose, the solution of the partitioning method is substituted into the decision rule of the lifting method, using the same breakpoints in both methods (as shown in Figure 7). This results in a linear system of equations. If this system has no solution, the lifting method lacks the flexibility to represent the solution of the partitioning method.

Figure 8 presents the partitioning solution. In this problem, the intervals for , are each divided into three equal pieces. Based on the binary decision rule equations:

For the three nodes at stage 1, the corresponding lifted uncertainty vector takes the three following values:

There is a solution for the above equations. For the nine nodes at stage 2, the lifted uncertainty vector takes nine different values for nodes 4 to 12:

There is no solution satisfying this linear system of equations (there are nine equations and five variables for ). This indicates that the solution of the lifting method is restricted compared to that of the partitioning method; the limited flexibility is due to the affine decision rule over the lifted uncertainty.
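The solvability test above can be automated with the Rouché–Capelli criterion: the system is consistent exactly when the coefficient matrix and the augmented matrix have the same rank. The sketch below assumes step indicators, so the stage-2 rule has the form a0 + a1·1(i ≥ 1) + a2·1(i ≥ 2) + b1·1(j ≥ 1) + b2·1(j ≥ 2) over cells (i, j) of the 3 × 3 grid. The two cell patterns are hypothetical and are not the solution of Figure 8; the function names are also hypothetical.

```python
from fractions import Fraction
from itertools import product


def rank(rows):
    """Rank of a matrix (list of lists) by exact Gauss-Jordan elimination over Fractions."""
    m = [[Fraction(x) for x in row] for row in rows]
    r = 0
    ncols = len(m[0]) if m else 0
    for c in range(ncols):
        pivot = next((i for i in range(r, len(m)) if m[i][c] != 0), None)
        if pivot is None:
            continue
        m[r], m[pivot] = m[pivot], m[r]
        for i in range(len(m)):
            if i != r and m[i][c] != 0:
                f = m[i][c] / m[r][c]
                m[i] = [a - f * b for a, b in zip(m[i], m[r])]
        r += 1
    return r


def lifting_can_reproduce(cell_values):
    """cell_values[(i, j)]: partitioning decision in cell i of xi_1 and cell j of xi_2.

    Checks whether the 5-coefficient stage-2 rule matches all 9 cells.
    """
    A, rhs = [], []
    for i, j in product(range(3), repeat=2):
        A.append([1, int(i >= 1), int(i >= 2), int(j >= 1), int(j >= 2)])
        rhs.append(cell_values[(i, j)])
    augmented = [row + [v] for row, v in zip(A, rhs)]
    return rank(A) == rank(augmented)


separable = {(i, j): int(j >= 1) for i in range(3) for j in range(3)}
diagonal = {(i, j): int(i == j) for i in range(3) for j in range(3)}
print(lifting_can_reproduce(separable), lifting_can_reproduce(diagonal))  # True False
```

With this indicator basis, the rule can only represent additively separable patterns f(i) + g(j); the diagonal pattern is not separable, because the values on cells (0, 0) and (1, 1) sum to 2 while those on (0, 1) and (1, 0) sum to 0, although both sums would equal f(0) + f(1) + g(0) + g(1).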

5. Case Study: Inventory Control Problem

In this section, an inventory control problem with discrete ordering decisions, adapted from [27], is studied. The problem can be formulated as a multistage adaptive robust optimization problem with fixed recourse, as follows. At the beginning of each time period , the product demand is observed. This demand can be satisfied in two ways: (1) by pre-ordering at period t using at most N lots (binary variable is introduced to express whether lot n is ordered in time period t), each delivering a fixed quantity at the beginning of period t for a unit cost of ; (2) by placing a recourse order during period t using at most N lots (binary variable is introduced for this decision), each immediately delivering a fixed quantity for a unit cost of . The pre-ordering cost is always less than the immediate ordering cost (). If the ordered quantity exceeds the demand, the excess units are stored in a warehouse, which incurs a unit holding cost of , and can be used to satisfy future demand. Furthermore, the cumulative volume of pre-orders cannot exceed the ordering budget . The objective is to minimize the total ordering and holding costs over the planning horizon by determining the optimal decisions and . Equations (21a)–(21e) express the problem formulation.

Note that is a static binary decision variable, while is an adaptive binary decision variable that depends on the realized uncertainty . The uncertainty set is represented as: . Each uncertain parameter is assumed to be uniformly distributed. The bounds for each random parameter are chosen from and for . The cumulative ordering budget equals for . It is also assumed that , and the initial inventory is zero.
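
To make the verbal description concrete, the following minimal Python sketch evaluates the total cost of one ordering plan along a single demand path. It is a simplified reading of the description above, not a reproduction of Equations (21a)–(21e): all parameter names are hypothetical, inventory is assumed to stay non-negative at the end of each period (no backlogging), holding cost is charged on end-of-period inventory, the budget is checked against cumulative pre-ordered volume in each period, and recourse orders follow a hypothetical greedy rule (the fewest lots that cover the shortfall) rather than the adaptive decision rules studied in this paper.

```python
import math
from dataclasses import dataclass


@dataclass
class InventoryParams:
    """Hypothetical parameter names for the problem described above."""
    n_lots: int          # maximum number of lots N per period and order type
    q_pre: float         # fixed quantity delivered by one pre-ordered lot
    q_rec: float         # fixed quantity delivered by one recourse lot
    c_pre: float         # unit pre-ordering cost
    c_rec: float         # unit immediate (recourse) ordering cost, > c_pre
    h: float             # unit holding cost
    budget: list[float]  # cumulative pre-order budget for each period


def greedy_recourse(shortfall, p):
    """Hypothetical recourse rule: the fewest lots (at most N) covering the shortfall."""
    if shortfall <= 0:
        return 0
    return min(p.n_lots, math.ceil(shortfall / p.q_rec))


def evaluate(pre_lots, demand, p):
    """Total ordering and holding cost along one demand path.

    pre_lots[t] is the number of lots pre-ordered for period t. Returns None
    when a limit is violated or the demand cannot be met.
    """
    inventory, cost, cum_pre = 0.0, 0.0, 0.0  # zero initial inventory
    for t, d in enumerate(demand):
        if pre_lots[t] > p.n_lots:
            return None  # more than N pre-ordered lots
        cum_pre += pre_lots[t] * p.q_pre
        if cum_pre > p.budget[t] + 1e-9:
            return None  # cumulative pre-order budget violated
        available = inventory + pre_lots[t] * p.q_pre
        rec = greedy_recourse(d - available, p)
        inventory = available + rec * p.q_rec - d
        if inventory < -1e-9:
            return None  # even N recourse lots cannot cover the demand
        cost += (p.c_pre * pre_lots[t] * p.q_pre
                 + p.c_rec * rec * p.q_rec
                 + p.h * inventory)
    return cost


params = InventoryParams(n_lots=2, q_pre=10, q_rec=10, c_pre=1.0,
                         c_rec=2.0, h=0.5, budget=[20, 40])
print(evaluate([1, 1], [15, 5], params))
```

In this two-period example, one recourse lot is needed in the first period and 5 units are carried over, giving a total cost of 47.5. A robust method would instead choose the pre-orders and the recourse rule to minimize the worst case of this cost over the uncertainty set.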

Two different configurations of the inventory problem are studied in this work (Table 6). In the second configuration, the unit cost of immediate ordering (), the unit holding cost at the warehouse () and the maximum number of delivery lots (N) are greater than in the first configuration. Because immediate ordering and storage are more expensive in the second configuration, it is more economical to satisfy demand using first-stage decisions. The lifting and partitioning methods, each with fixed and variable breakpoints, are applied to the case study, and the results are discussed below.

Table 6. Inventory problem parameters.

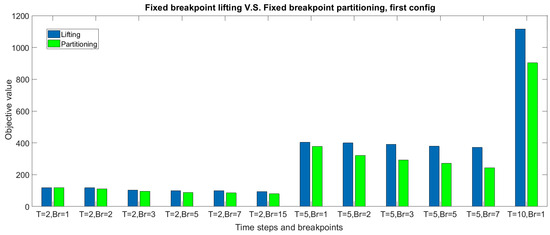

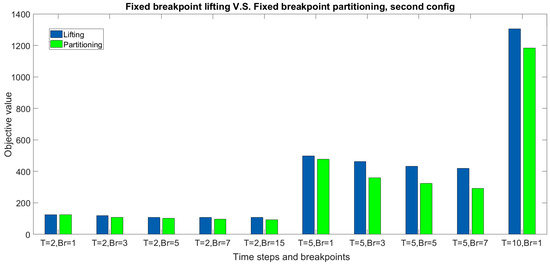

First, the lifting and partitioning methods are compared under the same fixed-breakpoint setting. The following results are obtained:

- The number of variables grows exponentially in the partitioning method, whereas it grows linearly in the lifting method. For a large number of time stages (T) and breakpoints (), the number of variables in the partitioning method can be prohibitively large, and it may not be possible to run the model (Table 7 and Table 8). Therefore, given time and computational resource limitations, the lifting method is suggested for large models (experiments next to , in this case study; Table 9 and Table 10). The ability to handle large models can thus be considered the main advantage of the lifting method.

Table 7. Results for the partitioning method, first configuration.

Table 8. Results for the partitioning method, second configuration.

Table 9. Results for the lifting method, first configuration.

Table 10. Results for the lifting method, second configuration.

- For experiments with small and medium model sizes (up to , in this case study), the partitioning method provides a better objective value in a shorter run time than the lifting method. For instance, for experiments with 5 time steps, the partitioning method finds a better solution in 9.5 s (Table 7, , ) than the lifting method does after 10 h of run time (Table 9, , ). Figure 10 and Figure 11 compare the fixed-breakpoint lifting and finite adaptability (partitioning) methods for the first and second configurations, respectively.

Figure 10. Comparison of the lifting and finite adaptability methods using the same fixed-breakpoint setting (first configuration).

Figure 11. Comparison of the lifting and finite adaptability methods using the same fixed-breakpoint setting (second configuration).

- In general, in both the partitioning and lifting methods, the objective improves as the number of breakpoints increases, except for large problems whose optimality gap remains large after the 10 h run time limit (Table 9 and Table 10). Thus, for large models, a large number of breakpoints is not needed to obtain the best objective within a limited run time.

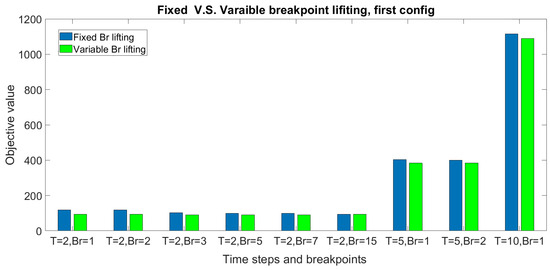
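
The scaling contrast in the first observation above can be illustrated with a rough count. The following Python sketch assumes one scalar uncertain parameter per stage, each split by the same number of breakpoints (as in the illustrative example, where three pieces per parameter give 3 and 9 nodes at stages 1 and 2), and counts, for a single adaptive decision at stage t, the partition cells (each needing its own copy of the decision) versus the coefficients of a linear rule on the indicator-lifted uncertainty. It ignores constraint copies and the auxiliary variables of the robust counterpart, so the numbers are indicative only and are not the model sizes of the formulations in this paper; the function names are hypothetical.

```python
def partition_cells(t, n_breakpoints):
    """Cells seen by a stage-t decision when each of the t observed
    parameters is split by n_breakpoints (one decision copy per cell)."""
    return (n_breakpoints + 1) ** t


def lifted_coefficients(t, n_breakpoints):
    """Coefficients of a linear rule on the lifted vector: a constant plus
    one indicator per breakpoint of each observed parameter."""
    return 1 + n_breakpoints * t


for n_bp in (2, 4):
    for t in (2, 5, 10):
        print(f"breakpoints={n_bp} stage={t}: "
              f"partition cells={partition_cells(t, n_bp)}, "
              f"lifted coefficients={lifted_coefficients(t, n_bp)}")
```

With two breakpoints at stage 2, the count is 9 cells against 5 coefficients, matching the nine equations and five variables of the illustrative example; at stage 10 it is already 59,049 cells against 21 coefficients.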

Next, the fixed- and variable-breakpoint methods are compared. The following observations are made:

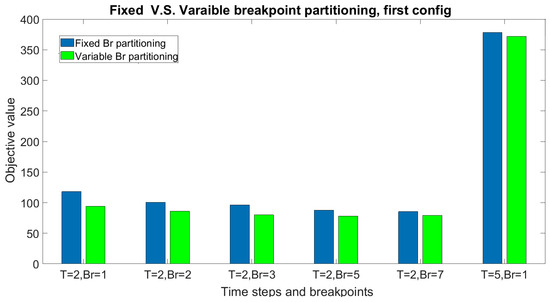

- Variable-breakpoint lifting and partitioning introduce additional variables and require mixed-integer nonlinear optimization, whereas the fixed-breakpoint case requires only mixed-integer linear optimization. As shown in Table 7, Table 9, Table 11 and Table 12, and Figure 12 and Figure 13, the variable-breakpoint techniques can provide a better objective than the fixed-breakpoint methods for small-to-medium size models ( and ), at the cost of a longer run time. For a large number of time steps and breakpoints, the problem becomes too large to run under the available computational resources.

Table 11. Results for the lifting method with breakpoint optimization, first configuration.

Table 12. Results for the partitioning method with breakpoint optimization, first configuration.

Figure 12. Comparison of the solutions from the lifting method using fixed and optimized breakpoints.

Figure 13. Comparison of the solutions from the finite adaptability method using fixed and optimized breakpoints.

- For small-to-medium size experiments (, ), the fixed-breakpoint partitioning method is recommended, since it provides the best objective within the shortest run time under the computational resource restrictions.

- For large problems (, ), the fixed-breakpoint lifting method is the only method that yields a feasible solution under the computational resource limitations. The partitioning technique produces models so large that the solver could not find a solution within the 10 h time limit.

6. Conclusions

In this work, the lifting and partitioning methods for multistage adaptive robust binary optimization problems were studied. Different formulations based on fixed or variable breakpoint settings were presented for each method. Computational studies were conducted to compare computational performance and solution quality. The following conclusions can be drawn from this study. First, the binary decision rule (lifting)-based method and the finite adaptability (partitioning)-based method share the idea of splitting the uncertainty set using breakpoints for each uncertain parameter. Although the lifting-based method has less solution flexibility than the partitioning method under the same breakpoint setting, it offers superior computational tractability and scalability for large problems. When computational resource restrictions are a major concern (especially for problems with a large number of stages), the lifting method with fixed breakpoints is suggested, and the number of breakpoints can be moderately large to avoid inferior solution quality. Otherwise, the partitioning method is suggested. Variable breakpoints can be implemented for the partitioning method, especially for problems with a small number of stages and a small number of breakpoints. As a future research direction, the number of breakpoints can be optimized to avoid unnecessary model complexity.

Author Contributions

Methodology, F.M.N. and Z.L.; Software, F.M.N.; Validation, F.M.N.; Writing—original draft, F.M.N.; Writing—review & editing, Z.L.; Supervision, Z.L.; Funding acquisition, Z.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

Data is contained within the article.

Acknowledgments

The authors gratefully acknowledge the financial support from the Natural Sciences and Engineering Research Council of Canada (NSERC).

Conflicts of Interest

The authors declare no conflict of interest.

References

- Goulart, P.J.; Kerrigan, E.C.; Maciejowski, J.M. Optimization over state feedback policies for robust control with constraints. Automatica 2006, 42, 523–533.

- Skaf, J.; Boyd, S.P. Design of affine controllers via convex optimization. IEEE Trans. Autom. Control 2010, 55, 2476–2487.

- Ben-Tal, A.; Golany, B.; Shtern, S. Robust multi-echelon multi-period inventory control. Eur. J. Oper. Res. 2009, 199, 922–935.

- See, C.T.; Sim, M. Robust approximation to multiperiod inventory management. Oper. Res. 2010, 58, 583–594.

- Gounaris, C.E.; Wiesemann, W.; Floudas, C.A. The robust capacitated vehicle routing problem under demand uncertainty. Oper. Res. 2013, 61, 677–693.

- Calafiore, G.C. An affine control method for optimal dynamic asset allocation with transaction costs. SIAM J. Control Optim. 2009, 48, 2254–2274.

- Fadda, E.; Perboli, G.; Tadei, R. A progressive hedging method for the optimization of social engagement and opportunistic IoT problems. Eur. J. Oper. Res. 2019, 277, 643–652.

- Shapiro, A.; Nemirovski, A. On complexity of stochastic programming problems. In Continuous Optimization; Springer: Berlin/Heidelberg, Germany, 2005; pp. 111–146.

- Dyer, M.; Stougie, L. Computational complexity of stochastic programming problems. Math. Program. 2006, 106, 423–432.

- Garstka, S.J.; Wets, R.J.B. On decision rules in stochastic programming. Math. Program. 1974, 7, 117–143.

- Ben-Tal, A.; El Ghaoui, L.; Nemirovski, A. Robust Optimization; Princeton University Press: Princeton, NJ, USA, 2009; Volume 28.

- Ben-Tal, A.; Nemirovski, A. Robust convex optimization. Math. Oper. Res. 1998, 23, 769–805.

- Ben-Tal, A.; Goryashko, A.; Guslitzer, E.; Nemirovski, A. Adjustable robust solutions of uncertain linear programs. Math. Program. 2004, 99, 351–376.

- Bertsimas, D.; Iancu, D.A.; Parrilo, P.A. Optimality of affine policies in multistage robust optimization. Math. Oper. Res. 2010, 35, 363–394.

- Anderson, B.D.; Moore, J.B. Optimal Control: Linear Quadratic Methods; Courier Corporation: North Chelmsford, MA, USA, 2007.

- Kuhn, D.; Wiesemann, W.; Georghiou, A. Primal and dual linear decision rules in stochastic and robust optimization. Math. Program. 2011, 130, 177–209.

- Chen, X.; Sim, M.; Sun, P.; Zhang, J. A linear decision-based approximation approach to stochastic programming. Oper. Res. 2008, 56, 344–357.

- Chen, X.; Zhang, Y. Uncertain linear programs: Extended affinely adjustable robust counterparts. Oper. Res. 2009, 57, 1469–1482.

- Georghiou, A.; Wiesemann, W.; Kuhn, D. Generalized decision rule approximations for stochastic programming via liftings. Math. Program. 2015, 152, 301–338.

- Goh, J.; Sim, M. Distributionally robust optimization and its tractable approximations. Oper. Res. 2010, 58, 902–917.

- Ben-Tal, A.; Den Hertog, D. Immunizing conic quadratic optimization problems against implementation errors. SSRN Electron. J. 2011.

- Bertsimas, D.; Iancu, D.A.; Parrilo, P.A. A hierarchy of near-optimal policies for multistage adaptive optimization. IEEE Trans. Autom. Control 2011, 56, 2809–2824.

- Bertsimas, D.; Georghiou, A. Design of near optimal decision rules in multistage adaptive mixed-integer optimization. Oper. Res. 2015, 63, 610–627.

- Bertsimas, D.; Caramanis, C. Adaptability via sampling. In Proceedings of the 2007 46th IEEE Conference on Decision and Control, New Orleans, LA, USA, 12–14 December 2007; pp. 4717–4722.

- Caramanis, C.C.M. Adaptable Optimization: Theory and Algorithms. Ph.D. Thesis, Massachusetts Institute of Technology, Cambridge, MA, USA, 2006.

- Hanasusanto, G.A.; Kuhn, D.; Wiesemann, W. K-adaptability in two-stage robust binary programming. Oper. Res. 2015, 63, 877–891.

- Bertsimas, D.; Georghiou, A. Binary decision rules for multistage adaptive mixed-integer optimization. Math. Program. 2018, 167, 395–433.

- Postek, K.; den Hertog, D. Multistage adjustable robust mixed-integer optimization via iterative splitting of the uncertainty set. INFORMS J. Comput. 2016, 28, 553–574.

- Bertsimas, D.; Dunning, I. Multistage robust mixed-integer optimization with adaptive partitions. Oper. Res. 2016, 64, 980–998.

- Misener, R.; Floudas, C.A. ANTIGONE: Algorithms for continuous/integer global optimization of nonlinear equations. J. Glob. Optim. 2014, 59, 503–526.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).