Abstract

Over the past decade, Convolutional Neural Networks (CNNs) have been extensively deployed in security-critical areas; however, the security of CNN models is threatened by adversarial attacks. Decision-based adversarial attacks, in which an attacker relies solely on the final output label of the target model to craft adversarial examples, are the most challenging yet practical adversarial attacks. However, existing decision-based adversarial attacks generally suffer from poor query efficiency or low attack success rates, especially for targeted attacks. To address these issues, we propose a query-efficient Adversarial Direction-guided Decision-based Attack (ADDA), which combines the advantages of transfer-based priors with the benefits of spending only a single query per iteration. The transfer-based priors provided by the gradients of multiple different surrogate models can be used to suggest the most promising search directions for generating adversarial examples. The query consumption of ADDA stems mainly from a single query evaluation of each candidate adversarial sample, which saves a significant number of queries. Experimental results on several ImageNet classifiers, including $\ell_\infty$ and $\ell_2$ threat models, demonstrate that our proposed approach overwhelmingly outperforms existing state-of-the-art decision-based attacks in terms of both query efficiency and attack success rate. We also present case studies in which ADDA successfully fools a real-world API, the Google Cloud Vision API, after only a few queries.

Keywords:

adversarial example; deep neural networks; decision-based attacks; black-box setting; transferable priors

MSC:

68T01

1. Introduction

Convolutional Neural Networks (CNNs) have been increasingly deployed and have achieved remarkable success in many critical real-world tasks, such as computer-aided diagnosis [1], face recognition systems [2], and autonomous driving [3]. However, CNN models are challenged by their inherent vulnerability to adversarial examples [4,5], which are crafted by adding small, human-imperceptible perturbations to legitimate inputs and can maliciously mislead even state-of-the-art CNN models into making incorrect predictions. Such attacks expose the failure of CNN models to capture the task-relevant intrinsic properties of images, leaving them unable to recognize visual concepts correctly; this may pose serious security threats, especially in high-stakes areas. Thus, studying adversarial examples to identify the weaknesses and blind spots of deep learning algorithms is crucial [4,6], as it allows potential security risks to be investigated [7] and the robustness of models to be evaluated [8].

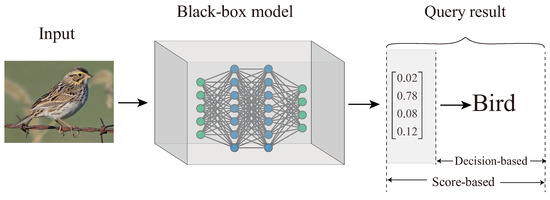

In terms of the adversary’s goal, adversarial attacks can be classified into untargeted and targeted attacks. In an untargeted attack, the classification model is misled into predicting any incorrect class, whereas in a targeted attack it is misled into predicting a specific class chosen by the attacker (the target class). Based on the attacker's knowledge of the target model, adversarial attacks can generally be divided into two primary types, namely, white-box attacks and black-box attacks. In the white-box setting, an adversary has complete access to the victim model, including its structure and parameters, and can easily exploit various gradient-based methods to craft adversarial samples [8,9,10,11]. However, white-box attacks are not practical in many real-world applications, as model-specific information may not be exposed to users. As such, the focus is shifting towards black-box adversarial attacks, in which the adversary can only observe the input–output correspondences of the target model and has no access to its internals. Existing black-box attacks can be categorized into transfer-based and query-based methods according to whether the attacker needs to query the target model. Transfer-based methods [12,13] can launch a black-box attack without any model queries by leveraging the transferability of adversarial examples, i.e., an adversarial example generated for one visual model may fool other neural networks with different architectures trained on the same task. Consequently, the similarity between the victim model and the surrogate model is crucial and greatly influences the attack success rate. Based on the information returned by queries, query-based approaches can be broadly divided into score-based attacks (also known as soft-label attacks) and decision-based attacks (also known as hard-label attacks), as shown in Figure 1.
In score-based attacks, the adversary has access to the prediction probabilities (i.e., soft-label outputs) of the target model and can estimate gradients using zeroth-order optimization techniques to craft adversarial examples [14,15,16,17]. In contrast, the decision-based setting provides only the predicted labels (i.e., hard-label outputs) of the model, without any prediction probabilities [18,19]. Because the query feedback exposes far less information, gradient estimation in decision-based attacks is highly inefficient and often impractical.

Figure 1.

An illustration of two types of query-based black-box attacks. Score-based attacks can obtain predicted probabilities from the victim model, while decision-based attacks only have access to predicted labels.

In this paper, we focus on the most challenging yet practical decision-based black-box setting, where only the final decision (e.g., the top-1 label) is available from each query. This setting is the most feasible in real-world scenarios, but attacks in it usually require a large number of queries, because each query returns very little information. The Boundary Attack [18], the first decision-based attack, may take millions of queries to converge. Although various approaches [19,20,21] have been introduced to improve its query efficiency, the most effective and advanced of them typically still require tens of thousands of queries. In addition to the potentially high query costs in terms of time and money, online machine learning services generally restrict the number of queries allowed per unit of time. For example, the Google Cloud Vision API presently permits 1800 requests per minute, and a large number of queries in a short time may be flagged as fraudulent by the system. Therefore, decision-based attacks with low query efficiency may be inapplicable in the real world. Recently, Shukla et al. [22] presented a hard-label black-box attack for low query budget regimes based on Bayesian optimization. Although the required number of queries is greatly reduced, the attack success rate remains unsatisfactory. To date, how to develop a query-efficient decision-based black-box attack that achieves a higher success rate with smaller distortions under a limited query budget remains an open question.

The gradient of a surrogate model, known as the prior gradient, carries rich prior information about the true gradient [23,24]. Several recent works [23,24,25] have combined transfer-based priors with model queries, reducing the number of queries required by score-based attacks from the millions needed initially [14,15] to fewer than a thousand. However, a comparable reduction has not yet been achieved in the decision-based setting. Brunner et al. [20] used the gradient of a reference model to improve the efficiency of decision-based attacks, showing that such attacks can also benefit from surrogates. Surrogate models are widely used in black-box attacks and should not be ignored whenever they are available.

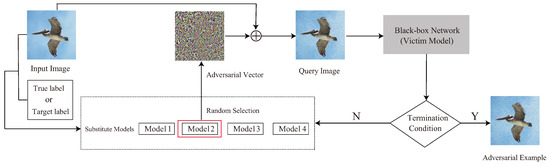

In this paper, we propose an Adversarial Direction-guided Decision-based Attack (ADDA) that uses several pretrained white-box surrogate models. We aim to greatly improve the success rate of both untargeted and targeted attacks under a low query budget (a thousand queries) and a small perturbation constraint. We cast the adversarial process as a constrained optimization problem and then develop an iterative random search framework to craft adversarial examples. At each iteration, we search along the adversarial direction generated by a surrogate model, randomly chosen from the pretrained model set, to produce a candidate seed. Then, we use a predefined query function to determine whether the candidate seed is an adversarial example. A failed seed carries information about the location of the decision boundaries [26] and is used as the starting point of the next iteration. The iterative process stops when an adversarial example is found or the query budget is exhausted. Figure 2 illustrates the framework of ADDA. The superiority of our method stems from three main aspects: (1) guided by the adversarial direction, the candidate adversarial example is updated along the most promising direction; (2) random search along adversarial directions generated by different surrogate models allows inaccurate directions from earlier iterations to be mutually corrected; (3) computing the adversarial directions requires no queries to the victim model, so only a single query per iteration is spent evaluating the candidate seed, which saves a significant number of queries.

Figure 2.

Pipeline of the proposed ADDA. At each step, we randomly choose a surrogate model to calculate the adversarial direction, update the current candidate seed along this direction, and use the query function to determine whether the candidate seed is an adversarial example. The iteration terminates when the victim model classifies the candidate seed into the specified class or the query budget is exhausted.

Our main contributions can be summarized as follows:

- We design ADDA, a simple yet novel random search algorithm that exploits multiple pretrained white-box surrogate models, drastically reducing the number of queries while achieving a high fooling rate.

- We explore the geometric properties of three large classification models trained on the ImageNet dataset along adversarial directions generated by different reference models. The results reveal several interesting findings; for example, adversarial directions consistently outperform random directions in both targeted and untargeted settings.

- We demonstrate the superior efficiency and effectiveness of our algorithm over several state-of-the-art decision-based attacks through comprehensive experiments. Our source code is publicly available at (https://github.com/whitevictory9/ADDA, accessed on 1 July 2023).

- Our approach successfully fools Google Cloud Vision, a commercial black-box machine learning system, using an unprecedentedly low number of queries.

2. Related Work

Decision-Based Attacks. We focus on the restrictive hard-label, or decision-based, black-box setting, in which only the final decision, i.e., the top-1 predicted label, is returned by the victim model. Brendel et al. [18] first studied this problem and proposed the Boundary Attack (BA). It starts from a large adversarial perturbation and iteratively reduces the distortion by random walks along the decision boundary. Although the performance of BA is comparable to that of state-of-the-art white-box attacks, it demands millions of model queries before convergence. Several subsequent works have extended BA and improved its query efficiency. HopSkipJumpAttack (HSJA) [21] does so by exploiting information discarded by BA to estimate the gradient direction, while the Biased Boundary Attack [20] incorporates low-frequency perturbations, regional masks, and surrogate gradients. Both still require tens of thousands of queries to generate adversarial examples with small perturbations. Cheng et al. [19] proposed Opt Attack, which reformulates the original adversarial optimization problem as a search for the direction that leads to the shortest distance to the decision boundary. Sign-Opt Attack [27] refines this method by estimating only the sign of the directional derivative along random directions instead of the gradient itself. Recent approaches have studied decision-based black-box scenarios with severely limited queries. Bayes attack [22] exploits Bayesian optimization to find adversarial examples in a structured low-dimensional subspace. SurFree [28] relies on geometrical properties of the classifier's decision boundaries to search for the largest decrease in distortion. Although Bayes attack leverages the Fast Fourier Transform (FFT) to alleviate the high dimensionality of images, its structured subspace still has thousands of dimensions, which makes Bayesian optimization computationally expensive and ineffective.
SurFree, in contrast, performs outstandingly in terms of adversarial distortion under low query budgets and can be regarded as the state-of-the-art method in the decision-based black-box setting.

Transfer-Based Attacks. It is well known that adversarial examples often transfer across different models, i.e., an adversarial example generated for one visual model can often deceive another [4,29]. Several works on black-box attacks combine transfer-based and query-based attacks. Papernot et al. [7,12] trained a substitute model to mimic the victim model on a synthetic dataset labeled by the target model through queries, and then mounted black-box attacks based on the transferability of adversarial examples. However, these methods rely solely on transferability and tend to suffer from high failure rates. Several black-box attacks have introduced reference models as transferable priors for gradient estimation, which greatly improves their performance [20,23,25]. Subsequently, Yang et al. [30] further improved the attack success rate and query efficiency by updating the surrogate model with query feedback. Suya et al. [31] proposed a hybrid attack strategy that uses a local surrogate model to generate starting points for optimization-based attacks and then tunes the local surrogate model using labels learned from the optimization attacks. Ma et al. [32] proposed Simulator Attack to reduce query complexity; it uses a meta-learning-based framework to train a generalized surrogate model that can closely mimic any unknown victim model. Unlike these approaches, we do not optimize the surrogate model; instead, we use the adversarial directions generated by multiple reference models as directional guidance for a random search framework.

3. Problem Formulation

Let $f: \mathbb{R}^d \rightarrow \mathbb{R}^K$ denote a $K$-class image classification model. Given an input image $x \in \mathbb{R}^d$, the model produces an output $f(x) = (f_1(x), \ldots, f_K(x))$, which can be regarded as a probability distribution over the set of labels $\{1, \ldots, K\}$. The final output label of image $x$ is the class with maximum probability, $\hat{y}(x) = \arg\max_{k} f_k(x)$. We consider both untargeted and targeted adversaries. For an original, correctly classified image $x_0$ with ground-truth label $y$, the goal of the untargeted attacker is to find an adversarial image $x_{adv}$ such that $\hat{y}(x_{adv}) \neq y$ while $x_{adv}$ remains visually indistinguishable from $x_0$. The similarity between $x_{adv}$ and $x_0$ can be quantified by a perceptibility metric $d(x_{adv}, x_0)$. Standard choices of $d$ are $\ell_p$-norms; in this paper, we focus on the $\ell_2$-norm and $\ell_\infty$-norm distances. To constrain adversarial perturbations, we adopt the common approach of requiring $d(x_{adv}, x_0) = \|x_{adv} - x_0\|_p$ to be no larger than a given threshold $\epsilon$.

In the decision-based black-box setting, we assume that $f$ itself is not accessible and that only the final decision $\hat{y}(x)$ (the top-1 prediction) can be obtained by querying the classification model. Because each query to the target network may incur time or monetary costs, we are interested in query-efficient black-box attack algorithms. Specifically, an attack is considered successful only if an adversarial example is constructed within a given query budget $Q$. Hence, finding an adversarial example can be expressed as the following constrained optimization problem:

$$\min_{x_{adv}} \; \mathbb{1}\left[\hat{y}(x_{adv}) = y\right] \quad \text{s.t.} \quad \|x_{adv} - x_0\|_p \le \epsilon, \quad \text{number of queries} \le Q, \tag{1}$$

where $\mathbb{1}[\cdot]$ is the indicator function. The targeted attack instead replaces the objective function in Equation (1) with

$$\mathbb{1}\left[\hat{y}(x_{adv}) \neq y^*\right], \tag{2}$$

where $y^* \neq y$ is a target class provided by the attacker.
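To make the decision-based threat model concrete, the following minimal sketch wraps a victim classifier so that only its top-1 label is exposed and every call is charged against the budget $Q$. The names `HardLabelOracle`, `predict_label`, `lp_distance`, and `is_successful_untargeted` are hypothetical and chosen for illustration; images are treated as flat lists of floats, and the toy classifier in the usage below stands in for a real network or remote API.

```python
import math
from typing import Callable, Sequence


class HardLabelOracle:
    """Exposes only the victim's top-1 label and counts queries against a budget Q.

    `predict_label` is a hypothetical stand-in for the black-box classifier.
    """

    def __init__(self, predict_label: Callable[[Sequence[float]], int], budget: int):
        self._predict_label = predict_label
        self.budget = budget
        self.used = 0

    @property
    def remaining(self) -> int:
        return self.budget - self.used

    def __call__(self, x: Sequence[float]) -> int:
        if self.used >= self.budget:
            raise RuntimeError(f"query budget Q={self.budget} exhausted")
        self.used += 1
        return self._predict_label(x)


def lp_distance(a: Sequence[float], b: Sequence[float], p: float) -> float:
    """d(a, b) = ||a - b||_p; p = 2 and p = inf are the two norms studied here."""
    diffs = [abs(u - v) for u, v in zip(a, b)]
    if p == math.inf:
        return max(diffs)
    return sum(d ** p for d in diffs) ** (1.0 / p)


def is_successful_untargeted(oracle: HardLabelOracle, x_adv: Sequence[float],
                             x0: Sequence[float], y: int, eps: float, p: float) -> bool:
    """Success in the sense of Equation (1): within eps AND label differs from y.

    The distance check runs first, so infeasible points cost no query.
    """
    return lp_distance(x_adv, x0, p) <= eps and oracle(x_adv) != y


# Usage with a toy victim that predicts label 1 when the pixel sum exceeds 1.0.
oracle = HardLabelOracle(lambda x: int(sum(x) > 1.0), budget=2)
```

Checking the perturbation constraint before querying is a deliberate choice in this sketch: candidates outside the $\epsilon$-ball can never count as successes, so spending a query on them would only waste budget.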

4. Our Approach

4.1. Basic Idea

The study of transfer-based attacks reveals that different classification models tend to have similar decision boundaries for the same classification task [13]. In this paper, we assume that the attacker can access pretrained local models, a common assumption in transfer-based attacks. Inspired by [13], for an untargeted attack, if a point within the perturbation constraint lies far from the decision boundary of the clean image in the local surrogate model, it may be an adversarial example for the target model, or it may at least accelerate the adversarial attack. Based on this idea, we roughly regard the search for adversarial examples as minimizing the true-class probability along the correct-classification direction of the surrogate model. Specifically, given a substitute model $s$, we denote its probability for the true label $y$ of the clean image $x_0$ as $s_y(x)$, so that $\nabla_x s_y(x)$ can be seen as the direction of correct classification. The optimization problem in Equation (1) can then be converted into minimizing the true-class probability of the surrogate model:

$$\min_{x_{adv}} \; s_y(x_{adv}) \quad \text{s.t.} \quad \|x_{adv} - x_0\|_p \le \epsilon. \tag{3}$$

When Equation (3) is solved iteratively, the number of iterations is bounded by the query budget $Q$, since each iterate must be checked by querying the victim. A query-efficient attack is therefore one that converges to a solution in few iterations. As an indicator of a successful black-box attack, we introduce the query function

$$\phi(x) = \begin{cases} 1, & \arg\max_k V_k(x) \neq y, \\ 0, & \text{otherwise}, \end{cases} \tag{4}$$

where $V$ is the victim model. An input $x$ that satisfies the perturbation constraint is an adversarial example for $V$ if and only if $\phi(x) = 1$. The query function is available in the decision-based black-box setting, since evaluating it requires only a single query to the victim model $V$. Therefore, within a given query budget $Q$, Equation (1) can be reformulated as the following optimization problem:

$$\max_{x_{adv}} \; \phi(x_{adv}) \quad \text{s.t.} \quad \|x_{adv} - x_0\|_p \le \epsilon, \quad \text{number of queries} \le Q. \tag{5}$$
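Projected gradient descent (PGD) [8], the backbone of some of the most powerful white-box adversarial attacks, can be used to generate adversarial samples iteratively:

$$x_{t+1} = \Pi_{\mathcal{B}_p(x_0, \epsilon)}\left(x_t - \eta\, g_t\right), \tag{6}$$

with

$$g_t = \begin{cases} \operatorname{sign}\left(\nabla_x s_y(x_t)\right), & p = \infty, \\[4pt] \dfrac{\nabla_x s_y(x_t)}{\left\|\nabla_x s_y(x_t)\right\|_2}, & p = 2. \end{cases} \tag{7}$$

Here, $\Pi_{\mathcal{B}_p(x_0, \epsilon)}$ denotes the projection onto $\mathcal{B}_p(x_0, \epsilon) = \{x : \|x - x_0\|_p \le \epsilon\}$, the $\ell_p$ ball of radius $\epsilon$ centered on $x_0$, and $\eta$ is the step size. Under the $\ell_\infty$ norm, $g_t$ is the sign vector of the gradient, whereas under the $\ell_2$ norm it is the normalized gradient. Because the adversarial example is crafted iteratively along $-g_t$, we can intuitively define $-g_t$ as the adversarial direction.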
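To make Equations (6) and (7) concrete, the following minimal pure-Python sketch performs one untargeted PGD update on a flat list of pixel values. It assumes the surrogate gradient $\nabla_x s_y(x_t)$ has already been computed (in practice, e.g., by automatic differentiation of a pretrained surrogate) and, as an added assumption mirroring the clipping described in Section 5.1, clips the result to the valid range $[0, 1]$. The function names are illustrative only.

```python
import math
from typing import List, Sequence

Vector = List[float]


def step_direction(grad: Sequence[float], p: float) -> Vector:
    """g_t of Equation (7): sign(grad) for l_inf, grad / ||grad||_2 for l_2."""
    if p == math.inf:
        return [float((g > 0) - (g < 0)) for g in grad]
    norm = math.sqrt(sum(g * g for g in grad))
    return [g / norm for g in grad] if norm > 0 else [0.0] * len(grad)


def project(x: Sequence[float], x0: Sequence[float], eps: float, p: float) -> Vector:
    """Projection onto B_p(x0, eps), followed by clipping to [0, 1].

    Clipping moves each coordinate towards x0 (which lies in [0, 1]),
    so the result stays inside the eps-ball.
    """
    delta = [a - b for a, b in zip(x, x0)]
    if p == math.inf:
        delta = [min(max(d, -eps), eps) for d in delta]   # coordinate-wise box
    else:
        norm = math.sqrt(sum(d * d for d in delta))
        if norm > eps:                                    # rescale onto the l_2 sphere
            delta = [d * eps / norm for d in delta]
    return [min(max(b + d, 0.0), 1.0) for b, d in zip(x0, delta)]


def pgd_step(x_t: Sequence[float], x0: Sequence[float], grad: Sequence[float],
             eta: float, eps: float, p: float) -> Vector:
    """One untargeted update of Equation (6): x_{t+1} = Proj(x_t - eta * g_t)."""
    g = step_direction(grad, p)
    return project([xi - eta * gi for xi, gi in zip(x_t, g)], x0, eps, p)
```

For example, with $x_t = x_0 = (0.5, 0.5)$, gradient $(3, 4)$, $\eta = 0.5$, and an $\ell_2$ budget $\epsilon = 0.1$, the raw step lands at distance $0.5$ from $x_0$ and is rescaled back onto the ball, giving approximately $(0.44, 0.42)$.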

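Similarly, the goal of the targeted attack can be approximated as maximizing the probability of the target class on the surrogate model, namely, $\max_{x_{adv}} s_{y^*}(x_{adv})$ subject to $\|x_{adv} - x_0\|_p \le \epsilon$, and then searching for the adversarial example by querying the target model. We define the query function of the targeted attack as

$$\tilde{\phi}(x) = \begin{cases} 1, & \arg\max_k V_k(x) = y^*, \\ 0, & \text{otherwise}. \end{cases} \tag{8}$$

The process of generating targeted adversarial examples is similar to that of the untargeted attack, except that the gradient in Equation (7) is taken with respect to $s_{y^*}$ and the update in Equation (6) moves along $+g_t$ (gradient ascent) instead of $-g_t$.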
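The two query functions differ only in which labels count as success. The sketch below expresses Equations (4) and (8) as one helper; `predict_label` is a hypothetical callable returning $\arg\max_k V_k(x)$, so each call of `query_function` costs exactly one query to the victim.

```python
from typing import Callable, Optional, Sequence


def query_function(predict_label: Callable[[Sequence[float]], int],
                   x: Sequence[float], y: int, y_target: Optional[int] = None) -> int:
    """phi(x) of Equation (4) when y_target is None, otherwise phi~(x) of Equation (8)."""
    label = predict_label(x)          # the single hard-label query
    if y_target is None:
        return int(label != y)        # Eq. (4): any label other than y counts
    return int(label == y_target)     # Eq. (8): only the target label y* counts


# Toy three-class victim over a one-pixel "image" (illustrative only).
victim = lambda x: 0 if x[0] > 0.5 else (1 if x[0] > 0.2 else 2)
```

With this toy victim, the input `[0.3]` (predicted label 1) satisfies the untargeted criterion for $y = 0$ but not the targeted criterion for $y^* = 2$, which shows why targeted attacks are harder.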

Unfortunately, the attack method described above may fail to find an adversarial example if only a single surrogate model is used, because its success rate depends on the similarity between the substitute model and the victim model. Therefore, an algorithm that finds adversarial examples both reliably and quickly is still needed.

4.2. Exploration of Different Adversarial Directions

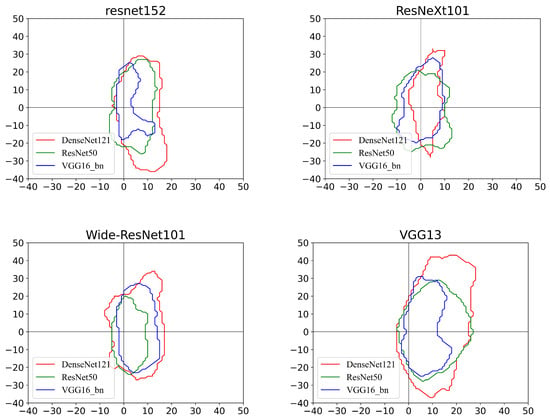

In this section, we explore the geometric properties of classification models along the adversarial directions from different reference models. Specifically, we study the decision boundaries of three victim models, DenseNet121 [33], ResNet50 [34], and VGG16_bn [35], in the planes spanned by the adversarial directions from ResNet152 [36], ResNeXt101 [37], Wide ResNet101 [38], and VGG13 [35], respectively. These seven models are all state-of-the-art models pretrained on the ImageNet [39] dataset; the three victim models are commonly used in prior works [22,24], and the four surrogate models were selected randomly from the torchvision package. For brevity, we denote the four surrogate models as $s^{(1)}, s^{(2)}, s^{(3)}, s^{(4)}$, respectively. We randomly select an image from the ILSVRC2012 validation set, originally classified as ‘brambling’, and use it as an example to study the decision boundary.

Decision Boundaries of the Untargeted Method using Different Reference Models. For each surrogate model $s^{(m)}$, we set the gradient direction in Equation (6) as

$$g^{(m)} = \frac{\nabla_x s^{(m)}_y(x_0)}{\left\|\nabla_x s^{(m)}_y(x_0)\right\|_2}, \quad m = 1, \ldots, 4, \tag{9}$$

with the negative direction $-g^{(m)}$ being the untargeted adversarial direction. We choose $g^{(m)}$ and a random normalized direction $v$ orthogonal to it as the horizontal and vertical axes of a 2D Cartesian coordinate system. The unit value for both axes is one pixel. Each point $(i, j)$ in the 2D plane corresponds to an image perturbed by $i$ and $j$ units along the two directions:

$$x_{i,j} = x_0 + i\, g^{(m)} + j\, v, \tag{10}$$

where $x_0$ is the pixel value vector of the original image. The decision boundaries of all victim models in different planes are plotted in the first row of Figure 3.
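The plane construction can be sketched as follows: draw a random vector, remove its component along the adversarial direction (one Gram–Schmidt step), and label every grid point $x_{i,j}$ with the victim's top-1 prediction. Here `classify` is a hypothetical callable for the victim, and interpreting "one pixel" as a step of $1/255$ on a $[0, 1]$ intensity scale is an assumption exposed as the `unit` parameter. The direction vectors must have at least two dimensions for an orthogonal direction to exist.

```python
import math
import random
from typing import Callable, List, Sequence

Vector = List[float]


def normalize(v: Sequence[float]) -> Vector:
    norm = math.sqrt(sum(a * a for a in v))
    return [a / norm for a in v]


def random_orthogonal(u: Sequence[float], rng: random.Random) -> Vector:
    """Random unit vector orthogonal to u (one Gram-Schmidt step on a Gaussian sample)."""
    u = normalize(u)
    r = [rng.gauss(0.0, 1.0) for _ in u]
    dot = sum(a * b for a, b in zip(r, u))
    return normalize([a - dot * b for a, b in zip(r, u)])


def plane_point(x0: Sequence[float], u: Sequence[float], v: Sequence[float],
                i: int, j: int, unit: float = 1.0 / 255) -> Vector:
    """x_{i,j} = x0 + unit * (i * u + j * v); `unit` is the assumed size of one pixel step."""
    return [x + unit * (i * a + j * b) for x, a, b in zip(x0, u, v)]


def label_grid(classify: Callable[[Sequence[float]], int], x0: Sequence[float],
               u: Sequence[float], v: Sequence[float], radius: int,
               unit: float = 1.0 / 255) -> List[List[int]]:
    """Top-1 labels on a (2 * radius + 1)^2 grid; rows index j (vertical), columns index i."""
    return [[classify(plane_point(x0, u, v, i, j, unit))
             for i in range(-radius, radius + 1)]
            for j in range(-radius, radius + 1)]
```

Contouring the region of the resulting grid whose label equals the ground-truth class traces the closed decision boundaries of the kind shown in Figure 3. Note that this analysis uses the victim as a white-box tool and is separate from the query-limited attack itself.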

Figure 3.

Decision boundaries of the ground-truth label regions for the different victim models. From left to right, the panels show the three victim models in different planes spanned by the adversarial directions generated by different surrogate models. Each closed curve, enclosing all points classified as the correct class, is the decision boundary separating the true class from the others. The origin of the coordinates corresponds to the original image.

From Figure 3, it can be observed that:

- For all victim models, the region in which the model correctly predicts the image is confined to the middle of the plane, and each model's decision boundary forms a small closed curve. The models are quickly misled along the untargeted adversarial direction (i.e., the negative gradient direction). This partially explains the feasibility of the untargeted attack approach presented in Section 4.1.

- The distance to the boundary along the non-targeted adversarial direction is less than along other directions. Note that this conclusion holds for different planes; that is, even if the reference model provides a direction that is not optimal for searching for adversarial samples, it is still better than a random direction. For example, for the ResNet50 model, using the ResNeXt101 surrogate model as the direction guide is slower to reach the boundary than following the directions provided by other substitute models, but is faster than the random directions. Therefore, it can be concluded that the reference model can provide useful guidance in searching for adversarial examples even when it is not optimal.

- The distance to the boundary along the untargeted adversarial direction differs across surrogate models. For an attacker, the ideal situation is to reach the boundary in the shortest distance, as this means that only a very small perturbation is needed to cause misclassification. Specifically, among the four substitute models, guidance from ResNeXt101 requires the fewest steps to leave the ground-truth region of the DenseNet121 model. Thus, intuitively, exploiting the most suitable surrogate for each victim model could greatly improve the efficiency of the adversarial attack.

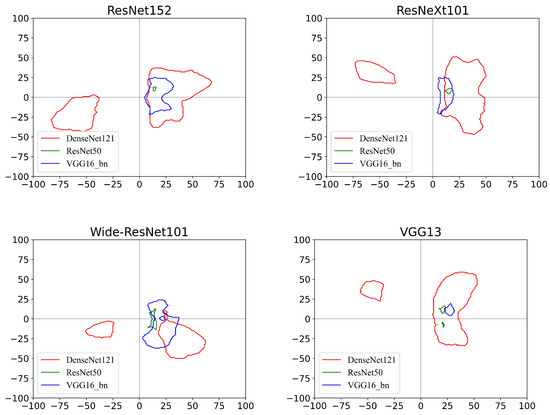

Decision Boundaries of the Targeted Method using Different Reference Models. We randomly choose ‘Ptarmigan’ as the target label $y^*$. Setting

$$\tilde{g}^{(m)} = \frac{\nabla_x s^{(m)}_{y^*}(x_0)}{\left\|\nabla_x s^{(m)}_{y^*}(x_0)\right\|_2}, \quad m = 1, \ldots, 4, \tag{11}$$

as the respective targeted adversarial directions, we then plot the decision boundaries of the three victim models on the planes spanned by $\tilde{g}^{(m)}$ and a random orthogonal direction, as shown in Figure 4. In addition to the findings in the untargeted case, the following can be observed:

Figure 4.

Decision boundaries of the target class regions for the different victim models. From left to right, the images demonstrate the performance of the three victim models in different planes spanned by the adversarial directions generated by different surrogate models. Each closed curve within which all points are classified as the target class is the decision boundary to separate the target class from others. In each graph, the same color represents the decision boundary of the same model. The origin of the coordinates corresponds to the original image.

- Guided by the targeted adversarial direction, a target region, within which all points are predicted as the target class, can be found for each victim model. However, some target regions are very small, and their centers deviate from the x-axis (i.e., the targeted adversarial direction). This may be due to inaccurate targeted adversarial directions provided by the surrogate models, as in the three cases where the victim model is ResNet50 and the reference model is not Wide ResNet101. Clearly, compared with the other three surrogate models, the targeted attack on ResNet50 is more likely to succeed along the adversarial direction generated by Wide ResNet101. In contrast, for the DenseNet121 model, the target region can be reached faster along the other three adversarial directions. Therefore, the optimal adversarial direction differs across victim models.

- Another interesting finding is that some victim models appear to have multiple target regions in the plane. This may be attributed to the nonlinearity of the classification function, i.e., the gradient direction may change significantly when the distortion is large [37]. In this situation, moving along the original adversarial gradient direction no longer increases the probability of being classified as the target label.

4.3. Adversarial Direction-Guided Decision-Based Attack (ADDA)

Based on the above analysis, the question arises whether an algorithm can exploit all of these adversarial directions so that each victim model quickly leaves the ground-truth region (untargeted case) or reaches the target region (targeted case). A natural idea is to treat the adversarial directions provided by the surrogate models as a candidate direction set and then randomly select an adversarial direction from this set to generate each candidate seed. In other words, multiple adversarial directions are used for a random walk within the perturbation limits. Because the adversarial directions are purposeful and diverse, adversarial samples can be found easily through the candidate seeds.

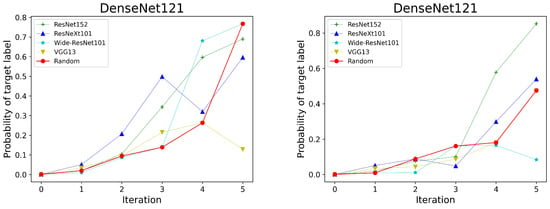

We now develop the above ideas into a query-efficient iterative attack algorithm, which we name ADDA. Each iteration of the algorithm consists of three steps. For brevity, we denote the set of candidate models as $\mathcal{S} = \{s^{(1)}, \ldots, s^{(n)}\}$. First, a candidate model is randomly chosen from $\mathcal{S}$ as the substitute model. Second, an adversarial direction is obtained from the candidate model via Equation (7), and following this direction generates a candidate seed via Equation (6). Third, the query function (Equation (4) for an untargeted attack and Equation (8) for a targeted attack) is used to determine whether the candidate seed is an adversarial example. If the query function returns 1, the candidate seed is an adversarial example and the attack succeeds. Otherwise, the candidate seed is used as the starting point for the next iteration. The iterative process terminates when an adversarial example is found or the query budget runs out. The complete procedure is shown in Algorithm 1. In addition, Figure 5 illustrates the process of targeted ADDA for the norm. It can be observed that, even with different random seeds, ADDA successfully finds adversarial examples after five iterations.
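As a rough illustration of the three-step loop just described (a sketch written from this description, not the authors' implementation), the pure-Python code below assumes that each surrogate is given as a hypothetical callable returning the gradient of its probability for the attacked class (true class $y$ when untargeted, $y^*$ when targeted), and that `predict_label` stands in for one top-1 query to the victim. Toy two-pixel models replace the ImageNet networks, and clipping to $[0, 1]$ is an added assumption.

```python
import math
import random
from typing import Callable, List, Optional, Sequence, Tuple

Vector = List[float]
# Hypothetical surrogate interface: x -> gradient of the surrogate's probability
# for the attacked class (true class y if untargeted, target y* if targeted).
GradFn = Callable[[Sequence[float]], Vector]


def direction(grad: Sequence[float], p: float) -> Vector:
    """g_t of Equation (7): sign for l_inf, unit-l2 gradient for l_2."""
    if p == math.inf:
        return [float((g > 0) - (g < 0)) for g in grad]
    norm = math.sqrt(sum(g * g for g in grad)) or 1.0
    return [g / norm for g in grad]


def project(x: Sequence[float], x0: Sequence[float], eps: float, p: float) -> Vector:
    """Projection onto B_p(x0, eps), then clipping to the valid range [0, 1]."""
    d = [a - b for a, b in zip(x, x0)]
    if p == math.inf:
        d = [min(max(v, -eps), eps) for v in d]
    else:
        norm = math.sqrt(sum(v * v for v in d))
        if norm > eps:
            d = [v * eps / norm for v in d]
    return [min(max(b + v, 0.0), 1.0) for b, v in zip(x0, d)]


def adda(x0: Sequence[float], predict_label: Callable[[Sequence[float]], int],
         surrogates: Sequence[GradFn], y_true: int, eps: float, eta: float,
         budget: int, p: float = math.inf, y_target: Optional[int] = None,
         seed: int = 0) -> Tuple[Optional[Vector], int]:
    """Return (adversarial example or None, number of victim queries used)."""
    rng = random.Random(seed)
    # Untargeted: descend s_y (move along -g_t); targeted: ascend s_{y*} (along +g_t).
    sign = -1.0 if y_target is None else 1.0
    x = list(x0)
    for queries in range(1, budget + 1):
        grad_fn = rng.choice(surrogates)           # step 1: random surrogate from S
        g = direction(grad_fn(x), p)               # step 2: adversarial direction, Eq. (7)
        x = project([xi + sign * eta * gi for xi, gi in zip(x, g)], x0, eps, p)  # Eq. (6)
        label = predict_label(x)                   # step 3: the only query this iteration
        hit = label != y_true if y_target is None else label == y_target  # Eq. (4)/(8)
        if hit:
            return x, queries
        # Otherwise the failed seed is the starting point of the next iteration.
    return None, budget


# Toy victim: label 0 if x[0] + x[1] > 0.9, label 1 if > 0.7, else label 2.
victim = lambda x: 0 if sum(x) > 0.9 else (1 if sum(x) > 0.7 else 2)
# Two toy surrogates whose true-class (label 0) probability grows with x[0] + x[1].
surrogates = [lambda x: [1.0, 1.0], lambda x: [1.0, 0.5]]
x_adv, q = adda([0.5, 0.5], victim, surrogates, y_true=0, eps=0.1, eta=0.02, budget=100)
```

In this toy run, three $\ell_\infty$ steps of size $0.02$ move the pixel sum from $1.0$ to about $0.88$, flipping the victim's label after three queries. Gradient computations never touch the victim, which is what keeps the query count equal to the iteration count.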

Algorithm 1. ADDA Algorithm.

Figure 5.

The process of searching for targeted adversarial examples by randomly selecting the targeted adversarial direction. The red line shows the entire ADDA iteration. Lines in other colors show how the output probability of the victim model varies with the number of iterations under the guidance of the targeted adversarial directions generated by different reference models. In both panels, ADDA successfully finds the adversarial examples after five iterations with different random seeds.

5. Experiments

In this section, we first compare ADDA with several state-of-the-art decision-based attacks on the ImageNet dataset and then apply it to the popular commercial system Google Cloud Vision API to demonstrate the realistic threat of ADDA.

5.1. Experimental Setup

Dataset. We randomly sampled 1000 images from the validation set of ILSVRC2012 as the test images, which were evenly distributed across 1000 classes. All images were resized to and normalized to . To ensure that the perturbed images were valid, we clipped them back to for all algorithms. In all experiments, we only considered images that were initially correctly predicted by the target network.

Models. We considered three commonly used models, DenseNet121, ResNet50, and VGG16_bn, as the victim classifiers, and used four models, ResNet152, ResNeXt101, Wide ResNet101, and VGG13, as surrogate models. All models were derived from the respective PyTorch [40] pretrained models.

Baselines. In the untargeted setting, we compared our proposed algorithm with four state-of-the-art decision-based attacks: Sign-opt [27], HSJA [21], Bayes attack [22], and SurFree [28]. We used the publicly available implementations released by their respective authors and the same hyperparameter settings as in the original papers. In the targeted setting, we report only the performance of our method and do not compare it with these four methods, for the following reasons: (a) SurFree currently supports only untargeted attacks; (b) for targeted attacks, Bayes attack supports only low-dimensional images, not larger images such as those in ImageNet; (c) Sign-opt and HSJA achieve no success under the dual constraints of a low query budget and small perturbations, requiring larger perturbations or more model queries to achieve a non-zero success rate.

Evaluation Metrics. The two core evaluation metrics were the attack success rate (ASR) and the average number of queries (Avg. Q). We defined the success rate as the percentage of images for which an adversarial example within the given distance threshold $\epsilon$ was found within the query budget $Q$. For both untargeted and targeted attacks, we set the query budget $Q$ to 1000, consistent with the setting of Bayes attack.
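The two metrics can be computed from per-image attack outcomes as in the following sketch. The input format, one `(success, queries_used)` pair per test image, is hypothetical; `success` is expected to already encode both conditions of the definition above. Averaging Avg. Q over successful attacks only is an assumption, since the text does not state which images enter the average.

```python
import math
from typing import Sequence, Tuple


def asr_and_avg_queries(results: Sequence[Tuple[bool, int]]) -> Tuple[float, float]:
    """Return (ASR in percent, Avg. Q) from one (success, queries_used) pair per image.

    `success` must already mean: perturbation within eps AND found within the budget Q.
    """
    if not results:
        return 0.0, math.nan
    successful = [q for ok, q in results if ok]
    asr = 100.0 * len(successful) / len(results)
    # Assumption: Avg. Q is averaged over successful attacks only.
    avg_q = sum(successful) / len(successful) if successful else math.nan
    return asr, avg_q
```

For instance, three successes using 10, 30, and 20 queries plus one failure that exhausted the 1000-query budget give an ASR of 75% and an Avg. Q of 20.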

5.2. Untargeted Attacks on ImageNet

First, we compared our proposed ADDA with Sign-opt, HSJA, and Bayes attack, which are the current state-of-the-art decision-based attacks for the $\ell_\infty$ threat model. We set the perturbation bound to and the step size of ADDA in PGD to . Table 1 presents the performance comparison of untargeted attacks on ImageNet. It can be observed that ADDA achieves a significantly higher attack success rate with fewer average queries than Sign-opt, HSJA, and Bayes attack. Specifically, ADDA consistently achieves an attack success rate close to while requiring to fewer queries compared to the competitors across the three different victim models.

Table 1.

Performance evaluation of untargeted attacks on ImageNet under the $\ell_\infty$ threat model; query limit = 1000.

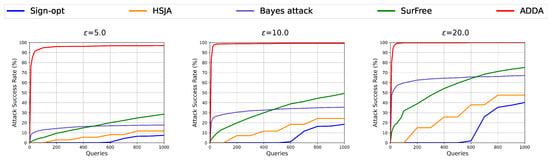

For the $\ell_2$ threat model in the untargeted setting, we compared the performance of ADDA with four advanced decision-based adversarial attacks. To ensure fair comparisons, we considered three different perturbation bounds $\epsilon$ and used a fixed PGD step size for ADDA. Table 2 summarizes the success rates and average numbers of queries. ADDA consistently outperforms its competitors across all classifiers and all values of $\epsilon$. Furthermore, the gap in attack success rate between the baseline approaches and ADDA widens as $\epsilon$ decreases, while ADDA maintains leading query efficiency. Figure 6 displays the attack success rate versus the number of queries required to generate an adversarial example on the DenseNet121 classifier under $\ell_2$ perturbation constraints. Compared to the baseline methods, ADDA shows a dramatically faster increase in success rate. With a small query budget, ADDA achieves a success rate of almost , outperforming the baseline methods in all settings.

Table 2.

Performance evaluation of untargeted attacks on ImageNet with a maximum of 1000 queries.

Figure 6.

Relationship between attack success rate and the number of queries on ImageNet for the DenseNet121 model under the perturbation constraint.

5.3. Targeted Attacks on ImageNet

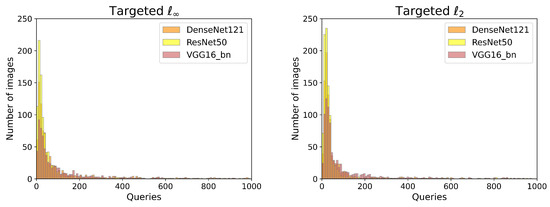

For each example in the targeted attacks, a random class other than the true class was chosen as the target from the 1000 ImageNet classes. Notably, we set the maximum perturbation size as in the targeted case, following several previous papers [23,30]. The PGD step size and the perturbation bound under the perturbation constraint were the same as in the untargeted setting. Because ImageNet images are relatively high-dimensional, previous methods have struggled to explore this search space in targeted attacks, whereas our approach overcomes this difficulty. The experimental results are reported in Table 3 and Figure 7. Table 3 shows that ADDA achieves a high attack success rate while keeping query consumption low. In both the and settings, ADDA performs better on the DenseNet121 and ResNet50 classifiers than on VGG16_bn in targeted attacks. Figure 7 plots histograms of the number of model queries that ADDA required for a successful targeted attack on each of the three victim models. The query distributions are highly right-skewed (concentrated at small counts with a long tail toward large ones): only a few images demanded a large number of queries, while most images required about 100 or fewer queries to construct an adversarial example.
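Target classes for the targeted setting can be drawn as in the NumPy sketch below. The text only says that the target is a random class other than the true one. Drawing it uniformly from the remaining classes is an assumption of this sketch, and the function name is hypothetical.

```python
import numpy as np


def sample_target_classes(true_labels, num_classes=1000, rng=None):
    """Draw one random target class per image, never equal to its true class.

    Assumption: the target is uniform over the other num_classes - 1 classes.
    """
    rng = np.random.default_rng() if rng is None else rng
    true_labels = np.asarray(true_labels)
    # Draw from {0, ..., num_classes - 2}, then shift every draw >= the true label
    # up by one: this skips the true class and stays uniform over the rest.
    offsets = rng.integers(0, num_classes - 1, size=true_labels.shape)
    return offsets + (offsets >= true_labels)
```

The shift trick avoids rejection sampling, so every image gets a valid target in a single vectorized draw.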

Table 3.

Performance evaluation of ADDA under targeted settings on ImageNet with a maximum of 1000 queries.

Figure 7.

Distribution of the number of queries needed for a successful targeted attack on the three different victim models.

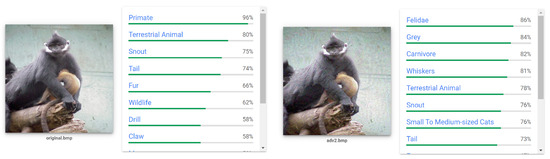

5.4. Attacks on Google Cloud Vision

To demonstrate the effectiveness and applicability of ADDA in real-world applications, we applied it to Google Cloud Vision, a cloud-based computer vision API. For a given picture, the API returns a series of top labels and corresponding confidence scores, although these scores are neither probabilities nor logits. Because the API returns a variable-length list of labels that depends on the image, attacking Google Cloud Vision is considerably more challenging than attacking ImageNet classifiers. We formulate the adversarial criterion as removing the original top-3 concepts from the classification output. To meet this criterion, the query function in Equation (4) can be slightly modified to make it suitable for attacking commercial classifiers.
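Because only the returned labels matter, the adversarial criterion reduces to a boolean check on the label list. The Python sketch below is neither Equation (4) nor a call to the Google Cloud Vision client library. It assumes the API response has already been parsed into (label, score) pairs. It also reads "removing the top-3 concepts" as requiring that none of the clean image's top-3 labels appear anywhere in the new list. This is a stricter interpretation than checking only the new top-3, and the function name is hypothetical.

```python
from typing import Iterable, Sequence, Tuple


def top3_removed(original_labels: Sequence[str],
                 returned: Iterable[Tuple[str, float]]) -> bool:
    """Hard-label success check for a variable-length label list.

    original_labels: labels returned for the clean image, ordered by score.
    returned: (label, score) pairs for the candidate image; the scores are ignored
    because a decision-based attack uses only the labels.
    """
    # Assumption: success means none of the clean image's top-3 labels
    # appears anywhere in the candidate's returned list (case-insensitive).
    top3 = {label.lower() for label in original_labels[:3]}
    present = {label.lower() for label, _score in returned}
    return top3.isdisjoint(present)
```

Ignoring the scores keeps the check within the decision-based threat model, even though the API happens to expose them.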

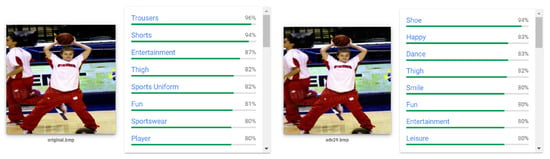

We conducted both and untargeted attacks against the Google Cloud Vision API. Figures 8 and 9 compare the labels returned for clean images with those returned for adversarial examples generated after 2 and 29 queries, respectively. In Figure 8, the original image contains primate-related concepts. After only two queries, the perturbed image is classified as ‘Felidae’: ADDA replaced the top-3 concepts with Felidae-related ones through an imperceptible change to the original image. Figure 9 shows that, after only 29 queries, our attack produces a perturbation that is indistinguishable to humans yet causes the model to miss the player-related concepts and instead label the image with dance-related ones.

Figure 8.

Demonstration of Google Cloud Vision labeling of the original image and the adversarial image after 2 queries with constrained perturbation with . Interestingly, our adversarial image is classified as “Felidae”.

Figure 9.

Demonstration of Google Cloud Vision labeling on the original image and the adversarial image after 29 queries, where the perturbation size is . Interestingly, our adversarial pattern is classified as “Dance”.

6. Discussion and Conclusions

In this paper, we have proposed a query-efficient algorithm for decision-based black-box attacks based on a random search scheme. By exploring the decision boundaries of three different victim models, we observed that the adversarial directions provided by pretrained models are particularly effective directions along which to search for adversarial examples. Based on this finding, we developed a novel random search mechanism that suggests the most promising search directions for adversarial examples. Searching along a randomly chosen adversarial direction yields a large decrease in distortion, leading to fast convergence to high-quality adversarial perturbations within only tens of queries. Experimental results on ImageNet show that ADDA overwhelmingly outperforms existing state-of-the-art decision-based attacks in terms of both query efficiency and attack success rate.
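To make the single-query mechanism concrete, the toy NumPy sketch below shows a decision-based random search that spends exactly one hard-label query per candidate. It is not the authors' ADDA algorithm. The candidate update (an $\ell_\infty$ sign step along a randomly chosen prior direction, followed by projection onto the $\epsilon$-ball and the pixel range), the function name, and the linear toy oracle used for testing are all assumptions chosen for illustration.

```python
import numpy as np


def prior_guided_random_search(x, label, oracle, directions, eps, alpha,
                               max_queries, rng):
    """Untargeted l_inf search that uses one hard-label query per candidate.

    directions: candidate search directions, e.g. surrogate-model gradients
    (hypothetical input; how they are computed is outside this sketch).
    Returns (adversarial example or None, number of queries used).
    """
    x_adv = x.copy()
    for q in range(1, max_queries + 1):
        # Pick one prior direction at random and take a signed step along it.
        d = directions[rng.integers(len(directions))]
        candidate = x_adv + alpha * np.sign(d)
        # Project back onto the eps-ball around x and the valid pixel range [0, 1].
        candidate = np.clip(np.clip(candidate, x - eps, x + eps), 0.0, 1.0)
        # The only interaction with the victim model: a single decision query.
        if oracle(candidate) != label:
            return candidate, q
        x_adv = candidate
    return None, max_queries
```

A hard-label oracle gives no signal about how close a failed candidate came to the boundary. For this reason, the sketch keeps stepping from the last candidate instead of reverting to the previous one.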

Unlike its untargeted performance, ADDA's targeted attack success rate varies considerably across victim models, which may be related to the choice of surrogate models. Because ADDA already substantially outperforms the baseline methods in the current setting, we do not further investigate how the choice of reference models affects attack strength.

Given that ADDA can craft imperceptible adversarial perturbations within a small query budget and fooled a real-world system (Google Cloud Vision) after only two queries, the threat that decision-based attacks pose to deployed systems deserves careful attention. We hope that ADDA will inspire future work on adversarial defenses.

Author Contributions

Writing—original draft, W.L.; Supervision, X.L. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the National Natural Science Foundation of China under Grant No. 61966011.

Data Availability Statement

The data that support the findings of this study are available from the corresponding author upon reasonable request.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Gayathri, J.L.; Abraham, B.; Sujarani, M.S.; Nair, M.S. A computer-aided diagnosis system for the classification of COVID-19 and non-COVID-19 pneumonia on chest X-ray images by integrating CNN with sparse autoencoder and feed forward neural network. Comput. Biol. Med. 2022, 141, 105134.

2. Wang, D.; Yu, H.; Wang, D.; Li, G. Face recognition system based on CNN. In Proceedings of the 2020 International Conference on Computer Information and Big Data Applications (CIBDA), Guiyang, China, 17–19 April 2020; pp. 470–473.

3. Aladem, M.; Rawashdeh, S.A. A single-stream segmentation and depth prediction CNN for autonomous driving. IEEE Intell. Syst. 2020, 36, 79–85.

4. Szegedy, C.; Zaremba, W.; Sutskever, I.; Bruna, J.; Erhan, D.; Goodfellow, I.; Fergus, R. Intriguing properties of neural networks. arXiv 2013, arXiv:1312.6199.

5. Goodfellow, I.J.; Shlens, J.; Szegedy, C. Explaining and harnessing adversarial examples. arXiv 2014, arXiv:1412.6572.

6. Athalye, A.; Carlini, N.; Wagner, D. Obfuscated gradients give a false sense of security: Circumventing defenses to adversarial examples. In Proceedings of the 35th International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 274–283.

7. Papernot, N.; McDaniel, P.; Goodfellow, I.; Jha, S.; Celik, Z.B.; Swami, A. Practical black-box attacks against machine learning. In Proceedings of the 2017 ACM on Asia Conference on Computer and Communications Security, Abu Dhabi, United Arab Emirates, 2–6 April 2017; pp. 506–519.

8. Madry, A.; Makelov, A.; Schmidt, L.; Tsipras, D.; Vladu, A. Towards deep learning models resistant to adversarial attacks. arXiv 2017, arXiv:1706.06083.

9. Kurakin, A.; Goodfellow, I.; Bengio, S. Adversarial machine learning at scale. arXiv 2016, arXiv:1611.01236.

10. Moosavi-Dezfooli, S.M.; Fawzi, A.; Frossard, P. Deepfool: A simple and accurate method to fool deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 26 June–1 July 2016; pp. 2574–2582.

11. Kurakin, A.; Goodfellow, I.J.; Bengio, S. Adversarial examples in the physical world. In Artificial Intelligence Safety and Security; Chapman and Hall/CRC: Boca Raton, FL, USA, 2018; pp. 99–112.

12. Papernot, N.; McDaniel, P.; Goodfellow, I. Transferability in machine learning: From phenomena to black-box attacks using adversarial samples. arXiv 2016, arXiv:1605.07277.

13. Liu, Y.; Chen, X.; Liu, C.; Song, D. Delving into Transferable Adversarial Examples and Black-box Attacks. In Proceedings of the International Conference on Learning Representations, San Juan, Puerto Rico, 2–4 May 2016.

14. Chen, P.Y.; Zhang, H.; Sharma, Y.; Yi, J.; Hsieh, C.J. Zoo: Zeroth order optimization based black-box attacks to deep neural networks without training substitute models. In Proceedings of the 10th ACM Workshop on Artificial Intelligence and Security, Dallas, TX, USA, 3 November 2017; pp. 15–26.

15. Ilyas, A.; Engstrom, L.; Athalye, A.; Lin, J. Black-box adversarial attacks with limited queries and information. In Proceedings of the International Conference on Machine Learning, Stockholm, Sweden, 10–15 July 2018; pp. 2137–2146.

16. Ilyas, A.; Engstrom, L.; Madry, A. Prior convictions: Black-box adversarial attacks with bandits and priors. arXiv 2018, arXiv:1807.07978.

17. Tu, C.C.; Ting, P.; Chen, P.Y.; Liu, S.; Zhang, H.; Yi, J.; Hsieh, C.-J.; Cheng, S.M. Autozoom: Autoencoder-based zeroth order optimization method for attacking black-box neural networks. In Proceedings of the AAAI Conference on Artificial Intelligence, Honolulu, HI, USA, 27 January–1 February 2019; pp. 742–749.

18. Brendel, W.; Rauber, J.; Bethge, M. Decision-based adversarial attacks: Reliable attacks against black-box machine learning models. arXiv 2017, arXiv:1712.04248.

19. Cheng, M.; Le, T.; Chen, P.Y.; Yi, J.; Zhang, H.; Hsieh, C.J. Query-efficient hard-label black-box attack: An optimization-based approach. arXiv 2018, arXiv:1807.04457.

20. Brunner, T.; Diehl, F.; Le, M.T.; Knoll, A. Guessing smart: Biased sampling for efficient black-box adversarial attacks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 4958–4966.

21. Chen, J.; Jordan, M.I.; Wainwright, M.J. Hopskipjumpattack: A query-efficient decision-based attack. In Proceedings of the 2020 IEEE Symposium on Security and Privacy, San Francisco, CA, USA, 18–21 May 2020; pp. 1277–1294.

22. Shukla, S.N.; Sahu, A.K.; Willmott, D.; Kolter, Z. Simple and efficient hard label black-box adversarial attacks in low query budget regimes. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining, Virtual, 14–18 August 2021; pp. 1461–1469.

23. Cheng, S.; Dong, Y.; Pang, T.; Su, H.; Zhu, J. Improving black-box adversarial attacks with a transfer-based prior. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32.

24. Huang, Z.; Zhang, T. Black-box adversarial attack with transferable model-based embedding. arXiv 2019, arXiv:1911.07140.

25. Guo, Y.; Yan, Z.; Zhang, C. Subspace attack: Exploiting promising subspaces for query-efficient black-box attacks. In Advances in Neural Information Processing Systems; Curran Associates, Inc.: Red Hook, NY, USA, 2019; Volume 32.

26. Shi, Y.; Han, Y.; Tian, Q. Polishing decision-based adversarial noise with a customized sampling. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 1030–1038.

27. Cheng, M.; Singh, S.; Chen, P.; Chen, P.Y.; Liu, S.; Hsieh, C.J. Sign-opt: A query-efficient hard-label adversarial attack. arXiv 2019, arXiv:1909.10773.

28. Maho, T.; Furon, T.; Le Merrer, E. Surfree: A fast surrogate-free black-box attack. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 10430–10439.

29. Tramèr, F.; Kurakin, A.; Papernot, N.; Goodfellow, I.; Boneh, D.; McDaniel, P. Ensemble adversarial training: Attacks and defenses. arXiv 2017, arXiv:1705.07204.

30. Yang, J.; Jiang, Y.; Huang, X.; Ni, B.; Zhao, C. Learning black-box attackers with transferable priors and query feedback. Adv. Neural Inf. Process. Syst. 2020, 33, 12288–12299.

31. Suya, F.; Chi, J.; Evans, D.; Tian, Y. Hybrid batch attacks: Finding black-box adversarial examples with limited queries. In Proceedings of the 29th USENIX Security Symposium, Boston, MA, USA, 12–14 August 2020; pp. 1327–1344.

32. Ma, C.; Chen, L.; Yong, J.H. Simulating unknown target models for query-efficient black-box attacks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 11835–11844.

33. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 4700–4708.

34. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

35. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

36. He, K.; Zhang, X.; Ren, S.; Sun, J. Identity mappings in deep residual networks. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Part IV; pp. 630–645.

37. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 1492–1500.

38. Zagoruyko, S.; Komodakis, N. Wide residual networks. arXiv 2016, arXiv:1605.07146.

39. Deng, J.; Dong, W.; Socher, R.; Li, L.J.; Li, K.; Li, F.-F. Imagenet: A large-scale hierarchical image database. In Proceedings of the 2009 IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009; pp. 248–255.

40. Paszke, A.; Gross, S.; Massa, F.; Lerer, A.; Bradbury, J.; Chanan, G.; Killeen, T.; Lin, Z.; Gimelshein, N.; Antiga, L.; et al. Pytorch: An imperative style, high-performance deep learning library. Adv. Neural Inf. Process. Syst. 2019, 32, 8026–8037.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).