Abstract

This paper presents a method for designing control laws to achieve output stabilization of linear systems within specified sets, even in the presence of unknown bounded disturbances. The approach consists of two stages. In the first stage, a coordinate transformation is utilized to convert the original system with output constraints into a new system without constraints. In the second stage, a controller is designed to ensure the boundedness of the controlled variable of the transformed system obtained in the first stage. Two distinct control strategies are presented in the second stage, depending on the measurability of the state vector. If the state vector is measurable, a controller is designed using state feedback based on the Lyapunov method and Linear Matrix Inequalities (LMIs). Alternatively, if only the output vector is measurable, an observer-based controller is designed using a Luenberger observer. In this case, the state estimation error does not need to converge to zero but must remain bounded. The efficacy of the proposed method and the validity of the theoretical results are demonstrated through simulations performed in MATLAB/Simulink.

Keywords: linear dynamical system; change of coordinates; stability; control; linear matrix inequalities

MSC: 93D15

1. Introduction

Many practical control problems require a guarantee that the outputs remain in given sets. For example, the frequency and output voltage of electric generators must be kept within specified bounds in an electric power network [1,2,3,4,5,6,7], and the pressure and flow rate at the wellhead must be kept within a given band when controlling the formation pressure stabilization process [8,9,10]. Violating these constraints during operation may degrade performance and lead to system instability. Therefore, guaranteeing the desired control performance, including transient and steady-state performance, has been one of the key criteria in the synthesis of automatic control systems for practical plants in past decades.

For linear systems without uncertainty in the plant model parameters, classical control methods such as modal control and optimal control [11,12,13,14,15] can ensure that the control error between the controlled signal and the reference signal converges to an arbitrarily small region after a finite time. The characteristics of the transient process can be easily obtained by analyzing the dynamic model via a transfer function or state-space model. The control problems become more complicated when uncertainties, unknown disturbances and nonlinearities exist in the systems. In this case, classical adaptive methods can be used to stabilize the closed-loop system and guarantee that the control error belongs to a given set asymptotically or in finite time [16,17,18,19,20,21]. However, the estimates of the limit set and of the transient time are either rather rough or are established only as existence results. Moreover, these methods do not guarantee the specified deviation of the controlled signal from the reference signal in the transient mode, which can be arbitrarily large at the initial time if the initial conditions of the plant are unknown.

To address large control errors in the transient mode, Miller and Davison [22] proposed in 1991 an adaptive method to “force” this error “to be less than an (arbitrarily small) prespecified constant after an (arbitrarily short) prespecified period of time, with an (arbitrarily small) prespecified upper bound on the amount of overshoot”. In that paper, the given sets are defined by a sequence of rectangles. The size of each rectangle corresponds to the desired maximum deviation of the error from the origin and to the desired time at which the error must belong to the respective rectangle. However, these rectangular regions are rather rough, and the approach applies only to systems with scalar input and output. In 2008, Bechlioulis and Rovithakis [23] proposed a new control methodology called “prescribed performance control (PPC)”. PPC ensures that the control error belongs to a given set, described by a user-designed performance function, and converges to an arbitrarily small neighborhood of the origin. However, implementing the method of [23] requires knowledge of the sign and the set of initial conditions. Moreover, the upper and lower performance functions for transients are rather rough because both bounds are determined by the same function with different signs. Additionally, these performance functions converge exponentially to constants.

The papers by Furtat and Gushchin [24,25] propose a method that generalizes the results of [23] and guarantees that the controlled output variables belong to a given set. The method uses a special coordinate transformation that reduces the control problem with constraints on the output variable to a control problem without constraints. In contrast to [23], the performance functions of the given set can be chosen asymmetrically with respect to the origin, can have arbitrary forms, and need not converge to constants. Consequently, the method of Furtat and Gushchin can be used to stabilize the output signals in [1,2,3,4] within given sets of arbitrary form, whereas the method of Bechlioulis and Rovithakis mostly addresses the tracking problem.

The method in [24,25] is limited to linear systems with scalar input and scalar output (SISO), and the controller parameters are selected manually. In this paper, we introduce a novel approach for designing nonlinear control laws based on the results of [24,25]. Our contribution differs from [24,25] in the following ways:

- We propose a general procedure for stabilizing unstable linear systems, including MIMO cases, using state feedback and observer-based feedback;

- The parameters for the control law are computed utilizing LMIs;

- We provide recommendations for selecting control law parameters to reduce the influence of disturbances.

The paper is organized as follows. Section 2 introduces the notation and gives an important lemma. Section 3 states the problem of controlling a linear plant with a guarantee that the output signals belong to given sets. Section 4 gives a general approach to solving the problem. Section 5 proposes a state feedback controller design for linear plants based on LMIs. Section 6 proposes an observer-based controller design for the case when the state vector is not measurable. Section 7 illustrates the theoretical results by simulations in MATLAB/Simulink.

2. Notation and a Key Lemma

The following notation will be used throughout the paper:

- $\mathbb{R}^n$ is the Euclidean space of dimension n with norm $|\cdot|$;

- $\mathbb{R}^{n \times m}$ denotes the set of all $n \times m$ real matrices with norm $\|\cdot\|$;

- $I$, $0$, and $\mathrm{diag}\{\cdot\}$ denote the identity, zero, and diagonal matrices (of the corresponding dimension);

- $\mathbf{1}_m$ denotes the all-ones vector with m entries equal to 1;

- For square matrices $A$, $A \succ 0$ ($A \prec 0$) means that A is a positive-definite (negative-definite) matrix, and $A \succeq 0$ ($A \preceq 0$) means that A is a non-negative definite (non-positive definite) matrix;

- The symbol $\star$ denotes a symmetric block in a symmetric matrix.

The following well-known lemma will be used to prove the main result of the paper. According to [26,27,28], the S-procedure can be formulated as follows:

Lemma 1 ([26,27,28]). Let the quadratic forms

where , , and the numbers . If there are numbers such that

then, the inequalities

imply the inequality

for all
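For orientation, one commonly used form of the S-procedure for quadratic forms with several constraints can be written as follows. The symbols $f_i$, $A_i$, $\alpha_i$ and $\tau_i$ are labels chosen here for illustration and may differ from the notation of [26,27,28].

```latex
% A standard statement of the S-procedure (labels are illustrative assumptions).
f_i(x) = x^{\top} A_i x, \qquad A_i = A_i^{\top} \in \mathbb{R}^{n \times n},
\qquad \alpha_i \in \mathbb{R}, \qquad i = 0, 1, \dots, m.

% Sufficient condition: nonnegative multipliers \tau_1, \dots, \tau_m with
A_0 \preceq \sum_{i=1}^{m} \tau_i A_i, \qquad
\alpha_0 \ge \sum_{i=1}^{m} \tau_i \alpha_i, \qquad \tau_i \ge 0.

% Conclusion: for every x \in \mathbb{R}^n,
f_i(x) \le \alpha_i \ (i = 1, \dots, m) \;\Longrightarrow\; f_0(x) \le \alpha_0,

% because
f_0(x) \le \sum_{i=1}^{m} \tau_i f_i(x) \le \sum_{i=1}^{m} \tau_i \alpha_i \le \alpha_0 .
```

The last chain of inequalities is the whole argument: the matrix inequality bounds $f_0$ by a weighted sum of the constraint forms, and the scalar inequality bounds that weighted sum by $\alpha_0$.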

3. Problem Statement

Consider the linear dynamical system with m inputs and m outputs

where ; is the state vector; is the output signal; is the control signal; is an unknown bounded disturbance with , where is a known constant; and the matrices are known. The pair is controllable, and the pair is observable. The system (1) has relative degree equal to (i.e., [29,30]).

The goal of the paper is to design a control law that guarantees the output signal of the system (1) stays in the following set

Here, and are continuous functions that are bounded together with their first time derivatives. These functions can be selected by the designer based on the requirements for system operation. For example, in the problem of electric generator control (see [2,4]), it is required to maintain the frequency and the output voltage within the specified bounds and .

4. Solution Method

This section presents the mathematical framework for solving the given problem. The method is based on the change of coordinates [25], which allows one to transform the control problem with constraints on the output variable to the problem of control without constraints on the controlled variable.

Let us introduce the change of coordinates

where is a new variable, the vector function satisfies the following conditions:

- (a)

- and there exists an inverse mapping

- (b)

- is a differentiable function with respect to and t, and ;

- (c)

- and ;

- (d)

- is a bounded function for and , , where is determined by (3).

Here, we consider functions depending only on and t, and the matrix has a diagonal form. To design a control law, information about the dynamics of the variable is required. Taking the time derivative of the function in (4) gives

As both and are bounded functions in (6), we introduce the substitution . Consequently, we have , where . Considering this substitution, we rewrite expression (6) as follows:

We recall the main result from [25] to solve the stated problem.

Theorem 1

This theorem allows one to transfer the control problem (1) with constraints (2) on the output of the system (1) to the control problem on the auxiliary variable of the system (7) without constraint.
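To make the role of the change of coordinates concrete, the sketch below uses one possible transformation that interpolates between the two bounds with a logistic function. This particular function, the bound functions `g_lower` and `g_upper`, and all numerical values are assumptions chosen for illustration; they are not claimed to coincide with the function used later in the examples.

```python
import math


def g_lower(t: float) -> float:
    """Hypothetical lower performance function (an assumption for this sketch)."""
    return -1.0 - 2.0 * math.exp(-t)


def g_upper(t: float) -> float:
    """Hypothetical upper performance function, asymmetric with respect to zero."""
    return 0.5 + 3.0 * math.exp(-t)


def _sigmoid(eps: float) -> float:
    """Numerically stable logistic function with values in (0, 1)."""
    if eps >= 0.0:
        return 1.0 / (1.0 + math.exp(-eps))
    e = math.exp(eps)
    return e / (1.0 + e)


def phi(eps: float, t: float) -> float:
    """Map the unconstrained variable eps to an output between the bounds."""
    return g_lower(t) + (g_upper(t) - g_lower(t)) * _sigmoid(eps)


def phi_inverse(y: float, t: float) -> float:
    """Recover eps from an output y that lies strictly inside the bounds."""
    lo, hi = g_lower(t), g_upper(t)
    if not lo < y < hi:
        raise ValueError("output must lie strictly inside the given set")
    return math.log((y - lo) / (hi - y))


def dphi_deps(eps: float, t: float) -> float:
    """Partial derivative of phi with respect to eps (positive for finite eps)."""
    s = _sigmoid(eps)
    return (g_upper(t) - g_lower(t)) * s * (1.0 - s)


if __name__ == "__main__":
    for eps in (-8.0, 0.0, 8.0):
        y = phi(eps, 1.0)
        print(f"eps={eps:+.1f} -> y={y:.4f}, eps recovered={phi_inverse(y, 1.0):+.4f}")
```

With such a map, any bounded trajectory of the new variable corresponds to an output strictly between the two bounds, which is the mechanism the theorem relies on; the output can approach a bound only if the new variable grows without bound.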

In order to find a control that ensures that is bounded, we consider a Lyapunov function in the form

According to (7), we get

Let be a bounded set containing the origin, within which the trajectory of is to be stabilized. To maintain within , it is sufficient to ensure that the derivative of the Lyapunov function is negative for all not belonging to . In other words, we require for all (in line with the concept of input-to-state stability (ISS) [31]). The derivative of the Lyapunov function in (9) involves the matrix , which is positive definite. In particular, if the system (1) is one-dimensional, then reduces to a positive scalar that does not affect the sign of the expression . Before formulating the controller, we prove the following auxiliary proposition.

Proposition 1.

Let us consider the following block matrices:

where are real diagonal matrices. Then, the matrix multiplication

is a negative definite matrix.

Proof.

It is easy to see that the matrix is symmetric. Let be eigenvalues and eigenvectors of matrix , respectively. Then, we have the following relation

From the last equation we can express as follows

Given that and , it follows that , implying that . Additionally, considering that for all , we deduce that for . Since all eigenvalues of the symmetric matrix are negative, is negative definite. □

Below, we propose control designs for the system (1) in two scenarios: when the state vector is available for measurement, and when only the output signal is measurable.

5. State Feedback Control

Suppose the state vector of the system (1) is measured. We introduce the control law in the form

where is the control gain matrix to be designed. Next, we demonstrate that the control law (10) ensures the negativity of the derivative of the Lyapunov function (8) when analyzing the stability of the closed-loop system.

The following theorem provides a method for determining the gain matrix K in (10).

Theorem 2.

Suppose the transformation (3) satisfies conditions (a)–(d), for any and . If for given numbers , and there exist a matrix and positive coefficients such that for the following linear inequalities are feasible

Proof.

Case 1. :

The Lyapunov function (8) reduces to the form

Let us define the set as follows

where c is a positive number.

For this case with , the set is the interval . It is required that for any given positive number : , i.e., . Since does not affect the sign of the expression (15), these conditions can be rewritten as

Let us denote , and express (17) in matrix form:

By applying the S-procedure, inequalities (18) are satisfied if condition (13) holds. Therefore, the system (11) is ISS. According to Theorem 1, the goal condition (2) is satisfied.

Case 2. :

In this case, is a matrix and cannot be neglected in the sign analysis of , as in the previous case. We require the condition , with defined in (16). These conditions can be rewritten as

Denoting , rewrite (20) in matrix form:

According to S-procedure, the inequalities (21) are satisfied if the following inequalities hold

The first inequality in (22) is equivalent to

Since and according to Proposition 1 inequality (23) holds if the following inequality holds for any positive number

By condition (20), we care about the sign of the derivative of the Lyapunov function only for from the set . Moreover, , we obtain an interval type uncertainty of (24) with . Then, conditions (20) are satisfied if (12) is feasible for any and any given numbers . Furthermore, it is not hard to show that there always exists a diagonal matrix K and , such that the inequalities (12) are feasible. Indeed, using Schur Complement Lemma [28], we can rewrite (12) as follows:

It is evident that for a given and any fixed , inequalities (25) always have finite solutions . As a result, the control law (10) with K satisfying (12) guarantees input-to-state stability of the closed-loop system (11), implying boundedness of . According to Theorem 1, the goal (2) is achieved. Theorem 2 is proved. □
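The Schur complement step invoked in the proof relies on the following standard equivalence [28]. The block names $Q$, $S$ and $R$ are generic labels introduced here for illustration and are not the blocks of (12).

```latex
% Schur complement lemma for a symmetric block matrix (Q and R symmetric).
\begin{pmatrix} Q & S \\ S^{\top} & R \end{pmatrix} \prec 0
\quad \Longleftrightarrow \quad
R \prec 0 \ \ \text{and} \ \ Q - S R^{-1} S^{\top} \prec 0 .
```

Read from right to left, the equivalence turns a condition that is nonlinear in the unknowns (through the product $S R^{-1} S^{\top}$) into a single matrix inequality that is linear in the blocks, which is what makes such conditions tractable for semidefinite programming solvers.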

Remark 1.

The LMI technique and the S-procedure allow us to design the controller for a MIMO system under unknown bounded disturbances based on an analysis of the input-to-state stability of the closed-loop system. Moreover, finding the control gain matrix of (10) reduces to solving the feasibility problems (12) and (13), which can be handled by popular solvers for semidefinite programming (such as SeDuMi [32], SDPT3 [33], and CSDP [34], among others).

Remark 2.

It can be seen that the parameter c in (16) is associated with the radius of the open ball to which the trajectories of the system (11) are attracted (the radius of the ball is equal to ). Decreasing the value of c decreases the radius of the ball and, in turn, the limiting value of . Therefore, as the limiting value of decreases, the oscillation of the variable within the set , caused by the external disturbance , also decreases.
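The attraction-ball reasoning of Remark 2 can be illustrated on a scalar toy model. The sketch below is not the closed-loop system (11): it simulates the hypothetical dynamics d(eps)/dt = -k*eps + phi(t) with |phi(t)| <= phi_bar, for which V = eps^2/2 has a negative derivative whenever |eps| > phi_bar/k. The gain `k`, the bound `phi_bar` and the sinusoidal disturbance are assumptions, and the ratio phi_bar/k plays the role of the ball radius here.

```python
import math


def tail_peak(k: float, phi_bar: float = 1.0, eps0: float = 5.0,
              t_end: float = 20.0, dt: float = 1e-3) -> float:
    """Integrate d(eps)/dt = -k*eps + phi(t) by forward Euler and return the
    largest |eps| observed over the last quarter of the time horizon."""
    steps = int(round(t_end / dt))
    eps = eps0
    peak = 0.0
    for i in range(steps):
        t = i * dt
        disturbance = phi_bar * math.sin(2.0 * t)  # bounded, hypothetical signal
        eps += dt * (-k * eps + disturbance)
        if i >= 3 * steps // 4:
            peak = max(peak, abs(eps))
    return peak


if __name__ == "__main__":
    for gain in (2.0, 8.0):
        print(f"k={gain}: tail peak={tail_peak(gain):.4f}, ball radius={1.0 / gain:.4f}")
```

In this toy setting a smaller ball radius yields a smaller residual oscillation of the variable, which mirrors the qualitative effect that Remark 2 attributes to decreasing c.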

6. Observer-Based Feedback Control

This section considers the system (1) with a measurable output vector. The feedback control law for this system is defined as follows:

where is the feedback control gain matrix to be designed; is the observer state, referred to below as the state estimate, which is generated by the classical Luenberger observer [35]

where represents the observer feedback gain matrix.

Due to the observability of the system, it is feasible to choose a matrix such that the matrix is Hurwitz. Additionally, due to the boundedness of the disturbance , the observation error remains bounded. Assuming an initial observer state of zero, it follows that . The solution of system (28) is given by

In this case, there exist constants and , such that , , and the solution satisfies the upper bound

where .

Let with . Equation (31) can be expressed as

We now state the main result of this section.

Theorem 3.

Suppose the transformation (3) satisfies conditions (a)–(d), for any and . If for given numbers and , there exist a matrix and positive coefficients such that for the following linear inequalities are feasible

Proof.

The proof of Theorem 3 follows directly from the proof of Theorem 2. □

Remark 3.

As seen from (28) and (30), the observation error does not converge to zero due to the influence of the disturbance . Furthermore, this error can be large if the disturbance in the system is significant. However, in this paper the observer need not drive the state estimation error to zero; instead, it must be designed so that the estimation error remains bounded for , and this bound must be estimated. Under the control law (26), the observation error can be viewed as an external bounded disturbance affecting the system (31). Using the LMI and S-procedure techniques, the stability of the system (31) can be analyzed and the control gain matrix of (26) can be determined, as shown in Section 5.
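The behavior described in Remark 3 can be reproduced with a small numerical sketch of the estimation-error dynamics d(e)/dt = (A - L C) e + D f(t) of a Luenberger observer. All data below are assumptions made for illustration: a hypothetical second-order unstable plant, an observer gain that places the eigenvalues of A - L C at -2 and -3, and a sinusoidal disturbance bounded by 0.5. None of these values come from the examples of this paper.

```python
import math


def observer_error_norms(t_end: float = 10.0, dt: float = 1e-3) -> list:
    """Forward-Euler simulation of d(e)/dt = (A - L C) e + D f(t).
    Returns the Euclidean norm of the estimation error at every step."""
    # Hypothetical data (assumptions): A is unstable and (A, C) is observable.
    A = [[0.0, 1.0], [2.0, -1.0]]
    C = [1.0, 0.0]
    D = [0.0, 1.0]
    L = [4.0, 4.0]  # places the eigenvalues of A - L C at -2 and -3
    # Error-dynamics matrix A - L C.
    Ac = [[A[i][j] - L[i] * C[j] for j in range(2)] for i in range(2)]
    e = [1.0, -1.0]  # e(0) = x(0), since the observer starts from zero
    norms = []
    for i in range(int(round(t_end / dt))):
        f = 0.5 * math.sin(3.0 * i * dt)  # bounded disturbance, |f| <= 0.5
        de = [Ac[r][0] * e[0] + Ac[r][1] * e[1] + D[r] * f for r in range(2)]
        e = [e[r] + dt * de[r] for r in range(2)]
        norms.append(math.hypot(e[0], e[1]))
    return norms


if __name__ == "__main__":
    norms = observer_error_norms()
    tail = norms[len(norms) // 2:]
    print(f"peak error norm: {max(norms):.3f}")
    print(f"largest error norm in the second half: {max(tail):.3f}")
```

In this sketch the error norm stays bounded for all time but keeps oscillating at a nonzero level driven by the disturbance, which is exactly the situation the observer-based design is meant to tolerate.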

7. Examples

7.1. Example 1: SISO Case

Consider the system described by Equation (1) with the following parameter values

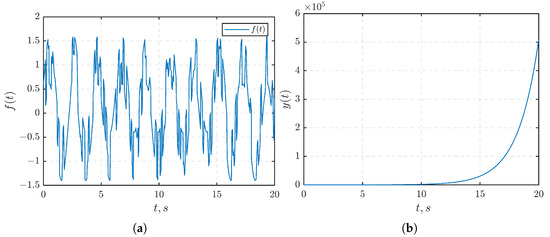

where represents the saturation function and is the signal modeled in MATLAB/Simulink using the “Band-Limited White Noise” block with a noise power and a sampling time of 0.1. This results in . The disturbance is plotted in Figure 1a.

Figure 1.

(a) The disturbance . (b) The open-loop step response .

In this example, the non-Hurwitz property of the matrix A in system (1) indicates system instability. The step response of the open-loop system (Figure 1b) shows that the output goes to infinity as t increases.

To design a closed-loop system, we select the function as follows

It is evident that this function satisfies conditions (a)–(d) of transformation (3). The inverse function is given by

For all and , it holds that

and

The parameters of the restriction functions and of the set are given as follows

The state feedback control law (10) is defined as

Similarly, for the observer-based feedback method (26), the control law is defined as

By choosing the eigenvalues of the observer matrix in (28) as and , the matrix is determined as . Consequently, the bound of can be computed as . The values of and are calculated as and , respectively, leading to . To solve the inequalities (13) for the state feedback method and (34) for the observer-based method, the YALMIP package [36] is employed with the SeDuMi solver. The resulting solutions for and are presented in Table 1.

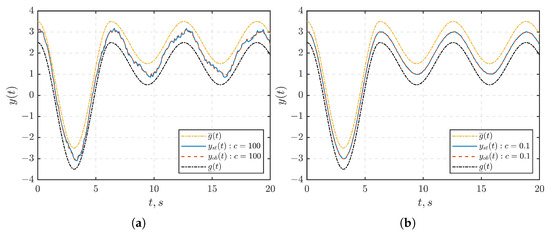

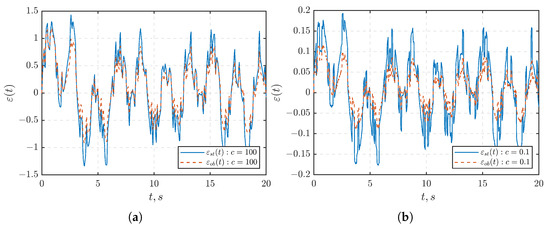

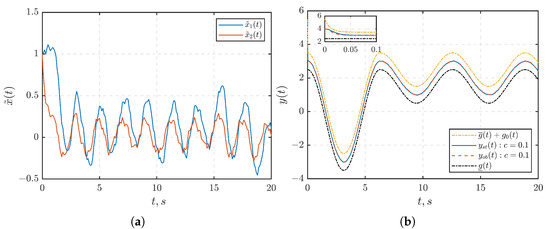

The transient responses of , and with an initial condition of are illustrated in Figure 2, Figure 3 and Figure 4, respectively. Figure 5a shows the plot illustrating the observation errors. In Figure 2, it becomes evident that the output signal remains within the given set at any time. Furthermore, never reaches the boundaries of the given set . This observation is grounded in the choice of the function in (35). Specifically, if the output signal reaches the boundaries or of the set , then the corresponding signal would go to infinity. This divergent behavior contradicts the boundedness of as obtained in Figure 3.

Figure 2.

The transient of output in the closed-loop system under: (a) . (b) . The yellow and black dashed lines represent the performance functions and . The solid blue line represents the output when the state feedback controller (10) is applied, and the red dashed line represents the output when the observer-based controller (26) is applied.

Figure 5.

(a) The observation errors . (b) The transient of output in the closed-loop system under for .

Furthermore, as depicted in Figure 3, the signal is stabilized within predefined ball regions. Specifically, at (Figure 3a), is stabilized within a ball with a radius (approximately ), whereas at (Figure 3b), it is stabilized within a ball with a radius (approximately ). Upon comparing Figure 2a,b, as well as Figure 3a,b, it is important to highlight that decreasing the value of the parameter c leads to enhanced suppression of disturbance effects.

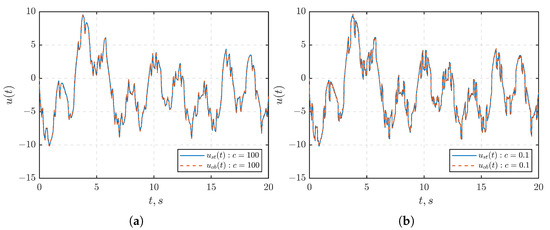

In Figure 4, the oscillations observed in the control signal can be attributed to the influence of the disturbance within the system (depicted in Figure 1a). Furthermore, as mentioned in Remark 3, owing to the presence of , the observation errors illustrated in Figure 5a do not converge to zero. Nevertheless, the observer-based control method effectively maintains the output signal within the given set. As a result, both proposed control methods demonstrate a high level of performance in stabilizing the output of the system (1).

Remark 4.

In the preceding example, we have assumed that the initial value of the output signal belongs to a given set. However, if the initial value of the output signal falls outside this given set, the proposed method becomes inapplicable. This limitation arises from the requirement established in transformation (3), demanding that the output signal be constrained within the predefined set. To address this limitation, we introduce an additional expedient: an exponentially decaying function with rapid decay, to augment the performance functions. By incorporating this modification, the newly defined bounds encompass the initial conditions as well.

Figure 5b illustrates the transient response of for the initial condition , where , a value that lies outside the original set . To rectify this, we introduce a term into the function . This modification ensures that the initial condition is bounded from above by the updated performance function .
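The modification described in Remark 4 can be sketched as follows. The original bound `g_upper`, the decay rate, the safety margin and the initial output value are all hypothetical choices made for this illustration; the sketch only shows one way to add a rapidly decaying exponential term so that the augmented bound covers an initial output lying above the original one.

```python
import math
from typing import Callable


def g_upper(t: float) -> float:
    """Hypothetical original upper performance function (assumption)."""
    return 1.0 + 0.5 * math.exp(-0.2 * t)


def augment_upper(g: Callable[[float], float], y0: float,
                  decay_rate: float = 10.0,
                  margin: float = 0.1) -> Callable[[float], float]:
    """Return g(t) + k*exp(-decay_rate*t), where k >= 0 is chosen so that the
    new bound at t = 0 exceeds the initial output y0 by `margin`.
    If y0 is already below g(0) - margin, the bound is left unchanged."""
    k = max(0.0, y0 + margin - g(0.0))

    def g_new(t: float) -> float:
        return g(t) + k * math.exp(-decay_rate * t)

    return g_new


if __name__ == "__main__":
    y0 = 3.0  # hypothetical initial output above the original bound g_upper(0) = 1.5
    g_aug = augment_upper(g_upper, y0)
    for t in (0.0, 0.5, 2.0):
        print(f"t={t}: original={g_upper(t):.4f}, augmented={g_aug(t):.4f}")
```

Because the added term is smooth, bounded and decays quickly, the augmented function remains bounded together with its derivative and practically coincides with the original bound after a short initial interval. A lower bound can be treated in the same way with the sign of the added term reversed.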

7.2. Example 2: MIMO Case

Let us demonstrate the performance of the control for the system (1) with two inputs and two outputs and the following parameters

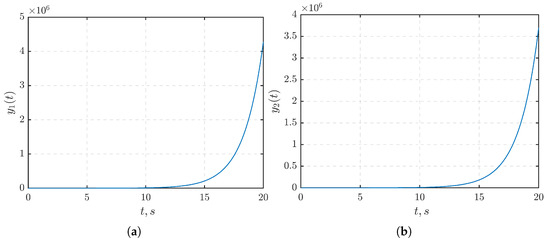

is defined as shown in Section 7.1. The given system is unstable since the matrix A is non-Hurwitz. Figure 6 illustrates the step response of the open-loop system, demonstrating that the output signals (Figure 6a) and (Figure 6b) go to infinity as time increases.

Figure 6.

The open-loop step response: (a) . (b) .

To design a closed-loop system, we formulate the function with as defined in (35)

Then, we derive the inverse functions as follows:

For all and , the following results are obtained

and

Consequently, the matrix is positive definite. Moreover, based on the last inequality, the upper bound of the function can be estimated as .

For we have , where

The parameters governing the restriction functions and are specified as follows:

Then, the value of is calculated as . By selecting the eigenvalues of the observer matrix to be , we determine that and . Utilizing the YALMIP package along with the SeDuMi solver for , we find solutions that satisfy the inequalities (12) and (33) at a specific value for . The detailed outcome is presented in Table 2.

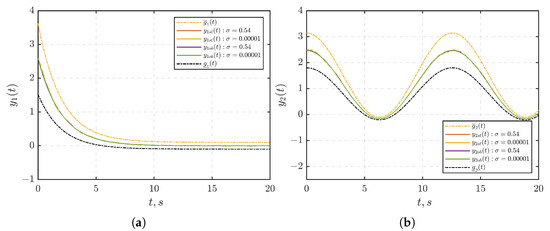

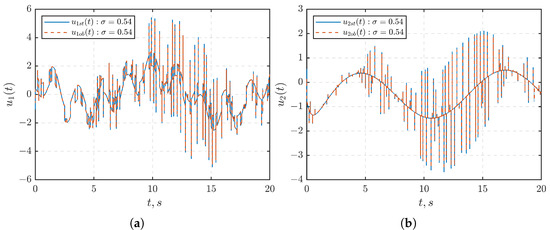

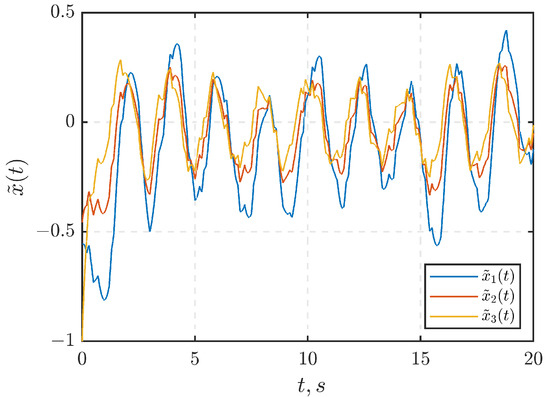

Similar to Section 7.1, it is evident from Figure 7 that the control laws (10) and (26) keep the output signals (Figure 7a) and (Figure 7b) of the MIMO system (1) within the prescribed sets at all times for both cases of choosing or . At the parameter value , the external disturbance is effectively suppressed. The behavior of the control signals and for is illustrated in Figure 8a and Figure 8b, respectively. Figure 9 further illustrates the observation errors. As demonstrated in Section 7.1, achieving convergence to zero in observation errors during the design of the state observer is not necessary.

Figure 7.

The transients of outputs , in the closed-loop system under with and : (a) . (b) . The yellow and black dashed lines represent the performance functions and . The red and orange solid lines represent the outputs when the state feedback controller (10) is applied, and the purple and green solid lines represent the outputs when the observer-based controller (26) is applied.

Figure 9.

The observation errors .

8. Conclusions

This paper proposed a general procedure for designing nonlinear control laws for linear systems with a guarantee that the output signals remain in given sets, based on the transformation of coordinates [25]. Selecting the function in a diagonal form and applying the S-procedure lemma enabled us to analyze the input-to-state stability of the closed-loop MIMO system, which involves a nonstationary matrix . Two control laws were proposed depending on the measurability of the state vector, one based on state feedback and the other on observer feedback. Furthermore, the parameters of the control laws were computed using LMIs, extending the applicability of the method [25] in practice. These control laws guaranteed the boundedness of the auxiliary variable , and hence the maintenance of the output variable within the given set. By reducing the radius of the attractive set of via the parameter c, we reduced the effect of disturbances on the output . Moreover, when designing the observer for the system (1) with unknown disturbances, it is not required to ensure the convergence of observation errors to zero. Instead, the observer should be designed to guarantee the boundedness of the estimation error. As the simulation results showed, both proposed control laws demonstrated a high level of performance in stabilizing the output of the system (1) within the given set.

Author Contributions

Conceptualization, B.H.N. and I.B.F.; methodology, B.H.N. and I.B.F.; validation, B.H.N. and I.B.F.; writing – original draft, B.H.N.; writing – review and editing, B.H.N. and I.B.F.; supervision, I.B.F. All authors have read and agreed to the published version of the manuscript.

Funding

Data Availability Statement

Data sharing is not applicable to this article.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Boldea, I. Control of Electric Generators: A Review. IECON Proc. Industrial Electron. Conf. 2003, 1, 972–980.

2. Furtat, I.; Nekhoroshikh, A.; Gushchin, P. Synchronization of multi-machine power systems under disturbances and measurement errors. Int. J. Adapt. Control. Signal Process. 2022, 36, 1272–1284.

3. Pavlov, G.; Merkurev, G. Energy Systems Automation; Papirus Publisher: St. Petersburg, Russia, 2001. (In Russian)

4. Kundur, P. Power System Stability and Control; McGraw-Hill Education: New York, NY, USA, 1994; p. 1176.

5. Landera, Y.G.; Zevallos, O.C.; Neves, F.A.S.; Neto, R.C.; Prada, R.B. Control of PV Systems for Multimachine Power System Stability Improvement. IEEE Access 2022, 10, 45061–45072.

6. Feydi, A.; Elloumi, S.; Braiek, N.B. Robustness of Decentralized Optimal Control Strategy Applied On Two Interconnected Generators System With Uncertainties. In Proceedings of the 2023 IEEE International Conference on Advanced Systems and Emergent Technologies, Hammamet, Tunisia, 29 April–1 May 2023; pp. 1–6.

7. Tan, H.; Cong, L. Modeling and Control Design for Distillation Columns Based on the Equilibrium Theory. Processes 2023, 11, 607.

8. Verevkin, A.; Kiriushin, O. Control of the formation pressure system using finite-state-machine models. Oil Gas Territ. 2008, 10, 14–19. (In Russian)

9. Bouyahiaoui, C.; Grigoriev, L.I.; Laaouad, F.; Khelassi, A. Optimal fuzzy control to reduce energy consumption in distillation columns. Autom. Remote Control 2005, 66, 200–208.

10. Sunori, S.K.; Joshi, K.A.; Mittal, A.; Nainwal, D.; Khan, F.; Juneja, P. Improvement in Controller Performance for Distillation Column Using IMC Technique. In Proceedings of the 2023 International Conference for Advancement in Technology (ICONAT), Goa, India, 24–26 January 2023; pp. 1–5.

11. Ogata, K. Modern Control Engineering; Prentice Hall: Upper Saddle River, NJ, USA, 2010; Volume 5.

12. Lewis, F.L.; Vrabie, D.; Syrmos, V.L. Optimal Control; John Wiley & Sons: Hoboken, NJ, USA, 2012.

13. Filabadi, M.M.; Hashemi, E. Distributed Robust Control Framework for Adaptive Cruise Control Systems. In Proceedings of the 2023 American Control Conference (ACC), San Diego, CA, USA, 31 May–2 June 2023; pp. 3215–3220.

14. Wang, T.; Sun, Z.; Ke, Y.; Li, C.; Hu, J. Two-Step Adaptive Control for Planar Type Docking of Autonomous Underwater Vehicle. Mathematics 2023, 11, 3467.

15. Hassan, F.; Zolotas, A.; Halikias, G. New Insights on Robust Control of Tilting Trains with Combined Uncertainty and Performance Constraints. Mathematics 2023, 11, 3057.

16. Ioannou, P.A.; Sun, J. Robust Adaptive Control; PTR Prentice-Hall: Upper Saddle River, NJ, USA, 1996; Volume 1.

17. Krstic, M.; Kokotovic, P.V.; Kanellakopoulos, I. Nonlinear and Adaptive Control Design; John Wiley & Sons, Inc.: Hoboken, NJ, USA, 1995.

18. Narendra, K.S.; Annaswamy, A.M. Stable Adaptive Systems; Courier Corporation: Chelmsford, MA, USA, 2012.

19. Tao, G. Adaptive Control Design and Analysis; John Wiley & Sons: Hoboken, NJ, USA, 2003; Volume 37.

20. Xu, J.; Lin, N.; Chi, R. Improved High-Order Model Free Adaptive Control. In Proceedings of the 2021 IEEE 10th Data Driven Control and Learning Systems Conference (DDCLS), Suzhou, China, 14–16 May 2021; pp. 704–708.

21. Khlebnikov, M.V.; Polyak, B.T.; Kuntsevich, V.M. Optimization of linear systems subject to bounded exogenous disturbances: The invariant ellipsoid technique. Autom. Remote Control 2011, 72, 2227–2275.

22. Miller, D.E.; Davison, E.J. An Adaptive Controller Which Provides an Arbitrarily Good Transient and Steady-State Response. IEEE Trans. Autom. Control 1991, 36, 68–81.

23. Bechlioulis, C.P.; Rovithakis, G.A. Robust Adaptive Control of Feedback Linearizable MIMO Nonlinear Systems With Prescribed Performance. IEEE Trans. Autom. Control 2008, 53, 2090–2099.

24. Furtat, I.; Gushchin, P. Control of Dynamical Systems with Given Restrictions on Output Signal with Application to Linear Systems. IFAC Pap. 2020, 53, 6384–6389.

25. Furtat, I.B.; Gushchin, P.A. Control of Dynamical Plants with a Guarantee for the Controlled Signal to Stay in a Given Set. Autom. Remote Control 2021, 82, 654–669.

26. Polyak, B.T.; Khlebnikov, M.V.; Shcherbakov, P.S. Linear Matrix Inequalities in Control Systems with Uncertainty. Autom. Remote Control 2021, 82, 1–40.

27. Poznyak, A.; Polyakov, A.; Azhmyakov, V. Attractive Ellipsoids in Robust Control; Springer International Publishing: Berlin/Heidelberg, Germany, 2014.

28. Boyd, S.; El Ghaoui, L.; Feron, E.; Balakrishnan, V. Linear Matrix Inequalities in System and Control Theory; SIAM: Philadelphia, PA, USA, 1994.

29. Fradkov, A.L.; Miroshnik, I.V.; Nikiforov, V.O. Nonlinear and Adaptive Control of Complex Systems; Springer: Berlin/Heidelberg, Germany, 1999.

30. Isidori, A. Nonlinear Control Systems II; Springer: Berlin/Heidelberg, Germany, 2013.

31. Khalil, H.K. Nonlinear Control; Pearson: New York, NY, USA, 2015; Volume 406.

32. Sturm, J.F. Using SeDuMi 1.02, a Matlab toolbox for optimization over symmetric cones. Optim. Methods Softw. 1999, 11, 625–653.

33. Toh, K.C.; Todd, M.J.; Tütüncü, R.H. SDPT3-a MATLAB software package for semidefinite programming, version 1.3. Optim. Methods Softw. 1999, 11, 545–581.

34. Borchers, B. CSDP, A C library for semidefinite programming. Optim. Methods Softw. 1999, 11, 613–623.

35. Luenberger, D. An introduction to observers. IEEE Trans. Autom. Control 1971, 16, 596–602.

36. Löfberg, J. YALMIP: A toolbox for modeling and optimization in MATLAB. In Proceedings of the IEEE International Symposium on Computer-Aided Control System Design, Taipei, Taiwan, 2–4 September 2004; pp. 284–289.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).