A Multi-Scale Hybrid Attention Network for Sentence Segmentation Line Detection in Dongba Scripture

Abstract

1. Introduction

- (1)

- A new Dongba scripture sentence segmentation line detection dataset named DBS2022 is constructed, which consists of 2504 standard images and their annotations. It supports Dongba scripture sentence segmentation line detection (DS-SSLDD). As far as we know, this is the only dataset from the real Dongba scriptures, including images of Dongba scripture such as sacrifices and ceremonies.

- (2)

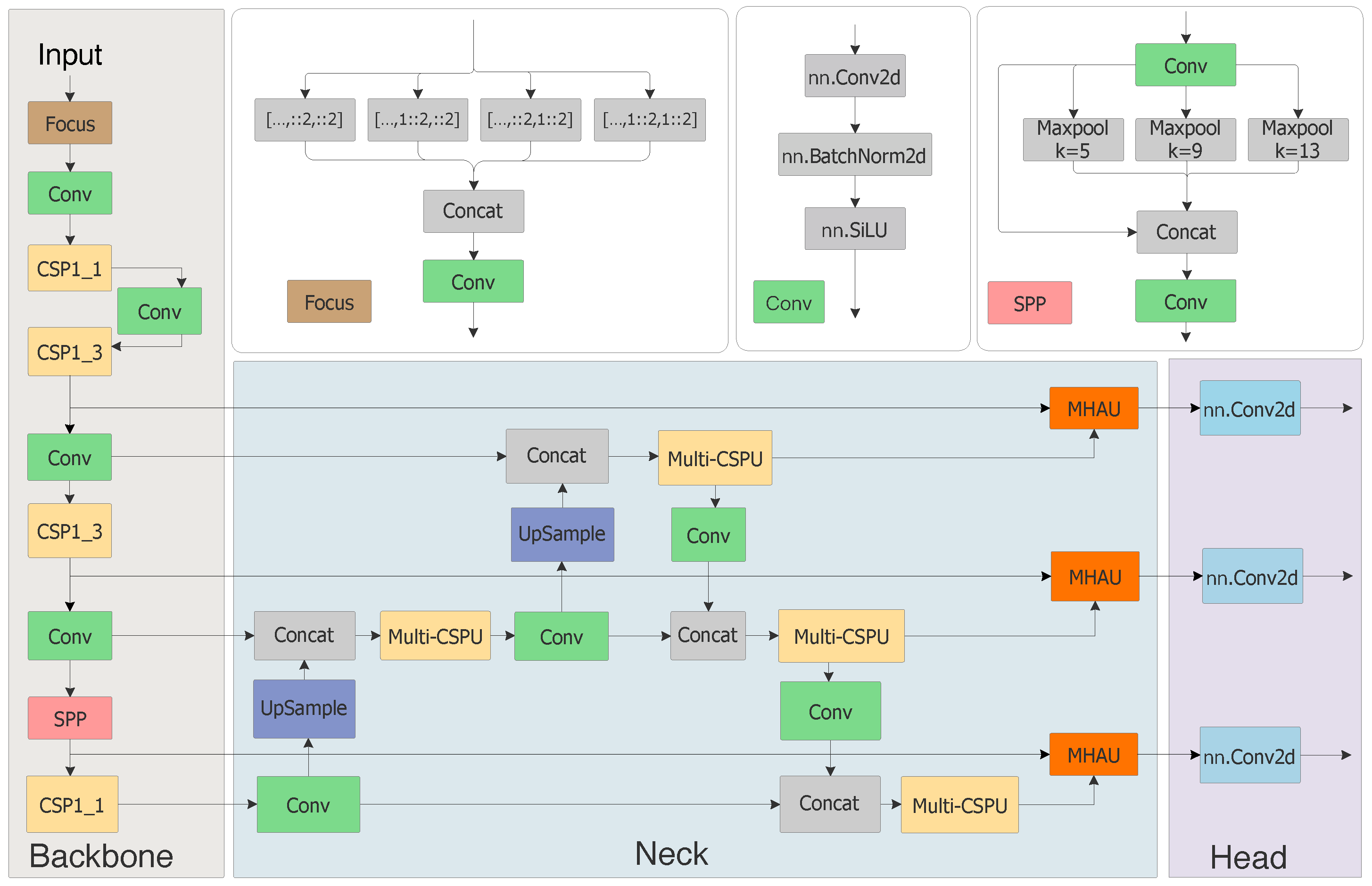

- A novel structure named multi-scale hybrid attention network (Multi-HAN) is proposed, where both novel multiple hybrid attention unit (MHAU) and multi-scale cross-stage partial unit (Multi-CSPU) are designed. MHAU is designed for establishing dependence between multi-scale channel attention. Multi-CSPU is proposed for multi-scale and richer feature extraction, and implements a gradient combination with richer scales, thus improving the learning ability of the network.

- (3)

- As an application, a new object detection framework named Multi-HAN for DS-SSLD is proposed. We conducted experiments on our constructed DBS2022, which validated the effectiveness of our methods, and the quantity of the experimental results demonstrates that our proposed Multi-HAN is superior to the state-of-the-art methods.
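A minimal sketch of how dependencies among multi-scale channel attention can be established, assuming an EPSANet-style [44] design: each scale branch yields a channel-attention vector through a squeeze-and-excitation step [42] (global average pooling, a fully connected layer with ReLU, a second fully connected layer, and a sigmoid), and a softmax across branches then normalizes these weights per channel so that the scales compete for each channel. The branch layout, the shared weights `w1`/`w2`, and all function names are hypothetical placeholders, not the authors' MHAU parameters.

```python
import math

def global_avg_pool(feature):
    """Squeeze step: average each channel (an H x W list of lists) to one scalar."""
    return [sum(sum(row) for row in ch) / (len(ch) * len(ch[0])) for ch in feature]

def se_weights(pooled, w1, w2):
    """Excitation step: FC -> ReLU -> FC -> sigmoid, one weight in (0, 1) per channel."""
    hidden = [max(0.0, sum(w * x for w, x in zip(row, pooled))) for row in w1]
    logits = [sum(w * h for w, h in zip(row, hidden)) for row in w2]
    return [1.0 / (1.0 + math.exp(-z)) for z in logits]

def cross_scale_softmax(branch_weights):
    """Softmax over the scale (branch) axis, computed separately for every channel,
    so that the scales compete with one another for each channel."""
    num_channels = len(branch_weights[0])
    fused = [[0.0] * num_channels for _ in branch_weights]
    for c in range(num_channels):
        exps = [math.exp(bw[c]) for bw in branch_weights]
        total = sum(exps)
        for s, e in enumerate(exps):
            fused[s][c] = e / total
    return fused

def recalibrate(branches, w1, w2):
    """Scale every channel of every branch by its cross-scale-normalized weight.
    branches: list of S feature maps, each a list of C channels of H x W values.
    w1 (hidden x C) and w2 (C x hidden) are hypothetical SE weights shared by all branches."""
    per_branch = [se_weights(global_avg_pool(b), w1, w2) for b in branches]
    attn = cross_scale_softmax(per_branch)
    return [
        [[[v * attn[s][c] for v in row] for row in ch] for c, ch in enumerate(b)]
        for s, b in enumerate(branches)
    ]

# Usage: two identical 2-channel branches receive equal (0.5) weights per channel.
feat = [[[1.0, 1.0], [1.0, 1.0]], [[2.0, 2.0], [2.0, 2.0]]]
out = recalibrate([feat, feat], w1=[[0.5, 0.5]], w2=[[1.0], [-1.0]])
print(out[0][1])  # [[1.0, 1.0], [1.0, 1.0]]
```

Normalizing across scales, rather than applying each branch's sigmoid weights independently, is what couples the branches: raising one scale's weight for a channel necessarily lowers the others.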

2. Related Work

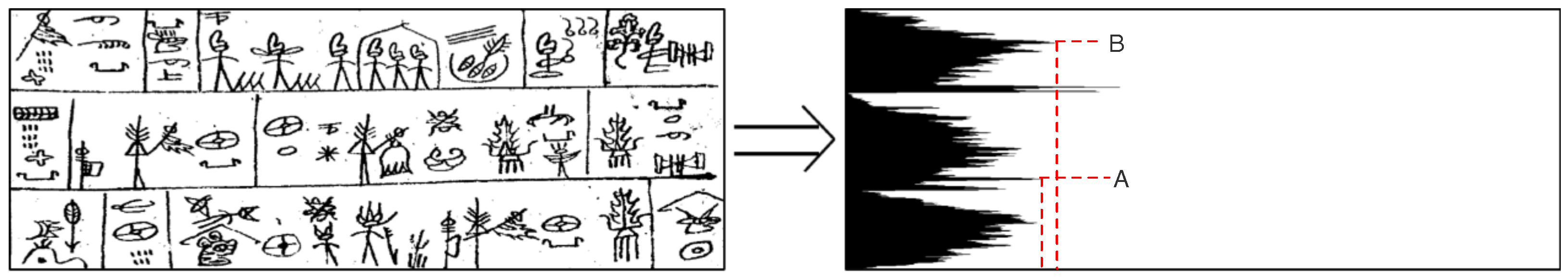

2.1. Traditional Approaches

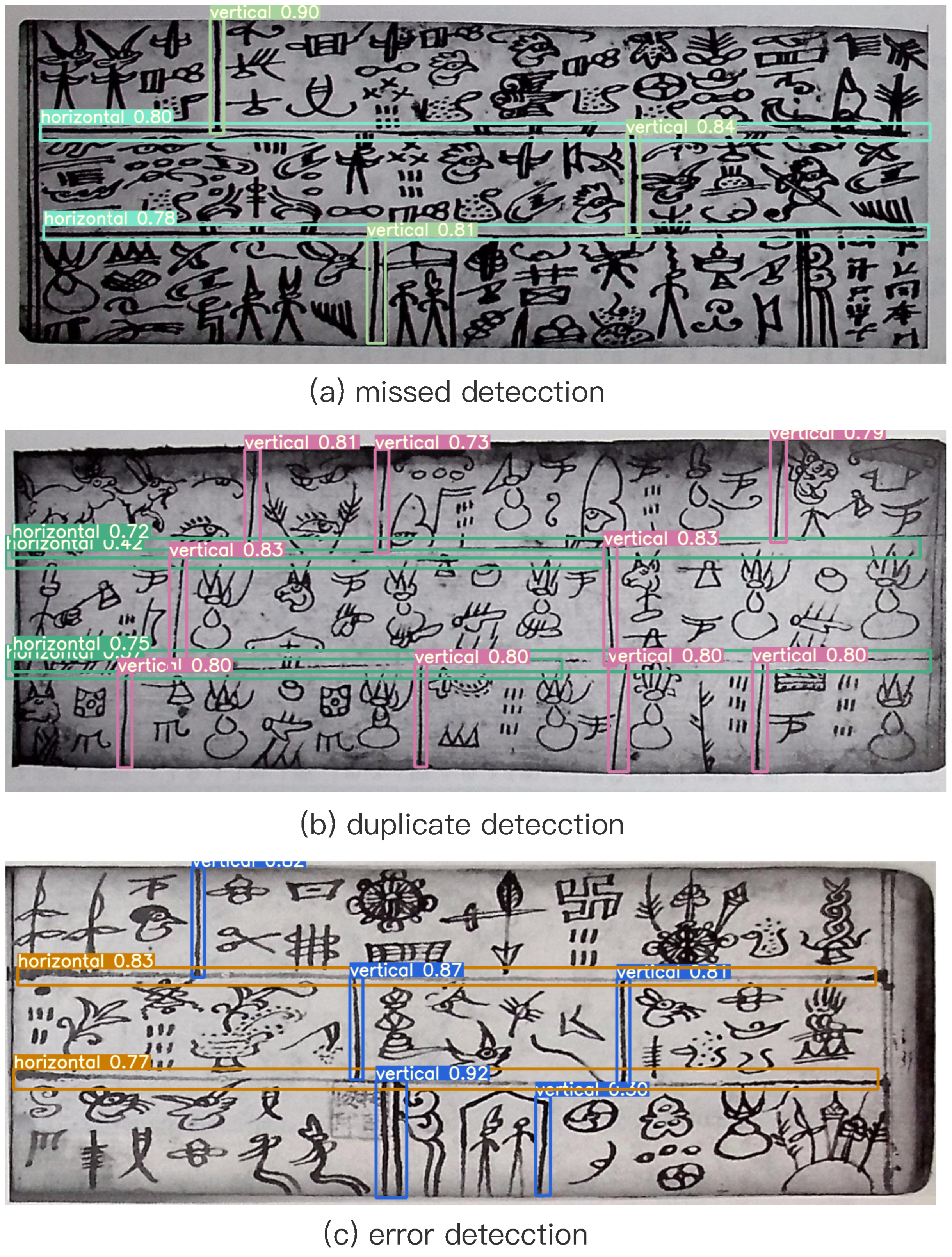

2.2. Deep Learning Network Approaches

2.3. Attention Mechanism in CNN

3. Method

3.1. Overview Structure of Multi-HAN

3.2. Multiple Hybrid Attention Unit

3.2.1. Squeeze and Concat Module

3.2.2. Attention Extraction Module

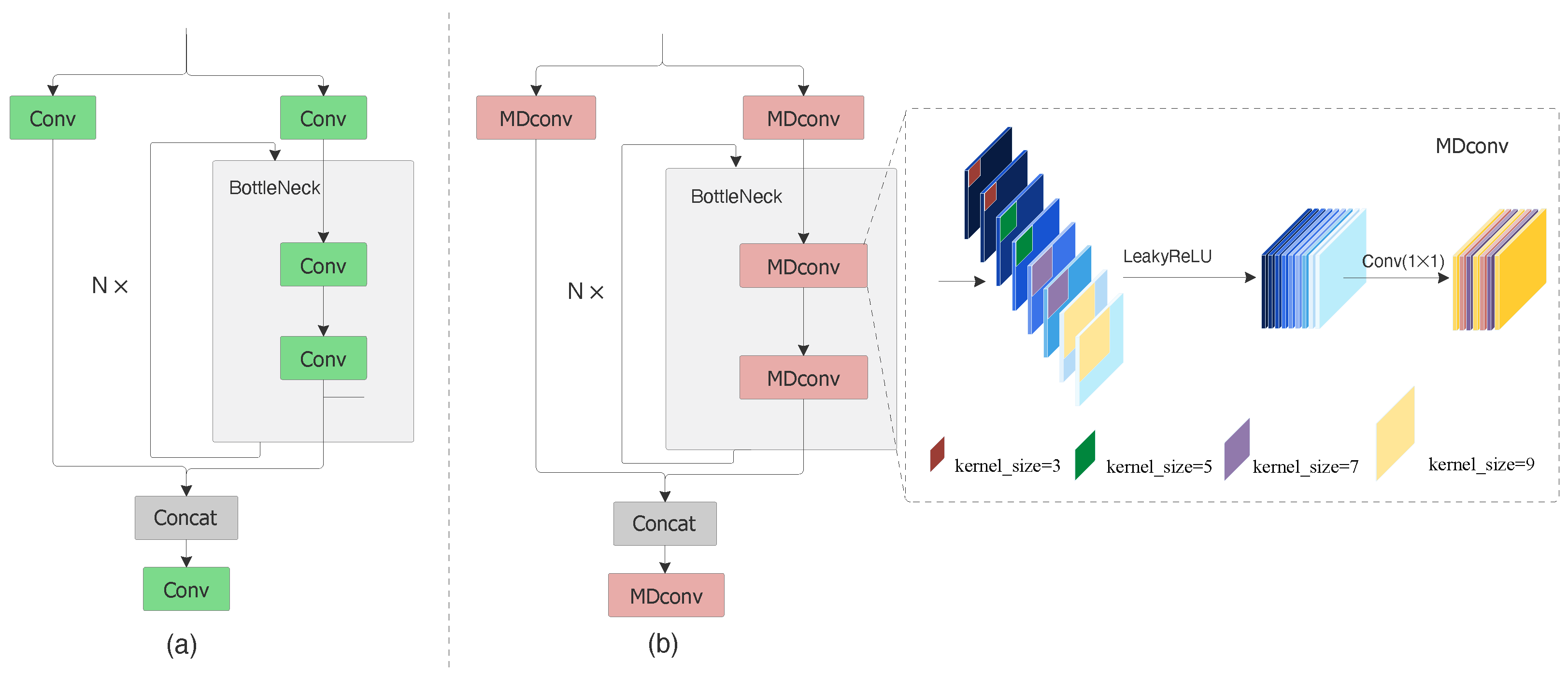

3.3. Multi-Scale Cross-Stage Partial Unit

4. Construction of the Dongba Scripture Sentence Segmentation Dataset

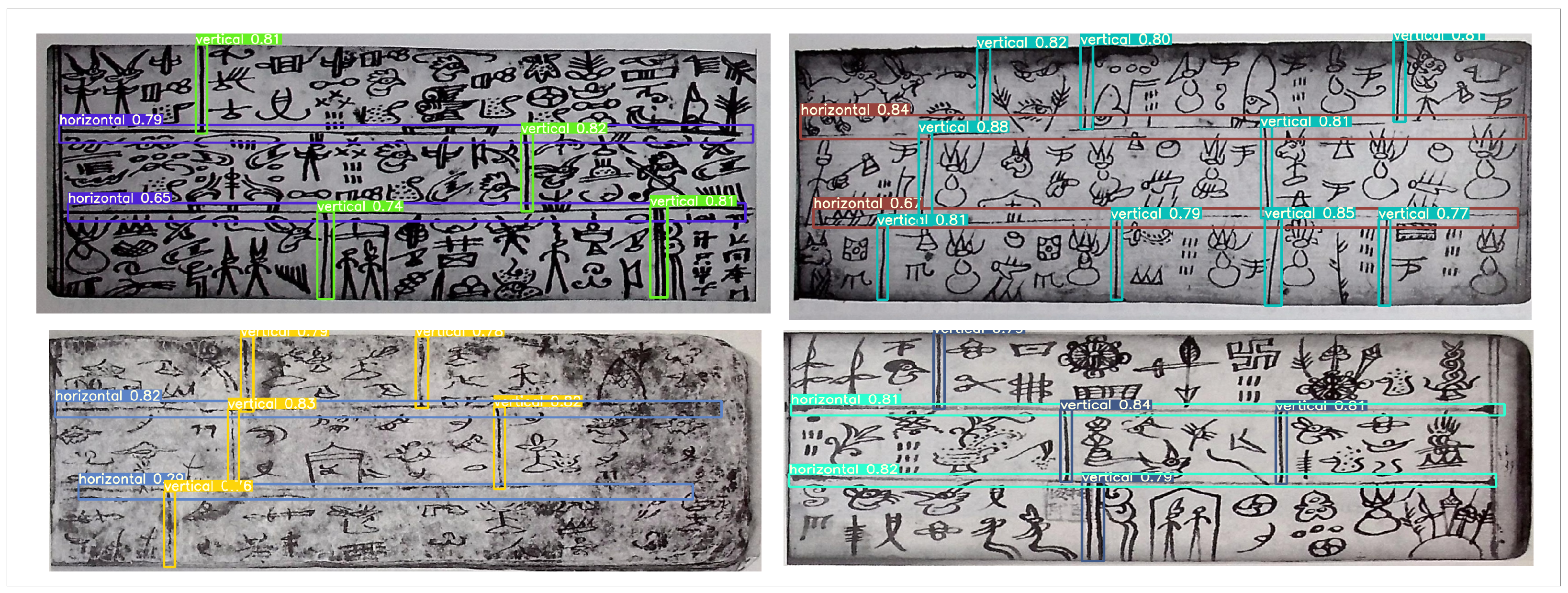

Quality Control and Annotations

5. Experiments

5.1. Experimental Setup

5.2. Evaluation Metrics

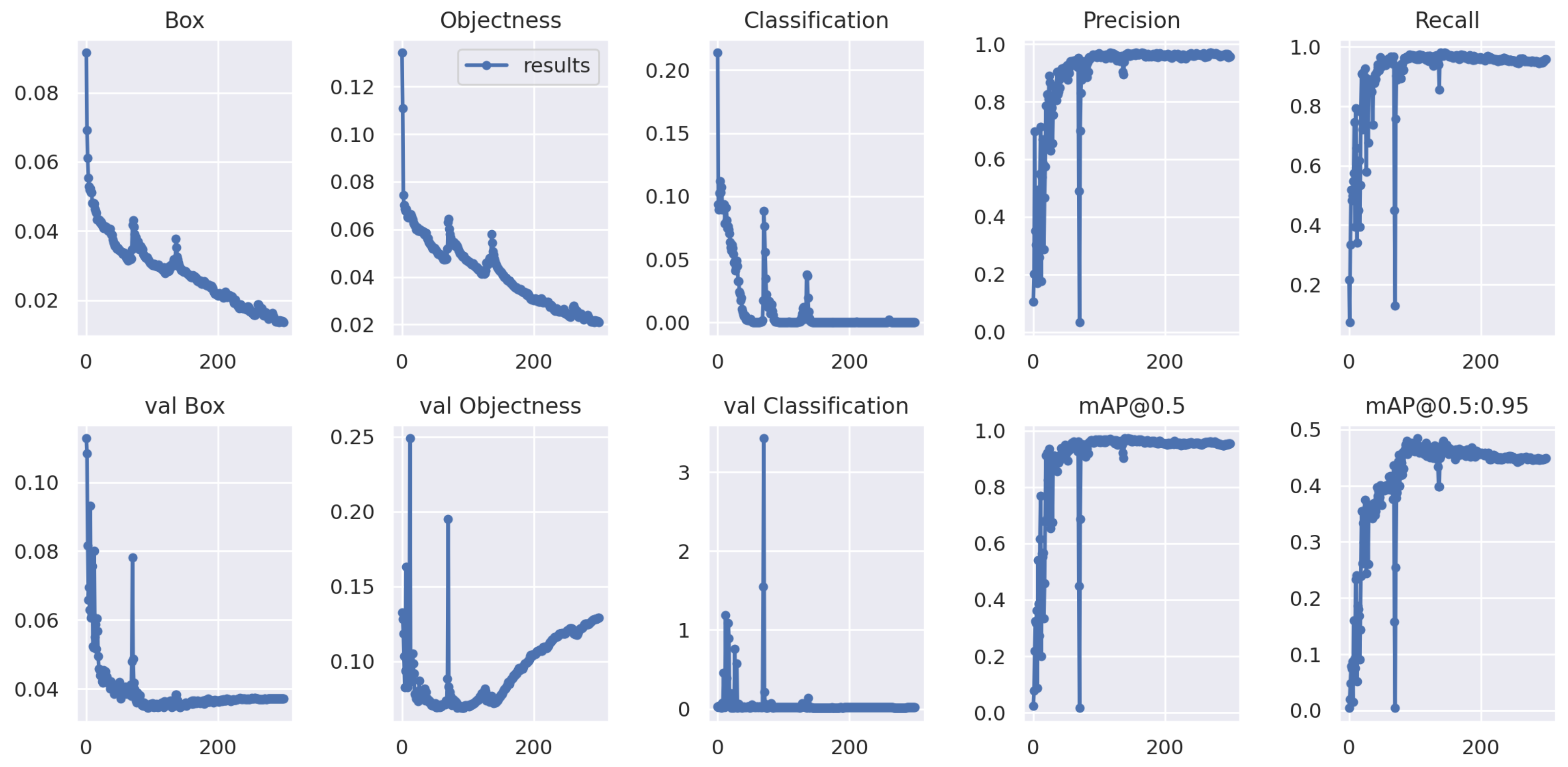

5.3. Training Result

5.4. Superiority Studies

5.4.1. Comparison with Deep Neural Network Models

5.4.2. Comparison with Recently Proposed Attention Mechanisms

5.5. Ablation Studies

- (1) Adding MHAU with the cross-stage connection mode improves DS-SSLD performance on DBS2022, as the comparison of Baseline with Baseline + MHAU (cross-stage) (the first and third rows) shows. This suggests that MHAU helps Multi-HAN focus on effective features. However, the improvement is modest, because an attention mechanism only increases the weights of certain effective features; if the network's ability to extract and enhance features is insufficient, the gain that attention can bring is limited.

- (2) Comparing Baseline + MHAU (downward) with Baseline + MHAU (cross-stage) (the second and third rows) shows that the cross-stage connection mode improves DS-SSLD performance more than the downward transmission mode. A likely explanation is as follows. When attention maps are extracted from shallow feature maps, the spatial size is large and the number of channels is small, so the extracted weights, especially the channel weights, are too generic to emphasize specific features, and the spatial attention is sensitive and hard to capture because few channels are available. Transmitting these weights downward directly alters the features that the backbone passes on, and can therefore impair rather than improve its feature extraction. The cross-stage MHAU instead fully aggregates shallow detailed features with deep semantic information without interfering with feature extraction: attention maps of matching sizes, captured from shallow detail, enhance and constrain the deep global information, which helps distinguish important features from redundant ones at the head stage.

- (3) Comparing Baseline with Baseline + Multi-CSPU (the first and fourth rows) shows that the MDconv [10]-based Multi-CSPU yields a larger improvement than MHAU alone. This gain comes from the strong ability of MDconv [10] to extract abundant multi-scale features, and it indicates that rich feature representation matters more for improving performance.

- (4) Baseline + MHAU (downward) (the second row) scores 0.1% lower than Baseline (the first row), whereas Baseline + MHAU (cross-stage) + Multi-CSPU (the fifth row) scores 1.2% higher than Baseline + Multi-CSPU (the fourth row). This further supports the view that MDconv [10] substantially strengthens effective feature extraction.

- (5) Combining all of the above components, the Multi-HAN model achieves the best detection results, showing that MHAU and Multi-CSPU complement each other. Moreover, once MDconv [10] is in place, adding MHAU improves performance substantially, indicating that strong feature extraction is a prerequisite for the attention mechanism to be effective.
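Items (3)–(5) attribute much of the gain to MDconv [10], the mixed depthwise convolution from MixConv, which splits the channels into groups and convolves each group depthwise with a different kernel size, so one layer covers several receptive fields. The sketch below illustrates that idea on nested Python lists. Fixed mean (box) kernels stand in for learned weights, the kernel sizes 3 and 5 are an arbitrary choice, and `csp_mixed_block` is a hypothetical, heavily simplified cross-stage partial wiring in the spirit of CSPNet [51]; none of this is the actual Multi-CSPU configuration.

```python
def box_depthwise_conv(channel, k):
    """Zero-padded 'same' convolution of one H x W channel with a k x k mean kernel.
    The mean kernel is a stand-in for the learned depthwise weights of MDconv."""
    h, w, r = len(channel), len(channel[0]), k // 2
    out = [[0.0] * w for _ in range(h)]
    for i in range(h):
        for j in range(w):
            acc = 0.0
            for y in range(i - r, i + r + 1):
                for x in range(j - r, j + r + 1):
                    if 0 <= y < h and 0 <= x < w:
                        acc += channel[y][x]
            out[i][j] = acc / (k * k)
    return out

def mixed_depthwise_conv(feature, kernel_sizes=(3, 5)):
    """MixConv-style mixing: split the channels into equal contiguous groups (the last
    group takes any remainder), convolve each group with its own kernel size, concatenate."""
    g = len(kernel_sizes)
    size = len(feature) // g
    out = []
    for idx, k in enumerate(kernel_sizes):
        start = idx * size
        end = len(feature) if idx == g - 1 else start + size
        out.extend(box_depthwise_conv(ch, k) for ch in feature[start:end])
    return out

def csp_mixed_block(feature, kernel_sizes=(3, 5)):
    """Hypothetical cross-stage partial wiring: only the first half of the channels goes
    through the mixed convolution; the rest bypass it and are concatenated afterwards."""
    half = len(feature) // 2
    return mixed_depthwise_conv(feature[:half], kernel_sizes) + feature[half:]

# Usage: a unit impulse reveals the two receptive fields (3 x 3 and 5 x 5).
delta = [[1.0 if (i, j) == (2, 2) else 0.0 for j in range(5)] for i in range(5)]
mixed = mixed_depthwise_conv([delta, delta])
print(mixed[0][0][0], mixed[1][0][0])  # 0.0 0.04
```

The corner value is zero for the 3 x 3 group but not for the 5 x 5 group, which is the multi-scale behavior the ablation credits; a real implementation would use learned depthwise kernels (for example, a grouped convolution per kernel size).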

5.6. Cross Validation

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

1. Zheng, F. Analysis and Segmentation Algorithm of Dongba Pictograph Document; Nationalities Publishing House: Beijing, China, 2005; pp. 1–230.
2. Dongba Culture Research Institute. An Annotated Collection of Naxi Dongba Manuscripts; Yunnan People’s Publishing House: Kunming, China, 1999.
3. Yang, Y.T.; Kang, H.L. Analysis and Segmentation Algorithm of Dongba Pictograph Document. In Proceedings of the 2020 4th Annual International Conference on Data Science and Business Analytics (ICDSBA), Changsha, China, 5–6 September 2020; pp. 91–93.
4. Yang, Y.; Kang, H. Dongba Scripture Segmentation Algorithm Based on Discrete Curve Evolution. In Proceedings of the 2021 14th International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 11–12 December 2021; pp. 416–419.
5. Yang, Y.; Kang, H. Text Line Segmentation Algorithm for Dongba Pictograph Document. In Proceedings of the 2021 14th International Symposium on Computational Intelligence and Design (ISCID), Hangzhou, China, 11–12 December 2021; pp. 412–415.
6. Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet classification with deep convolutional neural networks. In Proceedings of the Advances in Neural Information Processing Systems, Lake Tahoe, NV, USA, 3–6 December 2012; pp. 1097–1105.
7. Liu, S.; Huang, S.; Wang, S.; Muhammad, K.; Bellavista, P.; Del Ser, J. Visual tracking in complex scenes: A location fusion mechanism based on the combination of multiple visual cognition flows. Inf. Fusion 2023, 96, 281–296.
8. Liu, S.; Gao, P.; Li, Y.; Fu, W.; Ding, W. Multi-modal fusion network with complementarity and importance for emotion recognition. Inf. Sci. 2023, 619, 679–694.
9. Chen, Z.; Sun, Y.; Bi, X.; Yue, J. Lightweight image de-snowing: A better trade-off between network capacity and performance. Neural Netw. 2023, 165, 896–908.
10. Tan, M.; Le, Q.V. MixConv: Mixed depthwise convolutional kernels. arXiv 2019, arXiv:1907.09595.
11. Gong, D.; Sha, F.; Medioni, G. Locally linear denoising on image manifolds. In Proceedings of the Thirteenth International Conference on Artificial Intelligence and Statistics, JMLR Workshop and Conference Proceedings, Sardinia, Italy, 13–15 May 2010; pp. 265–272.
12. Chen, T.; Ma, K.K.; Chen, L.H. Tri-state median filter for image denoising. IEEE Trans. Image Process. 1999, 8, 1834–1838.
13. Zhang, X.P.; Desai, M.D. Adaptive denoising based on SURE risk. IEEE Signal Process. Lett. 1998, 5, 265–267.
14. Pan, Q.; Zhang, L.; Dai, G.; Zhang, H. Two denoising methods by wavelet transform. IEEE Trans. Signal Process. 1999, 47, 3401–3406.
15. Zhou, S.F.; Liu, C.P.; Liu, G.; Gong, S.R. Multi-step segmentation method based on minimum weight segmentation path for ancient handwritten Chinese character. J. Chin. Comput. Syst. 2012, 33, 614–620.
16. Likas, A.; Vlassis, N.; Verbeek, J.J. The global k-means clustering algorithm. Pattern Recognit. 2003, 36, 451–461.
17. Paliwal, S.S.; Vishwanath, D.; Rahul, R.; Sharma, M.; Vig, L. TableNet: Deep learning model for end-to-end table detection and tabular data extraction from scanned document images. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, NSW, Australia, 20–25 September 2019; pp. 128–133.
18. Siddiqui, S.A.; Fateh, I.A.; Rizvi, S.T.R.; Dengel, A.; Ahmed, S. DeepTabStR: Deep learning based table structure recognition. In Proceedings of the 2019 International Conference on Document Analysis and Recognition (ICDAR), Sydney, NSW, Australia, 20–25 September 2019; pp. 1403–1409.
19. Renton, G.; Soullard, Y.; Chatelain, C.; Adam, S.; Kermorvant, C.; Paquet, T. Fully convolutional network with dilated convolutions for handwritten text line segmentation. Int. J. Doc. Anal. Recognit. (IJDAR) 2018, 21, 177–186.
20. Ren, S.; He, K.; Girshick, R.; Sun, J. Faster R-CNN: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28, 1137–1149.
21. Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 2117–2125.
22. Cai, Z.; Vasconcelos, N. Cascade R-CNN: Delving into high quality object detection. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 6154–6162.
23. Zhu, Y.; Zhao, C.; Wang, J.; Zhao, X.; Wu, Y.; Lu, H. CoupleNet: Coupling global structure with local parts for object detection. In Proceedings of the IEEE International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 4126–4134.
24. Lee, H.; Eum, S.; Kwon, H. ME R-CNN: Multi-expert R-CNN for object detection. IEEE Trans. Image Process. 2019, 29, 1030–1044.
25. Li, Y.; Chen, Y.; Wang, N.; Zhang, Z. Scale-aware trident networks for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6054–6063.
26. Cai, Z.; Fan, Q.; Feris, R.S.; Vasconcelos, N. A unified multi-scale deep convolutional neural network for fast object detection. In Proceedings of the European Conference on Computer Vision, Amsterdam, The Netherlands, 11–14 October 2016; pp. 354–370.
27. Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. SSD: Single shot MultiBox detector. In Proceedings of the Computer Vision–ECCV 2016: 14th European Conference, Amsterdam, The Netherlands, 11–14 October 2016; Proceedings, Part I; Springer: Amsterdam, The Netherlands, 2016; pp. 21–37.
28. Redmon, J.; Farhadi, A. YOLOv3: An Incremental Improvement. arXiv 2018, arXiv:1804.02767.
29. Jocher, G.; Stoken, A.; Borovec, J.; Chaurasia, A.; Liu, C.; Laughing, A.; Hogan, A.; Hajek, J.; Diaconu, L.; Marc, Y.; et al. ultralytics/yolov5: v5.0 - YOLOv5-P6 1280 models, AWS, Supervise.ly and YouTube integrations. Zenodo 2021, 11.
30. Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.
31. Law, H.; Deng, J. CornerNet: Detecting Objects as Paired Keypoints. Int. J. Comput. Vis. 2020, 128, 642–656.
32. Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. CenterNet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6569–6578.
33. Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, L.; Polosukhin, I. Attention is all you need. arXiv 2017, arXiv:1706.03762.
34. Zhu, X.; Su, W.; Lu, L.; Li, B.; Wang, X.; Dai, J. Deformable DETR: Deformable transformers for end-to-end object detection. In Proceedings of the 9th International Conference on Learning Representations, Virtual Event, 3–7 May 2021; pp. 3–7.
35. Zheng, M.; Gao, P.; Zhang, R.; Li, K.; Wang, X.; Li, H.; Dong, H. End-to-end object detection with adaptive clustering transformer. arXiv 2020, arXiv:2011.09315.
36. Beal, J.; Kim, E.; Tzeng, E.; Park, D.H.; Zhai, A.; Kislyuk, D. Toward transformer-based object detection. arXiv 2020, arXiv:2012.09958.
37. Dosovitskiy, A.; Beyer, L.; Kolesnikov, A.; Weissenborn, D.; Zhai, X.; Unterthiner, T.; Dehghani, M.; Minderer, M.; Heigold, G.; Gelly, S.; et al. An image is worth 16x16 words: Transformers for image recognition at scale. arXiv 2020, arXiv:2010.11929.
38. Qin, Z.; Zhang, P.; Wu, F.; Li, X. FcaNet: Frequency channel attention networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Montreal, QC, Canada, 10–17 October 2021; pp. 783–792.
39. Sang, H.; Zhou, Q.; Zhao, Y. PCANet: Pyramid convolutional attention network for semantic segmentation. Image Vis. Comput. 2020, 103, 103997.
40. Chen, Y.; Kalantidis, Y.; Li, J.; Yan, S.; Feng, J. A2-Nets: Double attention networks. arXiv 2018, arXiv:1810.11579.
41. Zhang, H.; Wu, C.; Zhang, Z.; Zhu, Y.; Lin, H.; Zhang, Z.; Sun, Y.; He, T.; Mueller, J.; Manmatha, R.; et al. ResNeSt: Split-attention networks. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 2736–2746.
42. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.
43. Wang, Q.; Wu, B.; Zhu, P.; Li, P.; Zuo, W.; Hu, Q. ECA-Net: Efficient channel attention for deep convolutional neural networks. arXiv 2019, arXiv:1910.03151.
44. Zhang, H.; Zu, K.; Lu, J.; Zou, Y.; Meng, D. EPSANet: An efficient pyramid squeeze attention block on convolutional neural network. In Proceedings of the Asian Conference on Computer Vision, Macao, China, 4–8 December 2022; pp. 1161–1177.
45. Woo, S.; Park, J.; Lee, J.Y.; Kweon, I.S. CBAM: Convolutional block attention module. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 3–19.
46. Misra, D.; Nalamada, T.; Arasanipalai, A.U.; Hou, Q. Rotate to attend: Convolutional triplet attention module. In Proceedings of the IEEE/CVF Winter Conference on Applications of Computer Vision, Virtual Conference, 5–9 January 2021; pp. 3139–3148.
47. Lin, M.; Chen, Q.; Yan, S. Network in network. arXiv 2013, arXiv:1312.4400.
48. Nair, V.; Hinton, G.E. Rectified linear units improve restricted Boltzmann machines. In Proceedings of the 27th International Conference on Machine Learning (ICML-10), Haifa, Israel, 21–24 June 2010; pp. 807–814.
49. Nielsen, M.A. Neural Networks and Deep Learning; Determination Press: San Francisco, CA, USA, 2015; Volume 25.
50. Luce, R.D. Individual Choice Behavior: A Theoretical Analysis; Courier Corporation: North Chelmsford, MA, USA, 2012.
51. Wang, C.Y.; Liao, H.Y.M.; Wu, Y.H.; Chen, P.Y.; Hsieh, J.W.; Yeh, I.H. CSPNet: A new backbone that can enhance learning capability of CNN. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops, Seattle, WA, USA, 14–19 June 2020; pp. 390–391.
52. Yu, F.; Koltun, V. Multi-scale context aggregation by dilated convolutions. arXiv 2015, arXiv:1511.07122.
53. Chen, Z.; Bi, X.; Zhang, Y.; Yue, J.; Wang, H. LightweightDeRain: Learning a lightweight multi-scale high-order feedback network for single image de-raining. Neural Comput. Appl. 2022, 34, 5431–5448.
54. Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in PyTorch. In NIPS 2017 Workshop; NIPS: Long Beach, CA, USA, 2017.
55. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.
56. Newell, A.; Yang, K.; Deng, J. Stacked hourglass networks for human pose estimation. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 483–499.
57. Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft COCO: Common objects in context. In European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

| Name | DBS2022 |
|---|---|
| Object categories | 2 (horizontal, vertical) |
| Format | TXT |
| Images | 2504 |
| Horizontal bounding boxes | 5250 |
| Vertical bounding boxes | 15,776 |
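The table lists TXT as the annotation format. Assuming the common YOLO convention (one line per box: a class index followed by center x, center y, width, and height, all normalized to [0, 1]) and a hypothetical class mapping of 0 = horizontal and 1 = vertical, a label file can be parsed and converted to pixel corners as sketched below. The last lines recompute two figures implied by the table: about 8.4 boxes per image (21,026 / 2504) and roughly three vertical lines per horizontal one.

```python
from collections import Counter

# Hypothetical class-id mapping; the dataset's actual ids are not given in the text.
CLASS_NAMES = {0: "horizontal", 1: "vertical"}

def parse_yolo_line(line, img_w, img_h):
    """Convert one 'cls cx cy w h' line (coordinates normalized to [0, 1]) into
    (class_name, x1, y1, x2, y2) in pixels."""
    cls, cx, cy, w, h = line.split()
    cx, w = float(cx) * img_w, float(w) * img_w
    cy, h = float(cy) * img_h, float(h) * img_h
    return CLASS_NAMES[int(cls)], cx - w / 2, cy - h / 2, cx + w / 2, cy + h / 2

def count_boxes(label_text, img_w, img_h):
    """Count boxes per class in the text of one label file (blank lines are skipped)."""
    return Counter(
        parse_yolo_line(ln, img_w, img_h)[0] for ln in label_text.splitlines() if ln.strip()
    )

# Totals from the dataset table: boxes per image and vertical-to-horizontal ratio.
horizontal, vertical, images = 5250, 15776, 2504
print(round((horizontal + vertical) / images, 1), round(vertical / horizontal, 1))  # 8.4 3.0
```

The roughly 3:1 ratio between the two classes is worth keeping in mind when reading per-class results, since vertical lines dominate the training signal.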

| Models | Anchor-based/anchor-free | Backbone | mAP@.5 |
|---|---|---|---|
| Faster-RCNN [20] | Anchor-based | ResNet101 | 87.7 |
| Faster-RCNN [20] | Anchor-based | VGG16 | 82.4 |
| SSD [27] | Anchor-based | ResNet50 | 79.7 |
| YOLOv3 [28] | Anchor-based | DarkNet53 | 88.8 |
| YOLOv5s [29] | Anchor-based | Focus + CSPDarkNet + SPP | 91.5 |
| CornerNet [31] | Anchor-free | Hourglass-104 | 79.9 |
| CenterNet [32] | Anchor-free | ResNet50 | 89.2 |
| CenterNet [32] | Anchor-free | Hourglass-104 | 91.6 |
| Multi-HAN (ours) | Anchor-based | Focus + CSPDarkNet + SPP | |
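mAP@.5 counts a detection as correct when its intersection over union (IoU) with a not-yet-matched ground-truth box of the same class is at least 0.5, computes average precision (AP) per class from the score-ranked detections, and averages the APs over the classes (here, horizontal and vertical). The single-class sketch below uses greedy matching and all-point interpolation of the precision-recall curve, as in the later PASCAL VOC protocol; it is an illustration, and evaluation tools (including whatever produced the table) can differ in interpolation details. The function names are hypothetical.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0

def average_precision(detections, ground_truth, thr=0.5):
    """Single-class AP at IoU >= thr (thr > 0) with all-point interpolation.
    detections: list of (image_id, score, box); ground_truth: {image_id: [box, ...]}."""
    n_gt = sum(len(boxes) for boxes in ground_truth.values())
    used = {img: [False] * len(boxes) for img, boxes in ground_truth.items()}
    hits = []
    # Greedy matching in descending score order; each ground-truth box matches at most once.
    for img, _, box in sorted(detections, key=lambda d: d[1], reverse=True):
        best, best_j = 0.0, -1
        for j, gt in enumerate(ground_truth.get(img, [])):
            overlap = iou(box, gt)
            if overlap > best:
                best, best_j = overlap, j
        matched = best >= thr and not used[img][best_j]
        if matched:
            used[img][best_j] = True
        hits.append(matched)
    precisions, recalls, tp = [], [], 0
    for rank, hit in enumerate(hits, start=1):
        tp += hit
        precisions.append(tp / rank)
        recalls.append(tp / n_gt if n_gt else 0.0)
    # Make precision non-increasing in recall, then sum the area under the step curve.
    mrec = [0.0] + recalls + [1.0]
    mpre = [0.0] + precisions + [0.0]
    for i in range(len(mpre) - 2, -1, -1):
        mpre[i] = max(mpre[i], mpre[i + 1])
    return sum((mrec[i + 1] - mrec[i]) * mpre[i + 1] for i in range(len(mrec) - 1))

def mean_ap(per_class, thr=0.5):
    """mAP@thr: mean of per-class APs; per_class maps class -> (detections, ground_truth)."""
    aps = [average_precision(d, g, thr) for d, g in per_class.values()]
    return sum(aps) / len(aps)

# Usage: a false positive ranked first lowers AP from 1.0 to 2/3.
gt = {"img": [(0, 0, 10, 10), (20, 20, 30, 30)]}
perfect = [("img", 0.9, (0, 0, 10, 10)), ("img", 0.8, (20, 20, 30, 30))]
with_fp = [("img", 0.95, (50, 50, 60, 60))] + perfect
print(average_precision(perfect, gt), average_precision(with_fp, gt))  # 1.0 0.6666666666666666
```

mAP@.5:.95, used in the cross-validation results, averages `mean_ap` over the IoU thresholds 0.50, 0.55, ..., 0.95, which is why it is much lower than mAP@.5.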

| Models | Parameters | mAP@.5 |
|---|---|---|
| YOLOv5s + Multi-CSPU + Triplet [46] | 16.1 M | 90.5 |
| YOLOv5s + Multi-CSPU + CBAM [45] | 16.3 M | 92.8 |
| YOLOv5s + Multi-CSPU + SE [42] | 16.2 M | 93.2 |
| YOLOv5s + Multi-CSPU + EPSA [44] | 25.5 M | 93.8 |
| YOLOv5s + Multi-CSPU + ECA [43] | 16.2 M | 94.2 |
| YOLOv5s + Multi-CSPU + MHAU (ours) | 25.5 M | 94.7 |

| Baseline | MHAU (downward) | MHAU (cross-stage) | Multi-CSPU | mAP@.5 |
|---|---|---|---|---|
| YOLOv5s | | | | |
| YOLOv5s | √ | | | |
| YOLOv5s | | √ | | |
| YOLOv5s | | | √ | |
| YOLOv5s | | √ | √ | |

| Fold (k) | Test set mAP@.5 | Test set mAP@.5:.95 | Final evaluation mAP@.5 | Final evaluation mAP@.5:.95 |
|---|---|---|---|---|
| 1st | 92.4 | 46.5 | 94.5 | 46.5 |
| 2nd | 92.7 | 47.1 | 94.7 | 47.5 |
| 3rd | 94.2 | 47.3 | 95.0 | 46.8 |
| 4th | 94.0 | 48.8 | 95.1 | 49.9 |
| 5th | 91.1 | 47.1 | 94.4 | 47.0 |
| Average | 92.9 | 47.4 | 94.7 | 47.5 |
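As a quick consistency check, the mean of the five folds in each column can be recomputed from the per-fold values; it reproduces the final row of the cross-validation table to one decimal place. The dictionary keys are illustrative names, not identifiers from the paper.

```python
from statistics import mean

# Per-fold results copied from the cross-validation table (folds 1-5).
FOLDS = {
    "test_map50": [92.4, 92.7, 94.2, 94.0, 91.1],
    "test_map50_95": [46.5, 47.1, 47.3, 48.8, 47.1],
    "final_map50": [94.5, 94.7, 95.0, 95.1, 94.4],
    "final_map50_95": [46.5, 47.5, 46.8, 49.9, 47.0],
}

def summarize(folds):
    """Return the mean of each metric over the folds, rounded to one decimal."""
    return {name: round(mean(values), 1) for name, values in folds.items()}

print(summarize(FOLDS))
# {'test_map50': 92.9, 'test_map50_95': 47.4, 'final_map50': 94.7, 'final_map50_95': 47.5}
```

The per-fold test-set mAP@.5 spans 91.1 to 94.2, so the spread across folds is larger than several of the differences between attention modules in the comparison table.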

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Xing, J.; Bi, X.; Weng, Y. A Multi-Scale Hybrid Attention Network for Sentence Segmentation Line Detection in Dongba Scripture. Mathematics 2023, 11, 3392. https://doi.org/10.3390/math11153392