Abstract

This paper introduces an innovative extension of the DIRECT algorithm specifically designed to solve global optimization problems that involve Lipschitz continuous functions subject to linear constraints. Our approach builds upon recent advancements in DIRECT-type algorithms, incorporating novel techniques for partitioning and selecting potentially optimal hyper-rectangles. A key contribution lies in applying a new mapping technique to eliminate the infeasible region efficiently. This allows calculations to be performed only within the feasible region defined by linear constraints. We perform extensive tests using a diverse set of benchmark problems to evaluate the effectiveness and performance of the proposed algorithm compared to existing DIRECT solvers. Statistical analyses using Friedman and Wilcoxon tests demonstrate the superiority of the new algorithm in solving such problems.

Keywords:

global optimization; derivative-free optimization; partitioning; DIRECT-type algorithms; linear constraints; constraint handling techniques; benchmark problems

MSC:

90C56; 90C26; 65K05

1. Introduction

Global optimization is an active and important research area that focuses on finding the best global solution for an objective function within a given domain. To tackle this challenging problem, numerous solution techniques have been devised. Among these, line search methods [1], which iteratively search for the optimum along a predetermined search direction, are widely used. These methods have demonstrated their effectiveness in various problem domains [2,3]. Another category of techniques comprises meta-heuristic algorithms, which draw inspiration from natural phenomena and problem-solving heuristics. Examples include genetic algorithms [4], particle swarm optimization [5], and simulated annealing [6], all of which have been widely adopted. More efficient methods and extensions have since been developed [7,8,9]. Furthermore, advanced optimization methods such as Bayesian optimization [10] and radial basis function methods [11] employ surrogate models to approximate the objective function, enabling efficient guidance of the search process. These techniques play a vital role in addressing complex and computationally demanding optimization problems.

This paper focuses on a Lipschitz global optimization [12,13] problem of the following form:

where and . Here, D represents a full-dimensional convex polytope in which the minimization of the objective function takes place. The feasible region consists of points that satisfy all constraints and is denoted

For convergence, we assume that the objective function is Lipschitz continuous, at least in the vicinity of the globally optimal solution. However, the function can be non-linear, non-differentiable, non-convex, and multi-modal. Consequently, traditional optimization methods that rely on derivative information do not apply to such problems.

Global optimization of a non-linear objective function subject to linear constraints is a significant topic in mathematical programming, as it covers numerous real-world optimization problems. For example, in engineering design, the optimal design of structures, circuits, and manufacturing processes often involves linear constraints [14]. Similarly, portfolio optimization problems commonly involve linear constraints, such as budget restrictions or constraints on asset allocation percentages [15]. Furthermore, the optimal design and operation of chemical processes are subject to linear constraints arising from considerations such as material balances, equipment capacities, and safety constraints [16]. These examples highlight the diverse domains where linearly constrained non-convex global optimization problems arise.

The DIRECT algorithm [17] is widely recognized for its effectiveness in global optimization, particularly for box-constrained problems. It utilizes a deterministic sampling strategy that enables the discovery of global optima without derivative information. This makes it suitable for handling complex, multi-modal, and non-differentiable objective functions. Since the algorithm was first published, researchers have worked to improve its performance by introducing new and more efficient sequential and parallel variants [18,19,20,21,22,23,24,25,26,27,28]. Comprehensive numerical benchmark studies [29,30,31] demonstrated a very promising performance of DIRECT-type algorithms compared to other derivative-free global optimization methods.

DIRECT-type algorithms have also proven effective in solving a wide range of problems with different types of constraints, including those described in Equation (1). Several studies, such as [18,24,32,33,34,35,36], have proposed DIRECT-type algorithms to handle general constraints and “hidden” constraints [37,38,39]. Most of these methods are based on penalties and auxiliary functions, which penalize constraint violations so that solutions adhere to the constraints. However, one of the main challenges with penalty-based approaches is parameter tuning, as the performance of these methods is highly sensitive to the chosen parameters [24,40]. To address this issue, researchers have explored techniques such as automatic modification of the penalty setting during optimization [18,24,34,35,36]. This leads to more reliable results than methods with manually selected penalty parameters. However, these methods primarily deal with bound constraints and face challenges when encountering infeasible regions. This becomes particularly difficult for DIRECT-type algorithms, as they need to subdivide these regions to uncover feasible regions, resulting in a significant number of wasted function evaluations. To date, only two DIRECT-type algorithms have been specifically designed for problems with linear constraints: in [40], the authors proposed two simplicial partitioning approaches that use simplices to cover the feasible region. However, these simplicial partitioning methods are mainly limited to lower-dimensional problems.

This paper presents a new DIRECT-type algorithm designed specifically to address the global optimization problems stated in Equation (1). Building on recent advances in DIRECT-type algorithms, we have integrated novel techniques for partitioning and selecting potentially optimal hyper-rectangles. A notable innovation in our approach is the adoption of mapping techniques that efficiently eliminate the infeasible region, allowing computations exclusively within the region delimited by the linear constraints. Through extensive testing using the latest and substantially expanded version of the DIRECTGOLib v1.3 benchmark library [41], this study comprehensively investigates the performance and effectiveness of our proposed algorithm. Furthermore, to ensure the reliability and significance of our results, we employ statistical analyses, including the Friedman [42] and Wilcoxon [43] tests, to validate the superiority of our algorithm over existing DIRECT solvers for such problems.

Paper Contributions and Structure

The contributions of the paper can be summarized as follows:

- The review of techniques proposed to tackle linearly constrained problems within the framework of DIRECT-type algorithms.

- Introduction of a novel and distinctive DIRECT-type algorithm explicitly designed for non-convex problems involving linear constraints.

- The substantial enhancement of the DIRECTGOLib v1.3 benchmark library by incorporating 34 linearly constrained test problems.

- The provision of the novel algorithm developed as an open-source resource to ensure the full reproducibility and reusability of all results.

The remaining sections of this paper are structured as follows. In Section 2.1, we provide a review of the original DIRECT algorithm, while Section 2.2 covers its relevant modifications for problems with constraints. Section 3 introduces a novel algorithm based on the DIRECT approach. In Section 4, we provide and discuss the results of our numerical investigation using a set of 67 test problems from the DIRECTGOLib v1.3 library. Finally, in Section 5, we conclude the paper and outline potential directions for future research.

2. Materials and Methods

This section provides an overview of the classical DIRECT algorithm and its extensions to handle constraints.

2.1. The Original DIRECT Algorithm for Box-Constrained Global Optimization

We begin by briefly introducing the original DIRECT algorithm [17], which is a recognized approach for box-constrained global optimization. This algorithm effectively explores the search space by partitioning it into hyper-rectangles and iteratively refining the search through function evaluations. Notably, the DIRECT algorithm is specifically designed to handle box-constrained optimization problems of the form:

In the initial stages, the DIRECT algorithm normalizes the original domain into a unit hyper-rectangle $[0, 1]^n$. The algorithm refers to the original space only when evaluating the objective function.
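The normalization step can be illustrated with a minimal sketch. The function names `to_original_space` and `evaluate_normalized` are hypothetical and not taken from the paper or from any DIRECT implementation; the sketch only assumes a box domain given by lower and upper bound vectors.

```python
import numpy as np

def to_original_space(c, lower, upper):
    """Map a point c of the unit hyper-rectangle [0, 1]^n back to [lower, upper]."""
    c = np.asarray(c, dtype=float)
    lower = np.asarray(lower, dtype=float)
    upper = np.asarray(upper, dtype=float)
    return lower + c * (upper - lower)

def evaluate_normalized(f, c, lower, upper):
    """Evaluate f at the original-space image of the normalized point c."""
    return f(to_original_space(c, lower, upper))

# The midpoint of the unit square corresponds to the midpoint of the box.
x_mid = to_original_space([0.5, 0.5], [-5.0, 0.0], [5.0, 10.0])
```

All partitioning bookkeeping can then stay in normalized coordinates, and only the objective evaluation touches the original domain.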

The search process in the DIRECT algorithm begins with an initial evaluation of the objective function at the midpoint of the first unit hyper-rectangle . This evaluation serves as the starting point for the algorithm’s search space exploration. The goal is to identify and select hyper-rectangles that are most promising.

Initially, there is only one hyper-rectangle available. Therefore, the selection process is straightforward. The DIRECT algorithm employs an n-dimensional trisection approach, dividing each selected hyper-rectangle into three equal sub-rectangles along each dimension. The objective function is evaluated only once for each newly created hyper-rectangle.

The selection process plays a crucial role in each subsequent iteration of the algorithm. The objective is to identify the most promising candidates for further investigation, allowing the DIRECT algorithm to effectively navigate the search space and prioritize the exploration of regions with the greatest promise of finding global optima. The formal requirement of potentially optimal hyper-rectangles (POH) in future iterations is stated in Definition 1.

Definition 1.

Let $c_i$ denote the sampling point and $\delta_i$ the measure of the hyper-rectangle $D_i$. Let $\varepsilon > 0$ be a positive constant, and let $f_{\min}$ be the best value currently found for the objective function. A hyper-rectangle $D_h$, where $h \in I_k$ (the index set identifying the current partition), is considered potentially optimal if there exists a positive constant $\tilde{L}$ (also known as the rate-of-change or Lipschitz constant) such that

$$f(c_h) - \tilde{L}\,\delta_h \le f(c_i) - \tilde{L}\,\delta_i, \quad \forall i \in I_k, \tag{4}$$

$$f(c_h) - \tilde{L}\,\delta_h \le f_{\min} - \varepsilon\,|f_{\min}|, \tag{5}$$

where the measure of the hyper-rectangle $D_i = [a_i, b_i]$ is

$$\delta_i = \tfrac{1}{2}\,\| b_i - a_i \|. \tag{6}$$

In Equation (6), the norm $\|\cdot\|$ on the right-hand side represents the standard Euclidean 2-norm. It is worth mentioning that certain studies [44,45] have examined alternative non-Euclidean norms. A hyper-rectangle is considered potentially optimal if it meets two requirements. First, the lower Lipschitz bound for the objective function, calculated using the left-hand side of (4), should be the smallest among all hyper-rectangles in the current partition, with some positive constant $\tilde{L}$. The second requirement is that the lower bound of the hyper-rectangle must be better than the current best solution $f_{\min}$. Specifically, it should be less than or equal to $f_{\min} - \varepsilon\,|f_{\min}|$. This condition serves as a threshold to prevent the DIRECT algorithm from wasting function evaluations on extremely small hyper-rectangles that are unlikely to lead to significant improvements. The value of $\varepsilon$ used in practice can vary, and in work [17], good results were achieved with values of $\varepsilon$ ranging from $10^{-3}$ to $10^{-7}$. Once all the selected potentially optimal hyper-rectangles have been sampled and subdivided, the iterative process continues until some stopping criterion is met. Common stopping conditions employed in the DIRECT algorithm include reaching the maximum number of objective function evaluations or iterations, exceeding the execution time limit, or attaining a specific target value for the objective function.
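The two requirements of Definition 1 can be checked directly, as in the following sketch. It assumes the standard form of the conditions from [17]: a hyper-rectangle with measure `d_i` and center value `f_i` is potentially optimal if some constant L > 0 gives it the smallest lower bound `f_i - L * d_i` in the partition and that bound does not exceed `f_min - eps * |f_min|`. The function name `potentially_optimal` and the quadratic-time pairwise check are illustrative choices, not the selection routine used in the paper (practical implementations typically use a convex hull scan).

```python
import math

def potentially_optimal(sizes, values, eps=1e-4):
    """Indices i for which some L > 0 satisfies both conditions of Definition 1."""
    f_min = min(values)
    target = f_min - eps * abs(f_min)
    selected = []
    for i, (d_i, f_i) in enumerate(zip(sizes, values)):
        # Second condition: f_i - L * d_i <= target  =>  L >= (f_i - target) / d_i
        lower = (f_i - target) / d_i
        upper = math.inf
        ok = True
        for j, (d_j, f_j) in enumerate(zip(sizes, values)):
            if j == i:
                continue
            if d_j < d_i:      # smaller rectangles impose a lower limit on L
                lower = max(lower, (f_i - f_j) / (d_i - d_j))
            elif d_j > d_i:    # larger rectangles impose an upper limit on L
                upper = min(upper, (f_j - f_i) / (d_j - d_i))
            elif f_j < f_i:    # same size: only the best center value can win
                ok = False
                break
        if ok and upper > 0 and lower <= upper:
            selected.append(i)
    return selected

sizes = [1.0, 0.5, 0.5, 0.1]      # hypothetical measures
values = [5.0, 1.0, 3.0, 0.9]     # hypothetical center values
poh = potentially_optimal(sizes, values)
poh_large_eps = potentially_optimal(sizes, values, eps=0.1)
```

In this toy partition the smallest rectangle is selected for a small `eps` but rejected for `eps=0.1`, which is the thresholding effect described above.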

2.2. Extensions of the DIRECT Algorithm for Problems with Constraints

Although the classical DIRECT algorithm is effective for box-constrained optimization problems, it requires modifications to handle optimization problems with constraints. We discuss the existing approaches and adaptations that have been proposed to extend the capabilities of the DIRECT algorithm for constrained optimization problems.

2.2.1. Approaches Based on Simplicial Partitioning

In our previous work [40], we extended the original DISIMPL algorithm, which is based on simplicial partitioning, to handle problems with linear constraints [46,47]. Simplicial partitioning is particularly suitable for such problems because simplices can effectively cover the search space defined by the constraints. This allows the simplicial partitioning algorithms (Lc-DISIMPLc and Lc-DISIMPLv) [40] to perform the search exclusively within the feasible region, distinguishing them from other DIRECT-type approaches. However, calculating the feasible region requires solving n-dimensional linear systems, as demonstrated in [40]. This operation has exponential complexity, which limits the effectiveness of these algorithms to problems with relatively small values of n and m.

2.2.2. Penalty and Auxiliary Function Approaches

The first approach to handling constrained problems was introduced in [18] and implemented as glcSolve in TOMLAB software [21]. This approach employs an auxiliary function that penalizes any deviation of the function value from the global minimum value . The penalty function does not impose any penalty on function values below ; it only applies when violating the constraints. Moreover, a weighted sum of constraint violations is assigned to each value of the function. The penalty function achieves its minimum value of zero solely at the global minimum, while at any other point, it assumes positive values indicating sub-optimality or infeasibility. Additionally, the glcSolve algorithm removes hyper-rectangles where it can be demonstrated that the linear constraints cannot be satisfied.

Several years later, an alternative DIRECT-based approach [33] was introduced that uses an exact L1 penalty. Experiments showed promising results. However, a major drawback is that the user must manually set the penalty parameter for each constraint function. In practice, the selection of penalty parameters is a crucial task that can significantly affect the algorithm’s performance [24,34,40,47,48].
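To make the sensitivity to penalty parameters concrete, the following sketch builds a generic exact L1 penalty function of the form P(x) = f(x) + Σ_j μ_j max(0, g_j(x)) for constraints g_j(x) ≤ 0. This is the textbook form and is only assumed to resemble the function used in [33]; the helper name `l1_penalty` and the toy problem are hypothetical.

```python
def l1_penalty(f, constraints, mu):
    """Return P(x) = f(x) + sum_j mu_j * max(0, g_j(x)) for constraints g_j(x) <= 0."""
    def penalized(x):
        violation = sum(m * max(0.0, g(x)) for g, m in zip(constraints, mu))
        return f(x) + violation
    return penalized

# Toy problem: minimize (x - 2)^2 subject to x - 1 <= 0 (constrained optimum at x = 1).
f = lambda x: (x[0] - 2.0) ** 2
g = lambda x: x[0] - 1.0

strong = l1_penalty(f, [g], mu=[10.0])   # penalty large enough
weak = l1_penalty(f, [g], mu=[0.5])      # penalty too small

strong_feasible, strong_infeasible = strong([1.0]), strong([2.0])
weak_feasible, weak_infeasible = weak([1.0]), weak([2.0])
```

With the weak parameter, the infeasible point x = 2 has a lower penalized value than the constrained optimum x = 1, so a solver minimizing the penalized function would be drawn to an infeasible solution.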

Two other approaches based on penalty functions were introduced in [35,36]. These algorithms feature an automatic update rule for the penalty parameter, and under certain weak assumptions, the penalty parameters are updated a finite number of times.

In [24], a novel extension of the DIRECT algorithm called DIRECT-GLce was introduced. This algorithm employs an auxiliary function approach that combines objective and constraint functions. The DIRECT-GLce algorithm operates in two distinct phases: one focuses on locating feasible points, while the second aims to improve the current feasible solution. During the initial phase, DIRECT-GLce samples the search space and minimizes the sum of constraint violations. Once feasible points are identified, the algorithm improves these feasible solutions. The proposed algorithm operates without penalty parameters and ensures convergence to a feasible solution.

2.2.3. Filtering Approach

Another recent DIRECT-type approach [32] also aims to simultaneously minimize constraint violations and objective function values. The suggested algorithm employs filter methodology [49] and divides the main set into three subsets. The filtering strategy prioritizes selecting potentially optimal candidates as follows: first, hyper-rectangles with feasible center points are chosen, followed by those with infeasible but non-dominated center points, and finally, those with infeasible and dominated center points.

2.2.4. Alternative Approaches without Utilizing Constraint Information

The first and most straightforward extension of the DIRECT algorithm to handle the constrained optimization problem is based on the barrier approach, as described in [37]. In this approach, infeasible points are assigned a very high value, ensuring that no infeasible hyper-rectangles are subdivided as long as there are feasible hyper-rectangles of the same size with a feasible midpoint. The strategy was extended in [38], where the authors suggested incorporating a subdividing step. After subdividing all traditional POHs, a new subdividing step is initiated, where all hyper-rectangles with infeasible midpoints are also subdivided. The authors demonstrated that this extra step effectively decomposes the boundaries of the hidden constraints and efficiently exposes the edges of the feasible region.

Another extension of the DIRECT algorithm is based on the neighborhood assignment strategy and was also proposed in [37]. In this approach, the value assigned to an infeasible point is based on the objective function values found in neighboring feasible points.

In a more recent proposal [39], the authors suggested a different approach to handling constraints. The proposed method assigns a value to an infeasible hyper-rectangle, depending on the distance of its center to the current best minimum point. This way, infeasible hyper-rectangles close to the current minimum point are not penalized with large values. This approach allows for a faster and more comprehensive examination near the feasible region boundary.

3. Description of the Proposed mBIRECTv-GL Algorithm

This section introduces our novel algorithm based on the DIRECT-type framework and incorporates mapping methods to handle linear constraints efficiently.

3.1. Efficient Bijective Mapping: Construction and Methodological Details

We describe our technique to map a hyper-rectangle onto a linearly constrained polytope. By employing this transformation (mapping), we aim to leverage the benefits of the DIRECT algorithm to address linearly constrained global optimization problems. The mapping allows us to work within a hyper-rectangular domain D, facilitating the application of the DIRECT algorithm’s efficient search strategies.

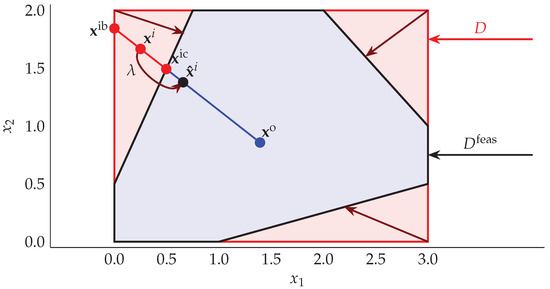

The linear constraints imposed in the problem formulation define a region in the original coordinate system that might not align with the DIRECT algorithm, which operates on hyper-rectangles. To bridge this gap, we introduce a transformation that maps the hyper-rectangle onto a linearly constrained region, as illustrated in Figure 1.

Figure 1.

Illustration of the transformation.

Using this approach, we avoid directly solving linearly constrained problems and instead utilize one-to-one mapping. This mapping transforms the points from the hyper-rectangular domain, D, to the feasible space, . Therefore, the solution to the problem:

yields the solution to the original problem. Here, represents the interior reference point, which coincides in both sets and plays an important role in the mapping. It is essential for the point to be strictly feasible:

Various methods can be used to find as described in [24,34,39,50]. We employ an adapted variation of the technique introduced in [51] that builds on the approach described in [50]. This method finds a set of vertices defined by linear constraints and uses them to calculate the midpoint of .

Let us formalize the mapping process using the Horst1 test problem as an example (see Figure 2). The mapping transforms any point to :

Figure 2.

Mapping the set D to : An illustrative example using Horst1 test problem (see Appendix A, Table A1 for a reference and description of the problem).

The parameter is calculated as:

where represents the intersection point of the closest linear constraint (to ) and the line passing through points and . Similarly, is the intersection point of the closest boundary constraint and the same line. The ratio is a relative multiplier used to move points in the direction of a vector . This ensures that the transformed point is adjusted in a controlled manner toward the center while maintaining its proximity to the original point. As shown in Lemma 1, the mapping defined by Equation (9) only maps points where there is infeasibility along the direction of a vector .

Lemma 1.

If , then also .

Proof.

Since and coincide, from Equation (10), it follows that . Substituting value in Equation (9), we obtain:

□
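The mapping and the statement of Lemma 1 can be illustrated numerically. The sketch below assumes the mapping has the radial form T(x) = x_c + τ (x − x_c), where τ is the ratio of the distance from the interior point x_c to the nearest linear constraint along the ray through x and the distance from x_c to the box boundary along the same ray. The function names, the tolerance `1e-12`, and the toy constraint x1 + x2 ≤ 1 on the unit square are assumptions made for illustration; this is not the Horst1 problem or the authors' implementation.

```python
import numpy as np

def ray_limit(origin, direction, A, b):
    """Largest step t >= 0 keeping A @ (origin + t * direction) <= b."""
    slope = A @ direction
    slack = b - A @ origin            # positive when origin is strictly interior
    hits = slope > 1e-12              # only constraints the ray moves toward
    return float(np.min(slack[hits] / slope[hits])) if np.any(hits) else np.inf

def map_to_feasible(x, x_c, lower, upper, A, b):
    """Shift x toward x_c so that the box [lower, upper] is mapped into {A x <= b}."""
    x, x_c = np.asarray(x, float), np.asarray(x_c, float)
    lower, upper = np.asarray(lower, float), np.asarray(upper, float)
    A, b = np.asarray(A, float), np.asarray(b, float)
    d = x - x_c
    if not np.any(d):
        return x_c.copy()             # the reference point maps to itself
    n = x.size
    box_A = np.vstack([np.eye(n), -np.eye(n)])   # x <= upper and -x <= -lower
    box_b = np.concatenate([upper, -lower])
    t_box = ray_limit(x_c, d, box_A, box_b)      # distance to the box boundary
    t_lin = ray_limit(x_c, d, A, b)              # distance to the linear constraints
    tau = min(t_lin, t_box) / t_box              # equals 1 when no constraint cuts the ray
    return x_c + tau * d

lower, upper = [0.0, 0.0], [1.0, 1.0]
A, b = [[1.0, 1.0]], [1.0]
x_c = [0.25, 0.25]
corner = map_to_feasible([1.0, 1.0], x_c, lower, upper, A, b)     # infeasible corner
origin = map_to_feasible([0.0, 0.0], x_c, lower, upper, A, b)     # ray never hits the constraint
inner = map_to_feasible([0.5, 0.5], x_c, lower, upper, A, b)      # feasible inner point
```

In this toy setting the infeasible corner lands on the constraint boundary, the point whose ray meets no linear constraint is left unchanged (the situation of Lemma 1), and a feasible inner point on a constrained ray is also pulled toward the reference point.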

Next, we also demonstrate that the transformation is a bijective mapping. From the perspective of optimization, it is crucial to avoid conducting potentially expensive evaluations of the objective function at the same points.

Lemma 2.

Let and D be two convex and compact sets, where , and such that Equation (8) holds. Then, the mapping as defined in Equation (9) is a bijection.

Proof.

We demonstrate this by proving the injectivity and surjectivity of T.

Injectivity: We need to show that

Assume that equality holds for any , such that . Then, from Equation (10), it follows

Since the points are mapped toward the same center in the direction of vectors and , equality is possible only when the direction of these vectors is the same. Therefore, from Equation (10), it follows that

From Equations (12) and (13), it follows

Simplifying it, we obtain the following:

Equation (14) is equal to zero when at least one of the multiplicands is zero. As , therefore . However, for to hold, it would require (see Equation (10)), but this contradicts Equation (8). Therefore, it follows that

Thus, the map T is injective.

Surjectivity: We need to show that

For any from the equality and Equation (9), we obtain

Mapping the point (from Equation (16)), we obtain

We showed that for any , we found (Equation (16)), such that , i.e., the mapping T is surjective. Since it is also injective, it is a bijection that guarantees a one-to-one correspondence between points in these two sets. □

3.2. Integrating Mapping Techniques in DIRECT-Based Framework

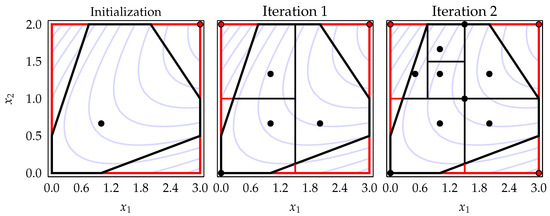

The original DIRECT algorithm cannot directly sample points at the boundary of the feasible region, limiting its convergence in such cases. Recent studies [23,52,53] have highlighted the impact of this limitation, demonstrating that it can lead to slow convergence when the optimal solution lies at the boundary of the feasible region. It is especially common when dealing with constrained problems [24]. The studies conducted in [23] have shown that employing strategies that sample points at the hyper-rectangle vertices offers significant advantages in converging to solutions located at the boundary. Based on these findings, we have incorporated one of the most recent versions of DIRECT-type algorithms (so-called BIRECTv) [54], which samples one point at the vertex and one point along the main diagonal of the hyper-rectangle. This modification allows for more effective exploration of the boundary regions, improving the algorithm’s convergence performance in such cases. Figure 3 depicts the process, illustrating the initialization and the first two iterations of the extended version (BIRECTv [54]) of the BIRECT algorithm [55] applied to the two-dimensional Horst1 test problem.

Figure 3.

Two-dimensional illustration on Horst1 test problem of sampling and bisection techniques employed in the extended version (BIRECTv [54]) of the original BIRECT algorithm [55]. The region enclosed by the black boundary represents , whereas the red contours depict .

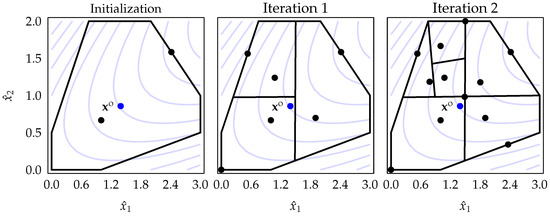

On the other hand, Figure 4 demonstrates the corresponding version of the algorithm when the introduced mapping technique is applied. Without the mapping, the algorithm cannot be used directly to solve problem (1), as it may converge to a point in the infeasible region (). With the proposed mapping technique, however, all points sampled by the algorithm outside the feasible region (), as well as some of the inner points (), are shifted toward the center point along the direction of (see Figure 4).

Figure 4.

Application of the mapping technique embedded in the BIRECTv algorithm on Horst1 test problem. The region enclosed by the black boundary represents .

Finally, Figure 5 illustrates both algorithms: BIRECTv vs. BIRECTv with mapping techniques applied to the same Horst1 test problem. The mapping technique guarantees convergence to a feasible point and significantly improves the algorithm’s performance.

Figure 5.

An illustrative example of two algorithms: BIRECTv vs. BIRECTv with mapping techniques applied to the Horst1 test problem.

Selection of the Most Promising Regions Using a Two-Step-Based Approach

We employ a two-step approach [56] (Global and Local, GL) to identify the extended set of POHs to select the most promising regions. This approach is formally defined in Definition 2.

Definition 2.

The objective is to find all Pareto optimal hyper-rectangles that are non-dominated in terms of size (higher is better) and center point function value (lower is better) as well as those that are non-dominated in terms of size and distance from the current minimum point (closer is better). The unique union of these two identified sets of candidates is then considered.

Using Definition 2 instead of the original selection (Definition 1), we are able to expand the set of POHs by including more medium-sized hyper-rectangles and hyper-rectangles that are closer to the best solution. A recent study [23] on various partitioning and POH selection strategies has demonstrated the superior performance of the two-step Pareto selection technique.
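The two-step selection of Definition 2 can be sketched as two Pareto filters followed by a union. The helper names `pareto_front` and `two_step_selection`, the quadratic-time dominance check, and the toy data are illustrative assumptions rather than the implementation from [56].

```python
def pareto_front(sizes, scores):
    """Indices not dominated when larger size and smaller score are both preferred."""
    front = []
    for i in range(len(sizes)):
        dominated = any(
            sizes[j] >= sizes[i] and scores[j] <= scores[i]
            and (sizes[j] > sizes[i] or scores[j] < scores[i])
            for j in range(len(sizes))
        )
        if not dominated:
            front.append(i)
    return front

def two_step_selection(sizes, values, distances):
    """Union of the global (size vs. value) and local (size vs. distance) fronts."""
    global_set = pareto_front(sizes, values)
    local_set = pareto_front(sizes, distances)
    return sorted(set(global_set) | set(local_set))

sizes = [1.0, 0.5, 0.5, 0.1]        # hypothetical hyper-rectangle measures
values = [5.0, 1.0, 3.0, 0.9]       # hypothetical center values
distances = [2.0, 1.5, 0.3, 0.0]    # hypothetical distances to the current best point
global_only = pareto_front(sizes, values)
selected = two_step_selection(sizes, values, distances)
```

Here the medium-sized rectangle with index 2 is dominated in function value but lies close to the current best point, so only the local step adds it to the selected set.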

3.3. Description of a Novel Algorithm (mBIRECTv-GL)

The step-by-step procedure for the new algorithm mBIRECTv-GL is presented in Algorithm 1. The algorithm takes inputs such as objective, constraint functions, and stopping conditions, including tolerance (), the maximum number of function evaluations (), and the maximum number of iterations (). Upon termination, mBIRECTv-GL provides the best value of the objective function found (), solution point (), and performance metrics, including percent error (), total number of function evaluations (m), and total number of iterations (k).

Algorithm 1. The mBIRECTv-GL algorithm

In the initialization step (lines 1 to 6), the algorithm begins by normalizing the hyper-rectangle D and determining the interior point . Next, the algorithm samples two points for each hyper-rectangle, converts them back to the original space D and maps them to . These points are then evaluated, and the performance metrics are initialized. The algorithm proceeds to iterate from lines 7 to 15. When selecting POHs, mBIRECTv-GL uses a two-step strategy. Newly sampled points for each POH are evaluated at their mapped locations. These steps are repeated until the specified stopping condition is met, ensuring the algorithm’s convergence.

3.4. Convergence Properties of the mBIRECTv-GL Algorithm

The convergence properties of DIRECT-type algorithms have been investigated extensively in the literature (e.g., [17,20,26,39,55,57,58]). Typically, these algorithms belong to the class of “divide the best” methods and exhibit “everywhere dense” convergence: the set of sampled points becomes dense in the feasible region, so every point of the region is an accumulation point of the sampling sequence. Consequently, points arbitrarily close to the global minima are eventually sampled.

The convergence of mBIRECTv-GL follows a similar framework. In each iteration, the algorithm chooses the hyper-rectangle with the largest measure and guarantees that subdivision occurs across all dimensions of its longest side. The algorithm mBIRECTv-GL guarantees convergence to the global minimum based on two conditions: (1) the presence of a feasible non-empty region indicated by , and (2) the objective function exhibiting at least local continuity in the vicinity of . As the number of trial points generated approaches infinity , the convergence of mBIRECTv-GL is ensured.

Proposition 1.

For any global minimum point and any , there exist an iteration number and a point , such that .

Proof.

From Equation (2), it follows that .

First, assume . In this case, the behavior of mBIRECTv-GL is in line with that of a standard DIRECT-type algorithm for box-constrained optimization. Consequently, the convergence of mBIRECTv-GL follows from the convergence properties observed in other DIRECT-type algorithms.

Next, consider the case where . In each iteration k, mBIRECTv-GL always selects at least one hyper-rectangle from the group of hyper-rectangles with the largest measure :

The partitioning strategy implemented in mBIRECTv-GL effectively reduces the dimensions of along its longest sides, simultaneously decreasing the measure () of the corresponding hyper-rectangle . As the number of hyper-rectangles with the maximum diameter is finite, all hyper-rectangles with the current maximal diameter will eventually undergo partitioning after a sufficiently large number of iterations.

This iterative process continues with a fresh set of hyper-rectangles having the largest diameters until the largest hyper-rectangle within the original domain D reaches a diameter smaller than . Consequently, there exists a sampling point such that .

The transformation defined in Equation (9) is a linear map. Taking into account , therefore, in the current partition, there exists a sampling point such that . □

4. Results and Discussions

4.1. Foundation of Solver Comparisons and Design of Experimental Setup

In this section, we evaluate the performance of six DIRECT-type algorithms capable of solving global optimization problems with linear constraints, using test problems taken from the DIRECTGOLib v1.3 [41] library. DIRECTGOLib v1.3 is a comprehensive collection of benchmark problems for global optimization. It encompasses both test and practical engineering problems with box and general constraints, which serve as benchmarks for various DIRECT-type algorithms.

The latest version of the DIRECTGOLib v1.3 library includes an additional 34 linearly constrained problems. This update expands on the previous version, DIRECTGOLib v1.2, which contained only 33 linearly constrained test problems. For a comprehensive overview of all linearly constrained optimization problems in DIRECTGOLib v1.3 and their respective properties, refer to Appendix A, specifically Table A1. The table presents essential details such as the ID of the problem (#), name (Name), original reference (Ref.), dimension (n), number of constraints (Con.), number of active constraints (AC), search domain (D), and known solution value ().

To evaluate our newly developed mBIRECTv-GL algorithm, we selected five existing DIRECT-type competitors. Four of these algorithms (DIRECT-GLc, DIRECT-GLce, Lc-DISIMPLc, and Lc-DISIMPLv) are accessible through the recently introduced toolbox DIRECTGO v1.2 [59], while glcSolve is a solver included in the commercial TOMLAB toolbox [21].

Among the selected algorithms, three are auxiliary function-based approaches that have demonstrated high efficiency for global optimization problems with general constraints [24,59]. They are directly applicable to problems with linear constraints. The other two algorithms are simplicial partitioning-based DIRECT-type methods specifically designed to handle linearly-constrained optimization problems as stated in Equation (1). All computations were performed on a computer with an 8th Generation Intel Core i7-8750H processor (6 cores), 16 GB of RAM, and MATLAB R2023a.

To determine the stopping condition, we used established criteria commonly employed in evaluating the performance of different DIRECT-type algorithms, as discussed in previous works [17,34,59,60]. Since the global minima $f^*$ are known for all test problems, an algorithm was stopped once it found a point $\mathbf{x}$ with a sufficiently small percent error ($pe$). The percent error was calculated as follows:

$$pe = 100\% \times \begin{cases} \dfrac{f(\mathbf{x}) - f^*}{|f^*|}, & f^* \neq 0, \\ f(\mathbf{x}), & f^* = 0, \end{cases} \tag{19}$$

and the run was considered successful when $pe$ was smaller than the tolerance value $\varepsilon_{pe}$, i.e., $pe \leq \varepsilon_{pe}$. Furthermore, the tested algorithms were terminated if the number of function evaluations exceeded the preset maximum limit or the computation time surpassed one hour. In such cases, the final result was set to a fixed value for further processing of the results. The tolerance $\varepsilon_{pe}$ was kept at its default value. In cases where the optimal value of the objective function is large, the algorithm may terminate even when the distance to the optimum is relatively large. On the other hand, when the optimal value is very close to zero (but $f^* \neq 0$), Equation (19) can require an extremely precise solution. However, there were only a few problems in the test set where these effects may have had an impact.
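The stopping rule can be illustrated with a minimal Python sketch. It assumes the percent error convention commonly used in the DIRECT literature (relative gap when the known minimum is nonzero, plain function value otherwise, both scaled by 100). The function names, the tolerance, and the limits are hypothetical placeholders, not values taken from the experiments.

```python
from typing import Optional


def percent_error(f_x: float, f_star: float) -> float:
    """Percent error of a found value f_x relative to the known minimum f_star.

    Assumed convention: relative gap when f_star != 0, plain value otherwise,
    both scaled by 100.
    """
    if f_star != 0.0:
        return 100.0 * (f_x - f_star) / abs(f_star)
    return 100.0 * f_x


def stop_reason(f_best: float, f_star: float, n_evals: int, elapsed_s: float,
                tol: float, max_evals: int,
                max_seconds: float = 3600.0) -> Optional[str]:
    """Return why a run should stop, or None if it should continue."""
    if percent_error(f_best, f_star) <= tol:
        return "solved"
    if n_evals > max_evals:
        return "evaluation limit exceeded"
    if elapsed_s > max_seconds:
        return "time limit exceeded"
    return None


# Illustrative values only: the tolerance below is a placeholder.
tol = 0.01
# Large |f*|: an absolute gap of 0.5 already passes the test.
print(percent_error(10000.5, 10000.0) <= tol)
# Tiny nonzero |f*|: an absolute gap of about 1e-9 still fails it.
print(percent_error(1e-6 + 1e-9, 1e-6) <= tol)
```

The two printed comparisons mirror the remark above: a relative criterion is loose in absolute terms when the optimum is large and very strict when the optimum is close to zero.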

The experimental results presented in this article are also available in digital form through the Results/MDPI directory of the GitHub repository [59]. Additionally, the MATLAB script for cycling through all the DIRECTGOLib v1.3 test problems used in this paper can be found in the Scripts/MDPI directory of the same GitHub repository [59]. This script is useful for reproducing results as well as for comparing and evaluating newly developed algorithms.

4.2. Analysis of the Overall Performance of Algorithms

Table 1 summarizes the experimental results obtained from six algorithms. The second column shows the number of problems that were not solved within the specified relative error, while columns three to seven display the average number of function evaluations for different subsets of problems.

Table 1.

Performance evaluation of DIRECT-type algorithms on 67 linearly constrained optimization problems.

Among the algorithms analyzed, our new mBIRECTv-GL algorithm demonstrated the highest success rate, with only 9 of the 67 problems remaining unsolved. The DIRECT-GLce algorithm and the DIRECT-GLc algorithm closely followed, with 14 and 16 unsolved problems, respectively, placing them as the second and third most effective algorithms.

When considering the overall average number of function evaluations, mBIRECTv-GL outperformed all other algorithms, being nearly twice as efficient as the second-best algorithm, Lc-DISIMPLv. However, for lower-dimensional problems, Lc-DISIMPLv achieved the best average results. Although mBIRECTv-GL required more function evaluations than Lc-DISIMPLv on those problems, it required fewer evaluations on the higher-dimensional problems (see Table 1).

The final two columns display the average number of function evaluations for problems with and without active constraints. When none of the constraint functions are active, most algorithms exhibit similar performance, except for the glcSolve algorithm, which shows comparatively inferior results in such cases (see column NAC). However, when the solution lies on the boundary of the feasible region, our developed algorithm exhibits a clear advantage (see column AC).
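The kind of aggregation reported in Table 1 can be sketched as follows. This is a hypothetical illustration, not the script from the repository: the record layout, the cap recorded for failed runs, and the dimension threshold separating "small" from "large" problems are all placeholder assumptions.

```python
from statistics import mean


def summarize(results, cap, small_dim):
    """Aggregate per-problem outcomes for one algorithm.

    results   : list of dicts with keys 'n' (dimension), 'active' (True if a
                constraint is active at the solution) and 'evals' (int, or
                None when the run failed).
    cap       : value recorded for failed runs (placeholder).
    small_dim : largest dimension counted as "small" (placeholder).
    """
    def avg(rows):
        if not rows:
            return float("nan")
        return mean(cap if r["evals"] is None else r["evals"] for r in rows)

    return {
        "unsolved": sum(r["evals"] is None for r in results),
        "overall": avg(results),
        "small_n": avg([r for r in results if r["n"] <= small_dim]),
        "large_n": avg([r for r in results if r["n"] > small_dim]),
        "AC": avg([r for r in results if r["active"]]),
        "NAC": avg([r for r in results if not r["active"]]),
    }


# Made-up records for four problems, purely for illustration.
demo = [
    {"n": 2, "active": True, "evals": 100},
    {"n": 3, "active": False, "evals": 300},
    {"n": 10, "active": True, "evals": None},
    {"n": 20, "active": False, "evals": 1000},
]
print(summarize(demo, cap=5000, small_dim=4))
```

Counting failed runs at a fixed cap keeps the averages comparable across algorithms, at the price of making them sensitive to the chosen cap.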

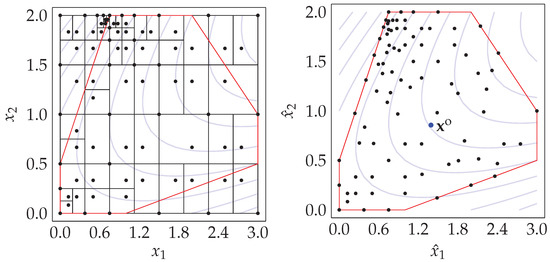

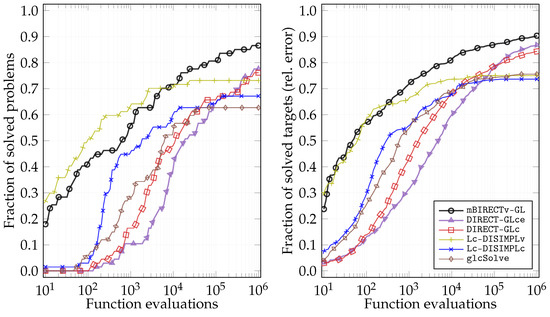

Figure 6 presents the data profiles [61,62] and empirical cumulative distributions (ECD) computed over the entire set of test problems. To construct the ECD, we established 51 targets spanning a range of relative precisions (a setup similar to that in [63]). The ECD plots, shown on the right-hand side of Figure 6, provide information on the performance of the algorithms at different stages of the search. Both the data profiles and the ECD plots highlight the dominance of two algorithms, mBIRECTv-GL and Lc-DISIMPLv, which we attribute to their sampling strategy of placing points directly on the boundaries. These two algorithms achieve higher success rates while requiring fewer function evaluations, making them more efficient and cost-effective than the other algorithms.

Figure 6.

Data profiles and empirical cumulative distributions (ECD) of function evaluations for various target precisions on all problems.

The data profiles displayed on the left side of Figure 6 indicate that within a small evaluation budget (≤4), the Lc-DISIMPLv algorithm is slightly more efficient than our developed algorithm and can solve a greater number of test problems. However, the ECD plot on the right side of Figure 6 reveals that these algorithms solve similar percentages of target errors within ≤3. When larger evaluation budgets are considered, the mBIRECTv-GL algorithm outperforms all other algorithms.
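For readers unfamiliar with these two summaries, the following Python sketch shows one common way to compute them. It assumes the data profile definition in units of simplex gradients (evaluations divided by n + 1) and a COCO-style ECD over logarithmically spaced targets. The function names, the target range, and the sample data are illustrative assumptions rather than the setup used for Figure 6.

```python
def data_profile(evals, dims, alpha):
    """Fraction of problems solved within alpha simplex gradients.

    evals[p] is the number of evaluations needed on problem p (None if the
    problem was not solved); dims[p] is its dimension.
    """
    solved = sum(1 for t, n in zip(evals, dims)
                 if t is not None and t / (n + 1) <= alpha)
    return solved / len(evals)


def log_targets(hi, lo, count):
    """count target precisions spaced uniformly on a log scale from hi to lo."""
    ratio = (lo / hi) ** (1.0 / (count - 1))
    return [hi * ratio ** k for k in range(count)]


def ecd(histories, targets, budget):
    """Fraction of (problem, target) pairs reached within budget evaluations.

    histories[p][k] is the error of the best point found on problem p after
    k + 1 evaluations.
    """
    hit = 0
    for errors in histories:
        seen = errors[:budget]
        best = min(seen) if seen else float("inf")
        hit += sum(1 for t in targets if best <= t)
    return hit / (len(histories) * len(targets))


# Made-up data for illustration.
print(data_profile([30, None, 200], [2, 3, 9], alpha=10))
histories = [[3.0, 0.3, 0.03, 0.0003], [5.0, 5.0, 5.0, 5.0]]
print(ecd(histories, log_targets(10.0, 0.001, 5), budget=2))
```

The data profile counts only whether the final tolerance was reached, whereas the ECD also credits partial progress toward looser targets, which is why the two plots can rank algorithms differently at small budgets.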

4.3. Statistical Analysis of the Results

The validity of the comparisons between algorithms and the significance of the improvements achieved by mBIRECTv-GL were evaluated using the non-parametric Wilcoxon signed-rank test. A p-value greater than the chosen significance level suggests that the difference in results between the two methods is insignificant. Table 2 displays the p-values obtained when comparing mBIRECTv-GL with the other DIRECT-type solvers. In all instances, the p-values are below the significance level, which indicates that mBIRECTv-GL performs significantly better than the competing algorithms on the benchmark problems examined. However, as the evaluation budgets increase, the p-values of the penalty-based DIRECT-type algorithms closely approach the significance level.
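As a rough illustration of how such a paired comparison works, the sketch below implements the Wilcoxon signed-rank test with the normal approximation and a tie correction in plain Python. It is a simplified stand-in: exact small-sample tables and continuity correction are omitted, the function names are hypothetical, and the evaluation counts are made up.

```python
import math


def average_ranks(values):
    """Ascending ranks starting at 1, with tied values sharing the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and values[order[end + 1]] == values[order[start]]:
            end += 1
        shared = (start + end) / 2.0 + 1.0
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def wilcoxon_signed_rank(a, b):
    """Two-sided test for paired samples a and b (normal approximation)."""
    diffs = [x - y for x, y in zip(a, b) if x != y]  # zero differences dropped
    n = len(diffs)
    if n == 0:
        raise ValueError("all paired differences are zero")
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    counts = {}
    for r in ranks:
        counts[r] = counts.get(r, 0) + 1
    tie_term = sum(c ** 3 - c for c in counts.values()) / 48.0
    mean_w = n * (n + 1) / 4.0
    var_w = n * (n + 1) * (2 * n + 1) / 24.0 - tie_term
    z = (w_plus - mean_w) / math.sqrt(var_w)
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return w_plus, w_minus, p_value


# Made-up evaluation counts of two solvers on eight problems.
solver_a = [10, 20, 30, 40, 50, 60, 70, 80]
solver_b = [11, 22, 33, 44, 55, 66, 77, 88]
print(wilcoxon_signed_rank(solver_a, solver_b))
```

In this toy example every difference favours the first solver, so all rank mass falls on one side and the p-value drops below conventional significance levels.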

Table 2.

p-values of the Wilcoxon signed-rank test, mBIRECTv-GL vs. other competitors, using different objective function evaluation budgets.

According to the results of the Friedman mean rank test presented in Table 3, mBIRECTv-GL achieves the best ranking among the approaches for all objective function evaluation budgets. The developed algorithm is most advantageous when smaller evaluation budgets are used. The Friedman test indicates a statistically significant difference in the performance of the various algorithms. Nevertheless, as the evaluation budgets increase, the Friedman mean rank values become less dispersed, implying that there will come a point at which the algorithms demonstrate similar performance.
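The mean ranks underlying the Friedman test can be computed as in the following sketch. It assumes that a lower evaluation count is better and uses the textbook chi-square statistic without tie correction. The function names and the small results table are hypothetical.

```python
def average_ranks(row):
    """Rank values in ascending order (1 = best), averaging ranks over ties."""
    order = sorted(range(len(row)), key=lambda i: row[i])
    ranks = [0.0] * len(row)
    start = 0
    while start < len(order):
        end = start
        while end + 1 < len(order) and row[order[end + 1]] == row[order[start]]:
            end += 1
        shared = (start + end) / 2.0 + 1.0
        for pos in range(start, end + 1):
            ranks[order[pos]] = shared
        start = end + 1
    return ranks


def friedman(table):
    """Mean ranks and Friedman chi-square statistic.

    table[p][a] is the number of evaluations used by algorithm a on problem p.
    """
    n_problems, n_algs = len(table), len(table[0])
    ranked = [average_ranks(row) for row in table]
    mean_ranks = [sum(r[a] for r in ranked) / n_problems for a in range(n_algs)]
    centre = (n_algs + 1) / 2.0
    chi2 = (12.0 * n_problems / (n_algs * (n_algs + 1))
            * sum((m - centre) ** 2 for m in mean_ranks))
    return mean_ranks, chi2


# Made-up evaluation counts: three problems (rows), three algorithms (columns).
table = [
    [100, 200, 300],
    [150, 100, 400],
    [90, 300, 200],
]
print(friedman(table))
```

When all algorithms perform alike, every mean rank approaches (k + 1)/2 for k algorithms and the statistic approaches zero, which matches the shrinking dispersion described above for larger budgets.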

Table 3.

Friedman mean rank values, using different objective function evaluation budgets.

5. Conclusions and Future Prospects

This paper adds a novel approach to the class of DIRECT-type algorithms. The proposed algorithm (mBIRECTv-GL) is specifically designed to tackle global optimization problems involving Lipschitz continuous functions subject to linear constraints. By integrating the most recent partitioning and selection techniques and applying mapping techniques to eliminate the infeasible region, the novel algorithm demonstrates remarkable efficiency and superior performance compared to the existing DIRECT counterparts.

Although existing solution techniques, such as simplicial partitioning approaches, operate exclusively within feasible regions, their effectiveness decreases significantly in larger dimensions. In contrast, penalty- and auxiliary-function-based approaches exhibit slower convergence rates and often require a considerably higher number of function evaluations due to their handling of large infeasible regions. These methods also face notable challenges when dealing with problems where the optimal solution lies precisely at the boundaries of feasibility.

To validate the effectiveness of our approach, we conducted extensive experimentation using a diverse set of benchmark problems. Our results highlight the superior performance of our algorithm, particularly when solutions are located at the boundary of feasible regions. The statistical analyses, including the Friedman and Wilcoxon tests, further support our results.

This research opens up novel possibilities for addressing Lipschitz-continuous optimization problems with linear constraints, providing an improved algorithmic solution with enhanced computational efficiency. The new algorithm therefore complements the other DIRECT-type algorithms available in the open-source DIRECTGO repository (see the Data Availability Statement below). To achieve even greater usability, one potential direction is to integrate DIRECT-type algorithms into the new web-based tool for algebraic modeling and mathematical optimization [64,65]. Alternatively, at least the most efficient DIRECT-type algorithms could be hosted on the NEOS Server (https://neos-server.org/neos/, accessed on 30 May 2023), a free internet-based service for solving numerical optimization problems.

Author Contributions

All authors contributed equally to this work. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Data Availability Statement

The DIRECTGOLib (DIRECT Global Optimization test problems Library) is an open-source GitHub repository that serves as a comprehensive collection of test problems for global optimization. It is designed to grow continuously and welcomes contributions from anyone. The data used in this article, sourced from DIRECTGOLib v1.3, are available on GitHub and Zenodo:

- https://github.com/blockchain-group/DIRECTGOLib (accessed on 15 June 2023),

- https://zenodo.org/record/8046086 (accessed on 16 June 2023).

They are released under the MIT license, allowing users to access and utilize the data. We encourage contributions and corrections to enhance the library’s content further.

The original mBIRECTv-GL algorithm, along with four of its competitors (Lc-DISIMPLc, Lc-DISIMPLv, DIRECT-GLc, and DIRECT-GLce), can be accessed on GitHub:

- https://github.com/blockchain-group/DIRECTGO (accessed on 15 June 2023).

Conflicts of Interest

The authors declare no conflict of interest.

Appendix A. Linearly Constrained Test Problems from the DIRECTGOLib v1.3 Benchmark Library

Table A1.

Key characteristics of global optimization test problems with linear constraints from DIRECTGOLib v1.3 library [41].


| # | Name | Ref. | n | Con. | AC | Variable Bounds | Optimum |
|---|---|---|---|---|---|---|---|
| 1 | avgasa | [66] | 8 | 10 | 3 |  |  |
| 2 | avgasb | [66] | 8 | 10 | 4 |  |  |
| 3 | biggsc4 | [66] | 4 | 13 | 3 |  |  |
| 4 | Bunnag1 | [66] | 3 | 1 | 1 |  |  |
| 5 | Bunnag2 | [66] | 4 | 2 | 1 |  |  |
| 6 | Bunnag3 | [66] | 5 | 3 | 1 |  |  |
| 7 | Bunnag4 | [66] | 6 | 2 | 1 |  |  |
| 8 | Bunnag5 | [66] | 6 | 5 | 1 |  |  |
| 9 | Bunnag6 | [66] | 10 | 11 | 3 |  |  |
| 10 | Bunnag7 | [66] | 10 | 5 | 0 |  |  |
| 11 | Bunnag8 | [66] | 5 | 1 | 0 |  |  |
| 12 | Bunnag10 | [66] | 20 | 10 | 5 |  |  |
| 13 | Bunnag11 | [66] | 20 | 10 | 5 |  |  |
| 14 | Bunnag12 | [66] | 20 | 10 | 5 |  |  |
| 15 | Bunnag13 | [66] | 20 | 10 | 0 |  |  |
| 16 | Bunnag14 | [66] | 20 | 10 | 0 |  |  |
| 17 | Bunnag15 | [66] | 20 | 10 | 2 |  |  |
| 18 | ex2_1_1 | [66] | 5 | 1 | 1 |  |  |
| 19 | ex2_1_2 | [66] | 6 | 2 | 1 |  |  |
| 20 | expfita | [66] | 5 | 2 | 0 |  |  |
| 21 | expfitb | [66] | 5 | 2 | 0 |  |  |
| 22 | expfitc | [66] | 5 | 2 | 0 |  |  |
| 23 | G01 | [66] | 13 | 9 | 6 |  |  |
| 24 | Genocop7 | [66] | 6 | 2 | 1 |  |  |
| 25 | Genocop9 | [66] | 3 | 5 | 2 |  |  |
| 26 | Genocop10 | [66] | 4 | 2 | 1 |  |  |
| 27 | Horst1 | [67] | 2 | 3 | 1 |  |  |
| 28 | Horst2 | [67] | 2 | 3 | 2 |  |  |
| 29 | Horst3 | [67] | 2 | 3 | 0 |  |  |
| 30 | Horst4 | [67] | 3 | 4 | 2 |  |  |
| 31 | Horst5 | [67] | 3 | 4 | 2 |  |  |
| 32 | Horst6 | [67] | 3 | 7 | 2 |  |  |
| 33 | Horst7 | [67] | 3 | 4 | 2 |  |  |
| 34 | hs021 | [66] | 2 | 1 | 0 |  |  |
| 35 | hs021mod | [66] | 7 | 1 | 1 |  |  |
| 36 | hs024 | [66] | 2 | 3 | 2 |  |  |
| 37 | hs036 | [66] | 3 | 1 | 1 |  |  |
| 38 | hs037 | [66] | 3 | 2 | 1 |  |  |
| 39 | hs038 | [66] | 4 | 2 | 0 |  |  |
| 40 | hs044 | [66] | 4 | 6 | 2 |  |  |
| 41 | hs076 | [66] | 4 | 3 | 1 |  |  |
| 42 | hs086 | [66] | 5 | 1 | 0 |  |  |
| 43 | hs118 | [66] | 15 | 17 | 9 |  |  |
| 44 | hs268 | [66] | 5 | 5 | 2 |  |  |
| 45 | Ji1 | [66] | 3 | 4 | 1 |  |  |
| 46 | Ji2 | [66] | 3 | 2 | 0 |  |  |
| 47 | Ji3 | [66] | 2 | 1 | 0 |  |  |
| 48 | ksip | [66] | 10 | 20 | 2 |  |  |
| 49 | Michalewicz1 | [66] | 2 | 3 | 0 |  |  |
| 50 | P9 | [66] | 3 | 9 | 2 |  |  |
| 51 | P14 | [66] | 3 | 4 | 2 |  |  |
| 52 | s224 | [66] | 2 | 4 | 1 |  |  |
| 53 | s231 | [66] | 2 | 2 | 0 |  |  |
| 54 | s232 | [66] | 2 | 3 | 2 |  |  |
| 55 | s250 | [66] | 3 | 2 | 1 |  |  |
| 56 | s251 | [66] | 3 | 1 | 1 |  |  |
| 57 | s253 | [66] | 3 | 1 | 0 |  |  |
| 58 | s268 | [66] | 5 | 5 | 2 |  |  |
| 59 | s277 | [66] | 4 | 4 | 4 |  |  |
| 60 | s278 | [66] | 6 | 6 | 6 |  |  |
| 61 | s279 | [66] | 8 | 8 | 8 |  |  |
| 62 | s280 | [66] | 10 | 10 | 10 |  |  |
| 63 | s331 | [66] | 2 | 1 | 0 |  |  |
| 64 | s340 | [66] | 3 | 1 | 1 |  |  |
| 65 | s354 | [66] | 4 | 1 | 1 |  |  |
| 66 | s359 | [66] | 5 | 14 | 4 |  |  |
| 67 | zecevic2 | [66] | 2 | 2 | 1 |  |  |

References

1. Lucidi, S.; Sciandrone, M. A Derivative-Free Algorithm for Bound Constrained Optimization. Comput. Optim. Appl. 2002, 21, 119–142.
2. Giovannelli, T.; Liuzzi, G.; Lucidi, S.; Rinaldi, F. Derivative-free methods for mixed-integer nonsmooth constrained optimization. Comput. Optim. Appl. 2022, 82, 293–327.
3. Kimiaei, M.; Neumaier, A. Efficient unconstrained black box optimization. Math. Program. Comput. 2022, 14, 365–414.
4. Holland, J. Adaptation in Natural and Artificial Systems; The University of Michigan Press: Ann Arbor, MI, USA, 1975.
5. Eberhart, R.; Kennedy, J. A new optimizer using particle swarm theory. In Proceedings of the Sixth International Symposium on Micro Machine and Human Science (MHS’95), Nagoya, Japan, 4–6 October 1995; pp. 39–43.
6. Kirkpatrick, S.; Gelatt, C.D.; Vecchi, M.P. Optimization by simulated annealing. Science 1983, 220, 671–680.
7. Bujok, P.; Kolenovsky, P. Eigen crossover in cooperative model of evolutionary algorithms applied to CEC 2022 single objective numerical optimisation. In Proceedings of the 2022 IEEE Congress on Evolutionary Computation (CEC), Padua, Italy, 18–23 July 2022; pp. 1–8.
8. Hadi, A.A.; Mohamed, A.W.; Jambi, K.M. Single-objective real-parameter optimization: Enhanced LSHADE-SPACMA algorithm. Heuristics Optim. Learn. 2021, 906, 103–121.
9. Paulavičius, R.; Stripinis, L.; Sutavičiūtė, S.; Kočegarov, D.; Filatovas, E. A novel greedy genetic algorithm-based personalized travel recommendation system. Expert Syst. Appl. 2023, 230, 120580.
10. Jones, D.R.; Schonlau, M.; Welch, W.J. Efficient Global Optimization of Expensive Black-Box Functions. J. Glob. Optim. 1998, 13, 455–492.
11. Björkman, M.; Holmström, K. Global Optimization of Costly Nonconvex Functions Using Radial Basis Functions. Optim. Eng. 2000, 1, 373–397.
12. Lera, D.; Sergeyev, Y.D. Lipschitz and Hölder global optimization using space-filling curves. Appl. Numer. Math. 2010, 60, 115–129.
13. Sergeyev, Y.D.; Kvasov, D.E. Lipschitz global optimization. In Wiley Encyclopedia of Operations Research and Management Science (in 8 Volumes); Cochran, J.J., Cox, L.A., Keskinocak, P., Kharoufeh, J.P., Smith, J.C., Eds.; John Wiley & Sons: New York, NY, USA, 2011; Volume 4, pp. 2812–2828.
14. Martins, J.R.R.A.; Ning, A. Engineering Design Optimization; Cambridge University Press: Cambridge, UK, 2021.
15. Best, M.J. Portfolio Optimization; CRC Press: Boca Raton, FL, USA, 2010.
16. Floudas, C.A.; Pardalos, P.M.; Adjiman, C.; Esposito, W.R.; Gümüs, Z.H.; Harding, S.T.; Klepeis, J.L.; Meyer, C.A.; Schweiger, C.A. Handbook of Test Problems in Local and Global Optimization; Springer: Berlin/Heidelberg, Germany, 2013; Volume 33.
17. Jones, D.R.; Perttunen, C.D.; Stuckman, B.E. Lipschitzian Optimization Without the Lipschitz Constant. J. Optim. Theory Appl. 1993, 79, 157–181.
18. Jones, D.R. The Direct Global Optimization Algorithm. In The Encyclopedia of Optimization; Floudas, C.A., Pardalos, P.M., Eds.; Kluwer Academic Publishers: Dordrecht, The Netherlands, 2001; pp. 431–440.
19. Jones, D.R.; Martins, J.R.R.A. The DIRECT algorithm: 25 years later. J. Glob. Optim. 2021, 79, 521–566.
20. Sergeyev, Y.D.; Kvasov, D.E. Global search based on diagonal partitions and a set of Lipschitz constants. SIAM J. Optim. 2006, 16, 910–937.
21. Holmstrom, K.; Goran, A.O.; Edvall, M.M. User’s Guide for TOMLAB 7. Technical Report. Tomlab Optimization Inc. 2010. Available online: https://tomopt.com/docs/TOMLAB.pdf (accessed on 15 June 2023).
22. Stripinis, L.; Žilinskas, J.; Casado, L.G.; Paulavičius, R. On MATLAB experience in accelerating DIRECT-GLce algorithm for constrained global optimization through dynamic data structures and parallelization. Appl. Math. Comput. 2021, 390, 125596.
23. Stripinis, L.; Paulavičius, R. An empirical study of various candidate selection and partitioning techniques in the DIRECT framework. J. Glob. Optim. 2022, 1–31.
24. Stripinis, L.; Paulavičius, R.; Žilinskas, J. Penalty functions and two-step selection procedure based DIRECT-type algorithm for constrained global optimization. Struct. Multidiscip. Optim. 2019, 59, 2155–2175.
25. Stripinis, L.; Paulavičius, R. Experimental Study of Excessive Local Refinement Reduction Techniques for Global Optimization DIRECT-Type Algorithms. Mathematics 2022, 10, 3760.
26. Stripinis, L.; Paulavičius, R. Lipschitz-inspired HALRECT algorithm for derivative-free global optimization. J. Glob. Optim. 2023, 1–31.
27. Liuzzi, G.; Lucidi, S.; Piccialli, V. A direct-based approach exploiting local minimizations for the solution for large-scale global optimization problems. Comput. Optim. Appl. 2010, 45, 353–375.
28. Liuzzi, G.; Lucidi, S.; Piccialli, V. Exploiting derivative-free local searches in direct-type algorithms for global optimization. Comput. Optim. Appl. 2016, 65, 449–475.
29. Kvasov, D.E.; Mukhametzhanov, M.S. Metaheuristic vs. deterministic global optimization algorithms: The univariate case. Appl. Math. Comput. 2018, 318, 245–259.
30. Rios, L.M.; Sahinidis, N.V. Derivative-free optimization: A review of algorithms and comparison of software implementations. J. Glob. Optim. 2013, 56, 1247–1293.
31. Sergeyev, Y.D.; Kvasov, D.E.; Mukhametzhanov, M.S. On the efficiency of nature-inspired metaheuristics in expensive global optimization with limited budget. Sci. Rep. 2018, 8, 453.
32. Costa, M.F.P.; Rocha, A.M.A.C.; Fernandes, E.M.G.P. Filter-based DIRECT method for constrained global optimization. J. Glob. Optim. 2018, 71, 517–536.
33. Finkel, D.E. MATLAB Source Code for DIRECT. 2004. Available online: http://www4.ncsu.edu/~ctk/Finkel_Direct/ (accessed on 22 March 2017).
34. Liu, H.; Xu, S.; Chen, X.; Wang, X.; Ma, Q. Constrained global optimization via a DIRECT-type constraint-handling technique and an adaptive metamodeling strategy. Struct. Multidiscip. Optim. 2017, 55, 155–177.
35. Pillo, G.D.; Liuzzi, G.; Lucidi, S.; Piccialli, V.; Rinaldi, F. A DIRECT-type approach for derivative-free constrained global optimization. Comput. Optim. Appl. 2016, 65, 361–397.
36. Pillo, G.D.; Lucidi, S.; Rinaldi, F. An approach to constrained global optimization based on exact penalty functions. J. Optim. Theory Appl. 2010, 54, 251–260.
37. Gablonsky, J.M. Modifications of the DIRECT Algorithm. Ph.D. Thesis, North Carolina State University, Raleigh, NC, USA, 2001.
38. Na, J.; Lim, Y.; Han, C. A modified DIRECT algorithm for hidden constraints in an LNG process optimization. Energy 2017, 126, 488–500.
39. Stripinis, L.; Paulavičius, R. A new DIRECT-GLh algorithm for global optimization with hidden constraints. Optim. Lett. 2021, 15, 1865–1884.
40. Paulavičius, R.; Žilinskas, J. Advantages of simplicial partitioning for Lipschitz optimization problems with linear constraints. Optim. Lett. 2016, 10, 237–246.
41. Stripinis, L.; Paulavičius, R. DIRECTGOLib - DIRECT Global Optimization Test Problems Library. Version v1.3, Zenodo. 2023. Available online: https://zenodo.org/record/8046086/export/hx (accessed on 15 June 2023).
42. Friedman, M. The Use of Ranks to Avoid the Assumption of Normality Implicit in the Analysis of Variance. J. Am. Stat. Assoc. 1937, 32, 675–701.
43. Hollander, M.; Wolfe, D. Nonparametric Statistical Methods, Solutions Manual; Wiley Series in Probability and Statistics; Wiley: Hoboken, NJ, USA, 1999.
44. Gablonsky, J.M.; Kelley, C.T. A locally-biased form of the DIRECT algorithm. J. Glob. Optim. 2001, 21, 27–37.
45. Paulavičius, R.; Žilinskas, J. Analysis of different norms and corresponding Lipschitz constants for global optimization in multidimensional case. Inf. Technol. Control 2007, 36, 383–387.
46. Paulavičius, R.; Žilinskas, J. Simplicial Lipschitz optimization without the Lipschitz constant. J. Glob. Optim. 2014, 59, 23–40.
47. Paulavičius, R.; Žilinskas, J. Simplicial Global Optimization; SpringerBriefs in Optimization; Springer: New York, NY, USA, 2014.
48. Finkel, D.E. Global Optimization with the Direct Algorithm. Ph.D. Thesis, North Carolina State University, Raleigh, NC, USA, 2005.
49. Fletcher, R.; Leyffer, S. Nonlinear programming without a penalty function. Math. Program. 2002, 91, 239–269.
50. Barber, C.B.; Dobkin, D.P.; Huhdanpaa, H. The quickhull algorithm for convex hulls. ACM Trans. Math. Softw. (TOMS) 1996, 22, 469–483.
51. Becker, S. CON2VERT - Constraints to Vertices, MATLAB Central File Exchange. 2023. Available online: https://www.mathworks.com/matlabcentral/fileexchange/7894-con2vert-constraints-to-vertices (accessed on 16 May 2023).
52. Huyer, W.; Neumaier, A. Global Optimization by Multilevel Coordinate Search. J. Glob. Optim. 1999, 14, 331–355.
53. Liu, H.; Xu, S.; Wang, X.; Wu, X.; Song, Y. A global optimization algorithm for simulation-based problems via the extended DIRECT scheme. Eng. Optim. 2015, 47, 1441–1458.
54. Chiter, L. Experimental Data for the Preprint “Diagonal Partitioning Strategy Using Bisection of Rectangles and a Novel Sampling Scheme”. Mendeley Data, V2. 2023. Available online: https://data.mendeley.com/datasets/x9fpc9w7wh/2 (accessed on 16 June 2023).
55. Paulavičius, R.; Chiter, L.; Žilinskas, J. Global optimization based on bisection of rectangles, function values at diagonals, and a set of Lipschitz constants. J. Glob. Optim. 2018, 71, 5–20.
56. Stripinis, L.; Paulavičius, R.; Žilinskas, J. Improved scheme for selection of potentially optimal hyper-rectangles in DIRECT. Optim. Lett. 2018, 12, 1699–1712.
57. Finkel, D.E.; Kelley, C.T. Additive scaling and the DIRECT algorithm. J. Glob. Optim. 2006, 36, 597–608.
58. Paulavičius, R.; Sergeyev, Y.D.; Kvasov, D.E.; Žilinskas, J. Globally-biased DISIMPL algorithm for expensive global optimization. J. Glob. Optim. 2014, 59, 545–567.
59. Stripinis, L.; Paulavičius, R. DIRECTGO: A New DIRECT-Type MATLAB Toolbox for Derivative-Free Global Optimization. ACM Trans. Math. Softw. 2022, 48, 41.
60. Paulavičius, R.; Sergeyev, Y.D.; Kvasov, D.E.; Žilinskas, J. Globally-biased BIRECT algorithm with local accelerators for expensive global optimization. Expert Syst. Appl. 2020, 144, 11305.
61. Moré, J.J.; Wild, S.M. Benchmarking derivative-free optimization algorithms. SIAM J. Optim. 2009, 20, 172–191.
62. Grishagin, V.A. Operating characteristics of some global search algorithms. In Problems of Stochastic Search; Zinatne: Riga, Latvia, 1978; Volume 7, pp. 198–206. (In Russian)
63. Hansen, N.; Auger, A.; Ros, R.; Mersmann, O.; Tušar, T.; Brockhoff, D. COCO: A platform for comparing continuous optimizers in a black-box setting. Optim. Methods Softw. 2021, 36, 114–144.
64. Jusevičius, V.; Oberdieck, R.; Paulavičius, R. Experimental Analysis of Algebraic Modelling Languages for Mathematical Optimization. Informatica 2021, 32, 283–304.
65. Jusevičius, V.; Paulavičius, R. Web-Based Tool for Algebraic Modeling and Mathematical Optimization. Mathematics 2021, 9, 2751.
66. Vaz, A.; Vicente, L. PSwarm: A hybrid solver for linearly constrained global derivative-free optimization. Optim. Methods Softw. 2009, 24, 669–685.
67. Horst, R.; Pardalos, P.M.; Thoai, N.V. Introduction to Global Optimization; Nonconvex Optimization and Its Application; Kluwer Academic Publishers: Berlin, Germany, 1995.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).