Multi-Scale Feature Selective Matching Network for Object Detection

Abstract

1. Introduction

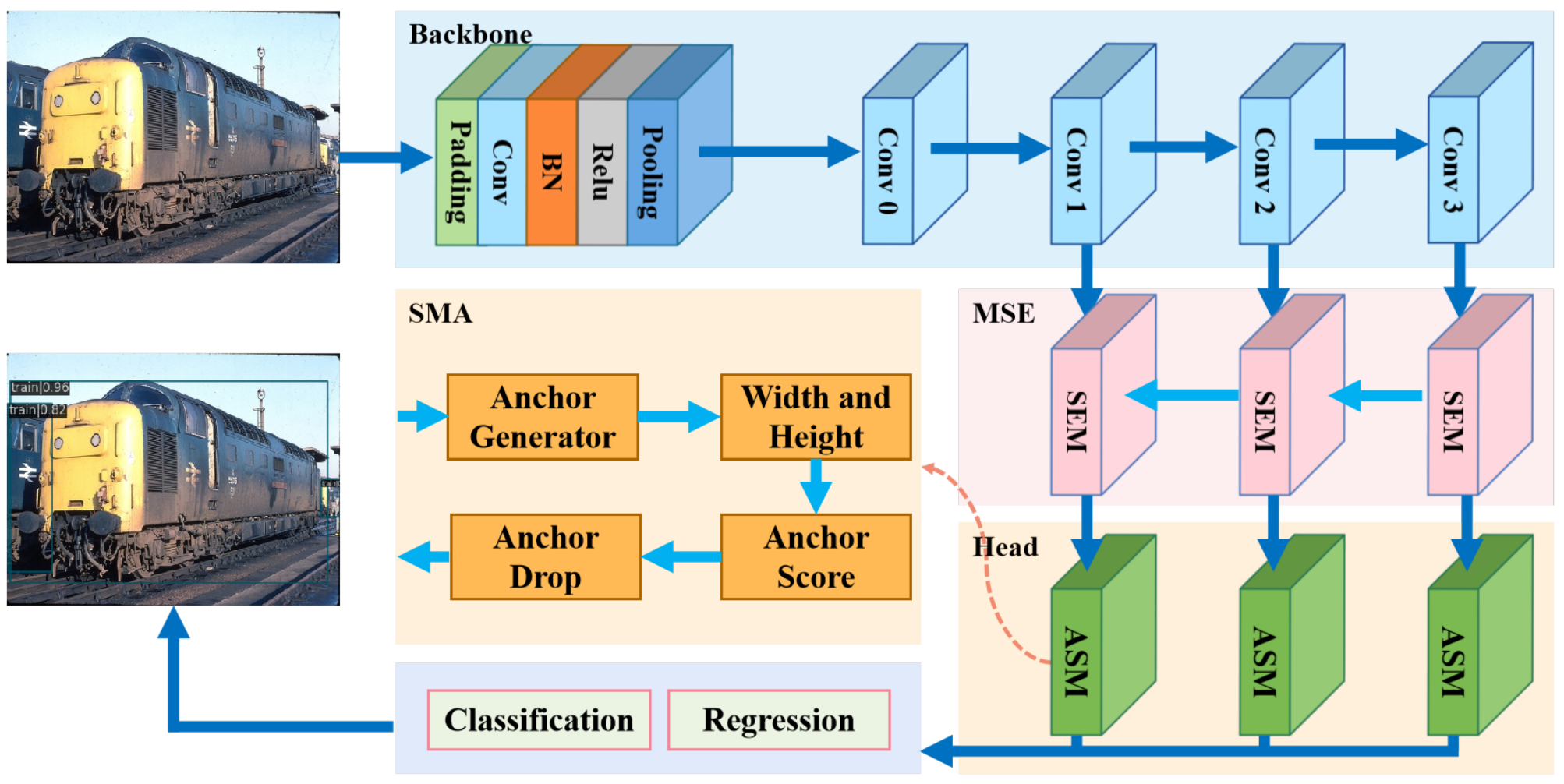

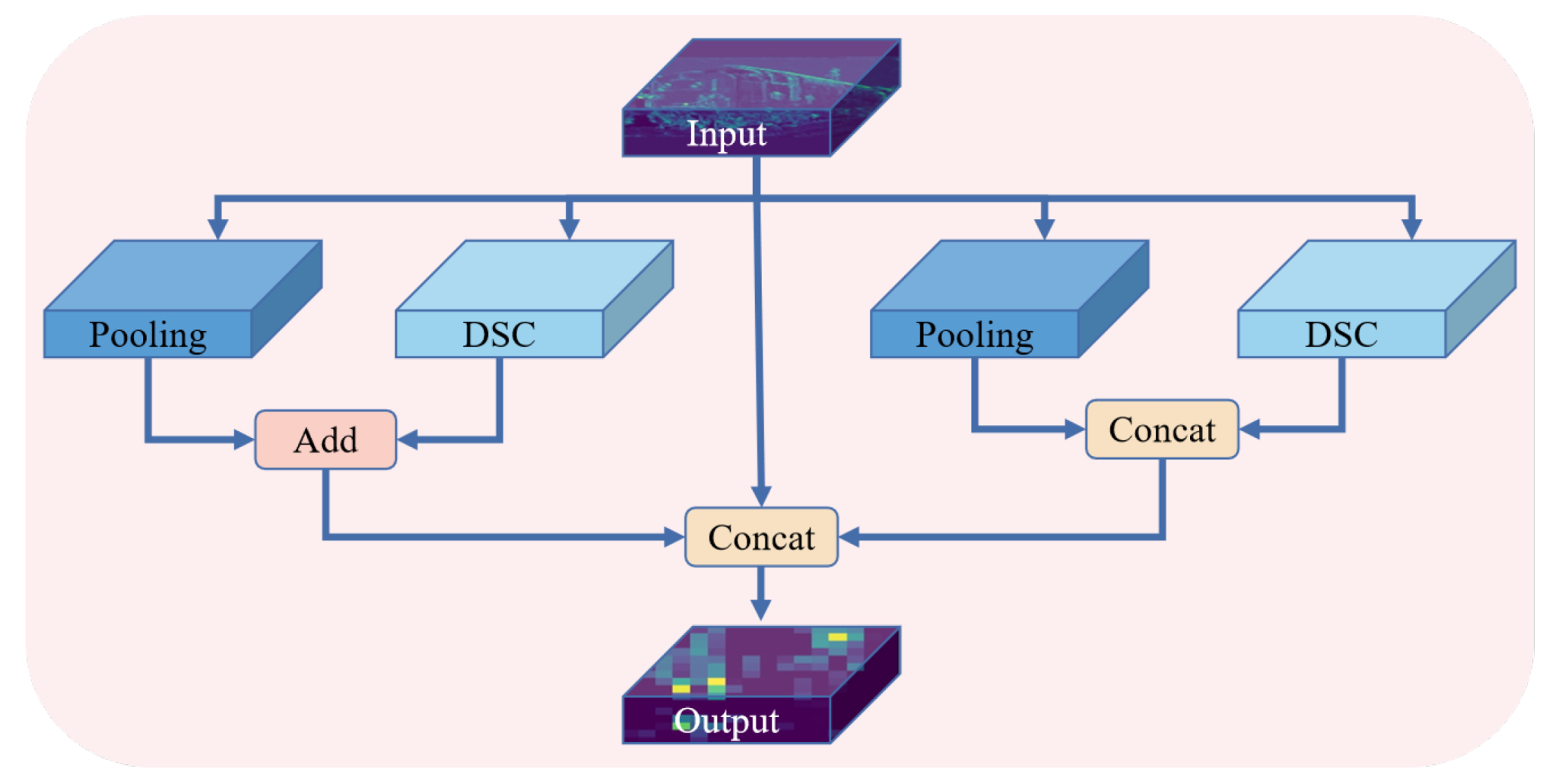

- To improve the detection of small objects, we propose a multi-scale semantic enhancement module (MSEM). The MSEM performs semantic enhancement at multiple scales and enriches the semantic features of MFSMNet.

- To alleviate the performance limitations caused by the imbalance between positive and negative samples, we propose an anchor selective matching (ASM) strategy. It uses an anchor scoring mechanism to discard anchors with low-quality localization, which reduces this imbalance and improves the detection performance of MFSMNet.

- The proposed multi-scale feature selective matching network (MFSMNet) achieves competitive results on the PASCAL VOC 2007 + 2012 and Microsoft COCO datasets, showing that it improves object detection performance.
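The anchor scoring idea behind ASM can be illustrated with a small sketch. The scoring rule used here (the IoU between an anchor and its best-matching ground-truth box) and the threshold `min_score` are assumptions made purely for illustration; the scoring function that MFSMNet actually uses is not reproduced in this text. The names `iou`, `select_anchors`, and `min_score` are hypothetical.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x1, y1, x2, y2)


def iou(a: Box, b: Box) -> float:
    """Intersection over union of two axis-aligned boxes."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0


def select_anchors(
    anchors: List[Box], gt_boxes: List[Box], min_score: float = 0.5
) -> List[int]:
    """Return indices of anchors whose best IoU with any ground-truth box
    reaches min_score. The IoU-based score is an illustrative assumption,
    not the scoring mechanism of the paper."""
    kept = []
    for i, anchor in enumerate(anchors):
        # Score each anchor by its best overlap with a ground-truth box.
        score = max((iou(anchor, gt) for gt in gt_boxes), default=0.0)
        if score >= min_score:
            kept.append(i)
    return kept


anchors = [(0, 0, 10, 10), (1, 1, 11, 11), (20, 20, 30, 30)]
gt_boxes = [(0, 0, 10, 10)]
kept = select_anchors(anchors, gt_boxes)
print(kept)
```

In this toy setting, the third anchor does not overlap the ground-truth box and is discarded before matching, so it never enters training as a poorly localized sample.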

2. Related Work

2.1. Anchor-Free Methods

2.1.1. Keypoint-Based Methods

2.1.2. Center-Based Methods

2.2. Anchor-Based Methods

2.2.1. Two-Stage Methods

2.2.2. One-Stage Methods

3. Our Proposed Method

3.1. Network Structure Design

3.2. Multi-Scale Semantic Enhancement Module

3.3. Anchor Selective Matching Strategy

4. Experimental Results

4.1. Datasets and Metrics

4.2. Experimental Setup

4.3. Quantitative Analysis of Ablation Experiments

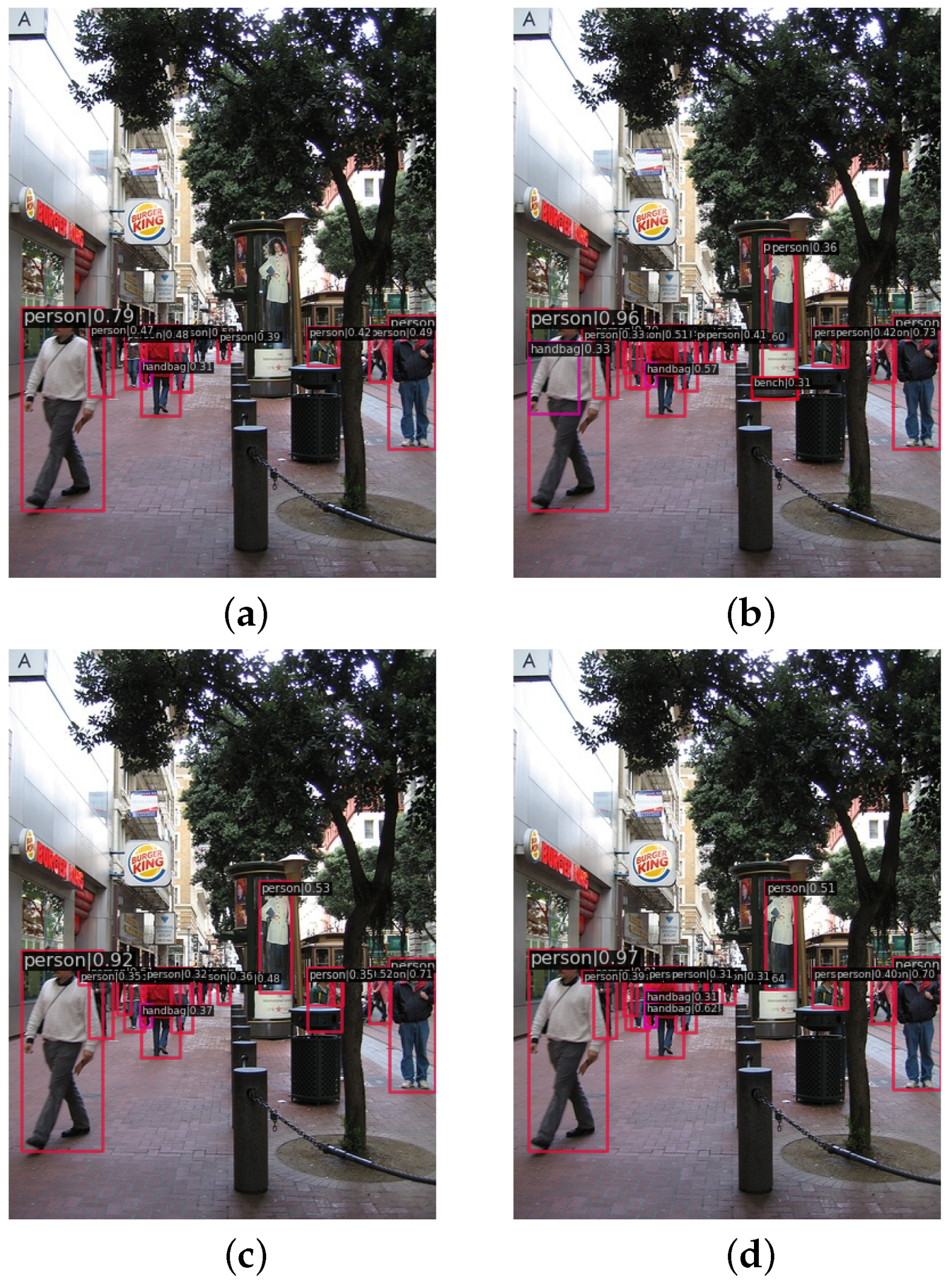

4.4. Qualitative Analysis of Ablation Experiments

4.5. Quantitative Analysis of Comparative Experiments

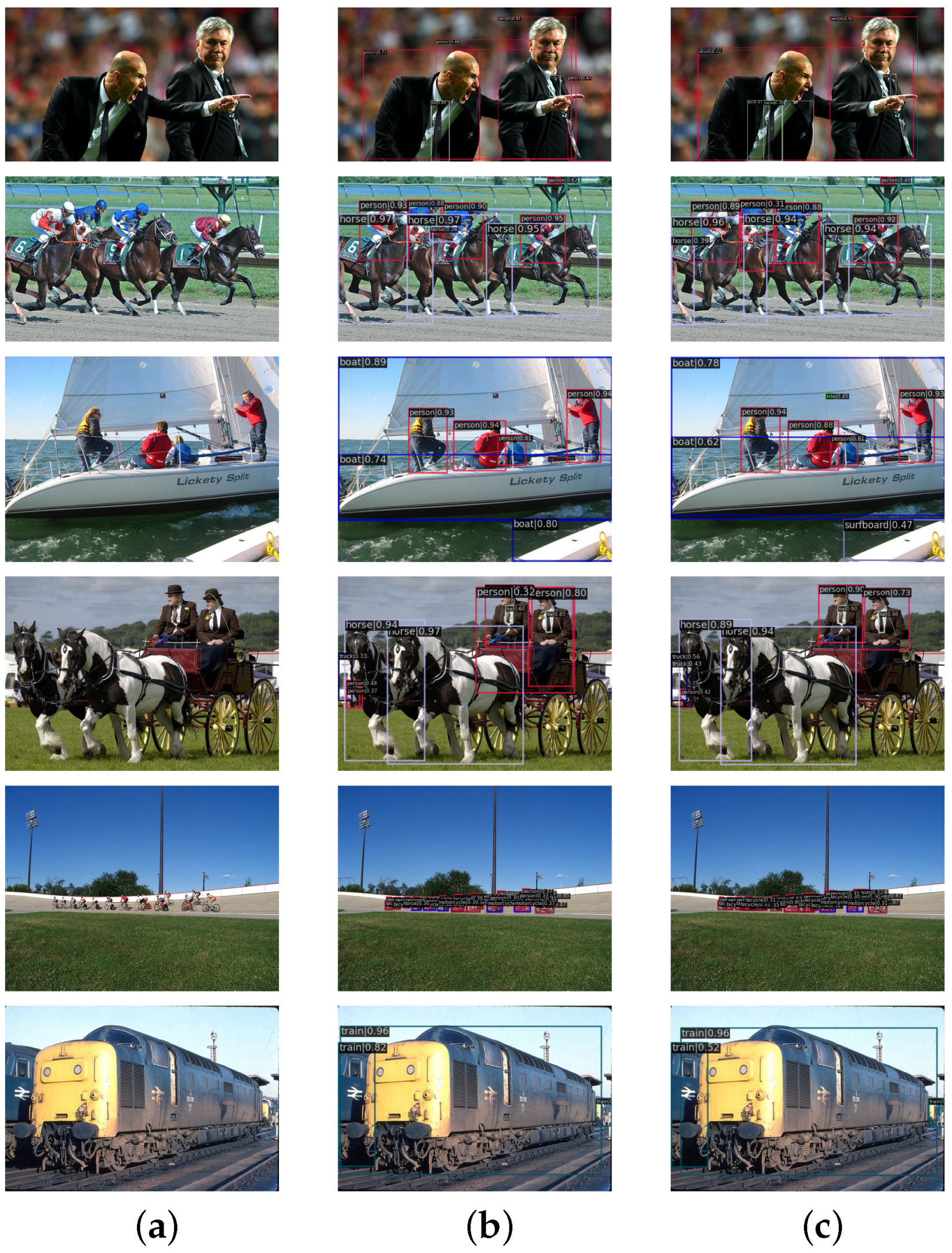

4.6. Qualitative Analysis of Comparative Experiments

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- [1] Rezatofighi, H.; Tsoi, N.; Gwak, J.; Sadeghian, A.; Reid, I.; Savarese, S. Generalized intersection over union: A metric and a loss for bounding box regression. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 658–666.

- [2] Zhou, D.; Liu, Z.; Wang, J.; Wang, L.; Hu, T.; Ding, E.; Wang, J. Human-object interaction detection via disentangled transformer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, New Orleans, LA, USA, 18–24 June 2022; pp. 19568–19577.

- [3] Lee, S.J.; Lee, S.; Cho, S.I.; Kang, S.J. Object detection-based video retargeting with spatial–temporal consistency. IEEE Trans. Circuits Syst. Video Technol. 2020, 30, 4434–4439.

- [4] Li, Z.; Lang, C.; Liang, L.; Zhao, J.; Feng, S.; Hou, Q.; Feng, J. Dense attentive feature enhancement for salient object detection. IEEE Trans. Circuits Syst. Video Technol. 2021, 32, 8128–8141.

- [5] Liu, W.; Anguelov, D.; Erhan, D.; Szegedy, C.; Reed, S.; Fu, C.Y.; Berg, A.C. Ssd: Single shot multibox detector. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2016; pp. 21–37.

- [6] Redmon, J.; Farhadi, A. YOLO9000: Better, faster, stronger. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 21–26 July 2017; pp. 7263–7271.

- [7] Redmon, J.; Farhadi, A. YOLOv3: An incremental improvement. arXiv 2018, arXiv:1804.02767.

- [8] Bochkovskiy, A.; Wang, C.Y.; Liao, H.Y.M. YOLOv4: Optimal speed and accuracy of object detection. arXiv 2020, arXiv:2004.10934.

- [9] Ge, Z.; Liu, S.; Wang, F.; Li, Z.; Sun, J. Yolox: Exceeding yolo series in 2021. arXiv 2021, arXiv:2107.08430.

- [10] Dong, Y.; Tan, W.; Tao, D.; Zheng, L.; Li, X. CartoonLossGAN: Learning surface and coloring of images for cartoonization. IEEE Trans. Image Process. 2021, 31, 485–498.

- [11] Kamilaris, A.; Prenafeta-Boldú, F.X. Deep learning in agriculture: A survey. Comput. Electron. Agric. 2018, 147, 70–90.

- [12] Law, H.; Deng, J. Cornernet: Detecting objects as paired keypoints. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2018; pp. 734–750.

- [13] Zhou, X.; Zhuo, J.; Krahenbuhl, P. Bottom-up object detection by grouping extreme and center points. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 850–859.

- [14] Maninis, K.K.; Caelles, S.; Pont-Tuset, J.; Van Gool, L. Deep extreme cut: From extreme points to object segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 616–625.

- [15] Yang, Z.; Liu, S.; Hu, H.; Wang, L.; Lin, S. Reppoints: Point set representation for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9657–9666.

- [16] Dai, J.; Qi, H.; Xiong, Y.; Li, Y.; Zhang, G.; Hu, H.; Wei, Y. Deformable convolutional networks. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 764–773.

- [17] Duan, K.; Bai, S.; Xie, L.; Qi, H.; Huang, Q.; Tian, Q. Centernet: Keypoint triplets for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 6569–6578.

- [18] Dong, Z.; Li, G.; Liao, Y.; Wang, F.; Ren, P.; Qian, C. Centripetalnet: Pursuing high-quality keypoint pairs for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 10519–10528.

- [19] Redmon, J.; Divvala, S.; Girshick, R.; Farhadi, A. You only look once: Unified, real-time object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 779–788.

- [20] Huang, L.; Yang, Y.; Deng, Y.; Yu, Y. Densebox: Unifying landmark localization with end to end object detection. arXiv 2015, arXiv:1509.04874.

- [21] Yu, J.; Jiang, Y.; Wang, Z.; Cao, Z.; Huang, T. Unitbox: An advanced object detection network. In Proceedings of the 24th ACM International Conference on Multimedia, Amsterdam, The Netherlands, 15–19 October 2016; pp. 516–520.

- [22] Tian, Z.; Shen, C.; Chen, H.; He, T. Fcos: Fully convolutional one-stage object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2019; pp. 9627–9636.

- [23] Dong, Y.; Jiang, Z.; Tao, F.; Fu, Z. Multiple spatial residual network for object detection. Complex Intell. Syst. 2022, 9, 1347–1362.

- [24] Girshick, R.; Donahue, J.; Darrell, T.; Malik, J. Rich feature hierarchies for accurate object detection and semantic segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Columbus, OH, USA, 23–28 June 2014; pp. 580–587.

- [25] Girshick, R. Fast r-cnn. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Santiago, Chile, 7–13 December 2015; pp. 1440–1448.

- [26] Ren, S.; He, K.; Girshick, R.; Sun, J. Faster r-cnn: Towards real-time object detection with region proposal networks. Adv. Neural Inf. Process. Syst. 2015, 28.

- [27] Xie, X.; Cheng, G.; Wang, J.; Yao, X.; Han, J. Oriented R-CNN for object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Seoul, Republic of Korea, 27 October–2 November 2021; pp. 3520–3529.

- [28] He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask r-cnn. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2961–2969.

- [29] Lee, Y.; Park, J. Centermask: Real-time anchor-free instance segmentation. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 13906–13915.

- [30] Dong, Y.; Zhao, K.; Zheng, L.; Yang, H.; Liu, Q.; Pei, Y. Refinement Co-supervision network for real-time semantic segmentation. IET Comput. Vis. 2023, in press.

- [31] Dong, Y.; Yang, H.; Pei, Y.; Shen, L.; Zheng, L.; Peiluan, L. Compact interactive dual-branch network for real-time semantic segmentation. Complex Intell. Syst. 2023, in press.

- [32] Fang, F.; Li, L.; Zhu, H.; Lim, J.H. Combining faster R-CNN and model-driven clustering for elongated object detection. IEEE Trans. Image Process. 2019, 29, 2052–2065.

- [33] Dong, Y.; Shen, L.; Pei, Y.; Yang, H.; Li, X. Field-matching attention network for object detection. Neurocomputing 2023, 535, 123–133.

- [34] Fu, C.Y.; Liu, W.; Ranga, A.; Tyagi, A.; Berg, A.C. Dssd: Deconvolutional single shot detector. arXiv 2017, arXiv:1701.06659.

- [35] Lin, T.Y.; Goyal, P.; Girshick, R.; He, K.; Dollár, P. Focal loss for dense object detection. In Proceedings of the IEEE/CVF International Conference on Computer Vision, Venice, Italy, 22–29 October 2017; pp. 2980–2988.

- [36] Zhang, S.; Wen, L.; Bian, X.; Lei, Z.; Li, S.Z. Single-shot refinement neural network for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Beijing, China, 17–20 September 2017; pp. 4203–4212.

- [37] Fang, F.; Xu, Q.; Li, L.; Gu, Y.; Lim, J.H. Detecting objects with high object region percentage. In Proceedings of the 2020 25th International Conference on Pattern Recognition, Milan, Italy, 10–15 January 2021; pp. 7173–7180.

- [38] Lin, T.Y.; Dollár, P.; Girshick, R.; He, K.; Hariharan, B.; Belongie, S. Feature pyramid networks for object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, San Diego, CA, USA, 23–27 July 2018; pp. 2117–2125.

- [39] Everingham, M.; Winn, J. The Pascal Visual Object Classes Challenge 2007 (voc2007) Development Kit; Tech. Rep; University of Leeds: Leeds, UK, 2007.

- [40] Lin, T.Y.; Maire, M.; Belongie, S.; Hays, J.; Perona, P.; Ramanan, D.; Dollár, P.; Zitnick, C.L. Microsoft coco: Common objects in context. In Proceedings of the European Conference on Computer Vision; Springer: Berlin/Heidelberg, Germany, 2014; pp. 740–755.

- [41] Chen, K.; Wang, J.; Pang, J.; Cao, Y.; Xiong, Y.; Li, X.; Sun, S.; Feng, W.; Liu, Z.; Xu, J.; et al. MMDetection: Open mmlab detection toolbox and benchmark. arXiv 2019, arXiv:1906.07155.

- [42] Zhu, C.; He, Y.; Savvides, M. Feature selective anchor-free module for single-shot object detection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Long Beach, CA, USA, 15–20 June 2019; pp. 840–849.

- [43] Zhang, S.; Chi, C.; Yao, Y.; Lei, Z.; Li, S.Z. Bridging the gap between anchor-based and anchor-free detection via adaptive training sample selection. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Seattle, WA, USA, 13–19 June 2020; pp. 9759–9768.

- [44] Kong, T.; Sun, F.; Liu, H.; Jiang, Y.; Li, L.; Shi, J. Foveabox: Beyound anchor-based object detection. IEEE Trans. Image Process. 2020, 29, 7389–7398.

- [45] Li, X.; Wang, W.; Wu, L.; Chen, S.; Hu, X.; Li, J.; Tang, J.; Yang, J. Generalized focal loss: Learning qualified and distributed bounding boxes for dense object detection. Adv. Neural Inf. Process. Syst. 2020, 33, 21002–21012.

- [46] Zhang, H.; Wang, Y.; Dayoub, F.; Sunderhauf, N. Varifocalnet: An iou-aware dense object detector. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Nashville, TN, USA, 20–25 June 2021; pp. 8514–8523.

- [47] Zhang, X.; Wan, F.; Liu, C.; Ji, X.; Ye, Q. Learning to match anchors for visual object detection. IEEE Trans. Pattern Anal. Mach. Intell. 2021, 44, 3096–3109.

| Method | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|
| Baseline | 38.6 | 56.4 | 41.7 | 21.6 | 42.4 | 49.0 |
| +MSEM | 38.9 | 56.5 | 41.9 | 22.7 | 42.4 | 49.4 |
| +ASM | 39.6 | 56.9 | 43.2 | 22.2 | 43.8 | 51.4 |
| Baseline + MSEM + ASM | 39.9 | 57.1 | 43.6 | 23.2 | 44.2 | 51.9 |
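To make the contribution of each component easier to read off, the following sketch computes the per-metric gain of every ablation row over the baseline. The numbers are copied from the ablation table above. The metric names (AP, AP50, AP75, APS, APM, APL) assume that the six numeric columns follow the standard COCO metric order; the helper name `gains_over_baseline` is hypothetical.

```python
# Metric order assumed to be the standard COCO one (an assumption).
METRICS = ["AP", "AP50", "AP75", "APS", "APM", "APL"]

# Values copied from the ablation table.
ROWS = {
    "Baseline": [38.6, 56.4, 41.7, 21.6, 42.4, 49.0],
    "+MSEM": [38.9, 56.5, 41.9, 22.7, 42.4, 49.4],
    "+ASM": [39.6, 56.9, 43.2, 22.2, 43.8, 51.4],
    "Baseline + MSEM + ASM": [39.9, 57.1, 43.6, 23.2, 44.2, 51.9],
}


def gains_over_baseline(rows):
    """Difference of each non-baseline row to the baseline, per metric."""
    base = rows["Baseline"]
    return {
        name: [round(v - b, 1) for v, b in zip(vals, base)]
        for name, vals in rows.items()
        if name != "Baseline"
    }


gains = gains_over_baseline(ROWS)
for name, deltas in gains.items():
    print(name, dict(zip(METRICS, deltas)))
```

Under this reading of the columns, MSEM gives its largest gain on small objects (+1.1 APS), ASM mainly improves AP75 and large objects, and the combination improves overall AP by 1.3 points.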

| Method | mAP | Plane | Bike | Bird | Boat | Cup | Bus | Car | Cat | Chair | Cow | Table | Dog | Horse | Mbike | Human | Plant | Sheep | Sofa | Train | TV |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| RetinaNet [35] | 79.13 | 87.2 | 86.2 | 78.1 | 66.4 | 71.0 | 84.7 | 87.7 | 88.4 | 62.9 | 85.2 | 72.6 | 85.8 | 85.7 | 82.7 | 84.1 | 53.4 | 82.9 | 77.0 | 82.9 | 77.7 |
| FSAF [42] | 76.31 | 79.3 | 79.3 | 76.0 | 65.1 | 67.6 | 83.1 | 86.7 | 87.1 | 59.8 | 83.2 | 69.5 | 85.3 | 85.1 | 81.9 | 84.4 | 48.2 | 76.3 | 71.6 | 82.8 | 74.0 |
| RepPoints [15] | 79.47 | 83.5 | 82.4 | 77.1 | 72.4 | 71.6 | 85.1 | 87.8 | 88.3 | 63.4 | 86.3 | 75.7 | 87.5 | 85.8 | 84.1 | 83.8 | 50.7 | 84.0 | 76.2 | 86.3 | 77.4 |
| FCOS [22] | 71.59 | 78.5 | 78.7 | 68.3 | 61.8 | 57.6 | 78.0 | 82.2 | 83.0 | 54.8 | 80.2 | 65.8 | 80.4 | 78.4 | 77.4 | 76.5 | 41.4 | 74.5 | 66.9 | 81.1 | 66.2 |
| ATSS [43] | 77.77 | 84.7 | 81.9 | 76.8 | 67.9 | 69.5 | 85.4 | 86.4 | 88.1 | 61.7 | 86.4 | 72.3 | 85.1 | 85.4 | 80.2 | 83.1 | 48.3 | 81.1 | 72.3 | 81.7 | 77.1 |
| Foveabox [44] | 76.67 | 79.8 | 80.2 | 77.0 | 66.9 | 66.7 | 82.5 | 86.9 | 87.3 | 62.1 | 85.6 | 69.4 | 85.1 | 85.9 | 78.9 | 84.4 | 48.8 | 79.1 | 71.2 | 79.4 | 76.5 |
| GFL [45] | 77.04 | 85.4 | 83.6 | 76.1 | 63.9 | 67.6 | 82.2 | 86.5 | 86.9 | 59.6 | 83.4 | 72.8 | 83.9 | 84.9 | 83.2 | 83.3 | 48.7 | 78.2 | 70.6 | 83.9 | 76.2 |
| VFNet [46] | 77.83 | 83.1 | 84.3 | 76.7 | 68.4 | 69.5 | 84.5 | 86.7 | 87.3 | 61.3 | 83.7 | 70.5 | 84.8 | 85.4 | 83.4 | 84.2 | 49.8 | 79.0 | 73.1 | 84.3 | 76.6 |
| Free Anchor [47] | 78.16 | 85.0 | 83.6 | 76.0 | 65.5 | 69.7 | 85.4 | 86.9 | 87.6 | 62.5 | 82.3 | 72.3 | 85.1 | 86.0 | 84.4 | 85.0 | 47.5 | 82.3 | 74.9 | 85.1 | 76.1 |
| YOLOv5-s 1 | 77.30 | 87.2 | 87.4 | 71.8 | 66.4 | 68.6 | 86.2 | 91.0 | 83.5 | 52.0 | 81.3 | 72.2 | 80.0 | 86.9 | 85.8 | 85.3 | 51.1 | 80.3 | 67.8 | 82.5 | 79.0 |
| Ours | 79.73 | 87.3 | 85.6 | 79.0 | 70.8 | 70.9 | 85.7 | 87.4 | 89.0 | 63.2 | 85.9 | 73.4 | 85.9 | 86.7 | 84.3 | 85.4 | 53.8 | 82.4 | 75.5 | 84.4 | 78.0 |
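The first numeric column of the PASCAL VOC table appears to be the mean average precision (mAP), that is, the arithmetic mean of the 20 per-class AP values in the same row. The short check below verifies this for the "Ours" row. Reading the first column as mAP is an assumption inferred from the numbers, and the helper name `mean_ap` is hypothetical.

```python
# Per-class AP values of the "Ours" row, copied from the PASCAL VOC table.
OURS_CLASS_AP = [
    87.3, 85.6, 79.0, 70.8, 70.9, 85.7, 87.4, 89.0, 63.2, 85.9,
    73.4, 85.9, 86.7, 84.3, 85.4, 53.8, 82.4, 75.5, 84.4, 78.0,
]
REPORTED = 79.73  # first numeric column of the same row


def mean_ap(class_aps):
    """Arithmetic mean of per-class average precision values."""
    return sum(class_aps) / len(class_aps)


computed = mean_ap(OURS_CLASS_AP)
print(f"computed {computed:.2f}, reported {REPORTED:.2f}")
```

The same check can be repeated for any other row by swapping in its 20 per-class values and its first-column entry.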

| Method | Backbone | Input Shape | Params (M) | FPS | AP | AP50 | AP75 | APS | APM | APL |
|---|---|---|---|---|---|---|---|---|---|---|
| RetinaNet [35] | ResNet-50 | 3, 1280, 800 | 37.7 | 25.5 | 36.2 | 55.1 | 38.7 | 20.4 | 39.8 | 46.8 |
| FSAF [42] | ResNet-50 | 3, 1280, 800 | 35.3 | 28.3 | 37.0 | 56.2 | 39.4 | 20.3 | 40.1 | 47.8 |
| RepPoints [15] | ResNet-50 | 3, 1280, 800 | 36.6 | 27.1 | 37.4 | 56.8 | 40.3 | 21.9 | 41.4 | 48.3 |
| FCOS [22] | ResNet-50 | 3, 1280, 800 | 32.0 | 19.6 | 36.9 | 45.8 | 39.3 | 20.7 | 40.1 | 47.2 |
| ATSS [43] | ResNet-50 | 3, 1280, 800 | 32.1 | 28.3 | 38.6 | 56.4 | 41.7 | 21.6 | 42.4 | 49.0 |
| Foveabox [44] | ResNet-50 | 3, 1280, 800 | 36.2 | 29.6 | 35.5 | 54.9 | 37.8 | 19.8 | 39.1 | 46.1 |
| GFL [45] | ResNet-50 | 3, 1280, 800 | 32.2 | 28.4 | 39.6 | 57.3 | 42.7 | 21.8 | 43.5 | 51.8 |
| VFNet [46] | ResNet-50 | 3, 1280, 800 | 32.7 | 26.3 | 37.5 | 53.9 | 40.5 | 21.0 | 41.0 | 49.0 |
| Free Anchor [47] | ResNet-50 | 3, 1280, 800 | 38.3 | 25.3 | 38.2 | 56.7 | 40.7 | 20.8 | 41.6 | 49.8 |
| YOLOv5-s 1 | Darknet-53 | 3, 640, 640 | 7.2 | 140.8 | 37.1 | 57.0 | 39.6 | 20.9 | 42.6 | 47.6 |
| Ours | ResNet-50 | 3, 1280, 800 | 32.9 | 22.8 | 39.9 | 57.1 | 43.6 | 23.2 | 44.2 | 51.9 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Pei, Y.; Dong, Y.; Zheng, L.; Ma, J. Multi-Scale Feature Selective Matching Network for Object Detection. Mathematics 2023, 11, 2655. https://doi.org/10.3390/math11122655
