Bicluster Analysis of Heterogeneous Panel Data via M-Estimation

Abstract

1. Introduction

2. Materials and Methods

2.1. Model Setting

2.2. Proposed Estimator

2.3. Proposed Algorithm

2.4. Asymptotic Properties

3. Simulation

3.1. Simulation Setting

- RMSE: the root mean square error between the estimated parameter and the true parameter.

- Bias: the bias of the estimated parameter relative to the true parameter.

- Per: the percentage of simulation runs in which the estimated number of blocks equals the true number of blocks.

- ERI: The Rand Index (RI) is used to evaluate the accuracy of clustering; it ranges between 0 and 1, with higher values indicating better performance. We can calculate individual-specific or period-specific RIs, and we define ERI as their average over all periods and individuals, as follows:

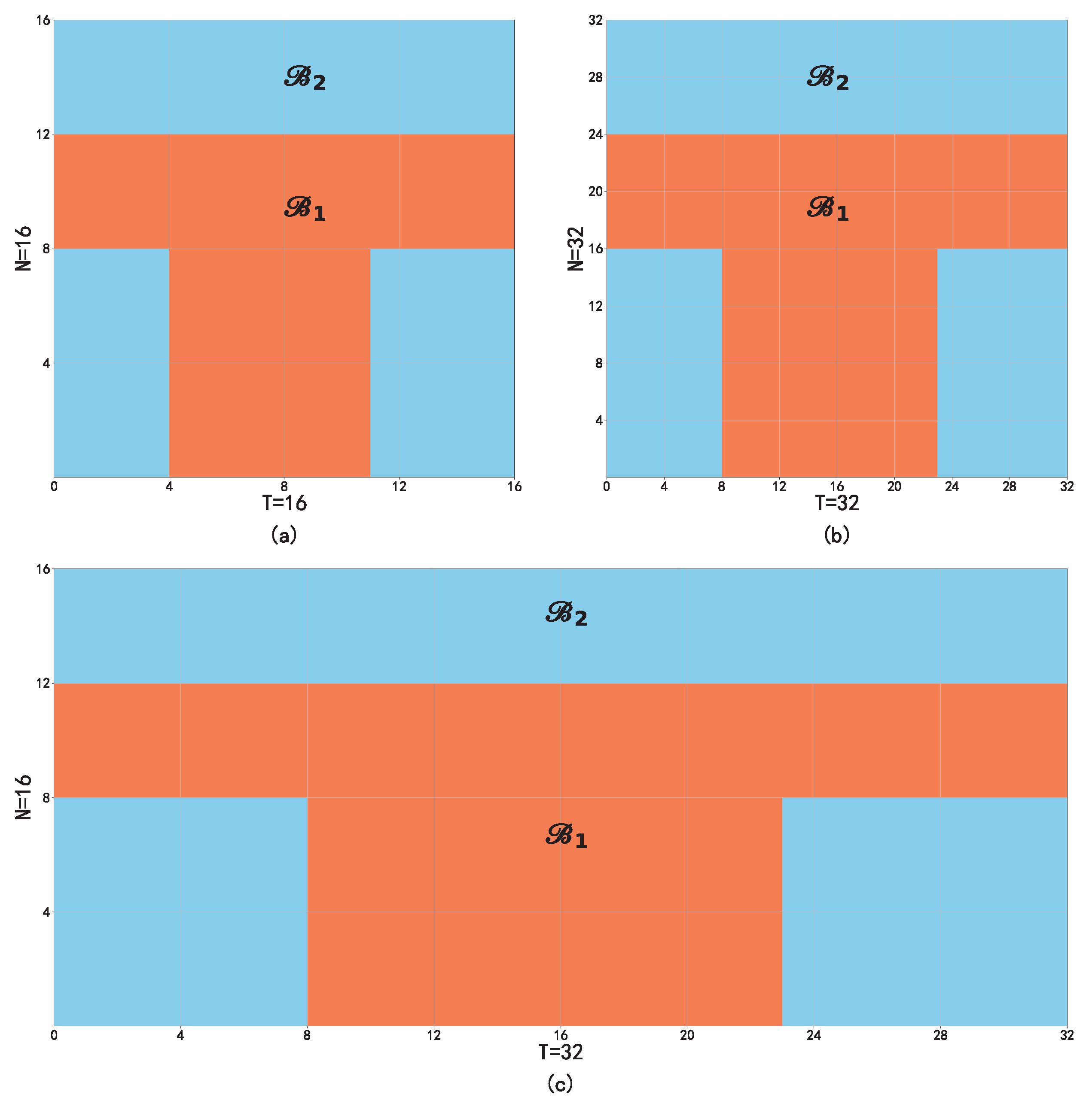
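The evaluation metrics listed above can be illustrated with a minimal Python sketch. It uses textbook definitions (RMSE, signed mean bias, and the pairwise Rand Index), which may differ in detail from the exact formulas used in the paper. The function names, the layout of the label matrices (rows as individuals, columns as periods), and the way the period-specific and individual-specific RIs are pooled into one average are assumptions made for illustration only.

```python
from itertools import combinations

import numpy as np


def rmse(est, true):
    """Root mean square error between estimated and true parameters."""
    est, true = np.asarray(est, dtype=float), np.asarray(true, dtype=float)
    return float(np.sqrt(np.mean((est - true) ** 2)))


def mean_bias(est, true):
    """Signed mean difference (textbook bias; an assumed definition)."""
    est, true = np.asarray(est, dtype=float), np.asarray(true, dtype=float)
    return float(np.mean(est - true))


def per(est_block_counts, true_block_count):
    """Share of runs whose estimated number of blocks equals the true one."""
    return float(np.mean(np.asarray(est_block_counts) == true_block_count))


def rand_index(labels_a, labels_b):
    """Fraction of item pairs on which two partitions agree."""
    pairs = list(combinations(range(len(labels_a)), 2))
    agree = sum(
        (labels_a[i] == labels_a[j]) == (labels_b[i] == labels_b[j])
        for i, j in pairs
    )
    return agree / len(pairs)


def average_rand_index(est_labels, true_labels):
    """Average of period-specific and individual-specific RIs.

    Assumed layout: rows are individuals, columns are periods.
    Pooling both sets of RIs into one mean is an assumption.
    """
    est, true = np.asarray(est_labels), np.asarray(true_labels)
    n, t = est.shape
    per_period = [rand_index(est[:, s], true[:, s]) for s in range(t)]
    per_individual = [rand_index(est[i, :], true[i, :]) for i in range(n)]
    return float(np.mean(per_period + per_individual))
```

The Rand Index depends only on which pairs are grouped together, so relabelling the clusters (for example swapping labels 0 and 1) leaves it unchanged. This is why it suits comparisons between an estimated and a true grouping whose labels are arbitrary.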

3.2. Simulation Examples

3.3. Simulation Results

4. Discussion

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest

Appendix A

Appendix A.1. Proof of Theorem 1

Appendix A.2. Proof of Theorem 2

Appendix A.3. Proof of Theorem 3


| (N, T) | Model | RMSE | Bias | Per | ERI |
|---|---|---|---|---|---|
| (16, 16) | Oracle | 0.034 (0.025) | 0.056 (0.021) | | |
| | L1 | 0.040 (0.031) | 0.087 (0.041) | 0.99 | 0.998 (0.002) |
| | L2 | 0.039 (0.026) | 0.073 (0.030) | 1 | 0.999 (0.001) |
| | Huber | 0.039 (0.026) | 0.075 (0.031) | 1 | 0.998 (0.001) |
| (16, 32) | Oracle | 0.020 (0.015) | 0.039 (0.014) | | |
| | L1 | 0.024 (0.020) | 0.068 (0.034) | 1 | 0.999 (0.001) |
| | L2 | 0.021 (0.016) | 0.040 (0.020) | 1 | 0.999 (0.001) |
| | Huber | 0.022 (0.016) | 0.041 (0.018) | 1 | 0.998 (0.001) |
| (32, 32) | Oracle | 0.015 (0.012) | 0.028 (0.011) | | |
| | L1 | 0.016 (0.014) | 0.035 (0.021) | 1 | 0.998 (0.001) |
| | L2 | 0.016 (0.011) | 0.028 (0.012) | 1 | 0.999 (0.001) |
| | Huber | 0.016 (0.010) | 0.028 (0.012) | 1 | 0.999 (0.001) |

| (N, T) | Model | RMSE | Bias | Per | ERI |
|---|---|---|---|---|---|
| (16, 16) | Oracle | 0.024 (0.020) | 0.046 (0.022) | | |
| | L1 | 0.023 (0.018) | 0.058 (0.032) | 0.98 | 0.998 (0.001) |
| | L2 | 0.026 (0.021) | 0.063 (0.039) | 0.89 | 0.983 (0.011) |
| | Huber | 0.024 (0.019) | 0.060 (0.035) | 0.99 | 0.998 (0.001) |
| (16, 32) | Oracle | 0.016 (0.013) | 0.030 (0.011) | | |
| | L1 | 0.017 (0.013) | 0.041 (0.019) | 1 | 0.999 (0.001) |
| | L2 | 0.023 (0.018) | 0.054 (0.033) | 0.91 | 0.987 (0.006) |
| | Huber | 0.018 (0.014) | 0.043 (0.028) | 1 | 0.998 (0.002) |
| (32, 32) | Oracle | 0.012 (0.009) | 0.021 (0.009) | | |
| | L1 | 0.012 (0.009) | 0.032 (0.012) | 1 | 0.999 (0.001) |
| | L2 | 0.015 (0.012) | 0.037 (0.023) | 0.94 | 0.993 (0.004) |
| | Huber | 0.012 (0.010) | 0.033 (0.022) | 1 | 0.999 (0.001) |

| (N, T) | Model | RMSE | Bias | Per | ERI |
|---|---|---|---|---|---|
| (16, 16) | Oracle | 0.044 (0.037) | 0.084 (0.038) | | |
| | L1 | 0.054 (0.038) | 0.122 (0.056) | 0.79 | 0.986 (0.012) |
| | L2 | 0.081 (0.066) | 0.162 (0.098) | 0.42 | 0.943 (0.037) |
| | Huber | 0.057 (0.065) | 0.151 (0.117) | 0.74 | 0.980 (0.011) |
| (16, 32) | Oracle | 0.034 (0.025) | 0.062 (0.025) | | |
| | L1 | 0.049 (0.034) | 0.112 (0.530) | 0.81 | 0.986 (0.010) |
| | L2 | 0.088 (0.075) | 0.207 (0.129) | 0.49 | 0.953 (0.028) |
| | Huber | 0.051 (0.040) | 0.118 (0.073) | 0.76 | 0.984 (0.010) |
| (32, 32) | Oracle | 0.023 (0.017) | 0.044 (0.017) | | |
| | L1 | 0.032 (0.024) | 0.086 (0.066) | 0.89 | 0.989 (0.005) |
| | L2 | 0.061 (0.073) | 0.149 (0.106) | 0.62 | 0.971 (0.021) |
| | Huber | 0.044 (0.046) | 0.093 (0.068) | 0.83 | 0.987 (0.007) |

| (N, T) | Model | RMSE | Bias | Per | ERI |
|---|---|---|---|---|---|
| (16, 16) | Oracle | 0.044 (0.037) | 0.084 (0.038) | | |
| | L1 | 0.054 (0.038) | 0.122 (0.056) | 0.79 | 0.986 (0.012) |
| | L2 | 0.073 (0.062) | 0.157 (0.093) | 0.42 | 0.943 (0.037) |
| | Huber | 0.057 (0.045) | 0.151 (0.087) | 0.74 | 0.980 (0.011) |
| (16, 32) | Oracle | 0.034 (0.025) | 0.062 (0.025) | | |
| | L1 | 0.049 (0.034) | 0.112 (0.053) | 0.81 | 0.986 (0.010) |
| | L2 | 0.070 (0.063) | 0.154 (0.091) | 0.49 | 0.953 (0.028) |
| | Huber | 0.051 (0.040) | 0.118 (0.073) | 0.76 | 0.984 (0.010) |
| (32, 32) | Oracle | 0.023 (0.017) | 0.044 (0.017) | | |
| | L1 | 0.032 (0.024) | 0.086 (0.066) | 0.89 | 0.989 (0.005) |
| | L2 | 0.061 (0.073) | 0.149 (0.106) | 0.62 | 0.971 (0.002) |
| | Huber | 0.044 (0.036) | 0.093 (0.068) | 0.83 | 0.987 (0.007) |

| Model | RMSE | Bias |
|---|---|---|
| Oracle | 0.015 (0.012) | 0.028 (0.011) |
| Double Penalties | 0.016 (0.011) | 0.028 (0.012) |
| Individual Penalty Only | 0.155 (0.119) | 0.799 (0.342) |
| Temporal Penalty Only | 0.226 (0.130) | 0.440 (0.154) |

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Cui, W.; Li, Y. Bicluster Analysis of Heterogeneous Panel Data via M-Estimation. Mathematics 2023, 11, 2333. https://doi.org/10.3390/math11102333
