Cost-Sensitive Laplacian Logistic Regression for Ship Detention Prediction

Abstract

1. Introduction

2. Literature Review

3. Preliminaries

3.1. The Learning Problem and Regularization

3.2. Smoothness and Laplacian

4. Models and Algorithms

4.1. Predictive Model

4.2. Cost-Sensitive Semi-Supervised Learning Framework

Algorithm 1: Cost-sensitive Laplacian Logistic Regression (CosLapLR).

Require: n inspected ships, u uninspected ships.

Ensure: Estimated decision function.
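Algorithm 1 takes n inspected (labeled) ships and u uninspected (unlabeled) ships and returns a decision function. A minimal sketch of that idea follows, assuming a linear decision function, a symmetrized kNN graph with heat-kernel weights, class-dependent costs `alpha` and `1 - alpha`, and plain gradient descent. The function names, hyperparameter names (`gamma_a`, `gamma_i`, `t`), and default values are illustrative assumptions, not the authors' implementation.

```python
import numpy as np

def knn_heat_laplacian(X, k=3, t=1.0):
    """Unnormalized Laplacian L = D - W of a symmetrized kNN graph (assumed construction)."""
    sq = ((X[:, None, :] - X[None, :, :]) ** 2).sum(-1)   # pairwise squared distances
    n = X.shape[0]
    # k nearest neighbours of each point; column 0 of the sort is the point itself
    nbrs = np.argsort(sq, axis=1)[:, 1:k + 1]
    rows = np.repeat(np.arange(n), k)
    cols = nbrs.ravel()
    W = np.zeros((n, n))
    W[rows, cols] = np.exp(-sq[rows, cols] / (4.0 * t))    # heat-kernel edge weights
    W = np.maximum(W, W.T)                                 # make the graph undirected
    return np.diag(W.sum(axis=1)) - W

def fit_coslaplr(X_lab, y, X_unl, alpha=0.9, gamma_a=1e-2, gamma_i=1e-2,
                 k=3, t=1.0, lr=0.1, n_iter=2000):
    """Cost-weighted logistic loss on labeled ships plus a graph smoothness penalty on all ships."""
    X = np.vstack([X_lab, X_unl])
    Xb = np.hstack([X, np.ones((X.shape[0], 1))])          # append a bias column
    n, total = X_lab.shape[0], X.shape[0]
    L = knn_heat_laplacian(X, k=k, t=t)
    M = Xb.T @ L @ Xb / total ** 2                         # w^T M w = f^T L f / (n + u)^2
    # Assumed cost scheme: alpha for detained ships (y = 1), 1 - alpha otherwise
    c = np.where(y == 1, alpha, 1.0 - alpha)
    w = np.zeros(Xb.shape[1])
    for _ in range(n_iter):
        p = 1.0 / (1.0 + np.exp(-(Xb[:n] @ w)))            # predicted detention probability
        grad = Xb[:n].T @ (c * (p - y)) / n                # weighted logistic loss
        grad += 2.0 * gamma_a * w + 2.0 * gamma_i * (M @ w)  # ambient and intrinsic penalties
        w -= lr * grad
    return w                                               # decision function: sign(x . w[:-1] + w[-1])
```

Setting `gamma_i=0` drops the graph term and leaves a cost-sensitive logistic regression, while `alpha=0.5` weights both classes equally. The sketch also penalizes the bias term for brevity.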

4.3. Performance Metrics

5. Computational Experiments

5.1. Data Description

5.2. Results

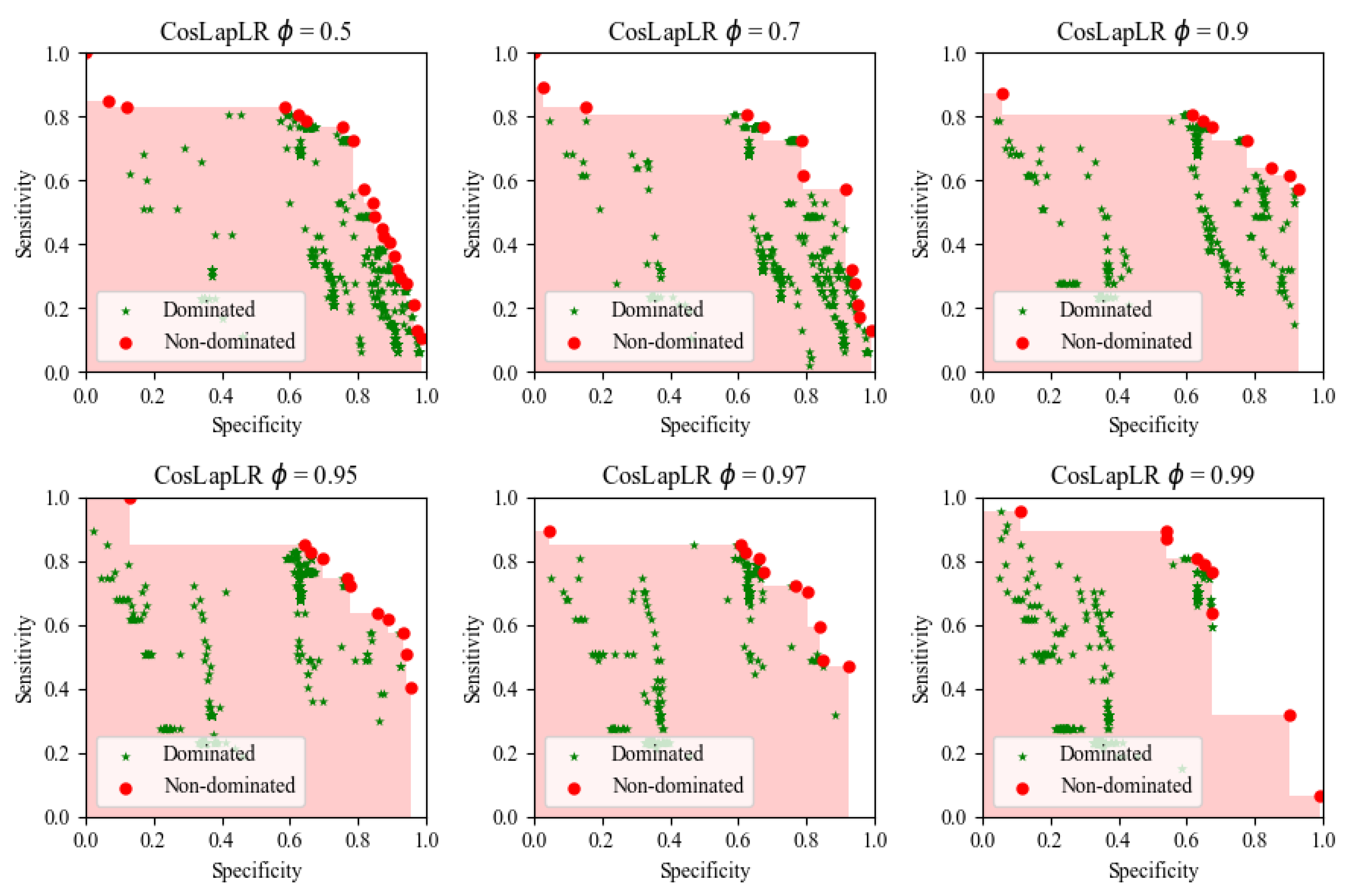

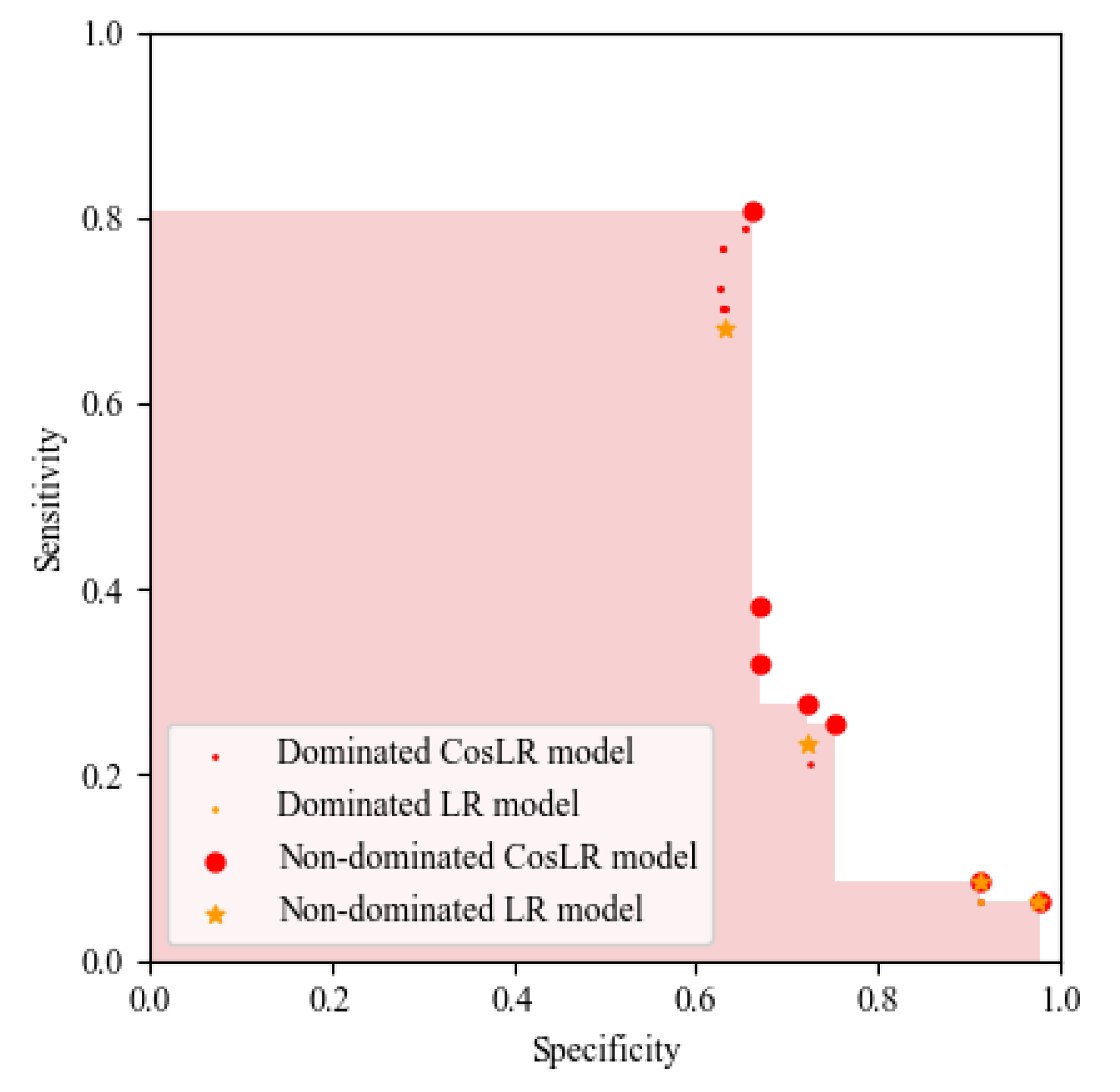

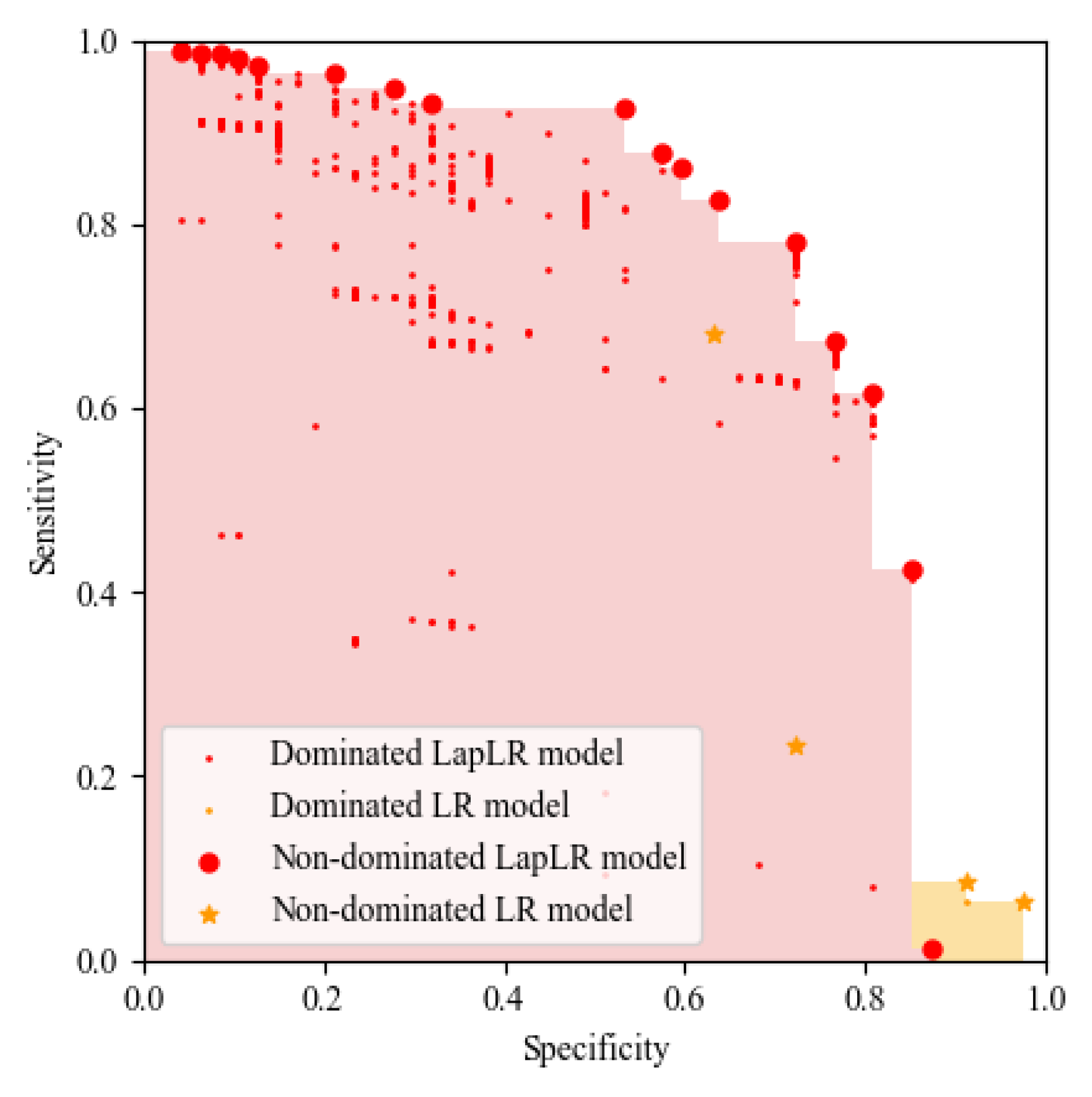

5.2.1. Pareto Frontier Graphs of Binary Classification Models

5.2.2. Prediction Performance of Binary Classification Models

6. Conclusions and Future Research Directions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

- Ng, M. Container vessel fleet deployment for liner shipping with stochastic dependencies in shipping demand. Transp. Res. Part B Methodol. 2015, 74, 79–87.

- Tian, S.; Zhu, X. Data analytics in transport: Does Simpson’s paradox exist in rule of ship selection for port state control? Electron. Res. Arch. 2023, 31, 251–272.

- Yan, R.; Wang, S.; Peng, C. An artificial intelligence model considering data imbalance for ship selection in port state control based on detention probabilities. J. Comput. Sci. 2021, 48, 101257.

- Fazi, S.; Roodbergen, K. Effects of demurrage and detention regimes on dry-port-based inland container transport. Transp. Res. Part C Emerg. Technol. 2018, 89, 1–18.

- Yan, R.; Wang, S. Ship inspection by port state control—Review of current research. In Smart Transportation Systems 2019; Springer: Singapore, 2019.

- Annual Report on Port State Control in the Asia-Pacific Region 2021. Available online: https://www.tokyo-mou.org/doc/ANN21-web.pdf (accessed on 10 October 2022).

- Krawczyk, B. Learning from imbalanced data: Open challenges and future directions. Prog. Artif. Intell. 2016, 5, 221–232.

- Weiss, G. Mining with rarity: A unifying framework. ACM Sigkdd Explor. Newsl. 2004, 6, 7–19.

- He, H.; Garcia, E. Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 2009, 21, 1263–1284.

- Domingos, P. Metacost: A general method for making classifiers cost-sensitive. In Proceedings of the Fifth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, San Diego, CA, USA, 15–18 August 1999.

- Elkan, C. The foundations of cost-sensitive learning. In Proceedings of the International Joint Conference on Artificial Intelligence, Seattle, WA, USA, 4–10 August 2001.

- Ting, K. An instance-weighting method to induce cost-sensitive trees. IEEE Trans. Knowl. Data Eng. 2002, 14, 659–665.

- Maloof, M. Learning when data sets are imbalanced and when costs are unequal and unknown. In Proceedings of the ICML-2003 Workshop on Learning from Imbalanced Data Sets II, Washington, DC, USA, 21 August 2003.

- McCarthy, K.; Zabar, B.; Weiss, G. Does cost-sensitive learning beat sampling for classifying rare classes? In Proceedings of the 1st International Workshop on Utility-Based Data Mining, Chicago, IL, USA, 21 August 2005.

- Liu, X.; Zhou, Z. The influence of class imbalance on cost-sensitive learning: An empirical study. In Proceedings of the Sixth International Conference on Data Mining, Hong Kong, China, 18–22 December 2006.

- Zhou, Z.; Liu, X. Training cost-sensitive neural networks with methods addressing the class imbalance problem. IEEE Trans. Knowl. Data Eng. 2005, 18, 63–77.

- Sun, Y.; Kamel, M.; Wong, A.; Wang, Y. Cost-sensitive boosting for classification of imbalanced data. Pattern Recognit. 2007, 40, 3358–3378.

- Zhu, X.; Goldberg, A. Introduction to semi-supervised learning. Synth. Lect. Artif. Intell. Mach. Learn. 2009, 3, 1–130.

- Zhou, Z.; Li, M. Semi-supervised learning by disagreement. Knowl. Inf. Syst. 2010, 24, 415–439.

- Greiner, R.; Grove, A.; Roth, D. Learning cost-sensitive active classifiers. Artif. Intell. 2002, 139, 137–174.

- Qin, Z.; Zhang, S.; Liu, L.; Wang, T. Cost-sensitive semi-supervised classification using CS-EM. In Proceedings of the 8th IEEE International Conference on Computer and Information Technology, Sydney, NSW, Australia, 8–11 July 2008.

- Liu, A.; Jun, G.; Ghosh, J. Spatially cost-sensitive active learning. In Proceedings of the 2009 SIAM International Conference on Data Mining, Sparks, NV, USA, 30 April–2 May 2009.

- Li, Y.; Kwok, J.; Zhou, Z. Cost-sensitive semi-supervised support vector machine. In Proceedings of the AAAI Conference on Artificial Intelligence, Palo Alto, CA, USA, 11–13 October 2010.

- Belkin, M.; Niyogi, P.; Sindhwani, V. Manifold regularization: A geometric framework for learning from labeled and unlabeled examples. J. Mach. Learn. Res. 2006, 7, 2399–2434.

- Xu, R.; Lu, Q.; Li, W.; Li, K. Web mining for improving risk assessment in port state control inspection. In Proceedings of the 2007 International Conference on Natural Language Processing and Knowledge Engineering, Beijing, China, 30 August–1 September 2007.

- Xu, R.; Lu, Q.; Li, K.; Li, W. A risk assessment system for improving port state control inspection. In Proceedings of the 2007 International Conference on Machine Learning and Cybernetics, Hong Kong, China, 19–22 August 2007.

- Gao, Z.; Lu, G.; Liu, M.; Cui, M. A novel risk assessment system for port state control inspection. In Proceedings of the 2008 IEEE International Conference on Intelligence and Security Informatics, Taipei, Taiwan, 17–20 June 2008.

- Wang, S.; Yan, R.; Qu, X. Development of a non-parametric classifier: Effective identification, algorithm, and applications in port state control for maritime transportation. Transp. Res. Part B Methodol. 2019, 128, 129–157.

- Chung, W.; Kao, S.; Chang, C.; Yuan, C. Association rule learning to improve deficiency inspection in port state control. Marit. Policy Manag. 2020, 47, 332–351.

- Yan, R.; Zhuge, D.; Wang, S. Development of two highly-efficient and innovative inspection schemes for PSC inspection. Asia-Pac. J. Oper. Res. 2021, 38, 2040013.

- Yan, R.; Wang, S.; Fagerholt, K. A semi-“smart predict then optimize” (semi-SPO) method for efficient ship inspection. Transp. Res. Part B Methodol. 2020, 142, 100–125.

- Yan, R.; Wang, S.; Cao, J.; Sun, D. Shipping domain knowledge informed prediction and optimization in port state control. Transp. Res. Part B Methodol. 2021, 149, 52–78.

- Yan, R.; Wang, S.; Peng, C. Ship selection in port state control: Status and perspectives. Marit. Policy Manag. 2022, 49, 600–615.

- Wu, S.; Chen, X.; Shi, C.; Fu, J.; Yan, Y.; Wang, S. Ship detention prediction via feature selection scheme and support vector machine (SVM). Marit. Policy Manag. 2022, 49, 140–153.

- Cariou, P.; Wolff, F. Identifying substandard vessels through port state control inspections: A new methodology for concentrated inspection campaigns. Mar. Policy 2015, 60, 27–39.

- Chen, J.; Zhang, S.; Xu, L.; Wan, Z.; Fei, Y.; Zheng, T. Identification of key factors of ship detention under port state control. Mar. Policy 2019, 102, 21–27.

- Cariou, P.; Mejia, M.; Wolff, F. Evidence on target factors used for port state control inspections. Mar. Policy 2009, 33, 847–859.

- Yan, R.; Wang, S. Ship detention prediction using anomaly detection in port state control: Model and explanation. Electron. Res. Arch. 2022, 30, 3679–3691.

- Tsou, M. Big data analysis of port state control ship detention database. J. Mar. Eng. Technol. 2019, 18, 113–121.

- Şanlıer, Ş. Analysis of port state control inspection data: The Black Sea Region. Mar. Policy 2020, 112, 103757.

- Hänninen, M.; Kujala, P. Bayesian network modeling of port state control inspection findings and ship accident involvement. Expert Syst. Appl. 2014, 41, 1632–1646.

- Yang, Z.; Yang, Z.; Yin, J. Realising advanced risk-based port state control inspection using data-driven Bayesian networks. Transp. Res. Part A Policy Pract. 2018, 110, 38–56.

- Yang, Z.; Yang, Z.; Yin, J.; Qu, Z. A risk-based game model for rational inspections in port state control. Transp. Res. Part E Logist. Transp. Rev. 2018, 118, 477–495.

- Vapnik, V. The Nature of Statistical Learning Theory; Springer Science & Business Media: Berlin/Heidelberg, Germany, 1999.

- Shalev-Shwartz, S.; Ben-David, S. Understanding Machine Learning: From Theory to Algorithms; Cambridge University Press: Cambridge, UK, 2014.

- Melas-Kyriazi, L. The mathematical foundations of manifold learning. arXiv 2020, arXiv:2011.01307.

- Sindhwani, V.; Niyogi, P.; Belkin, M.; Keerthi, S. Linear manifold regularization for large scale semi-supervised learning. In Proceedings of the 22nd ICML Workshop on Learning with Partially Classified Training Data, Bonn, Germany, 7–11 August 2005.

- Spielman, D. Spectral graph theory and its applications. In Proceedings of the 48th Annual IEEE Symposium on Foundations of Computer Science (FOCS’07), Providence, RI, USA, 21–23 October 2007.

- Merdan, S.; Barnett, C.; Denton, B.; Montie, J.; Miller, D. OR practice–Data analytics for optimal detection of metastatic prostate cancer. Oper. Res. 2021, 69, 774–794.

- Hsu, C.; Chang, C.; Lin, C. A Practical Guide to Support Vector Classification. Available online: https://www.csie.ntu.edu.tw/~cjlin/papers/guide/guide.pdf (accessed on 10 October 2022).

- Yan, R.; Wang, S. Integrating prediction with optimization: Models and applications in transportation management. Multimodal Transp. 2022, 1, 100018.

- Wang, S.; Yan, R. “Predict, then optimize” with quantile regression: A global method from predictive to prescriptive analytics and applications to multimodal transportation. Multimodal Transp. 2022, 1, 100035.

- Yi, W.; Zhen, L.; Jin, Y. Stackelberg game analysis of government subsidy on sustainable off-site construction and low-carbon logistics. Clean. Logist. Supply Chain. 2021, 2, 100013.

- Yi, W.; Wu, S.; Zhen, L.; Chawynski, G. Bi-level programming subsidy design for promoting sustainable prefabricated product logistics. Clean. Logist. Supply Chain. 2021, 1, 100005.

- Yan, R.; Wang, S.; Zhen, L.; Laporte, G. Emerging approaches applied to maritime transport research: Past and future. Commun. Transp. Res. 2021, 1, 100011.

- Wang, W.; Wu, Y. Is uncertainty always bad for the performance of transportation systems? Commun. Transp. Res. 2021, 1, 100021.

| Literature | Prediction Target | Prediction Method | Data Imbalance | Unlabeled Data |
|---|---|---|---|---|
| Xu et al. [25,26] | Ship deficiency number | SVM | – | No |
| Gao et al. [27] | Ship deficiency number | kNN and SVM | – | No |
| Wang et al. [28] | Ship deficiency number | TAN | – | No |
| Chung et al. [29] and Yan et al. [30] | The type and sequence of inspected deficiency codes | Apriori | – | No |
| Yan et al. [31] | Ship deficiency number | Random forest | – | No |
| Yan et al. [32] | Ship deficiency number | XGBoost | – | No |
| Cariou and Wolff [35] | Ship detention outcome | Quantile regression | No | No |
| Yang et al. [42] | Ship detention outcome | BN | No | No |
| Tsou [39] | Ship detention outcome | Association rule mining techniques | No | No |
| Wu et al. [34] | Ship detention outcome | SVM | No | No |
| Yan et al. [3] | Ship detention outcome | BRF | Yes | No |
| Our work | Ship detention outcome | Cost-sensitive Laplacian logistic regression | Yes | Yes |

| | Model | k | | | | TN | FP | FN | TP | Accuracy | Specificity | Recall | Cost |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 0.5 | LR | – | – | 2 | – | 555 | 325 | 9 | 19 | 0.632 | 0.631 | 0.679 | 167.00 |
| 0.5 | LapLR | 3 | 32 | | | 591 | 289 | 6 | 22 | 0.675 | 0.672 | 0.786 | 147.50 |
| 0.7 | CosLR | – | – | 1/2 | – | 555 | 325 | 9 | 19 | 0.632 | 0.631 | 0.679 | 103.80 |
| 0.7 | CosLapLR | 7 | 8 | | | 591 | 289 | 6 | 22 | 0.675 | 0.672 | 0.786 | 90.90 |
| 0.9 | CosLR | – | – | | – | 554 | 326 | 8 | 20 | 0.632 | 0.630 | 0.714 | 39.80 |
| 0.9 | CosLapLR | 3 | 32 | | | 570 | 310 | 6 | 22 | 0.652 | 0.648 | 0.786 | 36.40 |
| 0.95 | CosLR | – | – | | – | 583 | 297 | 5 | 23 | 0.667 | 0.663 | 0.821 | 19.60 |
| 0.95 | CosLapLR | 7 | 2 | | | 582 | 298 | 5 | 23 | 0.666 | 0.661 | 0.821 | 19.65 |
| 0.97 | CosLR | – | – | | – | 576 | 304 | 6 | 22 | 0.659 | 0.655 | 0.786 | 14.94 |
| 0.97 | CosLapLR | 7 | 32 | | | 581 | 299 | 5 | 23 | 0.665 | 0.660 | 0.821 | 13.82 |
| 0.99 | CosLR | – | – | | – | 575 | 305 | 6 | 22 | 0.657 | 0.653 | 0.786 | 8.99 |
| 0.99 | CosLapLR | 3 | 32 | | | 554 | 326 | 5 | 23 | 0.635 | 0.630 | 0.821 | 8.21 |
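In every row of the results table the four integer counts sum to 908, and the last four values can be recomputed from them. The sketch below assumes the counts are, in order, true negatives, false positives, false negatives, and true positives, and that the first-column value (called `alpha` here, a hypothetical name) weights missed detentions in the cost. This reading is inferred from the arithmetic of the table rather than from a surviving definition, so treat it as an assumption.

```python
def detention_metrics(tn, fp, fn, tp, alpha):
    """Recompute the table's ratio and cost columns from confusion counts (assumed reading)."""
    total = tn + fp + fn + tp
    return {
        "accuracy": (tn + tp) / total,
        "specificity": tn / (tn + fp),          # share of non-detained ships classified correctly
        "recall": tp / (tp + fn),               # share of detained ships classified correctly
        "cost": (1 - alpha) * fp + alpha * fn,  # assumed weighted misclassification cost
    }

# First row of the table: LR with a first-column value of 0.5
m = detention_metrics(tn=555, fp=325, fn=9, tp=19, alpha=0.5)
print({name: round(value, 3) for name, value in m.items()})
# {'accuracy': 0.632, 'specificity': 0.631, 'recall': 0.679, 'cost': 167.0}
```

Under the same reading, the second row at 0.7 (591, 289, 6, 22) gives a cost of 0.3 × 289 + 0.7 × 6 = 90.90, which matches the tabulated value.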


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Tian, X.; Wang, S. Cost-Sensitive Laplacian Logistic Regression for Ship Detention Prediction. Mathematics 2023, 11, 119. https://doi.org/10.3390/math11010119
