1. Introduction

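Let $X_{ij}$, $i = 1, \ldots, n$, $j = 1, 2$, be standardized normal random variables such that the pairs $(X_{i1}, X_{i2})$ form an independent sequence, each pair with correlation $\rho$, $|\rho| < 1$, and let $U_j = \sum_{i=1}^{n} X_{ij}^2$, $j = 1, 2$. Then $U_j$ is a random variable with $\chi^2_n$ marginal distribution. According to [1], $\mathbf{U} = (U_1, U_2)^\top$ has a correlated bivariate chi-square distribution with $n$ degrees of freedom and probability density function (pdf) given by

$$f_{\mathbf{U}}(u_1, u_2) = \frac{(u_1 u_2)^{n/2 - 1}}{2^{n}\,\Gamma(n/2)^{2}\,(1 - \rho^2)^{n/2}} \exp\left\{ -\frac{u_1 + u_2}{2(1 - \rho^2)} \right\} {}_0F_1\left( ; \frac{n}{2}; \frac{\rho^2 u_1 u_2}{4(1 - \rho^2)^2} \right), \quad u_1, u_2 > 0, \qquad (1)$$

where ${}_0F_1$ denotes the generalized hypergeometric function, defined by

$${}_pF_q(a_1, \ldots, a_p; b_1, \ldots, b_q; x) = \sum_{k=0}^{\infty} \frac{(a_1)_k \cdots (a_p)_k}{(b_1)_k \cdots (b_q)_k} \frac{x^k}{k!}, \qquad (2)$$

for nonnegative integers $p$ and $q$, with $(a)_k = \Gamma(a + k)/\Gamma(a) = a(a+1)\cdots(a+k-1)$, $k \in \mathbb{N}$, $(a)_0 = 1$, being the Pochhammer symbol [2]. Note that in this case the correlation between $U_1$ and $U_2$ is $\rho^2$.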
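Equations (1) and (2) can be evaluated directly by truncating the hypergeometric series. The following is a minimal Python sketch using only the standard library; it is not the R/`hypergeo` code used for the paper's numerical examples, and the function names (`pochhammer`, `hyp_pfq`, `chisq_pdf`, `bivariate_chisq_pdf`) and the stopping tolerance are illustrative assumptions. For $\rho = 0$ the ${}_0F_1$ factor equals 1 and the pdf factorizes into two $\chi^2_n$ densities.

```python
import math


def pochhammer(a: float, k: int) -> float:
    """Pochhammer symbol (a)_k = Gamma(a + k) / Gamma(a) = a (a + 1) ... (a + k - 1)."""
    result = 1.0
    for i in range(k):
        result *= a + i
    return result


def hyp_pfq(a_list, b_list, x, tol=1e-16, max_terms=10_000):
    """Truncated series for pFq(a_1, ..., a_p; b_1, ..., b_q; x), Equation (2).

    Consecutive terms satisfy t_{k+1} / t_k = prod(a_i + k) / prod(b_j + k) * x / (k + 1).
    Assumes convergence (p <= q, or p = q + 1 with |x| < 1) and no b_j in {0, -1, -2, ...}.
    """
    term, total = 1.0, 1.0
    for k in range(max_terms):
        term *= math.prod(a + k for a in a_list) / math.prod(b + k for b in b_list)
        term *= x / (k + 1)
        total += term
        if abs(term) <= tol * abs(total):
            break
    return total


def chisq_pdf(u, n):
    """Univariate chi-square density with n degrees of freedom."""
    return math.exp((n / 2 - 1) * math.log(u) - u / 2 - (n / 2) * math.log(2.0) - math.lgamma(n / 2))


def bivariate_chisq_pdf(u1, u2, n, rho):
    """Correlated bivariate chi-square pdf of Equation (1), for u1, u2 > 0 and |rho| < 1."""
    s = 1.0 - rho**2
    log_norm = ((n / 2 - 1) * math.log(u1 * u2) - n * math.log(2.0)
                - 2 * math.lgamma(n / 2) - (n / 2) * math.log(s))
    z = rho**2 * u1 * u2 / (4 * s**2)
    return math.exp(log_norm - (u1 + u2) / (2 * s)) * hyp_pfq([], [n / 2], z)


print(bivariate_chisq_pdf(1.0, 1.5, 3, 0.6))
print(bivariate_chisq_pdf(1.3, 2.1, 4, 0.0), chisq_pdf(1.3, 4) * chisq_pdf(2.1, 4))  # equal for rho = 0
```

Building each term from the previous one through the ratio of Pochhammer symbols avoids evaluating large factorials or gamma functions explicitly.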

The transformation

,

defines a random variable

distributed with density

The random vector

has a Kibble-type correlated bivariate gamma distribution [3], with pdf given by

where

,

and

. In Equations (1) and (4), the case $\rho = 0$ yields the product of two independent chi-square densities and of two independent gamma densities, respectively (i.e., the same result as for the bivariate normal distribution under independence).
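The construction of $\mathbf{U}$, its $\chi^2_n$ marginals, the correlation $\rho^2$ noted above, and the independence case $\rho = 0$ can be checked quickly by simulation. A minimal sketch, assuming an integer $n$; `simulate_u`, `pearson`, the sample sizes and the seeds are illustrative choices.

```python
import math
import random


def simulate_u(n, rho, size, seed=1):
    """Draw `size` realizations of U = (U1, U2), with U_j = sum_{i=1}^n X_ij^2 and
    (X_i1, X_i2) standard bivariate normal pairs with correlation rho, independent over i."""
    rng = random.Random(seed)
    c = math.sqrt(1.0 - rho**2)
    u1, u2 = [], []
    for _ in range(size):
        s1 = s2 = 0.0
        for _ in range(n):
            z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
            x2 = rho * z1 + c * z2  # Var(x2) = 1 and corr(z1, x2) = rho
            s1 += z1 * z1
            s2 += x2 * x2
        u1.append(s1)
        u2.append(s2)
    return u1, u2


def pearson(xs, ys):
    """Sample Pearson correlation coefficient."""
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


u1, u2 = simulate_u(n=4, rho=0.7, size=50_000)
print(sum(u1) / len(u1))  # should be close to the chi-square mean n = 4
print(pearson(u1, u2))    # should be close to rho**2 = 0.49
```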

The univariate and bivariate gamma distributions are basic distributions that have been used to model data in many applications [4,5]. More recently, several bivariate distributions have been applied to streamflow data [6], drought data modeling [7], rainfall data modeling [8], spatio-temporal modeling of wind speed data [9], flood volume-peak data modeling [10], wireless communication models [11], and transmit antenna system modeling [12].

In this paper, we build a generalization of the bivariate gamma distribution using a Kibble-type bivariate gamma distribution. The stochastic representation is obtained as the sum of a Kibble-type bivariate random vector and a bivariate random vector built from two independent gamma random variables. The resulting bivariate density involves an infinite series of products of two confluent hypergeometric functions. In particular, we derive the pdf and cumulative distribution function (cdf), the moment generating and characteristic functions, the hazard, Bonferroni and Lorenz functions, and an approximation for the differential entropy and the mutual information index. Numerical examples illustrate the behavior of the exact and approximate expressions. All numerical examples were computed using the hypergeo package of the R software [13].

The paper is organized as follows. Section 2 presents the generalization of the bivariate gamma distribution, with its pdf (with simulations), cdf, moment generating and characteristic functions, cross-product moment, covariance and correlation (with simulations), and some special expected values; the hazard, Bonferroni and Lorenz functions are also computed. Section 3 presents the approximation for the differential entropy and the mutual information index (with simulations) of the generalized bivariate gamma distribution, together with some numerical results. The paper ends with a discussion in Section 4. Proofs are given in Appendix A.

2. Bivariate Gamma Generalization

Let

where R is a random variable,

,

and the distribution of W is defined by Equation (3); thus

is a random variable with marginal gamma distribution and arbitrary shape,

, and scale,

, based on

,

and

parameters. This type of construction has been used in [5,6,14,15,16,17,18,19] to build a bivariate gamma distribution. Specifically, these authors considered the case

in (3). Properties of the bivariate gamma distribution can be found in [15,18,20,21].

In line with the stochastic representation (5), we consider the bivariate distribution of

as a generalization of the Cheriyan distribution [15], where

is given in (3) and (4) with

, and

,

,

,

,

,

. Thus,

,

.
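The representation (5), a Kibble-type vector plus a vector of independent gamma variables, suggests a direct sampler. Because the exact parametrization of (3) to (5) is not reproduced here, the minimal sketch below rests on stated assumptions: $W_j = \beta U_j / 2$, so that $W_j \sim \Gamma(n/2, \text{scale } \beta)$ with $\mathrm{corr}(W_1, W_2) = \rho^2$, and $V_j \sim \Gamma(a_j, \text{scale } \beta)$ independent, where $a_1, a_2$ are hypothetical shape names. By gamma additivity, $Y_j = W_j + V_j \sim \Gamma(n/2 + a_j, \beta)$. With $\rho = 1$ the two $W$ components coincide, giving a common gamma component as in Cheriyan's construction.

```python
import math
import random


def sample_y(n, rho, a1, a2, beta, size, seed=2):
    """Hypothetical sampler for Y = W + V, assuming W_j = beta * U_j / 2 (Kibble-type,
    shape n/2, scale beta) and V_j ~ Gamma(a_j, scale beta) independent of W and of each other."""
    rng = random.Random(seed)
    c = math.sqrt(1.0 - rho**2)
    draws = []
    for _ in range(size):
        u1 = u2 = 0.0
        for _ in range(n):
            z1, z2 = rng.gauss(0.0, 1.0), rng.gauss(0.0, 1.0)
            u1 += z1 * z1
            u2 += (rho * z1 + c * z2) ** 2  # correlated partner of z1
        v1 = rng.gammavariate(a1, beta)  # gammavariate(shape, scale)
        v2 = rng.gammavariate(a2, beta)
        draws.append((beta * u1 / 2 + v1, beta * u2 / 2 + v2))
    return draws


ys = sample_y(n=3, rho=0.6, a1=1.5, a2=0.5, beta=2.0, size=40_000)
y1 = [y for y, _ in ys]
y2 = [y for _, y in ys]
print(sum(y1) / len(y1))  # close to (n/2 + a1) * beta = 6 under these assumptions
```

Under the same assumptions, $\mathrm{Cov}(Y_1, Y_2) = \mathrm{Cov}(W_1, W_2) = \rho^2 (n/2) \beta^2$, so the sample correlation can also be compared with $\rho^2 (n/2)/\sqrt{(n/2 + a_1)(n/2 + a_2)}$.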

In the following theorem, we provide a new bivariate distribution with gamma marginal distributions, obtained using a Kibble-type bivariate gamma distribution [3].

Theorem 1. Let  where  ,  ,  , is a finite sequence of independent normal random variables with zero mean, unit variance, and correlation ρ. Let  , with  and  ,  . The pdf of  is given by  where  is the confluent hypergeometric function defined in (2) for $p = q = 1$. Theorem 1 shows that the pdf involves an infinite series of products of two confluent hypergeometric functions. Note that when $\rho = 0$, the pdf in Theorem 1 becomes the product of two independent gamma densities,

,

, i.e., the same property as for the bivariate normal distribution holds. When

, then

, i.e.,

is Kibble-type gamma distributed (see Figure 1).

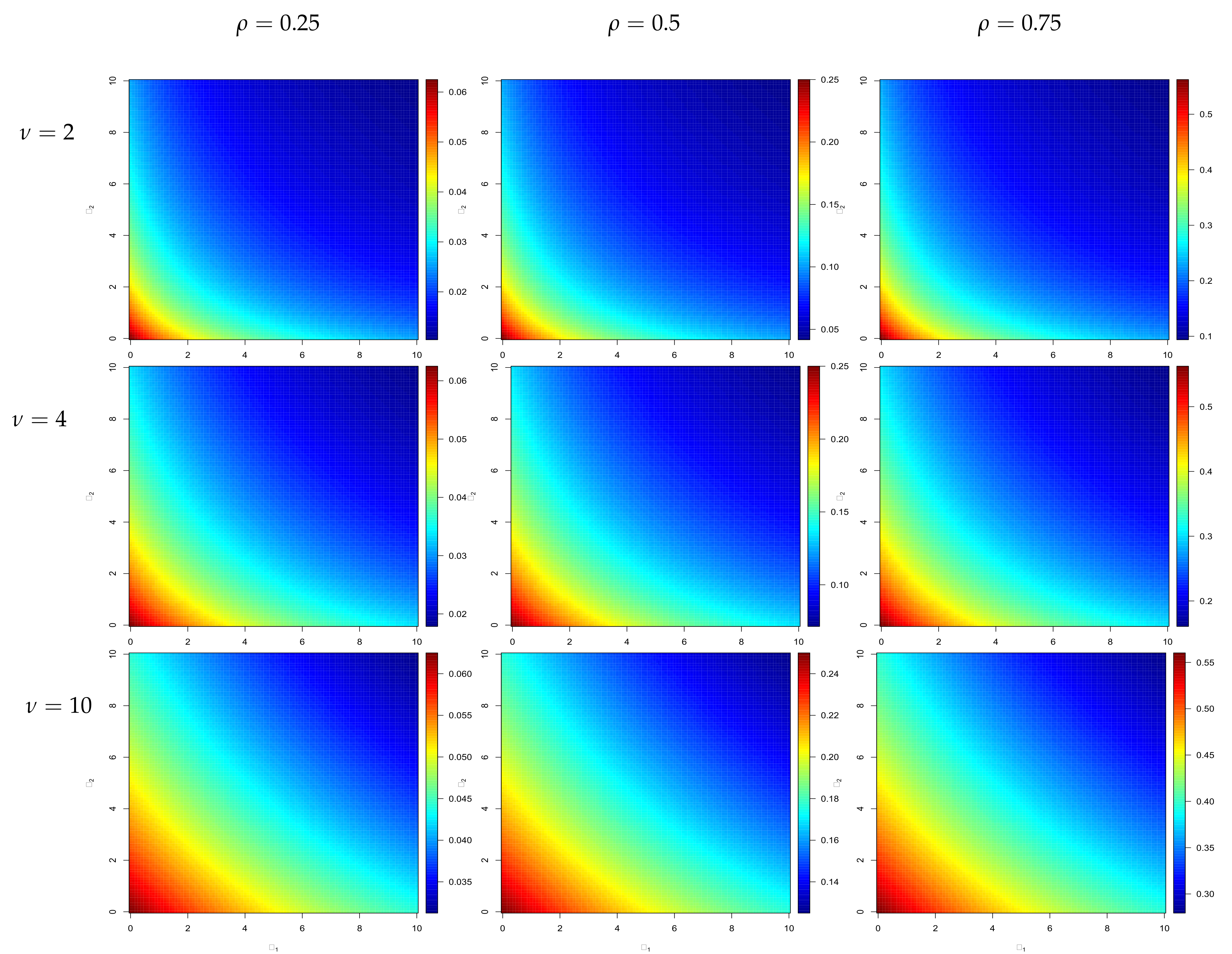

Figure 1 shows the pdf of Equation (6) for several values of the parameters of

. When

increases, the pdf shifts toward larger values of

and

. When

, the pdf is concentrated near the origin (

), is positively skewed, and decays exponentially. When

, the pdf is less skewed, more symmetric, and more dispersed. When

, the pdf is right-skewed, but less so than in the case

. When

increases (decreases), its variance increases (decreases). When

increases, the pdf shows heavier tails, and the same holds when the parameters

,

increase (as for the usual gamma distribution).
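The shape of the joint pdf can also be explored numerically. The sketch below does not transcribe Equation (6); it assumes that $W$ is Kibble-type with shape $\alpha_0 = n/2$, scale $\beta$ and correlation parameter $r = \rho^2$, and that $V_j \sim \Gamma(a_j, \beta)$ are independent ($\alpha_0$, $a_j$ and all function names are hypothetical). It uses the well-known negative-binomial mixture form of the Kibble density: given $K = k$, with $P(K = k) = (\alpha_0)_k r^k (1 - r)^{\alpha_0} / k!$, the components $W_1, W_2$ are independent $\Gamma(\alpha_0 + k, \beta(1 - r))$. Convolving each component with an independent gamma gives a ${}_1F_1$ function, so the result is an infinite series of products of two confluent hypergeometric functions, in line with Theorem 1, although the exact form of (6) may differ.

```python
import math


def _hyp1f1_nonneg(a, b, x, tol=1e-16, max_terms=100_000):
    """Kummer function 1F1(a; b; x) for a, b > 0 and x >= 0 (all series terms >= 0)."""
    term, total = 1.0, 1.0
    for k in range(max_terms):
        term *= (a + k) / (b + k) * x / (k + 1)
        total += term
        if term <= tol * total:
            break
    return total


def _nb_weights(alpha0, r, tol=1e-16, max_k=100_000):
    """Yield (k, P(K = k)) with P(K = k) = (alpha0)_k / k! * r**k * (1 - r)**alpha0."""
    weight, mean = (1.0 - r) ** alpha0, alpha0 * r / (1.0 - r)
    for k in range(max_k):
        yield k, weight
        if k > mean and weight < tol:  # weights decrease geometrically past the mean
            return
        weight *= (alpha0 + k) / (k + 1) * r


def _sum_pdf(y, s, a, beta, r):
    """Density at y of Gamma(s, scale c) + Gamma(a, scale beta), independent, c = beta * (1 - r).
    Kummer's transformation 1F1(s; s+a; -d*y) = exp(-d*y) 1F1(a; s+a; d*y), d = 1/c - 1/beta,
    keeps every series term positive."""
    c = beta * (1.0 - r)
    d = r / c
    log_f = ((s + a - 1) * math.log(y) - y / c - math.lgamma(s + a)
             - s * math.log(c) - a * math.log(beta))
    return math.exp(log_f) * _hyp1f1_nonneg(a, s + a, d * y)


def joint_pdf(y1, y2, alpha0, a1, a2, beta, r):
    """Hypothetical joint pdf of Y = W + V under the assumptions above, 0 <= r < 1."""
    return sum(w * _sum_pdf(y1, alpha0 + k, a1, beta, r) * _sum_pdf(y2, alpha0 + k, a2, beta, r)
               for k, w in _nb_weights(alpha0, r))


def marginal_pdf(y, alpha0, a, beta, r):
    """Marginal density from the same mixture; should equal Gamma(alpha0 + a, scale beta)."""
    return sum(w * _sum_pdf(y, alpha0 + k, a, beta, r) for k, w in _nb_weights(alpha0, r))


def gamma_pdf(y, shape, scale):
    """Univariate gamma density with the given shape and scale."""
    return math.exp((shape - 1) * math.log(y) - y / scale
                    - math.lgamma(shape) - shape * math.log(scale))


print(joint_pdf(2.0, 3.0, 1.5, 0.7, 2.0, 1.3, 0.5))
print(marginal_pdf(2.5, 1.5, 0.7, 1.3, 0.5), gamma_pdf(2.5, 2.2, 1.3))  # should agree
```

The marginal check against $\Gamma(\alpha_0 + a_j, \beta)$ is a convenient sanity test of any truncation; larger values of $r$ require more mixture terms.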

Theorem 2. The joint cdf of  in Equation (6) can be expressed as  where  denotes the incomplete Gaussian hypergeometric function, defined as  with incomplete Pochhammer symbols given by  for  , [2,22]. Theorem 2 shows that the joint cdf involves an infinite series of products of two incomplete Gaussian hypergeometric functions.
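Theorem 2 relies on incomplete Gaussian hypergeometric functions. A minimal sketch, assuming the common definition based on the lower incomplete Pochhammer symbol $(a; x)_n = \gamma(a + n, x)/\Gamma(a)$, where $\gamma$ is the lower incomplete gamma function; the convention used in Theorem 2 may differ, and the function names are hypothetical. As $x \to \infty$ the function reduces to the ordinary ${}_2F_1$, which gives an easy check.

```python
import math


def reg_lower_gamma(s, x, tol=1e-16, max_terms=100_000):
    """Regularized lower incomplete gamma P(s, x) = gamma(s, x) / Gamma(s), using
    P(s, x) = x^s e^(-x) / Gamma(s + 1) * sum_{k>=0} x^k / ((s + 1) ... (s + k))."""
    if x <= 0.0:
        return 0.0
    term, total = 1.0, 1.0
    for k in range(1, max_terms):
        term *= x / (s + k)
        total += term
        if term <= tol * total:
            break
    return math.exp(s * math.log(x) - x - math.lgamma(s + 1)) * total


def incomplete_2f1(a, x, b, c, z, tol=1e-16, max_terms=10_000):
    """Assumed definition: sum_n (a; x)_n (b)_n / (c)_n * z^n / n!, with the lower incomplete
    Pochhammer symbol (a; x)_n = gamma(a + n, x) / Gamma(a) = P(a + n, x) * (a)_n.
    Requires |z| < 1; as x -> infinity it reduces to the Gaussian 2F1(a, b; c; z)."""
    if x <= 0.0:
        return 0.0
    coef, total = 1.0, 0.0  # coef = (a)_n (b)_n / ((c)_n n!) * z^n
    for n in range(max_terms):
        total += reg_lower_gamma(a + n, x) * coef
        coef *= (a + n) * (b + n) / ((c + n) * (n + 1)) * z
        if abs(coef) <= tol * abs(total):  # next term is at most |coef| because P <= 1
            break
    return total


print(incomplete_2f1(1.0, 2.0, 1.0, 2.0, 0.5))  # below 2F1(1, 1; 2; 0.5) = 2 ln 2
```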

2.1. Moment Generating Function

In this section, we analyze the moment generating function of  , the cross-product moment between  and  , and some particular expected values involving these variables.

Proposition 1. The joint moment generating function (mgf) and characteristic function of  given in Equation (6) are  and  , respectively. Assuming  , we obtain  and  , which are the mgfs of gamma random variables. The proof for the characteristic function of  is analogous to the proof of Proposition 1 for  .
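The structure of the mgf can be sketched under an assumed parametrization: $W$ Kibble-type with shape $\alpha_0$, scale $\beta$ and correlation parameter $r = \rho^2$, and $V_j \sim \Gamma(a_j, \beta)$ independent (hypothetical names). Independence gives $M_{\mathbf Y}(t_1, t_2) = M_{\mathbf W}(t_1, t_2)\,M_{V_1}(t_1)\,M_{V_2}(t_2)$, and the Kibble-type factor $[(1 - \beta t_1)(1 - \beta t_2) - r \beta^2 t_1 t_2]^{-\alpha_0}$ follows from the mgf of a bivariate normal quadratic form. This is not a transcription of Proposition 1; it only illustrates that $r = 0$ or $t_2 = 0$ recovers gamma mgfs and that the mixed second derivative of $\log M$ at the origin gives $\mathrm{Cov}(Y_1, Y_2)$.

```python
import math


def mgf_y(t1, t2, alpha0, a1, a2, beta, r):
    """Hypothetical joint mgf of Y_j = W_j + V_j: Kibble-type factor for W (shape alpha0,
    scale beta, correlation parameter r = rho**2) times independent gamma mgfs for V_j."""
    if beta * t1 >= 1 or beta * t2 >= 1:
        raise ValueError("mgf is infinite for beta * t_j >= 1")
    kibble = (1 - beta * t1) * (1 - beta * t2) - r * beta**2 * t1 * t2
    if kibble <= 0:
        raise ValueError("(t1, t2) outside the region handled by this sketch")
    return kibble ** (-alpha0) * (1 - beta * t1) ** (-a1) * (1 - beta * t2) ** (-a2)


def mixed_cumulant(alpha0, a1, a2, beta, r, h=1e-4):
    """Central-difference estimate of d^2 log M / dt1 dt2 at the origin, i.e. Cov(Y1, Y2)."""
    lm = lambda u, v: math.log(mgf_y(u, v, alpha0, a1, a2, beta, r))
    return (lm(h, h) - lm(h, -h) - lm(-h, h) + lm(-h, -h)) / (4 * h * h)


print(mixed_cumulant(1.5, 0.7, 2.0, 1.3, 0.5))  # about r * alpha0 * beta**2 = 1.2675
```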

Proposition 2. The cross-product moment of  in Equation (6) can be expressed as  where  is the Gaussian hypergeometric function defined in (2) for $p = 2$ and $q = 1$. Proposition 2 shows that the cross-product moment involves an infinite series of products of two Gaussian hypergeometric functions. A direct consequence of Proposition 2 is Corollary 1 below, which gives the expected value and variance of the marginal gamma random variable  , and the covariance and correlation between the two marginal gamma random variables  and  .

Corollary 1. If  has pdf (6), then, according to Proposition 2, we have

- (a)

, .

- (b)

, .

- (c)

- (d)

The proofs of parts (a) and (b) are trivial. For parts (c) and (d), the Euler formula (9.131.1.11) of [23] is used,

, and Proposition 2 with

.

Figure 2 shows the correlation

of Corollary 1d for several parameter values of the pdf of

(6). For all cases, when

and

increase, the correlation

decreases. When

increases, the correlation

slowly decreases from small to large values of

and

. When the parameter

(the correlation of the underlying normal variables) increases, the correlation

increases, as does its maximum value (from 0.06 to 0.55). Corollary 1d also shows that the correlation

does not depend on

.
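Under an assumed parametrization ($W$ Kibble-type with shape $\alpha_0$, scale $\beta$ and $r = \rho^2$; $V_j \sim \Gamma(a_j, \beta)$ independent; all names hypothetical), Corollary 1-type quantities have simple closed forms, because only the shared Kibble component contributes to the covariance. The sketch below is not claimed to coincide with the exact expressions of Corollary 1d, but it reproduces qualitatively similar trends to those described for Figure 2: the correlation does not depend on the scale $\beta$, decreases as the gamma shapes increase, and increases with $\rho$.

```python
import math


def moments_y(alpha0, a1, a2, beta, r):
    """Hypothetical mean, variance, covariance and correlation for Y_j = W_j + V_j, assuming
    W is Kibble-type (shape alpha0, scale beta, Cov(W1, W2) = r * alpha0 * beta**2) and
    V_j ~ Gamma(a_j, scale beta) independent of W and of each other."""
    mean = ((alpha0 + a1) * beta, (alpha0 + a2) * beta)
    var = ((alpha0 + a1) * beta**2, (alpha0 + a2) * beta**2)
    cov = r * alpha0 * beta**2  # only the shared Kibble component contributes
    corr = cov / math.sqrt(var[0] * var[1])  # = r * alpha0 / sqrt((alpha0 + a1)(alpha0 + a2))
    return mean, var, cov, corr


for a in (0.5, 1.0, 2.0, 4.0):
    print(a, round(moments_y(1.5, a, a, 1.0, 0.81)[3], 4))  # correlation falls as a grows
```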

Proposition 3 below is useful for computing the differential entropy and the mutual information index in Section 3.

Proposition 3. If  has pdf given in (6), then

- (a)

.

- (b)

,  ; here $\psi(\cdot)$ denotes the digamma function.
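Proposition 3(b) involves the digamma function $\psi$, which is not in Python's standard library. A minimal sketch computes $\psi$ through the recurrence $\psi(x) = \psi(x + 1) - 1/x$ followed by the standard asymptotic expansion, and checks the classical identity $E[\log Y] = \psi(\alpha) + \log \beta$ for $Y \sim \Gamma(\text{shape } \alpha, \text{scale } \beta)$ by Monte Carlo. Whether Proposition 3(b) has exactly this form depends on the parametrization of (6); the values of `alpha`, `beta` and the seed are illustrative.

```python
import math
import random


def digamma(x: float) -> float:
    """psi(x) for x > 0 via psi(x) = psi(x + 1) - 1/x until x >= 10, then the asymptotic series
    psi(x) ~ ln x - 1/(2x) - 1/(12x^2) + 1/(120x^4) - 1/(252x^6)."""
    result = 0.0
    while x < 10.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    return result + math.log(x) - 0.5 / x - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))


# Monte Carlo check of E[log Y] = psi(alpha) + log(beta) for Y ~ Gamma(shape alpha, scale beta).
rng = random.Random(7)
alpha, beta = 2.2, 1.3
mc = sum(math.log(rng.gammavariate(alpha, beta)) for _ in range(100_000)) / 100_000
print(mc, digamma(alpha) + math.log(beta))
```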

2.2. Hazard, Bonferroni and Lorenz Functions

The Bonferroni and Lorenz curves [24] have many practical applications, not only in economics but also in fields such as reliability, lifetime testing, insurance, and medicine. For a random vector

with cdf

, the hazard, Bonferroni and Lorenz functions are defined by

with

;

with

q a real scalar,

; and

respectively.
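The bivariate definitions above are not reproduced here. As a minimal sketch of the underlying idea only, the standard univariate Lorenz curve $L(p) = \mu^{-1}\int_0^p F^{-1}(u)\,du$ and Bonferroni curve $B(p) = L(p)/p$ can be estimated from a sample, for instance from one simulated marginal. The function names and the exponential test case, for which $L(p) = p + (1 - p)\log(1 - p)$, are illustrative assumptions.

```python
import math
import random


def empirical_lorenz_bonferroni(sample, ps):
    """Empirical versions of the standard univariate curves
    L(p) = (1/mu) * int_0^p F^{-1}(u) du and B(p) = L(p) / p, for p in (0, 1]."""
    xs = sorted(sample)
    n, total = len(xs), math.fsum(xs)
    cum = [0.0]
    for x in xs:
        cum.append(cum[-1] + x)  # cum[k] = sum of the k smallest observations
    curves = []
    for p in ps:
        lorenz = cum[int(p * n)] / total
        curves.append((lorenz, lorenz / p))
    return curves


def exponential_lorenz(p):
    """Exact Lorenz curve of an exponential (gamma with shape 1) distribution."""
    return p + (1 - p) * math.log(1 - p)


rng = random.Random(11)
data = [rng.expovariate(1.0) for _ in range(100_000)]
res = empirical_lorenz_bonferroni(data, (0.25, 0.5, 0.75))
for p, (lor, bon) in zip((0.25, 0.5, 0.75), res):
    print(p, round(lor, 4), round(exponential_lorenz(p), 4), round(bon, 4))
```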

The Bonferroni curve for pdf (6) uses

and

, obtained from Theorem 2 and Proposition 2 (by replacing

), respectively. The double integral on the left-hand side of (14) is given by the following proposition.

Proposition 4. Let  be a random vector with pdf given in (6) and q a real scalar,  ; then  where  is defined in Theorem 2. Since  and  depend on incomplete and complete Gaussian hypergeometric functions, respectively, the hazard, Bonferroni and Lorenz curves can be computed using these functions.

3. Differential Entropy and Mutual Information Index

The differential entropy of a random variable Y is a measure of the uncertainty associated with its distribution [25]. In particular, the differential entropy of

with pdf

is defined by

and measures the information contained in

as a function of its pdf parameters. Remarks 1 and 2 below are used in Proposition 5 to approximate the differential entropy of

.

Remark 1 ([26]). For a positive and fixed  , and fixed parameters  ,  , ρ and β, we have  as  .

Remark 2 (Formula 1.511 of [23]). If  and  (i.e., x lies in a neighborhood of 0,  , and there exists a constant  such that  ; see Lemma 3.2 of [27]), we get

Proposition 5. The differential entropy of a random vector  with probability density function given in (6) can be approximated as  where  and  ,  , can be computed using parts (a) and (b) of Proposition 3, respectively. Under the dependence assumption (

), the mutual information index (MII) [25,28,29] between

and

is defined by

It follows from (17) that the MII between

and

can be expressed in terms of the marginal and joint differential entropies,

[25]. According to (5), the differential entropy of each marginal gamma distribution is

Therefore, the MII between

and

can be computed using (18) and Proposition 5. Under the independence assumption

, the mutual information index between

and

is 0; otherwise, it is positive [28,29]. Moreover, the MII increases with the degree of dependence between the components of

and

. Therefore, the MII is an association measure between

and

, which can be compared with the correlation

.
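Equation (18) and the identity $\mathrm{MII} = H(Y_1) + H(Y_2) - H(Y_1, Y_2)$ can be sketched as follows, assuming the shape $\alpha$ and scale $\beta$ parametrization of the gamma distribution (the parametrization of (18) may differ). The closed-form gamma entropy $\alpha + \log\beta + \log\Gamma(\alpha) + (1 - \alpha)\psi(\alpha)$ is checked against a Monte Carlo estimate of $-E[\log f(Y)]$; the joint entropy would come from Proposition 5 and is not computed here. Function names and parameter values are illustrative.

```python
import math
import random


def digamma(x):
    """psi(x) for x > 0: recurrence up to x >= 10, then the asymptotic expansion."""
    result = 0.0
    while x < 10.0:
        result -= 1.0 / x
        x += 1.0
    inv2 = 1.0 / (x * x)
    return result + math.log(x) - 0.5 / x - inv2 * (1 / 12 - inv2 * (1 / 120 - inv2 / 252))


def gamma_entropy(alpha, beta):
    """Differential entropy (nats) of Gamma(shape alpha, scale beta):
    alpha + log(beta) + log Gamma(alpha) + (1 - alpha) * psi(alpha)."""
    return alpha + math.log(beta) + math.lgamma(alpha) + (1 - alpha) * digamma(alpha)


def mutual_information(h1, h2, h12):
    """MII from marginal and joint differential entropies: H(Y1) + H(Y2) - H(Y1, Y2)."""
    return h1 + h2 - h12


# Monte Carlo check of H = -E[log f(Y)] for a single gamma marginal.
rng = random.Random(5)
alpha, beta = 3.0, 1.3
logpdf = lambda y: (alpha - 1) * math.log(y) - y / beta - math.lgamma(alpha) - alpha * math.log(beta)
mc = -sum(logpdf(rng.gammavariate(alpha, beta)) for _ in range(100_000)) / 100_000
print(mc, gamma_entropy(alpha, beta))
```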

Figure 3 illustrates the behavior of the MII for several values of the parameters of

. The case

was omitted for the reasons mentioned above, and the case

was omitted because the results are similar to those for the case

. We observe that

for small values of

, which is due to approximation (A16) being applied when its argument lies outside

in Remark 2. However, when the argument lies inside

, we get

, and the MII increases for large values of

and any values of

, as in the analysis of

in Figure 2.
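The failure mode described above can be illustrated in a few lines, assuming that the expansion in Remark 2 is the logarithmic series $\log(1 + x) = \sum_{k \ge 1} (-1)^{k+1} x^k / k$, which converges only for $-1 < x \le 1$. Partial sums track `math.log1p` inside that interval and diverge outside it, which is consistent with the negative MII values obtained when approximation (A16) is used outside its range of validity. The function name and the number of terms are illustrative.

```python
import math


def log1p_series(x: float, terms: int) -> float:
    """Partial sum of log(1 + x) = sum_{k>=1} (-1)^(k+1) x^k / k (converges for -1 < x <= 1)."""
    return math.fsum((-1) ** (k + 1) * x**k / k for k in range(1, terms + 1))


for x in (0.1, 0.5, 0.9, 1.5, 3.0):
    approx = log1p_series(x, 20)
    print(f"x = {x:3.1f}: series = {approx: .4e}, exact = {math.log1p(x): .4f}")
```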

4. Concluding Remarks

In this paper, we presented a generalization of the bivariate gamma distribution based on a Kibble-type bivariate gamma distribution. The stochastic representation was obtained as the sum of a Kibble-type bivariate random vector and a bivariate random vector built from two independent gamma random variables. The resulting bivariate density involves an infinite series of products of two confluent hypergeometric functions. In particular, we derived the probability density and cumulative distribution functions, the moment generating and characteristic functions, the covariance, correlation and cross-product moment, the hazard, Bonferroni and Lorenz functions, and an approximation for the differential entropy and the mutual information index. Numerical examples illustrated the behavior of the exact and approximate expressions.

Previous work [30] considered the generalization presented in this paper to represent bivariate superstatistics based on Boltzmann factors. Further work derived from this study could address the multivariate (d-dimensional) case. One possible extension is to consider Equation (6) of [31] for the joint pdf of our vector

, which corresponds to a pdf based on a gamma distribution with a simple Markov chain-type correlation. When

, this pdf coincides with the Kibble distribution defined in (4). Thus,

where

,

,

are independent gamma-distributed random variables. However, the properties obtained in this study could be difficult to derive for this multivariate version, and the generalized hypergeometric functions involved would need to be handled carefully. In addition, the probability transformation method, based on the theory of transformations of random variables and the probability conservation principle, could be considered; it would allow us to evaluate the pdf of a d-dimensional invertible transformation [32].

Inferential aspects could also be considered in future work. For example: (i) numerical methods could be used to maximize the log-likelihood function; (ii) a pseudo-likelihood method, based on optimizing an objective function that depends on a bivariate pdf, could be used; and (iii) a Bayesian approach could be adopted.