Zeroing Neural Network for Pseudoinversion of an Arbitrary Time-Varying Matrix Based on Singular Value Decomposition

Abstract

1. Introduction and Preliminaries

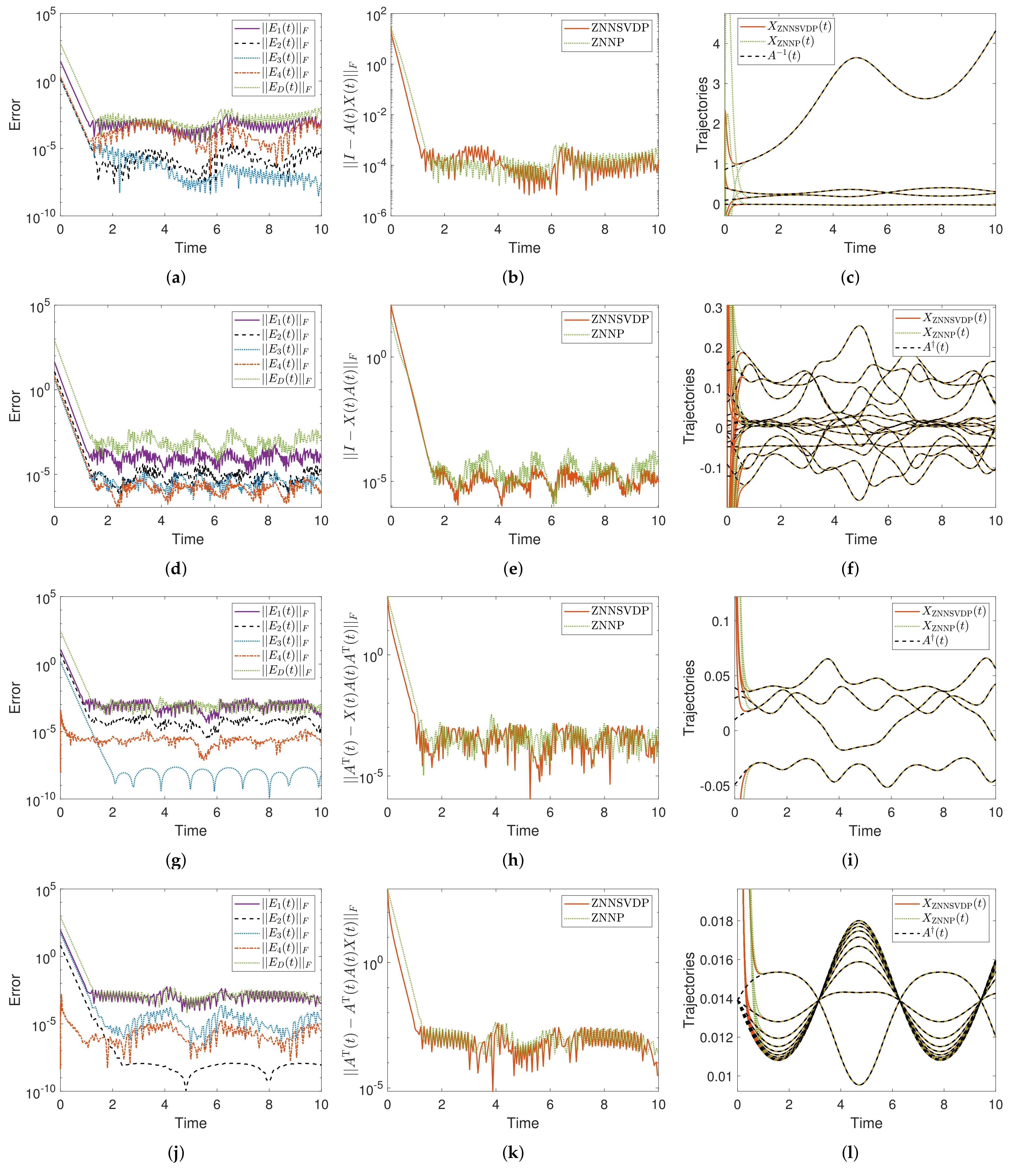

- A novel ZNN approach, which is based on SVD, is employed for solving the problem of calculating the pseudoinverse of an arbitrary TV real matrix.

- Two ZNN models for calculating the pseudoinverse of an arbitrary TV matrix are offered: one called ZNNSVDP, which is based on SVD, and the other called ZNNP, which is based on a more direct approach to the problem and is offered for comparison purposes.

- Four numerical experiments, involving the pseudoinversion of square, rectangular, singular, and nonsingular input matrices, indicate that both models are effective for solving the problem and that the ZNNSVDP model converges to the problem’s solution faster than the ZNNP model.
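For orientation, SVD-based pseudoinversion rests on a standard static identity: if $A = U \Sigma V^{\mathrm{T}}$, then $A^{\dagger} = V \Sigma^{\dagger} U^{\mathrm{T}}$, where $\Sigma^{\dagger}$ inverts only the nonzero singular values. The following is a minimal NumPy sketch of that identity at a single time instant. It is not the ZNNSVDP model itself; the function name `pinv_via_svd`, the tolerance `tol`, and the sample matrix `A_of_t` are illustrative assumptions.

```python
import numpy as np

def pinv_via_svd(A, tol=1e-12):
    """Pseudoinverse of a real matrix from its SVD (static, one time instant)."""
    U, s, Vt = np.linalg.svd(A, full_matrices=False)
    # Invert only the singular values that are numerically nonzero.
    cutoff = tol * (s.max() if s.size else 0.0)
    nonzero = s > cutoff
    s_inv = np.zeros_like(s)
    s_inv[nonzero] = 1.0 / s[nonzero]
    # A^+ = V * diag(s_inv) * U^T
    return Vt.T @ (s_inv[:, None] * U.T)

def A_of_t(t):
    """Hypothetical time-varying 3x2 input matrix, sampled at time t."""
    return np.array([[np.sin(t), np.cos(t)],
                     [-np.cos(t), np.sin(t)],
                     [1.0, t]])

X = pinv_via_svd(A_of_t(0.5))  # pseudoinverse at t = 0.5, shape (2, 3)
```

A ZNN model tracks the pseudoinverse continuously in time rather than recomputing a decomposition at every instant, so a sketch like this is mainly useful as a reference value when checking a simulated trajectory.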

2. Time-Varying Pseudoinverse Computation Based on SVD

| Algorithm 1 Permutation matrix calculation |
| --- |
| Require: The number m of rows (or columns) of a square matrix. |
| 1: procedure Permutation_Matrix(m) |
| 2: Set eye and reshape |
| 3: return reshape |
| 4: end procedure |
| Ensure: the permutation matrix. |
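Algorithm 1 names only the `eye` and `reshape` operations. A minimal NumPy sketch follows, assuming the intended permutation matrix $P$ is the one satisfying $\mathrm{vec}(A^{\mathrm{T}}) = P\,\mathrm{vec}(A)$ for an $m \times m$ matrix $A$, with $\mathrm{vec}$ stacking columns. That defining property, the intermediate names `Q` and `D`, and the column-reordering step are assumptions made for illustration.

```python
import numpy as np

def permutation_matrix(m):
    """Sketch of a permutation matrix P with vec(A.T) == P @ vec(A) for m x m A.

    Assumption: vec() stacks columns (column-major / Fortran order).
    """
    Q = np.eye(m * m)
    # Grid of column-major positions: D[i, j] is the index of A[i, j] in vec(A).
    D = np.arange(m * m).reshape(m, m, order="F")
    # Reorder the identity's columns by the positions of the transposed grid.
    idx = D.T.reshape(-1, order="F")
    return Q[:, idx]

# Usage: check the assumed defining property on a small example.
A = np.arange(9.0).reshape(3, 3)
P = permutation_matrix(3)
vec = lambda M: M.reshape(-1, order="F")
same = np.array_equal(P @ vec(A), vec(A.T))
```

Indexing the identity matrix avoids an explicit double loop and mirrors the `eye` plus `reshape` construction in the algorithm; for large `m`, storing only the index vector `idx` would be cheaper than forming the dense $m^2 \times m^2$ matrix.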

| Algorithm 2 Operational matrix calculation |
| --- |
| Require: The numbers of rows and columns, m and n respectively, of a matrix , and . |
| 1: procedure Operational_Matrix() |
| 2: if then |
| 3: Set |
| 4: else |
| 5: Set |
| 6: end if |
| 7: Set zeros |
| 8: for do |
| 9: Set mod and floor |
| 10: if then |
| 11: Set |
| 12: end if |
| 13: end for |
| 14: return G |
| 15: end procedure |
| Ensure: The operational matrix G. |
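Algorithm 2 can be sketched in NumPy under one assumption: that the operational matrix $G$ maps the vector $s$ of the $\min(m, n)$ diagonal entries of an $m \times n$ rectangular diagonal matrix $\Sigma$ to its column-stacked form, i.e. $\mathrm{vec}(\Sigma) = G s$. The names `w`, `a`, and `b` and the index formulas below are assumptions; the algorithm itself indicates only an if/else setup, a `zeros` initialization, and a loop that uses `mod` and `floor`.

```python
import numpy as np

def operational_matrix(m, n):
    """Sketch of an operational matrix G with vec(Sigma) == G @ s.

    Assumption: Sigma is m x n with s on its main diagonal, and vec()
    stacks columns (column-major order).
    """
    # Length of the diagonal (the if/else branch of the algorithm, assumed).
    w = m if m < n else n
    G = np.zeros((m * n, w))
    for i in range(m * n):
        a = i % m    # row index of the i-th entry of vec(Sigma)
        b = i // m   # column index of the i-th entry of vec(Sigma)
        if a == b:   # the entry lies on the main diagonal
            G[i, a] = 1.0
    return G

# Usage: rebuild vec(Sigma) for a 3 x 2 rectangular diagonal matrix.
s = np.array([5.0, 7.0])
Sigma = np.zeros((3, 2))
Sigma[0, 0], Sigma[1, 1] = s
G = operational_matrix(3, 2)
matches = np.array_equal(G @ s, Sigma.reshape(-1, order="F"))
```

Expressing the diagonal structure through $G$ lets a model work with the $\min(m, n)$ unknown diagonal entries only, instead of all $mn$ entries of $\Sigma$.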

3. Alternative Time-Varying Pseudoinverse Computation

4. Numerical Experiments

4.1. Experiment 1

4.2. Experiment 2

4.3. Experiment 3

4.4. Experiment 4

4.5. Analysis of Numerical Experiments—Results and Comparison

5. Conclusions

- Variants of the ZNNSVDP and ZNNP models accelerated by a nonlinear activation function can be explored, as can nonlinear ZNNSVDP and ZNNP model flows with terminal convergence.

- Another option is to use carefully chosen fuzzy parameters to define upgraded ZNN dynamics.

- The presented ZNNSVDP and ZNNP models are not noise tolerant: all types of noise have a substantial impact on the accuracy of the proposed ZNN approaches. Future research could therefore focus on adapting the ZNNSVDP and ZNNP models to the integration-enhanced, noise-handling class of ZNN dynamical systems.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Kornilova, M.; Kovalnogov, V.; Fedorov, R.; Zamaleev, M.; Katsikis, V.N.; Mourtas, S.D.; Simos, T.E. Zeroing Neural Network for Pseudoinversion of an Arbitrary Time-Varying Matrix Based on Singular Value Decomposition. Mathematics 2022, 10, 1208. https://doi.org/10.3390/math10081208
