Abstract

High-dimensional optimization problems are increasingly common in the era of big data and the Internet of Things (IoT), and they seriously challenge the performance of existing optimizers. To solve such problems effectively, this paper devises a dimension group-based comprehensive elite learning swarm optimizer (DGCELSO), which integrates the valuable evolutionary information embedded in different elite particles of the swarm to guide the update of inferior ones. Specifically, the swarm is first separated into two exclusive sets, namely the elite set (ES), containing the best individuals, and the non-elite set (NES), consisting of the remaining individuals. Then, the dimensions of each particle in NES are randomly divided into several groups of equal size. Subsequently, each dimension group of each non-elite particle is guided by two different elites randomly selected from ES. In this way, each non-elite particle in NES is comprehensively guided by multiple elite particles in ES. Therefore, high swarm diversity can be maintained, while fast convergence is also likely to be achieved. To alleviate the sensitivity of DGCELSO to its parameters, we further devise dynamic adjustment strategies that change the parameter settings during the evolution. With the above mechanisms, DGCELSO is expected to explore and exploit the solution space properly to find the optimal solutions of optimization problems. Extensive experiments conducted on two commonly used large-scale benchmark problem sets demonstrate that DGCELSO achieves highly competitive or even much better performance than several state-of-the-art large-scale optimizers.

Keywords:

large-scale optimization; particle swarm optimization; dimension group-based comprehensive elite learning; high-dimensional problems; elite learning

MSC:

37N40; 46N10; 47N10

1. Introduction

Large-scale optimization problems, also called high-dimensional problems, are ubiquitous in daily life and industrial engineering in the era of big data and the Internet of Things (IoT). Examples include water distribution optimization problems [1], cyber-physical systems design problems [2], control of pollutant spreading on social networks [3], and offshore wind farm collector system planning problems [4]. As the dimensionality of optimization problems increases, the effectiveness of most existing optimization methods degrades due to the “curse of dimensionality” [5,6].

Specifically, increasing dimensionality poses the following challenges for existing optimization algorithms: (1) The properties of optimization problems become much more complicated as the dimensionality grows. In particular, high-dimensional optimization problems are usually non-convex, non-differentiable, or even discontinuous [7,8,9], which renders traditional gradient-based optimization algorithms infeasible. (2) The solution space grows exponentially as the dimensionality increases [10,11,12,13], which greatly challenges the optimization efficiency of most existing algorithms. (3) The landscape of optimization problems becomes more complex in a high-dimensional space. On the one hand, some unimodal problems may become multimodal as the dimensionality increases. On the other hand, in some multimodal problems, not only does the number of local optimal regions increase rapidly, but the local regions also become much wider and flatter [11,12,14]. This is likely to cause premature convergence and stagnation of existing optimization techniques.

As a metaheuristic algorithm, particle swarm optimization (PSO) maintains a population of particles, each of which represents a feasible solution to the optimization problem, to search the solution space for the global optimum [15,16,17]. Owing to its merits, such as strong global search ability, independence from the mathematical properties of optimization problems, and inherent parallelism [17], PSO has developed rapidly and achieved great success in solving complex optimization problems [18,19,20,21,22] since it was proposed in 1995 [15]. As a result, PSO has been widely employed to solve real-world optimization problems in daily life and industrial engineering [1,23].

However, most existing PSOs were originally designed for low-dimensional optimization problems. When confronted with large-scale optimization problems, their effectiveness usually deteriorates due to the previously mentioned challenges [24,25,26]. To improve the effectiveness of PSO in tackling high-dimensional problems, researchers have devoted themselves to designing novel and effective evolution mechanisms for PSO. Broadly speaking, existing large-scale PSOs can be divided into two categories [27], namely cooperative coevolutionary large-scale PSOs [6,28,29] and holistic large-scale PSOs [24,26,30,31,32].

Cooperative coevolutionary PSOs (CCPSOs) [6,28,29,33] adopt the divide-and-conquer technique to decompose a large-scale optimization problem into several exclusive, smaller sub-problems. They then optimize these sub-problems individually with traditional PSOs designed for low-dimensional problems to find the optimal solution to the large-scale problem. Since the decomposed sub-problems are optimized separately, the key component of CCPSOs is the decomposition strategy [6,28]. Ideally, a good decomposition strategy should place interacting variables into the same sub-problem so that they can be optimized together. However, without prior knowledge, it is considerably difficult to decompose a large-scale problem accurately. As a result, current research on CCPSOs focuses on developing novel decomposition strategies that divide the large-scale optimization problem as accurately as possible, and many effective decomposition strategies [6,34,35,36,37,38] have been put forward.

However, CCPSOs heavily rely on the quality of the decomposition strategies. According to the no free lunch theorem, there is no decomposition strategy suitable for all large-scale problems. Therefore, some researchers attempt to design large-scale PSOs from another perspective, namely the holistic large-scale PSOs [5,26,30,39].

In contrast to CCPSOs, holistic large-scale PSOs [5,26,30,39,40] optimize all variables simultaneously, like traditional PSOs. The learning strategy used to update the velocity of particles plays the most important role in PSO [15,16,18]. Therefore, the key to improving the effectiveness of PSO in large-scale optimization is to devise effective learning strategies for particles. Such strategies should help particles explore the solution space efficiently to locate promising areas quickly, and also help them exploit those areas effectively to obtain high-quality solutions. Along this line, researchers have developed many remarkable learning strategies for PSO to solve high-dimensional problems, such as the competitive learning scheme [26], the social learning strategy [30], the two-phase learning method [1], and the level-based learning approach [25]. Recently, some researchers have even developed novel coding schemes for PSO to improve its performance in solving large-scale optimization problems [41].

Although the above-mentioned large-scale PSOs have shown excellent performance on some large-scale optimization problems, they still suffer from limitations, such as premature convergence and stagnation in local areas. These limitations appear when solving complicated high-dimensional problems, especially those with overlapping correlated variables or fully non-separable variables. Therefore, the performance of PSO in large-scale optimization still deserves improvement, and this remains an open and active research topic in the evolutionary computation community.

In nature, individuals with better fitness usually preserve more valuable evolutionary information than those with worse fitness, and this information guides the evolution of a species [42]. Moreover, different individuals usually preserve different useful genes. Inspired by these observations, in this paper, we propose a dimension group-based comprehensive elite learning swarm optimizer (DGCELSO). It integrates useful genes embedded in different elite individuals to guide the update of particles, so that the large-scale solution space is searched effectively and efficiently. The main components of the proposed DGCELSO are summarized as follows:

- (1)

- A dimension group-based comprehensive elite learning scheme is proposed to guide the update of inferior particles by learning from multiple superior ones. Existing holistic large-scale PSOs [24,25,26,30] learn from at most two exemplars. Instead, the devised learning strategy first randomly divides the dimensions of each inferior particle into several equally sized groups and then employs different superior particles to guide the update of different dimension groups. Moreover, existing elite strategies use only one elite to direct the evolution of an individual [43,44]. In contrast, the proposed scheme employs a random dimension group-based recombination technique to integrate the valuable evolutionary information in multiple elites to guide the update of each non-elite particle. In this way, the learning diversity of particles could be largely promoted, which helps particles avoid falling into local traps. In addition, the useful evolutionary information embedded in different superior particles could be integrated to direct the learning of inferior particles, which may help particles approach promising areas quickly.

- (2)

- Dynamic adjustment strategies for the control parameters of the proposed learning strategy are further designed to cooperate with it and help PSO search the large-scale solution space properly. With these dynamic strategies, the developed DGCELSO could appropriately balance intensification and diversification of the search process at both the swarm level and the particle level.

To verify the effectiveness of the proposed DGCELSO, extensive experiments are conducted to compare it with several state-of-the-art large-scale optimizers on the widely used CEC’2010 [7] and CEC’2013 [8] large-scale benchmark optimization problem sets. Meanwhile, in-depth investigations of DGCELSO are also conducted to reveal what contributes to its good performance.

The rest of this paper is organized as follows. Section 2 introduces the classical PSO and large-scale PSO variants. Then, the proposed DGCELSO is elucidated in detail in Section 3. Section 4 conducts extensive experiments to verify the effectiveness of the proposed DGCELSO. Finally, Section 5 concludes this paper.

2. Related Work

In this paper, a D-dimensional single-objective minimization problem is considered, which is defined as follows:

$$\min f(\mathbf{x}), \quad \mathbf{x} = [x_1, x_2, \ldots, x_D] \tag{1}$$

where x, consisting of D variables, is a feasible solution to the optimization problem, and D is the dimension size. In this paper, we directly use the function value as the fitness value of a particle.

2.1. Canonical PSO

In the canonical PSO [15,16], each particle is represented by two vectors, namely the position vector x and the velocity vector v. During the evolution, each particle is guided by its historically personal best position (pbest) and the historically best position of the whole swarm (gbest). Specifically, each particle is updated as follows:

$$v_i^d \leftarrow w\, v_i^d + c_1 r_1 \left(pbest_i^d - x_i^d\right) + c_2 r_2 \left(gbest^d - x_i^d\right) \tag{2}$$

$$x_i^d \leftarrow x_i^d + v_i^d \tag{3}$$

where $v_i^d$ is the dth dimension of the velocity of the ith particle, and $x_i^d$ is the dth dimension of the position of the ith particle. $pbest_i^d$ is the dth dimension of the historically personal best position found by the ith particle, and $gbest^d$ is the dth dimension of the historically global best position found by the whole swarm. As for the parameters, $c_1$ and $c_2$ are two acceleration coefficients, $r_1$ and $r_2$ are two real random numbers uniformly generated within [0, 1], and $w$ represents the inertia weight.
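A minimal sketch of Equations (2) and (3) for a single particle may help make the update concrete. It rests on several assumptions. Vectors are plain Python lists. Fresh $r_1$ and $r_2$ are drawn for every dimension; this is one common convention, and the text above does not fix it. The defaults w = 0.7298 and c1 = c2 = 1.49618 are widely used illustrative values, not settings from this paper. The function name `pso_update` is hypothetical.

```python
import random


def pso_update(x, v, pbest, gbest, w=0.7298, c1=1.49618, c2=1.49618, rng=random):
    """Update one particle in place following Equations (2) and (3).

    The default w, c1, c2 are illustrative assumptions, not values from this paper.
    """
    for d in range(len(x)):
        r1, r2 = rng.random(), rng.random()  # fresh uniform numbers in [0, 1) per dimension
        v[d] = (w * v[d]
                + c1 * r1 * (pbest[d] - x[d])   # cognitive term (pbest)
                + c2 * r2 * (gbest[d] - x[d]))  # social term (gbest)
        x[d] += v[d]                           # Equation (3)
    return x, v
```

For example, `pso_update([1.0, 2.0], [0.0, 0.0], [1.0, 2.0], [0.0, 0.0])` moves a particle sitting on its own pbest toward gbest, because the cognitive term is zero.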

As shown in Equation (2), in the canonical PSO, each particle is cognitively directed by its pbest (the second term on the right-hand side of Equation (2)) and socially guided by the gbest of the whole swarm (the third term on the right-hand side of Equation (2)). Due to the greedy attraction of gbest, the swarm in the canonical PSO usually becomes trapped in local areas when tackling multimodal problems [18,45]. Therefore, to improve the effectiveness of PSO in searching multimodal spaces with many local areas, researchers have developed many novel learning strategies to guide particles. Examples include the comprehensive learning strategy [46], the genetic learning strategy [47], the scatter learning strategy [18], and the orthogonal learning strategy [48].

Although many novel learning strategies have helped PSO achieve very promising performance on multimodal problems, most of them are designed specifically for low-dimensional optimization problems. When encountering large-scale optimization problems, most existing PSOs lose their effectiveness due to the “curse of dimensionality” and the aforementioned challenges of high-dimensional problems.

2.2. Large-Scale PSO

To address the previously mentioned challenges of large-scale optimization, researchers have devoted extensive attention to designing novel PSOs. As a result, numerous large-scale PSO variants have sprung up [1,26]. Broadly, existing large-scale PSOs can be classified into the following two categories.

2.2.1. Cooperative Coevolutionary Large-Scale PSO (CCPSO)

Cooperative coevolutionary PSOs (CCPSOs) [6,29,49] mainly use the divide-and-conquer technique to separate all variables of a high-dimensional problem into several exclusive groups. They then optimize each group of variables independently to obtain the optimal solution to the high-dimensional problem. Van den Bergh and Engelbrecht put forward the earliest CCPSO [49]. In this algorithm, all variables in a large-scale optimization problem are randomly divided into K groups, each containing D/K variables (where D is the dimension size). Then, the canonical PSO described in Section 2.1 is employed to optimize each group of variables. Nevertheless, the performance of this algorithm heavily relies on the setting of the number of groups (namely K). To alleviate this issue, an improved CCPSO, named CCPSO2, was proposed in [29]. It first predefines a set of group numbers and then randomly selects a group number in each iteration to separate variables into groups. Neither of these two algorithms explicitly takes the correlations between variables into account. Hence, their optimization effectiveness degrades dramatically on problems with many interacting variables [11,12].
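The sketch below shows two grouping steps: the random grouping used by the earliest CCPSO, and the CCPSO2 idea of drawing the group number K anew from a predefined set. It is illustrative only. The function names are hypothetical, and the even split assumes that K divides D, as the description above implies. The candidate set of K values in the usage line is invented for illustration.

```python
import random


def random_grouping(D, K, rng=random):
    """Randomly split variable indices 0..D-1 into K exclusive groups of D // K each."""
    if D % K != 0:
        raise ValueError("this sketch assumes K divides D")
    idx = list(range(D))
    rng.shuffle(idx)                      # random assignment of variables to groups
    size = D // K
    return [idx[k * size:(k + 1) * size] for k in range(K)]


def ccpso2_grouping(D, group_numbers, rng=random):
    """CCPSO2-style step: pick K at random from a predefined set, then regroup."""
    K = rng.choice(group_numbers)
    return random_grouping(D, K, rng)
```

For instance, `ccpso2_grouping(1000, [2, 5, 10, 50])` regroups 1000 variables into a randomly chosen number of groups. Each group would then be optimized by a low-dimensional PSO, a step not shown here.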

To alleviate the above issue, researchers have attempted to design effective variable grouping strategies that separate variables into groups by detecting the correlations between variables [6,35,36,37]. In the literature, the most representative grouping strategy is the differential grouping (DG) method [6]. It detects the correlation between any two variables by comparing the differences in function values caused by applying the same perturbation to one variable before and after the other variable is changed. Based on the detected correlations, DG can separate variables into groups satisfactorily. However, this method has two drawbacks: (1) it cannot detect indirect interactions between variables [36], and (2) it consumes a large number of fitness evaluations (O(D²), where D is the number of variables) in the variable decomposition stage [35,37].
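The core pairwise test behind differential grouping can be sketched as follows. First perturb x_i at a base point. Then perturb it again after x_j has been moved. Flag an interaction when the two resulting changes in f differ by more than a threshold. The perturbation size `delta`, the threshold `eps`, and the function names are assumptions for illustration; the published DG method [6] specifies its own choices for these details. Every pair (i, j) needs its own evaluations, so checking all pairs costs O(D²) evaluations, which is the second drawback noted above.

```python
def interacts(f, x, i, j, delta=1.0, eps=1e-6):
    """Return True if perturbing x[j] changes the effect of perturbing x[i] on f."""
    def shifted(base, k, amount):
        y = list(base)          # copy so the caller's point is untouched
        y[k] += amount
        return y

    delta1 = f(shifted(x, i, delta)) - f(x)      # effect of moving x_i at the base point
    xj = shifted(x, j, delta)                    # same base point with x_j moved
    delta2 = f(shifted(xj, i, delta)) - f(xj)    # effect of moving x_i after moving x_j
    return abs(delta1 - delta2) > eps


def interaction_pairs(f, x, **kw):
    """Check every pair of variables: D * (D - 1) / 2 tests, hence O(D^2) evaluations."""
    D = len(x)
    return [(i, j) for i in range(D) for j in range(i + 1, D) if interacts(f, x, i, j, **kw)]
```

On a separable function such as the sphere, no pair is flagged. On f(x) = x0·x1 + x2², only the pair (0, 1) is flagged.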

To fill the first gap, Sun et al. devised an extended DG (XDG) [36], and Mei et al. proposed a global DG (GDG) [50] to detect both the direct and indirect interactions between variables. To address the second drawback, a faster DG, named DG2 [35], and a recursive DG (RDG) [37] were put forward to reduce the fitness evaluations consumed in the variable grouping stage. To further improve the detection efficiency of RDG, an efficient recursive differential grouping (ERDG) [51] was devised to reduce the number of fitness evaluations used in the decomposition stage. To alleviate the sensitivity of RDG to its parameters, an improved version, named RDG2, was developed [52] by adaptively adjusting the parameter settings. In [53], Ma et al. proposed a merged differential grouping method based on subset-subset interaction and binary search. This method first identifies separable and non-separable variables and puts all separable variables into the same subset. It then divides the non-separable variables into multiple subsets via a binary-tree-based iterative merging method. To further promote the variable grouping accuracy, Liu et al. proposed a deep grouping method that considers both the variable interaction and the essentialness of each variable to decompose a high-dimensional problem [54]. Instead of decomposing a large-scale optimization problem into fixed variable groups, Zhang et al. developed a dynamic grouping strategy that separates variables into groups dynamically during the evolution [55]. Specifically, this algorithm first evaluates the contribution of variables based on historical information. It then constructs dynamic variable groups for the next generation based on the evaluated contributions and the detected interaction information.

Owing to their promising performance in solving large-scale optimization problems, cooperative coevolutionary algorithms have been widely applied to various industrial engineering problems. For instance, Neshat et al. [56] proposed a multi-swarm cooperative co-evolution algorithm to optimize the layout of offshore wave energy converters. It combines the multi-verse optimizer, the equilibrium optimization method, and the moth flame optimization approach. Pan et al. [57] proposed a cooperative co-evolutionary algorithm with a collaboration model and a re-initialization scheme to tackle distributed flowshop group scheduling problems. In [58], a hybrid cooperative co-evolution algorithm with a symmetric local search plus Nelder–Mead was devised to optimize the positions and power-take-off settings of wave energy converters. In [59], Liang et al. developed a cooperative coevolutionary multi-objective evolutionary algorithm to tackle the transit network design and frequency setting problem.

The above-mentioned cooperative coevolutionary algorithms, including CCPSOs, have achieved good performance on certain kinds of high-dimensional problems and have been applied to real-world problems. However, they still face limitations in tackling complicated high-dimensional problems. On the one hand, according to the no free lunch theorem, no universal grouping method can accurately separate variables into groups for all types of large-scale optimization problems. On the other hand, for high-dimensional problems with overlapping variable correlations, most existing variable grouping strategies place all these variables into the same group, leading to a very large variable group. In this situation, the traditional low-dimensional PSOs used in CCPSOs still cannot effectively optimize such a large group of variables. As a result, some researchers have attempted to design large-scale PSOs from another perspective, as elucidated next.

2.2.2. Holistic Large-Scale PSO

Unlike CCPSOs, holistic large-scale PSOs [18,26] consider all variables as a whole and optimize them simultaneously, as traditional low-dimensional PSOs do [16]. To address the previously mentioned challenges of large-scale optimization, the key to holistic large-scale PSOs is to devise effective and efficient learning strategies that largely promote swarm diversity. With such strategies, particles can explore the exponentially growing solution space efficiently and exploit the promising areas extensively to obtain high-quality solutions.

In [60], a dynamic multi-swarm PSO with the Quasi-Newton local search method (DMS-L-PSO) was proposed to solve large-scale optimization problems by dynamically separating particles into smaller sub-swarms in each generation. Taking inspiration from the competitive learning scheme in human society, Cheng and Jin proposed a competitive swarm optimizer (CSO) [26]. Specifically, this optimizer first separates particles into exclusive pairs and then lets the two particles in each pair compete with each other. After the competition, the winner is not updated and directly enters the next generation, while the loser is updated by learning from the winner. Likewise, inspired by social learning in animals, a social learning PSO (SLPSO) [61] was devised to let each particle probabilistically learn from those that are better than itself. By extending the pairwise competition mechanism in CSO to a tri-competitive strategy, Mohapatra et al. [62] developed a modified CSO (MCSO) to accelerate the convergence of the swarm on high-dimensional problems. Taking inspiration from the comprehensive learning strategy designed for low-dimensional problems [46] and the competitive learning approach in CSO [26], Yang et al. designed a segment-based predominant learning swarm optimizer (SPLSO) [30] to cope with large-scale optimization. Specifically, this optimizer first uses the pairwise competition mechanism in CSO to divide particles into two groups, namely the relatively good particles and the relatively poor particles. Then, it randomly separates the dimensions of each relatively poor particle into a certain number of exclusive segments. Finally, it randomly selects a relatively good particle to direct the update of each segment of the inferior particle.
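The pairwise competition of CSO [26] can be sketched as one generation of updates. The loser update follows the commonly cited CSO formulation, which the paragraph above does not spell out: v ← r1·v + r2·(x_winner − x_loser) + φ·r3·(x̄ − x_loser), where x̄ is the mean position of the swarm. The value of `phi`, the even swarm size, and the function name are assumptions for illustration. The sketch assumes minimization.

```python
import random


def cso_generation(X, V, f, phi=0.1, rng=random):
    """One CSO-style generation (minimization), updating X and V in place.

    Assumes an even swarm size; phi = 0.1 is an illustrative value.
    """
    n, D = len(X), len(X[0])
    mean = [sum(x[d] for x in X) / n for d in range(D)]  # swarm mean before updates
    order = list(range(n))
    rng.shuffle(order)                                   # form random exclusive pairs
    for a, b in zip(order[0::2], order[1::2]):
        w, l = (a, b) if f(X[a]) <= f(X[b]) else (b, a)  # winner, loser
        for d in range(D):                               # only the loser is updated
            r1, r2, r3 = rng.random(), rng.random(), rng.random()
            V[l][d] = (r1 * V[l][d]
                       + r2 * (X[w][d] - X[l][d])
                       + phi * r3 * (mean[d] - X[l][d]))
            X[l][d] += V[l][d]
    return X, V
```

Because only losers move, about half of the swarm is updated per generation.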

Unlike the above large-scale PSOs [26,30,62], which let the updated particle learn from only one superior particle, Yang et al. devised a level-based learning swarm optimizer (LLSO) [25] by taking inspiration from the teaching theory in pedagogy. Specifically, this optimizer first separates particles into different levels and then lets each particle in the lower levels learn from two random superior exemplars selected from higher levels. Inspired by cooperative learning behavior in human society, Lan et al. put forward a two-phase learning swarm optimizer (TPLSO) [24], which separates the learning of each particle into a mass learning phase and an elite learning phase. In the mass learning phase, the tri-competitive mechanism is employed to update particles. In the elite learning phase, the elite particles are picked out to learn from each other to further exploit promising areas and refine the found solutions. Similarly, Wang et al. proposed a multiple strategy learning particle swarm optimization (MSL-PSO) [40], in which different learning strategies are used to update particles in different evolution stages. In the first stage, each particle learns from those with better fitness and the mean position of the swarm to probe promising positions. Then, all the best probed positions are sorted by fitness, and the top best ones are used to update particles in the second stage. In [41], Jian et al. developed a novel region encoding scheme that extends the solution representation from a single point to a region, together with an adaptive region search strategy that maintains search diversity. These two schemes are then embedded into SLPSO to tackle large-scale optimization problems.
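The sketch below simplifies the level-based exemplar selection described for LLSO [25]. Particles are sorted by fitness and cut into levels. Each particle outside the top level then receives two exemplars drawn from randomly chosen higher levels, with the better exemplar listed first. This follows only the one-sentence description above. The level sizes, the handling of leftover particles, and the tie rule are assumptions, and the function name is hypothetical. It also assumes each level holds at least two particles.

```python
import random


def level_exemplars(fitness, num_levels, rng=random):
    """Map each particle outside level 0 to (better, worse) exemplar indices (minimization)."""
    order = sorted(range(len(fitness)), key=lambda k: fitness[k])  # best first
    size = len(order) // num_levels
    levels = [order[k * size:(k + 1) * size] for k in range(num_levels)]
    levels[-1].extend(order[num_levels * size:])   # leftovers join the worst level
    pairs = {}
    for lv in range(1, num_levels):
        for p in levels[lv]:
            l1, l2 = rng.randrange(lv), rng.randrange(lv)    # two higher (better) levels
            if l1 == l2:
                e1, e2 = rng.sample(levels[l1], 2)           # two different particles
            else:
                e1, e2 = rng.choice(levels[l1]), rng.choice(levels[l2])
            if fitness[e1] > fitness[e2]:
                e1, e2 = e2, e1                              # better exemplar first
            pairs[p] = (e1, e2)
    return pairs
```

Both exemplars always come from levels strictly above the learner's level, so every learner is pulled toward better particles.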

To strike a good balance between exploration and exploitation, Li et al. [63] devised a learning structure that decouples exploration and exploitation in PSO for large-scale optimization. In particular, an exploration learning strategy was devised to direct particles to sparse areas based on a local sparseness degree measurement. An adaptive exploitation learning strategy was then developed to let particles exploit the found promising areas. Deng et al. [39] devised a ranking-based biased learning swarm optimizer (RBLSO) based on the principle that the fitness difference between learners and exemplars should be maximized. In this algorithm, a ranking paired learning (RPL) scheme lets the worse particles learn peer-to-peer from the better ones. At the same time, a biased center learning (BCL) strategy lets each particle learn from the weighted mean position of the whole swarm. Lan et al. [64] proposed a hierarchical sorting swarm optimizer (HSSO) to tackle large-scale optimization. Specifically, this optimizer first divides particles into a good swarm and a bad swarm of equal size based on their fitness. Then, particles in the bad swarm are updated by learning from those in the good one. Subsequently, the good swarm is taken as a new swarm, and the above division and updating operations are repeated until only one particle remains in the good swarm. Kong et al. [65] devised an adaptive multi-swarm particle swarm optimizer to cope with high-dimensional problems. It first adaptively divides particles into several sub-swarms and then employs a competition mechanism to select exemplars for particle updating. Huang et al. [66] put forward a convergence speed controller that cooperates with PSO to deal with large-scale optimization. This controller is triggered periodically to give PSO an early warning before it falls into premature convergence.

Although existing large-scale PSOs have succeeded in solving certain kinds of high-dimensional problems, their effectiveness still degrades on complicated high-dimensional problems [11,12,27,67], especially those with many wide and flat local areas. Therefore, improving the effectiveness and efficiency of PSO in large-scale optimization still deserves extensive attention, and this direction remains an active topic in the evolutionary computation community.

3. Dimension Group-Based Comprehensive Elite Learning Swarm Optimizer

In nature, during the evolution of one species, those elite individuals with better adaptability to the environment usually preserve more valuable evolutionary information, such as genes, to direct the evolution of the species [42]. Moreover, different individuals may preserve different useful genes. Likewise, during the evolution of the swarm in PSO, different particles may contain useful variable values that may be close to the true global optimal solutions. Therefore, a natural idea is to integrate those useful values embedded in different particles to guide the evolution of the swarm. To this end, this paper proposes a dimension group-based comprehensive elite learning swarm optimizer (DGCELSO) to tackle large-scale optimization. The detailed components of this optimizer are elucidated as follows.

3.1. Dimension Group-Based Comprehensive Elite Learning

Given that NP particles are maintained in the swarm, the proposed DGCEL strategy first partitions the swarm into two exclusive sets, namely the elite set, denoted by ES, and the non-elite set, denoted by NES. Specifically, ES contains the best es particles in the swarm, while NES consists of the remaining nes = (NP − es) particles. Since a suitable size of ES, namely es, depends on NP, we set $es = \lceil tp \cdot NP \rceil$ (where tp is the ratio of the elite particles in ES to the whole swarm) for the convenience of parameter fine-tuning.
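The partition into ES and NES can be sketched in a few lines. The sketch assumes minimization, the ceiling rule es = ⌈tp · NP⌉, and a list of fitness values indexed by particle. The function name and the rounding guard are illustrative choices, not part of the original description.

```python
import math


def split_elites(fitness, tp):
    """Return (ES, NES) as lists of particle indices, best particles first (minimization)."""
    NP = len(fitness)
    # round() guards against binary float noise in tp * NP
    # (e.g., 0.07 * 100 comes out slightly above 7), which would inflate the ceiling
    es = math.ceil(round(tp * NP, 9))
    order = sorted(range(NP), key=lambda k: fitness[k])
    return order[:es], order[es:]   # ES holds the es best; NES holds the nes = NP - es rest
```

Equation (4) draws two different elites, so ES must hold at least two particles. Choose tp with NP in mind.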

Since elite particles usually preserve more valuable evolutionary information than the non-elite ones, in this paper, we first develop an elite learning strategy (EL). Specifically, we let the elite particles in ES directly enter the next generation, while only updating the non-elite particles in NES. Moreover, the elite particles in ES are employed to guide the learning of non-elite particles in NES.

During the evolution, the elite particles may still be far from the global optimal area, but they usually contain valuable genes that are very close to the true global optimal solution. To integrate the useful evolutionary information embedded in different elites, we propose a dimension group-based comprehensive learning strategy (DGCL). Specifically, during the update of each non-elite particle, all dimensions of this particle are first randomly shuffled. They are then partitioned into NDG dimension groups (where NDG denotes the number of dimension groups), with each group containing D/NDG dimensions when D is divisible by NDG. In this way, the dimensions of each non-elite particle are randomly divided into NDG groups, namely $DG = [DG_1, DG_2, \ldots, DG_{NDG}]$.

Here, it should be mentioned that the dimensions are shuffled independently for each non-elite particle. Thus, the division of dimension groups is likely to differ between non-elite particles. In addition, if D%NDG is not zero, the remaining dimensions are distributed one each to the first (D%NDG) groups. That is, each of the first (D%NDG) groups contains ($\lfloor D/NDG \rfloor + 1$) dimensions.
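The random dimension grouping just described can be sketched as follows, including the rule that the first D%NDG groups each take one extra dimension. The function name is hypothetical, and dimensions are indexed from 0.

```python
import random


def dimension_groups(D, NDG, rng=random):
    """Shuffle dimensions 0..D-1 and split them into NDG groups; the first
    D % NDG groups receive one extra dimension each."""
    dims = list(range(D))
    rng.shuffle(dims)                 # a fresh shuffle for every non-elite particle
    base, extra = divmod(D, NDG)      # base = floor(D / NDG), extra = D % NDG
    groups, start = [], 0
    for i in range(NDG):
        size = base + (1 if i < extra else 0)
        groups.append(dims[start:start + size])
        start += size
    return groups
```

With D = 10 and NDG = 3, the group sizes are 4, 3, and 3.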

Subsequently, unlike most existing large-scale PSOs [25,26,30] which use the same exemplars to update all dimensions of one inferior particle, the proposed DGCL uses one exemplar to update each dimension group of each non-elite particle, and thus one non-elite particle could learn from different exemplars.

Incorporating the proposed EL into the DGCL, the DGCEL is developed by using the elite particles in ES to direct the update of each dimension group of a non-elite particle. Specifically, each non-elite particle is updated as follows:

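$$v_{NES_j}^{DG_i} \leftarrow r_1 v_{NES_j}^{DG_i} + r_2 \left(x_{ES_{e_1}}^{DG_i} - x_{NES_j}^{DG_i}\right) + \phi\, r_3 \left(x_{ES_{e_2}}^{DG_i} - x_{NES_j}^{DG_i}\right) \tag{4}$$

$$x_{NES_j}^{DG_i} \leftarrow x_{NES_j}^{DG_i} + v_{NES_j}^{DG_i} \tag{5}$$

where $NES_j$ represents the jth non-elite particle in NES, and $DG_i$ denotes the ith dimension group of the jth non-elite particle. $x_{NES_j}^{DG_i}$ and $v_{NES_j}^{DG_i}$ are the ith dimension group of the position and velocity of the jth particle in NES, respectively. $ES_{e_1}$ and $ES_{e_2}$ are two different elite particles randomly selected from ES. $r_1$, $r_2$, and $r_3$ are three random real numbers uniformly sampled within [0, 1], and $\phi$ is a control parameter governing the influence of the second elite particle.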
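Equations (4) and (5) for one dimension group can be sketched as follows. The sketch assumes that positions and velocities are Python lists and that `elite1` is the better of the two selected elites. It also assumes that $r_1$, $r_2$, and $r_3$ are drawn afresh for each dimension; the text does not say whether they are sampled per dimension or per group. The function name is hypothetical, and boundary handling is omitted.

```python
import random


def update_group(x, v, group, elite1, elite2, phi, rng=random):
    """Apply Equations (4) and (5) to the dimensions in `group`, in place.

    x, v: position and velocity of one non-elite particle.
    elite1, elite2: positions of the better and the worse selected elite.
    """
    for d in group:
        r1, r2, r3 = rng.random(), rng.random(), rng.random()  # assumed per dimension
        v[d] = (r1 * v[d]
                + r2 * (elite1[d] - x[d])           # pull toward the better elite
                + phi * r3 * (elite2[d] - x[d]))    # phi-weighted pull toward the worse elite
        x[d] += v[d]                                # Equation (5)
```

Dimensions outside `group` are left untouched. A full particle update would call this once per dimension group, each time with its own pair of elites.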

Regarding the update of each non-elite particle in NES by Equation (4), the following details deserve careful attention:

- (1)

- As previously mentioned, the dimensions of each non-elite particle are shuffled independently. As a result, the partition of dimension groups is likely different for different non-elite particles.

- (2)

- For each dimension group $DG_i$, two different elite particles are first randomly selected from ES. Then, the better of these two elites (denoted $ES_{e_1}$) acts as the first exemplar in Equation (4), while the worse one (denoted $ES_{e_2}$) acts as the second exemplar to guide the update of the dimension group of the non-elite particle.

- (3)

- The two elite particles guiding the update of each dimension group are both randomly selected. Therefore, they are likely to be different for different dimension groups.
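Details (2) and (3) above amount to drawing a fresh, ordered pair of distinct elites for every dimension group. The minimal sketch below assumes minimization and that ES is given as a list of particle indices with at least two entries. The function names are hypothetical.

```python
import random


def pick_exemplars(ES, fitness, rng=random):
    """Draw two different elites from ES and return them as (better, worse)."""
    a, b = rng.sample(ES, 2)                  # two distinct elites, chosen at random
    return (a, b) if fitness[a] <= fitness[b] else (b, a)


def assign_exemplars(groups, ES, fitness, rng=random):
    """One independently drawn (better, worse) elite pair per dimension group."""
    return [pick_exemplars(ES, fitness, rng) for _ in groups]
```

Each returned pair would supply the first and second exemplars of Equation (4) for the corresponding group. As a result, one non-elite particle typically learns from several different elites.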

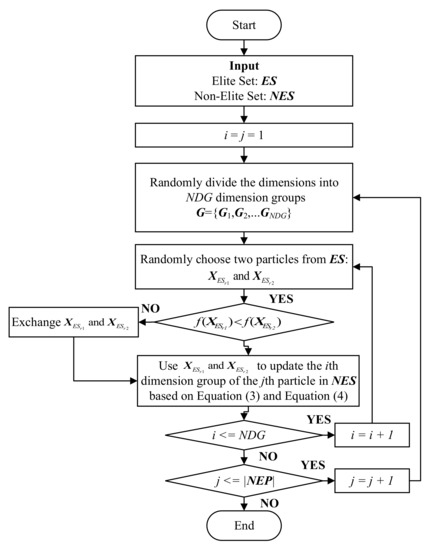

The complete flowchart of the proposed DGCEL is shown in Figure 1. A closer analysis of Equation (4) and Figure 1 shows that the proposed DGCEL strategy brings the following advantages to PSO:

Figure 1.

Flowchart of the proposed DGCEL strategy.

- (1)

- Traditional PSOs [18,47] rely on historical evolutionary information, such as the historically global best position (gbest), the personal best positions (pbests), and the neighborhood best position (nbest). Instead, the devised DGCEL employs the elite particles in the current swarm to direct the learning of the non-elite particles. Historical information may remain unchanged for many generations, whereas particles in the swarm are usually updated every generation. Therefore, in the proposed DGCEL, the two selected guiding exemplars are likely to differ not only between particles but also for the same particle across generations. This is very beneficial for swarm diversity.

- (2)

- Most existing large-scale PSOs [5,24,25,26,30] update each particle with the same exemplars for all dimensions. In contrast, the proposed DGCEL updates non-elite particles at the dimension group level. Therefore, the two guiding exemplars are likely different for different dimension groups. In this way, one non-elite particle can learn from multiple different elites, and the useful genes hidden in different elites can be incorporated to direct the evolution of the swarm. As a result, both the learning diversity and the learning efficiency of particles could be improved.

- (3)

- In DGCEL, each dimension group of a non-elite particle is guided by two randomly selected elite particles in ES. With the guidance of multiple elites, each non-elite particle is expected to approach promising areas quickly. In addition, since the elite particles in ES are not updated and directly enter the next generation, the useful evolutionary information in the current swarm is protected from being destroyed by uncertain updates. Therefore, the elites in ES tend to become better as the evolution proceeds, and they are eventually expected to converge to the optimal areas.

Remark

To the best of our knowledge, four existing PSOs are closely related to the proposed DGCELSO: CLPSO [46], OLPSO [48], GLPSO [47], and SPLSO [30]. The first three were originally designed for low-dimensional problems, while the last was devised for large-scale optimization. DGCELSO differs from these PSOs in the following ways:

- (1)

- In contrast to the three low-dimensional PSOs [46,47,48], DGCELSO uses the elite particles in the swarm to comprehensively guide the learning of the non-elite particles at the dimension group level. First, the three low-dimensional PSOs all use the personal best positions (pbests) of particles to construct only one guiding exemplar for each updated particle, whereas DGCELSO uses the elite particles in the current swarm to construct two different guiding exemplars for each non-elite particle. Second, the three low-dimensional PSOs construct the guiding exemplar dimension by dimension, whereas DGCELSO constructs the two guiding exemplars group by group. With these two differences, DGCELSO is expected to construct more promising guiding exemplars for the updated particles, thereby improving the learning effectiveness and efficiency of particles in exploring the large-scale solution space.

- (2)

- In contrast to the large-scale PSO SPLSO [30], DGCELSO uses two different elite particles to direct the update of each dimension group of each non-elite particle. First, the swarm partition in DGCELSO is very different from that in SPLSO. In DGCELSO, the swarm is divided into two exclusive sets according to the fitness of particles, with the best es particles entering ES and the rest entering NES. In SPLSO, by contrast, particles are paired and the two particles in each pair compete, with the winner entering the relatively good set and the loser entering the relatively poor set. Second, for each non-elite particle, DGCELSO adopts two random elites in ES to guide the update of each dimension group, whereas in SPLSO, each dimension group of a loser is updated by only one random relatively good particle, with the other exemplar being the mean position of the relatively good set, which is shared by all updated particles. Therefore, the learning effectiveness and efficiency of particles in DGCELSO are expected to be higher than in SPLSO, and DGCELSO is expected to explore and exploit the large-scale solution space more appropriately.

3.2. Adaptive Strategies for Control Parameters

A close look at DGCELSO shows that, apart from the swarm size NP, it has three control parameters: the ratio of elite particles in the whole swarm tp, the number of dimension groups NDG, and the control parameter in Equation (4). The swarm size NP is a common parameter of all evolutionary algorithms; it is usually problem-dependent and thus is left to be fine-tuned. The control parameter in Equation (4) subtly controls the influence of the second guiding exemplar in the velocity update; like NP, it is fine-tuned in the experiments. For the other two control parameters, we devise the following dynamic adjustment schemes to alleviate the sensitivity of DGCELSO to them.

3.2.1. Dynamic Adjustment for tp

The elite ratio tp determines the size of the elite set ES. When tp is large, on the one hand, many particles are preserved and enter the next generation directly; on the other hand, the learning of non-elite particles is diversified because there are many candidate exemplars, namely the elite particles. In this situation, the swarm is biased toward exploring the solution space. In contrast, when tp is small, only a few elites are preserved, and the learning of non-elite particles concentrates on exploiting the promising areas where the elites are located. Therefore, the swarm is biased toward exploitation. However, this bias does not seriously sacrifice swarm diversity, because both guiding exemplars are randomly selected for each dimension group of each non-elite particle.

Based on the above considerations, it is reasonable not to keep tp fixed during the evolution. To this end, we devise the following dynamic adjustment strategy for tp:

$$tp = 0.4 - 0.2 \times \frac{fes}{FES_{max}} \qquad (6)$$

where fes is the number of fitness evaluations used so far, and FESmax is the maximum number of fitness evaluations.

From Equation (6), tp decreases linearly from 0.4 to 0.2. Therefore, tp is large in the early stage and small in the late stage, so the swarm gradually shifts toward exploiting the solution space as the evolution proceeds. This matches the expectation that the swarm should fully explore the solution space in the early stage to find promising areas and exploit the found promising areas in the late stage to obtain high-quality solutions. The effectiveness of this dynamic adjustment scheme is verified in the experiments in Section 4.3.
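A minimal Python sketch of the linear schedule for tp, together with the resulting elite set size, is given below. The helper names are hypothetical, and the rounding rule for es (round to the nearest integer, with at least two elites so that two different exemplars can always be drawn) is an assumption, since the text only states that the top tp∗NP particles form ES.

```python
def elite_ratio(fes, fes_max, tp_start=0.4, tp_end=0.2):
    """Linearly decrease tp from tp_start to tp_end as fes goes from 0 to fes_max."""
    return tp_start - (tp_start - tp_end) * fes / fes_max


def elite_set_size(tp, np_size):
    """Number of elites; rounding and the minimum of two elites are assumptions."""
    return max(2, round(tp * np_size))
```

With NP = 300 and FESmax = 3 × 10^6, this sketch yields 120 elites at the start of the run, 90 at the midpoint, and 60 at the end, so the elite set halves over the run.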

3.2.2. Dynamic Adjustment for NDG

The number of dimension groups NDG directly affects the learning of non-elite particles. A large NDG allows a large number of elite particles to participate in the learning of each non-elite particle. This might be useful when the useful genes are scattered across widely dispersed dimensions: with a large NDG, the chance of integrating these useful genes to direct the learning of non-elite particles increases. By contrast, when the useful genes are concentrated in a few dimensions, a small NDG is preferred. However, without prior knowledge of where the useful genes are located in the elite particles, it is difficult to set NDG properly.

To alleviate the above concern, we devise the following dynamic adjustment of NDG for each non-elite particle based on the Cauchy distribution:

where NDG_j denotes the setting of NDG for the jth particle in NES, and Cauchy(60, 10) is a Cauchy distribution with location parameter 60 and scale parameter 10. floor(x) returns the largest integer not greater than x, and mod(x, y) returns the remainder of x divided by y.

In Equations (7) and (8), two details deserve attention. First, the Cauchy distribution is used because it generates values around the location parameter with a long, fat tail, so the NDGs generated for different non-elite particles are likely to be diverse. Second, Equation (8) keeps the NDG of each non-elite particle at a multiple of 10. This enlarges the difference between any two distinct NDG values, which improves the learning diversity of non-elite particles, and it also simplifies computation.
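The following Python sketch shows one plausible reading of Equations (7) and (8): a Cauchy(60, 10) variate is drawn by inverse-CDF sampling, floored, and then reduced to a multiple of 10 by subtracting its remainder modulo 10. Clamping the result to [10, D] is an assumption added here because a Cauchy draw can be negative or exceed D; the text does not state how such draws are handled. The function name `sample_ndg` is hypothetical.

```python
import math
import random


def sample_ndg(dim, loc=60.0, scale=10.0, rng=random):
    """Draw NDG_j from Cauchy(loc, scale) and snap it down to a multiple of 10."""
    # Inverse-CDF sampling of a Cauchy variate.
    c = loc + scale * math.tan(math.pi * (rng.random() - 0.5))
    n = math.floor(c)
    n -= n % 10  # largest multiple of 10 not exceeding floor(c)
    # Assumption: clamp out-of-range draws into [10, dim].
    return min(max(n, 10), dim)
```

Most draws land on 50, 60, or nearby multiples of 10, while the fat tail occasionally produces much larger or smaller group counts.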

From Equations (7) and (8), different non-elite particles are likely to have different NDGs. On the one hand, this further improves the learning diversity of non-elite particles; on the other hand, different NDG settings increase the chance of integrating useful genes embedded in different elite particles. The effectiveness of this dynamic adjustment scheme for NDG is verified in the experiments in Section 4.3.

3.3. Overall Procedure of DGCELSO

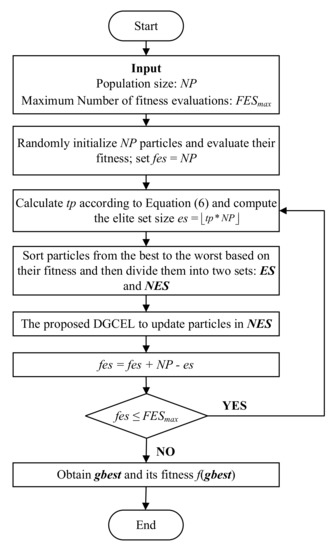

By integrating the above components, we obtain DGCELSO, whose overall procedure is outlined in Algorithm 1 and whose complete flowchart is shown in Figure 2. Specifically, after the swarm is initialized and evaluated (Line 1), the algorithm enters the main loop (Lines 2–17). First, the swarm is partitioned into the elite set (ES) and the non-elite set (NES) (Lines 3 and 4). Then, each particle in NES is updated (Lines 5–16). During the update of a non-elite particle, its dimensions are first separated into several dimension groups (Lines 6 and 7). Then, for each dimension group, two different elite particles are randomly selected from ES (Line 9) and ordered so that the better one serves as the first exemplar (Lines 10–12), after which the dimension group is updated by learning from these two elites (Line 13). This process iterates until the termination condition is met. Finally, the best solution in the swarm is output (Line 18).

Figure 2.

Flowchart of the proposed DGCELSO.

With respect to time complexity, Algorithm 1 shows that in each generation it takes O(NP log2 NP) to sort the swarm and O(NP) to partition it into two sets (Line 4); then, it takes O(NP∗D) to shuffle the dimensions and O(NP∗D) to partition the shuffled dimensions into groups for all non-elite particles (Line 7); finally, it takes O(NP∗D) to update all non-elite particles (Lines 8–14). In sum, the time complexity of DGCELSO is O(NP∗D) per generation, since in large-scale optimization the swarm size is usually much smaller than the dimension size, and hence log2 NP is far smaller than D (e.g., with NP = 300 and D = 1000, NP log2 NP ≈ 2.5 × 10^3, whereas NP∗D = 3 × 10^5).

| Algorithm 1: The Pseudocode of DGCELSO. | |
|---|---|
| Input: | Population size NP, maximum number of fitness evaluations FESmax, control parameter in Equation (4) |
| 1: | Initialize NP particles randomly and calculate their fitness; fes = NP; |
| 2: | While (fes ≤ FESmax) do |
| 3: | Calculate tp according to Equation (6) and obtain the elite set size es = tp∗NP; |
| 4: | Sort particles based on their fitness and divide them into two sets, namely ES and NES; |
| 5: | For each non-elite particle in NES do |
| 6: | Generate NDG_j based on Equations (7) and (8); |
| 7: | Randomly shuffle the dimensions and then split them into NDG_j groups; |
| 8: | For each dimension group do |
| 9: | Randomly select two different elite particles from ES: ESr1 and ESr2; |
| 10: | If (f(ESr2) < f(ESr1)) then |
| 11: | Swap ESr1 and ESr2; |
| 12: | End If |
| 13: | Update the dimension group of the non-elite particle according to Equations (3) and (4); |
| 14: | End For |
| 15: | Calculate the fitness of the updated non-elite particle, and fes++; |
| 16: | End For |
| 17: | End While |
| 18: | Obtain the best solution in the swarm gbest and its fitness f(gbest) |
| Output: | f(gbest) and gbest |

Regarding space complexity, Algorithm 1 shows that, apart from O(NP∗D) to store the positions and O(NP∗D) to store the velocities of all particles, DGCELSO takes only an extra O(NP) to store the indices of particles in the two sets and O(D) to store the dimension groups. Overall, DGCELSO takes O(NP∗D) space.

Based on the above time and space complexity analysis, it is found that the proposed DGCELSO remains as efficient as the classical PSO, which also takes O(NP∗D) time in each generation and O(NP∗D) space.
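To make the interplay of the components concrete, the following self-contained Python sketch runs the loop of Algorithm 1 on a user-supplied objective. It illustrates the procedure under stated assumptions and is not the authors' C implementation. Since Equations (3) and (4) are not reproduced here, the velocity update is assumed to take the CSO-style form v ← r1·v + r2·(x_ESr1 − x) + φ·r3·(x_ESr2 − x), followed by x ← x + v, applied per dimension with fresh uniform random numbers. Positions are also assumed to be clamped to the search bounds, and the name `phi` for the control parameter of Equation (4) is hypothetical.

```python
import math
import random


def dgcelso(f, dim, lo, hi, np_size=300, fes_max=3_000_000, phi=0.4, seed=None):
    """Sketch of the DGCELSO loop (minimization) under the assumptions stated above."""
    if np_size < 3:
        raise ValueError("need at least two elites and one non-elite particle")
    rng = random.Random(seed)
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(np_size)]
    v = [[0.0] * dim for _ in range(np_size)]
    fit = [f(p) for p in x]
    fes = np_size
    while fes < fes_max:
        tp = 0.4 - 0.2 * fes / fes_max            # Equation (6): 0.4 -> 0.2
        es = max(2, round(tp * np_size))          # rounding rule is an assumption
        order = sorted(range(np_size), key=fit.__getitem__)
        elites, non_elites = order[:es], order[es:]
        for j in non_elites:
            if fes >= fes_max:
                break
            # NDG_j: Cauchy(60, 10), floored, snapped down to a multiple of 10, clamped.
            n = math.floor(60 + 10 * math.tan(math.pi * (rng.random() - 0.5)))
            ndg = min(max(n - n % 10, 10), dim)
            dims = list(range(dim))
            rng.shuffle(dims)
            for k in range(ndg):
                group = dims[k * dim // ndg:(k + 1) * dim // ndg]
                e1, e2 = rng.sample(elites, 2)    # two different elites
                if fit[e2] < fit[e1]:
                    e1, e2 = e2, e1               # e1 is now the better exemplar
                for d in group:
                    v[j][d] = (rng.random() * v[j][d]
                               + rng.random() * (x[e1][d] - x[j][d])
                               + phi * rng.random() * (x[e2][d] - x[j][d]))
                    x[j][d] = min(max(x[j][d] + v[j][d], lo), hi)  # clamping assumed
            fit[j] = f(x[j])
            fes += 1
    best = min(range(np_size), key=fit.__getitem__)
    return x[best], fit[best]
```

For example, `dgcelso(lambda p: sum(t * t for t in p), dim=30, lo=-100.0, hi=100.0, np_size=40, fes_max=20000, seed=1)` minimizes a 30-D sphere function with a small budget. Because the best particle always belongs to ES and is never modified, the best fitness in the swarm never increases in this sketch.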

4. Experimental Section

To verify the effectiveness of the proposed DGCELSO, this section conducts extensive experiments on two sets of large-scale optimization problems, namely the CEC’2010 [7] and the CEC’2013 [8] large-scale benchmark sets. The CEC’2010 set contains 20 high-dimensional problems with 1000 dimensions, while the CEC’2013 set consists of 15 problems, also with 1000 dimensions. In particular, the CEC’2013 set extends the CEC’2010 set by introducing more complicated features, such as overlapping interactions among variables and imbalanced contributions of variables. Therefore, the CEC’2013 problems are more complicated and more difficult to optimize than the CEC’2010 problems. For more detailed information on the two benchmark sets, readers are referred to [7,8].

In this section, we first investigate the settings of two key parameters of DGCELSO (namely the swarm size NP and the control parameter in Equation (4)) in Section 4.1. Then, extensive experiments are conducted on the two benchmark sets to compare DGCELSO with several state-of-the-art large-scale optimizers in Section 4.2. Finally, Section 4.3 presents an in-depth investigation into what contributes to the good performance of DGCELSO.

In the experiments, unless otherwise stated, the maximum number of fitness evaluations is set to 3000 × D, where D is the dimension size. Since all optimization problems in this paper are 1000-dimensional, the total number of fitness evaluations is 3 × 10^6. To make fair and comprehensive comparisons, the median, mean, and standard deviation (Std) over 30 independent runs are used to evaluate the performance of all algorithms. Moreover, to assess statistical significance, the Wilcoxon rank-sum test at a significance level of α = 0.05 is conducted to compare two different algorithms. Furthermore, to obtain the overall ranks of different algorithms on a whole benchmark set, the Friedman test at a significance level of α = 0.05 is conducted on each benchmark set.
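The average ranks reported alongside the Friedman test can be computed as sketched below. On each problem, algorithms are ranked by their result (lower is better, with tied algorithms sharing the mean of their ranks), and the ranks are then averaged over all problems. This Python sketch, built around the hypothetical helper `average_ranks`, covers only the average ranks; it does not compute the Friedman test statistic or its p-value.

```python
def average_ranks(results):
    """results[p][a] = result of algorithm a on problem p (lower is better).
    Returns the average rank of each algorithm; ties share the mean rank."""
    n_alg = len(results[0])
    totals = [0.0] * n_alg
    for row in results:
        order = sorted(range(n_alg), key=row.__getitem__)
        i = 0
        while i < n_alg:
            j = i
            while j + 1 < n_alg and row[order[j + 1]] == row[order[i]]:
                j += 1
            shared = (i + j) / 2 + 1  # mean of ranks i+1 .. j+1
            for k in range(i, j + 1):
                totals[order[k]] += shared
            i = j + 1
    return [t / len(results) for t in totals]
```

For instance, with three algorithms on three problems where the first two tie on the last problem, the results `[[1.0, 2.0, 3.0], [2.0, 1.0, 3.0], [1.0, 1.0, 2.0]]` give average ranks of 1.5, 1.5, and 3.0.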

Lastly, we implemented the proposed DGCELSO in the C programming language using the Code::Blocks IDE. All experiments were run on a PC with an 8-core Intel Core i7-10700 2.90 GHz CPU, 8 GB of memory, and the 64-bit Ubuntu 12.04 LTS operating system.

4.1. Parameter Setting

Owing to the two dynamic adjustment strategies, only two parameters of DGCELSO need fine-tuning, namely the swarm size NP and the control parameter in Equation (4). To investigate the optimal settings of these two parameters for DGCELSO in solving 1000-D large-scale optimization problems, we conduct experiments on the CEC’2010 benchmark set by varying NP from 100 to 600 and the control parameter from 0.1 to 0.9. Table 1 shows the mean fitness values obtained by DGCELSO with different settings of NP and the control parameter on the CEC’2010 set. In this table, the best results are highlighted in bold, and the average rank of each configuration, obtained using the Friedman test at a significance level of α = 0.05, is also presented.

Table 1.

Comparison results among DGCELSO with different settings of NP and the control parameter on the 1000-D CEC’2010 problems.

From this table, we obtain the following findings. (1) From the perspective of the Friedman test, when NP is fixed, the control parameter should be neither too small nor too large, and its optimal setting usually lies within [0.3, 0.6]. Specifically, when NP is 100 and 200, the optimal value is 0.6 and 0.5, respectively; when NP is within [300, 500], the optimal value is consistently 0.4; and when NP is 600, the optimal value is 0.3. (2) More specifically, when NP is small, such as 100, the optimal control parameter is usually large. This is because a small NP cannot provide enough diversity for DGCELSO to explore the solution space. Therefore, to improve diversity, the control parameter should be large to enhance the influence of the second guiding exemplar in Equation (4), which prevents the updated particle from being greedily attracted by the first guiding exemplar. On the contrary, when NP is large, such as 600, a small control parameter is preferred. This is because a large NP already provides high diversity, which slows down the convergence of DGCELSO. Consequently, to let particles fully exploit the found promising areas, the control parameter should be small to decrease the influence of the second guiding exemplar in Equation (4). (3) Comparing all settings of NP along with their associated optimal control parameter values, we find that DGCELSO with NP = 300 and a control parameter of 0.4 achieves the best overall performance.

Based on the above observations, NP = 300 and a control parameter of 0.4 are adopted for DGCELSO in the experiments on 1000-D optimization problems.

4.2. Comparisons with State-of-the-Art Methods

To comprehensively verify the effectiveness of the devised DGCELSO, this section compares DGCELSO with several state-of-the-art large-scale algorithms. Specifically, nine popular and recent large-scale methods are selected, namely TPLSO [24], SPLSO (The source code can be downloaded from https://gitee.com/mmmyq/SPLSO, accessed on 1 January 2022) [30], LLSO (The source code can be downloaded from https://gitee.com/mmmyq/LLSO, accessed on 1 January 2022) [25], CSO (The source code can be downloaded from http://www.soft-computing.de/CSO_Matlab_New.zip, accessed on 1 January 2022) [26], SLPSO (The source code can be downloaded from http://www.soft-computing.de/SL_PSO_Matlab.zip, accessed on 1 January 2022) [61], DECC-GDG (The source code can be downloaded from https://ww2.mathworks.cn/matlabcentral/mlc-downloads/downloads/submissions/45783/versions/1/download/zip/CC-GDG-CMAES.zip, accessed on 1 January 2022) [50], DECC-DG2 (The source code can be downloaded from https://bitbucket.org/mno/differential-grouping2/src/master/, accessed on 1 January 2022) [35], DECC-RDG (The source code can be downloaded from https://www.researchgate.net/profile/Yuan-Sun-18/publications, accessed on 1 January 2022) [37], and DECC-RDG2 (The source code can be downloaded from https://www.researchgate.net/profile/Yuan-Sun-18/publications, accessed on 1 January 2022) [52]. The former five are state-of-the-art holistic large-scale PSO variants, while the latter four are state-of-the-art cooperative coevolutionary algorithms. Comparisons with these nine optimizers are intended to demonstrate the effectiveness of DGCELSO.

Table 2 and Table 3 display the comparison results between DGCELSO and the nine compared algorithms on the 1000-D CEC’2010 and the 1000-D CEC’2013 large-scale benchmark sets, respectively. In these two tables, the symbols “+”, “−”, and “=” above the p-values obtained from the Wilcoxon rank-sum test denote that DGCELSO is significantly superior to, significantly inferior to, and equivalent to the associated compared algorithm on the related function, respectively. “w/t/l” in the second-to-last row of each table counts the numbers of functions on which DGCELSO performs significantly better than, equivalently to, and significantly worse than the associated compared method, i.e., the numbers of “+”, “=”, and “−”, respectively. The last row of each table presents the average ranks of all algorithms obtained from the Friedman test.
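The “+”, “=”, and “−” marks and the w/t/l tallies can be reproduced along the lines of the Python sketch below. It assumes the normal approximation to the Wilcoxon rank-sum statistic without tie correction (reasonable for 30 runs per algorithm), and it assumes that the direction of a significant difference is decided by comparing mean fitness values under minimization. The helper names are hypothetical, and ASCII "-" stands in for the table's "−".

```python
import math


def rank_sum_p(a, b):
    """Two-sided Wilcoxon rank-sum p-value (normal approximation, no tie
    correction); tied values receive the mean of the ranks they span."""
    pooled = sorted((val, idx) for idx, val in enumerate(a + b))
    ranks = [0.0] * len(pooled)
    i = 0
    while i < len(pooled):
        j = i
        while j + 1 < len(pooled) and pooled[j + 1][0] == pooled[i][0]:
            j += 1
        for k in range(i, j + 1):
            ranks[pooled[k][1]] = (i + j) / 2 + 1  # midrank of positions i..j
        i = j + 1
    n1, n2 = len(a), len(b)
    r1 = sum(ranks[:n1])  # rank sum of sample a
    mu = n1 * (n1 + n2 + 1) / 2
    sigma = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12)
    z = (r1 - mu) / sigma
    return math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))


def mark(ours, theirs, alpha=0.05):
    """'+' / '-' if `ours` is significantly better / worse (lower mean is better), else '='."""
    if rank_sum_p(ours, theirs) >= alpha:
        return "="
    return "+" if sum(ours) / len(ours) < sum(theirs) / len(theirs) else "-"


def wtl(marks):
    """Format win/tie/loss counts as in the 'w/t/l' rows."""
    return f"{marks.count('+')}/{marks.count('=')}/{marks.count('-')}"
```

Applying `mark` to the 30-run results of DGCELSO and one competitor on each function, and then `wtl` to the resulting list, gives one entry of the w/t/l row.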

Table 2.

Fitness comparison between DGCELSO and the compared algorithms on the 1000-D CEC’2010 problems with 3 × 10^6 fitness evaluations.

Table 3.

Fitness comparison between DGCELSO and the compared algorithms on the 1000-D CEC’2013 problems with 3 × 10^6 fitness evaluations.

In Table 2, the comparison results on the CEC’2010 set are summarized as follows. (1) From the perspective of the Friedman test, as shown in the last row, DGCELSO has the lowest rank value, which is much smaller than those of the compared algorithms. This means that DGCELSO achieves the best overall performance and shows great superiority to the compared algorithms. (2) With respect to the Wilcoxon rank-sum test, as shown in the second-to-last row, DGCELSO performs significantly better than each compared algorithm on at least 14 problems. In particular, compared with the four cooperative coevolutionary algorithms, DGCELSO is significantly superior on at least 16 problems and inferior on at most four problems. In comparison with the five holistic large-scale PSO variants, DGCELSO is significantly superior to SLPSO on 18 problems, to TPLSO on 16 problems, to both LLSO and CSO on 15 problems, and to SPLSO on 14 problems. The superiority of DGCELSO to the five holistic large-scale PSOs demonstrates the effectiveness of the proposed DGCEL strategy.

In Table 3, the comparison results on the CEC’2013 set are summarized as follows. (1) From the perspective of the Friedman test, as shown in the last row, the rank value of DGCELSO is still the lowest among the ten algorithms and still much smaller than those of the nine compared algorithms. This demonstrates that DGCELSO still achieves the best overall performance on the complicated CEC’2013 benchmark set and clearly dominates the compared algorithms. (2) With respect to the Wilcoxon rank-sum test, as shown in the second-to-last row, except for SPLSO, DGCELSO performs significantly better than each of the other eight compared algorithms on at least 10 problems and is inferior on at most three problems. Compared with SPLSO, DGCELSO wins on eight problems and loses on only three. The superiority of DGCELSO on the CEC’2013 benchmark set demonstrates that it is promising for complicated large-scale optimization problems.

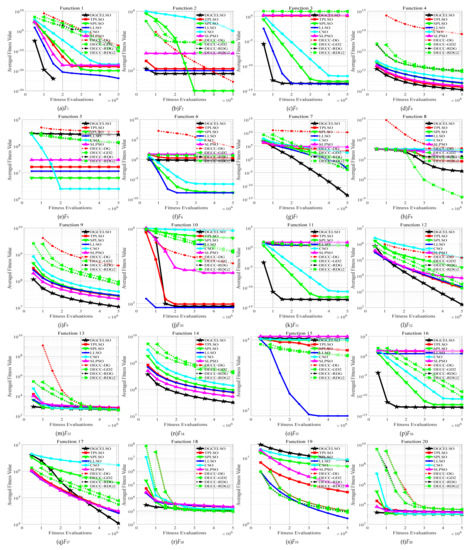

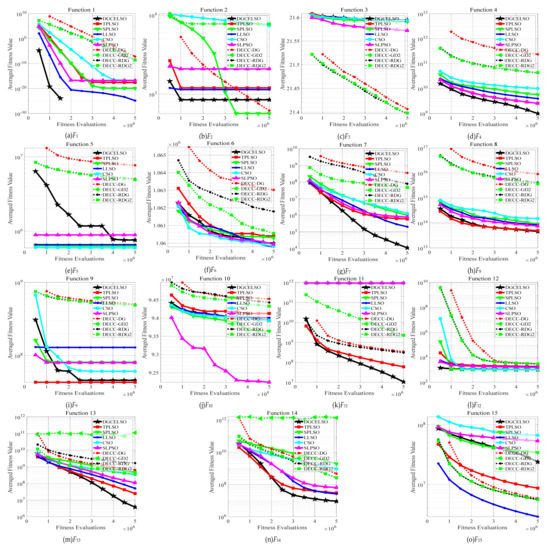

The above experiments demonstrated the effectiveness of DGCELSO. To further examine its efficiency in solving large-scale optimization problems, we investigate the convergence speed of DGCELSO in comparison with the nine compared methods on the two large-scale benchmark sets. In this experiment, the maximum number of fitness evaluations is set to 5 × 10^6. Figure 3 and Figure 4 show the convergence comparison results on the CEC’2010 and the CEC’2013 benchmark sets, respectively.

Figure 3.

Convergence behavior comparison between DGCELSO and the compared algorithms on each 1000-D CEC’2010 benchmark problem.

Figure 4.

Convergence behavior comparison between DGCELSO and the compared algorithms on each 1000-D CEC’2013 benchmark problem.

In Figure 3, the following findings can be obtained on the CEC’2010 benchmark set. (1) At first glance, DGCELSO clearly achieves faster convergence along with better solutions than all nine compared algorithms on nine problems (F1, F4, F7, F9, F11, F12, F14, F16, and F17). On F3, F13, F18, and F20, DGCELSO achieves solution quality very similar to that of some compared algorithms but converges much faster than them. (2) More specifically, DGCELSO shows much better performance in both convergence speed and solution quality than the five holistic large-scale PSO variants, namely TPLSO, SPLSO, LLSO, CSO, and SLPSO, on 17, 16, 15, 16, and 17 problems, respectively. Compared with the four cooperative coevolutionary algorithms, namely DECC-GDG, DECC-DG2, DECC-RDG, and DECC-RDG2, DGCELSO shows clear superiority in both convergence speed and solution quality on 17, 17, 17, and 15 problems, respectively.

Similar observations can be made on the CEC’2013 benchmark set from Figure 4. (1) At first glance, DGCELSO achieves faster convergence along with better solutions than all nine compared algorithms on six problems (F1, F4, F7, F11, F13, and F14). On F8, F9, and F12, DGCELSO is superior in both convergence speed and solution quality to eight compared algorithms and inferior to only one. (2) More specifically, DGCELSO performs better, with faster convergence and higher solution quality, than TPLSO, SPLSO, LLSO, CSO, and SLPSO on 11, 11, 9, 12, and 10 problems, respectively. Compared with DECC-GDG, DECC-DG2, DECC-RDG, and DECC-RDG2, DGCELSO clearly dominates them on 11, 9, 11, and 12 problems, respectively.

To sum up, compared with these state-of-the-art large-scale algorithms, DGCELSO performs much better in both convergence speed and solution quality. This superiority mainly benefits from the proposed DGCEL strategy, which implicitly assembles useful information embedded in elite particles to guide the evolution of the swarm. In particular, the superiority of DGCELSO to the five holistic large-scale PSOs, which also use particles in the current swarm to direct the evolution of the swarm, demonstrates that assembling the evolutionary information in elites is effective. Such assembly not only improves the learning diversity of particles, owing to the random selection of guiding exemplars from the elites, but also promotes the learning effectiveness of particles, because each updated particle can learn from multiple different elites through dimension group-based learning. As a result, DGCELSO balances search intensification and diversification well, exploring and exploiting the large-scale solution space appropriately to locate satisfactory solutions.

4.3. Deep Investigation on DGCELSO

In this section, we conduct extensive experiments on the 1000-D CEC’2010 benchmark set to verify the effectiveness of the main components in the proposed DGCELSO.

4.3.1. Effectiveness of the Proposed DGCEL

First, we conduct experiments to investigate the effectiveness of the proposed DGCEL strategy. To this end, we replace DGCEL with the segment-based predominant learning strategy (SPL) of SPLSO, the work most similar to DGCELSO, yielding a new variant denoted “DGCELSO-SPL”. In addition, we develop two extreme cases of DGCELSO in which the number of dimension groups (NDG) is set to 1 and 1000, respectively. The former, denoted “DGCELSO-1”, considers all dimensions as one group and thus can be regarded as DGCELSO without dimension group-based comprehensive learning. The latter, denoted “DGCELSO-1000”, considers each dimension as a group and thus can be regarded as DGCELSO with the comprehensive learning strategy of CLPSO [46] replacing the dimension group-based comprehensive learning. Then, we compare the above four versions of DGCELSO on the CEC’2010 benchmark set. Table 4 shows the comparison results among the four versions. In this table, the best results are highlighted in bold.

Table 4.

Comparison results among different versions of DGCELSO on the 1000-D CEC’2010 problems.

From Table 4, the following observations can be made. (1) From the perspective of the Friedman test, DGCELSO has the smallest rank value among the four versions, demonstrating that it achieves the best overall performance. (2) DGCELSO shows great superiority to DGCELSO-SPL, which demonstrates that DGCEL is much better than SPL. It should be noted that, like DGCEL, SPL also lets each particle learn from multiple elites in the swarm based on dimension groups. The differences between DGCEL and SPL lie in two aspects. On the one hand, SPL lets particles learn from relatively better elites, which are determined by the competition between two randomly paired particles, whereas DGCEL lets particles learn from absolutely better elites, namely the top tp∗NP particles in the swarm. On the other hand, the second exemplar in the velocity update of SPL is the mean position of the whole swarm, which is shared by all updated particles, whereas the second exemplar in DGCEL is also randomly selected from the elite particles. The observed superiority of DGCEL to SPL thus demonstrates that the exemplar selection in DGCEL is better than that in SPL. (3) Compared with DGCELSO-1 and DGCELSO-1000, DGCELSO also shows great superiority, which demonstrates the effectiveness of the proposed dimension group-based comprehensive learning strategy. DGCELSO-1 considers all dimensions as one group and learns from only two exemplars, and DGCELSO-1000 considers each dimension as a group and learns from multiple exemplars dimension by dimension, whereas DGCELSO lets each updated particle learn from multiple exemplars group by group. In this way, the potentially useful information embedded in different exemplars is more likely to be assembled in DGCELSO than in DGCELSO-1 and DGCELSO-1000.

Based on the above observations, it is found that the proposed DGCEL strategy is effective and plays a crucial role in helping DGCELSO achieve promising performance.

4.3.2. Effectiveness of the Proposed Dynamic Adjustment Schemes for Parameters

In this subsection, we conduct experiments to verify the effectiveness of the proposed dynamic adjustment schemes for the two control parameters, namely the elite ratio tp and the number of dimension groups NDG.

First, we conduct experiments to investigate the effectiveness of the proposed dynamic scheme for tp. To this end, we fix tp at different values from 0.1 to 0.9 and compare DGCELSO using the dynamic scheme with these fixed-tp variants. Table 5 shows the comparison results on the CEC’2010 benchmark set. In this table, the best results are highlighted in bold.

Table 5.

Comparison results between DGCELSO with the dynamic strategy for tp and the ones with different fixed settings of tp on the 1000-D CEC’2010 problems.

From Table 5, the following findings can be obtained. (1) From the perspective of the Friedman test, DGCELSO with the dynamic tp ranks first among all versions of DGCELSO with different settings of tp, which demonstrates that it achieves the best overall performance. (2) More specifically, DGCELSO with the dynamic strategy obtains the best results on four problems, and its results on the other problems are very close to the best ones obtained by DGCELSO with the associated optimal settings of tp. These two observations demonstrate that the dynamic strategy for tp helps DGCELSO achieve good performance.

Then, we conduct experiments to verify the dynamic scheme for the number of dimension groups (NDG). To this end, we fix NDG at different values from 20 to 100 and compare DGCELSO using the dynamic scheme for NDG with these fixed-NDG variants on the CEC’2010 set. Table 6 shows the comparison results among the above versions of DGCELSO. In this table, the best results are highlighted in bold.

Table 6.

Comparison results between DGCELSO with the dynamic strategy for NDG and the ones with different fixed settings of NDG on the 1000-D CEC’2010 problems.

From Table 6, we can obtain the following findings. (1) From the perspective of the Friedman test, the rank value of DGCELSO with the dynamic scheme for NDG is the smallest among all versions of DGCELSO with different settings of NDG, which demonstrates that it achieves the best overall performance. (2) More specifically, DGCELSO with the dynamic strategy obtains the best results on nine problems, while DGCELSO with any fixed NDG obtains the best results on at most four problems. Moreover, on the remaining 11 problems where DGCELSO with the dynamic strategy does not achieve the best results, its results are very close to the best ones obtained by DGCELSO with the associated optimal NDG. These two observations verify the effectiveness of the dynamic strategy for NDG.

To sum up, the above comparative experiments demonstrated the effectiveness and efficiency of DGCELSO in solving large-scale optimization problems. In particular, the deep investigation experiments have validated that it is the proposed DGCEL strategy along with the two dynamic strategies that play a crucial role in helping DGCELSO achieve promising performance.

5. Conclusions

This paper proposed a dimension group-based comprehensive elite learning swarm optimizer (DGCELSO) to effectively solve large-scale optimization problems. Specifically, the optimizer first partitions the swarm into two exclusive sets, namely the elite set and the non-elite set. Then, the non-elite particles are updated by learning from the elite ones, while the elite particles directly enter the next generation. During the update of each non-elite particle, its dimensions are separated into several dimension groups. Subsequently, for each dimension group, two elites are randomly selected from the elite set and act as the guiding exemplars for that group. In this way, each non-elite particle comprehensively learns from multiple elites. Moreover, not only do different non-elite particles have different guiding exemplars, but different dimension groups of the same non-elite particle are also likely to be guided by different exemplars. As a result, both the learning diversity and the learning efficiency of particles could be improved. To further help the optimizer explore and exploit the solution space properly, we designed two dynamic adjustment strategies for the associated control parameters of DGCELSO.
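The update summarized above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the elite set is taken as the best `elite_ratio` fraction of the swarm (possibly the role of tp in Table 5, which this section does not define), dimensions are split with `numpy.array_split` over a random permutation (group sizes differ by at most one when D is not divisible by the number of groups), and the per-group velocity rule, including the weight `phi`, is an assumed LLSO-style form because the exact update equations are not restated here. The function and parameter names are hypothetical, and the two dynamic strategies are not reproduced; a caller could pass a different `elite_ratio` or `n_groups` each generation to mimic them.

```python
import numpy as np


def dgcel_step(
    positions: np.ndarray,   # (N, D) current particle positions
    velocities: np.ndarray,  # (N, D) current particle velocities
    fitness: np.ndarray,     # (N,) objective values, lower is better
    elite_ratio: float,      # hypothetical: fraction of the swarm in the elite set
    n_groups: int,           # number of dimension groups (NDG)
    rng: np.random.Generator,
    phi: float = 0.4,        # hypothetical weight on the second (worse) elite
):
    """Sketch of one DGCEL-style generation (minimization); not the authors' code."""
    n, d = positions.shape
    order = np.argsort(fitness)  # best particle first
    n_elite = max(2, int(round(elite_ratio * n)))
    elite_idx, non_elite_idx = order[:n_elite], order[n_elite:]

    # Elite particles enter the next generation unchanged.
    new_pos, new_vel = positions.copy(), velocities.copy()

    for i in non_elite_idx:
        # Random partition of the D dimensions into n_groups near-equal groups.
        for group in np.array_split(rng.permutation(d), n_groups):
            # Two distinct elites guide this group; a is the better of the two.
            a, b = rng.choice(elite_idx, size=2, replace=False)
            if fitness[b] < fitness[a]:
                a, b = b, a
            r1, r2, r3 = rng.random((3, group.size))
            x = positions[i, group]
            # Assumed LLSO-style velocity rule (illustrative only).
            v = (r1 * velocities[i, group]
                 + r2 * (positions[a, group] - x)
                 + phi * r3 * (positions[b, group] - x))
            new_vel[i, group] = v
            new_pos[i, group] = x + v
    return new_pos, new_vel


if __name__ == "__main__":
    # Toy run on a 100-D sphere function (illustrative settings only).
    rng = np.random.default_rng(1)
    pos = rng.uniform(-100.0, 100.0, size=(50, 100))
    vel = np.zeros_like(pos)
    for _ in range(100):
        fit = np.sum(pos ** 2, axis=1)
        pos, vel = dgcel_step(pos, vel, fit, elite_ratio=0.2, n_groups=10, rng=rng)
    print("best sphere value:", np.sum(pos ** 2, axis=1).min())
```

Because the elites are copied unchanged, the best fitness in the swarm cannot get worse from one generation to the next in this sketch; boundary handling and any further details of the original method are not covered.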

Experiments conducted on the 1000-D CEC’2010 and CEC’2013 large-scale benchmark sets verified the effectiveness of the proposed DGCELSO in comparison with nine state-of-the-art large-scale methods. Experimental results demonstrate that DGCELSO achieves highly competitive or even much better performance than the compared methods in terms of both solution quality and convergence speed.

Author Contributions

Q.Y.: Conceptualization, supervision, methodology, formal analysis, and writing—original draft preparation. K.-X.Z.: Implementation, formal analysis, and writing—original draft preparation. X.-D.G.: Methodology and writing—review and editing. D.-D.X.: Writing—review and editing. Z.-Y.L.: Writing—review and editing, and funding acquisition. S.-W.J.: Writing—review and editing. J.Z.: Conceptualization and writing—review and editing. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported in part by the National Natural Science Foundation of China under Grants 62006124 and U20B2061, in part by the Natural Science Foundation of Jiangsu Province under Project BK20200811, in part by the Natural Science Foundation of the Jiangsu Higher Education Institutions of China under Grant 20KJB520006, in part by the National Research Foundation of Korea (NRF-2021H1D3A2A01082705), and in part by the Startup Foundation for Introducing Talent of NUIST.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Jia, Y.H.; Mei, Y.; Zhang, M. A Two-Stage Swarm Optimizer with Local Search for Water Distribution Network Optimization. IEEE Trans. Cybern. 2021.

- Cao, K.; Cui, Y.; Liu, Z.; Tan, W.; Weng, J. Edge Intelligent Joint Optimization for Lifetime and Latency in Large-Scale Cyber-Physical Systems. IEEE Internet Things J. 2021.

- Chen, W.N.; Tan, D.Z.; Yang, Q.; Gu, T.; Zhang, J. Ant Colony Optimization for the Control of Pollutant Spreading on Social Networks. IEEE Trans. Cybern. 2020, 50, 4053–4065.

- Zuo, T.; Zhang, Y.; Meng, K.; Tong, Z.; Dong, Z.Y.; Fu, Y. A Two-Layer Hybrid Optimization Approach for Large-Scale Offshore Wind Farm Collector System Planning. IEEE Trans. Ind. Inform. 2021, 17, 7433–7444.

- Yang, Q.; Chen, W.N.; Gu, T.; Jin, H.; Mao, W.; Zhang, J. An Adaptive Stochastic Dominant Learning Swarm Optimizer for High-Dimensional Optimization. IEEE Trans. Cybern. 2020, 52, 1960–1976.

- Omidvar, M.N.; Li, X.; Mei, Y.; Yao, X. Cooperative Co-Evolution with Differential Grouping for Large Scale Optimization. IEEE Trans. Evol. Comput. 2014, 18, 378–393.

- Tang, K.; Li, X.; Suganthan, P.; Yang, Z.; Weise, T. Benchmark Functions for the CEC 2010 Special Session and Competition on Large-Scale Global Optimization; Nature Inspired Computation and Applications Laboratory, University of Science and Technology of China: Hefei, China, 2009.

- Li, X.; Tang, K.; Omidvar, M.N.; Yang, Z.; Qin, K. Benchmark Functions for the CEC 2013 Special Session and Competition on Large-Scale Global Optimization; Technical Report; Evolutionary Computation and Machine Learning Group, RMIT University: Melbourne, Australia, 2013.

- Yang, Q.; Li, Y.; Gao, X.-D.; Ma, Y.-Y.; Lu, Z.-Y.; Jeon, S.-W.; Zhang, J. An Adaptive Covariance Scaling Estimation of Distribution Algorithm. Mathematics 2021, 9, 3207.

- Yang, Q.; Chen, W.N.; Gu, T.; Zhang, H.; Yuan, H.; Kwong, S.; Zhang, J. A Distributed Swarm Optimizer with Adaptive Communication for Large-Scale Optimization. IEEE Trans. Cybern. 2020, 50, 3393–3408.

- Omidvar, M.N.; Li, X.; Yao, X. A Review of Population-Based Metaheuristics for Large-Scale Black-Box Global Optimization: Part A. IEEE Trans. Evol. Comput. 2021. in press. Available online: https://ieeexplore.ieee.org/document/9627116 (accessed on 1 January 2022).

- Omidvar, M.N.; Li, X.; Yao, X. A Review of Population-Based Metaheuristics for Large-Scale Black-Box Global Optimization: Part B. IEEE Trans. Evol. Comput. 2021. in press. Available online: https://ieeexplore.ieee.org/document/9627138 (accessed on 1 January 2022).

- Yang, Q.; Xie, H.; Chen, W.; Zhang, J. Multiple Parents Guided Differential Evolution for Large Scale Optimization. In Proceedings of the IEEE Congress on Evolutionary Computation, Vancouver, BC, Canada, 24–29 July 2016; pp. 3549–3556.

- Yang, Q.; Chen, W.N.; Li, Y.; Chen, C.L.P.; Xu, X.M.; Zhang, J. Multimodal Estimation of Distribution Algorithms. IEEE Trans. Cybern. 2017, 47, 636–650.

- Eberhart, R.; Kennedy, J. A New Optimizer Using Particle Swarm Theory. In Proceedings of the International Symposium on Micro Machine and Human Science, Nagoya, Japan, 4–6 October 1995; pp. 39–43.

- Shi, Y.; Eberhart, R. A Modified Particle Swarm Optimizer. In Proceedings of the IEEE International Conference on Evolutionary Computation Proceedings: IEEE World Congress on Computational Intelligence, Anchorage, AK, USA, 4–9 May 1998; pp. 69–73.

- Tang, J.; Liu, G.; Pan, Q. A Review on Representative Swarm Intelligence Algorithms for Solving Optimization Problems: Applications and Trends. IEEE/CAA J. Autom. Sin. 2021, 8, 1627–1643.

- Ren, Z.; Zhang, A.; Wen, C.; Feng, Z. A Scatter Learning Particle Swarm Optimization Algorithm for Multimodal Problems. IEEE Trans. Cybern. 2014, 44, 1127–1140.

- Zhang, J.; Lu, Y.; Che, L.; Zhou, M. Moving-Distance-Minimized PSO for Mobile Robot Swarm. IEEE Trans. Cybern. 2021.

- Villalón, C.L.C.; Dorigo, M.; Stützle, T. PSO-X: A Component-Based Framework for the Automatic Design of Particle Swarm Optimization Algorithms. IEEE Trans. Evol. Comput. 2021.

- Ding, W.; Lin, C.T.; Cao, Z. Deep Neuro-Cognitive Co-Evolution for Fuzzy Attribute Reduction by Quantum Leaping PSO with Nearest-Neighbor Memeplexes. IEEE Trans. Cybern. 2019, 49, 2744–2757.

- Yang, Q.; Hua, L.; Gao, X.; Xu, D.; Lu, Z.; Jeon, S.-W.; Zhang, J. Stochastic Cognitive Dominance Leading Particle Swarm Optimization for Multimodal Problems. Mathematics 2022, 10, 761.

- Bonavolontà, F.; Noia, L.P.D.; Liccardo, A.; Tessitore, S.; Lauria, D. A PSO-MMA Method for the Parameters Estimation of Interarea Oscillations in Electrical Grids. IEEE Trans. Instrum. Meas. 2020, 69, 8853–8865.

- Lan, R.; Zhu, Y.; Lu, H.; Liu, Z.; Luo, X. A Two-Phase Learning-Based Swarm Optimizer for Large-Scale Optimization. IEEE Trans. Cybern. 2020, 51, 6284–6293.

- Yang, Q.; Chen, W.; Deng, J.D.; Li, Y.; Gu, T.; Zhang, J. A Level-Based Learning Swarm Optimizer for Large-Scale Optimization. IEEE Trans. Evol. Comput. 2018, 22, 578–594.

- Cheng, R.; Jin, Y. A Competitive Swarm Optimizer for Large Scale Optimization. IEEE Trans. Cybern. 2015, 45, 191–204.

- Mahdavi, S.; Shiri, M.E.; Rahnamayan, S. Metaheuristics in Large-Scale Global Continues Optimization: A Survey. Inf. Sci. 2015, 295, 407–428.

- Ma, X.; Li, X.; Zhang, Q.; Tang, K.; Liang, Z.; Xie, W.; Zhu, Z. A Survey on Cooperative Co-Evolutionary Algorithms. IEEE Trans. Evol. Comput. 2018, 23, 421–441.

- Li, X.; Yao, X. Cooperatively Coevolving Particle Swarms for Large Scale Optimization. IEEE Trans. Evol. Comput. 2011, 16, 210–224.

- Yang, Q.; Chen, W.; Gu, T.; Zhang, H.; Deng, J.D.; Li, Y.; Zhang, J. Segment-Based Predominant Learning Swarm Optimizer for Large-Scale Optimization. IEEE Trans. Cybern. 2017, 47, 2896–2910.

- Xie, H.Y.; Yang, Q.; Hu, X.M.; Chen, W.N. Cross-Generation Elites Guided Particle Swarm Optimization for Large Scale Optimization. In Proceedings of the IEEE Symposium Series on Computational Intelligence, Athens, Greece, 6–9 December 2016; pp. 1–8.

- Song, G.W.; Yang, Q.; Gao, X.D.; Ma, Y.Y.; Lu, Z.Y.; Zhang, J. An Adaptive Level-Based Learning Swarm Optimizer for Large-Scale Optimization. In Proceedings of the IEEE International Conference on Systems, Man, and Cybernetics, Melbourne, Australia, 17–20 October 2021; pp. 152–159.

- Potter, M.A.; De Jong, K.A. A Cooperative Co-Evolutionary Approach to Function Optimization. In Proceedings of the International Conference on Parallel Problem Solving from Nature, Berlin, Germany, 22–26 September 1994; pp. 249–257.

- Yang, Q.; Chen, W.N.; Zhang, J. Evolution Consistency Based Decomposition for Cooperative Coevolution. IEEE Access 2018, 6, 51084–51097.

- Omidvar, M.N.; Yang, M.; Mei, Y.; Li, X.; Yao, X. DG2: A Faster and More Accurate Differential Grouping for Large-Scale Black-Box Optimization. IEEE Trans. Evol. Comput. 2017, 21, 929–942.

- Sun, Y.; Kirley, M.; Halgamuge, S.K. Extended Differential Grouping for Large Scale Global Optimization with Direct and Indirect Variable Interactions. In Proceedings of the Annual Conference on Genetic and Evolutionary Computation, Madrid, Spain, 11–15 July 2015; pp. 313–320.

- Sun, Y.; Kirley, M.; Halgamuge, S.K. A Recursive Decomposition Method for Large Scale Continuous Optimization. IEEE Trans. Evol. Comput. 2017, 22, 647–661.

- Song, A.; Chen, W.N.; Gong, Y.J.; Luo, X.; Zhang, J. A Divide-and-Conquer Evolutionary Algorithm for Large-Scale Virtual Network Embedding. IEEE Trans. Evol. Comput. 2020, 24, 566–580.

- Deng, H.; Peng, L.; Zhang, H.; Yang, B.; Chen, Z. Ranking-Based Biased Learning Swarm Optimizer for Large-Scale Optimization. Inf. Sci. 2019, 493, 120–137.

- Wang, H.; Liang, M.; Sun, C.; Zhang, G.; Xie, L. Multiple-Strategy Learning Particle Swarm Optimization for Large-Scale Optimization Problems. Complex Intell. Syst. 2021, 7, 1–16.

- Jian, J.R.; Chen, Z.G.; Zhan, Z.H.; Zhang, J. Region Encoding Helps Evolutionary Computation Evolve Faster: A New Solution Encoding Scheme in Particle Swarm for Large-Scale Optimization. IEEE Trans. Evol. Comput. 2021, 25, 779–793.

- Kampourakis, K. Understanding Evolution; Cambridge University Press: Cambridge, UK, 2014.

- Ju, X.; Liu, F. Wind Farm Layout Optimization Using Self-Informed Genetic Algorithm with Information Guided Exploitation. Appl. Energy 2019, 248, 429–445.

- Ju, X.; Liu, F.; Wang, L.; Lee, W.-J. Wind Farm Layout Optimization Based on Support Vector Regression Guided Genetic Algorithm with Consideration of Participation among Landowners. Energy Convers. Manag. 2019, 196, 1267–1281.

- Xia, X.; Gui, L.; Yu, F.; Wu, H.; Wei, B.; Zhang, Y.L.; Zhan, Z.H. Triple Archives Particle Swarm Optimization. IEEE Trans. Cybern. 2020, 50, 4862–4875.

- Liang, J.J.; Qin, A.K.; Suganthan, P.N.; Baskar, S. Comprehensive Learning Particle Swarm Optimizer for Global Optimization of Multimodal Functions. IEEE Trans. Evol. Comput. 2006, 10, 281–295.

- Gong, Y.; Li, J.; Zhou, Y.; Li, Y.; Chung, H.S.; Shi, Y.; Zhang, J. Genetic Learning Particle Swarm Optimization. IEEE Trans. Cybern. 2016, 46, 2277–2290.

- Zhan, Z.; Zhang, J.; Li, Y.; Shi, Y. Orthogonal Learning Particle Swarm Optimization. IEEE Trans. Evol. Comput. 2011, 15, 832–847.

- Van den Bergh, F.; Engelbrecht, A.P. A Cooperative Approach to Particle Swarm Optimization. IEEE Trans. Evol. Comput. 2004, 8, 225–239.

- Mei, Y.; Omidvar, M.N.; Li, X.; Yao, X. A Competitive Divide-and-Conquer Algorithm for Unconstrained Large-Scale Black-Box Optimization. ACM Trans. Math. Softw. 2016, 42, 1–24.

- Yang, M.; Zhou, A.; Li, C.; Yao, X. An Efficient Recursive Differential Grouping for Large-Scale Continuous Problems. IEEE Trans. Evol. Comput. 2021, 25, 159–171.