Abstract

Creating an optimal logic mining model depends strongly on how the learning data are structured. Without an optimal data structure, intelligent systems integrated into logic mining, such as an artificial neural network, tend to converge to a suboptimal solution. This paper proposes a novel logic mining method that integrates supervised learning via association analysis to identify the optimal arrangement of attributes with respect to the given logical rule. By utilizing a Hopfield neural network as an associative memory that stores the information of the logical rule, the optimal logical rule from the correlation analysis is learned and the corresponding optimal induced logical rule can be obtained. In other words, the optimal logical rule increases the chances of the logic mining locating the optimal induced logic that generalizes the datasets. The proposed method is extensively tested on a variety of benchmark datasets with various performance metrics. Based on the experimental results, the proposed supervised logic mining is superior to, or at least competitive with, the existing methods.

MSC: 68T07

1. Introduction

In the area of artificial intelligence (AI), two important perspectives stand out. The first is the applied rule that represents the given problem; this rule is vital in decision making because it explains the nature of the problem. The second is the automation process based on that rule, which leads to neuro-symbolic integration. Both perspectives rely heavily on the practicality of the symbolic rule that governs the AI system. The satisfiability (SAT) perspective from software and hardware system theories is currently one of the most effective methods for bridging the two. SAT offers the promise, and often the reality, of model-checking efforts with feasible industrial applications. Several practical applications of SAT are worth mentioning. Ref. [1] used Boolean SAT, integrated with satisfiability modulo theories (SMT), to tackle a scheduling problem, and the proposed SMT method was reported to outperform other existing methods. Ref. [2] discovered a vesicle traffic network through model checking that incorporates Boolean SAT; the proposed SAT model established a connection between vesicle transport graph connectedness and the underlying rules of SNARE proteins. In another development, [3] developed several SAT formulations for the resource-constrained project scheduling problem (RCPSP). The proposed method was reported to solve various benchmark instances and to outperform existing work in terms of computation time and optimality. SAT formulation is a dynamic language that can represent the problem at hand. Ref. [4] proposed a special SAT formulation for circuit modelling, which reconstructed the circuit configuration with up to 90% accuracy. The application of SAT in very-large-scale integration (VLSI) inspired its extension to pattern reconstruction [5], where the variables in SAT serve as the building blocks of the desired pattern.
The practicality of SAT has motivated researchers to use SAT to govern the structure of an artificial neural network (ANN).

Logic programming in ANN was first proposed by [6]. In that work, logic programming was embedded into the Hopfield neural network (HNN) by minimizing logical inconsistencies. The work also pioneered the Wan Abdullah method, which obtains the synaptic weights by comparing the cost function with the Lyapunov energy function. Ref. [7] further developed logic programming in HNN by implementing Horn satisfiability (HornSAT) as the logical structure of HNN. The proposed network achieved a global minima ratio above 80% but required a high computation time because of the complexity of the learning phase. Since then, logic programming has been extended to other types of ANN. Ref. [8] first proposed logic programming in a radial basis function neural network (RBFNN) by calculating the centre and width of the hidden neurons that correspond to the logical rule. In the proposed method, the dimensionality of the logical rule from input to output can be reduced by implementing a Gaussian activation function. Logic programming in RBFNN was further developed in [9], where the centre and width of the RBFNN are calculated systematically. In another development, [10] proposed a systematic logical rule by implementing the 2-satisfiability logical rule (2SAT) in HNN. This hybrid network was combined with effective learning methods, such as a genetic algorithm [11] and an artificial bee colony [12]; it achieved a global minima ratio above 95% and can sustain a high number of neurons. In another development, [13] proposed a higher-order non-systematic logical rule, namely random k satisfiability (RANkSAT), which consists of random first-, second-, and third-order logical rules. That work critically compared combinations of RANkSAT and demonstrated the capability of non-systematic logical rules to achieve an optimal final state.
The practicality of SAT in HNN was explored in pattern satisfiability [5] and circuit satisfiability [4], where users can visually interpret logic programming in HNN. However, the choice of SAT structure in HNN has so far received very little research attention, despite its practical importance.

Current data mining methods are reported to achieve good accuracy, but the interpretability of their output is poorly understood because of their emphasis on black-box models. In other words, the output makes sense to the AI but not to the user. One of the most useful applications of logic programming in HNN is logic mining, a relatively new approach to extracting the behaviour of a dataset via a logical rule. Logic mining was pioneered by [14], in which the proposed reverse analysis method (RA) extracted individual logical rules that represent the performance of students. The logical rule extracted from the dataset is based on the number of induced Horn logics produced by HNN; thus, there was very limited effort to identify the “best” induced logical rule that represents the dataset. To address this limitation of RA, several studies embedded specific SAT logical rules into HNN. Ref. [15] introduced 3-satisfiability (3SAT) as a logical rule in HNN, creating the first systematic logic mining technique, i.e., the k satisfiability reverse analysis method (kSATRA). This hybrid logic mining has been used to extract logical rules in several fields, such as social media analysis [15] and cardiovascular disease [16]. In another development, a different logical rule, 2SAT, was implemented by [17], who proposed 2SATRA by incorporating the 2SAT logical rule to extract logical rules from a diabetes dataset [17] and a student performance dataset [18]. Ref. [19] used 2SATRA to extract logical rules from football datasets of several established football leagues in the world. The ability of 2SATRA was further tested in e-games, where 2SATRA was used to extract the logical rule that explains simulated games of League of Legends (LOL) [20], achieving an acceptable range of logical accuracy.
The application of logic mining has been extended to several prominent areas, such as extracting price information from commodities [21]. Another interesting development for kSATRA is the incorporation of energy into the induced logic. Ref. [22] proposed an energy-based 2-satisfiability-based reverse analysis method (E2SATRA) for e-recruitment, which reduced suboptimal induced logic and increased the classification accuracy of the network. Despite its growing application in data mining, existing logic mining suffers from a significant drawback: the induced logic it produces is confined to a limited search space. This is due to the positioning of the neurons in the kSAT formulation, which affects the classification ability of 2SATRA. In this case, the optimal choice of the neuron pair in each kSAT clause is crucial to avoid possible overfitting.

Various studies have implemented regression analysis in ANN. Standalone regression analysis is prone to overfitting [23], is easily affected by outliers [24], and is mostly limited to linear relationships [25]. Because of these weaknesses, regression analysis has been used to complement intelligent systems; in most studies, it is applied in a pre-processing layer before the data are processed by the ANN. Ref. [26] combined regression analysis with an RBFNN to form a prediction model for national economic data. Ref. [27] proposed an ANN combined with regression analysis via the mean impact value; the hybrid network identifies and extracts input variables that deal with the irregularity and vitality of Beijing International Airport’s passenger flow dataset. In [28], an ANN was used to predict the water turbidity level by using optical tomography, with the regression analysis value serving as the objective function of the network. Ref. [29] used logistic regression to identify significant microseismic parameters, which were then used to train a simple neural network, resulting in a highly accurate seismic model. In such hybrids, regression analysis acts as a supervisory layer for the ANN, and logistic regression analysis markedly improved the overall performance. Although many studies have confirmed the benefit of logistic regression analysis in classification and prediction, regression analysis has never been used to classify the SAT logical rule. Regression analysis can restructure the logical rule based on the strength of the relationship of each of the k variables in a kSAT clause. In this way, the ANN can learn the correct logical structure, and the probability of achieving a highly accurate induced logical rule is expected to increase considerably.
Nevertheless, relatively few studies have examined the effectiveness of regression in analysing the data features that correspond to kSAT. The choice of the variable pair in each 2SAT clause can be made optimally by implementing regression analysis without altering the cost function.

Unfortunately, there has been no recent effort to discover the optimal variable choice that leads to the true outcome of kSAT. The closest work addressing this issue is [30], which used neuron permutation to obtain the most accurate induced logical rule by considering the neuron arrangement in kSAT. Hence, this paper aims to explore the various possible logical structures in 2SATRA effectively. The proposed logic mining model identifies the optimal neuron pair for each 2SAT clause, forming a new logical formula. Pearson chi-square association analysis is conducted to examine the connectedness of each neuron with respect to the outcome. By doing so, the new 2SAT formula is learned by HNN as the input logic, and the new induced logical rule can be obtained. Thus, the contributions of this paper are as follows:

- (a)

- To formulate a novel supervised learning method that capitalizes on a correlation filter among the variables in the logical rule with respect to the logical outcome;

- (b)

- To embed the obtained supervised logical rule into HNN by minimizing the cost function, which in turn minimizes the final energy;

- (c)

- To develop a novel logic mining model based on the hybrid HNN integrated with the 2-satisfiability logical rule;

- (d)

- To conduct an extensive analysis of the proposed logic mining on various datasets and compare it with the existing state-of-the-art logic mining models.

The effective 2SATRA model incorporating the new supervised learning is compared with existing 2SATRA models on several established datasets. Section 2 presents the motivation of this work, Section 3 describes the satisfiability representation, Section 4 describes satisfiability in the discrete HNN, Section 5 presents the proposed method, and Section 6 describes the experimental setup and discussion, followed by the concluding remarks.

2. Motivation

2.1. Optimal Attribute Selection Strategy

Optimal attribute selection is vital to ensure that HNN learns the correct logical rule during the learning phase. Ref. [30] proposed logic mining that capitalizes on random attribute combinations to create 2SAT logic. In that study, the synaptic weights obtained from 2SAT are based purely on the most frequent logical incidence in the dataset. The main question is: what happens if the 2SAT logical rule selects the wrong attributes? In that case, there is a high possibility that the logic mining learns the wrong synaptic weights, which leads to suboptimal induced logic. A similar observation was made in [31], which proposed 3SAT for induced logic with a heavy focus on random attribute selection. Although such induced logic might be accurate, random attribute selection reduces its interpretability. To solve this issue, the latest study by [30] proposed a permutation operator to optimize the random selection proposed by [20]. The permutation operator increases the accuracy of the induced logic by changing the attributes in the logical formula. Despite the increase in accuracy and other metrics, the interpretability issue remains unsolved, because the random selection still limits the interpretability of the logic learned by HNN. In this paper, we build on the work of [20,30] by constructing the dataset in the form of a 2SAT logical rule with a permutation operator. By selecting the optimal attribute combination for 2SAT, we can explore a larger search space, which leads to optimal induced logic.

2.2. Energy Optimization Strategy

Energy optimization in HNN is vital to ensure that every induced logic produced during the retrieval phase corresponds to the global minimum energy. This raises an important question: why must HNN achieve the global minimum energy? The global minimum energy indicates good agreement between the logic learned during the pre-processing stage and the induced logic obtained during the retrieval phase. Induced logic that achieves the global minimum energy can be interpreted. In contrast, induced logic that only reaches a local minimum energy might achieve good accuracy but is difficult to interpret. The logic mining proposed in [22] focuses mainly on energy stability. The main issue with focusing solely on the global minimum energy is that it limits the possible search space of HNN: the network tends to overfit and to produce more redundant induced logic. This problem worsens when HNN selects the wrong attributes to learn. Non-optimal induced logic lacks interpretability and generalization during the retrieval phase, and the network tends to produce similar induced logic, which lowers accuracy. Another factor that might cause overfitting of the induced logic structure is the monotonic behaviour of HNN, which always converges to the nearest minimum energy. Hence, combining energy optimization with optimal attribute selection is expected to yield results that are optimal and easy to interpret.

2.3. Lack of Effective Metric to Assess the Performance of Logic Mining

Effective metrics are crucial for capturing the actual performance of the induced logic in clustering and classification. In previous studies, the points of assessment and the types of metrics are still shallow and do not represent the performance of logic mining. For instance, [21] reported an error analysis of the learning phase of HNN but did not provide metrics related to the contingency table. As a result, the actual performance of the induced logic is still not well understood. A similar limitation appears in [14], where only the global minima ratio is used to demonstrate the connection between neurons. A local minimum solution signifies that the induced logical rule does not correspond to the learned logic, which contributes to a lack of generalization capability. Hence, when the measurement is based solely on the energy metric, each element of the confusion matrix must also be quantified to show whether the induced logic can carry out the classification task. In addition, the building blocks that lead to intermediate logic are based solely on the obtained synaptic weights, so without synaptic weight analysis, the connections in the induced logic are poorly understood. For instance, the logic mining in [20] does not report the strength of the connections between variables in the induced logic. As a result, there is no way to assess the logical pattern stored in the content addressable memory (CAM). In this paper, comprehensive analyses, such as error, synaptic weight, and statistical analyses, are employed to obtain an overall view of the actual performance of all logic mining models.

3. Satisfiability Representation

SAT is the problem of determining an interpretation that satisfies a given Boolean formula. According to [32], SAT is NP-complete, and it covers a wide range of optimization problems. Extensive research on SAT has led to variants such as 2SAT. In this paper, 2SAT is chosen because it represents a two-dimensional decision-making system. Generally, 2SAT consists of the following properties [19]:

- (a)

- A set of $N$ defined variables, $x_1, x_2, \dots, x_N$, where $x_i \in \{-1, 1\}$ exemplifies false and true, respectively.

- (b)

- A set of literals. A literal can be a variable $x_i$ or its negation $\neg x_i$.

- (c)

- A set of $NC$ clauses, $C_1, C_2, \dots, C_{NC}$. Consecutive clauses are connected by logical AND ($\wedge$), and the two literals in each clause are connected by logical OR ($\vee$).

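Taking properties (a) to (c) into account, $P_{2SAT}$ can be defined explicitly as follows:

$$
P_{2SAT} = \bigwedge_{i=1}^{NC} C_i \tag{1}
$$

where $NC$ is the number of clauses and each $C_i$ is a clause with two literals $y_{ij}$, each being the $j$-th variable $x_{ij}$ of the clause or its negation:

$$
C_i = \bigvee_{j=1}^{2} y_{ij}, \qquad y_{ij} \in \{x_{ij}, \neg x_{ij}\} \tag{2}
$$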

Considering Equations (1) and (2), a simple example of $P_{2SAT}$ with three clauses is given in Equation (3). Each clause must be satisfied by a specific interpretation [10]: if an interpretation leaves any clause unsatisfied, $P_{2SAT}$ evaluates to false, i.e., $P_{2SAT} = -1$. Since $P_{2SAT}$ provides an information storage mechanism and is easy to classify, we implement $P_{2SAT}$ in the ANN as its logical system.
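To make the evaluation rule concrete, the following Python sketch enumerates all bipolar interpretations of a small 2SAT formula and counts those that satisfy it. Because the example formula of Equation (3) is not reproduced here, $(A \vee \neg B) \wedge (\neg C \vee D) \wedge (\neg E \vee \neg F)$ is a hypothetical stand-in, and encoding each literal as a (variable, sign) pair is our own convention, not the paper's.

```python
from itertools import product

# Hypothetical 3-clause formula: (A or not B) and (not C or D) and (not E or not F).
# Each literal is (variable, sign): sign +1 means x, sign -1 means "not x".
P2SAT = [(("A", 1), ("B", -1)),
         (("C", -1), ("D", 1)),
         (("E", -1), ("F", -1))]


def literal_value(state, literal):
    """A literal is true (1) when state[var] * sign == 1, false (-1) otherwise."""
    var, sign = literal
    return state[var] * sign


def evaluate(formula, state):
    """Return 1 if every clause has at least one true literal, else -1."""
    for clause in formula:
        if all(literal_value(state, lit) == -1 for lit in clause):
            return -1  # a single unsatisfied clause falsifies the conjunction
    return 1


variables = sorted({var for clause in P2SAT for var, _ in clause})
satisfying = [bits for bits in product([1, -1], repeat=len(variables))
              if evaluate(P2SAT, dict(zip(variables, bits))) == 1]
print(len(satisfying), "of", 2 ** len(variables), "interpretations satisfy P2SAT")
```

Each clause over two distinct variables is falsified by exactly one of its four interpretations, so three clauses on disjoint variables are satisfied by 3 × 3 × 3 = 27 of the 64 interpretations.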

4. Satisfiability in Discrete Hopfield Neural Network

HNN [33] consists of interconnected neurons without a hidden layer. Each neuron in HNN takes a bipolar state $S_i \in \{1, -1\}$ that represents true and false, respectively. An interesting feature of HNN is its ability to restructure the neuron states until the network reaches a minimum energy state. Hence, HNN achieves the optimal final state when the collection of neurons in the network reaches the lowest value of the energy. The general definition of HNN with the $i$-th activation is given as follows:

$$
S_i = \begin{cases} 1, & \text{if } \sum_{j=1}^{N} W_{ij} S_j \geq \xi \\ -1, & \text{otherwise} \end{cases} \tag{4}
$$

where $\xi$ and $W_{ij}$ represent the threshold and synaptic weight of the network, respectively. Without loss of generality, some studies use $\xi = 0$ as the threshold value. Note that $N$ is the number of 2SAT variables, and $W_{ij}$ is the connection between neurons $i$ and $j$. The idea of implementing $P_{2SAT}$ in HNN (HNN-2SAT) stems from the need for a symbolic rule that can govern the output of the network. The cost function of $P_{2SAT}$ in HNN is given as follows:

$$
E_{P_{2SAT}} = \sum_{i=1}^{NC} \prod_{j=1}^{2} D_{ij} \tag{5}
$$

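where $NC$ is the number of clauses. The clause term $D_{ij}$ is defined as follows [9]:

$$
D_{ij} = \begin{cases} \frac{1}{2}\left(1 - S_x\right), & \text{if the literal is } x \\ \frac{1}{2}\left(1 + S_x\right), & \text{if the literal is } \neg x \end{cases} \tag{6}
$$

where $\neg x$ is the negation of literal $x$ in $C_i$ and $S_x$ is the state of the neuron that represents $x$. Each factor equals 1 only when its literal is false, so $E_{P_{2SAT}} = 0$ exactly when the neuron states associated with $P_{2SAT}$ fully satisfy it. The two variables inside a particular $C_i$ are connected by the synaptic weight $W_{ij}$. Structurally, the synaptic weights are always symmetrical for both second- and third-order logical rules:

$$
W_{ij}^{(2)} = W_{ji}^{(2)}, \qquad W_{ijk}^{(3)} = W_{ikj}^{(3)} = W_{jik}^{(3)} = W_{jki}^{(3)} = W_{kij}^{(3)} = W_{kji}^{(3)} \tag{7}
$$

with no self-connection between neurons:

$$
W_{ii}^{(2)} = W_{jj}^{(2)} = 0 \tag{8}
$$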

Note that Equations (5)–(8) only account for non-redundant logical rules, because logical redundancies diminish the synaptic weights. The goal of learning in HNN is to minimize the logical inconsistencies, that is, to drive $E_{P_{2SAT}}$ to zero so that $P_{2SAT} = 1$. Although the synaptic weights of HNN can be trained by conventional methods, such as Hebbian learning [33], ref. [14] demonstrated that the Wan Abdullah method obtains the optimal synaptic weights with less neuron oscillation than Hebbian learning. In this method, the synaptic weights of an embedded clause are obtained by comparing its cost function with the Lyapunov energy function. For example, for the clause $(A \vee B)$, the comparison gives $W_A = W_B = 0.25$ and $W_{AB} = W_{BA} = -0.25$. During the retrieval phase of HNN-2SAT, the neuron states are updated asynchronously based on the following equation:

$$
h_i = \sum_{j=1, j \neq i}^{N} W_{ij} S_j + W_i, \qquad S_i = \begin{cases} 1, & h_i \geq \xi \\ -1, & h_i < \xi \end{cases} \tag{9}
$$

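where $h_i$ is the local field of neuron $i$, $W_i$ is its first-order synaptic weight, and $S_i$ is the final neuron state with the pre-defined threshold $\xi$. For output squashing, the sigmoid function can be used to provide non-linearity during neuron classification. Ideally, the final neuron states contain the information that leads to $P_{2SAT} = 1$, and the quality of the obtained states can be computed by using the Lyapunov energy function:

$$
H_{P_{2SAT}} = -\frac{1}{2}\sum_{i=1}^{N}\sum_{j=1, j \neq i}^{N} W_{ij} S_i S_j - \sum_{i=1}^{N} W_i S_i \tag{10}
$$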

According to [33], the symmetry of the synaptic weights is a sufficient condition for the existence of the Lyapunov function. Hence, the value of $H_{P_{2SAT}}$ in Equation (10) decreases monotonically as the network evolves. The absolute minimum energy $H_{P_{2SAT}}^{\min}$ can be pre-determined by substituting an interpretation that leads to $P_{2SAT} = 1$ into Equation (10). In this case, if the obtained neuron state satisfies $\left|H_{P_{2SAT}} - H_{P_{2SAT}}^{\min}\right| \leq Tol$ for a pre-defined tolerance $Tol$, the final neuron state has achieved the global minimum energy. Note that the current bipolar convention $S_i \in \{1, -1\}$ can be converted to binary $\{1, 0\}$ by implementing a different Lyapunov function, as coined by [6].
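The learning and retrieval steps of this section can be sketched in Python as follows. This is a minimal illustration rather than the authors' implementation: it assumes the forms of Equations (5), (6), (9), and (10) given above, a threshold $\xi = 0$, a hypothetical formula $(A \vee B) \wedge (\neg C \vee D)$, and an assumed tolerance `TOL`. The weights come from matching each clause cost $\frac{1}{4}(1 - s_a S_a)(1 - s_b S_b)$, with $s = 1$ for $x$ and $s = -1$ for $\neg x$, against the terms of Equation (10), which is the idea behind the Wan Abdullah method.

```python
import random
from itertools import product

# Hypothetical formula (A or B) and (not C or D). Each literal is (variable, sign):
# sign +1 stands for x and sign -1 for "not x" (an encoding chosen for this sketch).
FORMULA = [(("A", 1), ("B", 1)), (("C", -1), ("D", 1))]


def wan_abdullah_weights(formula):
    """Match each clause cost 1/4 (1 - s_a S_a)(1 - s_b S_b) with the Lyapunov terms
    -W_ab S_a S_b - W_a S_a - W_b S_b; the constant 1/4 is dropped."""
    w1, w2 = {}, {}
    for (a, sa), (b, sb) in formula:
        w1[a] = w1.get(a, 0.0) + 0.25 * sa
        w1[b] = w1.get(b, 0.0) + 0.25 * sb
        key = tuple(sorted((a, b)))  # one entry per symmetric pair (W_ab = W_ba)
        w2[key] = w2.get(key, 0.0) - 0.25 * sa * sb
    return w1, w2


def cost(formula, S):
    """Cost function (5): sum over clauses of the product of 1/2 (1 - s * S_x)."""
    total = 0.0
    for clause in formula:
        term = 1.0
        for var, sign in clause:
            term *= 0.5 * (1 - sign * S[var])
        total += term
    return total


def energy(w1, w2, S):
    """Lyapunov energy (10); the 1/2 double sum equals one sum over unordered pairs."""
    pair_sum = sum(w * S[a] * S[b] for (a, b), w in w2.items())
    return -pair_sum - sum(w * S[i] for i, w in w1.items())


def local_field(w1, w2, S, i):
    """h_i = sum_j W_ij S_j + W_i, with no self-connection."""
    h = w1[i]
    for (a, b), w in w2.items():
        if a == i:
            h += w * S[b]
        elif b == i:
            h += w * S[a]
    return h


def retrieve(w1, w2, S, threshold=0.0, max_sweeps=100):
    """Asynchronous updates (9): S_i = 1 if h_i >= threshold else -1, until stable."""
    S = dict(S)
    for _ in range(max_sweeps):
        changed = False
        for i in S:
            new_state = 1 if local_field(w1, w2, S, i) >= threshold else -1
            if new_state != S[i]:
                S[i], changed = new_state, True
        if not changed:
            break
    return S


w1, w2 = wan_abdullah_weights(FORMULA)
names = sorted(w1)

# For every interpretation, the energy equals the cost minus NC/4.
for bits in product([1, -1], repeat=len(names)):
    S = dict(zip(names, bits))
    assert abs(energy(w1, w2, S) - (cost(FORMULA, S) - len(FORMULA) / 4)) < 1e-12

# Absolute minimum energy: substitute one interpretation that satisfies every clause.
H_MIN = energy(w1, w2, {"A": 1, "B": -1, "C": -1, "D": -1})
TOL = 1e-3  # assumed tolerance for the global-minimum check

rng = random.Random(0)
final = retrieve(w1, w2, {v: rng.choice([1, -1]) for v in names})
print(final, energy(w1, w2, final), abs(energy(w1, w2, final) - H_MIN) <= TOL)
```

Because the energy differs from the cost only by the constant $NC/4$ under this weight convention, every interpretation that satisfies all clauses reaches the same minimum $-NC/4$, which is why substituting a single satisfying interpretation is enough to fix $H_{P_{2SAT}}^{\min}$.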

5. Proposed Method

2SATRA is a logic mining method that extracts a logical rule from a dataset. The idea behind 2SATRA is to find the optimal logical rule of the form in Equation (1), which corresponds to the dynamic system of Equation (9). In the conventional 2SATRA proposed by [20], the variables are chosen randomly, which leads to poor-quality induced logic: the neurons are arranged randomly before the learning of HNN takes place. In this section, chi-square analysis is used during the pre-processing stage. The aim of the association method is to assign to each clause the two best neurons with respect to the outcome. These neurons take part in the learning phase of HNN-2SAT, which leads to better induced logic. In other words, an additional optimization layer is added to reduce the pre-training effort needed for 2SATRA to find the best logical rule.
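As a hedged illustration of the association step, the sketch below computes a Pearson chi-square P value between each bipolar attribute and a bipolar outcome, keeps the attributes whose P value falls below a threshold, and pairs them in rank order to form 2SAT clauses. Equation (11) is not reproduced in this text, so the threshold `alpha = 0.05`, the rank-order pairing, and the function names are assumptions. The P value uses the one-degree-of-freedom identity $P = \operatorname{erfc}\left(\sqrt{\chi^2 / 2}\right)$ without continuity correction; SciPy's `scipy.stats.chi2_contingency` applies Yates' correction to 2×2 tables by default, so its values would differ.

```python
import math


def chi_square_p(x, y):
    """Pearson chi-square test of independence between two bipolar lists (2x2 table,
    no continuity correction). For one degree of freedom, P = erfc(sqrt(chi2 / 2))."""
    a = sum(1 for u, v in zip(x, y) if u == 1 and v == 1)
    b = sum(1 for u, v in zip(x, y) if u == -1 and v == 1)
    c = sum(1 for u, v in zip(x, y) if u == 1 and v == -1)
    d = sum(1 for u, v in zip(x, y) if u == -1 and v == -1)
    n = a + b + c + d
    denom = (a + b) * (c + d) * (a + c) * (b + d)
    if denom == 0:
        return 1.0  # a constant attribute or outcome shows no association
    chi2 = n * (a * d - b * c) ** 2 / denom
    return math.erfc(math.sqrt(chi2 / 2))


def select_clauses(attributes, outcome, alpha=0.05, max_clauses=None):
    """Hypothetical selection rule: rank attributes by P value against the outcome,
    keep those with P < alpha, and pair them in rank order into 2SAT clauses.
    An empty list means no attribute passed the association threshold."""
    p_values = [chi_square_p(column, outcome) for column in attributes]
    ranked = sorted(range(len(attributes)), key=lambda k: p_values[k])
    kept = [k for k in ranked if p_values[k] < alpha]
    pairs = [(kept[i], kept[i + 1]) for i in range(0, len(kept) - 1, 2)]
    return pairs if max_clauses is None else pairs[:max_clauses]


# Toy data: x0 copies the outcome, x3 negates it, x1 is independent, x2 is partly related.
outcome = [1, 1, 1, 1, -1, -1, -1, -1]
x0 = list(outcome)
x1 = [1, -1, 1, -1, 1, -1, 1, -1]
x2 = [1, 1, 1, -1, -1, -1, -1, 1]
x3 = [-v for v in outcome]
print(select_clauses([x0, x1, x2, x3], outcome))
```

A negatively associated attribute (`x3`) is kept as well, since the chi-square test measures the strength of association regardless of its direction; an empty result corresponds to the case where the text states that the network falls back to conventional kSATRA.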

Let each neuron represent an attribute of the dataset, with each neuron converted into a bipolar interpretation $S_i \in \{1, -1\}$. 2SATRA must then select the neurons that will be learned by HNN-2SAT. Once the structure of the learning logic is taken into account, the number of possible neuron permutations grows quickly with the number of attributes. By considering the relationship between the outcome and each neuron, we can optimally select the pair of neurons for each clause $C_i$. The selection for each $C_i$ is given by Equation (11).

In Equation (11), the P value is computed between the outcome and each candidate neuron; the selected pair records the minimum P value, which must fall below a threshold that is pre-defined by the network. Note that a pair never consists of the same neuron twice, since there is no self-connection. Considering the best- and worst-case scenarios, a neuron is chosen at random under a pre-defined condition on the P value. If the examined neurons do not achieve the pre-determined association, HNN-2SAT resets the search space to neurons that fulfil the association threshold. Hence, by using Equation (11), the proposed 2SATRA is able to learn the early features of the dataset. After the right set of neurons for $P_{2SAT}$ is obtained, the dataset is converted into a bipolar representation, as given in Equation (12).

Note that we only consider second-order clauses for each clause in $P_{2SAT}$. Hence, the collection of clause interpretations that lead to a positive outcome in the learning data is segregated. By counting the interpretations that lead to a positive outcome, the optimum logic is obtained as given in Equation (13).

In Equation (13), the count is taken over the interpretations that lead to a positive outcome. Hence, the logical features of the optimum logic can be learned by obtaining the synaptic weights of HNN. In this case, the cost function in Equation (5) that corresponds to the optimum logic is compared with the Lyapunov energy function in Equation (10). By using Equation (9), we obtain the final neuron states.
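One possible reading of the counting step behind Equation (13) is sketched below: among the learning instances with a positive outcome, it finds the most frequent bipolar pattern of the selected attribute pairs and maps each state to a literal (1 to $x$, −1 to $\neg x$). Since Equation (13) is not reproduced here, this mapping, the tie-breaking rule (the first pattern encountered, following `collections.Counter.most_common`), and the function name are assumptions.

```python
from collections import Counter


def optimum_logic(rows, outcome, pairs):
    """Among instances with outcome 1, find the most frequent bipolar pattern of the
    selected attribute pairs and turn each state into a literal (1 -> x, -1 -> not x).
    Ties go to the pattern encountered first, as in Counter.most_common."""
    patterns = Counter(
        tuple((row[a], row[b]) for a, b in pairs)
        for row, y in zip(rows, outcome)
        if y == 1
    )
    if not patterns:
        return None, 0
    best, count = patterns.most_common(1)[0]
    # Literals are (attribute index, sign); sign -1 marks a negated attribute.
    clauses = [((a, sa), (b, sb)) for (a, b), (sa, sb) in zip(pairs, best)]
    return clauses, count


rows = [[1, -1, 1, 1], [1, -1, 1, 1], [1, 1, -1, 1], [-1, -1, 1, -1]]
outcome = [1, 1, 1, -1]
clauses, count = optimum_logic(rows, outcome, [(0, 1), (2, 3)])
print(clauses, count)
```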

Since the proposed HNN-2SAT accepts only optimal final neuron states, the quality of each final state is verified using its Lyapunov energy. Final states that lead to local minima are not considered. Hence, the induced logic is classified as follows:

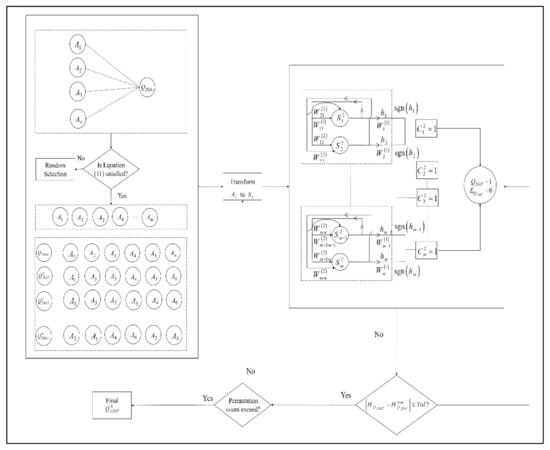

where the energy is obtained from Equation (10). If two neurons do not have a strong association, they are not considered. Thus, if the association value of every neuron fails to reach the threshold, the proposed network reduces to the conventional kSATRA proposed by [21,31]. Figure 1 shows the implementation of the proposed supervised logic mining model (S2SATRA), and Algorithm 1 shows its pseudo code.
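The classification step can be illustrated with a short sketch, assuming that a final neuron state is mapped to literals in the same way as the learned logic (1 to $x$, −1 to $\neg x$) and that a test instance is predicted positive when it satisfies every induced clause. The acceptance test mirrors the global-minimum check on the Lyapunov energy; its tolerance form, the layout of `final_state`, and all function names are hypothetical.

```python
def induced_logic(final_state, pairs):
    """Map a final bipolar state to clauses: S = 1 gives x, S = -1 gives not x.
    `final_state` maps attribute index -> neuron state (hypothetical layout)."""
    return [((a, final_state[a]), (b, final_state[b])) for a, b in pairs]


def predict(logic, row):
    """Predict 1 if every induced clause has a true literal for this instance, else -1."""
    satisfied = all(any(row[k] * sign == 1 for k, sign in clause) for clause in logic)
    return 1 if satisfied else -1


def accept(energy, h_min, tol=1e-3):
    """Keep a final state only if its energy is within tol of the absolute minimum."""
    return abs(energy - h_min) <= tol


logic = induced_logic({0: 1, 1: -1, 2: 1, 3: 1}, [(0, 1), (2, 3)])
test_rows = [[-1, -1, -1, 1], [1, 1, -1, -1]]
print(logic, [predict(logic, row) for row in test_rows])
```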

| Algorithm 1. Pseudo code of the Proposed S2SATRA. | |
|---|---|
| Input: | Set all attributes with respect to the outcome. |
| Output: | The best induced logic. |
| 1 | Begin |
| 2 | Initialize algorithm parameters; |
| 3 | Define the attributes for … with respect to the outcome; |
| 4 | Find the correlation value between each attribute and the outcome; |
| 5 | for … do |
| 6 | if Equation (11) is satisfied then |
| 7 | Assign … as …, and continue; |
| 8 | while … do |
| 9 | Using the found attributes, find the optimum logic using Equation (13); |
| 10 | Check the clause satisfaction for …; |
| 11 | Compute … using Equation (10); |
| 12 | Compute the synaptic weights associated with … using the WA method; |
| 13 | Initialize the neuron states; |
| 14 | for … |
| 15 | Compute … using Equation (9); |
| 16 | Convert … to the logical form using Equation (14); |
| 17 | Evaluate … by using Equation (10); |
| 18 | if Condition (15) is satisfied then |
| 19 | Convert … to induced logic …; |
| 20 | Compare the outcome of … with … and continue; |
| 21 | …; |
| 22 | end for |
| 23 | …; |
| 24 | end while |
| 25 | end for |
| 26 | End |

Figure 1.

The implementation of the proposed S2SATRA.

6. Experiment and Discussion

6.1. Experiment Setup

In this section, we describe the components of the experiments. The purpose of the experiments is to elucidate how the different logic mining mechanisms arrive at the logic to be learned before it is learned by HNN. To ensure reproducibility, the experiments are set up as follows.

6.1.1. Benchmark Datasets

In this experiment, 12 publicly available datasets are obtained from the UCI repository https://archive.ics.uci.edu/mL/datasets.php (accessed on 10 December 2021). These datasets are widely used in the classification field and are representative of practical classification problems. The details of the datasets are summarized in Table 1.

Table 1.

List of datasets.

To avoid possible field bias, the datasets range from the sciences to the social sciences. The choice of datasets is based on two criteria. First, we only select datasets that contain more than 100 instances, to preserve the statistical properties of the distribution. For example, we avoid the balloon datasets because their number of instances is too small to assess the learning phase of the proposed model. Second, we only select datasets that contain more than six attributes, to check the effectiveness of the proposed model in adopting optimal attribute selection; with few attributes, the experiment could not assess the effectiveness of association analysis and permutation. Note that the data states are stored in neurons using a bipolar representation, and each state represents the behaviour of the dataset with respect to the outcome. For data normalization, k-means clustering [34] is used to convert continuous attributes into 1 and −1. For datasets that contain categorical data, both the proposed model and the existing models randomly select the bipolar state. Since the number of missing values in all datasets is negligible, each missing value is replaced with a random neuron state. The experiment employs a train-test split in which 60% of each dataset is used for training and 40% for testing [31]. Note that multi-fold validation was not implemented because we wanted the logic learned by HNN to have the same starting point for all logic mining models. Multi-fold validation would eliminate this common point of assessment during the training phase of logic mining, making a fair comparison among the logic mining models impossible.
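The preprocessing described above can be sketched as follows. The sketch assumes a one-dimensional k-means with k = 2 per continuous attribute, maps the cluster with the larger centroid to 1, replaces missing entries (`None`) with a random bipolar state, and shuffles once with a fixed seed before the 60/40 split; the seed, the shuffling, and the function names are our assumptions rather than details reported for the experiment.

```python
import random


def kmeans_bipolar(values, iters=100):
    """1-D k-means with k = 2; the cluster with the larger centroid maps to 1."""
    lo, hi = min(values), max(values)
    if lo == hi:
        return [1] * len(values)  # constant column: mapped to 1 by assumption
    c_lo, c_hi = float(lo), float(hi)
    for _ in range(iters):
        low = [v for v in values if abs(v - c_lo) <= abs(v - c_hi)]
        high = [v for v in values if abs(v - c_lo) > abs(v - c_hi)]
        new_lo = sum(low) / len(low) if low else c_lo
        new_hi = sum(high) / len(high) if high else c_hi
        if (new_lo, new_hi) == (c_lo, c_hi):
            break  # assignments no longer move the centroids
        c_lo, c_hi = new_lo, new_hi
    return [1 if abs(v - c_hi) < abs(v - c_lo) else -1 for v in values]


def fill_missing(column, rng):
    """Replace missing entries (None) with a random bipolar neuron state."""
    return [rng.choice([1, -1]) if v is None else v for v in column]


def train_test_split(rows, train_fraction=0.6, seed=0):
    """Shuffle once with a fixed seed, then take 60% for training and 40% for testing."""
    rows = list(rows)
    random.Random(seed).shuffle(rows)
    cut = round(train_fraction * len(rows))
    return rows[:cut], rows[cut:]


print(kmeans_bipolar([1.0, 1.2, 0.9, 8.0, 8.5, 9.1]))
train, test = train_test_split(range(10))
print(len(train), len(test))
```

Fixing the seed means every logic mining model receives the same training portion, which is consistent with the single shared starting point the text gives as the reason for not using multi-fold validation.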

6.1.2. Performance Metrics

Several performance metrics were selected to measure the robustness of the proposed method compared with existing work, and we divide them into a few groups. The error evaluation consists of standard error metrics, namely the root mean square error (RMSE) and the mean absolute error (MAE), formulated as follows:

$$
\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(y_i - \hat{y}_i\right)^2}, \qquad \mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|y_i - \hat{y}_i\right|
$$

where $y_i$ is the state of the $i$-th test instance, $\hat{y}_i$ is the corresponding outcome produced by the induced logic, and $n$ is the number of test instances. The best logic mining model produces the induced logic with the lowest error. Next, standard classification metrics, such as accuracy, F-score, precision, and sensitivity, are used in the experiment. According to [35], sensitivity (Se) measures how well a test correctly produces a positive result for instances that have a specific condition:

$$
\mathrm{Se} = \frac{TP}{TP + FN}
$$

where $TP$ (true positive) is the number of positive instances that are correctly classified, $FN$ (false negative) is the number of positive instances that are incorrectly classified, $TN$ (true negative) is the number of negative instances that are correctly classified, and $FP$ (false positive) is the number of negative instances that are incorrectly classified as positive.

Meanwhile, precision measures the predictive ability of the algorithm: of the instances predicted as positive, it gives the proportion that are actually positive. Precision (Pr) is calculated as follows:

$$
\mathrm{Pr} = \frac{TP}{TP + FP}
$$

Accuracy (Acc) is the most common metric for determining classification performance. It measures the proportion of instances that are categorized correctly:

$$
\mathrm{Acc} = \frac{TP + TN}{TP + TN + FP + FN}
$$

As stated by [36], the F-score reflects the highest probability of a correct result, explicitly representing the ability of the algorithm. The F1-score is the harmonic mean of precision and sensitivity:

$$
F_1 = \frac{2 \times \mathrm{Pr} \times \mathrm{Se}}{\mathrm{Pr} + \mathrm{Se}}
$$

Next, the Matthews correlation coefficient (MCC) is used to examine the performance of logic mining based on the eight major derived ratios from the combination of all components of a confusion matrix:

$$
\mathrm{MCC} = \frac{TP \times TN - FP \times FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}
$$

MCC is regarded as a good metric that represents the global model quality and can be used for classes of different sizes [37].
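For reference, the metrics above can be computed from bipolar outcome vectors as in the sketch below, where 1 is treated as the positive class. RMSE and MAE are computed directly on the bipolar states, so each misclassified instance contributes an error of 2; this choice, and returning 0 when a denominator vanishes, are assumptions rather than conventions stated in the text.

```python
import math


def confusion(y_true, y_pred):
    """Count TP, FN, TN, FP for bipolar labels, with 1 as the positive class."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == -1)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == -1 and p == -1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == -1 and p == 1)
    return tp, fn, tn, fp


def metrics(y_true, y_pred):
    """Sensitivity, precision, accuracy, F1, MCC, RMSE, and MAE for bipolar outcomes."""
    tp, fn, tn, fp = confusion(y_true, y_pred)
    n = len(y_true)
    se = tp / (tp + fn) if tp + fn else 0.0
    pr = tp / (tp + fp) if tp + fp else 0.0
    acc = (tp + tn) / n
    f1 = 2 * pr * se / (pr + se) if pr + se else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    rmse = math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / n)
    mae = sum(abs(t - p) for t, p in zip(y_true, y_pred)) / n
    return {"Se": se, "Pr": pr, "Acc": acc, "F1": f1, "MCC": mcc, "RMSE": rmse, "MAE": mae}


m = metrics([1, 1, 1, -1, -1, -1], [1, 1, -1, -1, -1, 1])
print(m)
```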

It is worth mentioning that this is our first attempt to evaluate logic mining with various performance metrics; in [20,22], the only metrics used are accuracy and testing error.

6.1.3. Baseline Methods

Since the main focus of this paper is to examine the performance of the induced logic produced by S2SATRA, we limit our comparison to methods that produce induced logic. Although we acknowledge the capability of existing classification models, such as random forest and decision tree, we do not compare S2SATRA with them, because they do not produce a SAT-based induced logical rule that classifies the dataset. For consistency, all experiments employ the same type of logical rule, i.e., $P_{2SAT}$. The proposed S2SATRA is compared with the existing logic mining models, namely 2SATRA [20], the energy-based 2-satisfiability reverse analysis method (E2SATRA) [22], the 2-satisfiability reverse analysis method with permutation element (P2SATRA) [30], and the state-of-the-art reverse analysis method (RA) [14]. The implementation of each logic mining model is described below.

- (a)

- The conventional 2SATRA model proposed by [20] utilizes integrated with the Wan Abdullah method. The determination of follows Equation (13), and the selected attributes are randomized. During the retrieval phase, HNN-2SAT retrieves the optimal that leads to the optimal induced logic, which in turn leads to potential generalization of the datasets. There is no verification layer to check whether the final state produced corresponds to the global minimum energy.

- (b)

- In E2SATRA [22], the Lyapunov energy function in Equation (10) is used to verify the . The final state of the HNN converges to the nearest minimum solution, and any that achieves only local minimum energy is filtered out during the retrieval phase of HNN-2SAT. However, the dataset generalization of E2SATRA does not consider optimal attribute selection.

- (c)

- In P2SATRA [30], a permutation operator is used to permute the attributes in . The permutation operator explores the search space related to the chosen attributes. Note that redundant permutations are not considered during attribute selection. The retrieval phase of P2SATRA has the same properties as that of conventional 2SATRA.

- (d)

- For RA, proposed by [14], we implement a version that produces only logic with the HornSAT property [7] while still maintaining two attributes per . To make RA comparable with our proposed method, calibration is required. The main calibration relative to the original RA concerns the number of produced from the datasets: instead of assigning a neuron to each instance, we assign each neuron to an attribute. Neuron redundancy is also introduced to avoid a net-zero synaptic weight.
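The exact permutation operator of P2SATRA [30] is not specified in this section. The following Python sketch assumes that a "redundant permutation" is one that differs only in clause order or in the order of the two attributes inside a clause (both conjunction and disjunction are commutative). Under that assumption, the non-redundant arrangements of 2k attributes into k clauses are exactly the ways of pairing them, of which there are (2k)!/(2^k k!). The helper names are hypothetical, literal polarity is ignored, and the default cap of 1000 arrangements mirrors the "up to 1000 permutation/run" reported in the error analysis.

```python
from itertools import islice
from typing import Iterator, List, Sequence, Tuple


def pairings(attrs: Sequence[str]) -> Iterator[List[Tuple[str, str]]]:
    """Yield every grouping of attrs into 2-attribute clauses exactly once.

    Assumption: clause order and the order inside a clause are redundant,
    so each arrangement is generated once and never needs de-duplication."""
    if len(attrs) % 2:
        raise ValueError("2SAT clauses need an even number of attributes")
    if not attrs:
        yield []
        return
    first, rest = attrs[0], list(attrs[1:])
    for k, partner in enumerate(rest):
        # Pair the first attribute with each partner, then pair up the rest.
        remaining = rest[:k] + rest[k + 1:]
        for tail in pairings(remaining):
            yield [(first, partner)] + tail


def permutation_operator(attrs: Sequence[str], max_permutations: int = 1000) -> List[List[Tuple[str, str]]]:
    # Hypothetical operator: explore at most max_permutations arrangements per run.
    return list(islice(pairings(attrs), max_permutations))


for arrangement in permutation_operator(list("ABCD")):
    print(arrangement)
```

For four attributes A–D this yields the three arrangements (A∨B)∧(C∨D), (A∨C)∧(B∨D), and (A∨D)∧(B∨C). Generating pairings directly avoids enumerating all n! orderings and filtering duplicates afterwards.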

During the learning phase, learning optimization is implemented to ensure that the synaptic weights obtained are purely due to the HNN. Note that effective synaptic weight management changes the final state of the HNN, leading to a different . Since the HNN has a recurrent learning property [33], the neurons change state until or until the learning threshold is reached. According to [14], if the learning of exceeds the proposed , the HNN uses the current optimal synaptic weights for the retrieval phase. During the retrieval phase, the neuron states are initially randomized to reduce possible bias. Unlike [22,31], no noise function is added, because the main objective of this experiment is to investigate which type of attributes retrieves the most optimal final . To obtain consistent results across all 2SATRA models, the only squashing function employed by the neurons is the hyperbolic activation function in [38]. By considering only one fixed learning rule, we can examine the effect of supervised learning on the 2SATRA model. Tables 1–5 list the parameters involved in the experiment.
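The learning and retrieval steps described above can be sketched in Python. This is a minimal illustration under stated assumptions, not the authors' implementation: neurons are bipolar (±1); each 2SAT clause (x ∨ y) is assigned the inconsistency cost (1/4)(1 − x)(1 − y), which is our reading of the Wan Abdullah method; the Lyapunov energy is H = −½ΣΣ W_ij S_i S_j − Σ W_i S_i, whose minimum H_min = −(number of clauses)/4 is reached when every clause is satisfied; and the hyperbolic tangent is assumed as the squashing function, with the sign of tanh(h_i) giving the new state. Matching the expanded cost term by term gives W_A = W_B = 1/4 and W_AB = −1/4 for a clause (A ∨ B), with signs adjusted for negated literals. The `is_global` check plays the role of an E2SATRA-style energy filter; all function names are hypothetical.

```python
import math
import random
from typing import List, Sequence, Tuple

Literal = Tuple[int, bool]        # (neuron index, True for a positive literal)
Clause = Tuple[Literal, Literal]  # a 2SAT clause (x OR y) over two distinct neurons


def wan_abdullah_2sat(clauses: Sequence[Clause], n: int):
    """Weights from the assumed clause cost (1/4)(1 - x)(1 - y), matched against
    H = -1/2 * sum_ij W[i][j] S_i S_j - sum_i b[i] S_i (constant term dropped)."""
    W = [[0.0] * n for _ in range(n)]
    b = [0.0] * n  # first-order weights
    for (i, pos_i), (j, pos_j) in clauses:
        si, sj = (1 if pos_i else -1), (1 if pos_j else -1)  # literal value = si * S_i
        b[i] += si / 4
        b[j] += sj / 4
        W[i][j] -= si * sj / 4  # symmetric second-order weight, zero diagonal
        W[j][i] -= si * sj / 4
    return W, b


def energy(W, b, S) -> float:
    n = len(S)
    quad = sum(W[i][j] * S[i] * S[j] for i in range(n) for j in range(n))
    return -0.5 * quad - sum(b[i] * S[i] for i in range(n))


def retrieve(W, b, rng: random.Random, max_sweeps: int = 100) -> List[int]:
    n = len(b)
    S = [rng.choice((-1, 1)) for _ in range(n)]  # randomized initial state
    for _ in range(max_sweeps):
        changed = False
        for i in range(n):  # asynchronous update, one neuron at a time
            h = sum(W[i][j] * S[j] for j in range(n)) + b[i]  # local field
            new = 1 if math.tanh(h) >= 0 else -1  # tanh squashing, then threshold
            if new != S[i]:
                S[i], changed = new, True
        if not changed:  # stable state reached
            break
    return S


def is_global(W, b, S, n_clauses: int, tol: float = 1e-9) -> bool:
    # Energy filter: keep only final states at H_min = -n_clauses / 4.
    return abs(energy(W, b, S) - (-n_clauses / 4)) <= tol


clauses = [((0, True), (1, True)), ((2, False), (3, True))]  # (A or B) and (not C or D)
W, b = wan_abdullah_2sat(clauses, 4)
S = retrieve(W, b, random.Random(0))
print(S, energy(W, b, S), is_global(W, b, S, len(clauses)))
```

For (A ∨ B) ∧ (¬C ∨ D), every random start converges to S = [1, 1, 1, 1] with H = −0.5 = H_min, so the state passes the energy filter; an unsatisfying state such as [−1, −1, 1, −1] has H = 1.5 and would be rejected.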

Table 2.

List of parameters in S2SATRA.

Table 3.

List of parameters in E2SATRA [22].

Table 4.

List of parameters in 2SATRA [20].

Table 5.

List of parameters in P2SATRA [30].

6.1.4. Experimental Design

All simulations were implemented using Dev C++ Version 5.11 (Bloodshed Company, USA) on Windows 10 (Microsoft, USA), with 2 GB of RAM and an Intel Core i3 processor (Intel, USA) as the workstation. For the association analysis, is obtained using IBM SPSS Statistics Version 27 (IBM, New York, NY, USA). All the experiments were run on the same device to avoid possible bad sectors during the simulation. Each 2SATRA model undergoes 10 independent runs to reduce the bias caused by the random initialization of the neuron states.

7. Results and Discussion

7.1. Synaptic Weight Analysis

Figure 1 demonstrates that the optimal 2SATRA model requires a pre-processing structure for the neurons before the can be learned by the HNN. Currently available 2SATRA models optimize logic extraction from the dataset without considering the optimal . Hence, the mechanism that optimizes the neuron relationship before learning occurs remains unclear. Identifying a specific pair of neurons for will help the logic mining model obtain the optimal induced logic.

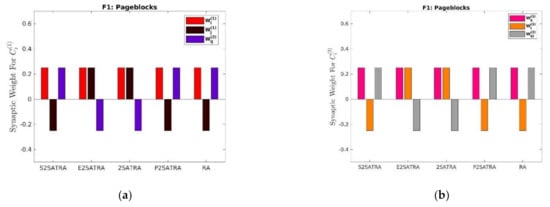

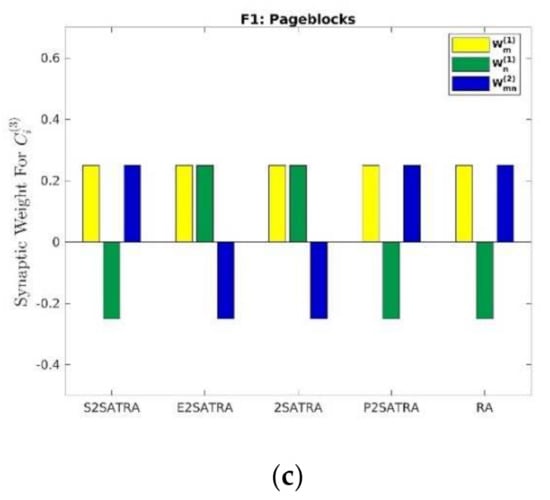

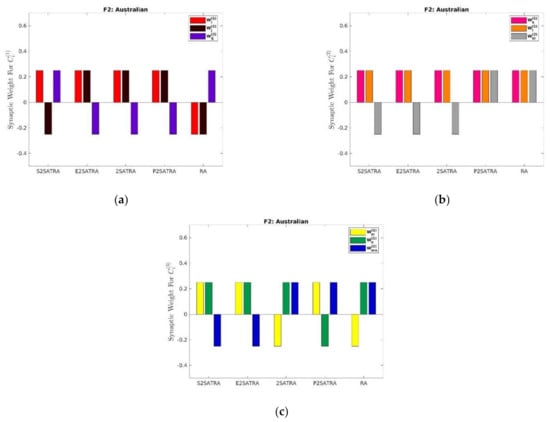

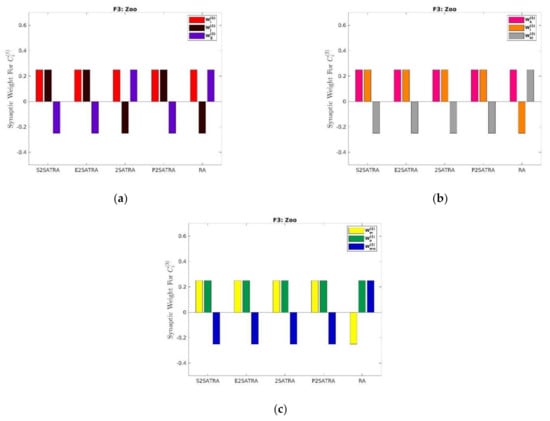

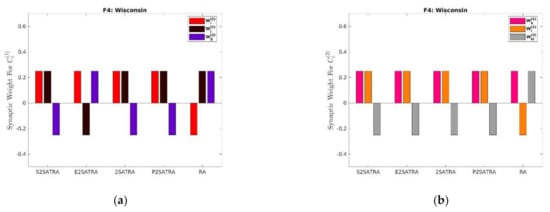

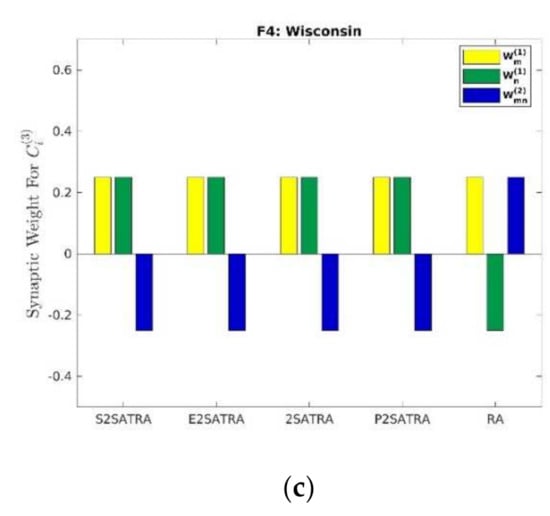

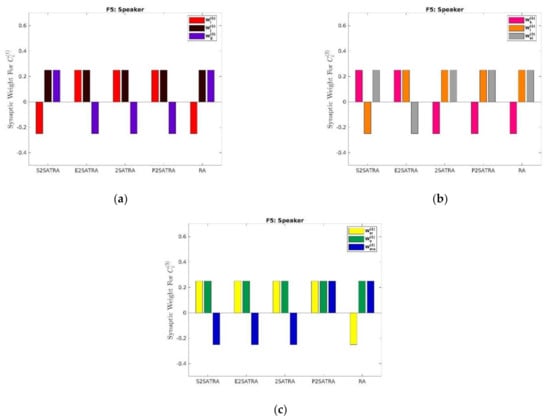

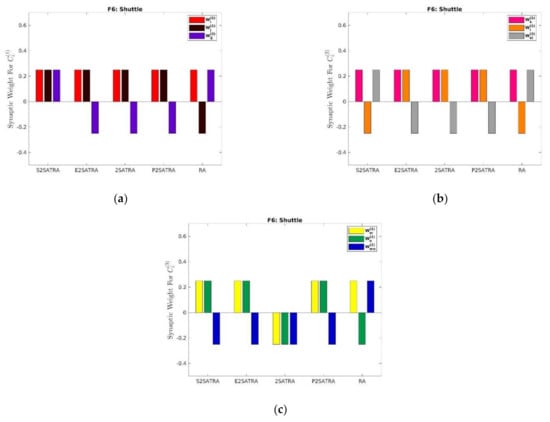

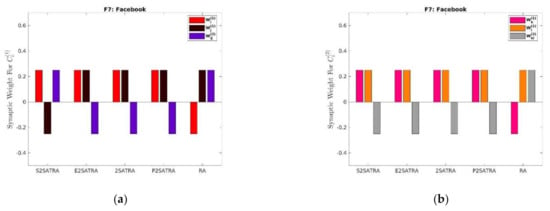

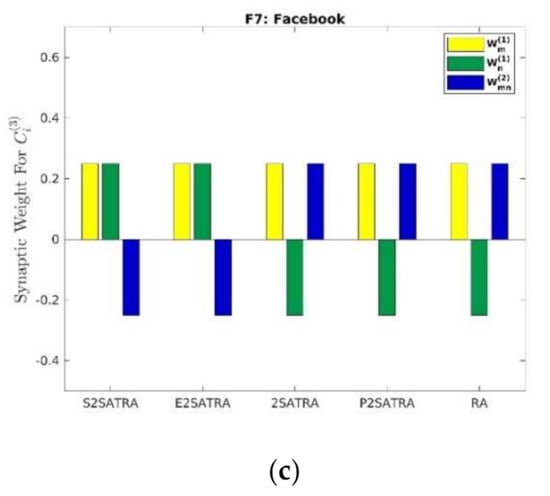

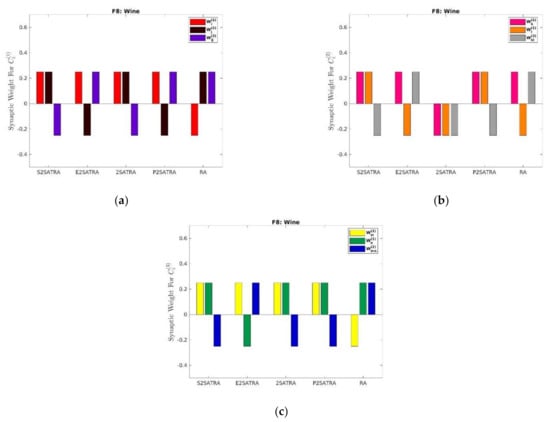

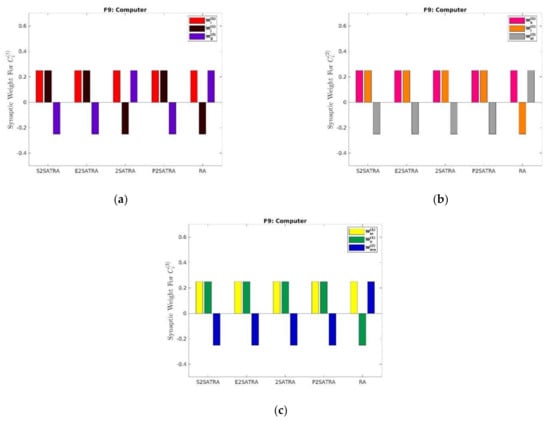

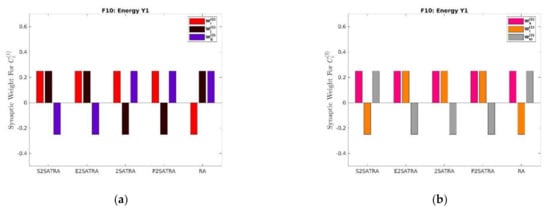

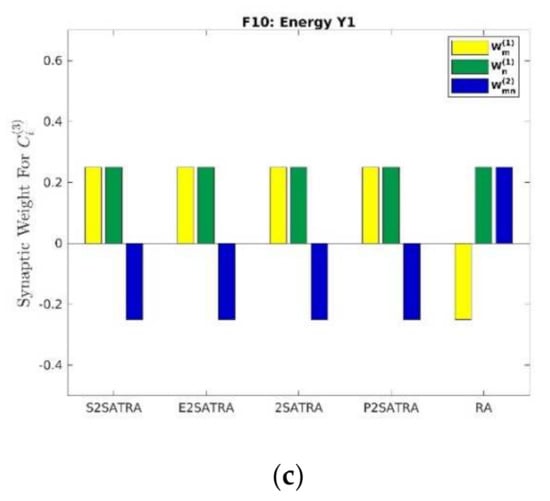

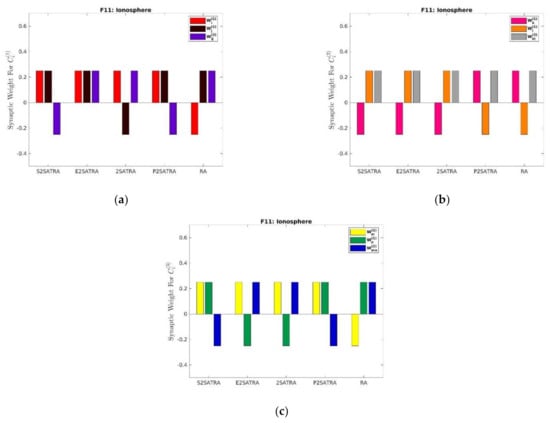

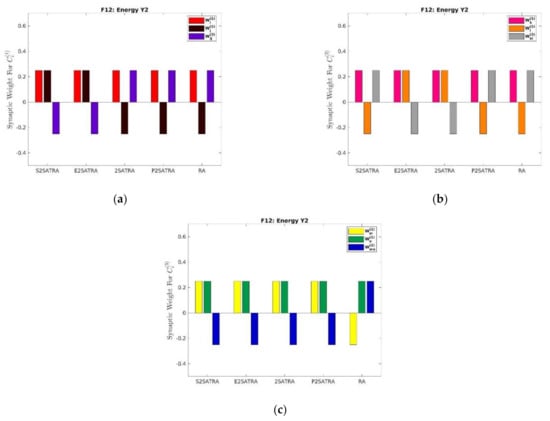

Figures 2–13 show the synaptic weights of all logic mining models when extracting logical information for F1–F12. Note that and represent the first- and second-order connections in a clause. In this section, we check the optimality of the synaptic weights with respect to the obtained accuracy. Several interesting points can be drawn from Figures 2–13.

- (a)

- Despite its different attribute selection compared with the other logic mining models, the induced logic of S2SATRA shows more logical variation than the existing work. For instance, the synaptic weights of S2SATRA are biased towards a positive literal for only four datasets while maintaining high accuracy.

- (b)

- RA demonstrates logical rigidity because its synaptic weights must produce a final state with at least one positive literal. According to Figures 2–13, the induced logic of RA tends to overfit the datasets. The induced logic obtained by RA might exhibit some diversity compared with S2SATRA but remains suboptimal, leading to lower accuracy. Hence, high diversity combined with wrong attribute selection reduces the effectiveness of a logic mining model.

- (c)

- In terms of energy optimization strategy, the energy filter in S2SATRA is able to retrieve global induced logic that contains more negated neurons than that of E2SATRA. This shows that the choice of attributes influences synaptic weight learning. For example, E2SATRA retrieved similar global induced logic as the optimal logic for 10 datasets (F1–F7, F9, F10, and F12), whereas S2SATRA retrieved similar induced logic for only 4 datasets (F3, F4, F8, and F9). Despite retrieving similar global induced logic less often, S2SATRA still obtains a high accuracy level.

- (d)

- Another interesting insight is that the permutation operator helps P2SATRA learn optimal synaptic weights, but the improvement is more obvious in S2SATRA. For instance, with the same synaptic weights for neurons A and D but a different attribute representation, S2SATRA achieves higher accuracy. A similar observation holds for the other neurons from A to E. This implies the need for optimal attribute selection before HNN learning takes place.

Figure 2.

Synaptic weight analysis for F1: (a) ; (b) and (c) .

Figure 3.

Synaptic weight analysis for F2: (a) ; (b) and (c) .

Figure 4.

Synaptic weight analysis for F3: (a) ; (b) and (c) .

Figure 5.

Synaptic weight analysis for F4: (a) ; (b) and (c) .

Figure 6.

Synaptic weight analysis for F5: (a) ; (b) and (c) .

Figure 7.

Synaptic weight analysis for F6: (a) ; (b) and (c) .

Figure 8.

Synaptic weight analysis for F7: (a) ; (b) and (c) .

Figure 9.

Synaptic weight analysis for F8: (a) ; (b) and (c) .

Figure 10.

Synaptic weight analysis for F9: (a) ; (b) and (c) .

Figure 11.

Synaptic weight analysis for F10: (a) ; (b) and (c) .

Figure 12.

Synaptic weight analysis for F11: (a) ; (b) and (c) .

Figure 13.

Synaptic weight analysis for F12: (a) ; (b) and (c) .

7.2. Correlation Analysis for S2SATRA

Tables 6 and 7 show the correlation values between the attributes of F1–F12 and . For clarity, signifies that there is no correlation between the attribute and . Hence, if a correlation exists between an attribute and the outcome, we “Reject” ; “Accept” means the opposite [39]. In other words, the aim of this analysis is to verify which will be chosen to represent the in . Based on Tables 6 and 7, most of the attributes selected in S2SATRA have a high correlation with . Non-correlated attributes are discarded before they can be introduced into the learning phase of the HNN. The main concern with conventional logic mining models is that the is constructed purely by random selection. For example, in F12, a logic mining model without a supervised layer might choose and to construct and would then have to learn unnecessary attributes that lead to . In this context, HNN-2SAT learns a non-optimal that corresponds to data with no correlation with the final outcome. Hence, the effectiveness of knowledge extraction is reduced dramatically because one of the is not correlated with the desired outcome. Based on the results, the correlation layer is vital to prevent S2SATRA from choosing the wrong attributes.

- (a)

- According to Tables 6 and 7, the datasets with the weakest correlation values, which account for most of the weakly correlated attributes, are F1, F5, F7, and F11. Weak correlation is determined from the absolute value of the correlation. Despite the low correlation values, S2SATRA is still able to avoid attributes with no correlation at all.

- (b)

- The best performing correlation datasets are F4, F9, F10, and F12 where all the attributes of interest are selected for learning. The optimal selection by S2SATRA has a good agreement with high accuracy of the induced logic compared to the existing model.

- (c)

- F6 and F8 are the only datasets that partially achieve the optimal number of attributes with a high correlation with . These datasets are reported to be highly correlated and the results have slightly low accuracy in terms of induced logic.

- (d)

- Overall, we can also conclude that S2SATRA does not require any randomized attribute selection because all correlation values agree with the association threshold value.
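The decision rule behind Tables 6 and 7 can be sketched as follows. The analysis was carried out in IBM SPSS Statistics, so the exact test settings are assumptions here: we assume a Pearson (point-biserial) correlation between each attribute and the binary outcome and a significance level of α = 0.05. Ranking the rejected attributes by |r| and keeping the top `k` is likewise a hypothetical selection step for illustration, and all function names are ours. Applied to the F1 row of Table 6, the rule reproduces the reported decisions, with only the second attribute marked "Accept".

```python
import math
from typing import List, Sequence


def pearson_r(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation between an attribute column x and the outcome y."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def decide(p_values: Sequence[float], alpha: float = 0.05) -> List[str]:
    # Reject H0 (no correlation) when p < alpha; otherwise "Accept" H0.
    return ["Reject" if p < alpha else "Accept" for p in p_values]


def select_attributes(corr: Sequence[float], p_values: Sequence[float], k: int,
                      alpha: float = 0.05) -> List[int]:
    """Indices of up to k correlated attributes, strongest |r| first (assumed rule)."""
    candidates = [i for i, d in enumerate(decide(p_values, alpha)) if d == "Reject"]
    candidates.sort(key=lambda i: abs(corr[i]), reverse=True)
    return candidates[:k]


# F1 row of Table 6: correlations and p-values of the 8 sampled attributes.
F1_CORR = [0.352, -0.004, 0.335, 0.097, 0.211, -0.178, 0.166, 0.157]
F1_P = [5.4e-159, 7.7e-1, 2.1e-69, 7.8e-13, 4.3e-56, 2.7e-40, 3.9e-35, 1.6e-31]

print(decide(F1_P))
print(select_attributes(F1_CORR, F1_P, k=4))  # 0-based column indices
```

Using the absolute value of r keeps strongly negative correlations, which a 2SAT clause can still exploit through a negated literal.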

Table 6.

Correlation analysis for 8 sampled attributes for F1–F6.


| Dataset | Statistic | Attr. 1 | Attr. 2 | Attr. 3 | Attr. 4 | Attr. 5 | Attr. 6 | Attr. 7 | Attr. 8 |
|---|---|---|---|---|---|---|---|---|---|
| F1 | Correlation | 0.352 | −0.004 | 0.335 | 0.097 | 0.211 | −0.178 | 0.166 | 0.157 |
| | p-value | $5.4 \times 10^{-159}$ | $7.7 \times 10^{-1}$ | $2.1 \times 10^{-69}$ | $7.8 \times 10^{-13}$ | $4.3 \times 10^{-56}$ | $2.7 \times 10^{-40}$ | $3.9 \times 10^{-35}$ | $1.6 \times 10^{-31}$ |
| | Decision | Reject | Accept | Reject | Reject | Reject | Reject | Reject | Reject |
| F2 | Correlation | −0.014 | 0.374 | 0.247 | 0.720 | 0.458 | 0.406 | 0.032 | 0.115 |
| | p-value | $7.2 \times 10^{-1}$ | $2.7 \times 10^{-24}$ | $5.1 \times 10^{-11}$ | $1.9 \times 10^{-111}$ | $3.9 \times 10^{-37}$ | $7.9 \times 10^{-29}$ | $4.1 \times 10^{-1}$ | $2.0 \times 10^{-3}$ |
| | Decision | Accept | Reject | Reject | Reject | Reject | Reject | Accept | Reject |
| F3 | Correlation | 0.366 | 0.202 | 0.344 | 0.230 | 0.376 | 0.581 | −0.338 | 0.432 |
| | p-value | $2.0 \times 10^{-3}$ | $1.0 \times 10^{-1}$ | $4.0 \times 10^{-3}$ | $6.2 \times 10^{-2}$ | $2.0 \times 10^{-3}$ | $2.4 \times 10^{-7}$ | $5.0 \times 10^{-3}$ | $3.0 \times 10^{-4}$ |
| | Decision | Reject | Accept | Reject | Accept | Reject | Reject | Reject | Reject |
| F4 | Correlation | 0.687 | 0.678 | 0.686 | 0.580 | 0.752 | 0.636 | 0.604 | 0.284 |
| | p-value | $9.3 \times 10^{-99}$ | $4.2 \times 10^{-95}$ | $2.3 \times 10^{-98}$ | $5.4 \times 10^{-64}$ | $1 \times 10^{-127}$ | $1.4 \times 10^{-80}$ | $1.1 \times 10^{-70}$ | $2.1 \times 10^{-14}$ |
| | Decision | Reject | Reject | Reject | Reject | Reject | Reject | Reject | Reject |
| F5 | Correlation | 0.081 | −0.278 | 0.250 | 0.269 | 0.077 | 0.189 | −0.271 | 0.214 |
| | p-value | $1.4 \times 10^{-1}$ | $2.8 \times 10^{-7}$ | $4.4 \times 10^{-6}$ | $7.5 \times 10^{-7}$ | $1.6 \times 10^{-1}$ | $5 \times 10^{-4}$ | $5.8 \times 10^{-7}$ | $0.0 \times 10^{-1}$ |
| | Decision | Accept | Reject | Reject | Reject | Accept | Reject | Reject | Reject |
| F6 | Correlation | 0.737 | 0.144 | −0.010 | −0.447 | −0.016 | −0.595 | 0.521 | 0.735 |
| | p-value | $0.0 \times 10^{-1}$ | $8.8 \times 10^{-68}$ | $2.3 \times 10^{-1}$ | $0.0 \times 10^{-1}$ | $5.5 \times 10^{-2}$ | $0.0 \times 10^{-1}$ | $0.0 \times 10^{-1}$ | $0.0 \times 10^{-1}$ |
| | Decision | Reject | Reject | Accept | Reject | Accept | Reject | Reject | Reject |

Table 7.

Correlation analysis for 8 sampled attributes for F7–F12.


| Dataset | Statistic | Attr. 1 | Attr. 2 | Attr. 3 | Attr. 4 | Attr. 5 | Attr. 6 | Attr. 7 | Attr. 8 |
|---|---|---|---|---|---|---|---|---|---|
| F7 | Correlation | −0.086 | −0.0324 | −0.397 | 0.393 | −0.091 | −0.180 | −0.072 | −0.133 |
| | p-value | $4.1 \times 10^{-13}$ | $7.9 \times 10^{-172}$ | $2.0 \times 10^{-264}$ | $4.3 \times 10^{-259}$ | $2.7 \times 10^{-14}$ | $1.4 \times 10^{-52}$ | $1.8 \times 10^{-9}$ | $5.4 \times 10^{-29}$ |
| | Decision | Reject | Reject | Reject | Reject | Reject | Reject | Reject | Reject |
| F8 | Correlation | 0.518 | −0.847 | 0.489 | −0.499 | 0.266 | −0.617 | −0.788 | −0.634 |
| | p-value | $1.3 \times 10^{-13}$ | $2.7 \times 10^{-50}$ | $4.3 \times 10^{-12}$ | $1.3 \times 10^{-12}$ | $3.0 \times 10^{-4}$ | $4.4 \times 10^{-20}$ | $5.9 \times 10^{-39}$ | $2.2 \times 10^{-21}$ |
| | Decision | Reject | Reject | Reject | Reject | Reject | Reject | Reject | Reject |
| F9 | Correlation | 0.178 | 0.009 | 0.819 | 0.901 | 0.649 | 0.611 | 0.592 | 0.966 |
| | p-value | $1.0 \times 10^{-2}$ | $8.9 \times 10^{-1}$ | $6.7 \times 10^{-52}$ | $4.2 \times 10^{-77}$ | $2.5 \times 10^{-26}$ | $9.7 \times 10^{-23}$ | $3.6 \times 10^{-21}$ | $3.4 \times 10^{-124}$ |
| | Decision | Reject | Accept | Reject | Reject | Reject | Reject | Reject | Reject |
| F10 | Correlation | 0.671 | −0.704 | 0.473 | −0.914 | 0.933 | 0.995 | 0.156 | −0.055 |
| | p-value | $4.4 \times 10^{-50}$ | $4.2 \times 10^{-57}$ | $3.3 \times 10^{-22}$ | $3.8 \times 10^{-147}$ | $2.2 \times 10^{-166}$ | $0.0 \times 10^{-1}$ | $3.0 \times 10^{-3}$ | $2.9 \times 10^{-1}$ |
| | Decision | Reject | Reject | Reject | Reject | Reject | Reject | Reject | Reject |
| F11 | Correlation | 0.011 | 0.072 | 0.310 | 0.315 | 0.345 | 0.581 | 0.336 | 0.306 |
| | p-value | $8.4 \times 10^{-1}$ | $1.8 \times 10^{-1}$ | $3.0 \times 10^{-9}$ | $1.6 \times 10^{-9}$ | $3.1 \times 10^{-11}$ | $5.0 \times 10^{-33}$ | $9.9 \times 10^{-11}$ | $4.9 \times 10^{-9}$ |
| | Decision | Accept | Accept | Reject | Reject | Reject | Reject | Reject | Reject |
| F12 | Correlation | 0.674 | −0.710 | 0.435 | −0.900 | 0.924 | 0.022 | 0.136 | −0.051 |
| | p-value | $8.4 \times 10^{-51}$ | $2.1 \times 10^{-58}$ | $1.2 \times 10^{-18}$ | $3.7 \times 10^{-136}$ | $6.2 \times 10^{-157}$ | $6.8 \times 10^{-1}$ | $9.0 \times 10^{-3}$ | $3.3 \times 10^{-1}$ |
| | Decision | Reject | Reject | Reject | Reject | Reject | Accept | Reject | Accept |

Table 8.

List of parameters in the improved RA [14].


| Parameter | Parameter Value |
|---|---|
| Neuron Combination | 100 |
| Number of Learning | 100 |
| Logical Rule | |
| No_Neuron String | 100 |
| Selection_Rate | 0.1 |

7.3. Error Analysis

Tables 9 and 10 present the error evaluation for all the logic mining models. S2SATRA outperforms all the other logic mining models in terms of RMSE and MAE. Note that the improvement ratio is computed by dividing the difference between the two error values by the error produced by the respective logic mining model.

Table 9.

RMSE for all logic mining models. The bracket indicates the ratio of improvement, and * indicates division by zero. A negative ratio implies that the compared method outperforms the proposed method. The p-value is obtained from the paired Wilcoxon rank test, and ** indicates a model with significant inferiority compared with the superior model.

Table 10.

MAE for all logic mining models. The bracket indicates the ratio of improvement, and * indicates division by zero. A negative ratio implies that the compared method outperforms the proposed method. The p-value is obtained from the paired Wilcoxon rank test, and ** indicates a model with significant inferiority compared with the superior model.

A high RMSE value indicates a large deviation of the error from the . S2SATRA ranks first across the 12 datasets. The “+”, “−”, and “=” in the results column indicate that S2SATRA is superior, inferior, and equal to the comparison algorithm, respectively. “Avg Rank” indicates the corresponding algorithm’s average Friedman rank over the 12 datasets, and the rank represents the ordering by “Avg Rank”. Although the value of S2SATRA is the lowest among the logic mining models, its RMSE value is high, which shows that the error deviates from the mean error over the whole . According to Tables 9 and 10, S2SATRA has several winning points, as follows.

- (a)

- In terms of individual RMSE and MAE, S2SATRA outperforms all the existing logic mining models which extract the logical rule from the datasets.

- (b)

- Several datasets recorded zero error, such as F3 and F10. In terms of MAE, S2SATRA achieved values below 0.5 for all the datasets, resulting in a low mean MAE (0.168).

- (c)

- Despite showing the best performance among all methods, S2SATRA still has a high RMSE for several datasets, such as F1, F6, and F7. Even so, these RMSE values are much lower than those of the existing work.

- (d)

- The Friedman rank test is conducted for all the datasets with and a degree of freedom of . The p-values for RMSE and MAE are $1.27 \times 10^{-7}$ and $2.09 \times 10^{-7}$, respectively. Hence, the null hypothesis of equal performance for all the logic mining models is rejected. According to Tables 9 and 10, S2SATRA has average ranks of 1.25 and 1.333 for RMSE and MAE, respectively, which are the best among all the methods. The closest competitor is P2SATRA, with average ranks of 2.083 and 2.000, respectively.

- (e)

- Overall, the average RMSE and MAE of S2SATRA show an improvement of 83.9% compared with the second-best method, P2SATRA. In this case, optimal attribute selection contributes to the lower RMSE and MAE values.

- (f)

- In addition, the Wilcoxon rank test is conducted to statistically validate the superiority of S2SATRA [40]. From the table, we observe that S2SATRA is the top-ranked logic mining model in terms of error analysis followed by P2SATRA, E2SATRA, 2SATRA, and RA.

P2SATRA achieves competitive results, with the same error as S2SATRA on 5 of the 12 datasets during the retrieval phase. This indicates that the conventional 2SATRA model can be further improved with a permutation operator. Despite the high number of permutations (up to 1000 permutations/run) applied to each dataset, most of the attributes in P2SATRA are insignificant with respect to the final output. Hence, its accumulated testing error is higher than that of the proposed S2SATRA. It is also worth noting that S2SATRA benefits from the permutation operator of P2SATRA in terms of search space. From another perspective, the energy-based approach E2SATRA is able to obtain that achieves the global minimum energy but tends to get trapped in suboptimal solutions. According to Tables 9 and 10, E2SATRA improves on the error of conventional 2SATRA, but its induced logic explores only a limited search space. For example, the high accumulated error in F2–F8 was due to the small number of produced by E2SATRA. The only advantage of E2SATRA over RA is the stability of the in finding the correct dataset generalization. E2SATRA is slightly worse than P2SATRA, except for F8 and F10, where the error differences are 86.3% and 47.2%, respectively. Conventional 2SATRA and RA produce of the worst quality due to the wrong choice of attributes. Another interesting insight is that the modified RA from [14] tends to overlearn, which results in an accumulation of error. For instance, RA accumulates a large RMSE value in F1, F6, and F7 due to the rigid structure of during its learning and testing phases. Additionally, the rigid structure of in RA does not contribute to effective attribute representation. Overall, compared with each comparison algorithm, S2SATRA has the greatest advantage on more than 10 datasets in terms of RMSE and MAE.
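The Friedman average ranks and statistic used throughout this section can be computed as in the following sketch. It uses the standard form χ²_F = 12N/(k(k+1)) [Σ_j R_j² − k(k+1)²/4], where R_j is the average rank of method j over N datasets and k methods, and tied methods receive the mean of their ranks. The p-value uses the closed-form chi-square tail for an even number of degrees of freedom, which covers k − 1 = 4 when five models are compared; this restriction and the helper names are our own simplifications (a statistics library would normally be used), and the demo table is hypothetical rather than data from Tables 9–13.

```python
import math
from typing import List, Sequence, Tuple


def rank_row(scores: Sequence[float], lower_is_better: bool = True) -> List[float]:
    """Rank the methods on one dataset (1 = best), averaging ranks over ties."""
    order = sorted(range(len(scores)), key=lambda m: scores[m], reverse=not lower_is_better)
    ranks = [0.0] * len(scores)
    i = 0
    while i < len(order):
        j = i
        # Extend j over a run of tied scores.
        while j + 1 < len(order) and scores[order[j + 1]] == scores[order[i]]:
            j += 1
        avg = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for t in range(i, j + 1):
            ranks[order[t]] = avg
        i = j + 1
    return ranks


def friedman(table: Sequence[Sequence[float]],
             lower_is_better: bool = True) -> Tuple[List[float], float, int, float]:
    """table[d][m] = score of method m on dataset d -> (avg ranks, chi2, df, p)."""
    n, k = len(table), len(table[0])
    rows = [rank_row(r, lower_is_better) for r in table]
    avg = [sum(r[m] for r in rows) / n for m in range(k)]
    chi2 = 12 * n / (k * (k + 1)) * (sum(a * a for a in avg) - k * (k + 1) ** 2 / 4)
    df = k - 1
    if df % 2:
        raise ValueError("this closed-form p-value assumes an even df")
    # Chi-square survival function for even df: exp(-x/2) * sum_{i<df/2} (x/2)^i / i!
    half = chi2 / 2
    p = math.exp(-half) * sum(half ** i / math.factorial(i) for i in range(df // 2))
    return avg, chi2, df, p


# Hypothetical RMSE values: 3 datasets x 5 models (lower is better).
demo = [[0.10, 0.30, 0.50, 0.20, 0.40],
        [0.00, 0.20, 0.40, 0.00, 0.60],
        [0.20, 0.50, 0.30, 0.25, 0.45]]
print(friedman(demo))
```

For higher-is-better metrics such as Acc, F-score, or MCC, the same function is called with `lower_is_better=False`.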

7.4. Accuracy, Precision, Sensitivity, F1-Score, and MCC

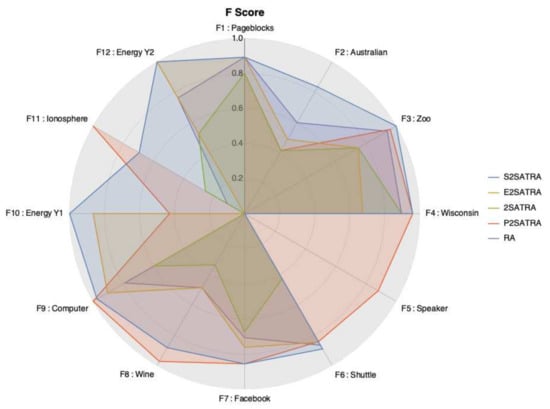

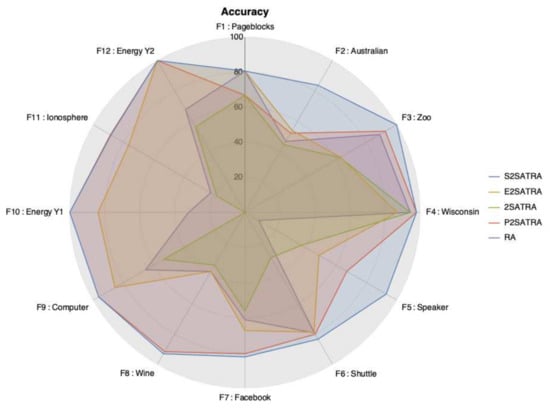

Figures 14 and 15 show the F-score and Acc results for all the logic mining models. According to both figures, S2SATRA has several winning points, as follows.

- (a)

- In terms of Acc, S2SATRA achieved the highest value in 11 out of 12 datasets, with P2SATRA as the closest competitor. Similarly, S2SATRA achieved the highest F-score in 8 out of 12 datasets, again with P2SATRA as the closest competitor.

- (b)

- Three datasets (F3, F10, and F12) achieved Acc = 1, which means that S2SATRA correctly predicts the for all values of and . For the F-score, three datasets achieved F-score = 1, meaning that S2SATRA correctly produces during the retrieval phase of the HNN. In this context, F-score = 1 indicates perfect precision and recall.

- (c)

- No F-score is reported for F5 for any of the logic mining models because there is no positive outcome in the testing data.

- (d)

- According to Figures 14 and 15, no value for is reported, and only F11 shows a low F-score. The missing F-score for F5 indicates that there is no in the testing data. This justifies the superiority of S2SATRA in differentiating and cases, which is crucial in logic mining.

- (e)

- S2SATRA shows an average improvement in Acc ranging from 27.1% to 97.9%. This shows that the clustering capability of S2SATRA is significantly improved while the error value remains low (refer to Tables 9 and 10). A similar observation holds for the F-score, where S2SATRA shows an average improvement ranging from 30.1% to 75.7%.

- (f)

- The Friedman rank test is conducted for all the datasets with and a degree of freedom of . The p-values for Acc and F-score are $4.26 \times 10^{-7}$ and $8.00 \times 10^{-6}$, respectively. Hence, the null hypothesis of equal performance for all the logic mining models is rejected. For Acc, S2SATRA has an average rank of 1.375, the best among the existing methods, and the closest competitor is P2SATRA, with an average rank of 2.083. For the F-score, S2SATRA has an average rank of 1.458, again the best among the logic mining models, and the closest competitor is P2SATRA, with an average rank of 2.333. Both results statistically validate the superiority of S2SATRA over the existing work.

- (g)

- In addition, the paired Wilcoxon rank test is conducted to statistically validate the superiority of S2SATRA. The results show that S2SATRA is the top-ranked logic mining model in terms of Acc and F-score, followed by P2SATRA, E2SATRA, 2SATRA, and RA.

Figure 14.

F-score for all logic mining models.

Figure 15.

Accuracy for all the logic mining models.

Tables 11 and 12 present the Pr and Se results for all the 2SATRA models. According to Tables 11 and 12, S2SATRA has several winning points, as follows.

- (a)

- In terms of Pr, S2SATRA outperforms the other logic mining models in 6 out of 12 datasets, with P2SATRA as the closest competitor. For Se, S2SATRA outperforms the other 2SATRA models in 7 out of 12 datasets; as with Pr, the closest competitor is P2SATRA.

- (b)

- Three datasets achieved Pr = 1, which means that every positive prediction made by S2SATRA is correct. For Se, four datasets achieved Se = 1, which means that S2SATRA correctly produces every positive result during the retrieval phase of the HNN.

- (c)

- No meaningful value of Pr or Se is reported for F5 because there is no positive outcome in this dataset.

- (d)

- The only datasets with Pr < 0.8 were F8 and F11. This shows that S2SATRA has a good capability to differentiate a positive result from a negative result. A similar observation is reported for Se, where the only datasets that achieved were F8 and F11. Hence, S2SATRA has a competitive capability to produce positive results compared with the other existing 2SATRA models.

- (e)

- S2SATRA shows an average improvement in Pr ranging from 12.3% to 61.2%. This shows that the clustering capability of S2SATRA is significantly improved while the error value remains low (refer to Table 11). A similar observation holds for Se, where S2SATRA shows an average improvement ranging from 1.8% to 63.9%.

- (f)

- According to the Friedman rank test for all the datasets, S2SATRA has an average rank of 1.458 for Pr, the best among the existing methods, and the closest competitor is P2SATRA, with an average rank of 2.333. For Se, S2SATRA has an average rank of 1.375, again the best, and the closest competitor is P2SATRA, with an average rank of 2.083. Both results statistically validate the superiority of S2SATRA over the other logic mining models.

- (g)

- In addition, the paired Wilcoxon rank test is conducted to statistically validate the superiority of S2SATRA. From the table, we observed that S2SATRA is the top-ranked logic mining model in terms of Pr and Se, as compared to most of the existing work.

Table 11.

Precision (Pr) for all models. The bracket indicates the ratio of improvement, and * indicates division by zero. A negative ratio implies that the compared method outperforms the proposed method. The p-value is obtained from the paired Wilcoxon rank test, and ** indicates a model with significant inferiority compared with the superior model.


| Dataset | S2SATRA | E2SATRA | 2SATRA | P2SATRA | RA |
|---|---|---|---|---|---|
| F1 | 0.826 | 0.826 (0) | 0.685 (0.205) | 0.685 (0.206) | 0.826 (0) |
| F2 | 0.934 | 0.984 (−0.051) | 0.443 (1.108) | 0.385 (1.426) | 0.902 (0.035) |
| F3 | 1.000 | 0.600 (0.667) | 0.600 (0.667) | 1.000 (0) | 0.960 (0.042) |
| F4 | 0.942 | 0.519 (0.815) | 0.923 (0.021) | 0.942 (0) | 0.923 (0.021) |
| F5 | - | - | - | - | - |
| F6 | 0.854 | 0.737 (0.159) | 0.330 (1.588) | 0.922 (−0.074) | 0.875 (−0.024) |
| F7 | 0.992 | 0.980 (0.012) | 0.850 (0.167) | 0.979 (0.013) | 0.880 (0.127) |
| F8 | 0.792 | 0.875 (−0.095) | 0.500 (0.584) | 0.750 (0.056) | 0.875 (−0.095) |
| F9 | 0.966 | 0.983 (−0.017) | 0.500 (0.932) | 0.966 (0) | 0.948 (0.019) |
| F10 | 1.000 | 1.000 (0) | 0.000 (*) | 1.000 (0) | 0.000 (*) |
| F11 | 0.696 | 0.000 (*) | 0.909 (−0.2343) | 0.273 (1.549) | 0.261 (1.667) |
| F12 | 1.000 | 1.000 (0) | 0.468 (1.137) | 1.000 (0) | 1.000 (0) |
| (+/=/−) | - | 6/5/2 | 10/1/1 | 5/6/1 | 7/4/1 |
| Avg | 0.909 | 0.773 (0.175) | 0.564 (0.612) | 0.809 (0.123) | 0.765 (0.188) |
| Std | 0.103 | 0.307 | 0.274 | 0.261 | 0.324 |
| Min | 0.696 | 0.000 | 0.000 | 0.273 | 0.000 |
| Max | 1.000 | 1.000 | 0.923 | 1.000 | 1.000 |
| Avg Rank | 1.458 | 3.417 | 4.417 | 2.333 | 3.375 |
| p-value | - | 0.003 ** | 0.003 ** | 0.003 ** | 0.003 ** |
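The bracketed ratios in Table 11 are consistent with (proposed − compared)/compared for this higher-is-better metric, as the following Python check on a few rows shows. Whether the error tables use the same form is not stated, so the hypothetical helper below should be read as a sketch for Pr-style metrics only; returning `None` stands in for the "*" (division by zero) entries.

```python
from typing import Optional


def improvement_ratio(proposed: float, other: float) -> Optional[float]:
    """Relative improvement of the proposed model over a compared model for a
    higher-is-better metric: (proposed - other) / other. None marks the '*' case."""
    if other == 0:
        return None
    return (proposed - other) / other


# (S2SATRA value, compared value, printed ratio) taken from Table 11.
rows = [(0.934, 0.443, 1.108),  # F2 vs 2SATRA
        (1.000, 0.600, 0.667),  # F3 vs E2SATRA
        (0.942, 0.519, 0.815)]  # F4 vs E2SATRA
for s2, other, printed in rows:
    print(round(improvement_ratio(s2, other), 3), printed)
```

A negative result, such as (0.696 − 0.909)/0.909 ≈ −0.2343 for F11 against 2SATRA, marks a dataset where the compared model is better.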

Table 12.

Sensitivity (Se) for all logic mining models. The bracket indicates the ratio of improvement, and * indicates division by zero. A negative ratio implies that the compared method outperforms the proposed method. In the F5 and Min rows, ** marks values caused by the absence of positive outcomes in the dataset. The p-value is obtained from the paired Wilcoxon rank test, and ** in the p-value row indicates a model with significant inferiority compared with the superior model.


| Dataset | S2SATRA | E2SATRA | 2SATRA | P2SATRA | RA |
|---|---|---|---|---|---|
| F1 | 0.971 | 0.971 (0) | 0.966 (0.0052) | 0.966 (0.005) | 0.971 (0) |
| F2 | 0.755 | 0.490 (0.541) | 0.388 (0.946) | 0.452 (0.670) | 0.449 (0.682) |
| F3 | 1.000 | 1.000 (0) | 1.000 (0) | 0.926 (0.080) | 0.923 (0.083) |
| F4 | 0.980 | 0.964 (0.017) | 0.8723 (0.123) | 0.980 (0) | 0.873 (0.123) |
| F5 | 0.000 ** | 0.000 (**) | 0.000 (**) | 0.000 (**) | 0.000 (**) |
| F6 | 0.934 | 0.997 (−0.063) | 0.611 (0.528) | 0.844 (0.107) | 0.867 (0.078) |
| F7 | 0.755 | 0.624 (0.210) | 0.560 (0.348) | 0.741 (0.019) | 0.592 (0.275) |
| F8 | 1.000 | 0.339 (1.950) | 0.255 (2.922) | 1.000 (0) | 0.339 (1.950) |
| F9 | 0.982 | 0.838 (0.171) | 0.744 (0.320) | 0.982 (0) | 0.679 (0.446) |
| F10 | 1.000 | 0.762 (0.312) | 0.000 (*) | 1.000 (0) | 0.000 (*) |
| F11 | 0.696 | 0.000 (*) | 0.150 (3.64) | 1.000 (−0.304) | 0.073 (8.534) |
| F12 | 1.000 | 1.000 (0) | 0.600 (0.667) | 1.000 (0) | 0.616 (0.6234) |
| (+/=/−) | - | 7/4/1 | 10/2/0 | 5/6/1 | 10/2/0 |
| Avg | 0.839 | 0.666 | 0.512 | 0.824 | 0.532 |
| Std | 0.287 | 0.379 | 0.355 | 0.306 | 0.360 |
| Min | 0.000 ** | 0.000 ** | 0.000 ** | 0.000 ** | 0.000 ** |
| Max | 1.000 | 1.000 | 1.000 | 1.000 | 0.923 |
| Avg Rank | 1.375 | 3.167 | 4.708 | 2.083 | 3.667 |
| p-value | - | 0.612 | 0.086 | 0.003 ** | 0.084 |

Table 13 demonstrates MCC analysis for all logic mining models. According to Table 13, several winning points for S2SATRA are as follows.

- (a)

- In terms of MCC, S2SATRA achieved the best value for 7 out of 12 datasets, with P2SATRA as the closest competitor. On average, the conventional 2SATRA model obtains the worst result, with a mean MCC close to zero (0.019).

- (b)

- Three datasets (F3, F10, and F12) achieved MCC = 1, which means that the produced by S2SATRA represents a perfect prediction.

- (c)

- No value for MCC is reported for F5 because there is no positive outcome for this dataset.

- (d)

- The only dataset with an MCC close to zero is F1. This shows that S2SATRA has a good capability to differentiate all domains of the confusion matrix (TP, FP, TN, and FN).

- (e)

- Taking into account the absolute value of MCC, S2SATRA shows an average improvement in MCC ranging from 35.9% to 3839%. This shows that the clustering capability of S2SATRA is significantly improved while the error value remains low (refer to Table 13).

- (f)

- The Friedman rank test is conducted for all the datasets with and a degree of freedom of . The p-value for MCC is . Hence, the null hypothesis of equal performance for all the logic mining models is rejected. S2SATRA has an average rank of 1.363 for MCC, the best among the existing logic mining models, and the closest competitor is P2SATRA, with an average rank of 2.955. This result statistically validates the superiority of S2SATRA over the existing work.

- (g)

- In addition, the paired Wilcoxon rank test is conducted to statistically validate the superiority of S2SATRA. From the table, we observed that S2SATRA is the top-ranked logic mining model in terms of MCC compared to most existing work.

Table 13.

MCC for all logic mining models. The p-value is obtained from the paired Wilcoxon rank test, and ** indicates a model with significant inferiority compared with the superior model.


| Dataset | S2SATRA | E2SATRA | 2SATRA | P2SATRA | RA |
|---|---|---|---|---|---|
| F1 | −0.070 | −0.071 | −0.104 | −0.104 | −0.071 |
| F2 | 0.693 | 0.270 | −0.109 | 0.015 | 0.039 |
| F3 | 1.000 | 0.316 | 0.316 | - | −0.055 |
| F4 | 0.948 | 0.647 | 0.860 | 0.948 | 0.859 |
| F5 | - | - | - | - | - |
| F6 | 0.556 | 0.595 | −0.406 | 0.301 | 0.489 |
| F7 | 0.679 | 0.411 | 0.106 | 0.642 | 0.226 |
| F8 | 0.847 | 0.028 | 0.365 | 0.816 | 0.028 |
| F9 | 0.918 | 0.659 | 0.107 | 0.918 | −0.129 |
| F10 | 1.000 | 0.713 | −1.000 | 1.000 | −0.453 |
| F11 | 0.623 | −0.101 | −0.064 | 0.490 | −0.442 |
| F12 | 1.000 | 1.000 | 0.137 | 1.000 | 0.453 |
| Avg Rank | 1.363 | 3.045 | 3.818 | 2.955 | 3.818 |
| Mean | 0.745 | 0.406 | 0.019 | 0.548 | 0.086 |
| Std | 0.316 | 0.355 | 0.469 | 0.412 | 0.397 |
| (+/=/−) | | 9/2/1 | 10/1/1 | 6/5/1 | 10/1/1 |
| p-value | | 0.011 ** | 0.003 ** | 0.018 ** | 0.005 ** |
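Two summary figures quoted for Table 13, the Friedman test outcome and the relative improvement in mean MCC, can be recomputed from the table's own summary rows. The sketch below is a rough check only: it assumes N = 11 ranked datasets (F5, with undefined MCC, excluded, which is consistent with the average ranks being multiples of 1/11), applies no tie correction, and uses the textbook χ² critical value of 9.488 for 4 degrees of freedom at α = 0.05. The paper's exact χ² value is not reproduced here.

```python
# Average MCC ranks and mean MCC values as reported in Table 13.
AVG_RANK = {"S2SATRA": 1.363, "E2SATRA": 3.045, "2SATRA": 3.818,
            "P2SATRA": 2.955, "RA": 3.818}
MEAN_MCC = {"S2SATRA": 0.745, "E2SATRA": 0.406, "2SATRA": 0.019,
            "P2SATRA": 0.548, "RA": 0.086}
CHI2_CRIT_DF4_ALPHA05 = 9.488  # chi-square critical value for df = 4, alpha = 0.05


def friedman_chi2(avg_ranks, n_datasets):
    """Friedman statistic from average ranks, without a correction for ties."""
    k = len(avg_ranks)
    sum_sq = sum(r * r for r in avg_ranks)
    return 12 * n_datasets / (k * (k + 1)) * (sum_sq - k * (k + 1) ** 2 / 4)


def relative_improvement(best, other):
    """Percentage by which `best` exceeds `other`."""
    return 100 * (best / other - 1)


# Assumption: F5 (undefined MCC) is excluded, leaving N = 11 ranked datasets.
chi2 = friedman_chi2(list(AVG_RANK.values()), n_datasets=11)
print(f"chi2_F = {chi2:.2f}; reject H0: {chi2 > CHI2_CRIT_DF4_ALPHA05}")

for model, value in MEAN_MCC.items():
    if model != "S2SATRA":
        gain = relative_improvement(MEAN_MCC["S2SATRA"], value)
        print(f"S2SATRA vs {model}: {gain:.1f}%")
```

Under these assumptions the statistic is about 17.7, well above 9.488, which is consistent with rejecting the null hypothesis of equal performance. The mean-MCC ratios give about 35.9% over P2SATRA and about 3821% over 2SATRA; the reported 3839% presumably comes from unrounded means.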

7.5. McNemar’s Statistical Test

To evaluate whether there is any significant difference between the performance of two logic mining models, McNemar’s test is performed. According to [38], McNemar’s test is the only test that has an acceptable Type I error and can validate the performance of the 2SATRA model. The normal test statistic is as follows:

$$Z_{ij} = \frac{f_{ij} - f_{ji}}{\sqrt{f_{ij} + f_{ji}}}$$

where $Z_{ij}$ measures the significance of the difference between the accuracies obtained by models $i$ and $j$, $f_{ij}$ is the number of cases that are correctly classified by model $i$ but incorrectly classified by model $j$, and $f_{ji}$ is defined analogously (correctly classified by model $j$ but incorrectly classified by model $i$). In this experiment, a 5% level of significance is used. The null hypothesis states that a pair of logic mining models shows no difference in disagreement ($f_{ij} = f_{ji}$). The classification accuracies of the two models are said to differ significantly if $|Z_{ij}| > 1.96$. Note that a positive value of $Z_{ij}$ means that model $i$ performs better than model $j$. Table 14 and Table 15 present the results of McNemar’s test for all logic mining models. Several points in favour of S2SATRA are discussed below.
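The pairwise comparison described above can be sketched as follows, assuming the standard normal-approximation form of McNemar’s statistic, $Z_{ij} = (f_{ij} - f_{ji})/\sqrt{f_{ij} + f_{ji}}$, and the two-sided 5% critical value of 1.96. The function names and the example predictions are hypothetical; they illustrate how $f_{ij}$ and $f_{ji}$ are counted from two models' outputs on the same test set.

```python
import math
from typing import Sequence


def mcnemar_z(y_true: Sequence[int], pred_i: Sequence[int],
              pred_j: Sequence[int]) -> float:
    """Normal-approximation McNemar statistic Z_ij = (f_ij - f_ji) / sqrt(f_ij + f_ji).

    f_ij counts samples that model i classifies correctly and model j does not;
    f_ji counts the reverse. A positive Z_ij favours model i.
    """
    f_ij = sum(1 for t, a, b in zip(y_true, pred_i, pred_j) if a == t and b != t)
    f_ji = sum(1 for t, a, b in zip(y_true, pred_i, pred_j) if a != t and b == t)
    if f_ij + f_ji == 0:
        return 0.0  # the models never disagree, so there is no evidence of a difference
    return (f_ij - f_ji) / math.sqrt(f_ij + f_ji)


def differs_significantly(z: float, critical: float = 1.96) -> bool:
    """Two-sided decision at the 5% level of significance."""
    return abs(z) > critical


# Hypothetical predictions: model i is right on all 10 cases, model j on only 1.
y = [1] * 10
z = mcnemar_z(y, pred_i=[1] * 10, pred_j=[0] * 9 + [1])
print(z, differs_significantly(z))  # 3.0 True
```

Only the disagreements enter the statistic: cases that both models classify correctly (or both misclassify) carry no information about which model is better, which is why McNemar’s test suits paired comparisons on a shared test set.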

- (a)

- S2SATRA is reported to be statistically significant (in bold) in more than half of the datasets. The only dataset with little statistical significance is F4, where S2SATRA differs significantly only from E2SATRA.

- (b)

- In terms of statistical performance, S2SATRA is shown to be significantly better than the other logic mining models. For instance, no negative test statistic is found for S2SATRA (see the S2SATRA rows) in comparison with the other 2SATRA models, and the lowest test statistic value for S2SATRA is zero.

- (c)

- The best statistical performance of S2SATRA is in F2, F5, and F6, where all the existing methods are significantly different and worse (indicated by positive values). The second-best statistical performances are in F7 and F8, where the difference from at least one logic mining model is not statistically significant, although S2SATRA still obtains a positive test statistic.

- (d)

- In addition, the results of the McNemar test indicate the superiority of S2SATRA in distinguishing both correct and incorrect outcomes compared with the existing methods.

Table 14.

McNemar’s statistical test for F1–F5.


| Dataset | Model | S2SATRA | E2SATRA | 2SATRA | P2SATRA | RA |
|---|---|---|---|---|---|---|
| F1 | S2SATRA | - | 0.000 | 9.128 | 9.128 | 0.000 |
| | E2SATRA | | - | 9.128 | 9.128 | 0.000 |
| | 2SATRA | | | - | 0.000 | −9.128 |
| | P2SATRA | | | | - | −9.128 |
| | RA | | | | | - |
| F2 | S2SATRA | - | 6.980 | 9.194 | 7.406 | −23.263 |
| | E2SATRA | | - | 2.213 | 0.426 | −26.567 |
| | 2SATRA | | | - | −15.449 | 78.536 |
| | P2SATRA | | | | - | 1.277 |
| | RA | | | | | - |
| F3 | S2SATRA | - | 3.051 | 2.722 | 0.544 | 0.816 |
| | E2SATRA | | - | −0.278 | −2.496 | −2.219 |
| | 2SATRA | | | - | −2.177 | −1.905 |
| | P2SATRA | | | | - | 0.272 |
| | RA | | | | | - |
| F4 | S2SATRA | - | 2.211 | 0.704 | 0.000 | 0.704 |
| | E2SATRA | | - | −1.508 | −2.211 | −1.508 |
| | 2SATRA | | | - | −0.704 | 0.000 |
| | P2SATRA | | | | - | 0.704 |
| | RA | | | | | - |
| F5 | S2SATRA | - | 7.264 | 9.020 | 4.201 | 13.592 |
| | E2SATRA | | - | 1.723 | −3.077 | 6.278 |
| | 2SATRA | | | - | −4.819 | 4.572 |
| | P2SATRA | | | | - | 9.391 |
| | RA | | | | | - |

Table 15.

McNemar’s statistical test for F6–F12.


| Dataset | Model | S2SATRA | E2SATRA | 2SATRA | P2SATRA | RA |
|---|---|---|---|---|---|---|
| F6 | S2SATRA | - | 4.996 | 57.849 | 3.529 | 4.457 |
| | E2SATRA | | - | 52.853 | −1.467 | −0.539 |
| | 2SATRA | | | - | −54.321 | −53.392 |
| | P2SATRA | | | | - | 0.929 |
| | RA | | | | | - |
| F7 | S2SATRA | - | 11.339 | 19.744 | 1.348 | 16.070 |
| | E2SATRA | | - | 8.405 | −9.991 | 4.731 |
| | 2SATRA | | | - | −18.396 | −3.674 |
| | P2SATRA | | | | - | 14.722 |
| | RA | | | | | - |
| F8 | S2SATRA | - | 6.500 | 7.000 | 0.167 | 6.500 |
| | E2SATRA | | - | −0.500 | −6.333 | 0.000 |
| | 2SATRA | | | - | −6.833 | −4.157 |
| | P2SATRA | | | | - | 6.333 |
| | RA | | | | | - |
| F9 | S2SATRA | - | 1.389 | 5.555 | 0.000 | 4.012 |
| | E2SATRA | | - | 4.166 | −1.389 | 2.623 |
| | 2SATRA | | | - | −5.555 | −1.543 |
| | P2SATRA | | | | - | 4.012 |
| | RA | | | | | - |
| F10 | S2SATRA | - | 2.781 | 17.263 | 0.000 | 11.702 |
| | E2SATRA | | - | 14.482 | −2.781 | 8.921 |
| | 2SATRA | | | - | −17.263 | −5.561 |
| | P2SATRA | | | | - | 11.702 |
| | RA | | | | | - |
| F11 | S2SATRA | - | 1.807 | 11.204 | −1.052 | 10.199 |
| | E2SATRA | | - | 9.470 | −2.785 | 8.391 |
| | 2SATRA | | | - | −11.791 | −1.424 |
| | P2SATRA | | | | - | 10.832 |
| | RA | | | | | - |
| F12 | S2SATRA | - | 0.000 | 7.531 | 0.000 | 5.561 |
| | E2SATRA | | - | 7.531 | 0.000 | 5.561 |
| | 2SATRA | | | - | −7.531 | −1.970 |
| | P2SATRA | | | | - | 5.561 |
| | RA | | | | | - |
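Reading Tables 14 and 15 amounts to comparing each entry with the critical value of a normal statistic. The sketch below assumes the two-sided 5% critical value of 1.96 and copies the S2SATRA rows of both tables, with columns in the order E2SATRA, 2SATRA, P2SATRA, RA. It labels each comparison as significantly better, significantly worse, or not significant (n.s.) from S2SATRA's point of view. The names `S2SATRA_Z`, `classify`, and `screen` are hypothetical helpers.

```python
# Z statistics of S2SATRA against each model (S2SATRA rows of Tables 14 and 15).
MODELS = ("E2SATRA", "2SATRA", "P2SATRA", "RA")
S2SATRA_Z = {
    "F1": (0.000, 9.128, 9.128, 0.000),
    "F2": (6.980, 9.194, 7.406, -23.263),
    "F3": (3.051, 2.722, 0.544, 0.816),
    "F4": (2.211, 0.704, 0.000, 0.704),
    "F5": (7.264, 9.020, 4.201, 13.592),
    "F6": (4.996, 57.849, 3.529, 4.457),
    "F7": (11.339, 19.744, 1.348, 16.070),
    "F8": (6.500, 7.000, 0.167, 6.500),
    "F9": (1.389, 5.555, 0.000, 4.012),
    "F10": (2.781, 17.263, 0.000, 11.702),
    "F11": (1.807, 11.204, -1.052, 10.199),
    "F12": (0.000, 7.531, 0.000, 5.561),
}


def classify(z, critical=1.96):
    """Label one comparison: positive Z favours S2SATRA, negative favours the rival."""
    if z > critical:
        return "better"
    if z < -critical:
        return "worse"
    return "n.s."


def screen(rows):
    """Map each dataset to {model: label} for S2SATRA's comparisons."""
    return {ds: dict(zip(MODELS, map(classify, zs))) for ds, zs in rows.items()}


for ds, labels in screen(S2SATRA_Z).items():
    print(ds, labels)
```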

8. Discussion

The optimal logic mining model requires the neurons to be structured during pre-processing before the logical rule can be learned by HNN. Currently, the logic mining model specifically optimizes the logic extraction from the dataset without considering the optimal logical rule. A mechanism that optimizes the neuron relationship before learning occurs remains unclear. In this sense, identifying a specific pair of neurons for each 2SAT clause will facilitate dataset generalization and reduce the computational burden.

As mentioned in the theory section, S2SATRA is not merely a modification of a conventional logic mining model; rather, it is a generalization that absorbs all the conventional models. Thus, S2SATRA not only inherits many properties from the conventional logic mining model but also adds a supervised property that reduces the search space of the optimal logical rule. The question that we should ponder is: what is the optimal logical rule for the logic mining model? Therefore, it is important to discuss the properties of S2SATRA against the conventional logic mining model in extracting the optimal logical rule from the dataset. In previous logic mining models, such as [20,21,31], the quality of attributes is not well assessed because the attributes are randomly assigned. For instance, [21] achieved high accuracy for a specific combination of attributes, but other combinations of attributes result in low accuracy due to a high proportion of local minimum solutions. A similar neuron structure can be observed in E2SATRA, as proposed by [24], because the choice of neurons during the learning phase is similar. Practically speaking, this learning mechanism [20,21,22,31] is natural in real life because the neuron assignment is based on trial and error. However, the 2SATRA model must sacrifice accuracy if there is no optimal neuron assignment. By adding a permutation property, as carried out by Kasihmuddin et al. [30], P2SATRA is able to increase the search space of the model at the expense of higher computational complexity. To put things into perspective, 10 neurons require 18,900 learning phases across the neuron combinations before the model can arrive at the optimal result. Unlike P2SATRA, our proposed S2SATRA can narrow down the search space by checking the degree of association among the neurons before the permutation property takes place. The supervised feature of S2SATRA recognizes the pattern produced by the neurons and aligns it with the clauses. Thus, the mutual interaction between association and permutation will optimally select the best neuron combination.
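To illustrate how an association check can narrow the search space before any permutation, here is a minimal sketch. It is not the paper's association analysis, which is defined in its method section. As a stand-in, it assumes bipolar (±1) attributes and outcome, ranks attributes by the absolute Pearson correlation with the outcome, keeps the strongest 2k attributes, and pairs them into k 2SAT clauses, negating a literal when the correlation is negative. The functions `pearson` and `select_2sat_clauses` are hypothetical.

```python
import math
from typing import List, Sequence, Tuple


def pearson(x: Sequence[float], y: Sequence[float]) -> float:
    """Pearson correlation; returns 0.0 when either variable is constant."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    if sxx == 0 or syy == 0:
        return 0.0
    return sxy / math.sqrt(sxx * syy)


def select_2sat_clauses(columns: List[Sequence[int]], outcome: Sequence[int],
                        n_clauses: int) -> List[Tuple[Tuple[int, bool], Tuple[int, bool]]]:
    """Hypothetical association step: keep the 2 * n_clauses attributes most
    correlated with the outcome and pair them, strongest first, into clauses.

    Each literal is (attribute_index, is_positive); a negatively correlated
    attribute enters the clause as a negated literal.
    """
    scores = [pearson(col, outcome) for col in columns]
    # sorted() is stable even with reverse=True, so ties keep their column order.
    ranked = sorted(range(len(columns)), key=lambda i: abs(scores[i]), reverse=True)
    chosen = ranked[: 2 * n_clauses]
    literals = [(i, scores[i] >= 0) for i in chosen]
    return [(literals[2 * c], literals[2 * c + 1]) for c in range(n_clauses)]


outcome = [1, 1, -1, -1, 1, -1]
columns = [
    outcome,                   # attribute 0: identical to the outcome
    [-v for v in outcome],     # attribute 1: opposite of the outcome
    [1, -1, 1, -1, 1, -1],     # attribute 2: weakly associated
    [1, 1, 1, 1, 1, 1],        # attribute 3: constant, no association
    [1, 1, -1, 1, 1, -1],      # attribute 4: strongly associated
]
print(select_2sat_clauses(columns, outcome, n_clauses=2))
```

Because the arrangement is fixed by the association scores, only one candidate rule has to be learned instead of one per permutation. Whether the paper pairs attributes in exactly this way is an assumption of this sketch.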

As reported in Table 7 and Table 8, the number of association analyses required for n attributes to create the optimal logical rule was reduced. In other words, the probability of P2SATRA extracting the optimal logical rule is lower than that of S2SATRA. As the logical rule supplied to the network changes, the retrieval property of the S2SATRA model improves. The best logic mining model demonstrates high TP and TN values with minimized FP and FN values. P2SATRA is observed to outperform the conventional logic mining models in terms of performance metrics because P2SATRA can utilize permutations of the attributes. In this case, the higher the number of permutations, the higher the probability that P2SATRA achieves correct TP and TN. Despite its robust permutation feature, P2SATRA fails to disregard non-significant attributes, which therefore remain in the induced logic. Hence, despite achieving high accuracy, the retrieved final neuron state is not interpretable. E2SATRA is observed to outperform 2SATRA in terms of accuracy because E2SATRA retains only the induced logic obtained from final states that reach the global minimum energy. The dynamics of the induced logic in E2SATRA follow the convergence of the final state proposed in [22], where the final state converges to the nearest minimum. Although all final states in E2SATRA are guaranteed to achieve the global minimum energy, the choice of attributes embedded in the logic mining model is not well structured. As with 2SATRA and P2SATRA, the final attributes are therefore difficult to interpret. In another development, 2SATRA is observed to outperform the RA proposed by [14] in terms of all performance metrics. Although RA differs structurally from 2SATRA in creating the logical rule, its induced logic follows a Horn-clause structure. This structure creates a rigid induced logic (at most one positive literal per clause) and can reduce the possible solution space of RA. In this case, only the data that satisfy this rigid structure are considered.
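The distinction drawn above, between E2SATRA's global-minimum final states and states that settle in other minima, can be made concrete for a 2SAT rule. The sketch below assumes bipolar neuron states (±1) and a clause-cost function of the form commonly used with HNN-based 2SAT, in which a literal costs 1 when false and a clause contributes 1 only when both of its literals are false. It is not the paper's exact energy function, and the rule, states, and function names are hypothetical. A final state is at the global minimum exactly when its cost is zero, that is, when every clause is satisfied.

```python
from typing import List, Sequence, Tuple

Literal = Tuple[int, bool]        # (neuron index, True for a positive literal)
Clause = Tuple[Literal, Literal]  # one 2SAT clause: (l1 OR l2)


def literal_cost(state: Sequence[int], lit: Literal) -> float:
    """1.0 if the literal is false under the bipolar state, 0.0 if it is true."""
    idx, positive = lit
    s = state[idx]
    return 0.5 * (1 - s) if positive else 0.5 * (1 + s)


def cost(state: Sequence[int], clauses: Sequence[Clause]) -> float:
    """Number of unsatisfied clauses: a clause costs 1 only if both literals are false."""
    return sum(literal_cost(state, a) * literal_cost(state, b) for a, b in clauses)


def global_minimum_states(final_states: Sequence[Sequence[int]],
                          clauses: Sequence[Clause]) -> List[Sequence[int]]:
    """Keep only the final states whose cost is zero (every clause satisfied)."""
    return [s for s in final_states if cost(s, clauses) == 0]


# Hypothetical rule (A OR NOT B) AND (C OR D) over neurons A, B, C, D = 0, 1, 2, 3.
rule = [((0, True), (1, False)), ((2, True), (3, True))]
finals = [[1, 1, -1, 1], [-1, 1, -1, -1], [-1, -1, 1, 1]]
print(global_minimum_states(finals, rule))  # [[1, 1, -1, 1], [-1, -1, 1, 1]]
```

Filtering in this way guarantees that every retained state satisfies the learned rule. It does not, however, make the chosen attributes meaningful, which is the limitation of E2SATRA noted above.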

In contrast, S2SATRA employs a flexible logic that accounts for both positive and negative literals. This structure is its main advantage over the traditional RA proposed by [14]. S2SATRA is observed to outperform the rest of the logic mining models due to its optimal choice of attributes. In terms of features, S2SATRA capitalizes on the energy feature of E2SATRA and the permutation feature of P2SATRA. Hence, the induced logic obtained will always achieve the global minimum energy, and only relevant attributes will be chosen to be learned by HNN. Another way to explain the effectiveness of logic mining is its ability to consistently find the correct logical rule to be learned by HNN. Initially, all logic mining models begin with an HNN that has too many ineffective synaptic weights due to suboptimal features. In this case, S2SATRA can reduce the inconsistent logical rules that lead to suboptimal synaptic weights.

S2SATRA is reported to outperform almost all the existing logic mining models in terms of all performance metrics. S2SATRA is able to differentiate between positive and negative outcomes, which leads to high Acc and F-score values. Since S2SATRA obtains more TP, its Pr and Sen increase compared with the other existing methods. In terms of Pr and Sen, S2SATRA is reported to successfully predict positive outcomes during the retrieval phase. In contrast, the existing 2SATRA model is less sensitive to positive outcomes, which leads to lower Pr and Sen values. It is worth mentioning that the overfitting nature of the retrieval phase leads to an induced logic that can only produce more positive neuron states. This phenomenon is evident in the existing methods, where the HNN tends to converge to only a few final states. This result agrees well with McNemar’s test, where the performance of S2SATRA is significantly different from that of the existing methods. The optimal arrangement of the logical rule signifies the importance of the association among the attributes for the retrieval capability of S2SATRA. Without proper arrangement, the obtained induced logic tends to overfit, which leads to a high classification error. S2SATRA utilizes correlation analysis only during the pre-processing stage because correlation analysis provides a preliminary connection between the attributes and the outcome.
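The claim that obtaining more TP raises both Pr and Sen follows directly from their definitions, since TP appears in both numerators. A minimal sketch using the standard confusion-matrix formulas is shown below. The function names and counts are illustrative, and returning 0.0 for an empty denominator is a convention chosen for this sketch.

```python
def precision(tp: int, fp: int) -> float:
    """Pr = TP / (TP + FP); 0.0 when nothing is predicted positive."""
    return tp / (tp + fp) if tp + fp else 0.0


def sensitivity(tp: int, fn: int) -> float:
    """Sen = TP / (TP + FN); 0.0 when there are no actual positives."""
    return tp / (tp + fn) if tp + fn else 0.0


def f_score(tp: int, fp: int, fn: int) -> float:
    """F-score = 2TP / (2TP + FP + FN), the harmonic mean of Pr and Sen."""
    denom = 2 * tp + fp + fn
    return 2 * tp / denom if denom else 0.0


# Hypothetical counts: FP and FN held fixed at 5 while TP grows.
for tp in (10, 20):
    print(tp, round(precision(tp, 5), 3), round(sensitivity(tp, 5), 3),
          round(f_score(tp, 5, 5), 3))
```

With FP and FN held fixed, doubling TP from 10 to 20 lifts all three metrics, which is the mechanism behind the higher Pr, Sen, and F-score attributed to S2SATRA.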