Classification of Driver Distraction Risk Levels: Based on Driver’s Gaze and Secondary Driving Tasks

Abstract

1. Introduction

2. Literature Review and Main Contributions

2.1. Driver Distraction

2.2. Contributions

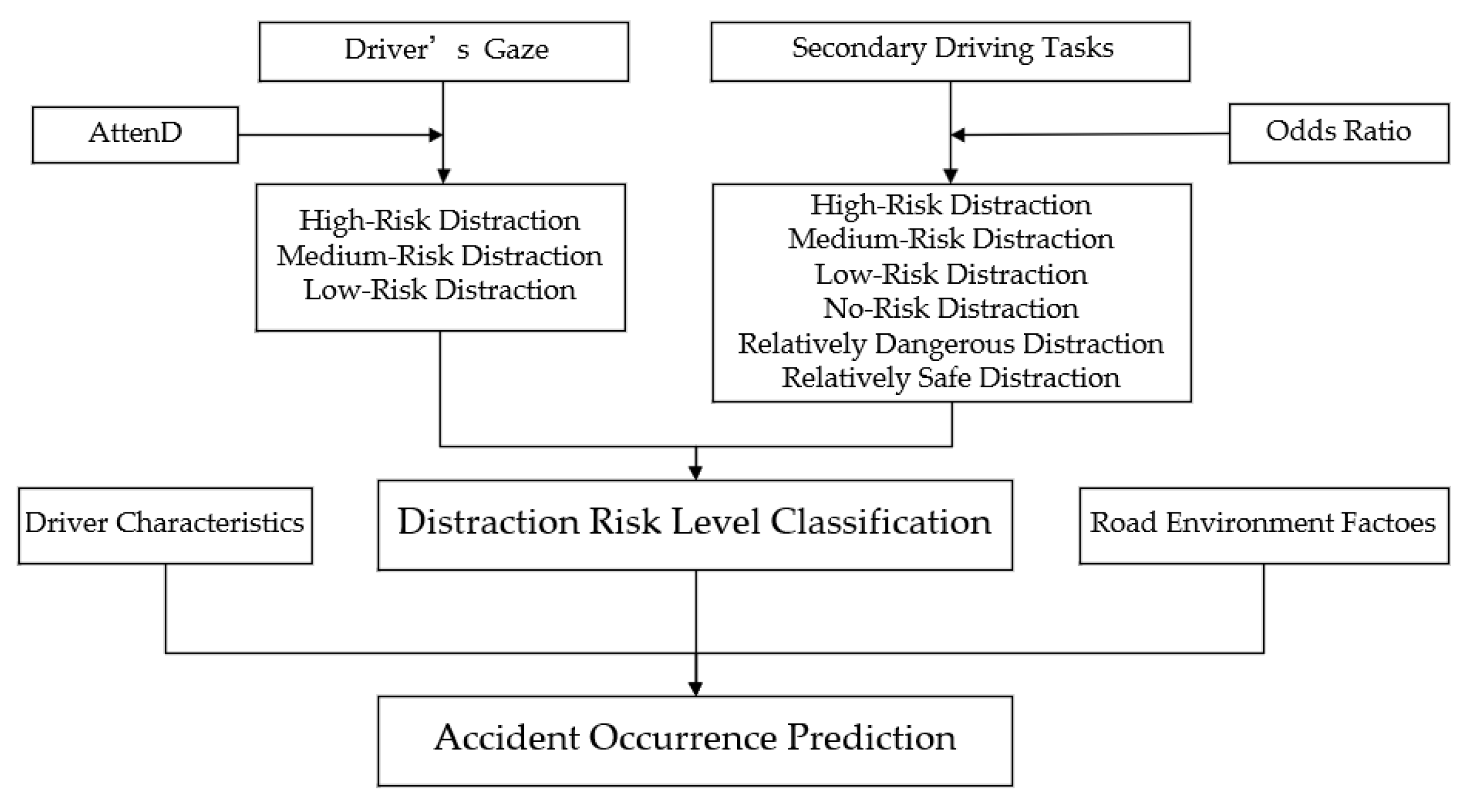

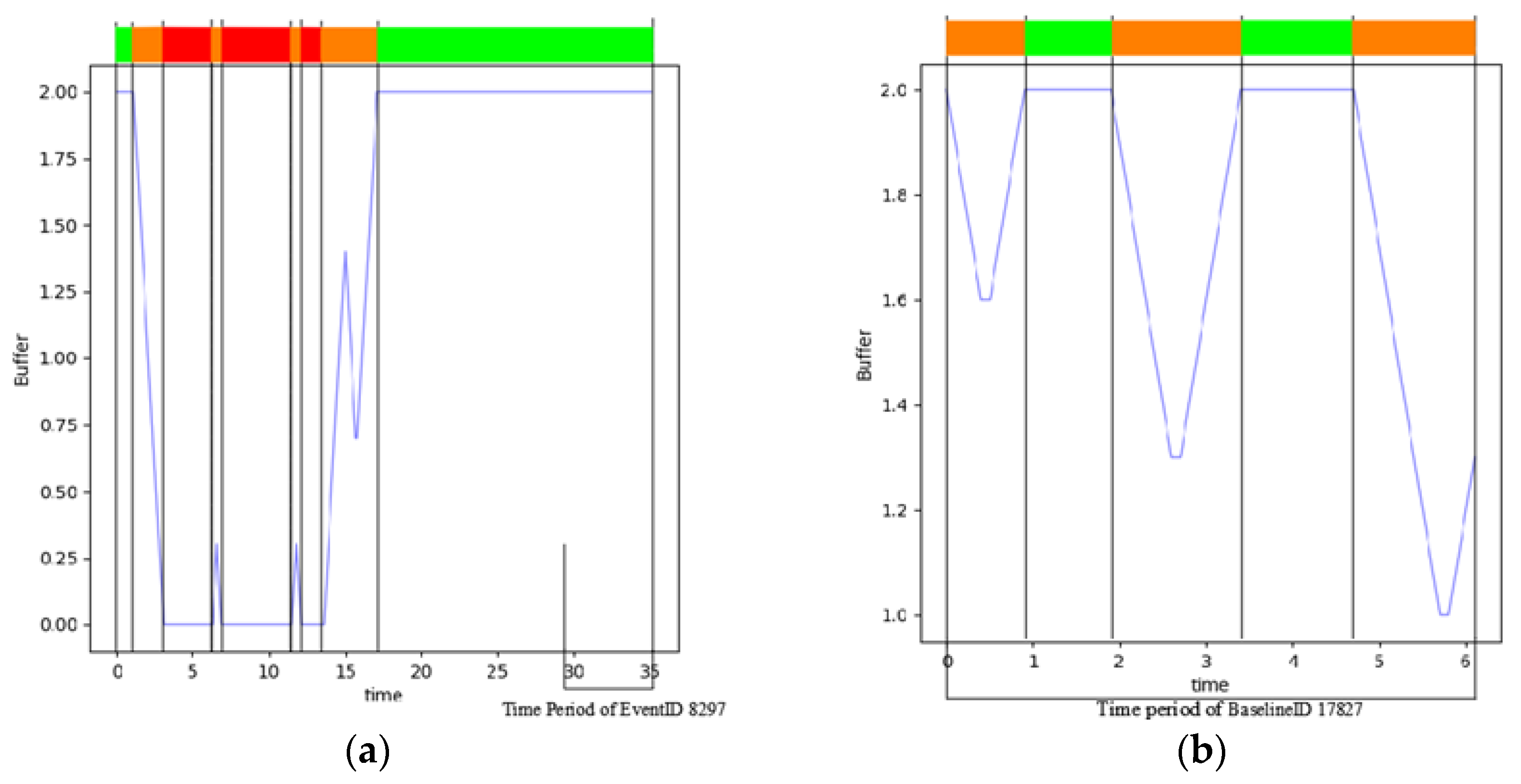

3. Distraction Risk Level Classification Methods

3.1. Experimental Data

- Crash: any contact between the subject vehicle and an object, whether moving or stationary, at any speed, with measurable transfer or dissipation of kinetic energy;

- Near-crash: any situation that requires the subject vehicle or any other vehicle, pedestrian, bicyclist, or animal to perform a rapid evasive maneuver to avoid a crash;

- Baseline: any “normal driving” and “typical driver behavior” in the sample.

3.2. Distraction Risk-Level Classification Based on Driver’s Gaze

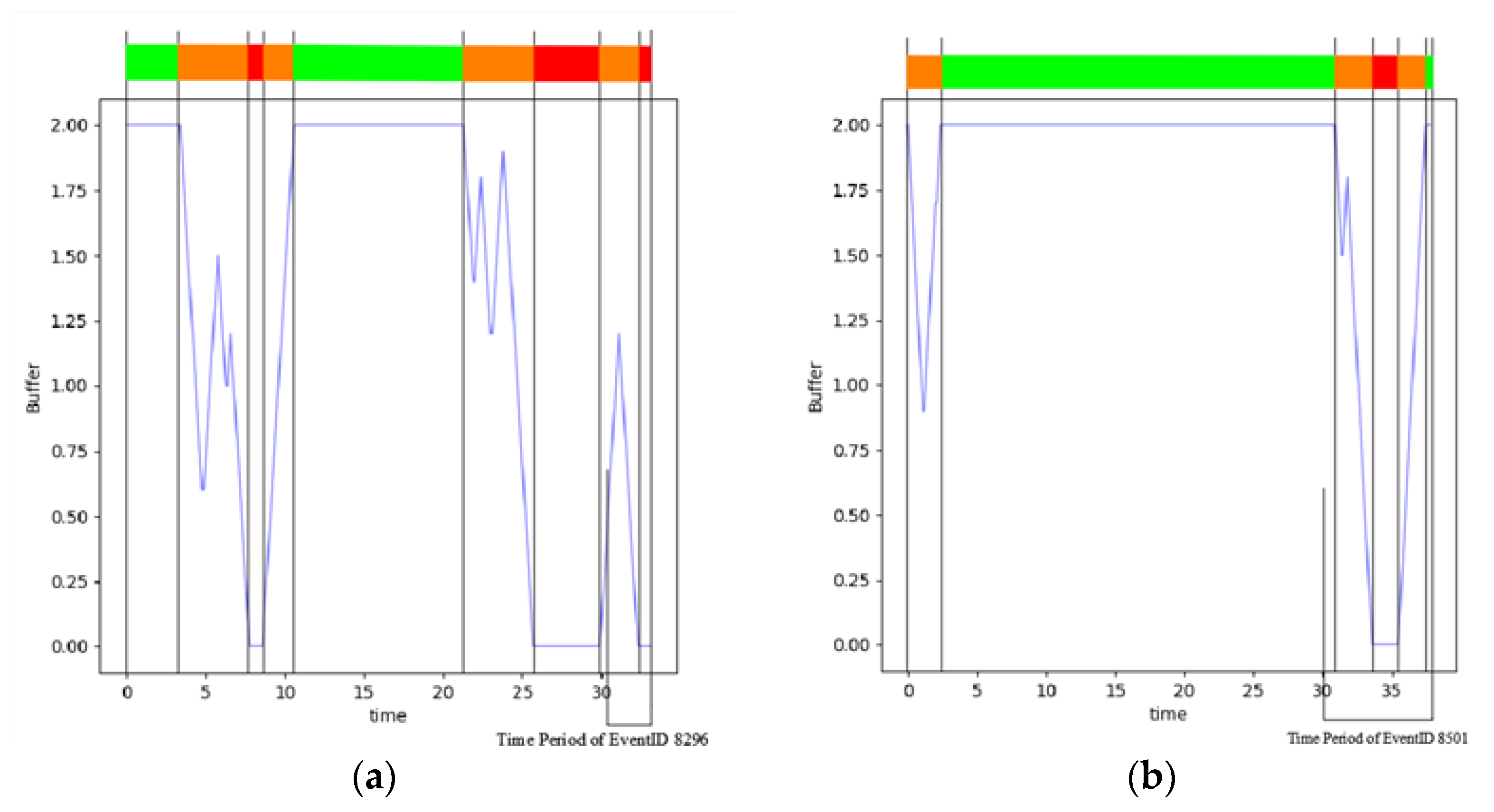

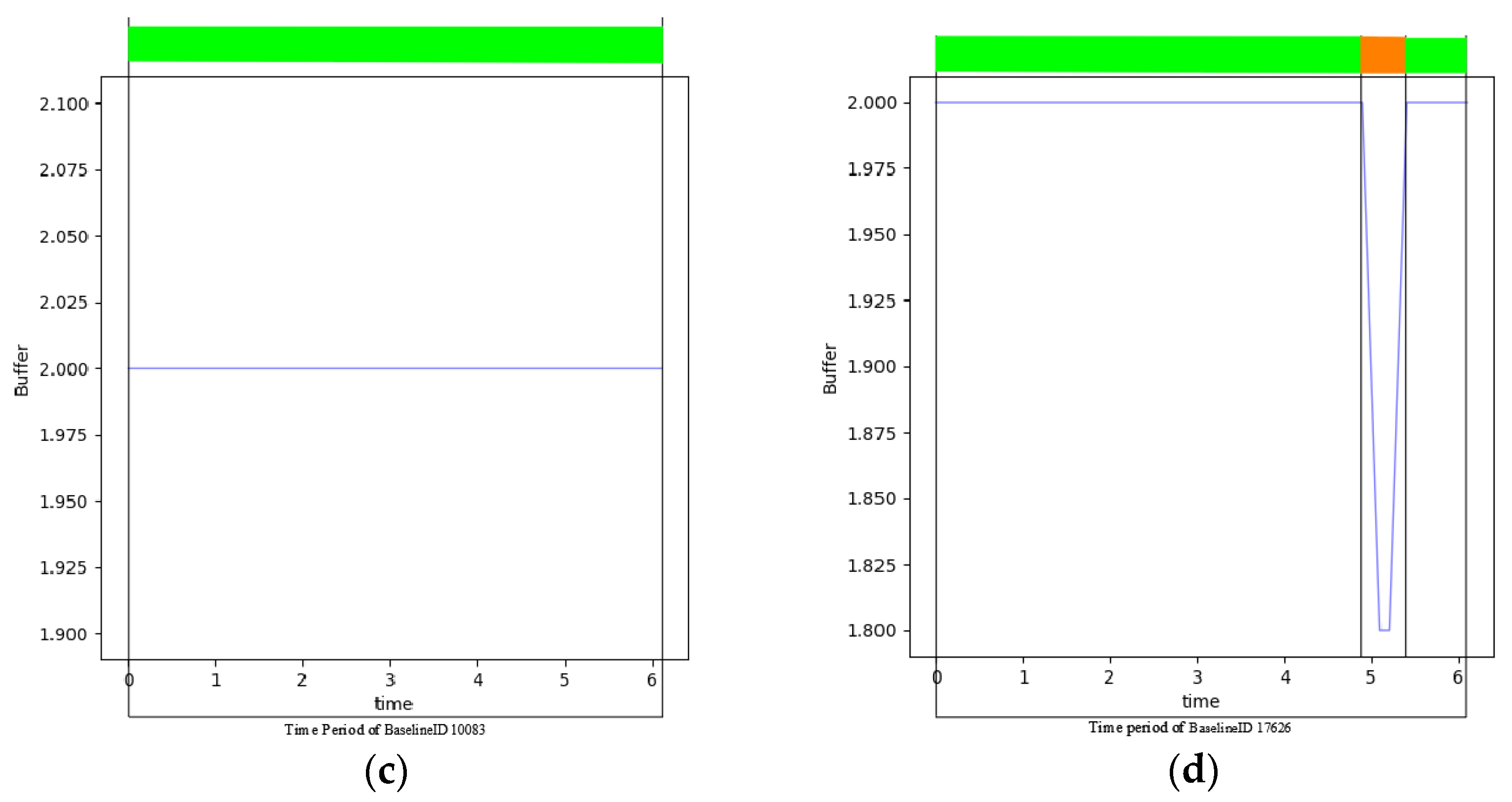

3.2.1. AttenD Algorithm

- Initially set the visual buffer to 2 s;

- When the driver’s gaze leaves the road ahead, the visual buffer decreases at a rate of 1 s per second;

- When the driver’s gaze returns to the road ahead, the visual buffer stays unchanged for the first 0.1 s and then rises at 1 s per second;

- When the driver’s gaze moves to the instrument cluster, rearview mirror, etc., the visual buffer stays unchanged for the first 1 s and then decreases at 1 s per second.
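The four rules above can be read as a small state machine over a sampled gaze signal. The following is a minimal sketch under stated assumptions, not the authors' implementation: the function name `attend_buffer`, the three state labels, the 0.1 s sampling step, and the clamping of the buffer to the range 0 to 2 s are illustrative choices that the rules themselves do not spell out.

```python
def attend_buffer(gaze, dt=0.1, max_buffer=2.0,
                  road_hold=0.1, driving_hold=1.0):
    """Return the visual buffer (in seconds) after each gaze sample.

    gaze: sequence of labels, one per dt seconds (labels are hypothetical):
          "road"    - gaze on the road ahead
          "driving" - instrument cluster, mirrors, etc.
          anything else is treated as a glance away from the road.
    """
    # Work in integer steps so repeated additions do not drift.
    max_steps = round(max_buffer / dt)
    road_hold_steps = round(road_hold / dt)
    driving_hold_steps = round(driving_hold / dt)

    buffer_steps = max_steps  # rule 1: start with a full 2 s buffer
    previous = None
    run = 0                   # samples spent in the current gaze state
    trace = []
    for state in gaze:
        run = run + 1 if state == previous else 1
        previous = state
        if state == "road":
            # rule 3: hold for 0.1 s, then refill at 1 s per second
            if run > road_hold_steps:
                buffer_steps += 1
        elif state == "driving":
            # rule 4: hold for 1 s, then drain at 1 s per second
            if run > driving_hold_steps:
                buffer_steps -= 1
        else:
            # rule 2: drain at 1 s per second
            buffer_steps -= 1
        # Assumption: the buffer is bounded by 0 and its initial value.
        buffer_steps = max(0, min(max_steps, buffer_steps))
        trace.append(buffer_steps * dt)
    return trace


samples = ["other"] * 10 + ["road"] * 5 + ["driving"] * 15
trace = attend_buffer(samples)
```

In this sketch, 1 s looking away, 0.5 s back on the road, and then 1.5 s on the instrument cluster leave the buffer at about 0.9 s.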

3.2.2. Gaze Location Categories

3.2.3. Results

3.3. Distraction Risk-Level Classification Based on Secondary Driving Tasks

3.3.1. Secondary Driving Tasks

3.3.2. Odds Ratio

3.3.3. Results

4. Accident Occurrence Prediction

4.1. Factors Influencing the Occurrence of Accidents

4.1.1. Driver Characteristics

4.1.2. Road Environment Factors

4.2. Prediction Model

4.2.1. Hyperparameter Optimization

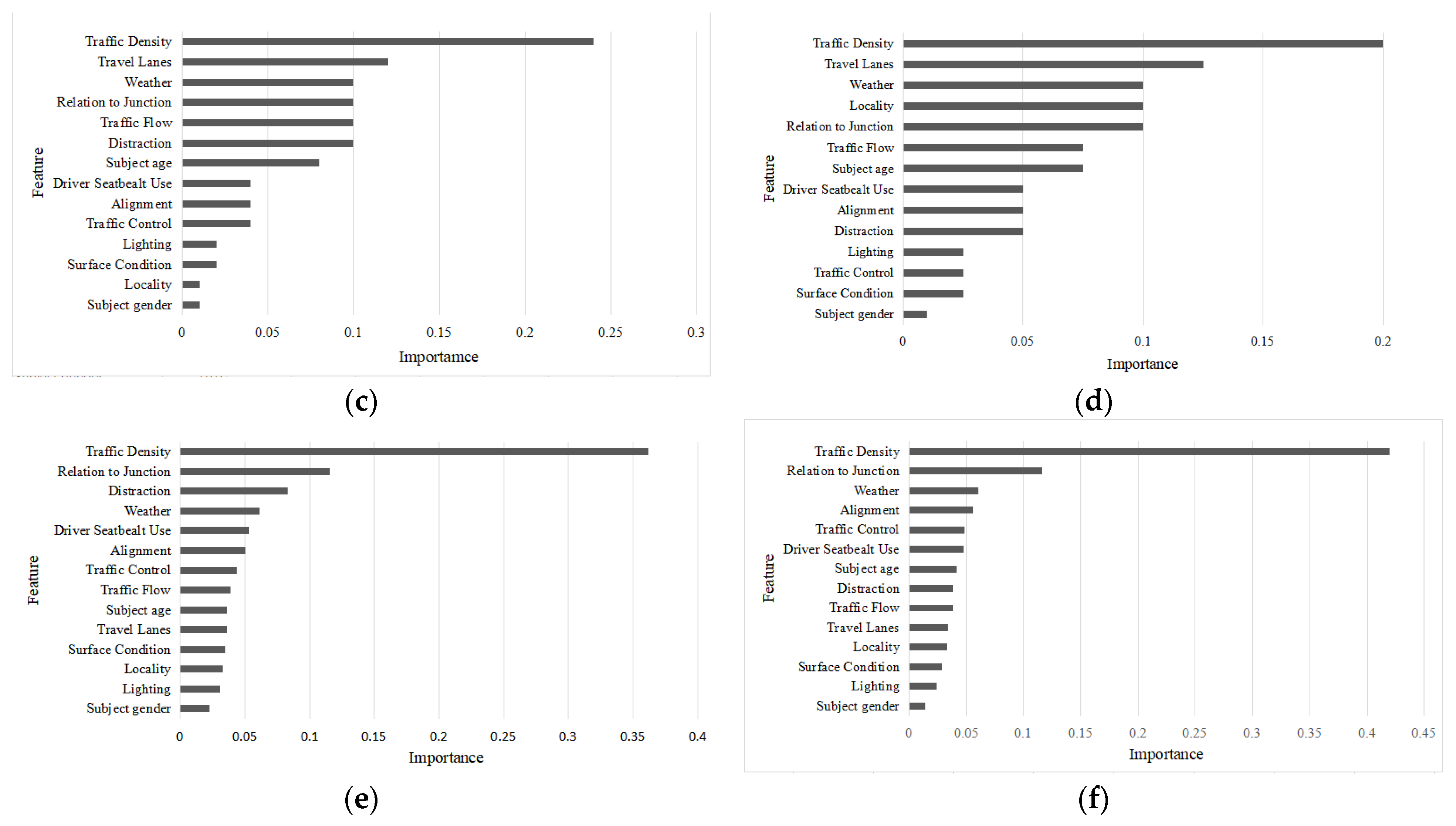

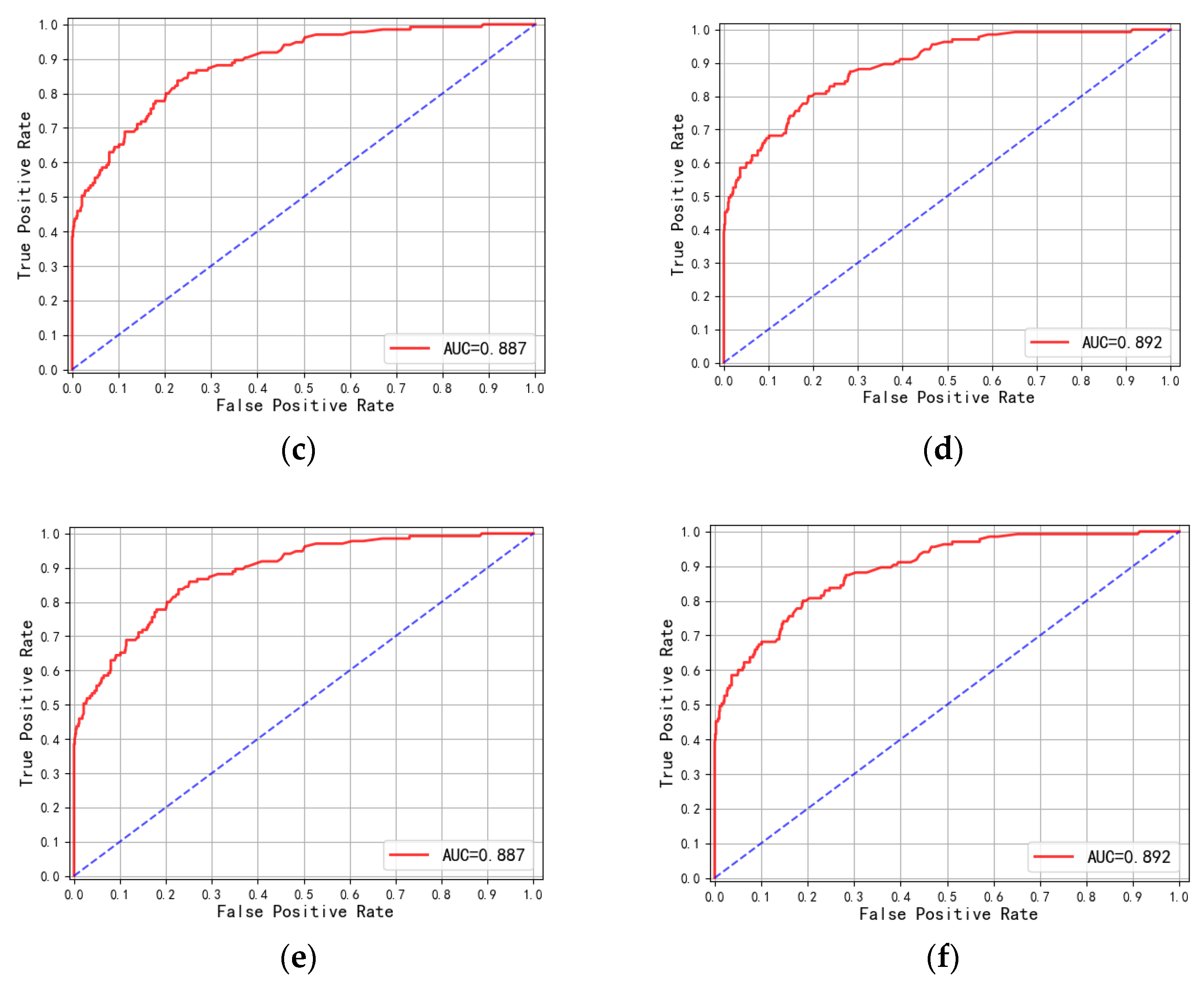

4.2.2. Results

4.2.3. Comparison of Prediction Model Results

5. Discussion

6. Conclusions and Future Works

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Siya, A.; Ssentongo, B.; Abila, D.B.; Kato, A.M.; Onyuth, H.; Mutekanga, D.; Ongom, I.; Aryampika, E.; Lukwa, A.T. Perceived factors associated with boda-boda (motorcycle) accidents in Kampala, Uganda. Traffic Inj. Prev. 2019, 20, S133–S136.

- NHTSA’s National Center for Statistics and Analysis. Distracted Driving 2020 (Research Note. Report No. DOT HS 813 309). Available online: https://crashstats.nhtsa.dot.gov/Api/Public/ViewPublication/813309 (accessed on 5 December 2022).

- Liberty, E.; Mazzae, E.; Garrott, W.; Goodman, M. NHTSA Driver Distraction Research: Past Present and Future. In Proceedings of the 17th International Technical Conference on the Enhanced Safety of Vehicles, Amsterdam, The Netherlands, 4–7 June 2001.

- Jegham, I.; Ben Khalifa, A.; Alouani, I.; Mahjoub, M.A. A novel public dataset for multimodal multiview and multispectral driver distraction analysis: 3MDAD. Signal Process. Image Commun. 2020, 88, 115960.

- Wester, A.E.; Bockner, K.B.E.; Volkerts, E.R.; Verster, J.C.; Kenemans, J.L. Event-related potentials and secondary task performance during simulated driving. Accid. Anal. Prev. 2008, 40, 1–7.

- Yang, S.; Kuo, J.; Lenné, M.G. Effects of Distraction in On-Road Level 2 Automated Driving: Impacts on Glance Behavior and Takeover Performance. Hum. Factors 2021, 63, 1485–1497.

- Harbluk, J.L.; Noy, Y.I.; Trbovich, P.L.; Eizenman, M. An on-road assessment of cognitive distraction: Impacts on drivers’ visual behavior and braking performance. Accid. Anal. Prev. 2007, 39, 372–379.

- Su, L.; Sun, C.; Cao, D.; Khajepour, A. Efficient Driver Anomaly Detection via Conditional Temporal Proposal and Classification Network. IEEE Trans. Comput. Soc. Syst. 2022, 1–10.

- Raouf, I.; Khan, A.; Khalid, S.; Sohail, M.; Azad, M.M.; Kim, H.S. Sensor-Based Prognostic Health Management of Advanced Driver Assistance System for Autonomous Vehicles: A Recent Survey. Mathematics 2022, 10, 3233.

- Rogers, M.; Zhang, Y.; Kaber, D.; Liang, Y.; Gangakhedkar, S. The Effects of Visual and Cognitive Distraction on Driver Situation Awareness; Springer: Berlin/Heidelberg, Germany, 2011; pp. 186–195.

- Gao, J.; Davis, G.A. Using naturalistic driving study data to investigate the impact of driver distraction on driver’s brake reaction time in freeway rear-end events in car-following situation. J. Saf. Res. 2017, 63, 195–204.

- Kashevnik, A.; Shchedrin, R.; Kaiser, C.; Stocker, A. Driver Distraction Detection Methods: A Literature Review and Framework. IEEE Access 2021, 9, 60063–60076.

- Chand, A.; Bhasi, A.B. Effect of Driver Distraction Contributing Factors on Accident Causations: A Review. AIP Conf. Proc. 2019, 2134, 060004.

- Elvik, R. Effects of Mobile Phone Use on Accident Risk Problems of Meta-Analysis When Studies Are Few and Bad. Transp. Res. Rec. 2011, 2236, 20–26.

- Drews, F.A.; Pasupathi, M.; Strayer, D.L. Passenger and cell phone conversations in simulated driving. J. Exp. Psychol. Appl. 2008, 14, 392–400.

- Choudhary, P.; Velaga, N.R. Performance Degradation During Sudden Hazardous Events: A Comparative Analysis of Use of a Phone and a Music Player During Driving. IEEE Trans. Intell. Transp. Syst. 2019, 20, 4055–4065.

- Pettitt, M.; Burnett, G.; Stevens, A. Defining Driver Distraction. In Proceedings of the 12th World Congress on Intelligent Transport Systems, San Francisco, CA, USA, 6–10 November 2005.

- Wu, Q. An Overview of Driving Distraction Measure Methods. In Proceedings of the 10th IEEE International Conference on Computer-Aided Industrial Design and Conceptual Design, Wenzhou, China, 26–29 November 2009; pp. 2391–2394.

- Yekhshatyan, L.; Lee, J.D. Changes in the Correlation Between Eye and Steering Movements Indicate Driver Distraction. IEEE Trans. Intell. Transp. Syst. 2013, 14, 136–145.

- Miyaji, M.; Kawanaka, H.; Oguri, K. Driver’s cognitive distraction detection using physiological features by the adaboost. In Proceedings of the 2009 12th International IEEE Conference on Intelligent Transportation Systems, St Louis, MO, USA, 4–7 October 2009; pp. 1–6.

- Tivesten, E.; Dozza, M. Driving context and visual-manual phone tasks influence glance behavior in naturalistic driving. Transp. Res. Part F-Traffic Psychol. Behav. 2014, 26, 258–272.

- Victor, T.; Dozza, M.; Bärgman, J.; Boda, C.-N.; Engström, J.; Markkula, G. Analysis of Naturalistic Driving Study Data: Safer Glances, Driver Inattention, and Crash Risk; TRB: Washington, DC, USA, 2014.

- Sodhi, M.; Reimer, B.; Llamazares, I. Glance analysis of driver eye movements to evaluate distraction. Behav. Res. Methods Instrum. Comput. 2002, 34, 529–538.

- Strayer, D.L.; Turrill, J.; Cooper, J.M.; Coleman, J.R.; Medeiros-Ward, N.; Biondi, F. Assessing Cognitive Distraction in the Automobile. Hum. Factors 2015, 57, 1300–1324.

- Kong, X.; Das, S.; Zhang, Y. Mining patterns of near-crash events with and without secondary tasks. Accid. Anal. Prev. 2021, 157, 106162.

- Carney, C.; Harland, K.K.; McGehee, D.V. Using event-triggered naturalistic data to examine the prevalence of teen driver distractions in rear-end crashes. J. Saf. Res. 2016, 57, 47–52.

- Neyens, D.M.; Boyle, L.N. The effect of distractions on the crash types of teenage drivers. Accid. Anal. Prev. 2007, 39, 206–212.

- Liang, O.S.; Yang, C.S.C. Determining the risk of driver-at-fault events associated with common distraction types using naturalistic driving data. J. Saf. Res. 2021, 79, 45–50.

- Pešić, D.; Pešić, D.; Trifunović, A.; Čičević, S. Application of Logistic Regression Model to Assess the Impact of Smartwatch on Improving Road Traffic Safety: A Driving Simulator Study. Mathematics 2022, 10, 1403.

- Xing, Y.; Lv, C.; Wang, H.; Cao, D.; Velenis, E.; Wang, F.Y. Driver Activity Recognition for Intelligent Vehicles: A Deep Learning Approach. IEEE Trans. Veh. Technol. 2019, 68, 5379–5390.

- Bharadwaj, N.; Edara, P.; Sun, C. Sleep disorders and risk of traffic crashes: A naturalistic driving study analysis. Saf. Sci. 2021, 140, 105295.

- Kircher, K.; Ahlström, C. Issues Related to the Driver Distraction Detection Algorithm AttenD. In Proceedings of the 1st International Conference on Driver Distraction and Inattention (DDI 2009), Gothenburg, Sweden, 28–29 September 2009.

- Ahlstrom, C.; Georgoulas, G.; Kircher, K. Towards a Context-Dependent Multi-Buffer Driver Distraction Detection Algorithm. IEEE Trans. Intell. Transp. Syst. 2022, 23, 4778–4790.

- Yao, Y.; Zhao, X.H.; Feng, X.F.; Rong, J. Assessment of Secondary Tasks Based on Drivers’ Eye-Movement Features. IEEE Access 2020, 8, 136108–136118.

- Jenkins, S.; Codjoe, J.; Alecsandru, C.; Ishak, S. Exploration of the SHRP 2 NDS: Development of a Distracted Driving Prediction Model. In Proceedings of the Advances in Human Aspects of Transportation, Cham, Germany, 7 July 2016; pp. 231–242.

- Dupépé, E.B.; Kicielinski, K.P.; Gordon, A.S.; Walters, B.C. What is a Case-Control Study? Neurosurgery 2019, 84, 819–826.

- Yang, L.; Aghaabbasi, M.; Ali, M.; Jan, A.; Bouallegue, B.; Javed, M.F.; Salem, N.M. Comparative Analysis of the Optimized KNN, SVM, and Ensemble DT Models Using Bayesian Optimization for Predicting Pedestrian Fatalities: An Advance towards Realizing the Sustainable Safety of Pedestrians. Sustainability 2022, 14, 10467.

- Chen, F.; Chen, S.; Ma, X. Analysis of hourly crash likelihood using unbalanced panel data mixed logit model and real-time driving environmental big data. J. Saf. Res. 2018, 65, 153–159.

- Chen, L.H.; Wang, P. Risk Factor Analysis of Traffic Accident for Different Age Group Based on Adaptive Boosting. In Proceedings of the 4th International Conference on Transportation Information and Safety (ICTIS), Banff, Canada, 8–10 August 2017; pp. 812–817.

- Malik, S.; El Sayed, H.; Khan, M.A.; Khan, M.J. Road Accident Severity Prediction: A Comparative Analysis of Machine Learning Algorithms. In Proceedings of the IEEE Global Conference on Artificial Intelligence and Internet of Things (GCAIoT), Dubai, United Arab Emirates, 12–16 December 2021; pp. 69–74.

- Osman, O.; Hajij, M.; Bakhit, P.; Ishak, S. Prediction of Near-Crashes from Observed Vehicle Kinematics using Machine Learning. Transp. Res. Rec. J. Transp. Res. Board 2019, 2673, 036119811986262.

- Mokoatle, M.; Marivate, D.; Bukohwo, E. Predicting Road Traffic Accident Severity using Accident Report Data in South Africa. In Proceedings of the 20th Annual International Conference on Digital Government Research, New York, NY, USA, 18–20 June 2019; pp. 11–17.

- Guo, F.; Fang, Y.J. Individual driver risk assessment using naturalistic driving data. Accid. Anal. Prev. 2013, 61, 3–9.

- Xiong, X.; Chen, L.; Liang, J. Analysis of Roadway Traffic Accidents Based on Rough Sets and Bayesian Networks. PROMET-Traffic Transp. 2018, 30, 71.

- Australian Bureau of Statistics. Age Standard. Available online: https://www.abs.gov.au/statistics/standards/age-standard/latest-release#cite-window1 (accessed on 5 December 2022).

- Ma, Z.; Shao, C.; Yue, H.; Ma, S. Analysis of the Logistic Model for Accident Severity on Urban Road Environment. In Proceedings of the 2009 IEEE Intelligent Vehicles Symposium, Xi’an, China, 3–5 June 2009; pp. 983–987.

- Noland, R.B.; Oh, L. The effect of infrastructure and demographic change on traffic-related fatalities and crashes: A case study of Illinois county-level data. Accid. Anal. Prev. 2004, 36, 525–532.

- Hyodo, S.; Hasegawa, K. Factors Affecting Analysis of the Severity of Accidents in Cold and Snowy Areas Using the Ordered Probit Model. Asian Transp. Stud. 2021, 7, 100035.

- Usman, T.; Fu, L.; Miranda-Moreno, L.F. Quantifying safety benefit of winter road maintenance: Accident frequency modeling. Accid. Anal. Prev. 2010, 42, 1878–1887.

- Khorashadi, A.; Niemeier, D.; Shankar, V.; Mannering, F. Differences in rural and urban driver-injury severities in accidents involving large-trucks: An exploratory analysis. Accid. Anal. Prev. 2005, 37, 910–921.

- Chimba, D.; Sando, T.; Kwigizile, V. Effect of bus size and operation to crash occurrences. Accid. Anal. Prev. 2010, 42, 2063–2067.

- Awan, H.H.; Hussain, A.; Javed, M.F.; Qiu, Y.J.; Alrowais, R.; Mohamed, A.M.; Fathi, D.; Alzahrani, A.M. Predicting Marshall Flow and Marshall Stability of Asphalt Pavements Using Multi Expression Programming. Buildings 2022, 12, 314.

- Eraqi, H.M.; Abouelnaga, Y.; Saad, M.H.; Moustafa, M.N. Driver Distraction Identification with an Ensemble of Convolutional Neural Networks. J. Adv. Transp. 2019, 2019, 4125865.

- Klauer, S.; Neale, V.; Dingus, T.; Ramsey, D.; Sudweeks, J. Driver Inattention: A Contributing Factor to Crashes and Near-Crashes. Proc. Hum. Factors Ergon. Soc. Annu. Meet. 2005, 49, 1922–1926.

- Née, M.; Contrand, B.; Orriols, L.; Gil-Jardiné, C.; Galéra, C.; Lagarde, E. Road safety and distraction, results from a responsibility case-control study among a sample of road users interviewed at the emergency room. Accid. Anal. Prev. 2019, 122, 19–24.

- Violanti, J.M.; Marshall, J.R. Cellular phones and traffic accidents: An epidemiological approach. Accid. Anal. Prev. 1996, 28, 265–270.

- Mousa, S.R.; Bakhit, P.R.; Ishak, S. An extreme gradient boosting method for identifying the factors contributing to crash/near-crash events: A naturalistic driving study. Can. J. Civ. Eng. 2019, 46, 712–721.

- Naji, H.A.H.; Xue, Q.; Lyu, N.; Wu, C.; Zheng, K. Evaluating the Driving Risk of Near-Crash Events Using a Mixed-Ordered Logit Model. Sustainability 2018, 10, 2868.

- Akalin, K.B.; Karacasu, M.; Altin, A.Y.; Ergul, B. Curve Estimation of Number of People Killed in Traffic Accidents in Turkey. In Proceedings of the World Multidisciplinary Earth Sciences Symposium (WMESS), Prague, Czech Republic, 5–9 September 2016.

- Clarke, D.D.; Ward, P.; Bartle, C.; Truman, W. The role of motorcyclist and other driver behaviour in two types of serious accident in the UK. Accid. Anal. Prev. 2007, 39, 974–981.

- Fior, J.; Cagliero, L. Correlating Extreme Weather Conditions With Road Traffic Safety: A Unified Latent Space Model. IEEE Access 2022, 10, 73005–73018.

- Edwards, J.B. The relationship between road accident severity and recorded weather. J. Saf. Res. 1998, 29, 249–262.

- Jamal, A.; Zahid, M.; Tauhidur Rahman, M.; Al-Ahmadi, H.M.; Almoshaogeh, M.; Farooq, D.; Ahmad, M. Injury severity prediction of traffic crashes with ensemble machine learning techniques: A comparative study. Int. J. Inj. Contr. Saf. Promot. 2021, 28, 408–427.

- Janicak, C.A. Differences in relative risks for fatal occupational highway transportation accidents. J. Saf. Res. 2003, 34, 539–545.

- Zhang, R.; Qu, X. The effects of gender, age and personality traits on risky driving behaviors. J. Shenzhen Univ. Sci. Eng. 2016, 33, 646.

- Wanvik, P.O. Effects of road lighting: An analysis based on Dutch accident statistics 1987–2006. Accid. Anal. Prev. 2009, 41, 123–128.

- Yannis, G.; Kondyli, A.; Mitzalis, N. Effect of lighting on frequency and severity of road accidents. Proc. Inst. Civ. Eng.-Transp. 2013, 166, 271–281.

- Xiong, X.X.; Chen, L.; Liang, J. Vehicle Driving Risk Prediction Based on Markov Chain Model. Discret. Dyn. Nat. Soc. 2018, 2018, 4954621.

| Category | Gaze Location | Definition |
|---|---|---|
| Ahead | Forward | Any glance out the straight forward windshield. When the vehicle is turning, these glances may not be directed straight forward but toward the vehicle’s heading. |
| | Left Forward | Any glance out the left forward windshield. |
| | Right Forward | Any glance out the right forward windshield. |
| | Right Window | Any glance to the right side window. |
| | Left Window | Any glance to the left side window. |
| Non-driving Needs | Eyes Closed | Any time that the participant’s eyes are closed outside of normal blinking. |
| | Cell Phone | Any glance at a cell phone, no matter where it is located. |
| | Interior Object | Any glance at an identifiable object in the vehicle other than a cell phone. |
| | Passenger | Any glance to a passenger, whether in the front seat or rear seat of the vehicle. |
| | Center Stack | Any glance at the vehicle’s center stack. |
| Driving Needs | Instrument Cluster | Any glance to the instrument cluster underneath the dashboard. |
| | Rearview Mirror | Any glance to the rearview mirror or equipment located around it. |
| | Left Mirror | Any glance to the left side mirror. |
| | Right Mirror | Any glance to the right side mirror. |
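The gaze table can be expressed as a simple lookup that feeds a buffer-style algorithm such as AttenD. This is a hedged sketch: it assumes that each row without a category label belongs to the nearest labelled row above it, and the names `GAZE_CATEGORY`, `BUFFER_STATE`, and `buffer_state` are hypothetical helpers, not part of the source.

```python
# Gaze location -> category, following the table's row grouping (assumed).
GAZE_CATEGORY = {
    "forward": "Ahead",
    "left forward": "Ahead",
    "right forward": "Ahead",
    "right window": "Ahead",
    "left window": "Ahead",
    "eyes closed": "Non-driving Needs",
    "cell phone": "Non-driving Needs",
    "interior object": "Non-driving Needs",
    "passenger": "Non-driving Needs",
    "center stack": "Non-driving Needs",
    "instrument cluster": "Driving Needs",
    "rearview mirror": "Driving Needs",
    "left mirror": "Driving Needs",
    "right mirror": "Driving Needs",
}

# Hypothetical mapping from category to the three buffer behaviours:
# refill, delayed drain, and immediate drain.
BUFFER_STATE = {
    "Ahead": "road",
    "Driving Needs": "driving",
    "Non-driving Needs": "other",
}


def buffer_state(gaze_location):
    """Map a coded gaze location to a buffer behaviour label."""
    category = GAZE_CATEGORY[gaze_location.strip().lower()]
    return BUFFER_STATE[category]
```

Lookups are case-insensitive, so `buffer_state("Rearview mirror")` and `buffer_state("Rearview Mirror")` give the same label.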

| No. | Secondary Driving Task | Count | Percentage |
|---|---|---|---|
| 1 | Lost in thought | 6 | 0.06% |
| 2 | Reading | 62 | 0.60% |
| 3 | Dialing hand-held cell phone | 105 | 1.02% |
| 4 | Talking/singing | 593 | 5.76% |
| 5 | Smoking cigar/cigarette | 203 | 1.97% |
| 6 | Biting nails/cuticles | 101 | 0.98% |
| 7 | Passenger in adjacent seat | 1499 | 14.55% |
| 8 | Talking/listening on cell phone | 897 | 8.71% |
| 9 | Adjusting radio | 437 | 4.24% |
| 10 | Adjusting other devices integral to vehicle | 156 | 1.51% |
| 11 | Other external distraction | 507 | 4.92% |
| 12 | Inattention to the Forward Roadway Left mirror | 787 | 7.64% |
| 13 | Inattention to the Forward Roadway Center mirror | 1628 | 15.80% |
| 14 | Inattention to the Forward Roadway Right mirror | 206 | 2.00% |
| 15 | Eating without utensils | 230 | 2.23% |
| 16 | Drinking with lid and straw | 30 | 0.29% |
| 17 | Drinking from an open container | 55 | 0.53% |
| 18 | Combing/brushing/fixing hair | 57 | 0.55% |
| 19 | Other personal hygiene | 247 | 2.40% |
| 20 | Passenger in rear seat | 58 | 0.56% |
| 21 | Child in rear seat | 35 | 0.34% |
| 22 | Reaching for object (not cell phone) | 141 | 1.37% |
| 23 | Cell phone-Other | 85 | 0.83% |
| 24 | Adjusting climate control | 61 | 0.59% |
| 25 | Inattention to the Forward Roadway Left window | 829 | 8.05% |
| 26 | Dancing | 42 | 0.41% |
| 27 | Cognitive-Other | 19 | 0.18% |
| 28 | Applying make-up | 46 | 0.45% |
| 29 | Moving object in vehicle | 8 | 0.08% |
| 30 | Animal/Object in Vehicle-Other | 201 | 1.95% |
| 31 | Locating/reaching/answering cell phone | 18 | 0.17% |
| 32 | Operating PDA | 5 | 0.05% |
| 33 | Inserting/retrieving CD | 5 | 0.05% |
| 34 | Looking at pedestrian | 13 | 0.13% |
| 35 | Inattention to the Forward Roadway Right window | 303 | 2.94% |
| 36 | Eating with utensils | 230 | 2.23% |
| 37 | Reaching for cigar/cigarette | 9 | 0.09% |
| 38 | Lighting cigar/cigarette | 10 | 0.10% |
| 39 | Shaving | 1 | 0.01% |
| 40 | Brushing/flossing teeth | 24 | 0.23% |
| 41 | Removing/adjusting jewelry | 14 | 0.14% |
| 42 | Removing/inserting contact lenses | 10 | 0.10% |
| 43 | Fatigue | 458 | 4.45% |
| 44 | Child in adjacent seat | 3 | 0.03% |
| 45 | Pet in vehicle | 7 | 0.07% |
| 46 | Dialing hand-held cell phone using quick keys | 1 | 0.01% |
| 47 | Dialing hands-free cell phone using voice-activated software | 1 | 0.01% |
| 48 | PDA-other | 3 | 0.03% |
| 49 | Viewing PDA | 1 | 0.01% |
| 50 | Inserting/retrieving cassette | 2 | 0.02% |
| 51 | Looking at an object | 67 | 0.65% |
| 52 | Distracted by construction | 3 | 0.03% |
| 53 | Looked but did not see | 2 | 0.02% |
| 54 | Insect in vehicle | 1 | 0.01% |
| 55 | Looking at previous crash or incident | 1 | 0.01% |

| | Frequency of Secondary Driving Tasks | Frequency of Undistracted Samples |
|---|---|---|
| Frequency of Events | | |
| Frequency of Baselines | | |

| Category | Category Criteria |
|---|---|
| High-Risk Distraction | ai > 1, LCL > 1 |
| Medium-Risk Distraction | ai > 1, LCL < 1 |
| Low-Risk Distraction | ai < 1, UCL > 1 |
| No-Risk Distraction | ai < 1, UCL < 1 |

| Category | Category Criteria |
|---|---|
| Relatively Dangerous Distraction | Secondary Driving Task Only Present in Event |
| Relatively Safe Distraction | Secondary Driving Task Only Present in Baseline |

| Secondary Driving Task | Category | Odds Ratio | UCL | LCL |
|---|---|---|---|---|
| Lost in thought | High-Risk Distraction | 12.33 | 61.35 | 2.48 |
| Applying make-up | Medium-Risk Distraction | 1.17 | 3.30 | 0.42 |
| Eating without utensils | Low-Risk Distraction | 0.86 | 1.47 | 0.50 |
| Smoking cigar/cigarette | No-Risk Distraction | 0.44 | 0.94 | 0.20 |
| Looked but did not see | Relatively Dangerous Distraction | - | - | - |
| Lighting cigar/cigarette | Relatively Safe Distraction | - | - | - |
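The classification criteria can be illustrated with a short sketch that computes an odds ratio from a 2x2 event/baseline table and then applies the thresholds. Several points are assumptions rather than facts from the source: the 95% interval uses the standard log-odds (Woolf) approximation, `ai` is read as the odds ratio of task i, boundary cases equal to 1 fall into the lower branch, and the function names and the example counts are hypothetical.

```python
import math


def odds_ratio_ci(event_task, event_none, base_task, base_none, z=1.96):
    """Odds ratio with an approximate confidence interval (all counts > 0)."""
    odds_ratio = (event_task * base_none) / (event_none * base_task)
    # Standard error of log(OR) under the Woolf approximation (assumed method).
    se = math.sqrt(1 / event_task + 1 / event_none
                   + 1 / base_task + 1 / base_none)
    lcl = math.exp(math.log(odds_ratio) - z * se)
    ucl = math.exp(math.log(odds_ratio) + z * se)
    return odds_ratio, lcl, ucl


def classify_by_odds_ratio(odds_ratio, lcl, ucl):
    """Apply the four odds-ratio criteria from the table."""
    if odds_ratio > 1:
        return "High-Risk Distraction" if lcl > 1 else "Medium-Risk Distraction"
    return "Low-Risk Distraction" if ucl > 1 else "No-Risk Distraction"


def classify_task(event_task, event_none, base_task, base_none):
    """Classify one task, handling tasks seen only in events or baselines."""
    if event_task > 0 and base_task == 0:
        return "Relatively Dangerous Distraction"
    if event_task == 0 and base_task > 0:
        return "Relatively Safe Distraction"
    return classify_by_odds_ratio(
        *odds_ratio_ci(event_task, event_none, base_task, base_none))


# Reported values from the example table (odds ratio, LCL, UCL).
print(classify_by_odds_ratio(12.33, 2.48, 61.35))
print(classify_by_odds_ratio(0.44, 0.20, 0.94))
```

Applied to the reported rows, the thresholds reproduce the listed categories, for instance High-Risk for "Lost in thought" and No-Risk for "Smoking cigar/cigarette".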

| Category | Number of the Secondary Driving Tasks |
|---|---|
| High-Risk Distraction | No. 1–3 |
| Medium-Risk Distraction | No. 26–35 |
| Low-Risk Distraction | No. 15–25 |
| No-Risk Distraction | No. 4–14 |
| Relatively Dangerous Distraction | No. 53–55 |
| Relatively Safe Distraction | No. 36–52 |
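As a small illustration, the number ranges in this table can be turned into a lookup that labels a coded secondary task with its risk category. The names `RISK_RANGES` and `risk_category` are hypothetical; the ranges themselves are copied from the table.

```python
# (task-number range, category) pairs taken from the table above.
RISK_RANGES = [
    (range(1, 4), "High-Risk Distraction"),
    (range(4, 15), "No-Risk Distraction"),
    (range(15, 26), "Low-Risk Distraction"),
    (range(26, 36), "Medium-Risk Distraction"),
    (range(36, 53), "Relatively Safe Distraction"),
    (range(53, 56), "Relatively Dangerous Distraction"),
]


def risk_category(task_number):
    """Return the risk category for a secondary-task number (1 to 55)."""
    for numbers, category in RISK_RANGES:
        if task_number in numbers:
            return category
    raise ValueError(f"unknown secondary task number: {task_number}")
```

A categorical feature built this way has six levels instead of 55, which is the form a downstream prediction model could consume.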

| Attributes | Subcategories | Count | Percentage |
|---|---|---|---|
| Age | 18–24 | 1920 | 45.38% |
| | 25–44 | 1408 | 33.38% |
| | 45–64 | 835 | 19.74% |
| | 65+ | 68 | 1.61% |
| Gender | Male | 2457 | 58.07% |
| | Female | 1774 | 41.93% |
| Driver Seatbelt Use | Lap/shoulder belt | 3581 | 84.64% |
| | None used | 650 | 15.36% |
| Driver Distraction Classification Based on Driver’s Gaze | High-Risk Distraction | 136 | 3.21% |
| | Medium-Risk Distraction | 2694 | 63.67% |
| | Low-Risk Distraction | 1401 | 33.11% |
| Driver Distraction Classification Based on Secondary Driving Tasks | High-Risk Distraction | 67 | 1.58% |
| | Medium-Risk Distraction | 211 | 4.99% |
| | Low-Risk Distraction | 530 | 12.53% |
| | No-Risk Distraction | 3237 | 76.51% |
| | Relatively Dangerous Distraction | 4 | 0.09% |
| | Relatively Safe Distraction | 182 | 4.30% |

| Attributes | Subcategories | Count | Percentage |
|---|---|---|---|
| Surface Condition | Dry | 3782 | 89.39% |
| | Wet | 414 | 9.78% |
| | Snowy | 27 | 0.64% |
| | Icy | 7 | 0.17% |
| | Muddy | 1 | 0.02% |
| Traffic Density | A | 2132 | 50.39% |
| | B | 1807 | 42.71% |
| | C | 177 | 4.18% |
| | D | 65 | 1.54% |
| | E | 22 | 0.52% |
| | F | 28 | 0.66% |
| Traffic Flow | Divided (median strip or barrier) | 2708 | 64.00% |
| | Not divided | 1286 | 30.39% |
| | One-way traffic | 138 | 3.26% |
| | No lanes | 99 | 2.34% |
| Traffic Control | Traffic signal | 350 | 8.27% |
| | No traffic control | 3674 | 86.84% |
| | Stop sign | 33 | 0.78% |
| | Traffic lanes marked | 131 | 3.10% |
| | Yield sign | 17 | 0.40% |
| | Officer or watchman | 2 | 0.05% |
| | One-way road or street | 2 | 0.05% |
| | Other | 22 | 0.52% |
| Relation to Junction | Non-Junction | 3432 | 81.12% |
| | Intersection-related | 229 | 5.41% |
| | Intersection | 313 | 7.40% |
| | Entrance/exit ramp | 137 | 3.24% |
| | Driveway, alley access, etc. | 16 | 0.38% |
| | Parking lot | 81 | 1.91% |
| | Interchange Area | 16 | 0.38% |
| | Other | 7 | 0.17% |
| Alignment | Straight level | 3626 | 85.70% |
| | Straight grade | 95 | 2.25% |
| | Curve level | 475 | 11.23% |
| | Curve grade | 34 | 0.80% |
| | Straight hillcrest | 1 | 0.02% |
| Travel Lanes | 0 | 5 | 0.12% |
| | 1 | 199 | 4.70% |
| | 2 | 2122 | 50.15% |
| | 3 | 1182 | 27.94% |
| | 4 | 595 | 14.06% |
| | 5 | 113 | 2.67% |
| | 6 | 13 | 0.31% |
| | 7 | 1 | 0.02% |
| | 8 | 1 | 0.02% |
| Locality | 8+ | 5 | 0.12% |
| | Business/industrial | 1403 | 33.16% |
| | Interstate | 1163 | 27.49% |
| | Residential | 443 | 10.47% |
| | Open Country | 1162 | 27.46% |
| | Church | 2 | 0.05% |
| | Construction Zone | 17 | 0.40% |
| | School | 4 | 0.09% |
| | Other | 37 | 0.87% |
| Lighting | Daylight | 2798 | 66.13% |
| | Darkness, lighted | 748 | 17.68% |
| | Darkness, not lighted | 411 | 9.71% |
| | Dusk | 259 | 6.12% |
| | Dawn | 15 | 0.35% |
| Weather | Clear | 3667 | 86.65% |
| | Cloudy | 226 | 5.34% |
| | Raining | 323 | 7.63% |
| | Mist | 4 | 0.09% |
| | Snowing | 12 | 0.28% |

| Model | Hyperparameter | Gaze | Secondary Driving Task |
|---|---|---|---|
| Random Forest | n_estimators | 40 | 100 |
| | max_features | 6 | 8 |
| AdaBoost | n_estimators | 50 | 40 |
| | learning_rate | 0.6 | 1 |
| XGBoost | n_estimators | 100 | 40 |
| | learning_rate | 0.11 | 0.15 |

| Model | Category | Accuracy | AUC |
|---|---|---|---|
| Random Forest | Gaze | 89.30% | 0.861 |
| | Secondary Driving Task | 88.90% | 0.857 |
| | Secondary Driving Task Without Classification | 88.68% | 0.835 |
| AdaBoost | Gaze | 89.96% | 0.887 |
| | Secondary Driving Task | 89.72% | 0.892 |
| | Secondary Driving Task Without Classification | 89.61% | 0.850 |
| XGBoost | Gaze | 90.67% | 0.874 |
| | Secondary Driving Task | 90.67% | 0.872 |
| | Secondary Driving Task Without Classification | 89.84% | 0.868 |
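For readers unfamiliar with the two metrics in this table, the sketch below shows how accuracy and AUC are commonly computed for a binary event/baseline classifier. It is a generic illustration on made-up labels and scores, not the authors' evaluation code; the AUC uses the pairwise (Mann-Whitney) definition, with ties counted as half.

```python
def accuracy(y_true, y_pred):
    """Fraction of samples whose predicted label matches the true label."""
    correct = sum(1 for t, p in zip(y_true, y_pred) if t == p)
    return correct / len(y_true)


def roc_auc(y_true, scores):
    """Probability that a random positive outscores a random negative."""
    positives = [s for t, s in zip(y_true, scores) if t == 1]
    negatives = [s for t, s in zip(y_true, scores) if t == 0]
    wins = 0.0
    for p in positives:
        for n in negatives:
            if p > n:
                wins += 1.0
            elif p == n:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


# Hypothetical data: 1 = crash/near-crash event, 0 = baseline.
y_true = [0, 0, 1, 1]
scores = [0.1, 0.4, 0.35, 0.8]
y_pred = [1 if s >= 0.5 else 0 for s in scores]

print(accuracy(y_true, y_pred))  # 0.75
print(roc_auc(y_true, scores))   # 0.75
```

Accuracy depends on the chosen decision threshold (0.5 here), whereas AUC summarizes ranking quality across all thresholds, which is why the two columns can rank the models differently.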

| Study | Model | Accuracy |
|---|---|---|
| [44] | Bayesian Networks | 60.4% |
| [68] | Markov Chain Model | 85.3% |
| Our paper | Gaze, Random Forest | 89.30% |
| | Secondary Driving Task, Random Forest | 88.90% |
| | Gaze, AdaBoost | 89.96% |
| | Secondary Driving Task, AdaBoost | 89.72% |
| | Gaze, XGBoost | 90.67% |
| | Secondary Driving Task, XGBoost | 90.67% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zheng, L.; Zhang, Y.; Ding, T.; Meng, F.; Li, Y.; Cao, S. Classification of Driver Distraction Risk Levels: Based on Driver’s Gaze and Secondary Driving Tasks. Mathematics 2022, 10, 4806. https://doi.org/10.3390/math10244806
