Predicting Fraud in Financial Payment Services through Optimized Hyper-Parameter-Tuned XGBoost Model

Abstract

1. Introduction

1.1. Types of Payment Frauds

- (1) Identity Theft: this type of fraud occurs when fraudsters steal personal and banking information and use the owner’s identity to make false purchases and transactions. No new identity is created.

- (2) Friendly Fraud: another prevalent type of payment fraud, in which a customer, after a product or service has been delivered, initiates a false chargeback claiming not to have received it. The customer thus keeps the product or service and also has the amount refunded.

- (3) Clean Fraud: the hardest type of fraud to detect. Fraudsters carefully analyze a business’s fraud-detection systems and use stolen but valid payment information, so the transactions appear legitimate.

1.2. Background

- Rapid data collection: As data volumes continue to grow rapidly, it is increasingly important to implement efficient, time-saving measures such as machine learning to identify fraudulent activity. Machine learning algorithms are designed to assess massive datasets quickly, and their capacity to gather and analyze data in real time allows them to identify fraudulent activity promptly [16].

- Easy scaling: ML models and algorithms generally become more effective as more data becomes available, because more examples allow a model to better identify the similarities and differences between various actions. Once the model has learned to distinguish legitimate from fraudulent transactions, the system can begin filtering out the fraudulent ones [17].

- Improved efficiency: Machines have several advantages over humans, including the ability to perform menial tasks and to spot patterns in vast datasets, both of which are difficult for people. This greatly shortens the time needed to detect fraud. Algorithms can examine hundreds of thousands of transactions per second with high accuracy, which reduces the time and money needed to review transactions.

- Decrease in the number of security breach cases: Machine learning technologies help financial institutions combat fraud and provide a high degree of protection for their consumers. To do this, the system compares each new transaction (including personal information, data, IP address, location, etc.) with prior transactions. Banking institutions are therefore better protected against payment and credit card fraud [18].

1.3. Motivation

- Inspectability Issues: Data filtering, data processing, and the interpretation of risk scores all need to be inspectable for a fraud-detection system to function optimally. Rule-based methods serve as a yardstick here because their decisions can be traced, whereas certain machine learning-based systems may suffer from a lack of observability.

- Cold Start: Insufficient data can lead machine learning models to produce inaccurate or irrelevant fraud evaluations; there must be enough information to establish credible relationships. Large corporations do not have this problem, but smaller ones may not have enough data points to establish causality. It is therefore beneficial to apply a simple set of rules initially and give the machine learning models time to “warm up” as more information accumulates.

- Blind to Data Connections: Machine learning models focus on individual behavior and actions. Especially when the dataset is relatively small, they can be blind to connections between entities in the data. Graph networks are deployed to address this: using graph databases, fraudulent activity from questionable and fake accounts can be prevented even before these accounts have had a chance to do any damage.

2. Related Work

3. Material and Methods

3.1. Dataset

3.1.1. Data Preprocessing

Handling Missing Values

Detecting Outliers

3.2. Methods

XGBoost

| Algorithm 1. XGBoost Algorithm | | |
|---|---|---|
| Step 1 | Initialize: make an initial prediction and calculate the residuals, where residual = observed value − predicted value. | |
| Step 2 | Construct an XGBoost tree, scoring each leaf by its similarity score: | |
| | $\text{Similarity score} = \dfrac{\left(\sum_{i=1}^{n} r_i\right)^2}{n + \lambda}$, where $r_i$ are the residuals in the leaf, $n$ is the number of residuals, and $\lambda$ is the regularization parameter | (1) |
| Step 3 | Prune the tree. | |
| Step 4 | Calculate the output value of each leaf: | |
| | $\text{Output value} = \dfrac{\sum_{i=1}^{n} r_i}{n + \lambda}$ | (2) |
| Step 5 | Make new predictions. | |
| Step 6 | Calculate residuals using the new predictions. | |
| Step 7 | Repeat Steps 2–6. | |
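To make Equations (1) and (2) concrete, the following Python sketch applies Algorithm 1 to regression with squared-error loss, using depth-one trees (stumps) on a single feature. The mean of the targets as the initial prediction, the learning rate `eta` that shrinks each tree's output, and pruning a split whose gain falls below `gamma` are assumptions borrowed from common XGBoost practice rather than details given in Algorithm 1; the function names are hypothetical, and the real library also handles classification, deeper trees, and many features.

```python
from typing import List, Optional, Sequence, Tuple

Stump = Tuple[float, float, float]  # (threshold, left output, right output)


def similarity_score(residuals: Sequence[float], lam: float) -> float:
    """Equation (1): (sum of residuals)^2 / (number of residuals + lambda)."""
    return sum(residuals) ** 2 / (len(residuals) + lam)


def output_value(residuals: Sequence[float], lam: float) -> float:
    """Equation (2): sum of residuals / (number of residuals + lambda)."""
    return sum(residuals) / (len(residuals) + lam)


def build_stump(x: Sequence[float], residuals: Sequence[float],
                lam: float, gamma: float) -> Optional[Stump]:
    """Steps 2-4: pick the best single split; prune it if its gain is below gamma."""
    root = similarity_score(residuals, lam)
    best: Optional[Tuple[float, Stump]] = None
    values = sorted(set(x))
    for lo, hi in zip(values, values[1:]):
        threshold = (lo + hi) / 2
        left = [r for xi, r in zip(x, residuals) if xi < threshold]
        right = [r for xi, r in zip(x, residuals) if xi >= threshold]
        # Gain = similarity of the children minus similarity of the parent.
        gain = similarity_score(left, lam) + similarity_score(right, lam) - root
        if best is None or gain > best[0]:
            best = (gain, (threshold, output_value(left, lam), output_value(right, lam)))
    if best is None or best[0] < gamma:  # Step 3: prune the split
        return None
    return best[1]


def fit(x: Sequence[float], y: Sequence[float], n_rounds: int = 10,
        eta: float = 0.3, lam: float = 1.0,
        gamma: float = 0.0) -> Tuple[float, List[Stump], List[float]]:
    """Boost stumps on residuals; returns (initial prediction, stumps, final predictions)."""
    base = sum(y) / len(y)  # Step 1: initial prediction (assumed: the mean)
    preds = [base] * len(y)
    stumps: List[Stump] = []
    for _ in range(n_rounds):  # Step 7: repeat
        residuals = [yi - pi for yi, pi in zip(y, preds)]  # Steps 1 and 6
        stump = build_stump(x, residuals, lam, gamma)
        if stump is None:
            break
        threshold, left_out, right_out = stump
        stumps.append(stump)
        # Step 5: new predictions, each tree's output shrunk by eta.
        preds = [p + eta * (left_out if xi < threshold else right_out)
                 for xi, p in zip(x, preds)]
    return base, stumps, preds
```

For example, on `x = [1, 2, 3, 4]` and `y = [1.0, 1.0, 5.0, 5.0]` the first stump splits at 2.5, and each leaf's output value is the sum of its residuals divided by the number of residuals plus λ, exactly as in Equation (2).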

3.3. Proposed Nature-Inspired-Based Hyperparameter Optimization in XGBoost

- Step 1. Parameter initialization: initialize the harmony memory size (HMS), the harmony memory considering rate (HMCR), the pitch adjusting rate (PAR), and the stopping criteria.

- Step 2. Initialize the harmony memory (HM).

- Step 3. Improvise a new harmony.

- Step 4. Check whether the new harmony is better than the worst harmony in HM; if so, replace the worst harmony with it.

- Step 5. Repeat Steps 3–4 until the termination criterion is reached.
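A minimal Python sketch of these Harmony Search steps, minimizing a function over box-bounded real variables. The bandwidth `bw` (the pitch-adjustment step, expressed here as a fraction of each variable's range), the default parameter values, and the fixed iteration budget used as the stopping criterion are assumptions for illustration, not values taken from this paper.

```python
import random
from typing import Callable, List, Sequence, Tuple


def harmony_search(
    objective: Callable[[List[float]], float],
    bounds: Sequence[Tuple[float, float]],
    hms: int = 10,         # harmony memory size (HMS)
    hmcr: float = 0.9,     # harmony memory considering rate (HMCR)
    par: float = 0.3,      # pitch adjusting rate (PAR)
    bw: float = 0.05,      # assumed bandwidth, as a fraction of each range
    max_iter: int = 2000,  # assumed stopping criterion: iteration budget
    seed: int = 0,
) -> Tuple[List[float], float]:
    """Minimize `objective` over the box `bounds` with basic Harmony Search."""
    rng = random.Random(seed)
    # Step 2: fill the harmony memory with random candidate vectors.
    memory = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(hms)]
    scores = [objective(h) for h in memory]

    for _ in range(max_iter):  # Step 5: repeat until the budget is spent
        # Step 3: improvise a new harmony one variable at a time.
        new: List[float] = []
        for d, (lo, hi) in enumerate(bounds):
            if rng.random() < hmcr:
                # Memory consideration: reuse a value stored in HM.
                value = memory[rng.randrange(hms)][d]
                if rng.random() < par:
                    # Pitch adjustment: small random move around that value.
                    value += rng.uniform(-1.0, 1.0) * bw * (hi - lo)
            else:
                # Random selection from the full range.
                value = rng.uniform(lo, hi)
            new.append(min(max(value, lo), hi))  # clip to the bounds

        # Step 4: replace the worst harmony if the new one is better.
        score = objective(new)
        worst = max(range(hms), key=lambda i: scores[i])
        if score < scores[worst]:
            memory[worst], scores[worst] = new, score

    best = min(range(hms), key=lambda i: scores[i])
    return memory[best], scores[best]
```

For example, `harmony_search(lambda v: sum(x * x for x in v), [(-5.0, 5.0)] * 2)` should return a point close to the origin together with its (small) objective value.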

| Algorithm 2. Modified XGBoost Algorithm | |
|---|---|
| Step 1 | Separate the dataset into target and independent features: the target feature, which is the focus of prediction, is separated from the other features in the dataset. |
| Step 2 | Define the optimized parameter space: in this work, the authors select XGBoost’s num_class, alpha, base_score, booster, and eta hyperparameters. |
| Step 3 | Define what to minimize (objective function): the objective function is the quantity whose value the authors wish to reduce through hyperparameter tuning. |
| Step 4 | Apply Harmony Search (HS) optimization to optimize the hyperparameters of the classification algorithm. |
| Step 5 | Execute Algorithm 1 with the new optimized hyperparameter values. |
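One way Steps 2–4 of Algorithm 2 could be wired together is sketched below in Python: a Harmony Search vector of real numbers is decoded into an XGBoost parameter dictionary. The ranges, the `binary:logistic` objective, and the floor-to-index encoding of the categorical `booster` are assumptions for illustration. `num_class` is left out because XGBoost only uses it for multi-class objectives and it is fixed by the number of target classes rather than tuned. `evaluate` is a hypothetical caller-supplied function returning a validation loss, for example a cross-validated log loss.

```python
from typing import Callable, Dict, List, Sequence, Tuple, Union

Params = Dict[str, Union[str, float]]

# Hypothetical search ranges for each dimension of a harmony vector.
BOOSTERS = ("gbtree", "gblinear", "dart")
BOUNDS: List[Tuple[float, float]] = [
    (0.0, 10.0),                  # alpha: L1 regularization weight
    (0.1, 0.9),                   # base_score: initial prediction
    (0.01, 0.3),                  # eta: learning rate
    (0.0, float(len(BOOSTERS))),  # booster, decoded to a category index
]


def decode(harmony: Sequence[float]) -> Params:
    """Map a harmony vector to an XGBoost parameter dictionary."""
    alpha, base_score, eta, booster_code = harmony
    # Floor the continuous code to an index; the upper bound maps to the last booster.
    index = min(int(booster_code), len(BOOSTERS) - 1)
    return {
        "objective": "binary:logistic",  # assumed: fraud vs. non-fraud
        "alpha": alpha,
        "base_score": base_score,
        "eta": eta,
        "booster": BOOSTERS[index],
    }


def make_objective(evaluate: Callable[[Params], float]) -> Callable[[Sequence[float]], float]:
    """Turn evaluate(params) -> validation loss into a function of a harmony vector."""
    return lambda harmony: evaluate(decode(harmony))
```

`make_objective(evaluate)` together with `BOUNDS` can then be handed to any Harmony Search routine that minimizes a function over box bounds (Step 4), and the best harmony decoded once more to obtain the parameters used in Step 5.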

- Select a relatively high learning rate. In most cases, a learning rate of 0.1 is appropriate, although values between 0.05 and 0.3 can also be effective. Then determine the optimal number of trees for this learning rate. A useful feature of XGBoost is its “cv” function, which performs cross-validation at each boosting iteration and thus returns the optimal number of trees [51].

- Tune the tree-specific parameters (max_depth, min_child_weight, gamma, subsample, colsample_bytree) for the chosen learning rate and number of trees.

- Tune XGBoost’s regularization parameters (lambda, alpha), which can reduce model complexity and improve performance.

- Lower the learning rate and select the best settings.
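The staged procedure above can be sketched in Python as a sequence of small grid searches, each tuning a few parameters while the others stay fixed. The grids, the starting values, and the `evaluate` function are hypothetical; in practice `evaluate` might wrap `xgboost.cv` with early stopping, as indicated in the comment, so that each candidate is scored at its own optimal number of trees.

```python
from itertools import product
from typing import Callable, Dict, List, Sequence, Tuple

Params = Dict[str, float]


def tune_stage(evaluate: Callable[[Params], float], params: Params,
               grid: Dict[str, Sequence[float]]) -> Tuple[Params, float]:
    """Grid-search the keys in `grid`, keeping all other parameters fixed."""
    best_params, best_loss = dict(params), float("inf")
    keys = list(grid)
    for values in product(*(grid[k] for k in keys)):
        candidate = {**params, **dict(zip(keys, values))}
        loss = evaluate(candidate)
        if loss < best_loss:
            best_params, best_loss = candidate, loss
    return best_params, best_loss


def staged_tuning(evaluate: Callable[[Params], float], start: Params,
                  stages: List[Dict[str, Sequence[float]]]) -> Tuple[Params, float]:
    """Run the stages in order, carrying the best parameters forward."""
    params, loss = dict(start), float("inf")
    for grid in stages:
        params, loss = tune_stage(evaluate, params, grid)
    return params, loss


# Hypothetical stages mirroring the steps above (learning rate already fixed).
STAGES = [
    {"max_depth": [3, 5, 7], "min_child_weight": [1, 3, 5]},
    {"gamma": [0.0, 0.1, 0.2]},
    {"subsample": [0.6, 0.8, 1.0], "colsample_bytree": [0.6, 0.8, 1.0]},
    {"lambda": [0.1, 1.0, 10.0], "alpha": [0.0, 0.1, 1.0]},
]

# A possible evaluate() built on xgboost.cv (needs xgboost and a DMatrix `dtrain`):
# def evaluate(params):
#     history = xgb.cv({**params, "objective": "binary:logistic"}, dtrain,
#                      num_boost_round=1000, nfold=5, metrics="logloss",
#                      early_stopping_rounds=50, seed=0)
#     return float(history["test-logloss-mean"].min())
```

The last step, lowering the learning rate, would then be a final pass with a smaller `eta` and a correspondingly larger number of boosting rounds.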

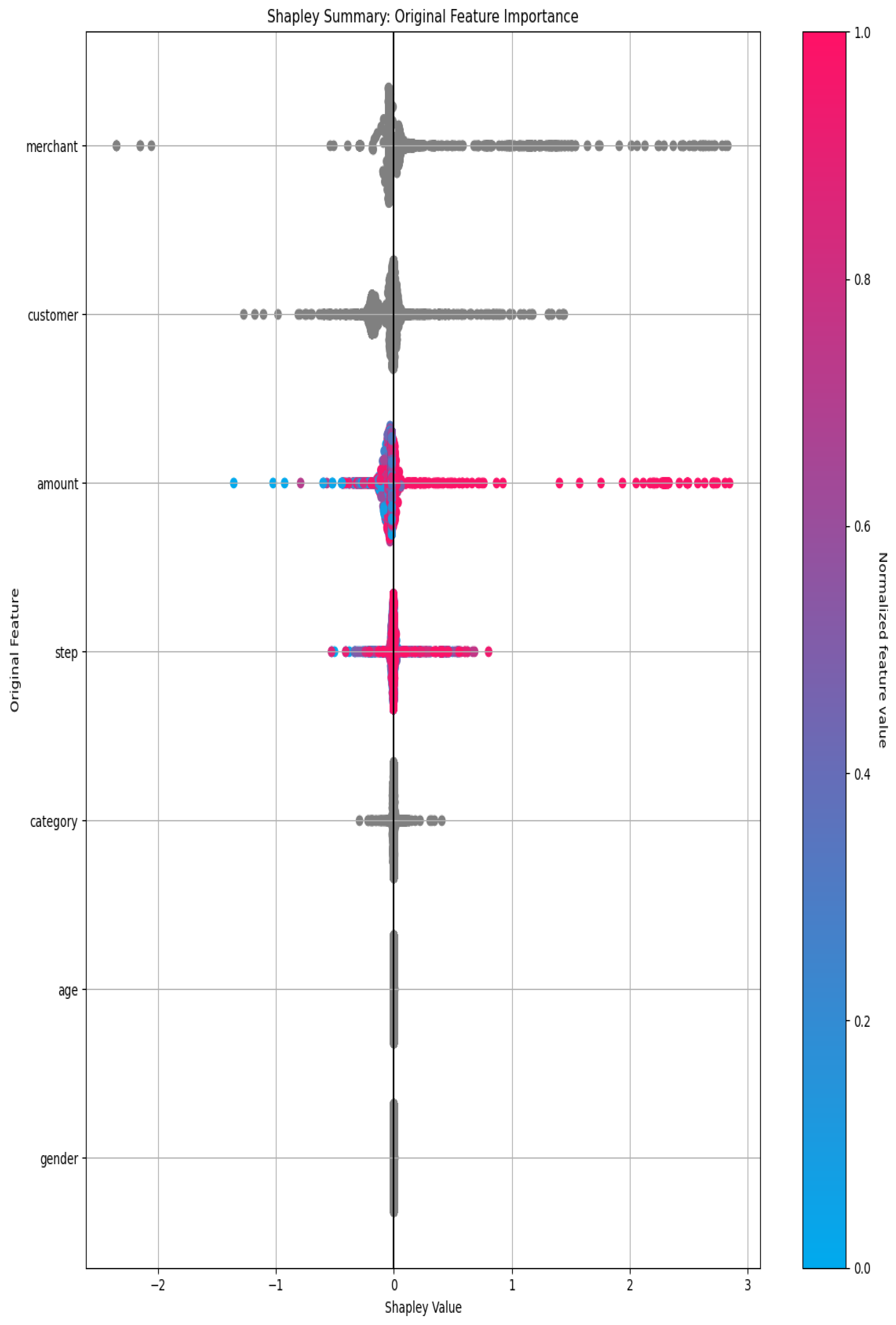

4. Result and Discussion

4.1. Experiment Settings

4.2. Performance Evaluation

4.3. Validation Confusion Matrix

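- TP = 6110 (fraudulent transactions correctly flagged);

- FP = 846 (legitimate transactions flagged as fraud);

- FN = 1090 (fraudulent transactions missed);

- TN = 586,597 (legitimate transactions correctly passed).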
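The confusion-matrix counts can be turned into the usual summary metrics. The Python sketch below takes the counts from the validation confusion matrix table with fraud (class 1) as the positive class, i.e., TP = 6110, FP = 846, FN = 1090, TN = 586,597; the helper name is hypothetical, and values computed this way may differ slightly from the validation scores reported in the metrics table.

```python
import math
from typing import Dict


def metrics_from_counts(tp: int, fp: int, fn: int, tn: int) -> Dict[str, float]:
    """Standard binary-classification metrics from confusion-matrix counts."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)  # true positive rate (TPR)
    mcc_den = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    return {
        "accuracy": (tp + tn) / (tp + fp + fn + tn),
        "precision": precision,
        "recall": recall,
        "fpr": fp / (fp + tn),  # false positive rate
        "f1": 2 * precision * recall / (precision + recall),
        "mcc": (tp * tn - fp * fn) / mcc_den,  # Matthews correlation coefficient
    }


# Counts from the validation confusion matrix (fraud = positive class).
scores = metrics_from_counts(tp=6110, fp=846, fn=1090, tn=586_597)
for name, value in scores.items():
    print(f"{name:>9}: {value:.4f}")
```

With these counts, precision comes out at roughly 0.878 and recall at roughly 0.849.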

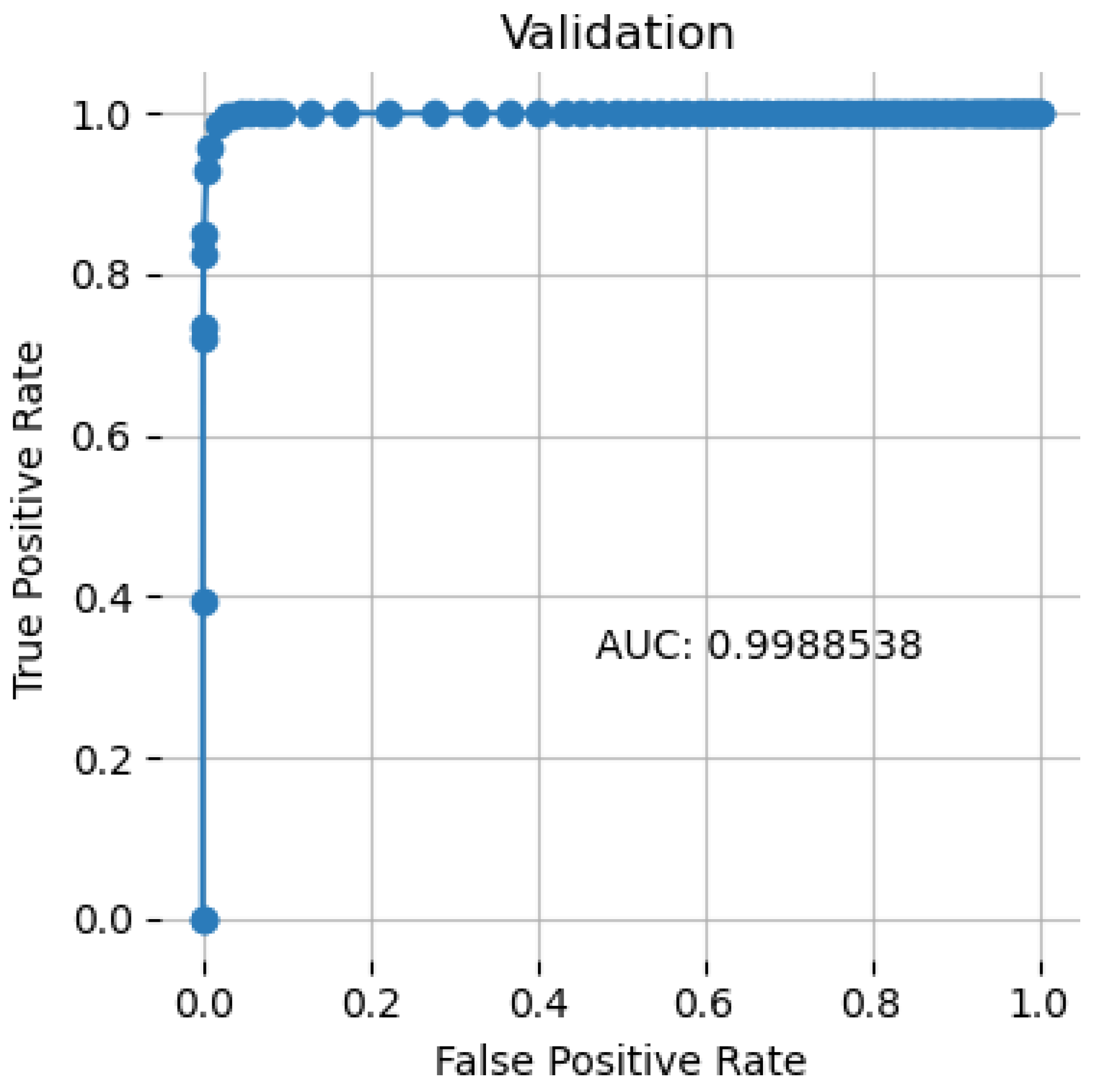

4.4. Receiver Operating Characteristic Curve

- True Positive Rate: the true positive rate (TPR) is synonymous with recall and is defined as $\mathrm{TPR} = \frac{TP}{TP + FN}$.

- False Positive Rate: the false positive rate (FPR) is defined as $\mathrm{FPR} = \frac{FP}{FP + TN}$.
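The ROC curve plots TPR against FPR as the decision threshold on the model's fraud score is lowered. A minimal pure-Python sketch, with hypothetical helper names and assuming 0/1 labels with at least one example of each class, that builds the curve's points and integrates them with the trapezoidal rule to obtain the AUC:

```python
from typing import List, Sequence, Tuple


def roc_points(scores: Sequence[float], labels: Sequence[int]) -> List[Tuple[float, float]]:
    """Return (FPR, TPR) points, sweeping the threshold from high to low score."""
    positives = sum(labels)
    negatives = len(labels) - positives
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    tp = fp = 0
    points = [(0.0, 0.0)]  # threshold above every score: nothing flagged
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Flag every example tied at this score before emitting a point.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / negatives, tp / positives))  # (FPR, TPR)
    return points


def auc(points: Sequence[Tuple[float, float]]) -> float:
    """Area under the ROC curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))
```

For instance, `auc(roc_points([0.9, 0.4, 0.6, 0.2], [1, 1, 0, 0]))` gives 0.75, matching the fraction of positive-negative pairs (three of four) in which the fraudulent example receives the higher score.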

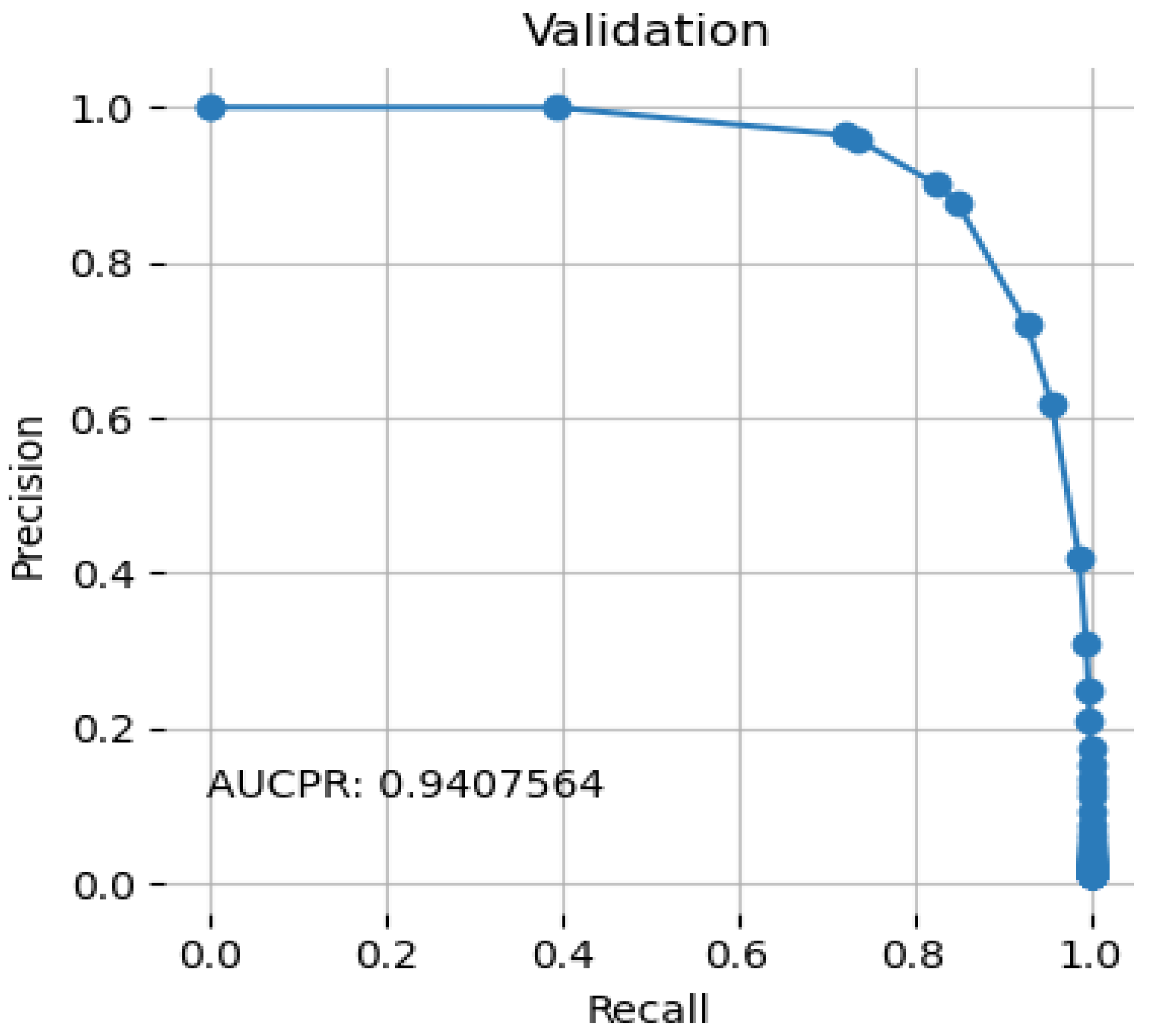

4.5. Precision Recall Curve

4.6. Cumulative Lift

4.7. Performance Comparison with Other Machine Learning Algorithms

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Takahashi, M.; Azuma, H.; Tsuda, K. A Study on Effect Evaluation of Payment Method Change in the Mail-order Industry. Procedia Comput. Sci. 2014, 35, 871–878.

- Al-Hasan, A.A.; El-Alfy, E.-S.M. Dendritic Cell Algorithm for Mobile Phone Spam Filtering. Procedia Comput. Sci. 2015, 52, 244–251.

- Sanusi, Z.M.; Rameli, M.N.F.; Isa, Y.M. Fraud Schemes in the Banking Institutions: Prevention Measures to Avoid Severe Financial Loss. Procedia Econ. Financ. 2015, 28, 107–113.

- Takahashi, M.; Azuma, H.; Tsuda, K. A Study on Deliberate Presumptions of Customer Payments with Reminder in the Absence of Face-to-face Contact Transactions. Procedia Comput. Sci. 2015, 60, 968–975.

- Takahashi, M.; Azuma, H.; Tsuda, K. A Study on the Efficient Estimation of the Payment Intention in the Mail Order Industry. Procedia Comput. Sci. 2016, 96, 1122–1128.

- Takahashi, M.; Azuma, H.; Tsuda, K. A Study on Validity Detection for Shipping Decision in the Mail-order Industry. Procedia Comput. Sci. 2017, 112, 1318–1325.

- Leite, R.A.; Gschwandtner, T.; Miksch, S.; Gstrein, E.; Kuntner, J. Visual analytics for event detection: Focusing on fraud. Vis. Inform. 2018, 2, 198–212.

- Barbieri, A.L.; Fadare, O.; Fan, L.; Singh, H.; Parkash, V. Challenges in communication from referring clinicians to pathologists in the electronic health record era. J. Pathol. Inform. 2018, 9, 6.

- Takahashi, M.; Azuma, H.; Tsuda, K. A Study on Delivery Evaluation under Asymmetric Information in the Mail-order Industry. Procedia Comput. Sci. 2018, 126, 1298–1305.

- Ozili, P.K. Impact of digital finance on financial inclusion and stability. Borsa Istanb. Rev. 2018, 18, 329–340.

- Reddy, K. Pot the ball? Sovereign wealth funds’ outward FDI in times of global financial market turbulence: A yield institutions-based view. Cent. Bank Rev. 2019, 19, 129–139.

- Dada, E.G.; Bassi, J.S.; Chiroma, H.; Abdulhamid, S.M.; Adetunmbi, A.O.; Ajibuwa, O.E. Machine learning for email spam filtering: Review, approaches and open research problems. Heliyon 2019, 5, e01802.

- Setiawan, N.; Suharjito; Diana, A. Comparison of Prediction Methods for Credit Default on Peer to Peer Lending using Machine Learning. Procedia Comput. Sci. 2019, 157, 38–45.

- Hitam, N.A.; Ismail, A.R.; Saeed, F. An Optimized Support Vector Machine (SVM) based on Particle Swarm Optimization (PSO) for Cryptocurrency Forecasting. Procedia Comput. Sci. 2019, 163, 427–433.

- Hammouchi, H.; Cherqi, O.; Mezzour, G.; Ghogho, M.; El Koutbi, M. Digging Deeper into Data Breaches: An Exploratory Data Analysis of Hacking Breaches over Time. Procedia Comput. Sci. 2019, 151, 1004–1009.

- Stavinova, E.; Bochenina, K. Forecasting of foreign trips by transactional data: A comparative study. Procedia Comput. Sci. 2019, 156, 225–234.

- De Souza, M.J.S.; Almudhaf, F.W.; Henrique, B.M.; Negredo, A.B.S.; Ramos, D.G.F.; Sobreiro, V.A.; Kimura, H. Can artificial intelligence enhance the Bitcoin bonanza. J. Financ. Data Sci. 2019, 5, 83–98.

- Rtayli, N.; Enneya, N. Selection Features and Support Vector Machine for Credit Card Risk Identification. Procedia Manuf. 2020, 46, 941–948.

- Pikulík, T.; Štarchoň, P. Public registers with personal data under scrutiny of DPA regulators. Procedia Comput. Sci. 2020, 170, 1170–1179.

- León, C.; Barucca, P.; Acero, O.; Gage, G.; Ortega, F. Pattern recognition of financial institutions’ payment behavior. Lat. Am. J. Cent. Bank. 2020, 1, 100011.

- Chen, M.-Y. Bankruptcy prediction in firms with statistical and intelligent techniques and a comparison of evolutionary computation approaches. Comput. Math. Appl. 2011, 62, 4514–4524.

- Chen, J.; Tao, Y.; Wang, H.; Chen, T. Big data based fraud risk management at Alibaba. J. Financ. Data Sci. 2015, 1, 1–10.

- Chouiekh, A.; Ibn EL Haj, E.H. ConvNets for Fraud Detection analysis. Procedia Comput. Sci. 2018, 127, 133–138.

- Subudhi, S.; Panigrahi, S. Use of optimized Fuzzy C-Means clustering and supervised classifiers for automobile insurance fraud detection. J. King Saud Univ. Comput. Inf. Sci. 2020, 32, 568–575.

- Nicholls, J.; Kuppa, A.; Le-Khac, N.-A. Financial Cybercrime: A Comprehensive Survey of Deep Learning Approaches to Tackle the Evolving Financial Crime Landscape. IEEE Access 2021, 9, 163965–163986.

- Thejas, G.S.; Dheeshjith, S.; Iyengar, S.S.; Sunitha, N.R.; Badrinath, P. A hybrid and effective learning approach for Click Fraud detection. Mach. Learn. Appl. 2021, 3, 100016.

- Domashova, J.; Kripak, E. Identification of non-typical international transactions on bank cards of individuals using machine learning methods. Procedia Comput. Sci. 2021, 190, 178–183.

- Pourhabibi, T.; Ong, K.-L.; Kam, B.H.; Boo, Y.L. Fraud detection: A systematic literature review of graph-based anomaly detection approaches. Decis. Support Syst. 2020, 133, 113303.

- Rocha-Salazar, J.-D.; Segovia-Vargas, M.-J.; Camacho-Miñano, M.-D. Money laundering and terrorism financing detection using neural networks and an abnormality indicator. Expert Syst. Appl. 2021, 169, 114470.

- Chen, L.; Jia, N.; Zhao, H.; Kang, Y.; Deng, J.; Ma, S. Refined analysis and a hierarchical multi-task learning approach for loan fraud detection. J. Manag. Sci. Eng. 2022, 7, 589–607.

- Pinto, S.O.; Sobreiro, V.A. Literature review: Anomaly detection approaches on digital business financial systems. Digit. Bus. 2022, 2, 100038.

- Muheidat, F.; Patel, D.; Tammisetty, S.; Tawalbeh, L.A.; Tawalbeh, M. Emerging Concepts Using Blockchain and Big Data. Procedia Comput. Sci. 2021, 198, 15–22.

- Sánchez-Paniagua, M.; Fidalgo, E.; Alegre, E.; Alaiz-Rodríguez, R. Phishing websites detection using a novel multipurpose dataset and web technologies features. Expert Syst. Appl. 2022, 207, 118010.

- Li, Y.; Saxunova, D. A perspective on categorizing Personal and Sensitive Data and the analysis of practical protection regulations. Procedia Comput. Sci. 2020, 170, 1110–1115.

- Amponsah, A.A.; Adekoya, A.F.; Weyori, B.A. Improving the Financial Security of National Health Insurance using Cloud-Based Blockchain Technology Application. Int. J. Inf. Manag. Data Insights 2022, 2, 100081.

- Sabetti, L.; Heijmans, R. Shallow or deep? Training an autoencoder to detect anomalous flows in a retail payment system. Lat. Am. J. Cent. Bank. 2021, 2, 100031.

- Severino, M.K.; Peng, Y. Machine learning algorithms for fraud prediction in property insurance: Empirical evidence using real-world microdata. Mach. Learn. Appl. 2021, 5, 100074.

- Olowookere, T.; Adewale, O.S. A framework for detecting credit card fraud with cost-sensitive meta-learning ensemble approach. Sci. Afr. 2020, 8, e00464.

- Misra, S.; Thakur, S.; Ghosh, M.; Saha, S.K. An Autoencoder Based Model for Detecting Fraudulent Credit Card Transaction. Procedia Comput. Sci. 2020, 167, 254–262.

- Lee, J.; Shin, H.; Cho, S. A medical treatment based scoring model to detect abusive institutions. J. Biomed. Inform. 2020, 107, 103423.

- Rahman, M.; Ismail, I.; Bahri, S. Analysing consumer adoption of cashless payment in Malaysia. Digit. Bus. 2020, 1, 100004.

- Li, J.; Chen, W.-H.; Xu, Q.; Shah, N.; Kohler, J.C.; Mackey, T.K. Detection of self-reported experiences with corruption on twitter using unsupervised machine learning. Soc. Sci. Hum. Open 2020, 2, 100060.

- Rubio, J.; Barucca, P.; Gage, G.; Arroyo, J.; Morales-Resendiz, R. Classifying payment patterns with artificial neural networks: An autoencoder approach. Lat. Am. J. Cent. Bank. 2020, 1, 100013.

- Bagga, S.; Goyal, A.; Gupta, N.; Goyal, A. Credit Card Fraud Detection using Pipeling and Ensemble Learning. Procedia Comput. Sci. 2020, 173, 104–112.

- Wyrobek, J. Application of machine learning models and artificial intelligence to analyze annual financial statements to identify companies with unfair corporate culture. Procedia Comput. Sci. 2020, 176, 3037–3046.

- Świecka, B.; Terefenko, P.; Paprotny, D. Transaction factors’ influence on the choice of payment by Polish consumers. J. Retail. Consum. Serv. 2021, 58, 102264.

- Seth, B.; Dalal, S.; Jaglan, V.; Le, D.N.; Mohan, S.; Srivastava, G. Integrating encryption techniques for secure data storage in the cloud. Transact. Emerg. Telecommun. Technol. 2020, 33, e4108.

- Domashova, J.; Zabelina, O. Detection of fraudulent transactions using SAS Viya machine learning algorithms. Procedia Comput. Sci. 2021, 190, 204–209.

- Rb, A.; Kr, S.K. Credit card fraud detection using artificial neural network. Glob. Transit. Proc. 2021, 2, 35–41.

- Dalal, S.; Onyema, E.M.; Romero, C.A.T.; Ndufeiya-Kumasi, L.C.; Maryann, D.C.; Nnedimkpa, A.J.; Bhatia, T.K. Machine learning-based forecasting of potability of drinking water through adaptive boosting model. Open Chem. 2022, 20, 816–828.

- Candrian, C.; Scherer, A. Rise of the machines: Delegating decisions to autonomous AI. Comput. Hum. Behav. 2022, 134, 107308.

- Li, T.; Kou, G.; Peng, Y. Improving malicious URLs detection via feature engineering: Linear and nonlinear space transformation methods. Inf. Syst. 2020, 91, 101494.

- Johnson, O.V.; Jinadu, O.T.; Aladesote, O.I. On experimenting large dataset for visualization using distributed learning and tree plotting techniques. Sci. Afr. 2020, 8, e00466.

| Authors | Technologies | Dataset | Results |
|---|---|---|---|
| Chen et al. [21] | PCA, C5.0, CART, SVM, PSO | 200 Taiwan Stock Exchange Corporation | 95% |
| Chen et al. [22] | Big data | Alibaba | 88.4% |
| Chouiekh et al. [23] | ConvNets | 300 users of different subscribers | 82% |
| Subudhi et al. [24] | Decision Tree, SVM, GMDH, and MLP | Unbalanced insurance dataset | 84.34% |
| Nicholls et al. [25] | Graph-based anomaly detection (GBAD) | Financial cybercrime dataset | 90% |
| Thejas et al. [26] | Cascaded Forest and XGBoost | Advertising dataset | 87.37% |
| Domashova et al. [27] | Statistical Analysis System | SAS Institute | 94.6% |
| Pourhabibi et al. [28] | Graph-based anomaly detection (GBAD) | CERT | 87.2% |
| Rocha-Salazar et al. [29] | Clustering process with transaction abnormality indicator | 3527 financial transactions | 94.6% |
| Chen et al. [30] | Hierarchical multi-task learning | Chinese automobile finance company | 94.47% |
| Severino et al. [37] | GBM | 409 cases of fraud | 83.21% |

(a)

| Name | Logical_Type | Storage_Type | Min | Mean | Max | Std | Unique | Freq of Mode |
|---|---|---|---|---|---|---|---|---|
| step | numeric, categorical, catlabel | int | 0.00 | 94.987 | 179.000 | 51.054 | 180 | 3774 |
| amount | numeric | real | 0.00 | 37.890 | 8329.96 | 111.403 | 23,767 | 146 |

(b)

| Name | Logical_Type | Storage_Type | Min | Mean | Max | Std | Freq of Max Value |
|---|---|---|---|---|---|---|---|
| fraud | N/A | bool | False | 0.0121 | True | 0.1094 | 7200 |

(c)

| Name | Logical_Type | Storage_Type | Unique | Top | Freq of Top Value |
|---|---|---|---|---|---|
| customer | categorical, catlabel | str | 4112 | ‘C1978250683’ | 265 |
| age | categorical, catlabel, ohe_categorical | str | 8 | ‘2’ | 187,310 |
| gender | categorical, catlabel, ohe_categorical | str | 4 | ‘F’ | 324,565 |
| zipcodeOri | N/A | str | 1 | ‘28007’ | 594,643 |
| merchant | categorical, catlabel | str | 50 | ‘M1823072687’ | 299,693 |
| zipMerchant | N/A | str | 1 | ‘28007’ | 594,643 |
| category | categorical, catlabel, ohe_categorical | str | 15 | ‘es_transportation’ | 505,119 |

| Scorer | Better Score Is | Final Ensemble Score on Validation Data (Internal or External Holdouts) | Final Ensemble Standard Deviation on Validation Data (Internal or External Holdouts) |
|---|---|---|---|
| LOGLOSS | lower | 0.008576734 | 0.000464448 |
| ACCURACY | higher | 0.9968288 | 0.0002251821 |
| AUC | higher | 0.9988538 | 6.816163 × 10⁻⁵ |
| AUCPR | higher | 0.9407564 | 0.004141402 |
| F05 | higher | 0.9076005 | 0.009049342 |
| F1 | higher | 0.8656693 | 0.007394145 |
| F2 | higher | 0.8814611 | 0.004108097 |
| FDR | lower | 0.1217813 | 0.01726242 |
| FNR | lower | 0.1459401 | 0.01123624 |
| FOR | lower | 0.001800103 | 0.0001338188 |
| FPR | lower | 0.001473484 | 0.0002626462 |
| GINI | higher | 0.9977075 | 0.0001363233 |
| MACROAUC | higher | 0.9988538 | 6.816163 × 10⁻⁵ |
| MACROF1 | higher | 0.8656693 | 0.007394145 |
| MACROMCC | higher | 0.8643508 | 0.0075584 |
| MCC | higher | 0.8643508 | 0.0075584 |
| NPV | higher | 0.9981999 | 0.0001338188 |
| PRECISION | higher | 0.8782187 | 0.01726242 |
| RECALL | higher | 0.8540599 | 0.01123624 |
| TNR | higher | 0.9985265 | 0.0002626462 |

| | Predicted: 0 | Predicted: 1 | Error |
|---|---|---|---|
| Actual: 0 | 586,597 | 846 | 0% |
| Actual: 1 | 1090 | 6110 | 15% |

| S. No. | Algorithm | Accuracy (%) | AUC |
|---|---|---|---|
| 1 | Bayesian Network | 91.06 | 0.5 |
| 2 | Neural Network | 92.18 | 0.172 |
| 3 | Logistic Regression | 92.737 | 0.655 |
| 4 | Random Tree | 96.089 | 0.652 |
| 5 | Random Forest | 96.15 | 0.573 |
| 6 | C5.0 | 96.648 | 0.5 |
| 7 | Tree-AS | 96.648 | 0.681 |
| 8 | Quest | 96.648 | 0.569 |
| 9 | Discriminant algorithm | 97.207 | 0.667 |
| 10 | Modified XGBoost | 99.68 | 0.9988 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Dalal, S.; Seth, B.; Radulescu, M.; Secara, C.; Tolea, C. Predicting Fraud in Financial Payment Services through Optimized Hyper-Parameter-Tuned XGBoost Model. Mathematics 2022, 10, 4679. https://doi.org/10.3390/math10244679
