Abstract

Recently, research on detecting SNP interactions, which is of great significance for exploring complex diseases, has attracted considerable attention. Developing effective swarm intelligence optimization algorithms is a primary way of addressing this problem. To achieve this goal, an important problem must be solved first: designing and selecting lightweight scoring criteria that can be calculated in time and can accurately estimate the degree of association between SNP combinations and disease status. In this study, we propose a high-accuracy scoring criterion (HSIC) that assesses this association by measuring the degree of causality. First, we approximate two kinds of dependencies according to the structural equation of the causal relationship between an epistasis SNP combination and disease status. Then, inspired by these dependencies, we put forward a scoring criterion that integrates a widely used kernel-based method of measuring statistical dependence, the Hilbert–Schmidt independence criterion (HSIC). However, the time complexity of computing HSIC is $O(m^2)$ for $m$ samples, which is too costly for it to be an integral part of a scoring criterion. Since the sample spaces of the disease status, SNP loci and SNP combinations are small, we propose an efficient method of computing HSIC for variables with small sample spaces in time. Eventually, HSIC can be computed in time in practice. Finally, we compared HSIC with five representative high-accuracy scoring criteria for detecting SNP interactions on 49 simulated disease models. The experimental results show that the accuracy of our proposed scoring criterion is, overall, state-of-the-art.

Keywords:

lightweight scoring criterion; causality; SNP interactions; measuring statistical dependencies

MSC: 62H20

1. Introduction

Complex diseases are usually caused by multiple genes and multiple factors. In recent years, with the emergence of high-throughput genotyping technology, genome-wide association analysis (GWAS) has become one of the main methods used to study such diseases. Furthermore, identifying single-nucleotide polymorphism (SNP) interactions from GWAS data is of great importance for explaining, preventing and treating complex diseases [1,2]. Therefore, over the past decade, this research topic has attracted considerable attention [3,4,5,6,7,8,9].

It is well known that SNP interactions represent combinations of multiple SNPs that affect complex diseases in a linear or non-linear manner, also known as k-order epistasis SNPs. The research topic of detecting k-order epistasis SNPs is a typical case of combinatorial optimization problems in k-dimensional discrete space ( in practice), and swarm intelligence optimization (SIO) algorithms are one of the main methods used to solve the problems [9,10,11]. For this study to be successful, an important problem needs to be solved in advance; that is, designing and selecting lightweight scoring criteria that can be calculated in time and can accurately estimate the degree of association between SNP combinations and disease status.

To date, few lightweight scoring criteria can accurately estimate the degree of association of SNP combinations with disease status in most disease models due to the widely varying characteristics of different disease models. As one of the primary methods used to work on this combinatorial optimization problem, SIO algorithms mostly tackle this issue by combining multiple criteria [9,10,11,12,13]; however, using too many objective functions will often make the proposed algorithm difficult to converge effectively. Therefore, picking a few high-accuracy objective functions instead of using too many objective functions can dramatically improve the performance of the used algorithms [14,15].

This paper does not aim to resolve the issue entirely; rather, it takes a different methodological approach to propose a scoring criterion that can accurately estimate the associations in most disease models. The contributions of this paper are as follows:

- We propose a high-accuracy scoring criterion based on measuring the degree of causality that integrates a widely used method of measuring statistical dependencies (HSIC);

- We put forward an efficient algorithm for computing HSIC on two variables with small sample spaces in time, thus enabling us to compute HSIC in time in practice.

2. Related Works

So far, the proposed lightweight scoring criteria can be roughly divided into two categories.

The first category consists of the so-called Bayesian scoring criteria. Starting from a prior belief over the possible DAG models, these criteria calculate the posterior probability distribution conditional on the data [16]. The K2-Score is an efficient Bayesian scoring criterion that assigns priors under the assumption that all DAG models are equally likely. Other representative criteria of this kind include the Bayesian Dirichlet equivalent (BDe) and the Bayesian Dirichlet equivalent uniform (BDeu) scoring criteria [4,17,18,19].
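To make the K2-Score concrete, the following is a minimal sketch of how it is commonly applied to one SNP combination. It assumes the Cooper–Herskovits form with a binary disease node whose parents are the k SNPs, reported as a negative logarithm so that lower values indicate stronger association. It is our own illustration, not the implementation used in this paper's experiments, and the function name `k2_score` is hypothetical.

```python
import math
from collections import Counter
from typing import Sequence, Tuple


def k2_score(genotypes: Sequence[Tuple[int, ...]], status: Sequence[int]) -> float:
    """Negative log K2 (Cooper-Herskovits) score of one SNP combination.

    genotypes[i]: genotype codes (0/1/2) of sample i at the k loci.
    status[i]: 0 for a control, 1 for a case.
    Lower values indicate a stronger association with disease status.
    """
    r = 2  # number of states of the disease node
    counts = Counter(zip(map(tuple, genotypes), status))  # n_ij
    score = 0.0
    for config in {g for g, _ in counts}:
        n_ij = [counts.get((config, j), 0) for j in range(r)]
        n_i = sum(n_ij)
        # log((n_i + r - 1)!) - log((r - 1)!) - sum_j log(n_ij!), using lgamma(z + 1) = log(z!)
        score += (math.lgamma(n_i + r) - math.lgamma(r)
                  - sum(math.lgamma(n + 1) for n in n_ij))
    return score
```

A combination whose genotype configurations separate cases from controls receives a smaller value than one whose configurations are spread evenly over both classes.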

The second category is usually known as the information-theoretic scoring criteria. Mutual information (Mi) is a lightweight method but favors certain disease models [6,20]. The Jensen–Shannon (JS) divergence is a symmetrized divergence measure derived from the Kullback–Leibler (KL) divergence, which is an asymmetric divergence measure between two probability distributions [21]. The JS divergence can be used to evaluate how far the SNP genotype distribution of the control samples deviates from that of the case samples. Recently, joint entropy (JE) and normalized distance with joint entropy (ND-JE) have been proposed as criteria for guiding harmony search algorithms to discover clues about the epistasis of SNP combinations [9].
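As an illustration of the two information-theoretic measures, the sketch below computes Mi and the JS divergence from a genotype-by-status contingency table. It assumes each SNP combination has already been encoded as one integer genotype code per sample, and it uses natural logarithms. The helper names are hypothetical, and this is not the exact formulation used in [6,20,21].

```python
import numpy as np


def contingency(codes, status, n_codes):
    """n_codes x 2 table of counts: rows are genotype codes, columns are control/case."""
    table = np.zeros((n_codes, 2))
    np.add.at(table, (np.asarray(codes), np.asarray(status)), 1.0)
    return table


def mutual_information(table):
    """Mutual information (nats) between genotype code and disease status."""
    p = np.asarray(table, dtype=float)
    p = p / p.sum()
    expected = p.sum(axis=1, keepdims=True) * p.sum(axis=0, keepdims=True)
    nz = p > 0  # 0 * log 0 is taken as 0
    return float(np.sum(p[nz] * np.log(p[nz] / expected[nz])))


def _kl(a, b):
    """KL divergence over the support of a (b > 0 wherever a > 0 here)."""
    nz = a > 0
    return float(np.sum(a[nz] * np.log(a[nz] / b[nz])))


def js_divergence(table):
    """JS divergence (nats) between control and case genotype distributions."""
    t = np.asarray(table, dtype=float)
    controls = t[:, 0] / t[:, 0].sum()
    cases = t[:, 1] / t[:, 1].sum()
    mix = 0.5 * (controls + cases)
    return 0.5 * _kl(controls, mix) + 0.5 * _kl(cases, mix)
```

Both measures are 0 when the genotype distributions of cases and controls coincide. Both reach $\ln 2$ when cases and controls share no genotype code and the two classes are equally sized.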

There are also a few approaches that fall into neither of the two main categories above. For example, the likelihood ratio (LR) is a composite indicator that reflects both sensitivity and specificity. It can be used to measure the difference in likelihood between a disease-causing SNP combination and an SNP combination that is not involved in the disease process [22,23].

In statistics, the G-test is a likelihood-ratio (maximum-likelihood) test of statistical significance. In recent years, researchers have tended to use the G-test of independence instead of the chi-square test of independence recommended in the past, and the G-test has been used extensively in genome association analysis. Unlike other scoring criteria, the G-test provides a p-value when measuring the relationship between an SNP combination and disease status. This p-value indicates whether the SNP combination is significantly associated with the disease status [24].
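For reference, the G statistic of independence for a genotype-by-status table is $G = 2\sum O \ln(O/E)$, where $O$ and $E$ are the observed and expected counts. The minimal NumPy sketch below computes it. The function name `g_test` is hypothetical, and the sketch returns only the statistic and its degrees of freedom, not the p-value.

```python
import numpy as np


def g_test(table):
    """G statistic and degrees of freedom for genotype-by-status counts.

    table: r x c counts, rows = genotype configurations, columns = control/case.
    """
    t = np.asarray(table, dtype=float)
    t = t[t.sum(axis=1) > 0]  # unobserved genotype configurations carry no information
    expected = t.sum(axis=1, keepdims=True) * t.sum(axis=0, keepdims=True) / t.sum()
    nz = t > 0  # cells with O = 0 contribute 0 to the sum
    g = 2.0 * np.sum(t[nz] * np.log(t[nz] / expected[nz]))
    dof = (t.shape[0] - 1) * (t.shape[1] - 1)
    return float(g), dof
```

The p-value is the upper tail of a chi-square distribution with `dof` degrees of freedom, for example `scipy.stats.chi2.sf(g, dof)`. For tables without all-zero rows, `scipy.stats.chi2_contingency(table, correction=False, lambda_="log-likelihood")` should report the same statistic. Note also that $G = 2m \cdot \mathrm{Mi}$ (in nats) for $m$ samples, so the G-test and Mi rank combinations with the same genotype table size identically.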

Published research has found that different scoring criteria give different results. The K2-Score has been widely used to evaluate the association. It has a high capacity for detecting SNP interactions and is superior at discriminating certain disease models with low marginal effects. However, for interaction models with low minor allele frequencies (MAFs) and low genetic heritability (h), the K2-Score performs poorly at detecting high-order SNP interactions. ND-JE was proposed based on the properties of disease-causing SNP combination models without marginal effects, so this metric is better suited to evaluating diseases of this type. The LR-score aims to discover the relationship between likelihood differences in functional and non-functional SNP combinations, and is well adapted to datasets with unbalanced numbers of cases and controls. In practice, the G-test is found to be inadequate as a single evaluation criterion for detection, because many SNP combinations often have G-test values close to 0 [6,9,11].

The scoring criterion proposed in this work is based on the theory of causality, which distinguishes it from the theoretical approaches taken by existing criteria. Compared with correlation, causality strictly distinguishes “cause” variables from “result” variables, and plays an irreplaceable role in revealing the mechanisms by which things occur and in guiding interventions [25]. Thus, the proposed criterion is useful for, and complementary to, existing criteria.

3. Methodology

3.1. Concepts and Terms

In this work, $x = \{x_1, x_2, \ldots, x_n\}$ represents a set of $n$ SNP loci, $X = (x_{ij})_{m \times n}$ is a set of $m$ samples of $x$, and $Y = (y_1, y_2, \ldots, y_m)^\top$ denotes the $m$ samples of disease status $y$. For $i = 1, \ldots, m$ and $j = 1, \ldots, n$, $x_{ij} \in \{0, 1, 2\}$, where the values 0, 1 and 2 denote the homozygous major allele (AA), heterozygous allele (AT), and homozygous minor allele (TT), respectively; $y_i = 0$ for a control and $y_i = 1$ for a case. $D = (X, Y)$ is a dataset with $m$ samples.
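A combination of $k$ loci takes at most $3^k$ genotype configurations, so it can be treated as a single discrete variable with a small sample space. The sketch below maps each sample's genotypes at the chosen loci to one base-3 integer. It assumes $X$ is an $m \times n$ integer array of 0/1/2 codes as defined above. The encoding and the helper name `combination_codes` are our own illustration, not necessarily the representation used by the authors.

```python
import numpy as np


def combination_codes(X: np.ndarray, loci) -> np.ndarray:
    """Encode the genotypes of the SNPs in `loci` as one integer in [0, 3**k).

    X is the m x n matrix of genotype codes (0 = AA, 1 = AT, 2 = TT).
    """
    codes = np.zeros(X.shape[0], dtype=np.int64)
    for j in loci:
        codes = codes * 3 + X[:, j]  # append one base-3 digit per locus
    return codes
```

For $k = 3$, the encoded combination has at most 27 states, whatever the number of samples $m$.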

Definition 1

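(k-order epistasis SNP combination). Let $S_k = \{\{x_{i_1}, x_{i_2}, \ldots, x_{i_k}\}\}$ be the collection of all sets of $k$ SNP loci drawn from $x$, and let $f_D: S_k \to \mathbb{R}$ be a score function that measures the association between any $k$ SNP loci and disease status $y$ based on a dataset $D$. Suppose that $x$ contains a $k$-order epistasis SNP combination on $y$ (denoted as $s^*$) and that $f_D$ is a correct score function (or scoring criterion). Then, for all $s \in S_k$ with $s \neq s^*$, either $f_D(s^*) < f_D(s)$ (for criteria where lower scores indicate stronger association) or $f_D(s^*) > f_D(s)$ (for criteria where higher scores indicate stronger association).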
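Definition 1 can be checked on a single dataset by exhaustive enumeration, which is also what makes full-space testing expensive for large $k$. The sketch below does this. It assumes `score` is any callable that maps a sorted tuple of $k$ SNP indices to its criterion value on $D$. The function name is hypothetical, and ties are treated as failures.

```python
from itertools import combinations
from typing import Callable, Sequence, Tuple


def ranks_true_combination_first(
    score: Callable[[Tuple[int, ...]], float],
    n_snps: int,
    k: int,
    true_combo: Sequence[int],
    higher_is_better: bool = True,
) -> bool:
    """Check Definition 1 on one data set by exhaustive enumeration.

    Returns True only if the true combination strictly beats every other k-subset.
    """
    target = tuple(sorted(true_combo))
    best = score(target)
    for combo in combinations(range(n_snps), k):
        if combo == target:
            continue
        value = score(combo)
        # A tie or a better competitor means the criterion is not correct on this D.
        if (value >= best) if higher_is_better else (value <= best):
            return False
    return True
```

The `higher_is_better` flag covers both cases of Definition 1. For example, it would be `False` for the negative-log K2-Score and `True` for a dependence measure such as HSIC.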

3.2. Causal Relationship

According to how the data are generated, the structural equation of the causal relationship between epistasis SNP combination and disease status can be modeled as [26]:

where is the noise variable.

From the above equation, we can see that and are independent (denoted as ). In other words, among all , and have the lowest degree of dependence. However, it is impractical to use the degree of dependence between any and as the evaluation criterion for epistasis detection, because obtaining the data generated by with a regression method is too computationally costly.

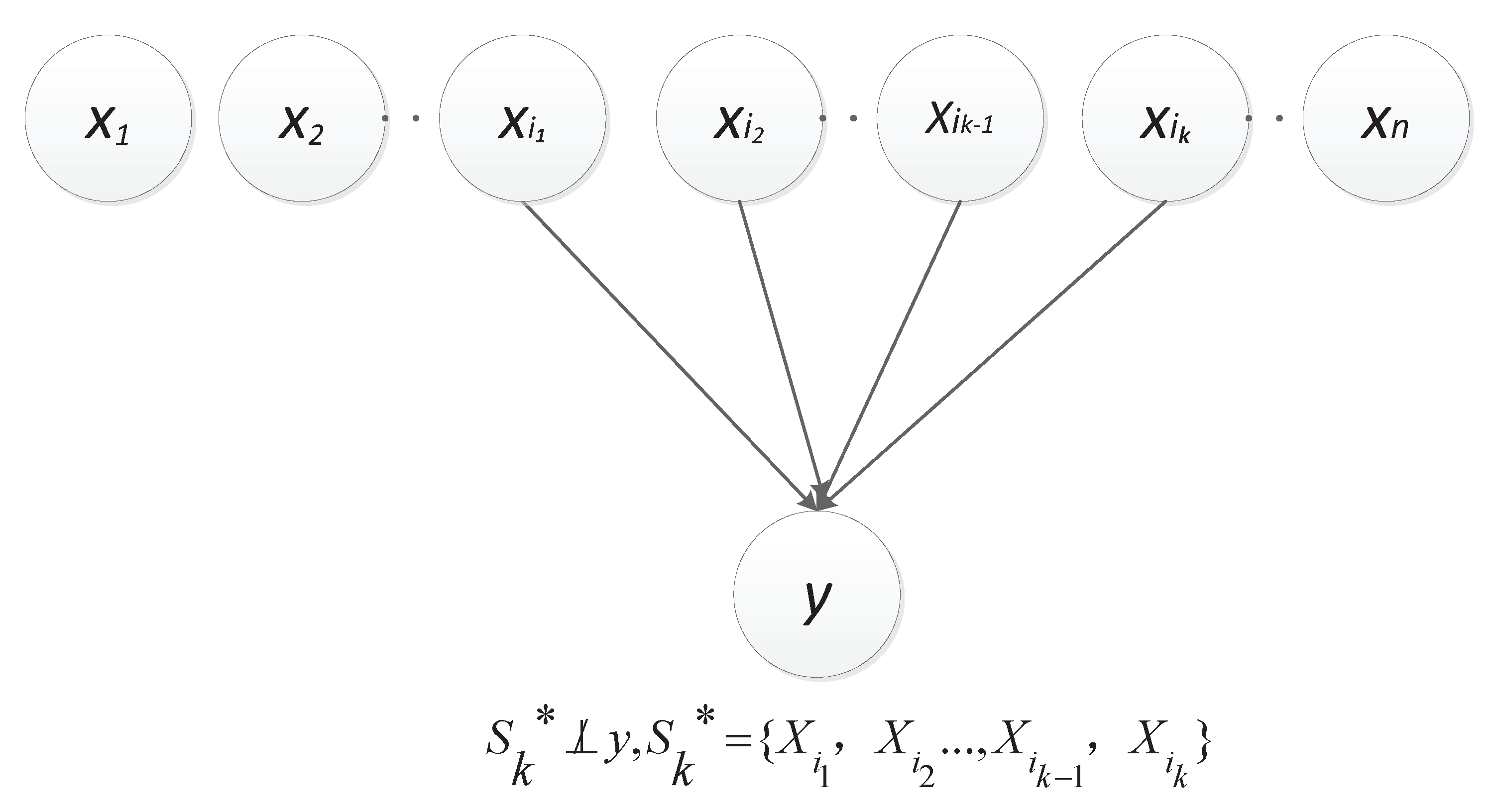

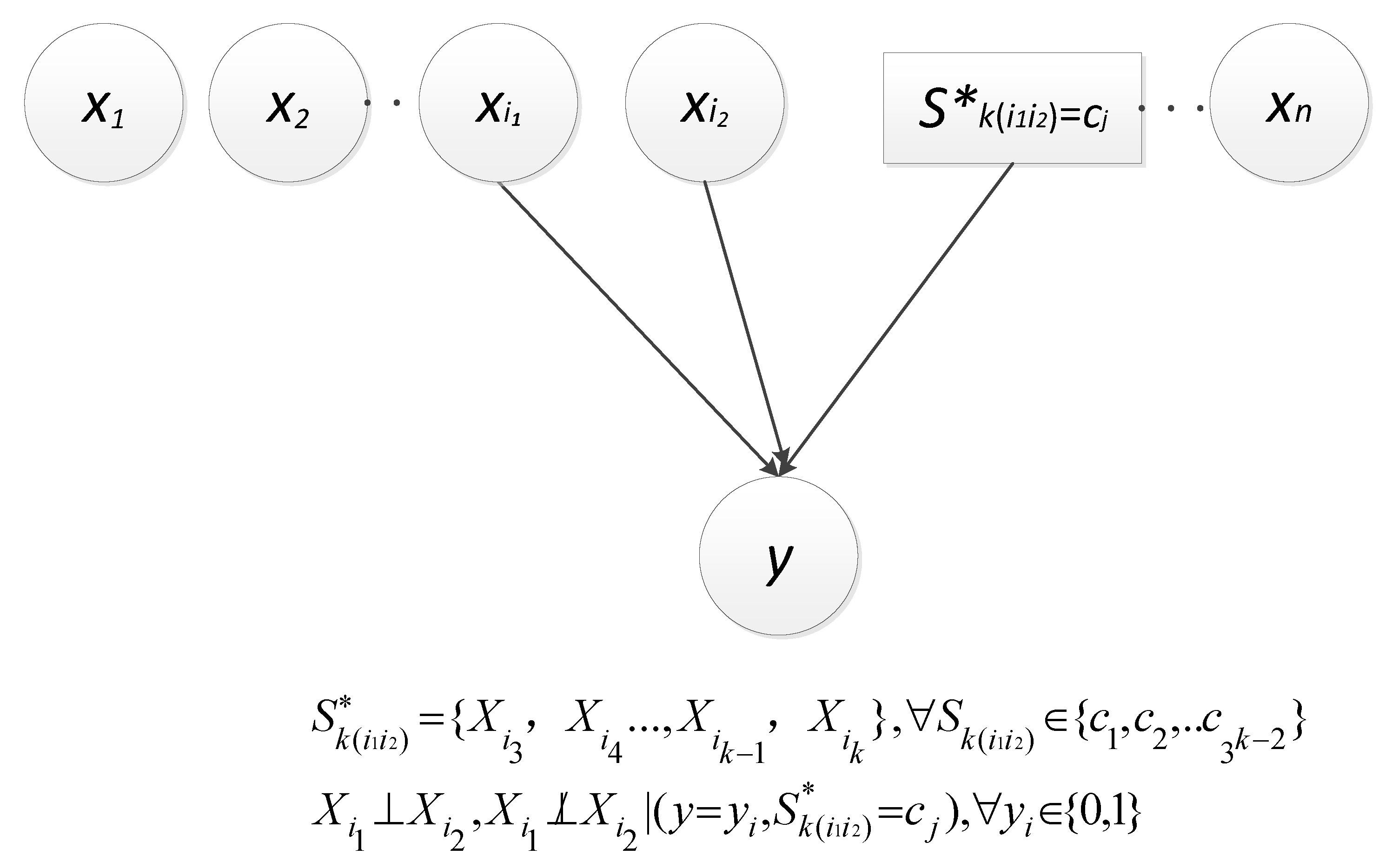

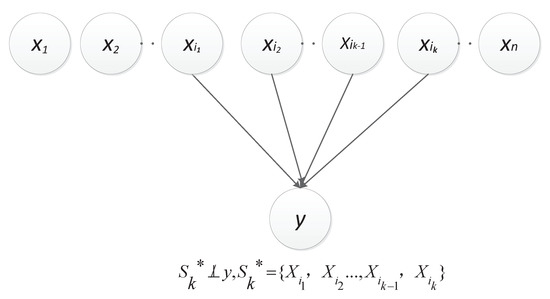

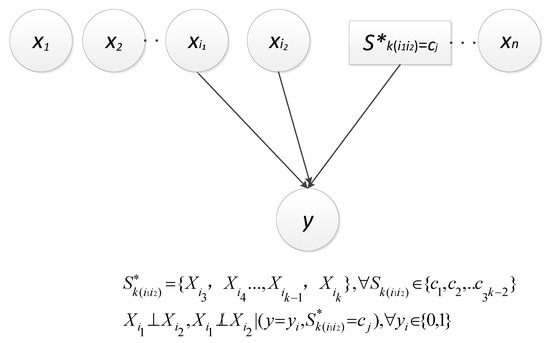

Thus, in this paper, we let be the constant 0, i.e., y , which approximately introduces two kinds of dependencies, described in Figure 1 and Figure 2, respectively [25]. Clearly, the dependence between and y is direct (denoted as ); the other is derived from the v-structure, i.e., and are dependent given the values of y and (denoted as ). Let , represent . In particular, the set is empty when k is equal to 2.

Figure 1.

Direct dependence.

Figure 2.

V-structure-related dependence.
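The two dependencies can be made concrete with a textbook pure-epistasis example. This example is our own assumption and is not one of the paper's disease models. Two binary loci $x_1, x_2$ are independent and uniform, and $y = x_1 \oplus x_2$ (XOR) with no noise. For simplicity, the sketch measures dependence with mutual information rather than HSIC. The combination $s = (x_1, x_2)$ depends directly on $y$ (Figure 1). Each locus alone is marginally independent of $y$, yet $x_1$ and $x_2$ become dependent once $y$ is given (the v-structure of Figure 2).

```python
import itertools

import numpy as np


def mutual_information(joint):
    """Mutual information (nats) of a 2-D joint probability (or count) table."""
    p = joint / joint.sum()
    expected = p.sum(axis=1, keepdims=True) * p.sum(axis=0, keepdims=True)
    nz = p > 0
    return float(np.sum(p[nz] * np.log(p[nz] / expected[nz])))


# p[x1, x2, y]: x1, x2 independent and uniform on {0, 1}, y = x1 XOR x2 (no noise)
p = np.zeros((2, 2, 2))
for x1, x2 in itertools.product((0, 1), repeat=2):
    p[x1, x2, x1 ^ x2] = 0.25

direct = mutual_information(p.reshape(4, 2))  # I(s; y), s = (x1, x2) as one variable
marginal = mutual_information(p.sum(axis=1))  # I(x1; y)
conditional = sum(  # I(x1; x2 | y)
    p[:, :, v].sum() * mutual_information(p[:, :, v]) for v in (0, 1)
)
```

Here `direct` and `conditional` both equal $\ln 2$, while `marginal` is 0. A criterion that looks only at single loci would therefore miss this combination entirely.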

3.3. Scoring Criterion

These two kinds of dependencies inspire the scoring criterion proposed here, which integrates a widely used kernel-based method of measuring statistical dependence (HSIC).

For , then, for , given () and (), let be a slice of D on under the constraint; is the number of rows of the data slice (); HSIC is used to measure the degree of statistical dependence of two random variables (x and y) based on dataset (X, Y); the scoring criterion can be computed by the following Equations (2)–(6).

To aid understanding, we note the following points:

1. The value of is a linear weighted sum of and based on their respective sample sizes;

2. For all , the value of is a linear weighted sum of all since there are v-structures given ;

3. The value of is a linear weighted sum of and based on the sample size of and the average sample size of all , which is a component and basis of ;

4. In particular, as is when ;

5. For robustness, let = 0 if and only if the denominator of the weighting factor is 0, as in ;

6. The effort of calculating the scoring criterion is reduced to calculating once and solving a problem of type up to times (fortunately, in practice).

Thus, our estimate of can eventually be obtained by solving the problem

3.4. Method for Measuring Statistical Dependence

3.4.1. HSIC

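HSIC is a criterion for measuring statistical dependence proposed in [27,28]. It is based on the eigenspectrum of covariance operators in reproducing kernel Hilbert spaces (RKHSs) $\mathcal{F}$ and $\mathcal{G}$, and is defined as follows:

$$\mathrm{HSIC}(p_{xy}, \mathcal{F}, \mathcal{G}) = \mathbf{E}_{x,x',y,y'}\left[k(x,x')\,l(y,y')\right] + \mathbf{E}_{x,x'}\left[k(x,x')\right]\mathbf{E}_{y,y'}\left[l(y,y')\right] - 2\,\mathbf{E}_{x,y}\left[\mathbf{E}_{x'}\left[k(x,x')\right]\mathbf{E}_{y'}\left[l(y,y')\right]\right],$$

where $k$ and $l$ are the kernel functions associated with $\mathcal{F}$ and $\mathcal{G}$, respectively, and $(x', y')$ is an independent copy of $(x, y)$.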

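Let $\mathcal{X}$ and $\mathcal{Y}$ be the separable sample spaces of the random variables $x$ and $y$, respectively, and assume that $(\mathcal{X}, \Gamma)$ and $(\mathcal{Y}, \Lambda)$ are furnished with probability measures $p_x$ and $p_y$, respectively ($\Gamma$ being the Borel sets on $\mathcal{X}$, and $\Lambda$ the Borel sets on $\mathcal{Y}$). Let $p_{xy}$ be a joint measure over $(\mathcal{X} \times \mathcal{Y}, \Gamma \times \Lambda)$. Then $\mathrm{HSIC}(p_{xy}, \mathcal{F}, \mathcal{G}) \geq 0$ (the higher the degree of dependence between $x$ and $y$, the greater the value). For universal kernels such as the Gaussian kernel, it is zero if and only if $x$ and $y$ are independent.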

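HSIC is a practical criterion for measuring independence, or the degree of dependence, from a finite number of observations because it has a simple empirical estimator. This estimator is biased in expectation (the bias is of order $O(m^{-1})$), is denoted by $\mathrm{HSIC}_b(D)$, and is formulated as follows:

$$\mathrm{HSIC}_b(D) = (m-1)^{-2}\,\mathrm{tr}(KHLH),$$

where $D := \{(x_1, y_1), \ldots, (x_m, y_m)\}$, $K_{ij} := k(x_i, x_j)$, $L_{ij} := l(y_i, y_j)$, and $H := I - m^{-1}\mathbf{e}\mathbf{e}^\top$ is the centering matrix ($\mathbf{e}$ being the all-ones column vector of length $m$ and $I$ the $m \times m$ identity matrix).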
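For reference, the estimator can be sketched directly in NumPy. This minimal sketch assumes Gaussian kernels with bandwidths `sigma_x` and `sigma_y`; the parameter and function names are ours. It forms $HKH$ by subtracting column, row and grand means instead of multiplying by $H$, so it runs in $O(m^2)$ time and memory rather than the $O(m^3)$ of dense matrix products.

```python
import numpy as np


def gaussian_gram(v, sigma):
    """m x m Gaussian kernel matrix exp(-||v_i - v_j||^2 / (2 sigma^2))."""
    v = np.asarray(v, dtype=float)
    v = v.reshape(v.shape[0], -1)  # treat 1-D input as m scalar observations
    sq_dist = np.sum((v[:, None, :] - v[None, :, :]) ** 2, axis=-1)
    return np.exp(-sq_dist / (2.0 * sigma ** 2))


def hsic_biased(x, y, sigma_x=1.0, sigma_y=1.0):
    """Biased empirical HSIC: (m - 1)^-2 tr(KHLH)."""
    K = gaussian_gram(x, sigma_x)
    L = gaussian_gram(y, sigma_y)
    m = K.shape[0]
    # HKH by double centering: subtract column and row means, add back the grand mean
    Kc = K - K.mean(axis=0, keepdims=True) - K.mean(axis=1, keepdims=True) + K.mean()
    # tr(KHLH) = tr(HKH L) = sum_ij (HKH)_ij L_ij because L is symmetric
    return float(np.sum(Kc * L)) / (m - 1) ** 2
```

For example, `hsic_biased([0, 0, 1, 1], [0, 1, 0, 1])` is 0 up to rounding, because the empirical joint distribution of the two samples is exactly the product of its marginals.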

An advantage of HSIC over other kernel-based independence criteria is that it can be computed in $O(m^2)$ time. However, this cost is too high for HSIC to be an integral part of the scoring criterion. Fortunately, the sample spaces of the disease status, the SNP loci and the k-order SNP combinations are finite and discrete. Thus, below we put forward an efficient method of calculating HSIC for variables with small sample spaces, which can be approximately calculated in time. Consequently, as , we can compute in time in practice.

3.4.2. Efficient Computation

Proposition 1

(Efficient computation). Let x and y be two discrete random variables with p and q states, respectively, where , or . Then, we can compute in time.

Proof.

Let e be a column vector with a length of m, , and I be an identity matrix with a size of ; we have and .

As , which implies that each i-th element of is the mean of the corresponding row elements of L, we have , where each is the sum of the corresponding row elements of L.

Let be an matrix () and be an matrix (); we have .

Let P be an row transformation matrix; we have =.

Thus, without loss of generality, we can assume that D= , i.e., the number of observed instances of x with the value of (denoted as ) is (); K can then be viewed as a partitioned matrix. Let be the th block having elements, all of which have the same value ( ). Let , where all elements in have the same value equal to (denoted as ).

Let be the number of observed instances with the value (, is the j-th state of y); the definition of and is similar to that of and . We also view as a partitioned matrix, where each has the same number of rows and columns as ; for , (denoted as ), where .

As (where ⟨·,·⟩ denotes the inner product) and , the computational complexity of is .

As described above, we can know that the total computational complexity of , and is , and that those of and are and , respectively.

Hence, we have that the total computational complexity of is , where , or .

The proof is complete. □
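The block structure exploited in the proof can be illustrated with a short sketch. It is our own formulation and not a transcription of Algorithms 1–5. It builds the $p \times q$ table of joint counts in $O(m)$ time and then evaluates $\mathrm{tr}(KHLH) = \mathrm{tr}(KL) - \frac{2}{m}\mathbf{e}^\top KL\mathbf{e} + \frac{1}{m^2}(\mathbf{e}^\top K\mathbf{e})(\mathbf{e}^\top L\mathbf{e})$, which follows from $H = I - m^{-1}\mathbf{e}\mathbf{e}^\top$ and the cyclic property of the trace. Only the $p \times p$ and $q \times q$ state-level Gram matrices are used, so this sketch costs $O(m + p^2 q + p q^2)$ operations. The function name is hypothetical.

```python
import numpy as np


def hsic_biased_discrete(x_idx, y_idx, kx, ly):
    """Biased empirical HSIC for discrete x, y without forming the m x m matrices.

    x_idx[i] in {0..p-1} and y_idx[i] in {0..q-1} are the observed states of sample i;
    kx[a, a'] = k(state a, state a') is p x p, ly[b, b'] = l(state b, state b') is q x q.
    """
    x_idx = np.asarray(x_idx, dtype=np.int64)
    y_idx = np.asarray(y_idx, dtype=np.int64)
    kx = np.asarray(kx, dtype=float)
    ly = np.asarray(ly, dtype=float)
    m = x_idx.size
    n = np.zeros((kx.shape[0], ly.shape[0]))
    np.add.at(n, (x_idx, y_idx), 1.0)   # joint counts n_ab, O(m)
    nx, ny = n.sum(axis=1), n.sum(axis=0)
    tr_kl = np.sum(n * (kx @ n @ ly))    # tr(KL) = sum n_ab n_a'b' k(a,a') l(b,b')
    ke, le = kx @ nx, ly @ ny            # row sums of K and L, one value per state
    e_kl_e = ke @ n @ le                 # e^T K L e
    e_k_e, e_l_e = nx @ ke, ny @ le      # e^T K e and e^T L e
    tr_khlh = tr_kl - 2.0 * e_kl_e / m + e_k_e * e_l_e / m ** 2
    return float(tr_khlh) / (m - 1) ** 2
```

Because a k-SNP combination has at most $3^k$ genotype states and disease status has two, $p$ and $q$ stay small in this setting, and the single counting pass over the $m$ samples dominates the cost.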

In fact, the proof above outlines a simplified procedure for efficiently computing . The detailed procedures are given in Algorithms 1–5. Algorithm 1 is the main procedure of the method; its inputs and the functions it calls are as follows:

- (X, Y) is m observations of a tuple of x and y with p and q states, respectively;

- includes two kernel functions used to calculate and , the parameters of which are and , respectively;

- (see Algorithm 2) is used to calculate , and ;

- For all , (see Algorithm 3) is used to calculate ;

- (see Algorithm 4) is used to calculate ;

- (see Algorithm 5) is used to calculate and .

Algorithm 1 Calculate

Algorithm 2 Calculate

Algorithm 3 Calculate

Algorithm 4 Calculate

Algorithm 5 Calculate

4. Experiments

We used a matrix representation of the data to calculate with a Gaussian kernel (). In addition, we mapped onto to compute , also with a Gaussian kernel (), i.e., , and . The advantages and disadvantages of the two representations have been discussed by other authors [29].

4.1. Evaluation Criterion

The evaluation criterion adopted in the experiments follows [9]:

$$\mathrm{Accuracy} = \frac{N_s}{N_d},$$

where $N_s$ is the number of data sets in which the disease-causing SNP combination is found (i.e., the epistasis SNPs are ranked highest by the criterion) and $N_d$ is the number of data sets. Each data set includes one disease-causing SNP combination. Accuracy thus measures how well a scoring criterion identifies epistasis SNPs from genome data.
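The accuracy above amounts to counting data sets whose top-ranked combination matches the true one. The short sketch below illustrates this. It assumes one top-ranked combination and one true combination per data set, each given as a collection of SNP indices in any order. The function name is hypothetical.

```python
from typing import Sequence


def detection_accuracy(top_ranked: Sequence[Sequence[int]], truth: Sequence[Sequence[int]]) -> float:
    """Fraction of data sets whose best-scoring combination is the true one (N_s / N_d)."""
    if len(top_ranked) != len(truth):
        raise ValueError("need exactly one top-ranked combination per data set")
    # Compare as sets so that (1, 0) and (0, 1) count as the same combination
    hits = sum(frozenset(found) == frozenset(true) for found, true in zip(top_ranked, truth))
    return hits / len(truth)
```

For example, with three data sets of which two are solved, the accuracy is 2/3.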

4.2. Simulated Datasets

Checking the correctness of a scoring criterion on a data set requires, in the worst case, exhaustively testing all SNP combinations, which is too computationally expensive for k = 4 and k = 5. Therefore, tests were conducted only for k = 2 and k = 3.

4.2.1. Disease Models with k = 2

For k = 2, we used thirty-five disease models without marginal effects (DNME1–35) and six disease models with marginal effects (DME1–6). The models were designed based on different disease interaction structures, MAFs, prevalences (p) and heritabilities (h) (the parameter settings are described in the Supplementary Materials). Each data set contains 1000 SNPs, including a pair of interacting SNPs (M0P0 and M1P1) generated according to the disease model settings, while the other SNPs are generated using MAFs uniformly selected in [0.05, 0.5). For each model, we used the software GAMETES2.1 [30] to generate two groups of 100 simulated data sets: one with a sample size of 400 (200 controls and 200 cases) and one with a sample size of 800 (400 controls and 400 cases) [31].
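The non-interacting background SNPs described above can be mimicked with a few lines of NumPy. This sketch assumes Hardy–Weinberg equilibrium for the genotype frequencies and covers only the background SNPs. It does not reproduce GAMETES2.1, which also constructs the penetrance tables of the interacting SNPs. The function name is hypothetical.

```python
import numpy as np


def simulate_background_snps(m, n_snps, rng, maf_low=0.05, maf_high=0.5):
    """Genotype codes (0/1/2 = number of minor alleles) for non-interacting SNPs.

    Assumes Hardy-Weinberg equilibrium; MAFs are drawn uniformly from [maf_low, maf_high).
    """
    mafs = rng.uniform(maf_low, maf_high, size=n_snps)
    # Genotype probabilities per SNP: P(AA) = (1-q)^2, P(AT) = 2q(1-q), P(TT) = q^2
    probs = np.stack([(1 - mafs) ** 2, 2 * mafs * (1 - mafs), mafs ** 2], axis=1)
    thresholds = np.cumsum(probs, axis=1)[:, :2]  # n_snps x 2 cumulative cut points
    u = rng.random((m, n_snps))
    # Genotype = number of cut points that u exceeds (inverse-CDF sampling)
    X = (u[:, :, None] > thresholds[None, :, :]).sum(axis=2)
    return X, mafs
```

For example, `simulate_background_snps(400, 998, np.random.default_rng(0))` would give the 998 background SNPs of one 400-sample data set. The interacting pair would still have to come from a penetrance model.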

Disease Models without Marginal Effects

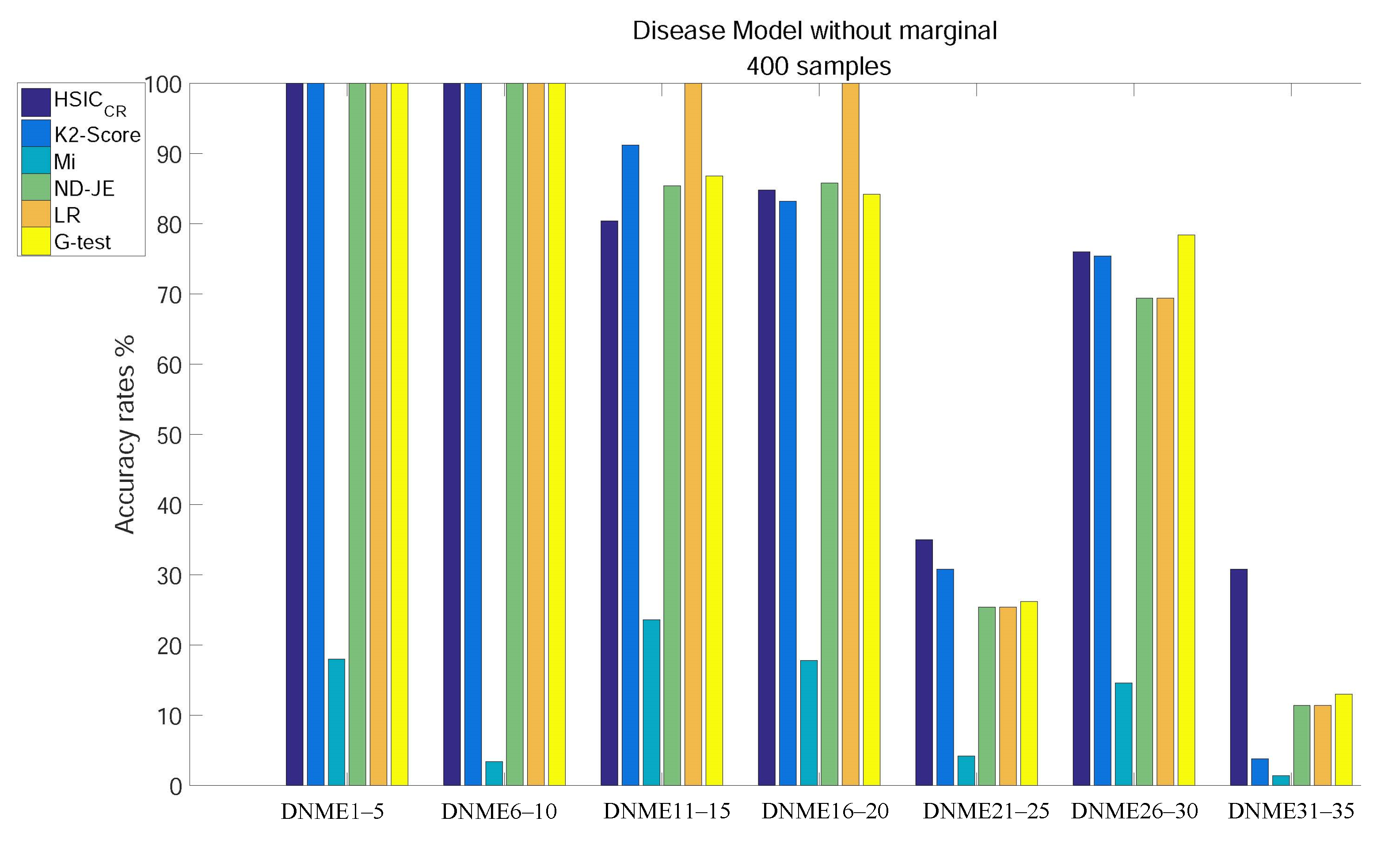

We divided all DNMEs into seven subgroups for analysis according to the combined values of h and MAF (DNME1–5: MAF = 0.2, h = 0.2; DNME6–10: MAF = 0.4, h = 0.2; DNME11–15: MAF = 0.2, h = 0.1; DNME16–20: MAF = 0.4, h = 0.1; DNME21–25: MAF = 0.2, h = 0.05; DNME26–30: MAF = 0.4, h = 0.05; DNME31–35: MAF = 0.2, h = 0.025).

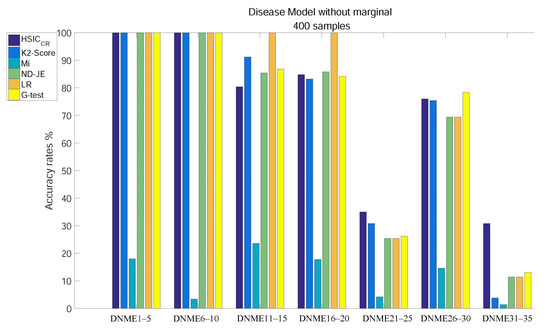

The analysis results of subgroups of DNME1–35, each of which has 400 samples, are shown in Figure 3:

Figure 3.

Disease models without marginal effects, with 400 samples and k = 2.

- Except for Mi, all scoring criteria achieve accuracy close to 100% on DNME1–10;

- None of the criteria is very accurate on DNME21–25 and DNME31–35;

- Mi has extremely poor accuracy on all subgroups;

- LR has the highest accuracy on DNME11–15 and DNME16–20, close to 100%, but is only slightly more accurate than Mi on DNME21–25, DNME26–30 and DNME31–35;

- The accuracy rates of both ND-JE and G-test rank in the middle overall, but G-test has the highest accuracy on DNME26–30;

- The accuracy rate of K2-Score ranks second on DNME11–15 and DNME21–25, third on DNME26–30, and slightly below those of ND-JE, HSIC and G-test on DNME16–20, but it is only slightly higher than that of Mi on the most difficult model subgroup (DNME31–35);

- HSIC has the highest accuracy on the two most difficult model subgroups (DNME21–25 and DNME31–35), especially on DNME31–35, where its accuracy is much higher than that of the other criteria; its overall accuracy on the other subgroups is similar to that of the other four criteria.

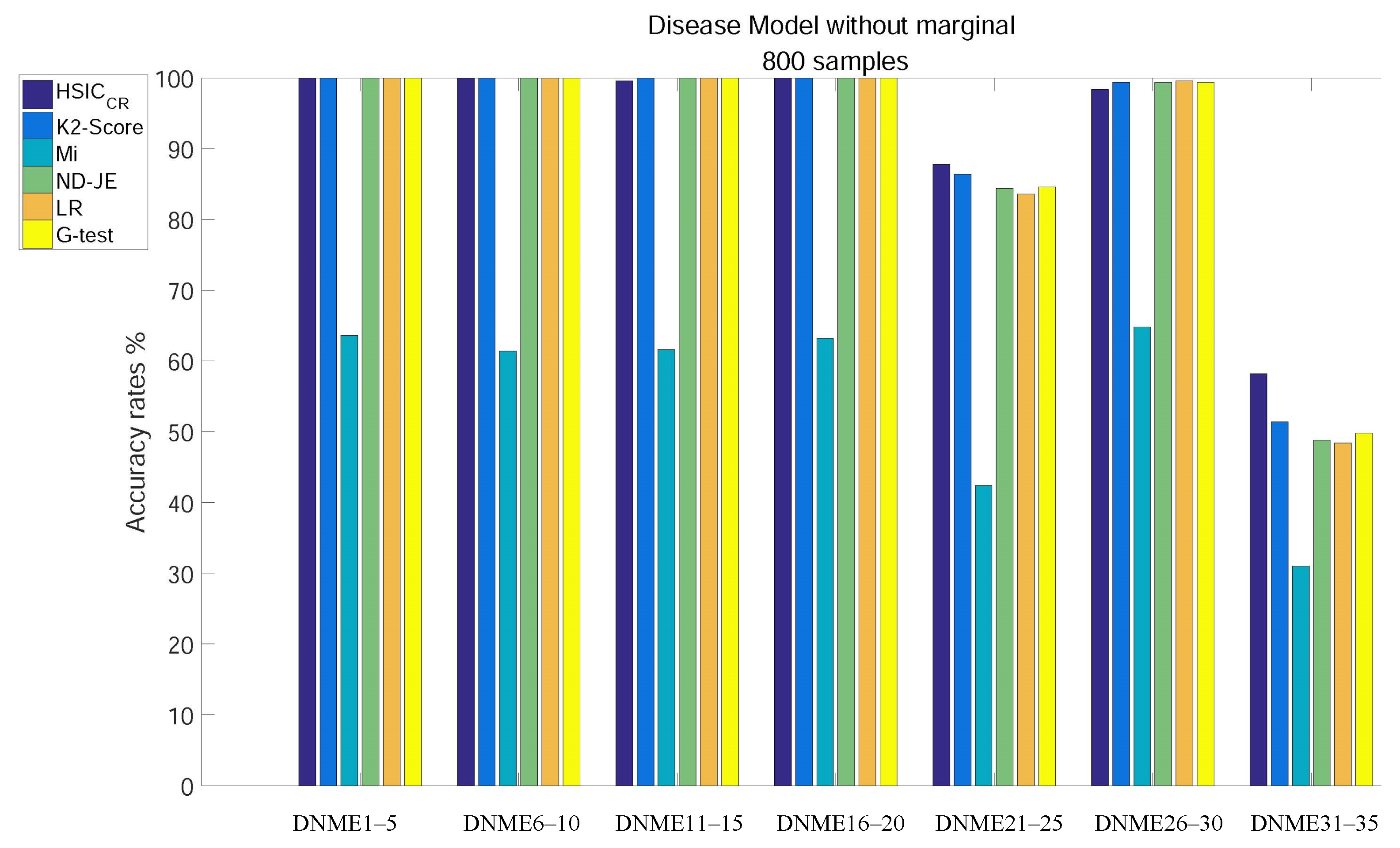

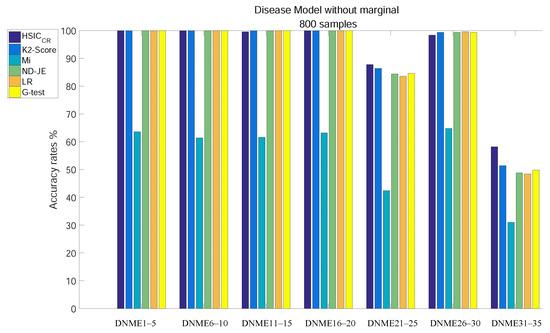

When the size of samples increased from 400 to 800, the accuracy of all criteria was greatly improved. The analysis results of subgroups of DNME1–35, each of which has 800 samples, are shown in Figure 4:

Figure 4.

Disease models without marginal effects, with 800 samples and k = 2.

- Except for Mi, all criteria achieve accuracy close to 100% on every subgroup other than the two most difficult ones (DNME21–25 and DNME31–35);

- Although the accuracy rate of Mi improves significantly with the larger sample size, it is still relatively poor overall;

- With the larger sample size, the overall ranking is unchanged, except that K2-Score rises to second place on DNME31–35;

- HSIC has the highest accuracy on the two most difficult model subgroups.

Table 1 reports the total average accuracy. It shows that Mi has poor average accuracy and that HSIC has the best average accuracy for both sample sizes (400 and 800). Although HSIC is only slightly better than the other four criteria in total average accuracy, its average accuracy on the most difficult model subgroup is much better than that of the other criteria.

Table 1.

The number of times, out of 3500 data sets generated by 35 models without marginal effects, where k = 2, that each scoring criterion identified the epistasis SNPs of snp1000 for sample sizes of 400 and 800. The fourth column gives the total accuracy over all sample sizes. The last column gives the accuracy over all sample sizes on the most difficult model subgroup. The scoring criteria are listed in descending order of total accuracy.

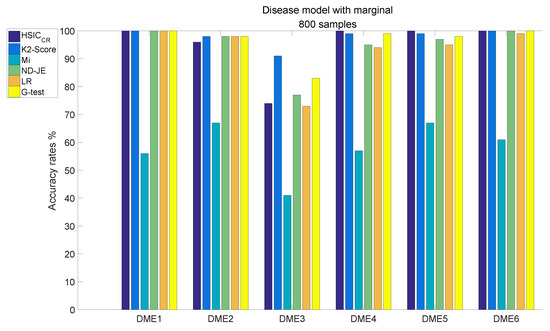

Disease Models with Marginal Effects

We tested six DMEs with MAF = 0.1 and different combinations of heritability and prevalence (DME1: h = 0.031, p = 0.050; DME2: h = 0.014, p = 0.050; DME3: h = 0.01, p = 0.050; DME4: h = 0.016, p = 0.046; DME5: h = 0.009, p = 0.026; DME6: h = 0.008, p = 0.017).

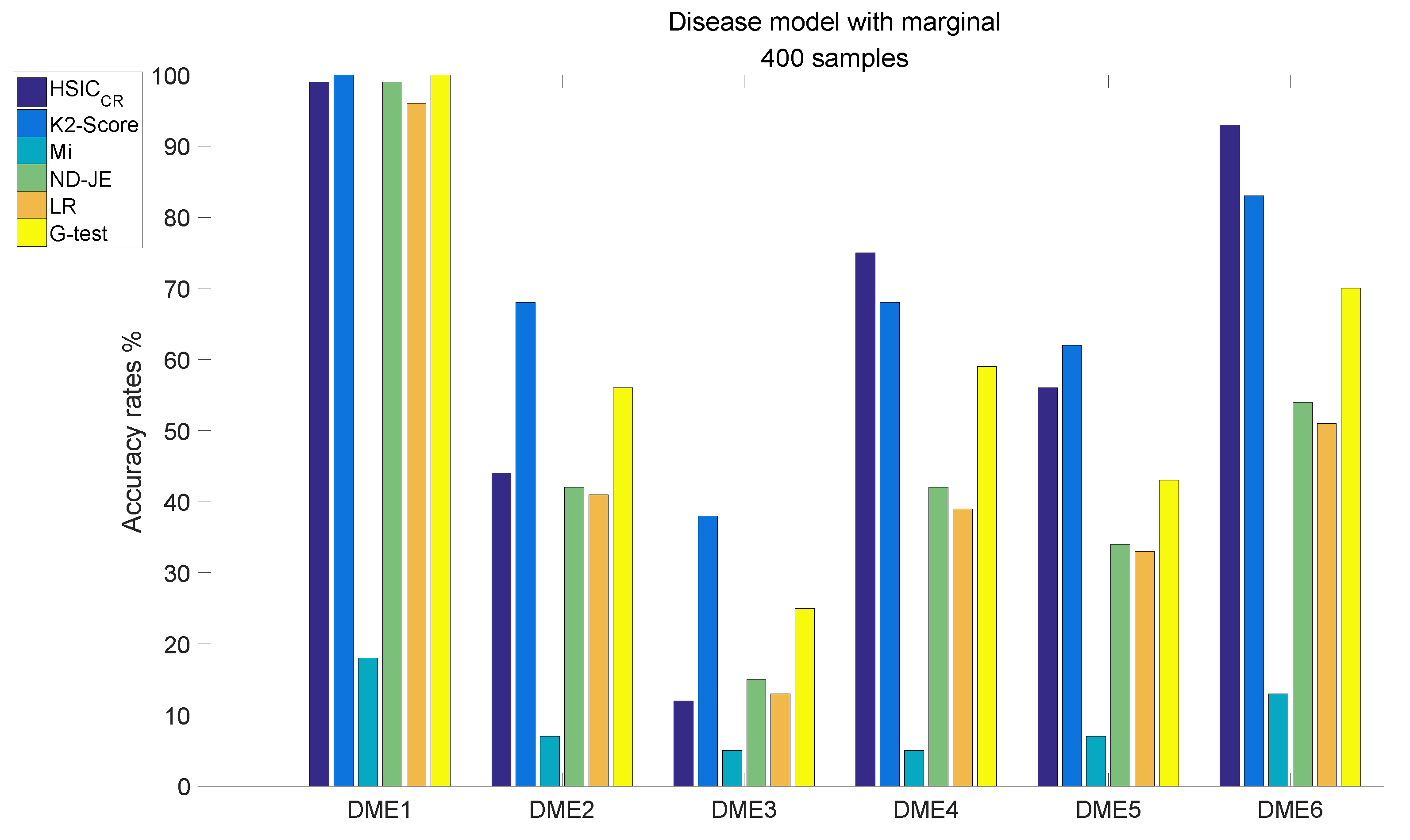

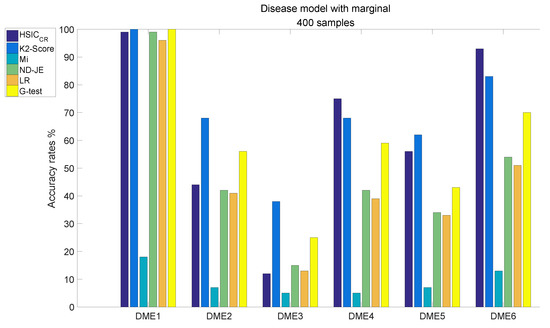

The analysis results of DME1–6, each of which has 400 samples, are shown in Figure 5:

Figure 5.

Disease models with marginal effects, with 400 samples and k = 2.

- Except for Mi, all scoring criteria achieve accuracy close to 100% on DME1;

- Mi has extremely poor accuracy on all six models;

- Apart from Mi, LR is less accurate than the other four criteria on DME2–6, except on DME3, where its accuracy is nearly the same as that of HSIC;

- ND-JE ranks third on DME1 and DME3, and fourth on the other four models;

- G-test ranks first on DME1, second on DME2 and DME3, and third on the other four models;

- HSIC has the highest accuracy on DME4 and DME6; its accuracy on DME5 is slightly below that of LR, it ranks third on DME1–2, and its accuracy on DME3 is only a little better than that of Mi;

- K2-Score has the highest accuracy on DME1–3 and DME5, ranks second on DME4 and DME6, and significantly outperforms the others on the most difficult model (DME3), although its accuracy on DME3 is below 50%.

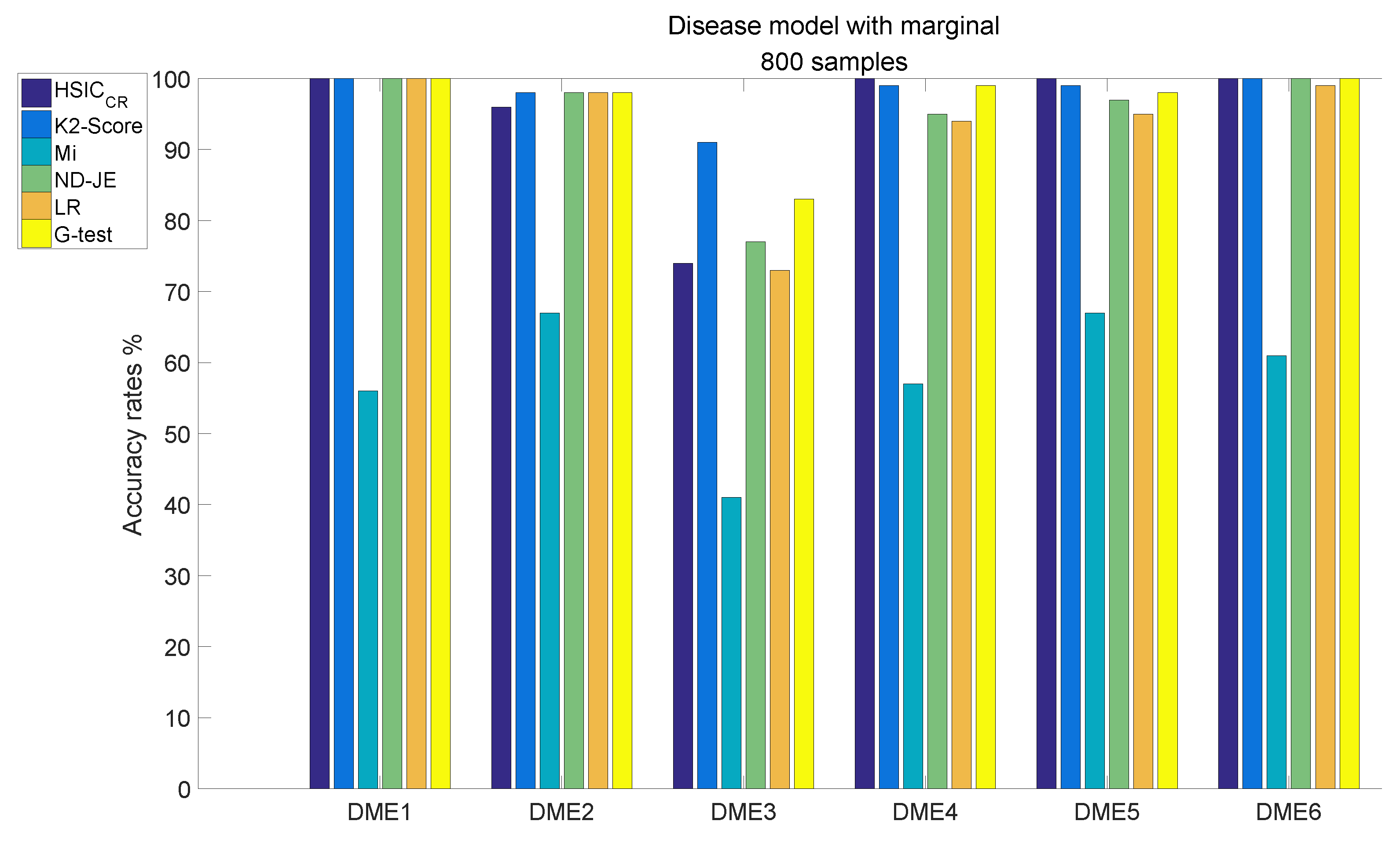

When the size of samples increased from 400 to 800, the accuracy of all criteria was greatly improved. The analysis results of DME1–6, each of which has 800 samples, are shown in Figure 6:

Figure 6.

Disease models with marginal effects, with 800 samples and k = 2.

- Although the accuracy of Mi improves significantly with the larger sample size, it is still relatively poor overall;

- The accuracy rates of the other five scoring criteria all exceed 95% on every model except DME3;

- On the most difficult model (DME3), K2-Score has the highest accuracy (over 90%), G-test ranks second (over 80%), and the accuracy rates of ND-JE, HSIC and LR are not good enough, at just over .

Table 2 reports the total average accuracy. It shows that Mi has poor average accuracy and that K2-Score has the best average accuracy for both sample sizes (400 and 800), mainly because its accuracy on DME3 is much better than that of the other five scoring criteria. Although the accuracy of HSIC on DME3 is not good enough, HSIC ranks second in overall average accuracy.

Table 2.

The number of times, out of 600 data sets generated by six models with marginal effects, where k = 2, that each scoring criterion identified the epistasis SNPs of snp1000 for sample sizes of 400 and 800. The fourth column gives the total accuracy over all sample sizes. The last column gives the accuracy over all sample sizes on the most difficult model. The scoring criteria are listed in descending order of total accuracy.

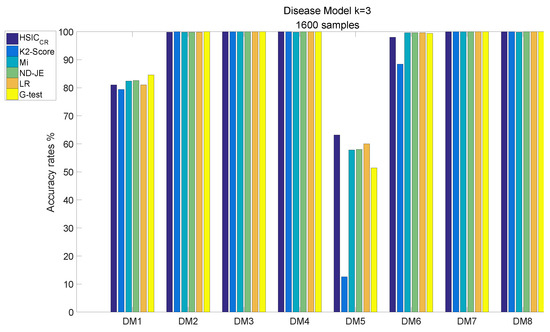

4.2.2. Disease Models with k = 3

For k = 3, the data sets were generated from eight third-order epistasis disease models (DM1–8), which were modeled with GAMETES2.1 using combinations of different MAFs (0.2 and 0.4) and different heritabilities (0.025, 0.05, 0.1 and 0.2) (DM1: MAF = 0.2, h = 0.025; DM2: MAF = 0.2, h = 0.05; DM3: MAF = 0.2, h = 0.1; DM4: MAF = 0.2, h = 0.2; DM5: MAF = 0.4, h = 0.025; DM6: MAF = 0.4, h = 0.05; DM7: MAF = 0.4, h = 0.1; DM8: MAF = 0.4, h = 0.2). Each combination has five quantiles, and each quantile of each model corresponds to 100 simulated data files. Each file contains 100 SNPs and 1600 samples (800 controls and 800 cases), including three interacting SNPs (M0P0, M1P1 and M2P2) generated according to the disease model settings, while the other SNPs were generated using MAFs uniformly selected in [0.05, 0.5]. Therefore, the total number of data sets is 4000 (8 models × 5 quantiles × 100 files) [6]. Detailed parameter settings are described in the supplementary file.

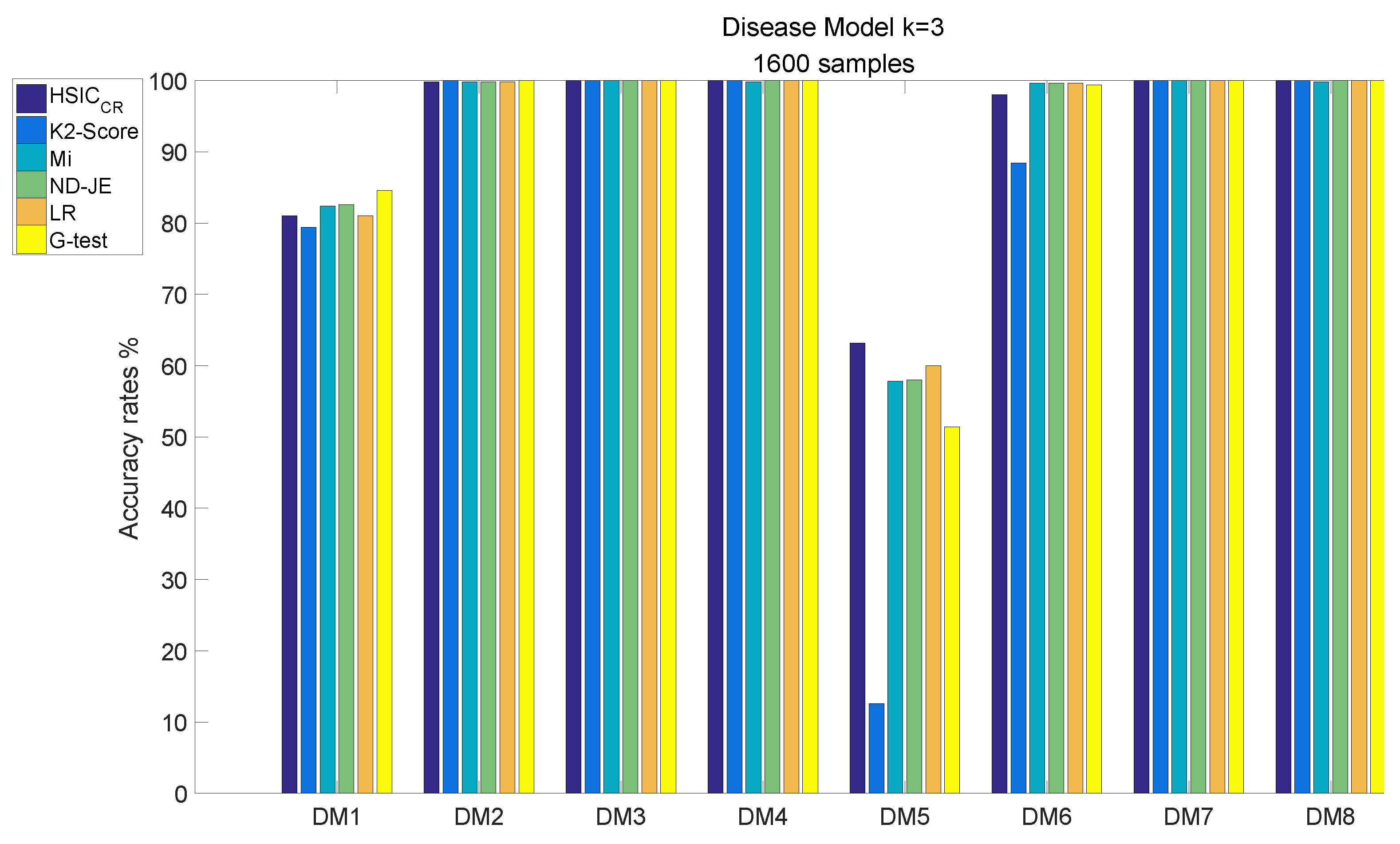

The analysis results of DM1–8, each of which has 1600 samples, are shown in Figure 7:

Figure 7.

Disease models with 1600 samples and k = 3.

- All scoring criteria achieve accuracy close to 100% on DM2–4 and DM7–8;

- All scoring criteria achieve accuracy close to 80% on DM1;

- K2-Score has poor accuracy on the most difficult model (DM5), where its accuracy is only close to ;

- On DM6, all scoring criteria except K2-Score achieve accuracy close to 100%;

- HSIC has the highest accuracy on DM5, and it is the only scoring criterion whose accuracy there exceeds 60%.

Table 3 reports the total average accuracy. It shows that K2-Score has poor accuracy on the most difficult model and that the total average accuracy of all scoring criteria is good, with every criterion except K2-Score exceeding 90%. Although HSIC has the best total average accuracy, it is not significantly better than the other four criteria. However, on the most difficult model, HSIC outperforms LR by 3.2% and significantly outperforms the other four criteria, especially K2-Score.

Table 3.

The number of times, out of 4000 data sets generated by eight models, where k = 3, that each scoring criterion identified the epistasis SNPs of snp100 for 1600 samples. The second column gives the total accuracy over a sample size of 1600. The last column gives the accuracy over a sample size of 1600 on the most difficult model. The scoring criteria are listed in descending order of total accuracy.

4.2.3. The Running Time Analysis

To demonstrate that our proposed method can be used as a lightweight scoring criterion, we proved in the previous section that its time complexity is . Furthermore, we calculated the average running time per dataset (in seconds) for the two-order tests with a sample size of 800 and for the three-order tests in the simulation experiments. The average running time of our proposed method falls within the range of the other five lightweight methods (see Table 4), which further supports its use as a lightweight scoring criterion.

Table 4.

Average running time (s) for the six scoring criteria per dataset for both the two-order tests and the three-order tests in the simulation experiments.

In the experiments, all scoring criteria were implemented in MATLAB, and all tests were run on a 64-bit Windows 10 desktop computer with an 11th Gen Intel(R) Core(TM) i7-1165G7 CPU @ 2.80 GHz and 16.0 GB of memory.

4.3. Case Study: A Real Chronic Dialysis Data Set

A real data set of 193 cases and 704 controls, covering SNPs in the mitochondrial D-loop region of chronic dialysis patients, was taken from a study by other authors [32]. The genotypes and locations of the 77 SNPs are presented in Table 5 [33].

Table 5.

Positions of the 77 chronic dialysis-associated SNPs in the mitochondrial D-loop region. The left and right letters are the major and minor alleles, respectively, and the number is the SNP position in the mitochondrial D-loop region.

The 77 SNPs contained in this subset of the chronic dialysis data set were used in the case study, which aims to illustrate our proposed scoring criterion more concretely. First, we performed a full-space two-order SNP combination detection on this data set, i.e., the HSIC values were evaluated for all 2926 ($\binom{77}{2}$) possible combinations. Then, we selected the ten combinations with the highest HSIC values as candidate two-order epistasis SNP combinations to be proposed to medical researchers. These ten candidate combinations are presented in Table 6.
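The full-space scan and top-ten selection can be sketched as follows. Here `score` stands for any pair-level criterion for which higher is better, as for HSIC in this case study, and `top_pairs` is a hypothetical helper. Using `heapq.nlargest` keeps only the best `top` candidates while scanning all pairs once.

```python
import heapq
import math
from itertools import combinations


def top_pairs(score, n_snps, top=10):
    """Score every SNP pair and keep the `top` highest (score, pair) tuples, best first."""
    return heapq.nlargest(top, ((score(pair), pair) for pair in combinations(range(n_snps), 2)))


N_PAIRS = math.comb(77, 2)  # 2926 pairs for the 77 D-loop SNPs
```

Ties in the score are broken by comparing the pair tuples, so the output order is deterministic.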

Table 6.

The ten two-order SNP combinations with the highest HSIC values, used as candidate combinations.

5. Conclusions

In this paper, we proved rigorously that HSIC can be computed in time. Moreover, we compared HSIC with five representative scoring criteria on 49 simulated disease models. The experimental results show the following. Mi has poor accuracy on two-order disease models; K2-Score has poor accuracy on difficult three-order disease models; and the accuracy rates of LR are not good enough on two-order disease models. HSIC, G-test and ND-JE achieve high accuracy on all three classes of disease models. HSIC ranks first in accuracy on two-order disease models without marginal effects and on three-order disease models, and second on two-order disease models with marginal effects, although its advantage is not significant.

HSIC has two advantages. First, its methodology differs from that of other scoring criteria, which makes it complementary to them. Second, it achieves high accuracy on most disease models.

In the future, we will develop efficient SIO algorithms for this problem that combine HSIC with other existing effective lightweight criteria as weighted single-objective or multi-objective functions. In addition, we will work with several local medical research institutions to mine their real case-control study data for disease-related SNP combinations using our proposed approach. This will ultimately provide new guidance for drug development for complex diseases.

Supplementary Materials

The following supporting information can be downloaded at: https://www.mdpi.com/article/10.3390/math10214134/s1, Table S1: Model with marginal effects when k = 2; Table S2: Models 1 to 10 without marginal effects when k = 2; Table S3: Models 11 to 20 without marginal effects when k = 2; Table S4: Models 21 to 30 without marginal effects when k = 2; Table S5: Models 31 to 35 without marginal effects when k = 2.

Author Contributions

Conceptualization, J.Z. (Junxi Zheng); data curation, J.Z. (Junxi Zheng) and J.Z. (Jiaxian Zhu); formal analysis, J.Z. (Juan Zeng), J.Z. (Jiaxian Zhu) and F.W.; funding acquisition, J.Z. (Junxi Zheng); investigation, G.L. and F.W.; methodology, J.Z. (Junxi Zheng); project administration, D.T. and X.W.; supervision, D.T. and X.W.; validation, J.Z. (Junxi Zheng) and J.Z. (Juan Zeng); visualization, G.L.; writing—original draft, J.Z. (Junxi Zheng) and X.W. All authors have read and agreed to the published version of the manuscript.

Funding

This work was supported by the Guangdong provincial medical research foundation of China (No. A2022531), the national natural science foundation of China (No. 61976239), and the natural science foundation of Guangdong province, China (No. 2020A1515010783).

Data Availability Statement

The data that support the findings of this study can be acquired from the corresponding author.

Conflicts of Interest

No potential conflict of interest was reported by the authors.

Abbreviations

The following abbreviations are used in this manuscript:

| SNP | single-nucleotide polymorphism |

| GWAS | genome-wide association analysis |

References

1. Carlson, C.S.; Eberle, M.A.; Kruglyak, L.; Nickerson, D.A. Mapping complex disease loci in whole-genome association studies. Nature 2004, 429, 446–452.
2. Wei, W.H.; Hemani, G.; Haley, C.S. Detecting epistasis in human complex traits. Nat. Rev. Genet. 2014, 15, 722–733.
3. Guo, X.; Meng, Y.; Yu, N.; Pan, Y. Cloud computing for detecting high-order genome-wide epistatic interaction via dynamic clustering. BMC Bioinform. 2014, 15, 102.
4. Guo, X.; Zhang, J.; Cai, Z.; Du, D.Z.; Pan, Y. Searching genome-wide multi-locus associations for multiple diseases based on Bayesian inference. IEEE/ACM Trans. Comput. Biol. Bioinform. 2016, 14, 600–610.
5. Gyenesei, A.; Moody, J.; Semple, C.A.; Haley, C.S.; Wei, W.H. High-throughput analysis of epistasis in genome-wide association studies with BiForce. Bioinformatics 2012, 28, 1957–1964.
6. Liyan, S. The Research on Epistasis Detection Algorithm in Genome-wide Association Study. Ph.D. Thesis, Jilin University, Changchun, China, 2020.
7. Ritchie, M.D.; Hahn, L.W.; Roodi, N.; Bailey, L.R.; Dupont, W.D.; Parl, F.F.; Moore, J.H. Multifactor-dimensionality reduction reveals high-order interactions among estrogen-metabolism genes in sporadic breast cancer. Am. J. Hum. Genet. 2001, 69, 138–147.
8. Wang, X.; Cao, X.; Feng, Y.; Guo, M.; Yu, G.; Wang, J. ELSSI: Parallel SNP–SNP interactions detection by ensemble multi-type detectors. Brief. Bioinform. 2022, 23, bbac213.
9. Tuo, S.; Liu, H.; Chen, H. Multipopulation harmony search algorithm for the detection of high-order SNP interactions. Bioinformatics 2020, 36, 4389–4398.
10. Sun, Y.; Shang, J.; Liu, J.X.; Li, S.; Zheng, C.H. epiACO: A method for identifying epistasis based on ant colony optimization algorithm. BioData Min. 2017, 10, 23.
11. Tuo, S.; Zhang, J.; Yuan, X.; He, Z.; Liu, Y.; Liu, Z. Niche harmony search algorithm for detecting complex disease associated high-order SNP combinations. Sci. Rep. 2017, 7, 11529.
12. Aflakparast, M.; Salimi, H.; Gerami, A.; Dubé, M.; Visweswaran, S.; Masoudi-Nejad, A. Cuckoo search epistasis: A new method for exploring significant genetic interactions. Heredity 2014, 112, 666–674.
13. Jing, P.J.; Shen, H.B. MACOED: A multi-objective ant colony optimization algorithm for SNP epistasis detection in genome-wide association studies. Bioinformatics 2015, 31, 634–641.
14. Cheng, R.; Jin, Y.; Olhofer, M.; Sendhoff, B. A reference vector guided evolutionary algorithm for many-objective optimization. IEEE Trans. Evol. Comput. 2016, 20, 773–791.
15. Shouheng, T.; Hong, H. DEaf-MOPS/D: An improved differential evolution algorithm for solving complex multi-objective portfolio selection problems based on decomposition. Econ. Comput. Econ. Cybernet. Stud. Res. 2019, 53, 151–167.
16. Verzilli, C.J.; Stallard, N.; Whittaker, J.C. Bayesian graphical models for genomewide association studies. Am. J. Hum. Genet. 2006, 79, 100–112.
17. Cooper, G.F.; Herskovits, E. A Bayesian method for the induction of probabilistic networks from data. Mach. Learn. 1992, 9, 309–347.
18. Jiang, X.; Neapolitan, R.E.; Barmada, M.M.; Visweswaran, S. Learning genetic epistasis using Bayesian network scoring criteria. BMC Bioinform. 2011, 12, 89.
19. Zhang, Y.; Liu, J.S. Bayesian inference of epistatic interactions in case-control studies. Nat. Genet. 2007, 39, 1167–1173.
20. Paninski, L. Estimation of entropy and mutual information. Neural Comput. 2003, 15, 1191–1253.
21. Lin, J. Divergence measures based on the Shannon entropy. IEEE Trans. Inf. Theory 1991, 37, 145–151.
22. Bush, W.S.; Edwards, T.L.; Dudek, S.M.; McKinney, B.A.; Ritchie, M.D. Alternative contingency table measures improve the power and detection of multifactor dimensionality reduction. BMC Bioinform. 2008, 9, 238.
23. Neyman, J.; Pearson, E.S. On the use and interpretation of certain test criteria for purposes of statistical inference: Part I. Biometrika 1928, 20A, 175–240.
24. Stamatis, D.H. Essential Statistical Concepts for the Quality Professional; CRC Press: Boca Raton, FL, USA, 2012.
25. Pearl, J. Causality: Models, Reasoning and Inference; Cambridge University Press: Cambridge, UK, 2000; Volume 19.
26. Schaid, D.J. Genomic similarity and kernel methods I: Advancements by building on mathematical and statistical foundations. Hum. Hered. 2010, 70, 109–131.
27. Gretton, A.; Bousquet, O.; Smola, A.; Schölkopf, B. Measuring statistical dependence with Hilbert-Schmidt norms. In Proceedings of the International Conference on Algorithmic Learning Theory, Singapore, 8–11 October 2005; Springer: Berlin/Heidelberg, Germany, 2005; pp. 63–77.
28. Gretton, A.; Fukumizu, K.; Teo, C.; Song, L.; Schölkopf, B.; Smola, A. A kernel statistical test of independence. Adv. Neural Inf. Process. Syst. 2007, 20, 585–592.
29. Kodama, K.; Saigo, H. KDSNP: A kernel-based approach to detecting high-order SNP interactions. J. Bioinform. Comput. Biol. 2016, 14, 1644003.
30. Urbanowicz, R.J.; Kiralis, J.; Sinnott-Armstrong, N.A.; Heberling, T.; Fisher, J.M.; Moore, J.H. GAMETES: A fast, direct algorithm for generating pure, strict, epistatic models with random architectures. BioData Min. 2012, 5, 16.
31. Yang, C.H.; Chuang, L.Y.; Lin, Y.D. Multiobjective multifactor dimensionality reduction to detect SNP–SNP interactions. Bioinformatics 2018, 34, 2228–2236.
32. Chen, J.B.; Yang, Y.H.; Lee, W.C.; Liou, C.W.; Lin, T.K.; Chung, Y.H.; Chuang, L.Y.; Yang, C.H.; Chang, H.W. Sequence-based polymorphisms in the mitochondrial D-loop and potential SNP predictors for chronic dialysis. PLoS ONE 2012, 7, e41125.
33. Yang, C.H.; Kao, Y.K.; Chuang, L.Y.; Lin, Y.D. Catfish Taguchi-based binary differential evolution algorithm for analyzing single nucleotide polymorphism interactions in chronic dialysis. IEEE Trans. Nanobiosci. 2018, 17, 291–299.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).