Abstract

The paper presents an optimal control problem for a partially observable stochastic differential system driven by an external Markov jump process. The available controlled observations are indirect and corrupted by Wiener noise. The goal is to optimize a linear function of the state (output) given a general quadratic criterion. The separation principle, verified for the system at hand, allows the control problem to be examined separately from the filter optimization. The solution to the latter problem is provided by the Wonham filter. The solution to the former control problem is obtained by formulating an equivalent control problem with complete information for a stochastic process with linear drift and nonlinear diffusion. This problem, in turn, is solved directly by the dynamic programming method. The applicability of the obtained theoretical results is illustrated by a numerical example, where an optimal amplification/stabilization problem is solved for an unstable externally controlled step-wise mechanical actuator.

1. Introduction

The linear quadratic Gaussian (LQG) control problem is one of the most productive results in control theory. It consists in synthesizing a control law for a linear Gaussian stochastic system that minimizes the expected value of a quadratic utility function [1]. The application area of the result is extremely broad. Aerospace applications were and still are important and include, for example, re-entry vehicle tracking and orbit determination [1]; other uses include navigation and guidance systems, radar tracking, and sonar, i.e., the same tasks that became the first applications of the Kalman filter [2]. The control of non-stationary technological objects has also become a significant source of applications. These industrial applications arise in the paper and mineral processing industries, biochemistry, energy, and many other domains [3]. Industrial applications are often represented by very specific examples, such as a dry process rotary cement kiln, cement raw material mixing, and thickness control of a plastic film [4,5]. Of particular interest are tasks connected with the mechanics of drives and manipulators, e.g., two-link manipulator stabilization [6] or output feedback stabilization for robot control [7]. These models do not, in fact, contain stochastic elements, but these applications served as a motivation for the present research.

One of the cornerstones of the contemporary LQG technique is the separation theorem, which is inherent in control problems with incomplete information [8,9]. This result justifies a transparent engineering approach, according to which the initial control problem splits into an estimation stage for the unobservable state and a control synthesis stage based on the obtained state estimate. In the general nonlinear/non-Gaussian case, the separation procedure does not deliver an absolute optimum in the class of admissible controls. Nevertheless, forcing the separation of state estimation and control optimization allows one to synthesize high-performance controls for various applied problems. This approach is called the separation principle. Its applications are not limited to the aforementioned traditional tasks of Kalman filtering and LQG control. Recent applications cover rather modern areas, e.g., telecommunications: in [10,11], LQG and risk-sensitive LQG controls are used under Denial-of-Service (DoS) attack conditions, and [12] uses similar models and tasks for control over lossy data networks. In [13], user activity measurements are used to manage the computational resources of a data center. In [14], the ideas of estimation and control separation are adapted to neural network training tasks. The meaning of the term “separation” has also evolved. In [15], for hybrid systems containing real-time control loops and logic-based decision makers, the control loops are separated. In [16], separation is interpreted in the sense that the output feedback controller is based on separate properties: state feedback, on the one hand, and output-to-state stability, on the other.

Control problems with incomplete information, especially in stochastic settings, are usually of much higher relevance than those with complete information. The separation principle, when applied to a general control problem, makes it possible to use known theoretical results and the corresponding numerical procedures developed for optimal stochastic control with complete information [17,18,19]. The separation principle requires computing the most precise state estimate given the available indirect noisy observations, which leads to the optimal filtering problem. Its general solution, in turn, is characterized by either the Kushner–Stratonovich [20,21] or the Duncan–Mortensen–Zakai [22,23,24] equations, which describe the evolution of the posterior state distribution in time. The approach developed in [25,26,27] yields easily calculated state estimates only in a limited number of special cases. Some examples for a controlled Markov jump process (MJP) can be found in [28].

For the quadratic criterion, the most general model for which the separation theorem is valid was considered in [29,30,31]. The main goal of our research is to apply these results to a typical linear model of a mechanical actuator augmented by an MJP that simulates changes in the actuator operating modes or an external drift. The difference is that, in the mentioned papers, there was no quadratic term involving the observed state; the criterion in [31] is more general but still does not include a term with an unobservable MJP. In these papers, the obtained results provide an optimal control that depends linearly on the observed state and on the optimal estimate of the unobservable component. The latter is interpreted as an uncontrollable disturbance and is not included in the criterion. In the target application of our work, the unobservable MJP represents the state of the controlled system. This state can be called an operating mode, and the purpose of control is to monitor this mode and to stabilize the system under the conditions determined by the mode switches. For the analysis of such a system, we do not need the general models of the unobserved component used in [29,31] and the corresponding optimal filtering equations, which are difficult to implement in practice. For our purposes, an MJP model as in [30] and the optimal Wonham filter [32] are sufficient. Thus, the main theoretical goal of our research is to extend the result of [30] to the case of a general quadratic criterion containing a term with the unobservable MJP. The practical goal is to apply this result to stabilize the mechanical actuator under changing operating modes.

The paper is organized as follows. Section 2 contains a thorough statement of the optimal control problem. The controlled observable output is a solution to a linear stochastic differential system governed by an unobservable finite-state Markov jump process. The control enters the right-hand side (RHS) as an additive term with a nonrandom matrix gain. The optimality criterion generalizes the traditional quadratic one. It allows investigating various optimal control problems (MJP state tracking in terms of an integral performance index, control assistance in systems with noisy controls, damping of statistically uncertain exogenous step-wise disturbances, etc.) within a unified approach.

On the one hand, a slight modification of the Wonham filter yields the conditional expectation of the unobservable MJP state given the output observations. On the other hand, the investigated dynamic system can be rewritten in a form that can be treated as a controlled system with complete information. In turn, the optimality criterion can be transformed into a form in which it depends on the unobserved MJP only through its estimate. These manipulations, performed in Section 3, legitimize the use of the separation principle in the investigated control problem (see Proposition 1).

Generally, to synthesize the optimal control, one has to solve the dynamic programming equation. However, the similarity of the considered control problem to the “classic” LQG case suggests the form of the Bellman function: a quadratic function of the controlled state. Hence, the problem reduces to characterizing the corresponding coefficients, which are derived in Section 4 and Section 5.

Section 6 presents an illustrative example of the application of the obtained theoretical results. The object of control is a mechanical actuator (e.g., the cart of a gantry crane). This actuator performs step-wise movements governed by an external unobservable control signal, which is an MJP corrupted by noise. The goal is to assist the actuator in carrying out external commands more accurately. Section 7 contains concluding remarks.

2. Problem Statement

On the canonical probability space with filtration , , we consider a partially observable stochastic dynamic system:

where is an observable system state governed by a statistically uncertain (i.e., unobservable random) drift . We suppose that is a finite-state Markov jump process (MJP) with values in the set formed by unit coordinate vectors of the Euclidean space . The initial value Y has a known distribution , is a transition intensity matrix, and is a -adapted martingale with quadratic characteristic [33].

We denote the natural filtration generated by the system state z as and assume that . Furthermore, is a standard vector Wiener process, and is a random vector with a finite variance. Z, , and are mutually independent; is an admissible control. We suppose that ; thus, the admissible control is a feedback control: [19]. We also assume that state is uniformly non-degenerate, i.e., , where is some positive value, and stands for the identity matrix of appropriate dimension.
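For readers who wish to experiment with the model, the following Python sketch simulates a finite-state MJP exactly, jump by jump, from an initial distribution and a transition intensity matrix. It assumes the usual convention that the off-diagonal entry `lam[i, j]` is the intensity of a jump from the i-th to the j-th unit vector and that every row sums to zero; the function names are hypothetical and do not come from the paper. State index k stands for the unit vector e_k, so `np.eye(n)[states]` recovers the vector-valued path.

```python
import numpy as np


def simulate_mjp(lam, p0, T, rng):
    """Exact (event-driven) simulation of a finite-state MJP on [0, T].

    lam[i, j] (i != j) is the intensity of a jump i -> j; each row sums to zero.
    Returns the jump times (starting with 0) and the visited state indices.
    """
    n = lam.shape[0]
    t, state = 0.0, rng.choice(n, p=p0)
    times, states = [0.0], [state]
    while True:
        rate = -lam[state, state]             # total intensity of leaving the state
        if rate <= 0.0:                       # absorbing state: no further jumps
            break
        t += rng.exponential(1.0 / rate)      # exponential holding time
        if t >= T:
            break
        jump_probs = lam[state].copy()
        jump_probs[state] = 0.0
        state = rng.choice(n, p=jump_probs / rate)  # embedded jump chain
        times.append(t)
        states.append(state)
    return np.array(times), np.array(states)


def occupation_fractions(times, states, T, n):
    """Fraction of [0, T] spent in each of the n states."""
    durations = np.diff(np.append(times, T))
    return np.bincount(states, weights=durations, minlength=n) / T
```

For example, for the two-state matrix [[-1, 1], [2, -2]], the long-run fraction of time spent in the first state approaches the stationary probability 2/3.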

The quality of the control law is defined by the following objective:

where , , and are known bounded matrix functions; is the Euclidean norm of a vector x; and below we also use the Euclidean norm of a matrix x. To exclude zero penalties for individual components of the control vector , which would make objective (3) physically meaningless, we impose the common non-degeneracy assumption .

Note that the stochastic system models (1) and (2) are the same as in [30] and are special cases of the models in [29,31]. In contrast, objective (3) includes additional terms. Namely, the objective from [29,30] is obtained at , and the one from [31] is obtained at . This reflects the key terminological difference of the problem under consideration: we consider as an element of the observation system, namely an external drift, rather than as a complex measurement error. Thus, objective (3) may find application in problems of tracking the drift with the state or with the control, which take into account control .

The matrix functions , , , and are bounded: for all . Thus, the existence of a strong solution to (1) is guaranteed for any admissible control . Moreover, we assume that all used time functions , , , , , , , , and are piecewise continuous to ensure that the typical conditions for the existence of solutions to the ordinary differential equations (ODE) obtained below are satisfied.

Our goal is to find an admissible control , which delivers the minimum to the quadratic criterion :

under the assumption of its existence.

3. Separation of Filtering and Control

To solve the problem formulated in Section 2, we need to transform it into a form that reveals two necessary properties. We note that for systems (1) and (2), the solution to the optimal filtering problem is known: the conditional expectation is given by the Wonham filter [33]. First, for the separation, we need a representation of this filter showing that the quality of the optimal filter estimate does not depend on the chosen control law. Second, we need to decompose objective (3) into independent additive terms that separately determine the quality of control and the quality of estimation.

To that end, we propose a change of variables in (1) to eliminate the terms and . Denote by the solution to the equation , which is the matrix exponential . Moreover, denote by the following linear output transform:

By differentiating, we obtain the following:

or

with additional notation , .

Taking into account , for what follows, note the following:

The transformations we made do not affect the solution to the filtering problem, i.e., . This is because the change of variables used to obtain is a linear non-degenerate transformation of . Hence, the optimal filtering estimate does not depend on , and we can use as observations and obtain the same drift estimate regardless of which admissible control law is chosen. Consequently, instead of the estimation problem given the control-dependent observations , we pass to an equivalent estimation problem given the transformed observations , which are described by Equation (5) and do not depend on .
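The control-independence of the transformed observations can be checked numerically. The sketch below assumes, for illustration only, that system (1) has the form dz = (a z + b y + c u) dt + σ dw with constant matrices a, b, c, σ, and discretizes it by the Euler–Maruyama scheme. On the grid, the matrix exponential is replaced by its Euler analog Ψ_k = (I + a h)^(-k), and the accumulated control contribution is subtracted, so that each increment of the transformed observations reduces to Ψ_{k+1}(b y_k h + σ Δw_k). This is one transform of the kind described above, not necessarily the exact form of (4); all names are hypothetical.

```python
import numpy as np


def simulate_observations(a, b, c, sigma, y, u, z0, h, dw):
    """Euler–Maruyama scheme for dz = (a z + b y + c u) dt + sigma dw."""
    z = np.empty((len(u) + 1, len(z0)))
    z[0] = z0
    for k in range(len(u)):
        z[k + 1] = z[k] + (a @ z[k] + b @ y[k] + c @ u[k]) * h + sigma @ dw[k]
    return z


def transformed_observations(a, c, z, u, h):
    """zeta_k = Psi_k z_k - sum_{j<k} Psi_{j+1} c u_j h with Psi_k = (I + a h)^(-k).

    Psi_k is the Euler-grid analog of the matrix exponential exp(-a t_k);
    the increments of zeta equal Psi_{k+1} (b y_k h + sigma dw_k), free of u.
    """
    m = z.shape[1]
    step_inv = np.linalg.inv(np.eye(m) + a * h)
    psi = np.eye(m)
    zeta = np.empty_like(z)
    zeta[0] = z[0]
    control_part = np.zeros(m)
    for k in range(len(u)):
        psi = step_inv @ psi                   # Psi_{k+1}
        control_part += psi @ c @ u[k] * h     # accumulated control contribution
        zeta[k + 1] = psi @ z[k + 1] - control_part
    return zeta
```

Running both functions with the same noise increments and MJP path but two different control sequences yields different observations z and, up to rounding, identical transformed observations.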

Denoting the optimal estimate by , we write the equation for it. The uniform non-degeneracy of , along with that of the matrix exponential [34], legitimizes the use of the Wonham filter [33]:

Replacing variables , , and , we obtain the following:

Taking into account , we arrive at the final representation of the Wonham filter:

where

is the differential of a -adapted standard vector Wiener process. This yields the final observation equation:

where and the final new state equation is as follows:

Furthermore, by denoting the following:

we obtain a control system with complete information:

where both variables and are observable.
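A discrete-time sketch of the filter may help in implementing it. The code below is a minimal Euler discretization of the standard Wonham filter in innovation form, assuming an observation model dz = (a z + b y + c u) dt + σ dw with constant coefficients and the gain (diag(ŷ) - ŷŷᵀ) bᵀ(σσᵀ)^(-1); it works with the original observations rather than the transformed representation derived above. Because the known terms a z and c u are subtracted from the observed increment, the innovation, and hence the estimate, is the same for any feedback law that generates u. Clipping and renormalization keep the estimate on the probability simplex despite discretization errors. The function names, the feedback u = -0.5 z, and all parameter values in `demo` are hypothetical.

```python
import numpy as np


def wonham_filter(dz, z, u, p0, lam, a, b, c, sigma, h):
    """Euler discretization of the Wonham filter for
        dz = (a z + b y + c u) dt + sigma dw,
    where y_t is an MJP on the unit vectors e_1, ..., e_N with intensity matrix lam.
    Returns estimates of P(y_t = e_i | observations) on the time grid.
    """
    inv_ss = np.linalg.inv(sigma @ sigma.T)
    p = np.asarray(p0, dtype=float).copy()
    est = np.empty((len(dz) + 1, len(p)))
    est[0] = p
    for k in range(len(dz)):
        # innovation: observed increment minus its predicted value
        innov = dz[k] - (a @ z[k] + b @ p + c @ u[k]) * h
        gain = (np.diag(p) - np.outer(p, p)) @ b.T @ inv_ss
        p = p + (lam.T @ p) * h + gain @ innov
        p = np.clip(p, 0.0, None)   # discretization may leave the simplex
        p /= p.sum()
        est[k + 1] = p
    return est


def simulate_mjp_grid(lam, p0, h, n_steps, rng):
    """Grid approximation of an MJP: P(i -> j in one step) = lam[i, j] * h."""
    n = lam.shape[0]
    states = np.empty(n_steps + 1, dtype=int)
    states[0] = rng.choice(n, p=p0)
    for k in range(n_steps):
        probs = lam[states[k]] * h
        probs[states[k]] += 1.0
        states[k + 1] = rng.choice(n, p=probs)
    return states


def demo(seed=0, T=20.0, h=1e-3):
    """Hypothetical scalar example: two modes shift the drift by -1 or +1."""
    rng = np.random.default_rng(seed)
    lam = np.array([[-0.5, 0.5], [0.5, -0.5]])
    a, b = np.array([[-1.0]]), np.array([[-1.0, 1.0]])
    c, sigma = np.array([[1.0]]), np.array([[0.2]])
    p0 = np.array([0.5, 0.5])
    n_steps = int(round(T / h))
    states = simulate_mjp_grid(lam, p0, h, n_steps, rng)
    y = np.eye(2)[states]
    z = np.zeros((n_steps + 1, 1))
    u = np.zeros((n_steps, 1))
    dz = np.zeros((n_steps, 1))
    for k in range(n_steps):
        u[k] = -0.5 * z[k]                     # any known feedback law would do
        drift = a @ z[k] + b @ y[k] + c @ u[k]
        dz[k] = drift * h + sigma @ rng.normal(size=1) * np.sqrt(h)
        z[k + 1] = z[k] + dz[k]
    est = wonham_filter(dz, z, u, p0, lam, a, b, c, sigma, h)
    accuracy = np.mean(est.argmax(axis=1) == states)
    return accuracy, est
```

In this example, the most probable mode `est.argmax(axis=1)` typically agrees with the true mode on the vast majority of the grid, with disagreements concentrated shortly after jumps.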

For the final formulation of the equivalent control problem, one needs to change the variables in objective (3). This can be done by using the law of total expectation [35] as follows:

Since the last additive term in the expression for does not depend on but merely characterizes the quality of the optimal filter estimate , it can be excluded from the criterion. Hence, the new objective takes the following form:

The final statement is formulated as follows.

Proposition 1

4. Solution to Control Problem

To find the control for system (9) that is optimal with respect to criterion (3), we use the classical dynamic programming method [18,19].

Denote the following Bellman function:

where . Then, with , the dynamic programming equation is as follows:

where admissible feedback control corresponds to system (9) designations; thus, . Note that this is the same control that appeared in the original statement, since .

The existence of a solution to (11) is a sufficient optimality condition. Moreover, the optimal control is the one that minimizes the corresponding additive term. Hence, under the condition and the assumption that a solution to (11) exists, this minimum is delivered by the following control:

By substituting in (11) and regrouping the additive terms, we obtain the following:

The linear observations (9) and the quadratic objective (10) suggest that a solution to the dynamic programming Equation (13) can be sought in the following form:

which reduces the search for the optimal solution to finding a symmetric matrix function , a vector function , and a scalar . Moreover, the explicit representation of is not necessary for the optimal control; one only needs the derivative . Nevertheless, will be useful in determining the quality of control.

The Bellman function representation (14) can be simplified further by using the fact that the term with the derivative in (13) contains only the multiplier . This suggests that is an affine transform of y:

where matrix . The boundary condition at the same time takes the following form:

Thus, contains two terms: the first is linear in the observations (output variable) z; the second is linear in the state (the state estimate given by the Wonham filter) y. To justify these assumptions, it is necessary to derive , , and such that, under assumptions (14) and (15) about the Bellman function and the affinity of , from (14) is in fact a solution of (13). To that end, we substitute (14) into Equation (13), taking into account that the derivatives of according to (15) are equal to , and also that the following is the case:

After this substitution and minor transformations, grouping the terms at and z, at and at , we obtain equations for , and , respectively:

where the following is the case:

Finally, from (19), we obtain the equation for :

The obtained equations are solved with boundary conditions (16). Here, Equation (18) is a matrix Riccati equation for a square symmetric matrix . The above assumptions regarding the piecewise continuity of the coefficients of this equation and condition are sufficient for the existence and uniqueness of a positive semidefinite solution for every . Indeed, such an equation occurs in the classical linear-quadratic problem, which admits a unique optimal feedback control that is linear with respect to the output , with a gain described by this Riccati equation [36].
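Since Equation (18) belongs to the same class as the Riccati equation of the classical linear-quadratic problem, a backward sweep of the classical equation illustrates the numerical side. The sketch below integrates -dP/dt = AᵀP + PA - PBR⁻¹BᵀP + Q, P(T) = F, with a simple explicit step backward in time and returns the classical feedback gain K = R⁻¹BᵀP, i.e., u = -K z for a criterion with control penalty uᵀRu. It is not Equation (18) itself, whose coefficients are not reproduced here, and it uses an explicit step even though the example in Section 6 reports an implicit Euler scheme; A, B, Q, R, F, and the function name are assumptions for illustration.

```python
import numpy as np


def riccati_backward(A, B, Q, R, F, T, h):
    """Integrate the classical LQ Riccati equation backward in time:
        -dP/dt = A^T P + P A - P B R^{-1} B^T P + Q,   P(T) = F.
    Returns P_k ~ P(k h) for k = 0..N and the gains K_k = R^{-1} B^T P_k.
    """
    n_steps = int(round(T / h))
    R_inv = np.linalg.inv(R)
    P = np.empty((n_steps + 1,) + F.shape)
    P[-1] = F
    for k in range(n_steps, 0, -1):
        Pk = P[k]
        rhs = A.T @ Pk + Pk @ A - Pk @ B @ R_inv @ B.T @ Pk + Q
        Pnext = Pk + h * rhs                 # explicit step from t_k to t_{k-1}
        P[k - 1] = 0.5 * (Pnext + Pnext.T)   # enforce symmetry against rounding
    K = (R_inv @ B.T) @ P                    # broadcasts over the time axis
    return P, K
```

For the double integrator A = [[0, 1], [0, 0]], B = [0, 1]ᵀ, Q = I, R = 1 on a long horizon, P(0) approaches the stationary solution [[√3, 1], [1, √3]] of the algebraic Riccati equation, and K(0) approaches [1, √3].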

Equation (21) is a system of linear ODEs with respect to variable with piecewise continuous coefficients; hence, the existence and uniqueness of a solution is ensured by the usual theorem for linear differential equations [37].

Equation (20) for is a linear partial differential equation of parabolic type, but, unlike the equation for , it cannot be simplified. We assume that this equation has a solution and interpret this assumption as a sufficient condition, in the same way as for the dynamic programming Equation (11) above.

The following statement summarizes the reasoning in the section.

5. Stochastic Representation of Gamma Functional

To implement control (12), one needs the functions and . These can be calculated by any efficient numerical method for solving ODEs; hence, the practical solution of Equations (18) and (21) presents no difficulties. At the same time, the quality of the optimal control is given by the solution of the dynamic programming equation:

Therefore, the functional is also required. An approximate solution to (20) can also be obtained numerically by traditional grid methods, especially since these methods are well studied for parabolic equations. Nevertheless, the growth of the dimensionality of Y may cause certain difficulties. More importantly, Equation (20) comes with only an initial condition defined by (16), more precisely, a terminal condition, and with no boundary condition such as , which numerical grid methods require.

That is why, in our opinion, an alternative approach based on the known relation between solutions of parabolic partial differential equations and stochastic equations is more promising. An example of such a relation is the Kolmogorov equation [38], which connects the solution of the Cauchy problem with a terminal condition for the parabolic equation, on the one hand, and the solution of the related stochastic equation, on the other hand. Here, we need a simpler integral representation of the solution of a parabolic equation, known as the Feynman–Kac formula [39]. The use of such tools for the numerical solution of parabolic equations has been well studied in a more general setting than we need [40].

For differential Equation (20), the Feynman–Kac formula has the following form:

Here, we deliberately use the same notation for two different random processes:

- The random process that generates the terminal condition under the mathematical expectation and passes through the point ;

- The Wonham filter estimate, which replaces the state in observation Equation (9).

This emphasizes the fact that both these processes are described by the same equation and have the same probabilistic characteristics.

For practical applications of (22), we rewrite this relation by adding an auxiliary variable in a differential form:

First, (23) is a stochastic representation of , which explains the meaning of this coefficient. Second, (23) provides a basis for the approximate calculation of this coefficient. Namely, the proposed numerical method for coefficient consists in applying the Monte Carlo method to system (23): one needs a series of sample solutions of this system for different initial conditions in order to obtain an approximate value of as a statistical estimate of the terminal condition in (23).
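The Monte Carlo procedure can be sketched generically. The function below estimates γ(t₀, y₀) = E[∫ f(s, Y_s) ds + g(Y_T) | Y_{t₀} = y₀] for a diffusion dY = drift dt + diffusion dW simulated by the Euler–Maruyama scheme, accumulating the running integral in an auxiliary variable θ in the spirit of (23). Because the coefficients of (20) and (23) are not reproduced here, the drift, the diffusion, the running cost f, and the terminal cost g are user-supplied callables; for (20), they would be taken from the Wonham filter dynamics (9) and the corresponding cost terms. All names are hypothetical.

```python
import numpy as np


def feynman_kac_mc(y0, t0, T, drift, diffusion, running_cost, terminal_cost,
                   h, n_paths, rng):
    """Monte Carlo estimate of
        gamma(t0, y0) = E[ int_{t0}^{T} f(s, Y_s) ds + g(Y_T) | Y_{t0} = y0 ]
    for dY = drift(t, Y) dt + diffusion(t, Y) dW, simulated by Euler–Maruyama.

    drift(t, y) -> (n_paths, d); diffusion(t, y) -> (n_paths, d, k);
    running_cost(t, y) -> (n_paths,); terminal_cost(y) -> (n_paths,).
    Returns the estimate and its standard error.
    """
    n_steps = int(round((T - t0) / h))
    y = np.tile(np.asarray(y0, dtype=float), (n_paths, 1))
    theta = np.zeros(n_paths)          # auxiliary variable: accumulated running cost
    t = t0
    for _ in range(n_steps):
        sig = diffusion(t, y)
        dw = rng.normal(size=(n_paths, sig.shape[2])) * np.sqrt(h)
        theta += running_cost(t, y) * h
        y = y + drift(t, y) * h + np.einsum("pij,pj->pi", sig, dw)
        t += h
    samples = theta + terminal_cost(y)
    return samples.mean(), samples.std(ddof=1) / np.sqrt(n_paths)
```

As a sanity check, for a standard Brownian motion started at y₀ = 0.5 on [0, 1] with f = 1 and g(y) = y², the exact value is 1 + 0.25 + 1 = 2.25, and the estimate should agree with it within a few standard errors.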

6. Assistance of Mechanical Actuator Control

We investigate a controllable mechanical actuator designed for step-wise movement. Its model is described by the following dynamic system:

Here, we have the following:

- is an observable actuator position;

- is an observable actuator velocity;

- is an unobservable external control action, supposed to be an MJP with known transition intensity matrix and initial distribution vector ;

- is an external control noise supposed to be a standard Wiener process;

- is an admissible assisting control.

Thus, in the original notation, we have a three-dimensional external unobservable control corrupted by the one-dimensional Wiener noise , a two-dimensional observable state vector , and a one-dimensional control . The other notations are as follows:

It is easy to verify that, in the case of a noiseless (i.e., ) constant external control action , the actuator coordinate tends to its steady-state value as . Without loss of generality, we suppose that all components of vector are distinct.

In the case of a piecewise constant control action and constant , the mechanical actuator cannot reproduce the expected “ideal” step-wise trajectory due to its inertia and the noise accompanying the external control. Thus, the aim of the assisting control is to improve the actuator performance, and the corresponding criterion has the following form:

where is a dimensionless unit cost of the assisting control.

We perform the calculations for the following parameter values:

Stochastic system (24) is solved by the Euler–Maruyama numerical scheme with step ; meanwhile, Equations (18) and (21) are solved using the implicit Euler’s scheme with the same step.
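Since Equation (21) is a linear ODE system solved backward from its terminal condition, each implicit Euler step amounts to one linear solve. The sketch below assumes a generic form dβ/dt = M(t) β + g(t), β(T) = β_T, where M and g are placeholders for the coefficients of (21), which are not reproduced here. Marching from t_{k+1} to t_k, the right-hand side is evaluated at the unknown value β_k, which gives (I + h M(t_k)) β_k = β_{k+1} - h g(t_k). The function name is hypothetical.

```python
import numpy as np


def implicit_euler_backward(M, g, beta_T, T, h):
    """Implicit Euler sweep, backward in time, for the linear ODE
        d beta/dt = M(t) beta + g(t),   beta(T) = beta_T.
    Each step solves (I + h M(t_k)) beta_k = beta_{k+1} - h g(t_k).
    """
    n_steps = int(round(T / h))
    beta = np.empty((n_steps + 1, len(beta_T)))
    beta[-1] = beta_T
    eye = np.eye(len(beta_T))
    for k in range(n_steps - 1, -1, -1):
        t_k = k * h
        beta[k] = np.linalg.solve(eye + h * M(t_k), beta[k + 1] - h * g(t_k))
    return beta
```

For the scalar case M = 1, g = 1, β(1) = 0, the exact value β(0) = e^(-1) - 1 ≈ -0.632 is reproduced with an error of about 2·10^(-4) for h = 10^(-3).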

Note that the violation of condition in (24) does not prevent the use of the obtained result, since the coordinate in the state is simply the integral of the velocity ; thus, in the filtering problem, one can use only the observation .

It is easy to verify that the actuator is stable if and . In the example, we investigate only the stable case.

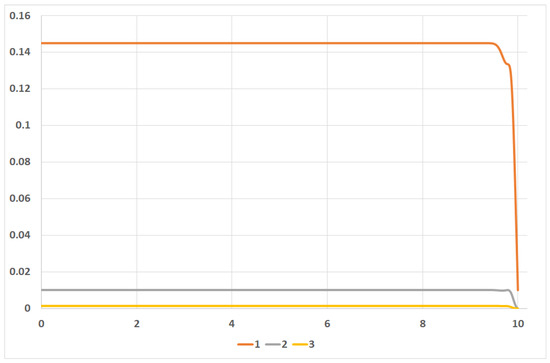

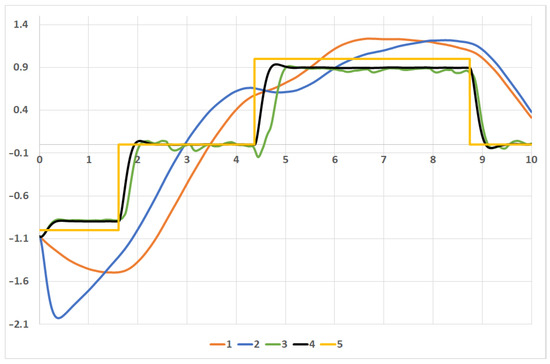

Figure 1.

Optimal control coefficients 1 −, 2 −, and 3 −.

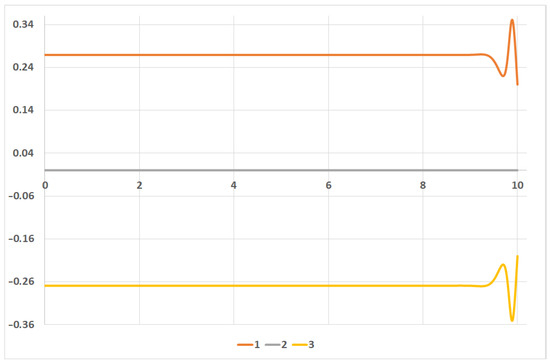

Figure 2.

Optimal control coefficients 1 −, 2 −, and 3 −.

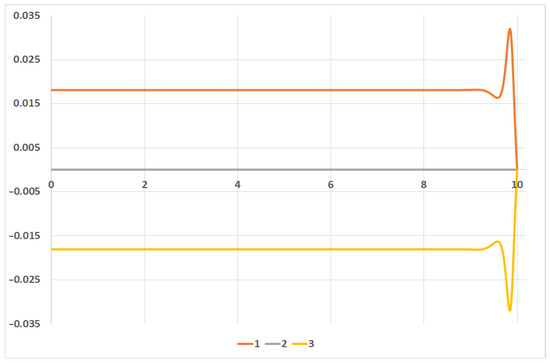

Figure 3.

Optimal control coefficients 1 −, 2 −, and 3 −.

Figure 1 shows the evolution in time of the coefficients , , and ; Figure 2 shows the coefficients , and ; Figure 3 shows the coefficients , and . Here, we denote by the element of matrix x with indices .

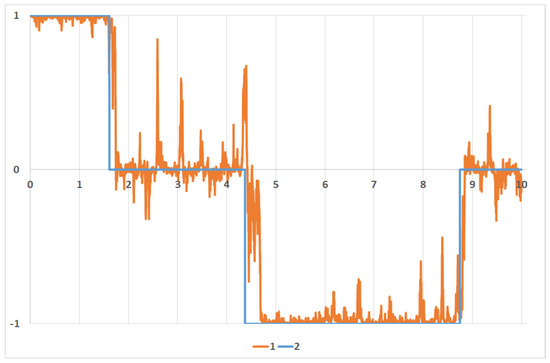

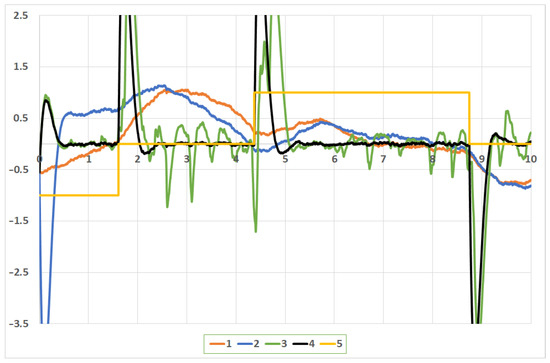

Figure 4 presents the comparison of the unobserved external control term and its estimate calculated by the Wonham filter.

Figure 4.

Trajectory and its estimate: 1 − and 2 −.

Figure 5 contains plots of the actuator coordinate governed by various types of assisting control:

Figure 5.

Actuator coordinate: 1 −, 2 −, 3 −, 4 −, and 5 − target .

- obtained without assisting control, i.e., for ;

- obtained under the trivial assisting control, i.e., for , where the control law is defined in (17), , ;

- the optimal trajectory calculated for the optimal control ;

- the “ideal” trajectory calculated for the control , i.e., the optimal control law defined in (17) with full information , .

The trajectories are compared with the step-wise “target” trajectory.

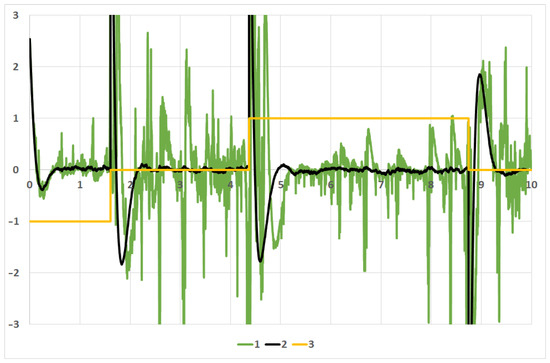

Figure 6 contains plots of the actuator velocity governed by various types of the assisting control:

Figure 6.

Actuator velocity: 1 −, 2 −, 3 −, 4 −, and 5 − target .

- obtained under control ;

- obtained under control ;

- the optimal trajectory calculated for the optimal control ;

- the “ideal” trajectory calculated for the control .

Finally, Figure 7 contains the following:

Figure 7.

Controls: 1 −, 2 −, and 3 − target .

- The optimal control ;

- The “ideal” control .

We also calculate the objective values for various control types: . Meanwhile, and , i.e., the optimal assistance improves the actuator performance significantly. Additionally, we calculate the performance index for the “ideal” control: , i.e., it does not outperform the optimal control .

7. Conclusions

The principal results of the paper are the derived explicit form (17) of the optimal control in the problem at hand and the fact that this optimal solution is indeed a feedback control, in which the key role is played by the solution of the auxiliary optimal filtering problem, i.e., the conditional expectation .

This provided grounds for formulating the main result, namely the separation theorem, which generalizes the results of [29,30,31] to the general criterion (3). The fundamental difference between the classical LQG problem, on the one hand, and the alternatives proposed in the referenced papers and in the present research, on the other hand, is that the transformed problem is not similar to the original one: the martingale representation of the MJP (2) is replaced by a completely different object, namely the Ito stochastic equation with a Wiener process (9). Thus, the key to the solution turned out to be a unique property of the Wonham filter, which represents the MJP estimate given indirect noisy observations in the form of a classical Ito equation with a Wiener process.

The obtained results admit the following interpretations and extensions. First, in the initial partially observable system, the process can be treated as an unobservable system state, while the process plays the role of the controlled observable output. This point of view can be useful for various applications. Second, the obtained results remain valid in general if one complements the initial optimality criterion (3) with the additional terms for optimal output tracking and with and for optimal output/cost control. Third, the MJP can be replaced by any random process with a finite second-order moment described by a nonlinear stochastic differential equation. In this case, the separation theorem still holds, but the coefficient in the optimal control is defined by a partial differential equation, and the optimal filtering estimate is described via the solution to the Kushner–Stratonovich or Duncan–Mortensen–Zakai equation. Fourth, if is an MJP, the diffusion coefficient in (1) can be replaced by the function . In this manner, we extend the class of dynamics to systems with both statistically uncertain piecewise constant drift and diffusion. All separation theorems and optimal control formulae remain valid. However, the solution to the optimal filtering problem is then given not by the original Wonham filter but by its generalization [41,42].

Author Contributions

Conceptualization, A.B. (Andrey Borisov); methodology, A.B. (Alexey Bosov); software, G.M.; validation, A.B. (Andrey Borisov); formal analysis and investigation, A.B. (Alexey Bosov); writing—original draft preparation, A.B. (Alexey Bosov), G.M.; writing—review and editing, A.B. (Alexey Bosov); visualization, G.M.; supervision, A.B. (Andrey Borisov). All authors have read and agreed to the published version of the manuscript.

Funding

The research was supported by the Ministry of Science and Higher Education of the Russian Federation, project No. 075-15-2020-799.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Data sharing is not applicable to this article.

Acknowledgments

The research was carried out using the infrastructure of the Shared Research Facilities «High Performance Computing and Big Data» (CKP «Informatics») of FRC CSC RAS (Moscow).

Conflicts of Interest

The authors declare no conflict of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| Abbreviation | Meaning |
|---|---|
| LQG | Linear quadratic Gaussian |
| MJP | Markov jump process |
| ODE | Ordinary differential equation |
| RHS | Right-hand side |

References

- Athans, M. The Role and Use of the Stochastic Linear-Quadratic-Gaussian Problem in Control System Design. IEEE Trans. Autom. Control 1971, 16, 529–552. [Google Scholar] [CrossRef]

- Cipra, B.A. Engineers look to Kalman filtering for guidance. SIAM News 1993, 26, 8–9. [Google Scholar]

- Johnson, A. LQG applications in the process industries. Chem. Eng. Sci. 1993, 48, 2829–2838. [Google Scholar] [CrossRef]

- Mäkilä, P.; Westerlund, T.; Toivonen, H. Constrained linear quadratic gaussian control with process applications. Automatica 1984, 20, 15–29. [Google Scholar] [CrossRef]

- Westerlund, T. A digital quality control system for an industrial dry process rotary cement kiln. IEEE Trans. Autom. Control 1981, 26, 885–890. [Google Scholar] [CrossRef]

- Ammar, S.; Mabrouk, M.; Vivalda, J.C. Observer and global output feedback stabilisation for some mechanical systems. Int. J. Control 2009, 82, 1070–1081. [Google Scholar] [CrossRef]

- Battilotti, S.; Lanari, L.; Ortega, R. On the Role of Passivity and Output Injection in the Output Feedback Stabilisation Problem: Application to Robot Control. Eur. J. Control 1997, 3, 92–103. [Google Scholar] [CrossRef]

- Wonham, W.M. On the Separation Theorem of Stochastic Control. SIAM J. Control 1968, 6, 312–326. [Google Scholar] [CrossRef]

- Georgiou, T.T.; Lindquist, A. The Separation Principle in Stochastic Control, Redux. IEEE Trans. Autom. Control 2013, 58, 2481–2494. [Google Scholar] [CrossRef]

- Amin, S.; Cárdenas, A.A.; Sastry, S.S. Safe and Secure Networked Control Systems under Denial-of-Service Attacks. In Hybrid Systems: Computation and Control; Majumdar, R., Tabuada, P., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 31–45. [Google Scholar]

- Befekadu, G.K.; Gupta, V.; Antsaklis, P.J. Risk-Sensitive Control Under Markov Modulated Denial-of-Service (DoS) Attack Strategies. IEEE Trans. Autom. Control 2015, 60, 3299–3304. [Google Scholar] [CrossRef]

- Sinopoli, B.; Schenato, L.; Franceschetti, M.; Poolla, K.; Sastry, S. An LQG Optimal Linear Controller for Control Systems with Packet Losses. In Proceedings of the 44th IEEE Conference on Decision and Control, Seville, Spain, 15 December 2005; pp. 458–463. [Google Scholar] [CrossRef] [Green Version]

- Bosov, A.V. Discrete stochastic system linear output control with respect to a quadratic criterion. J. Comput. Syst. Sci. Int. 2016, 55, 349–364. [Google Scholar] [CrossRef]

- Achille, A.; Soatto, S. A Separation Principle for Control in the Age of Deep Learning. Annu. Rev. Control Robot. Auton. Syst. 2018, 1, 287–307. [Google Scholar] [CrossRef] [Green Version]

- Bencze, W.; Franklin, G. A separation principle for hybrid control system design. IEEE Control Syst. Mag. 1995, 15, 80–84. [Google Scholar] [CrossRef]

- Besancon, G.; Battilottib, S.; Lanari, L. A new separation result for a class of quadratic-like systems with application to Euler–Lagrange models. Automatica 2003, 39, 1085–1093. [Google Scholar] [CrossRef]

- Kushner, H.; Dupuis, P.G. Numerical Methods for Stochastic Control Problems in Continuous Time; Stochastic Modelling and Applied Probability Series; Springer: New York, NY, USA, 2001; Volume 24. [Google Scholar] [CrossRef]

- Bertsekas, D.P. Dynamic Programming and Optimal Control; Athena Scientific: Cambridge, MA, USA, 2013. [Google Scholar]

- Fleming, W.H.; Rishel, R.W. Deterministic and Stochastic Optimal Control; Stochastic Modelling and Applied Probability Series; Springer: New York, NY, USA, 1975; Volume 1. [Google Scholar] [CrossRef]

- Stratonovich, R.L. Conditional Markov Processes. Theory Probab. Appl. 1960, 5, 156–178.

- Kushner, H.J. On the differential equations satisfied by conditional probability densities of Markov processes with applications. J. Soc. Ind. Appl. Math. Ser. A Control 1964, 2, 106–119.

- Mortensen, R.E. Stochastic Optimal Control with Noisy Observations. Int. J. Control 1966, 4, 455–464.

- Duncan, T.E. On the Absolute Continuity of Measures. Ann. Math. Stat. 1970, 41, 30–38.

- Zakai, M. On the optimal filtering of diffusion processes. Z. Wahrsch. Verw. Geb. 1969, 11, 230–243.

- Davis, M.H.A.; Varaiya, P. Dynamic Programming Conditions for Partially Observable Stochastic Systems. SIAM J. Control 1973, 11, 226–261.

- Beneš, V.; Karatzas, I. On the Relation of Zakai’s and Mortensen’s Equations. SIAM J. Control Optim. 1983, 21, 472–489.

- Bensoussan, A. Stochastic Control of Partially Observable Systems; Cambridge University Press: Cambridge, UK, 1992.

- Miller, B.M.; Avrachenkov, K.E.; Stepanyan, K.V.; Miller, G.B. Flow Control as a Stochastic Optimal Control Problem with Incomplete Information. Probl. Inf. Transm. 2005, 41, 150–170.

- Beneš, V. Quadratic Approximation by Linear Systems Controlled From Partial Observations. In Stochastic Analysis; Mayer-Wolf, E., Merzbach, E., Shwartz, A., Eds.; Academic Press: Cambridge, MA, USA, 1991; pp. 39–50.

- Helmes, K.; Rishel, R. The solution of a partially observed stochastic optimal control problem in terms of predicted miss. IEEE Trans. Autom. Control 1992, 37, 1462–1464.

- Rishel, R. A Strong Separation Principle for Stochastic Control Systems Driven by a Hidden Markov Model. SIAM J. Control Optim. 1994, 32, 1008–1020.

- Wonham, W.M. Some Applications of Stochastic Differential Equations to Optimal Nonlinear Filtering. J. Soc. Ind. Appl. Math. Ser. A Control 1964, 2, 347–369.

- Elliott, R.J.; Aggoun, L.; Moore, J.B. Hidden Markov Models. Estimation and Control; Stochastic Modelling and Applied Probability; Springer: New York, NY, USA, 1995.

- Bhatia, R. Matrix Analysis; Graduate Texts in Mathematics; Springer: New York, NY, USA, 1997.

- Shiryaev, A.N. Probability; Graduate Texts in Mathematics; Springer: New York, NY, USA, 1996.

- Davis, M. Linear Estimation and Stochastic Control; A Halsted Press Book; Chapman and Hall: London, UK, 1977.

- Hurewicz, W. Lectures on Ordinary Differential Equations; Dover Phoenix Editions; Dover Publications, Incorporated: New York, NY, USA, 2002.

- Gihman, I.I.; Skorohod, A.V. The Theory of Stochastic Processes III; Springer: New York, NY, USA, 1979.

- Oksendal, B. Stochastic Differential Equations: An Introduction with Applications; Universitext; Springer: Berlin/Heidelberg, Germany, 2003.

- Fahim, A.; Touzi, N.; Warin, X. A probabilistic numerical method for fully nonlinear parabolic PDEs. Ann. Appl. Probab. 2011, 21, 1322–1364.

- Borisov, A.; Sokolov, I. Optimal Filtering of Markov Jump Processes Given Observations with State-Dependent Noises: Exact Solution and Stable Numerical Schemes. Mathematics 2020, 8, 506.

- Borisov, A.; Bosov, A.; Miller, G.; Sokolov, I. Partial Diffusion Markov Model of Heterogeneous TCP Link: Optimization with Incomplete Information. Mathematics 2021, 9, 1632.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).