Abstract

A math word problem (MWP) comprises mathematical logic, numbers, and natural language. To solve such problems, a solver model requires an understanding of language and the ability to reason. Since the 1960s, research on models that automatically solve mathematical problems has been continuously conducted, and numerous methods and datasets have been published. However, the published datasets in Korean are insufficient. In this study, we propose a Korean data generator for the first time to address this issue. The proposed data generator comprises problem types and data variations. It has 4 problem types and 42 subtypes. The data variations fall into four categories, which add robustness to the model. In total, 210,311 pieces of data were used for the experiment, of which 210,000 data points were generated. The training dataset had 150,000 data points, and the validation and test datasets had 30,000 data points each. Furthermore, 311 problems were sourced from commercially available books on mathematical problems. We used these problems to evaluate the validity of our data generator on actual math word problems. The experiments confirm that models developed using the proposed data generator can be applied to real data. The proposed generator can be used to solve Korean MWPs in the field of education and the service industry, as well as serve as a basis for future research in this field.

Keywords:

math word problem; natural language processing; Korean data generator; Transformer; machine learning

MSC:

68T50; 03B65; 91F20

1. Introduction

Advances in deep learning have significantly improved the performance of natural language processing (NLP) in comparison with traditional rule-based and statistics-based methods. The development of recurrent neural networks (RNNs) initiated the processing of time-series data, such as sentences, via neural structures. In [1], a long short-term memory (LSTM) unit was proposed, which adds a memory cell to the RNN to alleviate the long-term dependency problem that occurs when the input data is lengthened. In [2], a gated recurrent unit (GRU) was implemented, which simplified the structure of the LSTM and, subsequently, reduced the parameter size while maintaining the performance. The aforementioned studies have established a solid position in the sequence-to-sequence (seq2seq) framework, which comprises an encoder–decoder structure that maps an input sequence to an output sequence in a different domain [3]. Among several studies on sequence modeling, an attention mechanism was proposed [4,5]. More specifically, the vanilla seq2seq model converts the input into a single context vector of a fixed size during encoding. However, including all the information without omission is challenging. Instead of using only a single fixed-length vector, the attention mechanism additionally employs an attention vector. The attention vector is obtained such that whenever the decoder predicts an output word, it refers to the input associated with that word in the encoder. Owing to the attention vector, each word can acquire more meaningful contextual information. Inspired by the attention mechanism, the Transformer architecture was proposed in [6], which comprises an encoder–decoder structure designed solely using attention mechanisms, without an RNN-based network. The architecture trained quickly and transformed the models commonly used in machine translation.
Recently, large-scale language models [7,8] based on the Transformer structure have emerged and have various applications, such as chatbots [9], translation [10], and text-to-image conversion [11,12].

Designing a math word problem (MWP) solver, where MWPs refer to problems involving mathematical logic, numbers, and natural language, is another field that has been attracting attention owing to advances in artificial intelligence. This task has been continuously addressed since the 1960s [13,14]. When the MWP solver receives a question as input, it interprets the given situation and extracts the necessary information from the sentence. Based on this information, the solver derives a new piece of information: an equation. For this task to be carried out smoothly, the model must be able to acquire various kinds of domain knowledge, as humans do (e.g., the linguistic domain: dozen = 12, and the geometric domain: circle area = radius × radius × pi). Moreover, it should also be able to use learned knowledge to understand the context and infer logical expressions. This task primarily requires an understanding of natural language and reasoning skills. Owing to these aspects, the MWP task has recently been reported to be more suitable than the Turing test for evaluating the intelligence of a model [15].

According to a comprehensive survey on MWPs [16], this task tends to be primarily conducted in English and Chinese, which is also reflected in the published datasets. Meanwhile, research on Korean MWPs remains scarce. The reason for this may be the absence of a Korean dataset. To overcome this problem, a previous Korean study [17] adopted a method for translating datasets containing English MWPs. In this case, word replacement is required during translation. Because some words in the dataset reflect cultural differences (e.g., proper nouns and U.S. customary units), they do not fit the Korean context. Additionally, following this process for all data is inefficient. Therefore, in this study, we propose a data generator instead of translating the datasets. We trained a Korean arithmetic problem solver using machine translation model structures and experimentally evaluated the validity of the data generator by measuring the performance of the solver.

The remainder of this paper is organized as follows. Section 2 provides an overview of the mathematical problem-solving model based on traditional machine learning algorithms and modern approaches. Section 3 presents the problem types and examples, variations that apply to the data, rules for the data, and components of the generated data. Section 4 describes the solver structure and training methods considered in this study. In Section 5, we present the experimental results and discussion. Lastly, Section 6 presents the conclusions of the study and directions for future research.

2. Related Work

The previous research can be broadly divided into three categories:

- A rule-based system is a method to derive an expression by matching the text of the problem to a manually created rule and schema pattern. Ref. [18] used four predefined schemas: change-in, change-out, combine, and compare. The text was transformed into suggested propositions, and the answer was obtained via simple reasoning. Furthermore, Ref. [19] developed a system that can solve multistep arithmetic problems by dividing the schema of [18] in more detail.

- A statistic-based method employs traditional machine learning to identify objects, variables, and operators within the text of a given problem, and the required answer is derived by adopting logical reasoning procedures. Ref. [20] used three classifiers to select problem-solving elements within a sentence. More specifically, the quantity pair classifier extracts the quantity associated with the derivation of an answer. The operator classifier then selects the operator with the highest probability for a given problem from among the basic operators (e.g., {+, −, ×, and /}). The order classifier is used for problems that require an operator (i.e., subtraction and division) related to the order of the operands. Ref. [21] proposed a logic template called Formula that analyzes text and selects key elements for equation inference to solve multistep math problems. The given problem is identified as the most probable equation using a log-linear model and converted into an arithmetic expression.

These approaches are influenced by predefined data such as annotations for mathematical reasoning; therefore, we cannot obtain satisfactory results if the data is insufficient. Moreover, working with large datasets is time-consuming and expensive.

- Deep learning has attracted increasing attention owing to the availability of big data and the development of hardware and algorithms. The primary advantage of deep learning is that it can effectively learn the features of large datasets without the need for human intervention. In [22], the Deep Neural Solver was proposed, which introduced deep learning solvers using seq2seq structures and equation templates. Subsequently, this influenced the emergence of various solvers with seq2seq structures [23,24]. Thereafter, solvers with other structures emerged with the advent of the Transformer. Ref. [25] used a Transformer to analyze the prefix, infix, and postfix notations, which resulted in enhanced performance when deriving arithmetic expressions. Additionally, Ref. [26] proposed an equation generation model that yielded mathematical expressions from the problem text without equation templates using expression tokens and operand-context pointers.

In addition to these methods, datasets have been proposed to support more sophisticated MWP solvers. Some of the actively used datasets are as follows. Illinois (IL) Data [27] is a dataset composed of 562 items collected from k5learning.com and dadworksheets.com, with one-operation and single-step problems. Problems requiring domain knowledge (e.g., pineapple is a fruit and two weeks comprise 14 days) are removed. The Common Core (CC) dataset [27] contains 600 data points that were harvested from commoncoresheets.com and comprises multi-operator and multi-step problems. The dataset does not contain irrelevant quantities in the problem text. Math word problems (MAWPS) [28] consists of 2373 one-unknown variable arithmetic problems, and this dataset combines datasets published in previous studies [27,29,30,31]. The Academia Sinica Diverse MWP Dataset (ASDiv-A) [32] has 1218 problems with annotations for problem types, grade levels, and equations. Math23K [22] was constructed by crawling several online educational websites. This is a large Chinese dataset comprising 23,161 one-variable linear mathematical word problems. The hybrid math word problems dataset (HMWP) [33] is another Chinese dataset that consists of multi-unknown variable problems requiring non-linear equations. The aforementioned datasets are in English or Chinese and are therefore not suitable for the Korean MWP task.

3. Data Generator

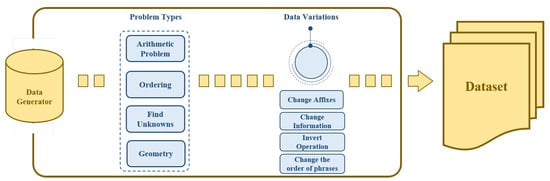

This section introduces the Korean math problem generator. An outline of the proposed generator, which has four problem types tailored to the elementary curriculum level, is depicted in Figure 1. Each of these types has subtypes, and the problems are generated for a total of 42 subtypes. The main types of problems and descriptions of the examples are covered in Section 3.1. During the training process, we applied variations to the data to avoid a scenario in which the model derives an answer by simply memorizing the sentence structure of the problem type. Additionally, this enabled the model to handle agglutinative expressions in Korean. Section 3.2 presents these variations. Section 3.3 describes the equation templates used in the previous studies on MWPs and shows the components of the generated math problem data. Finally, Section 3.4 describes the rules for writing Korean math problems.

Figure 1.

Outline of data generator.

3.1. Types of Problems

The data generator has four types of problems: arithmetic, ordering, finding unknowns, and geometry. The arithmetic problems involve finding an arithmetic expression and the desired answer for a specific situation. Ordering involves answering questions regarding the position or rank of objects in a queue; this is similar to the arithmetic problem but additionally requires an understanding of the sequential situation. Finding unknowns involves either identifying a value that satisfies a condition for a given equation filled with unknowns, or correcting the result of an arithmetic error and returning the correct result according to the original procedure. Geometry involves finding the area, perimeter, or side length of a given geometric figure. Other studies [34,35,36] presented geometric figures as images. However, as the focus of our study was on the linguistic abilities of the model, we only used sentences.

Table 1, Table 2, Table 3 and Table 4 present examples of problems. The number in parentheses indicates the number of subtypes of a given type. A total of 42 subtypes are present.

Table 1.

Examples of the arithmetic problem type.

Table 2.

Examples of the ordering type.

Table 3.

Examples of the finding unknowns type.

Table 4.

Examples of the geometry type.

3.2. Data Variations

In MWPs, questions are presented in different forms even for the same problem type. An agglutinative language is a language in which words are formed by adding prefixes, suffixes, and other morphemes to roots. As Korean is agglutinative, additional linguistic expressions can be produced by changing the suffix attached to a root in the problem text. To develop a robust MWP solver, the model must learn as many sentence forms as possible. Therefore, we apply variations when generating problems, such that the model can capture many phrases. The variation method can be classified into four types: alternate affixes, change information, replace operator, and change the order of a phrase. The alternate affixes type changes the affixes of the root, such that the model learns various phrasings. The change information type replaces a proper noun or object, which is the information provided in the problem. The replace operator type replaces a word that cues one operator with a word that cues another operator. These three variations guide the model to achieve proper reasoning without being constrained to the specific information of a given question. The change the order of a phrase type swaps the order of the sentences. This prevents the model from merely memorizing the surface structure of the problem. In addition to these techniques, we used the random.seed function from Python in the generator so that operands and variations do not appear in a fixed pattern. When generating a dataset, the random numbers produced from its uniquely assigned seed value select the variations. As a result, each dataset receives a different mix of variations. Examples of the data variations are listed in Table 5.
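The seeded selection of variations described above can be sketched as follows. This is a minimal illustration only: the word lists are hypothetical English stand-ins for the Korean variation tables, the sentence pattern is invented for the example, and `random.Random(seed)` is used in place of the module-level `random.seed` so that each dataset has its own generator.

```python
import random

# Hypothetical English stand-ins for the Korean variation tables.
NAMES = ["Minsu", "Jiwoo", "Yuna"]
OBJECTS = ["apples", "pencils", "marbles"]
OPERATOR_WORDS = {"+": ["gets", "receives"], "-": ["gives away", "loses"]}


def generate_problem(rng):
    """Build one toy problem, picking each variation at random."""
    name = rng.choice(NAMES)                 # change information (proper noun)
    obj = rng.choice(OBJECTS)                # change information (object)
    op = rng.choice(sorted(OPERATOR_WORDS))  # replace operator
    verb = rng.choice(OPERATOR_WORDS[op])    # alternate wording for that operator
    a, b = rng.randint(10, 99), rng.randint(1, 9)
    facts = f"{name} has {a} {obj} and then {verb} {b}."
    ask = f"How many {obj} does {name} have now?"
    # change the order of a phrase: the question may come first or last
    question = f"{facts} {ask}" if rng.random() < 0.5 else f"{ask} {facts}"
    answer = a + b if op == "+" else a - b
    return {"question": question, "equation": f"{a} {op} {b}", "answer": answer}


def generate_dataset(seed, size):
    """Generate `size` problems from a generator seeded with `seed`."""
    rng = random.Random(seed)
    return [generate_problem(rng) for _ in range(size)]
```

Calling `generate_dataset` twice with the same seed reproduces the same problems, while assigning a different seed to the training, validation, and test sets gives each of them its own sequence of variations.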

Table 5.

Types and examples of variations. Colored text represents changes via variation.

3.3. Components of the Generated Data

The main approach in previous studies was to use an equation template. A template is an abstract form of an equation in which the types and positions of the operators are fixed and only the operands differ depending on the question. More specifically, if the equation expressed by the problem adds two given numbers, it is represented by a generalized form such as $X = n_1 + n_2$. In this approach, a template suitable for the problem is first determined via classification to solve a given mathematical problem [37]. Subsequently, numerical information is extracted from the problem text and the template is populated. This enables the model to easily draw mathematical inferences. However, when no suitable predefined template exists for a problem, prediction accuracy is low. By contrast, Ref. [26] proposed a template-independent equation generation model that is trained to generate equations by extracting equation-related information from the problem text. We adopted this approach to avoid the template sparsity issue. Accordingly, our generated data comprises questions, equations, and answers.
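To make the notion of an equation template concrete, the following sketch abstracts the operands of an equation into numbered slots and fills them back in. The function names and the sample equations are hypothetical and serve only as an illustration; this is not the procedure of [37].

```python
import re


def to_template(equation):
    """Replace each number with a slot n1, n2, ... and return (template, operands)."""
    operands = []

    def slot(match):
        operands.append(match.group())
        return f"n{len(operands)}"

    template = re.sub(r"\d+(?:\.\d+)?", slot, equation)
    return template, operands


def fill_template(template, operands):
    """Populate the slots of a template with operands extracted from a problem."""
    return re.sub(r"n(\d+)", lambda m: operands[int(m.group(1)) - 1], template)


# Two equations with different operands share one template.
template_a, _ = to_template("X = 3 + 5")
template_b, numbers_b = to_template("X = 12 + 7")
```

Here both equations map to the template `X = n1 + n2`. A template-based solver can only answer problems whose template it has seen, which is the sparsity issue that the template-independent approach avoids.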

3.4. Rules of Data

The problem text consists of Korean text, letters of the Latin alphabet, Arabic numerals, units of the International System of Units (SI), and punctuation marks. In particular, punctuation marks only include those mentioned in the Hangeul Spelling Appendix. If uppercase letters of the alphabet are listed without punctuation marks or spaces, each letter denotes one digit (see Table 3, Finding Unknowns Example 1). Words expressing different units, such as km, m, cm, and kg, are included in the question text and can be expressed in lowercase English or Korean (see Table 4, Geometry Examples 1 and 2). This is one of the methods for the model to learn various linguistic expressions. The vocabulary level and the difficulty of the problems are limited to the Korean elementary curriculum. The equation uses the basic operators {+, −, /, ×} and the Python operators {//, %}. Only one correct answer exists for each question. Even if units and names are mentioned in the question, only numbers are specified in the correct answers, which are written as positive rational numbers. When a decimal answer is required, it is rounded to three decimal places.
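The equation rules above can be illustrated with a small evaluator. This is a sketch under stated assumptions: the helper name `evaluate` is hypothetical, the typographic symbols × and − are assumed to map to Python's `*` and `-`, and the expression is parsed with the standard `ast` module instead of being passed to `eval`.

```python
import ast
import operator

# Operators allowed by the data rules: + - / x and Python's // and %.
_BINARY = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.FloorDiv: operator.floordiv,
    ast.Mod: operator.mod,
}


def evaluate(equation, ndigits=3):
    """Return the value of an equation rounded to `ndigits`, or None if it is invalid."""

    def walk(node):
        if isinstance(node, ast.Expression):
            return walk(node.body)
        if (isinstance(node, ast.Constant)
                and isinstance(node.value, (int, float))
                and not isinstance(node.value, bool)):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY:
            return _BINARY[type(node.op)](walk(node.left), walk(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -walk(node.operand)
        raise ValueError("unsupported expression")

    # Assumed normalization of typographic operator symbols.
    normalized = equation.replace("×", "*").replace("−", "-")
    try:
        value = walk(ast.parse(normalized, mode="eval"))
    except (SyntaxError, ValueError, ZeroDivisionError):
        return None
    return round(value, ndigits)
```

For example, `evaluate("7 // 2")` gives the quotient 3 and `evaluate("7 % 2")` gives the remainder 1, while a malformed string such as `"3 +"` returns `None` instead of raising an exception.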

4. Materials and Methods

In this section, we describe the solver structure and training methods. We consider that extracting keywords from a given problem text to generate mathematical formulae is equivalent to translating from natural language to mathematical formulae. Therefore, we adopted the model used for machine translation as the structure of the solver. Section 4.1 describes the machine translation framework that we adopted. Section 4.2 provides the hyperparameters, optimizer, and the number of epochs applied to these models. Section 4.3 presents a word embedding method that was applied to the solver to improve its ability to understand the meanings of words and improve accuracy.

4.1. Architecture

4.1.1. Vanilla Seq2seq

Seq2seq is a basic model in machine translation and comprises an encoder and a decoder module. The encoder compresses the information in the input sequence into a vector called a context vector. The decoder takes the context vector as its initial hidden state and generates a sequential output. The encoder and decoder each consist of an RNN-based system. In our experiments, we used GRU cells instead of vanilla RNN cells.

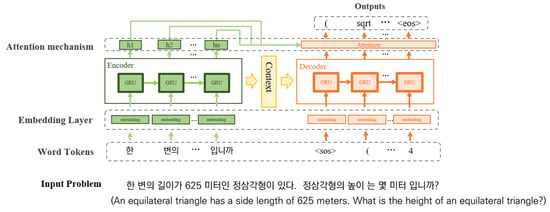

4.1.2. Seq2Seq with Attention Mechanism

Vanilla Seq2seq has two drawbacks. The first is gradient vanishing, which is a chronic problem of RNN systems. The second is the loss of input sequence information caused by compressing all information into a fixed-size vector. These result in a decrease in prediction accuracy as the input sentence increases in length. The attention mechanism is a method for tackling this issue. The basic idea is that for each time step the decoder predicts the output word, it once again consults the entire input sentence from the encoder. However, instead of referring to the entire input sentence at the same rate, the decoder pays close attention to the portion of the input word that is connected to the word that will be predicted at that instant. The structure of the seq2seq with the attention model that we considered in our experiment is similar to the aforementioned vanilla structure. However, the difference is that the attention mechanism is added to the decoder. Figure 2 illustrates this structure. The attention equation is expressed as follows [5]:

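$$\mathrm{score}(h_t, \bar{h}_s) = h_t^{\top} W_a \bar{h}_s$$

$$\alpha_t(s) = \frac{\exp\left(\mathrm{score}(h_t, \bar{h}_s)\right)}{\sum_{s'} \exp\left(\mathrm{score}(h_t, \bar{h}_{s'})\right)}$$

$$c_t = \sum_{s} \alpha_t(s)\, \bar{h}_s$$

$$\tilde{h}_t = \tanh\left(W_c\, [c_t; h_t]\right)$$

where $\mathrm{score}$ represents the attention score function that calculates the similarity of two given vectors, $h_t$ represents the hidden state of the decoder at each time step $t$, and $\bar{h}_s$ denotes the hidden state of the encoder at each word $s$. $W_a$ and $W_c$ represent the model parameters, which are trainable weights. Moreover, $\alpha_t$ represents the attention weight, which is the softmax over all scores obtained at time $t$. $c_t$ is the encoder context vector for time $t$, which is obtained as the sum of the encoder word representations weighted by the attention weights, and $\tilde{h}_t$ represents the attentional hidden state, obtained from the context vector and the hidden state of the decoder, from which the output word is predicted.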
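One decoder step of this attention computation can be sketched in plain Python. The sketch assumes the multiplicative ("general") score of [5], in which the decoder state is compared with each encoder state through a weight matrix; the function and variable names, the identity score matrix, and the toy vectors are hypothetical choices made for illustration.

```python
import math


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def attention_step(h_t, encoder_states, W_a, W_c):
    """Return (attention weights, context vector, attentional hidden state)."""
    # Score each encoder state against the current decoder state.
    scores = [dot(h_t, matvec(W_a, h_s)) for h_s in encoder_states]
    # Softmax over the scores (shifted by the maximum for numerical stability).
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    weights = [e / total for e in exps]
    # Context vector: attention-weighted sum of the encoder states.
    dim = len(encoder_states[0])
    context = [sum(a * h_s[i] for a, h_s in zip(weights, encoder_states))
               for i in range(dim)]
    # Combine the context with the decoder state to form the output representation.
    h_tilde = [math.tanh(x) for x in matvec(W_c, context + h_t)]
    return weights, context, h_tilde


# Toy example: two encoder states, identity score matrix, arbitrary W_c.
identity = [[1.0, 0.0], [0.0, 1.0]]
W_c = [[0.5, 0.0, 0.5, 0.0], [0.0, 0.5, 0.0, 0.5]]
weights, context, h_tilde = attention_step(
    [1.0, 0.0], [[1.0, 0.0], [0.0, 1.0]], identity, W_c)
```

In this toy case the first encoder state is aligned with the decoder state, so it receives the larger attention weight and dominates the context vector.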

Figure 2.

Structure of the seq2seq with the attention mechanism. The green square h represents the hidden state of each word acquired via the encoder.

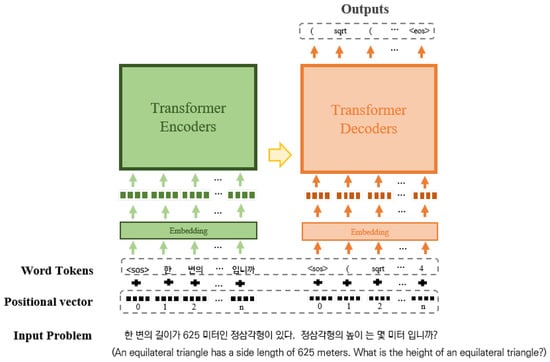

4.1.3. Transformer

The attention mechanism alleviates the long-term dependency problem; however, the problem of sequential processing remains. The Transformer constructs an encoder–decoder structure using only attention mechanisms, removing the RNN system from the existing structure. The structure is depicted in Figure 3. The Transformer does not receive and process data sequentially but receives an entire sequence at once and processes it using attention. This approach accelerates the computation of the model because it is easy to parallelize and therefore relatively easy to train.

Figure 3.

Structure of the Transformer model. The encoder of the Transformer delivers the semantic information of the input sentence obtained through self-attention and multi-head attention to the decoder.

Representative technologies used by a Transformer to replace RNNs are as follows: self-attention, multi-head attention, and positional encoding. Self-attention is a method of calculating the relevance of words appearing in an input sentence and reflecting these on the network. This technology generates query, key, and value vectors from the vectors of each word and subsequently uses these to calculate the attention score of each word. The advantage of self-attention is that it can calculate the association between input words and is not affected by the long-term dependency problem because it can connect the words directly without sequential processing. Multi-head attention is a method of dividing self-attention into parallel head units. Each head calculates self-attention for the word, and the values obtained from the heads are then concatenated to represent the word. This allows the representation to reflect various kinds of information about the word. Positional encoding is a technology for reflecting the positional information of each word. A Transformer does not receive sequences sequentially; therefore, the context information indicated by the word order cannot be grasped. To avoid this issue, a Transformer obtains the position information via a periodic function and adds it to the word vector to represent the word order.
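The periodic positional encoding mentioned above can be sketched as follows. The sketch assumes the sine and cosine formulation of the original Transformer [6] with a base of 10000; the function names are hypothetical, and the settings actually used in the experiments are not stated in this section.

```python
import math


def positional_encoding(max_len, d_model):
    """Return a max_len x d_model table of sinusoidal position vectors."""
    table = [[0.0] * d_model for _ in range(max_len)]
    for pos in range(max_len):
        for i in range(0, d_model, 2):
            # Each pair of dimensions shares one frequency.
            angle = pos / (10000 ** (i / d_model))
            table[pos][i] = math.sin(angle)       # even dimension
            if i + 1 < d_model:
                table[pos][i + 1] = math.cos(angle)  # odd dimension
    return table


def add_position(word_vectors, table):
    """Add the position vector to each word vector of a sentence."""
    return [[x + p for x, p in zip(vec, table[pos])]
            for pos, vec in enumerate(word_vectors)]


pe = positional_encoding(max_len=3, d_model=4)
encoded = add_position([[0.1, 0.2, 0.3, 0.4], [0.1, 0.2, 0.3, 0.4]], pe)
```

The two identical word vectors in the example receive different encodings because they occupy different positions, which is how word order becomes visible to a model that reads the whole sequence at once.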

4.2. Hyperparameters of Models

Table 6 lists the hyperparameters and values that each model should consider. Furthermore, Adam was used as the optimizer, and early stopping, which stops training if the validation loss does not decrease for 20 epochs, was employed to prevent overfitting.
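The early stopping rule can be sketched as a loop over per-epoch validation losses. The function below is a hypothetical illustration that takes a precomputed list of losses in place of a real training loop; the patience of 20 epochs and the cap of 300 epochs are the values mentioned in the text.

```python
def run_with_early_stopping(val_losses, patience=20, max_epochs=300):
    """Return (epoch at which training stops, epoch with the best validation loss)."""
    best_loss = float("inf")
    best_epoch = 0
    last_epoch = 0
    for epoch, loss in enumerate(val_losses[:max_epochs], start=1):
        last_epoch = epoch
        if loss < best_loss:
            # Validation loss improved: remember it and reset the wait.
            best_loss, best_epoch = loss, epoch
        elif epoch - best_epoch >= patience:
            # No improvement for `patience` consecutive epochs: stop.
            return epoch, best_epoch
    return last_epoch, best_epoch


# Synthetic losses: improve for 46 epochs, then stay flat.
losses = [1.0 / e for e in range(1, 47)] + [1.0] * 100
stopped_at, best_at = run_with_early_stopping(losses)
```

With these synthetic losses the best epoch is 46 and training stops at epoch 66, that is, 20 epochs after the last improvement.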

Table 6.

Hyperparameters and values to consider for each model. Scratch indicates that the model is trained from scratch without an embedding algorithm.

4.3. Word Embedding

In NLP, text must be properly converted to numbers such that the computer can understand it. Research to efficiently capture the meaning of words is being actively conducted because the performance of downstream tasks depends on the representation of words. We used representative word-embedding algorithms that have been studied thus far to capture the meaning of the words in the problem text. The three algorithms adopted are as follows:

- Skip-gram with negative sampling (SGNS) is a method of predicting the context words, which are the words surrounding the target word. Vanilla skip-gram is very inefficient because it updates all word vectors during backpropagation. Therefore, negative sampling was proposed as a method to increase computational efficiency. First, this method randomly selects words to form a subset that is substantially smaller than the entire vocabulary. Subsequently, it performs binary classification (positive or negative) of whether each word in the subset is near the target word. This method updates only the word vectors that belong to the subset. In this manner, SGNS can perform vector computation efficiently [38].

- Global vectors for word representation (GloVe) is a method to compensate for the shortcomings of Word2Vec and latent semantic analysis (LSA). Because LSA is a count-based method, comprehensive statistical information can be obtained about words that appear together with a specific word. However, the performance of LSA is poor in the analogy task. By contrast, Word2Vec outperforms LSA in this task but cannot reflect statistical information because Word2Vec can only see context words. The GloVe is a method for using both embedding mechanisms [39].

- FastText uses an embedding learning mechanism identical to that of Word2Vec. However, Word2Vec treats words as indivisible units, whereas FastText treats each word as the sum of character unit n-grams (e.g., tri-gram, apple = app, ppl, ple). Owing to this characteristic, FastText has the advantage of being able to estimate the embedding of a word even if out-of-vocabulary problems or typos are present [40].
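The character n-gram decomposition used by FastText can be sketched as follows. The helper names and the toy vector table are hypothetical. The `boundaries` option reflects the fact that FastText wraps each word in `<` and `>` markers before extracting n-grams, whereas the example in the text omits them for brevity.

```python
def char_ngrams(word, n=3, boundaries=False):
    """Split a word into character n-grams, optionally with < and > boundary markers."""
    token = f"<{word}>" if boundaries else word
    return [token[i:i + n] for i in range(len(token) - n + 1)]


def embed(word, ngram_vectors, dim, n=3):
    """Sum the vectors of the known n-grams of a word; unknown n-grams are skipped."""
    vector = [0.0] * dim
    for gram in char_ngrams(word, n):
        for i, value in enumerate(ngram_vectors.get(gram, [0.0] * dim)):
            vector[i] += value
    return vector


# Toy n-gram table; a misspelled or unseen word still receives a vector.
toy_vectors = {"app": [1.0, 0.0], "ppl": [0.0, 1.0], "ple": [0.5, 0.5]}
typo_vector = embed("appl", toy_vectors, dim=2)
```

Because the misspelling `appl` shares the n-grams `app` and `ppl` with `apple`, it still obtains a meaningful vector, which is how out-of-vocabulary words and typos are handled.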

We trained word vectors with the generated training dataset because pretrained Korean word embeddings in identical situations have not been published. The representation of the trained word vector spanned 256 dimensions, and the embedding vector was applied to the embedding layer of the models.

5. Results and Discussion

We adopted accuracy and the bilingual evaluation understudy (BLEU) score as the evaluation metrics. Accuracy represents the percentage of predicted equations that yield the correct answer. Here, a predicted expression is one that passes through the exception handling of Python without raising a syntax error. The BLEU score measures the similarity between the predicted and correct equations. The BLEU score is computed according to the following [41]:

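$$p_n = \frac{\sum_{\text{n-gram} \in c} C_{\mathrm{clip}}(\text{n-gram})}{\sum_{\text{n-gram} \in c} C(\text{n-gram})}$$

$$BP = \begin{cases} 1 & \text{if } |c| > |r| \\ e^{\,1 - |r|/|c|} & \text{if } |c| \le |r| \end{cases}$$

$$\mathrm{BLEU} = BP \cdot \exp\left(\sum_{n=1}^{N} w_n \log p_n\right)$$

where $p_n$ represents the modified n-gram precision, $C$ indicates the number of occurrences of an n-gram in a given sentence ($C_{\mathrm{clip}}$ clips this count at the number of occurrences in the correct sentence), $c$ denotes the predicted sentence, $r$ denotes the correct sentence, and $N$ indicates the maximum length of the n-gram, which was set to four. Moreover, $w_n$ denotes the weight applied to each n-gram. In this experiment, a weight of 0.25 was applied. Additionally, $BP$ represents the brevity penalty, and its value is determined by the lengths of $c$ and $r$.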
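The BLEU computation can be sketched in plain Python for a single predicted equation and a single correct equation. This is a minimal illustration of the standard definition in [41] without smoothing, so the score is zero whenever any n-gram order has no match; the function names and the whitespace tokenization of the equations are assumptions.

```python
import math
from collections import Counter


def ngram_counts(tokens, n):
    """Count the n-grams of a token list."""
    return Counter(tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1))


def modified_precision(candidate, reference, n):
    """Clipped n-gram matches divided by the number of candidate n-grams."""
    cand, ref = ngram_counts(candidate, n), ngram_counts(reference, n)
    total = sum(cand.values())
    if total == 0:
        return 0.0
    clipped = sum(min(count, ref[gram]) for gram, count in cand.items())
    return clipped / total


def bleu(candidate, reference, max_n=4):
    """Sentence-level BLEU with uniform weights 1/max_n and a brevity penalty."""
    if not candidate:
        return 0.0
    precisions = [modified_precision(candidate, reference, n)
                  for n in range(1, max_n + 1)]
    if min(precisions) == 0.0:
        return 0.0  # no smoothing: any empty n-gram order gives zero
    log_mean = sum((1.0 / max_n) * math.log(p) for p in precisions)
    c, r = len(candidate), len(reference)
    brevity_penalty = 1.0 if c > r else math.exp(1.0 - r / c)
    return brevity_penalty * math.exp(log_mean)


correct = "X = 3 + 5".split()
same = bleu("X = 3 + 5".split(), correct)
reordered = bleu("X = 5 + 3".split(), correct)
```

The identical prediction scores 1, whereas the reordered prediction scores lower even though both equations evaluate to the same value, because BLEU only compares token sequences.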

A total of 210,000 data points were generated, and 150,000 data points constituted the training set. The validation and test datasets contained 30,000 data points each.

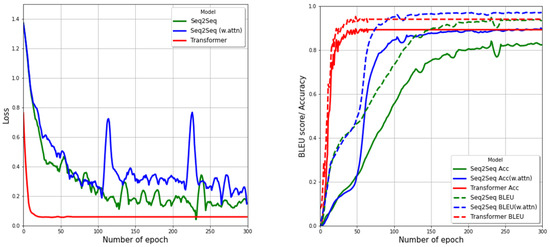

Figure 4 illustrates the losses (left) and performances (right) of the model for the validation dataset. Green indicates the seq2seq model, blue indicates seq2seq with attention, and red indicates the Transformer. In the plot on the left, the Transformer model converges at 46 epochs and displays the fastest training speed by stopping early at 66 epochs, whereas the seq2seq structure-based models completed all 300 epochs. The loss in the seq2seq model with attention tended to be higher than that in the vanilla seq2seq model. However, the loss was not a definite indicator of performance. The performances of each model are shown in the plot on the right. The solid lines represent the accuracy, and the dashed lines represent the BLEU scores. We confirmed that the BLEU score and accuracy of the seq2seq with attention were higher than those of the vanilla seq2seq model. This demonstrates that the attention mechanism focuses on keywords to generate equations from sentences. Moreover, we affirmed that the Transformer achieves an accuracy similar to that of the model with attention applied even with a reduced number of epochs.

Figure 4.

(Left) Losses of the models on the validation dataset. (Right) Accuracy and BLEU scores obtained from the validation dataset of the model.

Table 7 presents the quantitative performance of the models and embedding methods on the validation and test datasets. The performances of the models are almost the same on the two datasets. The Transformer trained from scratch showed the largest performance difference, although this model only differed by 0.49% in BLEU score and 0.29% in accuracy. We assigned each dataset a different random seed value, which means that the model faces different variations in each dataset. Nevertheless, the results were nearly identical, which demonstrates that the model is robust to variations. More specifically, our data generator enables the model to concentrate on the terms that are critical for equation derivation rather than on surface features.

Table 7.

Quantitative performance results obtained using the validation and test datasets for each model and embedding algorithm.

The attention model with FastText embedding achieved the highest BLEU score of over 97%, and the Transformer with GloVe achieved 90.9% accuracy. The Transformer is more accurate than the seq2seq with attention; however, its BLEU score is lower. Table 8 illustrates the reason for this finding.

Table 8.

Equation form that manifests when a Transformer anticipates an equation.

Three equation forms are produced by the Transformer: predicting the correct equation, changing the order, and adding parentheses. Predicting the correct equation refers to the situation in which the predicted and correct equations are identical. Changing the order indicates a prediction in which the order of the numbers differs from that of the correct equation. Adding parentheses represents a prediction that contains parentheses that are not in the correct equation. The Transformer frequently changes the order and adds parentheses. To a human, the equations are identical in both cases; however, the BLEU score, which reflects n-gram overlap, treats them as different, which appears to be the cause of the score gap.

We conducted additional experiments to verify that our data generator is valid for real mathematical problems. The real dataset is composed of 311 problems harvested from a commercially available book on problems. It includes both problem types that were used in training and types that were not, for two reasons. The first is to check whether the model overfits the generated dataset and to evaluate the performance of the solver on real problems. The second is to evaluate how close the predictions of the model are to the correct equation, even when the type is unknown. Table 9 presents the performance of the models on the real-world dataset. In contrast to Table 7, the best performance was obtained when FastText was used as the embedding layer. The Transformer with FastText performed the best, scoring 34.71% in accuracy and 22.32% in BLEU. When this embedding was applied to the seq2seq-based models, the vanilla seq2seq model achieved an accuracy of 16.39% and a BLEU score of 13.52%. In addition, the seq2seq model with attention achieved 20.52% accuracy and a 17.02% BLEU score. Compared with the validation results, the performance drop of the seq2seq-based models is larger than that of the Transformer. These findings suggest that the seq2seq-based models tended to overfit the training data. The outcome of the Transformer also demonstrates that our data generator is valid for solving some of the actual math problems. Table 10 lists several samples of successes and failures of the Transformer using FastText.

Table 9.

Outcomes of the model when applied to real-world data.

Table 10.

Examples of correct and incorrect equations from the Transformer with FastText, which performs the best on the real-world problem dataset.

The examples up to the fourth row were from the test set. To the model, these were familiar types; therefore, a high concordance rate was observed. The following two rows were cases of accurate responses to real-world questions. Even though some discrepancies were present between the expressions, the model provided answers through knowledge learned from the generated data. The last two rows were cases of errors found in the actual dataset. Even in these cases, the solver was able to find the required values to derive the answer within the text. However, this agent had limitations because it did not learn all the types of real-world problems and the contextual meaning of words, which eventually resulted in incorrect arithmetic expressions.

6. Conclusions

This study presented a novel Korean data generator for MWP tasks. The proposed generator consists of problem types and data variations. We primarily dealt with four problem types: arithmetic, ordering, finding unknowns, and geometry. Each type has subtypes, which are the syntactic forms that a problem may take within that category; 42 subtypes were prepared in total. Variations were applied to the subtype problems when generating the dataset, for two reasons. The first is to enable the model to understand the meaning of the root without being confused by the agglutination of Korean. The second is to prevent the model from deriving the answer from the surface structure of the sentence.

We created a total of 210,000 data points via the proposed data generator. The training dataset was composed of 150,000 data points, and the validation and test datasets were allocated 30,000 data points each. As problem solvers, we employed seq2seq, seq2seq with attention, and the Transformer, all of which are often used in the machine translation domain. The FastText, GloVe, and SGNS word-embedding algorithms were employed to help the models understand the meaning of words.
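The 150,000/30,000/30,000 partition described above can be sketched as follows. This is a minimal illustration that assumes a seeded random shuffle before slicing; whether the original split was shuffled or stratified by problem type is not stated here, and the function name `split_dataset` and the placeholder samples are hypothetical.

```python
import random


def split_dataset(samples, n_train=150_000, n_valid=30_000, n_test=30_000, seed=0):
    """Shuffle the samples and slice them into train, validation, and test sets."""
    needed = n_train + n_valid + n_test
    if len(samples) < needed:
        raise ValueError(f"need at least {needed} samples, got {len(samples)}")
    shuffled = list(samples)  # copy so the caller's list is left unchanged
    random.Random(seed).shuffle(shuffled)  # assumption: simple seeded shuffle
    train = shuffled[:n_train]
    valid = shuffled[n_train:n_train + n_valid]
    test = shuffled[n_train + n_valid:needed]
    return train, valid, test


# Placeholder integers stand in for generated (problem, equation) pairs.
generated = list(range(210_000))
train, valid, test = split_dataset(generated)
print(len(train), len(valid), len(test))
```

Fixing the seed keeps the three sets reproducible across runs, which matters when validation and test scores are compared between models.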

The experimental results confirmed that the Transformer-based solver achieved an accuracy of 90.97% on the generated dataset. We performed further experiments to confirm that the trained model could solve actual problems. The real dataset comprises 311 problems collected from a commercially available book on mathematical problems, and it also included types not seen in training for an objective evaluation of the model. In the final result, the Transformer with FastText recorded an accuracy of 34.72%. This performance demonstrated that the trained model can respond to the generated data and, to a limited extent, to real-world problems.

This study can provide training data for models that automatically solve Korean math problems in fields such as education and the service industry. In addition, these data can be used for Korean language learning and for evaluating the intellectual ability of future multilingual language models.

Future Research Directions

We confirmed that the proposed data generator partially reflects real-world data. However, we cannot overlook the difference between the generated and the actual data. In future research, additional problem types and variations are needed to make the model robust to exceptional data that do not belong to the four categories described. Furthermore, an approach is required that uses a large-scale language model based on the Transformer structure to capture rich word expressions and improve the accuracy of the equations.

Research on Korean data generation for MWPs is still in its early stages; however, we expect that this study will serve as a basis for future research.

Author Contributions

Writing—original draft preparation, methodology, and experimental setup, K.K.; supervision and project administration, C.C. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the research fund from Chosun University, 2021.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Hochreiter, S.; Schmidhuber, J. Long short-term memory. Neural Comput. 1997, 9, 1735–1780.

- Chung, J.; Gulcehre, C.; Cho, K.; Bengio, Y. Empirical evaluation of gated recurrent neural networks on sequence modeling. In Proceedings of the NIPS 2014 Workshop on Deep Learning, Montreal, ON, Canada, 12 December 2014.

- Sutskever, I.; Vinyals, O.; Le, Q.V. Sequence to sequence learning with neural networks. In Advances in Neural Information Processing Systems 27 (NIPS 2014); MIT Press: Cambridge, MA, USA, 2014; Volume 27, pp. 3104–3112.

- Bahdanau, D.; Cho, K.H.; Bengio, Y. Neural machine translation by jointly learning to align and translate. In Proceedings of the 3rd International Conference on Learning Representations, ICLR 2015, San Diego, CA, USA, 7–9 May 2015.

- Luong, M.T.; Pham, H.; Manning, C.D. Effective Approaches to Attention-based Neural Machine Translation. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1412–1421.

- Vaswani, A.; Shazeer, N.; Parmar, N.; Uszkoreit, J.; Jones, L.; Gomez, A.N.; Kaiser, Ł.; Polosukhin, I. Attention is all you need. In Advances in Neural Information Processing Systems 30 (NIPS 2017); MIT Press: Cambridge, MA, USA, 2017; Volume 30.

- Devlin, J.; Chang, M.W.; Lee, K.; Toutanova, K. BERT: Pre-training of Deep Bidirectional Transformers for Language Understanding. In Proceedings of the NAACL-HLT, Minneapolis, MN, USA, 2–7 June 2019; pp. 4171–4186.

- Brown, T.; Mann, B.; Ryder, N.; Subbiah, M.; Kaplan, J.D.; Dhariwal, P.; Neelakantan, A.; Shyam, P.; Sastry, G.; Askell, A.; et al. Language models are few-shot learners. In Advances in Neural Information Processing Systems 33 (NeurIPS 2020); MIT Press: Cambridge, MA, USA, 2020; Volume 33, pp. 1877–1901.

- Caldarini, G.; Jaf, S.; McGarry, K. A literature survey of recent advances in chatbots. Information 2022, 13, 41.

- Dabre, R.; Chu, C.; Kunchukuttan, A. A survey of multilingual neural machine translation. ACM Comput. Surv. (CSUR) 2020, 53, 1–38.

- Ramesh, A.; Pavlov, M.; Goh, G.; Gray, S.; Voss, C.; Radford, A.; Chen, M.; Sutskever, I. Zero-shot text-to-image generation. Proc. Mach. Learn. Res. 2021, 139, 8821–8831.

- Saharia, C.; Chan, W.; Saxena, S.; Li, L.; Whang, J.; Denton, E.; Ghasemipour, S.K.S.; Ayan, B.K.; Mahdavi, S.S.; Lopes, R.G.; et al. Photorealistic Text-to-Image Diffusion Models with Deep Language Understanding. arXiv 2022, arXiv:2205.11487.

- Bobrow, D. Natural language input for a computer problem-solving system. Sem. Inform. Proc. 1968, 146–226.

- Charniak, E. Computer solution of calculus word problems. In Proceedings of the 1st International Joint Conference on Artificial Intelligence; Morgan Kaufmann Publishers Inc.: San Francisco, CA, USA, 1969; pp. 303–316.

- Clark, P.; Etzioni, O. My computer is an honor student—but how intelligent is it? Standardized tests as a measure of AI. AI Mag. 2016, 37, 5–12.

- Zhang, D.; Wang, L.; Zhang, L.; Dai, B.T.; Shen, H.T. The gap of semantic parsing: A survey on automatic math word problem solvers. IEEE Trans. Patt. Anal. Mach. Intell. 2019, 42, 2287–2305.

- Ki, K.S.; Lee, D.G.; Gweon, G. KoTAB: Korean template-based arithmetic solver with BERT. In Proceedings of the 2020 IEEE International Conference on Big Data and Smart Computing (BigComp), Busan, Korea, 19–22 February 2020; pp. 279–282.

- Fletcher, C.R. Understanding and solving arithmetic word problems: A computer simulation. Behav. Res. Methods Instrum. Comput. 1985, 17, 565–571.

- Bakman, Y. Robust understanding of word problems with extraneous information. arXiv 2007, arXiv:math/0701393.

- Roy, S.; Vieira, T.; Roth, D. Reasoning about quantities in natural language. Trans. Assoc. Comput. Linguist. 2015, 3, 1–13.

- Mitra, A.; Baral, C. Learning to use formulas to solve simple arithmetic problems. In Proceedings of the 54th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Berlin, Germany, 7–12 August 2016; pp. 2144–2153.

- Wang, Y.; Liu, X.; Shi, S. Deep neural solver for math word problems. In Proceedings of the 2017 Conference on Empirical Methods in Natural Language Processing, Copenhagen, Denmark, 7–11 September 2017; pp. 845–854.

- Wang, L.; Wang, Y.; Cai, D.; Zhang, D.; Liu, X. Translating a Math Word Problem to a Expression Tree. In Proceedings of the 2018 Conference on Empirical Methods in Natural Language Processing, Brussels, Belgium, 31 October–4 November 2018; pp. 1064–1069.

- Chiang, T.R.; Chen, Y.N. Semantically-Aligned Equation Generation for Solving and Reasoning Math Word Problems. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, Volume 1 (Long and Short Papers), Minneapolis, MN, USA, 2–7 June 2019; pp. 2656–2668.

- Griffith, K.; Kalita, J. Solving arithmetic word problems automatically using transformer and unambiguous representations. In Proceedings of the 2019 International Conference on Computational Science and Computational Intelligence (CSCI), Las Vegas, NV, USA, 5–7 December 2019; pp. 526–532.

- Kim, B.; Ki, K.S.; Lee, D.; Gweon, G. Point to the expression: Solving algebraic word problems using the expression-pointer transformer model. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 3768–3779.

- Roy, S.; Roth, D. Solving General Arithmetic Word Problems. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1743–1752.

- Koncel-Kedziorski, R.; Roy, S.; Amini, A.; Kushman, N.; Hajishirzi, H. MAWPS: A math word problem repository. In Proceedings of the 2016 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies, San Diego, CA, USA, 12–17 June 2016; pp. 1152–1157.

- Hosseini, M.J.; Hajishirzi, H.; Etzioni, O.; Kushman, N. Learning to solve arithmetic word problems with verb categorization. In Proceedings of the EMNLP, Doha, Qatar, 25–29 October 2014; pp. 523–533.

- Koncel-Kedziorski, R.; Hajishirzi, H.; Sabharwal, A.; Etzioni, O.; Ang, S.D. Parsing algebraic word problems into equations. Trans. Assoc. Comput. Linguist. 2015, 3, 585–597.

- Kushman, N.; Artzi, Y.; Zettlemoyer, L.; Barzilay, R. Learning to automatically solve algebra word problems. In Proceedings of the 52nd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), Baltimore, Maryland, 22–27 June 2014; pp. 271–281.

- Miao, S.Y.; Liang, C.C.; Su, K.Y. A Diverse Corpus for Evaluating and Developing English Math Word Problem Solvers. In Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics, Online, 5–10 July 2020; pp. 975–984.

- Qin, J.; Lin, L.; Liang, X.; Zhang, R.; Lin, L. Semantically-Aligned Universal Tree-Structured Solver for Math Word Problems. In Proceedings of the 2020 Conference on Empirical Methods in Natural Language Processing (EMNLP), Online, 16–20 November 2020; pp. 3780–3789.

- Lin, X.; Shimotsuji, S.; Minoh, M.; Sakai, T. Efficient diagram understanding with characteristic pattern detection. Comput. Vis. Graph. Image Proc. 1985, 30, 84–106.

- Seo, M.; Hajishirzi, H.; Farhadi, A.; Etzioni, O.; Malcolm, C. Solving geometry problems: Combining text and diagram interpretation. In Proceedings of the 2015 Conference on Empirical Methods in Natural Language Processing, Lisbon, Portugal, 17–21 September 2015; pp. 1466–1476.

- Alvin, C.; Gulwani, S.; Majumdar, R.; Mukhopadhyay, S. Synthesis of problems for shaded area geometry reasoning. In Proceedings of the International Conference on Artificial Intelligence in Education; Springer: Berlin/Heidelberg, Germany, 2017; pp. 455–458.

- Graepel, T.; Obermayer, K. Large margin rank boundaries for ordinal regression. In Advances in Large Margin Classifiers; MIT Press: Cambridge, MA, USA, 2000; pp. 115–132.

- Mikolov, T.; Sutskever, I.; Chen, K.; Corrado, G.S.; Dean, J. Distributed representations of words and phrases and their compositionality. Adv. Neural Inform. Proc. Syst. 2013, 26, 3111–3119.

- Pennington, J.; Socher, R.; Manning, C.D. Glove: Global vectors for word representation. In Proceedings of the 2014 Conference on Empirical Methods in Natural Language Processing (EMNLP), Doha, Qatar, 25–29 October 2014; pp. 1532–1543.

- Bojanowski, P.; Grave, E.; Joulin, A.; Mikolov, T. Enriching word vectors with subword information. Trans. Assoc. Comput. Linguist. 2017, 5, 135–146.

- Papineni, K.; Roukos, S.; Ward, T.; Zhu, W.J. Bleu: A method for automatic evaluation of machine translation. In Proceedings of the 40th Annual Meeting of the Association for Computational Linguistics, Philadelphia, PA, USA, 7–12 July 2002; pp. 311–318.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).