Abstract

In this paper, a new class of frozen Jacobian multi-step iterative methods for solving systems of nonlinear equations is presented. The proposed algorithms have a high order of convergence and a favorable efficiency index. The theoretical analysis is presented in detail. Finally, numerical experiments show the performance of the proposed methods compared with known algorithms from the literature.

Keywords:

iterative method; frozen Jacobian multi-step iterative method; system of nonlinear equations; high-order convergence

MSC:

65Hxx

1. Introduction

Approximating a locally unique solution $\alpha$ of the nonlinear system

$$F(x) = 0, \qquad (1)$$

has many applications in engineering and mathematics [1,2,3,4]. In (1), $F$ is a vector-valued function of $n$ variables, so (1) consists of $n$ equations in $n$ unknowns. Many problems arising in different areas of the natural and applied sciences take the form of the nonlinear system (1), where $F = (f_1, f_2, \ldots, f_n)^T$ and, for all $i = 1, 2, \ldots, n$, $f_i$ is a scalar nonlinear function. Moreover, in many real-life problems one needs to solve a system of nonlinear equations in the course of finding a solution; see, for example, [5,6,7,8,9]. Finding an exact solution of the nonlinear system (1) is not an easy task, especially when the equations contain logarithmic, trigonometric or exponential terms, or combinations of other transcendental terms. Hence, in general, Equation (1) cannot be solved analytically, and iterative methods have to be used. An iterative method starts from an initial approximation $x^{(0)}$ and constructs a sequence $\{x^{(k)}\}$ that converges to a solution of Equation (1) (for more details, see [10]).

The most commonly used iterative method to solve (1) is the classical Newton method, given by

$$x^{(k+1)} = x^{(k)} - F'(x^{(k)})^{-1} F(x^{(k)}), \qquad k = 0, 1, 2, \ldots,$$

where $F'(x^{(k)})$ is the Jacobian matrix of $F$ and $x^{(k)}$ is the $k$-th approximation of the root of (1), starting from the initial guess $x^{(0)}$. It is well known that Newton's method converges quadratically [11]; counting $n$ scalar evaluations for $F$ and $n^2$ for $F'$, its efficiency index is $2^{1/(n+n^2)}$. Third- and higher-order methods such as the Halley and Chebyshev methods [12] have little practical value because they require the second Fréchet derivative. However, third- and higher-order multi-step methods can be good substitutes, because they only require evaluations of the function and its first derivative at different points.
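As a baseline, the classical Newton iteration above can be sketched in a few lines of NumPy. This is a minimal illustration, not code from the paper: the test system $x^2 + y^2 = 4$, $x = y$ (root $(\sqrt{2}, \sqrt{2})$) and the names `newton_system`, `F` and `J` are hypothetical. Note that the sketch solves a linear system with a freshly evaluated Jacobian at every iteration instead of forming the inverse explicitly.

```python
import numpy as np

def newton_system(F, J, x0, tol=1e-12, max_iter=50):
    """Classical Newton method x_{k+1} = x_k - F'(x_k)^{-1} F(x_k).

    A new Jacobian is evaluated and a new linear system is solved at
    every iteration; the inverse matrix is never formed.
    """
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        # Solve F'(x_k) d = F(x_k) rather than inverting the Jacobian.
        d = np.linalg.solve(J(x), F(x))
        x = x - d
        if np.linalg.norm(d) < tol:
            return x, k + 1
    return x, max_iter

# Hypothetical test system: x^2 + y^2 = 4 and x = y.
def F(v):
    x, y = v
    return np.array([x**2 + y**2 - 4.0, x - y])

def J(v):
    x, y = v
    return np.array([[2.0 * x, 2.0 * y], [1.0, -1.0]])

root, iters = newton_system(F, J, [1.0, 2.0])
print(root, iters)
```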

In recent decades, many authors have tried to design iterative procedures with better efficiency and a higher order of convergence than the Newton scheme; see, for example, [13,14,15,16,17,18,19,20,21,22,23,24] and the references therein. However, the accuracy of the computed solutions depends strongly on the efficiency of the algorithm used. Furthermore, every step of such an iterative method requires solving a linear system, which is expensive in practical applications, especially when the system size n is very large. A higher convergence order is therefore of little value if it requires many additional evaluations. Hence, an important aim in developing any new algorithm is to achieve a high convergence order while requiring as few evaluations of functions and derivatives, and as few matrix factorizations, as possible. Thus, here, we focus on the technique of frozen Jacobian multi-step iterative algorithms. This idea is computationally attractive and economical for constructing iterative solvers because the Jacobian matrix is factored (via its LU decomposition) only once per iteration. Many researchers have reduced the computational cost of iterative algorithms by frozen Jacobian multi-step techniques [25,26,27,28].

In this work, we construct a new class of frozen Jacobian multi-step iterative methods for solving nonlinear systems of equations. These methods have a high order of convergence and a favorable efficiency index, and their theoretical analysis is presented in full. Further, by solving some nonlinear systems, we compare the performance of the methods with that of some known algorithms.

The rest of this paper is organized as follows. In the next section, we present our new methods, derive their order of convergence and discuss their computational efficiency. Numerical examples in Section 3 and Section 4 illustrate the behavior of these methods. Finally, concluding remarks are given in Section 5.

2. Constructing New Methods

In this section, two high-order frozen Jacobian multi-step iterative methods for solving systems of nonlinear equations are presented. They raise the convergence order of Newton's method while keeping the additional computational cost low. The framework of these frozen Jacobian multi-step iterative algorithms (FJA) can be described as

In (2), an m-step method requires m function evaluations and only one Jacobian evaluation; moreover, only one LU decomposition is needed per iteration. The order of convergence of such an FJA method is $m + 1$. The right-hand column of (2) briefly describes each step of the algorithm.
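The cost structure just described can be sketched in Python. Because the explicit steps of (2) are not reproduced here, the sketch rests on an assumption: each of the m sub-steps is taken to be a Newton-type correction that reuses the Jacobian $F'(x^{(k)})$ frozen at the start of the iteration (the classical frozen-Jacobian multi-step Newton scheme, which may differ in detail from (2)). The Jacobian is evaluated and LU-factored once per iteration with SciPy's `lu_factor`, and each of the m evaluations of $F$ is followed by a cheap `lu_solve`. The function name `frozen_jacobian_msteps` and the test system are hypothetical.

```python
import numpy as np
from scipy.linalg import lu_factor, lu_solve

def frozen_jacobian_msteps(F, J, x0, m=2, tol=1e-12, max_iter=50):
    """Assumed m-step frozen-Jacobian iteration (a sketch, may differ from (2)).

    Each outer iteration evaluates the Jacobian once, factors it once,
    and then performs m corrections y <- y - F'(x_k)^{-1} F(y).
    """
    x = np.asarray(x0, dtype=float)
    for k in range(max_iter):
        lu_piv = lu_factor(J(x))  # one Jacobian evaluation, one LU factorization
        y = x
        for _ in range(m):
            y = y - lu_solve(lu_piv, F(y))  # reuse the LU factors: two triangular solves
        step = np.linalg.norm(y - x)
        x = y
        if step < tol:
            return x, k + 1
    return x, max_iter

def F(v):  # hypothetical test system with root (sqrt(2), sqrt(2))
    x, y = v
    return np.array([x**2 + y**2 - 4.0, x - y])

def J(v):
    x, y = v
    return np.array([[2.0 * x, 2.0 * y], [1.0, -1.0]])

root2, iters2 = frozen_jacobian_msteps(F, J, [1.0, 2.0], m=2)
root3, iters3 = frozen_jacobian_msteps(F, J, [1.0, 2.0], m=3)
print(root2, iters2, root3, iters3)
```

With `m = 1` the loop reduces to Newton's method; increasing `m` adds only function evaluations and triangular solves, never another factorization.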

In the following subsections, by choosing two different values for m, a third- and a fourth-order frozen Jacobian multi-step iterative algorithm are presented.

2.1. The Third-Order FJA

First, we investigate the case $m = 2$, that is,

we denote this by .

2.1.1. Convergence Analysis

In this part, we prove that the order of convergence of method (3) is three. First, we recall the definition of the Fréchet derivative.

Definition 1

([29]). Let F be an operator which maps a Banach space X into a Banach space Y. If there exists a bounded linear operator T from X into Y such that

$$\lim_{\|h\| \to 0} \frac{\|F(x + h) - F(x) - T(h)\|}{\|h\|} = 0,$$

then F is said to be Fréchet differentiable at $x$ and $F'(x) = T$.
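In $\mathbb{R}^n$ the Fréchet derivative of a smooth map is its Jacobian matrix, and Definition 1 can be checked numerically: the remainder ratio $\|F(x+h) - F(x) - T(h)\| / \|h\|$ should tend to zero as $\|h\| \to 0$. The sketch below uses a hypothetical map $F: \mathbb{R}^2 \to \mathbb{R}^2$ with its hand-coded Jacobian as $T$; the helper name `frechet_remainder_ratio` is also an assumption made for illustration.

```python
import numpy as np

def frechet_remainder_ratio(F, T, x, h):
    """Return ||F(x+h) - F(x) - T h|| / ||h|| for a candidate derivative T."""
    return np.linalg.norm(F(x + h) - F(x) - T @ h) / np.linalg.norm(h)

def F(v):  # hypothetical smooth map from R^2 to R^2
    x, y = v
    return np.array([x**2 + y**2 - 4.0, np.sin(x) * y])

def jacobian(v):  # its Jacobian matrix, i.e. the Frechet derivative in R^n
    x, y = v
    return np.array([[2.0 * x, 2.0 * y], [np.cos(x) * y, np.sin(x)]])

x = np.array([1.0, 2.0])
direction = np.array([0.6, -0.8])  # unit vector, so ||h|| = t
ratios = [frechet_remainder_ratio(F, jacobian(x), x, t * direction)
          for t in (1e-1, 1e-2, 1e-3, 1e-4)]
print(ratios)
```

For a twice continuously differentiable map the remainder is $O(\|h\|^2)$, so the ratios decrease roughly in proportion to $\|h\|$.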

For more details on Fréchet differentiability and the Fréchet derivative, we refer interested readers to a review article by Emmanuel [30] and the references therein.

Theorem 1.

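Let $F: I \subseteq \mathbb{R}^n \to \mathbb{R}^n$ be sufficiently Fréchet differentiable at each point of an open convex neighborhood $I$ of $\alpha$, a solution of the system $F(x) = 0$. Suppose that $F'(x)$ is continuous and nonsingular at $\alpha$. Then, for an initial guess $x^{(0)}$ sufficiently close to $\alpha$, the sequence $\{x^{(k)}\}$ obtained by the iterative method (3) converges to $\alpha$ with order of convergence three.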

Proof.

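Suppose that $e_k = x^{(k)} - \alpha$. Using Taylor's expansion [31], we obtain

$$F(x^{(k)}) = F(\alpha) + F'(\alpha)e_k + \frac{1}{2!}F''(\alpha)e_k^2 + \frac{1}{3!}F'''(\alpha)e_k^3 + O(e_k^4),$$

and, since $\alpha$ is a root of $F$, $F(\alpha) = 0$. Consequently, by using Taylor series expansions [32], one obtains the following expressions for $F(x^{(k)})$ and $F'(x^{(k)})$ in a neighborhood of $\alpha$:

$$F(x^{(k)}) = F'(\alpha)\left[e_k + C_2 e_k^2 + C_3 e_k^3\right] + O(e_k^4), \qquad (4)$$

$$F'(x^{(k)}) = F'(\alpha)\left[I + 2C_2 e_k + 3C_3 e_k^2\right] + O(e_k^3), \qquad (5)$$

wherein $C_j = \frac{1}{j!}F'(\alpha)^{-1}F^{(j)}(\alpha)$, $j = 2, 3, \ldots$, and $I$ is the identity matrix of the same order as the Jacobian matrix. Note that $e_k^j = (e_k, e_k, \ldots, e_k)$ ($j$ times), so that $C_j e_k^j \in \mathbb{R}^n$. Using (4) and (5) we obtain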

Since , we find

By the definition of the error term $e_k$, the error of the first-step iterate as an approximation of $\alpha$ is obtained from the second term of the right-hand side of Equation (6). Similarly, the Taylor expansion of $F$ at the first-step iterate is

Thus,

Finally, since

we have

2.1.2. The Computational Efficiency

In this subsection, we compare the computational efficiency of our third-order scheme (3) with that of some existing third-order methods for systems of nonlinear equations, using two well-known efficiency indices. The first one is the classical efficiency index [33],

$$E = p^{1/c},$$

where $p$ is the order of convergence and $c$ is the total computational cost per iteration in terms of the number of scalar functional evaluations, namely $c = rn + mn^2$, where $r$ is the number of evaluations of $F$ and $m$ is the number of Jacobian matrix evaluations needed per iteration.
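The classical index is simple arithmetic. The sketch below evaluates it for Newton's method (one evaluation of $F$ and one of $F'$, order two) and for a two-step frozen Jacobian method with the counts stated for (2) with $m = 2$ (two evaluations of $F$, one of $F'$, order three). The system size $n = 10$ and the function name are illustrative choices; note that the parameter `m` here follows the formula above and counts Jacobian evaluations, not steps.

```python
def classical_efficiency_index(p, r, m, n):
    """Classical index E = p**(1/c), with cost c = r*n + m*n**2.

    p: order of convergence, r: evaluations of F per iteration,
    m: evaluations of the Jacobian F' per iteration, n: system size.
    """
    c = r * n + m * n**2
    return p ** (1.0 / c)

n = 10
newton = classical_efficiency_index(p=2, r=1, m=1, n=n)    # 2**(1/110)
two_step = classical_efficiency_index(p=3, r=2, m=1, n=n)  # 3**(1/120)
print(f"Newton: {newton:.4f}, frozen two-step: {two_step:.4f}")
```

For $n = 10$ this compares $2^{1/110} \approx 1.0063$ with $3^{1/120} \approx 1.0092$: the extra evaluation of $F$ costs only $n$ scalar evaluations, while the order rises from two to three.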

It is well known that computing an LU factorization by any of the standard methods in the literature normally needs $2n^3/3$ floating-point operations (flops), while solving the two resulting triangular systems needs $2n^2$ flops.

The second criterion is the flops-like efficiency index, defined by Montazeri et al. [34] as

$$p^{1/c},$$

where $p$ is the order of convergence of the method and $c$ denotes the total computational cost per iteration, counting both the functional evaluations and the flops needed for the LU factorization and for solving the triangular systems.
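A sketch of the flops-like index under a simplified cost model, which is our assumption and may not match every detail of [34]: $c$ adds the evaluation cost $rn + mn^2$, $2n^3/3$ flops per LU factorization and $2n^2$ flops per pair of triangular solves. For an m-step frozen Jacobian method we assume m evaluations of $F$, one Jacobian, one LU factorization and one pair of triangular solves per step, with order $m + 1$ as for the third- and fourth-order methods of this section. The helper names and $n = 50$ are hypothetical.

```python
def flops_like_efficiency_index(p, r, m, lu, solves, n):
    """p**(1/c) under an assumed cost model.

    c = r*n + m*n**2      (scalar evaluations of F and F')
      + lu * 2*n**3/3     (flops per LU factorization)
      + solves * 2*n**2   (flops per pair of triangular solves)
    """
    c = r * n + m * n**2 + lu * 2.0 * n**3 / 3.0 + solves * 2.0 * n**2
    return p ** (1.0 / c)

def frozen_m_step_index(steps, n):
    # Assumed counts: `steps` evaluations of F, one Jacobian, one LU,
    # one pair of triangular solves per step, and order steps + 1.
    return flops_like_efficiency_index(steps + 1, steps, 1, 1, steps, n)

n = 50
newton = flops_like_efficiency_index(2, 1, 1, 1, 1, n)
fj2, fj3 = frozen_m_step_index(2, n), frozen_m_step_index(3, n)
print(newton, fj2, fj3)
```

Newton's method is the `steps = 1` case of the same counts. Because the $2n^3/3$ factorization term dominates for large $n$ and is paid only once per iteration, each extra frozen step raises the order at comparatively small cost.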

As the first comparison, we compare with the third-order method given by Darvishi [35], which is denoted as

The second iterative method shown by is the following third-order method introduced by Hernández [36]

Another method is the following third-order iterative method given by Babajee et al. [37], ,

Finally, the following third-order iterative method, , ref. [38] is considered

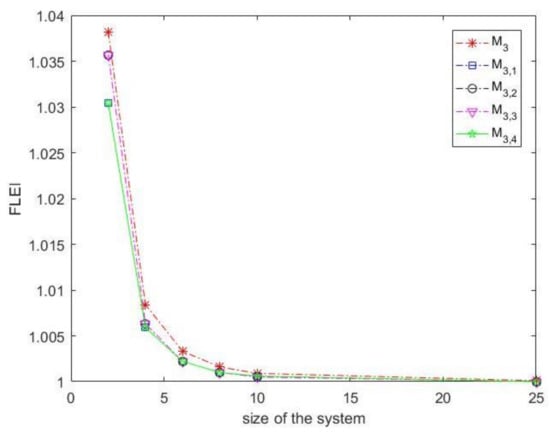

The comparison of computational efficiency shows that our third-order method (3) is the best among the third-order methods considered above, as presented in Table 1, Figure 1 and Figure 2.

Table 1.

Comparison of efficiency indices between and other third-order methods.

Figure 1.

The classical efficiency index for methods , , , and .

Figure 2.

The flops-like efficiency index for methods , , , and .

2.2. The Fourth-Order FJA

By setting $m = 3$ in FJA, the following three-step algorithm is obtained

In the following subsections, the order of convergence and efficiency indices are obtained for the method described in (9).

2.2.1. Convergence Analysis

The frozen Jacobian three-step iterative process (9) has convergence order four, using three evaluations of the function $F$ and one evaluation of the first-order Fréchet derivative $F'$ per full iteration. To avoid repetition, we only sketch the proof. Proceeding as in the proof of Theorem 1, from (8) we obtain

Hence,

2.2.2. The Computational Efficiency

Now, we compare the computational efficiency of our fourth-order scheme (9) with that of some existing fourth-order methods. The considered methods are the fourth-order method given by Sharma et al. [39],

the fourth-order iterative method given by Darvishi and Barati [40],

the fourth-order iterative method given by Soleymani et al. [34,41],

and the following Jarratt fourth-order method [42],

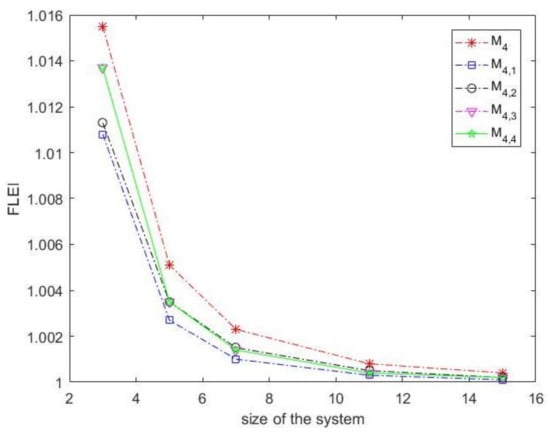

The comparison of computational efficiency shows that our fourth-order method (9) is better than the other fourth-order methods considered above, as presented in Table 2, Figure 3 and Figure 4. As Table 2 shows, the efficiency indices of our method are better than the corresponding indices of these methods, and Figure 3 and Figure 4 illustrate this superiority graphically.

Table 2.

Comparison of efficiency indices between and other fourth-order methods.

Figure 3.

The classical efficiency index for methods , , , and .

Figure 4.

The flops-like efficiency index for methods , , , and .

3. Numerical Results

To check the validity and efficiency of the proposed frozen Jacobian multi-step iterative methods, we consider three test problems that illustrate their convergence and computational behavior, including the efficiency index and some other indices. Numerical computations were performed in MATLAB using variable-precision arithmetic with a floating-point representation of 100 decimal digits of mantissa. The computer specifications are: Intel(R) Core(TM) i7-1065G7 CPU at 1.30 GHz with 16.00 GB of RAM, running Windows 10 Pro.
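A rough Python analogue of such high-precision experiments is sketched below, assuming the `mpmath` library is available. It runs an assumed m-step frozen Jacobian scheme (each sub-step a Newton-type correction with the Jacobian frozen at $x^{(k)}$, which may differ from (3) and (9)) on a hypothetical $2 \times 2$ system with 100 significant digits, and estimates the computational order of convergence $\rho \approx \ln(e_{k+1}/e_k) / \ln(e_k/e_{k-1})$ from the last three errors that are still well above the working precision. For $m = 2$ and $m = 3$ the estimates should be close to 3 and 4, in line with the orders stated in Section 2. All function names are illustrative.

```python
from mpmath import mp, matrix, lu_solve, norm, log, sqrt, mpf

mp.dps = 100  # 100 significant decimal digits

def F(v):
    # Hypothetical test system: x^2 + y^2 = 4, x = y; root (sqrt(2), sqrt(2)).
    x, y = v[0], v[1]
    return matrix([x**2 + y**2 - 4, x - y])

def J(v):
    x, y = v[0], v[1]
    return matrix([[2 * x, 2 * y], [1, -1]])

def frozen_errors(x0, m, iters, root):
    """Errors ||x_k - root|| of an assumed m-step frozen-Jacobian scheme."""
    x = matrix(x0)
    errors = [norm(x - root)]
    for _ in range(iters):
        A = J(x)  # Jacobian frozen for the whole outer iteration
        y = x
        for _ in range(m):
            # lu_solve refactors A on every call; an efficient code would
            # factor once and reuse the LU factors for all m sub-steps.
            y = y - lu_solve(A, F(y))
        x = y
        errors.append(norm(x - root))
    return errors

def coc(errors, floor=mpf(10) ** -90):
    """Computational order of convergence from the last three errors
    that are still well above the working precision."""
    e = [err for err in errors if err > floor][-3:]
    return log(e[2] / e[1]) / log(e[1] / e[0])

root = matrix([sqrt(2), sqrt(2)])
orders = {m: coc(frozen_errors([1, 2], m, 4, root)) for m in (2, 3)}
for m, rho in orders.items():
    print(m, mp.nstr(rho, 5))
```

The error floor matters: with order four, a further iteration would push the true error far below $10^{-100}$, so the computed error would be pure rounding noise and would spoil the estimate.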

Experiment 1. We begin with the following nonlinear system of n equations [43],

The exact zero of is . To solve (13), we set the initial guess as . The stopping criterion is selected as .

Experiment 2. The next test problem is the following system of nonlinear equations [44],

The exact root of is . To solve (14), the initial guess is taken as . The stopping criterion is selected as .

Experiment 3. The last test problem is the following nonlinear system [9],

with the exact solution . To solve (15), the initial guess and the stopping criterion are respectively considered as and .

Table 3 compares our third-order frozen Jacobian two-step iterative method with the other third-order methods considered in Section 2.1.2. For all test problems, two different values of n are considered. As this table shows, our method needs less CPU time than the others in all cases. Similarly, Table 4 presents the CPU time and the number of iterations for our fourth-order method (9) and the other fourth-order methods considered in Section 2.2.2; as with the third-order method, the CPU time of (9) is less than that of the other methods. These tables show the superiority of our methods over the other ones. Table 3 and Table 4 also list the number of iterations.

Table 3.

Comparison results between and other third-order methods.

Table 4.

Comparison results between and other fourth-order methods.

4. Another Comparison

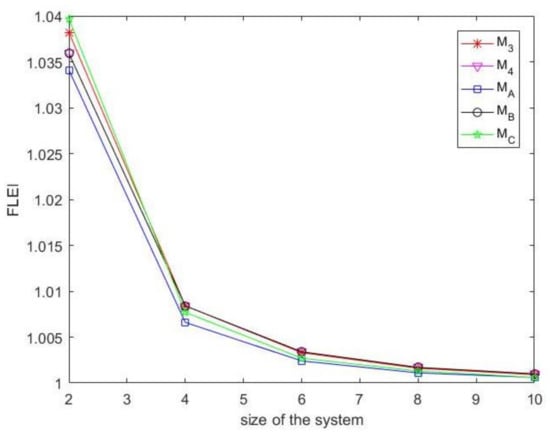

In the previous parts, we compared our methods with some other third- and fourth-order frozen Jacobian multi-step iterative methods. In this section, we compare the presented methods with three other methods of fourth and fifth order. As Table 5, Table 6, Figure 5 and Figure 6 show, our methods also outperform these methods.

Table 5.

Numerical results for comparing of and with , and .

Table 6.

Comparison results between , , , and .

Figure 5.

The classical efficiency index for , , and .

Figure 6.

The flops-like efficiency index for , , and .

- First, the fourth-order method given by Qasim et al. [25];

- Second, the fourth-order Newton-like method by Amat et al. [26];

- Third, the fifth-order iterative method by Ahmad et al. [28].

The computational efficiency of our methods is compared with that of the three selected methods in Table 5, and Figure 5 and Figure 6 compare these methods graphically. Finally, Table 6 reports the CPU time and the number of iterations needed by our methods and the selected methods to solve our test problems. These numerical and graphical results confirm the efficiency of our algorithms.

5. Conclusions

In this article, two new frozen Jacobian iterative methods, a two-step and a three-step one, are presented for solving systems of nonlinear equations. We proved that the first method has third-order convergence and that the second one has fourth-order convergence. Three numerical examples show that our methods perform well. Further, their CPU time is less than that of some selected frozen Jacobian multi-step iterative methods from the literature. Moreover, other indices of our methods, such as the number of steps, the number of functional evaluations and the classical efficiency index, are better than those of the other methods. This class of frozen Jacobian multi-step iterative methods can serve as a template for further research on frozen Jacobian iterative algorithms.

Author Contributions

Investigation, R.H.A.-O. and M.T.D.; Project administration, M.T.D.; Resources, R.H.A.-O.; Supervision, M.T.D.; Writing—original draft, M.T.D. All authors have read and agreed to the published version of the manuscript.

Funding

This research received no external funding.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the editor of the journal and three anonymous reviewers for their generous time in providing detailed comments and suggestions that helped us to improve the paper.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Fay, T.H.; Graham, S.D. Coupled spring equations. Int. J. Math. Educ. Sci. Technol. 2003, 34, 65–79.

- Petzold, L. Automatic selection of methods for solving stiff and non stiff systems of ordinary differential equations. SIAM J. Sci. Stat. Comput. 1983, 4, 136–148.

- Ehle, B.L. High order A-stable methods for the numerical solution of systems of D.E.’s. BIT Numer. Math. 1968, 8, 276–278.

- Wambecq, A. Rational Runge-Kutta methods for solving systems of ordinary differential equations. Computing 1978, 20, 333–342.

- Liang, H.; Liu, M.; Song, M. Extinction and permanence of the numerical solution of a two-prey one-predator system with impulsive effect. Int. J. Comput. Math. 2011, 88, 1305–1325.

- Harko, T.; Lobo, F.S.N.; Mak, M.K. Exact analytical solutions of the Susceptible-Infected-Recovered (SIR) epidemic model and of the SIR model with equal death and birth rates. Appl. Math. Comput. 2014, 236, 184–194.

- Zhao, J.; Wang, L.; Han, Z. Stability analysis of two new SIRs models with two viruses. Int. J. Comput. Math. 2018, 95, 2026–2035.

- Kröger, M.; Schlickeiser, R. Analytical solution of the SIR-model for the temporal evolution of epidemics, Part A: Time-independent reproduction factor. J. Phys. A Math. Theor. 2020, 53, 505601.

- Ullah, M.Z.; Behl, R.; Argyros, I.K. Some high-order iterative methods for nonlinear models originating from real life problems. Mathematics 2020, 8, 1249.

- Argyros, I.K. Concerning the “terra incognita” between convergence regions of two Newton methods. Nonlinear Anal. 2005, 62, 179–194.

- Drexler, M. Newton Method as a Global Solver for Non-Linear Problems. Ph.D Thesis, University of Oxford, Oxford, UK, 1997.

- Gutiérrez, J.M.; Hernández, M.A. A family of Chebyshev-Halley type methods in Banach spaces. Bull. Aust. Math. Soc. 1997, 55, 113–130.

- Cordero, A.; Jordán, C.; Sanabria, E.; Torregrosa, J.R. A new class of iterative processes for solving nonlinear systems by using one divided differences operator. Mathematics 2019, 7, 776.

- Stefanov, S.M. Numerical solution of systems of non linear equations defined by convex functions. J. Interdiscip. Math. 2022, 25, 951–962.

- Lee, M.Y.; Kim, Y.I.K. Development of a family of Jarratt-like sixth-order iterative methods for solving nonlinear systems with their basins of attraction. Algorithms 2020, 13, 303.

- Cordero, A.; Jordán, C.; Sanabria-Codesal, E.; Torregrosa, J.R. Design, convergence and stability of a fourth-order class of iterative methods for solving nonlinear vectorial problems. Fractal Fract. 2021, 5, 125.

- Amiri, A.; Cordero, A.; Darvishi, M.T.; Torregrosa, J.R. A fast algorithm to solve systems of nonlinear equations. J. Comput. Appl. Math. 2019, 354, 242–258.

- Argyros, I.K.; Sharma, D.; Argyros, C.I.; Parhi, S.K.; Sunanda, S.K. A family of fifth and sixth convergence order methods for nonlinear models. Symmetry 2021, 13, 715.

- Singh, A. An efficient fifth-order Steffensen-type method for solving systems of nonlinear equations. Int. J. Comput. Sci. Math. 2018, 9, 501–514.

- Ullah, M.Z.; Serra-Capizzano, S.; Ahmad, F. An efficient multi-step iterative method for computing the numerical solution of systems of nonlinear equations associated with ODEs. Appl. Math. Comput. 2015, 250, 249–259.

- Pacurar, M. Approximating common fixed points of Prešić-Kannan type operators by a multi-step iterative method. An. St. Univ. Ovidius Constanta 2009, 17, 153–168.

- Rafiq, A.; Rafiullah, M. Some multi-step iterative methods for solving nonlinear equations. Comput. Math. Appl. 2009, 58, 1589–1597.

- Aremu, K.O.; Izuchukwu, C.; Ogwo, G.N.; Mewomo, O.T. Multi-step iterative algorithm for minimization and fixed point problems in p-uniformly convex metric spaces. J. Ind. Manag. Optim. 2021, 17, 2161.

- Soleymani, F.; Lotfi, T.; Bakhtiari, P. A multi-step class of iterative methods for nonlinear systems. Optim. Lett. 2014, 8, 1001–1015.

- Qasim, U.; Ali, Z.; Ahmad, F.; Serra-Capizzano, S.; Ullah, M.Z.; Asma, M. Constructing frozen Jacobian iterative methods for solving systems of nonlinear equations, associated with ODEs and PDEs using the homotopy method. Algorithms 2016, 9, 18.

- Amat, S.; Busquier, S.; Grau, À.; Grau-Sánchez, M. Maximum efficiency for a family of Newton-like methods with frozen derivatives and some applications. Appl. Math. Comput. 2013, 219, 7954–7963.

- Kouser, S.; Rehman, S.U.; Ahmad, F.; Serra-Capizzano, S.; Ullah, M.Z.; Alshomrani, A.S.; Aljahdali, H.M.; Ahmad, S.; Ahmad, S. Generalized Newton multi-step iterative methods GMNp,m for solving systems of nonlinear equations. Int. J. Comput. Math. 2018, 95, 881–897.

- Ahmad, F.; Tohidi, E.; Ullah, M.Z.; Carrasco, J.A. Higher order multi-step Jarratt-like method for solving systems of nonlinear equations: Application to PDEs and ODEs. Comput. Math. Appl. 2015, 70, 624–636.

- Kaplan, W.; Kaplan, W.A. Ordinary Differential Equations; Addison-Wesley Publishing Company: Boston, MA, USA, 1958.

- Emmanuel, E.C. On the Frechet derivatives with applications to the inverse function theorem of ordinary differential equations. Asian J. Math. Sci. 2020, 4, 1–10.

- Ortega, J.M.; Rheinboldt, W.C. Iterative Solution of Nonlinear Equations in Several Variables; Society for Industrial and Applied Mathematics: Philadelphia, PA, USA, 2000.

- Behl, R.; Bhalla, S.; Magreñán, A.A.; Kumar, S. An efficient high order iterative scheme for large nonlinear systems with dynamics. J. Comput. Appl. Math. 2022, 404, 113249.

- Ostrowski, A.M. Solutions of the Equations and Systems of Equations; Prentice-Hall: Englewood Cliffs, NJ, USA; New York, NY, USA, 1964.

- Montazeri, H.; Soleymani, F.; Shateyi, S.; Motsa, S.S. On a new method for computing the numerical solution of systems of nonlinear equations. J. Appl. Math. 2012, 2012, 751975.

- Darvishi, M.T. A two-step high order Newton-like method for solving systems of nonlinear equations. Int. J. Pure Appl. Math. 2009, 57, 543–555.

- Hernández, M.A. Second-derivative-free variant of the Chebyshev method for nonlinear equations. J. Optim. Theory Appl. 2000, 3, 501–515.

- Babajee, D.K.R.; Dauhoo, M.Z.; Darvishi, M.T.; Karami, A.; Barati, A. Analysis of two Chebyshev-like third order methods free from second derivatives for solving systems of nonlinear equations. J. Comput. Appl. Math. 2010, 233, 2002–2012.

- Noor, M.S.; Waseem, M. Some iterative methods for solving a system of nonlinear equations. Comput. Math. Appl. 2009, 57, 101–106.

- Sharma, J.R.; Guha, R.K.; Sharma, R. An efficient fourth order weighted-Newton method for systems of nonlinear equations. Numer. Algorithms 2013, 62, 307–323.

- Darvishi, M.T.; Barati, A. A fourth-order method from quadrature formulae to solve systems of nonlinear equations. Appl. Math. Comput. 2007, 188, 257–261.

- Soleymani, F. Regarding the accuracy of optimal eighth-order methods. Math. Comput. Modell. 2011, 53, 1351–1357.

- Cordero, A.; Hueso, J.L.; Martínez, E.; Torregrosa, J.R. A modified Newton-Jarratt’s composition. Numer. Algorithms 2010, 55, 87–99.

- Shin, B.C.; Darvishi, M.T.; Kim, C.H. A comparison of the Newton–Krylov method with high order Newton-like methods to solve nonlinear systems. Appl. Math. Comput. 2010, 217, 3190–3198.

- Waziri, M.Y.; Aisha, H.; Gambo, A.I. On performance analysis of diagonal variants of Newton’s method for large-scale systems of nonlinear equations. Int. J. Comput. Appl. 2011, 975, 8887.


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).