Adaptive BP Network Prediction Method for Ground Surface Roughness with High-Dimensional Parameters

Abstract

1. Introduction

2. Data Source and Processing

2.1. Experimental Setup

2.2. Data Processing and Selection

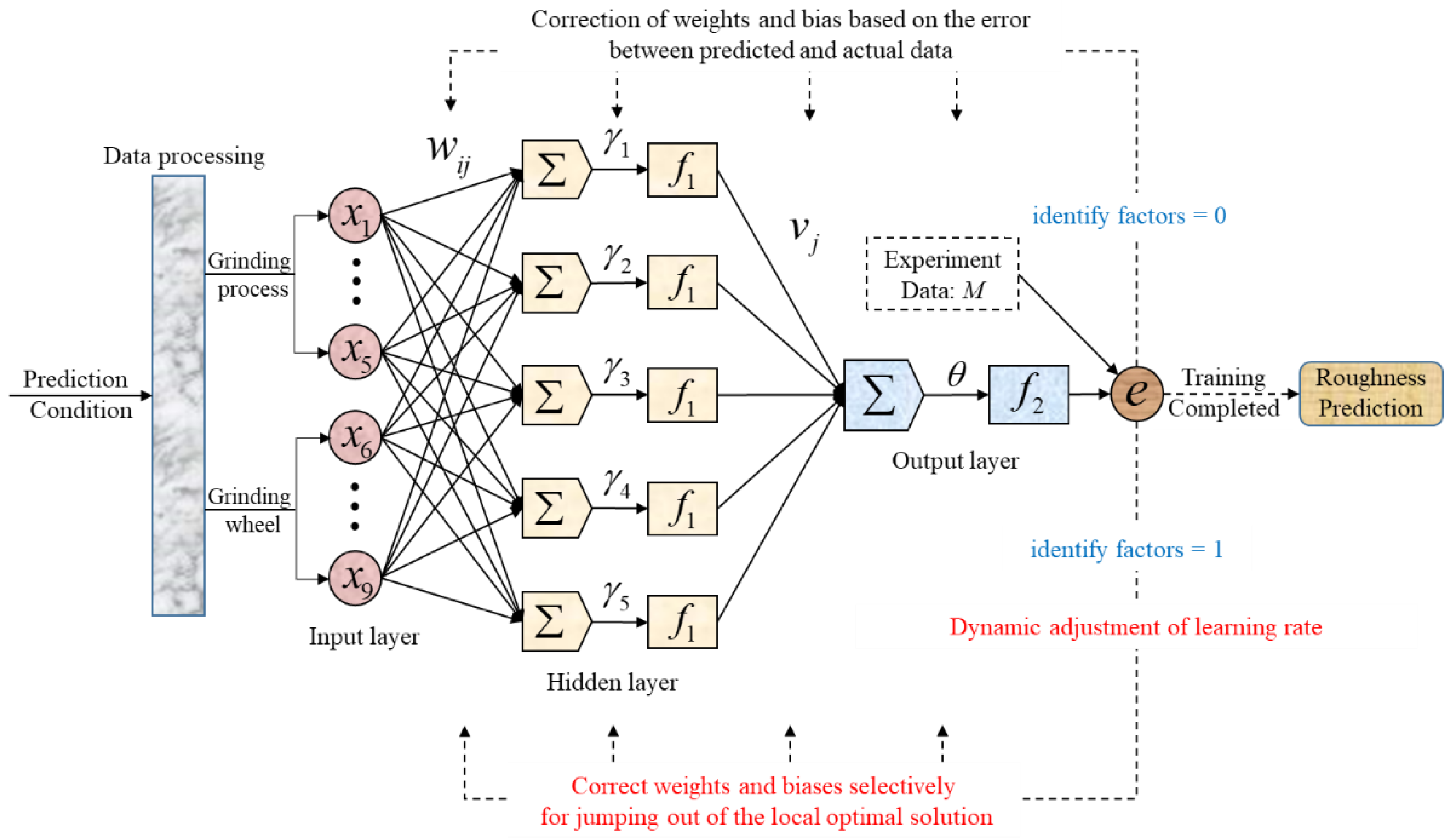

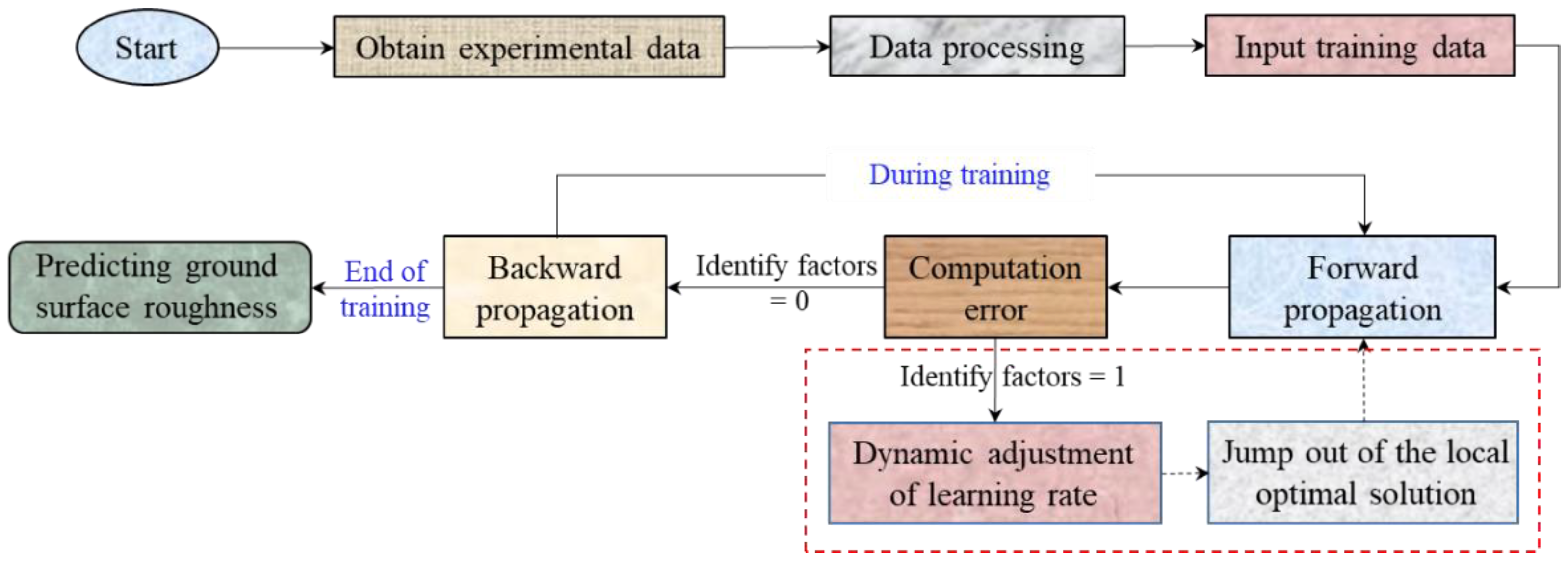

3. Presented BP Neural Network Prediction Model

3.1. The Standard BP Algorithm

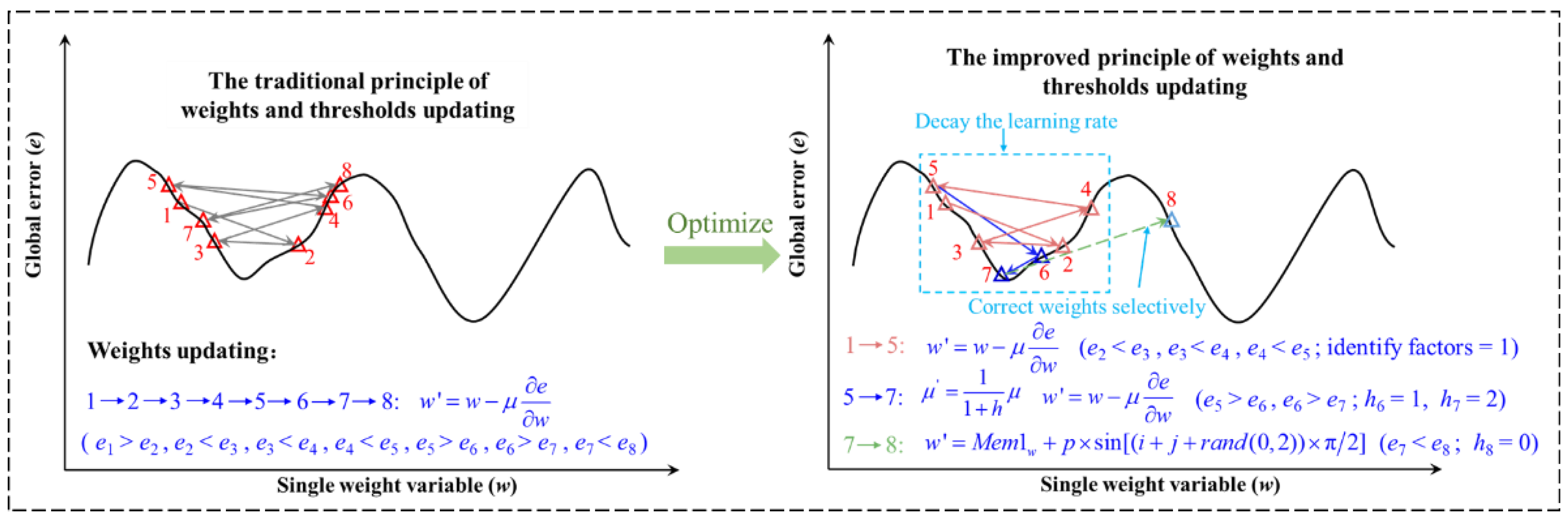

3.2. The Local Optimal Solution

3.3. The Development of the Presented BP Algorithm

3.4. The Performance Evaluation of the Presented BP

4. Results and Discussion

4.1. Influence of Grinding Wheel Wear Features

4.2. Comparison of Prediction Models before and after Optimization

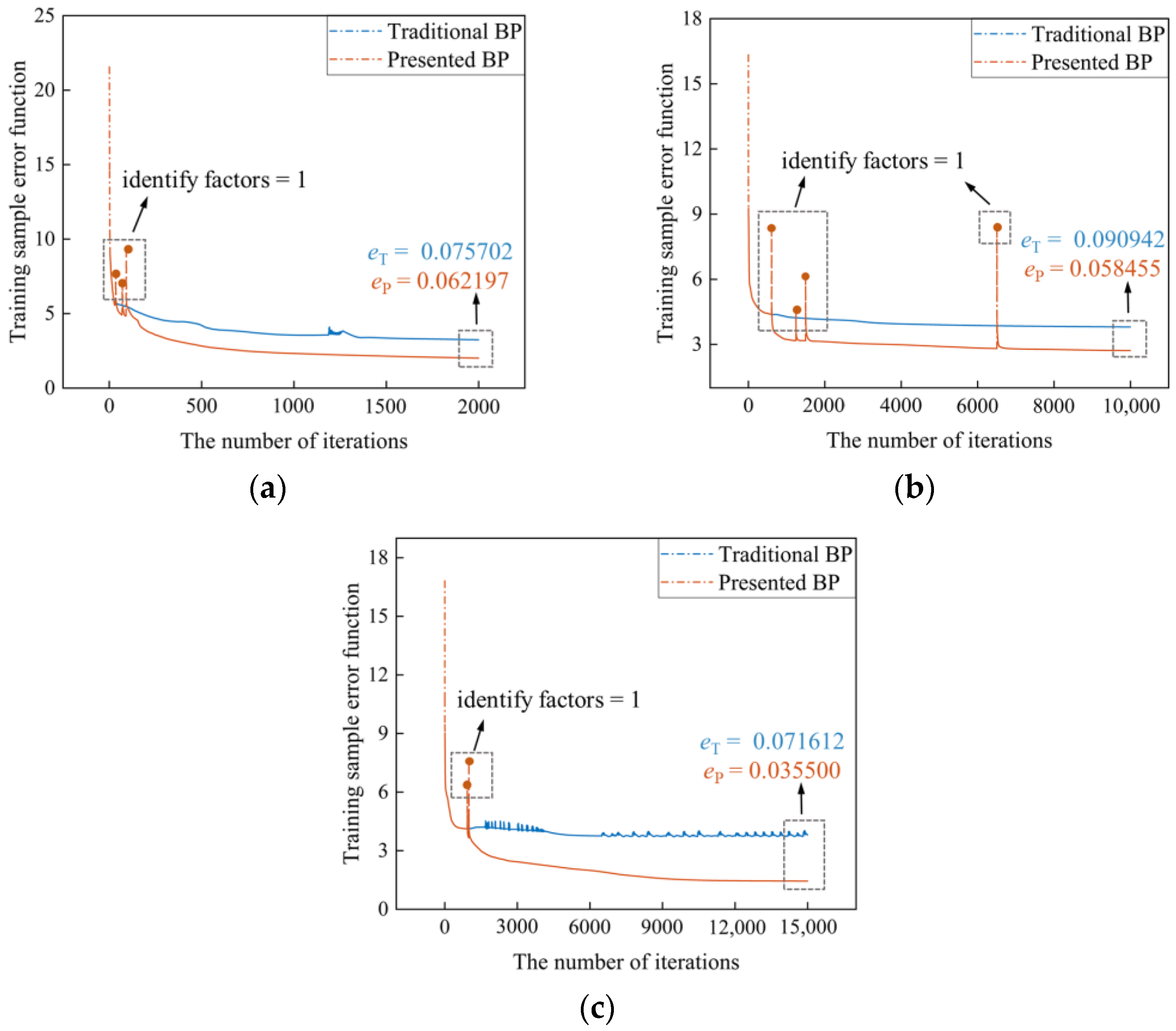

4.2.1. Influence of “Identify Factors”

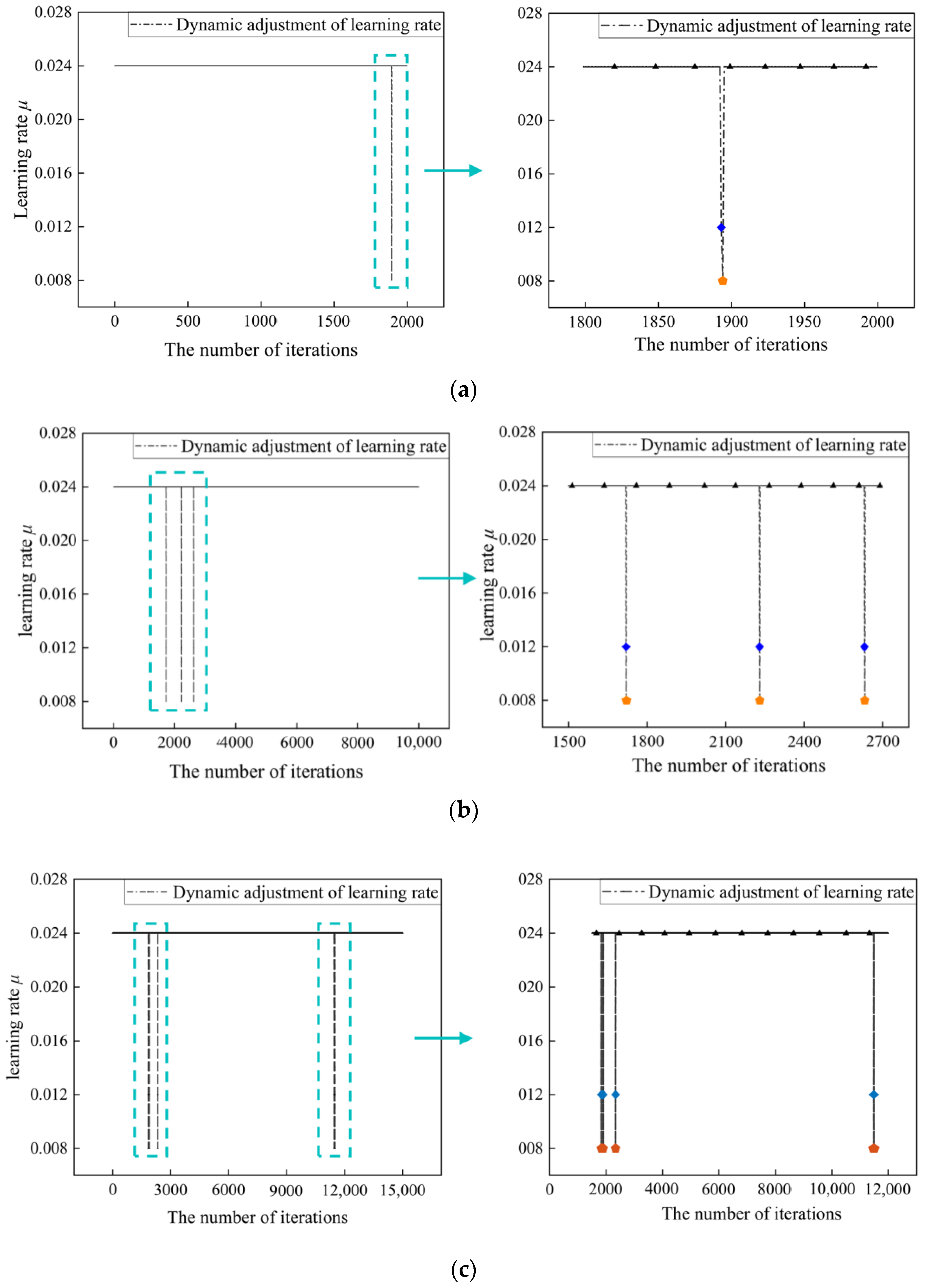

4.2.2. Influence of Dynamic Learning Rate

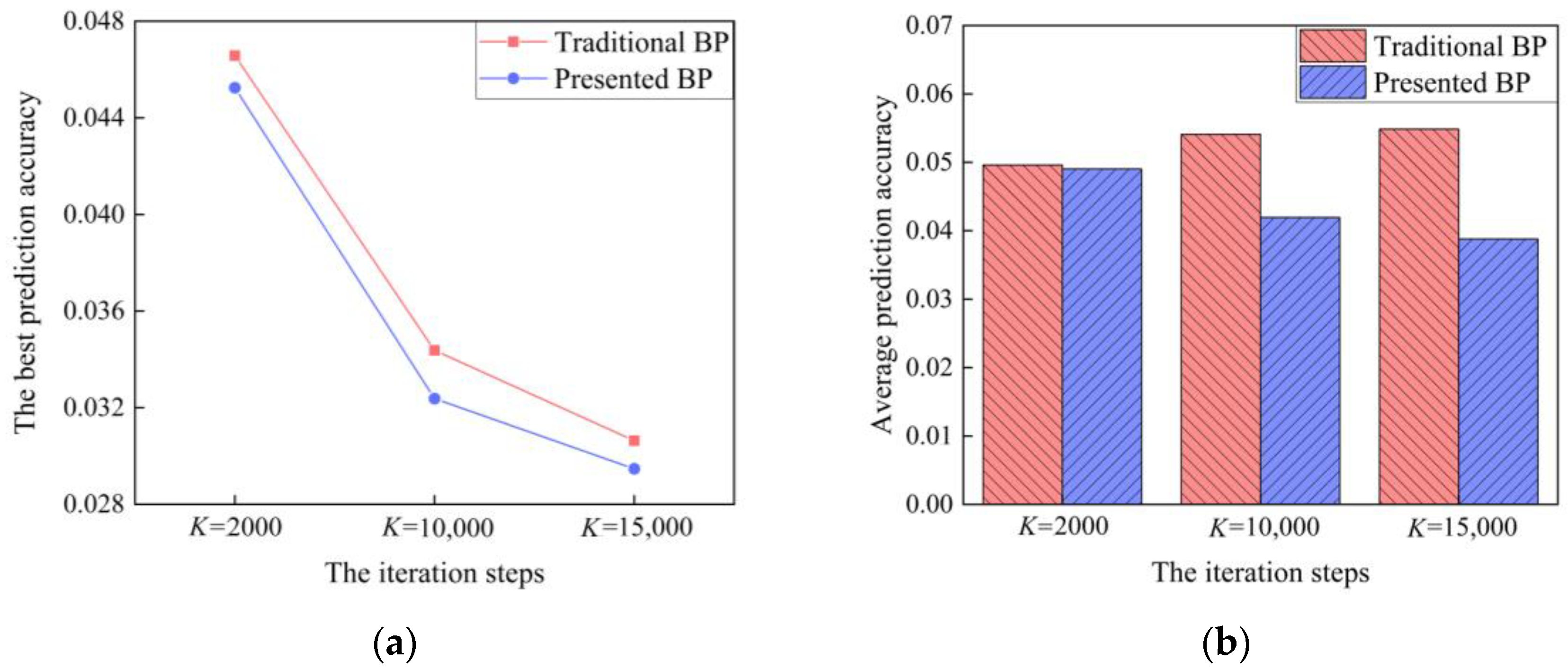

4.2.3. Comparison of Prediction Accuracy

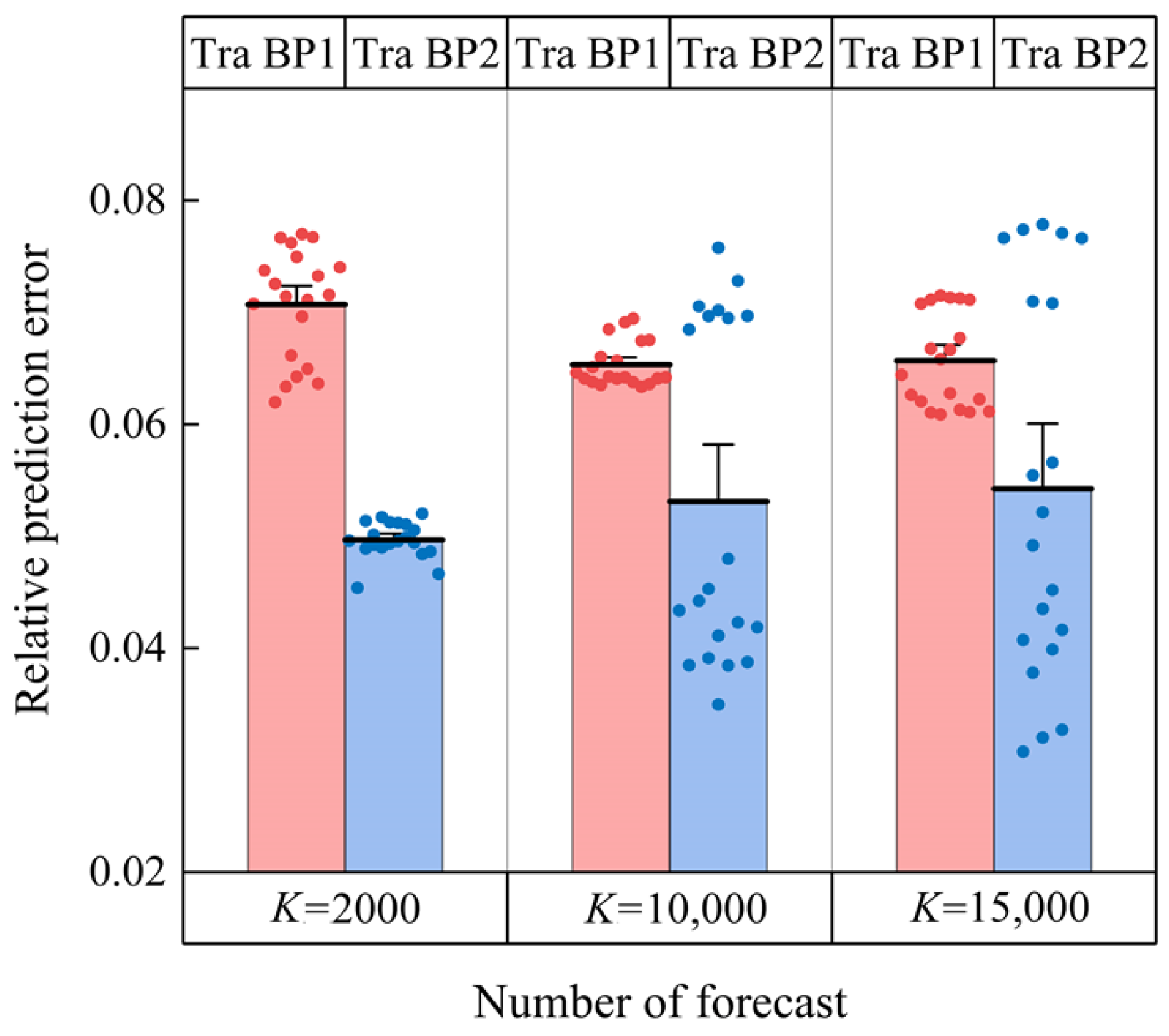

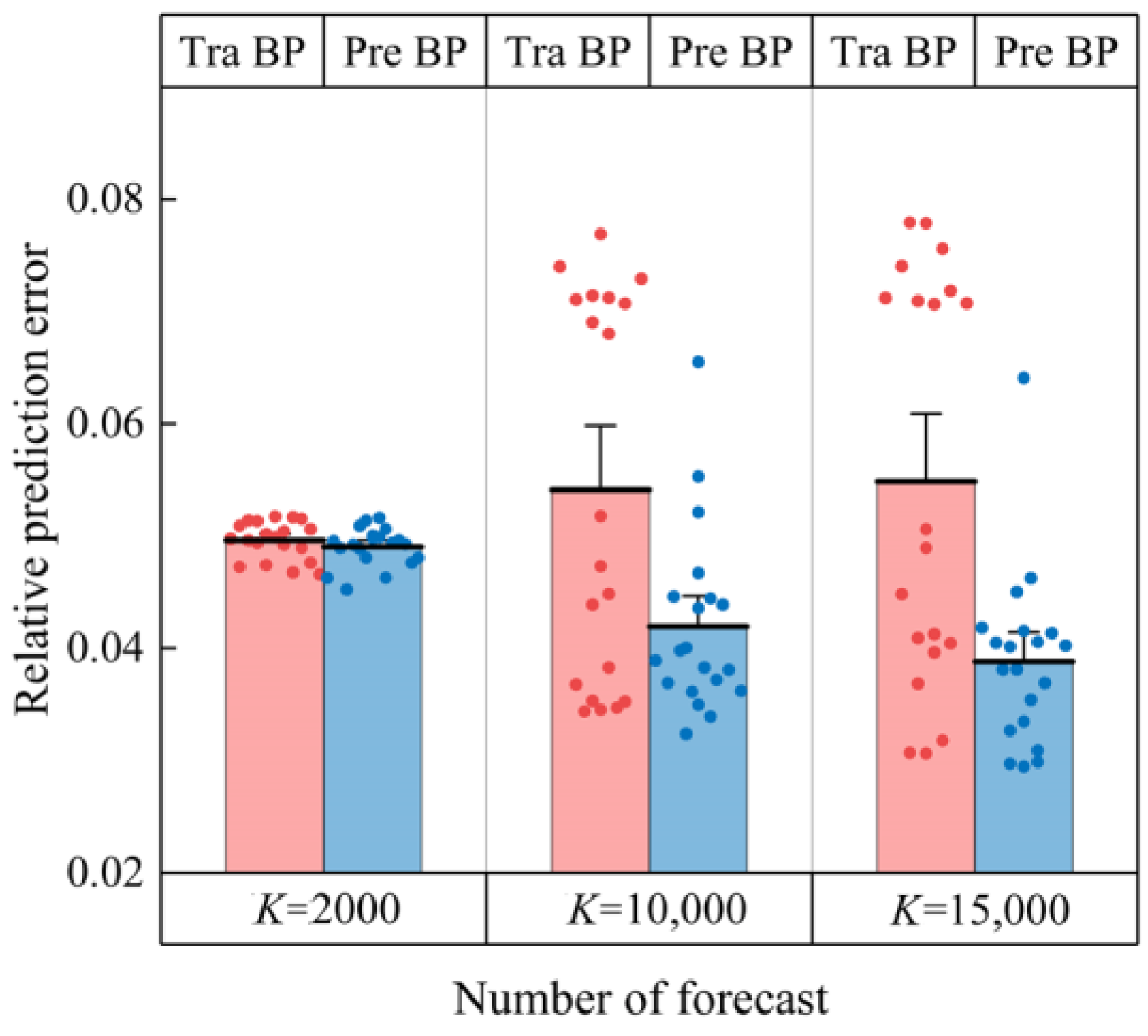

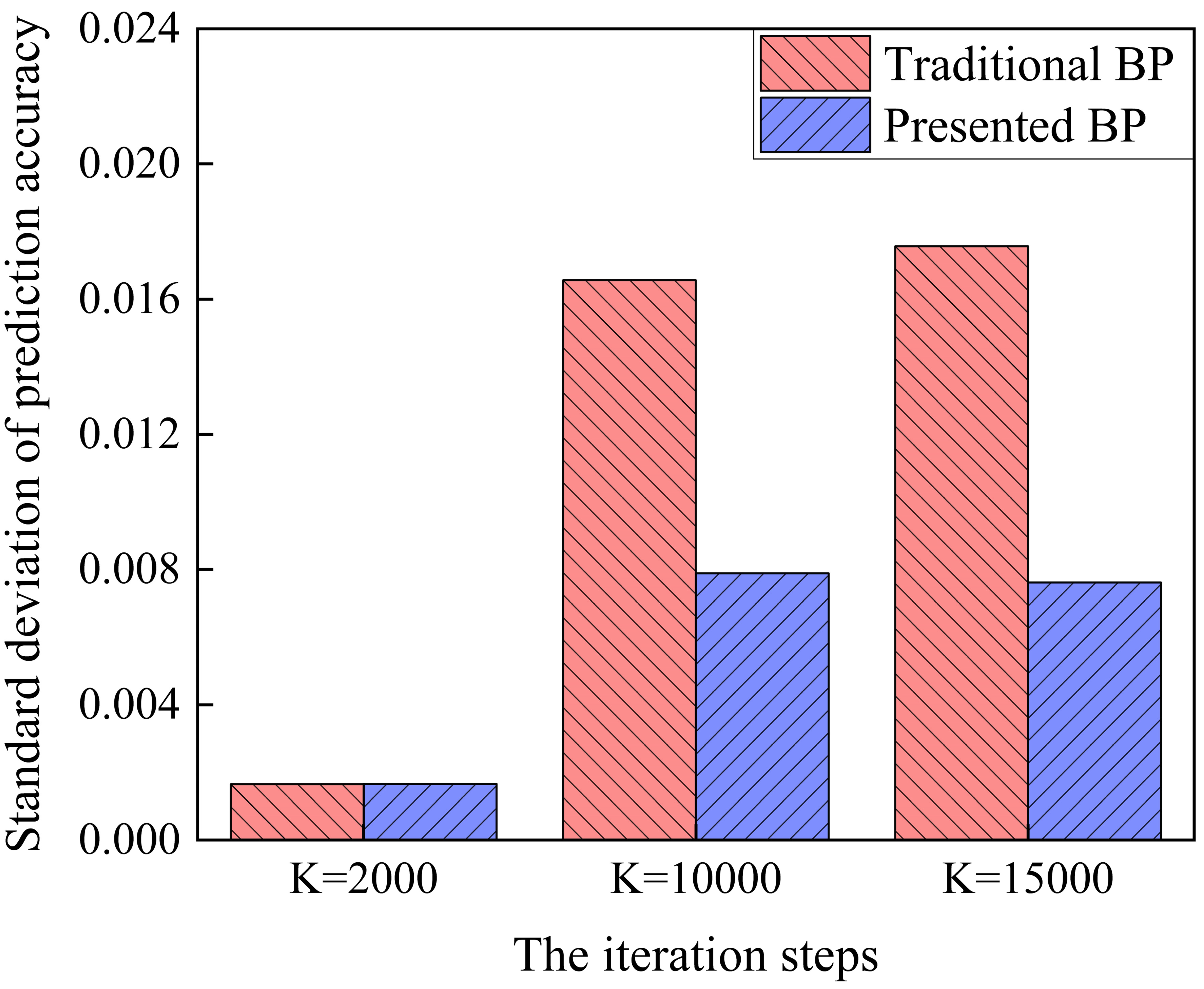

4.2.4. Comparison of Predictive Stability

5. Conclusions

- (1) The features of the force signal selected in this paper contain enough grinding wheel state information, which strengthens the correlation between the input parameters and the ground surface roughness Ra and improves the prediction performance of the model.

- (2) The “identify factors” effectively judge whether the BP network has fallen into a local optimal solution, which reduces the influence of human factors. The “memory factors” update and store the best weights in real time during network training.

- (3) Improving the iterative termination conditions of the prediction model and adjusting the weight update rules are effective measures for solving the local optimal solution problem, reducing the influence of human factors, and maximizing the prediction performance of the model itself. Dynamic adjustment of the learning rate improves the search accuracy for the target weights while preserving rapid convergence.
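The ideas named in the conclusions (a check for being stuck in a local optimum, a memory of the best weights, and a dynamic learning rate) can be illustrated with a minimal sketch. This is not the authors' algorithm: the function name `train_with_memory`, the stall counter standing in for the “identify factors”, the stored `best_w` standing in for the “memory factors”, the grow/shrink factors 1.05 and 0.5, the `patience` threshold, and the random restart are all hypothetical choices made only for illustration, and a one-dimensional double-well loss replaces the BP network.

```python
import random


def double_well(w):
    """Toy loss with one local and one global minimum (illustrative only)."""
    x = w[0]
    loss = x * x * x * x - 3.0 * x * x + x
    grad = [4.0 * x * x * x - 6.0 * x + 1.0]
    return loss, grad


def train_with_memory(loss_and_grad, w0, lr0=0.1, max_iter=2000,
                      patience=50, tol=1e-9, seed=0):
    """Gradient descent with three hypothetical add-ons:
    a memory of the best weights, a stall counter, and a dynamic learning rate."""
    rng = random.Random(seed)
    w = list(w0)
    lr = lr0
    best_w, best_loss = list(w), float("inf")
    prev_loss = float("inf")
    stall = 0
    for _ in range(max_iter):
        loss, grad = loss_and_grad(w)
        # Memory: store the weights whenever the loss improves on the best so far.
        if loss < best_loss - tol:
            best_loss, best_w = loss, list(w)
            stall = 0
        else:
            stall += 1
        # Dynamic learning rate: grow after an improving step, shrink otherwise.
        lr = lr * 1.05 if loss < prev_loss else lr * 0.5
        prev_loss = loss
        # Stall check: after `patience` non-improving iterations, assume the
        # search is stuck and restart from a perturbed copy of the best weights.
        if stall >= patience:
            w = [wi + rng.gauss(0.0, 0.5) for wi in best_w]
            lr, stall, prev_loss = lr0, 0, float("inf")
            continue
        w = [wi - lr * gi for wi, gi in zip(w, grad)]
    return best_w, best_loss


best_w, best_loss = train_with_memory(double_well, [2.0])
```

Because the best weights are stored separately from the current weights, a bad step or a restart can never make the returned result worse than what was already found; that property holds regardless of the particular learning-rate rule chosen here.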

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

Appendix A.1

| Data Number | Wheel Speed vs (rpm) | Workpiece Speed vw (mm/min) | Depth of Cut ap (μm) | Normal Force Fn (N) | Tangential Force Ft (N) | Experimental Value Ra (μm) |
|---|---|---|---|---|---|---|
| 1 | 500 | 100 | 50 | 11.9 | 3 | 6.1736 |
| 2 | 500 | 100 | 50 | 51 | 7.4 | 11.1541 |
| 3 | 500 | 100 | 50 | 103 | 5 | 21.9520 |
| 4 | 500 | 200 | 100 | 34.8 | 10.3 | 10.2449 |
| 5 | 500 | 200 | 100 | 100 | 9.2 | 18.3258 |
| 6 | 500 | 200 | 100 | 151 | 17 | 26.6484 |
| 7 | 500 | 500 | 150 | 72.7 | 29.6 | 15.2052 |
| 8 | 500 | 500 | 150 | 167 | 42.6 | 25.1561 |
| 9 | 500 | 500 | 150 | 321 | 50 | 48.0556 |
| 10 | 2000 | 100 | 100 | 4.3 | 1.5 | 6.1047 |
| 11 | 2000 | 100 | 100 | 78 | 9 | 17.0280 |
| 12 | 2000 | 100 | 100 | 103 | 13 | 20.9814 |
| 13 | 2000 | 200 | 150 | 18 | 5.1 | 8.1575 |
| 14 | 2000 | 200 | 150 | 72 | 14.4 | 13.9500 |
| 15 | 2000 | 200 | 150 | 136 | 27.6 | 26.1880 |
| 16 | 2000 | 500 | 50 | 14.3 | 3.7 | 7.7320 |
| 17 | 2000 | 500 | 50 | 75 | 7.2 | 14.0218 |
| 18 | 2000 | 500 | 50 | 105 | 17.5 | 17.9756 |
| 19 | 5000 | 100 | 150 | 24 | 4.8 | 10.8010 |
| 20 | 5000 | 100 | 150 | 42.3 | 13.3 | 11.5534 |
| 21 | 5000 | 200 | 50 | 4.13 | 1 | 7.1582 |
| 22 | 5000 | 200 | 50 | 11.7 | 1.8 | 8.2657 |
| 23 | 5000 | 200 | 50 | 4.3 | 1 | 9.1648 |
| 24 | 5000 | 500 | 100 | 9.5 | 3.3 | 11.6318 |
| 25 | 5000 | 500 | 100 | 42 | 8.2 | 11.6829 |
| 26 | 5000 | 500 | 100 | 93 | 26 | 19.8032 |

Appendix A.2

| Data Number | Experimental Value Ra (μm) | Traditional BP1 Predictive Value Ra (μm), K = 2000 | Traditional BP1 Predictive Value Ra (μm), K = 10,000 | Traditional BP1 Predictive Value Ra (μm), K = 15,000 | Traditional BP2 Predictive Value Ra (μm), K = 2000 | Traditional BP2 Predictive Value Ra (μm), K = 10,000 | Traditional BP2 Predictive Value Ra (μm), K = 15,000 |
|---|---|---|---|---|---|---|---|
| 1 | 6.1736 | 5.9377 | 6.0387 | 6.2356 | 6.1662 | 6.1155 | 6.1012 |
| 2 | 11.1541 | 12.0337 | 11.6825 | 11.5120 | 10.5000 | 11.1706 | 11.2820 |
| 3 | 21.9520 | 21.5702 | 21.2504 | 21.2368 | 20.5791 | 22.4294 | 22.5386 |
| 4 | 10.2449 | 10.0019 | 10.3689 | 10.4252 | 9.8667 | 10.3491 | 10.3809 |
| 5 | 18.3258 | 18.8424 | 19.2144 | 19.2610 | 18.7863 | 18.9356 | 19.0346 |
| 6 | 26.6484 | 28.3290 | 28.2966 | 28.7689 | 25.2541 | 27.1364 | 27.2721 |
| 7 | 15.2052 | 15.0605 | 15.2562 | 15.1439 | 15.4312 | 16.1145 | 16.1340 |
| 8 | 25.1561 | 25.2395 | 24.9701 | 24.9139 | 23.5573 | 24.5187 | 24.5491 |
| 9 | 48.0556 | 46.0416 | 47.0087 | 45.4198 | 45.3688 | 46.1008 | 46.2746 |
| 10 | 6.1047 | 6.4816 | 6.6989 | 6.6483 | 6.2067 | 5.8297 | 5.7838 |
| 11 | 17.0280 | 17.2812 | 16.7244 | 16.4367 | 15.6231 | 16.6271 | 16.5679 |
| 12 | 20.9814 | 24.2625 | 21.6212 | 21.7584 | 22.9222 | 22.8483 | 23.1165 |
| 13 | 8.1575 | 9.2555 | 8.8188 | 8.8718 | 8.2594 | 8.7358 | 8.7104 |
| 14 | 13.9500 | 14.5951 | 14.4810 | 14.2326 | 13.4412 | 13.9532 | 13.9405 |
| 15 | 26.1880 | 24.8701 | 23.6104 | 24.0026 | 22.1440 | 22.6590 | 22.6393 |
| 16 | 7.7320 | 7.5589 | 7.3077 | 7.1534 | 6.4606 | 6.8010 | 6.7185 |
| 17 | 14.0218 | 14.4235 | 14.1043 | 14.0486 | 13.1745 | 13.8179 | 13.8261 |
| 18 | 17.9756 | 19.3721 | 19.6324 | 19.6932 | 16.7902 | 17.5231 | 17.5951 |
| 19 | 10.8010 | 11.7955 | 12.0829 | 12.0662 | 11.1319 | 11.3751 | 11.2238 |
| 20 | 11.5534 | 14.1061 | 13.4673 | 13.4241 | 12.1707 | 12.7432 | 12.7371 |
| 21 | 7.1582 | 8.1107 | 7.2854 | 7.1587 | 6.7721 | 6.8446 | 6.6590 |
| 22 | 8.2657 | 9.1981 | 8.7790 | 8.5764 | 9.1519 | 8.8483 | 8.7494 |
| 23 | 9.1648 | 8.1302 | 7.3138 | 7.1842 | 7.8731 | 9.9086 | 10.0518 |
| 24 | 11.6318 | 11.7538 | 11.9479 | 11.8384 | 10.6782 | 12.6480 | 12.3767 |
| 25 | 11.6829 | 14.1239 | 13.1856 | 12.8342 | 11.3742 | 11.3799 | 11.3417 |
| 26 | 19.8032 | 19.3947 | 19.2437 | 19.2250 | 18.7686 | 19.7676 | 19.6758 |

Appendix B

| Data Number | Experimental Value Ra (μm) | Traditional BP Predictive Value Ra (μm), K = 2000 | Traditional BP Predictive Value Ra (μm), K = 10,000 | Traditional BP Predictive Value Ra (μm), K = 15,000 | Presented BP Predictive Value Ra (μm), K = 2000 | Presented BP Predictive Value Ra (μm), K = 10,000 | Presented BP Predictive Value Ra (μm), K = 15,000 |
|---|---|---|---|---|---|---|---|
| 1 | 6.1736 | 6.4858 | 6.0926 | 6.1053 | 6.4803 | 6.3844 | 6.3914 |
| 2 | 11.1541 | 11.0681 | 11.1851 | 11.3842 | 11.0661 | 11.0914 | 11.1930 |
| 3 | 21.9520 | 21.6907 | 22.2608 | 22.2202 | 21.8527 | 22.7907 | 22.5332 |
| 4 | 10.2449 | 10.3773 | 10.3049 | 10.3420 | 10.3619 | 10.3389 | 10.2789 |
| 5 | 18.3258 | 18.8043 | 19.4418 | 19.3012 | 18.8031 | 18.7728 | 18.6973 |
| 6 | 26.6484 | 26.4874 | 26.9640 | 27.3843 | 26.4996 | 26.9174 | 26.8206 |
| 7 | 15.2052 | 16.2578 | 16.1387 | 16.4480 | 16.2493 | 15.9223 | 15.9661 |
| 8 | 25.1561 | 24.8344 | 24.6758 | 24.8958 | 24.8125 | 24.7823 | 24.8555 |
| 9 | 48.0556 | 47.7151 | 46.1312 | 47.2702 | 47.9425 | 46.3802 | 45.8345 |
| 10 | 6.1047 | 6.5375 | 5.7150 | 5.7788 | 6.5362 | 6.2685 | 6.2314 |
| 11 | 17.0280 | 16.4552 | 16.6609 | 16.4891 | 16.4355 | 16.4205 | 16.4199 |
| 12 | 20.9814 | 22.9105 | 21.7201 | 21.6857 | 22.9364 | 21.2162 | 21.2612 |
| 13 | 8.1575 | 8.7020 | 8.7292 | 8.7220 | 8.6979 | 8.5404 | 8.6626 |
| 14 | 13.9500 | 14.1399 | 14.0282 | 13.9418 | 14.1267 | 14.0676 | 14.1740 |
| 15 | 26.1880 | 23.3289 | 22.7952 | 23.4145 | 23.3002 | 23.0938 | 23.7798 |
| 16 | 7.7320 | 6.7857 | 6.7942 | 6.8517 | 6.7897 | 6.6948 | 6.6982 |
| 17 | 14.0218 | 13.8670 | 13.8426 | 13.9006 | 13.8532 | 13.9206 | 13.9464 |
| 18 | 17.9756 | 17.6694 | 17.5528 | 17.6178 | 17.6093 | 17.3578 | 17.4885 |
| 19 | 10.8010 | 11.6912 | 11.4173 | 11.3406 | 11.6866 | 11.1868 | 11.0692 |
| 20 | 11.5534 | 12.7916 | 12.6634 | 12.7060 | 12.7486 | 12.7000 | 12.6143 |
| 21 | 7.1582 | 7.1516 | 6.8157 | 6.5673 | 7.1731 | 6.9274 | 6.9051 |
| 22 | 8.2657 | 9.6312 | 8.9185 | 8.8806 | 9.6243 | 8.6722 | 8.4149 |
| 23 | 9.1648 | 8.3259 | 10.4199 | 10.7947 | 8.4040 | 9.0801 | 9.2246 |
| 24 | 11.6318 | 11.2833 | 12.8674 | 12.5209 | 11.2640 | 11.2953 | 11.4915 |
| 25 | 11.6829 | 11.9769 | 11.4133 | 11.3775 | 11.9692 | 11.5338 | 11.5788 |
| 26 | 19.8032 | 19.7932 | 19.6678 | 20.0712 | 19.8338 | 19.9598 | 19.9507 |


| Parameter Level | Wheel Speed vs (rpm) | Workpiece Speed vw (mm/min) | Depth of Cut ap (μm) |
|---|---|---|---|
| 1 | 500 | 100 | 50 |
| 2 | 2000 | 200 | 100 |
| 3 | 5000 | 500 | 150 |
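The nine parameter combinations that appear in Appendix A.1 are consistent with a Latin-square layout of these three levels, in which the depth-of-cut level index equals the sum of the other two level indices modulo 3. The sketch below reproduces those nine combinations under that observation; whether the authors constructed the design this way is an assumption, and the function name `latin_square_design` is hypothetical.

```python
# Factor levels taken from the parameter-level table.
wheel_speeds = [500, 2000, 5000]      # rpm
workpiece_speeds = [100, 200, 500]    # mm/min
depths_of_cut = [50, 100, 150]        # micrometres


def latin_square_design(a, b, c):
    """Pair every level of `a` with every level of `b`, and pick the level
    of `c` whose index is (i + j) mod len(c) (assumed construction)."""
    runs = []
    for i, va in enumerate(a):
        for j, vb in enumerate(b):
            runs.append((va, vb, c[(i + j) % len(c)]))
    return runs


design = latin_square_design(wheel_speeds, workpiece_speeds, depths_of_cut)
for run in design:
    print(run)
```

With this layout each level of each factor is paired exactly once with each level of every other factor, so nine combinations cover the three factors instead of the 27 a full factorial design would need.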

| Analytical Method | Features | Calculated Equations |
|---|---|---|
| Time domain analysis | The mean value AVG | |
| | The root mean square value RMS | RMS |
| Frequency domain analysis | The barycenter frequency FC | FC |
| Time–frequency domain analysis | The proportion of energy in each frequency band Eij | |
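Since the equation cells of this table did not survive, the following sketch shows one common way to compute features of this kind. It is offered under explicit assumptions: the mean and RMS use their standard definitions; the barycenter frequency is taken as the power-weighted mean frequency of a one-sided spectrum; and the band energy ratio is computed from Fourier bands, which is a substitute for whatever time-frequency decomposition the paper actually uses. All function names and the 64 Hz test signal are hypothetical.

```python
import cmath
import math


def mean_value(x):
    """Arithmetic mean of the samples."""
    return sum(x) / len(x)


def rms_value(x):
    """Root mean square of the samples."""
    return math.sqrt(sum(v * v for v in x) / len(x))


def power_spectrum(x, fs):
    """One-sided power spectrum from a naive O(N^2) DFT (small N only)."""
    n = len(x)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        # Interior bins stand for a positive and a negative frequency pair.
        single = k == 0 or (n % 2 == 0 and k == n // 2)
        freqs.append(k * fs / n)
        power.append(abs(coeff) ** 2 * (1.0 if single else 2.0))
    return freqs, power


def barycenter_frequency(freqs, power):
    """Power-weighted mean frequency (assumed definition of FC)."""
    return sum(f * p for f, p in zip(freqs, power)) / sum(power)


def band_energy_ratio(freqs, power, f_lo, f_hi):
    """Share of total spectral energy inside the band [f_lo, f_hi)."""
    band = sum(p for f, p in zip(freqs, power) if f_lo <= f < f_hi)
    return band / sum(power)


# Synthetic test signal: an 8 Hz sine sampled at 64 Hz for one second.
fs = 64.0
signal = [math.sin(2.0 * math.pi * 8.0 * t / fs) for t in range(64)]

freqs, power = power_spectrum(signal, fs)
avg = mean_value(signal)
rms = rms_value(signal)
fc = barycenter_frequency(freqs, power)
e_band = band_energy_ratio(freqs, power, 6.0, 10.0)
```

For a measured force signal the naive DFT would normally be replaced by an FFT routine; it is used here only to keep the sketch free of third-party dependencies.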

| Input Parameters | Features |
|---|---|
| Basic process parameters | Wheel speed vs |
| | Workpiece speed vw |
| | Depth of cut ap |
| | Tangential force Ft |
| | Normal force Fn |
| Time–frequency domain parameters | 4th frequency band energy ratio of normal grinding force (16.5–19.5 Hz) E4 |
| | The energy ratio of the first 8 frequency bands of the normal grinding force (0–31.25 Hz) E0~8 |
| Time domain parameters | The root mean square value of normal force FRMS |
| Frequency domain parameters | The barycenter frequency of normal force FC |

| Prediction Accuracy | Traditional BP1, K = 2000 | Traditional BP1, K = 10,000 | Traditional BP1, K = 15,000 | Traditional BP2, K = 2000 | Traditional BP2, K = 10,000 | Traditional BP2, K = 15,000 |
|---|---|---|---|---|---|---|
| Best value | 0.062 | 0.062 | 0.061 | 0.045 | 0.035 | 0.031 |
| Average value | 0.071 | 0.065 | 0.066 | 0.050 | 0.053 | 0.054 |
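The definition of the accuracy values in this table is not stated in the surviving text. One plausible reading, treated here purely as an assumption, is a mean relative error between predicted and measured Ra. The sketch below computes that quantity for the first five rows of Appendix A.2 at K = 2000; the function name `mean_relative_error` is hypothetical, and because only five of the 26 rows are used, the results are not expected to match the table.

```python
def mean_relative_error(measured, predicted):
    """Average of |predicted - measured| / measured over all samples."""
    if len(measured) != len(predicted):
        raise ValueError("measured and predicted must have the same length")
    return sum(abs(p - m) / m for m, p in zip(measured, predicted)) / len(measured)


# Rows 1-5 of Appendix A.2 (Ra in micrometres, K = 2000).
measured = [6.1736, 11.1541, 21.9520, 10.2449, 18.3258]
bp1_pred = [5.9377, 12.0337, 21.5702, 10.0019, 18.8424]
bp2_pred = [6.1662, 10.5000, 20.5791, 9.8667, 18.7863]

mre_bp1 = mean_relative_error(measured, bp1_pred)
mre_bp2 = mean_relative_error(measured, bp2_pred)
print(f"BP1 mean relative error on 5 rows: {mre_bp1:.4f}")
print(f"BP2 mean relative error on 5 rows: {mre_bp2:.4f}")
```

A relative measure of this kind weights every sample equally regardless of its roughness magnitude, which matters here because the measured Ra values span roughly 6 to 48 μm.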

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Liu, X.; Pan, Y.; Yan, Y.; Wang, Y.; Zhou, P. Adaptive BP Network Prediction Method for Ground Surface Roughness with High-Dimensional Parameters. Mathematics 2022, 10, 2788. https://doi.org/10.3390/math10152788
