Enhancing the Performance of Software Authorship Attribution Using an Ensemble of Deep Autoencoders

Abstract

1. Introduction

- RQ1: How can a supervised classifier based on an ensemble of autoencoders be designed to predict the software developer most likely to have authored a given piece of source code, considering the encoded coding style of the developers?

- RQ2: Does the proposed classifier improve software authorship attribution performance compared to conventional classifiers from the machine learning literature?

- RQ3: Could such a classification model, which works in a closed-set configuration, be extended to work in an open-set configuration, so that it not only recognizes the classes of developers it was trained on but also detects an “unknown” class/developer?

2. Problem Relevance and Difficulty

3. Literature Review

3.1. Features and Algorithms Used in the SAA Task

3.2. The Google Code Jam Data Set

3.3. Related Work

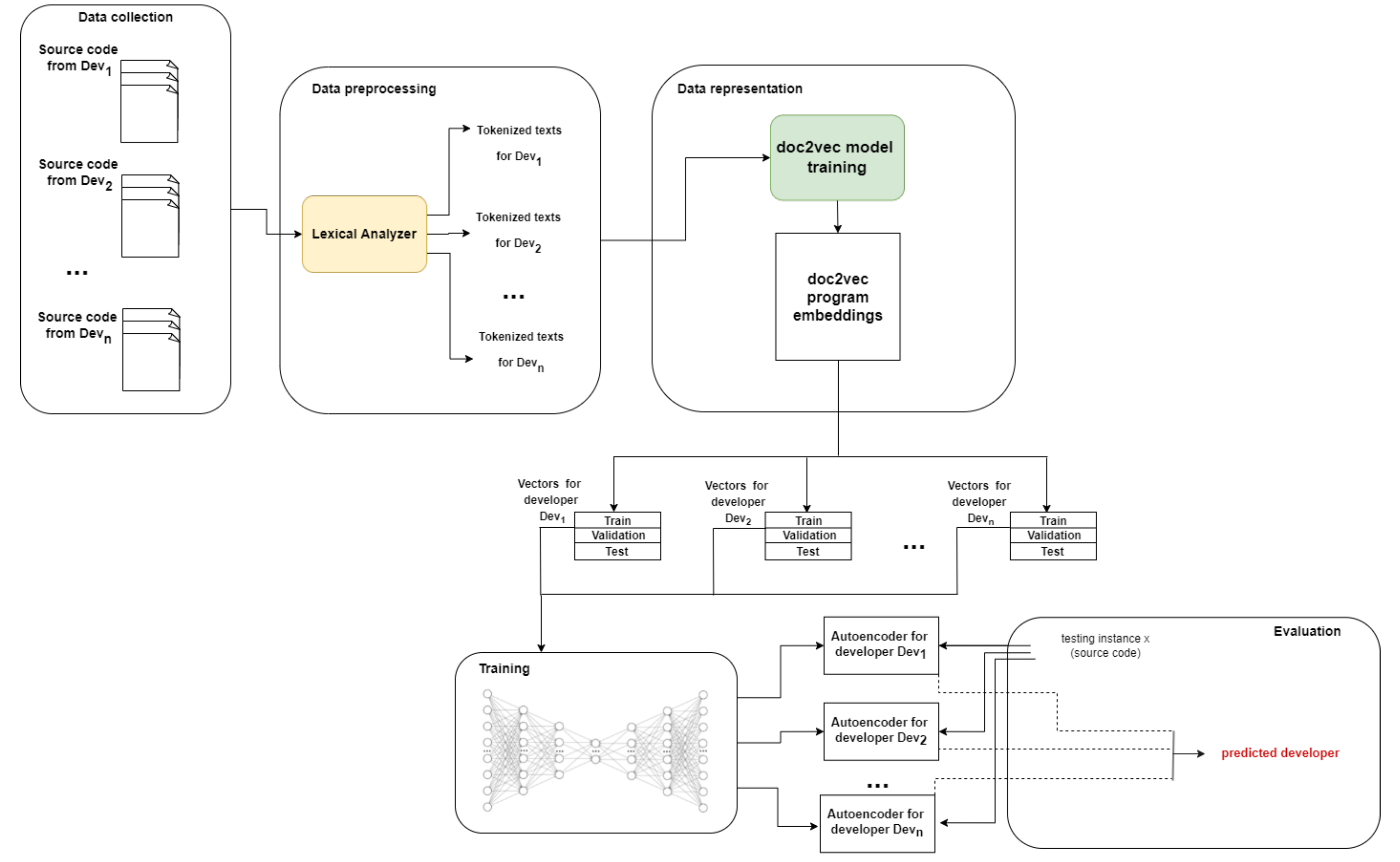

4. Methodology

4.1. Data Preprocessing and Representation

4.2. Training

4.3. Evaluation

4.3.1. Classification

4.3.2. Experimental Methodology

5. Experimental Results

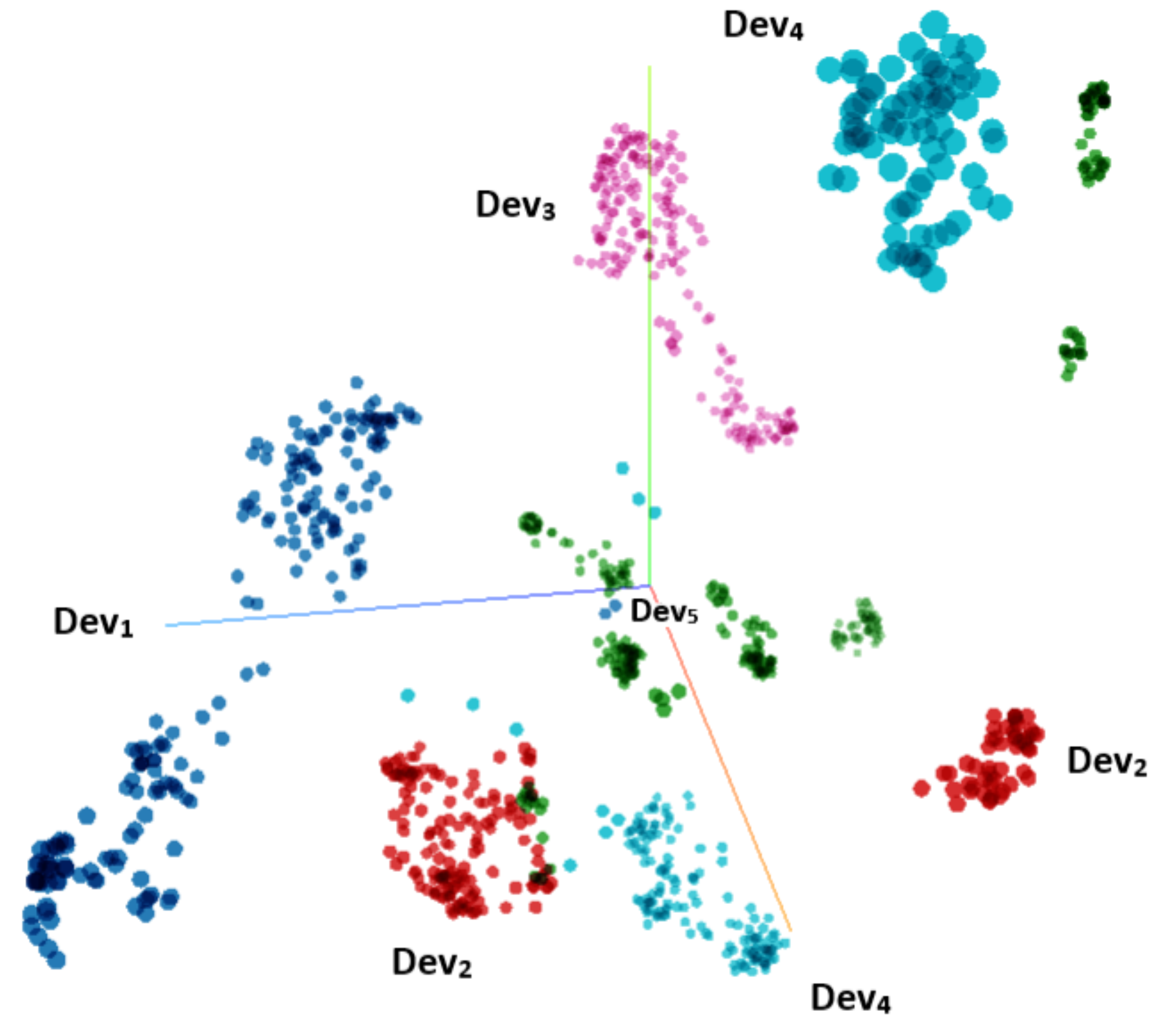

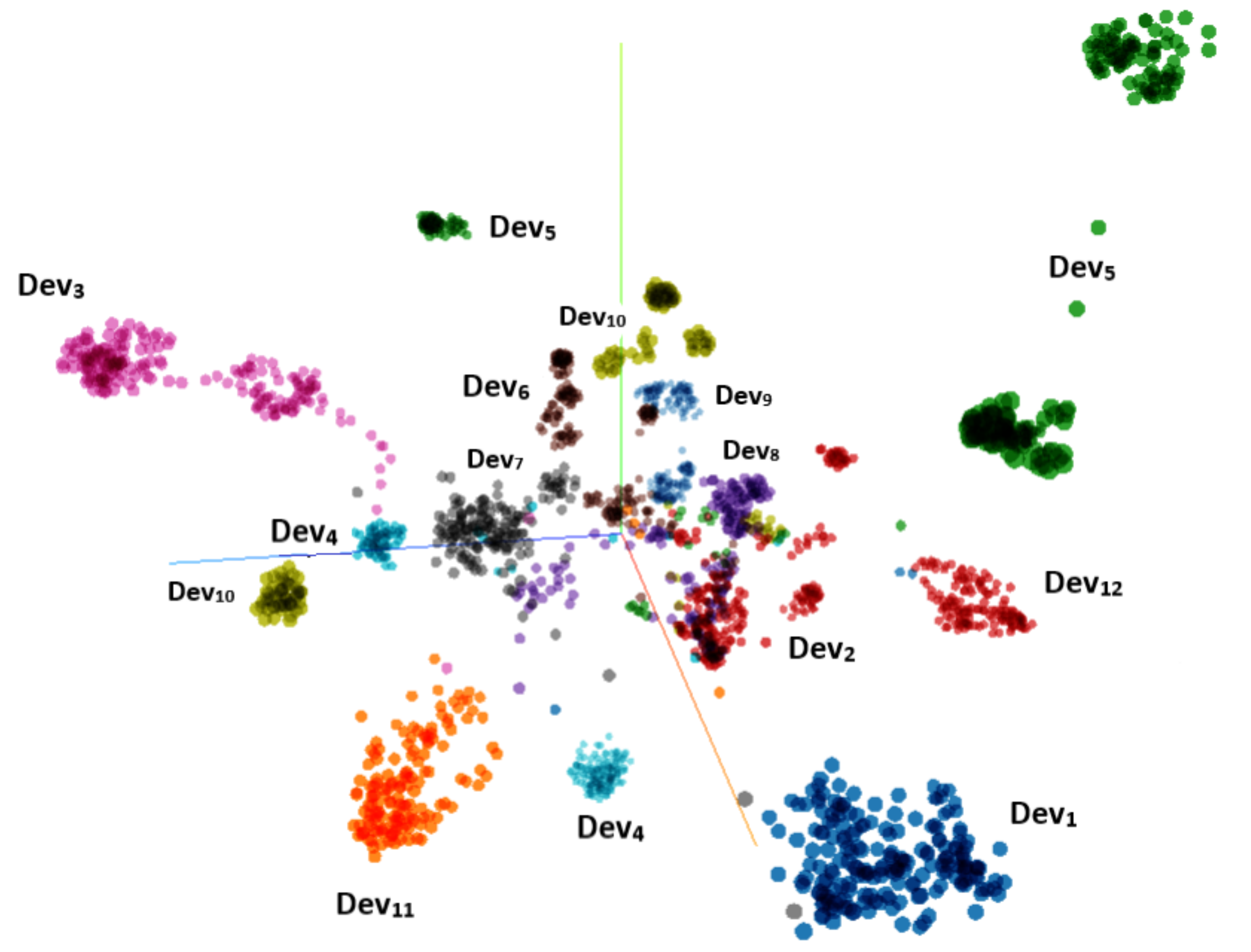

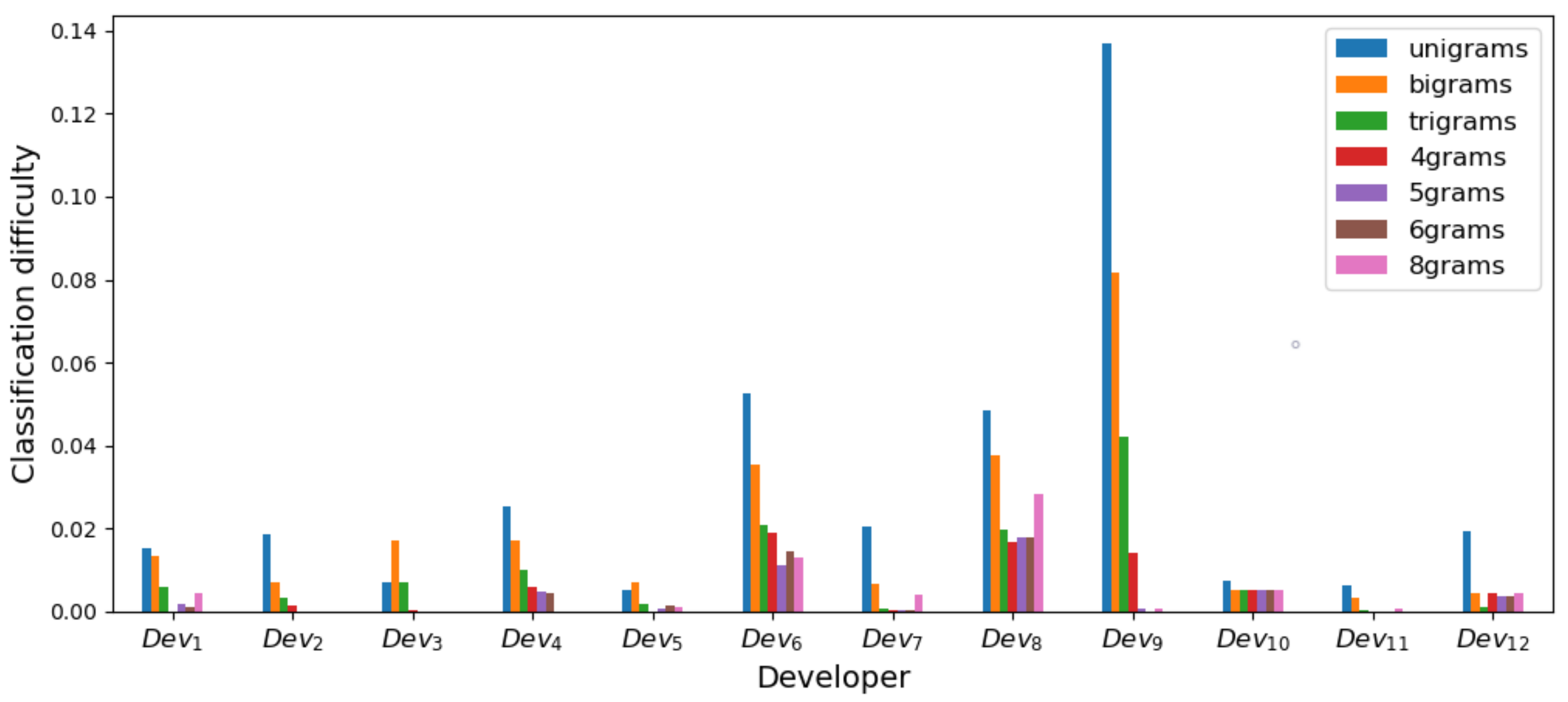

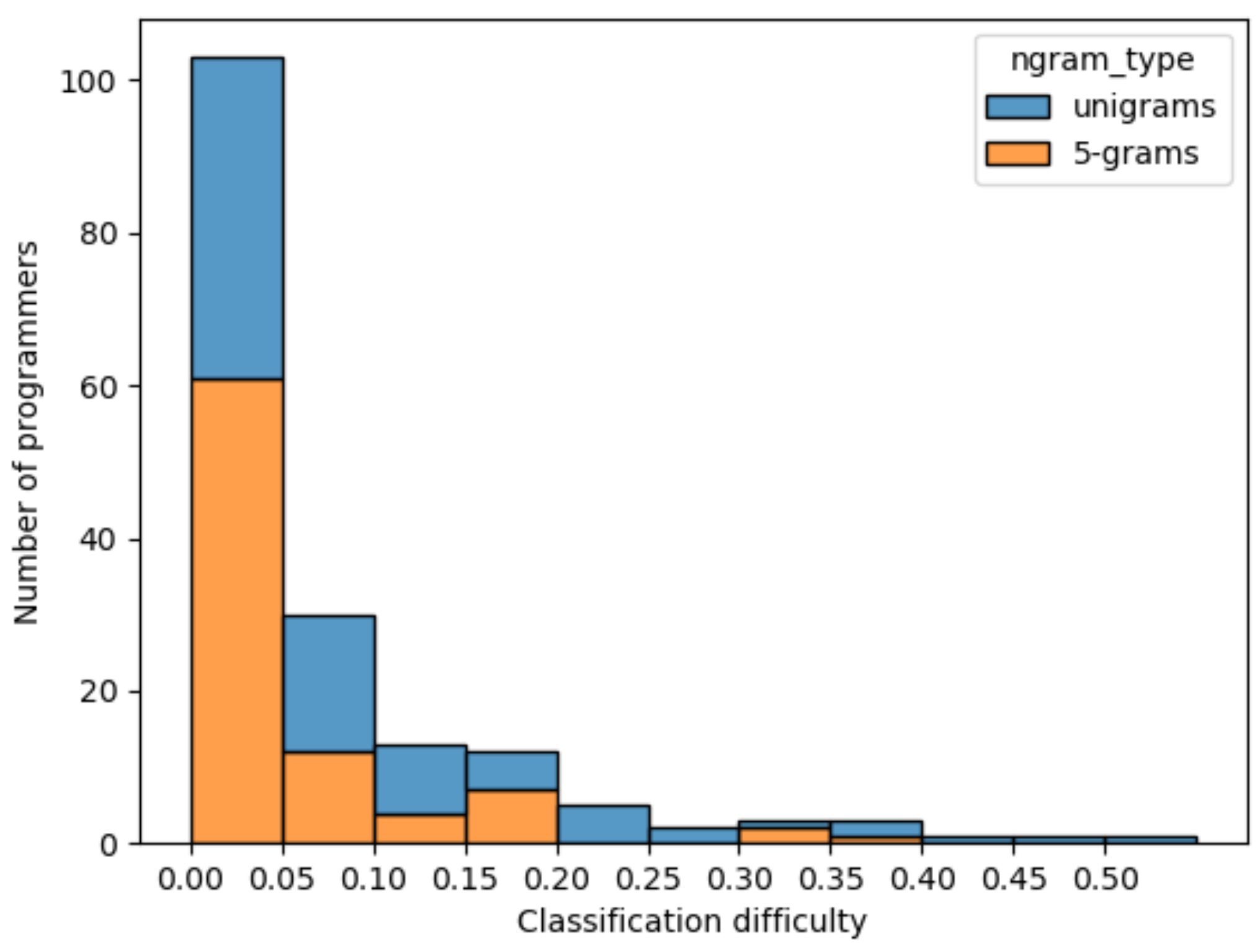

5.1. Data Description and Analysis

5.2. Results and Discussion

5.2.1. Results

5.2.2. Discussion

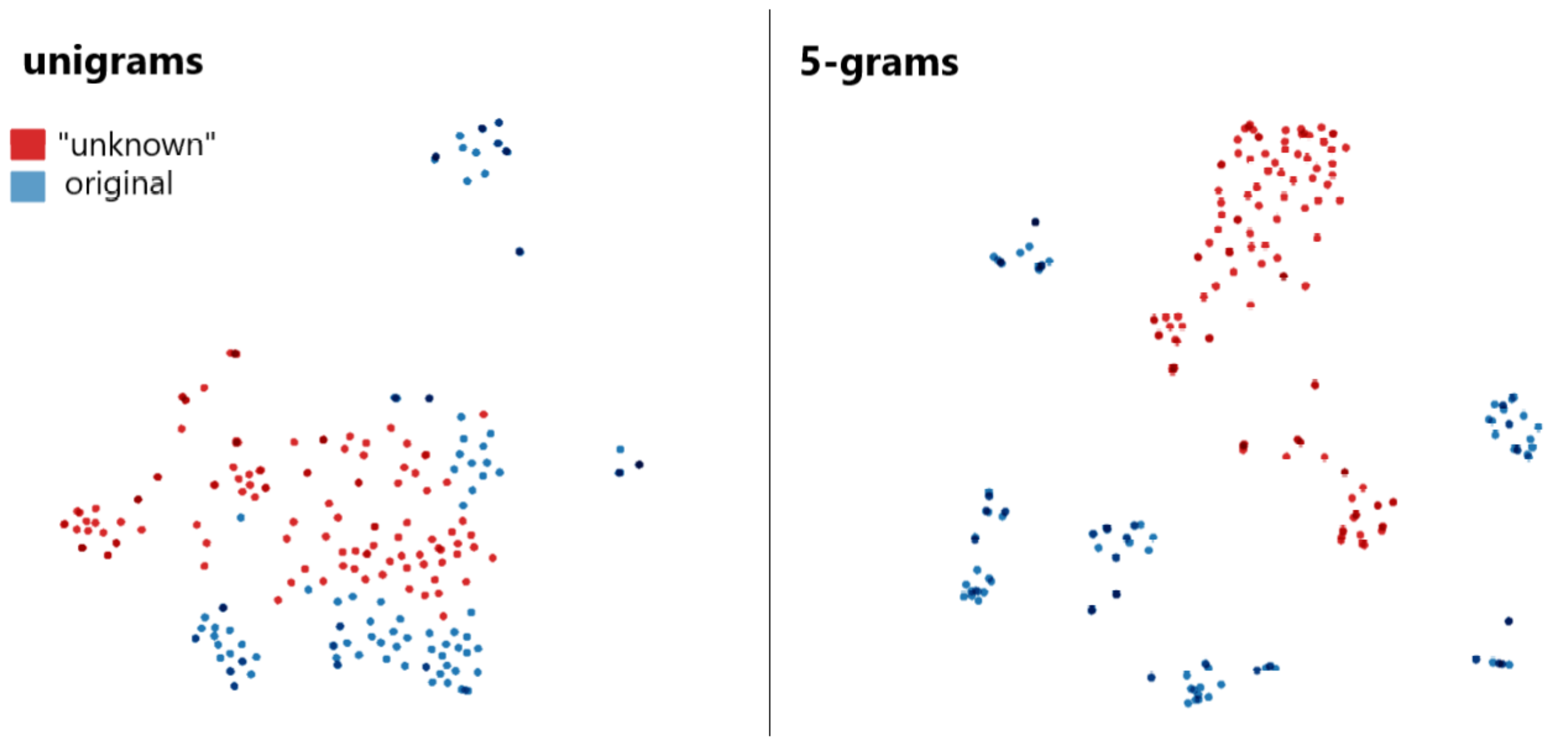

6. Extension of the AutoSoft Classifier

6.1. Classification Stage for the AutoSoft Classifier

6.2. Evaluation of AutoSoft

Algorithm 1. Classification of a testing instance, considering an additional “unknown” class.

function Classify()

Require:

- n: the number of original software developers
- A: the set of n trained autoencoders
- the testing instance (software program) to be classified

Ensure: return the predicted class c, which is either one of the original developer classes or “unknown”

- if … then
  - c ← “unknown”
- else
  - // The output class c belongs to the original developer classes.
  - // The decision-making process from Section 4.3.1 is applied for deciding the output class c.
- end if
- return c

end function
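The control flow of Algorithm 1 can be illustrated with a minimal Python sketch. The rejection test used here (the smallest reconstruction error exceeding a threshold `tau`) and the closed-set rule (choose the autoencoder with the smallest error) are assumptions made for illustration only, not the decision rule of Section 4.3.1. The names `classify_open_set`, `errors`, and `tau` are hypothetical.

```python
from typing import Sequence, Union

UNKNOWN = "unknown"


def classify_open_set(errors: Sequence[float], tau: float) -> Union[int, str]:
    """Return a developer index in [0, n) or the label "unknown".

    errors[i] is assumed to be the reconstruction error of the testing
    instance under the autoencoder trained for developer i (assumption).
    tau is a hypothetical rejection threshold (assumption).
    """
    if len(errors) == 0:
        raise ValueError("at least one autoencoder score is required")

    # Closed-set decision (assumed rule): the best-fitting autoencoder wins.
    best = min(range(len(errors)), key=lambda i: errors[i])

    # Open-set extension: reject the instance if even the best fit is poor.
    if errors[best] > tau:
        return UNKNOWN
    return best


if __name__ == "__main__":
    print(classify_open_set([0.42, 0.07, 0.31], tau=0.10))  # prints 1
    print(classify_open_set([0.42, 0.37, 0.31], tau=0.10))  # prints unknown
```

A single global threshold keeps the sketch short; a per-developer threshold would be an equally plausible design under the same assumptions.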

6.2.1. Testing

6.2.2. Comparison to

7. Threats to Validity

8. Conclusions and Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Subset | 5 Developers | 12 Developers | 87 Developers |
|---|---|---|---|
| No. of files per developer | ≥200 | ≥150 | ≥100 |
| Total no. of files | 1132 | 2357 | 11,089 |
| Total no. of tokens | 799,824 | 1,395,560 | 4,563,661 |
| Median tokens per file | 378.5 | 386 | 309 |
| Median lines per file | 61 | 65 | 52 |
| Avg. no. of tokens per file | 706.56 | 592.09 | 411.55 |
| Avg. no. of lines per file | 60.95 | 75.51 | 61.43 |

| Subset | Number of Features | Performance Measure | N-Gram Size 1 | N-Gram Size 3 | N-Gram Size 5 | N-Gram Size 6 | N-Gram Size 8 |
|---|---|---|---|---|---|---|---|
| 5 developers | 150 | Precision | 0.984 ± 0.008 | 0.993 ± 0.004 | 0.989 ± 0.007 | 0.988 ± 0.006 | 0.988 ± 0.005 |
| | | Recall | 0.982 ± 0.009 | 0.993 ± 0.004 | 0.988 ± 0.009 | 0.987 ± 0.008 | 0.988 ± 0.005 |
| | | F1 | 0.983 ± 0.008 | 0.993 ± 0.004 | 0.988 ± 0.009 | 0.987 ± 0.008 | 0.988 ± 0.005 |
| | 300 | Precision | 0.986 ± 0.007 | 0.984 ± 0.006 | 0.985 ± 0.007 | 0.991 ± 0.007 | 0.992 ± 0.005 |
| | | Recall | 0.986 ± 0.007 | 0.98 ± 0.008 | 0.985 ± 0.007 | 0.991 ± 0.007 | 0.992 ± 0.005 |
| | | F1 | 0.986 ± 0.007 | 0.98 ± 0.005 | 0.985 ± 0.007 | 0.991 ± 0.007 | 0.992 ± 0.005 |
| 12 developers | 150 | Precision | 0.968 ± 0.006 | 0.98 ± 0.005 | 0.984 ± 0.005 | 0.98 ± 0.007 | 0.973 ± 0.006 |
| | | Recall | 0.966 ± 0.007 | 0.979 ± 0.005 | 0.982 ± 0.006 | 0.978 ± 0.007 | 0.97 ± 0.007 |
| | | F1 | 0.966 ± 0.007 | 0.979 ± 0.005 | 0.982 ± 0.006 | 0.978 ± 0.007 | 0.97 ± 0.007 |
| | 300 | Precision | 0.977 ± 0.007 | 0.984 ± 0.007 | 0.98 ± 0.004 | 0.979 ± 0.005 | 0.978 ± 0.008 |
| | | Recall | 0.975 ± 0.007 | 0.981 ± 0.008 | 0.978 ± 0.005 | 0.977 ± 0.006 | 0.977 ± 0.008 |
| | | F1 | 0.975 ± 0.007 | 0.981 ± 0.008 | 0.979 ± 0.005 | 0.977 ± 0.006 | 0.977 ± 0.008 |
| 87 developers | 150 | Precision | 0.882 ± 0.004 | 0.892 ± 0.004 | 0.913 ± 0.004 | 0.911 ± 0.004 | 0.906 ± 0.006 |
| | | Recall | 0.868 ± 0.005 | 0.88 ± 0.004 | 0.901 ± 0.005 | 0.899 ± 0.004 | 0.895 ± 0.007 |
| | | F1 | 0.866 ± 0.005 | 0.88 ± 0.004 | 0.898 ± 0.005 | 0.896 ± 0.004 | 0.889 ± 0.008 |
| | 300 | Precision | 0.913 ± 0.003 | 0.918 ± 0.006 | 0.922 ± 0.004 | 0.914 ± 0.004 | 0.904 ± 0.007 |
| | | Recall | 0.902 ± 0.003 | 0.911 ± 0.005 | 0.913 ± 0.004 | 0.905 ± 0.006 | 0.894 ± 0.007 |
| | | F1 | 0.902 ± 0.003 | 0.909 ± 0.007 | 0.913 ± 0.004 | 0.904 ± 0.005 | 0.89 ± 0.005 |

| Type of Features | Number of Authors | AutoSoft | SVC | RF | GNB | kNN |
|---|---|---|---|---|---|---|
| unigrams | 5 | 0.986 | 0.993 | 0.981 | 0.963 | 0.975 |
| | 12 | 0.975 | 0.993 | 0.965 | 0.946 | 0.958 |
| | 87 | 0.902 | 0.953 | 0.735 | 0.841 | 0.854 |
| trigrams | 5 | 0.98 | 0.994 | 0.98 | 0.972 | 0.96 |
| | 12 | 0.981 | 0.992 | 0.976 | 0.947 | 0.936 |
| | 87 | 0.909 | 0.953 | 0.734 | 0.855 | 0.817 |
| 5-grams | 5 | 0.985 | 0.998 | 0.982 | 0.971 | 0.99 |
| | 12 | 0.979 | 0.99 | 0.976 | 0.935 | 0.983 |
| | 87 | 0.913 | 0.95 | 0.785 | 0.835 | 0.909 |
| AutoSoft WINS | 24 | | | | | |
| AutoSoft LOSSES | 11 | | | | | |
| AutoSoft DRAWS | 1 | | | | | |
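The WINS, LOSSES, and DRAWS rows can be re-derived from the scores in the table above. The short Python sketch below compares the AutoSoft score in each row with each of the four other classifiers (9 rows × 4 comparisons = 36). The names `SCORES` and `tally` are hypothetical, and the strict comparison of the rounded table values is an assumption about how the counts were obtained.

```python
# Scores copied from the comparison table; each tuple is
# (AutoSoft, SVC, RF, GNB, kNN) for one (feature type, number of authors) row.
SCORES = {
    ("unigrams", 5): (0.986, 0.993, 0.981, 0.963, 0.975),
    ("unigrams", 12): (0.975, 0.993, 0.965, 0.946, 0.958),
    ("unigrams", 87): (0.902, 0.953, 0.735, 0.841, 0.854),
    ("trigrams", 5): (0.98, 0.994, 0.98, 0.972, 0.96),
    ("trigrams", 12): (0.981, 0.992, 0.976, 0.947, 0.936),
    ("trigrams", 87): (0.909, 0.953, 0.734, 0.855, 0.817),
    ("5-grams", 5): (0.985, 0.998, 0.982, 0.971, 0.99),
    ("5-grams", 12): (0.979, 0.99, 0.976, 0.935, 0.983),
    ("5-grams", 87): (0.913, 0.95, 0.785, 0.835, 0.909),
}


def tally(scores):
    """Count pairwise wins, losses, and draws of AutoSoft (first entry)."""
    wins = losses = draws = 0
    for autosoft, *others in scores.values():
        for other in others:
            if autosoft > other:
                wins += 1
            elif autosoft < other:
                losses += 1
            else:
                draws += 1
    return wins, losses, draws


if __name__ == "__main__":
    print(tally(SCORES))  # prints (24, 11, 1)
```

Under this reading, the 11 losses are the nine comparisons against SVC plus the two 5-gram comparisons against kNN (5 and 12 authors), and the single draw is against RF on trigrams with 5 authors.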

| Developer | N-Gram Type | Performance Measures | | | | |
|---|---|---|---|---|---|---|
| | unigrams | 0.904 ± 0.015 | 0.926 ± 0.012 | 0.965 ± 0.013 | 0.945 ± 0.009 | 0.537 ± 0.082 |
| | 5-grams | 0.974 ± 0.006 | 0.977 ± 0.007 | 0.994 ± 0.005 | 0.99 ± 0.003 | 0.858 ± 0.046 |
| | unigrams | 0.921 ± 0.014 | 0.947 ± 0.01 | 0.965 ± 0.013 | 0.956 ± 0.008 | 0.587 ± 0.087 |
| | 5-grams | 0.988 ± 0.006 | 0.993 ± 0.004 | 0.994 ± 0.005 | 0.993 ± 0.003 | 0.947 ± 0.033 |
| | unigrams | 0.891 ± 0.011 | 0.917 ± 0.006 | 0.965 ± 0.013 | 0.94 ± 0.007 | 0.333 ± 0.055 |
| | 5-grams | 0.979 ± 0.009 | 0.983 ± 0.01 | 0.994 ± 0.005 | 0.988 ± 0.005 | 0.867 ± 0.083 |
| | unigrams | 0.89 ± 0.016 | 0.911 ± 0.01 | 0.965 ± 0.013 | 0.937 ± 0.009 | 0.4 ± 0.074 |
| | 5-grams | 0.977 ± 0.008 | 0.98 ± 0.007 | 0.994 ± 0.005 | 0.987 ± 0.005 | 0.872 ± 0.046 |
| | unigrams | 0.871 ± 0.011 | 0.896 ± 0.007 | 0.965 ± 0.013 | 0.929 ± 0.007 | 0.2 ± 0.063 |
| | 5-grams | 0.965 ± 0.009 | 0.968 ± 0.01 | 0.994 ± 0.005 | 0.981 ± 0.005 | 0.762 ± 0.079 |
| | unigrams | 0.924 ± 0.017 | 0.948 ± 0.009 | 0.965 ± 0.013 | 0.956 ± 0.01 | 0.679 ± 0.054 |
| | 5-grams | 0.992 ± 0.005 | 0.997 ± 0.003 | 0.994 ± 0.005 | 0.996 ± 0.003 | 0.984 ± 0.016 |
| | unigrams | 0.899 ± 0.014 | 0.922 ± 0.011 | 0.965 ± 0.013 | 0.943 ± 0.008 | 0.483 ± 0.078 |
| | 5-grams | 0.97 ± 0.009 | 0.972 ± 0.007 | 0.994 ± 0.005 | 0.983 ± 0.003 | 0.817 ± 0.046 |

| Developer | Performance Measure | Unigrams | | 5-Grams | |
|---|---|---|---|---|---|
| | | AutoSoft | | AutoSoft | |
| | | 0.904 | 0.783 | 0.974 | 0.763 |
| | | 0.926 | 0.91 | 0.977 | 1 |
| | | 0.965 | 0.829 | 0.994 | 0.724 |
| | | 0.945 | 0.842 | 0.985 | 0.839 |
| | | 0.537 | 0.505 | 0.858 | 1 |
| | | 0.921 | 0.815 | 0.988 | 0.756 |
| | | 0.947 | 0.956 | 0.993 | 1 |
| | | 0.965 | 0.829 | 0.994 | 0.724 |
| | | 0.956 | 0.887 | 0.993 | 0.839 |
| | | 0.587 | 0.707 | 0.947 | 1 |
| | | 0.891 | 0.808 | 0.979 | 0.756 |
| | | 0.917 | 0.948 | 0.983 | 1 |
| | | 0.965 | 0.829 | 0.994 | 0.724 |
| | | 0.94 | 0.884 | 0.988 | 0.839 |
| | | 0.333 | 0.647 | 0.867 | 1 |
| | | 0.89 | 0.786 | 0.977 | 0.761 |
| | | 0.911 | 0.916 | 0.98 | 1 |
| | | 0.965 | 0.829 | 0.994 | 0.724 |
| | | 0.937 | 0.87 | 0.987 | 0.839 |
| | | 0.4 | 0.517 | 0.872 | 1 |
| | | 0.871 | 0.797 | 0.965 | 0.758 |
| | | 0.896 | 0.933 | 0.968 | 1 |
| | | 0.965 | 0.829 | 0.994 | 0.724 |
| | | 0.929 | 0.877 | 0.981 | 0.839 |
| | | 0.2 | 0.569 | 0.762 | 1 |
| | | 0.924 | 0.824 | 0.992 | 0.763 |
| | | 0.948 | 0.961 | 0.997 | 1 |
| | | 0.965 | 0.829 | 0.994 | 0.724 |
| | | 0.956 | 0.889 | 0.996 | 0.839 |
| | | 0.679 | 0.795 | 0.984 | 1 |
| | | 0.899 | 0.842 | 0.97 | 0.761 |
| | | 0.922 | 0.986 | 0.972 | 1 |
| | | 0.965 | 0.829 | 0.994 | 0.724 |
| | | 0.943 | 0.9 | 0.983 | 0.839 |
| | | 0.483 | 0.922 | 0.817 | 1 |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Czibula, G.; Lupea, M.; Briciu, A. Enhancing the Performance of Software Authorship Attribution Using an Ensemble of Deep Autoencoders. Mathematics 2022, 10, 2572. https://doi.org/10.3390/math10152572
