Abstract

The prediction and smoothing fusion problems in multisensor systems with mixed uncertainties and correlated noises are addressed in the tessarine domain under $\mathbb{T}_k$-properness conditions. Bernoulli-distributed random tessarine processes are introduced to describe one-step randomly delayed and missing measurements. Centralized and distributed fusion methods are applied in a $\mathbb{T}_k$-proper setting, $k=1,2$, which considerably reduces the dimension of the processes involved. As a consequence, efficient centralized and distributed fusion prediction and smoothing algorithms are devised with a lower computational cost than that derived from a real formalism. The performance of these algorithms is analyzed by means of numerical simulations in which different uncertainty situations are considered: updated/delayed and missing measurements.

Keywords: hypercomplex algebra; missing measurements; multi-sensor information fusion estimation; random delayed measurements

MSC: 93E10; 60G12; 94A12

1. Introduction

Multisensor systems and data fusion techniques are receiving increasing research and practical attention due to their ability to provide more robust estimation procedures than those that use a single sensor, as well as their broad applications in fields such as robotics, image processing, autonomous navigation, and smart homes, among others [1,2,3,4,5]. In estimation problems from noisy sensor measurements, the best known and most widely applied procedure is the Kalman filter and its different extensions, which are based on a state–space system (see, for example, [5,6,7,8]).

Of great interest are those systems that incorporate the effect of possible uncertainties into the measurements caused by physical failures in the sensors and measurement noises as well as failures in data transmission, all of which result in random delayed and missing measurements.

These uncertainties can be modeled by using stochastic parameters, with Bernoulli-distributed random processes being a widespread choice. In these uncertainty scenarios, an extensive literature exists on the design of efficient recursive estimation algorithms (see, e.g., [9,10,11,12,13,14,15], and references therein).

Depending on how raw data from different sensors are processed, two fundamental multisensor information fusion approaches are used: centralized and distributed fusion methods. In the centralized fusion structure, data coming from multiple sources are directly sent to a single fusion center, where the optimal estimator can be obtained, whereas in the distributed fusion strategy, these data are independently transmitted to individual nodes where local estimators are computed and sent in a second layer to the fusion center, producing robust and reliable estimators with a lower computational cost. These two approaches have been extensively studied in the real field, and both centralized and distributed fusion estimation algorithms have been designed under different initial hypotheses. Specifically, when the signal to be estimated is modeled by a state–space model with uncertain measurements, filtering, prediction, and smoothing algorithms have been proposed in [9,10,11] from a centralized fusion perspective and in [13,14,15] by applying distributed fusion techniques.

Alternatively, the multisensor fusion estimation problem has also been analyzed by using 4D hypercomplex algebras [16,17,18,19,20,21,22,23]. These algebras are a natural extension of the complex numbers, comprising a real part and three imaginary parts, which makes them well suited to describing phenomena in the physical world. Moreover, their use in different practical problems has shown advantages over a treatment in the real field [24,25,26,27]. In this field, quaternions have been the most common 4D hypercomplex algebra in signal processing, since they have the desirable property of being a normed division algebra. Unlike quaternions, tessarines constitute a commutative algebra, which facilitates the extension of the main results obtained in the real and complex fields to the four-dimensional case, and the use of tessarines as a signal processing tool has been gaining popularity in the last few years [22,23,28].

In general, the most suitable processing for these signals is the widely linear processing (WL) based on four-dimensional augmented vectors given by the signal itself and its three principal conjugations. Nevertheless, some properness properties related to the vanishing of the complementary functions make it possible to determine the type of processing to be used, which is based on reduced-dimensional processes that lead to computational savings without losing accuracy. This computational cost reduction cannot be achieved from a real formalism [24,29,30,31].

In the tessarine domain, two types of properness have recently been introduced, namely $\mathbb{T}_1$- and $\mathbb{T}_2$-properness [24,32], and they have been satisfactorily applied in multisensor fusion estimation problems with uncertainties in the measurements [22,23]. In [22], $\mathbb{T}_k$-proper, $k=1,2$, centralized fusion algorithms of reduced dimension are proposed for the computation of the optimal (in the least-squares sense) filter, predictor, and fixed-point smoother of the state in systems with random one-step delays and correlated noises. In [23], a more general problem with random sensor delays and missing measurements is analyzed. Under $\mathbb{T}_k$-properness conditions, $k=1,2$, the authors devised computationally efficient centralized and distributed fusion filtering algorithms by considering the LS distributed weighted fusion criterion. However, the prediction and smoothing problems remain to be solved.

Therefore, our aim in this paper is to address the prediction and smoothing problems under $\mathbb{T}_k$-properness conditions, $k=1,2$, from both centralized and distributed approaches. As in [23], the state to be estimated is assumed to be observed through a state–space model with correlated noises, where measurements may be updated, one-step delayed, or contain only noise according to Bernoulli tessarine random variables. In this setting, both centralized and distributed fusion prediction and smoothing algorithms are provided. The advantage of these algorithms is that they have a lower computational load than their counterparts derived from real-valued processing. The behavior of these algorithms is numerically analyzed for different uncertainty scenarios by means of simulation examples, in which the prediction and smoothing results are also compared with those of the filter.

To this end, the remainder of the paper is structured as follows: Section 2 presents the basic concepts and properties of signal processing in the tessarine field. In Section 3, the multisensor fusion estimation problem for systems with random one-step sensor delays and missing measurements is formulated in the tessarine domain, by considering a $\mathbb{T}_k$-proper scenario, $k=1,2$. Section 4 and Section 5 provide, respectively, the $\mathbb{T}_k$-proper distributed and centralized fusion estimation algorithms for the computation of the corresponding prediction and smoothing estimators, as well as their mean square errors. Specifically, in Section 4, the least squares (LS) local estimators are first determined, and in a second layer, a weighted linear combination of these local estimators, in the LS sense, is used to generate the distributed fusion estimators.

Afterwards, Section 6 includes numerical simulations to illustrate the performance of the proposed algorithms in different settings: both $\mathbb{T}_1$-proper and $\mathbb{T}_2$-proper scenarios, different uncertainty situations (one-step delay, missing measurements, and mixed uncertainties), centralized and distributed fusion methods, and different prediction and smoothing problems. Finally, the main conclusions of the paper are drawn in Section 7. For the sake of readability, all the proofs have been moved to Appendices A, B, C, and D.

Notation: The notation used throughout this paper is fairly standard. The superscripts “*”, “T”, and “H” denote the tessarine conjugate, transpose, and Hermitian transpose, respectively. Boldface uppercase letters refer to matrices, boldface lowercase letters refer to column vectors, and lightface lowercase letters are used for scalar quantities. In particular, $\mathbf{0}_{n \times m}$ represents the $n \times m$ zero matrix, $\mathbf{I}_n$ is the identity matrix of dimension n, and $\mathbf{1}_n$ (respectively, $\mathbf{0}_n$) is the column vector of dimension n whose elements are all 1 (respectively, 0). Moreover, $\mathbb{Z}$, $\mathbb{R}$, and $\mathbb{T}$ represent the sets of integer, real, and tessarine numbers, respectively. Then, $\mathbf{A} \in \mathbb{R}^{n \times m}$ (respectively, $\mathbf{A} \in \mathbb{T}^{n \times m}$) indicates that $\mathbf{A}$ is a real (respectively, tessarine) $n \times m$ matrix, and $\mathbf{r} \in \mathbb{R}^{n}$ (respectively, $\mathbf{r} \in \mathbb{T}^{n}$) means that $\mathbf{r}$ is an n-dimensional real (respectively, tessarine) vector. Additionally, $E[\cdot]$ and $\mathrm{Cov}(\cdot)$ are the expectation and covariance operators, respectively; $\mathrm{diag}(\cdot)$ is a diagonal (or block diagonal) matrix with entries (block entries) on the main diagonal. Finally, “∘” and “⊗” symbolize the Hadamard and Kronecker products, respectively, and $\delta_{t,s}$ is the Kronecker delta function.

2. Tessarine Processing

The tessarine domain is a commutative extension of the complex domain comprising a real part and three imaginary parts [28]. In this section, the main concepts and properties present in the tessarine domain are established.

Note that, unless otherwise stated, all the random variables are assumed to have zero-mean throughout this paper.

Definition 1.

A tessarine random signal vector can be defined as a stochastic process of the form [32]

with , for , real random signal vectors, and the triad satisfying the following identities:
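
As an illustration of the algebra behind Definition 1, the following minimal sketch implements tessarine addition and multiplication. It assumes the multiplication rules commonly used for tessarines ($\eta^2 = \eta''^2 = -1$, $\eta'^2 = 1$, $\eta\eta' = \eta''$, $\eta'\eta'' = \eta$, $\eta''\eta = -\eta'$); the class name `Tessarine` and its field names are hypothetical and chosen only for this example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tessarine:
    """Tessarine a + eta*b + eta'*c + eta''*d with real components (illustrative)."""
    a: float = 0.0  # real part
    b: float = 0.0  # eta component
    c: float = 0.0  # eta' component
    d: float = 0.0  # eta'' component

    def __add__(self, o):
        return Tessarine(self.a + o.a, self.b + o.b, self.c + o.c, self.d + o.d)

    def __mul__(self, o):
        # Assumed rules: eta^2 = eta''^2 = -1, eta'^2 = 1,
        # eta*eta' = eta'', eta'*eta'' = eta, eta''*eta = -eta'.
        return Tessarine(
            self.a * o.a - self.b * o.b + self.c * o.c - self.d * o.d,
            self.a * o.b + self.b * o.a + self.c * o.d + self.d * o.c,
            self.a * o.c + self.c * o.a - self.b * o.d - self.d * o.b,
            self.a * o.d + self.d * o.a + self.b * o.c + self.c * o.b,
        )


ETA = Tessarine(b=1.0)
ETA_P = Tessarine(c=1.0)
ETA_PP = Tessarine(d=1.0)

x = Tessarine(1.0, 2.0, -3.0, 0.5)
y = Tessarine(-2.0, 0.25, 4.0, 1.0)
commutes = (x * y == y * x)  # tessarine multiplication is commutative
```

Under these rules the product is commutative, but the algebra has zero divisors: for instance, $(1 + \eta')(1 - \eta') = 0$, so tessarines, unlike quaternions, do not form a division algebra.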

Definition 2.

The pseudo-autocorrelation function of is defined as , , and the pseudo-cross-correlation function of as , .

Given a random signal , the real vector formed by its components is denoted by

Moreover, the conjugate of is defined as

and the following auxiliary tessarines are introduced:

For a complete description of the second-order statistics of , the augmented tessarine signal vector might be defined, which satisfies the following relationship with :

where , with

and where .

In this context, based on the vanishing of the different pseudo-correlation functions, refs. [29,32] introduced two interesting types of properness, named $\mathbb{T}_1$- and $\mathbb{T}_2$-properness, which are included in the following definition.

Definition 3.

A random signal is -proper (respectively, -proper) if, and only if, , with (respectively, ), vanish .

Analogously, two random signals and are cross -proper, (respectively, cross -proper) if, and only if, , with (respectively, ), vanish .

Finally, and are jointly -proper (respectively, jointly -proper) if, and only if, they are -proper (respectively, -proper) and cross -proper (respectively, cross -proper).

Note that the $\mathbb{T}_1$- and $\mathbb{T}_2$-properness properties have a direct impact on the signal processing approach. In general, the optimal linear processing in the tessarine domain is the widely linear (WL) processing, which entails operating on the augmented tessarine vector. Nevertheless, under $\mathbb{T}_k$-properness conditions, $k=1,2$, the WL processing reduces to a $\mathbb{T}_k$-proper linear processing, which implies a considerable reduction in the dimension of the processes involved. In particular, $\mathbb{T}_1$-proper linear processing considers only the tessarine random signal itself, and $\mathbb{T}_2$-proper linear processing takes into account the $2n$-dimensional augmented vector given by the signal and its conjugate [29].

Definition 4.

Given two random tessarine signal vectors , the product ⋆ between them is defined as

Property 1.

The augmented vector of is , where .

3. Problem Statement

Consider a networked system given by an n-dimensional tessarine state which is observed from R sensors, each of which provides measurements , , perturbed by additive noises. Specifically, this system is assumed to be described by the following state–space model:

where , , are deterministic matrices, and are correlated tessarine white noises with pseudo-variances and , respectively, and . Moreover, is independent of , for any two sensors , and the initial state , with , is independent of and , for , .

Remark 1.

Unlike the state–space systems considered in the conventional linear processing, which only use the information supplied by the signal itself, the state equation in (1) captures the full second-order information available in the state transmission.

The measurements available from each sensor are assumed to be affected by random network-induced delay and missing measurements, according to the following model:

For each sensor and, for , is a tessarine random vector whose elements , for , are composed of independent Bernoulli random variables, , with , with known probabilities , which indicate whether the corresponding component of the available measurement is updated (), one-step delayed (), or only contains noise ().

The following hypotheses on the Bernoulli random variables are assumed:

- For each , they must satisfy that or at every instant of time, i.e., if one of them takes the value 0, the other one is 1, or both are 0.

- , for every .

- For each sensor , and , and are independent for , and also and are independent for .

- is independent of , and , for any .
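
The hypotheses above can be sketched for a single real-valued measurement component as follows. This is only an illustrative sketch: the function names are hypothetical, and the measurement form $y = \gamma_1 z(t) + \gamma_2 z(t-1) + (1 - \gamma_1 - \gamma_2) v(t)$ is an assumption consistent with the description (updated, one-step delayed, or noise only), since the model equation itself is not reproduced here.

```python
import random


def draw_indicators(p_update, p_delay, rng):
    """Draw the Bernoulli pair (gamma1, gamma2); at most one of them equals 1."""
    if p_update < 0 or p_delay < 0 or p_update + p_delay > 1:
        raise ValueError("need p_update, p_delay >= 0 and p_update + p_delay <= 1")
    u = rng.random()
    if u < p_update:
        return 1, 0  # the measurement is updated
    if u < p_update + p_delay:
        return 0, 1  # the measurement is one-step delayed
    return 0, 0      # only noise is received


def available_measurement(z_now, z_prev, noise, gamma1, gamma2):
    """Assumed form: y = g1*z(t) + g2*z(t-1) + (1 - g1 - g2)*v(t)."""
    return gamma1 * z_now + gamma2 * z_prev + (1 - gamma1 - gamma2) * noise


rng = random.Random(0)  # fixed seed so the sketch is reproducible
draws = [draw_indicators(0.6, 0.3, rng) for _ in range(20000)]
freq_update = sum(g1 for g1, _ in draws) / len(draws)
freq_delay = sum(g2 for _, g2 in draws) / len(draws)
```

Drawing the pair from a single uniform sample enforces the constraint that both indicators are never 1 at the same time, while the empirical frequencies approach the chosen probabilities.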

In this setting, we consider the optimal (in the least-squares sense) linear estimation problem of the state on the basis of the measurements available from the R sensors: , .

To exploit the complete second-order statistical information available, the augmented statistics should be considered. With this purpose, the following WL model is defined from (1), (2), and Property 1:

with , and where

Moreover, , , , and .

By considering that and are jointly -proper, the available measurement Equation (5) can be rewritten in a reduced dimension form as follows:

with , and where

with

and

Moreover,

where

with

Remark 2.

Note that $\mathbb{T}_k$-properness reduces the dimension of the available measurements to a half (if $k=2$) or to a quarter (if $k=1$).

Analogously, in a -proper setting, the processes , , and can be replaced by , , , , and ; and, in a -proper setting, they can be replaced by , , , and .

Furthermore, , , , and .

This reduction in dimension results in computational savings in the estimation algorithms proposed, which cannot be attained from a real formalism.

In [23], conditions on the state–space model (3) that guarantee the $\mathbb{T}_k$-properness, for $k=1,2$, of the processes involved are provided.

Then, by considering -proper conditions, our aim is to obtain the LS linear estimator of the state from the set of measurements , . Recently, this problem has been solved for the case of (filtering problem), providing both -proper centralized and distributed fusion filtering algorithms with similar performance to that obtained from a vectorial real approach but with a lower computational cost [23]. In this paper, this approach is extended to tackle the prediction (case ) and smoothing (case ) problems by using both centralized and distributed methods.

4. -Proper Distributed Fusion LS Linear Estimation

In this section, the distributed fusion LS linear estimation problem is addressed under -proper conditions.

The distributed fusion method consists of two steps: First, the measurements of each sensor are used to generate local LS linear estimators. Then, similar to the distributed fusion method used in [23], a fusion criterion based on weighted matrices in the LS sense is applied to generate the distributed fusion LS linear estimator as a linear combination of the local estimators. Next, these two steps are carried out.

4.1. Local -Proper LS Linear Estimation Algorithms

Consider the multisensor system given by (3), (4) and (6). The local -proper LS linear estimator of , denoted by is obtained by extracting the first n components of , where is given by the projection of onto the set of measurements , for , under -proper conditions.

Theorems 1–3 provide the algorithms to compute the LS linear estimators, , as well as their mean square errors, , for the filtering, prediction, and smoothing estimation problems. It should be remarked that the formulas of the LS linear filtering algorithm given in Theorem 1 were devised in [23]. They are included in this section without proof since they are used to initialize the LS linear prediction and smoothing algorithms. The proofs of Theorems 2 and 3 are deferred to Appendix A and Appendix B, respectively.

Theorem 1

(Local LS linear filter). For each sensor , the optimal filter, , obtained from the system defined by Equations (3), (4) and (6), is computed through the following recursive expressions:

where can be recursively calculated as

with as the initial conditions.

The innovations, , satisfy the recursive equation:

with as the initial condition, and .

Moreover, and , where the matrices are obtained by this expression:

with

and obtained from the recursive formula:

The pseudo-covariance matrix of the innovations, , is computed as follows:

with

and

Finally, the pseudo-covariance matrices of the filtering errors, , are obtained from the following recursive formula:

with , calculated by the equation

and the initial conditions: , .

Theorem 2

(Local LS linear predictor). For each sensor , the optimal predictor, , , obtained from the system defined by Equations (3), (4) and (6), is computed as follows:

with the initial condition: the one-step predictor, , given in Theorem 1.

Moreover, the pseudo-covariance matrices of the prediction errors, , satisfy the following recursive formula:

with the initial condition: the one-step prediction error, , calculated from Theorem 1.
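
The structure of the multi-step prediction recursion can be sketched in a simplified real scalar setting. The sketch assumes a state equation $x(t+1) = f\,x(t) + u(t)$ with white noise of variance $q$, and it starts from the one-step predictor and its error variance, as the theorem does; the function name and the numerical values are hypothetical and do not come from the paper.

```python
def predict_ahead(x_one_step, p_one_step, f, q, max_tau):
    """Propagate the one-step predictor up to max_tau steps ahead.

    Assumed scalar model: x(t+1) = f*x(t) + u(t), Var[u(t)] = q.
    Returns a dict mapping tau to (prediction, error variance).
    """
    results = {1: (x_one_step, p_one_step)}
    x, p = x_one_step, p_one_step
    for tau in range(2, max_tau + 1):
        x = f * x          # future noise has zero mean, so only the estimate is propagated
        p = f * p * f + q  # the error variance grows by the state-noise variance
        results[tau] = (x, p)
    return results


results = predict_ahead(x_one_step=1.0, p_one_step=1.4, f=0.9, q=1.0, max_tau=4)
error_variances = [results[tau][1] for tau in sorted(results)]
```

In this toy setting the error variance increases with the prediction horizon and approaches the stationary variance of the signal, $q/(1-f^2)$, from below.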

Theorem 3

(Local LS linear smoother). For each sensor , the optimal smoother , , obtained from the system defined by Equations (3), (4) and (6), is computed through the following recursive formulas:

with the initial condition: the filter, , computed from Theorem 1. The innovations are recursively computed from (9), and , with given by (13), and

with the initial condition: , computed from (10), and , , and

with the initial condition: , computed from Theorem 1.

Finally, the pseudo-covariance matrices of the smoothing errors, , satisfy the following recursive formula:

with the initial condition: the local LS filtering error, , given in Theorem 1.

4.2. Distributed -Proper LS Linear Estimation Algorithms

Now, to determine the distributed LS linear estimators under -proper conditions, a linear combination of the local LS linear estimators computed in Section 4.1 is considered to obtain the distributed LS linear estimator . The weights of this linear combination are those that minimize the mean square error. Then, the distributed -proper LS linear estimator is obtained by extracting the first n-components from .

By applying the LS optimality criterion, the distributed fusion LS linear estimator, , can be expressed by the form

where , and

with .

Moreover, the associated error pseudo-covariance matrix, , satisfies the equation

with , and given in (12).

Therefore, the distributed -proper LS linear estimators can be completely determined from the local LS linear estimators of each sensor , and the computation of their pseudo-cross-covariance matrices .
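
To illustrate why the cross-covariances between local estimators are all that the fusion step needs, the following sketch fuses two scalar, real-valued local LS estimators of a static variable. It is a toy example under stated assumptions (zero-mean variable of variance `var_x`, two sensors with independent additive noises, hypothetical function names), not the tessarine algorithm of this section.

```python
def local_gain(var_x, var_noise):
    """Gain of the local LS estimator xhat = g*y for y = x + v."""
    return var_x / (var_x + var_noise)


def fuse_two(k11, k12, k22):
    """LS weights for fusing two scalar local LS estimators.

    k_ij = E[xhat_i * xhat_j]. For LS estimators, E[x * xhat_i] = k_ii,
    so the weights solve [w1, w2] K = [k11, k22] with K = [[k11, k12], [k12, k22]].
    """
    det = k11 * k22 - k12 * k12
    w1 = (k11 * k22 - k22 * k12) / det
    w2 = (k22 * k11 - k11 * k12) / det
    return w1, w2


var_x, r1, r2 = 1.0, 0.5, 1.0
g1, g2 = local_gain(var_x, r1), local_gain(var_x, r2)
k11, k22 = g1 * var_x, g2 * var_x  # E[xhat_i^2]
k12 = g1 * g2 * var_x              # E[xhat_1 * xhat_2] with independent noises
w1, w2 = fuse_two(k11, k12, k22)

local_vars = (var_x - k11, var_x - k22)
fused_var = var_x - (w1 * k11 + w2 * k22)
centralized_var = 1.0 / (1.0 / var_x + 1.0 / r1 + 1.0 / r2)
```

In this static toy case the fused estimator attains the centralized error variance, because the two local estimators span the same space as the raw measurements. In the dynamic model considered in the paper, the distributed estimator is in general suboptimal with respect to the centralized one.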

The following theorems (Theorems 4–6) provide recursive formulas for the efficient computation of such matrices in the filtering, prediction, and smoothing problems, respectively. Note that the filtering pseudo-cross-covariance matrices presented in Theorem 4 were obtained in [23], and hence the proof is omitted. They have been included here because they are used in Theorems 5 and 6. The proofs of these theorems for the prediction and smoothing problems are deferred to Appendix C and Appendix D, respectively.

Theorem 4

(Filtering pseudo-cross-covariance matrices). The pseudo-cross-covariance matrices of the local filters, , are calculated as follows:

where are the pseudo-cross-covariance matrices of the local one-step predictors, which satisfy the equation

with as the initial conditions.

Moreover, , where

with as the initial condition, and where , is obtained from (10),

with , and

where

with as the initial condition, and where

and

for , and and defined in Theorem 3.

Theorem 5

(Prediction pseudo-cross-covariance matrices). The pseudo-cross-covariance matrices of the local predictors, , for , are computed through the equation:

with the initial condition: , given in Theorem 4.

Theorem 6

(Smoothing pseudo-cross-covariance matrices). The pseudo-cross-covariance matrices of the local smoothers, , for , are obtained from the following equations:

with the initial condition: , given in Theorem 4, , and

with the initial condition: , given in Theorem 4, and where

with the initial condition: , given in Theorem 4.

4.3. Computational Complexity

In this section, the computational complexity associated with the proposed distributed -proper LS linear estimation algorithms is analyzed.

First, it should be remarked that, due to the isomorphism between the WL processing in the quaternion or tessarine domain and the real-valued processing, the three approaches are completely equivalent, and the same computational complexity is required in each of them. However, this equivalence no longer holds under properness conditions, where the proper algorithms are cheaper than their counterparts derived from real-valued processing.

Indeed, under $\mathbb{T}_k$-properness conditions, for $k=1,2$, the dimension of the observation vector is reduced by a factor of $4/k$, which leads to estimation algorithms with a lower computational load than those derived from a WL or real-valued approach (see [30] for further details). Specifically, for each iteration, this computational load is of order for the local LS linear algorithms devised from a real formalism, whereas this is of order for the , for , algorithms.

Moreover, the computational load for the distributed linear estimation algorithms obtained from a real formalism is of order , whereas this is of order , , for the distributed -proper LS linear estimation algorithms.
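
The effect of the dimension reduction can be illustrated with a rough count. The sketch below assumes that the per-iteration cost is dominated by an operation that is cubic in the dimension of the observation vector (such as a matrix inversion) and ignores the different cost of real and tessarine arithmetic; both the function names and the cubic-cost assumption are illustrative and are not taken from the paper.

```python
def measurement_dimension(n, k=None):
    """Dimension of the processed observation vector for n tessarine components.

    k=None stands for real-valued or widely linear processing (4n);
    k in {1, 2} stands for T_k-proper processing (k*n).
    """
    if k is None:
        return 4 * n
    if k not in (1, 2):
        raise ValueError("k must be 1 or 2")
    return k * n


def cubic_cost_ratio(n, k):
    """Ratio of assumed cubic costs: full processing over T_k-proper processing."""
    full = measurement_dimension(n) ** 3
    reduced = measurement_dimension(n, k) ** 3
    return full / reduced


ratio_t1 = cubic_cost_ratio(n=3, k=1)
ratio_t2 = cubic_cost_ratio(n=3, k=2)
```

Under this crude model, the ratio equals $(4/k)^3$ regardless of $n$, which gives an idea of why the reduction in dimension translates into noticeable computational savings.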

5. -Proper Centralized Fusion LS Linear Estimation

In this section, the centralized fusion estimation problem is addressed under -proper conditions. With this approach, the measurement data from each sensor are directly sent to the fusion center to be processed.

Therefore, let us define the stacking vector of the augmented real measurements as , and consider the following augmented state–space system under -proper conditions:

where , with . Moreover, , , , and , with , , and .

Additionally, , , with the matrix given by , and

with and , for , given in (7).

In this setting, the centralized fusion -proper LS linear estimator, is the optimal LS linear estimator of the state from the measurements . In a similar way to Section 4.1, this estimator is obtained by extracting the first n components of , where is given by the projection of onto the set of measurements , for , under -proper conditions.

Theorems 7–9 provide the algorithms to compute the centralized fusion -proper LS linear filtering, prediction, and smoothing estimators, , as well as their mean square errors, . It should be mentioned that the centralized fusion -proper LS linear filtering algorithm presented in Theorem 7 was devised in [23], and it will be used in both the prediction and smoothing algorithms. The proofs of these theorems follow a reasoning similar to that of Theorems 1–3, applied to the state–space system (24).

Theorem 7

(Centralized fusion -proper LS linear filter). The optimal centralized fusion -proper LS linear filter, , is obtained by extracting the first n components of , which is recursively calculated as follows:

where can be recursively computed as

with as the initial conditions.

The innovations, , are obtained as follows:

with as the initial condition, and , , with .

Moreover, , and , where and , for , with given in (8).

The pseudo-covariance matrix of the innovations, , is obtained from the expression

where

with computed in (12), and given by

Finally, the filtering error pseudo-covariance matrix, , is obtained from , recursively computed through the following equation:

with as the initial condition, and

with as the initial condition.

Theorem 8

(Centralized fusion -proper LS linear predictor). The optimal centralized fusion -proper LS linear predictor, , , is obtained by extracting the first n components of , which satisfies the expression

with the initial condition: the one-step predictor, , given in Theorem 7.

Moreover, the pseudo-covariance matrices of the prediction errors, , , are obtained from , which satisfies the following recursive formula:

with the initial condition: the pseudo-covariance matrix of the one-step prediction error, , computed from Theorem 7.

Theorem 9

(Centralized -proper LS linear smoother). The optimal centralized fusion -proper LS linear smoother, , , is obtained by extracting the first n components of , which satisfies the following expression:

with the initial condition: the filter , computed from Theorem 7. The innovations are recursively computed from (25), and , with given by (26) and

with the initial condition: given in Theorem 7, and , , and

with as the initial condition.

Finally, the pseudo-covariance matrices of the smoothing errors, , are obtained from , which satisfies the following recursive formula:

with the initial condition: the pseudo-covariance matrix of the filtering error, , given in Theorem 7.

Remark 3.

A similar analysis to the one performed in Section 4.3 on the computational complexity of the proposed algorithms can be performed here. In this case, the computational load for each iteration of the centralized LS linear estimation algorithms obtained from a real formalism is of order , whereas this is of order , , for the centralized -proper LS linear estimation algorithms.

6. Numerical Example

In this section, the behavior and effectiveness of the -proper distributed and centralized algorithms given in Section 4.2 and Section 5, respectively, are analyzed by means of two numerical examples.

In the first example, the performance of these algorithms is illustrated under different uncertainty scenarios. In the second example, a general setting intended to be adaptable to a variety of practical applications is considered, in order to show that the proposed estimators outperform their counterparts in the quaternion domain.

6.1. Example 1

With the aim of assessing the performance of the proposed theoretical algorithms, the prediction and smoothing estimates obtained through both centralized and distributed fusion algorithms are compared with the corresponding filtering ones, and also with each other, by considering different situations of uncertainty in the measurements and both $\mathbb{T}_k$-proper scenarios, $k=1,2$. For this purpose, a scalar tessarine signal satisfying the following equation:

is considered. The aim is to estimate from the measurements obtained from five sensors, modeled by the following measurement equation available at each sensor :

where the real measurement, , satisfies the equation

In the state Equation (27), , and the covariance matrices of the real state noise are given as follows:

with , in the -proper case, and , in the -proper case.

Moreover, in the measurement Equation (28) available, the parameters of the Bernoulli random variables , for , , and , are assumed to be constant in time, that is, , and characterized as follows:

- in the -proper scenario, , for all , , , and

- in the -proper scenario, , and , for , .

Furthermore, the correlation between the additive noises, and , is obtained from the following relation between them:

with , , , , , and where and are independent, and the real covariance matrices of the tessarine white Gaussian noises are given by

with , , , , .

To complete the conditions that guarantee the joint -properness between the state and measurements , the variance matrix of the real initial state is assumed to be given as follows:

with , in the -proper case, and , in the -proper one.

Under the above conditions, and considering the hypotheses of independence established in Section 3 on the Bernoulli random variables, the initial state, and the additive noises, the prediction and smoothing error variances have been computed for both centralized and distributed fusion estimation methods by considering different values of the Bernoulli parameters in the $\mathbb{T}_1$-proper scenario as well as in the $\mathbb{T}_2$-proper scenario. More specifically, the following six cases have been analyzed in each scenario:

- In the -proper scenario:

  - Case 1: , ;
  - Case 2: , ;
  - Case 3: , ;
  - Case 4: , ;
  - Case 5: , ;
  - Case 6: , .

- In the -proper scenario:

  - Case 1: , , ;
  - Case 2: , , ;
  - Case 3: , , ;
  - Case 4: , , ;
  - Case 5: , , ;
  - Case 6: , , .

Note that in each $\mathbb{T}_k$-proper scenario, for $k=1,2$, all the uncertainty situations are considered. Specifically, in Cases 1 and 2, since , for all , in the -proper scenario, (respectively, , for all , in the -proper scenario), they represent the delay situation at different levels. In other words, in Case 1, there is a greater probability that the corresponding measurement component is delayed by one time instant. In contrast, in Case 2 there is a high probability that the corresponding measurement component is updated. The situation of missing measurements is reflected in Cases 3 and 4: in Case 3 it is more probable that the corresponding measurement component contains only noise, whereas in Case 4 it is more probable that it contains signal plus noise. Finally, in Cases 5 and 6, two situations of mixed uncertainties have been considered, which allow the performance of the estimators to be compared as the probability that the corresponding measurement component is delayed or updated increases.

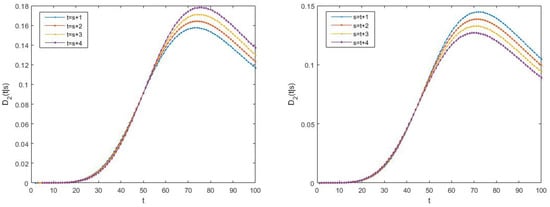

As a measure of the accuracy of the estimators, the filtering, prediction, and smoothing error variances have been calculated and displayed versus time in Figure 1, Figure 2, Figure 3 and Figure 4; in addition, the means of these error variances (whose expressions are given in Table 1) are shown in Table 2 and Table 3. These measures allow us to compare the performance of the estimators: the smaller the error variance (or the mean of the error variances) of an estimator, the better its performance.

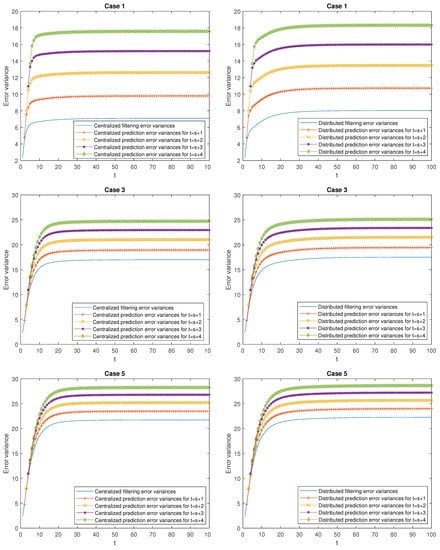

Figure 1.

Filtering and prediction error variances in the -proper scenario for Cases 1, 3, and 5 computed by using the centralized fusion algorithm (on the left) and the distributed algorithm (on the right).

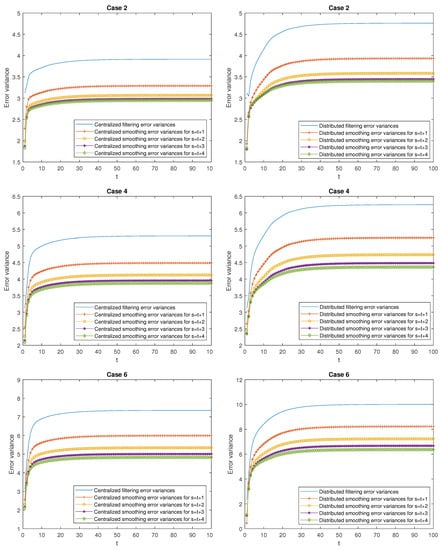

Figure 2.

Filtering and smoothing error variances in the -proper scenario for Cases 2, 4, and 6 computed by using the centralized fusion algorithm (on the left) and the distributed algorithm (on the right).

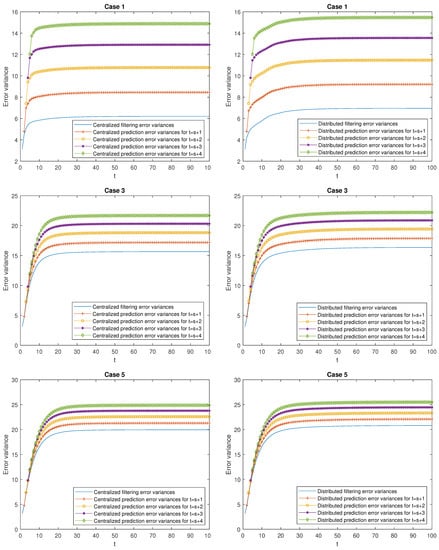

Figure 3.

Filtering and prediction error variances in the -proper scenario for Cases 1, 3, and 5 computed by using the centralized fusion algorithm (on the left) and the distributed algorithm (on the right).

Figure 4.

Filtering and smoothing error variances in the -proper scenario for Cases 2, 4, and 6 computed by using the centralized fusion algorithm (on the left) and the distributed algorithm (on the right).

Table 1.

Expressions for filtering, prediction, and smoothing mean square errors.

Table 2.

Filtering, prediction, and smoothing mean square errors in the -proper scenario.

Table 3.

Filtering, prediction, and smoothing mean square errors in the -proper scenario.

The error variances have been calculated for the prediction and smoothing estimators at several stages, as well as for the filtering estimators, in all the cases previously described. By way of illustration, only one case for each situation of delay, missing measurements, and mixed uncertainties is displayed in the figures showing prediction errors (Figure 1 and Figure 3) and in those showing smoothing errors (Figure 2 and Figure 4), although the mean square errors for every case are included in Table 2 and Table 3 for the $\mathbb{T}_1$- and $\mathbb{T}_2$-proper scenarios, respectively. More specifically, the centralized and distributed fusion prediction error variances, and , respectively, for , as well as the corresponding filtering ones, are displayed in Figure 1 for Cases 1, 3, and 5 in the -proper scenario and for the same cases in the -proper scenario in Figure 3. Analogously, Figure 2 and Figure 4 depict the centralized and distributed fusion smoothing error variances, and , respectively, for , as well as the corresponding filtering variances, for Cases 2, 4, and 6 in the -proper scenario (Figure 2), and for the same cases in the -proper scenario (Figure 4).

Figure 1 and Figure 3 allow the centralized and distributed fusion prediction error variances to be compared with each other in each case and also with the corresponding filtering variances. On the one hand, the prediction error variances are greater than the corresponding filtering ones; on the other hand, they increase with τ (the number of stages ahead in the prediction). Analogously, Figure 2 and Figure 4 confirm that the smoothing algorithms provide better estimations than the corresponding filtering ones, and that the accuracy of the smoothers improves as τ (that is, the number of measurements used to estimate the state) increases. Moreover, the centralized fusion algorithms provide better estimations than the corresponding distributed ones, since the former are optimal estimators whereas the distributed fusion methods yield suboptimal ones.
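The ordering described above (smoothing better than filtering, filtering better than prediction, with the gap widening as τ grows) is a general feature of LS linear estimation and can be reproduced in a much simpler setting. The following is only a minimal sketch for a real-valued scalar state-space model with a standard Kalman filter and a Rauch-Tung-Striebel backward recursion; it is not the tessarine fusion algorithm of this paper, and the model parameters `A`, `Q`, `H`, `R` and all function names are illustrative assumptions.

```python
def kalman_variances(a, q, h, r, p0, steps):
    """Error variances of a scalar Kalman filter.

    Returns (p_filt, p_pred) with p_pred[k] = P(k|k-1) and p_filt[k] = P(k|k).
    """
    p_filt, p_pred = [], []
    p = p0
    for _ in range(steps):
        p_pred.append(p)
        gain = p * h / (h * h * p + r)
        pf = (1.0 - gain * h) * p
        p_filt.append(pf)
        p = a * a * pf + q  # one-stage prediction variance
    return p_filt, p_pred


def prediction_variance(p_filt_t, a, q, tau):
    """P(t+tau|t): propagate the filtering variance tau stages ahead."""
    p = p_filt_t
    for _ in range(tau):
        p = a * a * p + q
    return p


def smoothing_variance(p_filt, p_pred, a, t, tau):
    """P(t|t+tau) via the Rauch-Tung-Striebel backward recursion."""
    ps = p_filt[t + tau]
    for k in range(t + tau - 1, t - 1, -1):
        c = p_filt[k] * a / p_pred[k + 1]
        ps = p_filt[k] + c * c * (ps - p_pred[k + 1])
    return ps


# Illustrative (assumed) model: x(t+1) = A x(t) + u(t), y(t) = H x(t) + v(t)
A, Q, H, R = 0.95, 0.1, 1.0, 0.5
P_FILT, P_PRED = kalman_variances(A, Q, H, R, p0=1.0, steps=60)
T = 30
filt = P_FILT[T]
pred = [prediction_variance(filt, A, Q, tau) for tau in (1, 2, 3)]
smooth = [smoothing_variance(P_FILT, P_PRED, A, T, tau) for tau in (1, 2, 3)]

print("smoothing  (tau=1,2,3):", smooth)
print("filtering             :", filt)
print("prediction (tau=1,2,3):", pred)
```

In this toy model the printed variances are ordered as smoothing < filtering < prediction, with the smoothing variance decreasing and the prediction variance increasing in τ, which mirrors the qualitative behaviour reported for Figures 1 to 4.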

To compare the cases considered in each uncertainty situation, the means of the filtering, prediction, and smoothing error variances (whose expressions are given in Table 1) are shown in Table 2 and Table 3 for the $\mathbb{T}_1$- and $\mathbb{T}_2$-proper scenarios, respectively. In addition to the considerations drawn from Figure 1, Figure 2, Figure 3 and Figure 4, the following conclusions can be derived from Table 2 for the $\mathbb{T}_1$-proper scenario:

- Better performance of the centralized estimators over the distributed ones. Indeed, in Case 1, the mean of the centralized filtering error variances is smaller than the mean of the distributed ones (see Table 2), which indicates a better performance of the centralized filters over the distributed ones. The same conclusion is reached when comparing the means of the centralized and distributed prediction error variances, and those of the smoothing error variances, at the same stage τ. Similar considerations can be made for all the cases.

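- Better performance of the smoothing estimators over the filtering ones and of both, in turn, over the prediction ones. Indeed, in Case 1, the mean of the smoothing error variances is smaller than the mean of the filtering error variances, which in turn is smaller than the mean of the prediction error variances (see Table 2). Similar conclusions are obtained in all the cases and for any τ.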

- Worse performance of the prediction estimators as the stage τ increases (and the opposite for the smoothing estimators). As an example, in Case 1, the mean of the prediction error variances grows with τ, whereas the mean of the smoothing error variances decreases with τ (see Table 2). Similar considerations can be made for all the cases.

Moreover, the following conclusions can be drawn by comparing Cases 1 and 2 in the delay situation, Cases 3 and 4 in the situation of missing measurements, and Cases 5 and 6 for mixed uncertainties. Specifically:

- In the delay situation: For Cases 1 and 2, the estimations obtained in Case 2 outperform those obtained in Case 1, because in Case 2 the probability that the measurements are updated is greater than in Case 1.

- In the situation of missing measurements: For Cases 3 and 4, the probability that the measurements contain only noise is smaller in Case 4 than in Case 3; hence, better estimations are obtained.

- In the situation of mixed uncertainties: For Cases 5 and 6, better estimations are obtained in Case 6 versus Case 5 since there is a greater probability that the measurements are updated or delayed and a lower probability that they contain only noise.
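The effect of the Bernoulli parameters on the estimation accuracy can be illustrated in a simplified real-valued setting. The sketch below assumes a scalar model with measurements y(t) = γ(t) h x(t) + v(t), where γ(t) is a Bernoulli variable with P[γ(t) = 1] = `prob` (so the measurement contains only noise with probability 1 − `prob`), and it iterates the LS linear filtering error variance recursion for this kind of uncertain observation. The model, its parameter values, and the function name are assumptions made for illustration; the tessarine algorithms and the six cases of the example are not reproduced here.

```python
def missing_measurement_filter_variance(prob, a=0.95, q=0.1, h=1.0, r=0.5,
                                        p0=1.0, steps=200):
    """LS linear filtering error variance after `steps` stages.

    Assumed model (illustrative, real-valued, scalar, zero-mean state):
        x(t+1) = a x(t) + u(t),          Var[u] = q
        y(t)   = gamma(t) h x(t) + v(t), Var[v] = r, gamma ~ Bernoulli(prob)
    """
    d = p0        # second-order moment E[x(t)^2] of the state
    p_pred = p0   # one-stage prediction error variance
    p_filt = p0
    for _ in range(steps):
        # Innovation variance: the term prob*(1-prob)*h^2*d accounts for the
        # randomness of gamma, the rest is the usual Kalman expression.
        innov_var = prob * (1.0 - prob) * h * h * d + prob * prob * h * h * p_pred + r
        gain = prob * h * p_pred / innov_var
        p_filt = p_pred - gain * gain * innov_var
        d = a * a * d + q
        p_pred = a * a * p_filt + q
    return p_filt


probabilities = (0.1, 0.5, 0.9, 1.0)
variances = [missing_measurement_filter_variance(p) for p in probabilities]
for p, v in zip(probabilities, variances):
    print(f"P[measurement contains the state] = {p:.1f} -> filtering variance {v:.4f}")
```

Under these assumptions the filtering error variance decreases as the probability of noise-only measurements decreases, which is the same qualitative effect as the one observed when comparing Cases 3 and 4.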

6.2. Example 2

In this second example, the effectiveness of our method is assessed in a realistic setting, where the superiority of the proposed estimators over their counterparts in the quaternion domain is analyzed under $\mathbb{T}_k$-properness conditions in the case of a single sensor.

Specifically, we consider the following general equation of motion [21]:

where is the input of the system, and represents the variable of interest with indicating its rate of change.

Note that the equations given in (29) are applicable in a wide variety of practical situations, including bearings-only and rotation tracking. In bearings-only tracking applications, the input typically represents force or acceleration, whereas in a rotation tracking scenario, it represents the torque or angular acceleration.

Consider the equivalent discrete-time model of (29)

with , and initial condition . Moreover, denotes the sampling interval, and the input is a tessarine white noise with real covariance matrix given by

By way of illustration, assume that the measurements available come from one sensor according to the equation (2), where is a tessarine white noise such that and are independent and their associated real covariance matrices are given by

Moreover, the independent Bernoulli random variables , for , , and , have constant parameters .

In this setting, the comparative analysis is carried out under both $\mathbb{T}_k$-proper scenarios, $k = 1, 2$, by considering the following Bernoulli parameters:

- $\mathbb{T}_1$-proper scenario: , , and , for all , and

- $\mathbb{T}_2$-proper scenario: and , and , and , and and .

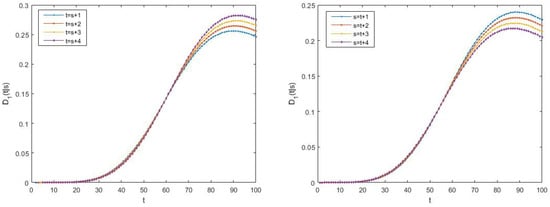

Then, for each $\mathbb{T}_k$-proper scenario, $k = 1, 2$, the $\mathbb{T}_k$-proper LS linear estimation error variances are compared with their counterparts in the quaternion domain, i.e., the quaternion strictly linear (QSL) and the quaternion semi-widely linear (QSWL) estimation error variances. As a measure for comparison, we consider the difference between both errors for the prediction and smoothing problems, given by the expressions:

- $\mathbb{T}_1$-proper scenario: .

- $\mathbb{T}_2$-proper scenario: .

Figure 5 and Figure 6 display these differences for the variable of interest in the $\mathbb{T}_1$- and $\mathbb{T}_2$-proper cases, respectively. All the graphics show the superiority of the $\mathbb{T}_k$-proper estimators over their counterparts in the quaternion domain. Moreover, in the prediction problem, this superiority increases with the number of stages ahead in the prediction, whereas in the smoothing problem, the advantage of the $\mathbb{T}_k$-proper estimators is greater when fewer measurements are used to estimate the state. Similar results are obtained for the other component of the state vector; the corresponding graphs have been omitted for brevity.

Figure 5.

Difference between QSL and $\mathbb{T}_1$-proper error variances for the prediction (on the left) and smoothing (on the right) problems in the $\mathbb{T}_1$-proper scenario.

Figure 6.

Difference between QSWL and $\mathbb{T}_2$-proper error variances for the prediction (on the left) and the smoothing (on the right) problems in the $\mathbb{T}_2$-proper scenario.

7. Discussion

The multisensor fusion prediction and smoothing estimation problems in systems with random sensor delays, missing measurements, and correlated noises have been investigated. As usual, these uncertainties are assumed to be modeled by independent Bernoulli distributed random processes.

Unlike most of the results existing in the literature, the problem has been addressed in the tessarine domain. A particularly interesting characteristic of this type of processing is the possibility of reducing the dimension of the problem when the processes involved are $\mathbb{T}_k$-proper. In practice, these properness characteristics can be statistically tested. Both distributed and centralized fusion estimation algorithms have then been proposed under $\mathbb{T}_k$-properness conditions, which offer significant computational advantages over their counterparts derived from real-valued processing.

It should be highlighted that, as an alternative to tessarines, other 4D hypercomplex algebras, such as quaternions, could have been used. The convenience of using tessarine or quaternion processing depends on the particular properness conditions satisfied by the processes involved. Additionally, in future research, more general structures, such as the generalised Segre’s quaternions, which include tessarines as a particular case, would offer the possibility of choosing the best commutative algebra according to its properness characteristics.
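The structural difference between the two algebras mentioned above can be made concrete with a small sketch. It assumes the usual tessarine multiplication rules (i² = −1, j² = 1, ij = ji = k, which imply k² = −1) and the Hamilton rules for quaternions (i² = j² = k² = −1, ij = −ji = k); these rules are not restated in this section, and the function names and tuple representation (a, b, c, d) for a + bi + cj + dk are illustrative choices.

```python
def tessarine_mul(x, y):
    """Product of two tessarines given as tuples (a, b, c, d) = a + b i + c j + d k.

    Assumed rules: i*i = -1, j*j = 1, k*k = -1, i*j = j*i = k,
    j*k = k*j = i, k*i = i*k = -j.
    """
    a1, b1, c1, d1 = x
    a2, b2, c2, d2 = y
    return (
        a1 * a2 - b1 * b2 + c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 + d1 * c2,
        a1 * c2 + c1 * a2 - b1 * d2 - d1 * b2,
        a1 * d2 + d1 * a2 + b1 * c2 + c1 * b2,
    )


def quaternion_mul(x, y):
    """Hamilton product of two quaternions given as tuples (a, b, c, d)."""
    a1, b1, c1, d1 = x
    a2, b2, c2, d2 = y
    return (
        a1 * a2 - b1 * b2 - c1 * c2 - d1 * d2,
        a1 * b2 + b1 * a2 + c1 * d2 - d1 * c2,
        a1 * c2 - b1 * d2 + c1 * a2 + d1 * b2,
        a1 * d2 + b1 * c2 - c1 * b2 + d1 * a2,
    )


I = (0, 1, 0, 0)
J = (0, 0, 1, 0)

print("tessarine  i*j =", tessarine_mul(I, J), " j*i =", tessarine_mul(J, I))
print("quaternion i*j =", quaternion_mul(I, J), " j*i =", quaternion_mul(J, I))
```

With these rules the tessarine product does not depend on the order of the factors, whereas the quaternion product does, which is the commutativity property referred to when choosing among 4D hypercomplex algebras.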

Author Contributions

All authors have contributed equally to the work. The functions mainly carried out by each specific author are detailed below. Conceptualization, R.M.F.-A.; formal analysis, J.D.J.-L., R.M.F.-A., J.N.-M. and J.C.R.-M.; methodology, R.M.F.-A. and J.D.J.-L.; investigation, J.D.J.-L., R.M.F.-A., J.N.-M. and J.C.R.-M.; visualization, R.M.F.-A., J.D.J.-L., J.N.-M. and J.C.R.-M.; writing—original draft preparation, R.M.F.-A. and J.D.J.-L.; writing—review and editing, R.M.F.-A., J.D.J.-L., J.N.-M. and J.C.R.-M.; funding acquisition, R.M.F.-A. and J.N.-M.; project administration, R.M.F.-A. and J.N.-M.; software, J.D.J.-L.; supervision, J.N.-M. and J.C.R.-M.; validation, J.N.-M. and J.C.R.-M. All authors have read and agreed to the published version of the manuscript.

Funding

This work has been supported in part by the Project PID2021-124486NB-I00 of the ‘Plan Estatal de I+D+i’, Ministerio de Educación y Ciencia, Spain, the I+D+i Project with reference number 1256911 of ‘Programa Operativo FEDER Andalucía 2014–2020’, Junta de Andalucía, and Project EI-FQM2-2021 of ‘Plan de Apoyo a la Investigación 2021–2022’ from the University of Jaén.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Conflicts of Interest

The authors declare no conflict of interest. The funders had no role in the design of the study; in the collection, analyses, or interpretation of data; in the writing of the manuscript; or in the decision to publish the results.

Appendix A. Proof of Theorem 2

Based on an innovation approach, the optimal LS linear predictor, , for each sensor , can be obtained from the following expression [32]:

where , , and the innovations , with the local LS linear estimator of based on the set of available measurements . Then, using the state equation in (3), and taking into account the fact that , for , the following expression is obtained:

Appendix B. Proof of Theorem 3

Similar to the Proof of Theorem 2 given in Appendix A, the optimal LS linear smoother, , can be expressed as follows [2]:

and hence, the following recursive expression is easily derived:

Then, Equation (16) is easily derived from (A4) by taking into account the characteristics of both $\mathbb{T}_k$-proper scenarios.

Analogously, the optimal LS linear filter, , and the one-stage predictor, , admit the following expressions:

and

respectively, where , with , and .

Now, from (6) and (9), we have

where , and . Thus, from (3), (A5), and (A6), and reordering terms, Equation (17) is derived by using the characteristics of both -proper scenarios on the resulting expression. Its initial condition is immediately deduced from its definition.

Appendix C. Proof of Theorem 5

Hence, by characterizing this expression for both $\mathbb{T}_k$-proper scenarios, Equation (20) can be deduced.

Appendix D. Proof of Theorem 6

From (16), , for , can be expressed as follows:

where , with . Then, Equation (21) is easily derived by characterizing (A7) for both -proper scenarios. The initial condition is directly obtained from its definition.

Next, from (9), the following expression for , with , is obtained:

with . Now, by using (6), the hypotheses on the model, and Equations (A3) and (A6), the following equation can be obtained:

where . Then, by substituting (A9) in (A8), reordering terms, and taking into account the characteristics of the $\mathbb{T}_k$-proper scenarios, Equation (22) is deduced. Its initial condition follows directly from its definition.

Finally, to derive Equation (23), the following expression will be used,

immediately obtained from (A5) and (A6). Then, by using the definition of , (A4) and (A10), reordering terms in the resultant expression, and applying the characterization of both -proper scenarios, Equation (23) can be deduced. From its definition, the initial condition is established.

References

1. Kurkin, A.A.; Tyugin, D.Y.; Kuzin, V.D.; Chernov, A.G.; Makarov, V.S.; Beresnev, P.O.; Filatov, V.I.; Zeziulin, D.V. Autonomous mobile robotic system for environment monitoring in a coastal zone. Procedia Comput. Sci. 2017, 103, 459–465.

2. Hsu, Y.-L.; Chou, P.-H.; Chang, H.-C.; Lin, S.-L.; Yang, S.-C.; Su, H.-Y.; Chang, C.-C.; Cheng, Y.-S.; Kuo, Y.-C. Design and Implementation of a Smart Home System Using Multisensor Data Fusion Technology. Sensors 2017, 17, 1631.

3. Gao, B.; Hu, G.; Gao, S.; Zhong, Y.; Gu, C. Multi-sensor optimal data fusion for INS/GNSS/CNS integration based on unscented Kalman filter. Int. J. Control Autom. Syst. 2018, 16, 129–140.

4. Huang, S.; Chou, P.; Jin, X.; Zhang, Y.; Jiang, Q.; Yao, S. Multi-Sensor image fusion using optimized support vector machine and multiscale weighted principal component analysis. Electronics 2020, 9, 1531.

5. Gao, B.; Hu, G.; Zhong, Y.; Zhu, X. Cubature rule-based distributed optimal fusion with identification and prediction of kinematic model error for integrated UAV navigation. Aerosp. Sci. Technol. 2021, 109, 1106447.

6. Yukun, C.; Xicai, S.; Zhigang, L. Research on Kalman-filter based multisensor data fusion. J. Syst. Eng. Electron. 2007, 18, 497–502.

7. Ding, F. Combined state and least squares parameter estimation algorithms for dynamic systems. Appl. Math. Model. 2014, 38, 403.

8. Shenglun, Y.; Mattia, Z. Robust Kalman Filtering under Model Uncertainty: The Case of Degenerate Densities. IEEE Trans. Automat. Contr. 2021.

9. Ma, J.; Sun, S. Centralized fusion estimators for multisensor systems with random sensor delays, multiple packet dropouts and uncertain observations. IEEE Sens. J. 2013, 13, 1228–1235.

10. Chen, D.; Xu, L. Optimal filtering with finite-step autocorrelated process noises, random one-step sensor delay and missing measurements. Commun. Nonlinear Sci. Numer. Simul. 2016, 32, 211–224.

11. Liu, W.-Q.; Wang, X.-M.; Deng, Z.-L. Robust centralized and weighted measurement fusion Kalman estimators for uncertain multisensor systems with linearly correlated white noises. Inf. Fusion 2017, 35, 11–25.

12. Lin, H.; Sun, S. Distributed fusion estimator for multi-sensor asynchronous sampling systems with missing measurements. IET Signal Process. 2016, 10, 724–731.

13. Tian, T.; Sun, S.; Li, N. Multi-sensor information fusion estimators for stochastic uncertain systems with correlated noises. Inf. Fusion 2016, 27, 126–137.

14. Xing, Z.; Xia, Y.; Yan, L.; Lu, K.; Gong, Q. Multisensor distributed weighted Kalman filter fusion with network delays, stochastic uncertainties, autocorrelated, and cross-correlated noises. IEEE Trans. Syst. Man Cyber. Syst. 2018, 48, 716–726.

15. Zhang, J.; Gao, S.; Li, G.; Xia, J.; Qi, X.; Gao, B. Distributed recursive filtering for multi-sensor networked systems with multi-step sensor delays, missing measurements and correlated noise. Signal Process. 2021, 181, 107868.

16. Yuan, X.; Yu, S.; Zhang, S.; Wang, G.; Liu, S. Quaternion-Based Unscented Kalman Filter for Accurate Indoor Heading Estimation Using Wearable Multi-Sensor System. Sensors 2015, 15, 10872–10890.

17. Talebi, S.; Kanna, S.; Mandic, D. A distributed quaternion Kalman filter with applications to smart grid and target tracking. IEEE Trans. Signal Inf. Process. Netw. 2016, 2, 477–488.

18. Tannous, H.; Istrate, D.; Benlarbi-Delai, A.; Sarrazin, J.; Gamet, D.; Ho Ba Tho, M.C.; Dao, T.T. A new multi-sensor fusion scheme to improve the accuracy of knee flexion kinematics for functional rehabilitation movements. J. Sens. 2016, 16, 1914.

19. Navarro-Moreno, J.; Fernández-Alcalá, R.M.; Jiménez López, J.D.; Ruiz-Molina, J.C. Widely linear estimation for multisensor quaternion systems with mixed uncertainties in the observations. J. Frankl. Inst. 2019, 356, 3115–3138.

20. Wu, J.; Zhou, Z.; Fourati, H.; Li, R.; Liu, M. Generalized linear quaternion complementary filter for attitude estimation from multi-sensor observations: An optimization approach. IEEE Trans. Autom. Sci. Eng. 2019, 16, 1330–1343.

21. Talebi, S.P.; Werner, S.; Mandic, D.P. Quaternion-valued distributed filtering and control. IEEE Trans. Autom. Control 2020, 65, 4246–4256.

22. Fernández-Alcalá, R.M.; Navarro-Moreno, J.; Ruiz-Molina, J.C. T-proper hypercomplex centralized fusion estimation for randomly multiple sensor delays systems with correlated noises. Sensors 2021, 21, 5729.

23. Jiménez-López, J.D.; Fernández-Alcalá, R.M.; Navarro-Moreno, J.; Ruiz-Molina, J.C. The distributed and centralized fusion filtering problems of tessarine signals from multi-sensor randomly delayed and missing observations under Tk-properness conditions. Mathematics 2021, 9, 2961.

24. Zanetti de Castro, F.; Eduardo Valle, M. A broad class of discrete-time hypercomplex-valued Hopfield neural networks. Neural Netw. 2020, 122, 54–67.

25. Alfsmann, D. On families of 2N-dimensional hypercomplex algebras suitable for digital signal processing. In Proceedings of the 14th European Signal Processing Conference (EUSIPCO 2006), Florence, Italy, 4–8 September 2006; pp. 1–4.

26. Alfsmann, D.; Göckler, H.G.; Sangwine, S.J.; Ell, T.A. Hypercomplex algebras in digital signal processing: Benefits and drawbacks. In Proceedings of the 15th European Signal Processing Conference, Poznan, Poland, 3–7 September 2007; pp. 1322–1326.

27. Hahn, S.L.; Snopek, K.M. Complex and Hypercomplex Analytic Signals: Theory and Applications; Artech House: Norwood, MA, USA, 2016.

28. Catoni, F.; Boccaletti, D.; Cannata, R.; Catoni, V.; Nichelatti, E.; Zampetti, P. The Mathematics of Minkowski Space-Time: With an Introduction to Commutative Hypercomplex Numbers; Birkhäuser Verlag: Basel, Switzerland, 2008.

29. Navarro-Moreno, J.; Ruiz-Molina, J.C. Wide-sense Markov signals on the tessarine domain. A study under properness conditions. Signal Process. 2021, 183, 108022.

30. Nitta, T.; Kobayashi, M.; Mandic, D.P. Hypercomplex widely linear estimation through the lens of underpinning geometry. IEEE Trans. Signal Process. 2019, 67, 3985–3994.

31. Grassucci, E.; Comminiello, D.; Uncini, A. An information-theoretic perspective on proper quaternion variational autoencoders. Entropy 2021, 23, 856.

32. Navarro-Moreno, J.; Fernández-Alcalá, R.M.; Jiménez-López, J.D.; Ruiz-Molina, J.C. Tessarine signal processing under the T-properness condition. J. Frankl. Inst. 2020, 357, 10100.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).