Abstract

The Capacitated Arc Routing Problem (CARP) is a combinatorial optimization problem that requires finding, on a given graph, the route plans for a number of vehicles that yield the least total cost. The Dynamic CARP (DCARP) is a variation of the CARP that considers dynamic changes in the problem. The Artificial Bee Colony (ABC) algorithm is an evolutionary optimization algorithm that has been shown to perform better than many other evolutionary algorithms, but it has not been used for the CARP before. For this reason, in this study, an ABC algorithm for the CARP (CARP-ABC) was developed, along with a new move operator for the CARP, the sub-route plan operator. The CARP-ABC algorithm was tested both as a CARP and a DCARP solver, and its performance was compared with that of other existing algorithms. The results showed that it excels at finding a relatively good quality solution in a short amount of time, which makes it a competitive solver. The efficiency of the sub-route plan operator was also tested, and the results showed that it is more likely to find better solutions than other operators.

Keywords:

capacitated arc routing problem; dynamic capacitated arc routing problem; artificial bee colony algorithm; evolutionary optimization; move operator

MSC:

68W50; 90B06; 90B20; 90C27; 90C35; 90C59; 90C90

1. Introduction

The Capacitated Arc Routing Problem (CARP) is an NP-hard combinatorial optimization problem that was first introduced by Golden and Wong in [1]. The CARP requires determining the least cost route plans on a graph of a road network for vehicles subject to some constraints. It has many real-life applications, for instance, in winter gritting [2,3] or in urban solid waste collection [4,5]. Since the CARP is NP-hard, mainly heuristics and meta-heuristics (e.g., [6,7,8,9,10]) are considered in the literature instead of exact methods. The existing methods are either too slow or do not give sufficiently good quality solutions, so there is still room for improvement.

The standard CARP assumes a static problem, which is not the closest to real life, where changes may happen during the execution of the solution. These changes modify the instance, and thus, may have an effect on the feasibility and optimality of the current solution [11]. For this reason, the Dynamic CARP (DCARP), which is a variation of the CARP that takes into account dynamic changes, is a better approach. To make the model of the problem under consideration closer to the real-life problem, the changes should be made based on the collected information about the vehicles and the roads. For instance, information can be provided by the drivers of the vehicles about the executed tasks, by the GPS of the vehicles about their current position, and (indirectly) by traffic patrol drones [12] about the current state of the roads.

The Artificial Bee Colony (ABC) algorithm is a swarm intelligence-based algorithm for optimization problems [13]. It has been successfully applied to multiple combinatorial optimization problems that are similar to the CARP [14,15,16,17,18,19], and it was shown that the ABC algorithm provides better performance than most of the evolutionary computation-based optimization algorithms [16]. However, the ABC algorithm has not been applied before to either the CARP or the DCARP.

In our previous work [20], we collected all the possible events and analyzed their effects on the model. Based on the results, a data-driven DCARP framework with three event handling algorithms and a rerouting algorithm (RR1) was developed. The framework uses the “virtual task” strategy [21] to be able to use static CARP solvers for DCARP instances.

The contributions of this work are as follows:

- The definition of the first ABC algorithm for the CARP (CARP-ABC).

- The definition of a new small step-size move operator for the CARP, the sub-route plan operator, which is utilized in the CARP-ABC algorithm.

- The definition of a new method for creating the initial population, the RSG. The purpose of the RSG is to quickly create random but feasible solutions for the CARP.

- Numerical experiments to test the CARP-ABC algorithm on a variety of CARP and DCARP instances. The same experiments were performed with other algorithms for CARP, then the results were compared. The results showed that for both CARP and DCARP instances, the CARP-ABC algorithm excels in finding a relatively good quality solution in a short amount of time.

- Numerical experiments to test the efficiency of the sub-route plan operator within the CARP-ABC algorithm, on a variety of CARP instances. The results showed that the sub-route plan operator is more likely to find a better solution than the other operators, especially when a greater modification is needed on the current solution (since it is a randomly generated solution and/or it is a solution of a larger CARP instance).

The rest of the paper is structured as follows. In Section 2, the related works are presented. In Section 3, the basic concepts related to the proposed CARP-ABC algorithm are introduced. In Section 4 and Section 5, the algorithm and the sub-route plan move operator are formulated in detail, respectively. In Section 6, the experiments and their results are discussed. The paper is concluded in Section 7.

2. Related Works

In this section, the related works are introduced. In Section 2.1, the algorithms that were developed for CARP are presented. In Section 2.2, the approaches for DCARP are summarized. In Section 2.3, the ABC algorithms that were developed for problems that are similar to the CARP are presented.

2.1. Algorithms for the CARP

As it was mentioned in Section 1, there are mainly approximate approaches (i.e., heuristics and metaheuristics) for the CARP. For this reason, only the methods that belong to that category are mentioned in this subsection.

2.1.1. Heuristics

Golden et al. developed the first heuristic algorithms for the CARP, namely, the path-scanning and the augment-merge [22]. Other notable heuristics for the CARP are the parallel-insert method [23], Ulusoy’s tour splitting method [24], the augment-insert method [25], the path-scanning with ellipse rule [26] and the path-scanning with efficiency rule [27].

2.1.2. Metaheuristics

The metaheuristic algorithms for the CARP can be divided into two main categories (with some exceptions): trajectory-based and population-based.

From the trajectory-based algorithms, the notable ones are the guided local search algorithm [28], the tabu search algorithms [29,30], the variable neighborhood search algorithm [31], and the greedy randomized adaptive search procedure with evolutionary path relinking [32]. It must be mentioned that in [29], two versions of the tabu search algorithm (TSA) were proposed (TSA1 and TSA2), from which the latter performed better. In [33], a global repair operator was developed and embedded into the TSA, creating the repair-based tabu search (RTS), which outperforms the TSA.

From the population-based algorithms, the notable ones are the genetic algorithm [34], the memetic algorithms [6,35], and the ant colony optimization algorithms [8,36,37]. From these, the Memetic Algorithm with Extended Neighborhood Search (MAENS) [6] is the most popular one, even though it gives only relatively good quality solutions and has a slow runtime. Several works try to improve parts of the MAENS (e.g., [9,10]), but these improvements do not substantially increase its overall performance. The Ant Colony Optimization Algorithm with Path Relinking (ACOPR) [8] gives only relatively good quality solutions, but it currently has the fastest runtime on most of the CARP instances from the benchmark test sets.

The Hybrid Metaheuristic Approach (HMA) [7] is a population-based algorithm that utilizes a randomized tabu thresholding procedure as a part of its local refinement procedure. The HMA gives the best quality solutions among all existing algorithms and has a faster runtime than MAENS, but it is still relatively slow on some real-life based CARP instances.

2.2. Approaches for the DCARP

Despite the importance of the DCARP, the number of studies about the CARP (or ARP) that consider dynamic changes in the problem during the execution of the solution is relatively small [20,21,38,39,40,41,42,43,44,45]. Moreover, there are only three studies that consider more than two types of changes [20,21,42], and only two of them (including our previous work) consider all the critical changes that can happen [20,21]. (For a more detailed comparison, see [11,20].) Critical changes or events may change the problem to such an extent that the current solution is not feasible anymore, so handling them is essential. Both [20,21] propose a framework for the DCARP that, instead of using complex specialized algorithms, allows the use of any static CARP solver for solving a DCARP instance.

To the best of our knowledge, the data-driven solution for the DCARP introduced in [20] is the only data-driven approach for DCARP or even CARP.

2.3. The ABC Algorithm and Its Applications

The original ABC algorithm was proposed by Karaboga in [13]. In [46], Karaboga and Görkemli proposed a new definition for the search behavior of the onlooker bees, which improved the convergence performance of the algorithm. For this reason, the new version of the ABC algorithm was named quick ABC (qABC).

The ABC algorithm was introduced as an algorithm for multivariable and multi-modal continuous function optimization, but later it was successfully applied to other types of optimization problems as well. Karaboga and Görkemli introduced an ABC and a qABC algorithm for combinatorial problems (CABC and qCABC, respectively) and applied them to the Traveling Salesman Problem (TSP) [14,15]. Both algorithms use the Greedy Sub Tour Mutation (GSTM) operator [47], which was developed to increase the performance of a genetic algorithm (GA) that solves the TSP. It was shown that the GSTM is significantly faster and more accurate than other existing mutation operators [47]. Furthermore, it was shown that the ABC and the qABC algorithm provide better performance than many evolutionary computation-based optimization algorithms [16]. Since the TSP is similar to the CARP, in the hope that an ABC algorithm with a mutation operator such as GSTM will perform well, we developed the CARP-ABC algorithm (Section 4) with the sub-route plan operator (Section 5).

There are also ABC algorithms for the Vehicle Routing Problem (VRP) [48] and its variations [17,18]. However, the only ABC algorithm related to the CARP addresses a variation of it, the undirected CARP with profits [19]. Therefore, to the best of our knowledge, there are currently no ABC algorithms for either the CARP or the DCARP.

3. Problem Formulations

This section introduces the basic concepts related to the proposed CARP-ABC algorithm to help understand how it works. The concepts are introduced only briefly; for a more detailed description, the reader is referred to the corresponding works.

In this section, first, the static CARP, then the (data-driven) DCARP is formulated. It is followed by the introduction of the basic ABC algorithm and the existing move operators for CARP (which are used in the proposed CARP-ABC solution). The notations used for the CARP and the DCARP are collected in Table A1 in Appendix A.

3.1. The CARP

In the existing works, some assume an undirected input graph [7], others a directed graph [8,49], and still others a mixed graph [6,34]. In this work, a directed graph is assumed, in which an undirected edge is regarded as two oppositely directed arcs.

The (directed) graph of the CARP can be described the following way: $G = (V, A)$, with a set of vertices V and a set of arcs (directed edges) A. A set of tasks $T \subseteq A$ is also given, which defines the arcs that have tasks assigned to them. If the graph of a CARP instance contains (undirected) edges, then an edge is added to A as a pair of arcs, one for each direction. For instance, if $\{v_i, v_j\}$ is an edge and $v_i, v_j \in V$, then the arcs $(v_i, v_j)$ and $(v_j, v_i)$ are added to A. Similarly, if $\{v_i, v_j\}$ is an edge with tasks assigned to it and $v_i, v_j \in V$, then the arcs $(v_i, v_j)$ and $(v_j, v_i)$ are added to T. The graph also has a special vertex ($v_0 \in V$), the depot, and a dummy task $t_0$, the significance of which is explained later.

The tasks are performed by a fleet of w homogeneous vehicles of capacity q. Every vehicle starts and ends its route at the depot ($v_0$). Each task must be performed in a single operation, and each vehicle can serve at most as much demand as its maximum capacity allows.

The graph can be mapped to a road network where the arcs are road segments. Some of the road segments have tasks. To fulfill the tasks, different amounts but the same type of demand must be served. Each arc is characterized by the following functions:

- $head(a)$: the head vertex of the arc;

- $tail(a)$: the tail vertex of the arc;

- $dc(a)$: the dead-heading or traversing cost, the cost of crossing the arc.

In addition, each task is characterized by the following functions:

- $id(t)$: the unique identifier of the task, which is a positive integer;

- $dem(t)$: (positive) demand, which indicates the load necessary to serve the task;

- $sc(t)$: service cost, which is the cost of executing the task and crossing the arc (i.e., the dead-heading cost $dc(t)$ is included in $sc(t)$).

Although an edge is regarded as two oppositely directed arcs, if a task is assigned to it, then the task should be executed only once, in either direction. Let $t \in T$ be a task of one of the arcs of an edge, then let $inv(t)$ denote the inversion of t, the other task of the edge. If $v_i$ and $v_j$ are the head and the tail vertices of t, then $v_j$ and $v_i$ are the head and the tail vertices of $inv(t)$. The dead-heading cost, demand, and service cost values are the same for t and $inv(t)$.

Let the total number of tasks that have to be executed by at least one of the vehicles be denoted by n. The value of n depends on the composition of T: if T only contains arc tasks from edges, then $n = |T|/2$; if T only contains arc tasks from arcs, then $n = |T|$.

The minimal total dead-heading cost between two vertices is provided by the $mdc$ function, which uses Dijkstra’s algorithm as the search algorithm. For instance, $mdc(v_i, v_j)$ denotes the minimal total dead-heading cost of traversing from vertex $v_i$ to vertex $v_j$, where $v_i, v_j \in V$.
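The minimal dead-heading cost computation can be illustrated with a short Python sketch. It is not the authors' implementation: the `(start, end, dc)` triple layout, the function names, and the toy graph are assumptions made only for this example. An undirected edge is expanded into two opposite arcs, as described above, and Dijkstra's algorithm is then run from one source vertex.

```python
import heapq

def edge_to_arcs(u, v, dc):
    # An undirected edge becomes two oppositely directed arcs (assumed layout).
    return [(u, v, dc), (v, u, dc)]

def minimal_deadheading_costs(arcs, source):
    """Dijkstra over directed arcs given as (start, end, dc) triples."""
    adjacency = {}
    for start, end, dc in arcs:
        adjacency.setdefault(start, []).append((end, dc))
        adjacency.setdefault(end, [])
    best = {vertex: float("inf") for vertex in adjacency}
    best[source] = 0.0
    queue = [(0.0, source)]
    while queue:
        cost, vertex = heapq.heappop(queue)
        if cost > best[vertex]:
            continue  # stale queue entry, a cheaper path was already found
        for neighbour, dc in adjacency[vertex]:
            candidate = cost + dc
            if candidate < best[neighbour]:
                best[neighbour] = candidate
                heapq.heappush(queue, (candidate, neighbour))
    return best

# Toy graph: vertex 0 plays the role of the depot.
arcs = edge_to_arcs(0, 1, 4.0) + edge_to_arcs(1, 2, 3.0) + [(0, 2, 9.0)]
costs_from_depot = minimal_deadheading_costs(arcs, source=0)
```

In this toy graph the direct arc from 0 to 2 costs 9, but the path through vertex 1 costs 7, so the latter is the value stored for vertex 2.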

A CARP instance (I) is defined as follows:

3.1.1. Solution Representation

A solution for a CARP instance is expressed as a set of route plans. The route plans are sequences of the tasks that need to be executed in the given order. The consecutive tasks are connected by the shortest paths, which is provided by the function. Therefore, a solution S for a CARP instance can be expressed the following way:

where is the number of route plans and () is the k-th route plan within the solution S. The k-th route plan can be expressed the following way:

where is the number of (not dummy) tasks and is the i-th task within the k-th route plan. It must be noted that here, k is an index, which is only used to identify a specific route plan in the solution. The order of the route plans within the solution has no effect on the quality of the solution.

Since every route starts and ends in the depot, the dummy task – which represents the vehicle being in the depot – is added also as the first and the last element of the route plan sequences. Its , , and are set to 0, and both the head and the tail vertexes are the depot vertex.

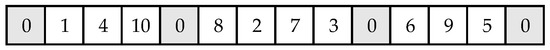

For the solution representation of the CARP, a natural encoding approach can be used, just like in most vehicle routing problems. This means that all route plans can be encoded as an ordered list of ids of the tasks, so a solution can be represented as the concatenation of these lists. However, every route plan starts and ends with the dummy task , so if the encoded route plans are concatenated, then there are consecutive dummy tasks in the resulting list. For the sake of simplicity, only one of each consecutive dummy task is kept in the encoded solution. Figure 1 shows an example of a solution representation.

Figure 1. An example of a solution representation for a CARP instance with 10 required tasks, where 0 is the id of the dummy task. In this example, there are 3 routes. The first route services the tasks with ids 1, 4 and 10. The second services the tasks with ids 8, 2, 7 and 3. The third services the tasks with ids 6, 9 and 5.
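The encoding described above can be sketched in a few lines of Python. The function names are hypothetical and the dummy task id is assumed to be 0, as in the example of Figure 1; route plans are plain lists of task ids without the dummy task.

```python
def encode_solution(route_plans, dummy_id=0):
    """Concatenate route plans, keeping a single dummy id between them."""
    encoded = [dummy_id]
    for plan in route_plans:
        encoded.extend(plan)
        encoded.append(dummy_id)
    return encoded

def decode_solution(encoded, dummy_id=0):
    """Split an encoded solution back into route plans at each dummy id."""
    route_plans, current = [], []
    for task_id in encoded[1:]:
        if task_id == dummy_id:
            route_plans.append(current)
            current = []
        else:
            current.append(task_id)
    return route_plans

# The three routes listed in the caption of Figure 1.
routes = [[1, 4, 10], [8, 2, 7, 3], [6, 9, 5]]
encoded = encode_solution(routes)
```

Under these assumptions, `encoded` is a flat list that starts and ends with 0 and contains exactly one 0 between consecutive routes.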

3.1.2. Objective and Constraints

The objective of the CARP is to minimize the total cost of the solution S subject to some constraints, which are defined in this section. The total cost of a solution S (i.e., ) is calculated with the following formula (Equations (4)–(6)):

where and are the total dead-heading and service cost of the route plan .

The solution S has to satisfy the following constraints. First, each route plan starts and ends at the depot. Second, each task is executed exactly once. Therefore, the total number of tasks executed on each route plan (excluding the dummy task ) is equal to n:

Moreover, a task cannot be executed more than once, neither in the same route plan nor in another route plan:

$$t_{k,i} \neq t_{l,j} \quad \text{for all } (k,i) \neq (l,j),$$

where $r_k$ and $r_l$ are route plans within S, $t_{k,i}$ is the i-th task in the route plan $r_k$, and $t_{l,j}$ is the j-th task in the route plan $r_l$. If a task t has an inverse task $inv(t)$, then either t or $inv(t)$ is executed. Both cannot be executed in the same solution. Third, the total demand served in each route plan does not exceed the capacity (q) of the vehicle.
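The objective and the constraints of this subsection can be checked with a small Python sketch. It is only an illustration under stated assumptions: the `Task` fields (`start`, `end`, `inv`), the nested-list cost table `mdc`, and the toy instance are hypothetical, and route plans are lists of task ids without the dummy task.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class Task:
    id: int
    start: int                 # vertex where service begins (assumed naming)
    end: int                   # vertex where service ends (assumed naming)
    dem: float                 # demand
    sc: float                  # service cost
    inv: Optional[int] = None  # id of the inverse task, if any

def route_cost(plan, tasks, mdc, depot=0):
    cost, position = 0.0, depot
    for task_id in plan:
        task = tasks[task_id]
        cost += mdc[position][task.start] + task.sc  # dead-heading + service
        position = task.end
    return cost + mdc[position][depot]               # return to the depot

def total_cost(solution, tasks, mdc, depot=0):
    return sum(route_cost(plan, tasks, mdc, depot) for plan in solution)

def canonical(task):
    # A task and its inverse count as the same required task.
    return task.id if task.inv is None else min(task.id, task.inv)

def is_feasible(solution, tasks, capacity):
    keys = [canonical(tasks[i]) for plan in solution for i in plan]
    required = {canonical(t) for t in tasks.values()}
    exactly_once = len(keys) == len(set(keys)) and set(keys) == required
    within_capacity = all(
        sum(tasks[i].dem for i in plan) <= capacity for plan in solution
    )
    return exactly_once and within_capacity

# Toy instance: tasks 1 and 2 are the two directions of one edge.
tasks = {
    1: Task(1, 0, 1, dem=3, sc=4, inv=2),
    2: Task(2, 1, 0, dem=3, sc=4, inv=1),
    3: Task(3, 1, 2, dem=2, sc=3),
}
mdc = [[0, 4, 7], [4, 0, 3], [7, 3, 0]]
cost = total_cost([[1, 3]], tasks, mdc)
```

Here the single route serves task 1, continues with task 3 without dead-heading, and returns from vertex 2 to the depot, so its cost is the two service costs plus the return trip.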

3.2. The Data-driven DCARP

There are various approaches for DCARP, but in this work, the data-driven version of DCARP is considered, which was recently formulated in [20].

In this problem, instead of one static CARP instance, there is a series of DCARP instances (i.e., a DCARP scenario [21]) that needs to be solved. A DCARP scenario is denoted by , where m is the number of DCARP instances within the scenario (i.e., the number of dynamic events that occurred and changed the previous DCARP instance is ). Each () DCARP instance contains all the information about the current problem. The previous DCARP instance , the execution of the accepted solution for and the occurred event(s) define the next DCARP instance , where . The initial instance () can be viewed as a static (data-driven) CARP instance, since initially every vehicle is in the depot (in good state) and no task has been executed yet.

For a data-driven DCARP instance, information needs to be stored about all the vehicles and route plans. For each vehicle, the current location and state have to be known. Furthermore, identifiers are needed for the vehicles and the route plans, since a vehicle may follow multiple route plans (one after another), and it is important to know for each route plan whether a vehicle has already executed it, its execution is still in progress, or a vehicle still needs to be assigned to it to start its execution.

Instead of the number of vehicles (w), a set of identifiers of all the vehicles is needed to be defined, which is denoted by H. The set of the identifiers of the (currently) free vehicles is denoted by (), which is initially equal to H. The identifier of a vehicle is added to , if the vehicle finishes the execution of a route plan, and the identifier is removed, when a new route plan is assigned to the vehicle. If the execution of all the tasks is finished and all the vehicles are returned to the depot (i.e., there are no broken down vehicles outside on the roads), then , otherwise .

The set of identifiers of all the route plans is denoted by R, and the set of identifiers of the route plans that currently cannot be modified and not executed by any vehicle is denoted by (). When a new route plan is created, its identifier is added to R, and when the execution of it is finished or suspended (due to vehicle breakdown), its identifier is added to . If the execution of all the route plans is finished and there are no more tasks to execute, then , otherwise . The identifier is removed from only if the vehicle which is assigned to it was broken, but got fixed and can continue the execution of the plan. The function that defines which vehicle is assigned to a specific route plan is denoted by .

To store the current location of the vehicles in the instance, the virtual task strategy introduced in [21] is used, which replaces the executed tasks in each route plan with “virtual tasks”. A “virtual task” is an arc whose is the depot vertex and is the current location of the vehicle, vertex v (). For the sake of simplicity, it is assumed that when an unexpected event occurs, every vehicle is located exactly at a vertex. Since this task is “virtual”, it cannot be traversed, for this reason, it has an infinite traversing cost (i.e., ). Furthermore, since it is a “task”, there is a demand and a service cost assigned to it, which are calculated according to the provided data: the service cost is the total cost produced by the vehicle so far (i.e., it is the sum of traversing and serving cost of the arcs that were crossed or served by the vehicle), and the demand is the total demand served by the vehicle so far. A route plan can have at most one virtual task. Therefore, if a route plan already has a virtual task, then it is updated taking into account the arcs traversed and the tasks executed since then by the corresponding vehicle. The set of all virtual tasks is denoted by (), and the function that defines which virtual task belongs to a specific route plan is denoted by .
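The bookkeeping behind a virtual task can be sketched as follows. This is a hedged illustration rather than the framework's code: the `VirtualTask` class, its field names, and the `update_virtual_task` helper are hypothetical. The sketch only mirrors the rules stated above, namely an infinite traversing cost, a service cost that accumulates all costs produced so far, and a demand that accumulates all demand served so far.

```python
import math
from dataclasses import dataclass
from typing import Optional

@dataclass(frozen=True)
class VirtualTask:
    start: int   # depot vertex (assumed field name)
    end: int     # current location of the vehicle (assumed field name)
    dc: float    # traversing cost, always infinite for a virtual task
    dem: float   # total demand served so far
    sc: float    # total cost produced so far

def update_virtual_task(previous: Optional[VirtualTask], depot, location,
                        traversed_costs, executed):
    """Create or update the single virtual task of a route plan.

    traversed_costs: dead-heading costs of arcs crossed since the last update.
    executed: (demand, service_cost) pairs of tasks served since the last update.
    """
    base_dem = previous.dem if previous else 0.0
    base_sc = previous.sc if previous else 0.0
    return VirtualTask(
        start=depot,
        end=location,
        dc=math.inf,
        dem=base_dem + sum(d for d, _ in executed),
        sc=base_sc + sum(traversed_costs) + sum(s for _, s in executed),
    )

first = update_virtual_task(None, depot=0, location=3,
                            traversed_costs=[2.0], executed=[(3.0, 5.0)])
second = update_virtual_task(first, depot=0, location=5,
                             traversed_costs=[1.0, 1.5], executed=[(2.0, 4.0)])
```

Because a route plan has at most one virtual task, the second call replaces the first virtual task instead of adding another one.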

The set of arc tasks that need to be executed is denoted by T. If according to the gathered information a task t () was executed by the vehicle h (), then in the new DCARP instance t needs to be removed from T. Furthermore, the virtual task of the route plan of the vehicle (e.g., , where and ) needs to be updated. The new virtual task is generated in such a way that t is included in it (along with the other tasks the vehicle executed and arcs the vehicle traversed). If t has an inverse (i.e., ), then it is removed from T as well. Accordingly, the total number of tasks that have to be executed (n) is decreased by one or two.

The initial DCARP instance () is similar to a static CARP instance. The sets of the route plan identifiers (R) and the function are created and filled only after the solution is found for . The set is initially equal to H, then based on , all the vehicle identifiers that are assigned to a route plan are removed from . At this stage, the sets and are empty sets, therefore the function is an empty function as well. According to these, the initial DCARP instance () is defined as follows:

The subsequent DCARP instances (, where ) are defined as follows:

3.2.1. Structure of a Scenario

A new DCARP instance is constructed and added to the DCARP scenario when an unexpected event happens that changes the current problem to such an extent that it has an effect on the currently executed solution. In [21], all the possible events (based on realistic assumptions) were collected and analyzed based on their effect.

It is assumed that the roadmap, the number of vehicles, and the maximum capacity of the vehicles cannot change (at least during the execution of the solution). Therefore, V, , A, , , , q and H are the same in all the DCARP instances of a DCARP scenario.

It is assumed that roads can become closed/opened (it changes , thus , too), the traffic can decrease/increase (it changes and in some cases , thus , too), tasks can get cancelled/added (it changes T, n, , and ) and vehicles can breakdown/restart (it changes ), which are unexpected events. The expected events are the events that normally occur during the execution of the solution: a task is executed (it changes T), a vehicle moves (it changes , thus , too), or a vehicle returns to the depot (it changes and in some cases ). The affected components are updated only when a new instance is constructed. If rerouting is performed, then R and may change, but the changes are visible only in the next DCARP instance. Therefore, T, , n, , R, , , , , , , and may be different among the DCARP instances of a DCARP scenario.

Since unexpected events change some components of the DCARP instance, the optimal solution may change, too. Constructing a new DCARP instance and rerouting is optional when a better solution might be available but the current solution is still feasible. However, it is necessary when the current solution is not feasible anymore.

3.2.2. Solution Representation

For each DCARP instance, the solution representation is mainly the same as for static CARP instances. The only difference is that if the route plan has a virtual task assigned to it, then the virtual task is the second task within the route plan (since the first task is always the dummy task ). For instance, if the route plan has an identifier k () and there is a virtual task assigned to it (i.e., ), then .

3.2.3. Objective and Constraints

For each DCARP instance, the objective and the constraints are mainly the same as for static CARP instances. The only difference is in the second constraint, which requires that the total number of tasks in the solution S (excluding the dummy task ) is equal to the sum of the number of tasks that still need to be executed (n) and the total number of virtual tasks ():

The attributes of a virtual task guarantee that a solver will always place the virtual task right after the dummy task within a route plan of a (nearly optimal) solution, so there is no need to add a constraint regarding it.

3.2.4. Finding a Solution

The data-driven DCARP framework allows rerouting when a critical event (i.e., an unexpected event that may change the feasibility of the current solution) occurs. These events are task appearance, demand increase, and vehicle breakdown.

The data-driven DCARP framework allows the use of static CARP solvers by converting the current data-driven DCARP instance into a static CARP instance. After a (sufficiently good) solution is found by the CARP solver, the solution is converted into a data-driven DCARP solution.

Converting a data-driven DCARP instance into a static CARP instance works as follows: the sets of vehicle and route plan identifiers (i.e., H, , R, and ) are omitted, along with the related functions ( and ). Furthermore, all virtual tasks related to finished and suspended route plans are removed from T. For instance, if () is the virtual task of the route plan with identifier k () and , then is removed from T (i.e., ).

Converting a static CARP solution into a data-driven DCARP solution works as follows: the virtual tasks that are related to finished and suspended route plans are added to the solution in separate route plans to keep track of the total cost of the DCARP scenario. Furthermore, if there are any new route plans within the solution, the framework gives them identifiers and also attempts to assign each of them to a free vehicle. For the other route plans, it can be easily determined which route plan identifier belongs to which route plan, based on the virtual task within them.
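The two conversions can be illustrated with a minimal Python sketch. The names `virtual_task_of` (a mapping from route plan identifier to virtual task id) and `locked_routes` (the identifiers of finished and suspended route plans) are hypothetical stand-ins for the corresponding sets and functions of the framework, and the assignment of identifiers and vehicles to new route plans is left out.

```python
def to_static_task_ids(task_ids, virtual_task_of, locked_routes):
    """Drop the virtual tasks that belong to finished or suspended route plans."""
    locked_virtual = {
        virtual_task_of[r] for r in locked_routes if r in virtual_task_of
    }
    return set(task_ids) - locked_virtual

def to_dcarp_solution(static_solution, virtual_task_of, locked_routes):
    """Re-attach the removed virtual tasks, each in its own route plan."""
    solution = [list(plan) for plan in static_solution]
    for route_id in sorted(locked_routes):
        if route_id in virtual_task_of:
            solution.append([virtual_task_of[route_id]])
    return solution

# Route plan 10 is in progress (virtual task 101); route plan 11 is finished
# (virtual task 102), so its virtual task is hidden from the static solver.
virtual_task_of = {10: 101, 11: 102}
static_ids = to_static_task_ids({1, 2, 3, 101, 102}, virtual_task_of, {11})
dcarp_solution = to_dcarp_solution([[101, 1, 2], [3]], virtual_task_of, {11})
```

Keeping the removed virtual tasks as separate single-task route plans preserves the cost already incurred by the finished or suspended route plans in the scenario total.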

3.3. The Basic ABC Algorithm

This section introduces the basic ABC algorithm for combinatorial problems, based on [16]. Just like in the original ABC algorithm [13], the artificial bees are classified into three groups:

- employed bees, who are exploiting the food sources;

- onlooker bees, who are making the decision about which food source to select;

- scout bees, who are randomly choosing a new food source.

In the ABC algorithm, a food source corresponds to a solution and the nectar amount of a food source corresponds to the fitness of a solution.

The ABC algorithm is an iterative process with four phases in total. It begins with the initial phase, then it iterates three bee phases (always in the same order) until a predefined termination criterion is met. In the initial phase, the population is initialized with randomly generated food sources. In the first phase, the employed bee phase, the employed bees are sent to the food sources, where they determine the nectar amounts of the food sources. In the second phase, the onlooker bee phase, the probability values of the sources are calculated based on their nectar amounts, then the onlooker bees are sent to the preferred food sources to find neighboring food sources and determine their nectar amounts. In the third phase, the scout bee phase, the exploitation of the sources exhausted by the bees is stopped and the scout bees are sent out to randomly discover new food sources within the search area. In each phase, the best food source found so far is memorized. The phases are described in more detail in the subsections below.

3.3.1. Initialization Phase

In the initialization phase, the parameters and the population are initialized. The parameters of the ABC algorithm can be defined as follows:

- : the number of food sources, which is also the number of the employed bees and onlooker bees (i.e., for every food source, there is only one employed bee);

- : the number of trials after which a food source is assumed to be abandoned;

- a termination criterion.

The population is initialized by randomly generating the given number of food sources and assigning one employed bee to each of them. The employed bees evaluate the fitness of these solutions.

3.3.2. Employed Bee Phase

In this phase, each employed bee generates a new food source in the neighborhood of its current position. Once the new food source is obtained, it is evaluated and compared to the current one. If the nectar amount of the new food source is equal to or higher than that of the current one, the new food source replaces the current one and becomes a new member of the population; otherwise, the current food source is retained. In other words, a greedy selection mechanism is employed between the old and the new candidate solutions.

3.3.3. Onlooker Bee Phase

An onlooker bee evaluates the nectar information taken from all the employed bees and selects a food source depending on its probability value, calculated by the following expression:

$$p_i = \frac{fit_i}{\sum_{j} fit_j},$$

where $fit_i$ is the nectar amount (i.e., the fitness value) of the i-th food source and the sum runs over all food sources. The higher the value of $fit_i$ is, the higher the probability that the i-th food source is selected.

Once the onlooker has selected her food source, she produces a modification of it by using a local search operator. The local search operator randomly selects a position in the neighborhood of the selected food source. As in the case of the employed bees, if the modified food source has a better or equal nectar amount than the selected one, the modified food source replaces it and becomes a new member of the population.
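The selection step of the onlooker bees can be sketched as a roulette wheel in Python. The sketch assumes the fitness-proportional rule of the original ABC algorithm, where each probability is the fitness of a food source divided by the sum of all fitness values; the function names are hypothetical.

```python
import random

def selection_probabilities(fitness):
    """Fitness-proportional probabilities (assumed rule, see lead-in)."""
    total = sum(fitness)
    return [value / total for value in fitness]

def select_food_source(fitness, rng):
    """Return the index of one food source, drawn proportionally to fitness."""
    return rng.choices(range(len(fitness)), weights=fitness, k=1)[0]

probabilities = selection_probabilities([1.0, 3.0])
chosen = select_food_source([0.0, 1.0], random.Random(1))
```

A food source with zero fitness is never drawn, so in the second call only index 1 can be returned.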

3.3.4. Scout Bee Phase

If a food source cannot be further improved within a predetermined number of trials (the limit parameter), the food source is assumed to be abandoned, and the corresponding employed bee becomes a scout. The scout produces a food source randomly.

In the basic ABC algorithm, in each cycle, at most one scout bee goes outside to search for a new food source.
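The four phases can be put together in a compact Python skeleton. This is a generic sketch for a minimization problem, not the CARP-ABC algorithm: the function `abc_minimize`, the fitness transform `1 / (1 + cost)`, the trial-counter handling, and the toy permutation problem are all assumptions chosen to keep the example self-contained.

```python
import random

def abc_minimize(cost, random_solution, neighbour, n_sources, limit, cycles, seed=0):
    rng = random.Random(seed)
    # Initialization phase: one random food source per employed bee.
    sources = [random_solution(rng) for _ in range(n_sources)]
    trials = [0] * n_sources
    best = min(sources, key=cost)

    def local_step(i):
        nonlocal best
        candidate = neighbour(sources[i], rng)
        old, new = cost(sources[i]), cost(candidate)
        if new <= old:                  # greedy selection, ties are accepted
            sources[i] = candidate
        trials[i] = 0 if new < old else trials[i] + 1
        if cost(sources[i]) < cost(best):
            best = sources[i]           # memorize the best food source

    for _ in range(cycles):
        for i in range(n_sources):      # employed bee phase
            local_step(i)
        weights = [1.0 / (1.0 + cost(s)) for s in sources]  # assumes cost >= 0
        for _ in range(n_sources):      # onlooker bee phase
            local_step(rng.choices(range(n_sources), weights=weights, k=1)[0])
        stalest = max(range(n_sources), key=lambda i: trials[i])
        if trials[stalest] >= limit:    # scout bee phase, at most one scout
            sources[stalest] = random_solution(rng)
            trials[stalest] = 0
            if cost(sources[stalest]) < cost(best):
                best = sources[stalest]
    return best

# Toy problem (hypothetical): put a permutation of 0..5 into sorted order.
def misplaced(perm):
    return sum(1 for i, v in enumerate(perm) if i != v)

def shuffled(rng):
    perm = list(range(6))
    rng.shuffle(perm)
    return perm

def swap_two(perm, rng):
    i, j = rng.sample(range(len(perm)), 2)
    result = list(perm)
    result[i], result[j] = result[j], result[i]
    return result

best = abc_minimize(misplaced, shuffled, swap_two, n_sources=5, limit=10, cycles=50)
```

The neighbour function returns a new list instead of modifying its input, which keeps the memorized best solution from being changed by later moves.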

3.4. Move Operators for the CARP

In population-based evolutionary algorithms, move operators with different step sizes are utilized to generate new, neighboring solutions and thereby enrich the diversity of the population. These move operators can be divided into two main categories: small step-size operators and large step-size operators. Small step-size operators can modify the position and/or the direction of the tasks within one or two route plans. In contrast, large step-size operators are able to modify more than two route plans. The most commonly used small step-size operators in the literature, which are used in this work as well, are the inversion, (single) insertion, swap, and two-opt operators [6,7,8]. A novel small step-size operator, the sub-route plan operator, is also used in this work; it is introduced in Section 5. The only large step-size operator used in this work is merge-split, which was introduced in [6].

The inversion and the sub-route plan operators can only change the direction and the order of the tasks within one route plan, so they do not change the feasibility of the solution. In contrast, the insertion, the swap, and the two-opt operators may change the amount of demand that needs to be served in some of the route plans, so the feasibility of the solution may change, too. For this reason, the output solution of these operators depends on the settings. If infeasible solutions are not accepted and the calculated output solution is infeasible, then the operator returns the original input solution instead (assuming that the input solution is feasible).

3.4.1. Inversion Operator

The inversion operator randomly selects a task within the input solution. If this task has an inverse (i.e., ), then the operator replaces t with within the solution, else it returns the input solution.

3.4.2. Insertion Operator

The insertion operator randomly selects a task , then replaces (inserts) it before or after another randomly selected task within the input solution. The selected tasks can be in different route plans or in the same route plan, but they cannot be the same tasks (i.e., ).

It creates two potential output solutions, based on where is inserted (before or after ). If has an inverse, then the operator creates additional potential output solutions, which contain the inverse of the task (i.e., ) instead of . The potential output solution with the smallest total cost is selected as the output solution.

3.4.3. Swap Operator

The swap operator randomly selects two tasks ( and , where ), then replaces them with each other (i.e., swaps them). Similarly to the insertion operator, the selected tasks can be from the same route plan or different route plans, but they cannot be the same tasks.

In addition to the plain swap, it creates potential output solutions in which one or both of the selected tasks are replaced by their inverse tasks, so all four possible combinations are considered. The potential output solution with the smallest total cost is selected as the output solution.
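The swap operator can be sketched as follows. The sketch assumes that a solution is stored as a flat list of task ids in which the id 0 separates route plans, that `inverse` maps the id of a task to the id of its inverse (tasks without an inverse are absent from the mapping), and that `total_cost` is a user-supplied cost function. These names are illustrative, and feasibility checking is left out.

```python
import itertools
import random


def swap_candidates(solution, i, j, inverse):
    """Return all variants of swapping positions i and j, with optional inversion."""
    a, b = solution[i], solution[j]
    options_a = [a] + ([inverse[a]] if a in inverse else [])
    options_b = [b] + ([inverse[b]] if b in inverse else [])
    candidates = []
    # After the swap, a variant of b sits at position i and a variant of a at j.
    for new_at_i, new_at_j in itertools.product(options_b, options_a):
        candidate = list(solution)
        candidate[i], candidate[j] = new_at_i, new_at_j
        candidates.append(candidate)
    return candidates


def swap_operator(solution, inverse, total_cost, rng=random):
    """Swap two randomly selected, distinct tasks and keep the cheapest variant."""
    positions = [p for p, task in enumerate(solution) if task != 0]
    i, j = rng.sample(positions, 2)
    return min(swap_candidates(solution, i, j, inverse), key=total_cost)
```

When both selected tasks have an inverse, `swap_candidates` returns four candidates. When only one of them does, it returns two.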

3.4.4. Two-Opt Operator

The two-opt operator randomly selects two route plans (e.g., and ) of the solution. Based on the selected two route plans, two cases exist for this move operator. If the selected two route plans are the same (i.e., ), then a sub-route plan (i.e., a part of the route plan) is selected randomly and its direction is reversed. If the selected two route plans are different (i.e., ), then these two route plans are randomly cut into four sub-route plans, and then two new potential output solutions are generated by reconnecting the four sub-route plans and the best one from them is selected. For example, and are cut into sub-route plans and , respectively. Two new solutions are generated by connecting them in the following ways: (1) with and with and (2) with reversed and reversed with .

3.4.5. Merge-split Operator

As mentioned before, the merge-split operator can make large changes in the solution (e.g., it can modify the order of all the tasks within one or more route plans), so it is considered a large step-size operator. This operator randomly selects x different route plans in the input solution, where x is a random number (). It obtains an unordered list of tasks by merging the tasks of the selected route plans into one list, and then sorts this unordered list with a path scanning heuristic (e.g., [22], which is used in this work as well). The obtained ordered list is then optimally split into new route plans using Ulusoy’s splitting procedure [24].

The ordered list is constructed by the path scanning heuristic in the following way. First, an empty path is initialized, then the affected tasks are added one by one to the current path until no tasks are left in the unordered list. In each iteration, only those tasks are taken into account that can be added to the current path without breaking the capacity constraint. If there are no such tasks, then the depot is added to the current path and a new path is initialized (which becomes the current path). When a task or the depot is added to the current path, it is connected to the end of the current path with the shortest path between them. If there are multiple tasks that can be added, the one that is closest to the end of the current path is added. If there are multiple tasks that are closest to the end of the current path, then one of the following rules is applied to determine which task should be added next:

1. maximize the distance between the task t and the depot;

2. minimize the distance between the task t and the depot;

3. maximize the term ;

4. minimize the term ;

5. use rule 1 if the vehicle is less than half-full, otherwise use rule 2.

In the rules above, t () is a task and () is the depot. In one run, only one of the rules can be used. Therefore, the path scanning heuristic is run five times, which results in five ordered lists.

Ulusoy’s splitting procedure creates five new candidate output solutions from the five ordered lists by splitting the lists into route plans. How the procedure works is best summarized in [50]. The procedure starts with constructing a Directed Acyclic Graph (DAG) from the ordered list. In this DAG, the arcs represent feasible sub-tours of one giant tour. Next, the shortest path through the graph is calculated, which gives the optimal partition of the giant tour into feasible route plans. As the final step, a new candidate solution is created from the untouched route plans of the input solution and the route plans returned by the procedure. From the five candidate solutions, the best one is chosen and returned by the operator.
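The splitting step can be illustrated with a short dynamic program over the implicit DAG. This is a simplified sketch and not the implementation used in this work: it assumes that each task is given as a `(tail, head, demand, service_cost)` tuple, that `dist[u][v]` holds the shortest-path cost between vertices, and that the cost of a route plan is the sum of the deadheading and service costs. The function name `ulusoy_split` is hypothetical.

```python
def ulusoy_split(ordered_tasks, capacity, dist, depot=0):
    """Optimally split a giant tour into capacity-feasible route plans.

    Returns (total_cost, routes), where each route is a list of task indices.
    """
    n = len(ordered_tasks)
    inf = float("inf")
    best = [inf] * (n + 1)   # best[j]: cheapest cost of serving the first j tasks
    pred = [0] * (n + 1)     # pred[j]: start index of the last route in that optimum
    best[0] = 0.0
    for i in range(n):                    # a route starts with task i ...
        if best[i] == inf:
            continue
        load, cost = 0, 0.0
        for j in range(i, n):             # ... and ends with task j (one DAG arc)
            tail, head, demand, service = ordered_tasks[j]
            load += demand
            if load > capacity:
                break
            if j == i:
                cost = dist[depot][tail] + service
            else:
                cost += dist[ordered_tasks[j - 1][1]][tail] + service
            route_cost = cost + dist[head][depot]
            if best[i] + route_cost < best[j + 1]:
                best[j + 1] = best[i] + route_cost
                pred[j + 1] = i
    if best[n] == inf:
        raise ValueError("a single task exceeds the vehicle capacity")
    routes, j = [], n
    while j > 0:                          # walk the shortest path backwards
        i = pred[j]
        routes.append(list(range(i, j)))
        j = i
    routes.reverse()
    return best[n], routes


# Toy instance: vertices 0..3 on a line, three unit-length tasks, capacity 4.
dist = [[abs(u - v) for v in range(4)] for u in range(4)]
tasks = [(0, 1, 2, 1), (1, 2, 2, 1), (2, 3, 2, 1)]
total, routes = ulusoy_split(tasks, 4, dist)
```

In this toy instance, serving the first task alone and the last two together costs 8, which is cheaper than the other two feasible partitions (10 and 12).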

4. The Proposed ABC Algorithm for CARP (CARP-ABC Algorithm)

In this section, the ABC algorithm developed for the CARP (CARP-ABC algorithm) is presented. The notations used for the CARP-ABC algorithm are collected in Table A2 in Appendix A.

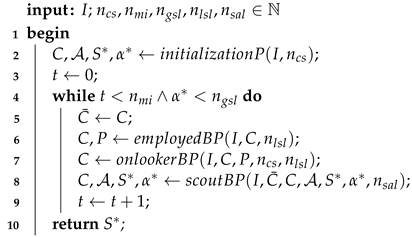

The algorithmic description of the main CARP-ABC algorithm can be seen in Algorithm 1. The main algorithm can be divided into four main phases: initialization, employed bee, onlooker bee, and scout bee phases. The algorithm begins with the initialization phase, then enters a cycle, where it repeats the mentioned phases in the respective order until the termination criterion is satisfied (line 4). In the initialization phase (line 2), the colony (C), the age of the solutions within the colony (), the global best solution (), and its age () are initialized. In the employed bee phase (line 6), local search is performed around the members of the colony. In the onlooker bee phase (line 7), a more in-depth local search is performed around one solution from the colony. In the scout bee phase (line 8), global search is performed.

| Algorithm 1: Main CARP-ABC algorithm. |

|

The parameters of the algorithm are the following:

- I: a CARP instance;

- : the size of the colony, the number of solutions in the population;

- : the maximum number of iterations of the algorithm;

- : the global search limit, the maximally allowed number of consecutive iterations in which the currently known global best solution is not improved;

- : the local search limit, the maximally allowed number of consecutive iterations in which the currently known local best solution is not improved in the employed bee phase and the onlooker bee phase of the algorithm;

- : the solution age limit, the maximally allowed number of consecutive iterations of the algorithm in which a solution is kept in the population;

- a termination criterion, which by default is that either the or the is reached.

4.1. Initialization Phase

The algorithmic description of the initialization phase of the CARP-ABC algorithm can be seen in Algorithm 2. In this phase, the algorithm first initializes the sets for the solutions for and their age (lines 2–3). To guarantee an initial population with a certain quality and diversity, the solutions are generated randomly by using the Random Solution Generation (RSG) algorithm for the CARP (line 6), which is introduced in this work (in Section 4.1.1). Other population-based evolutionary algorithms usually use the Randomized Path-Scanning Heuristic (RPSH) [22] to generate initial solutions. However, our experiments showed that, in the case of the CARP-ABC algorithm, the RPSH does not improve the convergence speed of the algorithm, so only the RSG is used.

After the initialization of the colony, the algorithm selects the solution with the best (highest) fitness value by using the function (line 11). The fitness of a solution is defined by its total cost (it needs to be as small as possible). Therefore, the fitness value of a solution is computed by the following fit function:

where is the lower bound of the solution (i.e., the total service cost of all the tasks, counting only one direction of each task that has an inverse). The fitness value ranges between 0 and 1. Solutions with greater fitness values are preferred, since a greater fitness value means that the total cost of the solution is closer to the lower bound.
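A minimal sketch of the fitness calculation and the best-solution selection is given below. It assumes that the fitness is the ratio of the lower bound to the total cost, which is consistent with the stated range between 0 and 1 and with the interpretation that greater values are closer to the lower bound. The names `fitness` and `best_solution` are hypothetical.

```python
def fitness(total_cost, lower_bound):
    """Fitness of a solution, assumed here to be lower_bound / total_cost."""
    if total_cost <= 0:
        raise ValueError("total cost must be positive")
    return lower_bound / total_cost


def best_solution(solutions, total_costs, lower_bound):
    """Return the solution with the greatest fitness value."""
    pairs = zip(solutions, total_costs)
    return max(pairs, key=lambda pair: fitness(pair[1], lower_bound))[0]


# Two candidate solutions with total costs 250 and 125 and a lower bound of 100.
chosen = best_solution(["s1", "s2"], [250, 125], 100)
```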

| Algorithm 2: initializationP (Initialization Phase of the CARP-ABC algorithm). |

|

4.1.1. Random Solution Generation Algorithm

As the first step, the algorithm generates a random permutation, which contains the positive integers from 1 to n (i.e., the id of every arc task and one of the two ids of every edge task) in random order. As the next step, the algorithm reads the ids in the permutation one by one from left to right, while summing up the demand of the corresponding tasks. If the task assigned to the currently read id would break the capacity constraint of the current route plan, the algorithm inserts a “0” (the id of the dummy task) before the id of the task in the sequence (i.e., the task is added to a new route plan). After it has finished separating the ids of the tasks into route plans, the algorithm checks each task in the solution and, if it has an inverse task, then randomly (e.g., with 0.5 probability) replaces the id of the task with the id of its inverse. As the final step, the algorithm inserts a “0” as the first and last task of the solution to make it a valid solution.
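The steps above can be sketched as follows. The sketch assumes that `demand` maps the ids 1 to n to the demands of the tasks, that no single demand exceeds the capacity, and that `inverse` maps the id of an edge task to the id of its inverse. The function name `random_solution` and these data structures are illustrative.

```python
import random


def random_solution(demand, capacity, inverse, rng=random):
    """Generate a random feasible solution as a flat list of task ids.

    The id 0 (dummy task) separates the route plans and encloses the solution.
    """
    task_ids = list(demand)
    rng.shuffle(task_ids)                 # random permutation of the ids 1..n
    solution, load = [0], 0
    for task in task_ids:
        if load + demand[task] > capacity:
            solution.append(0)            # close the route plan, open a new one
            load = 0
        solution.append(task)
        load += demand[task]
    # Replace each task that has an inverse by that inverse with probability 0.5.
    solution = [
        inverse[t] if t in inverse and rng.random() < 0.5 else t
        for t in solution
    ]
    solution.append(0)
    return solution


example = random_solution({1: 3, 2: 4, 3: 2, 4: 5}, 6, {1: 5, 2: 6}, random.Random(0))
```

Because the demand of a task equals the demand of its inverse, the random inversion in the last step does not affect the feasibility of the route plans.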

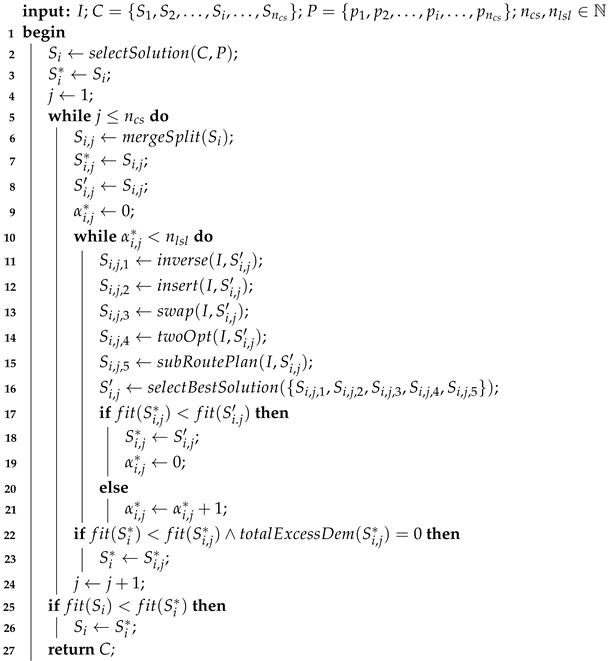

4.2. Employed Bee Phase

The algorithmic description of the employed bee phase of the CARP-ABC algorithm can be seen in Algorithm 3. The algorithm in this phase, for each employed bee, generates new candidate solutions in the neighborhood of with each small step-size operator, then evaluates and selects the best solution (lines 2–11). In this phase, only the inversion operator (line 6) and the sub-route plan operator (line 7) are used, because only these operators guarantee that the new candidate solution will be feasible. It is repeated until the known best local solution cannot be improved within the defined number of iterations (i.e., its age reached ). If the fitness value of the new candidate solution is greater than or equal to the fitness value of the current solution , the new solution replaces the current one in the population (lines 14–15).

As the next step, the algorithm calculates the winning probability values for the solutions (lines 16–19). The probability values are calculated with the same function as in the basic ABC algorithm (Equation (13)).

| Algorithm 3: employedBP (Employed Bee Phase of the CARP-ABC algorithm). |

|

4.3. Onlooker Bee Phase

The algorithmic description of the onlooker bee phase of the CARP-ABC algorithm can be seen in Algorithm 4. The algorithm in this phase, depending on the values, selects a solution with the function. This function first performs a roulette selection times to select number of solutions from the colony. Next, it compares the selected solutions to each other and selects the best one from them (i.e., the one with the greatest fitness value).
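The selection described above, repeated roulette selection followed by picking the fittest of the drawn solutions, can be sketched as follows. The function name `select_solution` and the parameter name `k` for the number of roulette draws are assumptions made for this illustration.

```python
import random


def select_solution(colony, fitness_values, probabilities, k, rng=random):
    """Draw k solutions by roulette selection and return the fittest of them."""
    # random.choices samples with replacement, proportionally to the weights.
    drawn = rng.choices(range(len(colony)), weights=probabilities, k=k)
    best_index = max(drawn, key=lambda index: fitness_values[index])
    return colony[best_index]
```

With `k = 1` this reduces to plain roulette selection. Larger values of `k` increase the selection pressure towards fitter solutions.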

As a next step, the algorithm generates number of new candidate solutions in the neighborhood of (i.e., one solution for each onlooker bee) with the merge-split operator (lines 5–6). It generates new candidate solutions in the neighborhood of these solutions with the small step-size operators, until the known best local solution cannot be improved within the defined number of iterations (i.e., the age of the solution, , reaches ) (lines 7–21). In this phase, all the small step-size operators (i.e., inversion, insertion, swap, two-opt, and sub-route plan) are applied to , which is the best solution that was found in the previous iteration (lines 11–15). From the resulting solutions, the best one is chosen with the function as the new (line 16). If the new is better than the currently known best solution in the neighborhood of (i.e., ), then it is set as the new best solution (lines 17–19). Otherwise, the age of (i.e., ) is increased by one (lines 20–21). After the search ends in the neighborhood of , it is checked whether the best solution found (i.e., ) is better than the best solution found in the whole neighborhood of (i.e., ). If has a higher fitness value and is also feasible (its total excess demand is zero), then it will be set as the new (lines 22–23).

If the best solution found in this phase (i.e., ) is better than the current solution , then is replaced by in the colony (lines 25–26).

| Algorithm 4: onlookerBP (Onlooker Bee Phase of the CARP-ABC algorithm). |

|

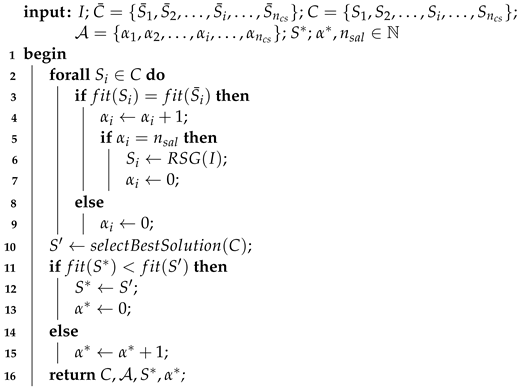

4.4. Scout Bee Phase

The algorithmic description of the scout bee phase of the CARP-ABC algorithm can be seen in Algorithm 5. The algorithm in this phase increases the age of unchanged solutions (lines 3–4) and sets the age to zero for new solutions (lines 8–9) within the colony. Furthermore, if there is an abandoned solution (i.e., a solution which could not be improved through a predetermined number of trials, which is called ), the algorithm replaces it with a new solution (lines 5–7), which is generated by using the RSG algorithm (as in the initialization phase).

In this phase, the algorithm also updates the global best solution, . First, the best solution of the new colony is selected with the function as solution (line 10). If is better than , then is set as the new global best solution (lines 11–13). Otherwise, the age of (i.e., ) is increased by one (lines 14–15).
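The ageing and replacement of the solutions can be sketched as follows. The sketch assumes that a Boolean flag per solution records whether the solution was replaced in the current iteration, and that a solution is abandoned when its age exceeds the age limit. The names `scout_phase`, `changed`, and `new_solution` are hypothetical, and the update of the global best solution is omitted.

```python
def scout_phase(colony, ages, changed, age_limit, new_solution):
    """Update the ages in place and replace abandoned solutions."""
    for i in range(len(colony)):
        if changed[i]:
            ages[i] = 0                      # new solution: reset its age
        else:
            ages[i] += 1                     # unchanged solution: it gets older
            if ages[i] > age_limit:          # abandoned: replace with a random one
                colony[i] = new_solution()
                ages[i] = 0
    return colony, ages


colony, ages = scout_phase(
    ["a", "b", "c"], [0, 2, 5], [True, False, False], 5, lambda: "new"
)
```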

| Algorithm 5: scoutBP (Scout Bee Phase of the CARP-ABC algorithm). |

|

4.5. Computational Complexity Analysis

In this section, the computational complexity of the proposed CARP-ABC algorithm is discussed. The computational complexity is expressed by using the big-O notation. For the sake of simplicity, approximations are used and the constant values are omitted. The computational complexity of the whole algorithm depends on the given parameter values and the complexity of the input CARP instance, mainly on n (i.e., the number of tasks that have to be executed).

4.5.1. Initialization Phase

The computational complexity of the initialization phase is , in which is the complexity of the RSG algorithm and is the complexity of selecting the best solution. is multiplied by , because RSG is executed times to create the initial population.

Within the RSG algorithm, the complexity of generating a random permutation of the task identifiers is , assuming that the Fisher–Yates shuffle algorithm [51] is used for it. After a permutation is generated, the algorithm iterates over each element, which also has as complexity. Therefore, the complexity of the RSG algorithm is around , which is if the constant multiplier is omitted.
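For reference, the Fisher–Yates shuffle visits each position once and performs one swap per position, which is where the linear complexity comes from. A minimal sketch is given below (the function name is illustrative).

```python
import random


def fisher_yates_shuffle(items, rng=random):
    """Shuffle the list in place with one pass and return it."""
    for i in range(len(items) - 1, 0, -1):
        j = rng.randint(0, i)                # uniform index in [0, i], inclusive
        items[i], items[j] = items[j], items[i]
    return items


permutation = fisher_yates_shuffle(list(range(1, 11)), random.Random(0))
```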

4.5.2. Employed Bee Phase

The computational complexity of the employed bee phase is , in which the complexity of the local search is . The complexity of the probability calculation is (assuming that the sum of the fitness values is calculated only once). is multiplied by , because the local search is executed for all the members of the population.

Within the local search, the complexity of the inversion operator is and the complexity of the sub-route plan operator is . Within the sub-route plan operator, the complexity of selecting a route plan is , since in the worst case (i.e., every task is on a separate route). After a route plan is selected, one of the methods of the operator is executed. From the methods, the sub-route plan rotation method has the greatest complexity, which is , since in the worst case (i.e., there is only one route plan in the solution).

4.5.3. Onlooker Bee Phase

The computational complexity of the onlooker bee phase is , in which the complexity of selecting a solution from the colony is or if the constant k is omitted. The complexity of the main search is .

Within the main search, the complexity of the merge-split operator is , because the complexity of selecting the number of route plans is and the complexity of the other components of the operator (i.e., selecting the route plans, collecting the affected tasks, and executing the RPSH) is . The complexity of RPSH is , since in the worst case all the n tasks are affected in the solution. After the merge-split operator returns a solution, search is performed around this solution. The complexity of this search is , because the complexity of the sub-route plan operator is and the complexity of the other operators (i.e., inversion, insertion, swap, and two-opt) is .

4.5.4. Scout Bee Phase

The computational complexity of the scout bee phase is , because in the worst case, all the solutions in the colony have to be replaced (for exceeding ), so RSG is executed times. Next, the best solution is chosen from the colony, which has complexity.

4.5.5. Whole Algorithm

The computational complexity of the whole CARP-ABC algorithm is composed of the complexity of the initialization phase and the multiplication of the other phases by , since in the worst case the algorithm runs until the maximum number of iterations is reached. If the duplications are removed, then it is the following: .

If the parameters of the CARP-ABC algorithm and the sub-route plan operator are set to a fixed value, then the computational complexity is the following: . Therefore, the time complexity of the CARP-ABC algorithm is mostly linear but it contains components with logarithmic time complexity (e.g., when a route plan is selected).

5. Sub-Route Plan Operator

The sub-route plan operator is based on the GSTM operator for TSP [47]. The main differences between the modified version and the original version are due to the differences between the TSP and the CARP. Therefore, the modified version works with arcs instead of nodes. Furthermore, since the solution for a TSP is always one route plan, while the solution for a CARP (usually) consists of more than one route plan, the modified version takes into account only a part of the solution (one route plan) instead of the whole solution.

The sub-route plan operator is a complex move operator which consists of two different greedy search methods (greedy reconnection and sub-route rotation) and a method that provides distortion. In all three methods, inversion of the affected tasks is considered. Inversion of the tasks is especially important when a sequence of tasks is inverted in the sub-route rotation method, because when the execution order of the tasks changes, the direction in which the tasks are executed should be changed too, to keep the traveling cost minimal. The notations used within this section are collected in Table A3 in Appendix A.

5.1. The Main Algorithm

The algorithmic description of the main algorithm of the sub-route plan operator can be seen in Algorithm 6. As input, a CARP instance I, a solution S of I, and the parameters of the algorithm are expected. The parameters are the following:

- the reconnection probability ();

- the correction and perturbation probability ();

- the linearity probability ();

- the minimum length of the sub-route plan ();

- the (maximal) size of the neighborhood of a task arc that is considered ().

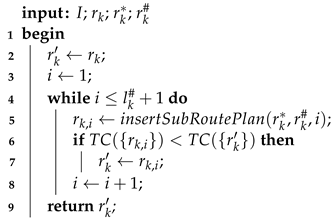

| Algorithm 6: subRoutePlan (main algorithm of the sub-route plan operator). |

|

The maximal length of the sub-route plan () is determined after the route plan is selected. In the proposed CARP-ABC algorithm, the parameters of this algorithm are given as constant values, so only I and S are expected.

In the first step of the algorithm, a route plan is selected from the solution S (line 2), then, if the number of (not dummy) tasks within is sufficient (i.e., is greater than or equal to , line 3), the algorithm proceeds to the next step. Otherwise, it returns the input solution S unchanged (line 21).

In the following step of the algorithm, the parameters are initialized and the (sub-)route plans are generated. The maximum length of the sub-route plan () is determined based on the number of tasks within () and the predefined minimum length of the sub-route plan () (line 4), and the new route plan () is initialized (line 5). The length of the sub-route plan l is determined randomly based on and (line 6). The position index of the starting task of the sub-route plan (s) is randomly selected taking into account l (line 7). The position index of the ending task of the sub-route plan (e) is determined by s and l (line 8). The sub-route plan is constructed by taking the sub-route plan that is enclosed by the tasks and from (line 9). The route plan without is denoted by (line 10).

As the next step of the algorithm, a random number is generated () between 0 and 1 (line 11), which determines the operation of the operator. If is less than or equal to the predefined reconnection probability (i.e., , line 12), then the greedy reconnection method is executed (line 13, Section 5.2), otherwise, a new random number is generated (line 15). If the new value of is less than or equal to the predefined correction and perturbation probability (i.e., , line 16), then distortion is added to (line 17, Section 5.3), otherwise, the sub-route plan rotation method is executed (line 19, Section 5.4). As the final step, the solution S is updated by removing the old route plan and adding the new one, (line 20), then the updated solution is returned (line 21).
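The two-stage random choice between the three methods can be sketched as follows. The parameter names `p_reconnection` and `p_distortion` stand for the reconnection probability and the correction and perturbation probability; they and the function name are chosen for this illustration only.

```python
import random


def choose_method(p_reconnection, p_distortion, rng=random):
    """Return the name of the method that the operator would execute."""
    if rng.random() <= p_reconnection:
        return "greedy_reconnection"
    # A second, independent random number decides between the remaining methods.
    if rng.random() <= p_distortion:
        return "distortion"
    return "sub_route_plan_rotation"
```

Under this scheme, the distortion method is executed with probability (1 − reconnection probability) × (correction and perturbation probability), and the rotation method receives the remaining probability mass.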

5.2. Greedy Reconnection Method

The greedy reconnection method inserts into the position within that generates the least amount of increase in the total cost of the route plan.

5.2.1. Algorithm

The algorithmic description of the greedy reconnection method within the sub-route plan operator can be seen in Algorithm 7. As input, the CARP instance I, the original route plan of the solution S, the selected sub-route plan , and the truncated route plan (i.e., without ) are expected.

| Algorithm 7: greedyReconnection (algorithm of the greedy reconnection method within the sub-route plan operator). |

|

In the first step of the algorithm, the new route plan is initialized with the current route plan (line 2). The position index i that is used to find the best position for insertion of into is initialized as well (line 3). The value “1” refers to the first (not dummy) task within (i.e., ). The value “0” would refer to the first dummy task () and “” to the last dummy task within (assuming is the number of not dummy tasks within ). In the following step, the algorithm checks each position within to find the best one to insert into (lines 4–8). In each iteration, before task within , it inserts with the function (line 5). The total cost of the resulting route plan () is then compared with the total cost of (line 6). If is better than (i.e., it has lower total cost), then it becomes the new value of (line 7).

In the final step of the algorithm, is returned by the function (line 9).
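A minimal sketch of the greedy reconnection method is given below. It assumes that route plans are lists of task ids enclosed by the dummy task 0, that `route_cost` is a user-supplied function returning the total cost of a route plan, and that the position before the closing dummy task is also tried. The inversion of tasks is left out for brevity, and all names are illustrative.

```python
def greedy_reconnection(original_route, sub_route, truncated_route, route_cost):
    """Reinsert sub_route into truncated_route at the cheapest position."""
    best_route = list(original_route)
    best_cost = route_cost(best_route)
    # Position 0 is the opening dummy task, so insertion starts before position 1.
    for i in range(1, len(truncated_route)):
        candidate = truncated_route[:i] + sub_route + truncated_route[i:]
        cost = route_cost(candidate)
        if cost < best_cost:
            best_route, best_cost = candidate, cost
    return best_route


def toy_cost(route):
    """Toy cost: task ids are treated as positions on a line."""
    return sum(abs(a - b) for a, b in zip(route, route[1:]))


new_route = greedy_reconnection(
    [0, 1, 5, 6, 2, 3, 0], [5, 6], [0, 1, 2, 3, 0], toy_cost
)
```

Since the search starts from the original route plan and only accepts strict improvements, the returned route plan is never more expensive than the original one.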

5.2.2. Example

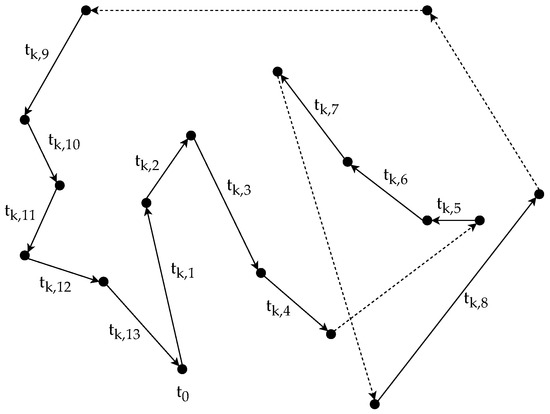

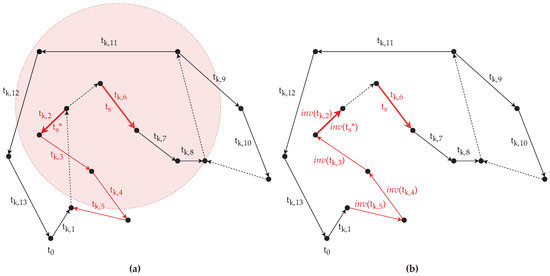

For a better understanding of the method, see the following example. Let the selected route plan be and the length of the sub-route plan be . Based on these, let the selected starting and ending task be and , then the selected sub-route plan is and the route plan without is . Let us assume that inserting between and in results in the least amount of increase in the total cost of the solution, then will be the new k-th route plan in the solution.

The example discussed in the previous paragraph is depicted in Figure 2 and Figure 3. In both figures, the arc tasks served within a route plan are depicted with solid lines, and the other arcs, which are only traversed, are depicted with dashed lines. The original route plan can be seen in Figure 2. In Figure 3, the sub-route plan and the truncated route plan are shown, highlighted with red and blue colors, respectively. The selected starting and ending tasks ( and ) are depicted with thicker lines.

Figure 2.

Example route plan .

Figure 3.

Greedy reconnection method: (a) sub-route plan subtracted from route plan ; (b) sub-route plan connected to the route plan .

5.3. Distortion Method

The distortion method takes the tasks in and inserts them one-by-one into , starting from the position index s, and by rolling or mixing with the predefined linearity probability (). Rolling means selecting the current last task in and mixing means selecting a random task in .

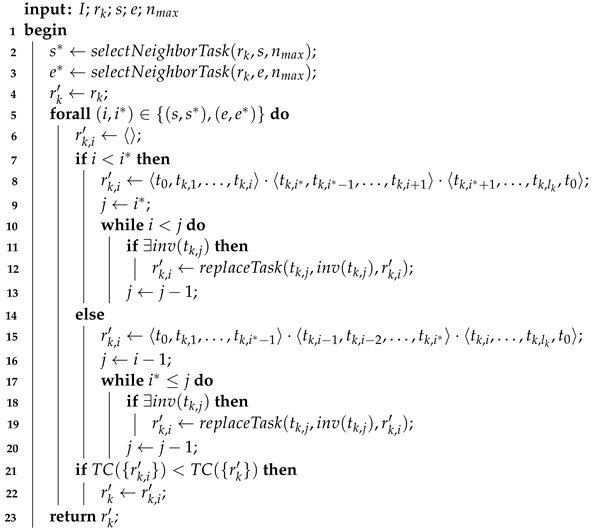

5.3.1. Algorithm

The algorithmic description of the distortion method within the sub-route plan operator can be seen in Algorithm 8. As input, the CARP instance I, the selected sub-route plan , the truncated route plan (i.e., without ), the position index s of the starting task of within the original route plan , and the linearity probability are expected.

| Algorithm 8: Distortion (algorithm of the distortion method within the sub-route plan operator). |

|

In the first step of the algorithm, the position index i is initialized with s (line 2) and the new route plan is initialized with the truncated route plan (line 3). While there are tasks in , the algorithm executes the following steps (lines 4–12). First, a random number is generated () between 0 and 1 (line 5) and the last task is selected from into t (line 6). If is less than or equal to , then the value of t is changed into a random task from (lines 7–9). The selected task t is then inserted into the position i in with the function (line 10) and removed from with the function (line 11). The task is always inserted right after the previously inserted task. When runs out of tasks (i.e., all the tasks within it have been inserted into ), the algorithm returns (line 13).
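The distortion method can be sketched as follows. The sketch assumes that route plans are lists of unique task ids, that the insertion position advances by one after each insertion (so each task lands right after the previously inserted one), and that the inversion of tasks is ignored. The names `distortion` and `p_linearity` are illustrative.

```python
import random


def distortion(sub_route, truncated_route, start, p_linearity, rng=random):
    """Reinsert the tasks of sub_route one by one, starting at index start."""
    remaining = list(sub_route)
    new_route = list(truncated_route)
    position = start
    while remaining:
        if rng.random() <= p_linearity:
            task = rng.choice(remaining)     # mixing: a random remaining task
        else:
            task = remaining[-1]             # rolling: the current last task
        new_route.insert(position, task)
        remaining.remove(task)
        position += 1
    return new_route


# With a linearity probability of 0, only rolling occurs: the sub-route is reversed.
rolled = distortion([5, 6, 7], [0, 1, 2, 0], 2, 0.0, random.Random(0))
```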

5.3.2. Example

For a better understanding of the method, see the following example. Let the selected route plan be and the length of the sub-route plan be . Based on these, let the selected starting and ending task be and , then the selected sub-route plan is and the route plan without is . The length of is three, so in this case the algorithm has three iterations. Let the linearity probability be .

In the first iteration, let the random number be . Since it is smaller than , a random task is selected from . Let the selected task be . In this case, the new route plan is (i.e., is inserted between and ) and (i.e., is removed).

In the second iteration, let the random number be . Since it is greater than , the currently last task is selected from , which is . In this case, the new route plan is (i.e., is inserted between and ) and .

In the third iteration, since only one task is left in , regardless of the value of , is selected. Therefore, and . Since is now empty, the algorithm returns .

5.4. Sub-route Plan Rotation Method

The sub-route plan rotation method selects one neighbor task randomly from the neighbors of and ( and , respectively), then inverts the sequence of tasks enclosed by and (including in the sequence), where . The inversion of the sequence is performed in such a manner that and (or ) become direct neighbors in the new route plan .

5.4.1. Algorithm

The algorithmic description of the sub-route plan rotation method within the sub-route plan operator can be seen in Algorithm 9. As input, the CARP instance I, the original route plan , the position index s of the starting task and the position index e of the ending task of within the original route plan , and the size of the neighborhood are expected.

| Algorithm 9: subRoutePlanRotation (algorithm of the sub-route plan rotation method within the sub-route plan operator). |

|

In the first step of the algorithm, one position index of the closest neighbor tasks is selected randomly for both and ( and , respectively) with the function (lines 2–3) and the new route plan is initialized with the original route plan (line 4). In the next step, for all , the following steps are executed (lines 5–22). First, the potential new route plan is initialized with an empty sequence (line 6), then, based on the relationship between i and , a sub-route plan is selected and inverted. If is before (i.e., ) in , then is directly followed by (or ) in (lines 7–8). Otherwise, if is before in , then (or ) is directly followed by in (lines 14–15). In both cases, each task that has an inverse and is within the inverted sub-sequence, is replaced by its inverse task in the new route plan with the function (lines 9–13, lines 16–20). From the sub-route plan rotations, the one that has the lowest total cost is chosen (lines 21–22) and returned by the algorithm (line 23).

5.4.2. Determining the Neighborhood

The neighbors are determined according to the predefined size of the neighborhood (). The distance between arc task and another arc task is calculated based on their order within and whether the other arc task has an inverse task (i.e., it is from an edge task) or not. Let be an arc task in that is not (i.e., ). If is before in (i.e., ) and has an inverse task (i.e., ), then the distance between the two arc tasks is the shortest path between the head vertices of and , so it can be calculated with the expression . The shortest path is calculated starting from , because during the sub-route plan rotation gets reversed (i.e., it gets replaced by its inverse in the route plan) and it is known that the head vertex of the task is the same as the tail vertex of the inverse task (i.e., ). If the task does not have an inverse, then the shortest path is calculated starting from . If is after in (i.e., ) and has an inverse task, then is calculated. If the task does not have an inverse, then the shortest path is calculated ending at . The reasoning behind what expression to use in each case is summarized in Table 1.

Table 1.

Summary table about what expression to use to calculate the distance between an arbitrary arc task from the route plan and ().

5.4.3. Example

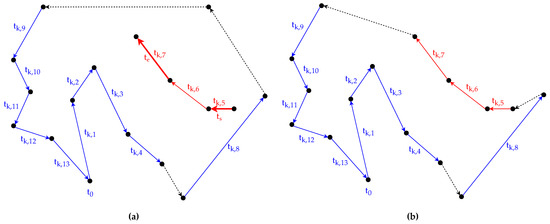

For a better understanding of the method, see the following example. Let the selected route plan be , the length of the sub-route plan be , and the size of the neighborhood be . (In this method, l only defines the distance between the two selected arc tasks; it has no effect on the length of the rotated sub-route plans.) Based on these, let the two selected arc tasks be and . Let us assume that all the arc tasks in are from an edge task, so they all have an inverse task.

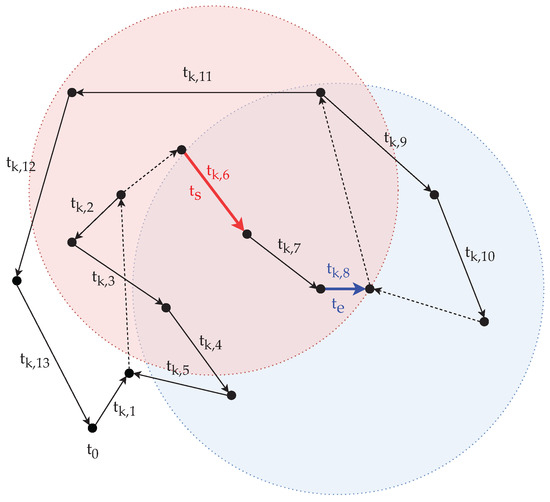

The route plan, the selected arc tasks, and their neighborhoods are illustrated in Figure 4. The arc tasks served within the route plan are depicted with solid lines, and the other arcs, which are only traversed, are depicted with dashed lines. The arc tasks and and their neighborhoods are highlighted in red and blue, respectively. Only the arc tasks that are completely covered by the ellipses are part of the neighborhood. It must be noted that the ellipses are for illustration only. Since identifying the neighborhood is quite complex due to the distance calculation, the shape that covers exactly the neighbor arc tasks varies. Based on this, for , the set of the neighbor arc tasks is , and for , it is .

Figure 4.

Nearest neighbors of the arc tasks () and () within the route plan , when .

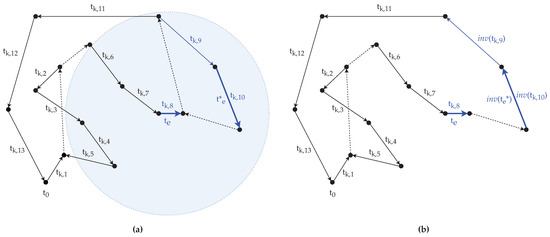

For , let us assume that the selected neighbor task is (i.e., ), then the sub-route plan is (Figure 5a). Since precedes (i.e., ), the sub-route plan is reversed in a manner that in the new route plan is directly followed by . The reversed sub-route plan is , therefore the new route plan is (Figure 5b).

Figure 5.

Rotation of the sub-route plan enclosed by and : (a) An arc task (here ) is randomly selected as from the neighbor list of arc task (), thus a sub-route plan is obtained; (b) The sub-route plan is inverted, so will be directly followed by in the new route plan .

For , let us assume that the selected neighbor task is (i.e., ), then the sub-route plan is (Figure 6a). Since is after (i.e., ), the sub-route plan is reversed in a manner that in the new route plan directly follows . The reversed sub-route plan is , therefore the new route plan is (Figure 6b).

Figure 6.

Rotation of the sub-route plan enclosed by and : (a) An arc task () is randomly selected as from the neighbor list of arc task (), thus, a sub-route plan is obtained; (b) The sub-route plan is inverted, so will be directly followed by in the new route plan .
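The reversal step used in the example above can be sketched in a few lines of Python. This is only an illustrative sketch, not the implementation used in the experiments: the encoding of a task as a `(start vertex, end vertex)` pair, the function names `invert` and `rotate_sub_route`, and the explicit segment bounds `first` and `last` are all assumptions made here. As in the example, it is assumed that every task in the segment comes from an edge task, so each task has an inverse.

```python
from typing import List, Tuple

# Hypothetical encoding: a task is a (start vertex, end vertex) pair.
Task = Tuple[int, int]


def invert(task: Task) -> Task:
    """Return the inverse task: the same edge served in the opposite direction."""
    start, end = task
    return (end, start)


def rotate_sub_route(route: List[Task], first: int, last: int) -> List[Task]:
    """Reverse route[first..last] (inclusive) and invert every task in it.

    Assumption: every task in the segment comes from an edge task,
    so an inverse task always exists.
    """
    if not 0 <= first <= last < len(route):
        raise ValueError("bounds must satisfy 0 <= first <= last < len(route)")
    # Reverse the order of the segment and flip the direction of each task.
    segment = [invert(task) for task in reversed(route[first:last + 1])]
    return route[:first] + segment + route[last + 1:]


# Made-up route plan with five tasks; the middle three are rotated.
route = [(1, 2), (2, 3), (3, 4), (4, 5), (5, 6)]
new_route = rotate_sub_route(route, 1, 3)
print(new_route)
```

The segment bounds are passed in explicitly, so the sketch does not fix how the bounds are derived from the two selected arc tasks; that choice depends on whether the neighbor task precedes or follows the selected task, as described in the example.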

6. Experiments

The proposed CARP-ABC algorithm (referred to as the ABC algorithm in the rest of this section), along with the sub-route plan operator, was implemented in Python (3.6) in the Spyder (4.2.1) development environment. To compare the ABC algorithm with other static CARP solvers, the HMA and the ACOPR were also implemented. To test the ABC algorithm and the HMA as complete rerouting algorithms within the DCARP framework and compare them to the minimal rerouting algorithm RR1, the implementations from our previous work [20] were used. The Python programming language was chosen for the implementation because the DCARP framework will be supported in the future with the PM4Py process mining platform, which is written in Python. The experiments were performed on a laptop PC with the Windows 10 operating system, equipped with an Intel(R) Core(TM) i5-3320M 2.60 GHz 2-core CPU and 8 GB of RAM.

It must be noted that the HMA and the ACOPR were implemented based on their algorithmic descriptions (in [7] and in [8], respectively), because their original implementations are not available. Therefore, the implementations used in this work might contain errors that decrease the effectiveness of these algorithms.

In this section, first, the setups of the experiments are specified (Section 6.1). Next, the results of the CARP experiments (Section 6.2), the results of the operator experiments (Section 6.3), and the results of the DCARP experiments (Section 6.4) are discussed, in this order.

6.1. Experimental Setups

For the CARP experiments, five CARP instances of different sizes were used. The ABC algorithm, the HMA, and the ACOPR were each run independently 30 times on the CARP instances with a time limit of 10 min; the recorded outputs were then compared and analyzed.

For the DCARP experiments, one CARP instance of medium size was used. Since the initial DCARP instance is fundamentally a static CARP instance and the HMA is the most accurate metaheuristic currently known for the CARP, the HMA was used to obtain the initial solution. The travel and service logs and the events were generated with the algorithms introduced in [20]. For each event type, 15 events were independently generated, then executed on the initial instance, creating new DCARP instances. The RR1 algorithm, the ABC algorithm, and the HMA were each run independently on these instances with a time limit of 1 min; the recorded outputs were then compared and analyzed.

For the operator experiments, three CARP instances of different sizes were used. The sub-route plan operator and the other small step-size operators for the CARP (i.e., inversion, insertion, swap, and two-opt) were used as local search operators within the employed bee phase of the ABC algorithm. The employed bee phase was chosen instead of the onlooker bee phase so that the efficiency of the operators could be measured on solutions of different qualities, not only on good quality solutions. The (modified) ABC algorithm was run independently 30 times on the CARP instances with a time limit of 10 min; the recorded outputs were then compared and analyzed.

In the CARP and the DCARP experiments, each new global best solution found during the execution of an algorithm was recorded together with the time it took to find it (i.e., the elapsed time since the beginning of the execution of the algorithm), and these records were analyzed. In the operator experiments, the number of search trials in which the move operators found a better local best solution (i.e., ) was recorded and analyzed.
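The recording scheme for the CARP and DCARP experiments can be sketched as follows. This is a minimal illustration under stated assumptions, not the code used in the experiments: `run_with_log` and the `step` callback (one search cycle that returns the total cost of the best solution it produced) are hypothetical names, and the random `step` in the usage lines is only a stand-in for a real solver.

```python
import random
import time
from typing import Callable, List, Tuple


def run_with_log(step: Callable[[], float],
                 time_limit_s: float) -> List[Tuple[float, float]]:
    """Call `step` until the time limit is reached.

    Every time `step` returns a cost lower than the best seen so far,
    the pair (elapsed seconds, cost) is appended to the log.
    """
    log: List[Tuple[float, float]] = []
    best = float("inf")
    start = time.perf_counter()
    while time.perf_counter() - start < time_limit_s:
        cost = step()  # hypothetical: one cycle of the solver
        if cost < best:
            best = cost
            log.append((time.perf_counter() - start, best))
    return log


# Stand-in solver: random costs instead of a real search cycle.
rng = random.Random(0)
log = run_with_log(lambda: rng.uniform(3500.0, 4000.0), time_limit_s=0.05)
for elapsed, cost in log:
    print(f"{elapsed:.4f} s  {cost:.1f}")
```

Using a monotonic clock such as `time.perf_counter` keeps the elapsed times unaffected by system clock adjustments during a run.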

The used instances and the parameter settings of the used algorithms are specified in the subsections (in Section 6.1.1 and in Section 6.1.2, respectively).

6.1.1. Used Instances

To test the CARP solvers, benchmark test sets are often used in the literature. These test sets can be divided into two main categories: synthetic (e.g., containing randomly generated instances) [52,53,54] and real-life based (containing examples based on real road networks and tasks) [2,28,29].

Since testing an algorithm on all the instances would be time-consuming, only the following five instances were selected for the CARP experiments:

- “kshs1” from the KSHS set [54];

- “egl-e1-A” and “egl-s1-A” from the EGL set [2];

- “egl-g1-A” and “egl-g2-A” from EGL-Large set [29].

For the DCARP experiments, only the “egl-e1-A” instance was used. For the operator experiments, the “egl-e1-A”, “egl-s1-A”, and “egl-g1-A” instances were used.

The EGL and the EGL-Large sets originate from the data of a winter gritting application in Lancashire (UK). The EGL set contains 24 instances in which two different graphs are combined with various attribute values. The instances “egl-e1-A” and “egl-s1-A” were selected to represent one of each graph. The EGL-Large set contains 10 instances in which the graph is the same but the number of task edges is 347 in 5 instances and 375 in the other 5 instances. The instances “egl-g1-A” and “egl-g2-A” were selected to represent one from both kinds of instances.

The attributes of the five selected instances are briefly summarized in Table 2. These CARP instances were selected to represent CARPs of very different sizes, and thus of very different levels of complexity. The “kshs1” instance is a small synthetic CARP instance, which means a small search space for the algorithm, so in terms of problem difficulty it can be put into the easy category. The EGL instances are real-life based CARP instances, so they are naturally more complex. Based on their size, the difficulty of the “egl-e1-A” instance is medium, the difficulty of the “egl-s1-A” instance is hard, and the difficulty of the instances “egl-g1-A” and “egl-g2-A” is very hard.

Table 2.

Attributes of the used CARP instances.

6.1.2. Parameter Settings

The ABC algorithm was tested with multiple parameter settings. Based on the results, the following settings provided the best quality results without unnecessarily increasing the running time of the algorithm, thus these are used in the experiments:

- : 10;

- : 10,000;

- : 100;

- : 20;

- : 20.

According to the investigation in [47], the ideal parameter values for the GSTM operator are the following: , , , , , and , where n is the number of cities. In the experiments, the same parameter values were used for the sub-route plan move operator, the only difference being that n is the number of tasks within the selected route plan.

For the HMA and the ACOPR, the optimal parameter settings defined by the corresponding works [7,8] were used. For the RR1, no parameters are needed.

6.2. Results of the CARP Experiments

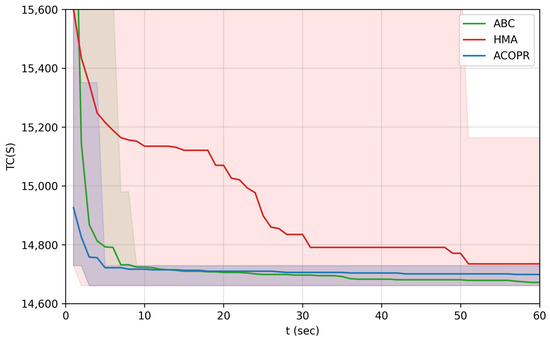

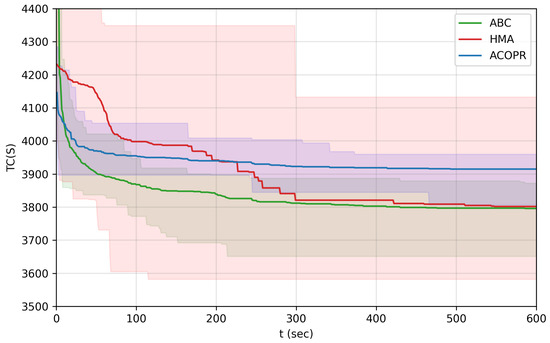

The charts in Figure 7, Figure 8 and Figure 9 show the convergence speed of 30 independent runs of the algorithms for the selected instances. As mentioned before, for these, each new global best solution and the time it took for the algorithm to find it were recorded. The y-axis shows the total cost of the solution, and the x-axis shows the elapsed time in seconds since the algorithm started running. The different colors indicate the outputs of the different algorithms. The colored lines indicate the average convergence speed, and the colored areas cover all the values that were recorded (i.e., the areas are enclosed by the minimum and maximum values). The closer the line is to the intersection of the axes, the better the convergence speed of the algorithm.

Figure 7.

The convergence speed of 30 independent runs of HMA, ACOPR, and ABC algorithms on the “kshs1” instance, plotted on one chart.

Figure 8.

The convergence speed of 30 independent runs of HMA, ACOPR, and ABC algorithm on the “egl-e1-A” instance, plotted on one chart, with time limit ( s).

Figure 9.

The convergence speed of 30 independent runs of HMA, ACOPR, and ABC algorithms on the “egl-s1-A” instance, plotted on one chart, with time limit (t ≤ 600 s).
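One possible way to derive the average line and the minimum/maximum band of such convergence charts from the recorded data is sketched below. The paper does not specify how the charts were produced, so this is an assumption: each run is taken to be a time-sorted list of `(elapsed seconds, total cost)` records, and the function names `best_at` and `convergence_band` as well as the numbers in the usage lines are made up for illustration.

```python
from typing import List, Sequence, Tuple

# Assumed format: (elapsed seconds, total cost) records, sorted by time.
Log = Sequence[Tuple[float, float]]


def best_at(log: Log, t: float) -> float:
    """Best cost recorded at or before time t (inf if nothing recorded yet)."""
    best = float("inf")
    for elapsed, cost in log:
        if elapsed > t:
            break
        best = min(best, cost)
    return best


def convergence_band(logs: Sequence[Log],
                     grid: Sequence[float]) -> List[Tuple[float, float, float, float]]:
    """For each time t in grid, return (t, min, mean, max) over all runs."""
    rows = []
    for t in grid:
        values = [best_at(log, t) for log in logs]
        rows.append((t, min(values), sum(values) / len(values), max(values)))
    return rows


# Two made-up runs, sampled at t = 2 s and t = 5 s.
logs = [
    [(1.0, 500.0), (4.0, 450.0)],
    [(2.0, 520.0), (3.0, 440.0)],
]
for row in convergence_band(logs, [2.0, 5.0]):
    print(row)
```

The time grid should start after every run has recorded its first solution; otherwise `best_at` returns infinity for the runs that have nothing recorded yet, which distorts the mean and the maximum.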

In the case of the “kshs1” instance (Figure 7), it can be seen that the ACOPR and the ABC algorithm converge around twice as fast as the HMA. However, even though the speed of the ACOPR and the ABC algorithm is nearly the same, the ACOPR algorithm failed to find the best solution in 30 runs, which makes the ABC algorithm the best solver for CARPs of small size like the “kshs1” instance.

In the case of the “egl-e1-A” instance (Figure 8), the differences between the convergence speeds of the algorithms start to show. It can be seen that in all cases, the ABC algorithm finds better solutions than the ACOPR algorithm, and finds them faster. The HMA has a very slow cycle time, thus it has a very slow convergence speed as well. If time is not taken into account, the HMA can generally provide better solutions than the other algorithms.

In Table 3, the total cost of the globally best solution within different time limits is examined, based on 30 independent runs of the ABC algorithm and the HMA. The calculated statistics are the following: minimum (Min.), maximum (Max.), average (Avg.), and standard deviation (Std.). It can be seen that within 1 min, the ABC algorithm always provided better solutions. Within 5 min, in some cases, the HMA found better results (it has a smaller Min. value), but on average the ABC algorithm still performed better (it has smaller Max. and Avg. values). Nevertheless, within 10 or more minutes, the HMA provided better solutions. Regardless of the time limit, the ABC algorithm is slightly more stable than the HMA in terms of how much the solution varies between runs (it has smaller Std. values).

Table 3.

Statistics of the total cost of the globally best solution of the HMA and the ABC algorithms on the “egl-e1-A” instance, within different time limits.
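The statistics in such a table can be computed from the recorded data along the following lines. This is a hedged sketch: the log format (`(elapsed seconds, total cost)` records per run), the function names, and the numbers in the usage lines are assumptions for illustration, and the sample standard deviation (`statistics.stdev`) is used here although the paper does not state whether the sample or the population formula was applied.

```python
import statistics
from typing import Dict, Sequence, Tuple

# Assumed format: (elapsed seconds, total cost) records of one run.
Log = Sequence[Tuple[float, float]]


def best_within(log: Log, limit_s: float) -> float:
    """Lowest total cost recorded within the first limit_s seconds of a run."""
    costs = [cost for elapsed, cost in log if elapsed <= limit_s]
    if not costs:
        raise ValueError("no solution was recorded within the time limit")
    return min(costs)


def summarize(logs: Sequence[Log], limit_s: float) -> Dict[str, float]:
    """Min., Max., Avg., and Std. of the best cost over all runs."""
    values = [best_within(log, limit_s) for log in logs]
    return {
        "Min.": min(values),
        "Max.": max(values),
        "Avg.": statistics.mean(values),
        "Std.": statistics.stdev(values),  # assumption: sample standard deviation
    }


# Three made-up runs, summarized for a 60 s time limit.
logs = [
    [(10.0, 3700.0), (50.0, 3600.0), (200.0, 3550.0)],
    [(5.0, 3620.0)],
    [(20.0, 3650.0), (55.0, 3640.0), (90.0, 3600.0)],
]
print(summarize(logs, 60.0))
```

Calling `summarize` once per time limit (for example 60, 300, and 600 s) yields one row of the table per limit.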

In the case of the “egl-s1-A” instance (Figure 9 and Table 4), the difference between the convergence speed of the HMA and that of the ABC algorithm is more complex. It can be seen that before 200 s, the ABC algorithm performs better; between 200 and 400 s, they perform about the same; and after 400 s, the HMA performs better.

Table 4.

Statistics of the total cost of the globally best solution of the HMA and the ABC algorithms on the “egl-s1-A” instance, within different time limits.

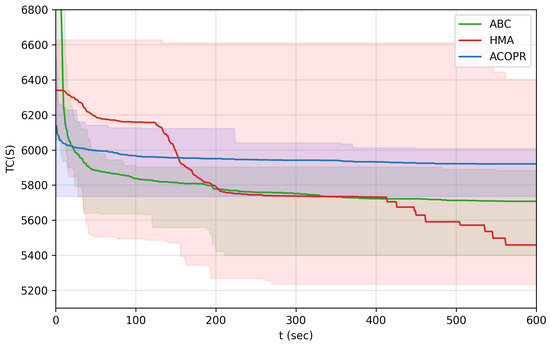

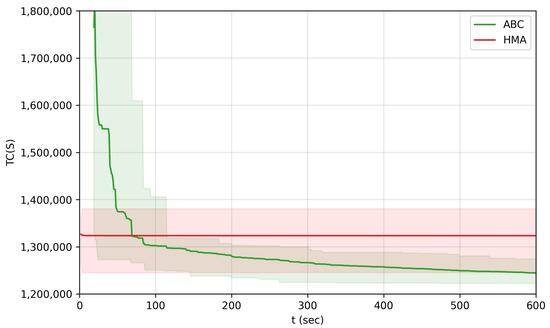

The results were similar for the “egl-g1-A” and “egl-g2-A” instances (Figure 10, Table 5, and Table 6). In most of the runs, the set time limit was not enough for the HMA to improve its initial solution, so only its initial solution was recorded. That is why the graph for the HMA looks like a straight line in Figure 10. As a result, in the measured time period, the ABC algorithm performed better than the HMA after around 100 s.

Figure 10.

The convergence speed of 30 independent runs of HMA and ABC algorithms on the “egl-g1-A” instance, plotted on one chart, with time limit (t ≤ 600 s).

Table 5.

Statistics of the total cost of the globally best solution of the HMA and the ABC algorithms on the “egl-g1-A” instance, within different time limits.

Table 6.

Statistics of the total cost of the globally best solution of the HMA and the ABC algorithms on the “egl-g2-A” instance, within different time limits.

Based on the results, it can be concluded that the ABC algorithm can provide a good enough solution within a short amount of time. Since it has a small cycle time, the best global solution can be updated more frequently. The ABC algorithm outperforms the ACOPR algorithm in both examined aspects: it has a faster convergence speed and finds better quality solutions. Furthermore, it is competitive with the HMA when the running time of the algorithms is limited to a short time interval.

6.3. Results of the Operator Experiments

The results of the operator experiments are summarized in Table 7. Each row shows, for the instance given in the first column, the percentage of trials in which the operator given in the column header found a better solution, relative to the total number of trials in which a better solution was found for that instance. For the sake of simplicity, let us call this measure efficiency. It can be seen that the sub-route plan operator has the highest efficiency in all three cases; thus, among the examined operators, it has the highest chance of improving the current solution, regardless of the problem size.

Table 7.

The efficiency of the move operators compared with each other within the CARP-ABC algorithm, measured on instances of different sizes.
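The efficiency measure defined above is a simple share of the improving trials, which the following sketch makes explicit. The function name `efficiency` and the trial counts are made up for illustration and do not correspond to the measured values.

```python
from typing import Dict


def efficiency(improving_trials: Dict[str, int]) -> Dict[str, float]:
    """Share (in percent) of the improving trials attributed to each operator.

    `improving_trials` maps an operator name to the number of search
    trials in which that operator found a better solution on one instance.
    """
    total = sum(improving_trials.values())
    if total == 0:
        raise ValueError("no improving trial was recorded")
    return {op: 100.0 * n / total for op, n in improving_trials.items()}


# Made-up counts for a single instance.
counts = {
    "inversion": 20,
    "insertion": 10,
    "swap": 10,
    "two-opt": 10,
    "sub-route plan": 50,
}
for op, share in efficiency(counts).items():
    print(f"{op}: {share:.1f}%")
```

By construction, the efficiencies of all operators on one instance sum to 100%, so each row of the table describes how the improving trials are distributed among the operators.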

A correlation can be observed between the size and complexity of the CARP instance and the efficiency of the operators. As mentioned before, the complexity of the “egl-e1-A” instance is medium, the “egl-s1-A” instance is difficult, and the “egl-g1-A” instance is the most difficult. As the size of the problem increases, the efficiency of the inversion and the sub-route plan operators increases compared to the other operators, and as the size of the problem decreases, the efficiency of the insertion, the swap, and the two-opt operators increases compared to the other operators.