Abstract

Metaheuristics are proven solutions for complex optimization problems. Recently, bio-inspired metaheuristics have shown their capabilities for solving complex engineering problems. The Whale Optimization Algorithm (WOA) is a popular metaheuristic based on the hunting behavior of whales. For some problems, this algorithm suffers from local minima entrapment. To make WOA compatible with a number of challenging problems, two major modifications are proposed in this paper: the first is opposition-based learning in the initialization phase, and the second is the incorporation of a Cauchy mutation operator in the position updating phase. The proposed variant is named the Augmented Whale Optimization Algorithm (AWOA) and is tested on two benchmark suites, i.e., classical benchmark functions and the latest CEC-2017 benchmark functions for 10-dimension and 30-dimension problems. Various analyses, including convergence property analysis, boxplot analysis and Wilcoxon rank sum test analysis, show that the proposed variant possesses better exploration and exploitation capabilities. In addition, the application of AWOA is reported for three real-world problems from various disciplines. The results reveal that the proposed variant exhibits better optimization performance.

MSC:

68T01; 68T05; 68T07; 68T09; 68T20; 68T30

1. Introduction and Literature Review

Optimization is the process of selecting the best solution from a given set of alternatives. Optimization processes are evident everywhere around us. For example, to run a generating company, the operator has to take care of operating costs and deal with various types of markets to execute financial transactions. The operator has to optimize the fuel purchase cost, sell the power at the maximum rate and purchase the carbon credits at minimum cost to earn a profit. Sometimes, optimization processes involve various stochastic variables to model the uncertainty in the process. Such processes are quite difficult to handle and often pose a severe challenge to the optimizer or solution provider algorithms. The evolution of modern optimizers is the outcome of these complex combinatorial multimodal nonlinear optimization problems. Unlike classical optimizers, where the search starts from an initial guess, these modern optimizers are based on stochastic variables, and hence, they are less vulnerable to local minima entrapment. These problems have become the main driver for the emergence of metaheuristic algorithms, which are capable of finding a near-optimal solution in less computation time. The popularity of metaheuristic algorithms [1] has increased exponentially in the last two decades due to their simplicity, derivative-free mechanism, flexibility and capacity to provide better results in comparison with conventional methods. The main inspiration of these algorithms is nature, and hence, they are also known as nature-inspired algorithms [2].

Social mimicry of nature and living processes, behavior analysis of animals and cognitive viability are some of the attributes of nature-inspired algorithms. Darwin’s theory of evolution has inspired some nature-inspired algorithms, based on the property of “inheritance of good traits” and “competition, i.e., survival of the fittest”. These algorithms are Genetic Algorithm [3], Differential Evolution and Evolutionary Strategies [4].

The other popular philosophy is to mimic the behavior of animals that search for food. In these approaches, food or prey is used as a metaphor for the global minimum in mathematical terms. Exploration, exploitation and convergence towards the global minimum are mapped to animal behavior. Most nature-inspired algorithms, also known as population-based algorithms, can further be classified as:

- Bio-inspired Swarm Intelligence (SI)-based algorithms: This category includes all algorithms inspired by any behavior of swarms or herds of animals or birds. Since most birds and animals live in a flock or group, there are many algorithms that fall under this category, such as Ant Colony Optimization (ACO) [5], Artificial Bee Colony [6], Bat Algorithm [7], Cuckoo Search Algorithm [8], Krill Herd Algorithm [9], Firefly Algorithm [10], Grey Wolf Optimizer [11], Bacterial Foraging Algorithm [12], Social Spider Algorithm [13], Cat Swarm Optimization [14], Moth Flame Optimization [15], Ant Lion Optimizer [16], Crow Search Algorithm [17] and Grasshopper Optimization Algorithm [18]. A social interaction-based algorithm named gaining and sharing knowledge was proposed in reference [19]. References pertaining to the applications of bio-inspired algorithms affirm the suitability of these algorithms for real-world problems [20,21,22,23]. A timeline of some famous bio-inspired algorithms is presented in Figure 1.

Figure 1. Development timeline of some of the leading bio-inspired algorithms.

- Physics- or chemistry-based algorithms: Algorithms developed by mimicking any physical or chemical law fall under this category. Some of them are Big Bang-Big Crunch Optimization [24], Black Hole [25], Gravitational Search Algorithm [26], Central Force [27] and Charged System Search [28].

Other than these population-based algorithms, a few different algorithms have also been proposed to solve specific mathematical problems. In [29,30], the authors proposed the concept of construction, solution and merging. Another Greedy randomised adaptive search-based algorithm using the improved version of integer linear programming was proposed in [31].

The No Free Lunch Theorem proposed by Wolpert et al. [32] states that no single metaheuristic algorithm can provide the best solution for all optimization problems. An algorithm may be very effective for solving certain problems but ineffective in solving others. Due to the popularity of nature-inspired algorithms in providing reasonable solutions to complex real-life problems, many new nature-inspired optimization techniques are being proposed in the literature. It is interesting to note that all bio-inspired algorithms are subsets of nature-inspired algorithms. Among all of these algorithms, the popularity of bio-inspired algorithms has increased exponentially in recent years. Despite this popularity, these algorithms have also been critically reviewed [33].

In 2016, Mirjalili et al. [34] proposed a new nature-inspired algorithm called the Whale Optimization Algorithm (WOA), inspired by the bubble-net hunting behavior of humpback whales. The humpback whale belongs to the rorqual family of whales, known for their huge size. An adult can be 12–16 m long and weigh 25–30 metric tons. They have a distinctive body shape and are known for their breaching behavior in water with astonishing gymnastic skills and for the haunting songs sung by males during their migration period. Humpback whales eat schools of small fish and krill. For hunting their prey, they follow a unique strategy of encircling the prey spirally, while gradually shrinking the size of the circles of this spiral. With the incorporation of this strategy, the performance of WOA is superior to many other nature-inspired algorithms. Recently, in [35], WOA was used to solve the optimization problem of the truss structure. WOA has also been used to solve the well-known economic dispatch problem in [36]. The problem of unit commitment in electric power generation was solved through WOA in [37]. In [38], the author applied WOA to the long-term optimal operation of a single reservoir and cascade reservoirs. The following are the main reasons to select WOA:

- There are few parameters to control, so it is easy to implement and very flexible.

- This algorithm has a specific characteristic to transit between exploration and exploitation phases, as both of these include one parameter only.

However, WOA sometimes suffers from a slow convergence speed and local minima entrapment due to the random size of the population. To overcome these shortcomings, in this paper, we propose two major modifications to the existing WOA:

- The first modification is the incorporation of the opposition-based learning (OBL) concept in the initialization phase of the search process, or in other words, the exploratory stage. OBL is a proven tool for enhancing the exploration capabilities of metaheuristic algorithms.

- The second modification concerns the position updating phase, in which the position vector is updated with the help of Cauchy numbers.

The remaining part of this paper is organized as follows: Section 2 describes the mathematical details of WOA. Section 3 presents the proposed variant; an analogy based on the modified position update is also established with the proposed mathematical framework. Section 4 includes the details of the benchmark functions. Section 5 and Section 6 show the results on the benchmark functions and on some real-life problems, along with different statistical analyses. Finally, the paper concludes in Section 7 with a decisive evaluation of the results, and some future directions are indicated.

2. Mathematical Framework of WOA

The mathematical model of WOA can be presented in three steps: prey encircling, exploitation phase through bubble-net and exploration phase, i.e., prey search.

- Prey encircling: Humpback whales choose their target prey through their capacity to find the location of prey. The best search agent is followed by other search agents to update their positions, which can be given mathematically as:

  $$\vec{D} = \left| \vec{C} \cdot \vec{X}^{*}(s) - \vec{X}(s) \right|$$

  $$\vec{X}(s+1) = \vec{X}^{*}(s) - \vec{A} \cdot \vec{D}$$

  where $\vec{X}^{*}$ denotes the position vector of the best obtained solution, $\vec{X}$ is the position vector, s is the current iteration, $|\cdot|$ denotes the absolute value and · denotes the element to element multiplication. The coefficients $\vec{A}$ and $\vec{C}$ can be calculated as follows:

  $$\vec{A} = 2\vec{a} \cdot \vec{r} - \vec{a}$$

  $$\vec{C} = 2\vec{r}$$

  where $\vec{a}$ linearly decreases with every iteration from 2 to 0 and $\vec{r}$ is a random vector in $[0, 1]$. By adjusting the values of vectors $\vec{A}$ and $\vec{C}$, the current position of the search agents is shifted towards the best position. This position updating process in the neighborhood direction also helps in encircling the prey n dimensionally.

- Exploitation phase through bubble-net: The value of $\vec{a}$ decreases from 2 to 0. Due to changes in $\vec{a}$, $\vec{A}$ also fluctuates and represents the shrinking behavior of search agents. By choosing random values of $\vec{A}$ in the interval $[-1, 1]$, the humpback whale updates its position. In this process, the whale swims towards the prey spirally and the circles of the spiral slowly shrink in size. This shrinking of the spirals in a helix-shaped movement can be mathematically modeled as:

  $$\vec{X}(s+1) = \vec{D}' \cdot e^{bl} \cdot \cos(2\pi l) + \vec{X}^{*}(s)$$

  where $\vec{D}' = \left| \vec{X}^{*}(s) - \vec{X}(s) \right|$ is the distance between the whale and the prey, b is the constant factor responsible for the shape of the spirals and l randomly belongs to the interval $[-1, 1]$. In the position updating phase, whales can choose either model, i.e., the shrinking mechanism or the spiral mechanism. The probability of each behavior is assumed to be 50% during the optimization process. The combined equation of both of these behaviors can be represented as:

  $$\vec{X}(s+1) = \begin{cases} \vec{X}^{*}(s) - \vec{A} \cdot \vec{D}, & p < 0.5 \\ \vec{D}' \cdot e^{bl} \cdot \cos(2\pi l) + \vec{X}^{*}(s), & p \geq 0.5 \end{cases}$$

  where p is a random number in $[0, 1]$.

- Exploration phase: In this phase, $\vec{A}$ is chosen opposite to the exploitation phase, i.e., the value of $\vec{A}$ must be >1 or <−1, so that the humpback whales can move away from each other, which increases the exploration rate. This phenomenon can be represented mathematically as:

  $$\vec{D} = \left| \vec{C} \cdot \vec{X}_{rand} - \vec{X} \right|$$

  $$\vec{X}(s+1) = \vec{X}_{rand} - \vec{A} \cdot \vec{D}$$

  where $\vec{X}_{rand}$ represents the position of a random whale. Once the termination criterion is met, the optimization process finishes. The main feature of WOA is the dual mechanism of circular shrinking and spiral path, which strengthens the exploitation process of finding the best position around the prey. The exploration phase, in turn, covers a larger area through the random selection of the values of $\vec{A}$.
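The three steps above can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' implementation: the names `woa_step` and `run_woa`, the spiral constant `b = 1`, the bound clipping, the sphere objective, and the use of one scalar draw of A and C per whale (instead of full coefficient vectors) are all assumptions of this sketch.

```python
import math
import random


def woa_step(positions, best, a, rng, b=1.0):
    """One WOA position update for every whale (hypothetical helper).

    positions: list of position vectors; best: best position found so far;
    a: control parameter that the caller decreases from 2 towards 0;
    b: spiral shape constant (b = 1 is an assumption of this sketch).
    """
    new_positions = []
    for x in positions:
        A = 2.0 * a * rng.random() - a  # A lies in [-a, a]
        C = 2.0 * rng.random()          # C lies in [0, 2]
        p = rng.random()                # selects shrinking/search or spiral
        if p < 0.5:
            # |A| < 1: encircle the best whale; otherwise search around a random whale
            ref = best if abs(A) < 1.0 else rng.choice(positions)
            new_x = [r - A * abs(C * r - xi) for r, xi in zip(ref, x)]
        else:
            l = rng.uniform(-1.0, 1.0)
            spiral = math.exp(b * l) * math.cos(2.0 * math.pi * l)
            new_x = [abs(bi - xi) * spiral + bi for bi, xi in zip(best, x)]
        new_positions.append(new_x)
    return new_positions


def sphere(x):
    """Toy objective used only for this illustration."""
    return sum(v * v for v in x)


def run_woa(objective, dim=5, n_whales=20, iters=200, lo=-10.0, hi=10.0, seed=0):
    rng = random.Random(seed)
    positions = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_whales)]
    best = list(min(positions, key=objective))
    for s in range(iters):
        a = 2.0 - 2.0 * s / iters  # linear decrease from 2 towards 0
        positions = woa_step(positions, best, a, rng)
        # keep every whale inside the search bounds (an assumption of this sketch)
        positions = [[min(max(v, lo), hi) for v in x] for x in positions]
        candidate = min(positions, key=objective)
        if objective(candidate) < objective(best):
            best = list(candidate)
    return best, objective(best)
```

Calling `run_woa(sphere)` returns the best position found and its objective value; because the best solution is only replaced by a strictly better one, the returned value never exceeds that of the best initial whale.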

3. Motivation and Development of the Augmented Whale Optimization Algorithm

It is observed in previously reported applications that inserting mutation in population-based schemes can enhance the performance of the optimization. Some noteworthy applications are reported in [39].

3.1. Augmented Whale Optimization Algorithm (AWOA)

Motivated by the modified position update, we present the development of AWOA and the mathematical steps we have incorporated. To simulate the behavior of whales through the modified position update and its connection to the position update mechanism for mating, we require two mechanisms:

- The mechanism that puts the whales in diverse directions.

- The mechanism that updates the positions of the whales by using a mathematical signal.

3.1.1. The Opposition-Based Position Update Method

For simulating the first mechanism, we choose the opposition number generation theory that was proposed by H. R. Tizhoosh. Opposition-based learning is the concept that puts the search agents in diverse (or rather, opposite) directions so that the search for optima can also be initiated from opposite directions. This theory has been applied in many metaheuristic algorithms, and it is now well established that the search capabilities of the optimizer can be substantially enhanced by the application of this opposition number generation technique. Some recent papers have provided evidence of this [40,41]. In these approaches, the impact of opposition-based learning can be easily seen. Furthermore, a rich review of the techniques related to opposition, their application areas and a performance-wise comparison can be read in [42,43].

The following points can be taken as some positive arguments in favor of the application of the oppositional number generation theory (ONGT) concept:

- While solving multimodal optimization problems, it is required that an optimizer should start a process from the point which is nearer to the global optima; in some cases, the loose position update mechanism becomes a potential cause for local minima entrapment. The ONGT becomes a helping hand in such situations, as it places search agents in diverse directions, and hence, the probability of local minima entrapment is substantially decreased.

- In real applications, where the shape and nature of objective functions are unknown, the ONGT can be a beneficial tool because if the function is unimodal in nature, as per the research, the exploration capabilities of any optimizers can be substantially enhanced by the application of ONGT. On the other hand, if the function is multimodal in nature, then ONGT will help search agents to acquire opposite positions and help the optimizer’s mechanism to converge to global optima.

For the reader’s benefit, we are incorporating some definitions of opposite points in search space for a 2D and multidimensional space.

Definition 1.

Let $x \in [a, b]$ be a real number, where the opposite number $\breve{x}$ of x is defined by:

$$\breve{x} = a + b - x$$

The same holds for Q dimensional space.

Definition 2.

Let be a point in Q dimensional space, where and ; the opposite points matrix can be given by . Hence:

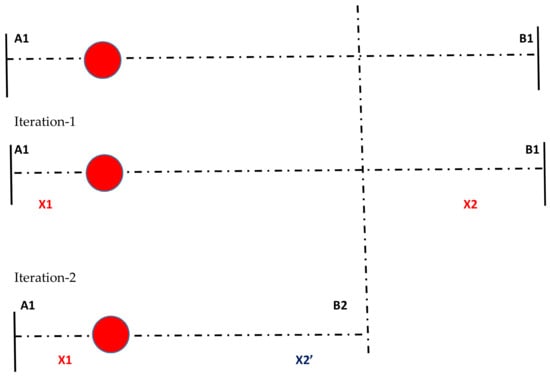

where and are the lower limit and upper limit, respectively. Furthermore, Figure 2 illustrates the search process of ONGT, where A1 and B1 are the search boundaries, and it shrinks as the iterative process progresses.

Figure 2.

Solving the one-dimensional problem by recursively halving the search interval.
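The opposite-point definitions can be illustrated with a short Python sketch of an opposition-based initialization. The function names are hypothetical, and the selection rule used here (keep the fittest half of the union of the random population and its opposites) is a common OBL convention assumed for illustration; the text does not state which rule AWOA uses.

```python
import random


def opposite_point(x, lower, upper):
    """Opposite of x, dimension by dimension: lower_i + upper_i - x_i."""
    return [lo + hi - xi for xi, lo, hi in zip(x, lower, upper)]


def obl_initialize(objective, n_agents, lower, upper, rng):
    """Random population plus its opposites, reduced to the n_agents fittest points.

    The "keep the fittest" rule is an assumption of this sketch.
    """
    population = [[rng.uniform(lo, hi) for lo, hi in zip(lower, upper)]
                  for _ in range(n_agents)]
    opposites = [opposite_point(x, lower, upper) for x in population]
    return sorted(population + opposites, key=objective)[:n_agents]
```

For example, with bounds [0, 4] and [−5, 5], the opposite of the point (1, −2) is (3, 2), since 0 + 4 − 1 = 3 and −5 + 5 − (−2) = 2.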

3.1.2. Position Updating Mechanism Based on the Cauchy Mutation Operator

For simulating the second mechanism, we require a signal that is a close replica of a whale song. In the literature, a significant amount of work has been done on the application of the Cauchy mutation operator due to the following reasons:

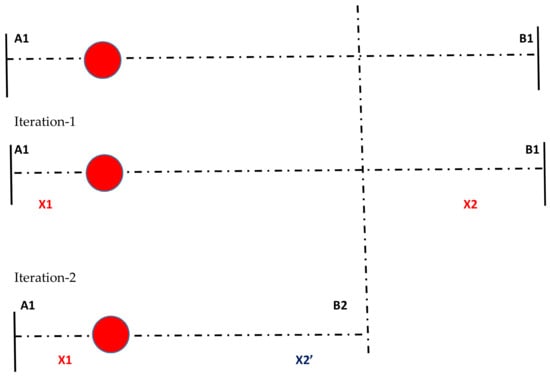

- Since the expectation of the Cauchy distribution is not defined, the variance of this distribution is infinite; due to this fact, the Cauchy operators sometimes generate a very long jump as compared to normally distributed random numbers [44,45]. This phenomenon can be observed in Figure 3.

Figure 3. Whale position update inspired from Cauchy Distribution.

- It is also shown in [44] that the Cauchy distribution generates an offspring far from its parents; hence, the avoidance of local minima can be achieved.

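In the proposed AWOA, the position update mechanism is derived from the Cauchy distribution operator. The Cauchy density function of the distribution is given by:

$$f_t(x) = \frac{1}{\pi} \frac{t}{t^2 + x^2}, \quad -\infty < x < \infty$$

where t is the scaling parameter and the corresponding distribution function can be given as:

$$F_t(x) = \frac{1}{2} + \frac{1}{\pi} \arctan\left(\frac{x}{t}\right)$$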

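First, a random number $y \in (0, 1)$ is generated, after which a Cauchy-distributed random number $\delta$ is generated by using the following equation:

$$\delta = t \tan\left(\pi \left(y - \frac{1}{2}\right)\right)$$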

We assume that is a whale position update generated by the search agents and on the basis of this signal, the position of the whale is updated. Furthermore, we define a position-based weight matrix of position vector of whale, which is given as:

where is a weight vector and NP is the population size of whale. Furthermore, the position update equation can be modified as:

Summarizing all the points discussed in this section, we propose two mechanisms for improving the performance of WOA. The first is the opposition-based learning concept, which places whales in diverse directions to explore the search space effectively. The second is a position update mechanism based on whale behaviour (the modified position update); to simulate the whale song, we employ Cauchy numbers. Hence, both of these mechanisms can be beneficial for enhancing the exploration and exploitation capabilities of WOA. In the next section, we evaluate the performance of the proposed variant on some conventional and CEC-17 benchmark functions.
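The Cauchy sampling step of Section 3.1.2 can be sketched as follows. The sampler inverts the Cauchy distribution function with scale t and zero location. The function names are hypothetical, and the scalar `weight` in `cauchy_mutate` is only a stand-in for the paper's position-based weight vector, whose exact form is not reproduced here.

```python
import math
import random


def cauchy_cdf(x, t=1.0):
    """Distribution function of a zero-centred Cauchy variable with scale t."""
    return 0.5 + math.atan(x / t) / math.pi


def cauchy_sample(rng, t=1.0):
    """Draw a Cauchy number by inverting the distribution function."""
    y = rng.random()  # uniform random number in [0, 1)
    return t * math.tan(math.pi * (y - 0.5))


def cauchy_mutate(position, rng, t=1.0, weight=1.0):
    """Perturb each coordinate with a Cauchy step.

    `weight` is a hypothetical scalar placeholder for the weight vector.
    """
    return [xi + weight * cauchy_sample(rng, t) for xi in position]


def tail_fraction(samples, threshold=3.0):
    """Share of samples whose magnitude exceeds the threshold."""
    return sum(1 for s in samples if abs(s) > threshold) / len(samples)
```

Comparing `tail_fraction` for a few thousand Cauchy draws with the same quantity for standard normal draws (for example from `rng.gauss(0.0, 1.0)`) makes the long jumps visible: roughly one in five Cauchy samples with t = 1 exceeds 3 in magnitude, whereas for the standard normal distribution this happens in well under 1% of draws.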

4. Benchmark Test Functions

Benchmark functions are a set of functions with different known characteristics (separability, modality and dimensionality) and are often used to evaluate the performance of optimization algorithms. In the present paper, we measure the performance of our proposed variant AWOA through two benchmark suites.

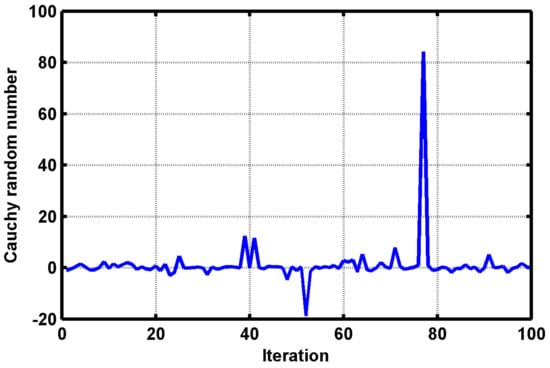

- Benchmark Suite 1: In this suite, 23 conventional benchmark functions are considered, out of which 7 are unimodal and the rest are multimodal and fixed-dimension functions. The details of the benchmark functions, such as mathematical definition, minima, dimensions and range, are given in Table 1. For further details, one can refer to [46,47,48]. The shapes of the benchmark functions used are given in Figure 4.

Table 1. Details of Benchmark Functions Suite 1.

Figure 4. Benchmark Suite 1.

- Benchmark Suite 2: To further benchmark our proposed variant, we also choose a set of 29 functions of diverse nature from CEC 2017. Table 2 showcases the minor details of these functions. For other details, such as optima and mathematical definitions, one can refer to [49].

Table 2. Details of CEC-2017 (Benchmark Suite 2).
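To make the distinction between unimodal and multimodal benchmark functions concrete, the following sketch defines one function of each kind. The sphere and Rastrigin functions are standard textbook examples chosen here for illustration; they are assumptions of this sketch and are not taken from Table 1 or Table 2.

```python
import math


def sphere(x):
    """Unimodal: a single minimum, f = 0 at the origin."""
    return sum(v * v for v in x)


def rastrigin(x):
    """Multimodal: many local minima, global minimum f = 0 at the origin."""
    return sum(v * v - 10.0 * math.cos(2.0 * math.pi * v) + 10.0 for v in x)
```

In one dimension, the Rastrigin function has a local minimum near x = 1 with a value close to 1, separated from the global minimum at x = 0 by a ridge that peaks near x = 0.5. An optimizer that only descends locally from x = 1 therefore never reaches the origin, which is the kind of entrapment the proposed modifications target.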


5. Result Analysis

In this section, various analyses that check the efficacy of the proposed modifications are presented. To judge the optimization performance of the proposed AWOA, we have chosen some recently developed variants of WOA for comparison purposes. These variants are:

- Lévy flight trajectory-based whale optimization algorithm (LWOA) [50].

- Improved version of the whale optimization algorithm that uses the opposition-based learning, termed OWOA [41].

- Chaotic Whale Optimization Algorithm (CWOA) [51].

5.1. Benchmark Suite 1

Table 3 shows the optimization results of AWOA on Benchmark Suite 1 along with those of the leading WOA variants. The table shows the mean and standard deviation (SD) of the function values over 30 independent runs. The maximum number of function evaluations is set to 15,000. The first four functions in the table are unimodal functions. Benchmarking any algorithm on unimodal functions gives us information about the exploitation capabilities of the algorithm. Inspecting the results of the proposed AWOA on unimodal functions, it can be easily observed that its mean values are very competitive as compared with the other variants of WOA.

Table 3.

Results of Benchmark Suite-1.

For the rest of the functions, the indicated mean values are competitive, and the best results are indicated in bold face. From this statistical analysis, we can conclude that the proposed modifications in AWOA are meaningful and have a positive effect on its optimization performance, especially on unimodal functions. Similarly, the multimodal functions BF-7, BF-9 to 11, BF-15 to 19 and BF-22 have optimal mean values. We observed that the mean values are competitive for the rest of the functions and that the performance of the proposed AWOA has not deteriorated.

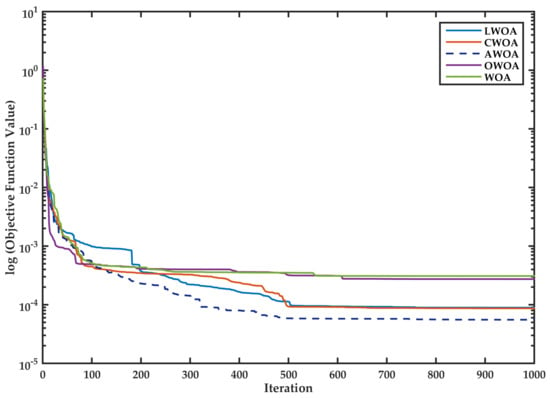

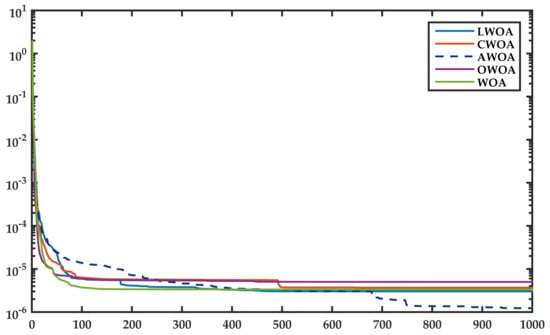

5.1.1. Convergence Property Analysis

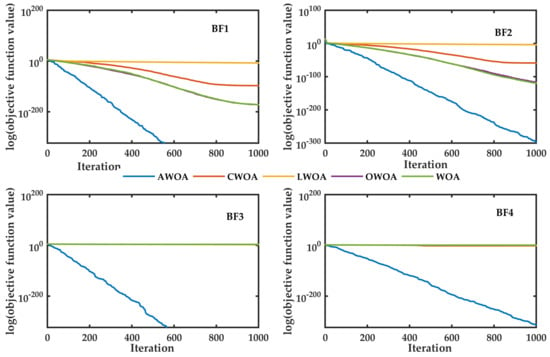

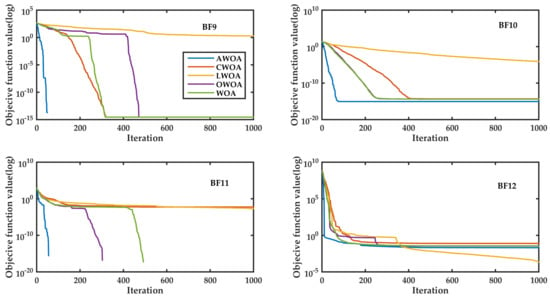

The convergence plots for functions BF1 to BF4 are shown in Figure 5 for the sake of clarity. From these convergence curves, it is observed that the proposed variant shows better convergence characteristics and that the proposed modifications are fruitful in enhancing the convergence and exploration properties of WOA. It can be seen that AWOA converges very swiftly as compared to its competitors. It is to be noted here that BF1–BF4 are unimodal functions, and the performance of AWOA on unimodal functions indicates enhanced exploitation properties. Furthermore, to showcase the optimization capabilities of AWOA on multimodal functions, the convergence plots for BF9 to BF12 are exhibited in Figure 6. From these results, it can be concluded that the proposed AWOA is also competitive on multimodal functions.

Figure 5.

Convergence property analysis of unimodal functions.

Figure 6.

Convergence property analysis of multimodal functions.

5.1.2. Wilcoxon Rank Sum Test

A rank sum test analysis has been conducted, and the p-values of the test are indicated in Table 4. We report the p-values of the Wilcoxon rank sum test at a 5% level of significance [52]. Values indicated in boldface are less than 0.05, which indicates that there is a significant difference between the results of AWOA and those of its opponents.

Table 4.

Results of Wilcoxon rank sum test of AWOA.
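For readers who want to reproduce this kind of comparison, the following sketch computes a two-sided Wilcoxon rank sum test with the large-sample normal approximation. It is a plain-Python illustration under stated assumptions: the function name is hypothetical, ties receive average ranks, and no tie or continuity correction is applied to the variance. The statistical routine actually used for Table 4 is not specified in the text.

```python
import math


def rank_sum_test(sample_a, sample_b):
    """Two-sided Wilcoxon rank sum test (normal approximation).

    Returns (z, p_value). Ties receive the average of the ranks they span.
    """
    n1, n2 = len(sample_a), len(sample_b)
    # pool both samples, remembering which group each value came from
    pooled = sorted((v, g) for g, sample in enumerate((sample_a, sample_b))
                    for v in sample)
    rank_sum_a = 0.0
    i = 0
    while i < len(pooled):
        j = i
        while j < len(pooled) and pooled[j][0] == pooled[i][0]:
            j += 1
        avg_rank = (i + 1 + j) / 2.0  # average of ranks i+1 .. j
        rank_sum_a += avg_rank * sum(1 for k in range(i, j) if pooled[k][1] == 0)
        i = j
    expected = n1 * (n1 + n2 + 1) / 2.0
    std = math.sqrt(n1 * n2 * (n1 + n2 + 1) / 12.0)
    z = (rank_sum_a - expected) / std
    p_value = math.erfc(abs(z) / math.sqrt(2.0))  # two-sided tail probability
    return z, p_value
```

In use, `sample_a` and `sample_b` would hold the final objective values of two algorithms over their independent runs; a returned p-value below 0.05 corresponds to a boldface entry in the sense described above.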

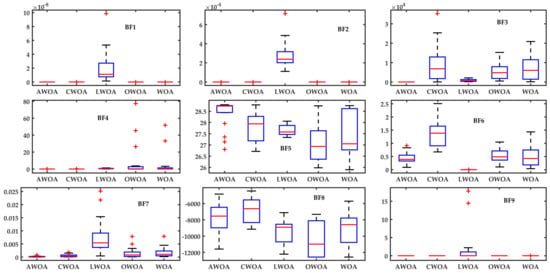

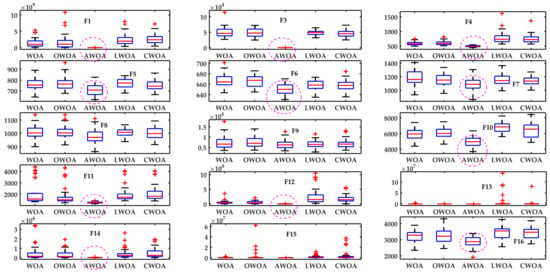

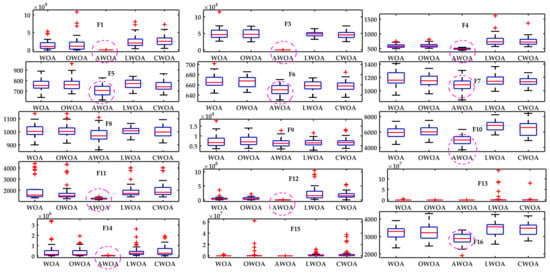

5.1.3. Boxplot Analysis

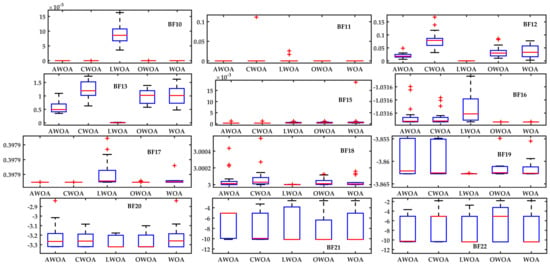

To present a fair comparison between AWOA and its opponents, we have plotted boxplots and convergence curves of some selected functions. Figure 7 shows the boxplots of functions BF1–BF12. From the boxplots, it is observed that the widths of the boxplots of AWOA are optimal in these cases; hence, it can be concluded that the optimization performance of AWOA is competitive with other variants of WOA. The mean values shown in the boxplots are also optimal for these functions. The performance of AWOA on the remaining functions of this suite is depicted through the boxplots shown in Figure 8. From these, it can be concluded that the performance of the proposed AWOA is competitive, as the mean values depicted in the plots are optimal for most of the functions.

Figure 7.

Boxplot analysis of Benchmark Suite 1.

Figure 8.

Boxplot analysis of the remaining functions of Benchmark Suite 1.

5.2. Benchmark Suite 2

In this section, we report the results of the proposed variant on CEC17 functions. The details of the CEC17 functions are given in Table 2. To check the applicability of the proposed variant on higher as well as lower dimension functions, 10- and 30-dimension problems are chosen deliberately. While performing the simulations, we have followed the CEC17 criteria; for example, the number of function evaluations has been kept the same for AWOA and the other competitors. The results are averaged over 51 independent runs, as indicated by the CEC guidelines. The results of the optimization are expressed as the mean and standard deviation of the objective function values obtained from the independent runs. Table 5 and Table 6 show these analyses, and the bold face entries in the tables show the best performer. Table 7 and Table 8 report the statistical comparison of the objective function values obtained from the independent runs through the Wilcoxon rank sum test at a 5% level of significance. These results are p-values, indicated in each column of the observation table, obtained when the opponent is compared with the proposed AWOA. These values are an indicator of statistical significance.

Table 5.

Results of Benchmark Suite-2 (10D).

Table 6.

Results of Benchmark Suite-2 (30D).

Table 7.

Results of the rank sum test on Benchmark Suite-2 (10D).

Table 8.

Results of the rank sum test on Benchmark Suite-2 (30D).

5.3. Results of the Analysis of 10D Problems

For 10D problems, the results are reported in terms of the mean and standard deviation values obtained from 51 independent runs for AWOA and each of its opponents. Furthermore, the following are the noteworthy observations from this study:

- From the table, it is observed that the values obtained from the optimization process and their statistical calculation indicate a substantial enhancement in terms of mean and standard deviation values. These values are shown in bold face. We observe that out of 29 functions, the proposed variant provides optimal mean values for 23 functions. Except for CECF16, 17, 18, 23, 24, 26 and CECF29, the mean values of the optimization runs are optimal for AWOA. This supports the fact that the proposed modifications are helpful for enhancing the optimization performance of the original WOA. Inspecting the other statistical parameter, namely the standard deviation, also gives a clear insight into the enhanced performance.

- We observe that for unimodal functions, these values are optimal for AWOA as compared to the other versions of WOA; hence, it can be said that AWOA outperforms them on unimodal functions. Unimodal functions are useful to test the exploitation capability of any optimizer.

- Inspecting the performance of the proposed version of WOA on the multimodal functions CECF4–F10 gives a clear insight into the fact that the proposed modifications are meaningful in terms of enhanced exploration capabilities. Naturally, in multimodal functions, more than one minimum exists, and making the optimization process converge to the global minimum can be a troublesome task.

- The results of optimization runs indicated in bold face depict the performance of AWOA.

5.3.1. Statistical Significance Test by the Wilcoxon Rank Sum Test

The results of the rank sum test are depicted in Table 7. It is always important to judge the statistical significance of the optimization runs in terms of calculated p-values. For this reason, the proposed AWOA has been compared with all opponents, and the results are depicted in terms of p-values. Bold face entries show that there is a significant difference between the optimization runs of AWOA and those of the other opponents. This fact demonstrates the superior performance of AWOA.

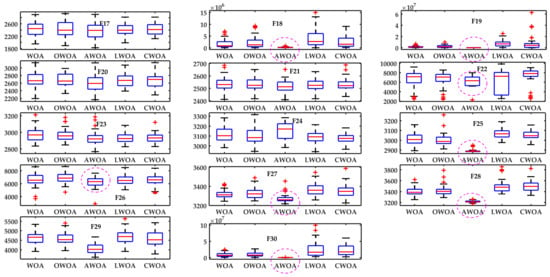

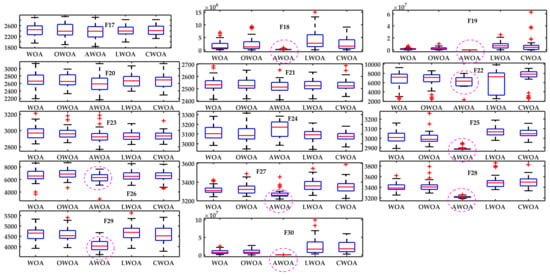

5.3.2. Boxplot Analysis

Boxplot analysis for the 10D functions is performed for 20 independent runs of objective function values. This analysis is depicted in Figure 9 and Figure 10. From these boxplots, it is easy to state that the results obtained from the optimization process acquire an optimal interquartile range (IQR) and low mean values. To showcase the efficacy of the proposed AWOA, all the optimal entries of mean values are marked with an oval shape in the boxplots.

Figure 9.

Boxplot analysis of the 10D functions of Benchmark Suite 2.

Figure 10.

Boxplot analysis of the remaining 10D functions of Benchmark Suite 2.

5.4. Results of the Analysis of 30D Problems

The results of the proposed AWOA, along with other variants of WOA, are depicted in terms of statistical attributes of 51 independent runs in Table 6. From the results, it is clearly evident that except for F24, the proposed AWOA provides optimal results as compared to the other opponents. The mean values and standard deviations of the objective function obtained from the independent runs are shown in bold face.

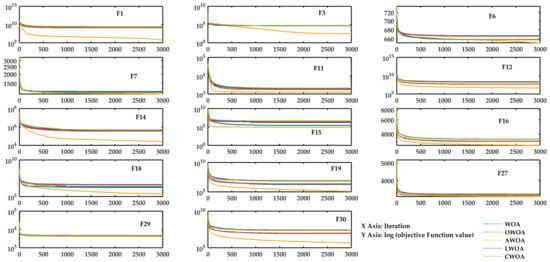

The results of the rank sum test are depicted in Table 8. It is always important to judge the statistical significance of the optimization runs in terms of calculated p-values. For this reason, the proposed AWOA was compared with all opponents, and the results are depicted in terms of p-values. Bold face entries show that there is a significant difference between the optimization runs of AWOA and those of the other opponents, as the obtained p-values are less than 0.05. We observe that for the majority of the functions, the calculated p-values are less than 0.05. Along with the optimal mean and standard deviation values, the p-values indicate that the proposed AWOA outperforms its opponents. In addition to these analyses, a boxplot analysis of the proposed AWOA and the other opponents was performed, as depicted in Figure 11 and Figure 12. From these figures, it is easy to see that the IQR and mean values are very competitive and optimal in almost all cases for 30-dimension problems. The convergence curves for some of the functions, such as the unimodal functions F1 and F3 and some other multimodal and hybrid functions, are depicted in Figure 13.

Figure 11.

Boxplot analysis of the 30D functions of Benchmark Suite 2.

Figure 12.

Boxplot analysis of the remaining 30D functions of Benchmark Suite 2.

Figure 13.

Convergence property analysis of some 30D functions of Benchmark Suite 2.

5.5. Comparison with Other Algorithms

To validate the efficacy of the proposed variant, a fair comparison on CEC 2017 criteria is executed. The optimization results of the proposed variant along with some contemporary and classical optimizers are reported in Table 9. The competitive algorithms are Moth flame optimization (MFO) [15], Sine cosine algorithm [53], PSO [54] and Flower pollination Algorithm [55]. It can be easily observed that the results of our proposed variant are competitive for almost all the functions.

Table 9.

Comparison of AWOA with other algorithms for 30D.

6. Applications of AWOA in Engineering Test Problems

6.1. Model Order Reduction

In control system engineering, most linear time-invariant systems are of a higher order and thus difficult to analyze. This problem has been addressed using the model order reduction technique, which is easy to use and less complex in comparison to earlier control paradigm techniques. Nature-inspired optimization algorithms have proved to be an efficient tool in this field, as they help to minimize the integral square error of lower-order systems. This approach was first introduced in [56], followed by [39,57,58] and many more. These works advocate the efficacy of optimization algorithms in model order reduction, as they reduce the complexity, computation time and cost of the reduction process. To test the applicability of AWOA on some real-world problems, we consider the Model Order Reduction (MOR) problem in this section. In MOR, large complex systems with known transfer functions are converted into reduced order systems with the help of an optimization algorithm. The following are the steps of the conversion:

- Consider a large complex system with a higher order and obtain the step response of the system. Stack the response in the form of a numerical array.

- Construct a second-order system with the help of some unknown variables that are depicted in the following equation. Furthermore, obtain the step response of the system and stack those numbers in a numerical array.

- Construct a transfer function that minimizes the error function, preferably the Integral Square Error (ISE) criterion.

6.1.1. Problem Formulation

In this technique, a higher-order transfer function is reduced to a lower-order transfer function without changing the input, so that the output of the reduced model approximates the output of the original system. The integral error defined by the following equation is minimized in the process using the optimization algorithm:

where the original system is a Single Input and Single Output (SISO) system whose transfer function is defined by:

For a reduced-order system, the transfer function can be given by:

In this study, we calculate the coefficients of the numerator and denominator of the reduced-order system defined in Equation (21) while minimizing the error. To establish the efficiency of our proposed variant, we report two numerical examples.
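For orientation, the formulation described in this subsection can be written in the following generic form. This is a sketch under assumed notation: the symbols $G(s)$, $G_r(s)$, $y(t)$, $y_r(t)$ and the coefficient names are illustrative choices made here and are not taken from the source.

```latex
% Assumed notation: G(s) is the original model of order n,
% G_r(s) is the reduced model of order r < n,
% y(t) and y_r(t) are their responses to the same input.
J = \int_{0}^{\infty} \left[ y(t) - y_r(t) \right]^{2} \, dt

G(s) = \frac{b_0 + b_1 s + \cdots + b_m s^{m}}{a_0 + a_1 s + \cdots + a_n s^{n}}, \qquad m < n

G_r(s) = \frac{d_0 + d_1 s + \cdots + d_q s^{q}}{c_0 + c_1 s + \cdots + c_r s^{r}}, \qquad q < r < n
```

Under this reading, the decision variables of the optimizer are the coefficients $c_i$ and $d_i$ of the reduced model, and $J$ is the Integral Square Error being minimized.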

6.1.2. Numerical Examples and Discussions

- Function 1

- Function 2

The results of the optimization process, expressed as time-domain specifications (rise time and settling time) for both functions, are given in Table 10. The convergence results of the algorithm on both functions are depicted in Figure 14 and Figure 15. Errors in the time-domain specifications relative to the original system are reported in Table 11.
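Rise time and settling time can be read directly off a sampled step response. The following is a minimal sketch, assuming the common 10%-90% rise-time and 2% settling-band conventions (the source does not state which conventions were used) and a positive final value; the helper names and the first-order test signal are hypothetical.

```python
import math


def rise_time(t, y, y_final, lo=0.1, hi=0.9):
    """Time taken to go from lo*y_final to hi*y_final (first crossings).

    Assumes y_final > 0 and that the response reaches hi*y_final.
    """
    t_lo = next(ti for ti, yi in zip(t, y) if yi >= lo * y_final)
    t_hi = next(ti for ti, yi in zip(t, y) if yi >= hi * y_final)
    return t_hi - t_lo


def settling_time(t, y, y_final, band=0.02):
    """First sample time after which y stays within band*|y_final| of y_final.

    Returns None if the response is still outside the band at the last sample.
    """
    tol = band * abs(y_final)
    last_out = -1
    for i, yi in enumerate(y):
        if abs(yi - y_final) > tol:
            last_out = i
    if last_out == len(y) - 1:
        return None
    return t[last_out + 1]


# Hypothetical test signal: unit-step response of 1/(s + 1)
t = [i * 0.001 for i in range(10001)]
y = [1.0 - math.exp(-ti) for ti in t]

print(rise_time(t, y, 1.0))      # close to ln(9)
print(settling_time(t, y, 1.0))  # close to ln(50)
```

For this first-order signal the analytical values are ln 9 and ln 50, which gives a quick sanity check of the two helpers before they are applied to the responses of the original and reduced models.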

Table 10.

Simulated models for different WOA variants and time domain specifications.

Figure 14.

Results of MOR for function 1.

Figure 15.

Results of MOR for function 2.

Table 11.

Error analysis of MOR results on both functions.

From these analyses, it is evident that MOR performed by AWOA leads to a system configuration that follows the time-domain specifications of the original system quite closely. In addition, the error in the objective function value is also the lowest in the case of AWOA.

6.2. Frequency-Modulated Sound Wave Parameter Estimation Problem

This problem has been used in many studies to benchmark the applicability of different optimizers. It was included in the 2011 Congress on Evolutionary Computation competition for testing evolutionary optimization algorithms on real-world problems [59]. It is a six-dimensional problem in which the parameters of a sound wave are estimated so that the predicted wave matches the target wave.

The mathematical representation of this problem can be given as:

The equations of the predicted sound wave and target sound wave are as follows:

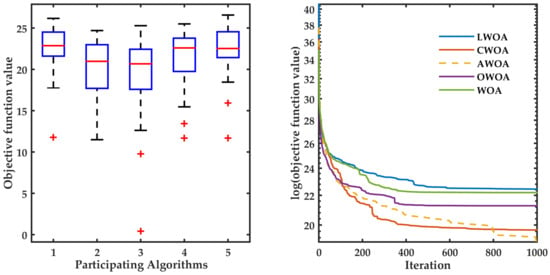

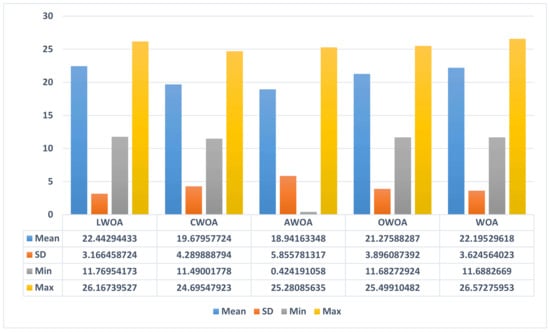
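A minimal sketch of the objective follows, assuming the standard formulation of this problem in the CEC 2011 problem definitions [59]: a three-level nested sine wave sampled at t = 0, ..., 100 with θ = 2π/100, and a sum-of-squares error against the target wave. The function names are hypothetical, and the constants should be checked against [59].

```python
import math

THETA = 2.0 * math.pi / 100.0

# Target parameters (a1, w1, a2, w2, a3, w3), assumed from the CEC 2011 definition
TARGET = (1.0, 5.0, -1.5, 4.8, 2.0, 4.9)


def fm_wave(params, t):
    """Frequency-modulated wave with three nested sine terms at sample t."""
    a1, w1, a2, w2, a3, w3 = params
    inner = a3 * math.sin(w3 * t * THETA)
    middle = a2 * math.sin(w2 * t * THETA + inner)
    return a1 * math.sin(w1 * t * THETA + middle)


def fm_objective(params):
    """Sum of squared errors between predicted and target waves, t = 0..100."""
    return sum((fm_wave(params, t) - fm_wave(TARGET, t)) ** 2
               for t in range(101))


print(fm_objective(TARGET))                          # zero at the target parameters
print(fm_objective((1.0, 5.0, 1.5, 4.8, 2.0, 4.9)))  # a mismatched candidate
```

The objective is highly multimodal in the six parameters, which is why it is a popular test for the exploration ability of an optimizer.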

The results for this problem are presented through boxplot and convergence analyses obtained from 20 independent runs; Figure 16 shows these analyses. A comparison of performance based on the error in the objective function values is depicted in Figure 17. In the boxplot, axis entries 1, 2, 3, 4 and 5 denote LWOA, CWOA, the proposed AWOA, OWOA and WOA, respectively.

Figure 16.

Boxplot and Convergence Property analysis for the FM problem.

Figure 17.

Comparative results of different statistical measures of independent runs.

6.3. PID Control of DC Motors

DC motors are used in various fields such as the textile industry, rolling mills, electric vehicles and robotics. Among the various controllers available for DC motors, the Proportional Integral Derivative (PID) controller is the most widely used and has proved to be accurate without adversely affecting the steady-state error and overshoot [60]. Such a controller requires an efficient tuning method to control the speed and other parameters of DC motors. In recent years, researchers have explored metaheuristic algorithms for tuning different types of PID controllers. In [61], the authors presented a comparative study of simulated annealing, particle swarm optimization and the genetic algorithm. Stochastic fractal search was applied to the DC motor problem in [62]. The sine cosine algorithm was used to determine the optimal parameters of the PID controller of DC motors in [20]. In [63], the authors proposed chaotic atom search optimization for optimal tuning of a fractional-order PID controller for DC motors. A hybridized version of manta ray foraging optimization and simulated annealing for the same problem was reported in [64].

6.3.1. Mathematical Model of DC Motors

The DC motor considered here is one whose speed is controlled through the input voltage or a change in current. In DC motors, the applied voltage is directly proportional to the angular speed while the flux is constant, i.e.:

The initial voltage of the armature satisfies the following differential equation:

The motor torque (due to various friction) developed in the process (neglecting the disturbance torque) is given by:

Taking the Laplace transform of these equations and assuming all initial conditions to be zero, we get:

On simplifying these equations, the open-loop transfer function of the DC motor can be given as:
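For reference, the derivation outlined above matches the textbook armature-controlled DC motor model, which can be sketched as follows. The notation is an assumption made here and is not taken from the source: $e_a$ is the armature voltage, $e_b$ the speed-proportional (back-EMF) voltage, $i_a$ the armature current, $\omega = d\theta/dt$ the angular speed, $R_a$ and $L_a$ the armature resistance and inductance, $J$ the inertia, $B$ the friction coefficient, and $K_b$, $K_m$ the back-EMF and torque constants.

```latex
% Time-domain relations (assumed textbook form)
e_b(t) = K_b \, \omega(t)
L_a \frac{d i_a(t)}{dt} + R_a \, i_a(t) = e_a(t) - e_b(t)
K_m \, i_a(t) = J \frac{d \omega(t)}{dt} + B \, \omega(t)

% Laplace domain with zero initial conditions
E_b(s) = K_b \, \Omega(s)
(L_a s + R_a) \, I_a(s) = E_a(s) - E_b(s)
K_m \, I_a(s) = (J s + B) \, \Omega(s)

% Open-loop transfer function from armature voltage to speed
\frac{\Omega(s)}{E_a(s)} = \frac{K_m}{(L_a s + R_a)(J s + B) + K_b K_m}
```

Eliminating $I_a(s)$ and $E_b(s)$ from the three Laplace-domain relations gives the last expression, a second-order plant whose coefficients are fixed by the motor constants.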

6.3.2. Results and Discussion

All the parameters and constant values considered in this experiment are given in Table 12. The simulation results for tuning the PID controller of the DC motor plant are reported in Table 13. The first column shows the combined plant and controller realized as a closed-loop system, and the other two columns show the time-domain specifications obtained when the system is subjected to a step input.

Table 12.

Various parameters of DC motors.

Table 13.

Comparison of AWOA with other algorithms for the DC motor controller design problem.

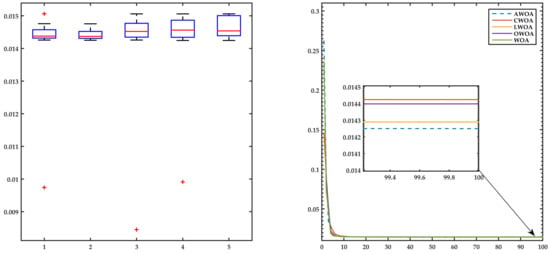

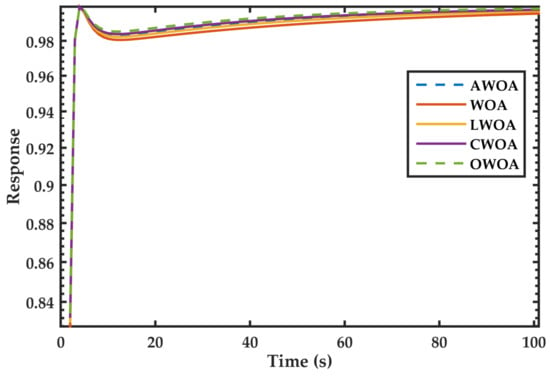

A careful inspection shows that the closed-loop system realized with the proposed AWOA has the best settling and rise times, which indicates a fast transient response. Although the other algorithms also yield very competitive values of these times, the response and convergence of AWOA are faster than those of its competitors. The boxplot analysis and convergence property analysis are shown in Figure 18. The boxplot compares the optimization results over 20 independent runs; its X axis shows the AWOA, CWOA, LWOA, OWOA and WOA algorithms. The optimal entries of settling time and rise time are set in boldface to highlight the efficacy of AWOA. The step responses of these controllers are shown in Figure 19.

Figure 18.

Comparative results of different controllers for DC motors.

Figure 19.

Step Response Analysis of Different Controllers.

7. Conclusions

This paper proposes a new variant of WOA. The singing behavior of whales is mimicked with the help of opposition-based learning in the initialization phase and Cauchy mutation in the position update phase. The following are the major conclusions drawn from this study:

- The proposed AWOA was validated on two benchmark suites (conventional and CEC 2017 functions). These benchmark suites comprise mathematical functions of distinct nature (unimodal, multimodal, hybrid and composite). We observed that AWOA shows promising results for the majority of the functions and that its performance is competitive with other algorithms.

- The statistical significance of the obtained results is verified with the help of a boxplot analysis and Wilcoxon rank sum test. It is observed that boxplots are narrow for the proposed AWOA and the p-values are less than 0.05. These results show that the proposed variant exhibits better exploration and exploitation capabilities, and with these results, one can easily see the positive implications of the proposed modifications.

- The proposed variant is also tested for challenging engineering design problems. The first problem is the model order reduction of a complex control system into subsequent reduced order realizations. For this problem, AWOA shows promising results as compared to WOA. As a second problem, the frequency-modulated sound wave parameter estimation problem was addressed. The performance of the proposed AWOA is competitive with contemporary variants of WOA. In addition to that, the application of AWOA was reported for tuning the PID controller of the DC motor control system. All these applications indicate that the modifications suggested for AWOA are quite meaningful and help the algorithm find global optima in an effective way.

The proposed AWOA can be applied to various other engineering design problems, such as network reconfiguration, solar cell parameter extraction and regulator design. These problems will be the focus of future research.

Author Contributions

Formal analysis, K.A.A.; Funding acquisition, K.A.A.; Investigation, K.A.A.; Methodology, S.S. and A.S.; Project administration, A.W.M.; Software, K.M.S.; Supervision, A.S. and A.W.M.; Validation, K.M.S.; Visualization, K.M.S.; Writing—original draft, S.S. and A.S.; Writing—review & editing, S.S. and A.S. All authors have read and agreed to the published version of the manuscript.

Funding

The research is funded by the Researchers Supporting Program at King Saud University (RSP-2021/305).

Acknowledgments

The authors present their appreciation to King Saud University for funding the publication of this research through Researchers Supporting Program (RSP-2021/305), King Saud University, Riyadh, Saudi Arabia.

Conflicts of Interest

The authors declare no conflict of interest.

References

- Yang, X.S. Nature-Inspired Metaheuristic Algorithms; Luniver Press: Beckington, UK, 2010.

- Glover, F. Future paths for integer programming and links to artificial intelligence. Comput. Oper. Res. 1986, 13, 533–549.

- Holland, J.H. Genetic algorithms. Sci. Am. 1992, 267, 66–73.

- Rechenberg, I. Evolution strategy: Nature’s way of optimization. In Optimization: Methods and Applications, Possibilities and Limitations; Springer: Berlin/Heidelberg, Germany, 1989; pp. 106–126.

- Dorigo, M.; Di Caro, G. Ant colony optimization: A new meta-heuristic. In Proceedings of the 1999 Congress on Evolutionary Computation-CEC99 (Cat. No. 99TH8406), IEEE, Washington, DC, USA, 6–9 July 1999; Volume 2, pp. 1470–1477.

- Basturk, B. An artificial bee colony (ABC) algorithm for numeric function optimization. In Proceedings of the IEEE Swarm Intelligence Symposium, Indianapolis, IN, USA, 12–14 May 2006.

- Yang, X.S. A new metaheuristic bat-inspired algorithm. In Nature Inspired Cooperative Strategies for Optimization (NICSO 2010); Springer: Berlin/Heidelberg, Germany, 2010; pp. 65–74.

- Yang, X.S.; Deb, S. Cuckoo search via Lévy flights. In Proceedings of the 2009 World Congress on Nature & Biologically Inspired Computing (NaBIC), Coimbatore, India, 9–11 December 2009; pp. 210–214.

- Gandomi, A.H.; Alavi, A.H. Krill herd: A new bio-inspired optimization algorithm. Commun. Nonlinear Sci. Numer. Simul. 2012, 17, 4831–4845.

- Yang, X.S. Firefly algorithm, stochastic test functions and design optimisation. Int. J. Bio-Inspir. Comput. 2010, 2, 78–84.

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey wolf optimizer. Adv. Eng. Softw. 2014, 69, 46–61.

- Das, S.; Biswas, A.; Dasgupta, S.; Abraham, A. Bacterial foraging optimization algorithm: Theoretical foundations, analysis, and applications. In Foundations of Computational Intelligence; Springer: Berlin/Heidelberg, Germany, 2009; Volume 3, pp. 23–55.

- James, J.; Li, V.O. A social spider algorithm for global optimization. Appl. Soft Comput. 2015, 30, 614–627.

- Chu, S.C.; Tsai, P.W.; Pan, J.S. Cat swarm optimization. In Pacific Rim International Conference on Artificial Intelligence; Springer: Berlin/Heidelberg, Germany, 2006; pp. 854–858.

- Mirjalili, S. Moth-flame optimization algorithm: A novel nature-inspired heuristic paradigm. Knowl.-Based Syst. 2015, 89, 228–249.

- Mirjalili, S. The ant lion optimizer. Adv. Eng. Softw. 2015, 83, 80–98.

- Askarzadeh, A. A novel metaheuristic method for solving constrained engineering optimization problems: Crow search algorithm. Comput. Struct. 2016, 169, 1–12.

- Saremi, S.; Mirjalili, S.; Lewis, A. Grasshopper optimisation algorithm: Theory and application. Adv. Eng. Softw. 2017, 105, 30–47.

- Mohamed, A.W.; Hadi, A.A.; Mohamed, A.K. Gaining-sharing knowledge based algorithm for solving optimization problems: A novel nature-inspired algorithm. Int. J. Mach. Learn. Cybern. 2020, 11, 1501–1529.

- Agarwal, J.; Parmar, G.; Gupta, R. Application of sine cosine algorithm in optimal control of DC motor and robustness analysis. Wulfenia J. 2017, 24, 77–95.

- Agrawal, P.; Ganesh, T.; Mohamed, A.W. Chaotic gaining sharing knowledge-based optimization algorithm: An improved metaheuristic algorithm for feature selection. Soft Comput. 2021, 25, 9505–9528.

- Agrawal, P.; Ganesh, T.; Mohamed, A.W. A novel binary gaining–sharing knowledge-based optimization algorithm for feature selection. Neural Comput. Appl. 2021, 33, 5989–6008.

- Agrawal, P.; Ganesh, T.; Oliva, D.; Mohamed, A.W. S-shaped and v-shaped gaining-sharing knowledge-based algorithm for feature selection. Appl. Intell. 2022, 52, 81–112.

- Erol, O.K.; Eksin, I. A new optimization method: Big bang–big crunch. Adv. Eng. Softw. 2006, 37, 106–111.

- Hatamlou, A. Black hole: A new heuristic optimization approach for data clustering. Inf. Sci. 2013, 222, 175–184.

- Rashedi, E.; Nezamabadi-Pour, H.; Saryazdi, S. GSA: A gravitational search algorithm. Inf. Sci. 2009, 179, 2232–2248.

- Formato, R.A. Central force optimization. Prog. Electromagn. Res. 2007, 77, 425–491.

- Kaveh, A.; Talatahari, S. A novel heuristic optimization method: Charged system search. Acta Mech. 2010, 213, 267–289.

- Azadeh, A.; Asadzadeh, S.M.; Jalali, R.; Hemmati, S. A greedy randomised adaptive search procedure–genetic algorithm for electricity consumption estimation and optimisation in agriculture sector with random variation. Int. J. Ind. Syst. Eng. 2014, 17, 285–301.

- Blum, C.; Pinacho, P.; López-Ibáñez, M.; Lozano, J.A. Construct, merge, solve & adapt a new general algorithm for combinatorial optimization. Comput. Oper. Res. 2016, 68, 75–88.

- Thiruvady, D.; Blum, C.; Ernst, A.T. Solution merging in matheuristics for resource constrained job scheduling. Algorithms 2020, 13, 256.

- Wolpert, D.H.; Macready, W.G. No Free Lunch Theorems for Search; Technical Report SFI-TR-95-02-010; Santa Fe Institute: Santa Fe, NM, USA, 1995.

- Lones, M.A. Mitigating metaphors: A comprehensible guide to recent nature-inspired algorithms. SN Comput. Sci. 2020, 1, 1–12.

- Mirjalili, S.; Lewis, A. The whale optimization algorithm. Adv. Eng. Softw. 2016, 95, 51–67.

- Kaveh, A.; Ghazaan, M.I. Enhanced whale optimization algorithm for sizing optimization of skeletal structures. Mech. Based Des. Struct. Mach. 2017, 45, 345–362.

- Touma, H.J. Study of the economic dispatch problem on IEEE 30-bus system using whale optimization algorithm. Int. J. Eng. Technol. Sci. (IJETS) 2016, 5, 11–18.

- Ladumor, D.P.; Trivedi, I.N.; Jangir, P.; Kumar, A. A whale optimization algorithm approach for unit commitment problem solution. In Proceedings of the National Conference on Advancements in Electrical and Power Electronics Engineering (AEPEE-2016), Morbi, India, 28–29 June 2016; pp. 4–17.

- Cui, D. Application of whale optimization algorithm in reservoir optimal operation. Adv. Sci. Technol. Water Resour. 2017, 37, 72–79.

- Saxena, A. A comprehensive study of chaos embedded bridging mechanisms and crossover operators for grasshopper optimisation algorithm. Expert Syst. Appl. 2019, 132, 166–188.

- Ibrahim, R.A.; Elaziz, M.A.; Lu, S. Chaotic opposition-based grey-wolf optimization algorithm based on differential evolution and disruption operator for global optimization. Expert Syst. Appl. 2018, 108, 1–27.

- Elaziz, M.A.; Oliva, D. Parameter estimation of solar cells diode models by an improved opposition-based whale optimization algorithm. Energy Convers. Manag. 2018, 171, 1843–1859.

- Xu, Q.; Wang, L.; Wang, N.; Hei, X.; Zhao, L. A review of opposition-based learning from 2005 to 2012. Eng. Appl. Artif. Intell. 2014, 29, 1–12.

- Mahdavi, S.; Rahnamayan, S.; Deb, K. Opposition based learning: A literature review. Swarm Evol. Comput. 2018, 39, 1–23.

- Gupta, S.; Deep, K. Cauchy Grey Wolf Optimiser for continuous optimisation problems. J. Exp. Theor. Artif. Intell. 2018, 30, 1051–1075.

- Wang, G.G.; Zhao, X.; Deb, S. A novel monarch butterfly optimization with greedy strategy and self-adaptive. In Proceedings of the Soft Computing and Machine Intelligence (ISCMI), 2015 Second International Conference on IEEE, Hong Kong, China, 23–24 November 2015; pp. 45–50.

- Digalakis, J.G.; Margaritis, K.G. On benchmarking functions for genetic algorithms. Int. J. Comput. Math. 2001, 77, 481–506.

- Molga, M.; Smutnicki, C. Test functions for optimization needs. Test Funct. Optim. Needs 2005, 101, 48.

- Yang, X.S. Test problems in optimization. arXiv 2010, arXiv:1008.0549.

- Awad, N.; Ali, M.; Liang, J.; Qu, B.; Suganthan, P. Problem Definitions and Evaluation Criteria for the CEC 2017 Special Session and Competition on Single Objective Bound Constrained Real-Parameter Numerical Optimization; Technical Report; Nanyang Technological University Singapore: Singapore, 2016.

- Ling, Y.; Zhou, Y.; Luo, Q. Lévy flight trajectory-based whale optimization algorithm for global optimization. IEEE Access 2017, 5, 6168–6186.

- Oliva, D.; Abd El Aziz, M.; Hassanien, A.E. Parameter estimation of photovoltaic cells using an improved chaotic whale optimization algorithm. Appl. Energy 2017, 200, 141–154.

- Wilcoxon, F. Individual comparisons by ranking methods. Biom. Bull. 1945, 1, 80–83.

- Mirjalili, S. SCA: A sine cosine algorithm for solving optimization problems. Knowl.-Based Syst. 2016, 96, 120–133.

- Kennedy, J.; Eberhart, R. Particle swarm optimization. In Proceedings of the IEEE International Conference on Neural Networks IV, Perth, WA, Australia, 27 November–1 December 1995; Volume 1000.

- Yang, X.S. Flower pollination algorithm for global optimization. In International Conference on Unconventional Computing and Natural Computation; Springer: Berlin/Heidelberg, Germany, 2012; pp. 240–249.

- Biradar, S.; Hote, Y.V.; Saxena, S. Reduced-order modeling of linear time invariant systems using big bang big crunch optimization and time moment matching method. Appl. Math. Model. 2016, 40, 7225–7244.

- Dinkar, S.K.; Deep, K. Accelerated opposition-based antlion optimizer with application to order reduction of linear time-invariant systems. Arab. J. Sci. Eng. 2019, 44, 2213–2241.

- Shekhawat, S.; Saxena, A. Development and applications of an intelligent crow search algorithm based on opposition based learning. ISA Trans. 2020, 99, 210–230.

- Das, S.; Suganthan, P.N. Problem Definitions and Evaluation Criteria for CEC 2011 Competition on Testing Evolutionary Algorithms on Real World Optimization Problems; Jadavpur University: Kolkata, India; Nanyang Technological University: Singapore, 2010.

- Shah, P.; Agashe, S. Review of fractional PID controller. Mechatronics 2016, 38, 29–41.

- Hsu, D.Z.; Chen, Y.W.; Chu, P.Y.; Periasamy, S.; Liu, M.Y. Protective effect of 3, 4-methylenedioxyphenol (sesamol) on stress-related mucosal disease in rats. BioMed Res. Int. 2013, 2013, 481827.

- Bhatt, R.; Parmar, G.; Gupta, R.; Sikander, A. Application of stochastic fractal search in approximation and control of LTI systems. Microsyst. Technol. 2019, 25, 105–114.

- Hekimoğlu, B. Optimal tuning of fractional order PID controller for DC motor speed control via chaotic atom search optimization algorithm. IEEE Access 2019, 7, 38100–38114.

- Ekinci, S.; Izci, D.; Hekimoğlu, B. Optimal FOPID Speed Control of DC Motor via Opposition-Based Hybrid Manta Ray Foraging Optimization and Simulated Annealing Algorithm. Arab. J. Sci. Eng. 2021, 46, 1395–1409.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).