Abstract

This paper presents a general testing coverage software reliability modeling framework that covers imperfect debugging and considers not only the fault detection process (FDP) but also the fault correction process (FCP). Numerous software reliability growth models have been developed over the last few decades to evaluate software reliability, but most of them focus on modeling the fault detection process rather than the fault correction process. Previous studies analyzed the time dependency between the fault detection and correction processes and modeled the fault correction process as a delayed detection process with a random or deterministic time delay. We instead study the quantitative dependency between the dual processes from the viewpoint of fault amount dependency rather than time dependency, and then propose a generalized modeling framework that incorporates imperfect debugging and testing coverage. New models are derived by adopting different testing coverage functions. We compare the performance of the proposed models with existing models on two kinds of failure data: one includes only observations of detected faults, and the other includes both fault detection and fault correction data. Different parameter estimation methods and performance comparison criteria are presented according to the characteristics of each kind of dataset. For both kinds of data, the comparison results reveal that the proposed models generally give better descriptive and predictive performance than existing models.

1. Introduction

Software reliability growth models (SRGMs) based on the nonhomogeneous Poisson process (NHPP) have long been used to describe software reliability [1]. One common assumption of most models is that a fault is removed instantaneously once the failure it causes is observed. However, this assumption does not always hold in real software development because of the software's complexity and the testers' limited ability: it takes nontrivial time and effort not only to report, diagnose, and locate a fault but also to fix and verify it, so this time cannot be ignored. Therefore, it is very important to model software reliability from the perspective of the fault correction process, and considerable attention has recently been paid to modeling fault correction processes.

Schneidewind first modeled the fault correction process (FCP) together with the fault detection process (FDP) by assuming a constant time delay between the two processes [2]. Xie et al. then extended Schneidewind's idea from a constant time lag to a time-varying lag function [3]. Schneidewind also extended his original model by introducing a random time delay following an exponential distribution [4]. Huang et al. combined both fault dependency and a time-dependent debugging time lag in reliability modeling, and classified faults into leading faults and dependent faults [5]. Xie et al. provided a modeling framework for the fault correction process by defining random time delay models with different distributions, such as the gamma [6], normal, Weibull, chi-square, and Erlang distributions [7]. New statistical distributions, such as the hyper-Erlang distribution, continued to be introduced in follow-up research [8]. Improved joint likelihood functions for combined FDP and FCP were derived to replace the single likelihood function [9]. Peng et al. integrated a testing effort function and imperfect debugging into the modeling of the dual processes, because they regarded testing effort as a considerable influencing factor that affects not only the fault detection rate but also the time to correct a detected fault [10]. Later, they introduced fault dependency into the paired FDP and FCP models instead of neglecting the correlation between faults [11]. Lo et al. summarized a framework that covers some existing models [12]. More recently, this research was extended to multi-release, open-source software failure processes [13] and to different kinds of failure data, such as masked data [14]. Pachauri et al. modeled the delay in fault correction after fault detection based on imperfect correction [15]. Tiwari et al. considered inclusive modeling of fault detection and correction under an imperfect debugging scenario in which new faults are introduced during the correction of hard faults [16]. Choudhary et al. evaluated the optimal release time and cost, considering that detection or correction alone does not provide adequate information, and proposed an effort-based optimized decision model that accounts for the costs of detection and correction separately using multi-attribute utility theory [17]. Saraf et al. presented a two-stage fault detection and correction model incorporating imperfect debugging, error generation, and a change point, in which a combination of exponential and gamma distributions is adopted [18]. Kumar et al. allocated resources optimally to minimize cost during the testing phase using FDP and FCP under a dynamic environment [19].

Although the models proposed in the above studies can effectively evaluate software reliability, they have two disadvantages. First, time-delay functions are developed from assumptions about the form of the correction time lag, which may not hold in practice; since these functions are not derived from the actual testing process, they may not realistically characterize the relationship between the fault detection and correction processes. Second, a stochastic distribution of the fault correction time makes both modeling and parameter estimation more difficult, and sometimes the calculations yield no solution. Moreover, these models essentially belong to one category: models based on the time dependency, i.e., the time delay, between the two processes. However, the time to remove faults depends on many factors, such as the complexity of the detected faults, the skills of the testers, the available resources, and the software development environment. Therefore, it is important to describe the relationship between fault detection and correction from other perspectives. To address this problem, the objective of this paper is to provide a general framework for the combined modeling of fault detection and correction processes from a perspective different from the one above.

Shu et al. proposed modeling both the fault detection and correction processes from the viewpoint of fault numbers, in two concrete ways: the ratio of the corrected fault number to the detected fault number, and the difference between these two numbers [20]. Compared with the time dependency models, their work opened a new direction for modeling the dual processes, but they only presented models under perfect debugging with a constant fault detection rate. Thus, many questions affecting model accuracy, such as imperfect debugging and more complicated fault detection rates, remained unsolved. In this paper, we aim to give a general framework from the viewpoint of fault amount dependency, so that further studies can build on it. In addition, we provide an extensive discussion of essential factors that may strongly affect model accuracy. We recently proposed a model in this direction [21], but it has a similar limitation: only one testing coverage function was provided, and only one single-process parameter estimation method was discussed.

In addition, as SRGMs have evolved, many factors have been incorporated into the modeling framework to improve evaluation accuracy [22]. Testing coverage is a very promising metric during software development for both developers and users: it helps developers assess testing progress and helps users estimate their confidence in accepting software products [23]. Many time-dependent testing coverage functions (TCFs) following different distributions have been proposed [24], such as the logarithmic–exponential [25], S-shaped [26], Rayleigh [27], Weibull and logistic [28], and lognormal [29] functions, and many TCF-based SRGMs have been developed, such as the Rayleigh, logarithmic–exponential, Beta, and hyper-exponential models. Recently, Chatterjee et al. presented a unified approach to modeling software reliability growth with imperfect debugging and three types of testing coverage curves, namely exponential, Weibull, and S-shaped [30]. They also proposed an SRGM that considers the effects of an uncertain testing environment and testing coverage for multi-release software in the presence of two types of faults [31]. These studies indicate that incorporating testing coverage into SRGMs helps assess software reliability and predict faults more realistically and accurately.

Furthermore, parametric SRGMs are developed based on the failure data gathered during software testing. There are two kinds of failure data: one includes only observations of detected faults, whereas the other includes both the detected and the corrected fault numbers. Accordingly, different parameter estimation methods have been recommended for these two conditions. Improved joint likelihood functions for combined FDP and FCP were derived to replace the single likelihood function [9], and a combined least square error function was presented to replace a single least square error function [6]. Criteria that reflect the characteristics of both processes simultaneously have also been given, e.g., the combined MSE and MRE instead of criteria that consider only a single process [7].

Besides, other attempts have been made to model the dual processes simultaneously. One is a parametric model based on a Markov chain rather than on an NHPP [32]. Liu et al. extended the Markov model with a weighted least square estimation method, which emphasizes the influence of later data on prediction [33]. In contrast, nonparametric approaches have also been proposed to model the two processes together, e.g., data-driven models such as robust recurrent neural networks [34] and artificial neural network models [35], finite and infinite server queuing models [36], and quasi-renewal time-delay fault removal models [37]. A simulation rate-based method [38] was developed to simulate software failure processes with queuing models [39]. Chatterjee et al. developed a modeling framework that incorporates imperfect debugging and a change point with a Weibull-type fault reduction factor, treating fault removal as a two-step process [40].

The main contributions of our work are as follows.

- We develop a general framework for modeling both fault detection and correction processes from the viewpoint of fault amount dependency instead of time dependency in the context of different testing coverage and imperfect debugging.

- We consider testing coverage functions including the Weibull-type, delayed S-shaped, and inflection S-shaped functions to verify their flexibility in modeling different failure phenomena.

- We discuss the models for two kinds of failure datasets, with a corresponding parameter estimation method and set of performance comparison criteria for each.

- We conduct case studies based on two kinds of failure datasets to verify the feasibility of the proposed method.

The remainder of this paper is structured as follows. In Section 2, we analyze the relationship between the fault detection and correction processes on the basis of a real dataset and then adopt three testing coverage functions to build the proposed models. In Section 3, we give parameter estimation methods and performance comparison criteria for estimation and prediction for each kind of failure dataset. In Section 4, we compare the performance of the proposed models against several existing SRGMs on three real datasets. Finally, Section 5 summarizes the conclusions.

2. Model Formulation

2.1. Assumptions

The assumptions are made to develop the proposed models as follows:

- The software failure process follows an NHPP.

- The mean number of faults detected in the time interval $(t, t + \Delta t]$ is proportional to the number of undetected faults at time $t$.

- The fault detection rate is expressed through testing coverage as $c'(t)/(1 - c(t))$, where $c(t)$ is a measure of testing coverage, e.g., the percentage of code examined up to time $t$, and $c'(t)$ is the derivative of $c(t)$.

- The software debugging process is imperfect, and new faults could be introduced during fault correction.

- The detected faults cannot be corrected immediately, and the dependency between the fault detection and correction processes is represented by a function $r(t)$, that is, $m_c(t) = r(t)\, m_d(t)$, where $m_d(t)$ is the cumulative number of detected faults and $m_c(t)$ is the cumulative number of corrected faults.

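Under the above assumptions, we obtain the following equations:

$$\frac{dm_d(t)}{dt} = \frac{c'(t)}{1 - c(t)}\left[a(t) - m_d(t)\right] \tag{1}$$

$$a(t) = a + \alpha\, m_c(t) \tag{2}$$

$$m_c(t) = r(t)\, m_d(t) \tag{3}$$

where $m_d(t)$ and $m_c(t)$ are the cumulative numbers of detected and corrected faults, $c(t)$ is the testing coverage, $r(t)$ is the fault amount dependency function, $a$ is the initial number of faults, and $\alpha$ is the fault introduction rate.

Solving for $m_d(t)$ from Equation (1) with the initial conditions $m_d(0) = 0$ and $c(0) = 0$, we obtain the paired FDP and FCP models:

$$m_d(t) = a\int_{0}^{t} \eta(s)\exp\left(-\int_{s}^{t} \eta(u)\left[1 - \alpha\, r(u)\right]du\right)ds \tag{4}$$

$$m_c(t) = r(t)\, m_d(t) \tag{5}$$

where $\eta(t)$ denotes the fault detection rate $c'(t)/(1 - c(t))$.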

Different paired mean value functions follow from substituting specific testing coverage functions $c(t)$ and fault amount dependency functions $r(t)$ into Equations (4) and (5).
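When the integrals in the paired model have no convenient closed form, the mean value functions can be evaluated numerically. The sketch below assumes that the assumptions of Section 2.1 reduce to the single ODE $dm_d/dt = [c'(t)/(1-c(t))]\,[a + \alpha m_c(t) - m_d(t)]$ with $m_c(t) = r(t)\,m_d(t)$ and $m_d(0) = 0$; the function name `solve_paired_model`, the fourth-order Runge-Kutta integrator, and the logistic-shaped `r(t)` in the example are illustrative choices of ours, not the authors' implementation.

```python
import math
from typing import Callable, List, Tuple


def solve_paired_model(
    a: float,
    alpha: float,
    rate: Callable[[float], float],
    r: Callable[[float], float],
    t_end: float,
    steps: int = 2000,
) -> Tuple[List[float], List[float], List[float]]:
    """Integrate dm_d/dt = rate(t) * (a + alpha * r(t) * m_d - m_d), m_d(0) = 0.

    rate(t) plays the role of c'(t) / (1 - c(t)) and r(t) the ratio m_c / m_d.
    Uses the classical fourth-order Runge-Kutta scheme on a uniform grid and
    returns the grid, m_d(t), and m_c(t) = r(t) * m_d(t).
    """
    h = t_end / steps

    def f(t: float, m: float) -> float:
        # Detection rate times remaining faults a(t) - m_d(t),
        # with a(t) = a + alpha * m_c(t) and m_c(t) = r(t) * m_d(t).
        return rate(t) * (a + alpha * r(t) * m - m)

    ts, md = [0.0], [0.0]
    m = 0.0
    for i in range(steps):
        t = i * h
        k1 = f(t, m)
        k2 = f(t + h / 2, m + h * k1 / 2)
        k3 = f(t + h / 2, m + h * k2 / 2)
        k4 = f(t + h, m + h * k3)
        m += h * (k1 + 2 * k2 + 2 * k3 + k4) / 6
        ts.append((i + 1) * h)
        md.append(m)
    mc = [r(t) * value for t, value in zip(ts, md)]
    return ts, md, mc


# Hypothetical inputs: Weibull-type rate b*k*t^(k-1) with k > 1 (finite at t = 0)
# and a logistic-shaped ratio chosen only for illustration.
b, k = 0.05, 1.5
ts, md, mc = solve_paired_model(
    a=150.0,
    alpha=0.02,
    rate=lambda t: b * k * t ** (k - 1),
    r=lambda t: 1.0 / (1.0 + 5.0 * math.exp(-0.5 * t)),
    t_end=17.0,
)
print(f"m_d(17) = {md[-1]:.2f}, m_c(17) = {mc[-1]:.2f}")
```

A constant rate and constant ratio give a useful sanity check: the ODE then has the closed-form solution $m_d(t) = \frac{a}{1-\alpha r}\left(1 - e^{-(1-\alpha r) b t}\right)$, which the numerical result should match.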

2.2. The Relationship between $m_d(t)$ and $m_c(t)$

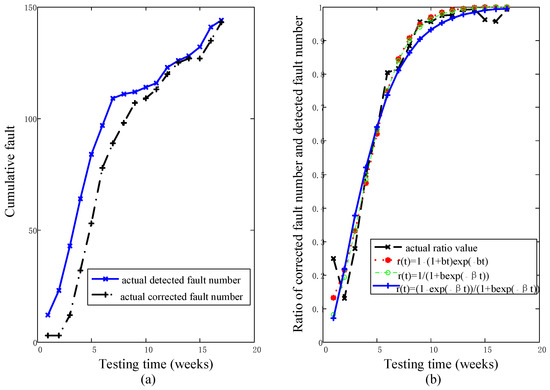

First, we study the relationship between the mean numbers of detected faults, $m_d(t)$, and corrected faults, $m_c(t)$. A set of failure data collected from testing a real software program (Dataset 1, DS-1) [9] is used here. Letting $r(t) = m_c(t)/m_d(t)$, the cumulative numbers of detected and corrected faults are plotted in Figure 1a, and the actual values of the ratio $r(t)$ are plotted in Figure 1b.

Figure 1.

The cumulative numbers of detected and corrected faults, and three function fits to the actual ratio, on DS-1. (a) The actual cumulative numbers of detected and corrected faults for DS-1; (b) the actual ratio values and the fitting results of three functions on DS-1.

From Figure 1, both the cumulative number of detected faults and the cumulative number of corrected faults increase with testing time. At the beginning of software testing, there are many faults, most of which are simple and easy to detect, so the number of detected faults grows rapidly. However, the testers are not yet familiar with the software and are slow to locate and fix the detected faults, so fault correction lags far behind fault detection; that is, the ratio of the corrected fault number to the detected fault number is very low and may even decrease over time. As testing progresses, the testers learn, gain experience, and master the software better, so they remove faults faster: the number of corrected faults grows faster, and the ratio of corrected to detected faults increases rapidly. Later, the remaining faults become more complicated and harder to locate and fix, so the growth of the detected fault number slows, and the gap between FCP and FDP may shrink. At the end of testing, almost all faults have been detected and further faults are hard to find, so the detected fault number barely grows; meanwhile, almost all detected faults have been corrected, and the ratio of corrected to detected faults tends to 1.

This pattern suggests that the ratio is well captured by an S-shaped function.

Here, three S-shaped functions are fitted to the actual ratio values on DS-1 to compare their fitting performance, and Table 1 gives their MSE, $R^2$, and Adjusted $R^2$ values. According to Table 1, the best-fitting function among the three is selected as the fault amount dependency function $r(t)$. It is a nondecreasing function with an S-shaped curve, and its flexible structure can capture the features of the learning process in software testing.

Table 1.

The values of MSE, $R^2$, and Adjusted $R^2$ of the three candidate functions for the ratio on DS-1.
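The three candidate functions compared in Table 1 are not recoverable from this text, so the sketch below uses a hypothetical logistic candidate, $r(t) = 1/(1 + h e^{-\beta t})$, purely to show how an S-shaped ratio function can be fitted to observed ratios $C_i/D_i$. It uses the linearisation $\ln(1/r - 1) = \ln h - \beta t$ with ordinary least squares, which is a convenient shortcut rather than a nonlinear least-squares fit; note that this logistic form cannot reproduce the early decrease in the ratio described above.

```python
import math
from typing import Sequence, Tuple


def fit_logistic_ratio(
    times: Sequence[float], detected: Sequence[float], corrected: Sequence[float]
) -> Tuple[float, float]:
    """Fit a hypothetical logistic ratio r(t) = 1 / (1 + h * exp(-beta * t)).

    Linearisation: ln(1/r - 1) = ln(h) - beta * t with r_i = C_i / D_i, so
    ordinary least squares on (t_i, ln(D_i / C_i - 1)) yields ln(h) and -beta.
    Points with r_i <= 0 or r_i >= 1 are skipped (the logarithm is undefined).
    Returns (h, beta).
    """
    xs, ys = [], []
    for t, d, c in zip(times, detected, corrected):
        if d > 0 and 0 < c < d:
            xs.append(t)
            ys.append(math.log(d / c - 1.0))
    if len(xs) < 2:
        raise ValueError("need at least two usable points with 0 < C_i < D_i")
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return math.exp(intercept), -slope


def logistic_ratio(t: float, h: float, beta: float) -> float:
    """Evaluate the hypothetical logistic ratio at time t."""
    return 1.0 / (1.0 + h * math.exp(-beta * t))
```

The fitted curve can then be scored with MSE, $R^2$, and Adjusted $R^2$ in the same way as the candidates in Table 1.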

2.3. Framework and New Testing Coverage Models

Here, three time-varying testing coverage functions, which have been recommended in several references for their flexibility [41], are chosen to obtain specific models.

Model 1: Suppose the testing coverage is a Weibull-type function, that is

$$c(t) = 1 - e^{-b t^{k}} \tag{6}$$

Substituting Equation (6) into Equations (4) and (5) yields the paired mean value functions of Model 1, Equation (7), where $b > 0$ and $k > 0$ are constant parameters.

Because the fault detection rate is $c'(t)/(1 - c(t)) = bkt^{k-1}$, its derivative equals $bk(k-1)t^{k-2}$. The fault detection rate therefore increases for $k > 1$, decreases for $k < 1$, and remains constant for $k = 1$. In most actual testing processes, the software failure intensity first increases and then decreases, and the flexibility of the Weibull-type function allows it to capture this behavior.
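The monotonicity argument for Model 1 can be checked numerically. This sketch assumes the Weibull-type form $c(t) = 1 - e^{-bt^k}$, compares a finite-difference estimate of $c'(t)/(1-c(t))$ with the closed form $bkt^{k-1}$, and evaluates the sign of the rate's derivative for $k < 1$, $k = 1$, and $k > 1$; the function names and parameter values are illustrative.

```python
import math


def weibull_coverage(t: float, b: float, k: float) -> float:
    """Assumed Weibull-type testing coverage c(t) = 1 - exp(-b * t**k)."""
    return 1.0 - math.exp(-b * t ** k)


def weibull_detection_rate(t: float, b: float, k: float) -> float:
    """Closed form of c'(t) / (1 - c(t)) for the coverage above: b*k*t**(k-1)."""
    return b * k * t ** (k - 1)


def weibull_rate_slope(t: float, b: float, k: float) -> float:
    """Derivative of the detection rate, b*k*(k-1)*t**(k-2); its sign is that of k - 1."""
    return b * k * (k - 1) * t ** (k - 2)


def numeric_detection_rate(t: float, b: float, k: float, h: float = 1e-6) -> float:
    """Central-difference estimate of c'(t), divided by 1 - c(t), as a cross-check."""
    dc = (weibull_coverage(t + h, b, k) - weibull_coverage(t - h, b, k)) / (2 * h)
    return dc / (1.0 - weibull_coverage(t, b, k))


for k in (0.5, 1.0, 2.0):
    slope = weibull_rate_slope(3.0, 0.1, k)
    print(f"k = {k}: rate at t=3 is {weibull_detection_rate(3.0, 0.1, k):.4f}, slope {slope:+.4f}")
```

The printed slopes are negative, zero, and positive, matching the decreasing, constant, and increasing cases stated above.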

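Model 2: Suppose the testing coverage takes a delayed S-shaped function, that is

$$c(t) = 1 - (1 + bt)e^{-bt} \tag{8}$$

Then, substituting Equation (8) into Equations (4) and (5) gives the paired mean value functions of Model 2, Equation (9).

Because $c'(t)/(1 - c(t)) = b^{2}t/(1 + bt)$, its derivative equals $b^{2}/(1 + bt)^{2} > 0$. Hence, for any $t \ge 0$, the fault detection rate keeps increasing.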

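Model 3: Suppose the testing coverage is an inflection S-shaped function, that is

$$c(t) = \frac{1 - e^{-bt}}{1 + \beta e^{-bt}} \tag{10}$$

Then, substituting Equation (10) into Equations (4) and (5) gives the paired mean value functions of Model 3, Equation (11), where $\beta > 0$ is the inflection parameter.

Because $c'(t)/(1 - c(t)) = b/(1 + \beta e^{-bt})$, its derivative equals $b^{2}\beta e^{-bt}/(1 + \beta e^{-bt})^{2} > 0$. Hence, for any $t \ge 0$, the fault detection rate keeps increasing.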
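The closed-form detection rates of Models 2 and 3 can be cross-checked the same way. The sketch assumes the delayed S-shaped form $c(t) = 1 - (1+bt)e^{-bt}$ and the inflection S-shaped form $c(t) = (1 - e^{-bt})/(1 + \beta e^{-bt})$; it compares a central-difference estimate of $c'(t)/(1-c(t))$ with the closed forms and shows that both rates increase over time. Names and parameter values are illustrative.

```python
import math
from typing import Callable


def delayed_s_coverage(t: float, b: float) -> float:
    """Assumed delayed S-shaped coverage c(t) = 1 - (1 + b t) exp(-b t)."""
    return 1.0 - (1.0 + b * t) * math.exp(-b * t)


def delayed_s_rate(t: float, b: float) -> float:
    """Closed form of c'(t) / (1 - c(t)) for the delayed S-shaped coverage."""
    return b * b * t / (1.0 + b * t)


def inflection_s_coverage(t: float, b: float, beta: float) -> float:
    """Assumed inflection S-shaped coverage c(t) = (1 - e^{-bt}) / (1 + beta e^{-bt})."""
    e = math.exp(-b * t)
    return (1.0 - e) / (1.0 + beta * e)


def inflection_s_rate(t: float, b: float, beta: float) -> float:
    """Closed form of c'(t) / (1 - c(t)) for the inflection S-shaped coverage."""
    return b / (1.0 + beta * math.exp(-b * t))


def numeric_rate(coverage: Callable[[float], float], t: float, h: float = 1e-6) -> float:
    """Central-difference c'(t) divided by 1 - c(t); coverage takes t only."""
    dc = (coverage(t + h) - coverage(t - h)) / (2 * h)
    return dc / (1.0 - coverage(t))


times = [0.5, 2.0, 5.0, 10.0]
print([round(delayed_s_rate(t, 0.3), 4) for t in times])
print([round(inflection_s_rate(t, 0.3, 4.0), 4) for t in times])
```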

It can be seen that the fault detection and correction processes, as well as testing coverage and imperfect debugging, are all integrated into the proposed paired models.

In this paper, two kinds of failure data are used. One kind contains only the detected fault numbers, and the other contains both the detected and the corrected fault numbers. DS-1 belongs to the second type, and Datasets 2 and 3 (DS-2 and DS-3) belong to the first type. Table 2 lists 19 models: M1 to M5 are paired FDP and FCP models with different time delays, used as comparison models for describing both fault detection and correction processes [6,7]; M6 to M16 are comparison models for single processes; and M17 to M19 are the models proposed in this paper. Accordingly, for DS-1 the paired models M17 to M19 are compared, and for DS-2 and DS-3 the FCP models of M17 to M19 are compared.

Table 2.

Summary of models.

3. Parameter Estimation Methods and Model Comparison Criteria

This section presents parameter estimation methods and model comparison criteria for two kinds of failure data: paired failure data, which contain observations of both fault detection and correction, and single-process failure data, which contain only the detected fault numbers. Models that describe both fault detection and correction are paired models: besides the parameters for fault detection, they include parameters for fault correction, so all parameters must be estimated together. For a long time, few published datasets in the software reliability field included observations of both fault detection and correction; only in recent years have considerable efforts been made to collect such data from software projects, which has greatly supported the modeling and analysis of the dual processes. Moreover, because parametric SRGM research depends on the failure data gathered during software testing, the estimation method must match the characteristics of the available data.

3.1. Parameter Estimation Methods

3.1.1. Parameter Estimation Method for Paired FDP and FCP Models

For failure data containing observations of both fault detection and correction, the sum of the squared residuals for detected and corrected faults is

$$S = \sum_{i=1}^{n}\left[\left(m_{d,i} - \hat{m}_d(t_i)\right)^2 + \left(m_{c,i} - \hat{m}_c(t_i)\right)^2\right] \tag{12}$$

where $m_{d,i}$ is the cumulative number of detected faults observed up to time $t_i$, $\hat{m}_d(t_i)$ is the estimated cumulative number of detected faults up to $t_i$ obtained from the fitted mean value function, $m_{c,i}$ is the cumulative number of corrected faults observed up to $t_i$, $\hat{m}_c(t_i)$ is the estimated cumulative number of corrected faults up to $t_i$ obtained from the fitted mean value function, and $i = 1, 2, \ldots, n$.

The model parameters must therefore minimize the sum of squared deviations of both detected and corrected faults simultaneously. Here, we use the combined least square estimation (LSE) method to estimate the model parameters. The estimates are obtained by differentiating Equation (12) with respect to each parameter and setting the derivatives equal to zero. Taking Equation (7) as an example, this gives the system

$$\frac{\partial S}{\partial \theta_j} = 0, \quad j = 1, 2, \ldots, p \tag{13}$$

where $S$ is the objective in Equation (12) and $\theta_1, \ldots, \theta_p$ denote the parameters of Equation (7).

After solving these equations numerically, we can obtain the point estimates of all parameters for the proposed model.
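Solving the normal equations numerically is equivalent, at an interior optimum, to minimizing the combined objective of Equation (12) directly. The sketch below writes that objective for any pair of mean value functions and minimizes it with a coarse grid search. The paired model `toy_md`/`toy_mc` is a hypothetical toy (not one of M1 to M19), and the data are noise-free synthetic values; in practice a numerical optimizer such as `scipy.optimize.minimize` would replace the grid.

```python
import math
from itertools import product
from typing import Callable, Iterable, Sequence, Tuple

Model = Callable[..., float]


def combined_sse(params, times, detected, corrected, md_fn: Model, mc_fn: Model) -> float:
    """Combined least-squares objective in the spirit of Equation (12):
    squared residuals of detected and corrected counts, summed over all t_i."""
    return sum(
        (d - md_fn(t, *params)) ** 2 + (c - mc_fn(t, *params)) ** 2
        for t, d, c in zip(times, detected, corrected)
    )


def grid_search(objective: Callable[[Tuple[float, ...]], float],
                grids: Sequence[Iterable[float]]) -> Tuple[Tuple[float, ...], float]:
    """Exhaustive search over the Cartesian product of parameter grids."""
    best, best_val = None, math.inf
    for params in product(*grids):
        value = objective(params)
        if value < best_val:
            best, best_val = params, value
    return best, best_val


# Hypothetical toy paired model: exponential detection and a delayed-S
# correction curve sharing the parameters (a, b).
def toy_md(t: float, a: float, b: float) -> float:
    return a * (1.0 - math.exp(-b * t))


def toy_mc(t: float, a: float, b: float) -> float:
    return a * (1.0 - (1.0 + b * t) * math.exp(-b * t))


times = [float(i) for i in range(1, 18)]            # 17 hypothetical weeks
detected = [toy_md(t, 100.0, 0.3) for t in times]   # noise-free synthetic data
corrected = [toy_mc(t, 100.0, 0.3) for t in times]
a_grid = [80.0 + 5.0 * i for i in range(9)]         # 80, 85, ..., 120
b_grid = [0.1 + 0.05 * i for i in range(9)]         # 0.10, 0.15, ..., 0.50
best, best_sse = grid_search(
    lambda p: combined_sse(p, times, detected, corrected, toy_md, toy_mc),
    [a_grid, b_grid],
)
print(best, best_sse)
```

Because both processes enter the same objective, a parameter shared by the detection and correction curves is pulled toward values that fit both series at once.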

3.1.2. Parameter Estimation Method for Single Process Models

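For observations containing only detected faults, the least square estimates are obtained by minimizing the sum of squared distances

$$S = \sum_{i=1}^{n}\left(m_i - \hat{m}(t_i)\right)^2 \tag{14}$$

where $m_i$ denotes the cumulative number of detected faults observed up to time $t_i$ and $\hat{m}(t_i)$ is the corresponding value of the fitted mean value function.

Similarly, from Equation (14) we can obtain the equations to be solved for the estimators; taking the FCP in Equation (7) as an example,

$$\frac{\partial S}{\partial \theta_j} = 0, \quad j = 1, 2, \ldots, p \tag{15}$$

where $\theta_1, \ldots, \theta_p$ are the parameters of the FCP in Equation (7).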

After solving equations in (15) simultaneously, we can derive the least square estimators of all model parameters.
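When the mean value function has the form $m(t) = a\,g(t; b)$, i.e., the initial fault content $a$ enters only as a multiplicative factor (as in the Goel-Okumoto model), the equation $\partial S/\partial a = 0$ from Equation (14) has the closed-form solution $a = \sum_i m_i g_i / \sum_i g_i^2$. The sketch below uses this to profile out $a$ and scans the remaining parameter on a grid. The Goel-Okumoto shape, the grid, and the synthetic data are illustrative assumptions, not the authors' procedure.

```python
import math
from typing import Callable, Iterable, Optional, Sequence, Tuple


def profile_lse(
    times: Sequence[float],
    observed: Sequence[float],
    g: Callable[[float, float], float],
    b_grid: Iterable[float],
) -> Tuple[float, float, float]:
    """Least squares for a mean value function of the form m(t) = a * g(t; b).

    For fixed b, dS/da = 0 in S = sum_i (y_i - a g_i)^2 gives the closed form
    a = sum(y_i g_i) / sum(g_i^2); the remaining parameter b is scanned on a grid.
    Returns (a_hat, b_hat, S_min).
    """
    best: Optional[Tuple[float, float, float]] = None
    for b in b_grid:
        gs = [g(t, b) for t in times]
        a = sum(y * gi for y, gi in zip(observed, gs)) / sum(gi * gi for gi in gs)
        sse = sum((y - a * gi) ** 2 for y, gi in zip(observed, gs))
        if best is None or sse < best[2]:
            best = (a, b, sse)
    if best is None:
        raise ValueError("b_grid must not be empty")
    return best


def go_shape(t: float, b: float) -> float:
    """Shape of the Goel-Okumoto curve, m(t) = a * (1 - exp(-b t))."""
    return 1.0 - math.exp(-b * t)


times = [float(i) for i in range(1, 21)]
observed = [50.0 * go_shape(t, 0.2) for t in times]   # noise-free synthetic data
a_hat, b_hat, s_min = profile_lse(times, observed, go_shape, [0.05 * i for i in range(1, 11)])
print(f"a = {a_hat:.4f}, b = {b_hat:.2f}, S = {s_min:.2e}")
```

Profiling out the linear parameter reduces the search dimension by one, which makes the remaining numerical solve of the normal equations more stable.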

3.2. Criteria for a Comparison of the Power of Models with Paired FDP and FCP Models

3.2.1. Criteria for a Comparison of the Descriptive Power of Models with Paired FDP and FCP Models

When all parameters are estimated with the LSE method, the combined mean squared error (MSE) for fault detection and correction, defined in [6] (Equation (16)), is used to measure the fitting performance of paired models.

A lower MSE indicates better goodness of fit.

3.2.2. Criteria for a Comparison of the Predictive Power of Models with Paired FDP and FCP Models

The mean relative error (MRE) over both the fault detection and correction processes, given in Equation (17), is used as the criterion for the prediction performance of paired models.

Assume that a total of $n$ pairs of observations have been collected by the end of testing time $t_n$. First, the paired data up to time $t_k$ ($t_1 < t_k < t_n$) are used to estimate the parameters of $m_d(t)$ and $m_c(t)$; the estimated parameters are then substituted into the mean value functions to predict the cumulative fault numbers $\hat{m}_d(t_n)$ and $\hat{m}_c(t_n)$. The procedure is then repeated for successive values of $t_k$ up to $t_n$.

The smaller the MRE, the better the model's prediction performance.
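The refit-and-predict procedure above yields one pair of end-point predictions per refit. Equation (17) is not reproduced here, so the sketch assumes that MRE is the average absolute relative error $|\hat{m}(t_n) - m_n|/m_n$ taken over all refits and over both processes; the function names are hypothetical.

```python
from typing import Sequence, Tuple


def relative_error(predicted: float, actual: float) -> float:
    """Relative error of an end-point prediction, (predicted - actual) / actual."""
    return (predicted - actual) / actual


def mean_relative_error(
    end_predictions: Sequence[Tuple[float, float]], actual_d: float, actual_c: float
) -> float:
    """Assumed MRE: average |RE| over all refits and over both processes.

    end_predictions holds (m_d_hat(t_n), m_c_hat(t_n)) from each refit on data up to t_k.
    """
    errors = []
    for pred_d, pred_c in end_predictions:
        errors.append(abs(relative_error(pred_d, actual_d)))
        errors.append(abs(relative_error(pred_c, actual_c)))
    return sum(errors) / len(errors)


# Two hypothetical refits predicting 100 detected and 90 corrected faults at t_n.
print(mean_relative_error([(110.0, 90.0), (95.0, 99.0)], actual_d=100.0, actual_c=90.0))
```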

3.3. Criteria for a Comparison of the Power of Models with Single Process Models

3.3.1. Criteria for a Comparison of the Descriptive Power of Models with Single Process Models

For single process models, we adopted the following seven goodness-of-fit criteria, Equations (18)–(24), to compare their fitting performance.

- (1) Mean value of squared error (MSE).

- (2) Correlation index of the regression curve equation ($R^2$).

- (3) Adjusted $R^2$.

- (4) Predictive-ratio risk (PRR). The lower the value of PRR, the better the goodness-of-fit.

- (5) Predictive power (PP). A smaller PP indicates a better fit.

- (6) A lower value indicates the preferred model.

- (7) A lower value indicates a better goodness-of-fit.
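Criteria (1) to (5) can be computed as sketched below. Equations (18) to (22) are not reproduced in this text, so the sketch assumes the forms commonly used in SRGM comparisons: $\mathrm{MSE} = \sum_i(\hat{m}_i - y_i)^2/(n - N)$ with $N$ parameters, $R^2 = 1 - \sum_i(\hat{m}_i - y_i)^2/\sum_i(y_i - \bar{y})^2$, Adjusted $R^2 = 1 - (1 - R^2)(n-1)/(n-N-1)$, $\mathrm{PRR} = \sum_i((\hat{m}_i - y_i)/\hat{m}_i)^2$, and $\mathrm{PP} = \sum_i((\hat{m}_i - y_i)/y_i)^2$. The normalizations in the paper may differ, and criteria (6) and (7) are omitted because their definitions are not recoverable here.

```python
from typing import Dict, Sequence


def goodness_of_fit(observed: Sequence[float], fitted: Sequence[float], n_params: int) -> Dict[str, float]:
    """Common SRGM fit criteria in assumed textbook forms.

    observed: y_i (cumulative faults); fitted: m_hat(t_i); n_params: N.
    PP divides by y_i and PRR by m_hat(t_i), so both must be nonzero.
    """
    n = len(observed)
    residuals = [m - y for y, m in zip(observed, fitted)]
    sse = sum(e * e for e in residuals)
    mean_y = sum(observed) / n
    sst = sum((y - mean_y) ** 2 for y in observed)
    r2 = 1.0 - sse / sst
    return {
        "MSE": sse / (n - n_params),
        "R2": r2,
        "AdjR2": 1.0 - (1.0 - r2) * (n - 1) / (n - n_params - 1),
        "PRR": sum((e / m) ** 2 for e, m in zip(residuals, fitted)),
        "PP": sum((e / y) ** 2 for e, y in zip(residuals, observed)),
    }


print(goodness_of_fit([1.0, 2.0, 3.0, 4.0], [1.0, 2.0, 3.0, 5.0], n_params=1))
```

PRR weights residuals by the fitted values and PP by the observations, so the two react differently when a model overestimates versus underestimates early counts.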

3.3.2. Criteria for a Comparison of the Predictive Power of Models with Single Process Models

For single process models, we use the SSE criterion, Equation (25), to examine the predictive power of SRGMs.

A lower SSE means better prediction performance.
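The predictive comparisons in Section 4 fit each model on a leading fraction of the data (80% for DS-1, 85% for DS-2) and predict the rest. The sketch below assumes that SSE in Equation (25) sums the squared errors over the held-out observations only, and that the split index is obtained by rounding; both are assumptions, and the names are hypothetical.

```python
from typing import Sequence


def split_index(n: int, fit_fraction: float) -> int:
    """Number of leading observations used for fitting (rounding is an assumption)."""
    return int(round(n * fit_fraction))


def prediction_sse(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """Sum of squared prediction errors over the held-out observations."""
    return sum((y - p) ** 2 for y, p in zip(observed, predicted))


n = 20                       # e.g. a 20-week dataset
k = split_index(n, 0.85)     # first k points fit the model, the rest are predicted
print(k, n - k)
```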

4. Numerical Examples

In the following experiments, we validate the proposed models on three real datasets and compare their performance based on different comparison criteria.

4.1. Case Study 1

DS-1 was collected from testing a medium-size software system [10] and has been widely used in many papers, such as [7,11,23,48]. It includes observations of both detected and corrected faults: a total of 144 faults were detected and 143 faults were corrected within 17 weeks. Detailed information about this dataset can be found in [6]. We chose M1 to M5 (paired FDP and FCP models) as comparison models, used the combined LSE method for parameter estimation, and used the combined MSE and MRE as the goodness-of-fit and prediction performance criteria, respectively.

For the descriptive power comparison, all data points were used to fit the models and estimate the model parameters. The results are listed in Table 3, together with the MSE values used for a goodness-of-fit comparison.

Table 3.

Estimates of parameters and performance values of paired models for DS-1.

For the predictive performance comparison, we used the first 80% of DS-1 to estimate the parameters of all models, then we compared the prediction ability of all models according to the remaining 20% of the data points. The results of the MRE values are listed in the far right column of Table 3.

From Table 3, it can be noted that for the proposed models:

- M19 (the proposed model with inflection S-shaped testing coverage) has the smallest MSE = 22.9948 and MSEd = 26.6022 among all models.

- M18 (the proposed model with delayed S-shaped testing coverage) has the second smallest MSE = 27.5294 and MSEd = 29.3943 among all models.

- M17 (the proposed model with Weibull-type testing coverage) has the third smallest MSE = 27.9714 and MSEd = 37.6473 among all models.

According to the combined MSE criterion (16), all proposed models, that is, M17, M18, and M19, have better goodness-of-fit performance than the existing models. The MSE values of the proposed models range from 22.9948 to 27.9714, whereas those of the existing models range from 34.6214 to 104.8889, so the proposed models' MSE values are clearly smaller. For example, the MSE of the G-O model with gamma-distributed time delay (M5), 34.6214, is about 1.51 times that of M19, and the MSE of the G-O model with time-dependent delay (M2), 104.8889, is about 4.56 times that of M19. We can therefore deduce that, for DS-1, the descriptive power of the proposed models, which are based on the fault amount dependency between the fault detection and correction processes, is better than that of the other models, which are based on the time dependency between these two processes.

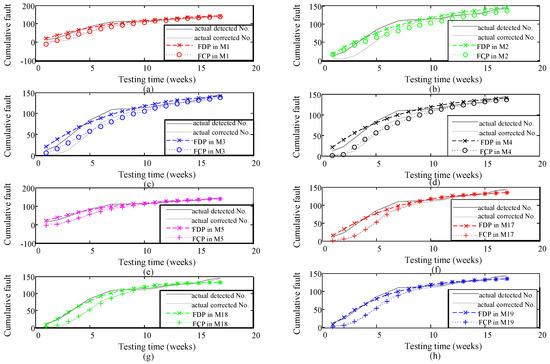

Figure 2a–h compares the fits of the existing paired models M1 to M5 and the proposed models M17 to M19 on DS-1. Figure 2 shows that the proposed models fit both the fault detection and correction processes of the dataset very well. In particular, Figure 2b–d shows that the fitted curves of the existing models M2, M3, and M1 deviate considerably from the actual fault data and fit far worse than the proposed models.

Figure 2.

Comparisons of the fitting results of M1-M5 and M17-M19 for DS-1. (a–e) FDP and FCP fitting results of M1-M5 vs. the actual numbers of detected and corrected faults; (f–h) FDP and FCP fitting results of M17-M19 vs. the actual numbers of detected and corrected faults.

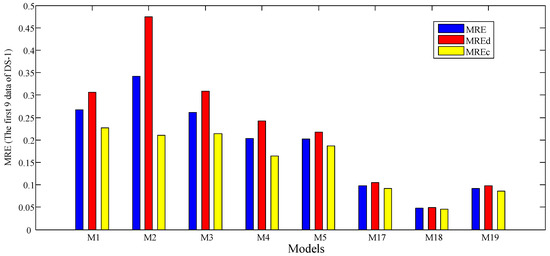

For predictive power, all proposed models (M17, M18, and M19) again outperform the existing models. M18 gives the smallest MRE (0.0475), followed by M19 (0.0921) and M17 (0.0980). The MRE values of the existing models lie between 0.2018 and 0.3422, well above the range of the proposed models; for example, the MRE of the G-O model with gamma-distributed time delay (M5), 0.2018, is 4.25 times that of M18, and the MRE of the G-O model with time-dependent delay (M2), 0.3422, is 7.2 times that of M18.
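The multiples quoted in the two DS-1 comparisons above can be recomputed directly from the reported MSE and MRE values; this is only an arithmetic check on the stated numbers.

```python
# Values reported for DS-1 in the comparisons above.
mse = {"M19": 22.9948, "M5": 34.6214, "M2": 104.8889}
mre = {"M18": 0.0475, "M5": 0.2018, "M2": 0.3422}

ratios = {
    "MSE M5/M19": mse["M5"] / mse["M19"],
    "MSE M2/M19": mse["M2"] / mse["M19"],
    "MRE M5/M18": mre["M5"] / mre["M18"],
    "MRE M2/M18": mre["M2"] / mre["M18"],
}
for name, value in ratios.items():
    print(f"{name}: {value:.2f}")
```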

Figure 3 compares the predictions of all models on DS-1 and agrees with the results in Table 3: the proposed models visibly have the best predictive performance. Overall, the MRE values of M1 to M5 are far greater than those of M17 to M19; numerically, they are roughly two to seven times as large. Among them, the MRE of M2 is the largest (0.3422), while that of M18 is the smallest (0.0475); in other words, the prediction error of M2 is about seven times that of M18.

Figure 3.

Comparisons of the prediction results based on M1-M5 and M17-M19 for DS-1.

4.2. Case Study 2

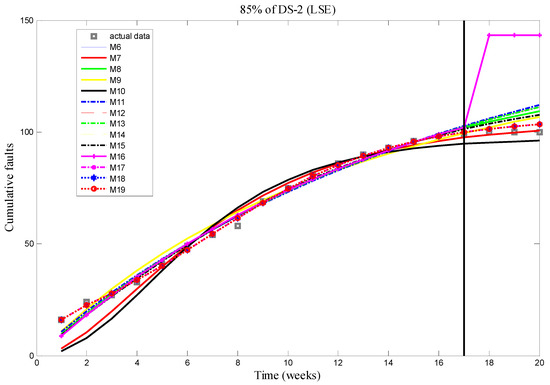

Dataset 2 (DS-2) was collected from Tandem Computer Release #1 [49] and has been used in papers such as [46,50]. It contains only fault detection data: a total of 20 faults observed within about 20 weeks. Therefore, we chose M6 to M16 (single process models) as comparison models, and used the traditional LSE method (14) for parameter estimation and the seven criteria (18)–(24) for goodness-of-fit comparison.

For the descriptive power comparison, all data points were used to fit the models and estimate model parameters. The estimates of model parameters and all seven estimation criteria values are listed in Table 4 and Table 5, respectively.

Table 4.

Parameter estimates of M6 to M19 for DS-2.

Table 5.

Comparisons of descriptive and predictive power of M6 to M19 for DS-2.

For the predictive performance comparison, we used the first 85% of DS-2 to estimate the parameters of all models, and then we compared the prediction ability of all models according to the remaining 15% of DS-2 data points. SSE criterion (25) is taken as the predictive power criterion, and the prediction values are listed in the far right column of Table 5.

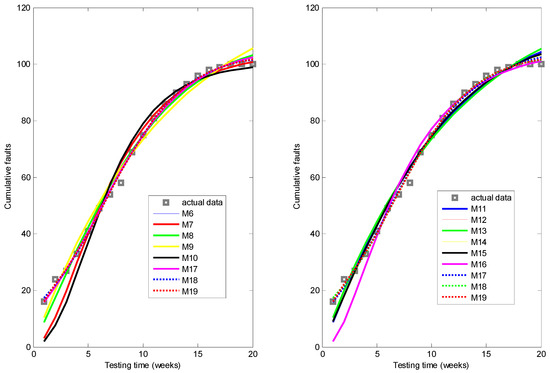

Figure 4 compares the fits of the single process models M6 to M16 and of the FCPs of the proposed models M17 to M19 on DS-2. For a clearer display, the models are divided into two groups, and each group includes the actual data and the proposed models. Figure 4 shows that the curves of the proposed models M17 to M19 deviate less from the actual data than those of the existing models, which confirms that the proposed models fit better.

Figure 4.

Comparisons of the fitting results for M11-M19 based on DS-2.

From Table 5, the FCPs of the proposed models give the top three results among all SRGMs on each of the seven criteria (18)–(24).

- M17 (the proposed model with Weibull-type testing coverage), M18 (the proposed model with delayed S-shaped testing coverage), and M19 (the proposed model with inflection S-shaped testing coverage) provide much smaller MSE values than existing models, among which M19’s MSE = 2.0100 is the lowest value among all models followed by M18’s MSE = 2.1653 and M17’s MSE = 3.3221.

- M17, M18, and M19 provide the three largest $R^2$ values, 0.9971, 0.9980, and 0.9981, respectively, with M19 giving the largest $R^2$ = 0.9981.

- M17, M18, and M19 provide the three largest Adjusted $R^2$ values, 0.9960, 0.9975, and 0.9975, respectively, with M18 and M19 tied for the largest Adjusted $R^2$ = 0.9975.

- M17, M18, and M19 provide the three smallest PRR values, 0.0242, 0.0224, and 0.0135, respectively; M19 gives the smallest PRR (0.0135), followed by M18 (0.0224) and M17 (0.0242).

- M17, M18, and M19 provide the three smallest PP values, 0.0215, 0.0224, and 0.0131, respectively; M19 gives the smallest PP (0.0131), followed by M17 (0.0215) and M18 (0.0224).

- M17, M18, and M19 provide the three smallest absolute values of criterion (6), 0.0701, 0.0164, and 0.02681, respectively; M18 gives the smallest absolute value (0.0164), followed by M19 and M17.

- M17, M18, and M19 provide the three smallest values of criterion (7), 1.5696, 1.3077, and 1.2607, respectively; M19 gives the smallest (1.2607), followed by M18 and M17.

To sum up, among the three proposed models, M19 gives the best results for MSE, , Adjusted , , , and , whereas M18 gives the best result for . Therefore, the comparison results on this dataset suggest that the proposed models have better overall fitting performance.
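As a minimal sketch of how the goodness-of-fit criteria can be computed, the snippet below evaluates MSE together with the coefficient of determination and its adjusted version. It assumes, as is usual in NHPP SRGM comparisons, that the criterion labelled "Adjusted" above is the adjusted coefficient of determination, and it uses common textbook definitions (MSE divided by n minus the number of parameters); the paper's own formulas may differ. The function name `fit_criteria` and the toy numbers are hypothetical.

```python
import numpy as np


def fit_criteria(y_obs, y_fit, n_params):
    """Return (MSE, R^2, adjusted R^2) for one fitted mean value function.

    Assumed definitions (common in NHPP SRGM comparisons; the paper's own
    formulas may differ):
        MSE      = SSE / (n - N)
        R^2      = 1 - SSE / SST
        Adj. R^2 = 1 - (1 - R^2) * (n - 1) / (n - N - 1)
    with n observations and N estimated parameters.
    """
    y_obs = np.asarray(y_obs, dtype=float)
    y_fit = np.asarray(y_fit, dtype=float)
    n = y_obs.size
    sse = np.sum((y_fit - y_obs) ** 2)          # squared fitting error
    sst = np.sum((y_obs - y_obs.mean()) ** 2)   # total variation of the data
    mse = sse / (n - n_params)
    r2 = 1.0 - sse / sst
    adj_r2 = 1.0 - (1.0 - r2) * (n - 1) / (n - n_params - 1)
    return mse, r2, adj_r2


# Toy example (hypothetical numbers, not taken from DS-2)
observed = [2, 5, 9, 12, 14]
fitted = [2.5, 5.0, 8.5, 12.0, 14.5]
mse, r2, adj_r2 = fit_criteria(observed, fitted, n_params=2)
print(f"MSE = {mse:.4f}, R^2 = {r2:.4f}, Adjusted R^2 = {adj_r2:.4f}")
```

Smaller MSE and larger R^2 / adjusted R^2 indicate a better fit; the adjusted version penalizes models with more parameters, which matters when comparing models of different complexity.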

For the predictive power comparison, although the SSE values of the proposed models are not the smallest, they rank second (M17), third (M19), and fourth (M18), slightly above the best value. The minimum SSE value, 1.8720, is given by the delayed S-shaped model (M7), whereas the SSE values of the proposed models are far smaller than those of the remaining models. For example, the SSE of other models can be 2.4 times (the Yamada Rayleigh model (M10), with SSE = 46.3688) or even 296.31 times (the SRGM-3 model (M16), whose SSE equals ) that of the proposed model M17, namely 19.3357. Moreover, the delayed S-shaped model gives the best prediction result only for DS-2, not for DS-3, and even for DS-2 it does not give the best goodness-of-fit result. Among the three proposed models, M17 gives the best prediction result, followed by M19 and M18. Their SSE values lie in the interval [19.3357, 19.6887], which indicates that for DS-2 the proposed models achieve comparable prediction accuracy.
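The ratios quoted above can be reproduced directly from the reported SSE values. The snippet below does only that arithmetic, using no data beyond the numbers stated in the text; the variable names are illustrative.

```python
# SSE values for DS-2 as reported in the text (prediction on the held-out points)
sse_m17 = 19.3357           # best of the proposed models
sse_proposed_max = 19.6887  # upper end of the proposed models' SSE range
sse_m10 = 46.3688           # Yamada Rayleigh model (M10)

# How many times larger M10's prediction error is than M17's
ratio_m10 = sse_m10 / sse_m17

# Relative spread among the three proposed models
spread = (sse_proposed_max - sse_m17) / sse_m17

print(f"SSE(M10) / SSE(M17) = {ratio_m10:.2f}")
print(f"spread of proposed models' SSE = {spread:.1%}")
```

The first ratio evaluates to about 2.40, matching the "2.4 times" in the text, and the spread among the proposed models is under 2%, which is what supports the statement that they reach comparable prediction accuracy.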

Figure 5 plots the cumulative number of detected faults predicted by all models using 85% of DS-2; the results agree with those in Table 5. The proposed models predict better than all existing models except M7, and far better than most of them; e.g., M16 deviates from the actual data curve more than any other model.

Figure 5.

Comparisons of the prediction results for M11-M19 based on DS-2.

4.3. Case Study 3

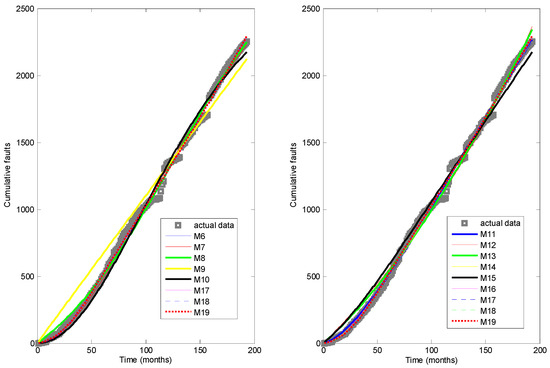

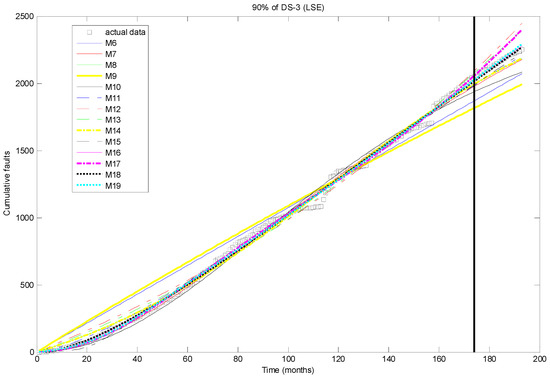

Dataset 3 (DS-3) comes from a networking component of the Linux Kernel project [51] and has been widely used in previous studies, such as [52]. Linux is a popular open-source operating system that, to a certain extent, represents the actual situation of current software systems; the failure dataset was collected from Bugzilla. A total of 2251 faults were observed within 193 months (5933 days), from 15 November 2002 to 28 January 2019. This dataset likewise contains only fault detection data, and it covers a longer time series than DS-1 and DS-2.

For the descriptive power comparison, we used all data points to fit the models and estimate the model parameters. The parameter estimates are listed in Table 6. The values of the seven fitting criteria are summarized in Table 7, with the SSE values of the prediction comparison in the rightmost column. Figure 6 illustrates the fitting of the models on DS-3. The curves of the proposed models M17 to M19 are much closer to the actual data than those of the existing models, which confirms that the proposed models fit much better than the existing models.
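For detection-only data the conclusions name least squares estimation (LSE) as the fitting method. As a minimal sketch, assuming SciPy is available, the snippet fits the standard delayed S-shaped mean value function (the model labelled M7 in the comparisons) to synthetic cumulative fault counts; the data, true parameter values, and starting values are hypothetical and are not DS-3.

```python
import numpy as np
from scipy.optimize import curve_fit


def delayed_s_shaped(t, a, b):
    """Delayed S-shaped mean value function m(t) = a * [1 - (1 + b t) exp(-b t)]."""
    return a * (1.0 - (1.0 + b * t) * np.exp(-b * t))


# Synthetic cumulative fault counts (hypothetical, NOT DS-3):
# true parameters a = 100 faults, b = 0.25 per time unit, plus small noise
rng = np.random.default_rng(seed=0)
t = np.arange(1, 21, dtype=float)
y = delayed_s_shaped(t, 100.0, 0.25) + rng.normal(scale=0.5, size=t.size)

# LSE: choose (a, b) minimizing sum_i (m(t_i) - y_i)^2, keeping both parameters non-negative
(a_hat, b_hat), _ = curve_fit(
    delayed_s_shaped, t, y,
    p0=[y[-1], 0.1],
    bounds=([0.0, 0.0], [np.inf, np.inf]),
)
print(f"a_hat = {a_hat:.2f}, b_hat = {b_hat:.4f}")
```

Starting `a` at the last observed count is a simple heuristic, since the expected total number of faults cannot be smaller than what has already been observed (up to noise).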

Table 6.

Parameter estimates of M6 to M19 for DS-3.

Table 7.

Comparisons of descriptive and predictive power of M6 to M19 for DS-3.

Figure 6.

Comparisons of the fitting results for M1-M5 and M17-M19 based on DS-3.

Table 7 shows that:

- The proposed models give the top three results among all models on MSE, , Adjusted , and ; M17 (the proposed model with Weibull-type testing coverage) gives the best results on these four criteria, followed by M19 (the proposed model with inflection S-shaped testing coverage).

- M18 (the proposed model with delayed S-shaped testing coverage) gives the best results of and .

- M18 gives the second-best absolute value of , after the SRGM-3 model; the difference between the two is small, since the lowest absolute value of is 0.1743 and the absolute value of given by M18 is 0.4106.

Therefore, according to the above results, the proposed models generally provide a better fitting performance.
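The three proposed models are distinguished by the testing coverage function they adopt (M17 Weibull-type, M18 delayed S-shaped, M19 inflection S-shaped). The snippet below sketches commonly used forms of these functions, assuming standard parameterizations; the exact forms and parameters used in the paper are defined in its model section and may differ, and the function names and parameter values here are hypothetical.

```python
import numpy as np

# Illustrative testing coverage functions c(t): fraction of code covered by time t.
# Commonly used forms; parameter names and values are assumptions.


def weibull_coverage(t, b, k):
    """Weibull-type coverage: c(t) = 1 - exp(-b t^k)."""
    return 1.0 - np.exp(-b * t ** k)


def delayed_s_coverage(t, b):
    """Delayed S-shaped coverage: c(t) = 1 - (1 + b t) exp(-b t)."""
    return 1.0 - (1.0 + b * t) * np.exp(-b * t)


def inflection_s_coverage(t, b, beta):
    """Inflection S-shaped coverage: c(t) = (1 - exp(-b t)) / (1 + beta exp(-b t))."""
    return (1.0 - np.exp(-b * t)) / (1.0 + beta * np.exp(-b * t))


t = np.linspace(0.0, 40.0, 5)  # t = 0, 10, 20, 30, 40
curves = {
    "Weibull-type": weibull_coverage(t, b=0.01, k=1.5),
    "delayed S-shaped": delayed_s_coverage(t, b=0.15),
    "inflection S-shaped": inflection_s_coverage(t, b=0.15, beta=5.0),
}
for name, c in curves.items():
    print(f"{name:>20}: {np.round(c, 3)}")
```

All three start at zero and increase toward full coverage. With k = 1 the Weibull-type form, and with beta = 0 the inflection S-shaped form, both reduce to the exponential coverage 1 - exp(-b t).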

For the predictive performance comparison, we used the first 90% of the DS-3 data points to estimate the model parameters and the remaining 10% to compare the prediction ability of the models. Although the proposed models do not give the best result, M18 gives the second-best result, slightly larger than the best: the smallest SSE value is (given by the SRGM-3 model), and M18's SSE value is . Given that DS-3 is a long time series, the prediction error of M18 is of the same order of magnitude as that of the SRGM-3 model, which means that the two models predict almost equally well. Compared with the other models, the proposed models' SSE values lie in the interval (, ), whereas those of the existing models lie in the interval (, ), so the proposed models' SSE values are relatively small; e.g., the other models' SSE values can be 6.98 times (the Fault removal model's ) or even 197.25 times (the Yamada exponential model's ) that of the proposed model M18. In addition, the SRGM-3 model provides the best prediction result only for DS-3, but it provides the worst prediction result for DS-2. Overall, the proposed models give better predictive performance.
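The hold-out procedure described above (fit on the first 90% of the points, then score the prediction on the remaining 10% with SSE) can be sketched as follows, assuming SciPy is available. The helper `holdout_sse`, the example model, and the noise-free synthetic data are hypothetical; with noise-free data the hold-out SSE should be close to zero, which makes the mechanics easy to check.

```python
import numpy as np
from scipy.optimize import curve_fit


def delayed_s_shaped(t, a, b):
    """Delayed S-shaped mean value function, used here only as an example model."""
    return a * (1.0 - (1.0 + b * t) * np.exp(-b * t))


def holdout_sse(model, t, y, p0, train_frac=0.9):
    """Fit `model` on the first train_frac of the points; return SSE on the remaining points."""
    n_train = int(round(train_frac * len(t)))
    params, _ = curve_fit(model, t[:n_train], y[:n_train], p0=p0, maxfev=10000)
    predicted = model(t[n_train:], *params)  # extrapolate into the hold-out window
    return float(np.sum((predicted - y[n_train:]) ** 2))


# Hypothetical, noise-free monthly data (NOT DS-3)
t = np.arange(1, 41, dtype=float)
y = delayed_s_shaped(t, 2000.0, 0.08)
sse = holdout_sse(delayed_s_shaped, t, y, p0=[y[-1], 0.05], train_frac=0.9)
print(f"hold-out SSE = {sse:.3e}")
```

Because the hold-out points lie at the end of the series, this SSE measures extrapolation ability rather than interpolation, which is why a model can fit well yet predict poorly (or the reverse), as observed for M7 and SRGM-3.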

Figure 7 plots the cumulative number of detected faults predicted by all models using 90% of DS-3; the results agree with those in Table 7 and show that the proposed models generally have better predictive performance.

Figure 7.

Comparisons of the prediction results of M6 to M19 for DS-3.

5. Conclusions

In this article, we integrated three testing coverage functions (Weibull-type, delayed S-shaped, and inflection S-shaped) into NHPP-based software reliability modeling that incorporates both fault detection processes and fault correction processes. The relationship between the mean value functions of detected faults and corrected faults is introduced from the viewpoint of fault amount dependency instead of time dependency. We compared the performance of the three proposed models with several existing models on three real failure datasets covering two kinds of failure data. For failure data containing only the number of detected faults, the traditional LSE method was used to estimate the model parameters, seven comparison criteria were adopted to compare fitting performance, and the SSE value was used to compare prediction performance. For failure data containing observations of both fault detection and correction, the combined LSE method was used for parameter estimation, and the combined MSE and MRE values were used to compare model fitting and prediction performance. In both cases, the proposed models generally provided better goodness-of-fit and prediction results than the existing paired models and single process models.
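As a minimal sketch of a combined LSE, assuming it minimizes the equally weighted sum of the squared errors of the detected-fault and corrected-fault counts, the snippet fits a paired model to both series at once by stacking their residuals. For illustration only, it uses a time-delay pairing (an exponential detection curve whose correction lags by an exponentially distributed delay), i.e., the kind of time-dependent model the abstract attributes to previous studies, not the fault-amount dependency proposed here. The functions `m_detect`, `m_correct`, and `combined_residuals` and the data are hypothetical.

```python
import numpy as np
from scipy.optimize import least_squares


def m_detect(t, a, b):
    """Exponential mean value function of detected faults: a (1 - e^{-b t})."""
    return a * (1.0 - np.exp(-b * t))


def m_correct(t, a, b, c):
    """Detected faults delayed by an exponential correction time with rate c (requires c != b)."""
    return a * (1.0 - (c * np.exp(-b * t) - b * np.exp(-c * t)) / (c - b))


def combined_residuals(theta, t, y_det, y_cor):
    """Stack FDP and FCP residuals so one least-squares problem fits both processes."""
    a, b, c = theta
    return np.concatenate([m_detect(t, a, b) - y_det, m_correct(t, a, b, c) - y_cor])


# Hypothetical noise-free paired data (NOT from the paper's datasets)
t = np.arange(1, 31, dtype=float)
y_det = m_detect(t, 120.0, 0.10)
y_cor = m_correct(t, 120.0, 0.10, 0.30)

fit = least_squares(
    combined_residuals,
    x0=[100.0, 0.05, 0.20],
    args=(t, y_det, y_cor),
    bounds=([0.0, 1e-6, 1e-6], [np.inf, np.inf, np.inf]),
)
a_hat, b_hat, c_hat = fit.x
combined_sse = 2.0 * fit.cost  # least_squares reports cost = 0.5 * sum of squared residuals
print(f"a = {a_hat:.3f}, b = {b_hat:.4f}, c = {c_hat:.4f}, combined SSE = {combined_sse:.2e}")
```

Fitting both series jointly lets the shared parameters (here `a` and `b`) be informed by detection and correction data together; weighting the two residual blocks differently would be a straightforward variation.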

In addition, the results show that models with testing coverage generally fit and predict the observed data better, so we suggest extending this study to time-variable testing coverage functions. In future work, we will use maximum likelihood estimation to estimate the parameters of the proposed models and their confidence intervals, and compare the estimation methods with respect to limitations on data form and the accuracy of model performance. We will also study the release time problem using the proposed models, with reliability function simulations under various sample sizes.

Currently, we are considering more complicated circumstances, such as incorporating other functions to characterize the relationship between the fault amounts of the two processes. More failure datasets are needed to verify such software reliability models and to support our conclusions. Further progress on these subjects will be reported in future papers.

Author Contributions

Conceptualization, Q.L. and H.P.; Data Curation, Q.L.; Formal Analysis, Q.L.; Funding Acquisition, Q.L.; Investigation, Q.L.; Methodology, Q.L. and H.P.; Project Administration, Q.L.; Resources, Q.L. and H.P.; Supervision, H.P.; Writing—Review and Editing, Q.L. All authors have read and agreed to the published version of the manuscript.

Funding

This research was partly funded by “National Key Laboratory of Science and Technology on Reliability and Environmental Engineering of China”, grant number “WDZC2019601A303”.

Institutional Review Board Statement

Not applicable.

Informed Consent Statement

Not applicable.

Data Availability Statement

Not applicable.

Acknowledgments

The authors would like to thank the editor and referees for their valuable comments.

Conflicts of Interest

The authors declare no conflict of interest.

References

1. Erto, P.; Giorgio, M.; Lepore, A. The Generalized Inflection S-Shaped Software Reliability Growth Model. IEEE Trans. Reliab. 2020, 69, 228–244.

2. Schneidewind, N.F. Analysis of error processes in computer software. ACM SIGPLAN Not. 1975, 10, 337–346.

3. Xie, M.; Zhao, M. The Schneidewind software reliability model revisited. In Proceedings of the Third International Symposium on Software Reliability Engineering, Research Triangle Park, NC, USA, 7–10 October 1992; pp. 184–192.

4. Schneidewind, N.F. Modelling the fault correction process. In Proceedings of the 12th International Symposium on Software Reliability Engineering, Hong Kong, China, 27–30 November 2001; IEEE Computer Society Press: Los Alamitos, CA, USA, 2001; pp. 185–190.

5. Huang, C.Y.; Lin, C.T. Software Reliability Analysis by Considering Fault Dependency and Debugging Time Lag. IEEE Trans. Reliab. 2006, 55, 436–450.

6. Xie, M.; Hu, Q.P.; Wu, Y.P.; Ng, S.H. A study of the modeling and analysis of software fault-detection and fault-correction processes. Qual. Reliab. Eng. Int. 2007, 23, 459–470.

7. Wu, Y.P.; Hu, Q.P.; Xie, M.; Ng, S.H. Modeling and analysis of software fault detection and correction process by considering time dependency. IEEE Trans. Reliab. 2007, 56, 629–642.

8. Okamura, H.; Dohi, T. A Generalized Bivariate Modeling Framework of Fault Detection and Correction Processes. In Proceedings of the 2017 IEEE 28th International Symposium on Software Reliability Engineering (ISSRE), Toulouse, France, 23–26 October 2017.

9. Wang, L.; Hu, Q.; Jian, L. Software reliability growth modeling and analysis with dual fault detection and correction processes. IIE Trans. 2016, 48, 359–370.

10. Peng, R.; Li, Y.F.; Zhang, W.J.; Hu, Q.P. Testing effort dependent software reliability model for imperfect debugging process considering both detection and correction. Reliab. Eng. Syst. Saf. 2014, 126, 37–43.

11. Peng, R.; Zhai, Q. Modeling of software fault detection and correction processes with fault dependency. Eksploat. i Niezawodn.-Maint. Reliab. 2017, 19, 467–475.

12. Lo, J.H.; Huang, C.Y. An integration of fault detection and correction processes in software reliability analysis. J. Syst. Softw. 2006, 79, 1312–1323.

13. Yang, J.; Liu, Y.; Xie, M.; Zhao, M. Modeling and analysis of reliability of multi-release open source software incorporating both fault detection and correction processes. J. Syst. Softw. 2016, 115, 102–110.

14. Yang, J.; Zhao, M.; Chen, J. ELS algorithm for estimating open source software reliability with masked data considering both fault detection and correction processes. Commun. Stat. Theory Methods 2021, 1–26.

15. Pachauri, B.; Kumar, A.; Dhar, J. Reliability analysis of open source software systems considering the effect of previously released version. Int. J. Comput. Appl. 2019, 41, 31–38.

16. Tiwari, A.; Sharma, A. Imperfect Debugging-Based Modeling of Fault Detection and Correction Using Statistical Methods. J. Circuits Syst. Comput. 2021, 30, 2150185.

17. Choudhary, C.; Kapur, P.K.; Khatri, S.K.; Muthukumar, R.; Shrivastava, A.K. Effort based release time of software for detection and correction processes using MAUT. Int. J. Syst. Assur. Eng. Manag. 2020, 11, S367–S378.

18. Saraf, I.; Iqbal, J. Generalized software fault detection and correction modeling framework through imperfect debugging error generation and change point. Int. J. Inf. Technol. 2019, 11, 751.

19. Kumar, V.; Khatri, S.K.; Dua, H.; Sharma, M.; Mathur, P. An Assessment of Testing Cost with Effort-Dependent FDP and FCP under Learning Effect: A Genetic Algorithm Approach. Int. J. Reliab. Qual. Saf. Eng. 2014, 21, 1450027.

20. Shu, Y.; Wu, Z.; Liu, H.; Yang, X. Software reliability modeling of fault detection and correction processes. In Proceedings of the 2009 Annual Reliability and Maintainability Symposium, Fort Worth, TX, USA, 26–29 January 2009; pp. 521–526.

21. Li, Q.Y.; Pham, H. Modeling software fault-detection and fault-correction processes by considering the dependencies between fault amounts. Appl. Sci. 2021, 11, 6998.

22. Li, Q.; Pham, H. NHPP software reliability model considering the uncertainty of operating environments with imperfect debugging and testing coverage. Appl. Math. Model. 2017, 51, 68–85.

23. Huang, C.Y.; Kuo, S.Y.; Lyu, M.R. An assessment of testing-effort dependent software reliability growth models. IEEE Trans. Reliab. 2007, 56, 198–211.

24. Cai, X.; Lyu, M.R. Software reliability modeling with test coverage experimentation and measurement with a fault-tolerant software project. In Proceedings of the 18th IEEE International Symposium on Software Reliability, Trollhattan, Sweden, 5–9 November 2007; pp. 17–26.

25. Malaiya, Y.K.; Li, M.N.; Bieman, J.M.; Karcich, R. Software reliability growth with test coverage. IEEE Trans. Reliab. 2002, 51, 420–426.

26. Pham, H.; Zhang, X. NHPP software reliability and cost models with testing coverage. Eur. J. Oper. Res. 2003, 145, 443–454.

27. Vouk, M.A. Using Reliability Models during Testing with Non-Operational Profiles. Available online: https://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.47.8863&rep=rep1&type=pdf (accessed on 24 November 2021).

28. Gokhale, S.; Trivedi, K.S. A time/structure based software reliability model. Ann. Softw. Eng. 1999, 8, 85–121.

29. Park, J.Y.; Lee, G.; Park, J.H. A class of coverage growth functions and its practical application. J. Korean Stat. Soc. 2008, 37, 241–247.

30. Chatterjee, S.; Shukla, A. A unified approach of testing coverage-based software reliability growth modelling with fault detection probability, imperfect debugging, and change point. J. Softw. Evol. Process 2019, 31, e2150.

31. Chatterjee, S.; Saha, D.; Sharma, A.; Verma, Y. Reliability and optimal release time analysis for multi up-gradation software with imperfect debugging and varied testing coverage under the effect of random field Environments. Ann. Oper. Res. 2021, 1–21.

32. Jia, L.; Yang, B.; Guo, S.; Park, D.H. Software reliability modeling considering fault correction process. IEICE Trans. Inf. Syst. 2010, 93, 185–188.

33. Liu, Y.; Li, D.; Wang, L.; Hu, Q. A general modeling and analysis framework for software fault detection and correction process. Softw. Test. Verif. Reliab. 2016, 26, 351–365.

34. Hu, Q.P.; Xie, M.; Ng, S.H.; Levitin, G. Robust recurrent neural network modelling for software fault detection and correction prediction. Reliab. Eng. Syst. Saf. 2007, 92, 332–340.

35. Xiao, H.; Cao, M.; Peng, R. Artificial neural network based software fault detection and correction prediction models considering testing effort. Appl. Soft Comput. 2020, 94, 106491.

36. Huang, C.Y.; Huang, W.C. Software reliability analysis and measurement using finite and infinite server queueing models. IEEE Trans. Reliab. 2008, 57, 192–203.

37. Hwang, S.; Pham, H. Quasi-renewal time-delay fault-removal consideration in software reliability modeling. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2009, 39, 200–209.

38. Lin, C.T. Analyzing the effect of imperfect debugging on software fault detection and correction processes via a simulation framework. Math. Comput. Model. 2011, 54, 3046–3064.

39. Hu, Q.P.; Peng, R.; Ng, S.H.; Wang, H.Q. Simulation-based FDP & FCP analysis with queueing models. In Proceedings of the 2008 IEEE International Conference on Industrial Engineering and Engineering Management, Singapore, 8–11 December 2008; pp. 1577–1581.

40. Chatterjee, S.; Shukla, A. Modeling and Analysis of Software Fault Detection and Correction Process through Weibull-Type Fault Reduction Factor, Change Point and Imperfect Debugging. Arab. J. Sci. Eng. 2016, 41, 5009–5025.

41. Chatterjee, S.; Shukla, A. Effect of Test Coverage and Change Point on Software Reliability Growth Based on Time Variable Fault Detection Probability. J. Softw. 2016, 11, 110–117.

42. Yamada, S.; Ohba, M.; Osaki, S. S-Shaped Reliability Growth Modeling for Software Error Detection. IEEE Trans. Reliab. 1984, 32, 475–484.

43. Ohba, M. Inflection S-shaped software reliability growth model. In Stochastic Models in Reliability Theory; Springer: Berlin/Heidelberg, Germany, 1984; pp. 144–162.

44. Yamada, S.; Tokuno, K.; Osaki, S. Imperfect debugging models with fault introduction rate for software reliability assessment. Int. J. Syst. Sci. 1992, 23, 2241–2252.

45. Pham, H.; Zhang, X. An NHPP Software Reliability Model and Its Comparison. Int. J. Reliab. Qual. Saf. Eng. 1997, 4, 269–282.

46. Zhang, X.; Teng, X.; Pham, H. Considering fault removal efficiency in software reliability assessment. IEEE Trans. Syst. Man Cybern. Part A Syst. Hum. 2003, 33, 114–120.

47. Kapur, P.K.; Pham, H.; Anand, S.; Yadav, K. A unified approach for developing software reliability growth models in the presence of imperfect debugging and error generation. IEEE Trans. Reliab. 2011, 60, 331–340.

48. Hsu, C.J.; Huang, C.Y.; Chang, J.R. Enhancing software reliability modelling and prediction through the introduction of time-variable fault reduction factor. Appl. Math. Model. 2011, 35, 506–521.

49. Wood, A. Predicting software reliability. IEEE Comput. 1996, 11, 69–77.

50. Pham, H.; Nordmann, L. A general imperfect-software-debugging model with S-shaped fault-detection rate. IEEE Trans. Reliab. 1999, 48, 169–175.

51. Linux Kernel Bugzilla. Available online: https://bugzilla.kernel.org/ (accessed on 12 March 2016).

52. Wu, C.Y.; Huang, C.Y. A Study of Incorporation of Deep Learning into Software Reliability Modeling and Assessment. IEEE Trans. Reliab. 2021, 70, 1621–1640.

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).