1. Introduction

Suppose a series of inhomogeneous Bernoulli trials, with a given profile of success probabilities, is paced randomly in time by some independent point process. As the outcomes and epochs of the first trials become known at some time $t$, the gambler is asked to bet on the time of the last success. The gambler may choose the bygone action, the next action, or a proper subset of future times called a trap. The gambler wins with bygone if no further successes occur, and with next if exactly one success occurs after time $t$. If a trapping action is chosen, the gambler wins if the trap isolates the last success epoch from the other success epochs, that is, if the last success is the only success falling in the trap.

Motivation to study this game stems from connections to the best-choice problems with random arrivals [1,2,3,4,5,6,7,8,9] and the random records model [10,11]. A prototype problem of this kind involves a sequence of rankable items arriving by a Poisson process with a finite horizon, where the $k$th arrival is relatively the best (a record) with probability $1/k$. The optimisation task is to maximise the probability of selecting the overall best item (the last record) using a non-anticipating stopping strategy. Cowan and Zabczyk [5] showed that the optimal strategy is myopic, which means that the decision to stop on a particular record arrival only depends on whether the winning chance with bygone exceeds that with next. They also determined the critical cut-offs of the optimal strategy and studied some asymptotics. Similar results have been obtained for the best-choice problem with some other pacing processes [1,4,7,9]. In this context, trapping can be employed to test the optimality of the myopic strategy, which fails if in some situations the action bygone outperforms next but a trapping action is better still. Simple trapping strategies are easy to evaluate and provide insight into the occurrence of records.

Regarding the pacing point process, we shall assume that it is mixed binomial [12]. This setting covers, in particular, the wide class of mixed Poisson processes. In essence, this pacing process is characterised by the prior distribution $\pi$ of the total number of trials $N$, and some background continuous distribution that spreads the epochs of the trials in an i.i.d. manner. Without loss of generality, the background distribution will be assumed uniform; hence, given the number of trials, they are scattered in time like the uniform order statistics on $[0,1]$. We enrich the model with a natural size parameter by letting $\pi$ vary within a family of power series distributions.
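To make the pacing mechanism concrete, here is a minimal sketch of one path of a mixed binomial process on $[0,1]$: draw $N$ from the prior, then place $N$ i.i.d. uniform epochs and sort them. The geometric prior used as the example and the helper names (`sample_geometric`, `sample_mixed_binomial`, `count_up_to`) are illustrative assumptions, not part of the original text.

```python
import bisect
import random


def sample_geometric(q):
    """Hypothetical example prior: P(N = n) = (1 - q) * q**n, n = 0, 1, 2, ..."""
    def draw(rng):
        n = 0
        while rng.random() < q:  # each further trial occurs with probability q
            n += 1
        return n
    return draw


def sample_mixed_binomial(draw_n, rng):
    """One path of a mixed binomial process on [0, 1].

    Draw the total number of trials N from the prior, then spread the
    N epochs as the order statistics of i.i.d. uniform points on [0, 1].
    """
    n = draw_n(rng)
    return sorted(rng.random() for _ in range(n))


def count_up_to(epochs, t):
    """N_t: the number of trial epochs in [0, t] (epochs must be sorted)."""
    return bisect.bisect_right(epochs, t)


rng = random.Random(2022)
path = sample_mixed_binomial(sample_geometric(0.9), rng)
print(len(path), count_up_to(path, 0.5))
```

Any other prior can be plugged in through a different `draw_n` callable; the uniform spreading step is what gives the process the order statistics property.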

The most obvious instance of a trapping action amounts to leaving some fraction of the remaining time to isolate the last success. We call this trapping action the z-strategy, with the parameter $z$ designating the proportion of time that gets skipped (as compared to the real-time cut-off in the name of the familiar '$1/e$-strategy' of best choice [13,14]). The overall optimality of the class of z-strategies among all trapping actions will be explored for a fixed and for a random number of trials. For the problem of stopping on the last success, the optimality of the myopic strategy will be shown to hold if the sequence of its cut-offs is decreasing and interlaces with another set of critical points of z-strategies.

Then we specialise to the best-choice problem driven by a Pólya-Lundberg pacing process, when the number of trials follows a logarithmic series distribution. In different terms, the model was introduced by Bruss and Yor [15]. Bruss and Rogers [4] recently observed that the strategy stopping at the first record after the time threshold $1/e$ is not optimal. We present a more detailed analysis; in particular, we use a curious property of certain hypergeometric functions to show that the cut-offs of the myopic strategy are increasing; hence, the monotone case of optimal stopping [16] does not hold. Simulation suggests, however, that the myopic strategy is very close to optimal, both in terms of the cut-offs and the winning probability. A better approximation to optimality is achieved by the strategy that stops as soon as bygone becomes more beneficial than trapping with a z-strategy.

Viewed inside a bigger picture, the log-series prior appears as the edge $\nu = 0$ instance of the random records model with negative binomial distribution $\mathrm{NB}(\nu, q)$ of the number of trials. It is known that for $\nu = 1$, corresponding to the geometric prior, all cut-offs coincide [17,18], while for integer $\nu > 1$ they are decreasing [7]. In [19], we show that for $0 < \nu < 1$ the myopic strategy is not optimal, with the pattern of cut-offs as in the log-series case treated here.

2. Setting the Scene

2.1. The Probability Model

Let $\pi$ be a power series distribution

$$\pi_n = \frac{c_n q^n}{C(q)}, \qquad n \ge 0, \qquad C(q) = \sum_{n \ge 0} c_n q^n, \tag{1}$$

with weights $c_n \ge 0$ and scale parameter $q > 0$ varying within the interval of convergence of $C$.

The associated mixed binomial process $(N_t,\ t \in [0,1])$ is an orderly counting process with the uniform order statistics property. The process can also be seen as a time-inhomogeneous pure-birth process, with a transition rate expressible through the generating function $C$, see [20].

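Conditionally on $N_t = k$:

- (i) The epochs of the trials within $[0,t]$ and $(t,1]$ are independent;

- (ii) The posterior distribution of the number of trials yet to occur is a power series distribution

$$\mathbb{P}(N - N_t = j \mid N_t = k) = \frac{c_{k+j}\binom{k+j}{j}\,x^{j}}{C_k(x)}, \qquad j \ge 0, \tag{2}$$

with scale variable

$$x = q(1-t) \tag{3}$$

and normalisation function $C_k(x) = \sum_{j \ge 0} c_{k+j}\binom{k+j}{j}x^{j} = C^{(k)}(x)/k!$;

- (iii) $(N_s - N_t,\ s \in [t,1])$ is a mixed binomial process on $[t,1]$, with the number of trials distributed according to (2).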

The conditioning relation (2) appears in many statistical problems related to censored or partially observable data.

In principle, instead of considering a family of distributions for $N$ with parameter $q$, we could deal with one counting process on the $x$-scale. We prefer not to adhere to this viewpoint, as the 'real time' variable is more intuitive. Nevertheless, we will use (3) to switch back and forth between $t$ and $x$, as $x$ is better suited for power series work.
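The posterior (2) is easy to evaluate numerically. The sketch below (helper names are hypothetical) truncates the series at `jmax` and, for the geometric prior $c_n = 1$, compares the result with the negative binomial law $\mathrm{NB}(k+1, x)$, which is what (2) reduces to in that case by a short calculation of ours ($\sum_j \binom{k+j}{j}x^j = (1-x)^{-k-1}$).

```python
from math import comb


def posterior_weights(c, k, x, jmax):
    """P(N - N_t = j | N_t = k), j = 0..jmax, following (2) with x = q(1 - t).

    c(n) returns the power-series weight c_n of the prior. The normalising
    sum is truncated at jmax, so jmax must make the tail negligible.
    """
    w = [c(k + j) * comb(k + j, j) * x**j for j in range(jmax + 1)]
    total = sum(w)
    return [v / total for v in w]


def negative_binomial(r, x, jmax):
    """NB(r, x) probabilities binom(r + j - 1, j) (1 - x)^r x^j, j = 0..jmax."""
    return [comb(r + j - 1, j) * (1 - x)**r * x**j for j in range(jmax + 1)]


q, t, k = 0.9, 0.3, 2
x = q * (1 - t)  # scale variable (3)
post = posterior_weights(lambda n: 1.0, k, x, jmax=400)  # geometric prior: c_n = 1
ref = negative_binomial(k + 1, x, jmax=400)
print(max(abs(a - b) for a, b in zip(post, ref)))
```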

Let $(p_k)_{k \ge 1}$ be a profile of success probabilities. We assume that

The $k$th trial is a success with probability $p_k$, independently of the other trials and of the pacing process. Thus, the point process of success epochs is obtained from $(N_t)$ by thinning out the $k$th point with probability $1 - p_k$. Taken by itself, the process counting the success epochs is typically intractable [10]. A notable exception is the random records model ($p_k = 1/k$) with the geometric prior $\pi_n = (1-q)q^n$, $n \ge 0$, when the process is Poisson [1].
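For a fixed number of remaining trials, the winning probabilities of bygone and next are elementary functions of the profile. The minimal sketch below (function names are our own) computes the probabilities of no success and of exactly one success among trials $k+1, \dots, n$; for the records profile $p_l = 1/l$ these reduce to $k/n$ and $(k/n)\sum_{m=k}^{n-1} 1/m$, which the usage check confirms.

```python
def prob_no_success(p, k, n):
    """P(no success among trials k+1, ..., n); p(l) is the success probability of trial l."""
    prob = 1.0
    for l in range(k + 1, n + 1):
        prob *= 1.0 - p(l)
    return prob


def prob_one_success(p, k, n):
    """P(exactly one success among trials k+1, ..., n), via a running two-state update."""
    none, one = 1.0, 0.0
    for l in range(k + 1, n + 1):
        # either the single success happened earlier and trial l fails,
        # or there was no success so far and trial l succeeds
        one = one * (1.0 - p(l)) + none * p(l)
        none *= 1.0 - p(l)
    return one


def records(l):
    """Random records model: trial l is a record with probability 1/l."""
    return 1.0 / l


k, n = 3, 10
print(prob_no_success(records, k, n), prob_one_success(records, k, n))
```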

We shall identify the state $(t,k)$ with the event $\{N_t = k\}$. We also consider the event that the $k$th trial occurs at epoch $t$ and is a success. If there is at least one success, the sequence of successes, recorded as (epoch, index) pairs, increases in both components.

2.2. The Trapping Game and Stopping Problem

A single episode of the trapping game refers to the generic state $(t,k)$. The gambler plays either bygone or next, or chooses a proper subset of the interval $(t,1]$ as a trap. The trap $(t + z(1-t), 1]$, for $0 < z < 1$, will be called the z-strategy; this action skips the proportion $z$ of the remaining time and leaves the final portion to isolate the last success epoch from other successes.

Let $\mathcal{F}_t$ be the sigma-algebra generated by the epochs and outcomes of trials on $[0,t]$. By a stopping strategy $\tau$ we mean a random time adapted to the filtration $(\mathcal{F}_t)$, that is, a stopping time. The performance of $\tau$ is assessed by the probability of the event that the state at time $\tau$ is the last success state.

We call a stopping strategy Markovian if, in the event that the $k$th trial occurs at time $t$ and is a success, the decision to stop or to continue in state $(t,k)$ does not depend on the trials before time $t$. The general theory [21] implies the existence of an optimal stopping strategy and that it can be found within the class of Markovian strategies.

Conditional on the $k$th trial occurring at time $t$ and being a success, the probability that this trial is the last success equals the winning probability with bygone, while the probability that it is the penultimate success equals the winning probability with next. If, for every state $(t,k)$ in which bygone is at least as good as next, every later state $(t',k')$ with $t' > t$ and $k' > k$ also has this property, then the optimal stopping problem is monotone [21].

Define the myopic stopping strategy to stop at the first success state $(t,k)$, if any, in which bygone is at least as beneficial as next. In the monotone case the myopic strategy is optimal among all stopping strategies.

Suppose that for each $k$ there exists a cut-off time $t_k$ such that the action bygone is at least as good as next precisely for $t \ge t_k$. Then the myopic strategy stops at the first success $(t,k)$ satisfying $t \ge t_k$ (and does not stop if there is no such trial). The problem is monotone, hence the myopic strategy is optimal, if the cut-offs are non-increasing, that is, $t_1 \ge t_2 \ge \cdots$.
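With the cut-offs in hand, the myopic rule is a one-pass scan over the success states. In this sketch the cut-offs are supplied as a plain dictionary `cutoffs[k] = t_k` with made-up values, an assumption for illustration only; computing actual cut-offs is the subject of Section 5.

```python
def myopic_stop(successes, cutoffs):
    """Epoch at which the myopic strategy stops, or None if it never stops.

    successes: (epoch, index) pairs of the successful trials, in time order.
    cutoffs:   dict with cutoffs[k] = t_k, meaning bygone is at least as good
               as next in state (t, k) exactly when t >= t_k (assumed given).
    """
    for t, k in successes:
        if t >= cutoffs[k]:
            return t
    return None


cutoffs = {1: 0.40, 2: 0.42, 3: 0.45, 4: 0.30}  # hypothetical values
print(myopic_stop([(0.20, 1), (0.50, 3), (0.80, 4)], cutoffs))
```

The first success at $t = 0.2$ is passed over because it precedes $t_1$, and the scan stops at the success with index 3.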

4. Random Number of Trials: z-Strategies

We proceed with the continuous time setting, assuming

and

are given. In state

, the probability of isolating the last success by means of a

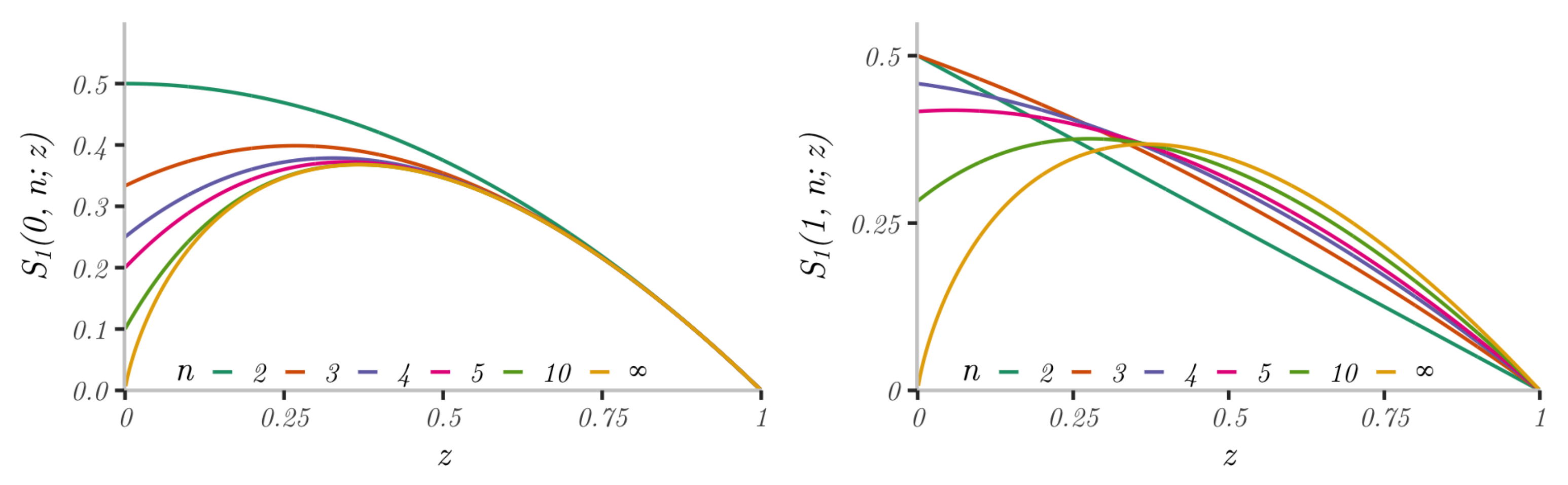

z-strategy is a convex mixture of the Bernstein polynomials:

The

instance,

is the probability to win with

, and

Similarly, the probability that none of the successes are trapped by the

z-strategy is:

and

is the probability to win with

.
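A direct numerical transcription of the Bernstein mixture for the z-strategy is sketched below under two stated assumptions: the trap is the final interval left after skipping the proportion $z$ of the remaining time, and the posterior of the number of future trials is supplied as a truncated list of weights. The check uses the records profile with the geometric prior, where, by our own calculation, the winning probability with next equals $-(1-x)\log(1-x)$; function names are hypothetical.

```python
from math import comb, log


def prob_one_success(p, m, n):
    """s_{m,n}: P(exactly one success among trials m+1, ..., n)."""
    none, one = 1.0, 0.0
    for l in range(m + 1, n + 1):
        one = one * (1.0 - p(l)) + none * p(l)
        none *= 1.0 - p(l)
    return one


def z_strategy_win(p, k, weights, z):
    """Winning probability of the z-strategy in state (t, k).

    weights[j] approximates P(N - N_t = j | N_t = k). Given j future trials,
    i ~ Binomial(j, z) of them fall in the skipped part, and the trap then
    holds trials k+i+1, ..., k+j; the gambler wins iff exactly one of them
    is a success.
    """
    total = 0.0
    for j, w in enumerate(weights):
        bern = sum(comb(j, i) * z**i * (1 - z)**(j - i) * prob_one_success(p, k + i, k + j)
                   for i in range(j + 1))
        total += w * bern
    return total


def records(l):
    return 1.0 / l


k, x, jmax = 1, 0.5, 120
# geometric prior: the posterior of the number of future trials is NB(k + 1, x)
weights = [comb(k + j, j) * (1 - x)**(k + 1) * x**j for j in range(jmax + 1)]
print(z_strategy_win(records, k, weights, 0.0), -(1 - x) * log(1 - x))
```

At $z = 0$ the trap is the whole future interval, so the function reproduces next; at $z = 1$ the trap is empty and the value drops to zero.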

Being a convex mixture of unimodal functions,

itself need not be unimodal. Accordingly, the optimal trap need not be a final interval. It may rather include a few disjoint intervals akin to ‘islands’ in the discrete time best-choice problems [

29].

Concavity is a simple condition to ensure unimodality. We say that is concave if for every the second difference in the first variable is non-positive.

Theorem 2. Suppose is concave. Then is unimodal with maximum at some . If then for the z-strategy is optimal among all trapping actions, and if then outperforms every trapping action.

Proof. By the shape-preserving properties of Bernstein polynomials [

25], the internal sum in (

10) is a concave function in

z, therefore the mixture

is also concave hence unimodal. The maximum is attained at 0 if

, and

otherwise. The overall optimality follows from the unimodality as in Theorem 1. □

The concavity is easy to express in terms of

explicitly. The second difference in the variable

k of the probability generating function

becomes

Computing

at

yields the second difference of

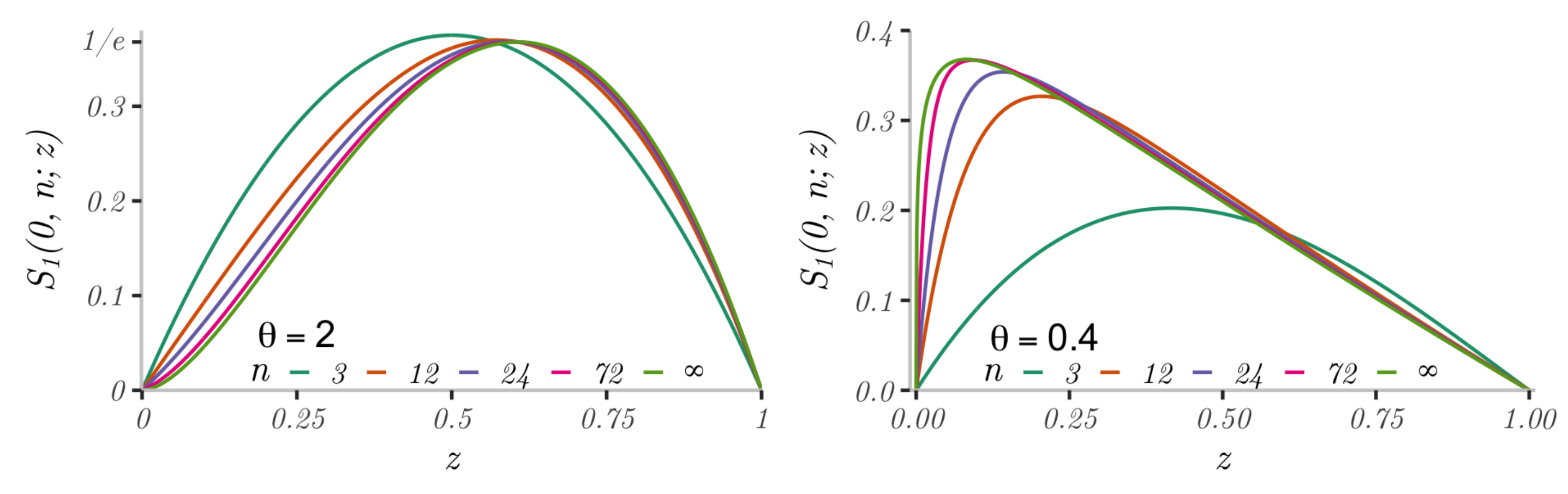

From this, a sufficient condition for the concavity of

is

Notably, (

12) ensures unimodality for arbitrary

and only involves two consecutive success probabilities. The price to pay for the simplicity is that the condition is restrictive, as seen in

Figure 3.

For the profile (

9), straight calculation shows that (

11) is non-positive, hence

is concave, iff

This is only a half range, but it includes two most important for application cases and .
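The shape-preserving property behind Theorem 2 can be checked numerically: a Bernstein polynomial built from a concave coefficient sequence is concave. For the records profile, a short calculation of ours gives $s_{m,n} = (m/n)\sum_{l=m}^{n-1} 1/l$ with second differences $-1/(n(m+1)) < 0$ in $m$, so $s$ is concave. The sketch below (names hypothetical) evaluates the internal Bernstein sum of (10) on a grid and confirms concavity.

```python
from math import comb


def s_records(m, n):
    """s_{m,n} for records (p_l = 1/l, m >= 1): P(exactly one record among trials m+1..n)."""
    return (m / n) * sum(1.0 / l for l in range(m, n))


def bernstein(coeffs, z):
    """Bernstein polynomial sum_i binom(j, i) z^i (1 - z)^(j - i) coeffs[i]."""
    j = len(coeffs) - 1
    return sum(comb(j, i) * z**i * (1 - z)**(j - i) * c for i, c in enumerate(coeffs))


def second_differences(values):
    return [values[i] - 2 * values[i + 1] + values[i + 2] for i in range(len(values) - 2)]


k, j = 1, 30
# coefficient i: exactly one record among the trapped trials k+i+1, ..., k+j
coeffs = [s_records(k + i, k + j) for i in range(j + 1)]
grid = [g / 200 for g in range(201)]
curve = [bernstein(coeffs, z) for z in grid]
z_best = grid[max(range(len(curve)), key=curve.__getitem__)]
print(max(second_differences(coeffs)), max(second_differences(curve)), z_best)
```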

5. Tests for the Monotone Case of Optimal Stopping

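Using (2) and (3), we can cast the winning probabilities with the actions bygone, next and a z-strategy as ratios of power series in $x$, each having the normalisation function of (2) as denominator. We are looking next at some critical points for the trapping game and the optimal stopping problem.

Lemma 3. The equation equating the winning probabilities of bygone and next has at most one root $x = b_k > 0$, for every $k$.

Proof. The coefficients of the power series for the difference of the two winning probabilities (multiplied by the normalisation function) have at most one change of sign, from + to −; hence, Descartes' rule of signs for power series [30] entails that there is at most one positive root. □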
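Lemma 3 rests on counting sign changes in a coefficient sequence. A small helper, together with the bygone-minus-next coefficients for records under the geometric prior, namely $\binom{k+j}{j}\frac{k}{k+j}\bigl(1 - \sum_{m=k}^{k+j-1} 1/m\bigr)$ (our own derivation), illustrates the single sign change. Names are hypothetical.

```python
from math import comb


def sign_changes(coeffs, tol=0.0):
    """Number of sign changes in a sequence, skipping entries with |c| <= tol."""
    signs = [1 if c > 0 else -1 for c in coeffs if abs(c) > tol]
    return sum(1 for a, b in zip(signs, signs[1:]) if a != b)


def bygone_minus_next_coeffs(k, jmax):
    """Coefficients of x^j in (normalisation) * (bygone - next): records, geometric prior."""
    out, tail = [], 0.0
    for j in range(jmax + 1):
        if j > 0:
            tail += 1.0 / (k + j - 1)  # running sum_{m=k}^{k+j-1} 1/m
        out.append(comb(k + j, j) * k / (k + j) * (1.0 - tail))
    return out


print([sign_changes(bygone_minus_next_coeffs(k, 200)) for k in range(1, 6)])
```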

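If the root of Lemma 3 does not exist, we set $b_k = \infty$; otherwise $b_k$ denotes that root. Define the cut-off

$$t_k = \max\left(0,\ 1 - \frac{b_k}{q}\right).$$

This is the earliest time when bygone becomes at least as good as next. Keep in mind that if the sequence $(b_k)$ is monotone, then $(t_k)$ is also monotone, but with the monotonicity direction reversed. The monotone case of optimal stopping holds for every $q$, hence the myopic strategy is optimal, if $b_1 \le b_2 \le \cdots$.

Example 1. In the paradigmatic case $p_k = 1/k$ with the geometric prior $c_n = 1$, the normalisation function is $(1-x)^{-k-1}$ and the power series are explicitly computable: the winning probabilities with bygone and next are $1 - x$ and $-(1-x)\log(1-x)$, respectively. The equation therefore yields identical roots $b_k = 1 - e^{-1}$ and coinciding cut-offs $t_1 = t_2 = \cdots$.

Thus, the myopic strategy stops at the first success trial after a time threshold. See [1,7,17,18,19] for details on this remarkable case.

Lemma 4. The equation obtained by setting to zero the derivative at $z = 0$ of the winning probability of the z-strategy has at most one root $x = a_k$, for every $k$. If the root exists, it compares with the root $b_k$ of Lemma 3 through the inequality established in the proof.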

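Proof. We follow the argument in Lemma 3. The derivative at $z = 0$ is again a ratio of power series in $x$, whose numerator coefficients have at most one change of sign, and then from + to −. Furthermore, the comparison with the root of Lemma 3 follows by comparing the two series and noting that the weights at the positive terms are higher in one of them. □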
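The derivative at $z = 0$ used in Lemma 4 follows from differentiating each Bernstein polynomial: the slope of $\sum_i \binom{j}{i} z^i (1-z)^{j-i} c_i$ at $0$ is $j(c_1 - c_0)$. With $s_{m,n}$ the probability of exactly one success among trials $m+1, \dots, n$, the slope of the z-strategy winning probability at $z=0$ is therefore $\sum_j \mathbb{P}(N-N_t=j\mid N_t=k)\, j\,(s_{k+1,k+j} - s_{k,k+j})$. This restatement is ours; the sketch checks it against a central finite difference for records under the geometric prior, where for $k = 1$ our calculation gives the slope $x(-\log(1-x) - 1)$, vanishing at the same point $1 - e^{-1}$ as in Example 1.

```python
from math import comb, log


def harmonic_table(n):
    """H[0..n] with H[m] = 1 + 1/2 + ... + 1/m."""
    h = [0.0]
    for m in range(1, n + 1):
        h.append(h[-1] + 1.0 / m)
    return h


JMAX = 120
H = harmonic_table(2 * JMAX + 10)


def s_records(m, n):
    """P(exactly one record among trials m+1..n), m >= 1: (m/n)(H_{n-1} - H_{m-1})."""
    return (m / n) * (H[n - 1] - H[m - 1])


def z_win(k, w, z):
    """Bernstein mixture for the z-strategy winning probability (records profile)."""
    return sum(wj * sum(comb(j, i) * z**i * (1 - z)**(j - i) * s_records(k + i, k + j)
                        for i in range(j + 1))
               for j, wj in enumerate(w))


def slope_at_zero(k, w):
    """sum_j w_j * j * (s_{k+1,k+j} - s_{k,k+j})."""
    return sum(wj * j * (s_records(k + 1, k + j) - s_records(k, k + j))
               for j, wj in enumerate(w) if j > 0)


k, x = 1, 0.5
# geometric prior: posterior of the number of future trials is NB(k + 1, x)
w = [comb(k + j, j) * (1 - x)**(k + 1) * x**j for j in range(JMAX + 1)]
h = 1e-5
# z_win is a polynomial in z, so evaluating slightly below 0 is harmless here
central = (z_win(k, w, h) - z_win(k, w, -h)) / (2 * h)
print(slope_at_zero(k, w), central)
```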

If there is no finite root, we set $a_k = \infty$. Let $s_k = \max(0, 1 - a_k/q)$ be the corresponding time. A z-strategy with small enough $z$ outperforms next for $t < s_k$, and Lemma 4 relates $s_k$ to the cut-off $t_k$ of bygone versus next. Thus, $s_k$ is the earliest time when the action at index $k$ cannot be improved by a z-strategy with small enough $z$.

To summarise the above: for $t \ge t_k$ the action bygone is better than next, and for $t < s_k$ a trapping strategy is better than next.

Theorem 3. The optimal stopping problem belongs to the monotone case (for every admissible $q$) if and only if the cut-offs are non-increasing, $t_1 \ge t_2 \ge \cdots$. In that case, the roots of Lemmas 3 and 4 follow an interlacing pattern.

Proof. We argue in probabilistic terms. The bivariate sequence of success epochs and indices is an increasing Markov chain. The monotone case of optimal stopping occurs iff the set of states where bygone outperforms next is closed, which holds iff this set is an upper subset with respect to the partial order in $[0,1] \times \mathbb{N}$. The latter property amounts to the monotonicity condition $t_1 \ge t_2 \ge \cdots$.

By Lemma 3, one part of the interlacing pattern always holds. In the monotone case, if in some state the actions bygone and next are equally good, then trapping cannot improve upon them, by the optimality of the myopic strategy. In analytic terms, this translates into the remaining interlacing inequality. □
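Example 1 can be checked numerically. The sketch below evaluates the bygone-minus-next series for records under the geometric prior, where the posterior of the number of future trials is $\mathrm{NB}(k+1, x)$, and locates its root by bisection for several $k$; all roots agree with $1 - e^{-1}$ to numerical precision. The truncation level, the bracket and the helper names are our own choices.

```python
from math import exp


def nb_weights(r, x, jmax):
    """NB(r, x) probabilities for j = 0..jmax via the ratio of consecutive terms."""
    w = [(1 - x) ** r]
    for j in range(jmax):
        w.append(w[-1] * (r + j) / (j + 1) * x)
    return w


def bygone_minus_next(k, x, jmax=2000):
    """Records, geometric prior: posterior of future trials is NB(k + 1, x)."""
    total, tail = 0.0, 0.0
    for j, w in enumerate(nb_weights(k + 1, x, jmax)):
        if j > 0:
            tail += 1.0 / (k + j - 1)  # sum_{m=k}^{k+j-1} 1/m
        # P(no record) = k/(k+j); P(exactly one record) = k/(k+j) * tail
        total += w * k / (k + j) * (1.0 - tail)
    return total


def bisect_root(f, lo, hi, iters=60):
    """Root of f on [lo, hi], assuming f(lo) > 0 > f(hi)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


roots = [bisect_root(lambda x, k=k: bygone_minus_next(k, x), 0.05, 0.95) for k in range(1, 5)]
print(roots, 1 - exp(-1))
```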

6. The Best-Choice Problem under the Log-Series Prior

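In this section we consider the random records model with the classic profile $p_k = 1/k$, and a pacing process with the logarithmic series prior

$$\pi_n = \frac{q^n}{n\,(-\log(1-q))}, \qquad n \ge 1, \tag{15}$$

(so $c_n = 1/n$ and $C(q) = -\log(1-q)$), where $0 < q < 1$. See [31] for Poisson mixture representations of (15). The function $s$ (the probability of exactly one record among trials $m+1, \dots, n$) is concave, hence by Theorem 2 it is sufficient to consider z-strategies.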

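Let $T_1$ be the time of the first trial.

Lemma 5. Under the logarithmic series prior (15) the pacing process has the following features:

- (i) The time of the first trial has probability density function
$$f_{T_1}(t) = \frac{q}{(1 - q + qt)\,(-\log(1-q))}, \qquad 0 \le t \le 1;$$

- (ii) From the first trial on, $(N_t)$ is a Pólya-Lundberg birth process with transition rates
$$\lambda_k(t) = \frac{kq}{1 - q + qt}, \qquad k \ge 1;$$

- (iii) Given $N_t = k \ge 1$, the posterior distribution of $N - N_t$ is negative binomial $\mathrm{NB}(k, x)$:
$$\mathbb{P}(N - N_t = j \mid N_t = k) = \binom{k+j-1}{j}(1-x)^k x^j, \qquad j \ge 0.$$
In particular, conditionally on $N_t = 1$, the posterior distribution is geometric with 'failure' probability $x$.

Proof. Assertion (i) follows from $\mathbb{P}(T_1 > t) = \mathbb{P}(N_t = 0) = \log(1 - q(1-t))/\log(1-q)$, and (iii) from the identity $\frac{1}{k+j}\binom{k+j}{j} = \frac{1}{k}\binom{k+j-1}{j}$, which underlies the formula $\frac{1}{k}(1-x)^{-k}$ for the normalisation function in terms of $x$. □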
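A numerical sanity check of Lemma 5 (i) and (iii), as a sketch: the first-trial density obtained by differentiating $\mathbb{P}(T_1 > t) = \log(1-q(1-t))/\log(1-q)$ should integrate to one, and the posterior (2) with $c_n = 1/n$ should reproduce $\mathrm{NB}(k, x)$, geometric for $k = 1$. Simpson's rule, the truncation level and the helper names are our own choices.

```python
from math import comb, log


def first_trial_density(t, q):
    """Density of T_1 under the log-series prior (derivative of 1 - P(T_1 > t))."""
    return q / ((1 - q + q * t) * -log(1 - q))


def simpson(f, a, b, n=2000):
    """Composite Simpson rule with an even number n of subintervals."""
    h = (b - a) / n
    total = f(a) + f(b)
    total += 4 * sum(f(a + (2 * i - 1) * h) for i in range(1, n // 2 + 1))
    total += 2 * sum(f(a + 2 * i * h) for i in range(1, n // 2))
    return total * h / 3


def logseries_posterior(k, x, jmax):
    """(2) with c_n = 1/n and k >= 1, truncated at jmax and renormalised."""
    w = [comb(k + j, j) * x**j / (k + j) for j in range(jmax + 1)]
    total = sum(w)
    return [v / total for v in w]


q = 0.9
mass = simpson(lambda t: first_trial_density(t, q), 0.0, 1.0)
x = q * (1 - 0.4)  # scale variable at t = 0.4
geo = logseries_posterior(1, x, 300)
nb3 = logseries_posterior(3, x, 300)
print(mass, geo[:3])
```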

In view of part (ii), we will use $\mathrm{NB}(0, q)$ to denote the log-series prior (15).

6.1. Hypergeometrics

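The power series of interest can be expressed via the Gaussian hypergeometric function

$$F(a,b;c;x) = \sum_{n \ge 0}\frac{(a)_n (b)_n}{(c)_n}\,\frac{x^n}{n!}.$$

Recall the differentiation formula

$$\frac{d}{dx}F(a,b;c;x) = \frac{ab}{c}\,F(a+1,b+1;c+1;x),$$

the parameter transformation formula

$$F(a,b;c;x) = (1-x)^{c-a-b}\,F(c-a,c-b;c;x),$$

and Euler's integral representation for $c > b > 0$:

$$F(a,b;c;x) = \frac{\Gamma(c)}{\Gamma(b)\Gamma(c-b)}\int_0^1 u^{b-1}(1-u)^{c-b-1}(1-xu)^{-a}\,du.$$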
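The hypergeometric facts recalled above can be spot-checked with a pure-Python series for $F(a,b;c;x)$, so no special-function library is assumed. The sketch compares Euler's integral (midpoint rule, with parameters chosen so that the integrand is smooth), the differentiation formula (central difference), Euler's transformation, and the classical value $F(1,1;2;x) = -\log(1-x)/x$; names and parameter values are illustrative.

```python
from math import gamma, log


def hyp2f1(a, b, c, x, terms=500):
    """Gauss hypergeometric series sum_n (a)_n (b)_n / ((c)_n n!) x^n for |x| < 1."""
    total, term = 0.0, 1.0
    for n in range(terms):
        total += term
        term *= (a + n) * (b + n) / ((c + n) * (n + 1)) * x
    return total


def euler_integral(a, b, c, x, n=4000):
    """Euler's integral for c > b > 0 by the midpoint rule (smooth when b >= 1 and c - b >= 1)."""
    h = 1.0 / n
    acc = sum(((i + 0.5) * h) ** (b - 1) * (1 - (i + 0.5) * h) ** (c - b - 1)
              * (1 - x * (i + 0.5) * h) ** (-a) for i in range(n))
    return gamma(c) / (gamma(b) * gamma(c - b)) * acc * h


a, b, c, x = 2.0, 2.0, 4.0, 0.3
series = hyp2f1(a, b, c, x)
integral = euler_integral(a, b, c, x)
eps = 1e-5
numeric_derivative = (hyp2f1(a, b, c, x + eps) - hyp2f1(a, b, c, x - eps)) / (2 * eps)
formula_derivative = a * b / c * hyp2f1(a + 1, b + 1, c + 1, x)
# Euler's transformation with parameters where c - a - b != 0
lhs = hyp2f1(1.0, 2.0, 3.5, 0.4)
rhs = (1 - 0.4) ** (3.5 - 1.0 - 2.0) * hyp2f1(3.5 - 1.0, 3.5 - 2.0, 3.5, 0.4)
print(series, integral, numeric_derivative, formula_derivative, lhs, rhs)
```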

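The probability generating function for the number of successes following state $(t,k)$, for $k \ge 1$, is given by a hypergeometric function:

$$(1-x)^k\,F(k+u, k; k+1; x).$$

Expanding at $u = 0$, we identify two basic power series, the winning probabilities with bygone and next, as

$$(1-x)^k F(k,k;k+1;x) \qquad\text{and}\qquad (1-x)^k F'(k,k;k+1;x),$$

where, as before, $x = q(1-t)$, and $F'$ is the derivative in the first parameter. The differentiation formula implies backward recursions in $k$ for these series.

The normalisation function for probabilities (14) is $\frac{1}{k}(1-x)^{-k}$ for $k \ge 1$, and $-\log(1-x)$ for $k = 0$. Applying the transformation formula yields $F(k,k;k+1;x) = (1-x)^{1-k}F(1,1;k+1;x)$; hence, we may write the winning probability with bygone as the series

$$(1-x)\sum_{n \ge 0}\frac{x^n}{\binom{k+n}{n}}.$$

It is readily seen that, as $k$ increases, this function decreases to $1 - x$. This result was already observed in [18] using a probabilistic argument. The convergence to $1 - x$ relates to the fact that for large $k$, the point process of record epochs approaches a Poisson process.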

For z-strategies, we derive an integral formula. Consider first the case $k \ge 1$. The number of record epochs following $(t,k)$ and falling in the final interval $(t + z(1-t), 1]$ has a probability generating function of hypergeometric type. Differentiating at $u = 0$ and rewriting the result yields an integral formula for the winning probability of the z-strategy. For $k = 0$, a similar calculation with the log-series weights $\mathrm{NB}(0, x)$ gives the analogous formula.

6.2. The Myopic Strategy

Equating the winning probabilities of bygone and next for $k = 1$ gives a positive root $b_1$. On the other hand, solving the equation for the critical point of z-strategies yields a smaller value; hence, the interlacing condition of Theorem 3 fails. Translating in terms of the best-choice problem, this means that the myopic strategy stops at the first trial whenever it occurs after the cut-off $t_1$, but a z-strategy is more beneficial over a bigger range of times. Therefore, at least for some values of $q$, it is not optimal to stop at the first trial if it arrives shortly after $t_1$, and the myopic strategy can be beaten.
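For $k = 1$ the root $b_1$ has a closed form. With the geometric posterior weights $(1-x)x^j$ of Lemma 5 (iii) and the record probabilities $1/(1+j)$ (no further record) and $H_j/(1+j)$ (exactly one further record), summing the series gives winning probabilities $(1-x)L/x$ and $(1-x)L^2/(2x)$ with $L = -\log(1-x)$, so by our own calculation $b_1 = 1 - e^{-2}$. The sketch confirms this by bisection on the truncated series; names are hypothetical.

```python
from math import exp, log


def bygone_minus_next_k1(x, jmax=4000):
    """Log-series prior, records, k = 1: posterior weights (1 - x) x^j."""
    total, w, harmonic = 0.0, 1.0 - x, 0.0
    for j in range(jmax + 1):
        if j > 0:
            harmonic += 1.0 / j  # H_j
        # no further record: 1/(1+j); exactly one further record: H_j/(1+j)
        total += w * (1.0 - harmonic) / (1 + j)
        w *= x
    return total


def bisect_root(f, lo, hi, iters=60):
    """Root of f on [lo, hi], assuming f(lo) > 0 > f(hi)."""
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if f(mid) > 0:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


b1 = bisect_root(bygone_minus_next_k1, 0.05, 0.97)
print(b1, 1 - exp(-2))
```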

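The root $b_2$ is found by equating the corresponding $k = 2$ expressions for the two winning probabilities. Formulas become more complicated for larger $k$.

We see that $b_1 > b_2$, which suggests monotonicity of the whole sequence. To show this, pass to the quotient and re-define the root $b_k$ as the unique solution on $(0,1)$ to

$$\frac{F'(k,k;k+1;x)}{F(k,k;k+1;x)} = 1, \tag{18}$$

where $F'$ acts in the first parameter. As $x$ increases from 0 to 1, this logarithmic derivative runs from 0 to $\infty$.

Lemma 6. The logarithmic derivative (18) increases in $k$; hence, the sequence of roots is strictly decreasing.

Proof. Euler's integral specialises as

$$F(a,k;k+1;x) = k\int_0^1 u^{k-1}(1-xu)^{-a}\,du.$$

Expanding in the parameter $a$ at $a = k$ gives the integral representations

$$F(k,k;k+1;x) = k\int_0^1 u^{k-1}(1-xu)^{-k}\,du, \qquad F'(k,k;k+1;x) = k\int_0^1 u^{k-1}(1-xu)^{-k}\bigl(-\log(1-xu)\bigr)\,du.$$

By the same kind of argument, a similar formula is obtained for the next index. Splitting the integration domain and using symmetries of the integrand yields the required comparison between consecutive values of $k$, which implies the asserted monotonicity. □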

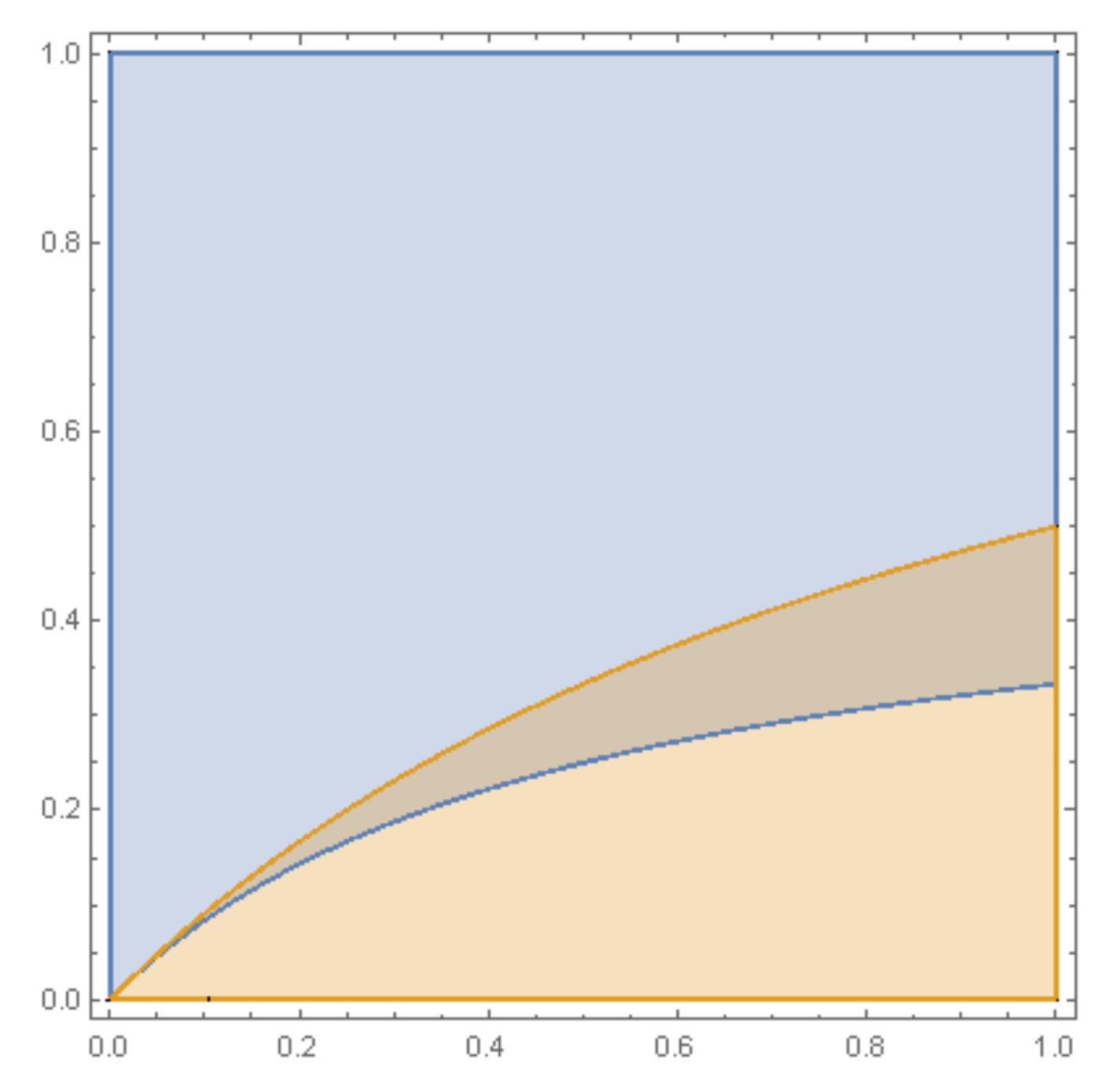

Figure 4 shows some shapes of

and

for

.

The log-series distribution weights satisfy

. Comparison with the geometric distribution, as in [

19], in combination with the lemma give

as

. The same limit has been shown for analogous roots in the best-choice problem with the negative binomial prior

for integer

; however, the monotonicity direction in that setting is different [

7].

To summarise the findings of this section, we have:

Theorem 4. The monotone case of optimal stopping does not hold. The myopic strategy is not optimal and has the following features:

- (i) for $q$ exceeding the first root $b_1$, the cut-offs of the myopic strategy satisfy $0 < t_1 < t_2 < \cdots$;

- (ii) for times $t$ beyond the limit of the cut-offs, bygone is the optimal action for every $k$;

- (iii) for times as in (ii), the myopic strategy coincides with the optimal stopping strategy (on the corresponding event).

6.3. Optimality and Bounds

For a state $(t,k)$, define the continuation value to be the maximum probability of the best choice achievable by stopping strategies starting in that state. By the optimality principle, the overall optimal stopping strategy, starting from $(0,0)$, stops at the first record at which the winning probability with bygone is at least the continuation value.

Given $N_t = k$, let $T$ be the next trial epoch (or 1 in the event that there are no further trials). Similar to the argument in Lemma 5, we find the density of $T$. By the $(k+1)$th trial, the optimal stopping strategy stops if this trial is a record and bygone is more beneficial than the optimal continuation; hence, integrating out $T$, we obtain the integral equation (19) for the continuation value. For $k \ge 1$, this has an equivalent differential form.

For the special instance $k = 0$, integrating out the variable $T$ gives an analogous equation or, in differential form with initial conditions, Equation (20).

By Corollary 4, the continuation value coincides with the winning probability of next in a segment of the range; therefore, (21) holds there.

As a check, for $k \ge 1$, pass to the variable $x$. With this change of variable, (19) simplifies, and for $x$ in the range where the continuation value equals the winning probability with next, it becomes the recursion (16).

Outside the range covered by (21), Equations (19) and (20) should be complemented by a boundary condition.

Figure 5 shows stop, continuation and z-strategy curves for

and 3. The numerical simulation suggests that the equation

has a unique solution

and that the critical points increase with

k, so the optimal stopping strategy is similar to the myopic. These critical points have lower bounds

defined as the solution to

and upper bounds

defined as the critical points where

is the same as the

z-strategy.

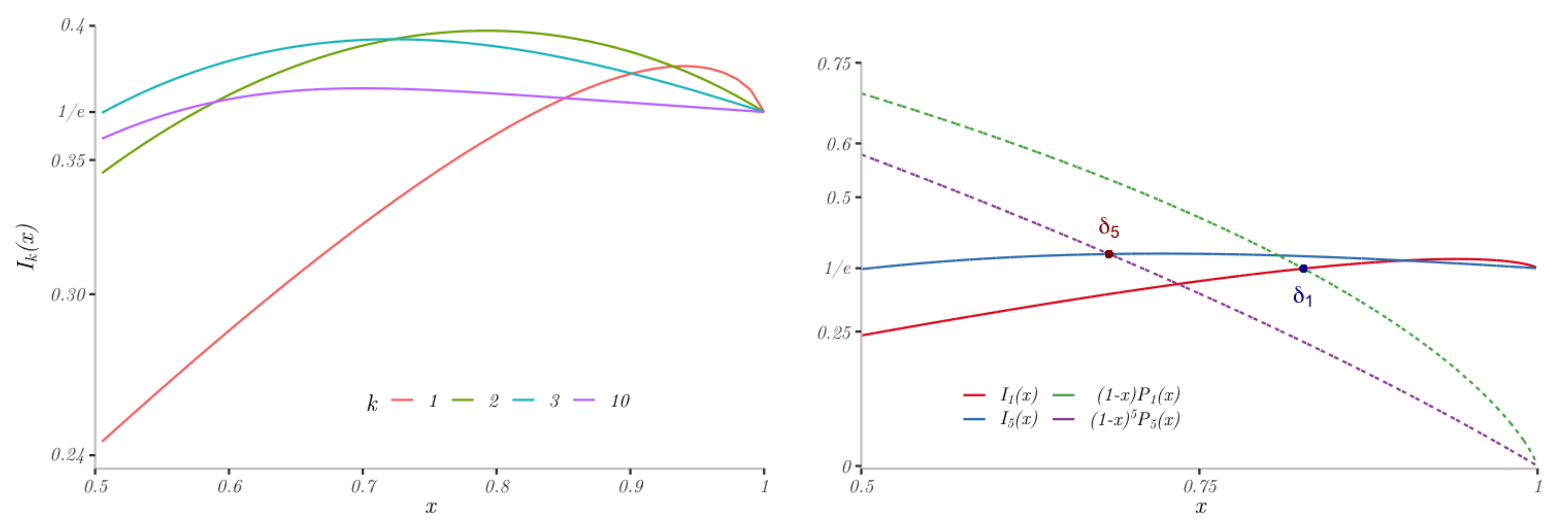

To approximate the continuation value in the range

, we simulated some easier computable bounds

The upper

information bound

(see

Figure 6) is the winning probability of an informed gambler who in state

(with

) knows the total number of trials

, as in

Section 3. Two lower bounds stem from the comparison with the myopic and

z-strategies. The points

computed for

all satisfy

, and so the first relation turns equality for

. Therefore, the critical points satisfy

The results of computation are presented in

Figure 5 and

Table 1,

Table 2,

Table 3 and

Table 4. The data show excellent performance of the strategy that by the first trial chooses between stopping and proceeding with a

z-strategy.