Exploring Conditions for Enhancing Critical Thinking in Networked Learning: Findings from a Secondary School Learning Analytics Environment

Abstract

1. Introduction

2. Literature Review

2.1. Networked Learning Environments and Critical Thinking

2.2. Quantitative Networked Learning Metrics

3. Study Context

4. Methods and Materials

4.1. Research Design and Procedure

4.2. Participants

4.3. Measures

4.3.1. Social Network Metrics

- Out-degree centrality: As students were encouraged to post replies to their classmates’ posts, out-degree centrality was computed to measure how many participants a student had outgoing ties with (i.e., how many people they sent replies to).

- Out k-step reach centrality: To measure how closely connected a student was to other participants in the class, out k-step reach centrality was used. Out k-step reach centrality refers to “the proportion of actors that a given actor can reach in k steps or less” [59] (p. 178). It was used instead of closeness centrality as it is more suited to directed networks.

- Arc reciprocity: This was used as a network- or class-level metric to measure the extent to which replies from a student to another participant were matched by replies from that participant to the student. By default, UCINET dichotomizes the data when generating these metrics, so the analysis focused on whether a tie (interaction) existed between two participants rather than on how many ties there were between them.
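
The three metrics were computed in UCINET. The Python sketch below is not UCINET's implementation; it only illustrates the definitions above on a dichotomized reply network. It assumes that replies are logged as hypothetical (sender, receiver) pairs, that each class is analysed as its own network, that centralities are normalized by n − 1, and that arc reciprocity is the share of arcs whose reverse arc is also present. All function names are illustrative.

```python
from collections import deque

def build_network(replies, participants):
    """Dichotomized directed network: sender -> set of distinct reply targets."""
    adj = {p: set() for p in participants}
    for sender, receiver in replies:
        if sender == receiver:
            continue  # ignore self-replies
        # Using a set keeps only whether a tie exists, not how many replies were sent.
        adj.setdefault(sender, set()).add(receiver)
        adj.setdefault(receiver, set())
    return adj

def out_degree_centrality(adj, node):
    """Normalized out-degree: distinct reply targets divided by (n - 1)."""
    return len(adj[node]) / (len(adj) - 1)

def out_k_step_reach(adj, node, k):
    """Proportion of other actors reachable from node in k steps or fewer (BFS)."""
    dist = {node: 0}
    queue = deque([node])
    while queue:
        u = queue.popleft()
        if dist[u] == k:
            continue  # do not expand beyond k steps
        for v in adj[u]:
            if v not in dist:
                dist[v] = dist[u] + 1
                queue.append(v)
    return (len(dist) - 1) / (len(adj) - 1)

def arc_reciprocity(adj):
    """Share of arcs i -> j for which the reverse arc j -> i also exists."""
    arcs = [(i, j) for i in adj for j in adj[i]]
    if not arcs:
        return 0.0
    return sum(1 for i, j in arcs if i in adj[j]) / len(arcs)

replies = [("A", "B"), ("A", "B"), ("B", "A"), ("B", "C"), ("C", "D")]
net = build_network(replies, ["A", "B", "C", "D"])
print(out_degree_centrality(net, "B"))  # 0.6666666666666666
print(out_k_step_reach(net, "A", 2))    # 0.6666666666666666
print(out_k_step_reach(net, "A", 3))    # 1.0
print(arc_reciprocity(net))             # 0.5
```

The duplicate A → B reply counts once, mirroring the dichotomization described above; the 2-step reach of A excludes D because D is three steps away.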

4.3.2. Engagement in Collaborative or Networked Learning

- I try my best to contribute to group discussions in EL;

- I share my ideas during group work in EL;

- I try my best to get involved in discussions during EL;

- I try my best to contribute to group work in EL;

- I enjoy discussions with my classmates in EL.
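
The text here does not show how the five items were combined. A minimal sketch, assuming each item was rated on the same numeric Likert-type scale and that the engagement score is the mean of the five ratings (consistent with, but not confirmed by, the pre-test mean of 5.30 in the descriptive statistics). The item keys are hypothetical labels for the statements above.

```python
from statistics import mean

# Hypothetical keys for the five engagement items, in the order listed above.
ENGAGEMENT_ITEMS = (
    "contribute_to_discussions",
    "share_ideas",
    "get_involved",
    "contribute_to_group_work",
    "enjoy_discussions",
)

def engagement_score(responses: dict) -> float:
    """Assumed composite: the mean of one student's ratings on the five items."""
    missing = [item for item in ENGAGEMENT_ITEMS if item not in responses]
    if missing:
        raise ValueError(f"missing ratings for: {', '.join(missing)}")
    return mean(responses[item] for item in ENGAGEMENT_ITEMS)

student = dict(zip(ENGAGEMENT_ITEMS, [6, 5, 5, 6, 4]))
print(engagement_score(student))  # 5.2
```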

4.3.3. Critical Reading Score

4.4. Data Analysis

5. Results

5.1. Multiple Regression Analysis

5.2. Further Analysis

Out k-Step Reach

6. Discussion

7. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Ravenscroft, A. Dialogue and connectivism: A new approach to understanding and promoting dialogue-rich networked learning. Int. Rev. Res. Open. Dis. 2011, 12, 139–160.

- Verbert, K.; Duval, E.; Klerkx, J.; Govaerts, S.; Santos, J.L. Learning analytics dashboard applications. Am. Behav. Sci. 2013, 57, 1500–1509.

- Cukurova, M.; Luckin, R.; Millán, E.; Mavrikis, M. The NISPI framework: Analysing collaborative problem-solving from students’ physical interactions. Comput. Educ. 2018, 116, 93–109.

- Schellens, T.; Van Keer, H.; De Wever, B.; Valcke, M. Tagging thinking types in asynchronous discussion groups: Effects on critical thinking. Interact. Learn. Envir. 2009, 17, 77–94.

- Smidt, H.; Thornton, M.; Abhari, K. The future of social learning: A novel approach to connectivism. In Proceedings of the 50th Hawaii International Conference on System Sciences, Big Island, HI, USA, 3–7 January 2017; HICSS Conference Office: Honolulu, HI, USA, 2017.

- Williams, R.; Karousou, R.; Mackness, J. Emergent learning and learning ecologies in Web 2.0. Int. Rev. Res. Open. Dis. 2011, 12, 39–59.

- Bell, F. Network theories for technology-enabled learning and social change: Connectivism and actor network theory. In Proceedings of the 7th International Conference on Networked Learning 2010, Aalborg, Denmark, 3–4 May 2010; Lancaster University: Lancaster, UK, 2010.

- Redecker, C.; Ala-Mutka, K.; Punie, Y. Learning 2.0-The Impact of Social Media on Learning in Europe. Policy Brief. JRC Scientific and Technical Report. EUR JRC56958 EN. 2010. Available online: http://ftp.jrc.es/EURdoc/JRC56958.pdf (accessed on 6 September 2019).

- Hossain, M.M.; Wiest, L.R. Collaborative middle school geometry through blogs and other web 2.0 technologies. J. Comput. Math. Sci. Teach. 2013, 32, 337–352.

- Jones, C.; Ryberg, T.; de Laat, M. Networked Learning. In Encyclopedia of Educational Philosophy and Theory; Peters, M., Ed.; Springer Singapore: Singapore, 2017; pp. 1–6.

- De Laat, M.; Lally, V.; Lipponen, L.; Simons, R.-J. Investigating patterns of interaction in networked learning and computer-supported collaborative learning: A role for Social Network Analysis. Int. J. Comp-Supp. Coll. 2007, 2, 87–103.

- Carolan, B.V. Social Network Analysis and Education: Theory, Methods & Applications; Sage Publications: Thousand Oaks, CA, USA, 2013.

- Jonassen, D.H. Thinking technology: Toward a constructivist design model. Educ. Technol. 1994, 34, 34–37.

- Kent, C.; Rechavi, A.; Rafaeli, S. Networked Learning Analytics: A Theoretically Informed Methodology for Analytics of Collaborative Learning. In Learning In a Networked Society: Spontaneous and Designed Technology Enhanced Learning Communities; Kali, Y., Baram-Tsabari, A., Schejter, A.M., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 145–175.

- Dede, C. The evolution of distance education: Emerging technologies and distributed learning. Am. J. Dis. Educ. 1996, 10, 4–36.

- Wenger, E. Communities of practice: Learning as a social system. Syst. Thinker 1998, 9, 2–3.

- Richardson, J.C.; Maeda, Y.; Lv, J.; Caskurlu, S. Social presence in relation to students’ satisfaction and learning in the online environment: A meta-analysis. Comput. Hum. Behav. 2017, 71, 402–417.

- Shum, S.B.; Ferguson, R. Social learning analytics. J. Educ. Tech. Soc. 2012, 15, 3–26.

- The New London Group. A pedagogy of multiliteracies: Designing social futures. Harvard. Educ. Rev. 1996, 66, 60–92.

- Burnett, C.; Davies, J.; Merchant, G.; Rowsell, J. Changing Contexts for 21st-Century Literacies. In New Literacies around the Globe; Routledge: New York, NY, USA, 2014; pp. 21–33.

- Cope, B.; Kalantzis, M. The things you do to know: An introduction to the pedagogy of multiliteracies. In A Pedagogy of Multiliteracies; Cope, B., Kalantzis, M., Eds.; Palgrave Macmillan: London, UK, 2015; pp. 1–36.

- Bernard, R.M.; Abrami, P.C.; Borokhovski, E.; Wade, C.A.; Tamim, R.M.; Surkes, M.A.; Bethel, E.C. A meta-analysis of three types of interaction treatments in distance education. Rev. Educ. Res. 2009, 79, 1243–1289.

- Jaggars, S.S.; Xu, D. How do online course design features influence student performance? Comput. Educ. 2016, 95, 270–284.

- Akyol, Z.; Garrison, D.R. Understanding cognitive presence in an online and blended community of inquiry: Assessing outcomes and processes for deep approaches to learning. Brit. J. Educ. Technol. 2011, 42, 233–250.

- Gold, S. A constructivist approach to online training for online teachers. J. Asynchron. Learn. Netw. 2001, 5, 35–57.

- Klisc, C.; McGill, T.; Hobbs, V. Use of a post-asynchronous online discussion assessment to enhance student critical thinking. Australas. J. Educ. Tec. 2017, 33, 63–76.

- Garrison, D.R.; Akyol, Z. Toward the development of a metacognition construct for communities of inquiry. Internet. High. Educ. 2015, 24, 66–71.

- Thompson, C. Critical thinking across the curriculum: Process over output. Int. J. Hum. Soc. Sci. 2011, 1, 1–7.

- Johnson, R.D.; Hornik, S.; Salas, E. An empirical examination of factors contributing to the creation of successful e-learning environments. Int. J. Hum.-Comput. St. 2008, 66, 356–369.

- Aviv, R.; Erlich, Z.; Ravid, G.; Geva, A. Network analysis of knowledge construction in asynchronous learning networks. J. Asynchron. Learn. Netw. 2003, 7, 1–23.

- Wise, A.; Zhao, Y.; Hausknecht, S. Learning analytics for online discussions: Embedded and extracted approaches. J. Learn. Anal. 2014, 1, 48–71.

- Arnold, K.E.; Pistilli, M.D. Course signals at Purdue: Using learning analytics to increase student success. In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, BC, Canada, 29 April–2 May 2012; ACM: New York, NY, USA, 2012; pp. 267–270.

- Dawson, S. ‘Seeing’ the learning community: An exploration of the development of a resource for monitoring online student networking. Brit. J. Educ. Technol. 2010, 41, 736–752.

- Cela, K.L.; Sicilia, M.Á.; Sánchez, S. Social network analysis in e-learning environments: A preliminary systematic review. Educ. Psychol. Rev. 2015, 27, 219–246.

- Wasserman, S.; Faust, K. Social Network Analysis: Methods and Applications; Cambridge University Press: Cambridge, UK, 1994; Volume 8.

- Siemens, G.; Long, P. Penetrating the fog: Analytics in learning and education. Educ. Rev. 2011, 46, 30.

- Daradoumis, T.; Martínez-Monés, A.; Xhafa, F. An integrated approach for analysing and assessing the performance of virtual learning groups. In Proceedings of the International Conference on Collaboration and Technology Workshop on Groupware (CRIWG 2004), San Carlos, Costa Rica, 5–9 September 2004; Springer: Berlin/Heidelberg, Germany, 2004; pp. 289–304.

- Rakic, S.; Softic, S.; Vilkas, M.; Lalic, B.; Marjanovic, U. Key Indicators for Student Performance at the E-Learning Platform: An SNA Approach. In Proceedings of the 2018 16th International Conference on Emerging eLearning Technologies and Applications (ICETA), Stary Smokovec, Slovakia, 15–16 November 2018; IEEE: New York, NY, USA, 2018; pp. 463–468.

- Kellogg, S.; Booth, S.; Oliver, K. A social network perspective on peer supported learning in MOOCs for educators. Int. Rev. Res. Open. Dis. 2014, 15, 263–289.

- Paredes, W.C.; Chung, K.S.K. Modelling learning & performance: A social networks perspective. In Proceedings of the 2nd International Conference on Learning Analytics and Knowledge, Vancouver, BC, Canada, 29 April–2 May 2012; ACM: New York, NY, USA, 2012; pp. 34–42.

- Sousa-Vieira, M.; López-Ardao, J.; Fernández-Veiga, M. The Network Structure of Interactions in Online Social Learning Environments. In Proceedings of the 9th International Conference on Computer Supported Education (CSEDU 2017), Porto, Portugal, 21–23 April 2017; Springer: Cham, Switzerland, 2018; pp. 407–431.

- Martínez, A.; Dimitriadis, Y.; Rubia, B.; Gómez, E.; De la Fuente, P. Combining qualitative evaluation and social network analysis for the study of classroom social interactions. Comput. Educ. 2003, 41, 353–368.

- Skrypnyk, O.; Joksimović, S.; Kovanović, V.; Gašević, D.; Dawson, S. Roles of course facilitators, learners, and technology in the flow of information of a cMOOC. Int. Rev. Res. Open. Dis. 2015, 16, 188–217.

- Laghos, A.; Zaphiris, P. Sociology of student-centred e-learning communities: A network analysis. In Proceedings of the IADIS International Conference on e-Society, Dublin, Ireland, 13–16 July 2006; IADIS Press: Dublin, Ireland, 2006.

- Beck, R.J.; Fitzgerald, W.J.; Pauksztat, B. Individual behaviors and social structure in the development of communication networks of self-organizing online discussion groups. In Designing for Change in Networked Learning Environments; Springer: Dordrecht, The Netherlands, 2003; pp. 313–322.

- Cho, H.; Stefanone, M.; Gay, G. Social information sharing in a CSCL community. In Proceedings of the Conference on Computer Support for Collaborative Learning: Foundations for a CSCL Community, Boulder, CO, USA, 7–11 January 2002; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 2002; pp. 43–50.

- Sousa-Vieira, M.E.; López-Ardao, J.C.; Fernández-Veiga, M.; Ferreira-Pires, O.; Rodríguez-Pérez, M.; Rodríguez-Rubio, R.F. Prediction of learning success/failure via pace of events in a social learning network platform. Comput. Appl. Eng. Educ. 2018, 26, 2047–2057.

- Saqr, M.; Fors, U.; Tedre, M. How the study of online collaborative learning can guide teachers and predict students’ performance in a medical course. BMC Med. Educ. 2018, 18, 24.

- Saqr, M.; Alamro, A. The role of social network analysis as a learning analytics tool in online problem based learning. BMC Med. Educ. 2019, 19, 160.

- Alexander, R. Essays on Pedagogy; Routledge: New York, NY, USA, 2008.

- Black, P.; Wiliam, D. Assessment and classroom learning. Assess. Educ. Prin. Policy Pract. 1998, 5, 7–74.

- Stahl, G.; Koschmann, T.D.; Suthers, D.D. Computer-supported Collaborative Learning. In Cambridge Handbook of the Learning Sciences; Cambridge University Press: Cambridge, UK, 2006; pp. 409–426.

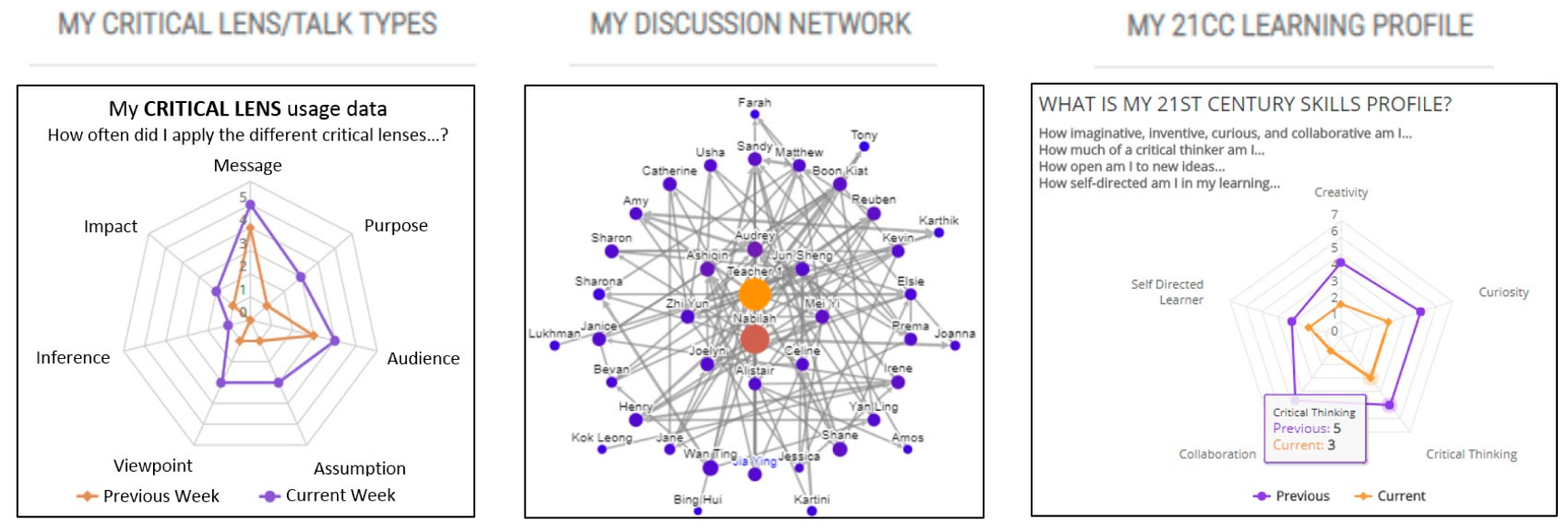

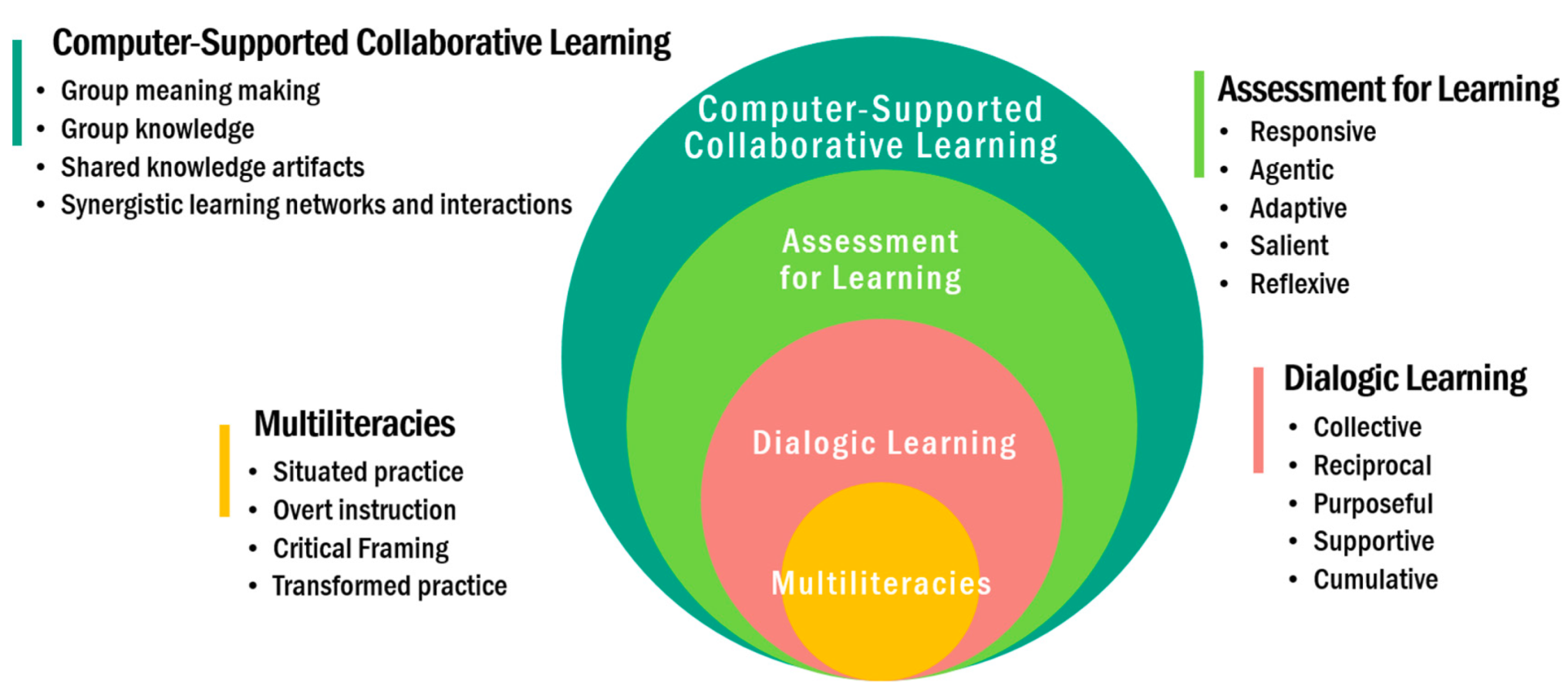

- Tan, J.P.-L.; Koh, E.; Jonathan, C.; Yang, S. Learner dashboards a double-edged sword? Students’ sense-making of a collaborative critical reading and learning analytics environment for fostering 21st-century literacies. J. Learn. Anal. 2017, 4, 117–140.

- Tan, J.P.-L.; McWilliam, E. From literacy to multiliteracies: Diverse learners and pedagogical practice. Pedag. Int. J. 2009, 4, 213–225.

- Paul, R.; Elder, L. Critical Thinking: Tools for Taking Charge of Your Learning and Your Life; Prentice Hall: Upper Saddle River, NJ, USA, 2001.

- Tan, J.P.-L.; Caleon, I.S.; Jonathan, C.R.; Koh, E. A Dialogic Framework for Assessing Collective Creativity in Computer-Supported Collaborative Problem-Solving Tasks. Res. Pract. Tech. Enhanc. Learn. 2014, 9, 411–437.

- Jonathan, C.; Tan, J.P.-L.; Koh, E.; Caleon, I.S.; Tay, S.H. Enhancing students’ critical reading fluency, engagement and self-efficacy using self-referenced learning analytics dashboard visualizations. In Proceedings of the 25th International Conference on Computers in Education, Christchurch, New Zealand, 4–8 December 2017; Asia-Pacific Society for Computers in Education: Jhongli, Taiwan, 2017; pp. 457–462.

- Borgatti, S.; Everett, M.; Freeman, L. Ucinet 6.0 Version 1.00: Software for Social Network Analysis, Version for Windows; Analytic Technologies: Natick, MA, USA, 1999. Available online: http://www.analytictech.com/ (accessed on 6 February 2011).

- Borgatti, S.P.; Everett, M.G.; Johnson, J.C. Analyzing Social Networks; Sage Publications Ltd: London, UK, 2013.

- Tan, J.P.-L.; Nie, Y. The role of authentic tasks in promoting twenty-first century learning dispositions. In Authentic Problem Solving and Learning in the 21st Century; Springer: Singapore, 2015; pp. 19–39.

- Cohen, P.; West, S.G.; Aiken, L.S. Applied Multiple Regression/Correlation Analysis for the Behavioral Sciences; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 2014.

- Menard, S. Applied Logistic Regression Analysis; Sage Publications: Thousand Oaks, CA, USA, 2002; Volume 106.

- Cohen, J. Statistical Power Analysis for the Behavioral Sciences, 2nd ed.; Cohen, J., Ed.; Lawrence Erlbaum Associates: Hillsdale, NJ, USA, 1988.

- Zohar, A.; Dori, Y.J. Higher order thinking skills and low-achieving students: Are they mutually exclusive? J. Learn. Sci. 2003, 12, 145–181.

- Prinsen, F.; Volman, M.L.; Terwel, J. The influence of learner characteristics on degree and type of participation in a CSCL environment. Brit. J. Educ. Technol. 2007, 38, 1037–1055.

- Azevedo, R. Using hypermedia to learn about complex systems: A self-regulation model. In Proceedings of the 10th International Conference on Artificial Intelligence in Education, San Antonio, TX, USA, 19–23 May 2001; IOS Press: Amsterdam, The Netherlands, 2001.

- Rahikainen, M.; Lallimo, J.; Hakkarainen, K. Progressive inquiry in CSILE environment: Teacher guidance and students’ engagement. In Proceedings of the 1st European Conference on Computer-Supported Collaborative Learning (Euro-CSCL 2001), Maastricht, The Netherlands, 22–24 March 2001; Maastricht McLuhan Institute: Maastricht, The Netherlands, 2001; pp. 520–528.

- Philip, D. Social network analysis to examine interaction patterns in knowledge building communities. Can. J. Learn. Technol. 2010, 36, 1–20.

- Hewitt, J.; Teplovs, C. An analysis of growth patterns in computer conferencing threads. In Proceedings of the 1999 Conference on Computer Support for Collaborative Learning, Palo Alto, CA, USA, 12–15 December 1999; Lawrence Erlbaum Associates: Mahwah, NJ, USA, 1999; pp. 232–241.

- Lipponen, L.; Rahikainen, M.; Hakkarainen, K.; Palonen, T. Effective participation and discourse through a computer network: Investigating elementary students’ computer supported interaction. J. Educ. Comput. Res. 2002, 27, 355–384.

- Hara, N.; Bonk, C.J.; Angeli, C. Content analysis of online discussion in an applied educational psychology course. Instr. Sci. 2000, 28, 115–152.

- Goggins, S.; Xing, W. Building models explaining student participation behavior in asynchronous online discussion. Comput. Educ. 2016, 94, 241–251.

- Heo, H.; Lim, K.Y.; Kim, Y. Exploratory study on the patterns of online interaction and knowledge co-construction in project-based learning. Comput. Educ. 2010, 55, 1383–1392.

- Martinez-Maldonado, R.; Clayphan, A.; Yacef, K.; Kay, J. MTFeedback: Providing notifications to enhance teacher awareness of small group work in the classroom. IEEE T. Learn. Technol. 2014, 8, 187–200.

| Critical Lenses | Critical Talk Types |
|---|---|
| Message: What is the text/author telling the reader? | Ideate: I think that… |
| Purpose: What is the objective of the text/author? | Justify: I think so because… |
| Audience: Who are the target readers? | Validate: I agree… |
| Assumption: What presuppositions does the author make? | Challenge: I disagree… |
| Viewpoint: Whose perspective is the text written from? | Clarify: I need to ask… |
| Inference: What conclusions did I draw from the text and why? | |
| Impact: How effective are the language/visuals used? | |

| Class | n | % Males | % Females | EL Teacher |
|---|---|---|---|---|
| Class A | 40 | 67.5% | 32.5% | Teacher 1 |
| Class B | 40 | 42.5% | 57.5% | Teacher 2 |
| Class C | 23 | 34.8% | 65.2% | Teacher 3 |
| Class D | 39 | 30.8% | 69.2% | Teacher 1 |
| Class E | 39 | 51.3% | 48.7% | Teacher 4 |
| Class F | 40 | 42.5% | 57.5% | Teacher 5 |
| Class G | 42 | 52.4% | 47.6% | Teacher 2 |
| Total | 263 | 46.8% | 53.2% | 5 Teachers |

| Variable | Model 1 n | Model 1 M | Model 1 SD | Model 2 n | Model 2 M | Model 2 SD |
|---|---|---|---|---|---|---|
| Post-test critical reading score | 263 | 18.82 | 5.88 | 252 | 18.94 | 5.91 |
| Pre-test critical reading score | 263 | 17.49 | 4.91 | 252 | 17.55 | 4.93 |
| Pre-test engagement in collaboration | 263 | 5.30 | 1.07 | 252 | 5.36 | 0.96 |
| Word count of posts | 263 | 1012 | 674.79 | 252 | 979.52 | 583.22 |
| Out-degree centrality | 263 | 0.24 | 0.15 | 252 | 0.23 | 0.13 |
| Out 2-step reach centrality | 263 | 0.83 | 0.22 | 252 | 0.83 | 0.22 |
| Class arc reciprocity | 263 | 0.52 | 0.07 | 252 | 0.52 | 0.07 |

| Predictor | Model 1 B | Model 1 SE B | Model 1 β | Model 1 t | Model 2 B | Model 2 SE B | Model 2 β | Model 2 t |
|---|---|---|---|---|---|---|---|---|
| Pre-test critical reading score | 0.362 | 0.067 | 0.302 | 5.431 *** | 0.329 | 0.069 | 0.275 | 4.751 *** |
| Pre-test engagement in collaboration | 0.319 | 0.278 | 0.058 | 1.148 | 0.284 | 0.317 | 0.046 | 0.896 |
| Word count of posts | 0.001 | 0.001 | 0.158 | 2.560 * | 0.002 | 0.001 | 0.208 | 2.966 ** |
| Out-degree centrality | −6.670 | 2.679 | −0.166 | −2.490 * | −9.251 | 3.495 | −0.202 | −2.647 ** |
| Out 2-step reach centrality | 4.426 | 1.638 | 0.168 | 2.702 ** | 4.617 | 1.736 | 0.170 | 2.660 ** |
| Class arc reciprocity | 27.174 | 4.458 | 0.324 | 6.096 *** | 28.045 | 4.609 | 0.335 | 6.085 *** |
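
As a consistency check on the regression table, the standard OLS identities t = B / SE_B and β = B × SD_x / SD_y can be applied to the Model 1 coefficients, taking the standard deviations from the descriptive-statistics table (SD_y = 5.88 for the post-test score). This is an illustrative sketch, not the authors' analysis. Word count of posts is omitted because its B is rounded to 0.001, which makes a back-calculated β unreliable.

```python
SD_POST = 5.88  # SD of the outcome (post-test critical reading score), Model 1

# Model 1 values from the tables: (B, SE_B, SD of predictor, reported beta, reported t)
MODEL_1 = {
    "Pre-test critical reading score": (0.362, 0.067, 4.91, 0.302, 5.431),
    "Pre-test engagement in collaboration": (0.319, 0.278, 1.07, 0.058, 1.148),
    "Out-degree centrality": (-6.670, 2.679, 0.15, -0.166, -2.490),
    "Out 2-step reach centrality": (4.426, 1.638, 0.22, 0.168, 2.702),
    "Class arc reciprocity": (27.174, 4.458, 0.07, 0.324, 6.096),
}

def t_value(b: float, se_b: float) -> float:
    """t statistic for a coefficient: B divided by its standard error."""
    return b / se_b

def standardized_beta(b: float, sd_x: float, sd_y: float) -> float:
    """Standardized coefficient: beta = B * SD_x / SD_y."""
    return b * sd_x / sd_y

for name, (b, se_b, sd_x, beta_rep, t_rep) in MODEL_1.items():
    beta = standardized_beta(b, sd_x, SD_POST)
    t = t_value(b, se_b)
    print(f"{name}: beta = {beta:.3f} (reported {beta_rep}), t = {t:.2f} (reported {t_rep})")
```

Small gaps in the third decimal (for example, about −0.170 against the reported −0.166 for out-degree centrality) are consistent with the predictor SDs being rounded to two decimals in the descriptive table.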

| Median-split variable (outcome: post-test critical reading score) | Below-median n | Below-median M (SD) | Above-median n | Above-median M (SD) | t | Cohen’s d (effect size) |
|---|---|---|---|---|---|---|
| Out-degree centrality | 136 | 18.77 (6.54) | 127 | 18.88 (5.11) | −0.15 | −0.02 (Small) |
| Out 2-step reach centrality | 136 | 17.58 (5.80) | 127 | 20.15 (5.71) | −3.62 *** | −0.45 (Medium) |
| Out 3-step reach centrality | 135 | 17.21 (4.67) | 128 | 20.52 (6.54) | −4.69 *** | −0.58 (Medium) |
| Out 4-step reach centrality | 167 | 19.40 (6.36) | 96 | 17.82 (4.83) | 2.27 * | 0.28 (Medium) |
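
The text does not state which t-test or effect-size formula was used for the median-split comparisons. As a sketch, Welch's t (unequal variances) and Cohen's d computed with the root mean square of the two group SDs reproduce the tabled values to within rounding when applied to the reported group sizes, means, and SDs. Both t and d are taken as below-median minus above-median, matching the table's signs; the function names are illustrative.

```python
from math import sqrt

def welch_t(n1, m1, sd1, n2, m2, sd2):
    """Welch's t statistic (no equal-variance assumption) from summary statistics."""
    return (m1 - m2) / sqrt(sd1**2 / n1 + sd2**2 / n2)

def cohens_d(m1, sd1, m2, sd2):
    """Cohen's d using the root mean square of the two group SDs as the standardizer."""
    return (m1 - m2) / sqrt((sd1**2 + sd2**2) / 2)

# (n, mean, SD) for the below- and above-median groups, then the tabled t and d.
ROWS = {
    "Out-degree centrality": ((136, 18.77, 6.54), (127, 18.88, 5.11), -0.15, -0.02),
    "Out 2-step reach centrality": ((136, 17.58, 5.80), (127, 20.15, 5.71), -3.62, -0.45),
    "Out 3-step reach centrality": ((135, 17.21, 4.67), (128, 20.52, 6.54), -4.69, -0.58),
    "Out 4-step reach centrality": ((167, 19.40, 6.36), (96, 17.82, 4.83), 2.27, 0.28),
}

for name, ((n1, m1, s1), (n2, m2, s2), t_rep, d_rep) in ROWS.items():
    t = welch_t(n1, m1, s1, n2, m2, s2)
    d = cohens_d(m1, s1, m2, s2)
    print(f"{name}: t = {t:.2f} (reported {t_rep}), d = {d:.2f} (reported {d_rep})")
```

A pooled-variance (Student) t gives a noticeably different value for the 4-step row (about 2.11 rather than 2.27), which is why Welch's form was chosen for this sketch.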

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Koh, E.; Jonathan, C.; Tan, J.P.-L. Exploring Conditions for Enhancing Critical Thinking in Networked Learning: Findings from a Secondary School Learning Analytics Environment. Educ. Sci. 2019, 9, 287. https://doi.org/10.3390/educsci9040287
