1. Educational Policies Searching for Equity

During the 1960s, the consensus that educational systems should attenuate students’ prior social inequalities led governments to develop educational policies aimed at reducing social inequalities and ensuring equal opportunities [1,2].

In this context, the school was to become the foundation of social mobility and economic development [2]. One of the most relevant policies aimed at fulfilling this purpose has undoubtedly been the emergence of compensatory education, which can be defined as the movement that promotes educational programs or policies intended to diminish the social and economic vulnerability of children and young people at risk of social and school exclusion, promoting equal opportunities in education, social mobility, and the integration of minorities [3,4,5].

A variety of programs has been implemented around the world under the umbrella of compensatory education, for example, in the United States, Australia, France, England, and Portugal [3,6,7]. During the 1960s and 1970s, programs targeted the preschool period (e.g., Head Start, the Abecedarian Project, the Perry Preschool Project), reinforcing educational institutions with human, material, and financial resources, as well as services, or provided financial support directly to schools with vulnerable populations (e.g., Title I). Since the 1980s, programs have focused their action on territories affected by high rates of school and social exclusion, assigning schools the responsibility for developing intervention plans in partnership with the community (e.g., Zones d’Éducation Prioritaire, Education Action Zones, Territórios Educativos de Intervenção Prioritária). In these programs, schools are also reinforced with human and material resources and have autonomy to decide how to use them.

Despite the good intentions behind educational policies aimed at improving the academic performance of the most disadvantaged, these policies have faced critiques and doubts since their inception [1,8]. Bernstein’s criticisms [3] of compensatory education, and the fact that the concept can reinforce the stigmatization of the most disadvantaged, continue to be of particular concern [9,10]. Furthermore, in practice, compensatory education programs have often produced poor results, and their accomplishments have mostly fallen below expectations, especially regarding the academic performance of the most disadvantaged students. Ultimately, what has repeatedly been questioned is their ability to improve schools’ and students’ achievement, and thereby contribute to the social mobility of disadvantaged students [1,2,5,11,12,13]. This appears to be particularly relevant for high school contexts given that, as stated by [14] (p. 198), “Whereas the social and emotional components of school climate are often perceived as more important in elementary and middle schools, academic performance is a more central mission of high schools”. The reason is that high school grades are usually key to proceeding into higher education. In line with this argument, we assess academic performance based on outcomes, “as objective scores on individual performance tasks, such as tests or exams” [15] (p. 139). Specifically, we use the grades obtained by students in the national exams at the end of secondary education as indicators of their academic performance.

In parallel with compensatory education programs, vocational education has been a regular feature of secondary school systems, providing an alternative to more traditional academic education. It can be said that a European consensus regarding the integration and/or strengthening of vocational programs in educational systems has been gradually established.

However, the actual role of vocational education is viewed from diverging perspectives. On the one hand, institutions such as the European Union and the OECD encourage investment in vocational programs, arguing that it responds to economic needs and allows students to acquire important skills for personal development and active citizenship, thereby facilitating their integration into the labor market [16]. On the other hand, researchers working within the framework of social reproduction theories argue that vocational education “diverts students from higher education and the professions” [17] (p. 29). For their part, Hanushek, Schwerdt, and Woessmann [18] found evidence that students enrolled in vocational programs are more likely to enter the labor market in the short term, since the programs respond to its immediate needs, but that, on average, before the age of 50, vocational graduates face difficulties in keeping their jobs and unemployment becomes more frequent.

Other concerns relate to the social stigma often associated with vocational pathways and to the risk that tracking reinforces social stratification, given that a disproportionate number of disadvantaged students are directed to vocational courses. Indeed, a recent OECD study shows that, on “average across OECD countries, 24% of students from disadvantaged schools are enrolled in pre-vocational or vocational programs, compared to 3% of students from advantaged schools” [19] (p. 90).

Hanushek and Woessmann [20] (C68–C69) also add that the earlier tracking takes place, the more it increases inequality: “Out of the 18 countries, the top four countries in terms of the increase in inequality between primary and secondary school are all early trackers (…). The bottom six countries with the largest decrease in inequality are all late trackers that do not track before the age of PISA testing (…)”.

There is, then, an ongoing debate about whether vocational education has mostly an emancipatory or a subjugating role. It is at these crossroads that compensatory and vocational education meet.

2. The Portuguese Educational System, Compensatory and Vocational Education

The Portuguese educational system is divided into basic education (1st to 9th grade) and secondary education (10th to 12th grade). At the end of secondary education, national exams are mandatory for students intending to proceed into higher education. These exams are subject specific (e.g., Portuguese, Maths, Geography); they are identical for all students at a national level, and they are assessed blindly by teachers scattered throughout the country.

Like many other Western countries, Portugal has been investing in compensatory education, mainly through the Territórios Educativos de Intervenção Prioritária (TEIP) program, and has also been encouraging investment in vocational programs. The TEIP program, in its current form, has been running since 2006 (it is now in its third generation), and, with the reform of secondary education in 2004, vocational programs were massively introduced in public secondary schools [21]. This explains the 40% increase in the number of vocational education graduates in Portugal between 2005 and 2014, the highest among OECD countries alongside Australia [22]. It should be stressed that Law 55/2008, which regulates the TEIP program, explicitly links compensatory and vocational education, as it encourages schools to diversify their educational offer, especially regarding vocational programs, and to promote the relationship between the school and the labor market [23]. Diversifying curricular options through vocational programs makes it possible to raise schooling levels and speed up integration into the labor market [24]. However, the massive orientation of disadvantaged students towards vocational programs instead of higher education calls into question the exact purpose of the TEIP program, as it may turn into a mechanism for social selection and the maintenance of academic and socioeconomic inequalities.

A study by [25], a statistical branch of the Portuguese Ministry of Education, shows that, at the end of 2017/2018, only 33.6% of the students enrolled in vocational education finished secondary education at age 17, against 80.8% of those enrolled in regular courses. Also, 33.4% of the students enrolled in vocational education ended the 2017/2018 school year aged 19 or older, against 4.1% of the students in regular courses. Vocational students are thus far more likely to be older than expected for their grade, which typically reflects grade repetition. Considering that, in Portugal, “disadvantaged students are around four times more likely to repeat a grade” [19] (p. 88), we can argue that students from disadvantaged backgrounds are more likely to enter a vocational education path.

3. The TEIP Program

Portugal launched its compensatory education program, Territórios Educativos de Intervenção Prioritária (TEIP), in 1996. Inspired by the French Zones d’Éducation Prioritaires (ZEP), TEIP pursues a strategy of territorialization of educational policies based on the positive discrimination of certain geographic, administrative, and social territories. The aim is to combat school and social exclusion by promoting the role of local actors [26]. Accordingly, Law no. 147/B/ME/96 [27], which regulates TEIP, states that its objectives are to combat school failure, absenteeism, and early school leaving among children and young people. The first edition of the program, which involved 34 educational territories, began in 1996 and ended in 1999. The program was reinstated in 2006 along the same lines as its predecessor. Now called TEIP2, it has four fundamental objectives: (a) the improvement of students’ educational trajectories and academic results; (b) the reduction of school dropout and educational underachievement; (c) the transition from school to working life; and (d) the intervention of the school as a central educational and cultural agent in the life of the communities to which it belongs [23]. In this paper, we focus on (a), given that academic results, as explained above, are crucial for access to higher education and, therefore, for fulfilling the goals of social mobility and educational equity embedded in the compensatory education program [28].

Currently, to access the TEIP program, schools must apply for funds from the European Social Fund via the Regional Coordination and Development Commissions, public institutions that work towards the socioeconomic cohesion and sustainable development of the Portuguese regions. Interestingly, the evaluation criteria for the applications make no explicit reference to any socioeconomic diagnosis of the territory in which the schools are located or of their populations. Schools are only required to describe their current retention, dropout, and indiscipline rates, as well as their students’ overall academic performance. The socioeconomic context itself is therefore neglected or, to put it another way, approached only through the proxy of educational indicators. This, together with the absence of centrally defined criteria for what “counts” or “may count” as a disadvantaged territory (supposedly those targeted by the program), makes the process through which schools “become” TEIP highly obscure. As a result, not only is access to the program rather opaque, but its implementation may also vary significantly from school to school. As recently reported by the OECD, “Several of Portugal’s equity funding measures are based on school and student applications and not on needs, as in the case of inclusion mechanisms that follow a rights-based approach. As a result, the capacity to acquire compensatory funding depends on schools’ initiative and the capacity to apply for supplemental funding. This raises additional equity concerns in the levels and strategic value of funds” [29] (p. 113).

In any case, when a school “becomes” TEIP, it signs a contract with the Ministry of Education to ensure the necessary pedagogical and financial support, and positive differentiation mechanisms in access to resources [28]. The TEIP program thus supports schools in the challenges they face by reinforcing their material and human resources. In addition, an external expert monitors the design and implementation of the school’s educational project, promoting educational practices aimed at school success, tutorials, the activation of clubs, and the provision of services to students. The program also seeks closer relationships between schools and their communities, so as to establish partnerships for monitoring students and facilitating their post-school integration [30].

TEIP2 comprised three phases: the first phase, initiated in 2006, involved 35 schools; the second phase, which started in September 2009, added 24 schools; and the third phase, which began in November 2009, added a further 46 schools, for a total of 105 schools. TEIP3, the third generation of the program (regulated by Law no. 20/2012), began in 2012 with objectives identical to those of its predecessor, and 32 more schools entered the program. Because TEIP3 follows the guidelines of TEIP2, we refer to it as the 4th phase of the program. There are currently 137 schools involved in the program.

4. The Assessment of the TEIP Program

Several studies analyze the design, implementation, and development of the TEIP program in specific dimensions, such as the curriculum, social justice, leadership, citizenship education, stigmatization, indiscipline, the relationship between TEIP schools and families, and teachers’ perspectives on the program [10,26,31,32,33,34]. These studies offer insights into a specific school or a set of schools, but there is a lack of studies that focus on one (or more) of the four TEIP objectives and provide general information at a national level, covering all schools in the program or at least those in either basic or secondary education. As far as we know, for basic education there are two reports [30,35], and for secondary education there is only the work developed by the authors [36]. Given that the 2012 report [30] simply adds one more year of analysis to the indicators presented in the 2011 report [35], we refer mainly to the 2012 report in the following paragraphs. Overall, the reports claim to assess four relevant dimensions: early school leaving, indiscipline, absenteeism, and school success.

Considering the indicators for which comparative data with non-TEIP public schools are presented, i.e., early school leaving and school success, the conclusions are conflicting. On the one hand, there seems to have been a larger decrease in early school leaving rates (retention/non-completion) in TEIP schools than in non-TEIP public schools from 2007/2008 to 2010/2011. However, the results of TEIP and non-TEIP schools are not presented for the period before the TEIP program was introduced, making it impossible to assess the schools’ performance before the program began. On the other hand, the gap between TEIP and non-TEIP public schools in the results of national exams/assessment tests in Mathematics and Portuguese Language (4th, 6th, and 9th years) increased between 2006/2007 and 2010/2011 [30]. The remaining dimensions, i.e., the evolution of indiscipline and absenteeism, are presented without comparison to non-TEIP public schools, which hinders any understanding of how the gap between the two types of schools evolved. It is also important to mention that the 2012 TEIP report [30] focused solely on basic education and did not assess the TEIP program in secondary education.

Apart from these official reports, quantitative and longitudinal studies comparing the population of TEIP schools with that of non-TEIP public schools are virtually non-existent. To the best of our knowledge, only the research developed by [36] has sought to fill this gap regarding the assessment of the TEIP2 program. The authors analyzed the evolution of academic scores between 2001/2002 and 2012/2013, using the databases made available each year by the Ministry of Education, and, through a longitudinal approach, compared the mean scores obtained by students from TEIP and non-TEIP schools in national exams. They found that the gap between TEIP schools and non-TEIP public schools had not declined but had, in fact, increased.

Although the research by [36] is, to date, the most exhaustive quantitative study we know of, some relevant questions remain unanswered, which we intend to address in this article, namely: (i) identifying and selecting from the databases only the students who, in addition to a national exam score, also have an internal score assigned by a particular school. This guarantees that the exam score is the result of a school path taken in that specific school, so that the scores of self-proposed students (who took the exams at a school without having attended it) do not penalize that school; (ii) analyzing the results of each national exam by subject (Portuguese, Mathematics, Biology) and combining them into a final result for each school, taking into account the specificities of performance in different national exams; (iii) addressing the argument that explains the widening gap between TEIP and non-TEIP public schools by the supposition that TEIP schools have been increasingly able to retain low-performing students, who then lower TEIP schools’ overall results.

For this last interpretation to hold, however, TEIP schools would have to register an increase in the proportion of national exams taken by their students. There is still another possible interpretation (addressed in the Discussion): a school could retain an increasing proportion of low-performing students in non-vocational tracks (meaning that national exams would be compulsory for those students) while keeping or even lowering its proportion of exams. In this case, the school would simultaneously have to lose a substantial number of its best-performing students. If this were the case, however, the program would be responsible for an undesirable, unintended consequence: that of segregating (and stigmatizing) students.

Therefore, since, as mentioned above, students in the Portuguese educational system must take national exams to access higher education, a school found to be systematically reducing the number of exams taken is plausibly seeing fewer and fewer of its students attempt to proceed into higher education. In fact, more students may be either dropping out or being directed to vocational programs so that, at the end of secondary education, they enter the labor market.

Given that secondary education remains under-assessed with respect to the TEIP program, this study is unprecedented and fills a gap in the knowledge produced about the program. Using a longitudinal approach (a 15-year analysis), we compare students in TEIP schools with students in non-TEIP public schools in terms of the evolution of, first, their academic performance, measured by national exam scores (NES), which answers the question of whether the TEIP program is achieving its first goal, improving students’ academic paths; and, second, the number of national exams they take. The latter is crucial to determine whether more national exams are being taken at TEIP schools, and consequently more underperforming students are taking exams, or whether, instead, fewer TEIP students are taking exams compared to non-TEIP public schools. The second possibility would call into question the potential contribution of the TEIP program to social mobility and raise the possibility that TEIP schools are increasingly directing students to vocational courses.

5. Methodology

We analyzed the national exam scores (NES) and the number of national exams taken between 2001/2002 and 2015/2016. To do so, we used the databases made available yearly by the Ministry of Education since 2002, which contain the students’ scores in the national exams as well as their internal scores (IS). Internal scores are the grades awarded by the schools to students during secondary education, on a scale from 0 to 20, where 10 is the minimum pass mark. These databases do not contain socioeconomic or family variables, nor information about the level of vulnerability of the schools’ populations. This makes it impossible to relate the socioeconomic status of any specific school to its academic indicators. All we can say is that a given school is part of the TEIP program, and that this presumes a disadvantaged socioeconomic condition of its population/territory. In this regard, it should be added that:

- Socioeconomic data at the “freguesia” level (the smallest local administrative unit in Portugal, equivalent to the local authority districts in a city such as London) are not accessible through the national statistical information system.
- Even if such data were available, it would be misleading to assert straightforward connections between socioeconomic data at the “freguesia” level and at the school level.
- Socioeconomic data collected by the schools, which we know exist in databases that are not easily made available to researchers, are aggregated at the school cluster level. This prevents establishing reliable links between such data and individual schools in any given cluster.

Given that TEIP schools are more vulnerable public schools, we considered that their performance should be compared with that of their public counterparts, and thus, private, fee-paying, more exclusive schools were not considered in this analysis. In addition, we organized secondary schools according to the year in which they joined the TEIP program.

Considering the arguments above, we decided to carry out a quantitative analysis, with a longitudinal approach, which provided the evolution of pre- and post-TEIP schools’ performances, as described below.

We began by selecting the students who have an internal score (IS), as previously mentioned, which ensures that the students considered actually attended a given school. We then analyzed the average performance of five groups of schools (public non-TEIP, TEIP, TEIP1, TEIP2/3, and TEIP4) for each academic year between 2001/2002 and 2015/2016, which enabled us to develop a longitudinal understanding of the performance of TEIP and non-TEIP public schools in national exams. The designations TEIP1, TEIP2/3, and TEIP4 refer to the different phases of the program: Phase 1 (2006/2007); Phase 2 (2009/2010); Phase 3 (2009/2010); and Phase 4, or TEIP3 (2011/2013). Phases 2 and 3 are represented together because they began only two months apart and thus do not justify separate analyses.
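The selection and grouping steps described above can be sketched in Python. This is a minimal illustration, not the authors' code: the record layout (`ExamRecord`, `internal_score`) and the school-to-phase mapping `TEIP_PHASE` are hypothetical placeholders, since the structure of the Ministry of Education databases is not described here. The pooled "TEIP" group is simply every school that appears in the mapping.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ExamRecord:
    # Hypothetical fields; the real Ministry of Education databases may differ.
    school_id: str
    subject: str
    exam_score: float
    internal_score: Optional[float]  # None for self-proposed candidates


# Hypothetical mapping from school to the TEIP phase (1-4) in which it joined.
# Schools not listed are treated as non-TEIP public schools.
TEIP_PHASE = {"A": 1, "B": 2, "C": 3, "D": 4}


def is_teip(school_id: str) -> bool:
    """True for any school in the pooled TEIP group."""
    return school_id in TEIP_PHASE


def school_group(school_id: str) -> str:
    """Map a public school to the phase-based analysis group."""
    phase = TEIP_PHASE.get(school_id)
    if phase is None:
        return "non-TEIP"
    if phase == 1:
        return "TEIP1"
    if phase in (2, 3):  # Phases 2 and 3 began two months apart and are pooled
        return "TEIP2/3"
    return "TEIP4"


def keep_enrolled(records):
    """Keep only exams of students with an internal score (i.e., who attended the school)."""
    return [r for r in records if r.internal_score is not None]
```

Filtering on `internal_score` rather than on the exam centre is what keeps self-proposed candidates from being counted against the school where they merely sat the exam.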

We then calculated the mean and standard deviation of the scores obtained in each subject for which there is a national exam (e.g., Portuguese, Maths, Biology). Subsequently, for each individual exam, we calculated the difference from the national mean score in that subject and divided it by the national standard deviation in that subject, thus obtaining each exam’s z-score by subject.
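The standardization step can be written as z = (x − μ_subject) / σ_subject, where x is an exam score and μ and σ are the national mean and standard deviation for that subject. The Python sketch below illustrates it under two assumptions not stated in the text: scores are standardized within a single exam year, and the population standard deviation (`statistics.pstdev`) is used. The helper `group_mean_z` is a hypothetical name for averaging the z-scores of one group of schools, the quantity whose between-group differences are compared over time.

```python
from collections import defaultdict
from statistics import mean, pstdev


def z_scores_by_subject(exams):
    """Standardize national exam scores within each subject.

    exams: list of (subject, score) pairs, assumed to come from one exam year.
    Returns z-scores in the same order as the input.
    """
    scores_by_subject = defaultdict(list)
    for subject, score in exams:
        scores_by_subject[subject].append(score)

    # National mean and (population) standard deviation per subject.
    stats = {s: (mean(v), pstdev(v)) for s, v in scores_by_subject.items()}

    z = []
    for subject, score in exams:
        mu, sd = stats[subject]
        # Guard against a subject in which every student got the same score.
        z.append((score - mu) / sd if sd > 0 else 0.0)
    return z


def group_mean_z(z_scores, groups, group):
    """Average z-score of the exams taken in one group of schools."""
    return mean(z for z, g in zip(z_scores, groups) if g == group)


exams = [("Maths", 10), ("Maths", 14), ("Portuguese", 12), ("Portuguese", 16)]
groups = ["TEIP1", "non-TEIP", "TEIP1", "non-TEIP"]
z = z_scores_by_subject(exams)
gap = group_mean_z(z, groups, "TEIP1") - group_mean_z(z, groups, "non-TEIP")
print(z, gap)
```

Standardizing within subject before averaging prevents a school's result from depending on which subjects its students happen to take, since exams of different difficulty are put on a common scale.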

Finally, we analyzed the evolution of the number of national exams taken in each academic year. This made it possible to compare the different groups of schools by assessing the variation in the proportion of national exams undertaken in TEIP schools relative to non-TEIP public schools. While we do not have detailed enrolment data for the whole time series, we know that there were 46,401 students in TEIP schools in 2007/2008 and 177,232 in 2017/2018. This compares to a total of 1,315,594 students in basic and secondary public schools in 2007/2008 and 1,188,187 in 2017/2018 (www.pordata.pt). This means that the proportion of basic and secondary public school students enrolled in TEIP schools rose from 3.5% in 2007/2008 to 15% in 2017/2018.
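The enrolment shares quoted above follow from a simple ratio, reproduced here in Python using only the figures given in the text (source: www.pordata.pt). The function name `teip_share` is illustrative.

```python
# Enrolment figures quoted in the text (source: www.pordata.pt).
TEIP_STUDENTS = {"2007/2008": 46_401, "2017/2018": 177_232}
PUBLIC_STUDENTS = {"2007/2008": 1_315_594, "2017/2018": 1_188_187}


def teip_share(year: str) -> float:
    """Fraction of basic and secondary public-school students enrolled in TEIP schools."""
    return TEIP_STUDENTS[year] / PUBLIC_STUDENTS[year]


for year in TEIP_STUDENTS:
    print(f"{year}: {teip_share(year):.1%}")
# 2007/2008: 3.5%
# 2017/2018: 14.9%
```

The second ratio is about 14.9%, which the text rounds to 15%. Because total public enrolment fell over the decade while TEIP enrolment almost quadrupled, the share grew faster than the raw TEIP count alone suggests.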

6. Results

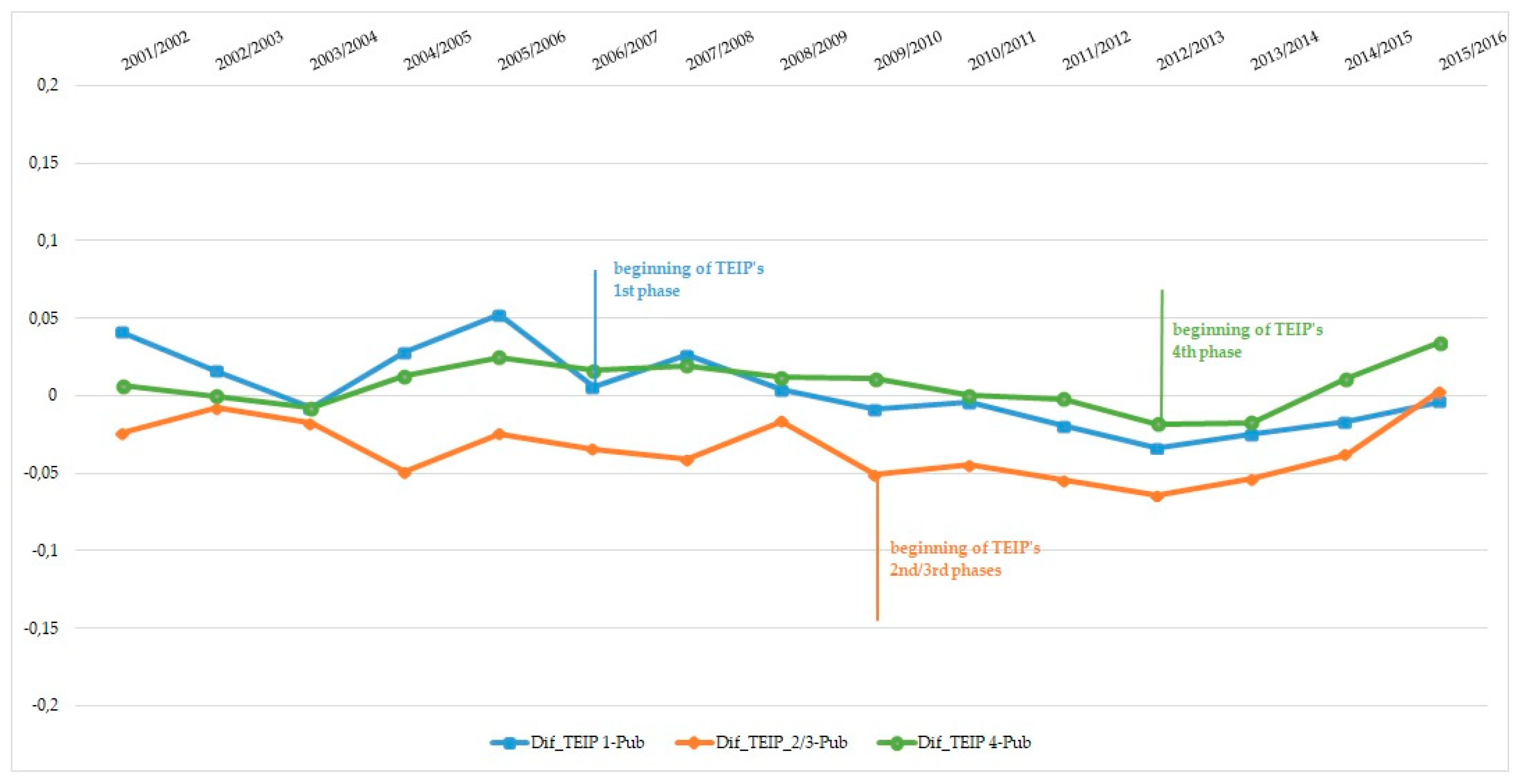

Figure 1 shows the evolution of the differences in national exam scores between non-TEIP public schools and TEIP1, TEIP2/3, and TEIP4 schools. The gap between non-TEIP public schools and the schools of the different TEIP phases has clearly been increasing, particularly for the first two batches of schools that enrolled in the program. Notwithstanding some (short) periods in which the differences narrowed (for example, between 2013/2014 and 2015/2016), the gap remains large compared to the pre-TEIP period (2001/2002 to 2005/2006), and current values are worse than those recorded when schools from Phase 1 and Phases 2 and 3 joined the program. As for the Phase 4 schools, which joined the TEIP program in 2012/2013, their distance to non-TEIP public schools remains stable. The take-home message seems to be that, for the first two batches of schools, the gap has increased systematically over the years, and that enrolment in the program does not appear to have any effect on this overall trend. For the last batch of schools, the trend has generally been stable and, again, no “TEIP effect” seems evident.

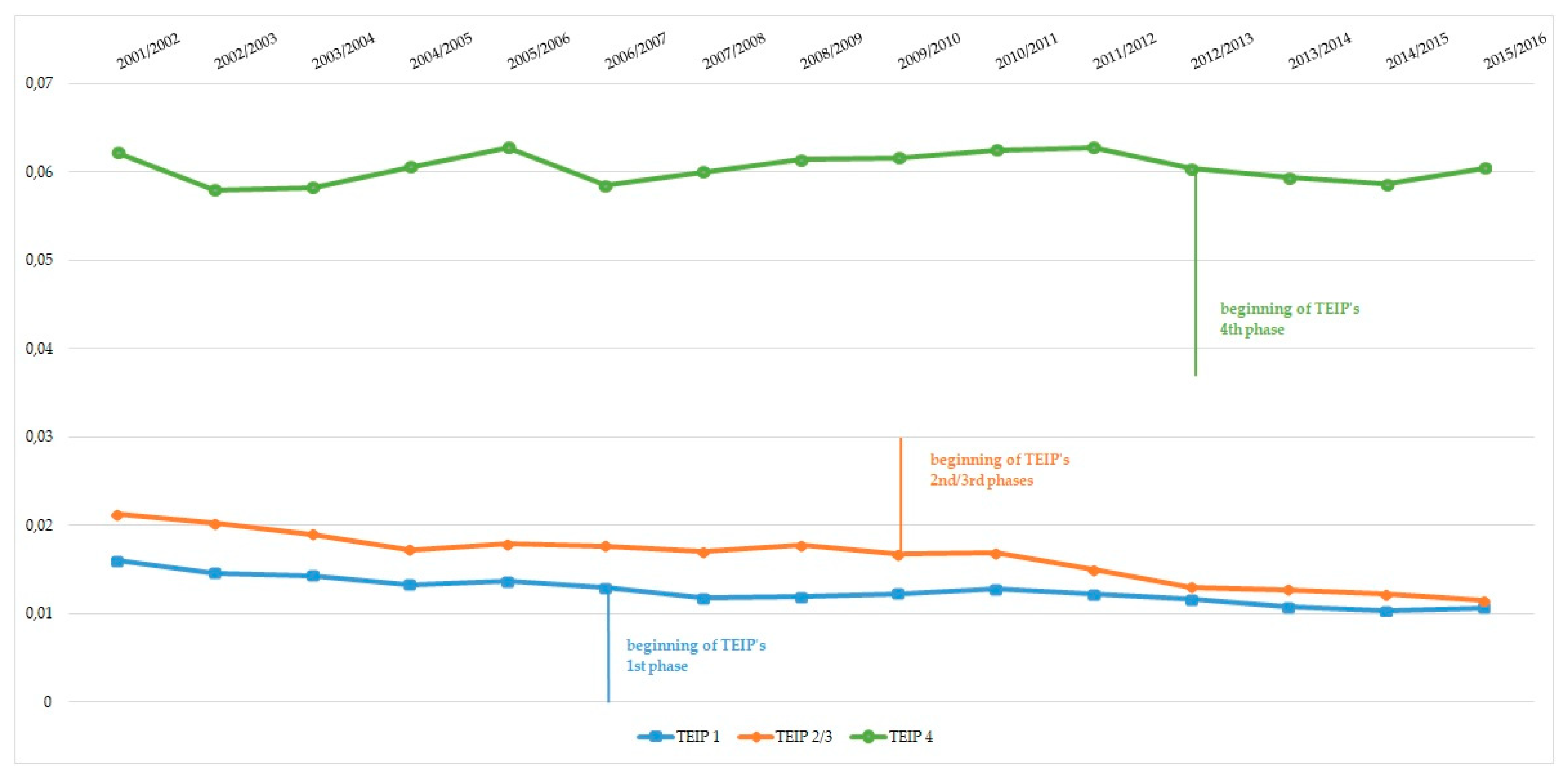

In Figure 2, the analysis of the standard deviations of the z-scores shows no clear trend in the dispersion of the schools’ results. Again, the broad picture of this 15-year analysis shows that, despite some year-to-year fluctuations, no consistent effect can be detected from a group of schools enrolling in the TEIP program. Note the small scale of the y-axis, which means that, overall, there have been no major changes in the results of any of the batches of schools. What is more, even these small changes differ across groups. In detail, schools from the first phase show, in the last year analyzed, almost the same dispersion as when they enrolled in the program. Schools from the second phase have, since their enrolment, maintained a 5% difference from non-TEIP schools for several years, approaching the value of non-TEIP schools in the last year of the analysis. Schools that joined the program in the 4th phase have, since 2001, shown a dispersion very similar to that of non-TEIP schools, with a slight increase in the few years since their enrolment. In sum, there is no evidence of any meaningful change in the dispersion of exam scores resulting from a batch of schools enrolling in the program.

Figure 3 presents the proportion of exams undertaken in TEIP1, TEIP2/3, and TEIP4 schools relative to the total of exams undertaken in public schools. We found that there is an overall reduction of approximately 20% in the proportion of exams carried out in TEIP schools. This decrease is even larger in TEIP2/3 schools, where the proportion of exams is reduced to almost half during the period under analysis. In TEIP1 schools, there is also a gradual decline in the proportion of exams undertaken. Only TEIP4 schools show stability in the proportion of exams carried out.
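The quantity plotted in Figure 3 is a share: exams taken in a group of TEIP schools divided by all exams taken in public schools in the same year. The Python sketch below shows how such a share, and its change over time, could be computed. The counts are invented for illustration and are not the study's data. The text does not say whether the "approximately 20%" reduction is a relative change or a difference in percentage points; the sketch assumes a relative change.

```python
def exam_share(group_exams: int, public_exams: int) -> float:
    """Share of all public-school national exams taken in one group of schools."""
    if public_exams <= 0:
        raise ValueError("public_exams must be positive")
    return group_exams / public_exams


def relative_change(start_share: float, end_share: float) -> float:
    """Relative change between two shares; -0.2 means a 20% reduction."""
    return (end_share - start_share) / start_share


# Hypothetical counts for illustration only (not the study's data):
# year -> (exams taken in the TEIP group, exams taken in all public schools)
counts = {"2001/2002": (5_000, 100_000), "2015/2016": (3_600, 90_000)}

shares = {year: exam_share(g, p) for year, (g, p) in counts.items()}
change = relative_change(shares["2001/2002"], shares["2015/2016"])
print(shares, f"{change:.0%}")
```

Working with shares rather than raw counts matters because the total number of exams taken in public schools also varies from year to year. In this made-up example the group's raw count falls by 28% while its share falls by 20%.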

The overall picture for the first two batches of schools to enter the program shows a continuous decrease in the proportion of exams undertaken, starting before their enrolment in the program. For the third batch (4th phase), there seems to be hardly any fluctuation. Again, for all three batches of schools, an effect resulting from joining the program is far from clear.

To sum up, the data seem to indicate that there is no identifiable TEIP effect on national exams. This holds for the (standardized) averages of the different batches of schools, which appear to follow a pre-existing trend irrespective of the year in which they joined the program. In addition, enrolment does not seem to affect the dispersion of the schools’ grades (standard deviations). Finally, the stable 15-year trend in the proportion of exams taken relative to non-TEIP schools (a slow but consistent decline for TEIP1 and TEIP2/3 schools, and stability for TEIP4) seems unrelated to the dates on which schools joined TEIP.

7. Discussion

In this paper, we evaluate TEIP with respect to one of its key objectives, i.e., the improvement of students’ academic results. To assess this objective more properly, it would help to have, in addition to exam results, information on the students who take part in the program, namely their socioeconomic level, so that changes in the composition of the schools’ population over the years could be controlled for. This matters because one cannot rule out differential changes in the groups of schools over the period under analysis, which might account for the detected differences. In fact, some authors have argued for the possibility of a stigma effect [10], whereby a school suffers from prejudice after being identified as a TEIP school. This effect might alter the school’s composition, namely by making it less attractive to better-performing students. As such information is not available, we discuss the findings in light of these limitations.

As our results show, the national exam average of each batch of schools that entered the program has not converged with that of non-TEIP schools after enrolment. What is more, two of the three batches show a diverging trend. The lack of data on school composition, namely the socioeconomic status of the students, leads us to discuss the plausibility of two scenarios: one in which the composition of TEIP schools, compared to the remaining public schools, did not change as a consequence of enrolling in the program; and an alternative in which the schools’ composition did change over the years.

Assuming that the socio-economic level of the TEIP schools’ students did not decrease over the years (in comparison to non-TEIP schools), the divergence of the average results leads us to conclude that the program, contrary to its intent, has hindered schools’ performance. Furthermore, in this scenario, the lack of change in the standard deviations means that all students have worsened their results. Indeed, if the average decrease came from a low-performing subgroup, standard deviations would have increased.
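The argument that a stable standard deviation rules out a decline concentrated in a low-performing subgroup can be checked with a toy example. The Python sketch below uses invented scores on the 0–20 scale (not study data) and compares a uniform drop with a drop confined to students below the mean.

```python
from statistics import mean, pstdev

# Hypothetical scores (0-20 scale) for one group of students; illustrative only.
baseline = [8, 10, 12, 14, 16]

# Scenario 1: every student's score falls by the same amount.
uniform_drop = [x - 1 for x in baseline]

# Scenario 2: only the low-performing subgroup (below the baseline mean) falls.
cut = mean(baseline)
subgroup_drop = [x - 3 if x < cut else x for x in baseline]

for name, scores in [("baseline", baseline),
                     ("uniform drop", uniform_drop),
                     ("subgroup drop", subgroup_drop)]:
    print(f"{name:>13}: mean={mean(scores):.2f}  sd={pstdev(scores):.2f}")
```

Both scenarios lower the mean, but only the uniform drop leaves the standard deviation unchanged. The subgroup drop pushes already-low scores further from the mean and widens the dispersion. This is why stable standard deviations point to a decline across the whole population rather than within a low-performing subgroup.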

The alternative scenario is one in which the TEIP schools’ population, namely regarding its socioeconomic level, has been changing, particularly because of the stigma effect. The average decrease of the results would then be explained by the change in the population that is taking the exams and not by the specific features of the program. As in the first scenario, the stability of the standard deviations would also mean that the average decrease was not caused by a poor-performing sub-group, but by the overall population. This seems, in fact, coherent with the stigma hypothesis, which does anticipate that the best-performing students will look for (and succeed in entering) more prestigious schools, while worse-performing students will be “attracted” to TEIP schools.

Another crucial piece of information comes from the analysis of the proportion of national exams taken in TEIP schools, a line of enquiry we have not seen pursued until now. Results show a steady decrease over the years in the relative proportion of national exams undertaken in TEIP schools, particularly those from Phases 1 and 2/3, precisely the schools that have been drifting apart from non-TEIP schools in terms of national exam averages. Indeed, the first two batches of schools to join the program, which already showed a divergent trend before their entrance, are also the ones showing a decrease in the proportion of national exams. The third batch of schools, which seems to follow non-TEIP schools closely (before and after enrolment), does not show such a decrease.

Considering all the arguments, the first scenario, which assumes no change in the schools’ population, seems highly unlikely. First, it would mean that TEIP schools (particularly the first two batches) had hindered their students’ performance despite the additional human and material resources made available by the program. Moreover, the lower results would be occurring while a smaller proportion of students is taking national exams. Although we do not have detailed data on the number of students enrolled in TEIP schools for the whole time series, we know that there were 46,401 students in TEIP schools in 2007/08 and 177,232 in 2017/18. This compares to a total of 1,315,594 students in basic and secondary public schools in 2007/08 and 1,188,187 in 2017/18 (www.pordata.pt). The proportion of basic and secondary school students enrolled in TEIP schools has therefore risen from 3.5% in 2007/08 to 15% in 2017/18. The lack of yearly enrolment data does not allow for a finer-grained analysis. Even so, the overall decline in the proportion of exams taken in TEIP schools is occurring while the proportion of students in TEIP schools has been increasing. Under this scenario, these schools would be discouraging students who would otherwise choose a regular (i.e., non-vocational) educational path from doing so. This would affect more than the lowest-performing students, since steering away only the latter would raise the average grade in national exams.
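The enrolment shares above follow directly from the figures quoted in the text. The short sketch below reproduces that arithmetic. The dictionary and function names are illustrative only.

```python
# Enrolment figures quoted in the text: students in TEIP schools, and all
# students in basic and secondary public schools (the latter from PORDATA).
TEIP_STUDENTS = {"2007/08": 46_401, "2017/18": 177_232}
ALL_STUDENTS = {"2007/08": 1_315_594, "2017/18": 1_188_187}


def teip_share(year: str) -> float:
    """Percentage of basic and secondary public-school students enrolled in TEIP schools."""
    return 100 * TEIP_STUDENTS[year] / ALL_STUDENTS[year]


for year in TEIP_STUDENTS:
    print(f"{year}: {teip_share(year):.1f}%")
```

The shares come out at 3.5% for 2007/08 and 14.9% for 2017/18. The second value rounds to the 15% reported above.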

The alternative scenario, which assumes a (relative) change in the schools’ population, seems far more plausible. For one, the lower socioeconomic level of the schools’ students (and the lower performance associated with it) would readily explain the decrease in national exam performance across the overall population. Furthermore, the decrease in the proportion of exams taken is in line with Portugal’s investment in diversifying school pathways, particularly vocational programs. The 40% increase in secondary education graduates from vocational programs between 2005 and 2014 [22] is a symptom of that investment. The TEIP program has been no exception. The legal documents that regulate it (Law no. 55/2008, Law no. 20/2012) stress the need to improve the link between school and the labor market. They also encourage schools to diversify their curricular offer through vocational programs. The decrease in the number of national exams taken over the years gives good reason to believe that, in the case of the TEIP program, there has been an overinvestment in vocational programs to the detriment of general, regular programs.

It is important to note that, regardless of which scenario is more plausible, the decrease in the proportion of exams strongly suggests that TEIP schools are leading fewer and fewer of their students into higher education. Across OECD countries [22], the difference in earnings between those who have completed secondary education and those who have completed at least one cycle of tertiary education reaches 55%. In Portugal, the difference is even higher, at 60%. In other words, on average, the higher the academic qualifications, the greater the likelihood of obtaining better jobs and income. Tertiary education is the level of study that offers the strongest guarantee of access to higher income and a more favorable social position. Therefore, if TEIP schools are steering their students not toward higher education but, on the contrary, toward vocational education, are they really compensating for social inequalities? Could it even be argued that they are ultimately reinforcing social inequalities? Or, similarly, that the TEIP program has become a social selection program grounded in a form of tracking rather than a positive discrimination program?

Another conclusion holds under either of the scenarios discussed: as a whole, TEIP schools have not succeeded in narrowing the academic performance gap between their students and students from non-TEIP schools. The TEIP program may have played no part in this, or it may (also) have contributed to it. In either case, the overall net conclusion from our results is that the educational system has become more segregated over the years, with groups of schools (TEIP1 and 2/3) drifting apart from other public schools. Whether the program is simply unable to curb other societal forces or is itself part of the problem remains open for discussion, although we argue that, according to the data presented, the latter seems more plausible.