PLATON: Developing a Graphical Lesson Planning System for Prospective Teachers

Abstract

1. Introduction

- Clear requirements for a lesson planning system which is customizable and independent of planning models/frameworks

- Proposal for a generic data model for lesson plans

- Graphical, time-focused planning approach providing different views, analysis functions, and automatic feedback

- Evaluation showing the usefulness and usability of the developed prototype in real-world planning scenarios

2. Lesson Plans

- formal aspects (e.g., name of the teacher, date, school, subject, room)

- description of the context (e.g., teaching intentions, learning outcomes, standards, learner group, previous and future lessons, assessment)

- visualization of the teaching process (e.g., a table containing the teaching steps, the media used, and the allotted time)

- attachments (e.g., worksheets, blackboard sketches)

3. Requirements

4. Existing Lesson Planning Tools

- reduction of the cognitive load, e.g., by providing structures/templates (e.g., IPAS, IIM, SLP)

- integration of helpful resources such as standards (e.g., STEPS, IPAS, Ph.)

- easy reuse of existing lesson plans (e.g., EW, IPAS, IIM, SLP, LDw)

- support for the development of worksheets (e.g., IPAS)

- collaboration (e.g., EW)

- fostering reflection (e.g., based on visualizations (LAMS, LDSE, LDw) or checklists (IPAS))

- detailed modeling of computer-supported group interactions (e.g., LAMS)

- computer-supported execution of the plans/models (e.g., LAMS)

- provision of activity patterns, which can be directly used (e.g., LDSE)

5. The PLATON System

- graphical, time-based planning based on a timeline metaphor,

- specification of extensible structures (including customizable fields),

- explicit modeling of anticipated solutions and of expectations regarding materials created by students,

- connecting individual lessons of a unit, especially by explicitly modeling the transitions between two lessons, and

- provision of different views (e.g., resource or multi-lesson view, fulfilled standards of a unit/lesson) as well as support functions to reflect on the planning (e.g., visualizations, statistical evaluations of the social forms used in a lesson/unit, and automatic feedback).
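The statistical evaluation of social forms listed above can be illustrated with a short sketch. This Python code is not PLATON's implementation: the class `Activity`, its fields, and the function `social_form_shares` are hypothetical, and the sketch assumes that each share is weighted by planned minutes rather than by the number of activities, ignoring nested activities.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Activity:
    title: str
    duration_min: int
    social_form: Optional[str] = None  # optional field; may be left empty


def social_form_shares(activities: List[Activity]) -> Dict[str, float]:
    """Return the percentage of planned time spent in each social form."""
    minutes: Dict[str, int] = defaultdict(int)
    for act in activities:
        # Activities without a social form are counted separately.
        minutes[act.social_form or "unspecified"] += act.duration_min
    total = sum(minutes.values())
    if total == 0:
        return {}
    return {form: 100 * m / total for form, m in minutes.items()}


lesson = [
    Activity("Introduction", 10, "plenary"),
    Activity("Experiment", 15, "group work"),
    Activity("Worksheet", 5, "individual work"),
    Activity("Discussion", 10, "plenary"),
]
print(social_form_shares(lesson))
# {'plenary': 50.0, 'group work': 37.5, 'individual work': 12.5}
```

Weighting by time means that one long group phase outweighs several short plenary phases; counting activities instead would be an equally plausible design choice.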

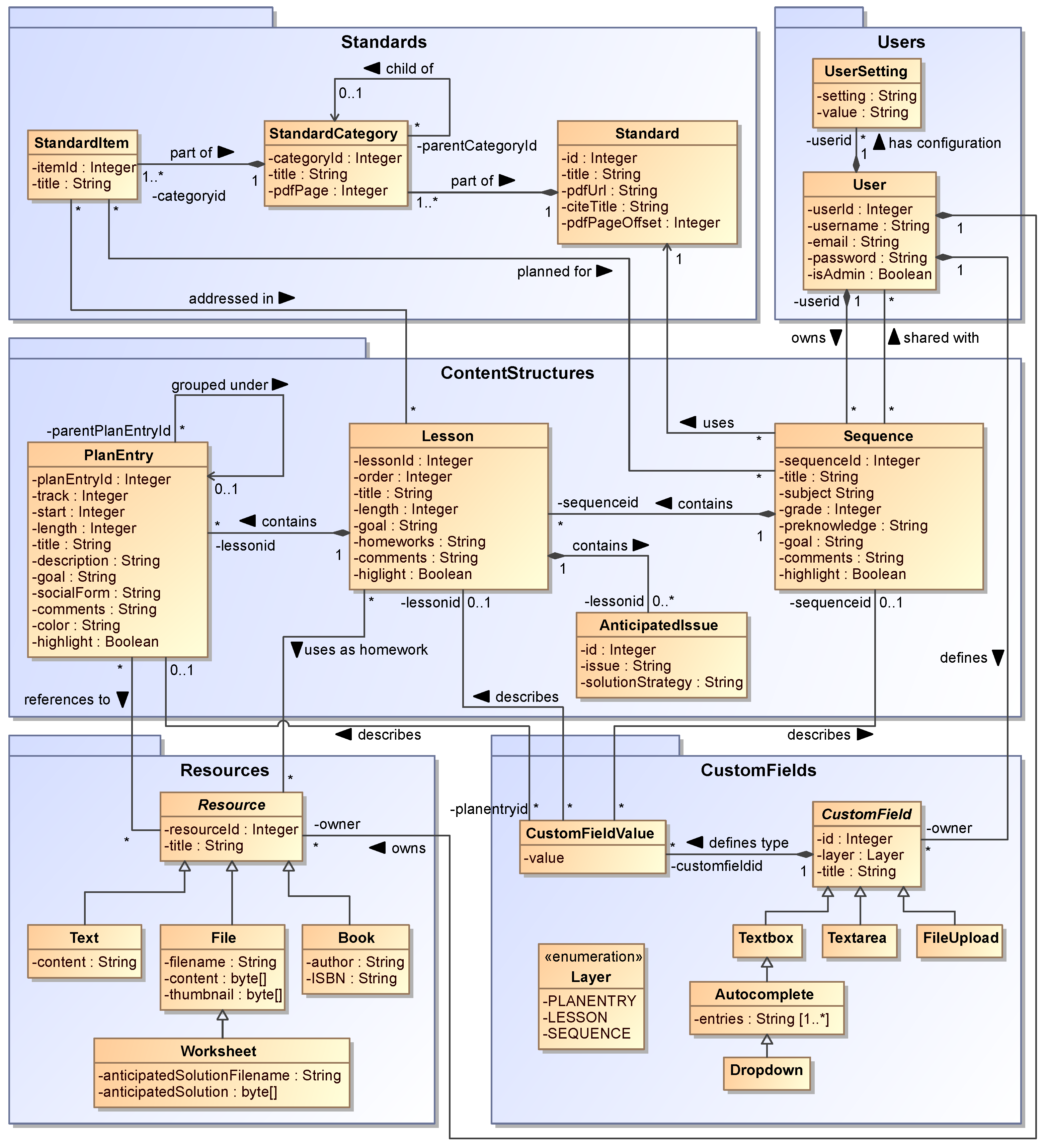

5.1. Data Model

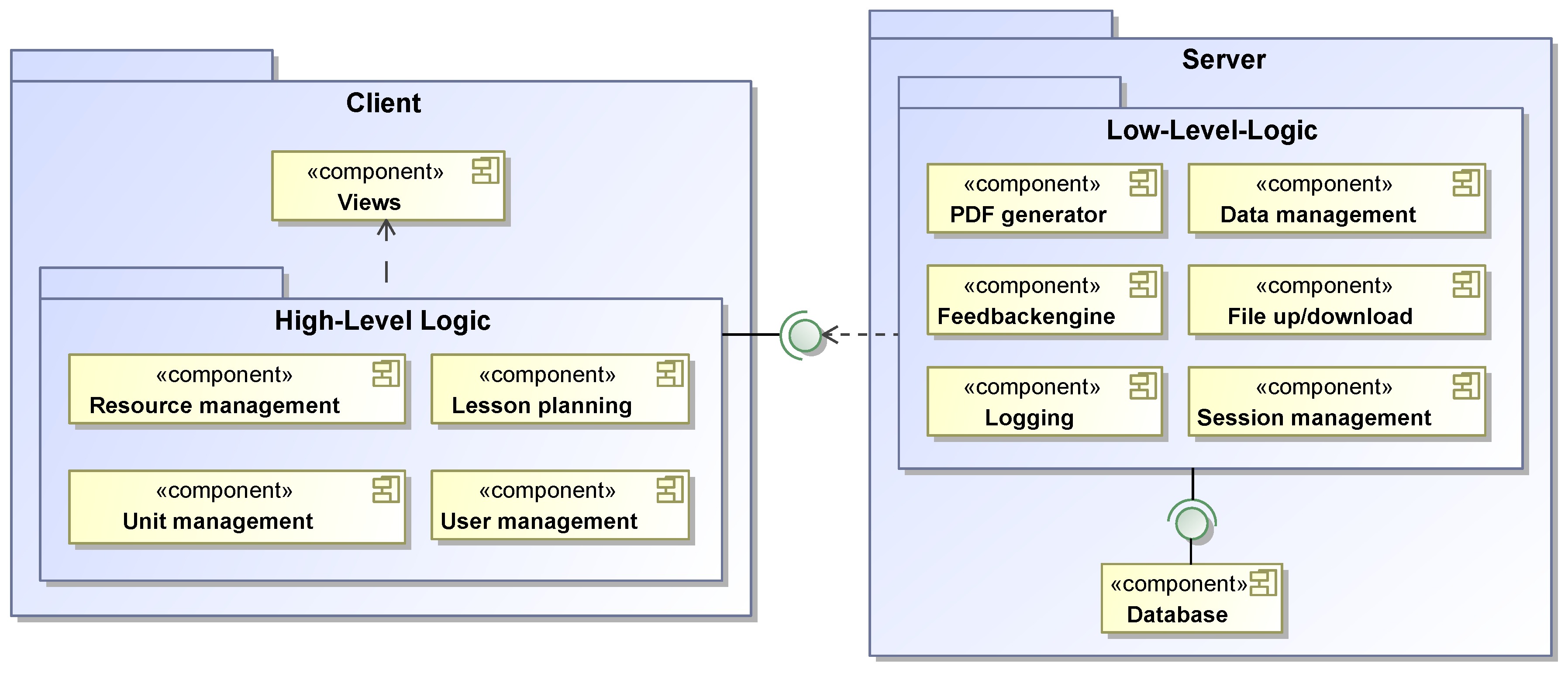
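One way a generic data model can remain independent of a specific planning model is to store content in customizable key-value fields whose keys are declared per unit. The Python sketch below illustrates this idea only; all class and field names (`FieldDefinition`, `children`, `undeclared_fields`, …) are hypothetical and do not reproduce the model shown in the figure.

```python
from dataclasses import dataclass, field
from typing import Dict, Iterator, List


@dataclass
class FieldDefinition:
    key: str    # neutral internal key, e.g. "goals"
    label: str  # label shown to the user; may follow a specific planning model


@dataclass
class Activity:
    title: str
    duration_min: int
    fields: Dict[str, str] = field(default_factory=dict)
    # Nested activities, e.g. parallel group tasks within one phase.
    children: List["Activity"] = field(default_factory=list)


@dataclass
class Lesson:
    topic: str
    duration_min: int
    fields: Dict[str, str] = field(default_factory=dict)
    activities: List[Activity] = field(default_factory=list)


@dataclass
class Unit:
    topic: str
    field_definitions: List[FieldDefinition] = field(default_factory=list)
    lessons: List[Lesson] = field(default_factory=list)

    def _all_activities(self, acts: List[Activity]) -> Iterator[Activity]:
        # Depth-first walk over activities and their nested children.
        for act in acts:
            yield act
            yield from self._all_activities(act.children)

    def undeclared_fields(self) -> List[str]:
        """Return field keys used in the plan but not declared for this unit."""
        declared = {d.key for d in self.field_definitions}
        used = set()
        for lesson in self.lessons:
            used.update(lesson.fields)
            for act in self._all_activities(lesson.activities):
                used.update(act.fields)
        return sorted(used - declared)


unit = Unit(
    "Electricity",
    field_definitions=[FieldDefinition("goals", "Learning goals")],
    lessons=[Lesson("Circuits", 45, {"goals": "Build a simple circuit"},
                    [Activity("Group task", 20, children=[
                        Activity("Group A", 20, {"social_form": "group work"})])])],
)
print(unit.undeclared_fields())  # ['social_form']
```

Because the set of fields is data rather than code, a different planning template only changes the declared `FieldDefinition` entries, not the classes.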

5.2. Architecture

5.2.1. Overall Architecture

5.2.2. Client Architecture

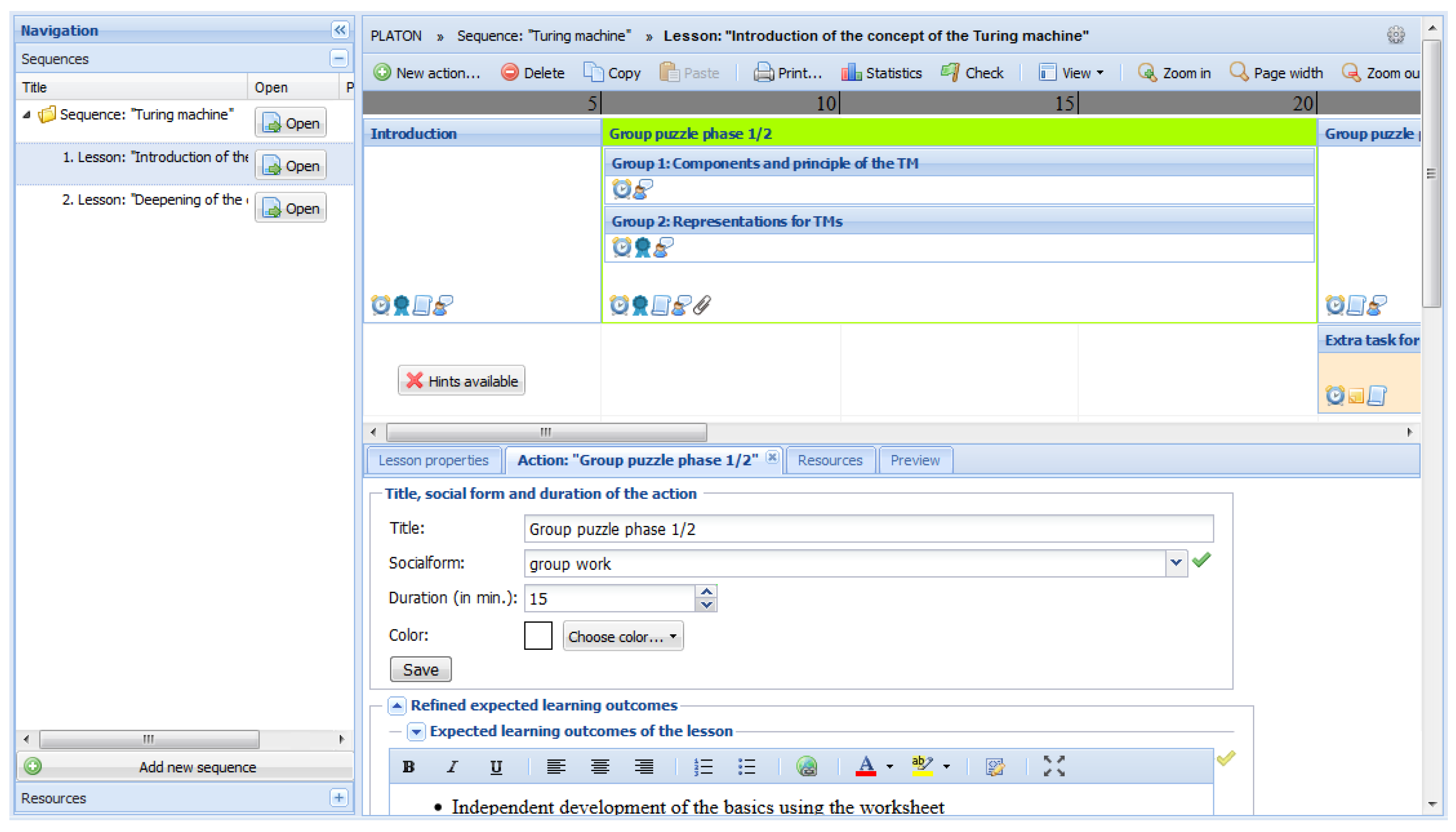

5.3. Implementation of the PLATON System

5.4. The Graphical User Interface

6. Evaluation of the PLATON Prototype

- How do the participants rate the graphical representation?

- Is the graphical representation understandable and meaningful?

- Is the graphical representation powerful enough to express all possible ideas/plans?

- Does the graphical representation facilitate reflection?

- Are there fundamental usability issues?

- How do the participants rate the practicability of the tool?

- Which features were used and which ones are of particular importance?

6.1. General Results

6.2. The Graphical Representation

6.3. Practicability

6.4. Which Features Were Used and Which Ones Are of Particular Importance?

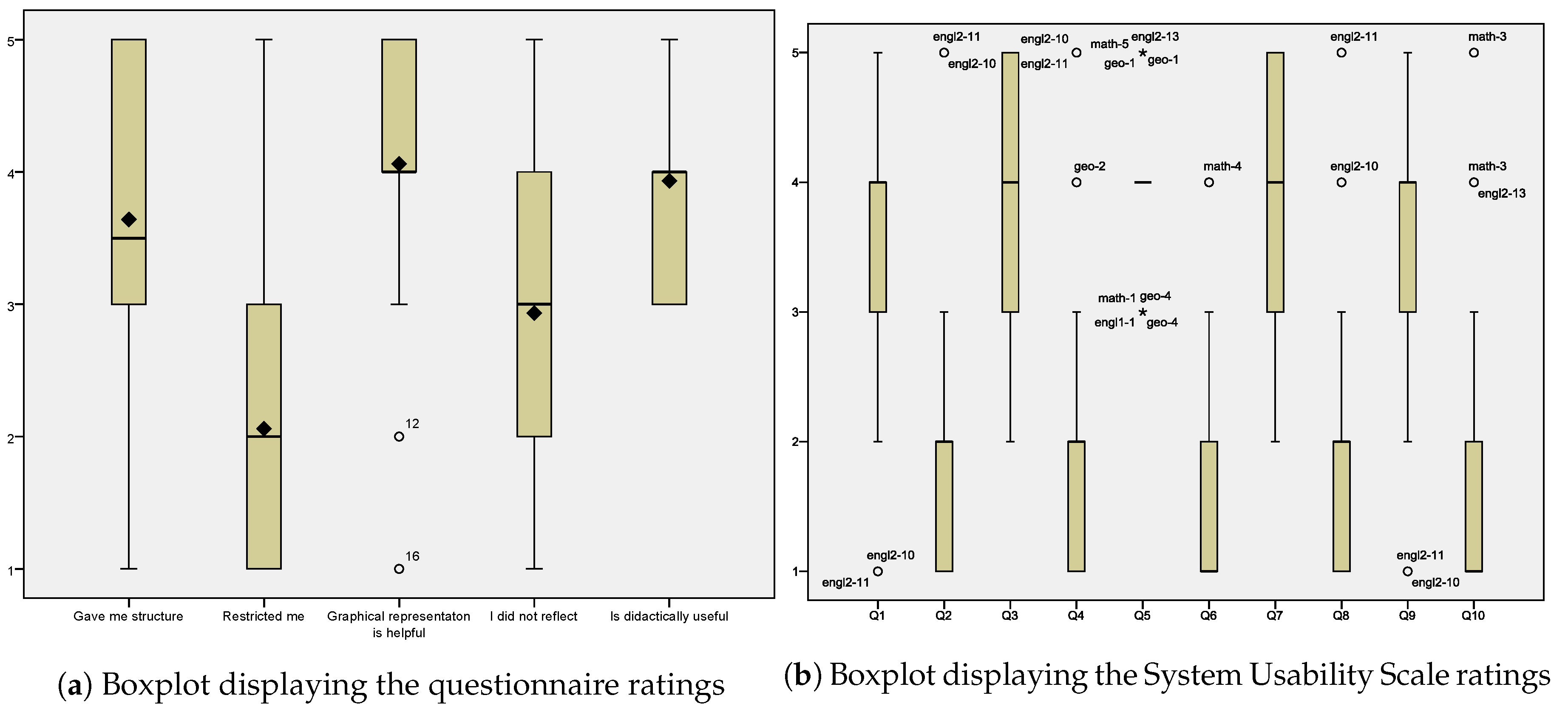

6.5. Quantitative Evaluation

6.5.1. Questionnaire

- The tool gave me structure in the planning process.

- The tool has restricted me in my planning process.

- The graphical notation for planning a lesson is helpful.

- I did not reflect on the lesson plan while planning.

- The given structure is didactically meaningful for lesson planning.

6.5.2. System Usability Scale
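The SUS consists of ten items answered on a five-point scale. In Brooke's original scoring scheme, odd-numbered (positively worded) items contribute the response minus one, even-numbered (negatively worded) items contribute five minus the response, and the sum is multiplied by 2.5, yielding a score between 0 and 100. The Python sketch below shows this standard scoring for one participant; the function name is illustrative, and the sketch does not show how scores were aggregated in this study.

```python
from typing import Sequence


def sus_score(responses: Sequence[int]) -> float:
    """Compute the System Usability Scale score (0-100) for one participant.

    `responses` holds the answers to items 1-10 in questionnaire order,
    each on a scale from 1 (strongly disagree) to 5 (strongly agree).
    """
    if len(responses) != 10:
        raise ValueError("SUS requires exactly ten item responses")
    if any(r not in (1, 2, 3, 4, 5) for r in responses):
        raise ValueError("each response must be an integer from 1 to 5")
    total = 0
    for item, r in enumerate(responses, start=1):
        # Odd items are positively worded, even items negatively worded.
        total += (r - 1) if item % 2 == 1 else (5 - r)
    return total * 2.5


print(sus_score([4, 2, 4, 2, 4, 2, 4, 2, 4, 2]))  # 75.0
```

Individual scores are usually averaged across participants; Bangor et al. propose adjective ratings to help interpret such averages.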

7. Discussion

8. Conclusions

Funding

Acknowledgments

Conflicts of Interest

Appendix A. Requirements

Appendix A.1. Functional Requirements

| Functional Requirement | No. | Criterion |
|---|---|---|
| Personal workspace (FR1) | FR1.1 | The system must provide a personal, protected workspace. |
| Adaptability and customizability of the system (FR2) | FR2.1 | The system must be customizable in terms of the input fields that can be used for planning activities, lessons, and units. |
| | FR2.2 | The system must allow users to format content in a familiar way, as in common WYSIWYG office tools (e.g., enumerations, bold/italic text, …). |
| Management of units (FR3) | FR3.1 | The system must allow users to create, delete, and duplicate units. |
| | FR3.2 | The system must provide users with input fields for typical aspects (e.g., topic, description of the learner group, and goals). |
| | FR3.3 | The system must allow users to create, delete, order, and duplicate the lessons of a unit. |
| Planning/editing of lessons (FR4) | FR4.1 | The system must provide users with input fields for typical aspects (e.g., topic, duration of the lesson, goals). |
| | FR4.2 | The system should provide users with access to superordinate details of the unit (such as goals) without the need to change the current view. |
| Planning teaching activities (FR5) | FR5.1 | The system must allow users to plan the sequence of teaching activities/phases of a lesson (including differentiations, nesting, parallel group tasks, and alternatives). |
| | FR5.2 | The system must provide input fields for describing typical elements of activities/phases (e.g., title, duration, description) and optional elements (e.g., social form). |
| | FR5.3 | The system should automatically update dependent values such as start and end times when the teaching sequence is modified. |
| | FR5.4 | The system must allow teachers to add backup time, i.e., to plan more activities than the available in-class time allows. |
| | FR5.5 | The system must allow users to move teaching activities into other lessons of the unit. |
| | FR5.6 | The system should allow users to color-code teaching activities. |
| Standards/Curriculum (FR6) | FR6.1 | The system should make all relevant standards available directly within the system. |
| | FR6.2 | The system should allow users to select standards and link them to units and lessons. |
| Resource management (FR7) | FR7.1 | The system must allow users to create, view, edit, and delete resources such as books, links, and arbitrary files. |
| | FR7.2 | The system must allow resources to be associated with (multiple) activities of a lesson. |
| Anticipated student solutions (FR8) | FR8.1 | The system must provide options for modeling resources created by students. It must be possible to describe these resources (e.g., as model solutions or to indicate a level of expectation). |
| Providing different views (FR9) | FR9.1 | The system should provide different specialized (over)views of the plan, such as all resources linked to a unit or a lesson, a timeline view of resource usage, and the standards used in the unit. |
| Statistical analyses (FR10) | FR10.1 | The system should provide various statistical evaluations, such as the percentage of each social form used in the planned lessons. |
| Automatic feedback (FR11) | FR11.1 | The system should contain an automated analysis that provides hints on possible errors and optimizations (e.g., missing/incomplete descriptions or an unrealistic schedule). |
| Notes and comments (FR12) | FR12.1 | The system must allow users to enter notes for units, lessons, and teaching activities. |
| | FR12.2 | The system should allow notes to be flagged so that they are highlighted. |
| Sharing of plans (FR13) | FR13.1 | The system must allow users to share their plans with other registered users so that these plans can be viewed in the same way as self-developed ones. |
| Printing (FR14) | FR14.1 | The system must allow the lesson plan to be saved as a PDF file or printed on paper. |
| | FR14.2 | The printout must be customizable so that users can influence which aspects are displayed (e.g., page orientation and the sections to be included in the printout). |
| Export (FR15) | FR15.1 | The system must allow exporting a unit or a single lesson, including all resources, in a standardized format such as a ZIP archive that can be used without the planning tool. |
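Requirements FR5.3 and FR5.4 suggest deriving start and end times from the activity sequence instead of storing them. The Python sketch below rests on two hypothetical assumptions: parallel branches (e.g., differentiated group tasks) run side by side, so the enclosing activity lasts as long as its longest branch; and any activity ending after the in-class time counts as backup time. Names such as `schedule` and `branches` are illustrative and not PLATON's API.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Activity:
    title: str
    duration_min: int = 0
    # Parallel branches; each branch is a sequence of nested activities.
    branches: List[List["Activity"]] = field(default_factory=list)


def total_duration(act: Activity) -> int:
    """Duration of an activity; with branches, the longest branch determines it."""
    if not act.branches:
        return act.duration_min
    return max(sum(total_duration(a) for a in branch) for branch in act.branches)


def schedule(activities: List[Activity],
             lesson_length_min: int) -> List[Tuple[str, int, int, bool]]:
    """Return (title, start, end, is_backup) for each top-level activity.

    Times are recomputed from scratch, so editing a duration or reordering
    activities automatically updates all later start and end times.
    """
    rows = []
    start = 0
    for act in activities:
        end = start + total_duration(act)
        rows.append((act.title, start, end, end > lesson_length_min))
        start = end
    return rows


plan = [
    Activity("Introduction", 5),
    Activity("Group phase", branches=[
        [Activity("Task A", 15)],
        [Activity("Task B1", 10), Activity("Task B2", 10)],
    ]),
    Activity("Discussion", 15),
    Activity("Extra exercise", 10),
]
for row in schedule(plan, lesson_length_min=45):
    print(row)
# ('Introduction', 0, 5, False)
# ('Group phase', 5, 25, False)
# ('Discussion', 25, 40, False)
# ('Extra exercise', 40, 50, True)
```

Flagging an activity as backup as soon as it ends after the lesson is one possible rule; a tool could instead flag only activities that start after the lesson has ended.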

Appendix A.2. Non-Functional Requirements

| Non-Functional Requirement | No. | Criterion |
|---|---|---|
| Usability (NFR1) | NFR1.1 | The system should be based on common user-interface concepts and be as self-explanatory as possible. In particular, for use in time-constrained studies, the system must ensure a short training period (maximum 15 min; self-describing; learning support). |
| | NFR1.2 | The system should ideally prevent errors by means of suitable metaphors or visual feedback or, failing that, make errors understandable so that they can be easily remedied. |
| Interoperability (NFR2) | NFR2.1 | The system must be platform-independent and usable without installation. |
| Performance & Scalability (NFR3) | NFR3.1 | The user interface must respond within a reasonable time (<1 s). |
| | NFR3.2 | The architecture must explicitly support scalability (vertical and/or horizontal). |
| Customizability/Extendability (NFR4) | NFR4.1 | The architecture of the system should be modular, with defined interfaces, so that extensions and adaptations are possible without significant effort. |
| Openness (NFR5) | NFR5.1 | It should be possible to develop, compile, and use the system entirely with open source software. |
| Neutral naming (NFR6) | NFR6.1 | When predefined names or labels are selected, neutral terms should be chosen in order to avoid association with specific planning models. |
| Security (NFR7) | NFR7.1 | The system must be designed and implemented in such a way that unauthorized access and (typical) attack vectors (e.g., SQL injection, session hijacking) are prevented. |
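A common defense against SQL injection (NFR7.1) is to pass user input as bound parameters instead of concatenating it into SQL text. The Python sketch below uses the standard-library `sqlite3` module purely as a self-contained stand-in for a production database server; the table `lesson` and the function `lessons_of` are hypothetical. Restricting every query to the requesting owner additionally mirrors the personal workspace of FR1.1.

```python
import sqlite3
from typing import List


def lessons_of(conn: sqlite3.Connection, owner: str, topic: str) -> List[str]:
    """Return the topics of the owner's lessons that match `topic` exactly."""
    # The ? placeholders hand the values to the driver separately from the SQL
    # text, so input like "x' OR '1'='1" is compared as a plain string.
    cur = conn.execute(
        "SELECT topic FROM lesson WHERE owner = ? AND topic = ?",
        (owner, topic),
    )
    return [row[0] for row in cur.fetchall()]


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE lesson (owner TEXT NOT NULL, topic TEXT NOT NULL)")
conn.executemany(
    "INSERT INTO lesson (owner, topic) VALUES (?, ?)",
    [("alice", "Fractions"), ("bob", "Photosynthesis")],
)
print(lessons_of(conn, "alice", "Fractions"))       # ['Fractions']
print(lessons_of(conn, "alice", "x' OR '1'='1"))    # []
print(lessons_of(conn, "alice", "Photosynthesis"))  # [] (belongs to bob)
```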

References

- Westerman, D.A. Expert and novice teacher decision making. J. Teach. Educ. 1991, 42, 292–305. [Google Scholar] [CrossRef]

- Wild, M. Designing and evaluating an educational performance support system. Br. J. Educ. Technol. 2000, 31, 5–20. [Google Scholar] [CrossRef]

- Wiske, M.S.; Sick, M.; Wirsig, S. New technologies to support teaching for understanding. Int. J. Educ. Res. 2001, 35, 483–501. [Google Scholar] [CrossRef]

- Mutton, T.; Hagger, H.; Burn, K. Learning to plan, planning to learn: The developing expertise of beginning teachers. Teach. Teach. 2011, 17, 399–416. [Google Scholar] [CrossRef]

- Livingston, C.; Borko, H. Expert-novice differences in teaching: A cognitive analysis and implications for teacher education. J. Teach. Educ. 1989, 40, 36–42. [Google Scholar] [CrossRef]

- John, P.D. Lesson Planning for Teachers, reprint ed.; Cassell Education: London, UK, 1995. [Google Scholar]

- Hattie, J. Lernen Sichtbar Machen. Visible Learning; revised German-language ed., 2nd corrected ed.; Schneider-Verl. Hohengehren: Baltmannsweiler, Germany, 2014. [Google Scholar]

- Cameron, L. LAMS: Pre-Service Teachers Update the Old Lesson Plan. In Proceedings of Society for Information Technology & Teacher Education International Conference 2008; McFerrin, K., Weber, R., Carlsen, R., Willis, D.A., Eds.; Association for the Advancement of Computing in Education (AACE): Las Vegas, NV, USA, 2008; pp. 2517–2524. [Google Scholar]

- Shneiderman, B. Direct Manipulation: A Step Beyond Programming Languages. IEEE Comput. 1983, 16, 57–69. [Google Scholar] [CrossRef]

- Seel, A. Von der Unterrichtsplanung zum konkreten Lehrerhandeln—Eine Untersuchung zum Zusammenhang von Planung und Durchführung von Unterricht bei Hauptschullehrerstudentinnen. Unterrichtswissenschaft 1997, 25, 257–273. [Google Scholar]

- Meyer, H. Leitfaden zur Unterrichtsvorbereitung, 7th ed.; Cornelsen: Berlin, Germany, 2014. [Google Scholar]

- Strickroth, S. Unterstützungsmöglichkeiten für die computerbasierte Planung von Unterricht. Ein graphischer, zeitbasierter Ansatz mit automatischem Feedback. Ph.D. Thesis, Humboldt-Universität zu Berlin, Berlin, Germany, 2016. [Google Scholar] [CrossRef]

- Tyler, R.W. Basic Principles of Curriculum and Instruction; University of Chicago Press: Chicago, IL, USA, 1949. [Google Scholar]

- Yinger, R.J. A Study of Teacher Planning. Elem. Sch. J. 1980, 80, 107–127. [Google Scholar] [CrossRef]

- Klafki, W. Didactic analysis as the core of preparation of instruction (Didaktische Analyse als Kern der Unterrichtsvorbereitung). J. Curric. Stud. 1995, 27, 13–30. [Google Scholar] [CrossRef]

- Bybee, R.W.; Taylor, J.A.; Gardner, A.; Van Scotter, P.; Powell, J.C.; Westbrook, A.; Landes, N. The BSCS 5E Instructional Model: Origins and Effectiveness; BSCS: Colorado Springs, CO, USA, 2006; Volume 5, pp. 88–98. [Google Scholar]

- Marshall, J.C.; Horton, B.; Smart, J. 4Ex2 Instructional Model: Uniting Three Learning Constructs to Improve Praxis in Science and Mathematics Classrooms. J. Sci. Teach. Educ. 2009, 20, 501–516. [Google Scholar] [CrossRef]

- Esslinger-Hinz, I.; Wigbers, M.; Giovannini, N.; Hannig, J.; Herbert, L.; Jäkel, L.; Klingmüller, C.; Lange, B.; Neubrech, N.; Schnepf-Rimsa, E. Der ausführliche Unterrichtsentwurf; Beltz: Weinheim und Basel, Germany, 2013. [Google Scholar]

- Saad, A.; Chung, P.W.H.; Dawson, C.W. Effectiveness of a case-based system in lesson planning. J. Comput. Assist. Learn. 2014, 30, 408–424. [Google Scholar] [CrossRef]

- Chung, L.; do Prado Leite, J.C.S. On Non-Functional Requirements in Software Engineering. In Conceptual Modeling: Foundations and Applications; Lecture Notes in Computer Science; Borgida, A.T., Chaudhri, V.K., Giorgini, P., Yu, E.S., Eds.; Springer: Berlin/Heidelberg, Germany, 2009; Volume 5600, pp. 363–379. [Google Scholar] [CrossRef]

- Glinz, M. On Non-Functional Requirements. In Proceedings of the 15th IEEE International Requirements Engineering Conference, Delhi, India, 15–19 October 2007; pp. 21–26. [Google Scholar] [CrossRef]

- Löhner, S.; van Joolingen, W.R.; Savelsbergh, E.R. The effect of external representation on constructing computer models of complex phenomena. Instr. Sci. 2003, 31, 395–418. [Google Scholar] [CrossRef]

- Ainsworth, S. DeFT: A conceptual framework for considering learning with multiple representations. Learn. Instr. 2006, 16, 183–198. [Google Scholar] [CrossRef]

- Meyer, H. Was Ist Guter Unterricht? Cornelsen Scriptor: Berlin, Germany, 2004. [Google Scholar]

- Helmke, A. Was wissen wir über guten Unterricht? Über die Notwendigkeit einer Rückbesinnung auf den Unterricht als dem “Kerngeschäft” der Schule (II. Folge). Pädagogik 2006, 58, 42–45. [Google Scholar]

- Zhou, Y.; Gong, C. Research on Application of Wiki-Based Collaborative Lesson-Preparing. In Proceedings of the 4th International Conference on Wireless Communications, Networking and Mobile Computing 2008 (WiCOM ’08), Dalian, China, 12–14 October 2008; pp. 1–5. [Google Scholar] [CrossRef]

- Ren, Y.; Gong, C. Empirical Study on Application of Wiki Based Collaborative Lesson-Preparing. In Proceedings of the 2011 IEEE International Conference on Information Technology, Computer Engineering and Management Sciences (ICM), Nanjing, Jiangsu, China, 24–25 September 2011; Volume 4, pp. 138–141. [Google Scholar] [CrossRef]

- Krug, S. Don’t Make Me Think, Revisited: A Common Sense Approach to Web Usability, 3rd ed.; New Riders: Berkeley, CA, USA, 2014. [Google Scholar]

- Vaughan, N.; Lawrence, K. Investigating the role of mobile devices in a pre-service teacher education program. In Proceedings of E-Learn: World Conference on E-Learning in Corporate, Government, Healthcare, and Higher Education 2012; Bastiaens, T., Marks, G., Eds.; Association for the Advancement of Computing in Education (AACE): Montréal, QC, Canada, 2012; pp. 822–830. [Google Scholar]

- Park, S.H.; Baek, E.O.; An, J.S. Usability Evaluation of an Educational Electronic Performance Support System (E-EPSS): Support for Teacher Enhancing Performance in Schools (STEPS); Annual Proceedings of Selected Research and Development and Practice Papers Presented at the National Convention of the Association for Educational Communications and Technology; Association for Educational Communications and Technology: Bloomington, IN, USA, 2001; pp. 560–570. [Google Scholar]

- Masterman, E.; Craft, B. Designing and evaluating representations to model pedagogy. Res. Learn. Technol. 2013, 21. [Google Scholar] [CrossRef]

- Furnas, G.W.; Landauer, T.K.; Gomez, L.M.; Dumais, S.T. The Vocabulary Problem in Human-system Communication. Commun. ACM 1987, 30, 964–971. [Google Scholar] [CrossRef]

- Teachnology Incorporated. Lesson Plan Maker. Available online: http://www.teach-nology.com/web_tools/lesson_plan/ (accessed on 8 October 2019).

- Britain, S. A Review of Learning Design: Concept, Specifications and Tools. A report for the JISC E-Learning Pedagogy Programme. Available online: https://staff.blog.ui.ac.id/harrybs/files/2008/10/learningdesigntoolsfinalreport.pdf (accessed on 8 October 2019).

- Griffiths, D.; Blat, J. The role of teachers in editing and authoring units of learning using IMS Learning Design. Adv. Technol. Learn. 2005, 2, 243–251. [Google Scholar] [CrossRef]

- Prieto, L.; Dimitriadis, Y.; Craft, B.; Derntl, M.; Émin, V.; Katsamani, M.; Laurillard, D.; Masterman, E.; Retalis, S.; Villasclaras, E. Learning design Rashomon II: Exploring one lesson through multiple tools. Res. Learn. Technol. 2013, 21. [Google Scholar] [CrossRef]

- Katsamani, M.; Retalis, S.; Boloudakis, M. Designing a Moodle course with the CADMOS learning design tool. Educ. Media Int. 2012, 49, 317–331. [Google Scholar] [CrossRef]

- Derntl, M.; Neumann, S.; Oberhuemer, P. Opportunities and Challenges of Formal Instructional Modeling for Web-Based Learning. In Advances in Web-Based Learning—International Conference on Web-based Learning (ICWL); Lecture Notes in Computer Science; Leung, H., Popescu, E., Cao, Y., Lau, R.W.H., Nejdl, W., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; Volume 7048, pp. 253–262. [Google Scholar] [CrossRef]

- Hernández-Leo, D.; Villasclaras-Fernández, E.D.; Asensio-Pérez, J.I.; Dimitriadis, Y.; Jorrín-Abellán, I.M.; Ruiz-Requies, I.; Rubia-Avi, B. COLLAGE: A collaborative Learning Design editor based on patterns. J. Educ. Technol. Soc. 2006, 9, 58–71. [Google Scholar]

- Villasclaras-Fernández, E.; Hernández-Leo, D.; Asensio-Pérez, J.I.; Dimitriadis, Y. Web Collage: An implementation of support for assessment design in CSCL macro-scripts. Comput. Educ. 2013, 67, 79–97. [Google Scholar] [CrossRef]

- Miao, Y.; Hoeksema, K.; Hoppe, H.U.; Harrer, A. CSCL Scripts: Modelling Features and Potential Use. In Proceedings of the 2005 Conference on Computer Support for Collaborative Learning: Learning 2005: The Next 10 Years! International Society of the Learning Sciences (CSCL ’05), Taipei, Taiwan, 30 May–4 June 2005; pp. 423–432. [Google Scholar]

- Prieto, L.P.; Tchounikine, P.; Asensio-Pérez, J.I.; Sobreira, P.; Dimitriadis, Y. Exploring teachers’ perceptions on different CSCL script editing tools. Comput. Educ. 2014, 78, 383–396. [Google Scholar] [CrossRef]

- Wild, M. Developing Performance Support Systems for Complex Tasks: Lessons from a Lesson Planning System. Ph.D. Thesis, Edith Cowan University, Faculty of Communication, Health and Science, Perth, Australia, 1998. [Google Scholar]

- Northrup, P.T.; Pilcher, J.K. STEPS: An EPSS Tool for Instructional Planning. In Proceedings of the Selected Research and Development Presentations at the National Convention of the Association for Educational Communications and Technology (AECT), St. Louis, MO, USA, 18–22 February 1998; pp. 309–314. [Google Scholar]

- Liu, T.C.; Juang, Y.R. IPAS—Teacher’s Knowledge Management Platform for Teachers Professional Development. In Proceedings of the International Conference on Engineering Education, Manchester, UK, 18–21 August 2002; pp. 18–22. [Google Scholar]

- Hansen, C.C. Technology as an Electronic Mentor: Scaffolding Preservice Teachers in Writing Effective Literacy Lesson Plans. J. Early Child. Teach. Educ. 2006, 27, 129–148. [Google Scholar] [CrossRef]

- Sloop, B.; Horton, B.; Marshall, J.; Higdon, R. Mathematical Inquiry: An Instructional Model and Web-Based Lesson-Planning Tool for Creating, Refining, and Sharing Inquiry-Based Lessons. MathMate 2014, 36, 28–36. [Google Scholar]

- Dalziel, J. Implementing Learning Design: The Learning Activity Management System (LAMS). In Proceedings of the Interact, Integrate, Impact: 20th Annual Conference of the Australasian Society for Computers in Learning in Tertiary Education, Adelaide, Australia, 7–10 December 2003; Crisp, G., Thiele, D., Scholten, I., Barker, S., Baron, J., Eds.; Australasian Society for Computers in Learning in Tertiary Education: Adelaide, Australia, 2003; pp. 593–596. [Google Scholar]

- Campbell, C.; Cameron, L. Using Learning Activity Management Systems (LAMS) with pre-service secondary teachers: An authentic task. In Proceedings of the 26th ASCILITE Auckland 2009, Auckland, New Zealand, 6–9 December 2009. [Google Scholar]

- Masterman, E.; Manton, M. Teachers’ perspectives on digital tools for pedagogic planning and design. Technol. Pedagog. Educ. 2011, 20, 227–246. [Google Scholar] [CrossRef]

- Laurillard, D.; Charlton, P.; Craft, B.; Dimakopoulos, D.; Ljubojevic, D.; Magoulas, G.; Masterman, E.; Pujadas, R.; Whitley, E.; Whittlestone, K. A constructionist learning environment for teachers to model learning designs. J. Comput. Assist. Learn. 2013, 29, 15–30. [Google Scholar] [CrossRef]

- Laurillard, D.; Kennedy, E.; Charlton, P.; Wild, J.; Dimakopoulos, D. Using technology to develop teachers as designers of TEL: Evaluating the learning designer. Br. J. Educ. Technol. 2018, 49, 1044–1058. [Google Scholar] [CrossRef]

- Agostinho, S. The use of a visual learning design representation to document and communicate teaching ideas. In Proceedings of the 23rd Annual Ascilite Conference: Who’s Learning? Whose Technology? Markauskaite, L., Goodyear, P., Reimann, P., Eds.; Sydney University Press: Sydney, Australia, 2006; pp. 3–7. [Google Scholar]

- Baylor, A.L.; Ryu, J. The Effects of Image and Animation in Enhancing Pedagogical Agent Persona. J. Educ. Comput. Res. 2003, 28, 373–394. [Google Scholar] [CrossRef]

- Zhang, J. The Nature of External Representations in Problem Solving. Cogn. Sci. 1997, 21, 179–217. [Google Scholar] [CrossRef]

- Fowler, M. Analysemuster: Wiederverwendbare Objektmodelle; Addison Wesley: Bonn, Germany, 1999. [Google Scholar]

- Gamma, E.; Helm, R.; Johnson, R.; Vlissides, J. Entwurfsmuster. Elemente wiederverwendbarer objektorientierter Software; Addison Wesley: München, Germany, 2011. [Google Scholar]

- Coad, P. Object-oriented Patterns. Commun. ACM 1992, 35, 152–159. [Google Scholar] [CrossRef]

- Fowler, M. Patterns of Enterprise Application Architecture; The Addison-Wesley Signature Series; Addison Wesley: Boston, MA, USA, 2003. [Google Scholar]

- Fowler, M. Passive View. Available online: https://www.martinfowler.com/eaaDev/PassiveScreen.html (accessed on 8 October 2019).

- Fraternali, P.; Rossi, G.; Sánchez-Figueroa, F. Rich Internet Applications. IEEE Internet Comput. 2010, 14, 9–12. [Google Scholar] [CrossRef]

- GWT Project. Available online: http://www.gwtproject.org/ (accessed on 8 October 2019).

- Idera Incorporated. Sencha GXT. Available online: https://www.sencha.com/products/gxt/ (accessed on 8 October 2019).

- Mordani, R. Java™ Servlet Specification Version 3.0. 2009. Available online: https://www.jcp.org/en/jsr/detail?id=315 (accessed on 8 October 2019).

- Apache Foundation. Apache Tomcat. Available online: https://tomcat.apache.org/ (accessed on 8 October 2019).

- PhantomJS—Scriptable Headless Browser. Available online: https://phantomjs.org/ (accessed on 8 October 2019).

- MariaDB Foundation. MariaDB.org—Supporting Continuity and Open Collaboration. Available online: https://mariadb.org/ (accessed on 8 October 2019).

- Free Software Foundation. GNU Affero General Public License, Version 3 (AGPL-3.0). Available online: https://opensource.org/licenses/AGPL-3.0 (accessed on 8 October 2019).

- Strickroth, S. PLATON—A Time-Based, Graphical Lesson Planning Tool. Available online: https://platon.strickroth.net (accessed on 8 October 2019).

- Strickroth, S.; Pinkwart, N. Softwaresupport für die graphische, zeitbasierte Planung von Unterrichtseinheiten. In DeLFI 2014—Die 12. e-Learning Fachtagung Informatik; Trahasch, S., Plötzner, R., Schneider, G., Sassiat, D., Gayer, C., Wöhrle, N., Eds.; Gesellschaft für Informatik: Bonn, Germany, 2014; pp. 314–319. [Google Scholar]

- Strickroth, S.; Pinkwart, N. Planung von Schulunterricht: Automatisches Feedback zur Reflexionsanregung über eigene Unterrichtsentwürfe. In Tagungsband der 15. e-Learning Fachtagung Informatik (DeLFI); GI Lecture Notes in Informatics; Köllen Druck+Verlag GmbH: Bonn, Germany, 2017. [Google Scholar]

- Terhart, E. Teacher Induction in Germany: Traditions and Perspectives. In Professional Inductions of Teachers in Europe and Elsewhere; Zuljan, M.V., Vogrinc, J., Eds.; Faculty of Education, University of Ljubljana: Ljubljana, Slovenia, 2007; pp. 154–166. [Google Scholar]

- Lindgaard, G.; Chattratichart, J. Usability Testing: What Have We Overlooked? In Proceedings of the SIGCHI Conference on Human Factors in Computing Systems (CHI ’07), San Jose, CA, USA, 28 April–4 May 2007; ACM: New York, NY, USA, 2007; pp. 1415–1424. [Google Scholar] [CrossRef]

- Dillon, A. The evaluation of software usability. In Encyclopedia of Human Factors and Ergonomics; Karwowski, W., Ed.; Taylor & Francis: London, UK, 2001; Volume II, pp. 1110–1112. [Google Scholar]

- Brooke, J. SUS—A quick and dirty usability scale. In Usability Evaluation in Industry; Jordan, P.W., Thomas, B., Weerdmeester, B.A., McClelland, I.L., Eds.; Taylor & Francis Ltd.: London, UK, 1996; pp. 189–194. [Google Scholar]

- Finstad, K. The system usability scale and non-native English speakers. J. Usability Stud. 2006, 1, 185–188. [Google Scholar]

- Lohmann, K. System Usability Scale (SUS)—An Improved German Translation of the Questionnaire. Available online: https://web.archive.org/web/20160330062546/http://minds.coremedia.com/2013/09/18/sus-scale-an-improved-german-translation-questionnaire/ (accessed on 8 October 2019).

- Bangor, A.; Kortum, P.; Miller, J. Determining what individual SUS scores mean: Adding an adjective rating scale. J. Usability Stud. 2009, 4, 114–123. [Google Scholar]

- Gong, C.; Zhou, Y. The Piloting Researches on Collaborative Lesson-preparing based on Eduwiki Platform. In Proceedings of the IEEE International Conference on Computer Science and Software Engineering, Wuhan, China, 12–14 December 2008; Volume 5, pp. 113–116. [Google Scholar] [CrossRef]

- Mühlhausen, U. Über Unterrichtsqualität ins Gespräch kommen: Szenarien für eine Virtuelle Hospitation mit multimedialen Unterrichtsdokumenten und Eigenvideos; Schneider-Verl. Hohengehren: Baltmannsweiler, Germany, 2011. [Google Scholar]

- Rupp, C. Requirements-Engineering und -Management; Hanser: München, Germany, 2014. [Google Scholar]

| No. | Functional Requirement | Short Motivation |
|---|---|---|
| FR1 | Personal workspace | Lesson planning is a process that is (usually) undertaken by one teacher for a specific learner group. As a draft may include personal information about specific students, it should not be publicly available (cf. NFR7). |
| FR2 | Adaptability and customizability of the system | A lesson plan can or should include different aspects, depending on the underlying model or the specifications of the mentor (cf. Section 2). Moreover, many teachers have a clear opinion on what their drafts and plans should look like. Therefore, a planning tool needs to be customizable in order to fulfill these different requirements. |
| FR3 | Management of units | In practice, classes are typically not planned and conducted as isolated lessons but in units consisting of multiple connected lessons. Usually, the lessons of a unit are not all planned at once but iteratively. Especially for more experienced teachers, reusing existing plans plays an important role. |
| FR4 | Planning/editing of lessons | After the design of the unit, the next step is to plan the associated lessons in more detail. Here, the goals of a lesson are determined before the detailed sequence of teaching activities is designed (cf. FR5). |
| FR5 | Planning teaching activities | A lesson is usually structured into several distinct teaching activities or phases. These, in turn, need to be described, e.g., in terms of their title, duration, or social form. Oftentimes, teaching activities are sequenced linearly; however, for internal differentiation and group tasks, they can also occur in parallel (or might be modeled as one activity with two nested group tasks). As teachers continually reflect on, revise, and complement the lesson plan, adjustment procedures need to be intuitive. |
| FR6 | Standards/Curriculum | Teachers have to comply with curricular standards and often need to justify their planning, e.g., by citing those standards. |
| FR7 | Resource management | Resources such as textbooks, worksheets, presentations, or drafts for blackboard drawings are elementary parts of lessons and therefore need to be taken into account during planning. They are closely linked to the context, such as the learner group, the goals, and the current teaching activity. In addition, materials or blackboard drawings may also be relevant in multiple distinct teaching activities or in subsequent lessons and should therefore also be referenceable there. This also helps teachers reflect on which resources are needed at a certain time and on how to manage them (in class). |
| FR8 | Anticipated student solutions | Not only do teachers develop and share resources; learners are also often encouraged to write notes and texts, draw pictures, create posters, or edit worksheets. This might happen directly in class or in the form of homework assignments. These types of resources (student solutions) are often used as a basis for subsequent lessons (e.g., discussions, presentations, or comparisons) and should therefore also be anticipated during planning. |
| FR9 | Providing different views | To promote reflection on the plans, it is helpful to highlight different aspects and provide dedicated overviews with different levels of abstraction and specialized representations (cf. [22,23]). |
| FR10 | Statistical analyses | Diversity of methods is often seen as a characteristic of high-quality teaching [24,25]. To uncover possible imbalances, e.g., in social forms, it is helpful to provide a statistical analysis. |
| FR11 | Automatic feedback | Mentors are not always available to give timely feedback. Algorithmically generated feedback can fill this gap by providing hints at any time and, thus, stimulate reflection. |
| FR12 | Notes and comments | The planning process is often carried out iteratively and thereby refined several times. Therefore, certain aspects might remain “open” at an early stage and need to be elaborated later. Comments, however, are not only important in the planning phase but can also be used to reflect on the planning and to document experiences after the lesson has been taught. |
| FR13 | Sharing of plans | Sharing allows a mentor to view the most recent version of the plan. Many teachers find exchanging their designs helpful in order to receive feedback or as a source of inspiration for their own plans (cf. [26,27]). |
| FR14 | Printing | Teachers are often asked to submit their final lesson plan on paper to a supervisor before teaching the lesson. In addition, the printed plan may serve as orientation during the lesson. |
| FR15 | Export | On the one hand, more and more classrooms are equipped with computers (for teachers); therefore, exporting a complete lesson plan that can be used directly in class would be helpful. On the other hand, personal backups need to be possible. |
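Rule-based checks are one way to generate the automatic feedback motivated in FR11. The following Python sketch implements three illustrative rules in the spirit of the examples in FR11.1 (a missing description, a non-positive duration, and a lesson in which less time is planned than is available). The rules, messages, and names are hypothetical and do not reproduce PLATON's feedback component.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Activity:
    title: str
    duration_min: int
    description: str = ""


def feedback(activities: List[Activity], lesson_length_min: int) -> List[str]:
    """Return human-readable hints; an empty list means no rule fired."""
    hints = []
    for act in activities:
        if not act.description.strip():
            hints.append(f"'{act.title}': the description is missing")
        if act.duration_min <= 0:
            hints.append(f"'{act.title}': the duration should be positive")
    planned = sum(act.duration_min for act in activities)
    # Planning more than the lesson length is allowed (backup time, FR5.4),
    # but planning less leaves part of the lesson unaccounted for.
    if planned < lesson_length_min:
        hints.append(f"only {planned} of {lesson_length_min} minutes are planned")
    return hints


plan = [
    Activity("Introduction", 5, "Teacher presents the guiding question"),
    Activity("Experiment", 20),
    Activity("Wrap-up", 0, "Summary on the blackboard"),
]
for hint in feedback(plan, lesson_length_min=45):
    print(hint)
# 'Experiment': the description is missing
# 'Wrap-up': the duration should be positive
# only 25 of 45 minutes are planned
```

Keeping each rule independent makes it easy to add further checks (e.g., on social forms) without changing the existing ones.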

| No. | Non-Functional Requirement | Short Motivation |
|---|---|---|
| NFR1 | Usability | The user interface (concept, complexity, appearance, …) and its behavior (conformity with user expectations, controllability, fault tolerance, …) have a decisive influence on how a system is perceived and used [28]. This is particularly important because the system is intended for users who are not tech-savvy. |
| NFR2 | Interoperability | The software must be usable on various operating systems and platforms, including mobile devices, which are often used for quick lookups and for recalling the plan (cf. [29]). In addition, the system must be usable without administrator permissions (e.g., in schools). |
| NFR3 | Performance & Scalability | For practical use, especially on mobile devices, the initial loading and start-up time as well as the response times, particularly for frequently used functions, should be as low as possible. Scalability is also important for (open) field studies or broad productive use, where the system has to remain usable as the number of users grows (e.g., by adding resources). |
| NFR4 | Customizability/Extendability | Research thrives on trying out and evaluating new, innovative ideas. Rather than designing a completely new system for each approach, it is easier to adapt an existing one. |
| NFR5 | Openness | To pass the system on to other scientists and to make it more widely applicable in practice, it must not depend on any tools, compilers or libraries that require license fees. |
| NFR6 | Neutral naming | As described in Section 2, there are various planning templates/frameworks. Almost every one of these has its own terminology, theories or concepts. Special terms should be avoided, as they might imply a specific concept (cf. [18]). This is not merely a theoretical problem and has already been discussed for planning systems in [30,31]. However, alternative terms should be selected with great care so as not to create confusion or other hurdles (see “The Vocabulary Problem”, cf. [32]). Even if the system uses neutral terms, it should also be usable with a specific didactic approach and its terminology (see FR2, adaptability and customizability of the system). |
| NFR7 | Security | Lesson plans often include personal and private data, which need to be protected. This applies in particular if a (research) prototype is offered or used productively on the internet. |

| Requirements | LPS | STEPS | IPAS | TS | EW | IIM | SLP | LAMS | Ph. | LDSE | LDw |
|---|---|---|---|---|---|---|---|---|---|---|---|
| FR1: Pers. workspace | (+) | + | + | + | + | + | + | + | + | (+) | + |
| FR2: Customizability | − | − | − | − | (−) | (+) | − | (+) | + | − | − |
| FR3: Sequences | − | (+) | (+) | (+) | − | − | − | − | (+) | (+) | − |
| FR4: Lessons | (+) | (+) | (+) | (+) | (−) | (+) | (+) | (+) | (+) | (+) | (+) |
| FR5: Activity planning | (+) | ? | (+) | (+) | (+) | (+) | (+) | (+) | (+) | (+) | (+) |
| FR6: Standards | − | (+) | (+) | (+) | − | (+) | (+) | − | − | − | − |
| FR7: Resource mgmt. | − | − | (+) | − | (+) | (+) | − | (+) | − | (+) | (+) |
| FR8: Anticip. solut. | − | − | − | − | − | − | − | − | − | − | − |
| FR9: Views | − | − | − | (+) | − | − | − | − | − | (+) | (+) |
| FR10: Statistical eval. | − | − | − | − | − | − | − | − | − | (+) | (+) |
| FR11: Automatic FB | − | − | − | − | − | − | − | − | − | − | − |
| FR12: Comments | (+) | ? | (+) | − | (+) | − | − | − | − | − | + |
| FR13: Sharing | − | − | + | ? | (+) | + | + | + | + | − | + |
| FR14: Printing | (+) | (+) | ? | ? | (+) | (+) | (+) | − | (+) | − | (+) |
| FR15: Export | ? | − | ? | ? | ? | − | ? | (−) | (−) | (−) | (−) |

| Subject | Computer Science | Computer Science | Math | Geography | English |
|---|---|---|---|---|---|
| Experience | Bachelor | Post-University | Master | Master | Bachelor/Master |
| No. of participants | 10 | 18 | 7 | 11 | 15 |
| Design | lab | field | lab | lab | field |
| Duration | 120 min. | 4–5 weeks | 210 min. | 150 min. | 2 weeks |

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Strickroth, S. PLATON: Developing a Graphical Lesson Planning System for Prospective Teachers. Educ. Sci. 2019, 9, 254. https://doi.org/10.3390/educsci9040254
