1. Introduction

Owing to advances in digital technologies, a large amount of data, ranging from students’ online engagement to grades, is available for both face-to-face and online courses in Australian universities; these course analytics data can be observed and analysed in order to improve the quality of teaching and learning environments. There is global pressure to reform engineering curricula and teaching and learning environments so that students achieve the intended graduate capabilities [1,2]. The well-defined learning objectives of a course are usually assessed using multiple grading systems, as a single grading system may not be able to measure students’ understanding of the course objectives [3]. Cavenett [1] criticised the traditional grading systems of engineering courses, noting that they focus heavily on assessing hard technical knowledge and only narrowly on soft skills such as professionalism, teamwork and communication. Sustainable development concepts also need to be included in the curriculum and assessment tasks to prepare engineering graduates for the twenty-first century [4]. According to Jikaran-Doe and Doe [5], the use of appropriate technology in the teaching environment may influence the learning outcomes of a course. Chowdhury [6] noted that modern teaching methods include contemporary practices such as project-based learning, problem-based learning, work-integrated learning and integrated learning approaches.

Modern teaching and learning environments are enhanced with technology-rich approaches. These combine pedagogical, technological and social elements that support flexible learning styles and open, accessible resources throughout the learning process [7]. According to Han and Ellis [8], face-to-face and online discussions are an integral part of the teaching and learning environment, as discussions give learners more time to critically read, review, reflect on, participate in and engage with the topics. Course analytics, sometimes known as learning analytics, can be defined as big data in a digital learning setting [9]. It involves the collection, analysis and measurement of course dashboard data in order to understand and optimize the learning and teaching environment of a course or program [10]. It also aims to interpret and visualize data to improve learning [11], and it provides an automatic and effective system for tracking students’ engagement [12]. Campbell et al. [13] defined course analytics as “analysing institutional data captured by a learning management system”. It has also been described as the analysis of qualitative data gathered from learning behaviour [14]. Course/learning analytics is also referred to as academic analytics; according to Maseleno et al. [15], academic analytics deals with improving the teaching and learning process, resource optimization, work balance and the measurement of institutional key performance indicators through the use of learner, academic and institutional data. It also helps institutions publicly demonstrate accountability for students’ success. However, course analytics focuses more specifically on the learning process and empowers learners to recognize “the wealth of data related to learning” [16].

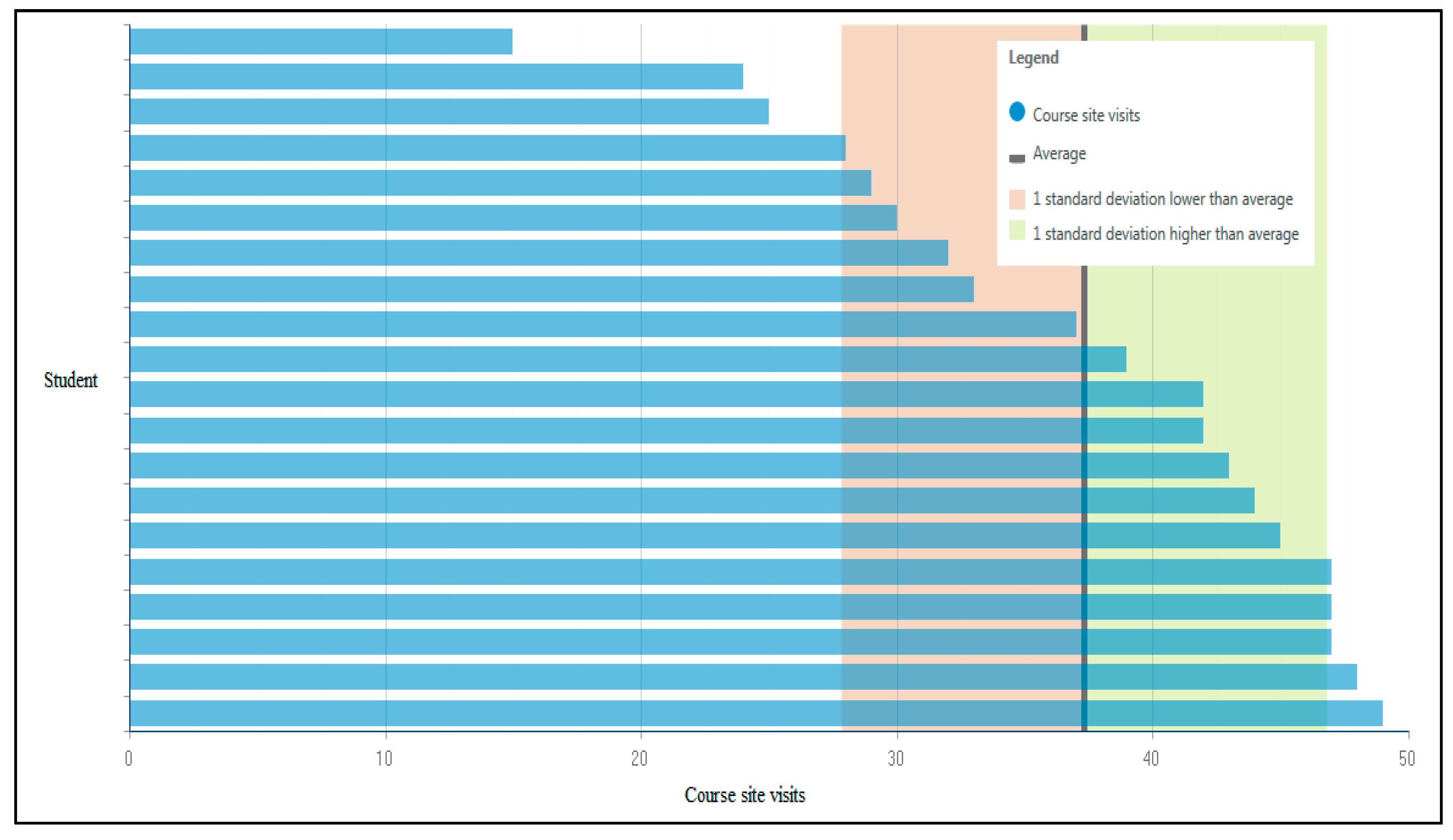

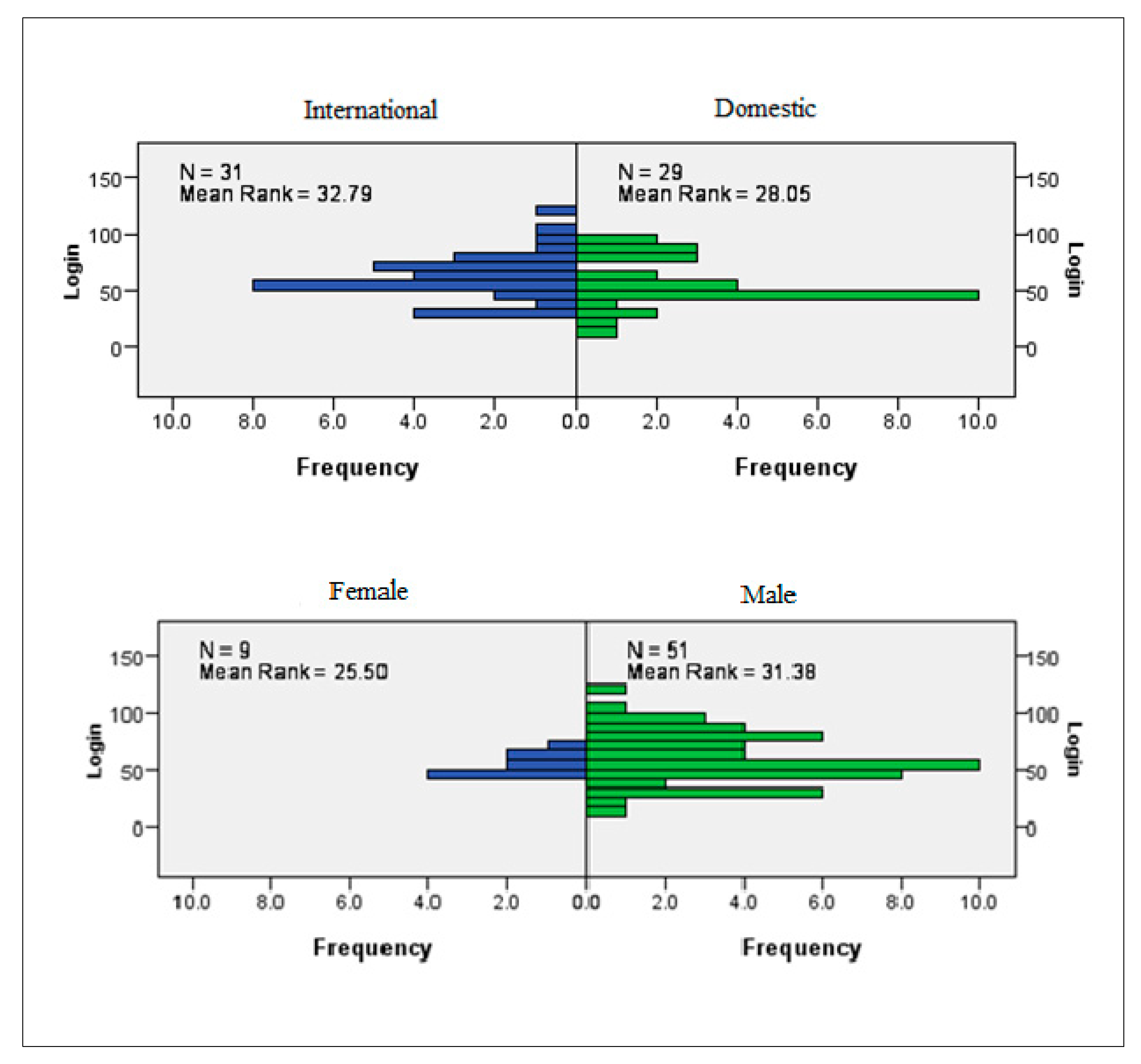

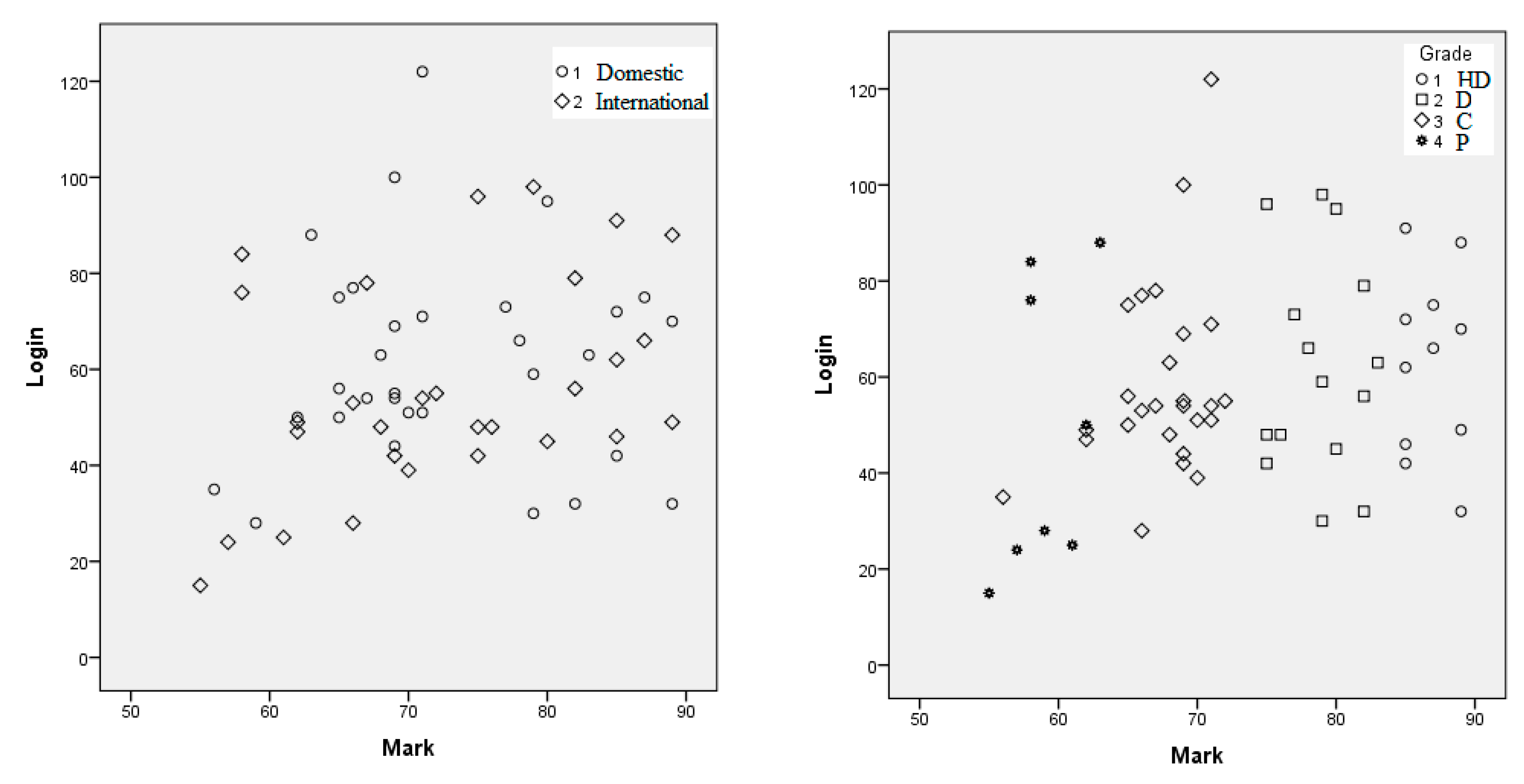

Figure 1 shows a typical visualization of students’ learn-online engagement data for a course.

Hwang et al. [17] argued that the characteristics of a course and students’ learning performance can be understood through a conceptual framework of analytics data. According to Lu et al. [18], students’ academic performance can be predicted from learn-online engagement data as early as one-third of the way through a semester. Maseleno et al. [15] demonstrated that course analytics can be utilised to improve personalized learning. Course analytics can also prompt teachers to adjust the mode of instructional delivery [5]. A survey of nearly 50,000 students enrolled in Open University of Australia online courses, conducted by Tucker et al. [19], indicated that students were very satisfied with their online interactions with educators and fellow students.

Course analytics data can also be used to identify students at risk and groups likely to drop out [20]. Hachey et al. [21] collected course analytics data for 962 students and observed that students with limited experience of learn-online engagement obtained low grades and had low retention rates. Lu et al. [22] conducted a 10-week experiment at a Taiwanese university to evaluate the effectiveness of course analytics in learning activities and observed that using analytics data improved students’ learning outcomes and engagement. Effective use of course analytics data requires interventions by instructors, and intervention strategies have already been developed in [23] and by Leeuwen et al. [24] and Syah et al. [25]. Optimum use of course analytics data requires academics to upgrade their ICT and pedagogical skills simultaneously in a safe, powerful and cost-effective manner, and higher education institutions should provide academics with adequate support, professional development training and suitable environments [26].

Using course analytics data is challenging, as all data are not meaningful for teaching, learning and educational research purposes [

27]. Saqr et al. [

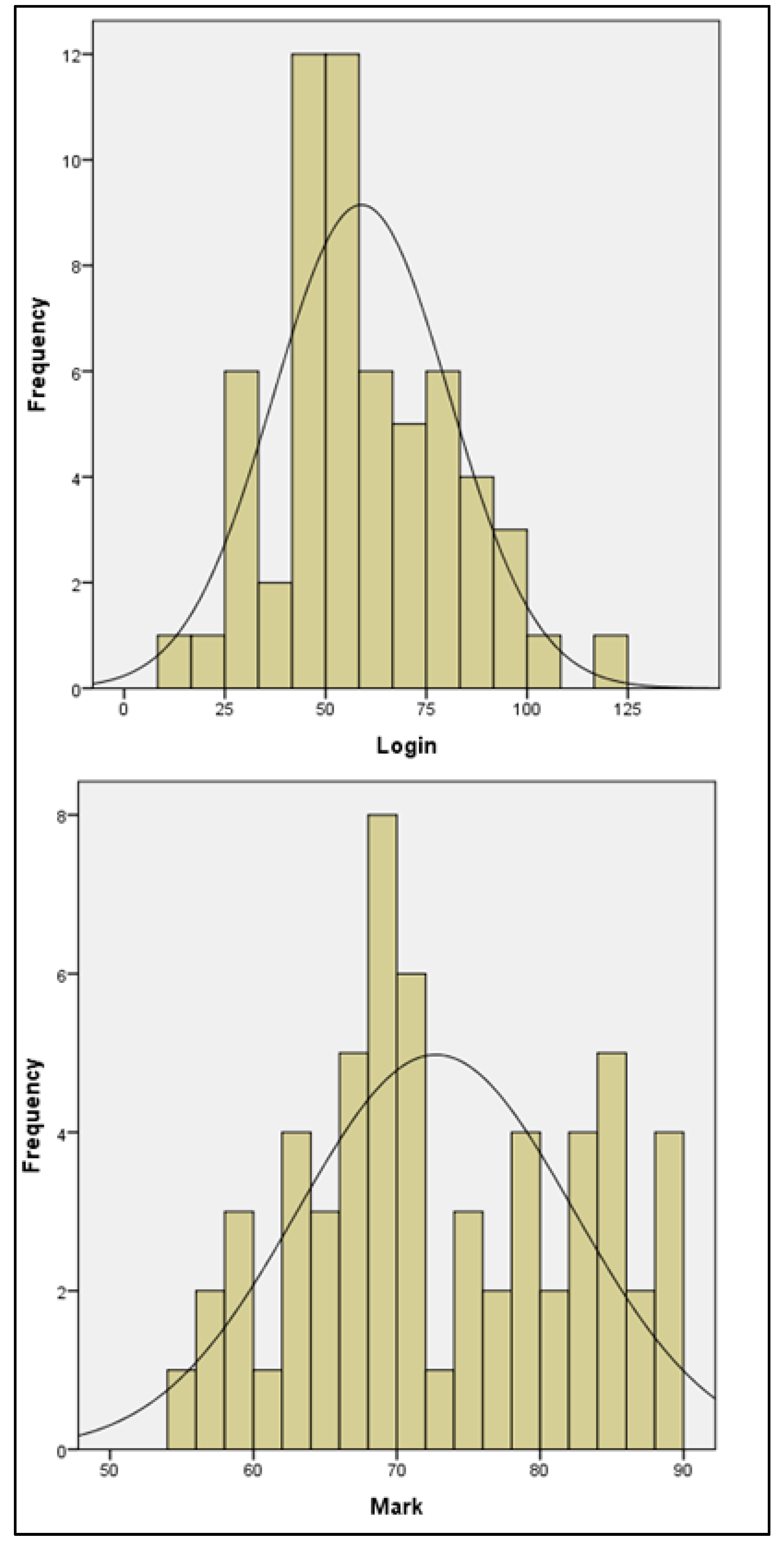

12] observed moderate correlation (r = 0.47; n = 131) between frequency of logins and final grades for medical students (n = 131). They also observed weak relationships (r = 0.25; n = 131) between views/hits and final grade for the same study. Beer at al. [

28] investigated learning analytics data for 80,000 students and revealed that correlation of students’ engagement with final marks were significantly variable. Moodle provides basic course analytics data for a particular course. Pradas et al. [

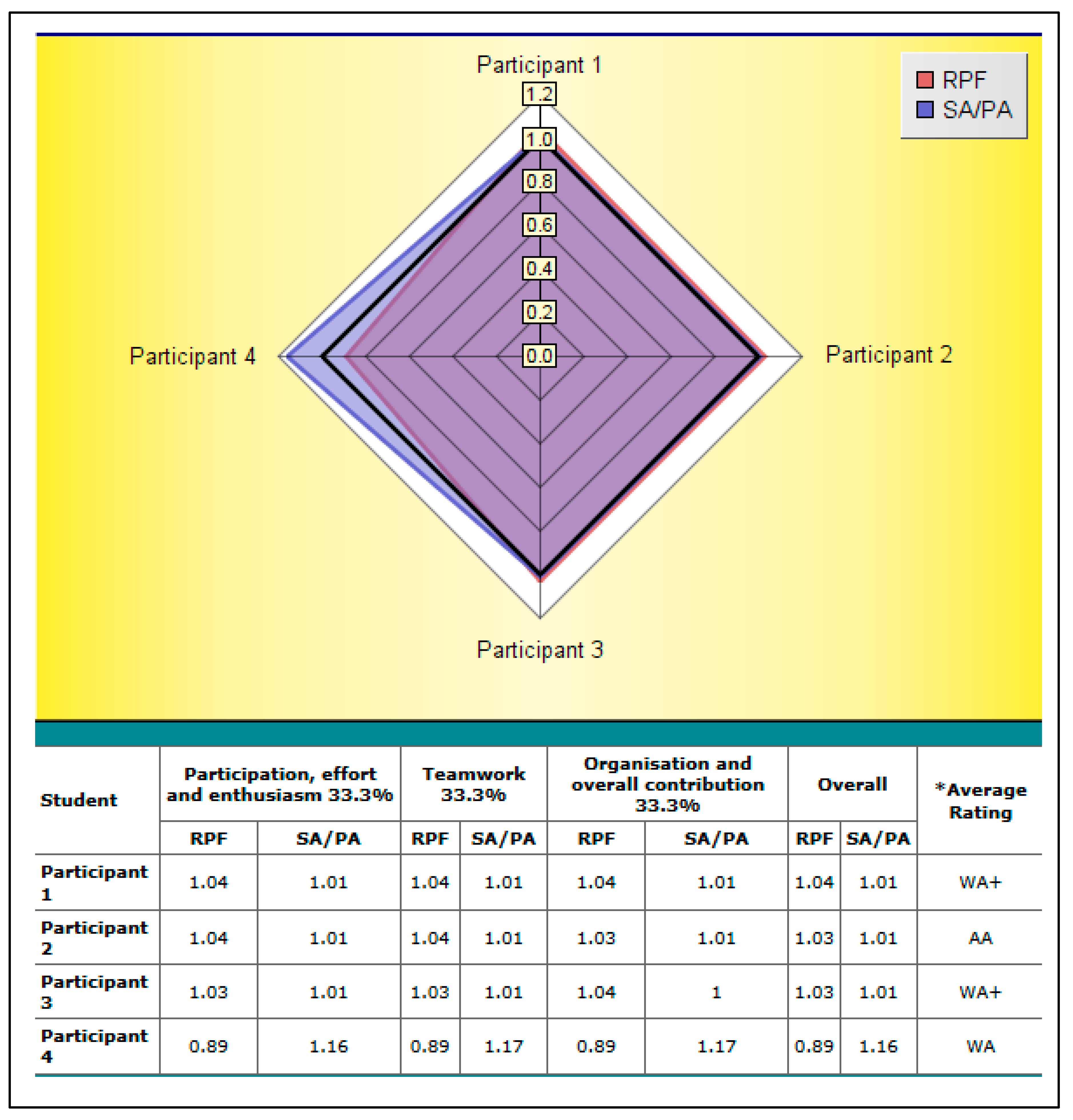

29] investigated the accountability of Moodle generated course analytics data to predict teamwork and commitment levels in online learning contexts. This study was conducted in a Master’s program in Spain. The results from the study showed no relationship between learn-online engagement and teamwork and commitment acquisition. Conjin et al. [

30] investigated the prediction of students’ performances using Moodle data for 17 blended courses and observed that the probability of prediction strongly vary across courses. They also observed that Moodle data provide little value for early intervention. Zacharis [

31] observed 52% variation on prediction of final grade using Moodle data.
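The correlation and explained-variance figures quoted above can be related with a few standard formulas. The following is a minimal Python sketch, not the method of any cited study. It computes Pearson's r, the usual t statistic for testing r against zero on n − 2 degrees of freedom, and the coefficient of determination R² of a one-predictor least-squares fit. The function names and the five-point "logins vs. grade" dataset are hypothetical illustrations, not data from the cited studies or from this research. For Saqr et al.'s n = 131, r = 0.47 gives t ≈ 6.05 and r = 0.25 gives t ≈ 2.93. Both exceed the two-sided 5% critical value of about 1.98 for 129 degrees of freedom. However, r² ≈ 0.22 and ≈ 0.06 mean that logins and views share only about 22% and 6% of the variance in final grades, respectively. The 52% figure reported by Zacharis is read here, as an assumption, as an R² value.

```python
import math


def pearson_r(x, y):
    """Pearson correlation coefficient between two equal-length samples."""
    n = len(x)
    if n != len(y) or n < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, n):
    """t statistic for H0: rho = 0; compare against a t distribution with n - 2 df."""
    return r * math.sqrt(n - 2) / math.sqrt(1 - r ** 2)


def simple_ols(x, y):
    """Least-squares intercept b0 and slope b1 for the model y = b0 + b1 * x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    b1 = sum((a - mx) * (b - my) for a, b in zip(x, y)) / sum((a - mx) ** 2 for a in x)
    return my - b1 * mx, b1


def r_squared(y, y_hat):
    """Coefficient of determination: share of the variance in y explained by y_hat."""
    my = sum(y) / len(y)
    ss_res = sum((a - b) ** 2 for a, b in zip(y, y_hat))
    ss_tot = sum((a - my) ** 2 for a in y)
    return 1 - ss_res / ss_tot


if __name__ == "__main__":
    # Significance and shared variance for the correlations reported by Saqr et al. (n = 131).
    for r in (0.47, 0.25):
        print(f"r = {r}: t = {t_statistic(r, 131):.2f}, r^2 = {r ** 2:.2f}")

    # Hypothetical toy data (NOT from any cited study): logins vs. final grade.
    logins = [1, 2, 3, 4, 5]
    grades = [2, 1, 4, 3, 5]
    b0, b1 = simple_ols(logins, grades)
    fitted = [b0 + b1 * v for v in logins]
    # With a single predictor, R^2 of the least-squares fit equals r squared.
    print(f"r = {pearson_r(logins, grades):.2f}, R^2 = {r_squared(grades, fitted):.2f}")
```

The equality R² = r² holds only for a single predictor. A model built on several Moodle variables would need multiple regression, and its R² cannot be read off any one pairwise correlation.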

This research applies statistical techniques to predict students’ performance from Moodle course analytics data, investigating the effectiveness of such data for an engineering course at the University of South Australia.

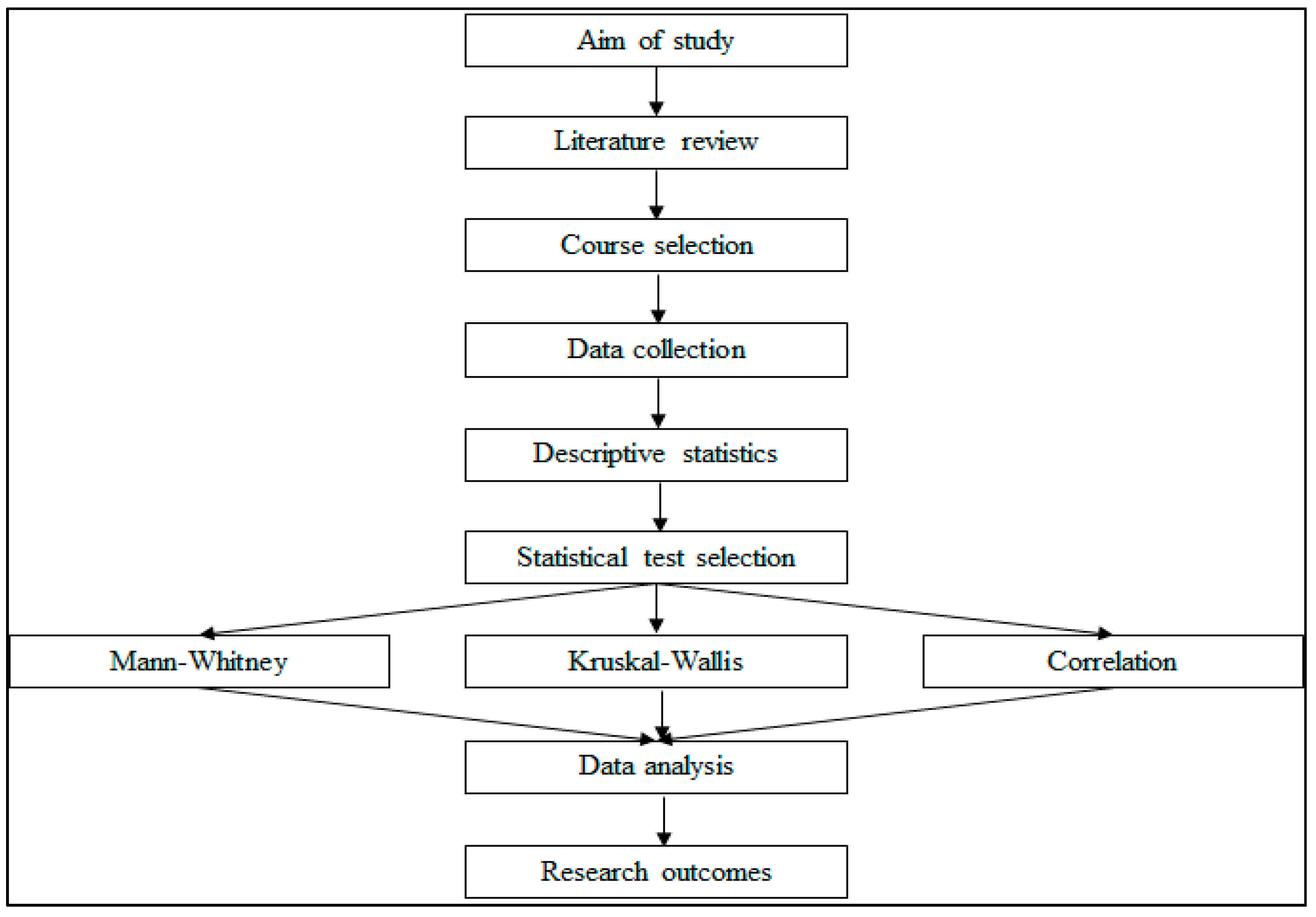

Section 2 describes the methodology, including the statistical tests used in this study, while Section 3 presents the results of the analysis.