1. Introduction

Learning Design, or Design for Learning [1], as a field of educational research and practice, aims to improve the effectiveness of learning, e.g., by helping teachers to create and make explicit their own designs [2]. A similar term, “learning design”, is also used to refer either to the creative process of designing a learning activity or to the artefact resulting from such a process [3]. Despite this emphasis on the creation of learning designs, there is a lack of frameworks to evaluate the implementation of the designs in the classroom [4]. Moreover, in order to evaluate the implementation of a learning design, there is a need for evidence coming from those digital or physical spaces where teaching and learning processes take place [5].

Observations (or observational methods) have traditionally been used by researchers and practitioners to support awareness and reflection [6,7]. Especially in educational contexts that occupy, fully or partially, physical spaces, observations offer an insight not easily available through other data sources (e.g., surveys, interviews, or teacher and student journals). However, since human observations are limited to what the eye can see and depend on the human resources available, automated observations can provide a complementary view and a lower-effort solution, especially when the learning scenario is supported by technology [8,9]. Thus, the integration of manual and automated observations with other data sources [10] offers a more complete and triangulated picture of the teaching and learning processes [11].

Interestingly, while both learning design (hereafter, LD) and classroom observation (CO) pursue the support of teaching and learning practices, often they are not aligned. To better understand why and how LD and CO have been connected in the existing literature, this paper reports on the results obtained from a systematic literature review. More precisely, this paper explores the nature of the observations, how the researchers establish the relationship between LD and CO and how this link is implemented in practice. Then, the lessons learnt from the literature review led us to spot open issues and future directions that are to be addressed by the research community.

Out of 2793 papers obtained from different well-known databases in the area of technology-enhanced learning, 24 articles were finally considered for the review. In the following sections, we introduce related works that motivated this study, describe the research methodology followed during the review process, and finally, discuss the results obtained in relation to the research questions that guided the study.

2. Supporting Teaching Practice through Learning Design and Classroom Observation

While Learning Design refers to the field of educational research and practice, different connotations are linked to the term ‘learning design’ (without capitals) [5,12,13,14]. According to some authors, learning design (LD) can be seen as a product or an artefact that describes the sequence of teaching and learning activities [5,15,16,17], including the actors’ roles, activities, and environments as well as the relations between them [18]. At the same time, learning design is also referred to as the process of designing a learning activity and/or creating the artefacts that describe the learning activity [1,13,19]. In this paper, we will reflect not only on the artefact but also on the process of designing for learning, trying to clarify which of the two is connected with classroom observations, and how.

While designing for learning, practitioners develop hypotheses about the teaching and learning process [20]. The collection of evidence during the enactment to test these hypotheses contributes to the orchestration tasks (e.g., by detecting deviations from the teacher’s expectations that may require regulation), to teacher professional development (leading to a better understanding and refinement of the teaching and learning practices) [16,21], and to decision making at the institutional level (e.g., in order to measure the impact of their designs and react upon them) [22]. However, the support available to teachers for design evaluation is still low [4] and, as Ertmer et al. note, little research is devoted to evaluating the designs [23].

In a parallel effort to support teaching and learning, classroom observation (CO) contributes to refining and reflecting on those practices. CO is a “non-judgmental description of classroom events that can be analysed and given interpretation” [24]. Through observations, we can gather data on individual behaviours, interactions, or the educational setting both in physical and digital spaces [8,25] using multiple machine- and human-driven data collection techniques (such as surveys, interviews, activity tracking, teaching and learning content repositories, or classroom and wearable sensors). Indeed, Multimodal Learning Analytics (MMLA) solutions can be seen as “modern” observational approaches suitable for physical and digital spaces [26], for example to infer the climate in the classroom [27], to observe technology-enhanced learning [28], or to highlight the human- and machine-generated data relevant for the design of learning analytics (LA) systems [29].

According to the observational methods, the design of the observation should be aligned with the planned activities [30], which, in the case of classroom observations, are described in the learning design. Later, observers must be aware of the context where the teaching and learning processes take place including, among others, the subjects and objects involved. Again, this need for context awareness can be satisfied with the details provided in the LD artefacts [31]. Finally, going one step further, the context and the design decisions may guide the analysis of the observations [6,32].

Another main aspect of the observations is the protocol guiding the data collection. Unstructured protocols provide observers with full expressivity to describe what they see, with the risk of producing large volumes of unstructured data that are more difficult and time-consuming to interpret [33]. In contrast, structured observations are less expressive but lend themselves better to automation with context-aware technological means, reduce the observation effort, and tend to be more accurate in systematic data gathering [34]; this allows for more efficient data processing [35] and makes the integration with other sources in multimodal datasets easier, thus enabling data triangulation [36].

From the (automatic) data gathering and analysis perspective, LD artefacts have been used in the area of LA to contextualise the analysis [37,38] and LD processes to customise such solutions [39]. Symmetrically, both the field of Learning Design and the practitioners also benefit from this symbiosis [5]: for example, by analysing the design process or assessing the impact of the artefacts on learning, new theories can be extracted. Thus, classroom observations (beyond being a mere data gathering and analysis technique) could profit from similar synergies with LD processes and artefacts, as some authors have already pointed out [23].

3. Research Questions and Methodology

In order to better understand how learning design and classroom observation have been connected in the existing literature, we carried out a systematic literature review [40] to answer the following research questions:

- RQ1: What is the nature of the observations (e.g., stakeholders, unit of analysis, observation types, when the coding is done, research design, complementary sources for data triangulation, limitations of observations and technological support)?

- RQ2: What are the purposes of the studies connecting learning design and classroom observations?

- RQ3: What is the relationship between learning design and classroom observations established at the methodological, practical and technical levels?

- RQ4: What are the important open issues and future lines of work?

While the first three research questions are aimed at being descriptive and mapping the existing reality based on the research and theoretical works, the last research question was aimed at being prescriptive; by identifying the gaps in literature based on corresponding limitations and research results, we offer future research directions.

To answer these research questions, we selected six main academic databases in Technology Enhanced Learning: IEEE Xplore, Scopus, AISEL, Wiley, ACM, and ScienceDirect. Additionally, Google Scholar (top 100 papers out of 15500 hits) was added in order to detect “grey literature” not indexed in most common literature databases but potentially relevant to assess the state of a research field.

After taking into account alternative spellings, the resulting query was: (“classroom observation*” OR “lesson observation*” OR “observational method*”) AND (“learning design” OR “design for learning” OR “lesson plan” OR “instructional design” OR scripting). The first part of the query was chosen to cover the different possible uses of the term “observation”. In the second part, both “learning design” and “design for learning” were included because there are established differences in the use of these related concepts [19], as already discussed in the previous section. In addition, “instructional design”, although it has a different origin, is sometimes used interchangeably with them [3], and “scripting” [36] is also widely used.
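As an illustration of how the two parts of the query fit together, the following minimal sketch assembles the boolean string from two term groups and applies the same logic locally to a title/abstract/keyword text. The function names and the regular-expression treatment of the trailing `*` wildcard are assumptions made for this example; each database applies its own query syntax and matching rules.

```python
import re

# Term groups taken from the query reported in the text.
OBSERVATION_TERMS = ['"classroom observation*"', '"lesson observation*"',
                     '"observational method*"']
DESIGN_TERMS = ['"learning design"', '"design for learning"', '"lesson plan"',
                '"instructional design"', 'scripting']


def or_group(terms):
    """Join the terms of one group with OR and wrap them in parentheses."""
    return "(" + " OR ".join(terms) + ")"


def build_query(groups):
    """Combine the OR-groups with AND, as in the reported query."""
    return " AND ".join(or_group(group) for group in groups)


def term_to_regex(term):
    """Hypothetical local matcher: a trailing * matches any word ending."""
    pattern = re.escape(term.strip('"')).replace(r"\*", r"\w*")
    return re.compile(r"\b" + pattern, re.IGNORECASE)


def matches(text, groups=(OBSERVATION_TERMS, DESIGN_TERMS)):
    """True if at least one term of every group occurs in the text."""
    return all(any(term_to_regex(term).search(text) for term in group)
               for group in groups)


QUERY = build_query([OBSERVATION_TERMS, DESIGN_TERMS])

if __name__ == "__main__":
    print(QUERY)
    print(matches("Classroom observations guided by the learning design"))
```

A record is kept only when both groups are represented, which mirrors the AND between the observation-related and the design-related terms.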

The query was run on 15 March 2018. To select the suitable papers, we followed the PRISMA statement [41], a guideline and process used for rigorous systematic literature reviews. Although several papers contained these keywords in the body of the paper, we narrowed the search down to title, abstract, and keywords, aiming for those papers where these terms could have a more significant role in the contribution. Therefore, whenever the search engine allowed it, the query was applied to title, abstract, and keywords, obtaining a total of 2793 items from the different databases. After the duplicates were removed, we ended up with 2392 papers. Then, to apply the same criteria to all papers, we conducted a manual secondary title/abstract/keyword filtering, obtaining 81 publications. Next, abstracts and full papers were reviewed, excluding those that were not relevant for our research purpose (i.e., no direct link between LD and observations; 43 papers) or not accessible (the paper could neither be found on the internet nor be provided by the authors; 14 papers). As a result, 24 papers were selected for in-depth analysis.
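The selection figures reported above can be checked with a few lines of arithmetic. The sketch below only restates the counts given in the text; the variable names and the grouping into stages are assumptions made for this illustration, not part of the PRISMA statement itself.

```python
# Counts reported in the text for each stage of the selection process.
identified = 2793          # items returned by the databases
after_deduplication = 2392
after_screening = 81       # after manual title/abstract/keyword filtering

# Reasons for exclusion at the full-text stage, with reported counts.
excluded_full_text = {
    "no direct link between LD and observations": 43,
    "paper not accessible": 14,
}

duplicates_removed = identified - after_deduplication
screened_out = after_deduplication - after_screening
included = after_screening - sum(excluded_full_text.values())

if __name__ == "__main__":
    print(f"Duplicates removed: {duplicates_removed}")
    print(f"Excluded during title/abstract/keyword screening: {screened_out}")
    print(f"Excluded at full-text review: {sum(excluded_full_text.values())}")
    print(f"Included in the in-depth analysis: {included}")
```

The result agrees with the 24 papers that were finally analysed.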

The analysis of the articles was guided by the research questions listed previously. According to the content analysis method [42], we applied inductive reasoning followed by iterative deductive analysis. While the codes in some categories were predefined, others emerged during the analysis (e.g., when identifying complementary data sources, or when eliciting the influence that LD has on CO and vice versa). As a result, the articles were fully read and (re)coded through three iterations.

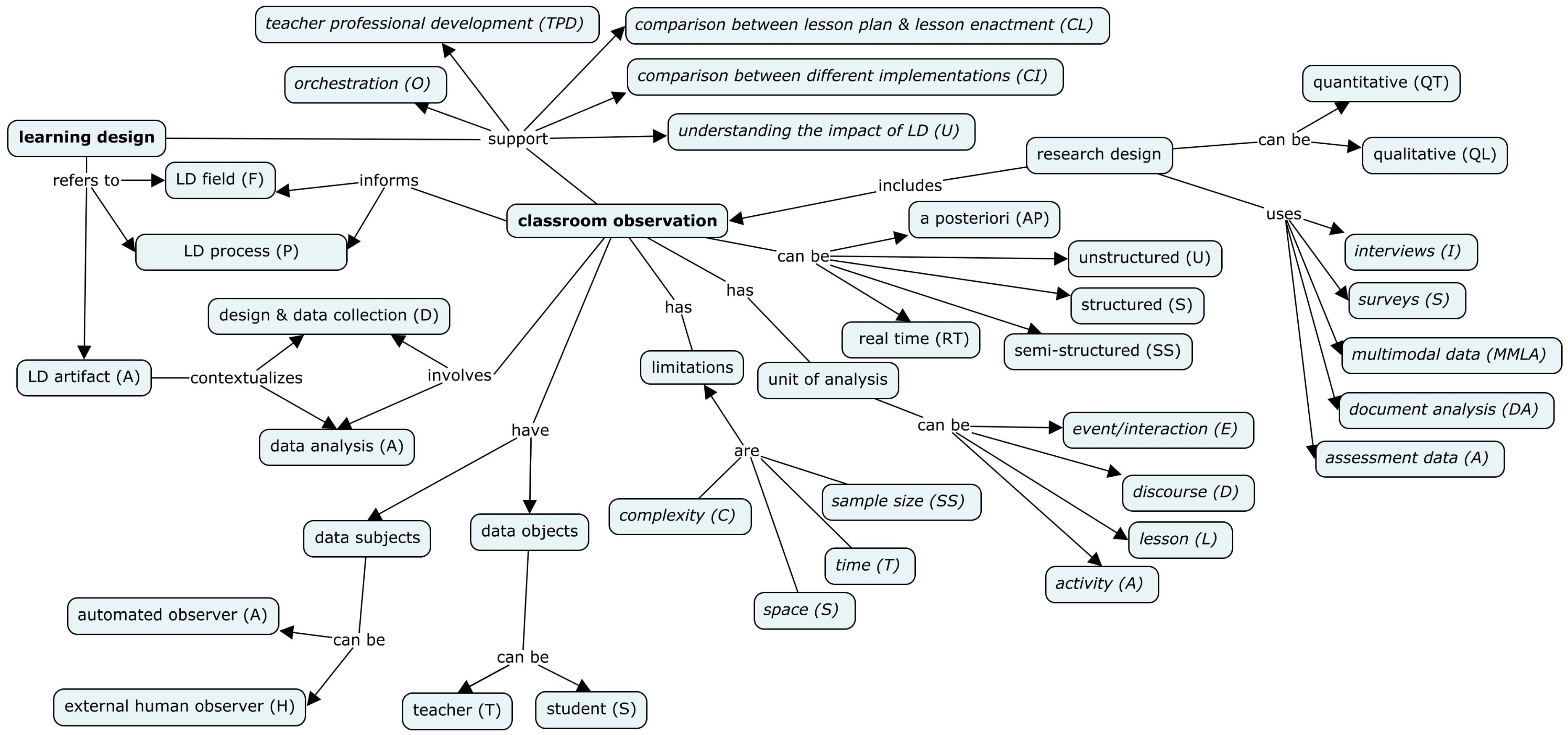

Figure 1 provides an overview of the codification scheme, showing the categories, the relations and whether the codes were predefined (using normal font) or emerged during the process (in italics).

It should be noted that both predefined and emerging codes were agreed on between the researchers. Although a single researcher did the coding, the second author was involved in ambiguous cases. In most of the cases, the content analysis only required identifying the topics and categories depicted in Figure 1. However, in some cases, it was necessary to infer categories such as the unit of analysis, which had to be identified based on the research methodology information (further details are provided in the following section).

4. Results and Findings

Table 1 shows the main results of our analysis, including the codification assigned per article. While the main goal was to identify empirical works, we also included theoretical papers in the analysis since they could provide relevant input for the research questions. More concretely, out of 24 papers, we identified 3 papers without empirical evidence: Adams et al. provide guidelines for classroom observation at scale, and the other two papers, by Eradze and Laanpere and by Eradze et al. (2017), reflect on the connections between classroom observations and learning analytics. This section summarises the findings of the systematic review, organised along our four research questions.

4.1. RQ1—What is the Nature of the Observations?

The distribution of observation roles among data subjects and objects was clear and explicit in every paper. In all cases, external human observers were in charge of the data collection and coding, twice in combination with automated LA solutions (in these cases, as a proposal to involve LA solutions) [45,46], with both teachers and students as the common data objects (22 papers). Although the definition of the unit of analysis is an important methodological decision in observational studies and in research in general [35,67,68,69], we only found an explicit reference to it in one paper [66]. Nevertheless, looking at the description of the research methodology, we can infer that most of the studies focused on events (directed at interaction and behavioural analysis) (14) and activities (10).

While structured and semi-structured observations (10 papers each) were the most common observation types, unstructured observations were also mentioned (7). Interestingly, just two papers conceived the option of combining the three different observational protocols [45,46]. Going one step further and looking at how the observation took place, there was an equal distribution between real-time and a posteriori cases, but in all cases following traditional data collection (i.e., by a human). The prevalence of a posteriori observational data collection could be closely related to the limited resources and effort often available to carry out manual observations.

A variety of research designs were followed in the studies: 9 papers reported qualitative methods, 6 quantitative and 8 mixed methods. Most of them combined observations with additional data sources, including documents (16), interviews (15), assessment data (4), and surveys (3). In a majority of cases, aside from observations, there were at least two other sources of data used (16 cases).

Most of the papers use (or consider using) additional data sources that, like the observations, were not produced automatically. This fact illustrates how demanding the integration of (often multimodal) data can be. While MMLA solutions could be applied in a variety of studies, quantitative and mixed-method studies that enriched event observations with additional data sources (see, e.g., [53,54,60,65]) are potential candidates to benefit from MMLA solutions that aid not only the systematic data gathering but also the integration and analysis of multiple data sources.

Regarding the learning designs, the majority of papers included the artefact as a data source to which they applied document analysis to extract the design decisions. Moreover, in several studies (e.g., [44,58,59]) the learning design was not available and was inferred a posteriori, with indirect observations. These two situations illustrate one of the main limitations for the alignment with learning design: LDs are not always explicit or, if they are documented, come in different forms (e.g., including texts, graphical representations, or tables) and levels of detail [70,71]. Apart from being time-consuming, inferring or interpreting the design decisions is error-prone and can influence the contextualization. This problem, also mentioned by the LA community when attempting to combine LD and LA [32,72], shows the still low adoption of digital solutions that support the LD process (see, for example, the Integrated Learning Design Environment, ILDE: http://ilde.upf.edu) and highlights the need for a framework on how to capture and systematise learning design data.

4.2. RQ2—What are the Purposes of Studies Connecting LD and CO?

According to the papers, the main purposes identified in the studies were to support teacher professional development (13), classroom orchestration (11), and reflection, e.g., understanding the impact of the learning design (10) or comparing the design and its implementation (8). Moreover, in many cases (13), the authors report connecting LD and CO for two or more purposes at the same time. Therefore, linking LD and CO can be useful to cater to several research aims and teacher needs. The fact that this synergy is mostly used to support teacher professional development can also be explained by the wide use of classroom observations in teacher professional development and teacher training.

4.3. RQ3—What is the Relationship between Learning Design and Classroom Observations Established at the Methodological, Practical and Technical Level?

One of the aims of our study was to identify the theoretical contributions that aim at connecting CO with LD. Only three papers aimed at contributing to linking learning design and classroom observations. Solomon, in 1971, was a pioneer in bringing together learning design and classroom observations. In his paper [62], the author suggested a process and a model for connecting CO and LD in order to compare planned learning activities with the actual implementation in the classroom. In his approach, data was collected and analysed based on the LD, attending to specific foci of interest. The approach also looks at previous lessons to obtain indicators of behavioural change, and aligns the coded student and teacher actions and learning events with the input (the strategies in the lesson plan), the actors (identified according to the objectives in the lesson plan) and the output (the competencies gained in the end). It also places importance on the awareness and reflection possibilities of such observations, not only for teachers but also for students. Later on, Eradze et al. proposed a model and a process for lesson observation, which were framed by the learning design. The output of the observation is a collection of statements represented in a computational format (xAPI) so that they can be interpreted and analysed by learning analytics solutions [45,46]. In these papers, the authors argue that the learning design not only guides the data gathering but also contextualises the data analysis, contributing to a better understanding of the results.
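To make the idea of an observation expressed as an xAPI statement more concrete, the following minimal sketch builds one statement in which an observed classroom event points back to the planned activity of the learning design through the statement context. It follows the general actor/verb/object structure of xAPI, but all identifiers (the `example.org` IRIs, the account name, the verb and the function name) are hypothetical placeholders chosen for this illustration and are not taken from the reviewed papers or from any existing tool.

```python
import json
from datetime import datetime, timezone


def observation_statement(actor_name, verb_id, verb_label,
                          event_id, event_label, ld_activity_id, when):
    """Build an xAPI-style statement for one observed classroom event.

    The planned LD activity is attached as the parent context activity,
    so that an analysis can later group observed events by design activity.
    """
    return {
        "actor": {
            "objectType": "Agent",
            # Hypothetical pseudonymous account of the observed person.
            "account": {"homePage": "https://example.org/school",
                        "name": actor_name},
        },
        "verb": {"id": verb_id, "display": {"en": verb_label}},
        "object": {
            "objectType": "Activity",
            "id": event_id,
            "definition": {"name": {"en": event_label}},
        },
        "context": {
            "contextActivities": {
                "parent": [{"objectType": "Activity", "id": ld_activity_id}]
            }
        },
        "timestamp": when.isoformat(),
    }


if __name__ == "__main__":
    statement = observation_statement(
        actor_name="student-07",
        verb_id="https://example.org/verbs/asked-question",
        verb_label="asked a question",
        event_id="https://example.org/lessons/42/events/question-3",
        event_label="Question to the teacher",
        ld_activity_id="https://example.org/designs/42/activities/group-work",
        when=datetime(2018, 3, 15, 10, 5, tzinfo=timezone.utc),
    )
    print(json.dumps(statement, indent=2))
```

Keeping the design activity in the context, rather than in the object, is one possible choice: it leaves the observed event as the thing that happened, while the learning design supplies the frame in which it is interpreted.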

At the practical level, the relation established between learning design and classroom observation was mainly one of guidance, to different degrees: the authors either reported having observed aspects related to the learning design (eight papers), or having used it to interpret the results of the observational analysis (six papers), or, from the beginning, the learning design guided the whole observation cycle (i.e., design, data gathering, and analysis) (10 papers). How is CO reflected in LD and in Learning Design as a practice? In 15 cases, the final result of the synergy was recommendations for teaching and learning practice (design for learning), in eight cases the use of observations aimed at informing the LD, and three papers used CO to contribute to theory or to the field of LD in general. In other words, while many papers used the learning design artefact, the observations contributed to informing the (re)design process.

Additionally, from the technical perspective, it should be noted that none of the papers reported having used specific tools to create the learning design or to support the observational design, the data collection or the analysis process. Nevertheless, one paper [45] presented a tool that uses the learning design to support observers in the codification and contextualization of interaction data. The fact that most of the papers extracted the LD using document analysis indicates low adoption of LD models and design tools by researchers and practitioners. Thus, there is a need for solutions that enable users to create or import the designs that guide the contextualization of the data collection and analysis.

4.4. RQ4—What are the Important Open Issues and Future Lines of Work?

Although most of the papers did not report limitations in connecting LD and CO (18 papers), those that did referred to problems associated with the observation itself, such as time constraints (difficulties annotating/coding in the time available) [62,63], space constraints (observer mobility) [61] and sample size [50,56,63].

Furthermore, as a result of the paper analysis, we have identified different issues to be addressed by the research community to enable the connection between LD and CO, and achieve it in more efficient ways, namely:

4.4.1. Dependence on the Existence of Learning Design

Dependence on the LD as an artefact is one of the issues for the implementation of such a synergy: while in this paper we assume that the learning design is available, in practice, this is not always the case. Often, the lesson plan remains in the head of the practitioner without being registered or formalised [32,72]. Therefore, for those cases, it would be necessary to rely on bottom-up solutions whose goal is to infer the lesson structure from the data gathered in the learning environment [73]. However, solutions of this type are still scarce and prototypical.

4.4.2. Compatibility with Learning Design Tools

The studies reviewed here did not report using any LD or CO tool. However, to aid the connection between learning design and classroom observations, it is necessary to have access to a digital representation of the artefact. Tools such as WebCollage (https://analys.gsic.uva.es/webcollage), LePlanner (https://beta.leplanner.net) or the ILDE (Integrated Learning Design Environment, https://ilde.upf.edu) guide users through the design process. To facilitate compatibility, it would be advisable to use tools that rely on widespread standards (e.g., IMS-LD, a specification that enables the modelling of learning processes) instead of proprietary formats. From the observational side, tools such as KoboToolbox (http://analys.kobotoolbox.org), FieldNotes (http://fieldnotesapp.info), Ethos (https://beta2.ethosapp.com), Followthehashtag (http://analys.followthehashtag.com), Storify (https://storify.com), VideoAnt (https://ant.umn.edu), and LessonNote (http://lessonnote.com) have been designed to support observers during the data collection. In this case as well, for compatibility reasons, it would be preferable to use tools that allow users to export their observations following standards already accepted by the community (e.g., xAPI).

4.4.3. Workload and Multimodal Data Gathering

As we have seen in the reviewed papers, observation processes often require the participation of ad-hoc observers. To alleviate the time and effort that observations entail, technological means could be put in place, enabling teachers to gather data by themselves [74]. For example, (multimodal) learning analytics solutions that monitor user activity and behaviour [26,73,75,76] could be used to automate part of the data collection or to gather complementary information about what is happening in the digital and the physical space. It is also worth noting that the inclusion of new data sources may contribute not only to improving the quality of the analysis (by triangulating the evidence), but also to obtaining a more realistic interpretation of the teaching and learning processes under study.

4.4.4. Underlying Infrastructure

To the best of our knowledge, there is no tool or ecosystem that enables the whole connection between LD and CO (i.e., creation of the learning design, observational design, data gathering, integration, and analysis). From the reviewed literature, only one tool, Observata [45,46], could fit this purpose. However, this tool was still under design and therefore not evaluated by the time this review took place.

5. Conclusions

This paper reports a systematic literature review on the connection between learning design and classroom observation, where 24 papers were the subject of analysis. These papers illustrate the added value that the alignment between these two areas may bring, including but not limited to teacher professional development, orchestration, institutional decision-making and educational research in general. To cater to the needs for evidence-based teaching and learning practices, this review contextualises classroom observations within modern data collection approaches and practices.

Despite the reported benefits, the main findings from the papers lead us to conclude that in order to make use of the synergies of linking LD and CO, technological infrastructure plays a crucial role. Starting from the learning design, this information is not explicit and formalising it implies adding extra tasks for the practitioners. Similarly, ad-hoc observers are in charge of data collection and analysis. Taking into account that the unit of analysis in most cases is the event (interaction-driven) or the activity, the workload that the observations entail might not be compatible with teaching at the same time, and, therefore, require external support. Nevertheless, despite using multiple data sources in research, none of the papers have reported automatic data gathering or the use of MMLA solutions for its analysis. Thus, to enable inquiry processes where teachers and researchers can manage the whole study, we suggest that MMLA solutions could contribute to reducing the burden by inferring the lesson plan and by automatically gathering parts of the observation.

Moreover, to operationalise the connection between the designs, it will be necessary to promote the usage of standards both in the LD and the CO solutions, so that we can increase the compatibility between platforms. This strategy could contribute to the creation of technological ecosystems that support all the steps necessary to support the connection between the design and the observations. Additionally, there is a need for methodological frameworks and tools that guide the data gathering and integration, so that the learning design is taken into consideration not only to frame the data analysis but also to inform the observational design. Furthermore, this paper mainly illustrates the benefits that LD and CO synergies may bring to researchers focusing on educational research, but more development would be needed for teacher adoption and teaching practice.

Finally, coming back to the research methodology of this paper, our study presents a number of limitations: first, restricting the search to the title, abstract or keywords may have caused the exclusion of valuable contributions; and second, the lack or omission of explicit descriptions of the LD and CO processes/artefacts in the papers may have caused deviations in the codifications. Nevertheless, the analysis of the collected papers still illustrates the synergies and challenges of this promising tandem of learning design and classroom observation.

Author Contributions

Conceptualization, methodology, data curation, formal analysis, investigation, visualization, writing—original draft, M.E.; Supervision, formal analysis, visualization, writing—review and editing, M.J.R.-T.; Supervision, writing—review and editing, M.L.

Funding

This research was funded by the European Union’s Horizon 2020 Research and Innovation Programme under Grant Agreement No. 731685 (Project CEITER).

Acknowledgments

The authors thank Jairo Rodríguez-Medina for inspiration and helpful advice.

Conflicts of Interest

The authors declare no conflict of interest. Ethics approval was not required. All available data is provided in the paper.

References

- Goodyear, P.; Dimitriadis, Y. In medias res: Reframing design for learning. Res. Learn. Technol. 2013, 21. [Google Scholar] [CrossRef]

- Laurillard, D. Teaching as a Design Science: Building Pedagogical Patterns for Learning and Technology; Routledge: New York, NY, USA, 2013; ISBN 1136448209. [Google Scholar]

- Mor, Y.; Craft, B.; Maina, M. Introduction—Learning Design: Definitions, Current Issues and Grand Challenges. In The Art & Science of Learning Design; Sense Publishers: Rotterdam, The Netherlands, 2015; pp. 9–26. [Google Scholar]

- Hernández-Leo, D.; Rodriguez Triana, M.J.; Inventado, P.S.; Mor, Y. Preface: Connecting Learning Design and Learning Analytics. Interact. Des. Archit. J. 2017, 33, 3–8. [Google Scholar]

- Hernández-Leo, D.; Martinez-Maldonado, R.; Pardo, A.; Muñoz-Cristóbal, J.A.; Rodríguez-Triana, M.J. Analytics for learning design: A layered framework and tools. Br. J. Educ. Technol. 2019, 50, 139–152. [Google Scholar] [CrossRef]

- Wragg, T. An Introduction to Classroom Observation (Classic Edition); Routledge: Abingdon, UK, 2013; ISBN 1136597786. [Google Scholar]

- Cohen, L.; Manion, L.; Morrison, K. Research Methods in Education; Routledge: Abingdon, UK, 2013; ISBN 113572203X. [Google Scholar]

- Hartmann, D.P.; Wood, D.D. Observational Methods. In International Handbook of Behavior Modification and Therapy: Second Edition; Bellack, A.S., Hersen, M., Kazdin, A.E., Eds.; Springer US: Boston, MA, USA, 1990; pp. 107–138. ISBN 978-1-4613-0523-1. [Google Scholar]

- Blikstein, P. Multimodal learning analytics. In Proceedings of the Third International Conference on Learning Analytics and Knowledge (LAK ’13), Leuven, Belgium, 8–13 April 2013; ACM Press: New York, NY, USA, 2013; p. 102. [Google Scholar]

- Eradze, M.; Rodríguez-Triana, M.J.M.J.; Laanpere, M. How to Aggregate Lesson Observation Data into Learning Analytics Datasets? In Proceedings of the 6th Multimodal Learning Analytics Workshop (MMLA), Vancouver, BC, Canada, 14 March 2017; CEUR: Aachen, Germany, 2017; Volume 1828, pp. 1–8. [Google Scholar]

- Martínez, A.; Dimitriadis, Y.; Rubia, B.; Gómez, E.; De la Fuente, P. Combining qualitative evaluation and social network analysis for the study of classroom social interactions. Comput. Educ. 2003, 41, 353–368. [Google Scholar] [CrossRef]

- Cameron, L. How learning design can illuminate teaching practice. In Proceedings of the Future of Learning Design Conference, Wollongong, Australia, 10 December 2009; Faculty of Education, University of Wollongong: Wollongong, NSW, Australia, 2009. [Google Scholar]

- Dobozy, E. Typologies of Learning Design and the introduction of a “LD-Type 2” case example. eLearn. Pap. 2011, 27, 1–11. [Google Scholar]

- Law, N.; Li, L.; Herrera, L.F.; Chan, A.; Pong, T.-C. A pattern language based learning design studio for an analytics informed inter-professional design community. Interac. Des. Archit. 2017, 33, 92–112. [Google Scholar]

- Conole, G. Designing for Learning in an Open World; Springer Science & Business Media: Berlin, Germany, 2012; Volume 4, ISBN 1441985166. [Google Scholar]

- Mor, Y.; Craft, B. Learning design: Reflections upon the current landscape. Res. Learn. Technol. 2012, 20, 19196. [Google Scholar] [CrossRef]

- Dalziel, J. Reflections on the Art and Science of Learning Design and the Larnaca Declaration. In The Art and Science of Learning Design; Sense Publishers: Rotterdam, The Netherlands, 2015; pp. 3–14. ISBN 9789463001038. [Google Scholar]

- Jonassen, D.; Spector, M.J.; Driscoll, M.; Merrill, M.D.; Van Merrienboer, J. Handbook of Research on Educational Communications and Technology: A Project of the Association for Educational Communications and Technology; Routledge: Abingdon, UK, 2008; ISBN 9781135596910. [Google Scholar]

- Muñoz-Cristóbal, J.A.; Hernández-Leo, D.; Carvalho, L.; Martinez-Maldonado, R.; Thompson, K.; Wardak, D.; Goodyear, P. 4FAD: A framework for mapping the evolution of artefacts in the learning design process. Australas. J. Educ. Technol. 2018, 34, 16–34. [Google Scholar] [CrossRef]

- Mor, Y.; Ferguson, R.; Wasson, B. Editorial: Learning design, teacher inquiry into student learning and learning analytics: A call for action. Br. J. Educ. Technol. 2015, 46, 221–229. [Google Scholar] [CrossRef]

- Hennessy, S.; Bowker, A.; Dawes, M.; Deaney, R. Teacher-Led Professional Development Using a Multimedia Resource to Stimulate Change in Mathematics Teaching; Sense Publishers: Rotterdam, The Netherlands, 2014; ISBN 9789462094345. [Google Scholar]

- Rienties, B.; Toetenel, L. The Impact of Learning Design on Student Behaviour, Satisfaction and Performance. Comput. Hum. Behav. 2016, 60, 333–341. [Google Scholar] [CrossRef]

- Ertmer, P.A.; Parisio, M.L.; Wardak, D. The Practice of Educational/Instructional Design. In Handbook of Design in Educational Technology; Routledge: New York, NY, USA, 2013; pp. 5–19. [Google Scholar]

- Moses, S. Language Teaching Awareness. J. Engl. Linguist. 2001, 29, 285–288. [Google Scholar] [CrossRef]

- Marshall, C.; Rossman, G.B. Designing Qualitative Research; Sage Publications: Thousand Oaks, CA, USA, 2014; ISBN 1483324265. [Google Scholar]

- Ochoa, X.; Worsley, M. Augmenting Learning Analytics with Multimodal Sensory Data. J. Learn. Anal. 2016, 3, 213–219. [Google Scholar] [CrossRef]

- James, A.; Kashyap, M.; Victoria Chua, Y.H.; Maszczyk, T.; Nunez, A.M.; Bull, R.; Dauwels, J. Inferring the Climate in Classrooms from Audio and Video Recordings: A Machine Learning Approach. In Proceedings of the 2018 IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE), Wollongong, NSW, Australia, 4–7 December 2018; pp. 983–988. [Google Scholar]

- Howard, S.K.; Yang, J.; Ma, J.; Ritz, C.; Zhao, J.; Wynne, K. Using Data Mining and Machine Learning Approaches to Observe Technology-Enhanced Learning. In Proceedings of the 2018 IEEE International Conference on Teaching, Assessment, and Learning for Engineering (TALE), Wollongong, NSW, Australia, 4–7 December 2018; pp. 788–793. [Google Scholar]

- Cukurova, M.; Luckin, R.; Mavrikis, M.; Millán, E. Machine and Human Observable Differences in Groups’ Collaborative Problem-Solving Behaviours. In Proceedings of the Data Driven Approaches in Digital Education: EC-TEL 2017; Lavoué, É., Drachsler, H., Verbert, K., Broisin, J., Pérez-Sanagustín, M., Eds.; Springer International Publishing: Cham, Switzerland, 2017; Volume 10474, pp. 17–29. [Google Scholar]

- Anguera, M.T.; Portell, M.; Chacón-Moscoso, S.; Sanduvete-Chaves, S. Indirect observation in everyday contexts: Concepts and methodological guidelines within a mixed methods framework. Front. Psychol. 2018, 9, 13. [Google Scholar] [CrossRef] [PubMed]

- Rodríguez-Triana, M.J.; Vozniuk, A.; Holzer, A.; Gillet, D.; Prieto, L.P.; Boroujeni, M.S.; Schwendimann, B.A. Monitoring, awareness and reflection in blended technology enhanced learning: A systematic review. Int. J. Technol. Enhanc. Learn. 2017, 9, 126–150. [Google Scholar] [CrossRef]

- Lockyer, L.; Heathcote, E.; Dawson, S. Informing pedagogical action: Aligning learning analytics with learning design. Am. Behav. Sci. 2013, 57, 1439–1459. [Google Scholar] [CrossRef]

- Gruba, P.; Cárdenas-Claros, M.S.; Suvorov, R.; Rick, K. Blended Language Program Evaluation; Palgrave Macmillan: London, UK, 2016; ISBN 978-1-349-70304-3; 978-1-137-51437-0. [Google Scholar]

- Pardo, A.; Ellis, R.A.; Calvo, R.A. Combining observational and experiential data to inform the redesign of learning activities. In Proceedings of the Fifth International Conference on Learning Analytics And Knowledge (LAK ’15), Poughkeepsie, NY, USA, 16–20 March 2015; ACM: New York, NY, USA, 2015; pp. 305–309. [Google Scholar]

- Bakeman, R.; Gottman, J.M. Observing Interaction; Cambridge University Press: Cambridge, UK, 1997; ISBN 9780511527685. [Google Scholar]

- Rodríguez-Triana, M.J.; Martínez-Monés, A.; Asensio-Pérez, J.I.; Dimitriadis, Y. Scripting and monitoring meet each other: Aligning learning analytics and learning design to support teachers in orchestrating CSCL situations. Br. J. Educ. Technol. 2015, 46, 330–343. [Google Scholar] [CrossRef]

- Soller, A.; Martínez, A.; Jermann, P.; Muehlenbrock, M. From Mirroring to Guiding: A review of the state of the art in interaction analysis. Int. J. Artif. Intell. Educ. 2005, 15, 261–290. [Google Scholar]

- Corrin, L.; Lockyer, L.; Mulder, R.; Williams, D.; Dawson, S. A Conceptual Framework linking Learning Design with Learning Analytics. In Proceedings of the Sixth International Conference on Learning Analytics & Knowledge, Edinburgh, UK, 25–29 April 2016; pp. 329–338. [Google Scholar]

- Rodríguez-Triana, M.J.; Prieto, L.P.; Martínez-Monés, A.; Asensio-Pérez, J.I.; Dimitriadis, Y. The teacher in the loop. In Proceedings of the 8th International Conference on Learning Analytics and Knowledge (LAK ’18), Sydney, NSW, Australia, 7–9 March 2018; ACM: New York, NY, USA, 2018; pp. 417–426. [Google Scholar]

- Kitchenham, B. Procedures for Performing Systematic Reviews; Keele University: Keele, UK, 2004. [Google Scholar]

- Liberati, A.; Altman, D.G.; Tetzlaff, J.; Mulrow, C.; Gøtzsche, P.C.; Ioannidis, J.P.A.; Clarke, M.; Devereaux, P.J.; Kleijnen, J.; Moher, D. The PRISMA statement for reporting systematic reviews and meta-analyses of studies that evaluate health care interventions: Explanation and elaboration. J. Clin. Epidemiol. 2009, 62, e1–e34. [Google Scholar] [CrossRef] [PubMed]

- Elo, S.; Kyngäs, H. The qualitative content analysis process. J. Adv. Nurs. 2008, 62, 107–115. [Google Scholar] [CrossRef] [PubMed]

- Adams, C.M.; Pierce, R.L. Differentiated Classroom Observation Scale—Short Form. In Proceedings of the 22nd Annual Conference of the European Teacher Education Network, Coimbra, Portugal, 19–21 April 2012; pp. 108–119. [Google Scholar]

- Anderson, J. Affordance, learning opportunities, and the lesson plan pro forma. ELT J. 2015, 69, 228–238. [Google Scholar] [CrossRef]

- Eradze, M.; Laanpere, M. Lesson Observation Data in Learning Analytics Datasets: Observata; Springer: Berlin, Germany, 2017; Volume 10474, ISBN 9783319666099. [Google Scholar]

- Eradze, M.; Rodríguez-Triana, M.J.; Laanpere, M. Semantically Annotated Lesson Observation Data in Learning Analytics Datasets: A Reference Model. Interact. Des. Archit. J. 2017, 33, 75–91. [Google Scholar]

- Freedman, A.M.; Echt, K.V.; Cooper, H.L.F.; Miner, K.R.; Parker, R. Better Learning Through Instructional Science: A Health Literacy Case Study in “How to Teach So Learners Can Learn”. Health Promot. Pract. 2012, 13, 648–656. [Google Scholar] [CrossRef] [PubMed]

- Ghazali, M.; Othman, A.R.; Alias, R.; Saleh, F. Development of teaching models for effective teaching of number sense in the Malaysian primary schools. Procedia Soc. Behav. Sci. 2010, 8, 344–350. [Google Scholar] [CrossRef] [Green Version]

- Hernández, M.I.; Couso, D.; Pintó, R. Analyzing Students’ Learning Progressions Throughout a Teaching Sequence on Acoustic Properties of Materials with a Model-Based Inquiry Approach. J. Sci. Educ. Technol. 2015, 24, 356–377. [Google Scholar] [CrossRef]

- Jacobs, C.L.; Martin, S.N.; Otieno, T.C. A science lesson plan analysis instrument for formative and summative program evaluation of a teacher education program. Sci. Educ. 2008, 92, 1096–1126. [Google Scholar] [CrossRef]

- Jacobson, L.; Hafner, L.P. Using Interactive Videodisc Technology to Enhance Assessor Training. In Proceedings of the Annual Conference of the International Personnel Management Association Assessment Council, Chicago, IL, USA, 23–27 June 1991. [Google Scholar]

- Kermen, I. Studying the Activity of Two French Chemistry Teachers to Infer their Pedagogical Content Knowledge and their Pedagogical Knowledge. In Understanding Science Teachers’ Professional Knowledge Growth; Grangeat, M., Ed.; Sense Publishers: Rotterdam, The Netherlands, 2015; pp. 89–115. ISBN 978-94-6300-313-1. [Google Scholar]

- Molla, A.S.; Lee, Y.-J. How Much Variation Is Acceptable in Adapting a Curriculum? Nova Science Publishers: Hauppauge, NY, USA, 2012; Volume 6, ISBN 978-1-60876-389-4. [Google Scholar]

- Nichols, W.D.; Young, C.A.; Rickelman, R.J. Improving middle school professional development by examining middle school teachers’ application of literacy strategies and instructional design. Read. Psychol. 2007, 28, 97–130. [Google Scholar] [CrossRef]

- Phaikhumnam, W.; Yuenyong, C. Improving the primary school science learning unit about force and motion through lesson study. AIP Conf. Proc. 2018, 1923, 30037. [Google Scholar]

- Yuval, L.; Procter, E.; Korabik, K.; Palmer, J. Evaluation Report on the Universal Instructional Design Project at the University of Guelph. 2004. Available online: https://opened.uoguelph.ca/instructor-resources/resources/uid-summaryfinalrep.pdf (accessed on 24 April 2019).

- Ratnaningsih, S. Scientific Approach of 2013 Curriculum: Teachers’ Implementation in English Language Teaching. J. Engl. Educ. 2017, 6, 33–40. [Google Scholar] [CrossRef] [Green Version]

- Rozario, R.; Ortlieb, E.; Rennie, J. Interactivity and Mobile Technologies: An Activity Theory Perspective. In Lecture Notes in Educational Technology; Springer: Berlin, Germany, 2016; pp. 63–82. ISBN 978-981-10-0027-0; 978-981-10-0025-6. [Google Scholar]

- Simwa, K.L.; Modiba, M. Interrogating the lesson plan in a pre-service methods course: Evidence from a University in Kenya. Aust. J. Teach. Educ. 2015, 40, 12–34. [Google Scholar] [CrossRef]

- Sibanda, J. The nexus between direct reading instruction, reading theoretical perspectives, and pedagogical practices of University of Swaziland Bachelor of Education students. RELC J. 2010, 41, 149–164. [Google Scholar] [CrossRef]

- Nugraha, I.S.; Suherdi, D. Scientific Approach: An English Learning-Teaching (ELT) Approach in the 2013 Curriculum. J. Engl. Educ. 2017, 5, 112–119. [Google Scholar]

- Solomon, W.H. Participant Observation and Lesson Plan Analysis: Implications for Curriculum and Instructional Research. 1971. Available online: https://files.eric.ed.gov/fulltext/ED049189.pdf (accessed on 24 April 2019).

- Suppa, A. English Language Teachers’ Beliefs and Practices of Using Continuous Assessment: Preparatory Schools in Ilu Abba Bora Zone in Focus; Jimma University: Jimma, Ethiopia, 2015. [Google Scholar]

- Vantassel-Baska, J.; Avery, L.; Struck, J.; Feng, A.; Bracken, B.; Drummond, D.; Stambaugh, T. The William and Mary Classroom Observation Scales Revised. The College of William and Mary School of Education Center for Gifted Education, 2003. Available online: https://education.wm.edu/centers/cfge/_documents/research/athena/cosrform.pdf (accessed on 24 April 2019).

- Versfeld, R. Investigating and Establishing Best Practices in the Teaching of English as a Second Language in Under-Resourced and Multilingual Contexts. Teaching and Learning Resources Centre, University of Cape Town. Report. 1998. Available online: http://citeseerx.ist.psu.edu/viewdoc/download?doi=10.1.1.569.115&rep=rep1&type=pdf (accessed on 24 April 2019).

- Zhang, Y. Multimodal teacher input and science learning in a middle school sheltered classroom. J. Res. Sci. Teach. 2016, 53, 7–30. [Google Scholar] [CrossRef]

- Matusov, E. In search of “the appropriate” unit of analysis for sociocultural research. Cult. Psychol. 2007, 13, 307–333. [Google Scholar] [CrossRef]

- Rodríguez-Medina, J.; Rodríguez-Triana, M.J.; Eradze, M.; García-Sastre, S. Observational Scaffolding for Learning Analytics: A Methodological Proposal. In Lecture Notes in Computer Science; Springer: Berlin, Germany, 2018; Volume 11082, pp. 617–621. [Google Scholar]

- Eradze, M.; Väljataga, T.; Laanpere, M. Observing the Use of E-Textbooks in the Classroom: Towards “Offline” Learning Analytics. In Lecture Notes in Computer Science; Springer: Berlin, Germany, 2014; Volume 8699, pp. 254–263. [Google Scholar]

- Falconer, I.; Finlay, J.; Fincher, S. Representing practice: Practice models, patterns, bundles…. Learn. Media Technol. 2011, 36, 101–127. [Google Scholar] [CrossRef]

- Agostinho, S.; Harper, B.M.; Oliver, R.; Hedberg, J.; Wills, S. A Visual Learning Design Representation to Facilitate Dissemination and Reuse of Innovative Pedagogical Strategies in University Teaching. In Handbook of Visual Languages for Instructional Design: Theories and Practices; Information Science Reference: Hershey, PA, USA, 2008; ISBN 9781599047294. [Google Scholar]

- Mangaroska, K.; Giannakos, M. Learning Analytics for Learning Design: Towards Evidence-Driven Decisions to Enhance Learning. In Lecture Notes in Computer Science (Including Subseries Lecture Notes in Artificial Intelligence and Lecture Notes in Bioinformatics); Lavoué, É., Drachsler, H., Verbert, K., Broisin, J., Pérez-Sanagustín, M., Eds.; Springer International Publishing: Cham, Switzerland, 2017; Volume 10474, pp. 428–433. ISBN 9783319666099. [Google Scholar]

- Prieto, L.P.; Sharma, K.; Kidzinski, Ł.; Rodríguez-Triana, M.J.; Dillenbourg, P. Multimodal teaching analytics: Automated extraction of orchestration graphs from wearable sensor data. J. Comput. Assist. Learn. 2018, 34, 193–203. [Google Scholar] [CrossRef] [PubMed]

- Saar, M.; Prieto, L.P.; Rodríguez-Triana, M.J.; Kusmin, M. Personalized, teacher-driven in-action data collection: technology design principles. In Proceedings of the 18th IEEE International Conference on Advanced Learning Technologies (ICALT), Mumbai, India, 9–13 July 2018. [Google Scholar]

- Merenti-Välimäki, H.L.; Lönnqvist, J.; Laininen, P. Present weather: Comparing human observations and one type of automated sensor. Meteorol. Appl. 2001, 8, 491–496. [Google Scholar] [CrossRef]

- Muñoz-Cristóbal, J.A.; Rodríguez-Triana, M.J.; Gallego-Lema, V.; Arribas-Cubero, H.F.; Asensio-Pérez, J.I.; Martínez-Monés, A. Monitoring for Awareness and Reflection in Ubiquitous Learning Environments. Int. J. Hum. Comput. Interact. 2018, 34, 146–165. [Google Scholar] [CrossRef]

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).