Abstract

Tutorials play a key role in the teaching and learning of engineering sciences. However, the efficacy of tutorials as platforms for providing personal and academic support is continuously being challenged by factors such as declining faculty-to-student ratios and students’ underpreparedness. This study adopted reciprocal peer tutorial assessment (RPTA) as an instructional strategy in a capstone course in Process Metallurgy. The findings highlighted the delicate balance between the obvious benefits and the unintended consequences of adopting reciprocal peer assessments during tutorials. The obvious benefits of RPTA included opportunities for synergistic peer learning, healthy competition among students, and self-directed learning, among others. However, these benefits were negated by factors such as a low level of trust among peers, anxiety over year marks, time constraints, and discomfort due to perceived incompetence relative to peers. Finally, the findings from the present study provide a basis for iterative design and continuous improvement.

1. Background

Tutorials are important teaching and learning tools designed to enhance understanding of the disciplinary content covered in lectures [1]. Tutorials create an active and interactive learning environment, and are considered an important platform for providing both academic and personal support [1,2]. As opposed to the didactic nature of lectures and other forms of instruction, tutorials are support systems where students engage with specific learning materials, and they provide opportunities for students to express their own points of view and to interact with tutors and other students through discussion and problem-solving [1,2]. Despite the widely documented benefits of tutorials as instructional tools in engineering education, their impact on students’ learning continues to be questioned. In essence, the success of tutorials as learning tools largely depends on students’ preparedness for, and commitment to, the tutorial sessions [1,3]. Students’ participation also plays a critical role in the success of tutorials as supplemental tools for guided learning [1]. Student underpreparedness and poor attendance at tutorials are among the factors that often militate against the effectiveness of tutorials for students’ learning.

Although conventional tutorial strategies may still be relevant and effective, their efficacy with large numbers of students is contested. In South Africa, in particular, large student enrolments are typical in most public universities. Declining faculty-to-student ratios are, thus, proving to be a challenge to deploying tutorials as effective teaching and learning strategies. To circumvent some of these challenges, this paper proposes a tutorial-based assessment strategy that leverages reciprocal peer assessment of tutorial practice questions. The paper explores reciprocal peer tutorial assessment (RPTA) as an innovative strategy to enhance students’ disciplinary understanding and engagement in a capstone course in Process Metallurgy.

2. Theoretical Background

2.1. Peer Assisted Learning

Peer assisted learning (PAL) is defined as a collaborative learning approach that involves the acquisition of knowledge and skills through active support among peers [4,5]. It involves participants from similar social groupings who are not professional teachers helping each other to learn [4]. In essence, PAL is an umbrella term that covers various forms of peer-assisted learning, such as peer-teaching, peer-learning, peer-assessment, peer-mentoring, and peer-leadership. Grounded in the social-constructivist approach proposed by Vygotsky [6], PAL creates opportunities for meaningful learning through critical dialogue among peers. To date, several scholars have highlighted the benefits of including peer-assisted learning, and its variants, in the acquisition of disciplinary knowledge [4,5,7,8,9,10,11]. Among these variants, peer assessment has attracted significant attention in recent years [5,7,8,9,10,11,12].

Peer assessment is a process whereby individual students or groups of students at the same academic level, or in the same course of study, rate the performance of their peers [7]. Peer assessment is widely accepted in educational research as a strategy that can enhance students’ learning by fostering desired attributes, such as teamwork and collaboration, critical enquiry, reflection, disciplinary and interdisciplinary communication skills, collaborative learning, and taking responsibility for one’s own learning [5,8,13]. It increases the possibility for students to engage in reflection and exploration of concepts in the absence of the authority of the course facilitator. By evaluating their peers, students can gain more practice in communicating the subject matter than is typically the case in learning activities led by the course facilitator [8,11]. O’Moore and Baldock [11] proposed that peer assessments help to demystify the assessment process by giving students a better understanding of what is required to achieve a particular standard. Peer assessment also helps students to reflect on their own approaches to assessment tasks [11]. Other benefits include students’ mastery of concepts and the development of relationships with other students [10]. Peer assessment is also a pragmatic approach for providing regular feedback and enhancing students’ engagement, particularly in large tutorial cohorts [10,11].

Peer tutorial assessment is one of the variants of peer assessment that has received considerable attention in higher education [5,7,9,10,11,12]. By their nature, tutorial-based assessments are an important platform for providing feedback as well as academic and personal support [2]. To date, several studies have highlighted the importance of reciprocal peer support, in the form of reciprocal peer tutoring, in tutorial-based assessments. Reciprocal peer tutoring (RPT) is a form of collaborative learning in which students of similar backgrounds alternate between the roles of tutor and learner [5,14,15,16,17]. Fantuzzo et al. [14] proposed that reciprocal peer tutoring results in better academic and psychological adjustment through mutual assistance strategies between the tutor and the tutee. The findings from these studies motivated further exploration of opportunities to enhance students’ disciplinary learning using reciprocal peer tutorial assessment (RPTA) as an instructional and assessment strategy. RPTA, a variant of reciprocal peer tutoring, is defined herein as a teaching and learning strategy wherein individual students, or groups of students, assess the tutorial submissions and/or tasks of their peers with or without the assistance of the course facilitator. Falchikov [7] highlighted the benefits of peer feedback marking as an opportunity to provide feedback and to identify strengths and weaknesses in students’ submissions. Eva [9] highlighted that prolonged interaction among students in a tutorial-based assessment was beneficial to self-directed learning and to identifying areas of competency. In an earlier study, Gray [12] reported on two-stage peer marking of an engineering examination, with and without model answers. By comparing the discrepancies between the marks allocated before and after the distribution of model answers, the study gave students opportunities to become constructively and critically aware of both the subject matter and the communication aspects of examinations [12]. Thus, the RPTA approach is potentially a powerful collaborative learning tool that can help students to master knowledge areas in stages, learn from their successes and mistakes, and build disciplinary self-efficacy as they progress through the knowledge requirements of the course. Furthermore, RPTA can also be considered a potential remedy for the difficulty of providing effective assessment and rapid feedback in large classes [4,7,11,12,18].

2.2. Students’ Perceptions of Peer Assessments

Whilst peer assessments offer the benefits highlighted so far, the assessment process also presents several challenges and limitations [12,19,20,21,22,23,24,25,26,27]. Previous studies noted the negative impact of peer assessments on relationships among peers and criticized the potential lack of validity, reliability and objectivity of assessments undertaken by students [12,19,20]. Gray [12] highlighted the tendency of students to be too harsh on their colleagues, as evidenced by their under-marking of their peers’ work. Liu and Carless [26] highlighted the challenge of perceived lack of expertise, based on students’ belief that their peers are not qualified enough to give insightful feedback.

The perceptions of students on peer assessments can have a significant influence on their approaches to learning [20,27,28,29,30,31,32]. Van de Watering et al. [31,32] defined students’ perception of assessments as the way in which students view and understand the role, importance and nature of assessments. Birenbaum [29] highlighted that differences in assessment preferences correlated with differences in learning strategies. Depending on intrinsic and extrinsic factors, students can adopt either a surface or a deep learning approach. In surface learning approaches, students view assessments as unwelcome external impositions, and the learning strategy is often associated with the desire to complete the task with as little personal engagement as possible [20,22,28,29,30,31,32]. In contrast, deep learning approaches are based on active conceptual analysis and often result in a deep level of conceptual understanding [20,22,30,31,32]. This implies that assessment strategies perceived as inappropriate may constrain students’ intrinsic motivation for deep learning, which in turn can encourage surface approaches. Indeed, previous research has demonstrated that positive intrinsic motivation is related to greater cognitive engagement as well as better actual academic performance [20,22,28,29].

Understanding the perceptions and preferences of students regarding peer assessments thus provides opportunities to improve the design of the assessment process. Previous studies highlight a positive correlation between students’ preferences for, and perceptions of, assessment methods and the level of skills acquired [20,28,29]. Nevertheless, the mismatch between students’ perceptions and expectations of peer assessments often presents a conundrum in the design and implementation of RPTAs. Drew [33] highlighted clear expectations, clear assessment criteria and timely feedback as possible ways of helping students learn. In another study, Vickerman [19] proposed that the design and structure of peer assessments should ensure that students appreciate the technicalities and interpretations of assessment criteria, so that they can make sound judgments on the subject content.

3. Contextual Background to the Study

The exploratory study was conducted in a capstone undergraduate course in Process Metallurgy, namely, Physical Chemistry of Iron and Steel Manufacturing, at the University of the Witwatersrand (Table 1). The teaching and learning practices in this course involve traditional lectures based on PowerPoint lecture notes and discipline-specific scientific journal articles. Group tutorials during designated tutorial periods are used to complement the understanding of the disciplinary content in selected knowledge areas. Based on observations from previous (2016-2017) cohorts, underpreparedness, poor attendance at tutorials, and students treating tutorials as opportunities to solicit answers from the facilitator were some of the challenges that affected the effectiveness of the conventional approaches to tutorials. In view of these challenges, RPTA was implemented as an alternative teaching and learning strategy. The broad objective of this study was to evaluate the impact of RPTA on disciplinary learning, and the perceptions and preferences of students regarding RPTA compared with conventional tutorial approaches. The following research questions were investigated:

(i) What is the impact of reciprocal peer tutorial assessment on the understanding of the disciplinary concepts covered in the course?

(ii) What are the students’ perceptions and preferences towards the reciprocal peer tutorial assessment process?

Table 1.

Brief description of the course under study.

4. Methodology

4.1. Reciprocal Peer Tutorial Assessment (RPTA) Strategy

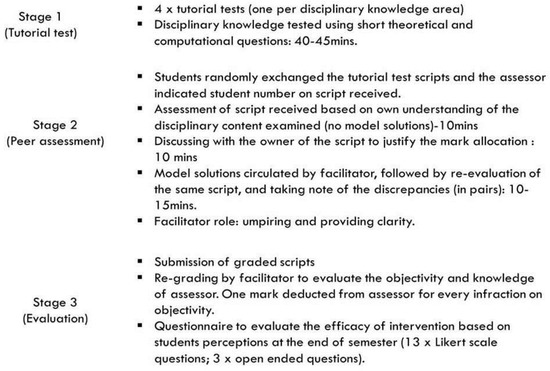

The learning outcomes of the course were broken down into four knowledge areas in iron and steel manufacturing, namely, (1) fundamental principles (thermodynamics, kinetics and transport), (2) blast furnace operation principles (raw materials engineering; equipment, process design and operation; process chemistry), (3) oxygen steelmaking (equipment, process design and operation; process chemistry), and (4) secondary metallurgy principles (equipment, process design and operation; clean steel strategies). A tutorial assessment was designed for each knowledge area, making a total of four formative tutorial assessments for the course (Table 1). Disciplinary and conceptual knowledge was tested in the form of short theoretical and computational tutorial practice questions. Each tutorial test was designed not to exceed 40 min per session. Figure 1 shows the flow of activities during the RPTA exercise. To ensure the autonomy of students’ learning during the RPTA process, the facilitator acted as an umpire and provided clarity when necessary.

Figure 1.

Conceptual flow of activities during the RPTA process.

The approach adopted in this study is similar to that of a previous study by Gray [12]. As highlighted in Stage 3 of the process, random tutorial scripts were selected and re-assessed by the course facilitator. In contrast to conventional approaches to assessing students’ work, the current intervention also assessed the objectivity and level of disciplinary knowledge of the assessor. The conditions of engagement, outlined in the course brief circulated at the beginning of the semester, clearly indicated that a mark would be deducted from the assessor for every infraction of objectivity. Where a lack of objectivity and consistency was identified, the facilitator deducted the equivalent number of marks from the assessor’s own tutorial test mark. This was done to ensure fairness and reduce incidences of prejudice during the assessment process.
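The Stage 3 moderation rule described above can be sketched in a few lines. This is a minimal, hypothetical Python illustration, not a tool used in the study (the study does not describe any software for this step). It assumes that the facilitator re-marks a random sample of scripts question by question, and it interprets "a mark deducted for every infraction" as one mark deducted from the assessor for every question on which the peer's mark differs from the facilitator's. The `Script` class, the `moderate` function and the `sample_size` parameter are illustrative names only.

```python
import random
from dataclasses import dataclass
from typing import Dict, List


@dataclass
class Script:
    author: str             # student who wrote the tutorial test
    assessor: str           # peer who marked the script
    peer_marks: List[int]   # per-question marks awarded by the peer


def moderate(scripts, facilitator_marks, assessor_totals, sample_size, seed=None):
    """Re-mark a random sample of peer-assessed scripts (hypothetical Stage 3).

    facilitator_marks maps each author to the facilitator's per-question marks;
    assessor_totals maps each student to their own tutorial test mark.
    Returns the sampled scripts and a new dict of adjusted marks.
    """
    rng = random.Random(seed)
    sample = rng.sample(scripts, k=min(sample_size, len(scripts)))
    adjusted: Dict[str, int] = dict(assessor_totals)  # leave the input untouched
    for script in sample:
        reference = facilitator_marks[script.author]
        # Assumed rule: every question where the peer mark differs from the
        # facilitator's mark counts as one infraction and costs the assessor one mark.
        infractions = sum(
            1 for peer, ref in zip(script.peer_marks, reference) if peer != ref
        )
        # Marks are not allowed to fall below zero.
        adjusted[script.assessor] = max(0, adjusted[script.assessor] - infractions)
    return sample, adjusted


if __name__ == "__main__":
    # Hypothetical example: s2 marked s1's script, s1 marked s2's script.
    scripts = [Script("s1", "s2", [2, 3, 1]), Script("s2", "s1", [4, 0])]
    facilitator = {"s1": [2, 2, 1], "s2": [4, 0]}
    _, marks = moderate(scripts, facilitator, {"s1": 15, "s2": 12}, sample_size=2, seed=0)
    print(marks)
```

Strict equality is used only to keep the sketch simple; a facilitator might reasonably tolerate small differences in partial credit before counting a discrepancy as an infraction.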

4.2. Data Collection

At the end of the teaching period, a pencil-and-paper questionnaire was distributed to the 2018 cohort (class size = 52) in order to evaluate how the peer tutorial assessment affected students’ learning. The survey instrument (Appendix A) consisted of thirteen Likert-scale questions and three open-ended questions. The Likert-scale questions were scored from 1 (strongly disagree) to 5 (strongly agree). The open-ended questions solicited responses on how the RPTA intervention positively or negatively affected the respondent’s own learning, and on how the course facilitator could improve the peer assessment practice. The open-ended questions also gave students the flexibility to share any additional information they wished.

4.3. Ethical Considerations

Before the survey instrument was distributed, the students were assured of confidentiality and of the voluntary nature of the exercise. Since the survey targeted a specific group of students within a specific year and subject of study, it was difficult to prevent the participants from being known to the researcher. To ensure anonymity, the questionnaire was administered according to the University’s ethics guidelines for student evaluations: the students completed the questionnaires, and the class representative placed them directly into an envelope in the absence of the facilitator. Voluntary submission of the questionnaire was considered as consent to participate in the study.

4.4. Data Analysis

Descriptive statistics were used to analyse the responses to the Likert-scale questions. Out of the class of 52, the average response rate for the Likert-scale questions was 65%, which was considered acceptable for this scoping study. The Likert-scale questions were divided into three categories: (1) to evaluate the students’ familiarity with the use of reciprocal peer tutorial assessment as a teaching and learning strategy, (2) to evaluate the impact of reciprocal peer tutorial assessment on disciplinary learning, and (3) to evaluate the students’ perceptions and preferences regarding the reciprocal peer tutorial assessment strategy. The analyses were conducted using Microsoft Excel® 2010. The open-ended questions were analysed by text analysis, identifying the most frequently occurring responses and/or key words.
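The descriptive analysis above was carried out in Microsoft Excel® 2010; the Python sketch below shows an equivalent calculation for illustration only. It assumes that "agreement" means a rating of 4 or 5 and "disagreement" a rating of 1 or 2 (the paper does not state how ratings were collapsed into percentages), and that a missing answer is recorded as `None`. The keyword counting is a crude, hypothetical stand-in for the text analysis of open-ended responses: it counts how many comments contain each keyword as a substring, and the keyword list has to be chosen by the analyst. The function names are illustrative.

```python
from collections import Counter
from statistics import mean


def summarise_item(responses):
    """Descriptive statistics for one Likert item scored 1-5.

    `responses` holds one entry per student in the class; None marks a
    student who did not answer the item.
    """
    answered = [r for r in responses if r is not None]
    if not answered:
        raise ValueError("no responses to summarise")
    n = len(answered)
    return {
        "n": n,
        "response_rate": n / len(responses),  # share of the class that answered
        "mean": mean(answered),
        # Assumption: 4-5 count as agreement, 1-2 as disagreement, 3 is neutral.
        "pct_agree": 100 * sum(1 for r in answered if r >= 4) / n,
        "pct_disagree": 100 * sum(1 for r in answered if r <= 2) / n,
    }


def keyword_frequencies(comments, keywords):
    """Count how many comments mention each keyword (case-insensitive substring match)."""
    counts = Counter()
    for comment in comments:
        text = comment.lower()
        for keyword in keywords:
            if keyword.lower() in text:
                counts[keyword] += 1
    return counts


if __name__ == "__main__":
    # Hypothetical ratings for one item; None = no answer.
    print(summarise_item([5, 4, 4, 3, 2, None]))
    comments = ["Healthy competition pushed me", "I saw different perspectives",
                "The competition was stressful"]
    print(keyword_frequencies(comments, ["competition", "perspective"]))
```

As a check on the arithmetic used in the Results, a share of 25 out of 35 respondents corresponds to 25/35 ≈ 71.4%. Substring matching will miss synonyms and paraphrases, so in practice the keyword counts would need manual review.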

5. Results

5.1. Students’ Prior Knowledge and Exposure to RPTAs

Table 2 shows the students’ level of prior knowledge of, and prior exposure to, reciprocal peer assessments as teaching and learning strategies. Of the 35 respondents, 60% confirmed that they had no prior exposure to reciprocal peer tutorial assessments as a strategy for collaborative learning during tutorials. On the other hand, 25 of the 35 respondents (71.4%) confirmed familiarity with the expectations of the reciprocal peer assessments adopted in the present study. The high level of familiarity among students was expected, since the procedure and conditions of engagement of the RPTA were distributed at the start of the teaching period.

Table 2.

Students’ prior knowledge and exposure to peer tutorial assessments.

5.2. Impact of RPTA on Disciplinary Learning

Table 3 shows the impact of reciprocal peer tutorial assessment on disciplinary learning. The objective was to evaluate the impact of RPTA strategy on the understanding of the disciplinary concepts covered in the course, and also to evaluate how the current interventions enhanced students’ own learning and interest in the subject.

Table 3.

Impact of reciprocal peer tutorial assessment on disciplinary learning.

Notably, only 32% of the respondents believed that the RPTA had a positive impact on their understanding of the disciplinary content. Furthermore, only 29% of the respondents indicated that peer tutorial assessment increased their interest in the subject.

In addition, only 50% of the respondents confirmed that peer assessment in tutorials enhanced their own learning (Table 3). Despite the low ratings in the Likert-scale responses, 31 students responded to the open-ended question on how the RPTA positively impacted their learning experiences. From the text analysis of students’ responses, the most frequently cited benefits included healthy competition among students (freq. ×13); different perspectives on problem solving (freq. ×9); opportunities for intellectual debate, learning more about concepts, and identifying common mistakes (freq. ×6); and exchange of ideas through positive interaction between the facilitator and students (freq. ×3). According to one respondent, “the different responses from peers increased my understanding of concepts, and I was able to see how peers viewed different concepts”. Other respondents cited the opportunity to understand concepts better when they were explained in simple terms by peers, and the greater clarity gained from the different perspectives/approaches to the work that emerged from peer discussions.

The RPTA strategy was deliberately designed to emphasise testing disciplinary knowledge using short theoretical and computational tutorial practice questions. The majority of the respondents (97%) agreed on the need for a high understanding of the disciplinary concepts in reciprocal peer tutorial assessments (Table 3). Six respondents stated that the approach was a good and effective way to force students to engage with concepts and prepare beforehand. Nevertheless, some respondents (freq. ×5) doubted their peers’ level of disciplinary knowledge, and felt that they were marked down due to the assessor’s lack of knowledge. In particular, some respondents found some of their peers’ responses confusing, and succinctly stated that it was “confusing to read someone’s work if you don’t understand the concepts”.

Based on the analysis of the open-ended questions, several reasons were cited as major constraints to disciplinary understanding and interest in the subject (freq. ×13). Commonly cited reasons included time constraints in preparing for the tutorial tests; underpreparedness leading to anxiety over the impact of the assessments on the year mark; too much work resulting in unnecessary stress, anxiety and pressure; and a deliberate focus on marks rather than real learning. Since the scheduled dates for the tutorial assessments sometimes coincided with assessments in other courses (freq. ×10), the increased workload meant that some students were underprepared for the tutorial practice test and were forced to cram. Indeed, one respondent succinctly stated that the high workload resulting from the RPTA practices “forced me to spot questions rather than fully applying my mind”.

5.3. Students’ Perceptions and Preferences on the Peer Tutorial Assessments

The final set of questions was designed to assess the students’ views on the overall peer tutorial assessment process. The students were asked to rate their own objectivity, whether they enjoyed assessing their peers, and whether they would recommend the same approach in other courses. The results reveal mixed perceptions of peer tutorial assessment (Table 4). Interestingly, 51% of the respondents were happy with the way their peers evaluated their work. However, only 17% indicated that they enjoyed assessing their peers. Apparently, the discomfort with assessing peers arose from students doubting their own capability to evaluate peers whom they perceived to be more knowledgeable than themselves. In the open-ended questions, some respondents stated that they felt incompetent and were embarrassed by their poor performance in the tutorial tests. Overall, 60% acknowledged that they were objective in assessing their peers, while 66% confirmed that they did not consider the reciprocal peer tutorial assessments to be an opportunity to settle grudges.

Table 4.

Impact of reciprocal peer tutorial assessment strategy on students’ satisfaction.

Table 5 shows the students’ preferences regarding RPTA. Despite the obvious opportunities to discuss the content and learn from their peers, 60% of the respondents would not recommend the use of reciprocal peer assessments in other courses (Table 4). Overall, 51% of the respondents indicated that the exercise was too much work for them, and expressed an explicit preference for replacing the tutorial tests with a take-home assignment. Other reasons cited for the negative perception of reciprocal peer tutorial assessments included difficulties with assessing peers’ work, opportunities for peers to cheat and award each other marks, and the RPTA exercise being time-consuming and boring.

Table 5.

Students’ preferences to RPTA.

6. Discussion

Based on a two-stage reciprocal peer evaluation in tutorial tests, this study explored opportunities for collaborative learning to enhance students’ disciplinary learning and engagement in a capstone course in Process Metallurgy. Tutorial-based assessments are an important platform for providing both academic and personal support [2,7]. Previous studies attest to the importance of reciprocal peer tutoring and its variants as tools for reflective and self-directed learning, for providing feedback, and for identifying strengths and weaknesses in students’ learning [5,7,10,11,12,14,16,18].

Based on students’ responses, the opportunity for intellectual debate and exposure to peers’ different perspectives on problem solving had a positive impact on the understanding of disciplinary concepts. Some students reported understanding the concepts better when these were explained in simple terms by their peers. Indeed, several studies affirm that students who studied with reciprocal peer tutoring demonstrated a better understanding of the material tested [5,14,15,17]. Since the facilitator’s role was reduced to umpiring, timely feedback from peers also reduced the pressure of dealing with large tutorial cohorts [11,12].

Furthermore, prior understanding of the disciplinary concepts is a key prerequisite for students to benefit fully from RPTA [5]. This realisation, together with the positive effect of RPTA in incentivising students to prepare beforehand, provides synergistic benefits for self-directed learning. Thus, reciprocal peer tutorial assessments provide a good and effective way to compel students to engage with disciplinary concepts and prepare beforehand, as well as to help them identify their competencies and weaknesses [5,7,9,12].

An in-depth analysis of the open-ended responses revealed that reciprocal peer assessment in tutorial tasks fostered healthy competition among students. The beneficial effects of healthy competition on students’ learning have been widely explored in game-based learning [23,24]. These studies proposed that competition is vital to student learning by providing additional challenges and motivation, as well as opportunities for active participation in the learning process. In this case, therefore, RPTA can act as a motivational trigger that stimulates students’ engagement and persistence in learning activities. In other words, when in healthy competition with others, students tend to work harder and, in the process, improve their knowledge [24].

Nevertheless, competition among students can also have unintended negative impacts on disciplinary learning and students’ engagement [23,24,25]. Some respondents noted that the RPTA provided opportunities for peers to cheat and award each other marks. Since the RPTA contributed 20% to the year mark for the course, competition linked to external rewards in the form of year marks can lower students’ sense of control, leading to reduced intrinsic motivation [25]. Indeed, some respondents stated that the RPTA exercise was too much work for them, time-consuming and boring, and expressed an explicit preference for replacing the tutorial tests with a take-home assessment task. Furthermore, the anxiety over year marks highlighted by students may have significant implications for the surface and superficial learning strategies apparently adopted by some of the students [20,22,27,28,29,30,31,32]. As opposed to deep learning approaches, in which a student engages meaningfully with disciplinary content, there was a tendency towards surface learning approaches associated with memorisation without meaningful engagement with the concepts involved [20,22,27,28,29,30,31,32]. Although not validated by the findings of the present study, the negative perceptions of, and preferences regarding, RPTA may have contributed to the undesirable learning strategies adopted by some of the students.

The findings highlighted students’ discomfort in assessing peers whom they perceived to be more knowledgeable than themselves. Indeed, some students stated that they felt incompetent and were embarrassed by their poor performance in the tutorial assessments. Such students may be discouraged by perceived persistent underperformance relative to their peers [21,23,24,34,35,36,37]. This sense of social comparison can negatively affect students’ self-efficacy, thereby undermining performance [21,23,24,34,35,36,37]. Self-efficacy, defined as the personal belief that an individual has the means and capabilities to attain prescribed learning goals, is a key component of self-regulated learning [34,35,36,37]. Whereas students with high self-efficacy tend to be motivated to take up more challenges and take control of their own learning, those with low self-efficacy are more likely to become frustrated, give up, and/or engage in unethical behaviour to boost their year marks [34,35,36,37].

Furthermore, the reciprocity of learning among peers is the fundamental tenet of collaborative learning in the current RPTA strategy. A study evaluating students’ attitudes towards reciprocal peer tutoring identified helpful group members, opportunities to work in groups, feedback from groups, comfort from peer interaction, and opportunities to share knowledge as some of the obvious benefits of reciprocal peer tutoring [16]. Other scholars proposed that the success of reciprocal peer tutoring lies in the social and cognitive congruence between peers [17,38]. Thus, for RPTA to be successful, it has to be based on a high level of mutual assistance, mutual trust, social acceptance and positive reinforcement among the students.

It is also likely that the benefits of the RPTA strategy in the present study were negated by low levels of trust among peers. For example, some students explicitly stated that they doubted their peers’ level of disciplinary knowledge, and felt that they were marked down due to the assessor’s lack of knowledge. These challenges are consistent with findings in previous studies [12,19,20,26,39,40]. In particular, previous studies highlighted students’ doubts about the reliability, validity and objectivity of peer assessments, and students’ preference to learn from an expert academic rather than from inexperienced peers [12,19,20,26,39,40]. An earlier study by Gray [12] also concluded that students tended to be hard on their colleagues, seemed reluctant to award marks for anything other than obviously correct answers, and struggled to comprehend poorly expressed answers. Despite these challenges, however, RPTA gave the students opportunities to understand the expectations of the assessments in the course under study.

7. Conclusions

The findings from this exploratory study highlighted the delicate balance between the obvious benefits and the unintended consequences of adopting reciprocal peer assessments during tutorials. Owing to the complexity of the factors affecting student learning in any given setting, the synergistic benefits of RPTA were negated by factors such as negative perceptions of, and preferences regarding, the assessment methods, low levels of trust among peers, anxiety over year marks, time constraints, and discomfort due to perceived incompetence relative to peers. The link between disciplinary understanding and engagement, on the one hand, and the overall pass rate in the course, on the other, requires further analysis and is the subject of subsequent phases of the study. Following a design-based research methodology involving multiple research cycles of design, testing and observation, evaluation and reflection [41,42,43,44], the next stage of the research is to conduct detailed trend analyses of students’ responses and to correlate the trends with summative disciplinary competencies over a period of three academic years. Overall, the results from this study form an important basis for the design and continuous improvement of peer learning interventions in the Process Metallurgy discipline.

Funding

This research received no external funding.

Acknowledgments

The author acknowledges the support of the School of Chemical and Metallurgical Engineering, University of the Witwatersrand, Johannesburg.

Conflicts of Interest

The author declares no conflict of interest.

Appendix A. Questionnaire Used for Data Collection

Course Name: Physical Chemistry of Iron and Steel Manufacturing Process

Course Coordinator: __________________ Contact Details: __________________

Date: _________________________________

Rating scale: 1 = Strongly disagree; 2 = Disagree; 3 = Neutral; 4 = Agree; 5 = Strongly agree

| No. | Statement | 1 | 2 | 3 | 4 | 5 |
|---|---|---|---|---|---|---|
| 1 | The expectations of peer assessment in tutorials were explained to me in advance | | | | | |
| 2 | Prior to this course, I was exposed to the use of peer assessment in tutorial sessions | | | | | |
| 3 | I enjoyed assessing my peers in the tutorials | | | | | |
| 4 | Peer assessment enhanced my understanding of the concepts covered in the tutorials | | | | | |
| 5 | Peer assessment in this course was too much work for me | | | | | |
| 6 | Assessing my peers was an opportunity to settle grudges | | | | | |
| 7 | Peer assessment in tutorials enhanced my own learning | | | | | |
| 8 | I am happy with the way my peer(s) evaluated my work | | | | | |
| 9 | I was objective in assessing my peers | | | | | |
| 10 | In order to assess my peers, I need to have a high level of understanding of the concepts | | | | | |
| 11 | Peer tutorial assessment increased my interest in the subject | | | | | |
| 12 | I recommend that we adopt peer tutorial assessment in other courses | | | | | |
| 13 | Please stop the peer tutorial assessments | | | | | |

Comment on how peer assessments in the tutorials enhanced your own learning: __________________________________________________________________________________________________________________________________________________________________________

Comment on how peer assessments in the tutorials negatively impacted your own learning: __________________________________________________________________________________________________________________________________________________________________________

Comment on how the course coordinator can improve the peer assessments in the tutorials: __________________________________________________________________________________________________________________________________________________________________________

Any other information you would like to share: __________________________________________________________________________________________________________________________________________________________________________
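As an illustration only, responses to the 13 rating items above could be tabulated with a short script such as the Python sketch below. It is not the analysis reported in this study. The function names, the hypothetical response data, and the decision to reverse-code items 5, 6 and 13 (whose wording means that agreement signals a negative view of peer assessment) are all assumptions made for the example.

```python
from statistics import median

# Assumption: items 5, 6 and 13 are negatively worded, so agreement
# indicates an unfavourable view of peer tutorial assessment.
NEGATIVE_ITEMS = {5, 6, 13}


def reverse_code(item: int, rating: int) -> int:
    """Map a 1-5 rating so that a higher value always means a more favourable view."""
    if not 1 <= rating <= 5:
        raise ValueError(f"rating {rating} is outside the 1-5 scale")
    return 6 - rating if item in NEGATIVE_ITEMS else rating


def summarise(responses: list[dict[int, int]]) -> dict[int, dict[str, float]]:
    """Per-item count, median and percentage rating 4 (Agree) or 5 (Strongly agree).

    Uses the raw ratings, so 'agree' keeps the literal meaning of each statement.
    """
    summary = {}
    items = sorted({item for response in responses for item in response})
    for item in items:
        ratings = [response[item] for response in responses if item in response]
        agree = sum(1 for rating in ratings if rating >= 4)
        summary[item] = {
            "n": len(ratings),
            "median": median(ratings),
            "pct_agree": 100.0 * agree / len(ratings),
        }
    return summary


def favourability(response: dict[int, int]) -> float:
    """Mean reverse-coded score for one respondent (1 = least, 5 = most favourable)."""
    if not response:
        raise ValueError("response contains no rated items")
    coded = [reverse_code(item, rating) for item, rating in response.items()]
    return sum(coded) / len(coded)


if __name__ == "__main__":
    # Hypothetical responses: item number -> rating (not data from this study).
    responses = [{1: 4, 5: 2, 13: 1}, {1: 5, 5: 4, 13: 2}]
    print(summarise(responses)[1])  # {'n': 2, 'median': 4.5, 'pct_agree': 100.0}
    print(round(favourability(responses[0]), 2))  # 4.33
```

Keeping the per-item summary on raw ratings, and applying reverse-coding only when items are combined into a composite score, preserves the literal meaning of each statement in the item-level results.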

References

1. Webb, G. The tutorial method, learning strategies and student participation in tutorials: Some problems and suggested solutions. Program. Learn. Educ. Technol. 1983, 20, 117–121.

2. Roux, C. Holistic curriculum development: Tutoring as a support process. South Afr. J. Educ. 2009, 29, 17–32.

3. Karve, A.V. Tutorials: Students’ viewpoint. Indian J. Pharmacol. 2006, 38, 198–199.

4. Topping, K.J. Trends in peer assessment. Educ. Psychol. 2005, 25, 631–645.

5. Gazula, S.; McKenna, L.; Cooper, S.; Paliadelis, P. A systematic review of reciprocal peer tutoring within tertiary health profession education programs. Health Prof. Educ. 2017, 3, 64–78.

6. Vygotsky, L.S. Thought and Language, Revised Edition; The MIT Press: Cambridge, MA, USA, 1986.

7. Falchikov, N. Peer feedback marking: Developing peer assessment. Innov. Educ. Train. Int. 1995, 32, 175–187.

8. Boud, D.; Cohen, R.; Sampson, J. Peer learning and assessment. Assess. Eval. High. Educ. 1999, 24, 413–426.

9. Eva, K.W. Assessing tutorial-based assessment. Adv. Health Sci. Educ. 2001, 6, 243–257.

10. Schleyer, G.K.; Langdon, G.S.; James, S. Peer tutoring in conceptual design. Eur. J. Eng. Educ. 2005, 30, 245–254.

11. O’Moore, L.M.; Baldock, T.E. Peer assessment learning sessions (PALS): An innovative feedback technique for large engineering classes. Eur. J. Eng. Educ. 2007, 32, 43–55.

12. Gray, T.G.F. An exercise in improving the potential of exams for learning. Eur. J. Eng. Educ. 1987, 12, 311–323.

13. Hersam, M.C.; Luna, M.; Light, G. Implementation of interdisciplinary group learning and peer assessment in a Nanotechnology Engineering course. J. Eng. Educ. 2004, 93, 49–57.

14. Fantuzzo, J.W.; Riggio, R.E.; Connelly, S.; Dimeff, L. Effects of reciprocal peer tutoring on academic achievement and psychological adjustment: A component analysis. J. Educ. Psychol. 1989, 81, 172–177.

15. Griffin, M.M.; Griffin, B.W. An investigation of the effects of reciprocal peer tutoring on achievement, self-efficacy, and test anxiety. Contemp. Educ. Psychol. 1998, 23, 298–311.

16. Cheng, Y.C.; Ku, H.Y. An investigation of the effects of reciprocal peer tutoring. Comput. Hum. Behav. 2009, 25, 40–49.

17. Dioso-Henson, L. The effect of reciprocal peer tutoring and non-reciprocal peer tutoring on the performance of students in college physics. Res. Educ. 2012, 87, 34–49.

18. Ballantyne, R.; Hughes, K.; Mylonas, A. Developing procedures for implementing peer assessment in large classes using action research process. Assess. Eval. High. Educ. 2002, 27, 427–441.

19. Vickerman, P. Student perspectives on formative peer assessment: An attempt to deepen learning? Assess. Eval. High. Educ. 2009, 34, 221–230.

20. Lladó, A.P.; Soley, L.F.; Sansbelló, R.M.F.; Pujolras, G.A.; Planella, J.P.; Roura-Pascual, N.; Martínez, J.J.S.; Moreno, L.M. Student perceptions of peer assessment: An interdisciplinary study. Assess. Eval. High. Educ. 2014, 39, 592–610.

21. Bandura, A.; Locke, E.A. Negative self-efficacy and goal effects revisited. J. Appl. Psychol. 2003, 88, 87–99.

22. Biggs, J.; Tang, C. Teaching for Quality Learning at University: What the Student Does, 4th ed.; Society for Research into Higher Education and Open University Press: Berkshire, England, 2011; pp. 16–33.

23. Vandercruysse, S.; Vandewaetere, M.; Cornillie, F.; Clarebout, G. Competition and students’ perceptions in a game-based language learning environment. Educ. Technol. Res. Dev. 2013, 61, 927–950.

24. Chen, C.H.; Liu, J.H.; Shou, W.C. How competition in a game-based science learning environment influences students’ learning achievement, flow experience, and learning behavioural patterns. Educ. Technol. Soc. 2018, 21, 164–176.

25. Deci, E.L.; Koestner, R.; Ryan, R.M. A meta-analytic review of experiments examining the effects of extrinsic rewards on intrinsic motivation. Psychol. Bull. 1999, 125, 627–668.

26. Liu, N.F.; Carless, D. Peer feedback: The learning elements of peer assessment. Teach. High. Educ. 2006, 11, 279–290.

27. Weinstein, C.E.; Cubberly, W.E.; Richardson, F.C. The effects of test anxiety on learning at superficial and deep levels of processing. Contemp. Educ. Psychol. 1982, 7, 107–112.

28. Birenbaum, M. Toward adaptive assessment: The students’ angle. Stud. Educ. Eval. 1994, 20, 239–255.

29. Birenbaum, M. Assessment preferences and their relationship to learning strategies and orientations. High. Educ. 1997, 33, 71–84.

30. Struyven, K.; Dochy, F.; Janssens, S. Students’ perceptions about evaluation and assessment in higher education: A review. Assess. Eval. High. Educ. 2005, 30, 331–347.

31. Van de Watering, G.; van der Rijt, J. Teachers’ and students’ perceptions of assessments: A review and a study into the ability and accuracy of estimating the difficulty levels of assessment items. Educ. Res. Rev. 2006, 1, 133–147.

32. Van de Watering, G.; Gijbels, D.; Dochy, F.; van der Rijt, J. Students’ assessment preferences, perceptions of assessment and their relationships to study results. High. Educ. 2008, 56, 645–658.

33. Drew, S. Perceptions of what helps learn and develop in education. Teach. High. Educ. 2001, 6, 309–331.

34. Bandura, A. Perceived self-efficacy in cognitive development and functioning. Educ. Psychol. 1993, 28, 117–148.

35. Zimmerman, B.J. Self-efficacy: An essential motive to learn. Contemp. Educ. Psychol. 2000, 25, 82–91.

36. Asghar, A. Reciprocal peer coaching and its use as a formative assessment strategy for first-year students. Assess. Eval. High. Educ. 2010, 35, 403–417.

37. Papinczak, T.; Young, L.; Groves, M.; Haynes, M. An analysis of peer, self, and tutor assessment in problem-based learning tutorials. Med. Teach. 2007, 29, e122–e132.

38. Schmidt, H.G.; Moust, J.H.C. What makes a tutor effective? A structural equations modelling approach to learning in problem-based curricula. Acad. Med. 1995, 70, 708–714.

39. Krych, A.J.; March, C.N.; Bryan, R.E.; Peake, B.J.; Pawlina, W.; Carmichael, S.W. Reciprocal peer teaching: Students teaching students in Gross Anatomy laboratory. Clin. Anat. 2005, 18, 296–301.

40. Bennett, D.; O’Flynn, S.; Kelly, M. Peer assisted learning in the clinical setting: An activity systems analysis. Adv. Health Sci. Educ. 2015, 20, 595–610.

41. Design-Based Research Collective. Design-based research: An emerging paradigm for educational inquiry. Educ. Res. 2003, 32, 5–8.

42. Peer Group. What is Design-Based Research? 2006. Available online: http://dbr.coe.uga.edu/explain01.htm#first (accessed on 2 August 2018).

43. Anderson, T.; Shattuck, J. Design-based research: A decade of progress in education research? Educ. Res. 2012, 41, 16–25.

44. Štemberger, T.; Cencič, M. Design-based research in an educational research context. J. Contemp. Educ. Stud. 2014, 1, 62–75.

© 2019 by the author. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).