1. Introduction

In a world that is saturated with digital devices of all kinds, and where student ownership of laptop computers is above 90% ([1]; Pagram et al. [2]), it is odd that universities around Australia cling to the paper-based handwritten exam. This paper reports on an e-exam system that was developed to redress this imbalance. Specifically, it reports on the Western Australian part of a national research project that trialed an e-exam system in a variety of universities and faculties. In Western Australia the faculty chosen was Education at Edith Cowan University (ECU), one of the largest teacher education schools in Western Australia (WA).

The national project had the following expected outcomes:

To ascertain students’ preferences in relation to Information and Communication Technology (ICT) use as it relates to supervised assessment.

To provide insight into student experiences of the use of an e-exam system in a supervised assessment.

To identify any gaps between student expectations, experiences, expected technological capabilities of the e-exam system and real-life practice.

To provide insight into student performance by comparing/contrasting typed versus handwritten forms of high stakes assessments.

To contribute to guidance for academics in regard to deployment of ICT enhanced supervised assessments.

To contribute to identification of support needed by staff to develop their use of ICT in the supervised assessment process.

The project was designed to explore and contrast student expectations and experiences of handwritten versus typed responses within supervised assessments, as well as current practice and best practice with respect to ICT use in supervised assessment. It is anticipated that the findings will be used as part of a dialogue with lecturers, students, administrators, and curriculum designers to inform the development and potential deployment of technology-enhanced supervised assessments. The aim is to allow for greater pedagogical richness in supervised assessments that take full advantage of the affordances of ICTs, with a view to increasing student motivation and engagement.

This paper will begin with a review of the literature around e-examinations followed by a description of the context of the ECU study. Following this are a number of sections explaining the method and findings of different stages of the ECU implementation. The paper is brought to a close with conclusions and recommendations.

2. Literature

The expansion of ICT has given birth to the use of current electronic exams (e-exams). For the purposes of this paper, e-exams are defined as timed computer-based summative assessments conducted using a computer running a standardized operating system. An advantage of this is that students already possess a familiarity with the technologies used for conducting these e-exams [3]. A recent survey of students at a large Australian university conducted during 2012 indicated 98% ownership of mobile WiFi-enabled devices with laptop ownership the highest at 91% [4], so there is no doubt students have the digital fluency and access to undertake examinations using a computer.

E-exams are having a significant impact on assessment and have been widely used in higher education around the world [5,6]. They are considered to have many important advantages over traditional paper-based methods, such as a decrease in cost [7], marking automation [8,9], adaptive testing [10], increased assessment frequency [11], and the ability to test greater numbers of learners [12]. An additional benefit of e-exams over paper-based examinations is that these systems allow the use of text, images, audio, video, and interactive virtual environments [13]. However, there are continuing challenges related to the use of e-exams, including security and human interference [14], an incapacity to evaluate higher-order thinking competencies [10], the inappropriateness of technological infrastructures [7], and the complexity of the system, which means that significant training may be needed [15,16].

Several studies have been conducted on the perceptions of students and lecturers with regard to the application of e-exams in higher education. Often students held positive attitudes toward e-exams because of advantages such as time efficiency, low cost, and perceived improvement of assessment quality [9]. However, students were not unanimously in favor and gave reasons such as problems logging on, speed of typing, and unfamiliarity with the exam system and software [17]. Lecturers also held a variety of opinions. Some research found that the majority of lecturers preferred e-exams to traditional methods of examination [15,16,18], while other lecturers were resistant to adopting e-exams because they were reluctant to change established examination habits and norms [18,19,20].

Research undertaken by Al-Qdah and Ababneh [21] demonstrated that different question types and different disciplines affect the results of e-exams. For example, in Computer Science and Information Technology, students have been found to perform better on multiple-choice and true-or-false questions. Other studies using both computing and English majors found that many students completed e-exams in 30% less time than paper-based exams [18], while Santoso et al. [9] believed that students from a non-IT background would find difficulties with e-exams [17]. Therefore, the type of questions and the nature of the discipline need to be taken into consideration when designing e-exams.

3. Context of the Western Australian Trials

The university where the research took place is situated in the metropolitan area of Perth, Western Australia. It is a large university with approximately 30,000 students, 17,546 of whom are female, spread over three campuses. Historically, the University has its foundations in teacher education and training, and its School of Education is the largest in Western Australia, with 5617 students and 104 academic staff [22].

It was decided to undertake the research using students from the university’s one-year Graduate Diploma in Education (secondary) course with the two specializations chosen being design and technology and computing. These were chosen as the subjects are more challenging in terms of content for an e-exam system while minimizing other variables such as computer literacy (the students in these subjects tending to have a high level of computer literacy).

The e-exam environment used in the National Teaching and Learning project was a bespoke USB based system that allowed a closed (no internet access) exam to be administered on a Windows or Macintosh computer. The computer simply boots from the USB drive instead of its normal hard-drive and this locks out any network, hard drive or wireless access. This results in the students being locked within the exam environment.

The topics for the WA trial were specifically chosen to push the limits of the e-exam system. These were safe operating procedures (in design and technology), a highly graphical exam, and computer programming using the Python language (in computing), which required a modified e-exam environment with a Python language editor.

4. Method and Findings: Production and Management

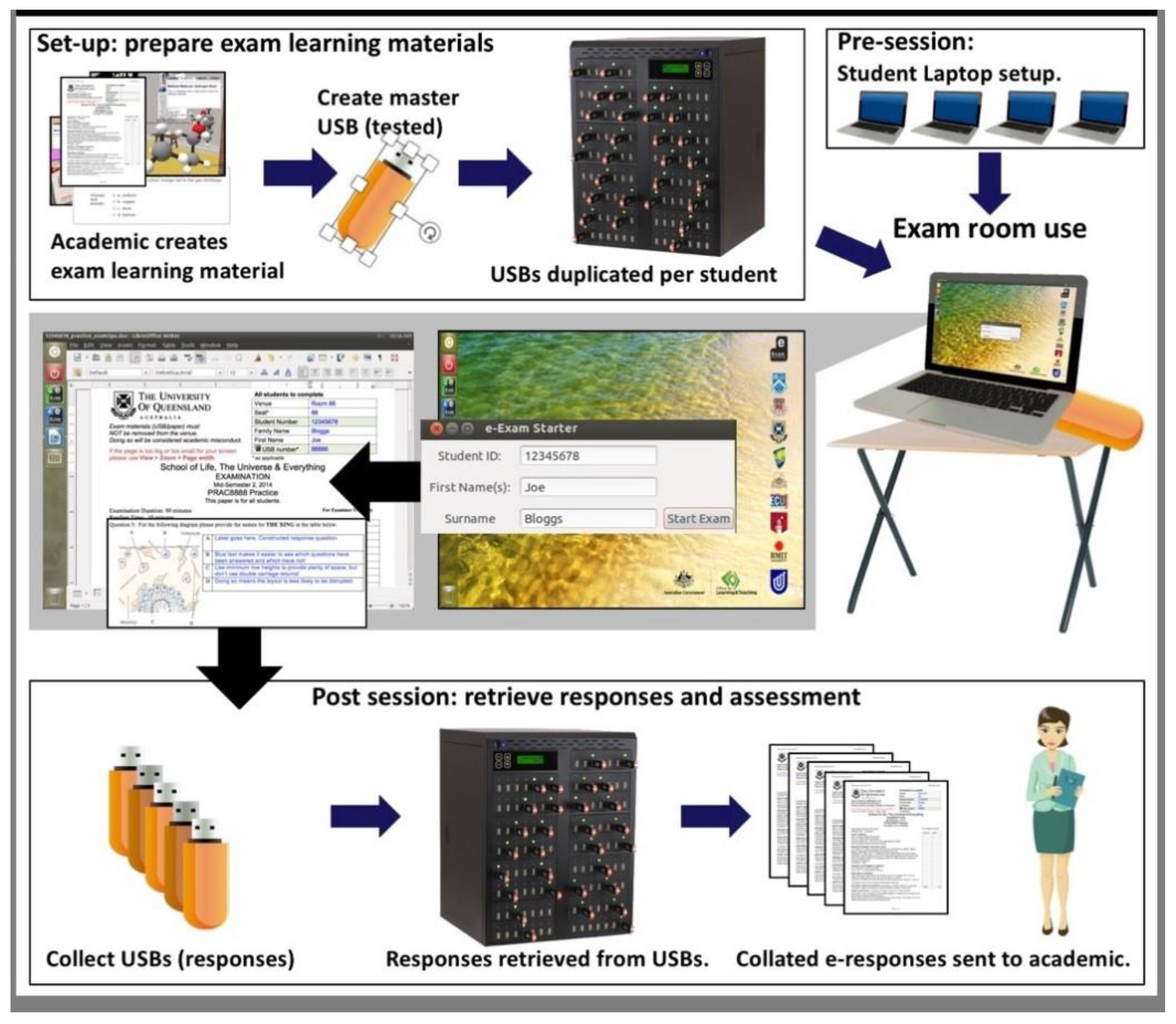

The process of creating and administering the e-exam can be seen in Figure 1. One of the researchers prepared the exam materials and retrieved the student responses for marking by the lecturers.

In each class, there were between 9 and 15 students, and the lecturers provided a Word version of their exam to the researchers. From these, two master copies of each exam were created. The first master was a test version that did not contain the actual questions that would be in the exam and was used in a pre-trial test given to each class one week before the actual exam. The idea of this pre-trial was to train the students in the use of the e-exam environment. The actual exam was identical, but included the examination questions themselves. Both the trial and the actual exam masters were tested by the researchers and the lecturers.

A USB duplicator was used to produce the examination USB drives for student use. These were then manually checked to ensure all files had been copied correctly. In each case the exams and trials were invigilated by the research team.
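The check described above was carried out manually. As a minimal sketch of how such a check could be automated, assuming the master image and each duplicated drive are mounted as ordinary directories, the following compares SHA-256 digests of every file. The function names and directory layout are hypothetical and are not part of the e-exam system used in the trial.

```python
import hashlib
from pathlib import Path


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def tree_digests(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its digest."""
    return {
        p.relative_to(root).as_posix(): file_digest(p)
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def find_copy_errors(master: Path, copy: Path) -> list[str]:
    """List relative paths that are missing, extra, or different in the copy."""
    expected = tree_digests(master)
    actual = tree_digests(copy)
    return [
        name
        for name in sorted(expected.keys() | actual.keys())
        if expected.get(name) != actual.get(name)
    ]
```

An empty list from `find_copy_errors` would indicate that the duplicated drive matches the master file for file; any returned path identifies a file to re-copy.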

4.1. Design and Technology (D&T) Exam

For the design and technology exam, a paper exam with content based upon safe operating procedures was created. The original paper-based exam consisted of short answer questions responded to on the exam paper itself often in response to a photographic clue. In transferring this Microsoft Word based exam to the e-exam format, considerable editing was required in order to allow the graphics to display correctly while allowing a text box for responses. The trial of this exam took place in the students’ normal classroom (a D&T workshop area) and students were asked to bring along their own laptop computers, with the researchers providing a few university owned ones (these were adapted from the normal university standard operating environment in order to allow booting from the USB) as a backup.

The trial ran with only a few technical hitches, which are outlined in the overall findings section, and students were resistant to using their own technology. The exam itself took place in a computer lab and went well, but the limitations of the word processor provided, or rather its differences from Microsoft Word, were evident. For example, drawing was difficult, and students found navigating the USB-based Linux operating system problematic.

4.2. Computing Exam

For the computing exam, a programming exam based on the Python language was used. As the Python language had been blocked as a security measure in the normal e-exam system, a special version was developed to allow it to run. This then allowed the students to program and save their work to the USB. The trial and exam took place in the students’ normal computer lab classroom, with some students using their own computers for the trial. However, all elected to use the lab computers in the exam.

5. Production and Management Findings

Production of the exams as exam papers took some time as they had to be adapted and, in the case of the computing exam, the system itself needed to be changed. Production of the USBs was a straightforward but time-consuming process, with all USBs needing to be tested as there were occasional failures in the copying process. Invigilation needed to be by staff with some technical knowledge in order to be ready to deal with any problems that may have arisen (however, there were very few). Technical staff were also needed to recover the completed exams from the USBs for the lecturers to mark. Thus, overall, the e-exam needed more time both pre- and post-exam, and additional technical support, when compared to the paper equivalent.

6. Method & Findings: Student Interviews

Immediately following each examination a focus-group style interview was held with four students to investigate the students’ reactions and feelings toward the exam. The interviews were semi-structured, broadly covering issues around handwriting vs computer use, personal vs university technology, exam software and Linux OS ease of use, student enjoyment of approach, and suggestions for improving the system.

6.1. D&T Exam

All interviewed students were very definite about preferring to type exam answers as opposed to using pen and paper. They gave the expected reasons for this related to editing, spelling, legibility, and speed.

While students used university desktops for the exam it was initially thought that the students would use their own technology. However, students were not in favor of this, mostly due to a distrust around booting up their machine with a different operating system and concomitant fear of losing data. Additionally while all students had laptops some of these were corporate machines (for example from the Education Department) and students were even less keen for these to be booted from the USB. In the final analysis, there were not any problems with booting the desktop machines in preparation for the actual examination.

The Linux operating system, software, and general environment did result in some problems for the students. Students expressed that they felt a lack of familiarity with the menus and shortcuts in the software and had some difficulties navigating the file manager associated with the operating system. Despite expressing concerns about the software, students did not, when asked directly, report a strong preference for Microsoft Office over LibreOffice (the word processor provided). At least one student was unable to locate where the files were being saved, and this was indicative of students’ difficulties with the file manager. Students also felt that it would have been better if the system auto-saved their progress.

Finally, students were concerned that parts of the examination were not ‘locked down’ as they could edit the actual questions or delete them accidentally.

6.2. Computing Exam

All interviewed students preferred typing answers to handwriting them. The reasons given were speed and ease of editing.

Students were not in favor of using their own machines for the exam and gave the following reasons:

Inconsistency between machine specifications leading to inequity

Different hardware may not work

One student had older hardware with a noisy fan and was worried about disturbing others

Fear of losing data

As computing students, this group did not express difficulties with the operating system and the file manager. However, they were critical of LibreOffice, particularly the drawing tools that had to be used for flowcharting. They felt dedicated flowcharting software should have been provided, as time was wasted figuring out how to use the LibreOffice drawing tools. Students felt that the programming environment (Python) was well integrated into the system and functioned as expected. Students also commented on the way that windowing works differently in Linux but, if anything, saw this as an advantage.

While the students navigated the file manager well, they still believed that the instructions regarding exactly where files should be saved needed to be clearer.

6.3. Student Interview Findings Overall

The following were the major findings from the focus-group interviews across the two groups:

The machines used for the actual examination booted from the USB and no technical difficulties were experienced

Students prefer to type their exams with reasons given as legibility, spelling, editability, and speed

Students do not want to use their own laptops with reasons given as fear of losing data, inequity, and laptop ownership (in the case of corporate laptops)

Students experienced unfamiliarity with the operating system particularly the Linux file manager

Students experienced unfamiliarity with the office software (in this case LibreOffice)

The Python programming language was integrated well into the system

Specialized software may be needed for some tasks (e.g., flowcharting)

Aspects of the exam needed to be locked down so as to be un-editable

An auto-save function should be included with the OS for the exam

7. Method and Findings: Staff Interviews

The two staff members responsible for the examinations were individually interviewed on the day of the exam. The combined results from these interviews are presented here.

Both staff members expressed that their students would prefer to do an exam by typing rather than handwriting, giving the same reasons as the students with the following additions:

The staff members also agreed with the students in believing it was better to use the university machines than those owned by the students and again they gave the same reasons as the students. Despite the students expressing concern the staff members did not feel that the differences between the e-exam system and software that the students were accustomed to was enough to warrant concern.

Overall, the staff members felt that the system was best for multiple-choice questions and written responses. Disadvantages of the system were the risk of a computer crash and consequent loss of work, a slightly unfamiliar file system, having to change the BIOS settings of computers for USB boot, and unsuitability for subjects with a large practical component due to the limitations of the e-exam system. The staff also expressed that the system should run online with an auto-save function.

8. Method and Findings: Student Survey

The small population sizes in these two exam trials mean that comparisons and/or generalizations to larger populations are impossible. However, this does not make the data meaningless. In a system such as this, designed for high-stakes examination situations, one would hope for strong agreement to positively stated questions even in a small group and this is how this data has been interpreted. That is, in interpreting these charts the authors’ expectations were that if the examination system is viable there would be high-levels of agreement to survey questions.

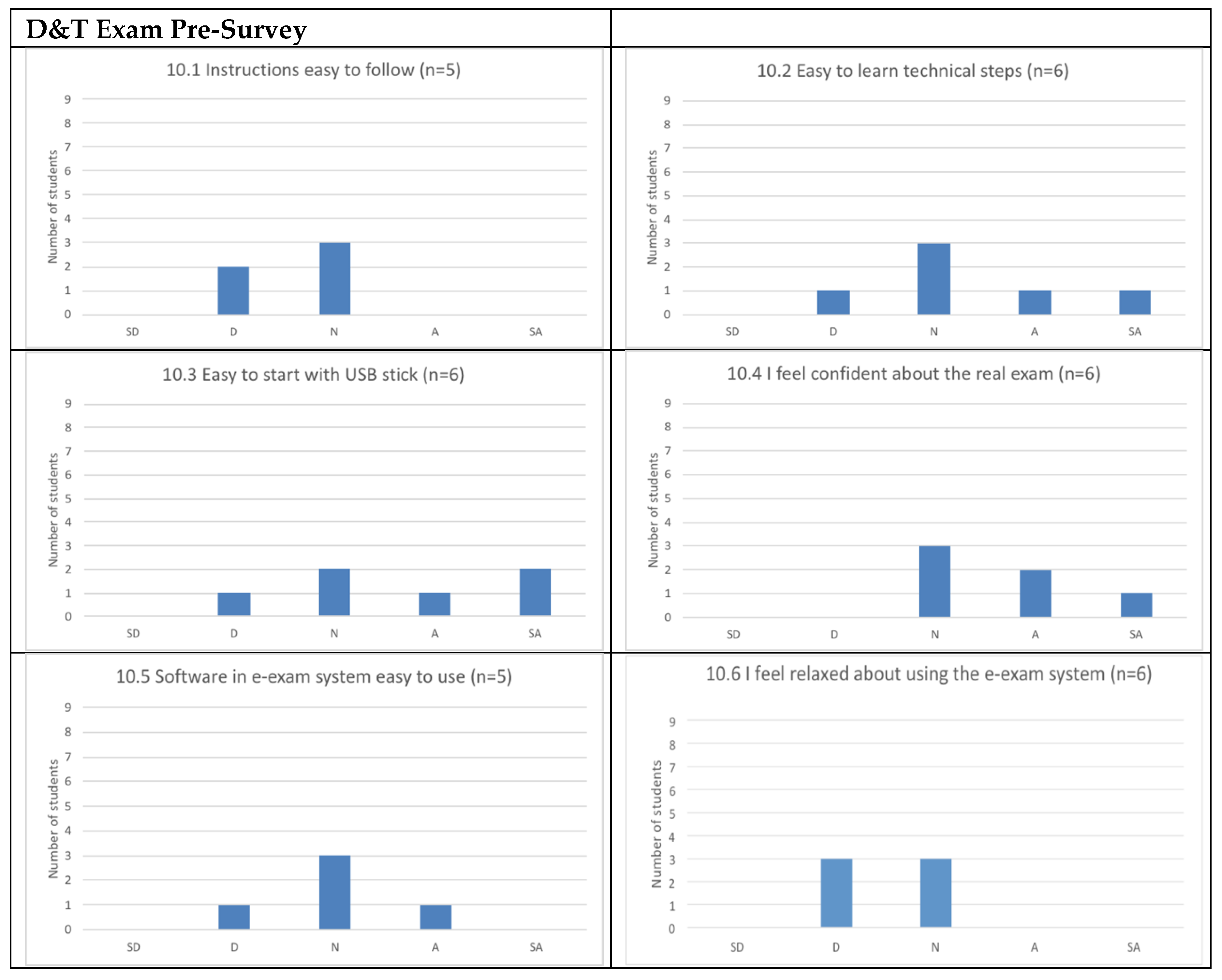

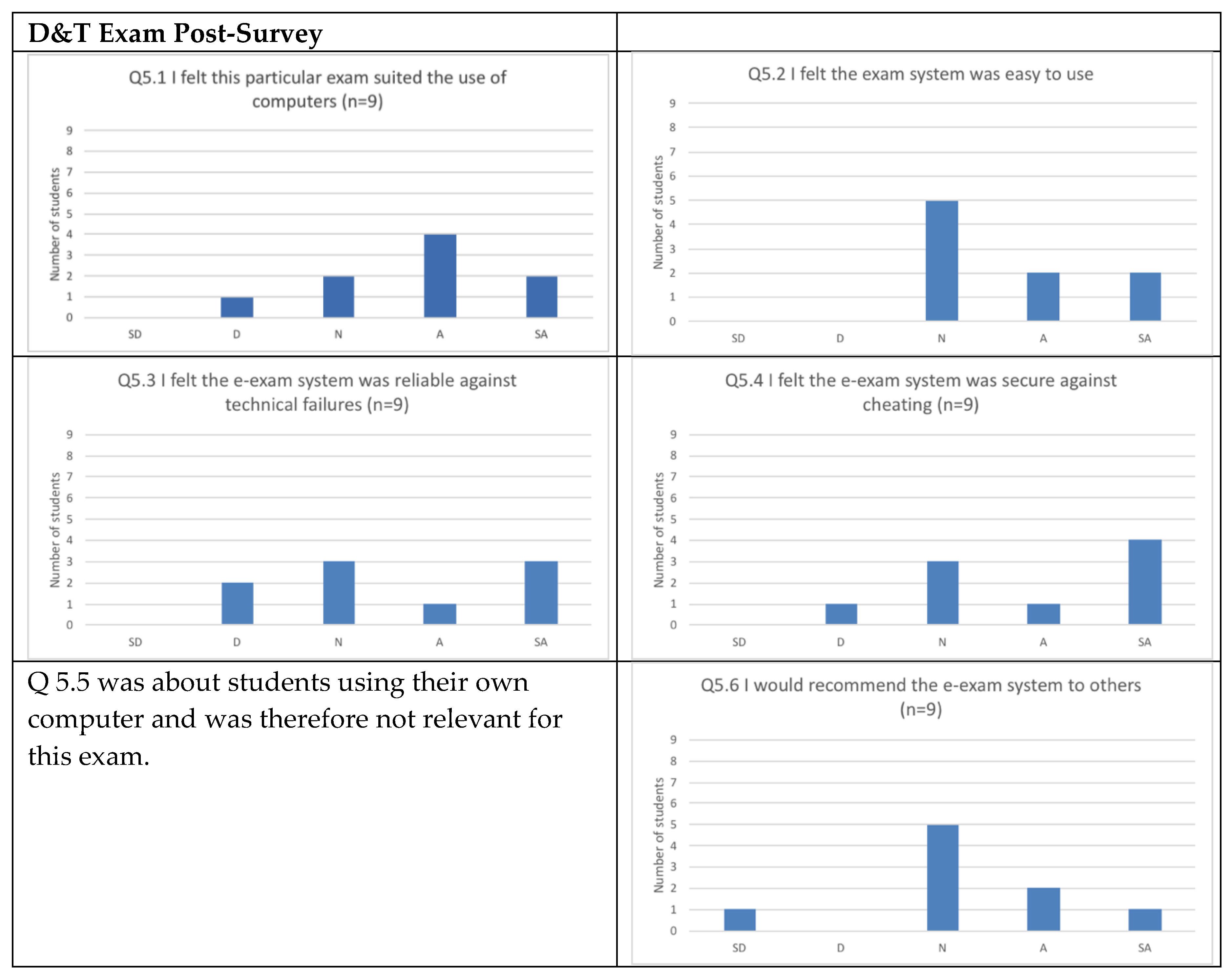

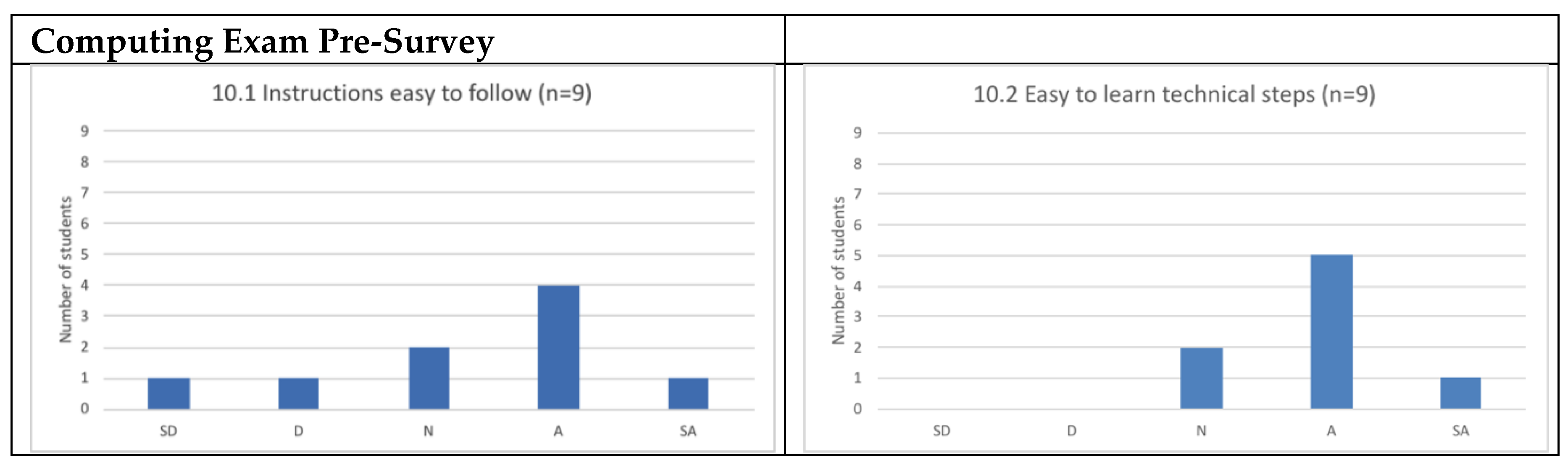

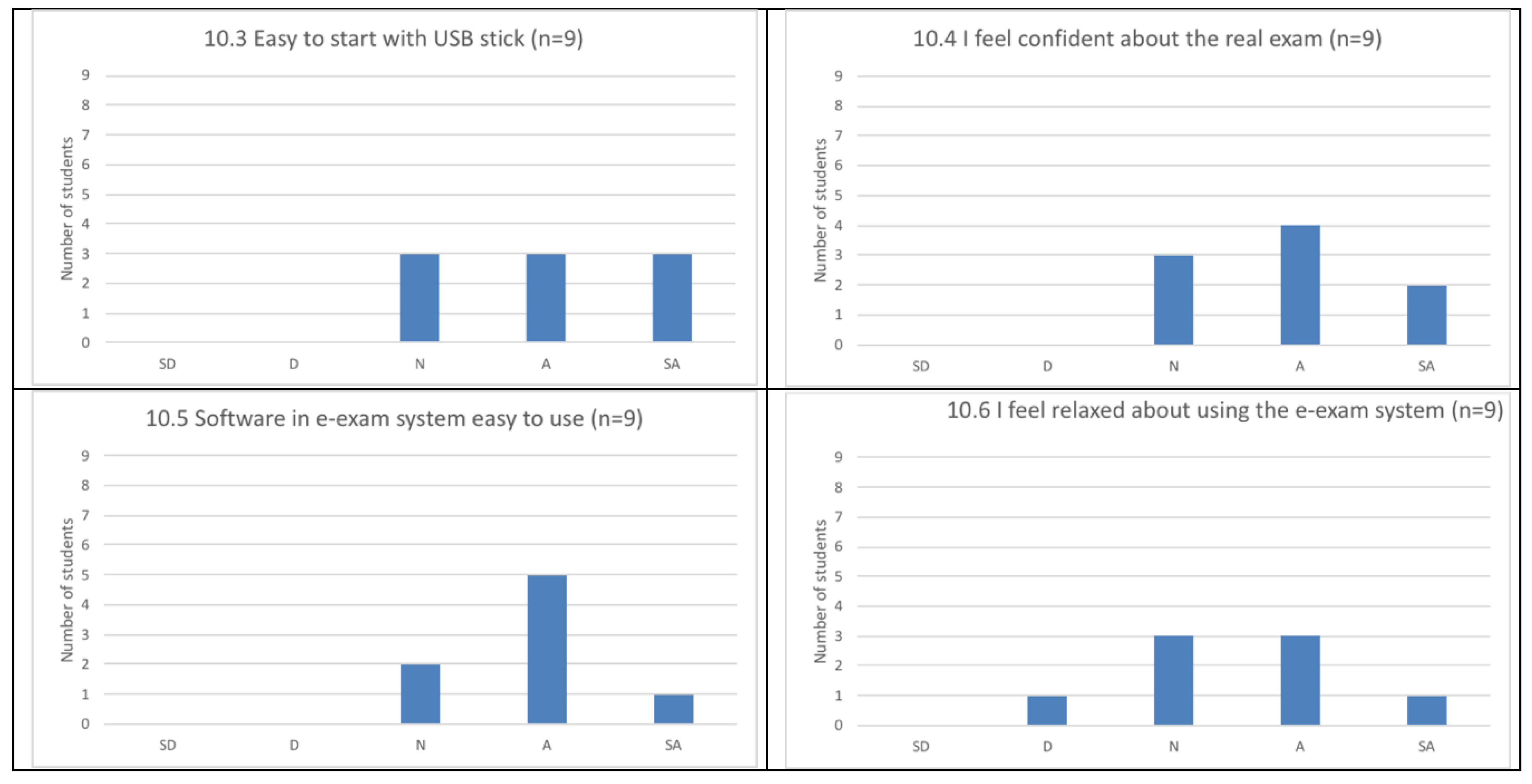

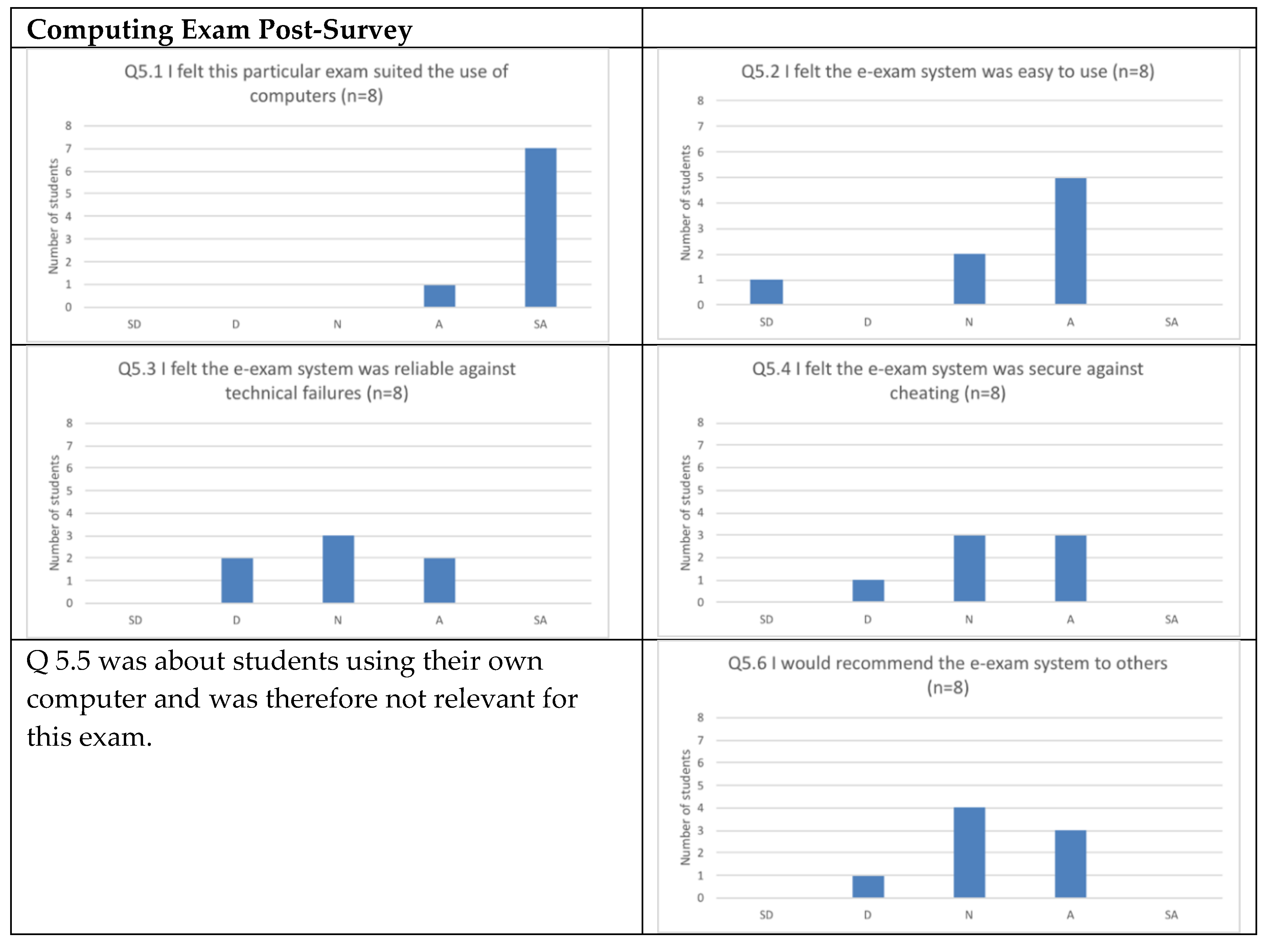

Due to space limitations the results from selected pre and post-survey questions most useful to the current discussion are given here. These selected questions were Likert style questions showing general approval or disapproval toward the e-exam system. The x-axes in the following charts are as follows: SD = strongly disagree, D = disagree, N = Neutral, A = agree, and SA = strongly agree.
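To make the interpretation of such charts concrete, the following minimal sketch tallies responses on the five-point scale and reports the share that agree or strongly agree. The response list is hypothetical and is not data from the study; the function names are likewise illustrative assumptions.

```python
from collections import Counter

# Scale points in the order used on the chart x-axes.
SCALE = ["SD", "D", "N", "A", "SA"]


def tally(responses):
    """Count responses per scale point, keeping the SD..SA order."""
    unknown = set(responses) - set(SCALE)
    if unknown:
        raise ValueError(f"unexpected responses: {sorted(unknown)}")
    counts = Counter(responses)
    return {point: counts[point] for point in SCALE}


def agreement_share(responses):
    """Fraction of responses that are 'A' or 'SA'."""
    counts = tally(responses)
    total = sum(counts.values())
    return (counts["A"] + counts["SA"]) / total if total else 0.0


# Hypothetical responses for one question; not data from the study.
example = ["A", "SA", "N", "A", "D", "A", "N", "SA", "A"]
print(tally(example))
print(agreement_share(example))
```

With groups this small, a single neutral or negative response shifts the agreement share noticeably, which is why the counts themselves are reported alongside any proportion.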

8.1. D&T Exam

The following charts (Figure 2) are taken from question 10 in the pre-implementation student survey, hence the numbering from 10.1 to 10.6 below. The results indicate that prior to the e-exam the six D&T students felt reasonably confident about the upcoming e-exam (10.4). However, they had reservations about the instructions, the technical steps, the USB boot, and the e-exam system and software. While this is concerning, it is understandable that students should feel a little anxious leading into an assessment that will affect their marks. The ‘disagree’ responses are still problematic, as with an exam system one would like the students to be feeling very positive.

Subsequent to the D&T e-exam, students participated in a post-implementation survey, and some relevant charts from question 5 of this survey are shown in Figure 3. The charts suggest that after the e-exam the nine D&T students were mostly positive regarding their experience. The neutral responses may be considered concerning, as it would be desirable to have mostly positive responses for a system designed for a high-stakes situation. Of the nine, only 3 agreed with the statement ‘I would recommend the e-exam system to others’. The reasons for this may be garnered from questions 5.3 and 5.4, which show that the students were concerned about technical failure and the possibility of cheating.

8.2. Computing Exam

Figure 4 shows that prior to the computing e-exam the nine computing students felt very confident about the upcoming e-exam, with 7 out of 9 either agreeing or strongly agreeing (10.4). They felt confident about the instructions, the technical steps, the USB boot, and the e-exam system and software. This is understandable both due to the nature of the students (studying computing) and the fact that they knew the exam would be electronic regardless of whether the e-exam system was used or not. Additionally, the Python environment was the same as the environment they were used to, in contrast to the word processor (LibreOffice), which was a little unfamiliar.

Figure 5 suggests that after the computing e-exam the eight computing students were mostly positive regarding their experience and felt that the e-exam suited the use of computers (5.1). However, being computing students may have made them less trusting about the reliability of the exam and the possibility of cheating (5.3 and 5.4). It is possible that this factored into their reluctance to recommend the e-exam system to others, with only 3 of them agreeing with this statement (5.6). Again, neutral responses to these statements may be concerning to the designers of the e-exam system.

9. Conclusions

Combining the results from the interviews and surveys for the two groups (computing and D&T), the following overall conclusions may be made. These conclusions should be read with appreciation of the small sample sizes and the atypical nature of the two e-exams. For the examinations in this trial, there appears to be little advantage in using the e-exam system.

The exams required extensive preparation before production, beyond what is standard for both D&T and computing. Also, subsequent to the e-exams, data had to be copied from the USBs. This pre- and post-exam work is additional to that of running a traditional examination.

Students did not wish to use their own computers for the e-exam principally because of data-security fears.

The software and environment also presented some difficulties for students. Understanding the Linux file system and using the LibreOffice word processor (particularly for drawing) were the main issues.

Overall students were reasonably positive toward the e-exam system but were fearful of losing data.

Recommendations

The system should have an auto-save or save to the cloud. This may lead to improvements in post exam efficiency and would also address student problems with the Linux file system.

Specific software for certain tasks such as drawing would be a good inclusion.

10. Post-Script

The above findings represent the situation at the conclusion of the ECU trials. However, development of the e-exam system has been ongoing. Since this paper was written the following enhancements to the software have been achieved:

It is now possible to run the e-exam system in a fully online mode. However, a completely reliable network connection is required for this.

Auto saving has been added to both online and offline versions of the e-exam system to protect student data in the event of a ‘crash’.
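The paper does not describe how auto-saving is implemented in the e-exam system. As a minimal sketch of one common approach, assuming a student's response is available as text, the following writes each save to a temporary file and then atomically replaces the previous copy, so that a crash mid-write does not leave a truncated file. The function name and file paths are hypothetical.

```python
import os
import tempfile
from pathlib import Path


def autosave(text: str, target: Path) -> None:
    """Write text to target atomically so a crash never leaves a partial file."""
    target.parent.mkdir(parents=True, exist_ok=True)
    # Create the temporary file in the same directory so the final
    # rename stays on one filesystem.
    fd, tmp_name = tempfile.mkstemp(dir=target.parent, suffix=".tmp")
    try:
        with os.fdopen(fd, "w", encoding="utf-8") as handle:
            handle.write(text)
            handle.flush()
            os.fsync(handle.fileno())  # push the data to the storage device
        os.replace(tmp_name, target)  # swap in the new version in one step
    except BaseException:
        if os.path.exists(tmp_name):
            os.remove(tmp_name)
        raise
```

Calling `autosave` on a timer, or after each pause in typing, would keep the most recent complete version of the response on disk at all times.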