1. Introduction

In today’s global digital transformation, the rapid development of artificial intelligence technology has brought unprecedented opportunities and challenges to higher education. From an international policy perspective, there are clear guidelines supporting AI-assisted transformation in higher education. Recent policy frameworks, such as UNESCO’s “AI and Education: Guidance for Policy-makers” (2024) and the OECD’s “Education Policy Outlook: AI in Education” (2024), emphasize the need to promote AI-assisted educational transformation, strengthen curriculum system reform for the digital economy and future industries, and optimize disciplinary and professional settings.

Although Artificial Intelligence in Education (AIEd) has a substantial history as a research domain, the rapid evolution of AI applications has never before raised such pressing ethical concerns (Chan & Lee, 2024). Crucially, existing AI ethics education in universities is often fragmented and overly reliant on traditional lecture formats, and it struggles to connect abstract ethical theories with the concrete, nuanced challenges students face when using Gen-AI tools such as ChatGPT (GPT-3.5), a problem we identify as a critical theory–practice disconnect. Guided by relevant policies, higher education institutions are actively embracing AI technology and exploring new models and methods of teaching. Beyond computer science programs that deeply integrate AI technology, many other disciplines are incorporating core AI concepts and ways of thinking into professional education through “AI+X” integration models, giving rise to widespread AI general education curricula. This transformation is not merely technical; it reshapes pedagogical models and the very role of the educator, demanding a new focus on personalized learning and ethical oversight (Al Nabhani et al., 2025). As Gen-AI continues to shape educational trends and horizons, a proactive and holistic approach to ethics becomes paramount (Li et al., 2026).

From early computer-assisted instruction to today’s intelligent educational platforms, AI applications in higher education continue to expand in scope and depth. Research indicates that AI technology can not only enable personalized teaching and improve educational quality but also optimize the allocation of educational resources and promote educational equity (Zawacki-Richter et al., 2019). However, studies such as Crawford et al. (2023) and Lund et al. (2023) have examined the ethical considerations and transformative potential of AI, addressing not only its capacity to enhance academic productivity but also the challenge it poses for plagiarism in academic assignments.

The increasing prevalence of artificial intelligence in higher education underscores the need to explore its implications for ethical, social, and educational dynamics within the sector (Al-Zahrani, 2024). Higher education therefore needs to establish comprehensive governance frameworks while embracing technological innovation. How to understand and use AI technology scientifically and rationally, so that it better serves the development goals of higher education, has become an important concern in educational and academic circles. In view of this, this study aims to establish a full-cycle AI ethics education system spanning theoretical construction, practical training, and intelligent prevention, providing systematic solutions for universities facing the challenges of AI technology.

2. Related Work

2.1. Current Status of AI Ethics Education

Currently, AI ethics education in universities worldwide is at an exploratory stage of development, characterized by multiple levels and uneven progress. Overall, dedicated AI ethics courses remain relatively scarce in university curricula, and most institutions do not yet offer them as independent professional courses. Meanwhile, AI tools are constantly being released into the public domain; as with all new technological innovations, this brings a range of opportunities and challenges (Cowls et al., 2023).

Ethics education content is mainly scattered across traditional courses such as computer ethics and technology ethics, or inserted as special lectures in AI professional courses. This fragmented arrangement makes it difficult to build a systematic body of ethical knowledge, and students often acquire only scattered ethical concepts and principles. As AI technology rapidly advances, it becomes imperative to equip students with tools to navigate the many intricate ethical considerations surrounding its development and use. Despite growing recognition of this need, the integration of AI ethics into higher education curricula remains limited.

From a pedagogical standpoint, university AI ethics education is often characterized by a disconnect between abstract theory and practical application. Curricula frequently prioritize traditional frameworks like utilitarianism and deontology, while failing to provide in-depth analysis of contemporary AI-specific dilemmas such as algorithmic bias or data privacy. Case studies, when used, are often presented superficially, without the necessary technical and ethical depth. This pedagogical approach hinders students’ ability to translate ethical principles into concrete problem-solving skills for real-world technology development.

In terms of teaching methods and formats, traditional one-way didactic instruction still dominates. Although some universities have introduced interactive methods such as case discussions and scenario simulations, these innovations have not yet been scaled up, owing to constraints on class time and limited teacher experience. Online educational resources are also fragmented: various MOOC platforms offer AI ethics-related courses of varying quality that lack systematic planning and authoritative certification.

Faculty development faces clear disciplinary barriers. Teachers responsible for ethics instruction mostly come from humanities and social sciences backgrounds and have a limited understanding of AI technology, whereas computer science teachers, though versed in the technical knowledge, often lack systematic ethics training. This disciplinary division makes it difficult to integrate technology and ethics organically in teaching. In addition, low participation from industry practitioners creates a disconnect between teaching content and industry needs. Addressing these curricular and pedagogical gaps, however, requires more than technical or instructional adjustments; it calls for a broader socio-ethical perspective rooted in institutional leadership and values. The challenge of AI ethics education concerns not only what is taught but also the institutional culture that supports ethical decision-making. Recent scholarship highlights that effective AI governance in education is intrinsically linked to values-based leadership, which is essential for fostering a culture of ethical engagement among both faculty and students (Al-Zahrani, 2024). A comprehensive review of the current landscape must therefore also consider how institutional policies and leadership can create an environment where ethical reflection is prioritized alongside technological innovation.

Finally, systems for evaluating AI ethics education remain largely undeveloped. Universities currently lack scientific standards for assessing AI ethics literacy, making it difficult to quantify the effectiveness of ethics education. Existing evidence also points to group differences that such assessment must capture: one recent study found that female students exhibited higher AI ethical awareness than male students, indicating the need for more nuanced assessment tools (Møgelvang et al., 2024). In engineering education accreditation and professional evaluation, ethics requirements are often reduced to formalistic indicators that fail to guide genuine teaching reform.

2.2. Main Existing Problems

2.2.1. Theoretical Lag Behind Technological Development

Traditional ethical frameworks struggle to encompass the new problems arising from AI. Conventional academic ethics theories focus mainly on classic problems such as plagiarism, fabrication, and duplicate publication, and lack systematic treatment of AI-related issues. Research shows that 89% of students admit to using AI tools such as ChatGPT for homework, a reality that throws a stark light on the limitations of earlier academic integrity measures (Johnson & Smith, 2025).

Current ethical frameworks struggle to answer questions such as: Does using AI tools for literature translation constitute academic misconduct? Does using AI for data analysis without disclosing it in the resulting paper violate academic integrity? Does using AI models trained by others for research require authorization? Most existing academic ethics textbooks and course content were written before AI technology became widespread and lack in-depth exploration of ethical issues in these new technological contexts.

2.2.2. Practice Disconnected from Real Situations

Case teaching lacks dynamism and interactivity. Current academic ethics education relies mainly on theoretical instruction and static case analysis, with little connection to real research situations. For example, teachers might explain the definition and harms of plagiarism but rarely let students practice firsthand how to avoid accidental academic misconduct when using AI tools. Traditional case teaching often draws on historical events and rarely simulates emerging technology scenarios.

2.2.3. Passive and Lagging Prevention Measures

Current academic ethics management relies mainly on post-incident detection and punishment, such as plagiarism detection software and the investigation of reports, and lacks proactive prevention and guidance measures. Studies of AI content detection tools have revealed significant limitations in their ability to distinguish human-authored from AI-authored content. Many universities are equipped with advanced plagiarism detection systems, but these systems often cannot identify AI-generated content or heavily rewritten texts.

Beyond these general frameworks, the recent surge of generative AI (Gen-AI) has spurred a more specific body of literature focused on its direct application and ethical implications within higher education. Recent studies are now documenting the profound challenges Gen-AI poses to academic integrity by investigating the actual habits of university students, moving the discussion beyond simple plagiarism to the nuanced complexities of undeclared AI assistance (Balalle & Pannilage, 2025). The question of authorship, for instance, has become a focal point of debate, with scholars now examining the core principles of accountability in AI-assisted research and the challenges institutions face in formulating clear policies (Nazarovets & Teixeira da Silva, 2024). Concurrently, a promising research stream is exploring innovative pedagogical integrations. Instead of merely viewing Gen-AI as a threat, educators are experimenting with models that position it as a “cognitive partner,” as demonstrated in case studies where tools like ChatGPT are used to enhance collaborative argumentative writing and AI literacy (Zaim et al., 2024). A robust AI ethics education system must therefore be grounded in this contemporary, application-specific literature.

3. Materials and Methods

Our full-cycle AI ethics education system is built upon a three-stage methodological approach: (1) the construction of a robust theoretical framework; (2) the development of practical pedagogical tools and a training platform; and (3) the implementation of a continuous validation and quality control mechanism. Each stage and its components are described in the following subsections.

3.1. Core Concept Definitions

To ensure the clarity of this paper, we provide operational definitions for several central concepts:

AI Ethics: Within the scope of this study, AI ethics refers to the moral principles, norms, and values required for the responsible use of artificial intelligence, with a specific focus on generative AI (Gen-AI) in academic contexts. It encompasses not only traditional academic integrity issues but also novel challenges posed by Gen-AI, such as data privacy, algorithmic fairness, intellectual property attribution, and the transparency of human-AI collaboration.

Full-Cycle Education: This refers to a closed-loop educational model that integrates three core stages: theoretical navigation, practical training, and intelligent prevention. It aims to move beyond singular knowledge transfer by embedding ethics education throughout the entire life-cycle of academic activity.

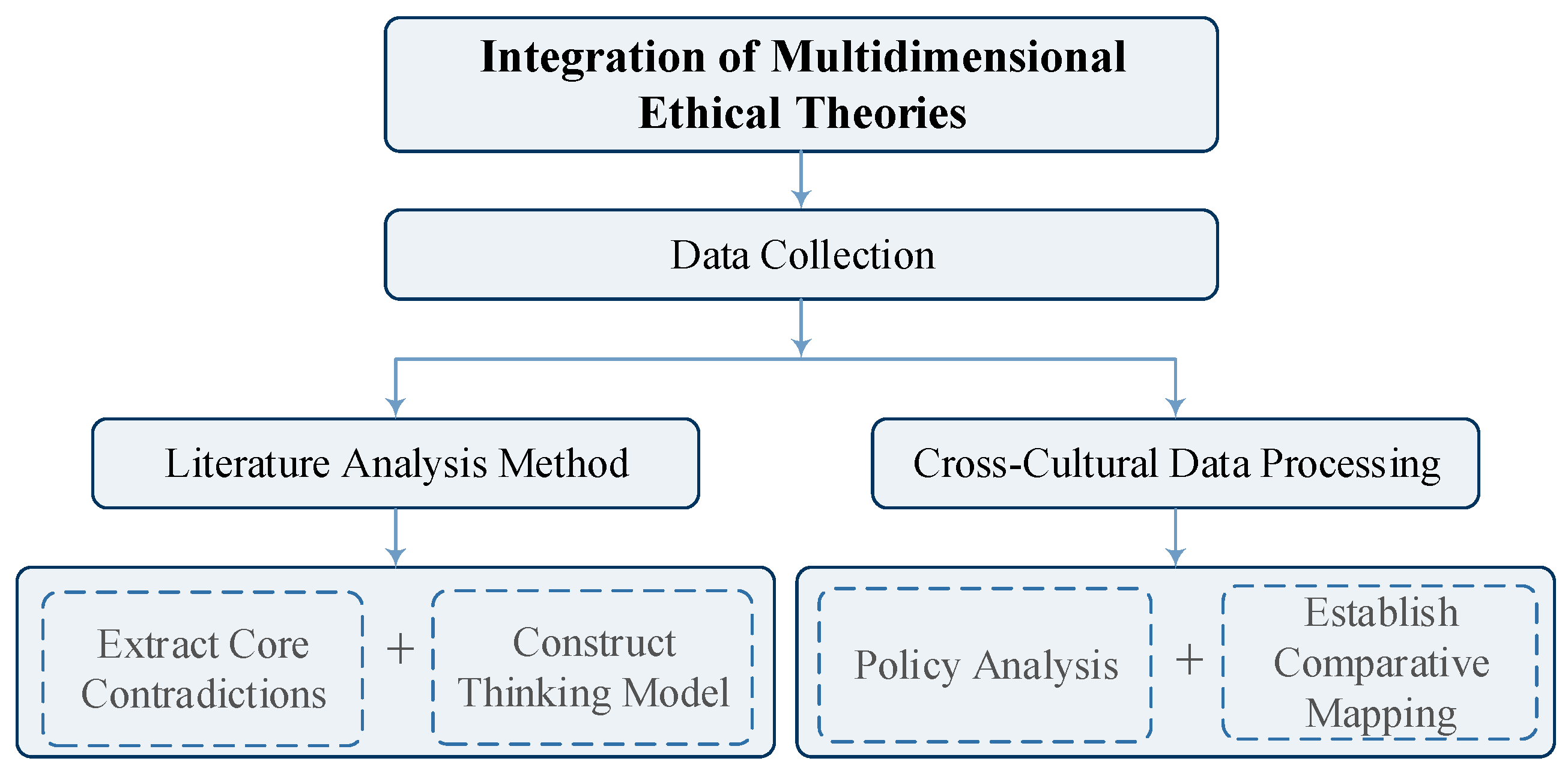

3.2. Theoretical Construction: Four-Dimensional Academic Ethics Framework

The foundation of our educational system is a four-dimensional academic ethics framework designed to address the multifaceted challenges of Gen-AI. The process of developing this foundational framework, from integrating ethical theories to cross-cultural analysis, is illustrated in Figure 1.

Building on these theoretical foundations, this study designs a teaching module around the four dimensions of the framework: research review, data privacy, algorithmic fairness, and intellectual property rights. Informed by research on AI ethics frameworks (Jobin et al., 2019; Floridi et al., 2018), the module aims to help learners systematically master ethical norms for AI-assisted research and to provide practical guidance for ethical judgment and action.

3.2.1. Research Review Dimension

Teaching in the research review dimension focuses on guiding learners to master methods for establishing ethical review standards for AI-assisted research. Drawing on established frameworks for responsible AI research (Barocas et al., 2017), the curriculum addresses key considerations, including algorithmic accountability, transparency requirements, and impact assessment protocols.

Through case analysis, learners learn to evaluate the legitimacy of research purposes, the appropriateness of methods, and the verifiability of results, and they practice creating review checklists suited to their own research fields. This approach builds on the IEEE’s ethical design standards (IEEE, 2017) and integrates recent developments in AI governance frameworks.
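The checklist exercise can be illustrated with a minimal Python sketch of how a learner's review checklist might be represented. The class names, fields, and the rule that an item counts as satisfied only once written evidence is recorded are illustrative assumptions rather than part of the course materials described here; the three criteria mirror the legitimacy, appropriateness, and verifiability questions named above.

```python
from dataclasses import dataclass, field

# Hypothetical data model for a learner-built ethical review checklist.
@dataclass
class ReviewItem:
    criterion: str       # e.g. "legitimacy of purpose"
    question: str        # prompt the learner must answer
    satisfied: bool = False
    evidence: str = ""   # free-text justification recorded by the learner

@dataclass
class ReviewChecklist:
    field_name: str                          # research field the checklist targets
    items: list = field(default_factory=list)

    def add(self, criterion, question):
        self.items.append(ReviewItem(criterion, question))

    def mark(self, criterion, evidence):
        # Assumed rule: an item is satisfied only if non-empty evidence is given.
        for item in self.items:
            if item.criterion == criterion:
                item.satisfied = bool(evidence.strip())
                item.evidence = evidence
                return
        raise KeyError(criterion)

    def outstanding(self):
        """Criteria that still lack supporting evidence."""
        return [item.criterion for item in self.items if not item.satisfied]

checklist = ReviewChecklist("computational social science")
checklist.add("legitimacy of purpose", "Is the research goal socially justifiable?")
checklist.add("appropriateness of methods", "Are the AI methods proportionate to the question?")
checklist.add("verifiability of results", "Can a third party reproduce the findings?")
checklist.mark("legitimacy of purpose", "Approved by the departmental ethics board")
print(checklist.outstanding())
# ['appropriateness of methods', 'verifiability of results']
```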

3.2.2. Data Privacy Dimension

Data privacy dimension teaching focuses on practical training through full-process data management simulation, enabling learners to master data classification and protection strategies. The curriculum incorporates principles from the General Data Protection Regulation (GDPR) and other international privacy frameworks, adapted for AI research contexts.

For example, in social science research, learners practice anonymizing sensitive data while balancing its analytical value against privacy protection. The training includes hands-on exercises with differential privacy techniques (Dwork & Roth, 2014) and federated learning approaches that preserve data confidentiality while enabling collaborative research.
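As a sketch of the kind of differential privacy exercise involved, the snippet below applies the standard Laplace mechanism (Dwork & Roth, 2014) to a counting query over survey records. The dataset, the attribute name `reports_anxiety`, and the function names are hypothetical. A count has sensitivity 1, so adding Laplace noise with scale 1/ε makes that single released count ε-differentially private.

```python
import random

def laplace_noise(scale, rng):
    # The difference of two i.i.d. exponential draws with mean `scale`
    # follows a Laplace(0, scale) distribution.
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)

def private_count(records, predicate, epsilon, rng):
    """Release a count with epsilon-differential privacy (Laplace mechanism)."""
    true_count = sum(1 for record in records if predicate(record))
    sensitivity = 1.0  # adding or removing one person changes a count by at most 1
    return true_count + laplace_noise(sensitivity / epsilon, rng)

# Hypothetical survey: every third respondent reports anxiety (true count = 100).
respondents = [{"id": i, "reports_anxiety": i % 3 == 0} for i in range(300)]
rng = random.Random(42)

for epsilon in (0.1, 1.0):
    noisy = private_count(respondents, lambda r: r["reports_anxiety"], epsilon, rng)
    print(f"epsilon={epsilon}: released count = {noisy:.1f}")
```

Smaller ε gives stronger privacy and noisier answers, so rerunning the query at different ε values lets learners see the privacy–utility trade-off the exercise targets.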

3.2.3. Algorithmic Fairness Dimension

Algorithmic fairness teaching introduces cases of biased algorithms, such as gender discrimination in recruitment AI, guiding learners to master fairness assessment tools and practice algorithm optimization methods. The curriculum draws on seminal work on algorithmic bias (Barocas & Selbst, 2016) and recent advances in fairness metrics (Mehrabi et al., 2021).

Students engage with tools such as IBM’s AI Fairness 360 toolkit (https://research.ibm.com/blog/ai-fairness-360, accessed on 12 December 2024) and Google’s What-If Tool (https://pair-code.github.io/what-if-tool, accessed on 12 December 2024, version 1.8.1) to analyze bias in real-world datasets. The training emphasizes understanding different definitions of fairness (individual fairness, group fairness, and counterfactual fairness) and their implications for different application domains.
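To make the group-fairness notions concrete, the following plain-Python sketch computes two widely used group metrics, statistical parity difference and disparate impact, for a toy recruitment scenario. These are quantities that toolkits such as AI Fairness 360 report, but the code deliberately does not use that library's API. The decisions and group labels are invented for illustration, and the 0.8 threshold reflects the common "four-fifths" rule of thumb rather than a requirement stated in this study.

```python
def selection_rate(decisions, groups, group):
    """Share of positive decisions (1 = shortlisted) within one group."""
    chosen = [d for d, g in zip(decisions, groups) if g == group]
    return sum(chosen) / len(chosen)

def statistical_parity_difference(decisions, groups, unprivileged, privileged):
    # 0 means equal selection rates; negative values disadvantage `unprivileged`.
    return (selection_rate(decisions, groups, unprivileged)
            - selection_rate(decisions, groups, privileged))

def disparate_impact(decisions, groups, unprivileged, privileged):
    # Ratio of selection rates; 1 means parity.
    return (selection_rate(decisions, groups, unprivileged)
            / selection_rate(decisions, groups, privileged))

# Hypothetical screening outcomes from a recruitment model.
decisions = [1, 0, 0, 1, 0, 1, 1, 1, 0, 1]  # first five: F, last five: M
groups = ["F"] * 5 + ["M"] * 5

spd = statistical_parity_difference(decisions, groups, "F", "M")
di = disparate_impact(decisions, groups, "F", "M")
print(f"SPD = {spd:.2f}, DI = {di:.2f}, passes four-fifths rule: {di >= 0.8}")
# SPD = -0.40, DI = 0.50, passes four-fifths rule: False
```

Both metrics capture group fairness only; individual and counterfactual fairness require comparing similar individuals or hypothetical interventions and cannot be read off selection rates alone.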

3.2.4. Intellectual Property Rights Dimension

Intellectual property rights teaching uses human–machine collaboration case studies, such as attribution disputes over AI-assisted paper writing, to help learners understand the design logic of contribution assessment mechanisms and master the core principles for judging attribution. This dimension addresses emerging questions about the ownership of AI-generated content and standards for collaborative authorship.

The curriculum examines recent legal precedents and academic policy developments regarding AI-generated intellectual property, including patent applications involving AI inventors and copyright questions for AI-generated creative works (Yanisky-Ravid & Liu, 2018).

3.3. Technical Transparency Gradient Model Teaching Application

To address the teaching challenges posed by the black-box nature of deep learning, AI ethics teaching introduces a technical transparency gradient model as a core teaching tool, building on interpretable AI research (Ribeiro et al., 2016; Lundberg & Lee, 2017). The model divides AI system interpretability into five progressive levels, helping learners understand the transparency requirements of different scenarios and master the corresponding ethical practices:

Input Transparency Layer: Requires learners to complete data traceability reports that clearly record data sources, preprocessing steps, and feature selection logic. This level emphasizes the data provenance and documentation standards established in reproducible research practices (Wilkinson et al., 2016).

Process Transparency Layer: Trains learners to keep model training logs that record parameter adjustments and validation methods in detail. Students learn to use version control systems and maintain comprehensive experimental logs that enable research reproducibility.

Output Transparency Layer: Focuses on developing result interpretation skills; learners write reports that include confidence analysis and uncertainty explanations for AI-generated research conclusions. This incorporates techniques from uncertainty quantification in machine learning (Gal & Ghahramani, 2016).

Decision Transparency Layer: Uses algorithmic decision decomposition tasks to guide learners in analyzing the weight allocation logic at key nodes. Students work with attention mechanisms in neural networks and feature importance measures to understand how AI systems make decisions.

Value Transparency Layer: Uses ethical reflection seminars that require learners to reveal the implicit value orientations of algorithms. This level draws on value-sensitive design methodologies (Friedman & Hendry, 2019) and incorporates stakeholder analysis techniques.
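One way to operationalize the progressive character of the gradient is to treat each level as a set of required documentation artifacts and to credit a level only when all lower levels are complete. The Python sketch below assumes hypothetical artifact names derived from the five layer descriptions; it illustrates the gradient logic and is not a specification of the assessment used in the course.

```python
# Hypothetical artifact names derived from the five layer descriptions.
LEVELS = [
    ("input", {"data_sources", "preprocessing_steps", "feature_selection"}),
    ("process", {"parameter_log", "validation_method"}),
    ("output", {"confidence_analysis", "uncertainty_statement"}),
    ("decision", {"feature_importance"}),
    ("value", {"stakeholder_analysis", "value_reflection"}),
]

def transparency_level(artifacts):
    """Return (highest level fully met, next level, items missing for it).

    Levels are progressive: a level counts only if every lower level is complete.
    """
    submitted = set(artifacts)
    achieved = None
    for name, required in LEVELS:
        missing = required - submitted
        if missing:
            return achieved, name, sorted(missing)
        achieved = name
    return achieved, None, []

report = {"data_sources", "preprocessing_steps", "feature_selection", "parameter_log"}
print(transparency_level(report))
# ('input', 'process', ['validation_method'])
```

Returning the missing items for the next level, rather than a bare pass/fail, turns the gradient into formative feedback that tells learners what to document next.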

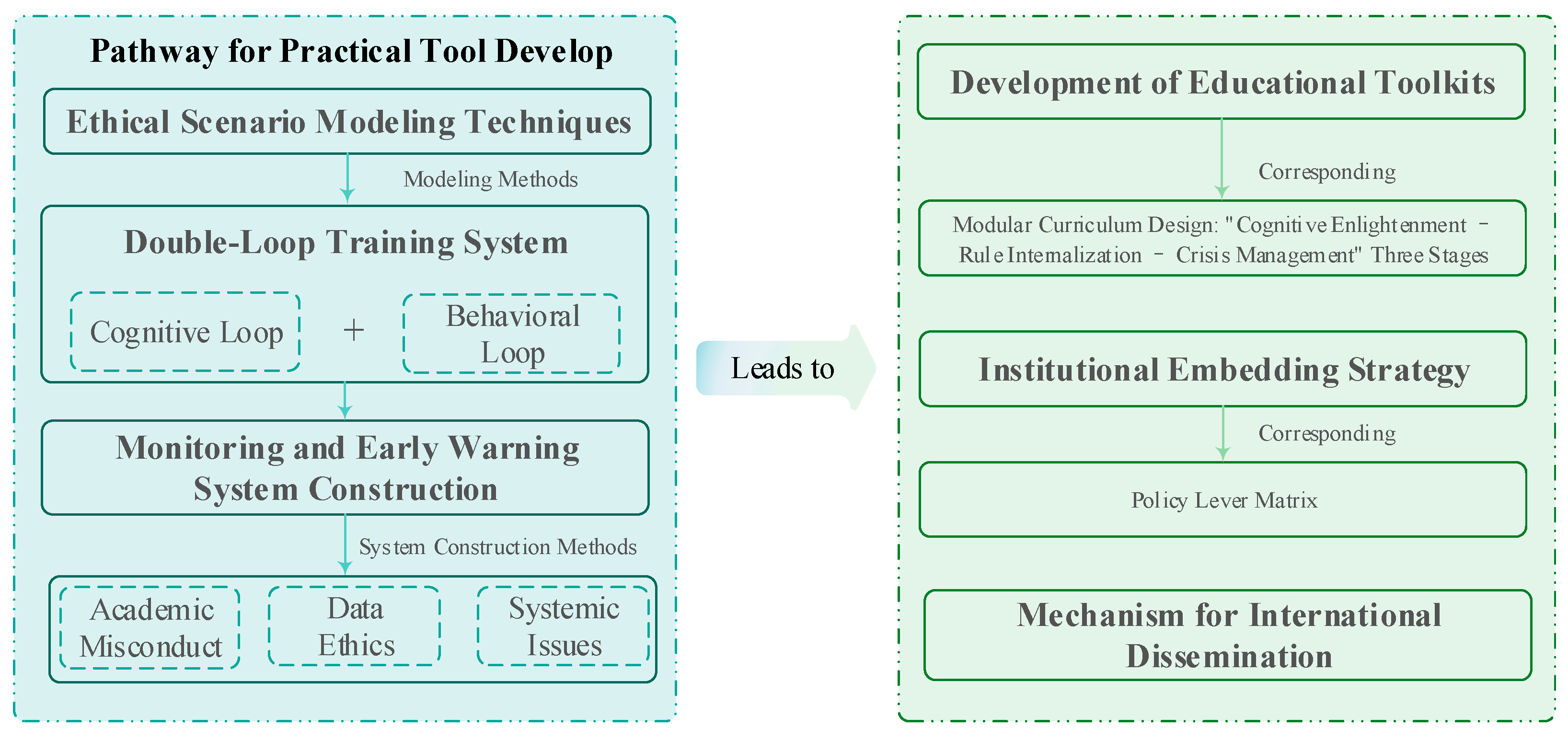

3.4. Practical Training: Cultivating AI Ethics Practice Capabilities

To systematically translate the theoretical framework into practical competencies, we developed a full life-cycle AI ethics education platform and a suite of pedagogical tools. The architecture of these practical components, from development pathways to the final educational toolkits, is outlined in Figure 2.

3.4.1. Full Life-Cycle AI Ethics Education Platform

To systematically cultivate researchers’ AI ethics literacy, this study developed a full life-cycle AI ethics education platform with a modular design that integrates educational technology with ethics training needs. The platform’s architecture draws on successful educational technology implementations (Clark & Mayer, 2016) and incorporates gamification elements shown to enhance engagement in ethics training (Deterding et al., 2011).

The platform’s core functions are achieved through the coordination of five components:

Dynamic Situational Case Library: As a foundational resource module, it collects real AI ethics controversy cases across disciplines, supporting multi-dimensional retrieval by research field, ethical conflict type, and complexity level. The case library includes landmark cases such as the Microsoft Tay chatbot incident, Google’s Project Maven controversy, and recent academic misconduct cases involving AI tools. Cases are continuously updated to reflect emerging ethical challenges in AI development and deployment.

Virtual Laboratory: Constructs high-fidelity research environments, allowing learners to conduct full-process ethical decision-making for AI projects under controllable risk conditions. The virtual lab simulates realistic research scenarios, including data collection, model training, and publication processes, enabling students to experience ethical dilemmas firsthand without real-world consequences.

Ethics Reasoning Engine: As an intelligent decision support system, it integrates decision tree algorithms with game theory models to generate multi-perspective analysis reports for complex ethical dilemmas. The engine draws from computational ethics research (Wallach & Allen, 2008) and incorporates multiple ethical frameworks, including consequentialist, deontological, and virtue ethics perspectives.

Collaborative Learning Platform: Overcomes the limitations of individual learning by supporting multi-person collaborative role-playing and debate. The platform facilitates cross-cultural and interdisciplinary dialogue, recognizing that AI ethics challenges often require diverse perspectives and expertise (Winfield & Jirotka, 2018).

Assessment and Feedback System: Adopts formative evaluation strategies, monitors learning trajectories in real time, and generates diagnostic reports. The system provides personalized feedback based on individual learning patterns and performance, incorporating adaptive learning principles from educational psychology research (Plass et al., 2020).
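The multi-perspective reports of the Ethics Reasoning Engine can be approximated, in a much simplified form, by scoring each option in a dilemma under several ethical frameworks and reporting where they agree or diverge. The sketch below is not the engine's decision-tree and game-theory implementation, which is not specified here. The dilemma, stakeholder utilities, duty list, and virtue ratings are all hypothetical, and each framework is reduced to a single scoring rule.

```python
from statistics import mean

# Hypothetical dilemma: share a health dataset with a collaborator now,
# or pause to renew participant consent first.
OPTIONS = {
    "share_now": {
        "utilities": {"patients": 3, "participants": -1, "research_team": 2},
        "violated_duties": ["informed consent"],
        "virtues": {"honesty": 3, "respect": 1},
    },
    "seek_consent": {
        "utilities": {"patients": 1, "participants": 2, "research_team": -1},
        "violated_duties": [],
        "virtues": {"honesty": 4, "respect": 5},
    },
}

def consequentialist(option):
    # Aggregate welfare across stakeholders.
    return sum(option["utilities"].values())

def deontological(option):
    # Permissible (1) only if no duty is violated, otherwise impermissible (0).
    return 0 if option["violated_duties"] else 1

def virtue(option):
    # Average rating of the character traits the action expresses.
    return mean(option["virtues"].values())

FRAMEWORKS = {"consequentialist": consequentialist,
              "deontological": deontological,
              "virtue": virtue}

def analyze(options):
    """Score every option under each framework and record its preferred option."""
    report = {}
    for label, score in FRAMEWORKS.items():
        scores = {name: score(opt) for name, opt in options.items()}
        report[label] = {"scores": scores, "preferred": max(scores, key=scores.get)}
    return report

for label, result in analyze(OPTIONS).items():
    print(f"{label:>16}: prefers {result['preferred']} {result['scores']}")
```

Here the consequentialist score favors sharing immediately while the other two frameworks favor renewing consent; surfacing this kind of divergence for discussion, rather than resolving it automatically, is the point of a multi-perspective report.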

3.4.2. “Cognition-Behavior” Dual-Loop Training Mechanism

This study proposes a “cognition-behavior” dual-loop training model that enhances ethical decision-making through two pathways: psychological construction and practical reinforcement. This approach addresses the well-documented gap between moral knowledge and moral action in educational psychology (Blasi, 1980; Narvaez & Rest, 1995).

Cognitive Loop: Focuses on theoretical internalization through blended learning. The cognitive pathway combines instruction in classical ethical frameworks to establish a knowledge base, analysis of controversial cases to cultivate critical thinking, and expert workshops to deepen understanding. The loop draws on cognitive load theory (Sweller et al., 2011) to optimize information processing and retention.

Behavioral Loop: Emphasizes skill externalization through progressive training scenarios, starting from low-risk situational simulations and gradually transitioning to practical operations in real research environments, ultimately achieving behavioral calibration through structured peer evaluation. The behavioral training incorporates principles from situated learning theory (Lave & Wenger, 1991) and communities of practice research.

The two loops are coupled through spaced repetition principles from neurocognitive science, reinforcing the unity of knowledge and action through repeated cycles of theoretical cognition, situational practice, and feedback correction. This dual-channel mechanism addresses the traditional ethics education dilemma of being “easy to know but difficult to practice,” enabling learners to activate ethical awareness quickly and make compliant decisions when facing actual conflicts of interest in research.
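The spaced-repetition coupling can be illustrated with a simple expanding-interval schedule in the spirit of Leitner-style systems: after a correct decision in a practice scenario the next review is pushed further out, and after an error it returns to the shortest interval. The doubling factor, the one-day minimum, and the function names are assumptions for illustration; the study does not specify its scheduling parameters.

```python
def next_interval(current_days, correct, factor=2.0, minimum=1):
    """Expanding-interval rule: lengthen after a correct response, reset after an error."""
    if correct:
        return max(minimum, round(current_days * factor))
    return minimum

def review_days(outcomes, start=1):
    """Day offsets of successive reviews, given whether each review was answered correctly."""
    day, interval, days = 0, start, []
    for correct in outcomes:
        day += interval
        days.append(day)
        interval = next_interval(interval, correct)
    return days

# A learner handles two scenarios well, slips on the third, then recovers.
print(review_days([True, True, False, True]))
# [1, 3, 7, 8]
```

Resetting to the minimum after an error is the harshest variant; a gentler design could halve the interval instead, which keeps more of the spacing the learner has already earned.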

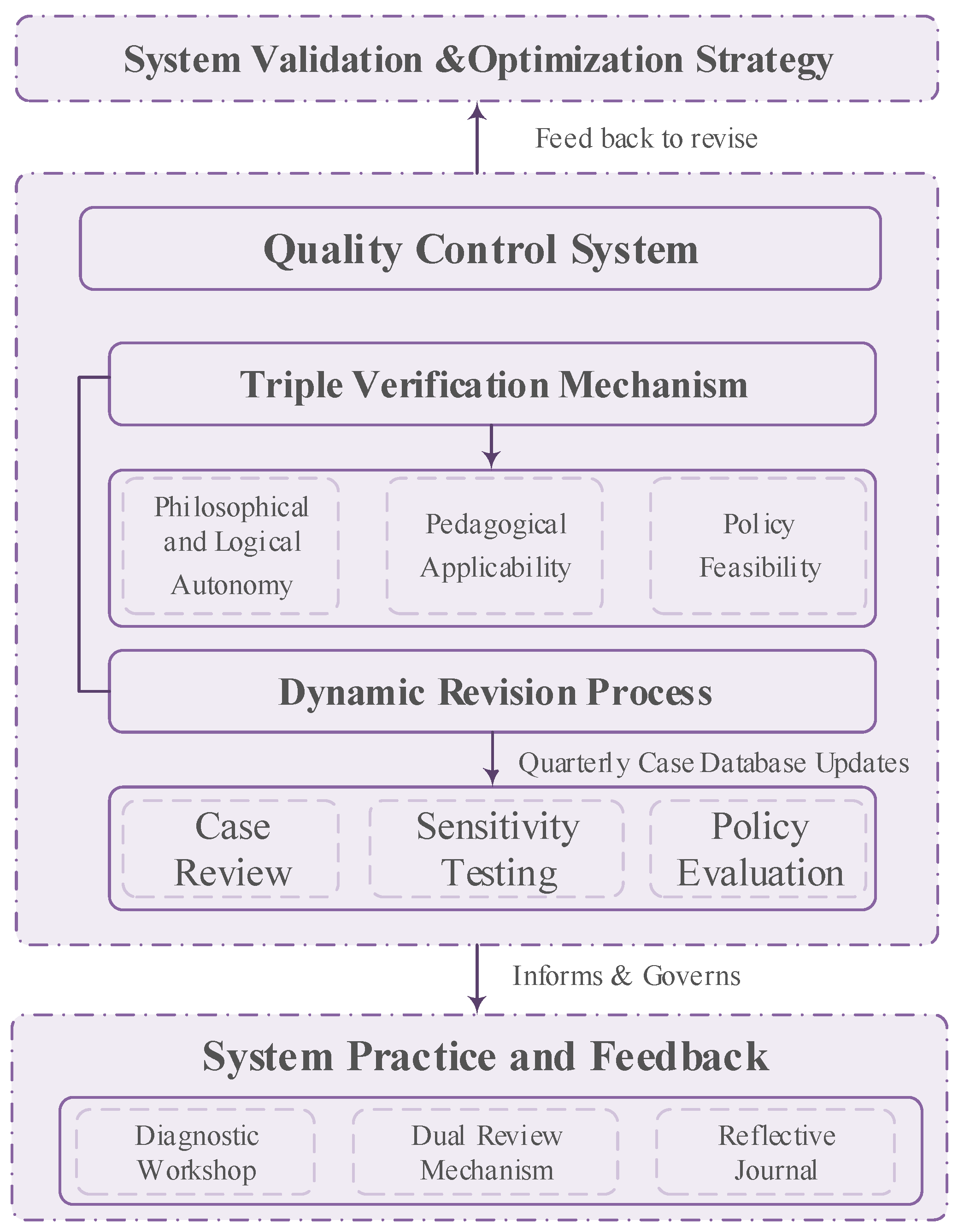

3.5. System Validation and Dynamic Quality Control

Finally, to ensure the long-term effectiveness and continuous improvement of our educational system, we designed a dynamic validation and quality control loop. This mechanism ensures that the system is not static but evolves based on structured feedback and rigorous evaluation. The architecture of this quality control and optimization loop is depicted in Figure 3.

As shown in Figure 3, the Quality Control System governs the entire process through a Triple Verification Mechanism and a Dynamic Revision Process. Feedback from system implementation is systematically collected through tools such as diagnostic workshops and reflective journals. These data inform the Optimization Strategy, which in turn provides evidence for the Dynamic Revision Process. Crucially, this process creates a feedback loop that informs revisions to both the theoretical framework (Figure 1) and the practical tools (Figure 2), thus completing the full cycle of development and improvement.

3.6. Intelligent Prevention: Academic Ethics Intelligent Monitoring

3.6.1. Data Source Integration

The multimodal intelligent monitoring model for academic ethics constructed in this study relies on the manual collection and integration of multi-dimensional behavioral data to monitor the entire process of scientific research activities from multiple angles. A standardized procedure for manual collection and integration ensures that the various data sources can be combined efficiently and analyzed together.

For laboratory management data, dedicated personnel record equipment usage, personnel entry and exit, and resource allocation in real time; these records provide a physical-space basis for evaluating the compliance of research behaviors. The integration of process materials covers the entire research cycle: staff verify the timestamps of experimental records, trace version iterations, and check the integrity of modification histories. For academic communication data, designated personnel compile conference participation, collaboration networks, citation behavior, and peer review content to construct a dynamic map of researchers’ academic relationships. Digital behavioral data span multiple dimensions, including literature retrieval methods, software tool usage, online learning paths, and interactions in academic social activities, together forming a digital portrait of each researcher. For achievement output data, staff collect not only traditional outcomes such as paper publications and patent applications but also newer forms of output, such as code submission records and data sharing status. Finally, feedback and evaluation data are gathered by aggregating supervisor evaluations, peer review results, researchers’ self-reflection reports, and third-party oversight suggestions to establish a multi-dimensional academic credit evaluation system. By integrating these sources, the strategy overcomes the limitations of traditional monitoring methods, allowing academic ethics risks to be assessed from micro-level behaviors to macro-level patterns and making monitoring more comprehensive and accurate.
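The integration step can be pictured as grouping manually entered records by researcher across the six data categories listed above. The Python sketch below assumes a hypothetical record format (`researcher_id`, `source`, `detail`) and a simple coverage measure; it shows only how the records might be organized, not how any risk judgment is made.

```python
from collections import defaultdict

# The six data categories described above (labels are illustrative).
SOURCES = ("laboratory", "process", "communication", "digital", "output", "feedback")

def integrate(records):
    """Group manually collected records into one profile per researcher."""
    profiles = defaultdict(lambda: {source: [] for source in SOURCES})
    for record in records:
        if record["source"] not in SOURCES:
            raise ValueError(f"unknown data source: {record['source']}")
        profiles[record["researcher_id"]][record["source"]].append(record["detail"])
    return dict(profiles)

def coverage(profile):
    """Fraction of the six categories that contain at least one record."""
    return sum(1 for source in SOURCES if profile[source]) / len(SOURCES)

# Hypothetical entries made by data-collection staff.
records = [
    {"researcher_id": "R01", "source": "laboratory", "detail": "microscope booked 09:00-11:00"},
    {"researcher_id": "R01", "source": "output", "detail": "code pushed to project repository"},
    {"researcher_id": "R01", "source": "output", "detail": "dataset deposited with DOI"},
    {"researcher_id": "R02", "source": "feedback", "detail": "supervisor review submitted"},
]
profiles = integrate(records)
print(round(coverage(profiles["R01"]), 2))
# 0.33
```

Rejecting unknown source labels at entry time is one simple way a standardized collection procedure can keep manually entered data consistent.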

3.6.2. Three-Level Risk Prevention and Control Mechanism

To systematically prevent academic ethics risks, this study constructs a three-level progressive risk prevention and control mechanism that applies hierarchical governance strategies to different forms of academic misconduct. Through data-driven risk identification and early warning, the mechanism targets core issues such as plagiarism, data fraud, and authorship disputes. The three levels build on and support one another, providing protection that extends from content originality and data authenticity to cooperative fairness. By combining intelligent monitoring with manual review, the mechanism improves regulatory efficiency while preserving the accuracy of judgments, supporting an honest and fair academic environment. Practical applications indicate that this mechanism can reduce the incidence of academic misconduct and improve the timeliness and fairness of academic supervision.

Plagiarism Risk Prevention and Control: This level focuses on preventing various forms of academic plagiarism through a multi-layered system for protecting textual originality. The basic layer rapidly screens for explicit plagiarism; the intermediate layer identifies paraphrased plagiarism through semantic analysis; and the advanced layer protects originality at the level of ideas, guarding against implicit plagiarism such as the appropriation of core viewpoints. The system uses dynamically adjusted identification standards that account for differences in text characteristics across disciplines, and it provides researchers with originality self-check tools to encourage voluntary compliance with academic norms.

Data Authenticity Assurance: This level maintains the credibility of research data through a full-process data quality monitoring system. It identifies potentially anomalous patterns by analyzing records of data generation, processing, and analysis; evaluates the plausibility of research data through logical verification of experimental processes; and uses specialized image detection methods to verify the authenticity of presented results. The system includes purpose-built data credibility indicators that provide objective references for academic review, and it establishes best-practice data management guidelines for researchers.

Maintenance of Authorship Fairness: This level focuses on balancing the rights and interests of the parties in research cooperation through a contribution evaluation and conflict prevention mechanism. It produces an objective contribution evaluation by quantitatively analyzing researchers’ participation and contribution types at each stage of the project; identifies potential conflicts of interest based on historical cooperation patterns; and provides early warnings and mediation suggestions at key points to help cooperating parties reach a fair consensus. The mechanism emphasizes protecting researchers’ legitimate rights and interests, and all evaluation processes follow strict privacy protection principles.
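For the basic protection layer of plagiarism control, explicit copying is commonly screened with word n-gram overlap. The sketch below computes the Jaccard similarity of word trigrams between a submission and a source. The trigram size and the 0.5 flagging threshold are illustrative assumptions. A paraphrase with no shared trigrams scores zero, which is why an intermediate, semantic layer is needed.

```python
import re

def word_ngrams(text, n=3):
    """Set of lower-cased word n-grams in `text`."""
    tokens = re.findall(r"\w+", text.lower())
    return {tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)}

def jaccard(a, b):
    """Jaccard similarity of two sets (0.0 when both are empty)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0

def screen(submission, source, n=3, threshold=0.5):
    """Return (similarity, flagged) for one submission-source pair."""
    score = jaccard(word_ngrams(submission, n), word_ngrams(source, n))
    return score, score >= threshold

source = "The proposed framework improves ethical reasoning in graduate students."
copied = "the proposed framework improves ethical reasoning in graduate students"
paraphrase = "Graduate learners reason more ethically under the new framework."

print(screen(copied, source))      # (1.0, True)
print(screen(paraphrase, source))  # (0.0, False)
```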

4. Results

This section presents the empirical findings from three core experiments designed to evaluate the effectiveness of the proposed full-cycle AI ethics education system, focusing on theoretical framework validation, practical training outcomes, and platform usability. All experiments followed rigorous research protocols, with sample sizes determined through power analysis (α = 0.05, 1 − β = 0.8) and data analyzed using SPSS 26.0. Normality tests indicated that outcomes were approximately normally distributed, permitting paired t-tests for within-group comparisons and independent-samples t-tests for between-group analyses.
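For reference, the sample size implied by α = 0.05 and 1 − β = 0.8 can be approximated with the normal-approximation formula n ≈ ((z₁₋α/₂ + z₁₋β) / d)² for a paired design, doubled per group for two independent groups. The effect size d must be assumed. The text does not report it, so the sketch uses d = 0.5 (a conventional "medium" effect) purely as an example. Exact t-based calculations, as in dedicated power-analysis software, give slightly larger values.

```python
import math
from statistics import NormalDist

def _z_sum(alpha, power):
    z = NormalDist()  # standard normal distribution
    return z.inv_cdf(1 - alpha / 2) + z.inv_cdf(power)

def n_paired(d, alpha=0.05, power=0.8):
    """Approximate number of pairs for a two-sided paired t-test."""
    return math.ceil((_z_sum(alpha, power) / d) ** 2)

def n_per_group(d, alpha=0.05, power=0.8):
    """Approximate per-group size for a two-sided independent-samples t-test."""
    return math.ceil(2 * (_z_sum(alpha, power) / d) ** 2)

# d = 0.5 is an assumed "medium" effect, not a value reported in the study.
print(n_paired(0.5), n_per_group(0.5))  # 32 63
```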

4.1. Effectiveness of the Four-Dimensional Academic Ethics Framework

The first experiment assessed whether the four-dimensional framework (research review, data privacy, algorithmic fairness, and intellectual property) could enhance students’ AI ethics knowledge compared to baseline levels. A total of 100 third-year undergraduates (52 computer science majors, 48 social science majors) participated in a pretest–posttest design, using an AI ethics knowledge test developed for this study (see Appendix A.1 for the instrument’s structure and sample items).

As shown in Table 1, statistically significant improvements were observed across all dimensions after the intervention. The overall AI ethics literacy score increased from 59.2 ± 9.2 to 81.2 ± 7.1 (p < 0.001), representing a 37.2% improvement. Among individual dimensions, algorithmic fairness judgment showed the largest gain (44.6%), rising from 55.2 ± 10.3 to 79.8 ± 8.1, followed by data privacy protection (41.7% improvement). Social science majors demonstrated slightly higher gains in research review ethics (+3.2 percentage points) compared to computer science majors, while the latter showed stronger improvements in algorithmic fairness (+2.8 percentage points), suggesting disciplinary differences in baseline knowledge gaps.
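The percentage improvements reported above are relative changes in the mean score, which can be checked directly from the reported pretest and posttest values:

```latex
\text{Improvement} = \frac{\bar{x}_{\text{post}} - \bar{x}_{\text{pre}}}{\bar{x}_{\text{pre}}} \times 100\%,
\qquad
\frac{81.2 - 59.2}{59.2} \times 100\% \approx 37.2\%,
\qquad
\frac{79.8 - 55.2}{55.2} \times 100\% \approx 44.6\%
```

The disciplinary differences, by contrast, are stated in percentage points, that is, as absolute differences between the two majors' gains rather than relative changes.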

The consistent improvement across all dimensions suggests that the framework addresses the theoretical lag identified in Section 2.2.1, particularly through its emphasis on the technical scenario analysis that traditional ethics curricula lack. The large gain in algorithmic fairness, an area rarely covered in conventional ethics courses, supports the framework’s responsiveness to emerging AI-specific ethical challenges.

4.2. Impact of the “Cognition-Behavior” Dual-Loop Training Mechanism

To evaluate whether the dual-loop mechanism (cognitive loop: theoretical learning + case analysis; behavioral loop: scenario simulation + practical training) enhances ethical decision-making capabilities, a controlled experiment was conducted with 120 graduate students randomized into experimental (n = 60) and control (n = 60) groups. The experimental group received eight weeks of dual-loop training, while the control group received traditional lecture-based ethics instruction.

Post-training assessment using standardized AI-assisted research dilemma scenarios (inter-rater reliability κ = 0.89) revealed significant between-group differences (Table 2). The scenarios were designed to evaluate practical decision-making skills; a detailed example scenario and its evaluation criteria are provided in Appendix A.2. The experimental group scored significantly higher than the control group in decision compliance (25.6 ± 3.2 vs. 18.9 ± 4.5, p < 0.001), rationality (32.1 ± 4.1 vs. 23.5 ± 5.2, p < 0.001), and timeliness (24.3 ± 3.8 vs. 17.2 ± 4.9, p < 0.001). The experimental group’s composite score (82.0 ± 7.5) exceeded that of the control group by 37.6%, with effect sizes (Cohen’s d) ranging from 1.72 to 2.13, indicating strong practical significance.
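For readers who wish to check the effect sizes against the summary statistics, the sketch below computes Cohen’s d for two independent groups with the standard pooled-standard-deviation formula, d = (M₁ − M₂)/s_pooled. Applied to the decision-compliance means and standard deviations with n = 60 per group, it returns 1.72. The sketch also shows that the experimental composite (82.0) equals the sum of its three subscale means and that the corresponding control sum is 59.6; their ratio gives the 37.6% difference. The function names are illustrative, and the study’s own calculations, performed in SPSS, may have used a different variant of d.

```python
import math


def pooled_sd(sd1: float, n1: int, sd2: float, n2: int) -> float:
    """Pooled standard deviation of two independent groups."""
    return math.sqrt(((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2))


def cohens_d(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Cohen's d for two independent groups, using the pooled SD."""
    return (m1 - m2) / pooled_sd(sd1, n1, sd2, n2)


# Decision compliance, experimental vs. control (n = 60 each), Section 4.2.
d_compliance = cohens_d(25.6, 3.2, 60, 18.9, 4.5, 60)
print(f"{d_compliance:.2f}")  # 1.72

# Composite scores as sums of the three subscale means.
experimental_composite = 25.6 + 32.1 + 24.3  # 82.0
control_composite = 18.9 + 23.5 + 17.2       # 59.6
difference = (experimental_composite / control_composite - 1) * 100
print(f"{difference:.1f}%")  # 37.6%
```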

Qualitative analysis of decision protocols further revealed that experimental group participants more frequently referenced specific ethical principles (68% vs. 32% of control participants) and proposed technically feasible solutions (75% vs. 41%). This suggests that the dual-loop mechanism helps bridge the theory–practice gap identified in Section 2.2.1, transforming abstract ethical knowledge into actionable decision-making skills.

4.3. User Experience of the Full Life-Cycle AI Ethics Education Platform

The third experiment evaluated user acceptance of the platform’s core functionalities through a mixed-methods approach involving 20 instructors and 180 students. After four weeks of platform use, participants completed a five-point Likert-scale questionnaire measuring their acceptance of the platform’s functionalities (the instrument is described in Appendix A.3), supplemented by focus group interviews. The interview data were analyzed using thematic analysis, as detailed in Appendix A.4.

As shown in Table 3, all platform functionalities received favorable mean ratings (≥3.8), with overall means of 4.2 ± 0.6 for instructors and 4.1 ± 0.7 for students. The virtual laboratory module received the highest rating (4.4), with participants praising its “high-fidelity simulation of real research dilemmas” and “safe environment for ethical experimentation.” The ethics reasoning engine received the lowest, though still positive, ratings, with feedback pointing to opportunities to improve the “explanation depth of multi-perspective analyses.”

5. Discussion

5.1. Theoretical Contributions and Framework Validation

The empirical findings of this study provide substantial evidence supporting the effectiveness of the proposed full-cycle AI ethics education system. The significant improvements across all four dimensions of the academic ethics framework (37.2% overall improvement, p < 0.001) suggest that systematic, technology-specific ethics education can address the theoretical lag identified in current curricula. The particularly strong gain in algorithmic fairness judgment (44.6%) is noteworthy, because this is a largely new domain of ethical consideration that traditional ethics frameworks have struggled to encompass. We attribute this gain to two likely factors: first, the novelty of the subject matter meant that students started from a lower baseline, leaving more room for growth; second, our teaching approach for this module relied heavily on hands-on, tool-based learning (e.g., IBM’s AI Fairness 360 toolkit). This has a clear implication for curriculum design: for complex, technical AI ethics topics, abstract theoretical discussion alone is insufficient. Instead, curricula should prioritize experiential learning environments in which students engage directly with real-world tools to understand and mitigate ethical risks.

The different improvement patterns of computer science and social science majors offer insight into disciplinary knowledge gaps. Social science students’ larger gain in research review ethics (+3.2 percentage points) may reflect their foundational training in research methodology, while computer science students’ larger gain in algorithmic fairness (+2.8 percentage points) suggests that technical understanding facilitates deeper engagement with AI-specific ethical challenges. These findings support the study’s emphasis on interdisciplinary collaboration in AI ethics education and imply that a “one-size-fits-all” approach is unlikely to be effective. To foster genuine interdisciplinary competence, universities should develop curricula that are co-designed by faculty from both technical and humanities disciplines and that organize mixed-major student teams for project-based work. Such an approach would let students draw on their own disciplinary strengths while learning from the perspectives and skills of peers from other backgrounds, creating a more holistic and robust learning experience.

5.2. Practical Implementation and Educational Effectiveness

The substantial impact of the “cognition-behavior” dual-loop training mechanism (a composite ethical decision-making score 37.6% higher than that of the control group) supports the theoretical premise that ethical competence requires both knowledge acquisition and behavioral practice. The large effect sizes (Cohen’s d ranging from 1.72 to 2.13) suggest that the dual-loop approach addresses a fundamental limitation of traditional ethics education: the persistent gap between moral knowledge and moral action identified by Blasi (1980).

The positive reception of the full life-cycle AI ethics education platform (overall mean ratings of 4.2/5.0 from instructors and 4.1/5.0 from students) suggests that comprehensive ethics education can feasibly be scaled through technology-enhanced learning environments. The virtual laboratory module’s highest rating (4.4/5.0) indicates that safe, high-fidelity simulation environments can provide authentic ethical learning experiences without real-world risks, addressing a critical challenge in ethics education.

5.3. Ethical Considerations and Feasibility Challenges

While the intelligent monitoring system is technically feasible, its implementation warrants careful ethical consideration. Such systems risk creating a culture of surveillance that could undermine the very trust and autonomy needed to foster genuine ethical development. The goal of ethics education should be to cultivate internal moral reasoning, not merely external compliance enforced by monitoring. We acknowledge that a system designed to detect misconduct could, if poorly implemented, stifle creativity and academic freedom and place students in an adversarial position with the institution.

Therefore, the deployment of such a system must be governed by strict ethical guidelines, including full transparency, a “pedagogy-first, not punishment-first” principle, robust data protection, and an opt-in or consent-based framework that respects student autonomy.

5.4. Limitations and Future Research Directions

Several significant limitations must be acknowledged. First, the experimental timeframes (4–8 weeks) may not capture the long-term retention of ethical learning. Second, the study was conducted at a single institution, which limits the generalizability of our findings to different cultural and institutional contexts. Third, our evaluation of user experience relied on self-reported data, which may be subject to social desirability bias. Fourth, a central tension exists between our educational goal of fostering intrinsic ethics and the extrinsic nature of the intelligent monitoring system.

Finally, and most critically from a methodological standpoint, the experimental design used to evaluate the “cognition-behavior” dual-loop mechanism lacked a matched control condition. The experimental group’s advantage may stem not only from the integrated “dual-loop” structure but also simply from the presence of practical training, which the control group lacked, and the current design cannot disentangle these effects. Future research should use a more robust design, such as adding a control group that receives non-integrated practice, to isolate the unique contribution of dual-loop integration.

6. Conclusions

This study evaluated a full-cycle educational system and found that it improved students’ AI ethics literacy and decision-making skills. Rather than merely summarizing our contributions, we conclude with forward-looking recommendations for translating these findings into practice. Our work suggests that developing responsible AI governance in academic settings requires a systemic rather than piecemeal approach.

For higher education institutions, we recommend a phased adoption of this full-cycle system. Institutions can begin by integrating the four-dimensional ethics framework into existing computer science and humanities courses to build foundational awareness. The next phase could involve implementing the “cognition-behavior” dual-loop mechanism through specialized workshops or dedicated modules, before scaling up to the full technology-enhanced platform. This incremental approach can facilitate institutional buy-in and resource allocation. For policymakers and accreditation bodies, our findings provide a potential benchmark for what constitutes comprehensive AI ethics education. We suggest that future accreditation standards could require institutions not only to offer ethics courses but also to demonstrate mechanisms for integrating theoretical knowledge with practical skills, similar to the model we have proposed. This would shift the focus from mere course provision to demonstrable student competency.

Ultimately, this research demonstrates that fostering responsible AI use requires moving beyond isolated courses to build an institutional culture of ethical reflection. The system presented here provides a concrete and scalable pathway for institutions to begin this critical journey, preparing students not just to be users of AI, but to be its conscientious architects and guardians.

Author Contributions

Conceptualization, X.X.; methodology, X.X.; validation, Y.G.; formal analysis, X.X. and F.M.; writing—review and editing, X.X. All authors have read and agreed to the published version of the manuscript.

Funding

This research was funded by the General Project of Higher Education Research Funding Program (NMGJXH-2025XB106), General Project of Hohhot Basic Research and Applied Basic Research Program (2025-GUI-JI-44), Postgraduate Research Innovation Fund Project of Inner Mongolia Normal University (CXJJS25040), and General Program of Inner Mongolia Natural Science Foundation (2023MS06016).

Institutional Review Board Statement

The study was conducted in accordance with the Declaration of Helsinki and approved by the Ethics Committee of Inner Mongolia Normal University (protocol code 2025XB106; date of approval: 22 May 2025).

Informed Consent Statement

Informed consent was obtained from all subjects involved in the study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author.

Conflicts of Interest

The authors declare no conflicts of interest.

Abbreviations

The following abbreviations are used in this manuscript:

| AI | Artificial Intelligence |

Appendix A. Research Instruments and Procedures

Appendix A.1. AI Ethics Knowledge Test

The AI ethics knowledge test was a 30-item multiple-choice questionnaire developed by the research team to assess students’ understanding of the four core dimensions of the ethics framework. Each dimension (Research Review, Data Privacy, Algorithmic Fairness, Intellectual Property) was represented by seven to eight questions. The items were developed based on a review of existing ethics literature and recent case studies involving Gen-AI. The total score ranged from 0 to 30.

Sample Item (Algorithmic Fairness Dimension):

A university uses an AI model to predict student success. The model was trained on historical data showing that students from high-income backgrounds have higher graduation rates. As a result, the model tends to give higher success predictions to new applicants from similar backgrounds. This is primarily an example of:

- (a) Data privacy violation
- (b) Algorithmic bias
- (c) Intellectual property infringement
- (d) Lack of model transparency
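The disparity this item describes can be quantified with standard group-fairness metrics. The sketch below computes two of them in plain Python: the statistical parity difference (the unprivileged group’s positive-prediction rate minus the privileged group’s) and the disparate impact ratio (the ratio of those two rates). Toolkits such as IBM’s AI Fairness 360, mentioned in Section 5.1, provide these metrics, but this code deliberately does not call that library. The group labels, the class and function names, and the ten predictions per group are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Prediction:
    group: str               # hypothetical group label, e.g. "high_income"
    predicted_success: bool  # the model's binary prediction


def selection_rate(preds, group):
    """Share of applicants in `group` whom the model predicts will succeed."""
    members = [p for p in preds if p.group == group]
    if not members:
        raise ValueError(f"no applicants in group {group!r}")
    return sum(p.predicted_success for p in members) / len(members)


def fairness_report(preds, privileged, unprivileged):
    """Statistical parity difference and disparate impact ratio."""
    p_priv = selection_rate(preds, privileged)
    p_unpriv = selection_rate(preds, unprivileged)
    if p_priv == 0:
        raise ValueError("privileged group has no positive predictions")
    return {
        "statistical_parity_difference": p_unpriv - p_priv,
        "disparate_impact": p_unpriv / p_priv,
    }


# Hypothetical predictions: 8 of 10 high-income and 4 of 10 low-income
# applicants are predicted to succeed.
preds = (
    [Prediction("high_income", True)] * 8
    + [Prediction("high_income", False)] * 2
    + [Prediction("low_income", True)] * 4
    + [Prediction("low_income", False)] * 6
)
report = fairness_report(preds, privileged="high_income", unprivileged="low_income")
print(report)
```

Here the low-income group receives a positive prediction at half the rate of the high-income group (disparate impact of 0.5). A widely used heuristic, the “four-fifths rule,” treats a ratio below 0.8 as evidence of adverse impact.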

Sample Item (Data Privacy Dimension):

Which of the following principles is a core component of the General Data Protection Regulation (GDPR)?

- (a) Data should be stored indefinitely for future use.
- (b) Data can be collected for any purpose once consent is given.
- (c) Data collection should be limited to what is necessary for the specified purpose.
- (d) Anonymized data is not subject to any privacy considerations.
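Option (c) paraphrases the data minimisation principle in Article 5(1)(c) of the GDPR, under which personal data must be “adequate, relevant and limited to what is necessary” for the purposes for which they are processed. One minimal way to express the principle in code is to keep only the fields declared for a given purpose and to refuse processing for undeclared purposes. The purpose names, field lists, and function name below are hypothetical, and the sketch is a teaching illustration rather than a compliance mechanism.

```python
# Hypothetical mapping from each declared processing purpose to the
# fields it actually needs.
PURPOSE_FIELDS = {
    "course_enrollment": {"student_id", "name", "program"},
    "ethics_quiz_scoring": {"student_id", "quiz_answers"},
}


def minimize(record: dict, purpose: str) -> dict:
    """Return a copy of `record` containing only the fields needed for `purpose`."""
    if purpose not in PURPOSE_FIELDS:
        # Refuse to process data for a purpose that has not been declared.
        raise ValueError(f"undeclared purpose: {purpose!r}")
    allowed = PURPOSE_FIELDS[purpose]
    return {key: value for key, value in record.items() if key in allowed}


student = {
    "student_id": "S001",
    "name": "Example Student",
    "program": "Computer Science",
    "home_address": "(not needed for scoring)",
    "family_income": "(not needed for scoring)",
    "quiz_answers": ["b", "c"],
}
print(minimize(student, "ethics_quiz_scoring"))
# {'student_id': 'S001', 'quiz_answers': ['b', 'c']}
```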

Appendix A.2. Ethical Dilemma Scenario and Evaluation Criteria

Participants’ ethical decision-making capabilities were assessed using a series of standardized scenarios. For each scenario, participants described their intended course of action and provided a rationale. Two independent raters then scored the responses using a predefined rubric (inter-rater reliability κ = 0.89).
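With two raters, a reliability coefficient reported as κ is conventionally Cohen’s kappa, κ = (p_o − p_e)/(1 − p_e), where p_o is the observed proportion of agreement and p_e is the agreement expected by chance from each rater’s marginal label frequencies. The sketch below assumes unweighted Cohen’s kappa over categorical labels (for example, rubric score bands); for ordinal bands a weighted kappa, and for continuous scores an intraclass correlation coefficient, are common alternatives. The function name and the pass/fail labels in the usage example are hypothetical.

```python
from collections import Counter


def cohens_kappa(rater_a, rater_b):
    """Unweighted Cohen's kappa for two raters labelling the same items."""
    if len(rater_a) != len(rater_b) or not rater_a:
        raise ValueError("raters must label the same non-empty set of items")
    n = len(rater_a)
    # Observed agreement: share of items given identical labels.
    p_o = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    counts_a, counts_b = Counter(rater_a), Counter(rater_b)
    labels = counts_a.keys() | counts_b.keys()
    p_e = sum(counts_a[label] * counts_b[label] for label in labels) / n**2
    if p_e == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (p_o - p_e) / (1 - p_e)


# Hypothetical pass/fail judgments on 50 responses: the raters agree on
# 35 of them (20 "pass", 15 "fail").
rater_a = ["pass"] * 20 + ["pass"] * 5 + ["fail"] * 10 + ["fail"] * 15
rater_b = ["pass"] * 20 + ["fail"] * 5 + ["pass"] * 10 + ["fail"] * 15
print(f"{cohens_kappa(rater_a, rater_b):.2f}")  # 0.40
```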

Sample Scenario:

You are a graduate student working on a literature review for your thesis, and you are under a tight deadline. To speed up the process, you use a sophisticated Gen-AI tool (such as ChatGPT-4) to summarize dozens of academic papers. The tool generates well-written paragraphs that accurately reflect the content of the original papers. You then copy and paste these AI-generated summaries into your literature review chapter, weaving them together with your own topic sentences and transitions. You do not explicitly mention the use of the AI tool in your methodology or acknowledgements.

Question: Describe your assessment of this course of action. What, if any, are the ethical issues involved? What would be the most ethically responsible way to use such a tool in this context?

Evaluation Criteria (as reported in Table 2):

Decision Compliance (0–30 points): Scored based on how well the participant’s proposed action aligns with established academic integrity norms. A higher score indicates a clear rejection of plagiarism and a proposal for transparent use.

Decision Rationality (0–40 points): Scored based on the depth and coherence of the participant’s reasoning. A higher score reflects the ability to identify multiple ethical dimensions (e.g., plagiarism, transparency, intellectual honesty) and reference relevant ethical principles.

Decision Timeliness (0–30 points): Scored based on the participant’s ability to propose a proactive and ethically sound solution, rather than a reactive or ethically ambiguous one.
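Because the three criteria carry maxima of 30, 40, and 30 points, summing them yields a composite on a 0–100 scale. The sketch below assumes, for illustration, that each participant’s composite is this unweighted sum and validates every criterion against its range before summing; the class name, field names, and example scores are hypothetical.

```python
from dataclasses import dataclass

# Maximum points per criterion, as defined in the rubric above.
MAX_POINTS = {"compliance": 30, "rationality": 40, "timeliness": 30}


@dataclass(frozen=True)
class RubricScore:
    compliance: float
    rationality: float
    timeliness: float

    def __post_init__(self):
        # Reject any criterion score outside its allowed range.
        for criterion, maximum in MAX_POINTS.items():
            value = getattr(self, criterion)
            if not 0 <= value <= maximum:
                raise ValueError(f"{criterion} must be in [0, {maximum}], got {value}")

    @property
    def composite(self) -> float:
        """Composite score on a 0-100 scale (unweighted sum of the criteria)."""
        return self.compliance + self.rationality + self.timeliness


score = RubricScore(compliance=26, rationality=33, timeliness=24)
print(score.composite)  # 83
```

Validating at construction time means an out-of-range rating is caught when it is entered, not later when composites are aggregated.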

Appendix A.3. Platform User Experience Questionnaire

User acceptance was measured using a 15-item questionnaire on a five-point Likert scale (1 = Strongly Disagree, 5 = Strongly Agree). The instrument was designed to assess the perceived usability and effectiveness of the platform’s core functionalities. The overall scale demonstrated high internal consistency (Cronbach’s α = 0.92).
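Cronbach’s α compares the sum of the individual item variances with the variance of respondents’ total scores: α = k/(k − 1) · (1 − Σσᵢ²/σ²_total), where k is the number of items. The sketch below implements this standard formula for a respondents × items matrix using only Python’s standard library; the function name and the four hypothetical respondents answering three items are unrelated to the study’s data.

```python
from statistics import pvariance


def cronbach_alpha(responses):
    """Cronbach's alpha for a respondents x items matrix of Likert scores.

    Population variances are used throughout; the n in each variance
    cancels in the ratio, so sample variances would give the same alpha.
    """
    k = len(responses[0]) if responses else 0
    if k < 2 or any(len(row) != k for row in responses):
        raise ValueError("need a rectangular matrix with at least two items")
    items = list(zip(*responses))  # one tuple of scores per item
    item_variance_sum = sum(pvariance(item) for item in items)
    total_variance = pvariance([sum(row) for row in responses])
    if total_variance == 0:
        raise ValueError("total scores have zero variance")
    return k / (k - 1) * (1 - item_variance_sum / total_variance)


# Hypothetical five-point Likert responses: 4 respondents x 3 items.
responses = [
    [4, 5, 4],
    [3, 4, 3],
    [5, 5, 4],
    [2, 3, 2],
]
print(f"{cronbach_alpha(responses):.3f}")  # 0.975
```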

Sample Items:

(Dynamic Situational Case Library) “The case library provided relevant and challenging real-world scenarios.”

(Virtual Laboratory) “The virtual laboratory was a safe and effective environment to practice my ethical decision-making skills.”

(Ethics Reasoning Engine) “The feedback from the reasoning engine helped me understand the ethical dilemmas from multiple perspectives.”

(Collaborative Learning Module) “Discussing the cases with other students on the platform enhanced my learning.”

(Assessment and Feedback System) “The feedback system gave me useful insights into my learning progress.”

Appendix A.4. Qualitative Data Analysis Procedure

The focus group data were analyzed using thematic analysis, following the established six-phase procedure:

Familiarization with data: Two researchers independently read and re-read the interview transcripts to immerse themselves in the data.

Generating initial codes: The researchers systematically coded interesting features of the data across the entire dataset.

Searching for themes: The codes were collated into potential themes.

Reviewing themes: The researchers reviewed the themes, checking if they worked in relation to the coded extracts and the entire dataset.

Defining and naming themes: The specifics of each theme were refined, and clear, descriptive names were assigned.

Producing the report: The final analysis was written up, weaving together the thematic narrative with compelling extract examples.

References

- Al Nabhani, F., Hamzah, M. B., & Abuhassna, H. (2025). The role of artificial intelligence in personalizing educational content: Enhancing the learning experience and developing the teacher’s role in an integrated educational environment. Contemporary Educational Technology, 17, ep573.

- Al-Zahrani, A. M. (2024). Exploring the impact of artificial intelligence on higher education: The dynamics of ethical, social, and educational implications. Humanities and Social Sciences Communications, 11, 912.

- Balalle, H., & Pannilage, S. (2025). Reassessing academic integrity in the age of AI: A systematic literature review on AI and academic integrity. Social Sciences & Humanities Open, 11, 101299.

- Barocas, S., Hardt, M., & Narayanan, A. (2017). Fairness and machine learning. MIT Press.

- Barocas, S., & Selbst, A. D. (2016). Big Data’s disparate impact. California Law Review, 104, 671–732.

- Blasi, A. (1980). Bridging moral cognition and moral action: A critical review of the literature. Psychological Bulletin, 88, 1–45.

- Chan, C. K. Y., & Lee, K. K. H. (2024). A meta systematic review of artificial intelligence in higher education: A call for increased ethics, collaboration and rigour. International Journal of Educational Technology in Higher Education, 21, 4.

- Clark, R. C., & Mayer, R. E. (2016). E-learning and the science of instruction (4th ed.). Wiley.

- Cowls, J., Bjelobaba, S., Glendinning, I., Khan, Z. R., Santos, R., Pavletic, P., & Kravjar, J. (2023). ENAI recommendations on the ethical use of artificial intelligence in education. International Journal for Educational Integrity, 19, 12.

- Crawford, J., Cowling, M., & Allen, K. A. (2023). Leadership is needed for ethical ChatGPT: Character, assessment, and learning using artificial intelligence (AI). Journal of University Teaching & Learning Practice, 20, 1–25.

- Deterding, S., Dixon, D., Khaled, R., & Nacke, L. (2011, September 29–30). From game design elements to gamefulness: Defining gamification. 15th International Academic MindTrek Conference (pp. 9–15), Tampere, Finland.

- Dwork, C., & Roth, A. (2014). The algorithmic foundations of differential privacy. Foundations and Trends in Theoretical Computer Science, 9, 211–407.

- Floridi, L., Cowls, J., Beltrametti, M., Chatila, R., Chazerand, P., Dignum, V., Luetge, C., Madelin, R., Pagallo, U., Rossi, F., Schafer, B., & Valcke, P. (2018). AI4People—An ethical framework for a good AI society: Opportunities, risks, principles, and recommendations. Minds and Machines, 28, 689–707.

- Friedman, B., & Hendry, D. G. (2019). Value sensitive design: Shaping technology with moral imagination. MIT Press.

- Gal, Y., & Ghahramani, Z. (2016, June 20–22). Dropout as a Bayesian approximation: Representing model uncertainty in deep learning. 33rd International Conference on Machine Learning (pp. 1050–1059), New York, NY, USA.

- IEEE. (2017). IEEE standard for ethical design process (IEEE Std 2857-2021). IEEE.

- Jobin, A., Ienca, M., & Vayena, E. (2019). The global landscape of AI ethics guidelines. Nature Machine Intelligence, 1, 389–399.

- Johnson, R., & Smith, K. (2025). Student perspectives on AI tool usage in academic work: A multi-institutional study. Computers & Education, 198, 104–118.

- Lave, J., & Wenger, E. (1991). Situated learning: Legitimate peripheral participation. Cambridge University Press.

- Li, T., Yusof, S. B., Abuhassna, H., & Pan, Q. (2026). The impact of AI on higher education trends and educational horizons. In B. Edwards, H. Abuhassna, D. Olugbade, O. Ojo, & W. Jaafar Wan Yahaya (Eds.), AI in education, governance, and leadership: Adoption, impact, and ethics (pp. 1–32). IGI Global Scientific Publishing.

- Lund, B. D., Wang, T., Mannuru, N. R., Nie, B., Shimray, S., & Wang, Z. (2023). ChatGPT and a new academic reality: Artificial intelligence-written research papers and the ethics of the large language models in scholarly publishing. Journal of the Association for Information Science and Technology, 74, 570–581.

- Lundberg, S. M., & Lee, S. I. (2017). A unified approach to interpreting model predictions. In Advances in Neural Information Processing Systems, 30, 4765–4774.

- Mehrabi, N., Morstatter, F., Saxena, N., Lerman, K., & Galstyan, A. (2021). A survey on bias and fairness in machine learning. ACM Computing Surveys, 54, 115.

- Møgelvang, A., Bjelland, C., Grassini, S., & Ludvigsen, K. (2024). Gender differences in the use of generative artificial intelligence chatbots in higher education: Characteristics and consequences. Education Sciences, 14(12), 1363.

- Narvaez, D., & Rest, J. (1995). The four components of acting morally. In W. M. Kurtines, & J. L. Gewirtz (Eds.), Moral development: An introduction (pp. 385–400). Allyn and Bacon.

- Nazarovets, S., & Teixeira da Silva, J. A. (2024). ChatGPT as an “author”: Bibliometric analysis to assess the validity of authorship. Accountability in Research, 1–11.

- Plass, J. L., Moreno, R., & Brünken, R. (2020). Cognitive load theory. Cambridge University Press.

- Ribeiro, M. T., Singh, S., & Guestrin, C. (2016, August 13–17). Why should I trust you? Explaining the predictions of any classifier. 22nd ACM SIGKDD International Conference on Knowledge Discovery and Data Mining (pp. 1135–1144), San Francisco, CA, USA.

- Sweller, J., Ayres, P., & Kalyuga, S. (2011). Cognitive load theory. Springer.

- Wallach, W., & Allen, C. (2008). Moral machines: Teaching robots right from wrong. Oxford University Press.

- Wilkinson, M. D., Dumontier, M., Aalbersberg, I. J., Appleton, G., Axton, M., Baak, A., Blomberg, N., Boiten, J. W., da Silva Santos, L. B., Bourne, P. E., Bouwman, J., Brookes, A. J., Clark, T., Crosas, M., Dillo, I., Dumon, O., Edmunds, S., Evelo, C. T., Finkers, R., … Mons, B. (2016). The FAIR guiding principles for scientific data management and stewardship. Scientific Data, 3, 160018.

- Winfield, A. F., & Jirotka, M. (2018). Ethical governance is essential to building trust in robotics and artificial intelligence systems. Philosophical Transactions of the Royal Society A, 376, 20180085.

- Yanisky-Ravid, S., & Liu, X. (2018). When artificial intelligence systems produce inventions: The 3A era and an alternative model for patent law. Cardozo Law Review, 39, 2215–2263.

- Zaim, M., Arsyad, S., Waluyo, B., Ardi, H., Al Hafizh, M., Zakiyah, M., Syafitri, W., Nusi, A., & Hardiah, M. (2024). AI-powered EFL pedagogy: Integrating generative AI into university teaching preparation through UTAUT and activity theory. Computers and Education: Artificial Intelligence, 7, 100335.

- Zawacki-Richter, O., Marín, V. I., Bond, M., & Gouverneur, F. (2019). Systematic review of research on artificial intelligence applications in higher education: Where are the educators? International Journal of Educational Technology in Higher Education, 16, 39.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).