3. Design-Based Implementation Development and Research Approach

The project aimed to iteratively design, test, and disseminate an AR-supported spatial orientation classroom and home intervention, particularly for low-income communities. The collaborative team, made up of public media app developers and researchers, created an 8-week intervention including an AR app, hands-on activities, a digital Teacher’s Guide, and a Family Fun guide.

Using a design-based implementation research (DBIR) approach, the research group conducted three studies, namely (1) a formative user study (tested the prototype version), (2) a pilot study (tested alpha version), and (3) a comparison study (tested beta version). These studies gathered data from educators, parents, and children through gameplay observations, surveys, interviews, and child assessments. Each version was revised based on feedback, leading to the final version that was published on the Apple App Store for free public access.

The development process involved internal iterations for usability, learning, and design improvements. Feedback from advisors and teachers was incorporated into subsequent iterations. The number of iterations varied, depending on the technical challenges, available time, and study needs. This iterative process was essential due to the unpredictability of how AR concepts translate into real-world experiences. Few educational games have feedback loops to prioritize learning as part of the process (Hirsh-Pasek et al., 2015). Designs that worked well in theory often needed extensive revision when implemented. Frequent testing allowed the team to adapt to the constraints of AR and refine the experience to maximize learning and usability.

3.1. Selection of Technology

Early in the project, we chose to develop a tablet-based AR app, specifically for the iPad, because its larger screen supports better collaborative play and easier adult (teacher and parent) supervision. To ensure broad usability, we used Apple's ARKit framework without relying on LiDAR, which keeps the app compatible with standard iPads and better suited for low-income communities.

3.2. Overview of AR Game

The AR app, titled AR Adventures, is a tablet (specifically iPad) app that leverages WGBH Educational Foundation’s popular digital brand entitled Early Math with Gracie & Friends and is available for free in the Apple App Store. It was designed for approximately 15 min of individual child play (roughly the same amount of time as a typical learning center in the classroom), and to be played several times throughout the 8-week spatial-thinking curriculum. The final app includes two AR experiences guided by the Gracie character and her robot friend, Tulip (Figure 2). Each experience leverages AR technology in unique ways to support young children’s spatial orientation.

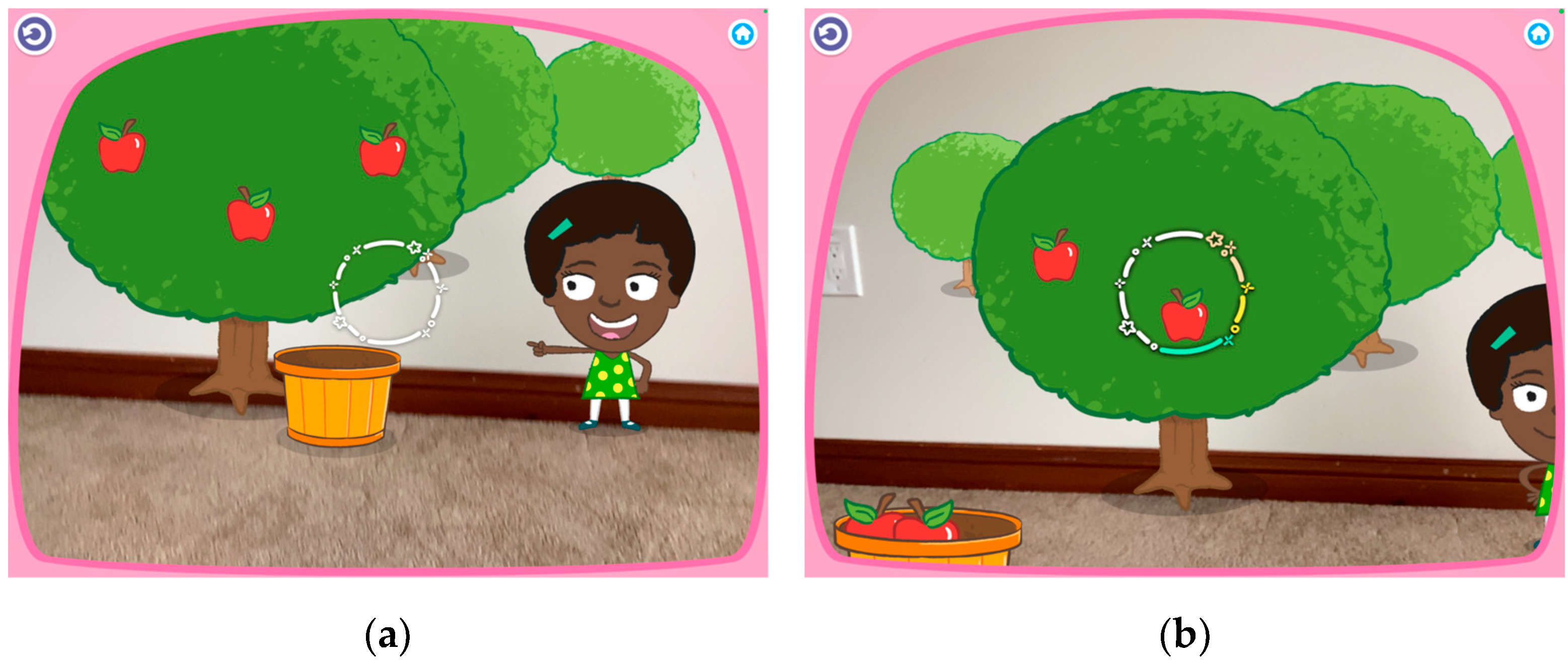

3.2.1. Sparkly Circle Mechanic

Children interact with AR objects using a visual tool known as the “sparkly circle,” which helps children pick up or drop off an object. The sparkly circle is a translucent circle made up of arcs and stars at the center of the child’s screen (Figure 3a). Children can point the sparkly circle at an AR object in their real-world environment to interact with it. When placed over an object that can be either collected or given, the sparkly circle shows a rainbow color (Figure 3b) to indicate that it is picking up or dropping off the object. This encourages young children to hold the tablet long enough to complete the transaction.
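The hold-to-interact behavior described above can be illustrated with a small dwell timer. This is a minimal sketch under stated assumptions, not the app's implementation: the class name `DwellReticle`, the one-second hold time, and the string object names are all hypothetical, and the paper does not report the actual hold duration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class DwellReticle:
    """Screen-center reticle that completes an action after a sustained hold."""

    dwell_seconds: float = 1.0  # hypothetical hold time; not a value from the paper
    held_for: float = 0.0
    target: Optional[str] = None

    def update(self, under_reticle: Optional[str], dt: float) -> Optional[str]:
        """Advance by dt seconds; return the object's name once the hold completes."""
        if under_reticle is None or under_reticle != self.target:
            # The reticle left the object (or moved to a new one): restart the hold.
            self.target = under_reticle
            self.held_for = 0.0
            return None
        self.held_for += dt
        if self.held_for >= self.dwell_seconds:
            completed = self.target
            self.target = None
            self.held_for = 0.0
            return completed
        return None

    @property
    def is_active(self) -> bool:
        """True while the reticle is over an interactable object (the rainbow state)."""
        return self.target is not None
```

In this sketch the game loop would call `update` once per frame with whichever collectible or receivable object lies under the screen center, and would draw the rainbow animation whenever `is_active` is true.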

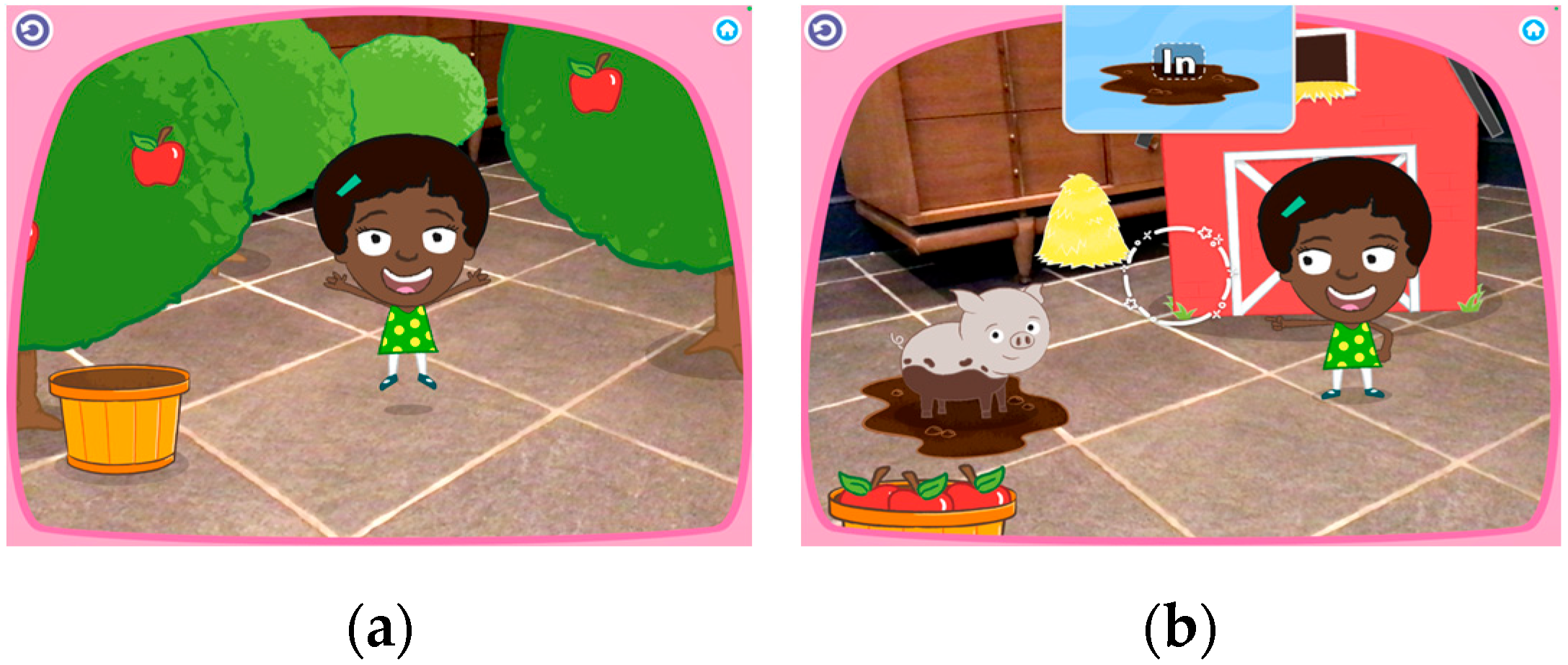

3.2.2. Picking Apples and Feeding Animals

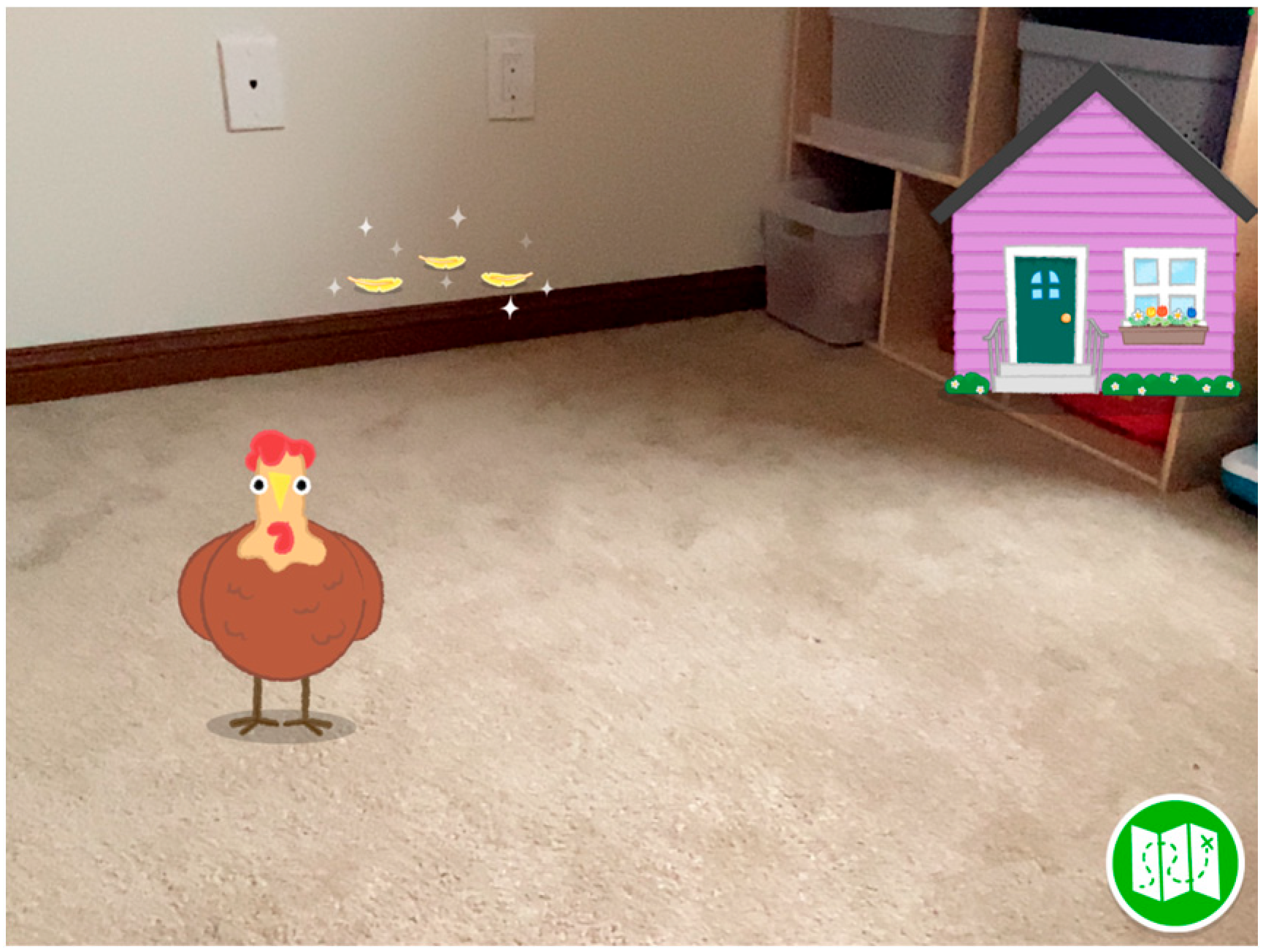

In Around the Farm, children can pick digital apples from AR trees in their real-world environment and feed them to AR animals (Figure 4a,b). This game leverages AR to help children practice spatial vocabulary by embodying it as they move around the AR space with spatial tasks. They help Gracie and Tulip navigate a touchscreen map (Figure 4a) around a mud puddle, under a rainbow, over a bridge, and so on to get Tulip to the apple orchard. Once Tulip arrives at the orchard on the map, the game zooms in on that spot and automatically opens the AR experience. An image of Gracie’s hands lifting an iPad (Figure 4b) and supporting audio encourage the child to lift the iPad.

Once the child’s iPad reaches a certain tilt, an AR Gracie appears embedded in the child’s real-world environment and directs the child to use the sparkly circle to pick up a digital basket. As the child holds the sparkly circle on the basket, the sparkly circle becomes animated with colors rotating through the circle. Then, the AR basket disappears from its real-world location and is locked to the lower left corner of the child’s screen, signifying that the basket has been “collected” (Figure 4a). Gracie then instructs children to collect apples from the AR tree using the same sparkly circle. When an apple is “collected,” it disappears from the tree and reappears in the basket. Once the apples are collected, children turn their view back to the AR Gracie, who tells them to go back to the map. Back on the map (Figure 5a), children navigate Tulip from the orchard to the cow pasture and enter the AR scene there. The child follows Gracie’s spatial directions to locate and “feed” the animals. For example, prompted with “feed the cow between the corn stalks,” the child places the sparkly circle on a cow for a moment to “feed” the cow an apple. After all cows are fed, they then return to the apple orchard, navigate to the pig pen, and feed AR pigs apples. Once all animals are fed, the child wins the game.

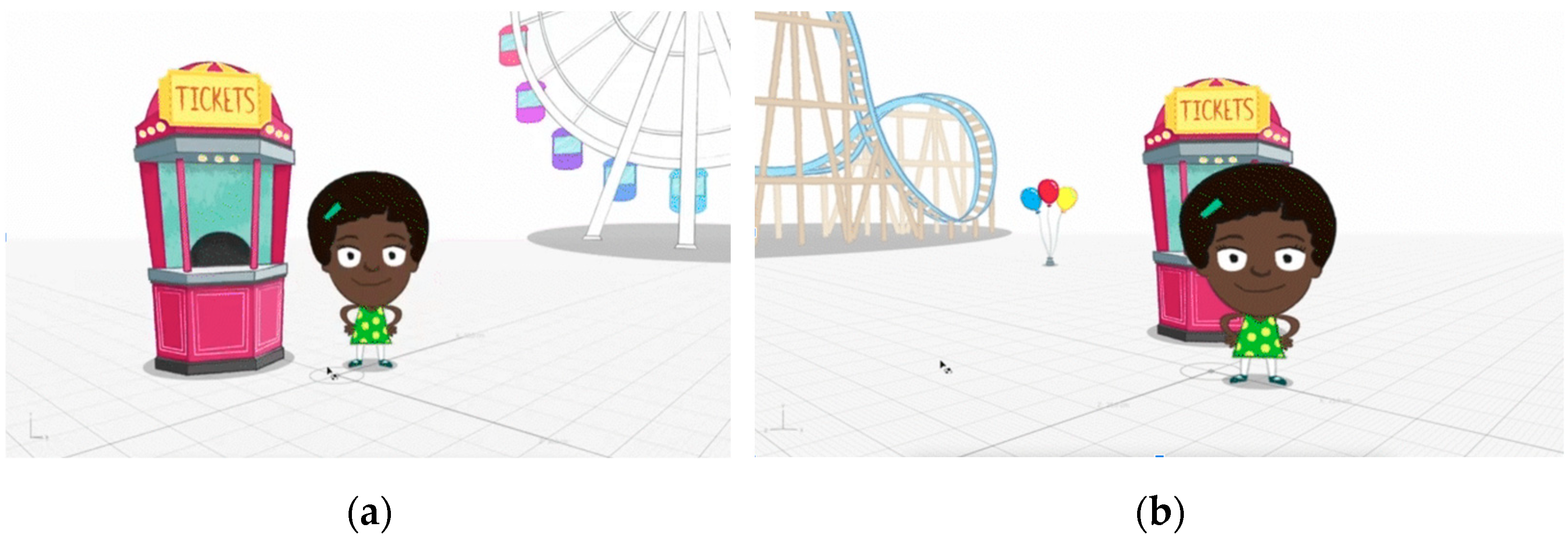

3.2.3. Using AR Environment to Build a 2D Map

In Map the Fair, children build a digital map of a “county fair” to show where landmarks (e.g., rides and a ticket booth) are located within the AR fair scene (Figure 6a–c). This game leverages AR by allowing a child to create a 2D abstract map based on what they see in their AR-enhanced world (Figure 6a) and observe how the spatial relationships in their real world translate into that abstract, symbolic depiction (Figure 6c). The child starts with a blank fair map without any landmarks. Gracie instructs the child to explore the fair and discover where everything is located before they make their map. The game then transitions to the AR fair scene, where Gracie instructs the child to get a ticket from the ticket booth. The child uses the sparkly circle to collect a ticket, which is then locked to the bottom left of the screen. After the child collects a ticket, Gracie provides spatial clues about which ride to visit. When the child finds the AR ride in their real-world environment, they use the sparkly circle to give the ticket to the ride. The ride animates, and the child receives a sticker of that ride (Figure 6b) that they then place on the map using spatial relations they observed in the AR fair scene (Figure 6c). For example, the child needs to place the Ferris wheel to the right of Gracie, or the rollercoaster between the balloons. Once the map is complete with all four rides on the map, the child wins the game.

3.3. Observational Methods

Data used to generate the recommendations in this paper included data from teachers (Lewis Presser et al., 2025) and direct observations of children’s gameplay (Braham et al., under review). As this paper is a design case intended to share lessons learned in the design of the AR app, we will summarize those methods and refer readers to the deeper descriptions in other sources. Teacher interviews and surveys were collected at the end of each study (pilot study and comparison study) and used to understand teachers’ observations and perspectives on the use of the AR app in the classroom. We also individually observed a sample of children playing. Children wore a head-mounted GoPro camera on a headband during individual 5–10 min gameplay sessions in their classrooms, allowing researchers to capture detailed video of the digital content on the tablet screen, the physical classroom environment, and the children’s verbal and motor interactions without showing their faces, thus preserving anonymity. Researchers followed a standardized protocol to guide gameplay and provided assistance as needed. Later, videos were coded by independent researchers using a systematic set of prompts to analyze children’s navigation, engagement, learning of game mechanics, adult support required, and technical challenges encountered. This approach enabled rich, nuanced data collection from the child’s viewpoint, capturing both behavioral and contextual factors influencing their interaction with the AR app.

Braham et al. (under review) found that young children were generally engaged and were interested in playing the AR game, with most children successfully learning the game mechanics either independently or with some adult support. The head-mounted cameras effectively captured children’s verbal reactions, gestures, and interactions from their perspective, revealing joyful responses to the AR experience. Teachers noted the game’s potential for reinforcing spatial vocabulary through active, embodied learning. Observations highlighted the need for additional visual and verbal scaffolding within the game to help children follow instructions amid classroom distractions. Technical challenges related to the visibility of AR objects in cluttered environments were identified and addressed by adjusting object appearance and adding visual cues. Overall, the study demonstrated that AR games combined with first-person video observation can effectively support and assess young children’s spatial learning. While the findings are important and reported elsewhere, our focus in this paper remains on distilling design principles based on the needs of young children, the content area, and the features of the technology.

3.4. App Features

Throughout development, the team explored various approaches to using AR technology for learning. Below, in Table 1, we share the main features and explorations of the tested builds of the app. There were many iterations of the app between these builds, and the team encountered interesting challenges that were unique to designing for AR experiences. Features that tested well in builds were kept, enhanced, and tested again in subsequent builds.

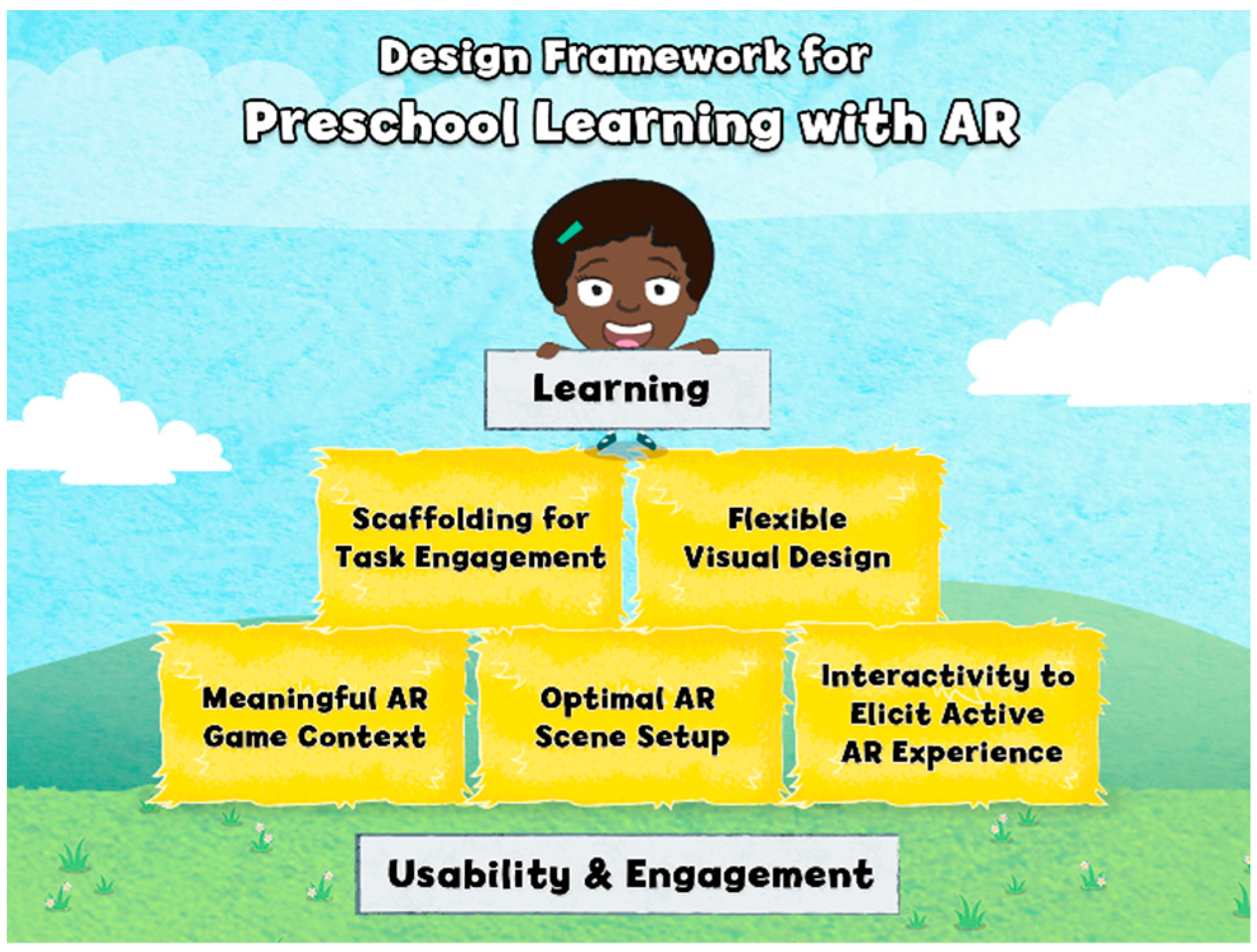

4. Design Considerations for Young Children’s Augmented Reality Learning Experience

This section outlines five design considerations for creators of engaging, usable, and supportive AR experiences for young children. Based on challenges and solutions from our collaborative DBIR process, these guidelines build on our framework to help designers develop effective AR learning tools for educational settings.

4.1. Design Consideration 1: Meaningful AR Game Context

AR experiences to support young children’s learning should (1) have clear, simple, and stepped-out tasks with supportive scaffolding and opportunities to rest the brain and arms; (2) use AR as a support to non-AR experiences; and (3) utilize the affordances of the technology.

Few AR games have interactive AR components and learning goals, especially for young children. There is often a learning curve to using the technology, and AR tasks can easily increase cognitive load. The AR experience can also be tiresome for children because to view the AR, a child needs to hold the device upright and move it around. Given these barriers to AR technology, we questioned not only how to support children in using this new technology for learning and make it an intuitive and beneficial experience, but also what the added benefit is to children beyond what could be learned without the technology.

Cognitive load refers to the limited amount of information one can hold in their working memory at any particular moment. As we explored different game approaches and ideas to determine what would be the best use of AR for supporting children’s spatial learning, we found that very clear, simple, and straightforward tasks in AR were successful. If a task has multiple steps, children should complete the steps one at a time rather than keeping all the steps in mind. Separating each of these steps allowed children to complete tasks intentionally and see the result of those tasks. Slowing the game, encouraging gentle movements, and decreasing stimuli from nonessential components also supported positive outcomes.

For example, in an early version of the Map the Fair game, children would view a touchscreen digital fair map that had no landmarks except for a representation of Gracie and a road. Children would view the fair in AR and be tasked with toggling back and forth between the map and the AR fair to place the landmarks that they viewed in AR on the digital map (Figure 7). This task proved to be too taxing for children, as it was hard to remember multiple landmarks and their relationship to one another all at the same time and then translate that information to the map representation. Figure 6 shows the highest level in that version of the game. We found that to reduce the cognitive load, children needed a much more stepped-out way to understand what to look at and what to do in AR, and then they were able to translate that into a representation on a map.

In the final version of Map the Fair (Figure 6), we have a mostly empty map, with a sticker of Gracie and the ticket booth in the middle, and landmarks on the left- and right-most sides of the map. The stickers on the map correspond to the location of the landmarks and Gracie in the AR view and help to constrain children’s view, allowing them to know where the AR scene starts and ends. In the AR fair scene, children are tasked with first getting instructions from Gracie, getting a ticket for a ride, and then finding a specific ride in relation to her. Back on the touchscreen map, children focus just on that one ride and that ride’s relationship to Gracie. The levels in the game continue by using a relationship with one other landmark (e.g., “in front of the trees”), and children translate that relationship from the AR fair to the touchscreen map, until the map is completed with all the landmarks at the fair. Movement and animation of the AR rides at the fair are used only as a reward for correctly completing the learning task, as opposed to having the rides and the fair in a constant state of motion, thus allowing children to better focus on the task.

AR technology often requires children to hold the tablet and move it around (e.g., hold the tablet up and move it side to side). This can create arm fatigue, which is a challenge, especially for young children (Tuli & Mantri, 2020). In both Map the Fair and Around the Farm, children are instructed to put the iPad down and complete a touchscreen task after each AR task. In Map the Fair, children drag and drop a digital sticker of a ride to its correct location on the map after they have visited that one ride in AR. In Around the Farm, children navigate the farm map after picking AR apples or feeding AR animals. This gives children a chance to rest their arms from holding the device upright for long periods of time. We observed that giving children a break from AR scenes allowed both their arms and minds to rest, helped maintain engagement in the game, and prevented children from getting fatigued.

We also observed that there is a cognitive benefit to having an interplay between the touchscreen interactions on a tablet and a supporting AR component. The interplay between the AR fair and the touchscreen map in Map the Fair provided a similar-to-real-life experience for how children would view landmarks in their real world and see them reflected on a map. The technology can connect the landmarks seen in the real world (specifically, the AR objects) and provide a scaffolded experience to the representation (those same objects on a touchscreen map). This is analogous to how children could be taught this spatial-representation content without the technology, but with the addition of scaffolding, checking for right and wrong answers, and embodied learning. It is important to note that there were still challenges with unifying the AR scene and the touchscreen representation of that scene. For example, in the early version of the fair map, the road was the connective component between landmarks, but that was not possible to show in AR. We had to convey relationships between AR objects (i.e., fair landmarks) without a continuous environment or connective tissue as one does in the real world. This was one of the main distinctions between the capabilities of AR versus what one might do with virtual reality (VR).

There seems to be a lot of potential for using AR for young children’s spatial learning when the AR is accompanied by touchscreen objects that can provide representations or abstractions of what is seen in an augmented real-world environment. This is a unique affordance of AR. We concluded that not every interaction in the game needs to be in AR, but instead AR can be used as a tool to support children and enhance or be enhanced by touchscreen representations and interactions to foster learning.

4.2. Design Consideration 2: Optimal AR Scene Setup

Keep the setup barrier as low as possible (use tilt detection until technology advances), and use visual tutorials to convey that (1) the device must be picked up (to see through the camera view), (2) there is a transition into an AR scene (to prepare children for the change in type of experience), and (3) some movement of the device is required (to set up the AR scene). Define the optimal environment and positioning for the ideal AR experience but develop for and test (often and with children) in a variety of environments, with differing distances to surfaces and differing angles of the device.

AR technology requires the user to activate the AR experience, something not familiar to young children who typically play other mobile or digital games. Setup requires image detection (using a QR code or some other designed image to anchor the digital object to in the real world), plane detection (using the device’s camera to detect a surface onto which a digital object can be placed), and/or tilt detection (using the device’s motion sensors to determine a suitable angle of the device that would create a meaningful AR view). Young, preliterate children are often used to instantaneous digital responses and have little patience for making the technology “work.” If they do not see a change almost immediately, they feel that the technology is broken. Thus, a setup process can induce frustration, impatience, heightened cognitive load, or the requirement of unwanted adult facilitation, ultimately triggering fatigue, decreased engagement, or complete disinterest. Because the setup is necessary, visual and audio scaffolds that do not rely on written instructions are needed throughout the process for preliterate children. It is essential to understand and overcome the burdens of setting up AR to use the technology for learning, especially in a classroom setting. By decreasing barriers for children’s setup, AR can be an additional intuitive piece of technology that will be able to enhance children’s learning experiences.

In our studies, we did not include image detection as an option for AR setup, as there is a barrier for children that would require them either to print out QR codes or images, or to have these images on some otherwise purchased material. We did not want access to a printer or the possession of dollar bills to be a barrier for parents or preschool educators using the app. Our team focused on exploring and testing plane detection and tilt detection with preschool children. We found that the plane detection took time for setup, which was often frustrating for children who expected instantaneous responses from the technology. Using plane detection also opened the possibility for more errors on the children’s side, such as not actually having a flat surface in front of them or being too close to other objects. Children felt that when setup took time or an error occurred, the app was broken, and they would also lose interest without adult facilitation. Thus, our findings suggest that plane detection was too cumbersome for such a young and preliterate age group.

The team determined that tilt detection was the optimal AR setup method for this age group. Tilt detection uses the device’s internal sensors to detect when children hold the device at a predetermined (by the app developer) angle to initiate the AR scene. We found that even with tilt detection, children need to be reminded during AR setup to hold the iPad vertically and move it (left, right, up, and down) very slowly to set the scene. Children also needed something to signify that the setup was complete. To address this, we included scaffolds to facilitate setup and decrease the likelihood of confusion, frustration, and/or fatigue. We included an animated tutorial of the character Gracie picking up an iPad into a vertical, upright position, shown from Gracie’s point of view (Figure 8). This allowed children to see that they needed to hold the iPad upright. This image stayed on-screen until the child’s iPad detected that it was in an adequate upright tilted position.

Once the tilt was detected within a range between fully upright and a slight downward angle away from children and toward the floor, the iPad would then zoom in on an image on the screen that represented where children would be going in AR, along with a sound effect. For example, zooming in on apple trees signaled that children would be entering an AR apple orchard. At the end of the zoom, the device’s camera view would be displayed. If the AR scene still had not been triggered (e.g., if a child had moved the iPad so that it was now not registering an adequate tilt), then children would get another animation of Gracie’s robot friend, Tulip, in the corner of the screen lifting and gently moving the iPad up and side to side (see Figure 9), along with supporting audio. This animation plays until children do the action and get an adequate tilt. The AR scene is then set up, which children can understand by seeing Gracie in front of their camera view.
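The tilt-triggered setup flow described above can be sketched as a tilt check plus a small state machine. This is an illustrative sketch only, not the app's code: the device coordinate convention (screen normal along +z), the 60 and 95 degree thresholds, and the stage names are all assumptions introduced here, and the paper does not report the exact angle range used.

```python
import math


def tilt_from_flat_degrees(gravity):
    """Angle between the screen normal (+z, assumed) and straight up.

    0 means the tablet lies flat and face-up; 90 means it is held fully upright.
    `gravity` is an (x, y, z) vector in device coordinates.
    """
    gx, gy, gz = gravity
    norm = math.sqrt(gx * gx + gy * gy + gz * gz)
    if norm == 0:
        raise ValueError("gravity vector must be non-zero")
    cos_angle = max(-1.0, min(1.0, -gz / norm))
    return math.degrees(math.acos(cos_angle))


def is_tilt_adequate(gravity, min_deg=60.0, max_deg=95.0):
    """True if the tablet is upright or tilted slightly down toward the floor.

    Both thresholds are hypothetical; max_deg leaves a little slack past upright.
    """
    return min_deg <= tilt_from_flat_degrees(gravity) <= max_deg


def next_stage(stage, tilt_ok):
    """Advance the setup flow; call once when the current stage's animation ends."""
    if stage == "lift_tutorial":    # Gracie lifting the iPad
        return "zoom_transition" if tilt_ok else "lift_tutorial"
    if stage == "zoom_transition":  # zoom into the destination image
        return "ar_scene" if tilt_ok else "move_reminder"
    if stage == "move_reminder":    # Tulip gently moving the iPad
        return "ar_scene" if tilt_ok else "move_reminder"
    return stage                    # "ar_scene" is terminal
```

The point of the sketch is that every stage either advances or repeats its visual scaffold, so a preliterate child never reaches a dead end that requires reading an error message.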

AR scenes are set up dynamically and are based on children’s environments, and so the distance and angle between a child’s device and surface determine where the AR scene will be anchored. Because designers of AR experiences essentially have no control over how a child positions their device from the surface, nor do they have control of the child’s environment, they have limited control of the actual experience. The AR experience can differ significantly between a child sitting at a table, a child sitting on the floor, and a child standing up, and it can even further differ based on the angle at which a child is tilting or holding the device (e.g., upright at 90 degrees or at 60 degrees). The child’s height, viewpoint, and distance between where they hold the device and the other objects in space must all be considered.

Our team also noticed that when the AR objects are anchored straight ahead of the camera’s view, they often look “stickered” onto the real world. We observed that AR objects look more real with a slight tilt, so in later versions of the app, we adjusted the AR objects to be anchored slightly lower than the height of the camera. This accounts for the slight downward tilt that children and adults often naturally play with, making the objects look truly grounded in the scene. Differences in the AR view when children play with different positions of the iPad can be seen in Figure 10a,b, from our alpha version of the app before anchoring adjustments were made. Note that these visual differences can greatly affect how spatial relationships can be discussed, thus affecting learning.

The amount of space around a child or the number, color, and size of surfaces around the child will affect where the AR objects are positioned and how they look. For example, a child sitting in front of a wall and a child sitting in the middle of a large, open room will experience the AR scenes differently. A child with surfaces near them (e.g., a table, a couch, a bookshelf, toys, or clutter) may see AR objects oddly placed onto objects in the real world and appearing at an unintended distance (because the real-world object got in the way of the AR object’s placement; Figure 11a,b).

Based on children’s movement in our studies, we recommend having children seated during the experience to prevent them from bumping into things in the real world and to prevent blocking the camera view or having quick movements in front of the camera. We have focused the design of our AR experiences on a child sitting either at a table or on the floor, holding the device above a surface, with an open space around the child. Our team has iteratively developed and tested our app to make the AR experience pleasant and fulfilling, even if slightly different, in these varying environments and modes of use.

Our team also found that the device needs a continuous, mostly unobstructed camera feed from the real world to create the AR experience. Excessive camera movements or people or objects blocking the camera’s view make it difficult for the device to process the input from the real-world environment and could prevent the app from working. This can be a challenge in a preschool classroom or in homes with multiple children because children may move into the device’s camera view, or a child will focus the camera on other children while playing to see them with the AR objects. When the camera is obstructed or the device moves too fast, we provide an overlay that allows children to restart the AR scene (Figure 12).

Children are very active and are rarely in the same position for long. As a result, preschool children’s learning and playing take many forms. Sometimes children will be at a table with a chair, sometimes on the floor, and sometimes standing up. Overcoming the challenges of designing for the many movements and positions of children and allowing flexibility for that in the design of AR experiences will help to meet children where they naturally are and where they want and expect technology to be. This allows children to use and engage with the technology for learning.

4.3. Design Consideration 3: Interactivity That Elicits an Active AR Experience

Design scenes with a few prominent AR objects and not with immersive scenes. Keep AR objects large enough to comfortably fill (and possibly extend beyond) the screen, and consider limiting AR objects to three primary ones in any one view. Consider a screen border, drop shadows, and/or other designs during the AR experience to make the AR objects stand out against the background of the real world.

We wanted children to navigate through a scene of AR objects and characters to explore spatial relationships, but the brand of assets and characters that were available only provided 2D assets. We chose to billboard our AR objects, thus allowing us to use the 2D style of the [blinded] brand. Billboarded images are rotated to always face the child. This is often used in 3D video games for distant background objects, such as trees and grass, or other objects that could reasonably appear the same from any angle. In our designs, we have used this technique for all AR objects, with the idea that children are in the center of a circular AR environment. Figure 13a,b show the billboarding approach of the exact same positioning of Gracie and the ticket booth when viewed from a different perspective; notice how Gracie and the ticket booth are always facing the child.
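As a rough illustration of billboarding, the rotation that turns a flat 2D asset toward the camera can be computed from the two positions. This is a generic sketch, not the app's code: it assumes a y-up coordinate system, an asset whose front face initially points along +z, and rotation about the vertical axis only; the function names are hypothetical.

```python
import math


def billboard_yaw(object_pos, camera_pos):
    """Yaw (radians, about the vertical y axis) that turns a flat asset to face the camera.

    Positions are (x, y, z) tuples. Height differences are ignored so the asset
    stays upright rather than leaning toward the viewer.
    """
    dx = camera_pos[0] - object_pos[0]
    dz = camera_pos[2] - object_pos[2]
    return math.atan2(dx, dz)


def facing_direction(yaw):
    """Unit vector the asset's front face points along after applying the yaw."""
    return (math.sin(yaw), 0.0, math.cos(yaw))
```

Recomputing this yaw every frame for each object keeps every asset turned toward the child as the tablet moves, which is the behavior the figure illustrates.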

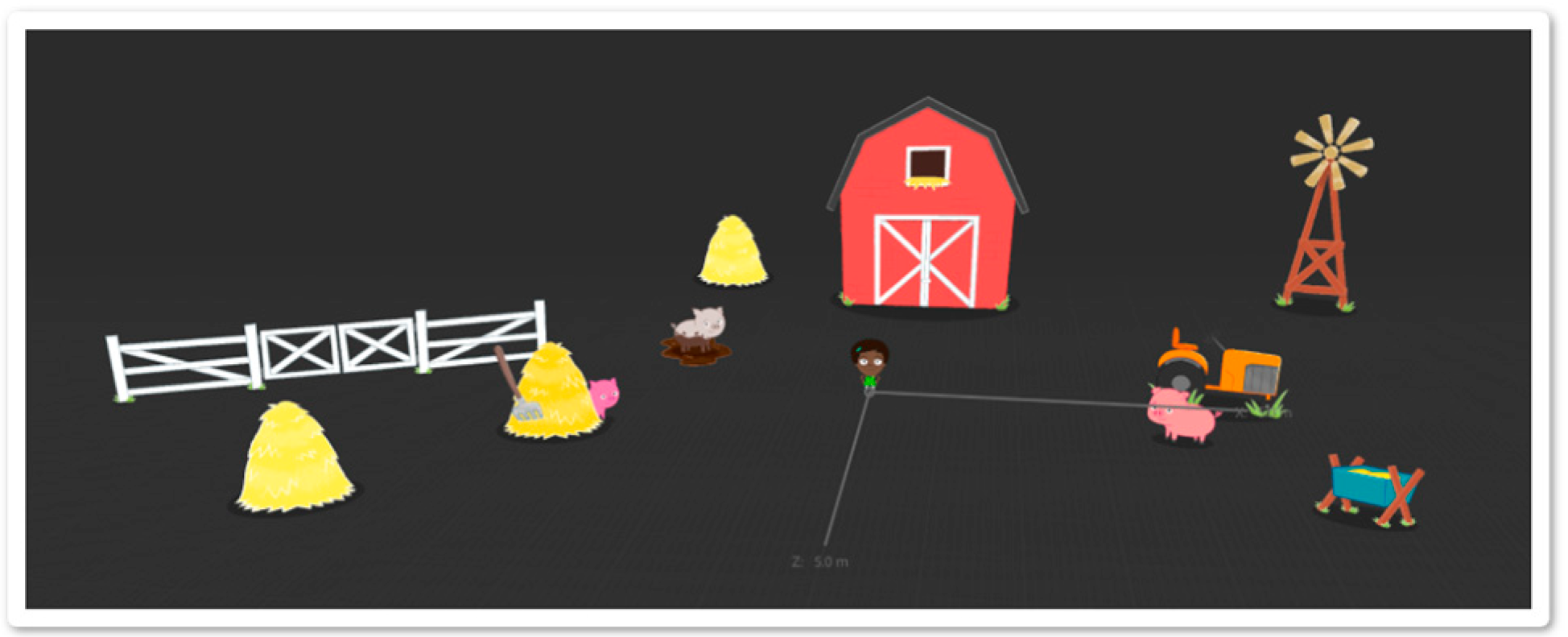

In the AR experiences in our app, the AR objects are situated roughly in a circle around the child and the character Gracie. Figure 14 shows that general arrangement and how we situated the camera view of the child being at a slight distance from Gracie so that Gracie appears between the child and other AR objects in the scene.
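A circular arrangement like this one can be generated by spacing objects evenly around the child's starting position. The sketch below is illustrative only: the function name, the radius, and the height offset are hypothetical values, and it assumes a y-up coordinate system in which -z is straight ahead of the child.

```python
import math


def ring_positions(count, radius, height=0.0, start_angle=0.0):
    """Evenly space `count` objects on a circle around the child at the origin.

    Returns (x, y, z) tuples. Angle 0 is straight ahead (-z, assumed) and angles
    increase toward the child's right. A negative `height` places objects below
    the camera.
    """
    positions = []
    for i in range(count):
        angle = start_angle + 2 * math.pi * i / count
        x = radius * math.sin(angle)
        z = -radius * math.cos(angle)
        positions.append((x, height, z))
    return positions


# Hypothetical layout: four rides 1.5 m away, anchored 0.3 m below the camera.
rides = ring_positions(4, radius=1.5, height=-0.3)
```

A guide character such as Gracie could be placed with the same function at a smaller radius so that she appears between the child and the other objects.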

In our work, we observed that the sizes and layout of AR objects impacted children’s ability to interact with the objects and understand the spatial relationships among them. The sizes and layout also greatly affected how children perceived the AR scene and how enjoyable and meaningful that scene could be. Our team explored diorama-like views (where a child could be an observer with a miniature AR scene down in front of and around them) and more true-to-life-size views (where AR objects would be larger, even more life-size). The diorama-like views were challenging because they included less embodiment of spatial relationships and fewer reasons to use AR (looking in on a world was more easily performed without AR). With the true-to-life-size views, the scale of the objects quickly became awkward. When objects were too big, children could not see enough of the object to know what it was. Also, the objects looked unappealing in the real world because, in many classrooms and homes, it was hard to get objects at a far-enough real distance, and instead they would appear to be stickered onto surfaces that were closer to the children.

We went with a blend between diorama-like and true-to-life-size views, but closer to diorama. Using tall objects gave the AR scene a sense of verticality and encouraged children to move the device to explore the AR space. Although we used tall objects, it was also important to provide children with the ability to see most of the AR objects on the screen at once. Extending beyond the camera frame is okay for large objects, but they should be viewable if children take a step back to view them. As we were designing for children to be seated and not up and moving around, this helped to govern the size of the AR objects that we included.

Figure 15 shows the carousel from

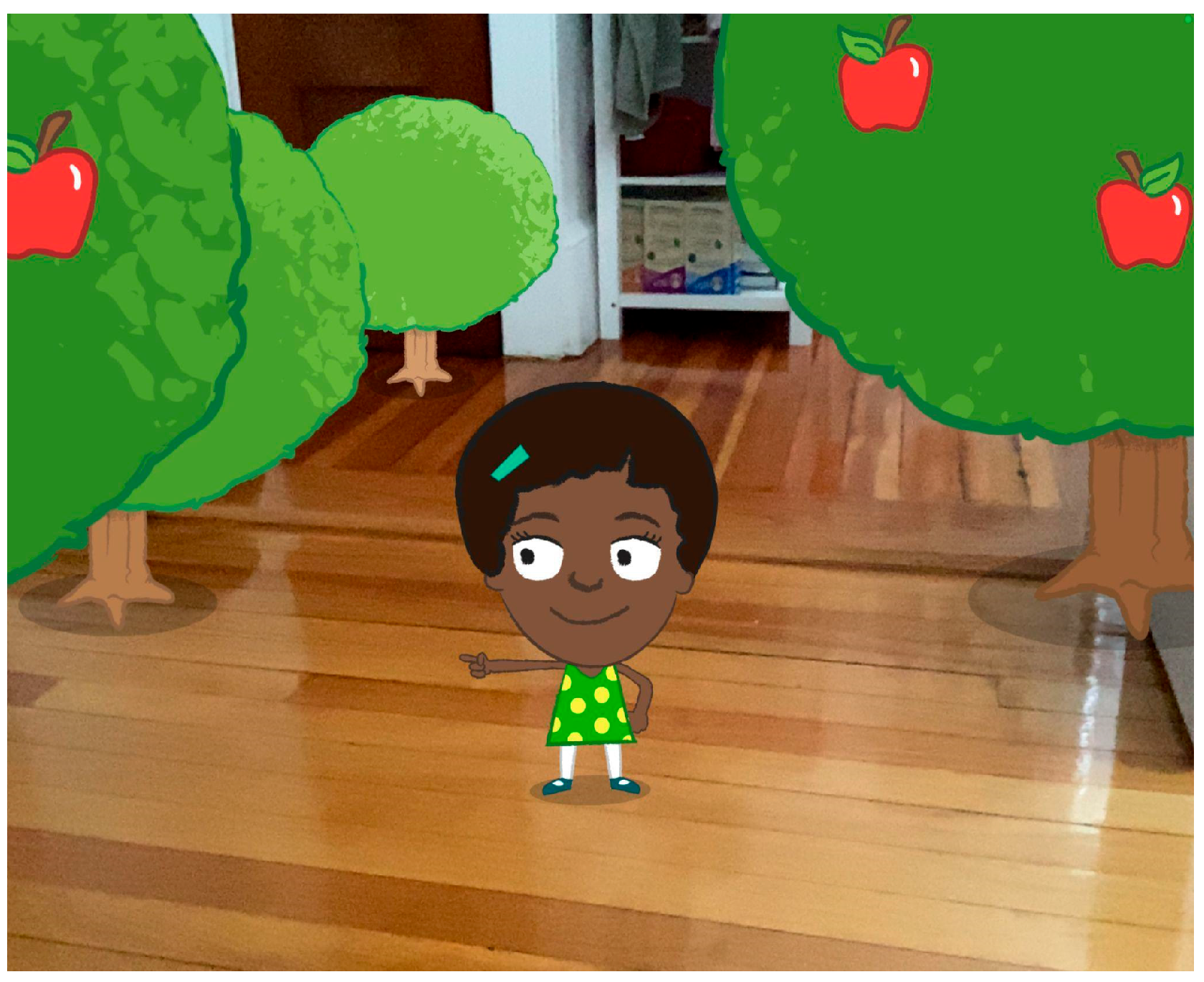

Map the Fair appearing to fill the majority of the iPad screen view but being smaller than a real-world child’s chair. It also shows how the backgrounded trees allow the carousel to pop against the real world, giving the carousel a feeling of depth, but not attempting to create an immersive feel.

In an early version of the app, our team explored making AR feel more immersive by surrounding children with apple trees so they would feel as if they were in an orchard. However, this translated poorly in AR, as the apple trees felt stickered in an odd circle (Figure 16a,b) around the child. AR is not an immersive technology like virtual reality, and we found that the best AR experiences, especially for young children’s learning, were not about immersing children in a world, but about enabling them to interact with a few objects in their real space. Any scene design can then be used to support a feeling of depth for those primary AR objects.

The team also explored the number of primary objects to include in a scene so there were enough objects from which to understand spatial relations, but not too many objects so that the scene became difficult to navigate and felt overwhelming when juxtaposed onto the real world (which may already have multiple objects or clutter in it). An overwhelming and busy scene will not allow children to focus their attention on learning tasks. At the same time, when the scene had very few AR objects, it was unclear that children could find additional AR objects by moving their body and looking around in the real space. We found that arranging a few AR objects in the camera view at the same time while also providing some space between objects can help children with understanding what the objects are, what their relationships are, and how to interact with them. We chose to have no more than three primary AR objects in the camera view at one time (in addition to the character Gracie) so that the AR objects always had a spatial relationship to another that could be observed on screen.
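The three-object guideline can be checked against a candidate layout by counting how many primary objects fall inside the camera's horizontal field of view. This is a minimal sketch under stated assumptions: the 60 degree field of view is a placeholder rather than a measured iPad value, the function names are hypothetical, and positions use a y-up system in which -z is straight ahead.

```python
import math


def bearing(pos):
    """Horizontal angle of an (x, y, z) position; 0 is straight ahead (-z, assumed)."""
    return math.atan2(pos[0], -pos[2])


def visible_objects(objects, heading, fov_deg=60.0):
    """Names of objects whose bearing lies within the horizontal field of view.

    `objects` maps name -> (x, y, z); `heading` is the camera bearing in radians.
    """
    half_fov = math.radians(fov_deg) / 2
    visible = []
    for name, pos in objects.items():
        # Wrap the angular difference into [-pi, pi) before comparing.
        diff = (bearing(pos) - heading + math.pi) % (2 * math.pi) - math.pi
        if abs(diff) <= half_fov:
            visible.append(name)
    return visible


def within_object_budget(objects, heading, max_primary=3, fov_deg=60.0):
    """True if no more than `max_primary` primary objects are in view at once."""
    return len(visible_objects(objects, heading, fov_deg)) <= max_primary
```

Sweeping `heading` through a full turn during level design would flag any viewing direction where the scene becomes too crowded or, conversely, shows no objects at all.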

Designs for young children typically use a bright and vibrant color palette. This is the case for both digital experiences and classroom decorations. Our team found that bright, monochromatic AR objects could be hard to see against brightly colored classroom decorations and walls. For example, in the alpha version of our app, children needed to find bright yellow feathers in a task. During testing, several of the children were using the app near a yellow wall, and when they turned to find the AR feathers, the feathers did not stand out against that similarly hued wall (Figure 17). More generally, it was a challenge to make many of our brightly colored assets stand out against the brightly colored environments in which the game was being played.
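The yellow-feather problem can be expressed as a simple check: an asset risks blending in when its hue and lightness are both close to those of the background. The sketch below is a crude heuristic written for illustration only. The function names, the tolerance values, and the sample RGB colors are assumptions, and the hue comparison is not meaningful for gray or near-gray colors.

```python
import colorsys


def hue_and_lightness(rgb):
    """Convert an (R, G, B) tuple with 0-255 channels to (hue in degrees, lightness 0-1)."""
    r, g, b = (channel / 255.0 for channel in rgb)
    hue, lightness, _saturation = colorsys.rgb_to_hls(r, g, b)
    return hue * 360.0, lightness


def may_blend(asset_rgb, background_rgb, hue_tol_deg=30.0, lightness_tol=0.25):
    """Return True when the asset is close to the background in both hue and lightness.

    The tolerances are hypothetical and would need tuning with real children
    in real classrooms.
    """
    asset_hue, asset_light = hue_and_lightness(asset_rgb)
    bg_hue, bg_light = hue_and_lightness(background_rgb)
    hue_gap = abs(asset_hue - bg_hue) % 360.0
    hue_gap = min(hue_gap, 360.0 - hue_gap)  # hue is circular
    return hue_gap < hue_tol_deg and abs(asset_light - bg_light) < lightness_tol


# Illustrative colors only; not sampled from the app or the classrooms.
yellow_feather = (255, 221, 0)
yellow_wall = (250, 230, 90)
blue_wall = (70, 130, 220)
print(may_blend(yellow_feather, yellow_wall))
print(may_blend(yellow_feather, blue_wall))
```

Because the real background cannot be known in advance, a check of this kind is most useful for testing assets against a range of plausible classroom colors.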

We found that children benefited from having a visual signal indicating the AR visual style. In the AR Adventures app, we added a bright pink border around the screen in the same style as our brand visual assets. This border is the same as the robot character Tulip’s head to convey that children playing the game are looking through Tulip’s eyes; we call this “Tulip vision.” Tulip vision helped other AR images to stand out from the real-world environments by connecting the colors and styles between the border and those other AR assets. Tulip vision also helped convey that the camera view was still part of the game (as opposed to just being a camera view). As a before-and-after example, Figure 18a shows an early version of a Map the Fair AR scene with smaller objects and without the pink Tulip vision border, while Figure 18b shows the scene in the final version of the app, with larger objects and added Tulip vision.

When the colors and visual design are engaging for preschool children and when they can be discerned from the background, the AR objects can do what they are intended to do—enhance and augment an experience in the child’s own real environment.

4.4. Design Consideration 4: Flexible Visual Design

For preschoolers who need both hands to hold a tablet for an AR experience, use the device’s ability to focus the camera for interactivity and consider using a reticle with a design that leverages black and white, color, transparency, size, and animation or movement to signify active and inactive states.

In general, children can interact with AR objects by pointing and focusing the tablet’s camera at the object, by moving the tablet closer to the object, or by touching/tapping on the screen. For young children who need both hands to hold up an iPad, touching the screen with a finger while still maintaining the view through the device’s camera is not a viable solution. We explored moving the iPad for interactivity, but this led to errors caused by the children’s environment. For example, in one version of the app, we hid a digital pig behind a digital haystack in AR. Children could move the iPad closer to the haystack and the pig would pop out. When this worked, children were delighted, would giggle, and wanted to do it more. However, sometimes the AR scene would be set up with surfaces (e.g., walls or windows) close to the children. The AR haystack would appear to be on the surface, but the app had anchored the haystack farther away than the surface. The child could never move through that surface and get close enough to the haystack, so it was impossible to trigger the pig popping out.
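The haystack failure comes down to simple distance arithmetic, sketched below under simplifying assumptions: the child moves in a straight line toward the anchored object, distances are in meters, and a real surface blocks movement at a known distance. All names and numbers are hypothetical and are not taken from the app.

```python
from typing import Optional


def closest_approach_m(anchor_distance_m: float,
                       surface_distance_m: Optional[float]) -> float:
    """Smallest distance the device can reach from the AR anchor.

    If no real surface lies between the child and the anchor, the child can
    walk all the way up to it (distance 0).
    """
    if surface_distance_m is None or surface_distance_m >= anchor_distance_m:
        return 0.0
    return anchor_distance_m - surface_distance_m


def trigger_reachable(anchor_distance_m: float,
                      trigger_radius_m: float,
                      surface_distance_m: Optional[float] = None) -> bool:
    """True if the child can get within the trigger radius of the anchor."""
    return closest_approach_m(anchor_distance_m, surface_distance_m) <= trigger_radius_m


# Hypothetical case: haystack anchored 3.0 m away, trigger at 0.5 m,
# but a wall stops the child 1.5 m from where they started.
print(trigger_reachable(3.0, 0.5, surface_distance_m=1.5))
# Same haystack in an open room with no blocking surface.
print(trigger_reachable(3.0, 0.5))
```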

To solve these issues, we did not include interactivity that required the movement of the iPad toward an AR object. Instead, we concentrated our efforts on pointing the camera as the ideal mode for interactivity. We determined that, for the preschool audience, interactivity with AR assets was accessible, intuitive, and fun when a reticle was used to show where the device’s camera was focusing. As mentioned in the “Overview of the AR Game” section above, our reticle is called the “sparkly circle” and is a translucent circle that can be moved so that it hovers over a digital object to “collect” it. However, the reticle design underwent iterations to provide enough contrast with both the real-world environment and the AR assets. We ended up using a circular reticle composed of lines and stars that went from an inactive state of being white, translucent, and relatively small, to an active state with a larger size, full transparency, a black border, and animation of a variety of colors rotating around it. The intention was that the movement from the animation and the use of black/white and other colors would help the reticle contrast against any other AR object and any real-world environment (Figure 19a,b). The team also found that size can imply interactivity by making the reticle a size that would nicely fit around interactive AR objects, such as an AR apple in an apple tree that can be picked by using the reticle.
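The two reticle states described above can be sketched as a small lookup driven by whether an interactive object sits under the reticle. This is an illustrative sketch, not the app's code. It assumes the reticle stays at the center of the screen, that object positions are given in normalized screen coordinates, and that the scale values and hover radius are placeholders. Opacity is left out because the exact values are not specified.

```python
import math
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class ReticleStyle:
    scale: float                 # relative size; the numbers are placeholders
    border_color: Optional[str]  # None means no border
    animated: bool               # rotating colors in the active state


# Inactive: white, translucent, relatively small. Active: larger, black border, animated.
INACTIVE = ReticleStyle(scale=1.0, border_color=None, animated=False)
ACTIVE = ReticleStyle(scale=1.5, border_color="black", animated=True)


def reticle_style(object_screen_positions, hover_radius: float = 0.1) -> ReticleStyle:
    """Pick the reticle style from where interactive objects appear on screen.

    Positions are (x, y) pairs normalized so that (0.5, 0.5) is the screen
    center, where the reticle is assumed to sit.
    """
    for x, y in object_screen_positions:
        if math.hypot(x - 0.5, y - 0.5) <= hover_radius:
            return ACTIVE
    return INACTIVE


print(reticle_style([(0.52, 0.47)]))  # an apple almost centered in the view
print(reticle_style([(0.9, 0.5)]))    # an apple near the edge of the view
```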

Leveraging an intuitive mode of interactivity together with AR technology can be a powerful and engaging learning tool. Interactivity empowers the learner and allows them to embody spatial relationships, thus helping to solidify their understanding, and designers should seek to make interactive rather than passive AR experiences for children.

4.5. Design Consideration 5: Scaffolding for Task Engagement

Our scaffolding combined visual supports with verbal instructions, and some screens included limited on-screen text. Although words were used sparingly, they were always paired with spoken directions or other visual cues to support understanding. This layered approach enables children to play more independently while recognizing that most preschoolers cannot read and will not be fully unsupervised. Additionally, the on-screen text assists adults supervising gameplay by reinforcing key directions and vocabulary related to the tasks.

AR designers should build visual scaffolds into the game that provide hints to children about how they should move, what they should look for during the task, and what they should do after completing a task. They should also limit the range of AR objects around the child and incorporate features to direct attention.

In non-AR experiences, designers have complete control over whatever is on the screen and, thus, what the user sees. In AR experiences, the designer only has control over what objects are placed onto the user’s real space, but the user can move anywhere and view any of those AR objects (or none of them) as they please. Several challenges come from this, such as how to encourage proper gameplay, how to keep attention on the task of the game, and how to scaffold the learning. In general, the challenge is how to guide the user’s experience to be what the designer envisions, despite the designer’s lack of control over the visuals. Our team explored a variety of approaches to find solutions.

We observed that the placement of AR objects could be used to convey movement and exploration, but too much movement by children took them out of the AR scene and became distracting, as they were not sure what to look at or do. We found that limiting the AR view to 180 degrees, specifically 90 degrees to the child’s right and 90 degrees to the child’s left, allowed children to stay focused on the task and play from a seated position. It also encouraged manageable movement and exploration within the AR space (Figure 20).
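One way to picture the 180-degree limit is as a constraint on object bearings measured from the child's starting direction. The sketch below is a minimal illustration under that assumption. The even spacing is only one possible placement strategy and is not necessarily the one used in the app; the function names are hypothetical.

```python
def within_play_arc(bearing_deg: float, half_arc_deg: float = 90.0) -> bool:
    """True if a bearing lies within 90 degrees to the left or right of center."""
    return -half_arc_deg <= bearing_deg <= half_arc_deg


def evenly_spaced_bearings(count: int, half_arc_deg: float = 90.0):
    """Spread `count` objects evenly across the arc (negative = child's left)."""
    if count <= 0:
        return []
    if count == 1:
        return [0.0]  # a single object goes straight ahead
    step = 2.0 * half_arc_deg / (count - 1)
    return [-half_arc_deg + i * step for i in range(count)]


bearings = evenly_spaced_bearings(5)
print(bearings)
print(all(within_play_arc(b) for b in bearings))
print(within_play_arc(135.0))  # behind the child's shoulder, outside the arc
```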

By focusing children’s attention and placing limits on the AR world, confusion is reduced, and children are better able to enter the AR experience with intentionality and understand that the experience has tasks and a goal. This supports children in understanding that AR experiences can also be for purposeful learning.

We found that children needed to be centered and have some kind of home base in the AR scene. The central base in our app is Gracie. The AR scene is anchored around Gracie, and Gracie is the first thing that children see when the AR scene appears. Gracie gives instructions for each task, children complete the task, and then children navigate back to Gracie to know what to do next. We observed that when nothing was interactive or animated until children went back to Gracie, the flow and pace of the game was slow, consistent, intentional, and supportive of cognitive processing. Children were able to focus on the one task they were doing (e.g., picking apples, getting a ticket, feeding the animals, giving the ride a ticket, or going back to Gracie) without distraction.
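The home-base flow described above (instructions from Gracie, one task, then a return to Gracie) can be modeled as a three-phase loop. The sketch below is only an illustration of that flow. The phase and event names are invented for the example and do not come from the app.

```python
from enum import Enum


class Phase(Enum):
    INSTRUCTIONS = "instructions"  # child is looking at Gracie and hearing the task
    TASK = "task"                  # child explores the scene to complete one task
    RETURN = "return"              # nothing else is interactive until Gracie is in view


# Hypothetical event names; each phase advances on exactly one event.
TRANSITIONS = {
    (Phase.INSTRUCTIONS, "instructions_finished"): Phase.TASK,
    (Phase.TASK, "task_completed"): Phase.RETURN,
    (Phase.RETURN, "gracie_in_view"): Phase.INSTRUCTIONS,
}


def next_phase(phase: Phase, event: str) -> Phase:
    """Advance the flow; events that do not apply to the current phase are ignored."""
    return TRANSITIONS.get((phase, event), phase)


phase = Phase.INSTRUCTIONS
for event in ["instructions_finished", "task_completed", "gracie_in_view"]:
    phase = next_phase(phase, event)
    print(event, "->", phase.name)
```

Ignoring events that do not match the current phase mirrors the observation that keeping everything else inactive until children return to Gracie kept the pace slow and intentional.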

We explored several approaches for how Gracie could direct children’s tasks. Early builds focused on visual approaches, and audio approaches were added in later builds. To help children navigate and know where to turn and how to move the iPad to complete the task, we used Gracie’s eye movements and pointing gestures (Figure 21), a strategy that was highly effective. Children were engaged with Gracie and able to follow her eye and gestural cues. We also explored the use of arrows (Figure 22), especially in places where Gracie was not seen. Arrows were also effective; however, we excluded them from our final build, as they seemed superfluous given Gracie’s gestures and the other supports provided.

Once a task is completed, children return to view Gracie through the device’s camera. In early builds, we explored having speech bubbles to convey where Gracie was located (Figure 23). In later builds, we just used audio of Gracie saying, “Come back to me.” Children navigate back to Gracie and receive audio instructions while also seeing Gracie’s mouth moving, which supports their listening. This also centers children in the same location before they navigate the AR scene for a new task.

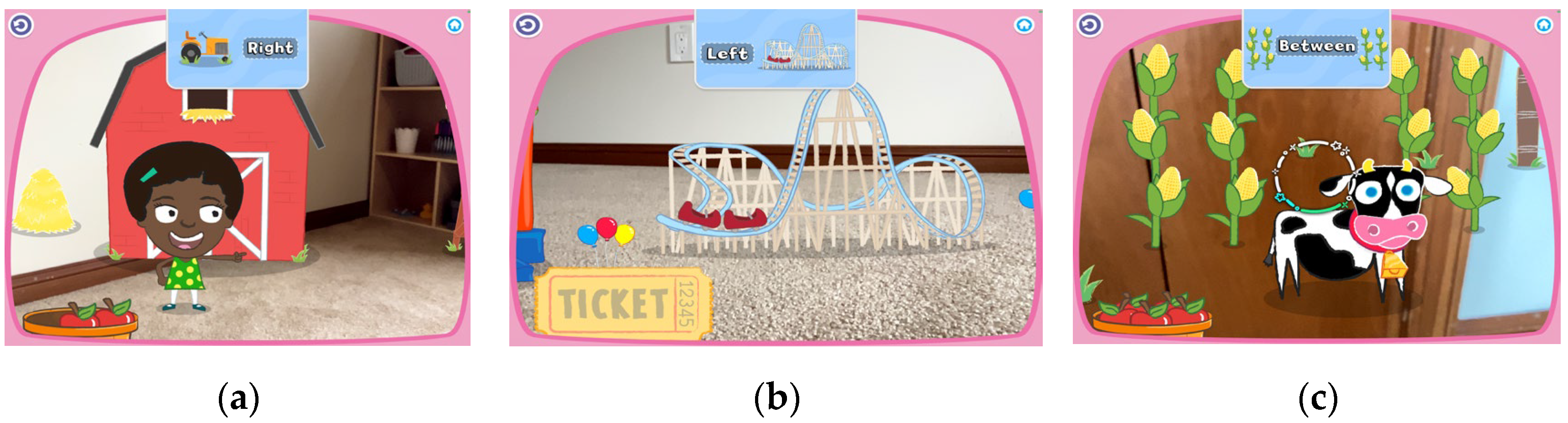

To provide instructions for specific spatial learning tasks, in our final build, we added tags in the top center of the screen that provide a visual representation of the object that the child needs to find. The object that they are tasked with finding is portrayed by a box with a landmark placed in the same spatial relationship as it appears in AR. Figure 24a shows that the child must find the animal to the right of the tractor, Figure 24b shows that the child must find the ride to the left of the roller coaster, and Figure 24c shows that the child has found the animal between the corn stalks. Gracie gives all of these as audio instructions as well.

Because children can look anywhere at any time and we have no control over the movement of their device, providing helpful audio scaffolding can be extremely challenging. By the time the audio is triggered and heard by children, they could have moved to a drastically different location, making the audio scaffolding obsolete. Therefore, there is minimal audio scaffolding in our AR experiences for incorrect movements. Instead, audio encourages children to continue to seek out the object they have been tasked with finding.

With these modes of supportive scaffolds for early learners, children were able to navigate the AR world to accomplish their learning tasks. They were able to understand the requested spatial concepts from the abstract visual representations and translate that information into the augmented real-world environment to complete the tasks of giving apples to the correct animals and providing their ticket to the appropriate rides.

5. Lessons Learned

5.1. Affordances and Potential Benefits

Our findings suggest that AR holds promise for supporting young children’s spatial orientation learning and for adding benefits beyond those of non-technology-based learning activities. In our work, we have identified five advantages and unique affordances of AR for learning, specifically in a spatial learning context. AR (1) allows interactivity with children’s real-world environment; (2) verifies children’s real-world movements (left, right, up, and down) and supports embodied learning; (3) scaffolds activities that take place in children’s own real space through digital verbal and visual supports; (4) releases much control to children; and (5) has the potential to engage children in a unique way by embedding digital elements, such as characters, objects, and other designed assets, into their real-world environment.

By leveraging these affordances, we have found that AR provides an effective approach to spatial learning tasks in a real-world environment, enhancing spatial skills. Our AR experiences place children at the center, with digital objects maintaining spatial relationships that allow children to explore these spatial concepts. AR gives children an active role, as they embody the spatial relationships between objects or landmarks by moving their own body. Our studies suggest that children were engaged in these experiences and that they learned from them, providing evidence of promise for AR technology in preschool education.

While navigating the challenges of designing AR experiences for young children’s spatial learning, our team identified six major differences in designing AR learning experiences versus non-AR experiences:

- (1) AR experiences require child setup. This setup can cause frustration, heighten cognitive load, or require adult facilitation, any of which can decrease engagement and trigger cognitive fatigue.

- (2) AR experiences for young children’s learning need new forms of scaffolding and support. This is a benefit in that it opens up opportunities for new learning experiences, yet it is one of the largest differences between designing AR and designing non-AR gameplay.

- (3) AR experiences depend greatly on children’s physicality. This can be tricky when designing for children, who are generally at different heights than the adult designers and who often change positions and move frequently.

- (4) AR experiences depend greatly on children’s environment. The visibility of digital objects depends on the colors in the background. This is not controllable for the designers.

- (5) AR experiences release control to children. This poses many challenges for design teams as they attempt to create a complete experience.

- (6) Conceptual AR experiences can translate differently when built. This requires more iterations to obtain the intended AR experience.

When designers keep these differences in mind and consider the design challenges of creating AR learning experiences for preschoolers and preschool environments, such as children’s physical capabilities, emerging-but-not-yet-mastered skills, expectations, and intuition, they can further leverage the technology for learning in other contexts for this age group.

5.2. Potential Limitations

Existing AR technology has several limitations, and there are few models for how best to support young children’s learning with it. First, setting up the AR environment can be finicky, tax children’s motor and attentional skills, and require visual tutorials and demonstrations, as preschoolers often cannot read text that might be instructive for older children. Second, the AR experience depends greatly on children’s own environment, an element that cannot be controlled in the design process. This includes the lighting, color, and appearance of the background space. Third, current AR technology does not allow objects to interact directly with one another or the real environment; for instance, they cannot hide behind real-world objects. Fourth, children engage in play in a variety of ways (while standing up, sitting down, or playing in front of a table), which can complicate their interactions with AR. Fifth, young children get fatigued when holding tablets for long periods of time, warranting shorter AR experiences. Sixth, we acknowledge the limitations of pilot tests and observations as a methodology. Given the small sample sizes and the limited control that observational designs allow, our findings are limited in generalizability. These constraints highlight the need for further research with larger, more diverse populations and extended testing in naturalistic contexts to better understand the effectiveness and usability of AR learning experiences for preschoolers. Finally, there is still much to learn about how to time scaffolding so that feedback is prompt and appropriate for children’s actions.

5.3. Teacher Preparation for Using AR in Preschool Classrooms

Designing AR experiences for classroom use requires careful consideration to ensure that they are feasible and align with existing educational structures; otherwise, teachers may be reluctant to use them. For instance, teachers need to understand the learning objectives for each game. Before introducing AR to students, teachers should play the AR games themselves to identify ways to support children as they play, both with the game and through other classroom experiences and hands-on activities. Teachers need to determine the ideal space in which children can safely play (e.g., on a rug or at a learning-center table). They should set clear expectations for patience during AR setup, as the appearance of AR characters and objects may take time. Teachers can also encourage children to slowly scan their environment and support their perseverance in doing so. However, teachers and caregivers should limit play to no more than 10–15 min per session, balancing the use of the AR technology with other modes of learning. Technology, including AR technology, is just a tool to foster learning.