The Critical Role of Science Teachers’ Readiness in Harnessing Digital Technology Benefits

Abstract

1. Introduction

- Which digital tools and materials do secondary school science teachers in Estonia use?

- What is the current level of digital competence among Estonian science teachers?

- What are the main benefits and challenges perceived by teachers regarding digital tools?

- How does digital tool usage differ between distance and face-to-face science teaching?

2. Theoretical Background

2.1. The Importance of Digital Literacy and Digital Competences

2.2. Benefits and Challenges of Digital Tools Usage in Science Teaching and Learning

2.3. Remaining Challenges

2.4. Key Areas of Research on Digital Technology

3. Materials and Methods

3.1. Sample

3.2. Instrument

3.3. Data Collection

3.4. Anonymization for Ethical Compliance

3.5. Data Analysis

4. Results and Analysis

4.1. Differences in the Use of Digital Tools in Distance and Contact Learning

4.2. Teaching Methods in Science Education

4.3. Digital Tools Available at Schools—Availability and Use of Digital Tools in Schools

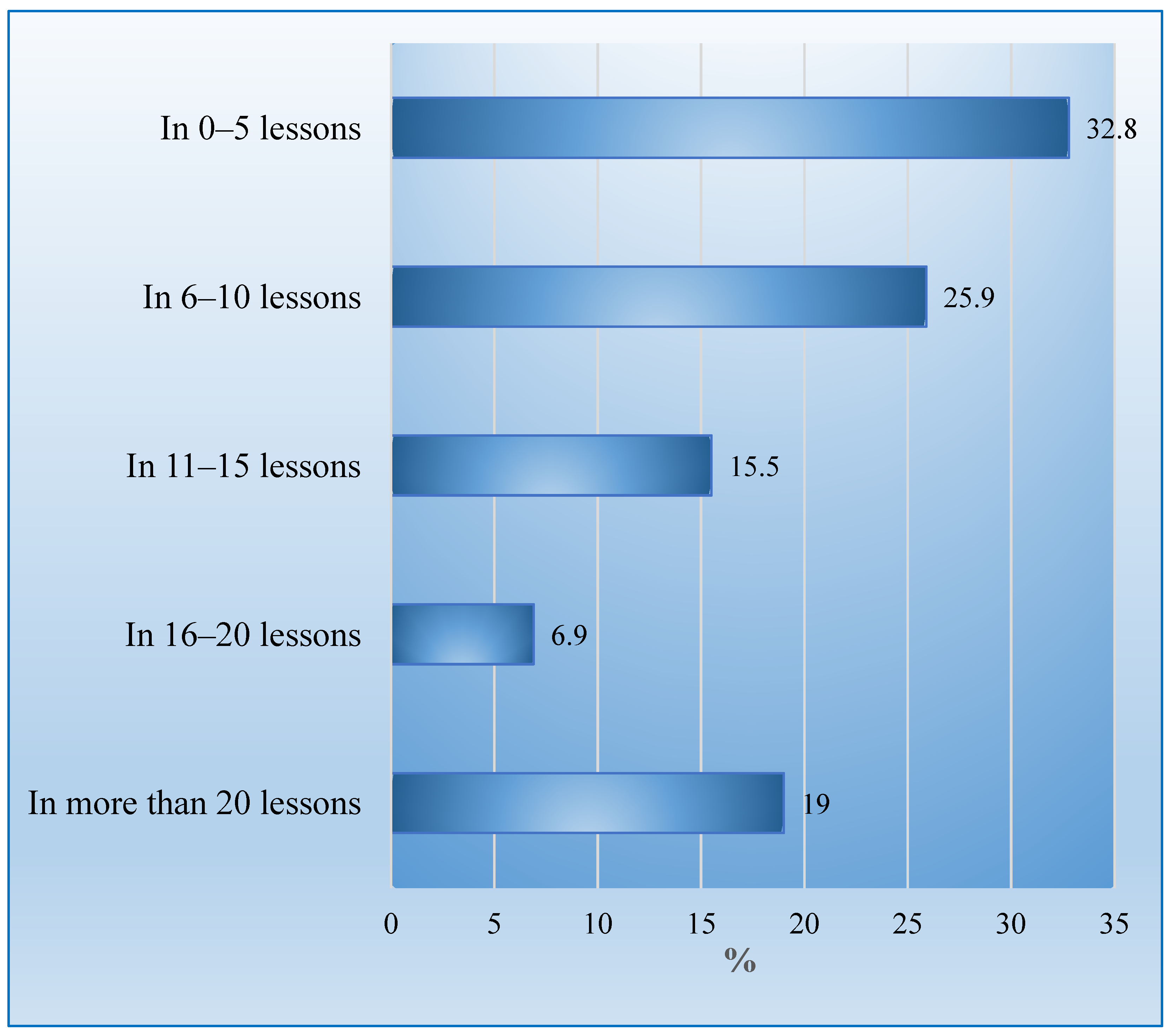

4.4. Frequency of Using Digital Learning Tools

4.5. Use of Digital Tools in Different Science Subjects

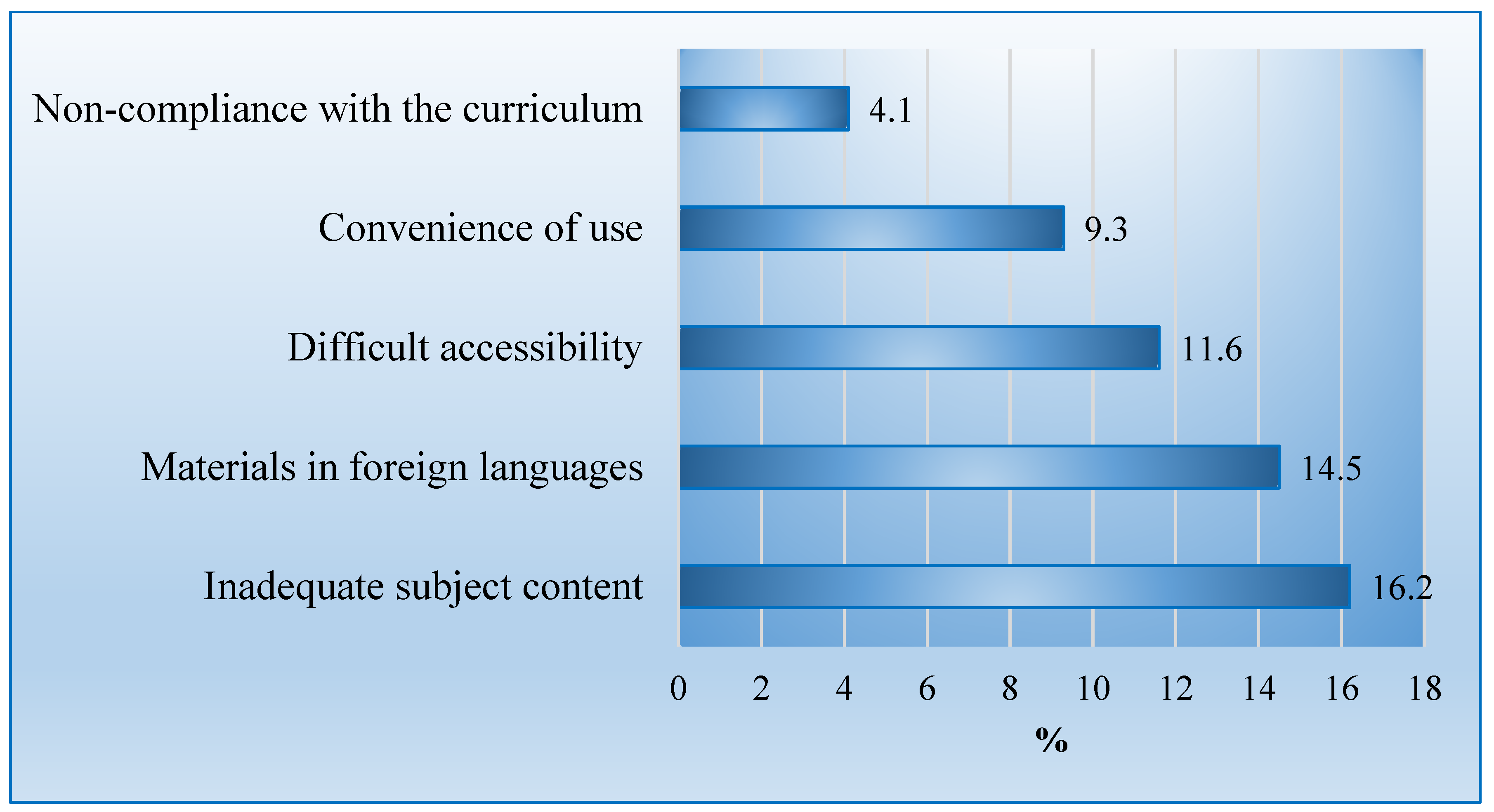

4.6. Challenges and Barriers to the Usage of Digital Tools in Science Education

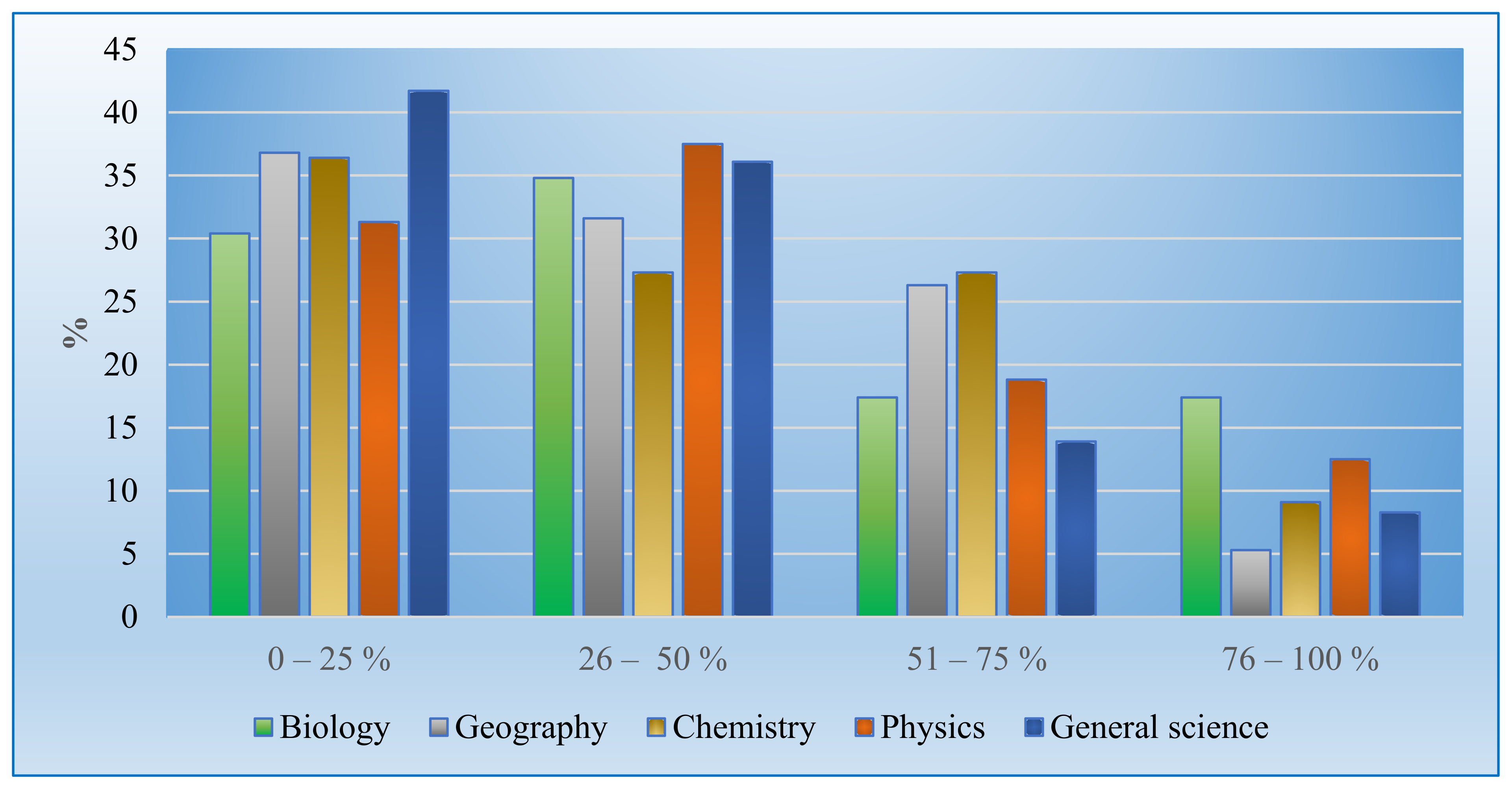

4.7. Prevalence of Digital Tool Utilization Across Various Science Subjects

4.8. Use of Digital Tools Across Different Age Groups

4.9. Discrepancies in Teachers’ Responses

5. Discussion

6. Conclusions

7. Limitations

8. Recommendations and Implications

Implications for Science Teachers

- Increased access to AI-powered tools will enhance teaching in science subjects through data-driven experimentation and personalized learning experiences (The Guardian, 2025; Choudhury et al., 2024).

- Strengthened broadband infrastructure ensures equitable access to digital tools, reducing disparities for rural schools (European Commission, 2025).

- Targeted teacher training programs, especially under AI Leap, will support educators in integrating advanced digital pedagogy, including AI literacy and ethical use.

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. The Results of Qualitative Content Analysis Based on QCAmap

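| Main Category | Subcategory | Code | No. of Respondents |
|---|---|---|---|
| Digital Tools | Computer | Desktop computer | 38 |
| Digital Tools | Computer | Laptop | 22 |
| Digital Tools | Computer | Tablet | 28 |
| Digital Tools | Other devices | Educational robot | 7 |
| Digital Tools | Other devices | Virtual glasses | 3 |
| Digital Tools | Other devices | Smartphones | 9 |
| Digital Tools | Other devices | Document camera | 4 |
| Digital Tools | Other devices | Data projector | 7 |
| Digital Tools | Other devices | Vernier dataloggers | 3 |
| Digital Tools | Other devices | Interactive whiteboard (Smart Board) | 17 |
| Frequency of Usage | Usage per month | 0–5 times | 19 |
| Frequency of Usage | Usage per month | 6–10 times | 4 |
| Frequency of Usage | Usage per month | 11–15 times | 9 |
| Frequency of Usage | Usage per month | 16–20 times | 4 |
| Frequency of Usage | Usage per month | More than 20 times | 11 |
| Frequency of Usage | Daily use in teaching | In one lesson | 19 |
| Frequency of Usage | Daily use in teaching | In 2–3 lessons | 25 |
| Frequency of Usage | Daily use in teaching | In 4–6 lessons | 14 |
| Methods in Science Lessons | Practical work data | Outdoor learning, field trips | 20 |
| Methods in Science Lessons | Practical work data | Experiments, hands-on learning | 19 |
| Methods in Science Lessons | Group work | Collaborative tasks, brainstorming | 12 |
| Methods in Science Lessons | ICT integration | VR glasses, computer labs, and interactive visualizations | 11 |
| Methods in Science Lessons | ICT integration | Self-directed online learning | 8 |
| Methods in Science Lessons | Other methods | Observation | 3 |
| Methods in Science Lessons | Other methods | Worksheets | 2 |
| Methods in Science Lessons | Other methods | Frontal discussions | 1 |
| Barriers | Hindering obstacles | Availability | 20 |
| Barriers | Hindering obstacles | Ease of use | 16 |
| Barriers | Problems connected to curriculum | Lack of subject-specific content | 28 |
| Barriers | Problems connected to curriculum | Foreign language materials | 25 |
| Barriers | Problems connected to curriculum | Non-compliance with curriculum | 7 |
| Differences in Distance and Contact Learning | No difference | No differences | 19 |
| Differences in Distance and Contact Learning | Differences | More digital tools used during contact learning | 12 |
| Differences in Distance and Contact Learning | Differences | Fewer digital tools used during contact learning | 27 |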

References

- Aydin, M. K., Yildirim, T., & Kus, M. (2024). Teachers’ digital competences: A scale construction and validation study. Frontiers in Psychology, 15, 1356573.

- Besser, A., Flett, G. L., & Zeigler-Hill, V. (2022). Adaptability to a sudden transition to online learning during the COVID-19 pandemic: Understanding the challenges for students. Scholarship of Teaching and Learning in Psychology, 8(2), 85–105.

- Biggins, D., & Holley, D. (2022). Student wellbeing and technostress: Critical learning design factors. Journal of Learning Development in Higher Education, 25, 1–12.

- Bilyalova, A. A., Salimova, D. A., & Zelenina, T. I. (2020). Digital transformation in education. In T. Antipova (Ed.), Integrated science in digital age (pp. 265–276). Springer International Publishing.

- Branig, M., Engel, C., Schmalfuß-Schwarz, J., Müller, E. F., & Weber, G. (2022). Where are we with inclusive digital further education? Accessibility through digitalization. Mobility for Smart Cities and Regional Development—Challenges for Higher Education, 21–33.

- Braun, V., & Clarke, V. (2019). Reflecting on reflexive thematic analysis. Qualitative Research in Sport, Exercise and Health, 11, 589–597.

- Castaño Muñoz, J., Vuorikari, R., Costa, P., Hippe, R., & Kampylis, P. (2023). Teacher collaboration and students’ digital competence—Evidence from the SELFIE tool. European Journal of Teacher Education, 46(3), 476–497.

- Chen, X., Breslow, L., & DeBoer, J. (2018). Analyzing productive learning behaviors for students using immediate corrective feedback in a blended learning environment. Computers & Education, 117, 59–74.

- Choudhury, A., Sarma, K. K., Misra, D. D., Guha, K., & Iannacci, J. (2024). Edge computing for smart-city human habitat: A pandemic-resilient, AI-powered framework. Journal of Sensor and Actuator Networks, 13(6), 76.

- Chounta, I.-A., Bardone, E., Raudsep, A., & Pedaste, M. (2022). Exploring teachers’ perceptions of artificial intelligence as a tool to support their practice in Estonian K-12 education. International Journal of Artificial Intelligence in Education, 32(3), 725–755.

- Claro, M., Castro-Grau, C., Ochoa, J. M., Hinostroza, J. E., & Cabello, P. (2024). Systematic review of quantitative research on digital competences of in-service school teachers. Computers & Education, 215, 105030.

- ClassPoint. (2024, January 19). Top 6 digital formative assessment tools of 2023 compared|ClassPoint. Available online: https://www.classpoint.io/blog/digital-formative-assessment-tools (accessed on 24 February 2025).

- Cohen, L., Manion, L., & Morrison, K. (2007). Research methods in education (6th ed.). Routledge Falmer.

- Cone, L., Brøgger, K., Berghmans, M., Decuypere, M., Förschler, A., Grimaldi, E., Hartong, S., Hillman, T., Ideland, M., Landri, P., & van de Oudeweetering, K. (2022). Pandemic Acceleration: COVID-19 and the emergency digitalization of European education. European Educational Research Journal, 21(5), 845–868.

- Coulston, F., Lynch, F., & Vears, D. F. (2025). Collaborative coding in inductive content analysis: Why, when, and how to do it. Journal of Genetic Counseling, 34(3), e70030.

- Dašić, D., Ilievska Kostadinović, M., Vlajković, M., & Pavlović, M. (2024). Digital literacy in the service of science and scientific knowledge. International Journal of Cognitive Research in Science, Engineering and Education (IJCRSEE), 12(1), 219–227.

- de Jong, T., Lazonder, A. W., Chinn, C. A., Fischer, F., Gobert, J., Hmelo-Silver, C. E., Koedinger, K. R., Krajcik, J. S., Kyza, E. A., Linn, M. C., Pedaste, M., Scheiter, K., & Zacharia, Z. C. (2024). Beyond inquiry or direct instruction: Pressing issues for designing impactful science learning opportunities. Educational Research Review, 44, 100623.

- Education Estonia. (2024a). ProgeTiiger: Pioneering technological literacy in Estonia. Available online: https://www.educationestonia.org/pioneering-technological-literacy-in-estonia/ (accessed on 15 March 2025).

- Education Estonia. (2024b). What are the digital solutions used in Estonian education? Education Estonia. Available online: https://www.educationestonia.org/infosystems (accessed on 1 April 2025).

- e-Estonia. (2023). A decade on, Estonia’s ProgeTiiger is gearing up to teach AI to students. Available online: https://e-estonia.com/a-decade-on-estonias-progetiger-is-gearing-up-to-teach-ai-to-students (accessed on 12 April 2024).

- EKKA. (2022). Teacher training evaluation decision. Available online: https://ekka.edu.ee (accessed on 4 February 2024).

- European Commission. (2017). DigCompEdu—European commission. Available online: https://joint-research-centre.ec.europa.eu/digcompedu_en (accessed on 11 February 2025).

- European Commission. (2024). Estonia: A snapshot of digital skills. Digital Skills and Jobs Platform. Available online: https://digital-skills-jobs.europa.eu/en/latest/briefs/estonia-snapshot-digital-skills (accessed on 2 April 2025).

- European Commission. (2025). Broadband connectivity stimulates rural revitalisation|Shaping Europe’s digital future. Available online: https://digital-strategy.ec.europa.eu/en/library/broadband-connectivity-stimulates-rural-revitalisation (accessed on 15 May 2025).

- Fajri, N., Sriyati, S., & Rochintaniawati, D. (2024). Global research trends of digital learning media in science education: A bibliometric analysis. Jurnal Penelitian Pendidikan IPA, 10(1), 1–11.

- Falloon, G. (2020). From digital literacy to digital competence: The teacher digital competency (TDC) framework. Educational Technology Research and Development, 68(5), 2449–2472.

- Farias-Gaytan, S., Aguaded, I., & Ramirez-Montoya, M.-S. (2022). Transformation and digital literacy: Systematic literature mapping. Education and Information Technologies, 27(2), 1417–1437.

- Farooq, E., Zaidi, E., & Shah, M. M. A. (2024). The future classroom: Analyzing the Integration and impact of digital technologies in science education. Jurnal Penelitian Dan Pengkajian Ilmu Pendidikan: E-Saintika, 8(2), 280–318.

- Futri, I. M., Nasir, M., & Sahal, M. (2024). Application of virtual laboratory PhET simulation on dynamic fluid material to improve learning outcomes of class XI students at SMA Negeri 4 Pekanbaru. Journal of Science, Learning Process and Instructional Research, 2(1), 20–26.

- HARNO (Haridus- ja Noorteamet). (2022). DigiKiirendi programme develops digital competences. Available online: https://harno.ee (accessed on 3 April 2025).

- Healy, A. F., Jones, M., Lalchandani, L. A., & Tack, L. A. (2017). Timing of quizzes during learning: Effects on motivation and retention. Journal of Experimental Psychology: Applied, 23(2), 128–137.

- Helsper, E. J., Schneider, L., van Deursen, A. J. A. M., & van Laar, E. (2021). Youth digital skills indicator. Zenodo.

- Hillmayr, D., Ziernwald, L., Reinhold, F., Hofer, S. I., & Reiss, K. M. (2020). The potential of digital tools to enhance mathematics and science learning in secondary schools: A context-specific meta-analysis. Computers & Education, 153, 103897.

- Howard, S. K., Tondeur, J., Siddiq, F., & Scherer, R. (2021). Ready, set, go! Profiling teachers’ readiness for online teaching in secondary education. Technology, Pedagogy and Education, 30(1), 141–158.

- Hrynevych, L. M., Morze, N. V., Vember, V. P., & Boiko, M. A. (2021). Use of digital tools as a component of STEM education ecosystem. Educational Technology Quarterly, 2021(1), 118–139.

- Inan-Karangul, B., Seker, M., & Aykut, C. (2021). Investigating students’ digital literacy levels during online education due to COVID-19 pandemic. Sustainability, 13(21), 11878.

- Kalvet, T. (2012). Innovation: A factor explaining e-government success in Estonia. Electronic Government, an International Journal, 9(2), 142.

- Kormakova, V., Klepikova, A., Lapina, M., & Rugelj, J. (2021). ICT competence of a teacher in the context of digital transformation of education. Available online: https://covid19.neicon.ru/publication/10430 (accessed on 15 May 2025).

- Lorenz, B., Kikkas, K., & Laanpere, M. (2016). Digital Turn in the Schools of Estonia: Obstacles and Solutions. In P. Zaphiris, & A. Ioannou (Eds.), Learning and collaboration technologies (pp. 722–731). Springer International Publishing.

- Lund, A., Furberg, A., Bakken, J., & Engelien, K. L. (2014). What does professional digital competence mean in teacher education? Nordic Journal of Digital Literacy, 9(4), 280–298.

- McPherson, H., & Pearce, R. (2022). The shifting educational landscape: Science teachers’ practice during the COVID-19 pandemic through an activity theory lens. Disciplinary and Interdisciplinary Science Education Research, 4(1), 19.

- Ministry of Education and Research. (2015, September 15). Minister Ligi: All school studies digital by 2020. Available online: https://www.hm.ee/en/news/minister-ligi-all-school-studies-digital-2020 (accessed on 24 March 2024).

- National curriculum for upper secondary schools. (2023). Available online: https://www.riigiteataja.ee/en/eli/529042024001/consolide (accessed on 5 May 2025).

- Neumann, K., & Waight, N. (2019). Call for Papers: Science teaching, learning, and assessment with 21st century, cutting-edge digital ecologies. Journal of Research in Science Teaching, 56(2), 115–117.

- Nor, N. M., & Halim, L. (2023). Science teaching and learning through digital education: A systematic literature review. International Journal of Academic Research in Progressive Education and Development, 12(3), 1956–1975.

- Nowell, L. S., Norris, J. M., White, D. E., & Moules, N. J. (2017). Thematic analysis: Striving to meet the trustworthiness criteria. International Journal of Qualitative Methods, 16(1), 1609406917733847.

- Ogegbo, A. A., & Ramnarain, U. (2022). A systematic review of computational thinking in science classrooms. Studies in Science Education, 58(2), 203–230.

- Orav-Puurand, K., Jukk, H., Kraav, T., Oras, K., & Pihlap, S. (2024). A study of Estonian high school students’ views on learning mathematics and the integration of digital tools in distance learning. Acta Didactica Napocensia, 17(1), 36–43.

- Oskarita, E., & Arasy, H. N. (2024). The role of digital tools in enhancing collaborative learning in secondary education. International Journal of Educational Research, 1(1), 26–32.

- Pedaste, M., Kallas, K., & Baucal, A. (2023). Digital competence test for learning in schools: Development of items and scales. Computers & Education, 203, 104830.

- PhET Interactive Simulations. (n.d.). PhET: Free online physics, chemistry, biology, earth science and math simulations. University of Colorado Boulder. Available online: https://phet.colorado.edu (accessed on 13 April 2025).

- Pinto-Santos, A. R., Pérez Garcias, A., & Darder Mesquida, A. (2022). Development of teaching digital competence in initial teacher training: A systematic review. World Journal on Educational Technology: Current Issues, 14(1), 1–15.

- Raave, D. K., Saks, K., Pedaste, M., & Roldan Roa, E. (2024). How and why teachers use technology: Distinct integration practices in K-12 education. Education Sciences, 14(12), 1301.

- Rahmawati, S., Abdullah, A. G., & Widiaty, I. (2024). Teachers’ digital literacy overview in secondary school. International Journal of Evaluation and Research in Education (IJERE), 13(1), 597–606.

- Reychav, I., Elyakim, N., & McHaney, R. (2023). Lifelong learning processes in professional development for online teachers during the Covid era. Frontiers in Education, 8, 1041800.

- Rihoux, B., & Ragin, C. C. (2009). Configurational comparative methods: Qualitative comparative analysis (QCA) and related techniques (Vols. 1–51). SAGE Publications, Inc.

- Rosin, T., Vaino, K., Soobard, R., & Rannikmäe, M. (2020). Estonian science teacher beliefs about competence-based science e-testing. Science Education International, 32(1), 34–45.

- Sari, D. R., & Wulandari, R. (2023). Elevating cognitive learning outcomes via inquiry learning empowered by digital web teaching materials in science education. Indonesian Journal of Education Methods Development, 18(4), 4.

- Sánchez-Cruzado, C., Santiago Campión, R., & Sánchez-Compaña, M. T. (2021). Teacher digital literacy: The indisputable challenge after COVID-19. Sustainability, 13(4), 1858.

- Schechter, R., Gross, R., & Cai, J. (2024). Exploring nationwide student engagement and performance in virtual lab simulations with Labster. Available online: https://www.learntechlib.org/primary/p/224601/ (accessed on 14 June 2025).

- Schmidt, B., Crepaldi, M. A., Bolze, S. D. A., Neiva-Silva, L., & Demenech, L. M. (2020). Saúde mental e intervenções psicológicas diante da pandemia do novo coronavírus (COVID-19). Estudos de Psicologia (Campinas), 37, e200063.

- Shapovalov, Y. B., Bilyk, Z. I., Usenko, S. A., & Shapovalov, V. B. (2022). Systematic analysis of digital tools to provide STEM and science education. Journal of Physics: Conference Series, 2288(1), 012032.

- Tallinna Reaalkool. (2023). Science competence centre equipment. Available online: https://real.edu.ee (accessed on 16 January 2025).

- The Guardian. (2025, May 26). Estonia eschews phone bans in schools and takes leap into AI. Available online: https://www.theguardian.com/education/2025/may/26/estonia-phone-bans-in-schools-ai-artificial-intelligence (accessed on 1 June 2025).

- Thomas, D. R. (2006). A general inductive approach for analyzing qualitative evaluation data. American Journal of Evaluation, 27(2), 237–246.

- van Laar, E., van Deursen, A. J. A. M., van Dijk, J. A. G. M., & de Haan, J. (2020). Determinants of 21st-century skills and 21st-century digital skills for workers: A systematic literature review. Sage Open, 10(1), 2158244019900176.

- Vashisht, S. (2024, November 6–7). Enhancing learning experiences through augmented reality and virtual reality in classrooms. 2024 2nd International Conference on Recent Advances in Information Technology for Sustainable Development (ICRAIS) (pp. 12–17), Manipal, India.

- Viberg, O., Mutimukwe, C., Hrastinski, S., Cerratto-Pargman, T., & Lilliesköld, J. (2024). Exploring teachers’ (future) digital assessment practices in higher education: Instrument and model development. British Journal of Educational Technology, 55(6), 2597–2616.

- Watson, J. H., & Rockinson-Szapkiw, A. (2021). Predicting preservice teachers’ intention to use technology-enabled learning. Computers & Education, 168, 104207.

- Zhang, Q. (2024). Harnessing artificial intelligence for personalized learning pathways: A framework for adaptive education management systems. Applied and Computational Engineering, 82, 167–172.

- Zhao, L., Zhao, B., & Li, C. (2023). Alignment analysis of teaching–learning-assessment within the classroom: How teachers implement project-based learning under the curriculum standards. Disciplinary and Interdisciplinary Science Education Research, 5(1), 13.

| Time of Teaching Experience | No. of Teachers | Description of Teachers |
|---|---|---|
| 0–2 years | 8 | new or relatively new to teaching |
| 2–6 years | 20 | moderately experienced |
| 6–10 years | 7 | mid-career level |

| Stage | Sub-Stage | Description |
|---|---|---|
| Project Initiation | | The initiation phase of the project begins within QCAmap by specifying a project name and providing a detailed description; project settings, including privacy options, are configured to align with the project’s needs. |
| Data Entry | Conditions and Outcomes | Cases and their associated conditions (variables) are entered into the system. Both binary (0/1) and multi-value conditions can be input, depending on the nature of the analysis. |
| Data Entry | Data Table Completion | A data table is populated with cases (as rows) and conditions (as columns). This data forms the foundation for the subsequent QCA. |
| QCA Execution | Truth Table Generation | A truth table is automatically generated by QCAmap, displaying all possible combinations of conditions and their corresponding outcomes. |
| QCA Execution | Minimization Process | An algorithm is applied to minimize the truth table, identifying the most parsimonious combination of conditions that lead to specific outcomes. This process is central to QCA, as it simplifies complex data into understandable patterns. |
| QCA Execution | Result Interpretation | The results, including minimized formulas, are interpreted to understand the underlying patterns among the cases. This step is crucial for drawing meaningful conclusions from the analysis. |
| Results Export | Exporting Results | The outcomes of the analysis, such as truth tables and minimized formulas, are exported in various formats (e.g., CSV, PDF) for further examination or reporting purposes. |
| Co-Coding Scheme Development | Codes and Categories Definition | A coding scheme is developed, encompassing all codes and categories that will be used to analyze the data. Definitions and examples for each code are provided to ensure clarity. |
| Co-Coding Scheme Development | Discussion and Refinement | The coding scheme is discussed among all coders to reach a consensus, ensuring a shared understanding and reducing the likelihood of discrepancies during the coding process. |
| Co-Coding Scheme Development | Training | Coders are trained in the application of the coding scheme, which may involve practicing on sample data. Feedback is provided to resolve any emerging issues. |
| Co-Coding Scheme Development | Trial Coding | A small sample of the data is independently coded by each coder. The results are then compared to identify and address any differences in coding decisions. |
| Independent Coding | Data Assignment | The data is divided among coders, who independently apply the coding scheme to their assigned portions. This independent work is critical to prevent bias. |
| Independent Coding | Results Comparison | The coded data is compared using inter-coder reliability measures, such as Krippendorff’s alpha, to assess the level of agreement between coders. |
| Discrepancies Resolution | Discussion of Discrepancies | Any discrepancies are discussed in a meeting among coders. The goal is to reach a consensus on how the data should be coded, which may involve refining the coding scheme or providing additional training. |
| Discrepancies Resolution | Consensus Achievement | Agreement is reached on the final coding, ensuring consistency across all cases. |
| Final Coding and Analysis | Final Coding Execution | The final coding is completed based on the consensus reached during discrepancy resolution. |
| Final Coding and Analysis | Reliability Assessment | The final inter-coder reliability is calculated to evaluate the consistency of the coding process. |
| Final Coding and Analysis | Data Analysis | The finalized coding allows for the comprehensive analysis of the data, leading to the extraction of significant findings. |
| Documentation and Reporting | Process Documentation | The entire co-coding process is documented, including the development of the coding scheme, training, trial coding, discrepancy resolution, and final reliability measures. |
| Documentation and Reporting | Reporting | The co-coding results and reliability metrics are reported in the final research output to ensure transparency and credibility. |
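The table above names Krippendorff’s alpha as one possible inter-coder reliability measure but does not show how it is obtained. The following is a minimal sketch of the nominal-data variant, written in plain Python and not taken from QCAmap or from the authors’ workflow. The function name `krippendorff_alpha_nominal` and the example codings are hypothetical and serve only to illustrate the calculation; the code labels are borrowed from the barrier codes in Appendix A.

```python
from collections import Counter
from itertools import permutations


def krippendorff_alpha_nominal(units):
    """Krippendorff's alpha for nominal codes.

    `units` is a list of lists: each inner list holds the codes that the
    coders assigned to one unit (use None where a coder skipped the unit).
    This is an illustrative helper, not part of any library.
    """
    # Coincidence matrix: every ordered pair of codes given to the same
    # unit by different coders contributes 1 / (m - 1), where m is the
    # number of codes the unit received.
    coincidences = Counter()
    for codes in units:
        values = [c for c in codes if c is not None]
        m = len(values)
        if m < 2:
            continue  # a unit coded by fewer than two coders is not pairable
        for a, b in permutations(values, 2):
            coincidences[(a, b)] += 1 / (m - 1)

    # Marginal totals per code and the overall number of pairable values.
    totals = Counter()
    for (a, _b), weight in coincidences.items():
        totals[a] += weight
    n = sum(totals.values())
    if n < 2:
        raise ValueError("need at least one unit coded by two or more coders")

    # Observed and expected disagreement (nominal metric: 0 if equal, else 1).
    observed = sum(w for (a, b), w in coincidences.items() if a != b) / n
    expected = sum(
        totals[a] * totals[b] for a in totals for b in totals if a != b
    ) / (n * (n - 1))
    if expected == 0:
        raise ValueError("alpha is undefined when only one code was ever used")
    return 1 - observed / expected


# Hypothetical trial coding: two coders, four survey answers.
example_units = [
    ["availability", "availability"],
    ["availability", "ease_of_use"],
    ["ease_of_use", "ease_of_use"],
    ["ease_of_use", "ease_of_use"],
]
print(round(krippendorff_alpha_nominal(example_units), 3))  # 0.533
```

In this made-up example the coders disagree on one of four answers, which gives an alpha of 8/15 (about 0.53). Which threshold counts as acceptable agreement is a methodological choice that the table does not specify.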

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Laius, A.; Orgusaar, G. The Critical Role of Science Teachers’ Readiness in Harnessing Digital Technology Benefits. Educ. Sci. 2025, 15, 1001. https://doi.org/10.3390/educsci15081001