Innovative Hands-On Approach for Magnetic Resonance Imaging Education of an Undergraduate Medical Radiation Science Course in Australia: A Feasibility Study

Abstract

1. Introduction

2. Materials and Methods

- Incidental findings observed during the MRI scanning. This might cause potential stress to respective participants due to health and privacy concerns. You should see your GPs [general practitioners] if you suspect having any medical diseases or conditions. In case of any potentially significant findings observed during the MRI scanning, we will advise you to approach your GPs according to Ahpra’s [Australian Health Practitioner Regulation Agency’s] requirements. When you are scanned by your peers, they will be able to see MRI images of your body, resulting in privacy concern. However, all participants are registered as students with MRPBA and required to observe its privacy and confidentiality requirements during the MRI scanning. It is not uncommon for healthcare professionals to provide clinical services to those who they know in clinical practice, e.g., healthcare professionals visiting their staff clinics run by their colleagues for medical services, etc. Participant numbers (codes) instead of names will be entered into the MRI scanner for initiating the scans to further address any privacy concerns.

- Triggering claustrophobia when being scanned by your peers. However, you can use the MRI call button provided to terminate the scan at any point.”

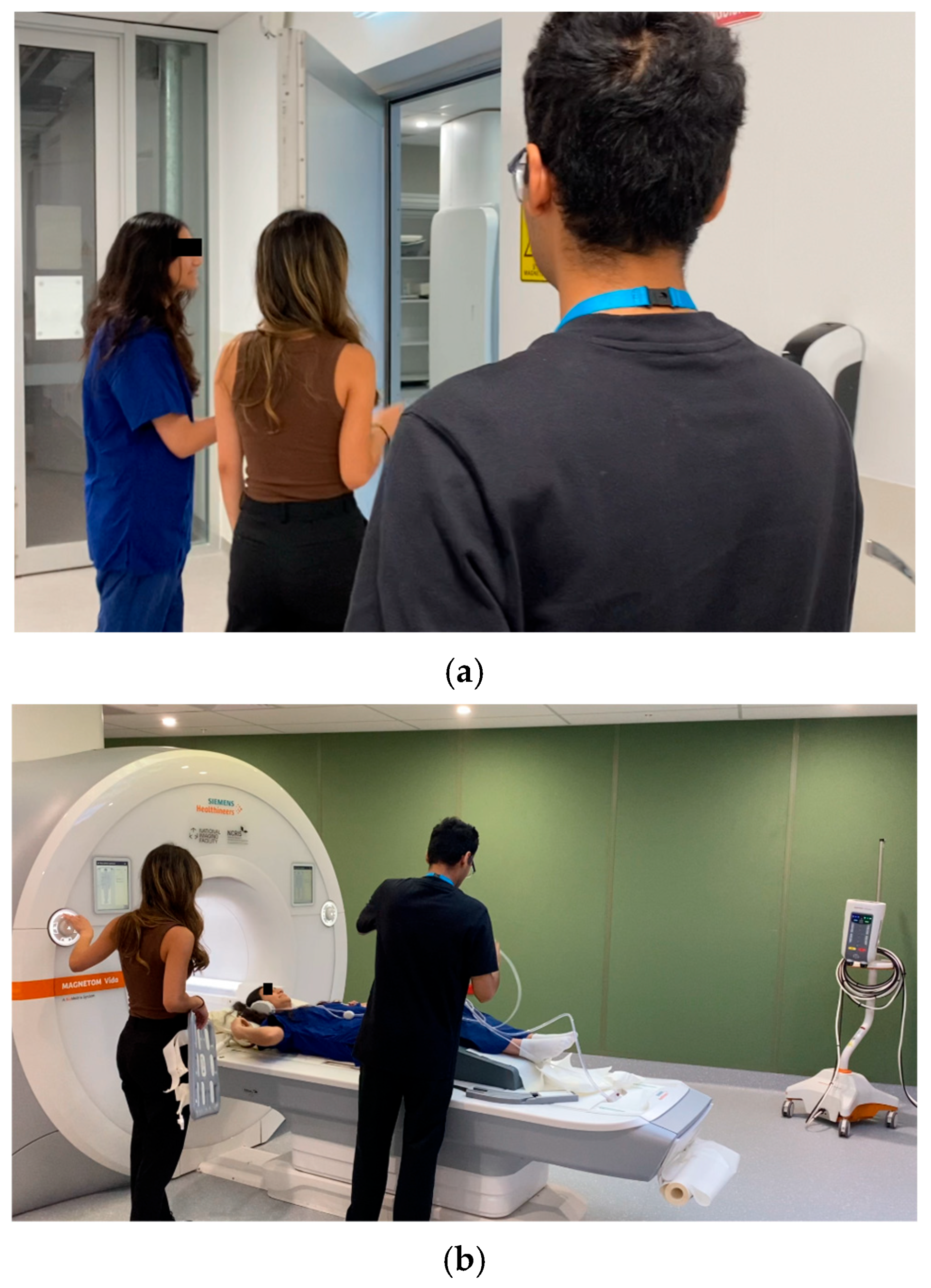

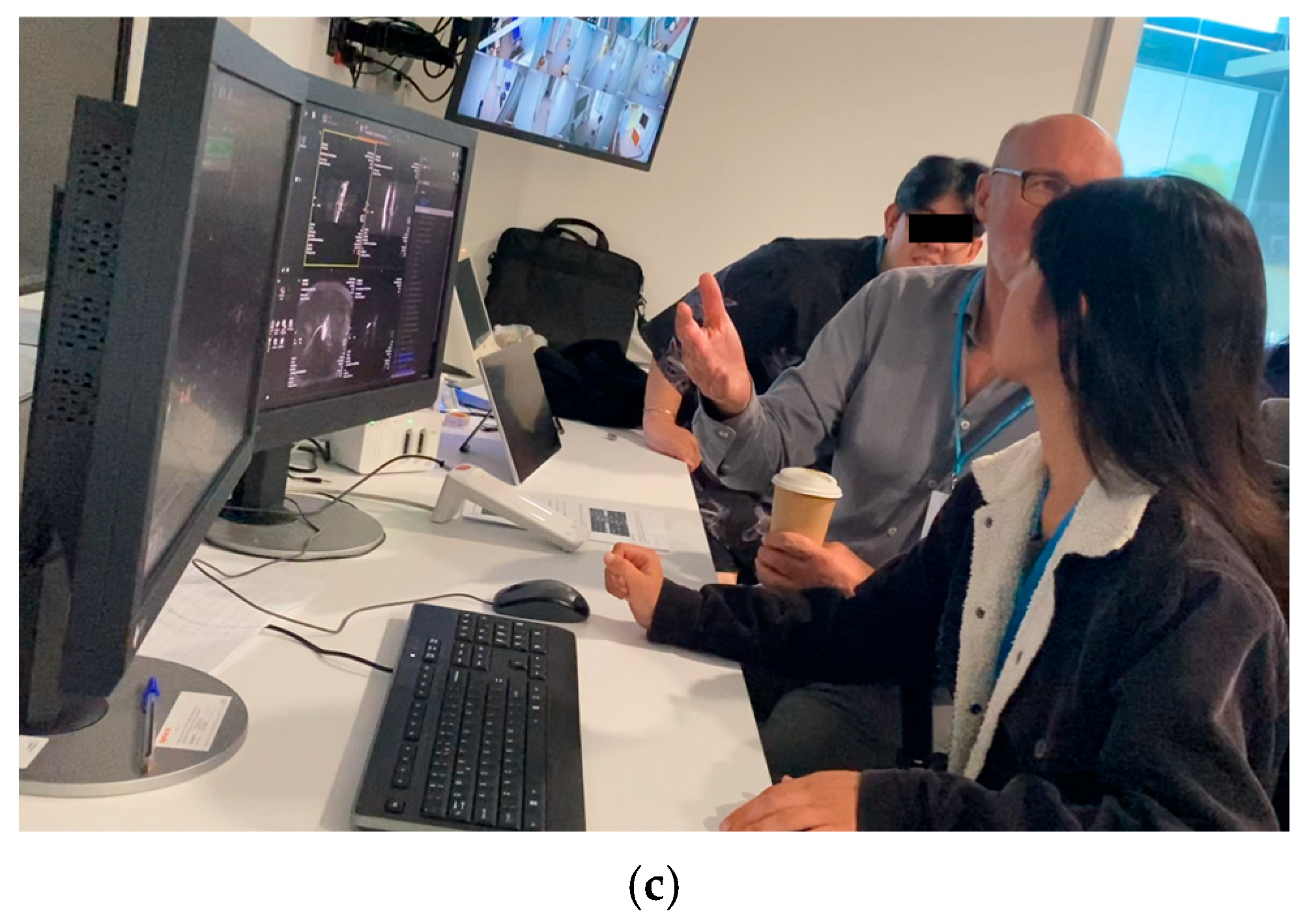

2.1. MRI Learning Program

- How to Learn MRI: An Illustrated Workbook written by Colucci et al. (2023)

- MRI from Picture to Proton (Chapters 1–10 and 12) (McRobbie et al., 2017)

2.2. Pre- and Post-Program Questionnaires and Tests

2.3. Data Analysis

3. Results

3.1. Participants’ Background and Needs and Expectation

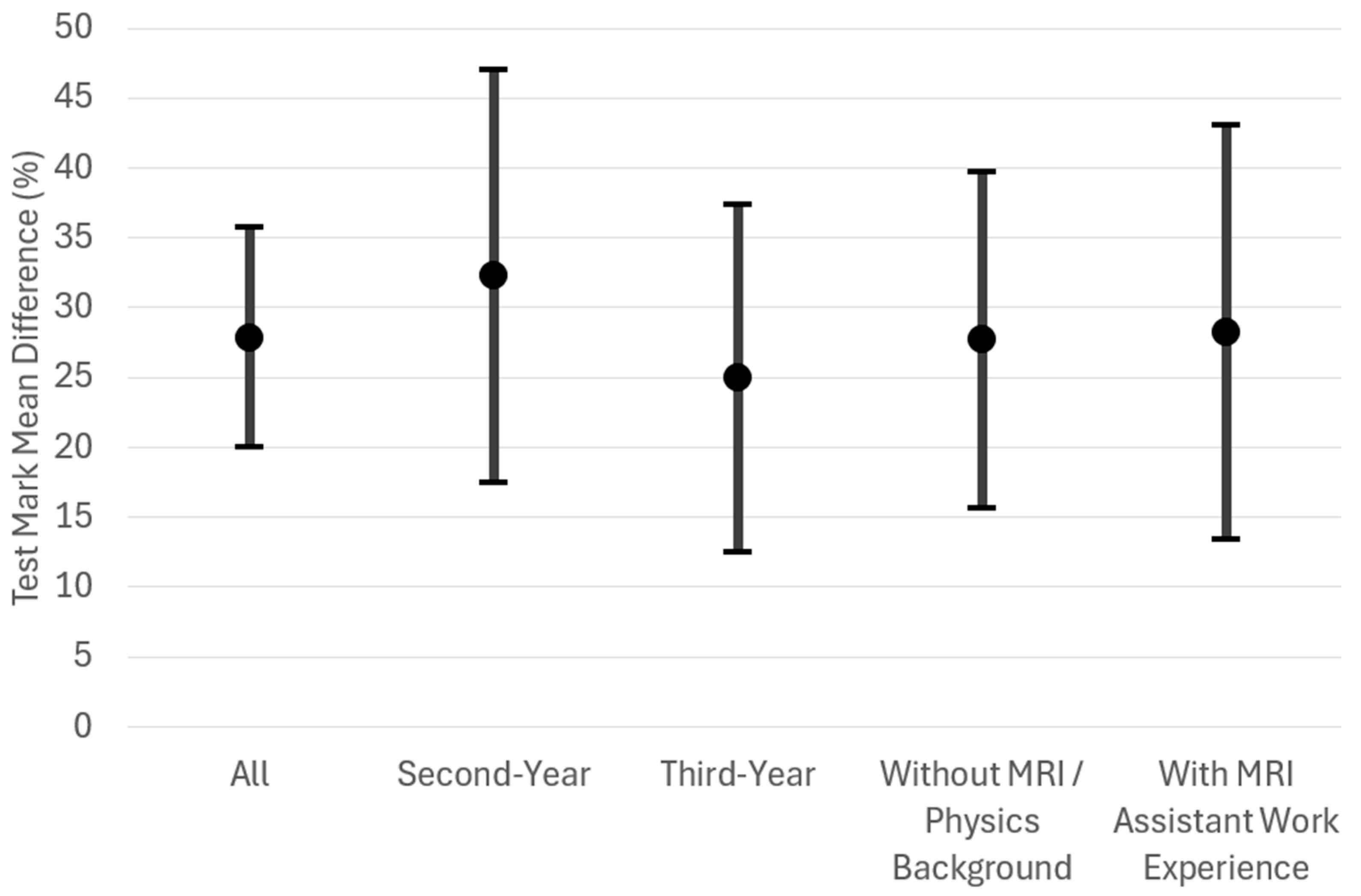

3.2. Participants’ Competence Improvement

3.3. Program’s Educational Quality Evaluation

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| ACT | Australian Capital Territory |
| Ahpra | Australian Health Practitioner Regulation Agency |
| BSc | Bachelor of Science |
| CBS | Computer-based simulation |
| CI | Confidence interval |
| Curtin MRI | Curtin Medical Research Institute |
| FOV | Field of view |
| Gd | Gadolinium |
| GPs | General practitioners |
| INACSL | International Nursing Association for Clinical Simulation and Learning |
| MCQs | Multiple choice questions |
| MI | Medical imaging |
| MRI | Magnetic resonance imaging |
| MRPBA | Medical Radiation Practice Board of Australia |
| MRPs | Medical radiation practitioners |
| MRS | Medical radiation science |
| NCRIS | National Collaborative Research Infrastructure Strategy |
| NIF | National Imaging Facility |
| SD | Standard deviation |
| SNR | Signal-to-noise ratio |
| UWA | The University of Western Australia |
| WA | Western Australia |
| WHO | World Health Organization |

Appendix A. Sample Pre- and Post-Program Questionnaire Questions

Appendix A.1. Pre-Program Question

- Do you have any MRI and/or physics background prior to studying Bachelor of Science (Medical Radiation Science) units? (select all that apply)

  - None

  - An additional undergraduate physics/engineering/mathematics course/more

  - An additional undergraduate physics unit/more

  - An additional undergraduate engineering unit/more

  - An additional undergraduate mathematics unit/more

  - A physics/engineering/mathematics related postgraduate course/more

  - Previous experience as an MRI assistant

  - Previous research experience in MRI

  - Previous experience in using MRI modality console

Appendix A.2. Post-Program Question

- Please rate the effectiveness of this program for learning MRI using the following 5-point scale.

  - Very ineffective

  - Ineffective

  - Neutral

  - Effective

  - Very effective

Appendix B. Sample Pre- and Post-Program Test Questions

Appendix B.1. Physics and Instrumentation Questions

- Time-of-flight (TOF) MR angiography is able to distinguish blood from surrounding tissue. Which of the following is correct?

  - (a) A rapid succession of pulses saturates the tissue, causing an inability to produce a strong signal. When blood flows into the slice, it is not saturated; therefore, it is able to generate a strong MR signal in response to subsequent pulses.

  - (b) The TOF method is an extension of the diffusion-weighted imaging where flowing liquids are detected in the same manner that diffusing molecules are detected. The fast-flowing blood produces a very bright signal.

  - (c) The TOF method relies on distinguishing between the time for the blood versus the tissue spins to align with a rapid succession of gradient pulses.

  - (d) TOF MRA is a 3D reconstruction algorithm that filters noise within each slice in parallel to applying enhancement filters for blood. This advanced processing algorithm is all applied in real time and able to produce a seamless 3D rendering akin to fluoroscopy techniques.

  - (e) A series of spin echoes are collected at increasing time steps whereby the time spacing is akin to parabolic “time of flight” equation.

- Diffusion weighted imaging is typically used to measure the diffusion of:___________________

Appendix B.2. Clinical Practice Questions

- You are setting up to perform a scan using a spin echo sequence and wish to acquire 25 slices. Once you have entered your scan parameters, the system determines the Max # slices to be 24. What is a quick way to avoid subjecting the patient to a second scan? Select all that are correct.

  - (a) Increase TR slightly to allow more time to collect more slices.

  - (b) Decrease the flip angle slightly to make acquisition times shorter.

  - (c) Change the number of slices to 24 and accept that you will not have 25.

  - (d) Decrease TE slightly to decrease time per slice.

  - (e) There is no need to avoid a second scan as the extra time is insignificant.

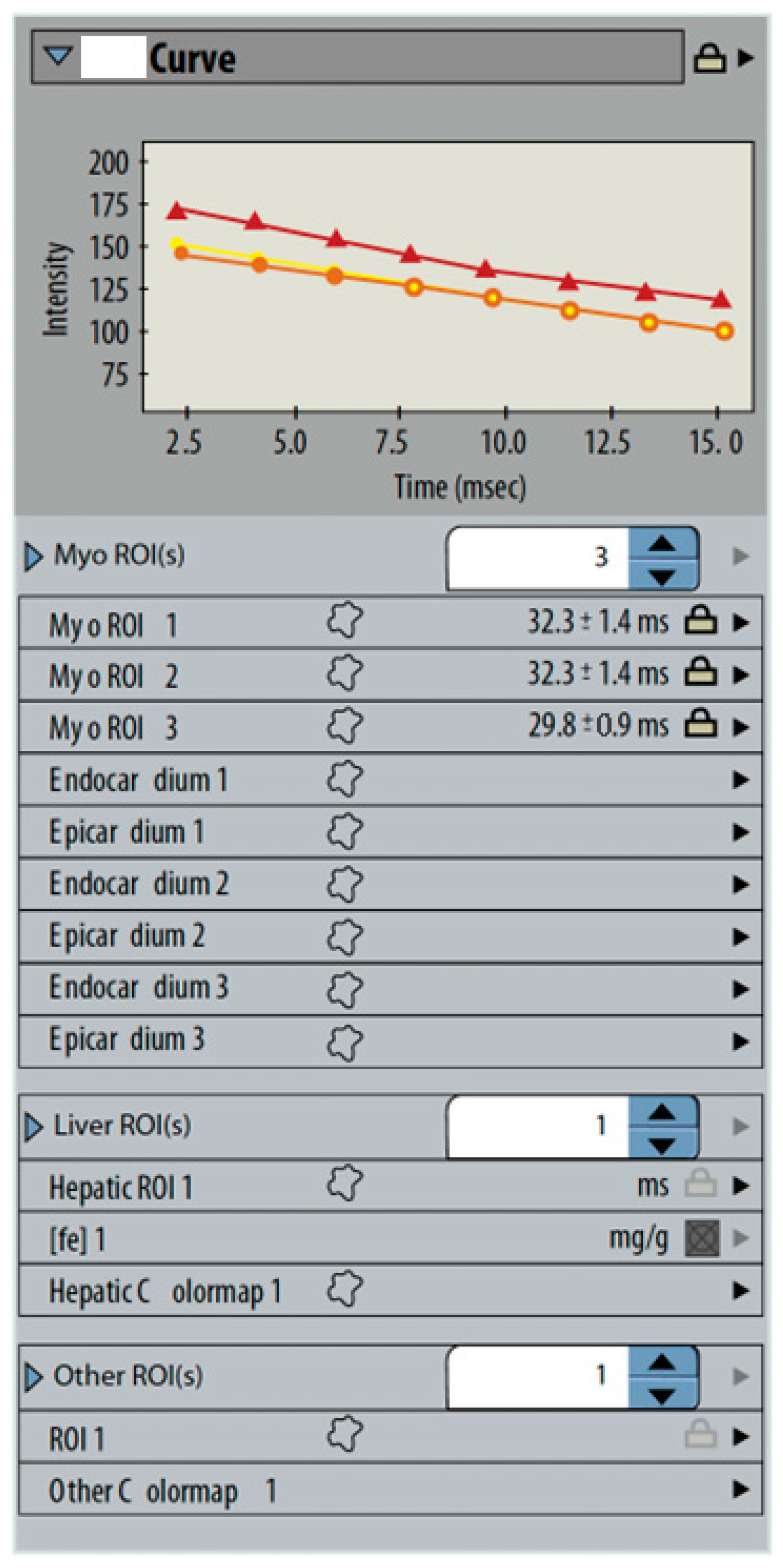

- MFGRE (multiecho fast gradient-echo) sequences are analyzed to measure cardiac iron content. A set of three gated images are analyzed by tracing small regions around the interventricular septum on each image. The system displays a graph and a table of signal times (Figure A1). What is represented by the curves?_______________________________________________________________________

Appendix C. Participants’ Pre- and Post-Program Test Marks for Physics and Instrumentation, and Clinical Practice Questions

| Group | Before: Mean | Before: Minimum | Before: Median | Before: Maximum | Before: SD | After: Mean | After: Minimum | After: Median | After: Maximum | After: SD | p-Value | Mean Difference (95% CI) | Cohen’s d Effect Size (95% CI) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| All Participants | 35.48 | 19.05 | 34.52 | 57.14 | 11.69 | 57.38 | 33.33 | 58.33 | 85.71 | 15.16 | 0.001 | 21.90 (11.75–32.05) | 1.54 (0.59–2.46) |
| Second-Year Participants | 28.57 | 23.81 | 28.57 | 33.33 | 4.35 | 56.55 | 42.86 | 59.52 | 64.29 | 9.40 | 0.028 | 27.98 (10.65–45.30) | 2.57 (0.39–4.73) |
| Third-Year Participants | 40.08 | 19.05 | 40.48 | 57.14 | 13.08 | 57.94 | 33.33 | 53.57 | 85.71 | 18.97 | 0.037 | 17.86 (1.53–34.18) | 1.15 (0.06–2.17) |
| Participants without MRI/Physics Background | 35.37 | 23.81 | 35.71 | 47.62 | 8.85 | 55.44 | 33.33 | 59.52 | 73.81 | 13.09 | 0.026 | 20.07 (6.03–34.10) | 1.32 (0.26–2.34) |
| Participants with MRI Assistant Work Experience | 35.71 | 19.05 | 30.95 | 57.14 | 19.49 | 61.90 | 42.86 | 57.14 | 85.71 | 21.82 | 0.076 | 26.19 (−6.73–59.11) | 1.98 (−0.15–4.06) |

| Group | Before: Mean | Before: Minimum | Before: Median | Before: Maximum | Before: SD | After: Mean | After: Minimum | After: Median | After: Maximum | After: SD | p-Value | Mean Difference (95% CI) | Cohen’s d Effect Size (95% CI) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| All Participants | 38.24 | 25.49 | 36.27 | 58.82 | 12.01 | 75.19 | 55.88 | 77.45 | 97.06 | 14.90 | <0.001 | 36.96 (26.63–47.29) | 2.56 (1.23–3.86) |
| Second-Year Participants | 35.78 | 25.49 | 29.41 | 58.82 | 15.66 | 76.23 | 58.82 | 77.45 | 91.18 | 13.88 | 0.019 | 40.44 (12.49–68.39) | 2.30 (0.29–4.28) |
| Third-Year Participants | 39.87 | 26.47 | 37.25 | 55.88 | 10.22 | 74.51 | 55.88 | 73.53 | 97.06 | 16.81 | 0.002 | 34.64 (20.79–48.48) | 2.63 (0.84–4.37) |
| Participants without MRI/Physics Background | 39.22 | 26.47 | 37.25 | 58.82 | 13.18 | 78.01 | 55.88 | 82.35 | 97.06 | 15.23 | 0.002 | 38.79 (23.10–54.49) | 2.29 (0.81–3.73) |
| Participants with MRI Assistant Work Experience | 35.95 | 25.49 | 35.29 | 47.06 | 10.80 | 68.62 | 58.82 | 61.76 | 85.29 | 14.51 | 0.011 | 32.68 (17.99–47.36) | 5.53 (0.69–10.72) |
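The tables above report a mean difference, its 95% CI, and a Cohen’s d for each group, but this section does not say which variant of Cohen’s d was used. The following is a minimal sketch, not the authors’ analysis code, that checks one plausible reading: d computed as the mean paired difference divided by the standard deviation of the paired differences (often written d_z). The group sizes (10, 4, and 6) are assumptions inferred from the reported percentages and are not stated here; the t critical values are standard table values; the function name `paired_effect_size` is hypothetical.

```python
import math

# Two-sided 95% critical values of Student's t for the degrees of freedom
# used below (standard table values, rounded to three decimals).
T_CRIT_95 = {3: 3.182, 5: 2.571, 9: 2.262}


def paired_effect_size(mean_diff, ci_low, ci_high, n):
    """Recover the SD of paired differences and d_z from a reported 95% CI.

    Assumes the CI was built as mean_diff +/- t * SD_diff / sqrt(n).
    """
    half_width = (ci_high - ci_low) / 2
    standard_error = half_width / T_CRIT_95[n - 1]
    sd_diff = standard_error * math.sqrt(n)
    return sd_diff, mean_diff / sd_diff


# (mean difference, CI low, CI high, ASSUMED group size) taken from the
# first table above; the group sizes are an assumption, not a reported value.
rows = {
    "All Participants": (21.90, 11.75, 32.05, 10),
    "Second-Year Participants": (27.98, 10.65, 45.30, 4),
    "Third-Year Participants": (17.86, 1.53, 34.18, 6),
}

results = {name: paired_effect_size(*values) for name, values in rows.items()}
for name, (sd_diff, d_z) in results.items():
    print(f"{name}: SD of differences = {sd_diff:.2f}, d_z = {d_z:.2f}")
```

Under these assumptions the recovered values agree with the reported effect sizes (1.54, 2.57, and 1.15) to two decimals, which suggests, but does not prove, that the paired-differences form of Cohen’s d was used.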

References

- American Board of Radiology. (2018). Item writers’ guide. Available online: https://www.theabr.org/wp-content/uploads/2020/09/Item-Writers-Guide-2018.pdf (accessed on 11 May 2025).

- Australian Health Practitioner Regulation Agency. (2024). Accredited programs of study. Available online: https://www.medicalradiationpracticeboard.gov.au/Accreditation/Accredited-programs-of-study.aspx (accessed on 12 May 2025).

- Baker, C., Nugent, B., Grainger, D., Hewis, J., & Malamateniou, C. (2024). Systematic review of MRI safety literature in relation to radiofrequency thermal injury prevention. Journal of Medical Radiation Sciences, 71(3), 445–460.

- Bor, D. S., Sharpe, R. E., Bode, E. K., Hunt, K., & Gozansky, W. S. (2021). Increasing patient access to MRI examinations in an integrated multispecialty practice. Radiographics, 41(1), E1–E8.

- Burke, J. F., Sussman, J. B., Kent, D. M., & Hayward, R. A. (2015). Three simple rules to ensure reasonably credible subgroup analyses. BMJ, 351, h5651.

- Cataldo, J., Collins, S., Walker, J., & Shaw, T. (2023). Use of virtual reality for MRI preparation and technologist education: A scoping review. Journal of Medical Imaging and Radiation Sciences, 54(1), 195–205.

- Chaka, B., & Hardy, M. (2021). Computer based simulation in CT and MRI radiography education: Current role and future opportunities. Radiography, 27(2), 733–739.

- Chau, M., Arruzza, E., & Johnson, N. (2022). Simulation-based education for medical radiation students: A scoping review. Journal of Medical Radiation Sciences, 69(3), 367–381.

- Chau, M., & Arruzza, E. S. (2023). Maximising undergraduate medical radiation students’ learning experiences using cloud-based computed tomography (CT) software. Simulation & Gaming, 54(4), 447–460.

- Colucci, P. G., Gao, M. A., Schweitzer, A. D., Chang, E. W., Riyahi, S., Taya, M., Lu, C., Ballon, D., Min, R. J., & Prince, M. R. (2023). A novel hands-on approach towards teaching diagnostic radiology residents MRI scanning and physics. Academic Radiology, 30(5), 998–1004.

- Cooley, C. Z., Stockmann, J. P., Witzel, T., LaPierre, C., Mareyam, A., Jia, F., Zaitsev, M., Wenhui, Y., Zheng, W., Stang, P., Scott, G., Adalsteinsson, E., White, J. K., & Wald, L. L. (2020). Design and implementation of a low-cost, tabletop MRI scanner for education and research prototyping. Journal of Magnetic Resonance, 310, 106625.

- Day, J., Devers, C. J., Wu, E., Devers, E. E., & Gomez, E. (2022). Development of educational media for medical trainees studying MRI physics: Effect of media format on learning and engagement. Journal of the American College of Radiology, 19(6), 711–721.

- Department of Education, Australian Government. (2024). National collaborative research infrastructure strategy (NCRIS). Available online: https://www.education.gov.au/ncris (accessed on 12 May 2025).

- Dewland, T. A., Hancock, L. N., Sargeant, S., Bailey, R. K., Sarginson, R. A., & Ng, C. K. C. (2013). Study of lone working magnetic resonance technologists in Western Australia. International Journal of Occupational Medicine and Environmental Health, 26(6), 837–845.

- Elshami, W., & Abuzaid, M. (2017). Transforming magnetic resonance imaging education through simulation-based training. Journal of Medical Imaging and Radiation Sciences, 48(2), 151–158.

- Fortier, E., Bellec, P., Boyle, J. A., & Fuente, A. (2025). MRI noise and auditory health: Can one hundred scans be linked to hearing loss? The case of the Courtois NeuroMod project. PLoS ONE, 20(1), e0309513.

- Hasoomi, N., Fujibuchi, T., & Arakawa, H. (2024). Developing simulation-based learning application for radiation therapy students at pre-clinical stage. Journal of Medical Imaging and Radiation Sciences, 55(3), 101412.

- Hazell, L., Lawrence, H., & Friedrich-Nel, H. (2020). Simulation based learning to facilitate clinical readiness in diagnostic radiography. A meta-synthesis. Radiography, 26(4), e238–e245.

- Imura, T., Mitsutake, T., Iwamoto, Y., & Tanaka, R. (2021). A systematic review of the usefulness of magnetic resonance imaging in predicting the gait ability of stroke patients. Scientific Reports, 11(1), 14338.

- INACSL Standards Committee. (2016). INACSL standards of best practice: Simulation℠ Simulation design. Clinical Simulation in Nursing, 12, S5–S12.

- Jimenez, Y. A., Gray, F., Di Michele, L., Said, S., Reed, W., & Kench, P. (2023). Can simulation-based education or other education interventions replace clinical placement in medical radiation sciences? A narrative review. Radiography, 29(2), 421–427.

- Kadam, P., & Bhalerao, S. (2010). Sample size calculation. International Journal of Ayurveda Research, 1(1), 55–57.

- Kim, J., Park, J. H., & Shin, S. (2016). Effectiveness of simulation-based nursing education depending on fidelity: A meta-analysis. BMC Medical Education, 16, 152.

- Knowles, M. (1990). The adult learner: A neglected species (4th ed.). Gulf Publishing Company.

- Kolb, D. A. (1984). Experiential learning: Experience as the source of learning and development. Prentice Hall.

- La Cerra, C., Dante, A., Caponnetto, V., Franconi, I., Gaxhja, E., Petrucci, C., Alfes, C. M., & Lancia, L. (2019). Effects of high-fidelity simulation based on life-threatening clinical condition scenarios on learning outcomes of undergraduate and postgraduate nursing students: A systematic review and meta-analysis. BMJ Open, 9(2), e025306.

- Martella, M., Lenzi, J., & Gianino, M. M. (2023). Diagnostic technology: Trends of use and availability in a 10-year period (2011–2020) among sixteen OECD countries. Healthcare, 11, 2078.

- Mayer, R. E. (2002). Multimedia learning. Psychology of Learning and Motivation, 41, 85–139.

- McRobbie, D. W., Moore, E. A., & Graves, M. J. (2017). MRI from picture to proton (3rd ed.). Cambridge University Press.

- Medical Radiation Practice Accreditation Committee. (2019). Accreditation standards: Medical radiation practice 2019. Available online: https://www.ahpra.gov.au/documents/default.aspx?record=WD21/30784&dbid=AP&chksum=p31uaKToamWisUOvmFKtUg%3d%3d&_gl=1*18fusvm*_ga*MjAxNjg2NDg1LjE3MjUzMzM3MTY.*_ga_F1G6LRCHZB*czE3NDcwMzE1ODckbzI1JGcxJHQxNzQ3MDMxNjEzJGowJGwwJGgw (accessed on 11 May 2025).

- Medical Radiation Practice Board of Australia (MRPBA). (2013). Professional capabilities for medical radiation practice. Available online: https://www.medicalradiationpracticeboard.gov.au/documents/default.aspx?record=WD13%2f12534&dbid=AP&chksum=OIuB81d6eQCqo%2bewP9PHOA%3d%3d (accessed on 11 May 2025).

- Medical Radiation Practice Board of Australia (MRPBA). (2020). Professional capabilities for medical radiation practice. Available online: https://www.medicalradiationpracticeboard.gov.au/documents/default.aspx?record=WD19%2f29238&dbid=AP&chksum=qSaH9FIsI%2ble99APBZNqIQ%3d%3d (accessed on 11 May 2025).

- Medicines and Healthcare Products Regulatory Agency. (2021). Safety guidelines for magnetic resonance imaging equipment in clinical use. Available online: https://assets.publishing.service.gov.uk/government/uploads/system/uploads/attachment_data/file/958486/MRI_guidance_2021-4-03c.pdf (accessed on 11 May 2025).

- Mittendorff, L., Young, A., & Sim, J. (2022). A narrative review of current and emerging MRI safety issues: What every MRI technologist (radiographer) needs to know. Journal of Medical Radiation Sciences, 69(2), 250–260.

- National Imaging Facility (NIF). (2025a). Funders and partners. Available online: https://anif.org.au/funders-and-partners/ (accessed on 12 May 2025).

- National Imaging Facility (NIF). (2025b). Instruments and infrastructure. Available online: https://anif.org.au/what-we-do/our-capabilities/capabilities/ (accessed on 12 May 2025).

- National Imaging Facility (NIF). (2025c). Western Australia NIF node. Available online: https://anif.org.au/uwa/ (accessed on 5 May 2025).

- Ng, C. K. C. (2020). Evaluation of academic integrity of online open book assessments implemented in an undergraduate medical radiation science course during COVID-19 pandemic. Journal of Medical Imaging and Radiation Sciences, 51(4), 610–616.

- Ng, C. K. C. (2022a). A review of the impact of the COVID-19 pandemic on pre-registration medical radiation science education. Radiography, 28(1), 222–231.

- Ng, C. K. C. (2022b). Artificial intelligence for radiation dose optimization in pediatric radiology: A systematic review. Children, 9(7), 1044.

- Ng, C. K. C., Baldock, M., & Newman, S. (2024). Use of smart glasses (assisted reality) for Western Australian X-ray operators’ continuing professional development: A pilot study. Healthcare, 12(13), 1253.

- Ng, C. K. C., White, P., & McKay, J. C. (2008). Establishing a method to support academic and professional competence throughout an undergraduate radiography programme. Radiography, 14, 255–264.

- Robinson, N. B., Gao, M., Patel, P. A., Davidson, K. W., Peacock, J., Herron, C. R., Baker, A. C., Hentel, K. A., & Oh, P. S. (2022). Secondary review reduced inpatient MRI orders and avoidable hospital days. Clinical Imaging, 82, 156–160.

- Rocca, M. A., Preziosa, P., Barkhof, F., Brownlee, W., Calabrese, M., De Stefano, N., Granziera, C., Ropele, S., Toosy, A. T., Vidal-Jordana, À., Di Filippo, M., & Filippi, M. (2024). Current and future role of MRI in the diagnosis and prognosis of multiple sclerosis. Lancet Regional Health-Europe, 44, 100978.

- Staus, N. L., O’Connell, K., & Storksdieck, M. (2021). Addressing the ceiling effect when assessing STEM out-of-school time experiences. Frontiers in Education, 6, 690431.

- Sullivan, G. M., & Feinn, R. (2012). Using effect size—Or why the p value is not enough. Journal of Graduate Medical Education, 4(3), 279–282.

- Vestbøstad, M., Karlgren, K., & Olsen, N. R. (2020). Research on simulation in radiography education: A scoping review protocol. Systematic Reviews, 9(1), 263.

- Westbrook, C. (2017). Opening the debate on MRI practitioner education—Is there a need for change? Radiography, 23, S70–S74.

- World Health Organization (WHO). (2011). Patient safety curriculum guide: Multi-professional edition. Available online: https://iris.who.int/bitstream/handle/10665/44641/9789241501958_eng.pdf?sequence=1 (accessed on 11 May 2025).

| | Monday | Tuesday | Wednesday | Thursday | Friday |
|---|---|---|---|---|---|
| Morning | | | | | |
| Afternoon | | | | | |

| Needs and Expectation | % |

|---|---|

| Scanning parameter selection | 60 |

| Performing scans | 50 |

| Image interpretation | 50 |

| Scanning room preparation | 30 |

| Patient preparation | 30 |

| Patient positioning | 30 |

| Patient care | 30 |

| Physics and instrumentation | 10 |

| Understanding their contribution to this research study | 10 |

| Outcome | Group | Before: Mean | Before: Minimum | Before: Median | Before: Maximum | Before: SD | After: Mean | After: Minimum | After: Median | After: Maximum | After: SD | p-Value | Mean Difference (95% CI) | Cohen’s d Effect Size (95% CI) |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Self-perceived competences | All Participants | 2.80 | 2.00 | 3.00 | 3.00 | 0.42 | 3.20 | 3.00 | 3.00 | 4.00 | 0.42 | 0.046 | - | - |
| Self-perceived competences | Second-Year Participants | 2.75 | 2.00 | 3.00 | 3.00 | 0.50 | 3.25 | 3.00 | 3.00 | 4.00 | 0.50 | 0.157 | - | - |
| Self-perceived competences | Third-Year Participants | 2.83 | 2.00 | 3.00 | 3.00 | 0.41 | 3.17 | 3.00 | 3.00 | 4.00 | 0.41 | 0.157 | - | - |
| Self-perceived competences | Participants without MRI/Physics Background | 2.86 | 2.00 | 3.00 | 3.00 | 0.38 | 3.14 | 3.00 | 3.00 | 4.00 | 0.38 | 0.157 | - | - |
| Self-perceived competences | Participants with MRI Assistant Work Experience | 2.67 | 2.00 | 3.00 | 3.00 | 0.58 | 3.33 | 3.00 | 3.00 | 4.00 | 0.58 | 0.157 | - | - |
| Test mark (%) | All Participants | 34.87 | 25.88 | 32.02 | 50.00 | 8.76 | 62.72 | 43.42 | 62.94 | 82.89 | 13.93 | <0.001 | 27.85 (19.98–35.72) | 2.53 (1.21–3.82) |
| Test mark (%) | Second-Year Participants | 30.48 | 25.88 | 28.95 | 38.16 | 5.43 | 62.72 | 47.37 | 64.91 | 73.68 | 11.18 | 0.006 | 32.24 (17.44–47.04) | 3.47 (0.70–6.27) |
| Test mark (%) | Third-Year Participants | 37.79 | 26.32 | 37.06 | 50.00 | 9.73 | 62.72 | 43.42 | 59.87 | 82.89 | 16.55 | 0.008 | 24.93 (12.50–37.36) | 2.10 (0.59–3.58) |
| Test mark (%) | Participants without MRI/Physics Background | 35.21 | 27.63 | 33.77 | 47.37 | 7.14 | 62.91 | 43.42 | 63.16 | 81.58 | 13.32 | 0.002 | 27.69 (15.65–39.74) | 2.13 (0.72–3.49) |
| Test mark (%) | Participants with MRI Assistant Work Experience | 34.06 | 25.88 | 26.32 | 50.00 | 13.80 | 62.28 | 47.37 | 56.58 | 82.89 | 18.44 | 0.015 | 28.22 (13.38–43.05) | 4.73 (0.53–9.19) |
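The self-perceived competence rows above give p-values without a mean difference or effect size, which is typical of a non-parametric paired test on ordinal ratings; the test itself is not named in this section. The sketch below is an illustration under that assumption, not the authors’ procedure: it implements a Wilcoxon signed-rank test with the normal approximation and tie correction (no continuity correction) using only the standard library. The individual ratings are hypothetical, chosen only to match the reported means, minima, and maxima for assumed group sizes of 10 and 4.

```python
import math


def wilcoxon_signed_rank_p(before, after):
    """Two-sided p-value via the tie-corrected normal approximation."""
    diffs = [a - b for b, a in zip(before, after) if a != b]  # drop zero differences
    n = len(diffs)
    if n == 0:
        return 1.0
    order = sorted(range(n), key=lambda i: abs(diffs[i]))
    ranks = [0.0] * n
    tie_term = 0.0
    i = 0
    while i < n:
        j = i
        while j + 1 < n and abs(diffs[order[j + 1]]) == abs(diffs[order[i]]):
            j += 1
        average_rank = (i + j) / 2 + 1  # mean of the 1-based ranks i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = average_rank
        t = j - i + 1
        tie_term += t ** 3 - t
        i = j + 1
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    mean_w = n * (n + 1) / 4
    var_w = n * (n + 1) * (2 * n + 1) / 24 - tie_term / 48
    z = (w_plus - mean_w) / math.sqrt(var_w)
    return math.erfc(abs(z) / math.sqrt(2))  # two-sided normal tail probability


# HYPOTHETICAL ratings: before mean 2.80 (range 2-3), after mean 3.20 (range 3-4).
all_before = [2, 2, 3, 3, 3, 3, 3, 3, 3, 3]
all_after = [3, 3, 4, 4, 3, 3, 3, 3, 3, 3]
p_all = wilcoxon_signed_rank_p(all_before, all_after)

# HYPOTHETICAL ratings: before mean 2.75, after mean 3.25 (assumed n = 4).
sub_before = [2, 3, 3, 3]
sub_after = [3, 4, 3, 3]
p_sub = wilcoxon_signed_rank_p(sub_before, sub_after)

print(f"all participants: p = {p_all:.3f}; subgroup: p = {p_sub:.3f}")
```

With four one-point improvements the sketch gives p ≈ 0.046, and with two one-point improvements it gives p ≈ 0.157, matching the tabulated values. This is consistent with, but does not confirm, the use of a Wilcoxon signed-rank test, and it illustrates why the subgroup p-values are identical: each subgroup pattern shown here reduces to two non-zero differences of equal size.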

| Question | Mean | Minimum | Median | Maximum | SD |

|---|---|---|---|---|---|

| 1. Program effectiveness for learning 1 | 4.20 | 4.00 | 4.00 | 5.00 | 0.42 |

| 2. Program engagement 2 | 4.50 | 4.00 | 4.50 | 5.00 | 0.53 |

| 3. Adequacy of peer contribution 3 | 4.40 | 3.00 | 4.50 | 5.00 | 0.70 |

| 4. Adequacy of facilitator (program staff) contribution 3 | 4.40 | 3.00 | 4.50 | 5.00 | 0.70 |

| 5. Adequacy of supervision 4 | 3.00 | 3.00 | 3.00 | 3.00 | 0.00 |

| 6. Value of MRI knowledge review session 5 | 4.20 | 3.00 | 4.00 | 5.00 | 0.63 |

| 7. Value of phantom scanning session 5 | 3.90 | 3.00 | 4.00 | 5.00 | 0.74 |

| 8. Value of peer scanning session 5 | 4.80 | 4.00 | 5.00 | 5.00 | 0.42 |

| Category | Feedback | % |
|---|---|---|
| Strengths | Needs and expectations met/exceeding expectations | 50 |
| Strengths | Extremely valuable/very effective/very practical | 50 |
| Strengths | Enhanced understanding of physics and instrumentation | 20 |
| Strengths | Learnt from a range of professionals (radiologist, radiographers, and scientists) | 10 |
| Strengths | Perfect number of participants | 10 |
| Strengths | Subdividing participants into 2 groups to run parallel activities for efficiency | 10 |
| Strengths | Test focused on practical aspects | 10 |
| Suggestions | More time to learn from radiographers | 70 |
| Suggestions | Consolidating program activities to include more | 30 |
| Suggestions | Subdividing participants into 2 groups to run all activities in parallel (to include more activities) | 20 |
| Suggestions | Assigning dedicated pre-readings a day before respective practical activities rather than to week 1 | 10 |
| Suggestions | Covering all routine scans | 10 |
| Suggestions | Simplifying pre-readings with dedicated support sessions in week 1 | 10 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Ng, C.K.C.; Vos, S.; Moradi, H.; Fearns, P.; Sun, Z.; Dickson, R.; Parizel, P.M. Innovative Hands-On Approach for Magnetic Resonance Imaging Education of an Undergraduate Medical Radiation Science Course in Australia: A Feasibility Study. Educ. Sci. 2025, 15, 930. https://doi.org/10.3390/educsci15070930

Ng CKC, Vos S, Moradi H, Fearns P, Sun Z, Dickson R, Parizel PM. Innovative Hands-On Approach for Magnetic Resonance Imaging Education of an Undergraduate Medical Radiation Science Course in Australia: A Feasibility Study. Education Sciences. 2025; 15(7):930. https://doi.org/10.3390/educsci15070930

Chicago/Turabian Style: Ng, Curtise K. C., Sjoerd Vos, Hamed Moradi, Peter Fearns, Zhonghua Sun, Rebecca Dickson, and Paul M. Parizel. 2025. "Innovative Hands-On Approach for Magnetic Resonance Imaging Education of an Undergraduate Medical Radiation Science Course in Australia: A Feasibility Study" Education Sciences 15, no. 7: 930. https://doi.org/10.3390/educsci15070930

APA Style: Ng, C. K. C., Vos, S., Moradi, H., Fearns, P., Sun, Z., Dickson, R., & Parizel, P. M. (2025). Innovative Hands-On Approach for Magnetic Resonance Imaging Education of an Undergraduate Medical Radiation Science Course in Australia: A Feasibility Study. Education Sciences, 15(7), 930. https://doi.org/10.3390/educsci15070930