1. Introduction

Over the past decade, online learning has become an integral part of academic life and a crucial element in the long-term strategy of educational institutions. The rapid advancement of digital technology, combined with globalization and the increasing demand for accessible education, has transformed traditional learning environments (Chen et al., 2020). As universities seek to adapt to the evolving needs of students, digital education has gained prominence, offering new opportunities for learning beyond geographical and temporal constraints. The shift from conventional classroom-based instruction to blended and fully online learning models has not only expanded access to education but also redefined pedagogical strategies, assessment methods, and student engagement (Correia & Good, 2023).

One of the driving forces behind this transformation has been the trend toward openness and information sharing, facilitated by digital platforms and cloud-based learning management systems (McGrath et al., 2023). These innovations have enabled the widespread adoption of e-learning tools, virtual classrooms, and remote collaboration software, fostering a more dynamic and interconnected academic landscape (Correia & Good, 2023). The proliferation of digital resources, such as open educational materials, online courses, and interactive simulations, has empowered students to take a more self-directed approach to their learning (Chen et al., 2020). Moreover, technological advancements have led to the development of adaptive learning systems that personalize educational content based on individual progress and learning styles, making education more inclusive and tailored to diverse student needs (Ateş & Köroğlu, 2024).

With the continuous evolution of digital tools, students increasingly engage with diverse technological resources to enhance their learning experiences. From artificial intelligence (AI)-powered tutoring systems and automated feedback mechanisms to immersive virtual reality environments, digital technology is revolutionizing the way students acquire knowledge, collaborate, and interact with course materials (Tumaini et al., 2021). Integrating digital platforms, artificial intelligence, and collaborative tools has reshaped the traditional academic landscape, enabling more flexible and interactive forms of education (Kamalov et al., 2023). These developments have made learning more engaging and introduced new teaching methodologies, such as flipped classrooms and gamified learning experiences, which have been shown to improve student motivation and knowledge retention (Kovari, 2025).

In recent years, artificial intelligence has emerged as a key driver of educational transformation, enabling more adaptive and personalized learning experiences. AI-powered tools, such as intelligent tutoring systems, automated grading software, and predictive analytics, have the potential to enhance academic performance, optimize instructional methods, and improve student engagement (Farhan et al., 2024). These innovations allow educators to gain deeper insights into student learning patterns, identify struggling students early, and provide targeted interventions to support their academic progress (Chu et al., 2022). At the same time, the increasing reliance on digital technology raises concerns about its excessive use and its potential negative impact on students’ well-being and learning outcomes. The convenience of online learning and the abundance of digital resources have contributed to a shift in study habits, with students spending more time on screens and engaging in multitasking behaviors that may hinder deep learning and cognitive processing (Aivaz & Teodorescu, 2022). Thus, the balance between leveraging technology for academic success and mitigating its potential drawbacks remains a critical issue in higher education research (Chu et al., 2022; Aivaz & Teodorescu, 2022).

Despite numerous studies on digital learning, a comprehensive analysis that explores multiple dimensions of technology use in higher education is still needed. This study seeks to bridge this gap by analyzing four key constructs: excessive use of technology, the use of E-Boards for learning, online collaboration, and AI integration in education. By investigating these dimensions collectively, this research aims to provide a more holistic understanding of how digital tools shape the educational environment. To achieve this, a survey was conducted among undergraduate and postgraduate students at the University of Maribor, Faculty of Economics and Business, to examine their engagement with various digital learning tools and to assess their perceptions of technology’s impact on their studies. The survey also explored students’ attitudes toward online learning environments, AI-assisted educational technologies, and collaborative platforms.

This research, therefore, aims to measure students’ use of digital technology to support their studies. The objectives are to develop the measurement process, build the multi-criteria model, and apply it to a real-life case: determining the degree of students’ use of digital technology in relation to the demonstrated quality of their academic performance.

In the remainder of the paper, a literature review provides a comprehensive examination of the four constructs of digital technology use in studying examined here. It reveals, however, a gap in the comparative analysis of digital technology usage levels, both for individual dimensions and across multiple dimensions, particularly in relation to the demonstrated quality of students’ academic performance. To fill this gap, we pose the following research questions:

What are the levels of students’ use of digital technology in studying concerning the demonstrated quality of academic performance?

In which dimensions of digital technology use in studying do students excel based on the demonstrated quality of their academic performance?

We then introduce the methodology used in the study, covering data collection, the sample, the measurement instrument, statistical analysis, and multicriteria measurement. This is followed by a detailed presentation of the results, whose significance and theoretical and practical implications are discussed in the conclusion, along with identified limitations and possibilities for future research.

3. Materials and Methods

3.1. Data and Sample

From 3 January 2024 to 20 February 2024, a total of 287 undergraduate and postgraduate students from the Faculty of Economics and Business at the University of Maribor in Slovenia participated in an online survey. Of the respondents, 54.4% were undergraduate students and 45.6% were enrolled in postgraduate programs. The sample comprised 43% male and 57% female students. By field of study, 3% were from strategic and project management, 3% from international business economics, 7% from economics, 6% from accounting, auditing, and taxation, 9% from entrepreneurship and innovation, 8% from management informatics and e-business, 16% from finance and banking, 26% from marketing, and 22% from management, organization, and human resources. Of the students who reported their average grades, 25% demonstrated acceptable-quality achievements with defects, 50% moderate-quality achievements, 23% very-high-quality achievements, and 2% outstanding achievements.

3.2. Measurement Instrument

A closed-ended online questionnaire was employed as the research instrument. Students were asked to rate their agreement with the provided statements on a 5-point Likert-type scale ranging from 1 (‘strongly disagree’) to 5 (‘completely agree’). The survey was administered online in a way that guaranteed complete anonymity; no personal data were collected.

The questionnaire was reviewed prior to distribution by experts specializing in educational technology and quantitative research methods to ensure content validity and appropriateness of the items in the context of higher education. Reliability of the constructs was tested using Cronbach’s alpha, with all constructs exceeding the recommended value of 0.8. The items were adopted from previously validated studies conducted in university settings; although these studies originate from various countries, they share a comparable research context in terms of population (university students) and focus (use of digital tools in learning). Participation in the survey was entirely voluntary. An introductory note informed students that the data would be used for research purposes in an anonymized form.
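The reliability check described above can be illustrated with a minimal sketch of the standard Cronbach's alpha formula, alpha = k/(k - 1) * (1 - sum of item variances / variance of total scores), where k is the number of items in a construct. The function name and the toy response matrix are hypothetical and are not the study's data; the software the authors actually used is not stated.

```python
from statistics import variance


def cronbach_alpha(responses):
    """Cronbach's alpha for one construct.

    responses: list of rows, one per respondent; each row holds that
    respondent's scores (e.g. 1-5 Likert ratings) on the construct's items.
    """
    k = len(responses[0])  # number of items in the construct
    if k < 2:
        raise ValueError("alpha needs at least two items")
    # Sample variance of each item across respondents.
    item_vars = [variance([row[i] for row in responses]) for i in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(row) for row in responses])
    return k / (k - 1) * (1 - sum(item_vars) / total_var)


# Hypothetical toy data: four respondents, three items of one construct.
toy = [[4, 5, 4], [2, 2, 3], [5, 5, 5], [3, 3, 2]]
print(round(cronbach_alpha(toy), 3))  # prints 0.939
```

Because the item variances and the total-score variance use the same (sample) denominator, that choice cancels out in the ratio; the toy construct would clear the 0.8 value mentioned in the text.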

The questionnaire focused on students’ self-reported use of digital tools across their study experiences. It did not evaluate tool use in a specific course or setting but rather examined general learning behaviors. Examples of tools referenced include AI-based educational tools (such as ChatGPT or other AI writing assistants), collaborative platforms (e.g., Microsoft Teams and Google Docs), and digital whiteboards or E-Boards used during lectures or study sessions. E-Board use was included as a separate construct because digital whiteboards are widely and consistently used across lectures in the participating institution, particularly in subjects involving quantitative and analytical content. Their role is pedagogically significant, as they support structured content delivery, visualization, and active problem-solving during teaching sessions. Students also reported on their use of apps or functions for managing screen time and minimizing distractions. As this study is based on perceived usage rather than direct observation or experimental implementation, it reflects students’ organic engagement with digital tools in their academic routines.

Students were asked to indicate their average academic grade based on their most recent semester. This grade typically reflects a weighted average of exam performance, written assignments, and continuous assessment, depending on the course structure.

3.3. Data Analysis

The frame procedure for multi-criteria decision-making (Čančer & Mulej, 2010) based on the analytic hierarchy process (AHP) (Saaty, 1986) was used to measure students’ use of digital technology to support their studies. Groups of students formed according to their grade point averages, as a measure of demonstrated achievement, are defined as the alternatives: outstanding, very high quality, moderate quality, and acceptable quality with defects. Based on the survey described above, factor analysis (Costello & Osborne, 2005) was used to form the criteria structure.
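To illustrate the AHP step that turns one evaluator's pairwise comparisons into weights, the sketch below applies Saaty's principal-eigenvector method via power iteration and reports the consistency ratio. It assumes a reciprocal matrix on Saaty's 1 to 9 scale and uses the commonly cited random index values for matrices of order up to 10; the function name and the judgment matrix are hypothetical, and whether the authors' software computed weights in exactly this way is not stated in the text.

```python
# Saaty's random consistency indices for matrices of order 1-10.
RANDOM_INDEX = [0.0, 0.0, 0.58, 0.90, 1.12, 1.24, 1.32, 1.41, 1.45, 1.49]


def ahp_priorities(matrix, tol=1e-12, max_iter=1000):
    """Principal-eigenvector weights of a reciprocal pairwise comparison matrix.

    matrix[i][j] states how much more important criterion i is than
    criterion j, with matrix[j][i] == 1 / matrix[i][j].
    Returns (weights, lambda_max, consistency_ratio).
    """
    n = len(matrix)
    w = [1.0 / n] * n
    for _ in range(max_iter):
        # Power iteration: multiply by the matrix, then renormalize to sum 1.
        product = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
        total = sum(product)
        new_w = [x / total for x in product]
        converged = max(abs(a - b) for a, b in zip(new_w, w)) < tol
        w = new_w
        if converged:
            break
    # Estimate lambda_max as the mean of (A w)_i / w_i.
    aw = [sum(matrix[i][j] * w[j] for j in range(n)) for i in range(n)]
    lambda_max = sum(aw[i] / w[i] for i in range(n)) / n
    ci = (lambda_max - n) / (n - 1) if n > 1 else 0.0
    ri = RANDOM_INDEX[n - 1]
    cr = ci / ri if ri > 0 else 0.0
    return w, lambda_max, cr


# Hypothetical judgments of one evaluator on three criteria.
judgments = [
    [1, 3, 5],
    [1 / 3, 1, 3],
    [1 / 5, 1 / 3, 1],
]
weights, lambda_max, cr = ahp_priorities(judgments)
print([round(x, 3) for x in weights], round(lambda_max, 3), round(cr, 3))
```

A consistency ratio below 0.1 is the conventional threshold for accepting a judgment matrix; for a perfectly consistent matrix (a_ij = w_i / w_j), lambda_max equals n and the ratio is zero.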

In this paper, the expression ‘achievement in the data’ refers to the students’ self-reported academic performance, measured by their average study grades. Based on these grades, students were categorized into four achievement groups: (1) students with acceptable-quality achievements with defects, (2) students with moderate-quality achievements, (3) students with very-high-quality achievements, and (4) students with outstanding achievements. These categories served as alternatives in the multi-criteria evaluation process, enabling us to compare digital technology use across different levels of academic performance.

The results of the descriptive statistical analysis, namely, the mean agreement with the statements representing items in the factor analysis, calculated for each group of students formed according to academic performance, were the inputs for measuring the alternatives’ values with value functions. Pairwise comparisons (Saaty, 1986; Saaty & Sodenkamp, 2010) were used to collect the individual judgments of teachers dealing with students’ use of digital technology at the first and second levels of study. Individual weights were aggregated by geometric means and normalized to obtain group weights expressing the importance of each criterion. The aggregate values of alternatives obtained with the distributive mode of synthesis (Saaty & Sodenkamp, 2010; Saaty, 2008) indicate the level of digital technology use in studying for each alternative. The alternatives were ranked on the basis of these aggregate values, both for the individual levels of criteria and for all criteria together. Gradient and dynamic sensitivity analyses were performed to determine the stability of the results, and performance sensitivity analysis was performed to identify the key strengths and weaknesses of each student group in using technology in the study process.
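A minimal sketch of the additive synthesis and ranking described above, assuming that the local values come from value functions in [0, 1] and that the distributive mode normalizes each criterion's values across alternatives to sum to 1 before weighting, so that the global values also sum to 1. A three-level hierarchy can be flattened beforehand by multiplying the local weights along each path (first-level weight times second-level weight times third-level weight). The function names, criteria, and numbers are hypothetical.

```python
def distributive_synthesis(weights, local_values):
    """Global values of alternatives under the distributive mode.

    weights: {criterion: global weight} over the lowest-level criteria,
        summing to 1 (local weights multiplied along the hierarchy path).
    local_values: {alternative: {criterion: value in [0, 1]}}.
    Returns {alternative: global value}; the values sum to 1 whenever every
    criterion has at least one nonzero local value.
    """
    alternatives = list(local_values)
    result = {a: 0.0 for a in alternatives}
    for criterion, weight in weights.items():
        column_total = sum(local_values[a][criterion] for a in alternatives)
        if column_total == 0:
            continue  # every alternative scores 0: criterion cannot discriminate
        for a in alternatives:
            # Distributive mode: normalize values under this criterion to sum to 1.
            result[a] += weight * local_values[a][criterion] / column_total
    return result


def rank(global_values):
    """Alternatives ordered from rank 1 (highest aggregate value) downwards."""
    return sorted(global_values, key=global_values.get, reverse=True)


# Hypothetical example: three alternatives, two lowest-level criteria.
weights = {"c1": 0.6, "c2": 0.4}
values = {
    "A": {"c1": 1.0, "c2": 0.0},
    "B": {"c1": 0.5, "c2": 0.5},
    "C": {"c1": 0.0, "c2": 1.0},
}
global_values = distributive_synthesis(weights, values)
print(rank(global_values))  # ['A', 'B', 'C']
```

Summing to 1 is what makes the reported global values of the four student groups directly comparable as shares of the total.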

4. Results

The Kaiser–Meyer–Olkin (KMO) measures of sampling adequacy (KMO > 0.5) and the results of Bartlett’s test of sphericity (p < 0.001) indicated that factor analysis was justified for each construct. Because the selected factors explained only a low proportion of the variance of certain items (communality < 0.4), we excluded the items ‘I find AI-based tools like language translators or grammar checkers beneficial in my studies’, ‘I believe AI can help me manage my study schedule effectively by providing reminders and updates’, and ‘I trust AI’s ability to provide accurate and unbiased information’ from the construct ‘use of AI in education’. Similarly, the items ‘I find online collaboration tools easy to use’, ‘I would prefer in-person collaborations over online collaborations’, and ‘I feel comfortable expressing disagreement or offering constructive criticism in an online collaborative setting’ were excluded from the construct ‘online collaboration’. Furthermore, the items ‘I am more likely to skim through the material on an E-Board rather than reading it thoroughly’, ‘navigating through digital content on an E-Board is easier than flipping through pages of a book’, ‘I experience more eye strain when using E-Boards, which affects my study sessions’, and ‘I feel that lack of physically highlighting or annotating affects my ability to memorize material on E-Boards’ were excluded from the construct ‘using an E-Board for learning’. Finally, the item ‘I often use technology (like study apps or online resources) positively to assist in my studying’ was excluded from the construct ‘excessive use of technology’.

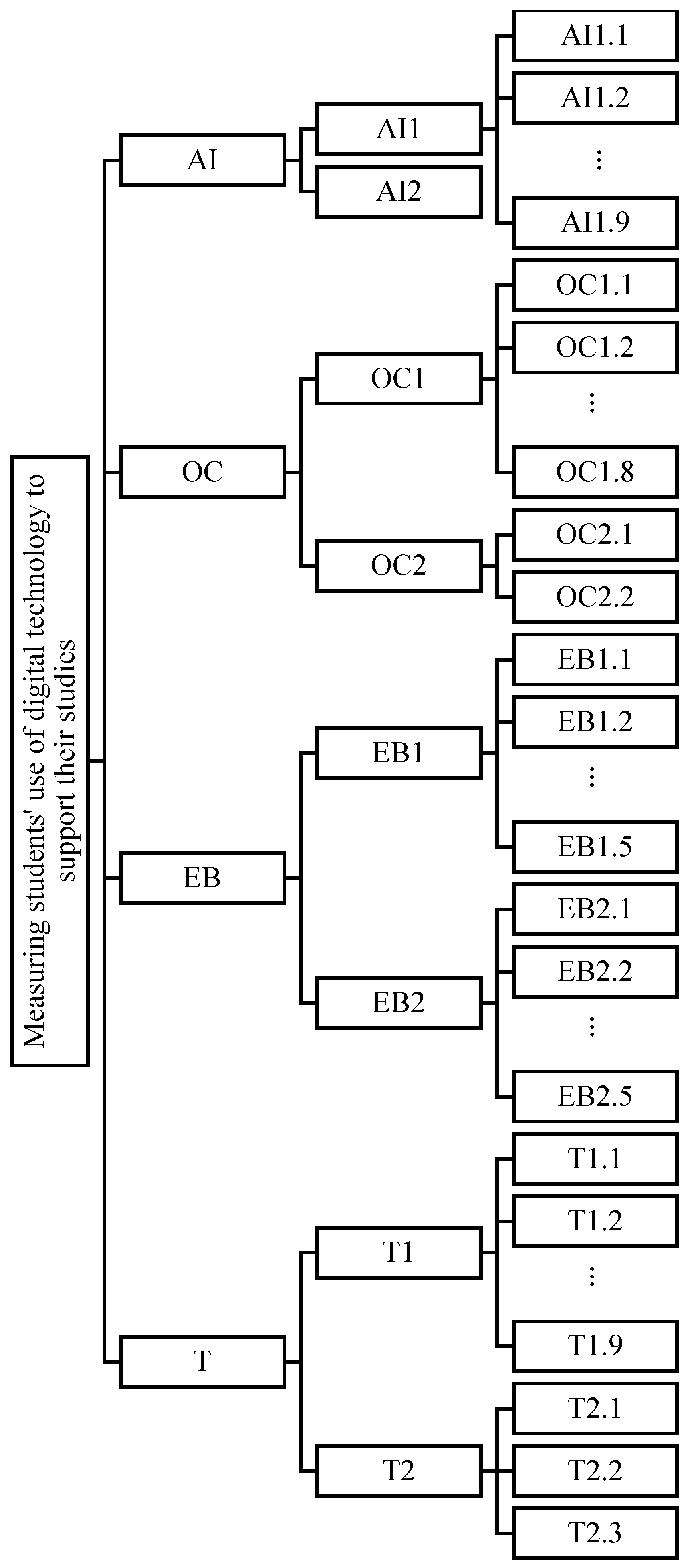

The resulting factor structures, based on the factor loadings presented in Table 1, Table 2, Table 3 and Table 4, indicate the hierarchy of criteria (Figure 1, Table 5): in the multi-criteria model, constructs represent first-level criteria and factors serve as second-level criteria, while the questionnaire items, renamed as criteria, form the third level of the criteria hierarchy. The only exception is the second-level criterion ‘AI and human interaction concerns’, which originates from the single item ‘I am concerned that AI use in education could reduce the need for human interaction’ (Table 1). To avoid unnecessary expansion of the model, this item is incorporated into the second-level criterion. Structuring the hierarchy of criteria using factor analysis is also conceptually meaningful. The factor loadings presented in Table 1, Table 2, Table 3 and Table 4 represent the strength of the relationship between each questionnaire item and the underlying latent constructs (factors) identified through exploratory factor analysis. A factor can be thought of as a hidden dimension that explains the common variance among several related items. Loadings range between −1 and 1, with values closer to ±1 indicating a stronger association between the item and the factor. In these tables, two factors were extracted for each digital tool usage dimension, labeled Factor 1 and Factor 2. These factors group together items that reflect similar patterns of responses. For example, in the dimension of AI usage in education, Factor 1 captures items related to the benefits of AI in education, while Factor 2 reflects concerns that AI may reduce human interaction. The loadings help interpret how each item contributes to the overall structure of that dimension.
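Since communality drove the item exclusions reported earlier, a short sketch shows how it follows from the loadings: with orthogonal (or unrotated) factors, an item's communality is the sum of its squared loadings across the extracted factors. The item names and loadings below are hypothetical, not values from Table 1 to Table 4; the 0.4 threshold is the one stated in the text.

```python
def communalities(loadings):
    """Communality of each item: the sum of its squared factor loadings.

    Assumes orthogonally rotated (or unrotated) factors; for oblique
    rotations the sum of squared pattern loadings is not the communality.
    """
    return {item: sum(l * l for l in row) for item, row in loadings.items()}


def low_communality(loadings, threshold=0.4):
    """Items whose communality falls below the threshold."""
    return [item for item, h2 in communalities(loadings).items() if h2 < threshold]


# Hypothetical two-factor loadings (Factor 1, Factor 2) for four items.
loadings = {
    "q1": (0.80, 0.10),
    "q2": (0.70, 0.30),
    "q3": (0.30, 0.40),
    "q4": (0.10, 0.75),
}
print(communalities(loadings))
print(low_communality(loadings))  # ['q3']
```

Item q3 loads moderately on both factors, yet the two factors together explain only 25% of its variance, which is the pattern that would lead to its exclusion.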

The scheme of the criteria hierarchy for measuring students’ use of digital technology in their studies is shown in Figure 1, with descriptions of the meaning of symbols in Table 5.

Table 5 shows the group weights of all criteria included in the model, each relative to the criterion directly above it in the hierarchy. These group weights indicate how important each criterion (i.e., each dimension of digital technology use) is in evaluating the overall performance of student groups. In multi-criteria decision-making, each criterion contributes to the final outcome according to its weight: a higher group weight means that the corresponding dimension of digital tool use has a greater influence on the evaluation of the student groups. These weights were obtained by aggregating, with the geometric mean, the individual weights of teachers dealing with students’ use of digital technology (Table A2).
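The aggregation of individual judgments into group weights can be sketched as follows, assuming each teacher's pairwise comparisons have already been converted into a weight vector (for example with the eigenvector method) in a common criterion order. The geometric mean of each criterion's weights is taken across teachers, and the means are then rescaled to sum to 1. The function name and the two teachers' weights are hypothetical.

```python
from statistics import geometric_mean


def group_weights(individual_weights):
    """Aggregate individual weight vectors into normalized group weights.

    individual_weights: one weight vector per teacher, all in the same
    criterion order and strictly positive.
    """
    per_criterion = zip(*individual_weights)  # regroup values by criterion
    means = [geometric_mean(ws) for ws in per_criterion]
    total = sum(means)
    # Normalize so that the group weights of sibling criteria sum to 1.
    return [m / total for m in means]


# Two hypothetical teachers weighting the same two criteria.
print(group_weights([[0.5, 0.5], [0.8, 0.2]]))
```

Here the geometric means are sqrt(0.4) and sqrt(0.1), so the normalized group weights are exactly 2/3 and 1/3. The geometric mean is the usual choice for AHP group aggregation because it preserves the reciprocal structure of ratio judgments.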

The group weights in Table 5 show that, concerning students’ use of digital technology to support their studies, the most important first-level criterion is ‘use of AI in education’, followed by ‘using an E-Board for learning’, ‘excessive use of technology’, and ‘online collaboration’. At the second level, ‘benefits of AI in education’ are more important than ‘AI and human interaction concerns’ within the use of AI in education. Similarly, ‘positive engagement in online collaboration’ outweighs ‘collaboration challenges’. For using an E-Board for learning, however, ‘E-Board learning challenges’ are more important than ‘traditional learning advantages’, and for excessive use of technology, ‘technology-induced study distractions’ outweigh ‘technology-use regulation effects’. At the third level, the most important benefit of AI in education is its capacity to make learning more engaging and interactive, while its capacity to personalize learning to suit students’ needs and pace was judged the least important. Within positive engagement in online collaboration, developing skills relevant to students’ future careers is the most important criterion, and the enrichment of students’ learning by the diverse perspectives shared during online collaboration is the least important. Managing time effectively during online collaborative tasks is a more important collaboration challenge than establishing personal connections with peers in an online environment. The most important E-Board learning challenge is that the ease of switching between different materials or resources on an E-Board disrupts students’ focused study time, while the effect of the lack of tactile experience with E-Boards, compared with physical books, on students’ ability to learn is judged the least important. Better memorization of material studied from physical books, the weaker promotion of in-depth reading and understanding by E-Boards compared with physical books, and the greater contribution of traditional book-based learning to retaining and recalling information, despite the benefits of technology, are judged the most important traditional learning advantages. Online content (such as videos, articles, or social media posts) diverting students’ attention from studying is the most important technology-induced study distraction, and frequent use of smartphones or computers for non-study purposes while studying is the least important. Regular use of apps or features to limit screen time during study is judged the most important technology-use regulation effect (Table 5).

Alternatives’ values with respect to each lowest-level criterion, obtained with value functions, are presented in Table 6. For each lowest-level criterion, the lower bound equals the minimum group mean and the upper bound equals the maximum group mean (Table A1 in Appendix A), which ensures greater differentiation between alternatives. To measure the supporting power of digital technology use in studying, we used increasing linear value functions for all lowest-level criteria of ‘use of AI in education’ and of ‘positive engagement in online collaboration’, a second-level criterion of ‘online collaboration’; by contrast, decreasing linear value functions were used for both lowest-level criteria of the other second-level criterion, ‘collaboration challenges’. For ‘using an E-Board for learning’, an increasing linear function was used for ‘greater difficulty in remembering information learned from physical books compared to an E-Board’, while decreasing linear value functions were employed for all other lowest-level criteria. Decreasing functions also prevailed for the lowest-level criteria of ‘excessive use of technology’, the exception being ‘regular use of apps or features to limit students’ screen time during study hours’.

Although the outstanding quality (OQ) group includes only five students, it was retained as a separate cluster because of its distinct digital tool usage profile and maximum academic achievement level (grades 9.5–10). The statistically significant differences observed in comparison with other groups, especially the very-high-quality (VHQ) group, suggest that OQ students may engage with digital learning tools in a qualitatively different and more strategic way (Table 6 and Table 7). While the small sample size is a limitation, preserving this group allows a more nuanced understanding of digital behavior patterns among the most academically successful students.
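The increasing and decreasing linear value functions used for Table 6, with bounds set to the minimum and maximum group means of each lowest-level criterion, can be sketched as below. The group labels and means are hypothetical; only the min-max bounding and the choice of direction per criterion follow the text.

```python
def linear_value(x, lower, upper, increasing=True):
    """Linear value function on [lower, upper], returning a value in [0, 1].

    increasing=True:  v(x) = (x - lower) / (upper - lower)
    increasing=False: v(x) = (upper - x) / (upper - lower)
    """
    if upper <= lower:
        raise ValueError("upper bound must exceed lower bound")
    if increasing:
        return (x - lower) / (upper - lower)
    return (upper - x) / (upper - lower)


def group_values(group_means, increasing=True):
    """Apply a linear value function to one criterion's group means, using
    the minimum and maximum group means as the bounds."""
    lower, upper = min(group_means.values()), max(group_means.values())
    return {g: linear_value(m, lower, upper, increasing) for g, m in group_means.items()}


# Hypothetical group means for one lowest-level criterion (1-5 scale).
means = {"G1": 3.2, "G2": 3.6, "G3": 4.0, "G4": 3.0}
print(group_values(means))                    # "more is better" criterion
print(group_values(means, increasing=False))  # "less is better" criterion
```

Using the observed minimum and maximum as bounds always maps the best group to 1 and the worst to 0, which maximizes differentiation between the four groups on each criterion.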

Table 7 includes the aggregate values of alternatives with respect to the global goal, as well as to the first- and second-level criteria. The ranks in Table 7 show the final position of each student group after aggregating its values across all evaluated dimensions of digital technology use and across the first- and second-level criteria. These ranks reflect the relative standing of each group in terms of how effectively and responsibly its members reported using digital tools to support their learning. The ranking is based on the alternatives’ aggregate values derived through a multi-criteria evaluation approach, in which each dimension of digital tool use was weighted (Table 5) and student group performance on the lowest-level criteria was assessed with local values (Table 6). A lower numerical rank (e.g., 1st) indicates a stronger overall digital usage profile in relation to academic success, while a higher rank (e.g., 4th) suggests less effective or less regulated use of digital technologies.

The results in Table 7 show that students who demonstrate very-high-quality achievements have the highest level of digital technology use supporting their studies, although they do not excel on any of the first-level criteria. Considering all criteria, they are followed by students who demonstrate outstanding achievements, who excel in using an E-Board for learning and in responsibility regarding excessive technology use, and then by students who demonstrate acceptable-quality achievements with defects, who excel in the use of AI in education and in online collaboration. The lowest level of digital technology use supporting their studies was found among students demonstrating moderate-quality achievements. Gradient and dynamic sensitivity analyses show that the first rank is sensitive to changes in the first-level criteria weights: if the group weight of ‘use of AI in education’ decreases by 0.066, or that of ‘excessive use of technology’ increases by 0.060 (i.e., by less than 0.1), students who demonstrate outstanding achievements replace students who demonstrate very-high-quality achievements in first place. Changes of up to 0.1 in the second- and third-level criteria weights do not change the order of the first- and second-ranked alternatives.
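Thresholds like those reported above (a weight change of 0.066 or 0.060 that swaps the first two ranks) can be located analytically under one common convention for gradient sensitivity analysis: when one first-level weight changes, the remaining weights are rescaled proportionally so that all weights still sum to 1. Under that assumption, each alternative's global value is linear in the changed weight, so the tie point solves a linear equation. The function names, weights, and values below are hypothetical and do not reproduce Table 7.

```python
def rescaled_weights(weights, k, new_weight):
    """Assign new_weight to criterion k and rescale the others proportionally
    so the weights still sum to 1 (the convention assumed in this sketch)."""
    scale = (1 - new_weight) / (1 - weights[k])
    return [new_weight if i == k else w * scale for i, w in enumerate(weights)]


def global_value(weights, values):
    """Additive global value of one alternative."""
    return sum(w * v for w, v in zip(weights, values))


def crossover_weight(weights, values_a, values_b, k):
    """Weight of criterion k at which alternatives a and b obtain equal global values.

    With proportional rescaling, the difference between the two global values is
    t * d_k + (1 - t) * d_rest, where t is the new weight of k, d_k is the value
    gap on criterion k, and d_rest is the weighted gap on the other criteria
    divided by their combined weight. Returns None if no tie lies in [0, 1].
    """
    rest = 1 - weights[k]
    if rest == 0:
        return None
    d_k = values_a[k] - values_b[k]
    d_rest = sum(
        w * (a - b)
        for i, (w, a, b) in enumerate(zip(weights, values_a, values_b))
        if i != k
    ) / rest
    if d_rest == d_k:
        return None  # the gap does not depend on the weight of k
    t = d_rest / (d_rest - d_k)
    return t if 0 <= t <= 1 else None


# Hypothetical example: two first-level criteria, two alternatives.
weights = [0.6, 0.4]
first = [0.6, 0.2]   # currently ranked first: 0.6*0.6 + 0.4*0.2 = 0.44
second = [0.3, 0.6]  # currently ranked second: 0.42
t = crossover_weight(weights, first, second, k=0)
print(round(t, 4), round(t - weights[0], 4))  # 0.5714 -0.0286
```

In this toy case, lowering the first criterion's weight by about 0.029 is enough to make the two alternatives tie; any larger decrease reverses their order.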

5. Discussion

The results allow us to answer the first research question. The highest level of digital technology use in studying (0.279) is achieved by students with very-high-quality academic performance, followed by those with outstanding academic performance (0.263), those who demonstrate acceptable-quality achievements with defects (0.241), and those with moderate-quality achievements (0.218). Together with the assigned criteria weights, these levels and ranks are further explained by the answer to the second research question. Notably, students who demonstrate very-high-quality achievements, ranked first with respect to the global goal, do not achieve the highest aggregate value on any first-level criterion, but they demonstrate the most responsible use of technology in their studies on the following second-level criteria: ‘AI and human interaction concerns’, ‘collaboration challenges’, ‘E-Board learning challenges’, and ‘technology-use regulation effects’; these students achieve the lowest aggregate value on the second-level criterion ‘traditional learning advantages’. Students who demonstrate outstanding achievements, ranked second with respect to the global goal, achieved the highest aggregate values on two first-level criteria, ‘using an E-Board for learning’ and ‘excessive use of technology’, and on the second-level criteria ‘traditional learning advantages’ and ‘technology-induced study distractions’. On the other hand, these students are ranked last on the first-level criteria ‘use of AI in education’ and ‘online collaboration’ and on all of their second-level criteria, as well as on the second-level criterion ‘technology-use regulation effects’.
By contrast, students who demonstrate acceptable-quality achievements with defects, ranked third with respect to the global goal, achieved the highest values on the first-level criteria ‘use of AI in education’ and ‘online collaboration’ and on the second-level criterion ‘positive engagement in online collaboration’, but they are ranked last on the first-level criterion ‘excessive use of technology’, on its second-level criterion ‘technology-induced study distractions’, and on the second-level criterion ‘E-Board learning challenges’. The last-ranked group with respect to the global goal, students who demonstrate moderate-quality achievements, has the highest aggregate value on only one second-level criterion, ‘benefits of AI in education’, and the lowest aggregate value on the first-level criterion ‘using an E-Board for learning’.

The findings of this study align with prior research emphasizing that digital technology integration enhances students’ academic performance, particularly when used within structured learning environments. Our results indicate that students who use digital tools in a strategic and goal-oriented manner achieve better academic outcomes, which is consistent with the conclusions of Al-Abdullatif and Gameil (2021). Their research found that perceived ease of use and perceived usefulness of digital technology positively influence students’ attitudes toward learning and their engagement, ultimately leading to better academic performance. The findings of this study highlight the diverse ways in which students engage with digital technology in their academic environment and how these interactions influence their learning outcomes. The data reveal that students with lower academic performance tend to rely more on AI tools and online collaboration platforms, while those with higher academic achievements take a more selective and responsible approach to digital technology use. These results align with previous research suggesting that unstructured and excessive technology use may negatively affect academic performance, whereas strategic and goal-oriented use can enhance learning outcomes (Navarro-Martínez & Peña-Acuña, 2022). Furthermore, our study confirms that the manner in which students engage with digital tools significantly affects their learning experience. While increased use of digital platforms does not necessarily translate into better academic performance, structured and goal-oriented use, such as critical engagement with e-learning tools and responsible technology consumption, seems to foster higher achievement levels. This observation is in line with the findings of a systematic review emphasizing that the effectiveness of ICT in education depends on the quality of its integration into pedagogical strategies rather than on its mere availability (Valverde-Berrocoso et al., 2022).

Romi (2024) found that a combination of common digital communication skills, educational problem-solving abilities, and advanced digital competencies is necessary to maximize students’ academic success. While general digital literacy, such as online communication and transaction skills, enhances academic efficiency and satisfaction, more advanced digital skills, including database management, application development, and data processing, show a stronger correlation with academic effectiveness. This suggests that merely providing students with digital tools is insufficient. Institutions must also foster strategic digital engagement that encourages problem-solving, information literacy, and critical evaluation of digital content.

A recent systematic review by Sun and Zhou (2024) highlights the dual role of AI tools in education, emphasizing their potential to enhance student engagement and provide personalized support when used in guided and structured settings. However, the authors also caution that without adequate digital literacy and pedagogical framing, students may misuse such tools as shortcut generators, leading to superficial learning and reduced metacognitive awareness. This dual perspective aligns with our findings, in which students with lower academic performance reported more frequent use of AI tools, potentially indicating a compensatory, yet ultimately counterproductive, pattern of engagement.

Recent research into digital technology use among university students has highlighted the importance of behavioral and motivational factors in shaping academic outcomes. A study by Saleem et al. (2024) showed that frequent use of digital tools does not automatically lead to improved academic performance; what matters more is how students engage with these tools. Digital resources used with clear educational goals in mind can support learning, whereas outcomes are often negative when they are used primarily as a means of distraction. The study also pointed out that multitasking and unregulated technology use tend to reduce concentration and mental stamina, which in turn impairs learning effectiveness. Moreover, students with lower intrinsic motivation were more inclined to use technology to avoid academic challenges, which led to more superficial learning. These findings underline that digital literacy involves more than technical skills: it also requires critical thinking, the ability to self-regulate, and a deliberate approach to using digital tools. Our study builds on these insights by applying a structured multi-criteria framework to explore how various forms of technology use relate to different levels of academic success.

Findings from a longitudinal study by Al-Abdullatif and Gameil (2021) further support our results by confirming that the academic benefits of digital tool use depend on consistency, structure, and clear learning intentions. Their research showed that students who engaged with learning technologies regularly and strategically performed significantly better than peers who used digital tools irregularly or without a defined learning goal. These patterns align closely with our analysis, which revealed that students with the highest academic achievement made purposeful use of AI tools and digital platforms such as E-Boards. Conversely, students with lower academic performance tended to use digital tools in an unstructured and compensatory way, reflecting a lack of intentionality similar to that described in the longitudinal profiles identified in that study.

Additional insights are offered by a recent study on university students’ perceptions of AI-powered learning (Rosaura et al., 2024), which revealed that while most students believe AI tools assist their learning, many use them passively, often without critically assessing the output or understanding how the tools function. This superficial engagement aligns with our findings among students with lower academic performance, who use AI tools frequently but without demonstrable academic benefit. Moreover, the study reported that students often rely on AI to save time or generate quick summaries rather than as a complement to deeper learning processes. This reflects a broader pattern of compensatory behavior, as also observed in our data. These results further confirm the need to distinguish between the frequency of AI tool use and meaningful, critical engagement with digital technology in an educational context.

The findings of this study provide insights into both the advantages and the limitations of digital education, shedding light on key factors that contribute to students’ academic success in a technology-driven learning environment. Understanding students’ experiences and perspectives is essential for identifying best practices in digital education and for addressing areas that require improvement. The paper addresses a gap in the literature by comparing levels of technology use, both along individual dimensions and across multiple dimensions combined, in relation to students’ demonstrated academic performance. The findings can also serve as a resource for educators, policymakers, and institutional leaders, helping them refine curriculum design, implement supportive digital policies, and invest in technological innovations aligned with students’ educational needs and learning preferences. As higher education continues to evolve in response to technological advancements, it is imperative to strike a balance between harnessing the benefits of digital learning and addressing its inherent challenges. By offering a comprehensive analysis of students’ engagement with digital tools, this study aims to inform more effective strategies for integrating technology into academic settings. Ultimately, the goal is to ensure that digital advancements enable student success, fostering deeper engagement, personalized learning experiences, and improved academic performance, rather than creating barriers to meaningful education.

These results have several practical implications for higher education institutions. For example, students who achieved the highest academic performance showed more regulated use of digital tools, especially in managing distractions and selecting learning technologies strategically. This suggests that fostering digital self-regulation and critical digital literacy may be more effective than merely increasing access to technology. Educators could integrate digital awareness modules into their courses, helping students to reflect on their technology habits and align tool use with academic goals. Moreover, the strong reliance on AI and online collaboration tools among students with lower achievement levels may indicate a need for structured guidance on how to use these technologies meaningfully, beyond convenience or surface engagement. This aligns with prior research emphasizing that the quality of technology use is more critical than the quantity.

While this study provides valuable insights into students’ use of digital technology in higher education, certain limitations may influence the interpretation and generalizability of the findings. These limitations concern primarily the scope of the sample, the methodology, and the contextual factors that may shape students’ digital learning behaviors; addressing them in future research will refine our understanding of how technology supports academic performance and reveal opportunities for optimizing digital learning environments. First, the research was conducted exclusively among students at the Faculty of Economics and Business, University of Maribor. Future studies should expand the sample to include students from other fields, such as the natural sciences, engineering, and social sciences, to provide a more comprehensive understanding of digital technology use in higher education. Second, this is a cross-sectional study that captures students’ use of digital tools at a single point in time, so it offers no insight into how technology adoption changes across different stages of academic life. A longitudinal study would allow researchers to track changes in digital learning habits across semesters or years and to understand how students adapt their use of technology over time. Third, the research design does not allow a causal relationship between digital tool use and academic performance to be established; rather, the study identifies associations and patterns among student groups with different achievement levels. A related and important limitation is the absence of a control group: the research was designed to compare levels of digital tool use among student groups with varying academic achievement, not to test the effects of a specific intervention. Future research using experimental or longitudinal designs with control groups could explore causality more directly and better assess the impact of targeted digital learning strategies. Finally, the study does not account for external factors that may influence students’ digital learning behavior, such as learning styles, prior digital literacy, socioeconomic background, or the role of instructors in guiding technology use. Future studies could incorporate these variables to explore their impact on students’ engagement with digital tools and academic performance.