4.1. Demographic Information

Table 2 summarises the demographic characteristics of the study participants. Participants were recruited from five undergraduate programs: Translation, Computing and Interactive Entertainment, New Music and Interactive Entertainment, Data Science and AI, and Computer Science. The sample consisted of 43 females (52%) and 40 males (48%). Of these students, 17% were enrolled in STEM programs and the remaining 83% in non-STEM programs. Most participants were aged 20–24 years (48%) or 19 years and younger (47%), with only 5% aged 25 or older.

Over 90% of participants reported prior experience with generative AI tools. Specifically, 69% (n = 57) indicated they had used generative AI tools at university, 5% (n = 4) had used them only in secondary school, and 18% (n = 15) reported using them in both contexts. A small minority (5%, n = 4) reported never using such tools. Regarding frequency of use, 8.4% (n = 7) reported daily use, while the largest group (43.4%, n = 36) used these tools weekly. Monthly use was reported by 25.3% (n = 21), and 22% (n = 16) reported quarterly or no use.

Participants reported using generative AI tools for a range of purposes, including finding information (n = 53), generating ideas (n = 53), translating texts (n = 52), summarising texts (n = 34), creating images, audio, or video (n = 32), drafting texts (e.g., emails, essays) (n = 20), analysing data (n = 15), asking for advice (n = 15), seeking recommendations (n = 10), and preparing for job applications or interviews (n = 7). The most commonly used tools were chatbots such as ChatGPT (n = 57) and Poe (n = 22).

4.2. Descriptive Results

Table 3 presents participants’ perceptions of the ease of use of generative AI tools. Overall, participants found generative AI tools user-friendly, with moderate to high mean scores across the three statements. The statement “Generative AI tools are easy to use” received the highest mean score (3.91, SD = 0.94). Agreement was slightly lower for the statement about learning how to use these tools (mean = 3.87, SD = 0.96) and noticeably lower for applying them to academic tasks (mean = 3.29, SD = 1.00). These results suggest that although students found the tools intuitive, applying them in academic contexts may require additional effort.

Table 4 highlights participants’ understanding of the limitations of generative AI tools. Mean scores ranged from moderate to high (3.28 to 4.39), with the highest-rated limitation being the potential for inaccurate outputs (mean = 4.39, SD = 0.66). Participants also acknowledged limitations such as the generation of irrelevant outputs (mean = 3.94, SD = 0.85) and overreliance on statistical data, which may limit usefulness in novel contexts (mean = 3.80, SD = 0.84). Agreement was lower, however, for limitations such as bias in outputs (mean = 3.34, SD = 0.93) and the tools’ lack of emotional intelligence (mean = 3.28, SD = 1.06).

Table 5 outlines participants’ attitudes toward the benefits of generative AI tools. Responses were generally positive, with the highest-rated statement being “Generative AI tools provide students with additional learning support” (mean = 4.10, SD = 0.67). Other highly rated benefits included the tools’ usefulness for study purposes (mean = 3.95, SD = 0.70) and their ability to complement students’ learning needs (mean = 3.80, SD = 0.74). By contrast, the statement “Generative AI tools clarify many points that teachers cannot cover in their explanation” received a lower score (mean = 3.41, SD = 0.91), suggesting that students viewed these tools as supplementary aids rather than replacements for traditional teaching methods.

Table 6 illustrates participants’ perceptions of potential concerns associated with generative AI use. Overall, concerns were rated relatively low, with an average score of 2.51 across all statements. Participants did not perceive substantial risks to their development of transferable skills (mean = 2.51, SD = 1.07), their ability to interact with peers (mean = 2.41, SD = 1.02), or their communication with teachers (mean = 2.41, SD = 1.10). Similarly, concerns about over-reliance on AI tools (mean = 2.51, SD = 1.09) and the diminishing role of teachers (mean = 2.43, SD = 1.11) were rated low. These findings suggest that students view generative AI tools as complementary aids rather than disruptive forces in traditional education.

Table 7 highlights participants’ perceptions regarding the impact of generative AI on the future of jobs. The two statements “Generative AI technology steals people’s jobs” (mean = 3.10, SD = 1.11) and “Generative AI technology will make it harder for me to find a job after graduation” (mean = 3.25, SD = 1.03) indicate a moderate level of agreement among respondents. The results suggest that students are somewhat concerned about the potential challenges generative AI may pose to their post-graduation employment prospects.

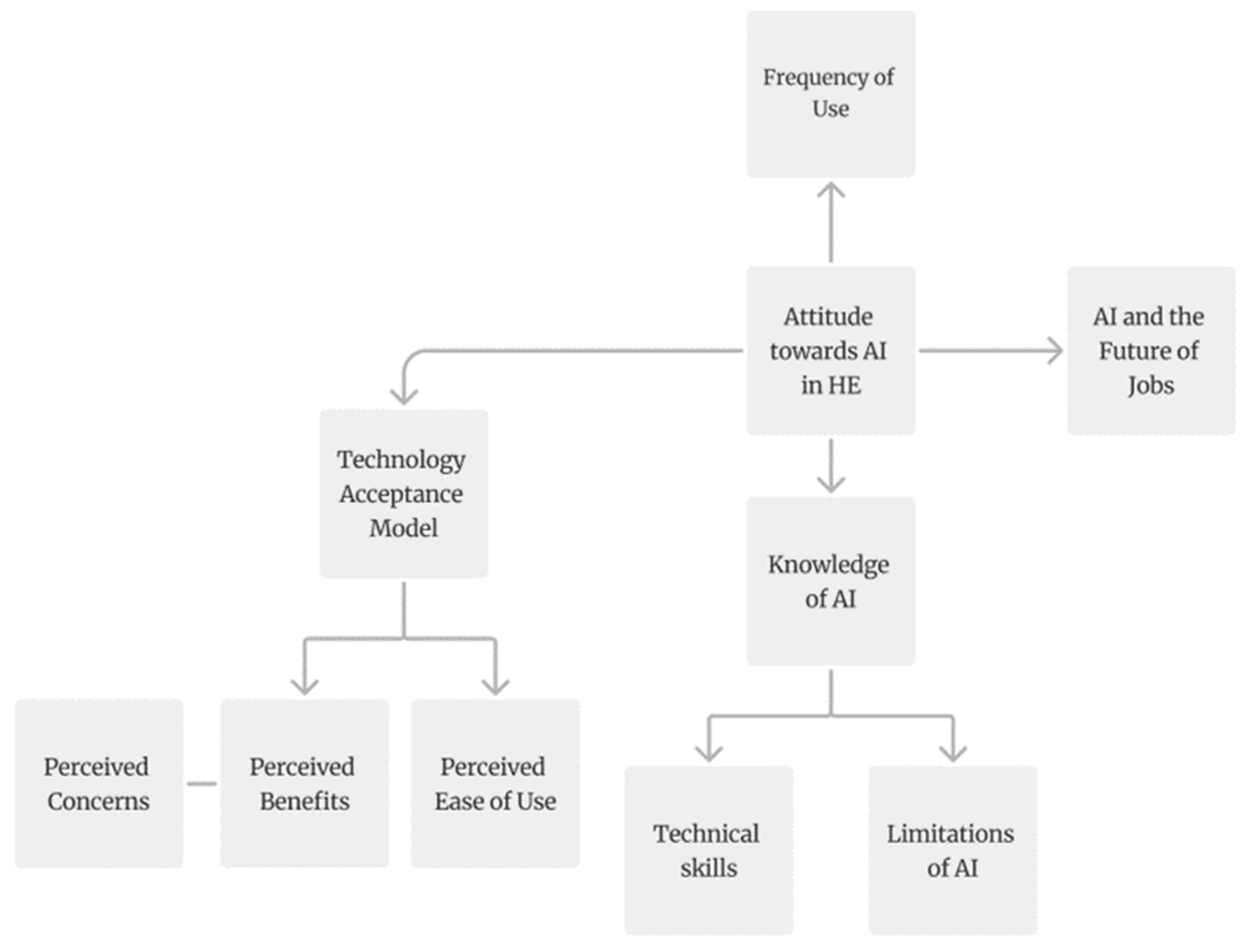

4.3. Regression Analysis

To examine the relationships between student attitudes toward generative AI tools and their reported frequency of use, multiple regression analyses were conducted. Diagnostic tests were performed to check the regression assumptions. Variance inflation factor (VIF) values ranged from 1.05 to 1.66, indicating no issues with multicollinearity. The Durbin–Watson statistic was 1.63, suggesting no substantial autocorrelation among the residuals. Visual inspection of residual plots indicated no marked departures from linearity, homoscedasticity, or normality.
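The two diagnostics mentioned above follow standard definitions: each predictor's VIF is 1 / (1 − R²) from regressing that predictor on the other predictors, and the Durbin–Watson statistic is the sum of squared successive differences of the residuals divided by their sum of squares (values near 2 indicate little first-order autocorrelation). The sketch below is illustrative only: it assumes the subscale scores are held in a NumPy array with one column per predictor, the function names are hypothetical, and the data in the demonstration are simulated rather than taken from this study.

```python
import numpy as np


def variance_inflation_factors(X: np.ndarray) -> list[float]:
    """Return the VIF of each column of X (n observations x k predictors).

    Each predictor is regressed, with an intercept, on all other predictors;
    VIF_j = 1 / (1 - R_j^2), where R_j^2 is the R^2 of that auxiliary fit.
    """
    n, k = X.shape
    vifs = []
    for j in range(k):
        target = X[:, j]
        others = np.delete(X, j, axis=1)
        design = np.column_stack([np.ones(n), others])  # intercept + other predictors
        coef, *_ = np.linalg.lstsq(design, target, rcond=None)
        resid = target - design @ coef
        ss_res = float(resid @ resid)
        ss_tot = float(((target - target.mean()) ** 2).sum())
        r2 = 1.0 - ss_res / ss_tot
        vifs.append(1.0 / (1.0 - r2))
    return vifs


def durbin_watson(residuals: np.ndarray) -> float:
    """Sum of squared successive residual differences over the residual sum of squares."""
    diffs = np.diff(residuals)
    return float(diffs @ diffs) / float(residuals @ residuals)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    X = rng.normal(size=(83, 4))  # simulated scores for 4 hypothetical predictors, n = 83
    y = X @ np.array([0.3, 0.0, -0.2, 0.1]) + rng.normal(size=83)
    design = np.column_stack([np.ones(83), X])
    coef, *_ = np.linalg.lstsq(design, y, rcond=None)
    print("VIF:", np.round(variance_inflation_factors(X), 2))
    print("Durbin-Watson:", round(durbin_watson(y - design @ coef), 2))
```

Because the simulated predictors are independent, their VIFs should sit close to 1; the reported range of 1.05 to 1.66 is likewise well below commonly used cut-offs for problematic multicollinearity.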

A multiple regression analysis was conducted to examine the relationship between the subscales and students’ reported frequency of use of generative AI tools. The model was statistically significant, F(4, 78) = 5.89, p < 0.001, R² = 0.23, indicating that the predictors collectively explained 23% of the variance in frequency of use. The regression coefficients are presented in Table 8.
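With n = 83 participants and k predictors, the overall F statistic and R² are linked by F = (R²/k) / ((1 − R²)/(n − k − 1)), so either value can be recovered from the other. The short sketch below, with illustrative function names, checks the four-predictor model (k = 4, residual df = 83 − 4 − 1 = 78) against the reported values.

```python
def r2_from_f(f: float, df1: int, df2: int) -> float:
    """R^2 implied by an overall F statistic with (df1, df2) degrees of freedom."""
    return f * df1 / (f * df1 + df2)


def f_from_r2(r2: float, df1: int, df2: int) -> float:
    """Overall F statistic implied by R^2 with (df1, df2) degrees of freedom."""
    return (r2 / df1) / ((1.0 - r2) / df2)


# Four-predictor model reported above: F(4, 78) = 5.89, R^2 = 0.23.
print(round(r2_from_f(5.89, 4, 78), 3))  # 0.232
print(round(f_from_r2(0.23, 4, 78), 2))  # 5.82
```

Recovering R² from F gives about 0.232, which rounds to the reported 0.23. Going the other way from the rounded R² = 0.23 gives F ≈ 5.82 rather than 5.89, a small gap that reflects R² being reported to two decimals.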

To explore the multifaceted nature of student attitudes toward generative AI in education and the future of work, additional regression models using subsets of the subscales as predictors were fitted to examine the relationships among the subscales and the outcome measures.

A regression analysis was conducted to test the relationship between perceived ease of use, perceived benefits, and frequency of use. The resulting model was statistically significant, F(2, 80) = 9.83, p < 0.001, with a multiple correlation of R = 0.44 and a coefficient of determination of R² = 0.20, indicating that 20% of the variance in frequency of use was explained by the predictors. However, the individual coefficients showed a mixed pattern, as shown in Table 9.

The analysis revealed that perceived benefits significantly predicted frequency of use (β = −0.29, t(80) = −2.54, p = 0.01), while perceived ease of use did not reach the significance threshold (β = −0.22, t(80) = −1.95, p = 0.06).

While previous studies, such as Rožman et al. (2023) and Yao et al. (2024), have demonstrated that perceived ease of use positively influences technology adoption, the findings of this study suggest that perceived benefits play a stronger role in predicting frequency of use.

A regression analysis was conducted to test the relationship between knowledge of AI and frequency of use. The resulting model was not statistically significant, F(1, 81) = 1.26, p = 0.27, with a coefficient of determination of R² = 0.02, indicating that only 2% of the variance in frequency of use was explained by the predictor. The regression coefficients are presented in Table 10.

The analysis revealed that knowledge of AI did not significantly predict frequency of use (β = −0.12, t(81) = −1.12, p = 0.27). Participants demonstrated a moderate level of literacy regarding AI limitations, with a mean score of 3.61 (SD = 0.43). However, the non-significant result suggests that, in this sample, knowledge of AI limitations was not associated with usage frequency.
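When a model has a single predictor, its reported statistics are tied together: the slope's t statistic uses the residual degrees of freedom n − 2 = 81, t² equals the model F, and the standardized β equals the Pearson correlation r, so |β| = √R². The sketch below uses an illustrative function name and assumes the reported β is a standardized coefficient; it checks these identities against the knowledge-of-AI model. Signs cannot be recovered from F, so only magnitudes are compared.

```python
import math


def single_predictor_identities(f_stat: float, df_resid: int) -> tuple[float, float, float]:
    """For a regression with exactly one predictor, return (R^2, |t|, |beta|).

    R^2 = F / (F + df_resid); the slope's t statistic satisfies t^2 = F on
    df_resid degrees of freedom; the standardized slope equals r, so
    |beta| = sqrt(R^2).
    """
    r2 = f_stat / (f_stat + df_resid)
    return r2, math.sqrt(f_stat), math.sqrt(r2)


# Knowledge-of-AI model reported above: F(1, 81) = 1.26 with n = 83.
r2, t_abs, beta_abs = single_predictor_identities(1.26, 81)
print(round(r2, 3), round(t_abs, 2), round(beta_abs, 2))  # 0.015 1.12 0.12
```

The recovered |t| = 1.12 and |β| = 0.12 match the reported coefficient, and R² ≈ 0.015 rounds to the reported 0.02.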

The literature on the relationship between AI literacy and usage frequency is mixed. Acosta-Enriquez et al. (2024) found no significant relationship between these variables among Generation Z university students, whereas C. K. Y. Chan and Hu (2023) reported a positive correlation between AI literacy and AI adoption among Hong Kong university students. However, C. K. Y. Chan and Hu (2023) did not specifically examine knowledge of AI limitations. The findings of the current study align more closely with those of Acosta-Enriquez et al. (2024), as no significant relationship was observed.

Relationship between Perceived Ease of Use, Perceived Benefits, Perceived Concerns, and Frequency of Use

A multiple regression analysis was conducted to predict frequency of use based on perceived ease of use, perceived benefits, and perceived concerns. The model was statistically significant, F(3, 79) = 7.95, p < 0.001, R² = 0.23, indicating that the predictors collectively explained 23% of the variance in frequency of use.

Table 11 presents the regression coefficients, which indicate that perceived ease of use (β = −0.25, p = 0.03) and perceived benefits (β = −0.24, p = 0.04) were significant predictors of frequency of use. Perceived concerns, by contrast, did not significantly predict frequency of use (β = 0.19, p = 0.06). Given this borderline p-value, perceived concerns cannot be confidently interpreted as influential in this model.
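The β values in this section appear to be standardized coefficients (the conventional meaning of the symbol), which express each predictor's association with the outcome in standard-deviation units so that subscales can be compared directly. Assuming that reading, a minimal sketch of how such coefficients are obtained is to z-score the outcome and every predictor and then fit ordinary least squares. The function name is hypothetical and the demonstration data are simulated, not the study's responses.

```python
import numpy as np


def standardized_betas(X: np.ndarray, y: np.ndarray) -> np.ndarray:
    """Standardized OLS coefficients for predictors X (n x k) and outcome y (n,).

    All variables are z-scored (sample SD, ddof=1). Centred data give a fitted
    intercept of exactly zero, so the intercept column is omitted.
    """
    Xz = (X - X.mean(axis=0)) / X.std(axis=0, ddof=1)
    yz = (y - y.mean()) / y.std(ddof=1)
    coef, *_ = np.linalg.lstsq(Xz, yz, rcond=None)
    return coef


if __name__ == "__main__":
    rng = np.random.default_rng(1)
    X = rng.normal(size=(83, 3))  # simulated scores for three hypothetical predictors
    y = X @ np.array([-0.3, -0.3, 0.2]) + rng.normal(size=83)
    print(np.round(standardized_betas(X, y), 2))
```

Because standardization only rescales the variables, each standardized β equals the raw slope multiplied by s_x / s_y, so its sign and significance test are the same as those of the unstandardized coefficient.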

The impact of perceived risk and trust on the adoption of technology has been extensively studied. For example, Yao et al. (2024) found that 43.2% of the variance in trust in mobility technologies could be explained by perceived risk and belief. Similarly, Acosta-Enriquez et al. (2024) noted that while students perceived large language models as valuable, they also expressed concerns about potential shortcomings, such as limited accuracy, misinformation, and over-dependency. In a study of U.S. college students’ adoption of ChatGPT, Baek et al. (2024) highlighted themes of pragmatism, optimism, and collaboration among those who expressed “no concerns” about AI.

Perceived concerns did not emerge as a significant predictor of frequency of use; instead, perceived ease of use and perceived benefits were more influential. These findings align with prior research emphasising the importance of perceived usefulness in technology adoption (Noh et al., 2021). While the original TAM framework prioritises positive perceptions (e.g., usefulness and ease of use), this study suggests that negative perceptions (e.g., concerns) play a limited role in the adoption of generative AI.

Relationship between Perceived Concerns about Generative AI in Education and Perceptions of Its Role in the Workplace

Two regression analyses were conducted to examine the relationship between perceived concerns and two dependent variables: (1) the belief that generative AI will steal jobs ([R22]; Table 12) and (2) the belief that generative AI will negatively impact individual employment prospects ([R23]; Table 13).

The first regression model predicting [R22] was statistically significant, F(1, 81) = 5.97, p = 0.02, R² = 0.07, indicating that perceived concerns explained 7% of the variance in the belief that generative AI will steal jobs (Table 12). The regression coefficient for perceived concerns was significant (β = 0.26, p = 0.02), suggesting that students who expressed greater concerns about generative AI were more likely to believe that these technologies would lead to job displacement.

The second regression model predicting [R23] was not statistically significant, F(1, 81) = 1.17, p = 0.28, R² = 0.01, indicating that perceived concerns explained only 1% of the variance in the belief that generative AI will negatively impact individual employment prospects (Table 13). The regression coefficient for perceived concerns was not significant (β = 0.12, p = 0.28), suggesting that concerns about generative AI were not strongly associated with students’ views of their own employment prospects.

While perceived concerns significantly predicted the belief that generative AI will steal jobs ([R22]), they did not significantly predict the belief that generative AI will negatively impact individual employment prospects ([R23]). This pattern is consistent with prior studies highlighting nuanced perspectives on AI’s impact on the job market. For example, Vicsek et al. (2022) found that students often differentiate between AI’s collective impact on the labour market and their personal employment prospects. Similarly, Dekker et al. (2017) noted that fears of automation were more pronounced among individuals in manual labour roles or with lower educational credentials, which may partly explain why university students separate societal risks from personal ones. Thus, while concerns about generative AI are associated with beliefs about job displacement at a societal level, they appear less relevant to students’ personal employment outlooks.