Abstract

This study, grounded in constructivist theory, presents an innovative artificial intelligence (AI) course framework for undergraduate students from the School of Artificial Intelligence at Anhui University. This research focuses on students’ perceptions and explores the impact of blogs as a platform for assignment submission in an AI course. Data are collected using structured questionnaires and analyzed quantitatively using partial least squares structural equation modeling (PLS-SEM). Additionally, interviews are conducted to provide nuanced insights and contextual explanations that supplement the quantitative findings. This study examines the specific impacts of blogs on students’ learning abilities, learning experiences, academic outcomes, and overall satisfaction within the AI course. By using blogs as pedagogical tools, this study highlights their potential to transform traditional teaching methods and promote active learning and knowledge sharing in higher education. The proposed course framework also offers a scalable model that can be adapted for other science and engineering disciplines in colleges and universities.

1. Introduction

As artificial intelligence (AI) continues to evolve rapidly (Chaudhry & Kazim, 2022; Winkler et al., 2020), numerous universities in China have responded by establishing dedicated AI schools. For example, the University of the Chinese Academy of Sciences launched the country’s first AI school in 2017, followed by Nanjing University in 2018 and several others thereafter. This expansion reflects a growing trend among universities to integrate AI courses into their curricula to meet the increasing demand for expertise in the field. However, designing effective AI courses for these programs remains a significant challenge.

First, the dynamic and forward-looking nature of AI content places considerable demands on course designers (De Veaux et al., 2017). Second, AI, as an engineering discipline, requires robust integration of theory and practice, which complicates teaching (Mosly, 2024). For instance, AI plays a pivotal role in autonomous driving, where deep learning models process real-time sensor data to make driving decisions (Goodfellow et al., 2016). Similarly, in intelligent manufacturing, AI-powered robots and computer vision systems optimize production efficiency and ensure quality control (Licardo et al., 2024). These engineering applications underscore the importance of combining theoretical AI concepts with hands-on practice, which presents unique challenges for AI course designers.

These challenges are particularly evident in Chinese universities, where students often struggle with self-regulated learning and self-management (Zhu et al., 2016). Although undergraduates typically have foundational knowledge of subjects such as linear algebra and C programming, their practical skills frequently fall short, which adds another layer of complexity to designing AI courses that are both effective and accessible (Zhang et al., 2020).

Against this background, blogs, as communication tools of the digital age, offer a promising way to address these challenges. Blogs provide a unique platform for idea sharing and interaction (Nardi et al., 2004), and their use is widespread among engineers and professionals (Efimova & Grudin, 2007). Research has shown that blogs can enhance students’ learning perceptions (Garcia et al., 2019), improve learning outcomes (Halic et al., 2010), and promote knowledge sharing (Li et al., 2013). Given these benefits, this study uses a Natural Language Processing (NLP) course from the School of AI at Anhui University (AU-AI School) as a case study to design and implement an innovative AI course in which blogs are the primary platform for assignment submission. In these blog assignments, students complete their coursework by writing blog posts, which lets them express their ideas and understanding more flexibly. The central question of this study is whether blog assignments benefit learning in AI courses.

The choice of the NLP course for this study is strategic. The core curriculum of the AI program at AU-AI School spans a wide range of areas, including Introduction to AI, Machine Learning, Data Structures and Algorithms, Computer Vision, and NLP, which collectively help students build a solid technical foundation and strengthen their engineering practice capabilities. Among these courses, NLP holds a particularly significant position: it equips students with key language-processing technologies and emphasizes both the ability to solve complex engineering problems and research skills.

This study focuses on undergraduate students enrolled in the AI program at AU-AI School. A mixed-methods approach combining surveys and interviews is used to explore the impact of blogs on students’ learning abilities, experiences, outcomes, and overall satisfaction. The two methods are integrated by using the qualitative findings to interpret and elaborate on the quantitative results: the survey data provide measurable outcomes, whereas the interview responses help explain the reasons behind them, yielding a deeper and more comprehensive understanding of the findings.

This study is guided by the following questions: How do blogs influence students’ learning abilities and experiences in an AI course? What effects do blogs have on students’ learning outcomes in AI courses? To what extent are students satisfied with blogs as a platform for assignment submission? What underlying mechanisms explain the impact of blogs on students’ learning in science and engineering courses? Ultimately, this study seeks to determine whether blogs have a significant effect on learning outcomes and to explore the mechanisms behind this effect, offering insights for the design of science and engineering curricula.

2. Literature Review

2.1. Blog-Integrated Teaching

Blogs have been increasingly integrated into teaching and learning in modern higher education (Deng & Yuen, 2011). A growing body of research suggests that blogs have significant potential to enhance student learning outcomes. For instance, Ducate, Lomicka, and Churchill (Churchill, 2009; Halic et al., 2010) pointed out that blog writing enhances students’ cognitive and perceptual learning abilities in various contexts. Blogs not only promote interaction among students but also strengthen communication and cooperation within groups, thereby facilitating the social construction of knowledge (Leslie & Murphy, 2008). Studies have demonstrated that blog activities can significantly improve learning outcomes (Brott, 2023; Shim & Guo, 2009; Xie et al., 2008). Meirbekov et al. (2024) also found that Instagram, TikTok, educational online blogs, and online courses, when integrated with traditional academic education, can enhance students’ academic performance and boost their motivation to learn languages. The evidence is not uniformly positive, however: Xie et al. (2008), who examined the impact of blog activities on students’ reflective thinking, found that peer feedback did not provide the expected support for learning.

Building on this work, our research draws on key concepts from the existing literature on student autonomy, reflective thinking, and self-assessment. For example, Alalwan (2022) argued that social media (including blogs) can significantly increase student engagement and enhance learning experiences, particularly when these platforms promote active, interactive learning. Ansari and Khan (2020) emphasized the important role of social media in facilitating collaborative learning, showing from a constructivist perspective how digital tools enable students to engage in shared learning activities. Social learning theory likewise suggests that integrating social media can promote collaboration and knowledge sharing, further enriching students’ educational experiences (Hamadi et al., 2022).

This study primarily investigates whether blogs, as online communities, can influence student learning in AI courses, and in STEM education more broadly, and what distinctive roles they might play. Drawing on constructivist theory (Jonassen & Rohrer-Murphy, 1999) and on students’ prior experiences, we create “contexts” (Anderson, 1984) in which a cognitive framework is built through “collaboration” and “communication”, with the aim of accelerating sense construction and meaning making at both the knowledge and community levels (Bereiter & Scardamalia, 2006). This approach is intended to strengthen students’ problem-solving abilities and provide a more comprehensive learning experience.

2.2. Constructivist Curriculum

Constructivism is a learner-centered cognitive theoretical framework that views knowledge construction as an active process. Learners integrate new information with prior experience through interaction and reflection, thereby constructing meaning rather than passively receiving knowledge (Fosnot, 2013). For instance, in a science classroom, students might run hands-on experiments or hold group discussions to explore scientific concepts instead of simply receiving answers through lectures. This reflects the view that learning is an active, participatory process in which students construct their understanding through practical engagement. The theory is grounded in the foundational work of Piaget (2005) and Vygotsky (1978), who approached it from different perspectives. Piaget emphasized individual cognitive development and proposed that assimilation and accommodation dynamically adjust cognitive structures; in his view, learners actively build new cognitive structures by integrating new information with prior knowledge, which allows them to adapt their thinking to new experiences. In contrast, Vygotsky focused on sociocultural factors and introduced the “zone of proximal development” (ZPD). The ZPD highlights the importance of social interaction in learning: with the help of a more knowledgeable other, such as a teacher or peer, learners can tackle tasks that they cannot yet perform independently.

In educational settings, constructivism encourages learners to actively build their understanding through methods such as group discussions, experiments, and problem-solving tasks rather than relying on rote memorization or passive listening. By engaging with the content in this way, students become more involved in their learning process, connecting new knowledge with their existing experiences. This active engagement leads to deeper understanding and more meaningful retention of information.

2.2.1. Key Dimensions of Knowledge Construction

Autonomous learning and collaborative exploration: Constructivism emphasizes the combination of autonomous learning and collaborative exploration. Problem-Based Learning (PBL) and Project-Based Learning (PjBL) enable students to understand theoretical knowledge in practice while developing higher-order cognitive and critical thinking skills (Fosnot, 2013). These dynamic learning models effectively enhance problem-solving abilities.

Enhancing motivation and behavior: Constructivist teaching strategies significantly improve students’ learning motivation, self-confidence, and classroom engagement. Designing open-ended questions and real-life scenarios stimulates learners’ curiosity and strengthens their ability to solve real-world problems (Alt, 2016).

Personalized and collaborative learning: Constructivist methods supported by technology can tailor learning paths for individual students, while promoting group collaboration and team-building skills. This approach balances personalization and collaboration, thereby creating opportunities for students with diverse learning styles (Charania et al., 2021).

Formative assessment and feedback: Formative assessments, such as learning portfolios and performance tasks, help students reflect on their learning process. Teachers provide timely feedback, aiding students in optimizing learning strategies and achieving their objectives (Black & Wiliam, 2010).

2.2.2. Integration of Constructivism and Technology

With advances in educational technology, the applications of constructivism have also expanded, and technology-enhanced constructivism has become a popular research topic in recent years. T. Yang and Zeng (2022) explored how virtual reality (VR) and AI can support personalized learning paths, enhancing learners’ immersion and collaborative efficiency. Interdisciplinary module designs, such as those integrating biology, chemistry, and physics, have demonstrated the advantages of constructivism in promoting knowledge transfer and integration (Gouvea et al., 2013).

2.2.3. Application of PjBL

PjBL demonstrates the practical value of constructivism in STEM education. Moreno and Mayer (2007) found that when tasks are designed around real-world problems and team collaboration, students not only apply theoretical knowledge in complex situations but also develop innovative and practical skills. This approach is particularly effective in facilitating knowledge construction during problem-solving.

In conclusion, constructivism provides both a theoretical framework and practical learning strategies. Its integration with modern technologies and teaching methods supports students in achieving deep learning and personalized development, and this synergy between theory and practice offers rich insights for educational innovation.

3. Hypotheses Development

3.1. NLP Course as a Representative

The NLP course, coded ZH52404/05, is a crucial core course within the AI program curriculum at AU-AI School, encompassing 36 h of theory instruction and 24 h of laboratory instruction. NLP plays a central role in the field of AI and serves as a bridge between computational technology and human-language processing.

The primary objective of the NLP course is to give students a comprehensive understanding of the fundamental principles, core techniques, and practical applications of NLP. This includes grasping essential concepts such as language modeling, lexical analysis, syntactic analysis, and semantic analysis, and mastering core techniques such as information retrieval, text classification, sentiment analysis, and machine translation. The course also emphasizes applying these skills through hands-on projects and case studies, with the aim of strengthening students’ ability to solve real-world problems using NLP technologies.

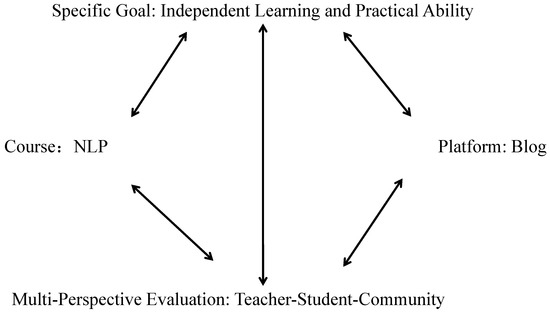

This study starts from the fundamental principles of constructivism and uses them as the primary guiding principle for organizing course elements into a novel course design. As illustrated in Figure 1, the teacher, guided by constructivist learning theory, designs an NLP course that treats students as active constructors of knowledge. The course creates realistic problem contexts, such as determining nationality from names on social platforms or enabling barrier-free communication through machine translation on international exchange platforms. The blog platform extends learning activities beyond the classroom, allowing students to connect with learners, teachers, and scholars from other schools and thus forming a remote, collaborative learning community. The interactive nature of the blog platform also facilitates assessment of students’ learning processes and outcomes. This evaluation system includes teacher–student interactions as well as peer exchanges and feedback from other learners and experts. Through this multifaceted assessment system and technology-supported learning environment, students can construct and apply knowledge in real-world contexts while further developing their skills in autonomous, collaborative, and reflective learning.

Figure 1.

Components of course design.

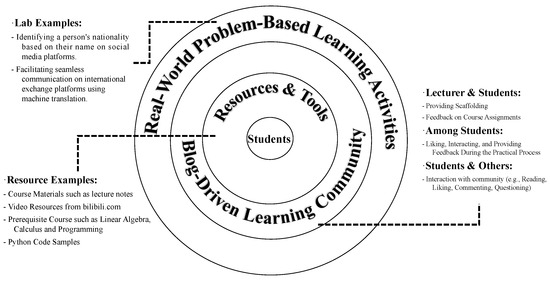

As illustrated in Figure 2, the teacher employs a student-centered teaching strategy and provides students with a rich array of learning materials. These materials include NLP course resources; video resources from the Bilibili platform; preparatory materials on linear algebra, calculus, and programming; and Python code developed by the teacher. These resources are designed to support students in constructing the cognitive dimensions of knowledge and serve as instructional scaffolding.

Figure 2.

Framework of course design.

Building on these resources, the teacher creates real-world scenarios, such as identifying nationality from names on social platforms and using machine translation to achieve barrier-free communication on international exchange platforms. In this context, students are encouraged to publish their work in a blog community. Feedback on course assignments, such as comments, likes, exchanges, and evaluations between teachers and students and among students themselves, facilitates mutual learning and progress. In addition, feedback from experts and non-experts, including reading, liking, commenting on, and questioning assignments, draws students’ attention to their work and prompts reflection, thereby enhancing metacognitive abilities. This in turn contributes to improving students’ practical skills.

3.2. Research Questions

Existing research has demonstrated that blogs can significantly enhance various aspects of student learning, including abilities, experiences, outcomes, and overall satisfaction. Specifically, studies have shown that blogs help students develop a deeper understanding of course materials, foster autonomous learning, promote critical thinking, and improve overall learning efficiency (Chu et al., 2012; Halic et al., 2010). In addition, students often report positive experiences when using blogs, including increased engagement, better comprehension of the material, and a heightened sense of achievement (Ellison & Wu, 2008; Williams & Jacobs, 2004; Xie et al., 2008). Moreover, blogs have been found to contribute to improved learning outcomes, such as higher grades and greater satisfaction, which is attributed to the interactive and flexible nature of the platform (Ferdig & Trammell, 2005; S.-H. Yang, 2009).

From a practical perspective, the use of blogs in AI education can be strengthened by incorporating evaluative and interactive elements. Blog assignments can be assessed on multiple criteria, such as content quality, depth of reflection, and student engagement, with engagement tracked through behaviors such as comments, likes, and shares. These interactions provide insight into students’ levels of engagement and understanding, which can inform the grading process. Widely used platforms such as CSDN, CNBlogs, and GitHub are recommended because they are commonly used in China and offer features that support meaningful interaction and assessment in educational settings.
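One way to make this multi-criteria assessment concrete is a weighted rubric. The Python sketch below is a hypothetical illustration only: the weights, the 0 to 100 instructor ratings, the double weighting of comments, and the engagement cap are all assumptions, not values used in the course.

```python
from dataclasses import dataclass

# Hypothetical rubric weights (assumption): the study reports no weighting scheme.
WEIGHTS = {"content": 0.5, "reflection": 0.3, "engagement": 0.2}
# Hypothetical cap (assumption): interactions beyond this count earn no extra credit.
ENGAGEMENT_CAP = 20


@dataclass
class BlogPost:
    content: float     # instructor rating of content quality, 0 to 100
    reflection: float  # instructor rating of depth of reflection, 0 to 100
    comments: int      # interaction counts taken from the blog platform
    likes: int
    shares: int


def engagement_score(post: BlogPost) -> float:
    """Map raw interaction counts onto 0-100, saturating at ENGAGEMENT_CAP."""
    # Comments count double here (assumption): they signal dialogue,
    # whereas likes and shares are lighter-weight signals.
    raw = 2 * post.comments + post.likes + post.shares
    return 100.0 * min(raw, ENGAGEMENT_CAP) / ENGAGEMENT_CAP


def rubric_score(post: BlogPost) -> float:
    """Weighted combination of the three criteria on a 0-100 scale."""
    return (WEIGHTS["content"] * post.content
            + WEIGHTS["reflection"] * post.reflection
            + WEIGHTS["engagement"] * engagement_score(post))


post = BlogPost(content=85, reflection=70, comments=4, likes=6, shares=1)
print(round(rubric_score(post), 1))  # 78.5
```

Capping engagement keeps a viral post from outweighing weak content; an instructor could tune the cap and weights to the course.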

Building on these findings, the following research questions are formulated to guide this study:

- (1) How do blogs influence students’ learning abilities and experiences in AI courses?

- (2) What impact do blogs have on students’ learning outcomes in AI courses?

- (3) How satisfied are students with blogs as a platform for assignment submission?

- (4) What underlying mechanisms explain the impact of blogs on students’ learning in science and engineering courses?

These questions aim to explore the effects of blogs on students’ learning processes and to uncover the underlying mechanisms through which these effects occur. Ultimately, the goal is to generate insights that can inform the design of effective science and engineering curricula.

Based on insights derived from the literature, we propose the following hypotheses:

Hypothesis 1.

AI course design based on a blog platform enhances students’ learning abilities, including (1) faster mastery of cutting-edge technologies and knowledge, (2) improvement in autonomous learning capabilities, and (3) enhancement of programming skills.

Hypothesis 2.

An AI course design based on a blog platform provides students with a positive learning experience, including (1) increased interest in the course, (2) better understanding of the material, and (3) a sense of achievement.

Hypothesis 3.

An AI course design based on a blog platform positively impacts course implementation, including (1) students’ self-reported course effectiveness, (2) performance in theoretical course assessments, and (3) performance in practical course assessments.

Hypothesis 4.

AI course design based on a blog platform leads to high student satisfaction, including (1) satisfaction with course resources, (2) satisfaction with the course programming source code, (3) satisfaction with course assignments, (4) satisfaction with course outcomes, and (5) satisfaction with course difficulty.

4. Methods

4.1. Lecture Details

A total of 245 students from the same cohort (class of 2021) were enrolled in the AI program; they were in their third year of college when the course was taught. The NLP course is mandatory for all students and requires full participation.

Owing to classroom capacity constraints, the cohort was divided into two groups for theory lectures and further divided into four smaller groups for laboratory sessions given the limitations of laboratory space. The course structure consisted of 36 h of theory lectures, which were completed first, followed by 24 h of laboratory sessions.

All theory and laboratory sessions were delivered by a single lecturer using the same teaching approach, ensuring consistency in instruction. All lectures and laboratory sessions were held at fixed locations according to a predetermined schedule to minimize external variability.

In terms of teaching approach, in addition to traditional methods such as lectures, laboratory demonstrations, and question-and-answer sessions, the course introduced a notable innovation in assignment submission: instead of conventional laboratory reports, students were required to submit their work via blogs. The assignments covered key topics in NLP, including machine translation, text classification, Chinese word segmentation, and word embedding. Students had to clearly explain the principles of each NLP technique, implement it in code, and report performance evaluation results for their final tasks.
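Forward maximum matching is one classic dictionary-based baseline for Chinese word segmentation, one of the assignment topics listed above. The sketch below is illustrative only: the toy vocabulary and the `forward_max_match` helper are hypothetical and do not reproduce any student's assignment.

```python
from __future__ import annotations


def forward_max_match(text: str, vocab: set[str], max_len: int = 4) -> list[str]:
    """Greedy left-to-right segmentation: at each position take the longest
    dictionary word of at most max_len characters; otherwise emit one character."""
    tokens: list[str] = []
    i = 0
    while i < len(text):
        # Try the longest candidate first and shrink until a match is found;
        # a single character is always accepted, so the loop always advances.
        for size in range(min(max_len, len(text) - i), 0, -1):
            piece = text[i:i + size]
            if size == 1 or piece in vocab:
                tokens.append(piece)
                i += size
                break
    return tokens


# Toy vocabulary (hypothetical), not a real segmentation dictionary.
vocab = {"自然", "语言", "自然语言", "处理", "自然语言处理", "课程"}
print(forward_max_match("自然语言处理课程", vocab, max_len=6))  # ['自然语言处理', '课程']
print(forward_max_match("自然语言处理课程", vocab, max_len=4))  # ['自然语言', '处理', '课程']
```

The two calls give different segmentations for the same sentence, which is the kind of behavior a blog post could discuss alongside evaluation results.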

For example, students studied algorithms for tasks such as sentiment analysis. In the sentiment analysis module, they wrote blog posts that synthesized the algorithms they had studied, compared the algorithms’ accuracy and computational cost, and shared real-world applications of sentiment analysis, such as social media monitoring and customer feedback evaluation. In another case, students exploring neural-network-based language generation models used blogs to record their experiments with different model architectures and to discuss challenges such as overfitting, along with the solutions they attempted.
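A blog post comparing sentiment algorithms on accuracy and computational cost might contain an experiment shaped like the sketch below. The data, the lexicon, and both classifiers (a rule-based lexicon baseline and multinomial naive Bayes with add-one smoothing) are hypothetical choices for illustration, not the algorithms or data used in the course.

```python
import math
import time
from collections import Counter

# Toy labelled data (hypothetical, for illustration only): 1 = positive, 0 = negative.
TRAIN = [
    ("great course and clear lectures", 1),
    ("i love the blog assignments", 1),
    ("helpful feedback from classmates", 1),
    ("boring and confusing lectures", 0),
    ("the code examples were useless", 0),
    ("too hard and not helpful", 0),
]
TEST = [
    ("clear and helpful lectures", 1),
    ("confusing and boring code", 0),
    ("i love the feedback", 1),
    ("useless and hard", 0),
    ("not helpful at all", 0),
]

# Hypothetical sentiment lexicon for the rule-based baseline.
POS_WORDS = {"great", "love", "helpful", "clear", "good"}
NEG_WORDS = {"boring", "confusing", "useless", "hard", "bad"}


def lexicon_predict(text):
    """Rule-based baseline: count lexicon hits; ignores negation."""
    words = text.split()
    score = sum(w in POS_WORDS for w in words) - sum(w in NEG_WORDS for w in words)
    return 1 if score > 0 else 0


class NaiveBayes:
    """Multinomial naive Bayes over whitespace tokens with add-one smoothing."""

    def fit(self, data):
        self.counts = {0: Counter(), 1: Counter()}
        for text, label in data:
            self.counts[label].update(text.split())
        n_docs = Counter(label for _, label in data)
        self.log_prior = {c: math.log(n_docs[c] / len(data)) for c in (0, 1)}
        self.vocab = set(self.counts[0]) | set(self.counts[1])
        self.totals = {c: sum(self.counts[c].values()) for c in (0, 1)}
        return self

    def predict(self, text):
        def log_posterior(c):
            denom = self.totals[c] + len(self.vocab)
            # Words never seen in training are skipped.
            return self.log_prior[c] + sum(
                math.log((self.counts[c][w] + 1) / denom)
                for w in text.split() if w in self.vocab)
        return max((0, 1), key=log_posterior)


def evaluate(predict, data):
    """Return (accuracy, wall-clock seconds) for a predict function."""
    start = time.perf_counter()
    correct = sum(predict(text) == label for text, label in data)
    return correct / len(data), time.perf_counter() - start


nb = NaiveBayes().fit(TRAIN)
for name, predict in [("lexicon", lexicon_predict), ("naive Bayes", nb.predict)]:
    accuracy, seconds = evaluate(predict, TEST)
    print(f"{name}: accuracy={accuracy:.2f}, time={seconds * 1e3:.3f} ms")
```

On this toy set the lexicon baseline misreads "not helpful at all" as positive (accuracy 0.80), while naive Bayes classifies all five items correctly. Timings on five sentences are noise-dominated; a meaningful cost comparison would need a realistic corpus and repeated runs.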

4.2. Learning Variables

The questionnaire was designed to assess students’ engagement with five key learning variables: course design (CD), learning ability (LA), learning experience (LE), learning outcomes (LO), and learning satisfaction (LS). These dimensions explored the relationship between course content, teaching methods, and students’ learning experiences. Specifically, they examined how well the course facilitated the students’ development of skills and knowledge as well as how students perceived their satisfaction with different aspects of the course.

The questionnaire consisted of 32 questions categorized into the following five key dimensions:

- Course Design (CD): 18 questions that evaluated the overall structure of the course, including teaching methods, resource availability, and opportunities for practical application. Students rated these statements on a five-point Likert scale, ranging from “strongly agree” to “strongly disagree”. These questions aimed to gather comprehensive insights into students’ perceptions of course design. Key indicators within this dimension included content organization, platform interactivity, and course difficulty. An example question is the following: “Do you find the interactive features of the blog platform helpful for your learning?”

- Learning Ability (LA): 3 questions that assessed students’ perceived growth in learning, with a focus on their ability to learn autonomously and apply programming skills. This dimension included indicators such as independent learning ability, speed of knowledge acquisition, and improvements in programming skills. These data helped to analyze the relationship between learning ability, outcomes, and satisfaction. An example question is the following: “Can you quickly master the programming knowledge taught in this course?”

- Learning Experience (LE): 3 questions that explored students’ experiences with challenges, interests, and satisfaction throughout the course. This dimension included indicators such as learning methods (e.g., autonomous or collaborative learning), classroom interaction, and depth of knowledge application. These data shed light on how learning experiences affect students’ outcomes and satisfaction. An example question is the following: “Are you able to apply the programming knowledge learned in the course to real-world projects?”

- Learning Outcomes (LO): 3 questions that assessed the specific knowledge and skills students had acquired and their ability to apply these skills in real-world situations. This dimension included indicators such as mastery of theoretical knowledge, improvement in practical skills, and self-assessment of learning outcomes. An example question is the following: “How would you assess your overall learning outcomes in this course?”

- Learning Satisfaction (LS): 5 questions that evaluated students’ overall satisfaction with the course, including its content, assignments, teaching feedback, and interaction opportunities. The data collected in this dimension supported a comprehensive analysis of the relationships among course design, learning abilities, experiences, outcomes, and overall satisfaction. An example question is the following: “Are you satisfied with your overall learning experience in this course?”

By employing this approach, the study gathered quantitative data from these five dimensions, offering a solid foundation for analyzing the interrelationships between course design and various learning variables. The insights derived from these data will help to better understand how course design influences students’ learning outcomes, abilities, experiences, and satisfaction, ultimately contributing to recommendations for optimizing AI programming education.

4.3. Sample Collection

To gather data on students’ experiences in the NLP course, an online survey was administered to undergraduate students from the 2022 cohort at the School of AI, Anhui University. A total of 245 questionnaires were distributed and 146 valid responses were received, a response rate of 59.6%. Surveys are effective research tools for exploring multiple dimensions, such as learning abilities and experiences, and provide data for hypothesis testing (Berends, 2012; King et al., 2014). Responses were measured on a Likert scale. A pilot test was conducted before distribution, and the questionnaire was revised to make its items clearer to the target sample. Several reverse-scored questions were included to reduce method bias such as acquiescent responding.
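Reverse-scored items must be recoded before analysis so that higher values always point in the same direction. The sketch below assumes a coding of 1 = strongly disagree to 5 = strongly agree and uses hypothetical item IDs (`CD7`, `LE2`, `LA1`); the study does not report which items were reversed. It also reproduces the response-rate arithmetic (146 / 245).

```python
SCALE_MIN, SCALE_MAX = 1, 5      # assumed coding: 1 = strongly disagree, 5 = strongly agree
REVERSED_ITEMS = {"CD7", "LE2"}  # hypothetical IDs of reverse-scored items


def recode(item_id: str, value: int) -> int:
    """Return the analysis value for one Likert response."""
    if not SCALE_MIN <= value <= SCALE_MAX:
        raise ValueError(f"{item_id}: {value} is outside the scale")
    # Reflect reversed items around the scale midpoint: 1<->5, 2<->4, 3 stays 3.
    if item_id in REVERSED_ITEMS:
        return SCALE_MIN + SCALE_MAX - value
    return value


def response_rate(valid: int, distributed: int) -> float:
    """Valid responses as a percentage of distributed questionnaires."""
    return 100.0 * valid / distributed


print(recode("LE2", 2), recode("LA1", 2))  # 4 2
print(f"{response_rate(146, 245):.1f}%")   # 59.6%
```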

At the end of the survey, students were invited to answer an open-ended question in a text-based interview format: “Do you have any feedback on the course?” A total of 41 students responded. Their comments focused on their learning experiences, their feelings about the course, and their patterns of interaction on the blog platform.

5. Data Analysis and Results

To test the proposed hypotheses, we used Partial Least Squares Structural Equation Modeling (PLS-SEM), a variance-based approach widely recognized for its predictive capabilities (Leguina, 2015). The aim was to examine, from a constructivist perspective, the relationship between the use of blog platforms and students’ learning abilities, experiences, outcomes, and satisfaction. The analysis was implemented in WarpPLS 8.0 (Kock, 2017).

5.1. Measurement Model

The measurement model was evaluated for reliability, validity, and collinearity. Reliability was assessed using Cronbach’s alpha and Composite Reliability (CR) (Leguina, 2015). Following common guidelines, an alpha above 0.8 indicates high reliability, 0.7 to 0.8 good reliability, 0.6 to 0.7 acceptable reliability, and below 0.6 poor reliability (Eisinga et al., 2013).
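Cronbach’s alpha compares the sum of the individual item variances with the variance of respondents’ total scores. The sketch below computes it from raw item scores and maps the result onto the bands described above. The response data are hypothetical, and assigning exact boundary values to the lower band is an assumption, since the text does not specify how ties at 0.8, 0.7, or 0.6 are handled.

```python
from __future__ import annotations

from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha for k items, each a list of n respondents' scores.

    alpha = k / (k - 1) * (1 - sum of item variances / variance of total score)
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    totals = [sum(scores) for scores in zip(*items)]  # each respondent's total
    item_var = sum(variance(col) for col in items)    # sample variances (n - 1)
    return k / (k - 1) * (1 - item_var / variance(totals))


def interpret_alpha(alpha: float) -> str:
    """Bands from the text; exact boundary values fall into the lower band
    (assumption)."""
    if alpha > 0.8:
        return "high"
    if alpha > 0.7:
        return "good"
    if alpha > 0.6:
        return "acceptable"
    return "poor"


# Hypothetical 5-point responses: 3 items x 5 respondents (not study data).
items = [
    [4, 5, 3, 4, 2],
    [4, 4, 3, 5, 2],
    [5, 5, 2, 4, 3],
]
alpha = cronbach_alpha(items)
print(f"{alpha:.3f} ({interpret_alpha(alpha)})")  # 0.886 (high)
```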

As shown in Table 1, the overall reliability coefficient is 0.965, which exceeds 0.9 and indicates very high reliability. Factor analysis was then conducted on the items, and the Kaiser–Meyer–Olkin (KMO) measure of sampling adequacy was examined. A KMO value greater than 0.8 indicates that the data are well suited to factor extraction, which also provides indirect support for validity (Gim Chung et al., 2004).

Table 1.

Reliability analysis.

As shown in Table 2, the KMO value is 0.918, which is greater than 0.8, indicating that the data are highly suitable for factor extraction. On this basis, convergent and discriminant validity were tested using factor loadings, Average Variance Extracted (AVE), and the square root of AVE. Convergent validity is satisfactory when indicator loadings exceed 0.7 and each variable’s AVE exceeds 0.5 (Vom Brocke et al., 2014). Discriminant validity is established when the square root of each variable’s AVE is greater than its correlation with every other variable in the model (Fornell & Larcker, 1981). Finally, all variables were checked for multicollinearity using the Variance Inflation Factor (VIF), with 5.0 as the critical threshold.

Table 2.

Validity Analysis.
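The convergent and discriminant validity criteria above can be computed directly from standardized loadings and construct correlations. The sketch below uses the standard formulas for AVE and CR and the Fornell–Larcker comparison; the loadings, the correlation, and the generic construct names `X` and `Y` are hypothetical, not the study’s estimates.

```python
from __future__ import annotations

import math
from itertools import combinations


def ave(loadings: list[float]) -> float:
    """Average Variance Extracted: mean of squared standardized loadings."""
    return sum(lam * lam for lam in loadings) / len(loadings)


def composite_reliability(loadings: list[float]) -> float:
    """CR = (sum of loadings)^2 / ((sum of loadings)^2 + sum of error variances),
    where each indicator's error variance is 1 - loading^2."""
    s = sum(loadings)
    error = sum(1 - lam * lam for lam in loadings)
    return s * s / (s * s + error)


def convergent_ok(loadings: list[float]) -> bool:
    """Criteria from the text: every loading > 0.7 and AVE > 0.5."""
    return all(lam > 0.7 for lam in loadings) and ave(loadings) > 0.5


def fornell_larcker_ok(aves: dict[str, float],
                       corr: dict[frozenset[str], float]) -> bool:
    """sqrt(AVE) of each construct must exceed its correlation with every other."""
    for a, b in combinations(aves, 2):
        r = abs(corr[frozenset((a, b))])
        if math.sqrt(aves[a]) <= r or math.sqrt(aves[b]) <= r:
            return False
    return True


# Hypothetical standardized loadings for two generic constructs (not study data).
loadings = {"X": [0.78, 0.74, 0.71], "Y": [0.82, 0.80, 0.76]}
aves = {name: ave(ls) for name, ls in loadings.items()}
corr = {frozenset(("X", "Y")): 0.55}
for name, ls in loadings.items():
    print(name, f"AVE={aves[name]:.3f}", f"CR={composite_reliability(ls):.3f}",
          convergent_ok(ls))
print("Fornell-Larcker:", fornell_larcker_ok(aves, corr))
```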

As shown in Table 3, the measurement quality met the required standards. The CR values for all dimensions were above 0.7, indicating good internal consistency of the measurement items within each dimension. Cronbach’s alpha was above 0.7 for every dimension except Learning Ability (LA), which was slightly below 0.7 but still within the acceptable range, indicating good reliability of the scale. The AVE values for all dimensions were above 0.5, indicating good convergent validity. The VIF values for all dimensions ranged from 1.069 to 1.318, well below the threshold of 5.0, indicating that multicollinearity was not a significant issue among the dimensions.

Table 3.

Composite Reliability, Cronbach’s Alpha Reliability, AVE, and VIF.

In conclusion, the dimensions presented in the table exhibit good reliability and validity. The CR and Cronbach’s alpha values indicate a high internal consistency of the scale. The AVE values demonstrate good convergent validity, and the VIF values suggest that multicollinearity is not a significant concern. Therefore, the scale is acceptable in terms of both reliability and validity.
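The VIF check can be illustrated from a correlation matrix of latent-variable scores. The sketch below uses the textbook definition VIF_j = 1 / (1 − R²_j), where R²_j comes from regressing variable j on all the others; this equals the j-th diagonal entry of the inverse correlation matrix. WarpPLS computes its reported VIFs with its own procedure, so this is an illustration of the statistic under that assumption rather than the software’s exact computation, and the matrix values are hypothetical.

```python
from __future__ import annotations


def invert(matrix: list[list[float]]) -> list[list[float]]:
    """Gauss-Jordan inversion with partial pivoting (small matrices only)."""
    n = len(matrix)
    # Augment [M | I]; row operations turn it into [I | M^-1].
    aug = [list(map(float, row)) + [float(i == j) for j in range(n)]
           for i, row in enumerate(matrix)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        if abs(aug[pivot][col]) < 1e-12:
            raise ValueError("matrix is singular")
        aug[col], aug[pivot] = aug[pivot], aug[col]
        p = aug[col][col]
        aug[col] = [v / p for v in aug[col]]
        for r in range(n):
            if r != col:
                f = aug[r][col]
                aug[r] = [vr - f * vc for vr, vc in zip(aug[r], aug[col])]
    return [row[n:] for row in aug]


def vifs(corr: list[list[float]]) -> list[float]:
    """VIF_j = 1 / (1 - R^2_j) equals the j-th diagonal entry of the inverse
    correlation matrix, where R^2_j regresses variable j on all the others."""
    inv = invert(corr)
    return [inv[j][j] for j in range(len(corr))]


# Hypothetical correlations among three latent scores (not study data).
corr = [
    [1.0, 0.3, 0.2],
    [0.3, 1.0, 0.25],
    [0.2, 0.25, 1.0],
]
values = vifs(corr)
print([round(v, 3) for v in values], all(v < 5.0 for v in values))
```

For two variables the result reduces to 1 / (1 − r²); for example, r = 0.5 gives a VIF of about 1.333.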

5.2. Structural Model

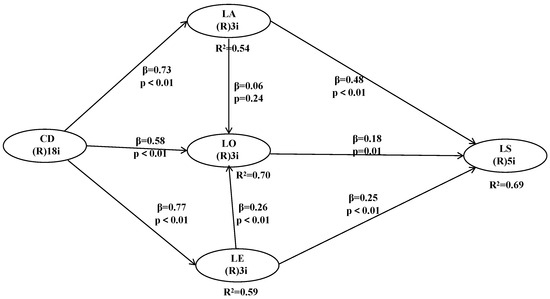

The results of the Structural Equation Modeling (SEM) analysis are illustrated in Figure 3. The arrows and the adjacent numerical values show the relationships between the variables, together with the corresponding standardized regression coefficients (β coefficients), R² values, and significance levels (p-values).

Figure 3.

Structural equation modeling. Notation under latent variable acronym describes measurement approach and number of indicators, e.g., (R)18i = reflective measurement with 18 indicators.

The R² value measures how well the independent variables explain the variance in a dependent variable. An R² value closer to one indicates stronger explanatory power, meaning that the model accounts for a larger proportion of the variance in that dependent variable. The β values shown next to the arrows indicate the strength of the effect of each independent variable on the corresponding dependent variable; larger absolute values indicate a more substantial effect. In other words, a higher β value indicates that changes in the independent variable (e.g., course design) are associated with larger changes in the dependent variable (e.g., learning outcomes). The p-values adjacent to the β values indicate whether the observed effects are statistically significant. A p-value below 0.01 indicates a very high level of statistical significance: an effect at least this large would be very unlikely to arise by chance if the true effect were zero.
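PLS-SEM software typically derives path p-values by bootstrapping, although this section does not state the procedure or settings used. The sketch below is a simplified illustration under that assumption, not the PLS algorithm itself (which also estimates the indicator weights that form each composite): for a single-predictor path between two already-computed composite scores, the standardized β equals the Pearson correlation, its standard error is estimated by resampling respondents with replacement, and a two-sided p-value follows from a normal approximation to t = β/SE. The function names, the number of resamples, and the synthetic scores are hypothetical.

```python
import math

import numpy as np


def standardized_beta(x: np.ndarray, y: np.ndarray) -> float:
    """Standardized slope of y on a single predictor x (equal to Pearson's r)."""
    return float(np.corrcoef(x, y)[0, 1])


def bootstrap_path(x: np.ndarray, y: np.ndarray, n_boot: int = 2000, seed: int = 0):
    """Return (beta, t, p) for one path, using a bootstrap standard error."""
    rng = np.random.default_rng(seed)
    n = len(x)
    beta = standardized_beta(x, y)
    boots = np.empty(n_boot)
    for b in range(n_boot):
        idx = rng.integers(0, n, size=n)          # resample respondents with replacement
        boots[b] = standardized_beta(x[idx], y[idx])
    se = boots.std(ddof=1)                        # bootstrap standard error of beta
    t = beta / se
    p = math.erfc(abs(t) / math.sqrt(2.0))        # two-sided p-value, normal approximation
    return beta, t, p


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    cd = rng.normal(size=245)                          # hypothetical course-design scores
    la = 0.7 * cd + rng.normal(scale=0.7, size=245)    # hypothetical learning-ability scores
    beta, t, p = bootstrap_path(cd, la)
    print(f"beta = {beta:.2f}, t = {t:.1f}, p = {p:.3g}")
```

For paths with several predictors, the single correlation would be replaced by a multiple regression of the standardized scores, with the same resampling loop around it.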

H1, H2, and H3 are supported by the significant direct paths from course design to each of the respective dependent variables.

The R² values of the dependent variables in H1, H2, and H3 are 0.54, 0.70, and 0.59, respectively, indicating that the model explains a substantial portion of the variance in students’ learning ability, experience, and outcomes. Based on the SEM results in Figure 3, the path CD→LA (β = 0.73, p < 0.01, R² = 0.54) indicates that course design has a strong positive effect on students’ learning ability, with 54% of its variance explained. CD→LE (β = 0.58, p < 0.01, R² = 0.70) indicates a moderately positive effect of course design on learning experience, with the model accounting for 70% of the variance. CD→LO (β = 0.77, p < 0.01, R² = 0.59) reflects a strong influence of course design on learning outcomes, with 59% of the variance explained.

These results collectively underscore the critical role of course design in improving students’ academic performance and support the effectiveness of the blog-based AI course design in achieving its intended educational outcomes.

H4 is supported by the paths involving the mediating variables. The analysis begins with the effect of learning ability on learning outcomes (LA→LO). The β value of 0.06 in Figure 3 indicates a positive but very small effect of learning ability on learning outcomes. The p-value of 0.24 (greater than 0.05) indicates that this effect is not statistically significant; that is, an effect of learning ability on learning outcomes cannot be confirmed.

Regarding the effect of learning experience on learning outcomes (LE→LO), the β value of 0.26 reflects a moderately positive effect. The p-value below 0.01 indicates that this effect is statistically significant, suggesting that a better learning experience is associated with improved learning outcomes.

In terms of student satisfaction, learning ability (LA→LS) shows a strong positive effect, with a β value of 0.48. This suggests that improvements in learning ability are associated with substantially higher overall student satisfaction, and the p-value below 0.01 confirms that this effect is highly significant.

Furthermore, learning experience also contributes to student satisfaction (LE→LS), with a β value of 0.25. This moderate positive effect, with a p-value below 0.01, suggests that a positive learning experience helps raise student satisfaction.

Finally, the effect of learning outcomes on student satisfaction (LO→LS) is weaker (β = 0.18) but remains significant (p < 0.01), implying that improvements in learning outcomes contribute to higher student satisfaction, albeit to a lesser extent.

In summary, learning experience has a significant, moderately positive effect on learning outcomes (β = 0.26, p < 0.01), whereas the effect of learning ability on learning outcomes is weak and non-significant (β = 0.06, p = 0.24). For student satisfaction, learning ability has a strong positive effect (β = 0.48, p < 0.01), whereas learning experience and learning outcomes have smaller positive effects (β = 0.25, p < 0.01 and β = 0.18, p < 0.01, respectively). These relationships underscore the importance of learning ability and learning experience for student satisfaction, and of learning experience for learning outcomes, thereby supporting H4.
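Because H4 concerns the mediating variables, it can help to see how the reported coefficients combine into indirect effects. The sketch below is an illustrative calculation, not part of the authors' analysis: it assumes that the eight paths reported in this section are the only structural paths in Figure 3 (in particular, that there is no direct CD→LS path), and it multiplies the standardized coefficients along every directed path (the product-of-coefficients approach). The results are point estimates only; testing whether an indirect effect is significant would require bootstrapping on the raw data, which is not reported here. `PATHS`, `all_paths`, and `effects` are hypothetical names.

```python
from math import prod

# Standardized path coefficients (beta) as reported in Section 5.2 / Figure 3.
# Assumption: these are the only structural paths in the model.
PATHS: dict[tuple[str, str], float] = {
    ("CD", "LA"): 0.73, ("CD", "LE"): 0.58, ("CD", "LO"): 0.77,
    ("LA", "LO"): 0.06, ("LE", "LO"): 0.26,
    ("LA", "LS"): 0.48, ("LE", "LS"): 0.25, ("LO", "LS"): 0.18,
}


def all_paths(src: str, dst: str, edges=PATHS) -> list[list[str]]:
    """Enumerate every directed path from src to dst (the model is acyclic)."""
    if src == dst:
        return [[dst]]
    found = []
    for (a, b) in edges:
        if a == src:
            for tail in all_paths(b, dst, edges):
                found.append([src] + tail)
    return found


def effects(src: str, dst: str, edges=PATHS) -> tuple[float, float, float]:
    """Return (direct, indirect, total) effects via the product of coefficients."""
    direct = edges.get((src, dst), 0.0)
    total = sum(
        prod(edges[(path[i], path[i + 1])] for i in range(len(path) - 1))
        for path in all_paths(src, dst, edges)
    )
    return direct, total - direct, total


if __name__ == "__main__":
    direct, indirect, total = effects("CD", "LO")
    print(round(indirect, 4))   # 0.1946 = 0.73*0.06 + 0.58*0.26
    for path in all_paths("CD", "LS"):
        print(" -> ".join(path))
```

Under these assumptions, the indirect effect of course design on learning outcomes through learning ability (0.73 × 0.06) is much smaller than that through learning experience (0.58 × 0.26), which mirrors the significance pattern described above.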

6. Discussion

6.1. Constructivist Theory in the NLP Course

The core perspective of constructivist theory is that learning is an active process. Learners do not passively receive knowledge but actively construct their own knowledge systems (Fosnot, 2013; T. Yang & Zeng, 2022). Under constructivist theory, knowledge is not simply imparted to students by teachers. Instead, it is constructed by students based on their existing knowledge and experience through the interpretation, understanding, and internalization of new information, and with the help of activities such as practice, exploration, interaction, and reflection.

With the rapid development of AI technology, the education sector faces unprecedented challenges and opportunities. The rapid advancement of AI has introduced new tools, frameworks, and methodologies, forcing educators to adapt their teaching methods to keep pace with technological change. However, in practice-oriented fields such as NLP, teaching often struggles to keep up with these developments. NLP education requires students not only to grasp theoretical knowledge but also to gain hands-on practice in solving real-world problems, which poses the challenge of balancing theory and practice.

Given these considerations, this study introduces a blog-based platform as an alternative to traditional exam-based assessment, fully integrated with a student-centered teaching approach. Through continuous interaction and feedback, this platform enhances students’ understanding and application of the course content.

As shown in Section 5.2, learners’ experiences play a vital role in this course design. This is highly consistent with constructivist theory, which holds that course design should revolve around learners and take the promotion of student learning as its core orientation. The design process must therefore fully consider learners’ existing experience, prior knowledge, and cognitive characteristics, so that the course content and teaching methods stimulate students to participate actively and guide them to build new knowledge systems on the basis of their existing experience, thereby internalizing knowledge and enhancing their abilities.

6.2. Expanding the Reach of Blog-Based Teaching Across Disciplines

Although this research focuses on NLP, the teaching challenges it addresses are similar to those in other AI fields such as computer vision, machine learning, and robotics. In computer vision courses, for example, students could use a blog-based platform to document their progress on projects such as image classification or object detection, share their code, discuss challenges, and receive feedback from peers and instructors. This continuous interaction could deepen their understanding of complex algorithms and improve their problem-solving skills. In machine learning courses, students could blog about their experiences with different algorithms, datasets, and model-tuning techniques, and discuss the ethical implications of their models, fostering a holistic understanding of the subject. The blog could also serve as a space for collaborative learning, where students share insights and troubleshoot issues together. Blog-based teaching could be particularly useful in interdisciplinary courses that combine AI with fields such as healthcare, finance, and environmental science, where students can explore how AI techniques can solve problems in these domains and deepen their understanding of both the technical and domain-specific aspects. The proposed teaching strategies may therefore be broadly applicable across AI domains.

On the other hand, interest in using blogs as a teaching tool may vary with the subject matter, for several reasons. Technical or niche subjects, such as AI, might attract students who are already inclined toward technology and innovation, potentially leading to higher engagement. The perceived relevance of a topic to students’ academic or career goals may also play a significant role: students in computing and engineering fields, for example, might find blogs on AI more directly applicable to their studies than students in the life sciences. This area merits further exploration, for instance through comparative studies across disciplines.

6.3. Student-Centered Course Design

Significant progress has been made in macro-level higher education research, but research on the micro-level aspects of teaching and learning remains insufficient (Boughey & McKenna, 2021; Tight, 2018). Because such micro-level studies are central to educational research (Matthews et al., 2009), research has increasingly focused on student-centered course design (Bovill et al., 2011; Hoidn & Reusser, 2020).

In line with previous studies advocating student-centered approaches, our research emphasizes the centrality of students in the learning process. Like Carless (2022), who highlighted the importance of teacher–student interactions, our study acknowledges the significance of this factor. We extend this line of work by integrating a blog-based platform that not only enhances such interactions but also creates a community learning environment, enabling students to engage in continuous communication and mutual learning beyond traditional classroom boundaries. Through this platform, students can share their learning experiences, ask questions, and provide feedback at any time, a dimension that has not been fully explored in previous research focusing solely on classroom-based interactions.

Despite these efforts, current research and practice still fall short (Peterson et al., 2018). Existing studies indicate that students are most concerned with their personal experiences (Tynjälä, 2020; Waldeck, 2007), teacher–student interactions (Carless, 2020; Miao et al., 2022; Pianta et al., 2012), and classroom teaching effectiveness (Andrade, 2013). In practice, however, students’ genuine feelings are often not fully expressed (Harris et al., 2014; McCune, 2009; Pitchford et al., 2020). Academic organizations and disciplinary associations urgently need to intensify research on effective teaching and provide more methods and techniques for student-centered course design. University educators must also recognize that evaluation should be integrated into the teaching process and should provide diverse channels of expression so that students’ authentic experiences are reflected in their assessments. To address this, our blog-based platform replaces traditional exams with a dynamic, community-based learning approach, deepening student-centered course practice. Our interview data further support this view. For example, one student commented:

“The programming tasks in the experimental course vary too widely in difficulty. Instead of having us write simple code on our own, requiring us to focus on adding comments would be more effective for learning.”

This emphasizes the necessity of practical work in experimental courses and reflects students’ demand for changes in learning formats. Another student noted:

“I really like the real-world problem-based approach in practical aspects. It is highly praised and much more practical than other courses, and the results are more apparent. Please keep it up!”

This indicates broad recognition among students of real-world, project-based practical methods, a specific manifestation of dynamic learning. Additionally, students suggested:

“Lab source code should be permanently available before the course ends for easy reference.”

This feedback suggests that students value continuous access to learning resources, which facilitates deeper learning engagement and further supports a student-centered teaching design. Overall, positive feedback from students also supports the effectiveness of our teaching methods:

“The course is very practical, learned a lot on the blog, and truly benefited.”

“Very good course, cutting-edge knowledge, excellent teacher. I hope the college does not cancel it and continues to improve.”

In summary, the student-centered course design framework proposed in this study received positive feedback in practical teaching. The course design takes students’ feedback on their teaching and learning experiences seriously, focuses on strengthening their own abilities, encourages communication and learning among peers and community members, and fosters mutual improvement among students. At the same time, teachers apply multidimensional evaluation practices to student learning outcomes and establish effective self-evaluation systems for students.

6.4. Learning-Centered Course Design

In traditional higher education systems, course design often emphasizes knowledge transmission, with educational administrators typically employing summative evaluation methods that focus primarily on students’ exam scores and classroom performance (Boud & Soler, 2016; van den Bos & Brouwer, 2014). However, this approach overlooks a deeper focus on the learning process, particularly the enhancement of learning abilities (Biggs et al., 2022; Hattie & Yates, 2013). Although many educators have begun to integrate assessments of student learning outcomes and peer feedback into their teaching practices, these measures are still insufficient for comprehensively improving the quality of teaching and learning (Rizzo, 2022; Trumbull & Lash, 2013).

To achieve a student-centered course design, we must move beyond mere performance evaluation and adopt more interactive and reflective learning models (Freeman et al., 2014; Prince, 2004). First, in daily teaching, we actively incorporate continuous feedback and guidance into students’ learning. Our course design uses a blog platform with a series of interactive tasks that guide students to focus not only on the results of their learning but also on reflection and self-assessment during the learning process. For example, a student reported:

“I received multiple prompts from the teacher while doing my assignments, which allowed me to make timely adjustments.”

This model not only helps students gain a deeper understanding of the course content but also avoids the limitations of traditional result-oriented teaching by embedding assessment into the entire learning process, encouraging students to continuously improve their learning outcomes through ongoing reflection and self-evaluation.

Furthermore, traditional evaluations are often one-time assessments, which makes it difficult for students to review and reflect on their learning outcomes after the course ends (Boud & Soler, 2016; Sadler, 1989). To overcome this limitation, we introduce the blog platform into our course design as a continuously accessible learning archive (or log). Students can review their learning records and teacher feedback at any time, reinforcing the continuity and depth of learning. For instance, a student noted:

“The course blog is not deleted, so I regularly review my blog and the feedback received, sometimes gaining new insights.”

This design not only supports students’ metacognitive development but also strengthens their long-term learning abilities. By reviewing and reflecting, students can identify shortcomings in their past learning methods and improve their future learning strategies, leading to better learning outcomes.

Finally, the course design emphasizes a multilayered feedback mechanism that includes feedback from teachers, peer evaluations, and expert reviews. A student mentioned:

“By reviewing other students’ projects, I learned many new ways of thinking, and this mutual learning approach gave me a deeper understanding of the course content.”

Another student added:

“I was thrilled when around 20 users liked my work!”

Because the blogs are public, this community-based feedback mechanism combines student discussion with expert guidance, helping students continuously enhance their abilities during the learning process. Through these diverse feedback channels, students’ learning capabilities and professional skills are effectively strengthened.

In conclusion, through repeated self-assessment, continuously accessible learning archives, and multilayered feedback mechanisms, our student-centered course design promotes the comprehensive development of students’ core abilities. The course framework cultivates students’ learning abilities through project-driven, problem-oriented teaching strategies that stimulate their interest and curiosity. Beyond facilitating learning, the design teaches students how to learn, so that they continue to grow through reflection and self-improvement, ultimately achieving the goals of student-centered education.

7. Conclusions

7.1. Contributions of the Study

This study makes several contributions to AI course design and teaching practice. First, by integrating assessment into the teaching process, we move away from the traditional exam-centric evaluation model and provide students with diverse channels of expression. This student-centered course design focuses not only on learning outcomes but also on reflection and self-assessment throughout the learning process, fostering the comprehensive development of students’ core abilities. This approach aligns with growing trends in educational research that stress the importance of holistic student development throughout the learning process (Carless, 2022; Hattie & Yates, 2013).

Second, the study introduces a blog platform and a community-based learning strategy that provide students with a continuously accessible learning archive. This design supports students’ metacognitive development and strengthens their learning abilities over the long term. By reviewing and reflecting on their learning records and teacher feedback, students can identify the shortcomings of their past learning methods and improve their future learning strategies, leading to better educational outcomes. The blog platform thus serves as a digital record of students’ progress and development, supporting retrospective analysis that can inform their future learning.

Additionally, this study emphasizes a multilayered feedback mechanism that includes feedback from teachers, peer evaluations, and expert reviews. Because the blogs are public, this community-based mechanism involves not only student discussions but also expert guidance, helping students continuously enhance their abilities during the learning process. Diverse feedback channels effectively reinforce students’ learning and professional skills. Expert reviews add credibility and industry relevance to the feedback, helping to bridge the gap between academic learning and professional practice.

Finally, the course design presented in this study focuses on developing students’ learning abilities through project-driven and problem-oriented teaching strategies that stimulate students’ interest in and curiosity about learning. By teaching students how to learn, the design helps them continue to grow through reflection and self-improvement, ultimately achieving the goals of student-centered education.

In summary, this study offers new perspectives and methods for course design and teaching practice, supporting the reform and development of higher education. By adopting a student-centered course design, we not only improve the quality of student learning but also foster lifelong learning abilities, offering useful insights for educators in course design.

7.2. Uniqueness of the Study

This study adopts a single case study approach. Its uniqueness lies first in the selection of both the study population and the course content. Our research focuses on third-year undergraduate students who have completed foundational coursework and who study at a general institution rather than a top-tier university, which gives the study a degree of applicability and representativeness. Furthermore, the AI course in this study focuses on NLP, a core area of AI technology. Unlike more traditional computing courses such as software engineering, NLP centers on processing language data, which has unique characteristics and is evolving rapidly. Therefore, this study not only provides insights into student learning at general (non-top-tier) institutions but also highlights how NLP course design differs from that of traditional courses. We acknowledge the limitations of the single case study methodology: its results may not generalize to different academic contexts and course structures. We therefore plan to expand the scope of future research by incorporating more cases for comparison to validate and broaden the conclusions of the current study.

This study employs a mixed-methods approach, combining qualitative and quantitative data, to explore more comprehensively the distinctive features of the AI course and its impact on students’ learning processes and outcomes. Qualitative data provide in-depth insights into students’ learning motivations, course experiences, and interactions, while quantitative data offer statistical support, allowing a more holistic and detailed analysis. Combining the two approaches enhances the reliability and validity of the findings. Through this research design, the study helps fill a gap in the existing literature on AI course-teaching methods and offers new perspectives and methodologies for future research in related fields.

7.3. Limitations of the Study

This study has certain limitations. While 245 samples were collected, providing valuable support for addressing the research questions, the relatively small sample size and focus on a single course (NLP) may limit the generalizability of the findings. Furthermore, as participation was voluntary, sample selection bias may have occurred, potentially skewing the results, as the backgrounds and attitudes of participants may differ from those of non-participants. This could have affected the external validity of the study.

Another limitation is the exclusive focus on the NLP course without extending the study to other AI or engineering courses. Consequently, the applicability of these findings across different disciplines may be limited. However, we believe that the characteristics of NLP are sufficiently representative of other AI subfields such as computer vision, meaning that the conclusions could be relevant to a broader range of AI-related courses. Future research should aim to increase the sample size, encompass a wider variety of courses, and explore cross-cultural and cross-regional differences to enhance the generalizability of the findings.

A textual or content analysis of blogs could yield valuable insights into their educational value, engagement strategies, and potential to foster constructivist learning. However, it is important to note that such an analysis has not yet been conducted, which is a limitation of this study. In future research, we plan to address this gap by leveraging AI tools to evaluate blog content. For instance, AI could be utilized to automate the analysis of blog posts, uncovering deeper insights into student learning patterns, engagement levels, and the overall effectiveness of blogging activities. This could involve identifying indicators, such as the depth of technical understanding, clarity of explanations, or even the emotional tone of student reflections. By incorporating AI tools into future studies, we aim to enhance the rigor and depth of our analysis.

Despite these limitations, the overall significance of the present study remains clear. These insights offer valuable implications for optimizing teaching strategies and broadening the scope of AI education. With an expanded sample size and a refined methodology, future research is expected to provide deeper and more widely applicable insights.

Author Contributions

Conceptualization, D.W.; methodology, D.W.; validation, X.D. and J.Z.; formal analysis, D.W.; investigation, D.W.; data curation, X.D. and D.W.; writing—original draft preparation, D.W.; writing—review and editing, J.Z.; supervision, X.D. and J.Z. All authors have read and agreed to the published version of the manuscript.

Funding

This study was supported by the startup funding provided to X.D. by Anhui University.

Institutional Review Board Statement

The study complies with all ethical principles established by Anhui University. No bio-samples are involved, and only anonymous survey data are used. Ethical standards and informed consent procedures were adhered to; therefore, the creation of a specific ethics approval code or registration number was not required.

Informed Consent Statement

Informed consent was obtained from all subjects involved in this study.

Data Availability Statement

The data presented in this study are available on request from the corresponding author. (The data are not publicly available due to privacy restrictions).

Conflicts of Interest

The authors declare no conflicts of interest.

Nomenclature

Measurement Model: Used to assess the reliability, validity, and collinearity of a set of variables or measurement items.

Reliability: The consistency or stability of the measurement model is assessed using indicators such as Cronbach’s Alpha and Composite Reliability (CR).

Cronbach’s Alpha: This is a measure of internal consistency that indicates how well the items in a scale are related to each other. A high value (>0.8) indicates good reliability.

Composite Reliability (CR): Similar to Cronbach’s alpha, it assesses the internal consistency of the construct.

Kaiser–Meyer–Olkin (KMO): This is a measure of sampling adequacy used to assess whether the data are suitable for factor analysis. A value greater than 0.8 suggests that the data are suitable for factor extraction.

Factor Analysis: A statistical method to identify the underlying relationships between observed variables by grouping them into factors.

Discriminant Validity: This is the degree of distinctness of a construct from others; it is shown when the square root of AVE > correlations with other variables.

Convergent Validity: This is the degree to which different measures of the same concept are related; it is confirmed when factor loadings > 0.7 and AVE > 0.5.

Average Variance Extracted (AVE): This measure is used to assess the convergent validity of a construct. A value above 0.5 indicates that the construct explains more than half of the variance of its indicators.

Variance Inflation Factor (VIF): This is a measure used to detect multicollinearity between variables. A VIF greater than five indicates potential multicollinearity issues, meaning that the variables are highly correlated.

Structural Equation Modeling (SEM): This is a statistical technique used to test and estimate relationships between variables, including direct and indirect effects, typically through path analysis.

β Coefficient (Beta Coefficient): This is a standardized coefficient in SEM that shows the strength and direction of a relationship. Larger absolute values indicate stronger effects.

p-value: This assesses statistical significance; a value below 0.01 indicates high significance.

R² Value: This indicates the proportion of variance in the dependent variables explained by the independent variables. An R² value closer to 1 suggests strong explanatory power.

Course Design (CD): This refers to how the curriculum or course structure is planned and organized, and how it influences student learning outcomes, ability, and experiences.

Learning Ability (LA): This is the capacity of students to acquire, process, and apply knowledge; in this study, it reflects students’ fundamental learning skills.

Learning Experience (LE): This is the quality and nature of students’ interactions with the course content, instructors, and peers, which affect their engagement and overall satisfaction with the learning process.

Learning Outcomes (LO): These are the results or achievements students obtain from their learning experiences, typically measured in terms of knowledge or skills acquired.

Learning Satisfaction (LS): This is the degree to which students feel content with or fulfilled by their learning experience. It often reflects both the quality of the course and students’ outcomes.

References

- Alalwan, N. (2022). Actual use of social media for engagement to enhance students’ learning. Education and Information Technologies, 27(7), 9767–9789. [Google Scholar] [CrossRef] [PubMed]

- Alt, D. (2016). Contemporary constructivist practices in higher education settings and academic motivational factors. Australian Journal of Adult Learning, 56(3), 374–399. Available online: https://search.informit.org/doi/10.3316/informit.459301185740227 (accessed on 23 January 2025).

- Anderson, R. C. (1984). Some reflections on the acquisition of knowledge. In Educational researcher (pp. 5–10). Sage Publications. [Google Scholar]

- Andrade, H. L. (2013). Classroom assessment in the context of learning theory and research. In SAGE handbook of research on classroom assessment (pp. 17–34). Sage Publishing. [Google Scholar]

- Ansari, J. A. N., & Khan, N. A. (2020). Exploring the role of social media in collaborative learning: The new domain of learning. Smart Learning Environments, 7(1), 1–16. [Google Scholar] [CrossRef]

- Bereiter, C., & Scardamalia, M. (2006). Knowledge building: Theory, pedagogy and technology. In Cambridge Handbook of Learning Sciences (pp. 97–118). Cambridge University Press. [Google Scholar]

- Berends, M. (2012). Survey methods in educational research. In Handbook of complementary methods in education research (pp. 623–640). Routledge. [Google Scholar]

- Biggs, J., Tang, C., & Kennedy, G. (2022). Teaching for quality learning at university 5e. McGraw-Hill Education (UK). [Google Scholar]

- Black, P., & Wiliam, D. (2010). Inside the black box: Raising standards through classroom assessment. Phi Delta Kappan, 92(8), 81–90. [Google Scholar] [CrossRef]

- Boud, D., & Soler, R. (2016). Sustainable assessment revisited. Assessment & Evaluation in Higher Education, 41(3), 400–413. [Google Scholar]

- Boughey, C., & McKenna, S. (2021). Understanding higher education: Alternative perspectives. African Minds.

- Bovill, C., Cook-Sather, A., & Felten, P. (2011). Students as co-creators of teaching approaches, course design, and curricula: Implications for academic developers. International Journal for Academic Development, 16(2), 133–145.

- Brott, P. E. (2023). Vlogging and reflexive applications. Open Learning: The Journal of Open, Distance and e-Learning, 38(3), 281–293.

- Carless, D. (2020). Longitudinal perspectives on students’ experiences of feedback: A need for teacher–student partnerships. Higher Education Research & Development, 39(3), 425–438.

- Carless, D. (2022). From teacher transmission of information to student feedback literacy: Activating the learner role in feedback processes. Active Learning in Higher Education, 23(2), 143–153.

- Charania, A., Bakshani, U., Paltiwale, S., Kaur, I., & Nasrin, N. (2021). Constructivist teaching and learning with technologies in the COVID-19 lockdown in Eastern India. British Journal of Educational Technology, 52(4), 1478–1493.

- Chaudhry, M. A., & Kazim, E. (2022). Artificial Intelligence in Education (AIEd): A high-level academic and industry note 2021. AI and Ethics, 2(1), 157–165.

- Chu, S. K., Chan, C. K., & Tiwari, A. F. (2012). Using blogs to support learning during internship. Computers & Education, 58(3), 989–1000.

- Churchill, D. (2009). Educational applications of Web 2.0: Using blogs to support teaching and learning. British Journal of Educational Technology, 40(1), 179–183.

- Deng, L., & Yuen, A. H. (2011). Towards a framework for educational affordances of blogs. Computers & Education, 56(2), 441–451.

- De Veaux, R. D., Agarwal, M., Averett, M., Baumer, B. S., Bray, A., Bressoud, T. C., Bryant, L., Cheng, L. Z., Francis, A., Gould, R., Kim, A. Y., Kretchmar, R. M., Lu, Q., Moskol, A., Nolan, D., Pelayo, R., Raleigh, S., Sethi, R. J., Sondjaja, M., … Ye, P. (2017). Curriculum guidelines for undergraduate programs in data science. Annual Review of Statistics and Its Application, 4(1), 15–30.

- Efimova, L., & Grudin, J. (2007, January 3–7). Crossing boundaries: A case study of employee blogging. 2007 40th Annual Hawaii International Conference on System Sciences (HICSS’07) (p. 86), Big Island, HI, USA.

- Eisinga, R., te Grotenhuis, M., & Pelzer, B. (2013). The reliability of a two-item scale: Pearson, Cronbach, or Spearman-Brown? International Journal of Public Health, 58, 637–642.

- Ellison, N., & Wu, Y. (2008). Blogging in the classroom: A preliminary exploration of student attitudes and impact on comprehension. Journal of Educational Multimedia and Hypermedia, 17(1), 99–122.

- Ferdig, R. E., & Trammell, K. D. (2005). Content delivery in the “blogosphere”. Journal of Educational Technology, 1(4), 16–19.

- Fornell, C., & Larcker, D. F. (1981). Evaluating structural equation models with unobservable variables and measurement error. Journal of Marketing Research, 18(1), 39–50.

- Fosnot, C. (2013). Constructivism: Theory, perspectives, and practice. Teachers College Press.

- Freeman, S., Eddy, S. L., McDonough, M., Smith, M. K., Okoroafor, N., Jordt, H., & Wenderoth, M. P. (2014). Active learning increases student performance in science, engineering, and mathematics. Proceedings of the National Academy of Sciences, 111(23), 8410–8415.

- Garcia, E., Moizer, J., Wilkins, S., & Haddoud, M. Y. (2019). Student learning in higher education through blogging in the classroom. Computers & Education, 136, 61–74.

- Gim Chung, R. H., Kim, B. S., & Abreu, J. M. (2004). Asian American multidimensional acculturation scale: Development, factor analysis, reliability, and validity. Cultural Diversity and Ethnic Minority Psychology, 10(1), 66.

- Goodfellow, I., Bengio, Y., & Courville, A. (2016). Deep learning. MIT Press.

- Gouvea, J. S., Sawtelle, V., Geller, B. D., & Turpen, C. (2013). A framework for analyzing interdisciplinary tasks: Implications for student learning and curricular design. CBE Life Sciences Education, 12(2), 187–205.

- Halic, O., Lee, D., Paulus, T., & Spence, M. (2010). To blog or not to blog: Student perceptions of blog effectiveness for learning in a college-level course. The Internet and Higher Education, 13(4), 206–213.

- Hamadi, M., El-Den, J., Azam, S., & Sriratanaviriyakul, N. (2022). Integrating social media as cooperative learning tool in higher education classrooms: An empirical study. Journal of King Saud University-Computer and Information Sciences, 34(6), 3722–3731.

- Harris, L. R., Brown, G. T., & Harnett, J. A. (2014). Understanding classroom feedback practices: A study of New Zealand student experiences, perceptions, and emotional responses. Educational Assessment, Evaluation and Accountability, 26, 107–133.

- Hattie, J., & Yates, G. C. (2013). Visible learning and the science of how we learn. Routledge.

- Hoidn, S., & Reusser, K. (2020). Foundations of student-centered learning and teaching. In The Routledge international handbook of student-centered learning and teaching in higher education (pp. 17–46). Routledge.

- Jonassen, D. H., & Rohrer-Murphy, L. (1999). Activity theory as a framework for designing constructivist learning environments. In Educational technology research and development (pp. 61–79). Springer.

- King, D. B., O’Rourke, N., & DeLongis, A. (2014). Social media recruitment and online data collection: A beginner’s guide and best practices for accessing low-prevalence and hard-to-reach populations. Canadian Psychology/Psychologie canadienne, 55(4), 240.

- Kock, N. (2017). WarpPLS user manual: Version 6.0 (Vol. 141, pp. 47–60). ScriptWarp Systems.

- Leguina, A. (2015). A primer on partial least squares structural equation modeling (PLS-SEM). Taylor & Francis.

- Leslie, P. H., & Murphy, E. (2008). Post-secondary students’ purposes for blogging. International Review of Research in Open and Distributed Learning, 9(3), 1–17.

- Li, K., Bado, N., Smith, J., & Moore, D. (2013). Blogging for teaching and learning: An examination of experience, attitudes, and levels of thinking. Contemporary Educational Technology, 4(3), 172–186.

- Licardo, J. T., Domjan, M., & Orehovački, T. (2024). Intelligent Robotics—A Systematic Review of Emerging Technologies and Trends. Electronics, 13(3), 542.

- Matthews, K. E., Adams, P., & Gannaway, D. (2009, June 29–July 1). The impact of social learning spaces on student engagement. 12th Annual Pacific Rim First Year in Higher Education Conference (Vol. 13), Townsville, Australia.

- McCune, V. (2009). Final year biosciences students’ willingness to engage: Teaching–learning environments, authentic learning experiences and identities. Studies in Higher Education, 34(3), 347–361.

- Meirbekov, A., Nyshanova, S., Meiirbekov, A., Kazykhankyzy, L., Burayeva, Z., & Abzhekenova, B. (2024). Digitisation of English language education: Instagram and TikTok online educational blogs and courses vs. traditional academic education. How to increase student motivation? Education and Information Technologies, 29(11), 13635–13662.

- Miao, J., Chang, J., & Ma, L. (2022). Teacher–student interaction, student–student interaction and social presence: Their impacts on learning engagement in online learning environments. The Journal of Genetic Psychology, 183(6), 514–526.

- Moreno, R., & Mayer, R. (2007). Interactive multimodal learning environments: Special issue on interactive learning environments: Contemporary issues and trends. Educational Psychology Review, 19, 309–326.

- Mosly, I. (2024). Artificial Intelligence’s Opportunities and Challenges in Engineering Curricular Design: A Combined Review and Focus Group Study. Societies, 14(6), 89.

- Nardi, B. A., Schiano, D. J., Gumbrecht, M., & Swartz, L. (2004). Why we blog. Communications of the ACM, 47(12), 41–46.

- Peterson, A., Dumont, H., Lafuente, M., & Law, N. (2018). Understanding innovative pedagogies: Key themes to analyse new approaches to teaching and learning. In OECD Education Working Papers (No. 172). OECD Publishing.

- Piaget, J. (2005). The psychology of intelligence. Routledge.

- Pianta, R. C., Hamre, B. K., & Allen, J. P. (2012). Teacher-student relationships and engagement: Conceptualizing, measuring, and improving the capacity of classroom interactions. In Handbook of research on student engagement (pp. 365–386). Springer.

- Pitchford, A., Owen, D., & Stevens, E. (2020). A handbook for authentic learning in higher education: Transformational learning through real world experiences. Routledge.

- Prince, M. (2004). Does active learning work? A review of the research. Journal of Engineering Education, 93(3), 223–231.

- Rizzo, L. (2022). Creating the schools our children need: Why what we’re doing now won’t help much (and what we can do instead). Italian Journal of Special Education for Inclusion, 10(2), 261–264.

- Sadler, D. R. (1989). Formative assessment and the design of instructional systems. Instructional Science, 18(2), 119–144.

- Shim, J., & Guo, C. (2009). Weblog technology for instruction, learning, and information delivery. Decision Sciences Journal of Innovative Education, 7(1), 171–193.

- Tight, M. (2018). Higher education research: The developing field. Bloomsbury Publishing.

- Trumbull, E., & Lash, A. (2013). Understanding formative assessment: Insights from learning theory and measurement theory. WestEd.

- Tynjälä, P. (2020). Pedagogical perspectives in higher education research. In The international encyclopedia of higher education systems and institutions (pp. 2186–2191). Springer.

- van den Bos, P., & Brouwer, J. (2014). Learning to teach in higher education: How to link theory and practice. Teaching in Higher Education, 19(7), 772–786.

- Vom Brocke, J., Schmiedel, T., Recker, J., Trkman, P., Mertens, W., & Viaene, S. (2014). Ten principles of good business process management. Business Process Management Journal, 20(4), 530–548.

- Vygotsky, L. (1978). Mind in society: The development of higher psychological processes. Harvard University Press.

- Waldeck, J. H. (2007). Answering the question: Student perceptions of personalized education and the construct’s relationship to learning outcomes. Communication Education, 56(4), 409–432.

- Williams, J. B., & Jacobs, J. (2004). Exploring the use of blogs as learning spaces in the higher education sector. Australasian Journal of Educational Technology, 20(2), 232–247.

- Winkler, R., Hobert, S., Salovaara, A., Söllner, M., & Leimeister, J. M. (2020, April 25–30). Sara, the lecturer: Improving learning in online education with a scaffolding-based conversational agent. 2020 CHI Conference on Human Factors in Computing Systems (pp. 1–14), Honolulu, HI, USA.

- Xie, Y., Ke, F., & Sharma, P. (2008). The effect of peer feedback for blogging on college students’ reflective learning processes. The Internet and Higher Education, 11(1), 18–25.

- Yang, S.-H. (2009). Using blogs to enhance critical reflection and community of practice. Journal of Educational Technology & Society, 12(2), 11–21.

- Yang, T., & Zeng, Q. (2022). Study on the design and optimization of learning environment based on artificial intelligence and virtual reality technology. Computational Intelligence and Neuroscience, 2022, 1–9.

- Zhang, T., Huang, H., & Chen, F. (2020, October 21–24). A new training mode and practice for master of engineering in a Chinese university. 2020 IEEE Frontiers in Education Conference (FIE) (pp. 1–8), Uppsala, Sweden.

- Zhu, Y., Au, W., & Yates, G. (2016). University students’ self-control and self-regulated learning in a blended course. The Internet and Higher Education, 30, 54–62.

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).