Machine Learning-Assisted Systematic Review: A Case Study in Learning Analytics

Abstract

1. Introduction

2. Materials and Methods

2.1. Data

2.2. Models

2.2.1. Traditional Machine Learning

2.2.2. Large Language Models (ChatGPT)

- Temperature Setting: The temperature was set to 0.50 to balance creativity with coherence, enabling the model to capture nuanced aspects of complex abstracts while maintaining consistent decision-making. Using this setting also introduced controlled stochasticity across the ten runs, allowing us to evaluate the model’s reliability under realistic variability.

- Max Tokens: The maximum number of tokens was set to 1, restricting each response to a single token and enforcing a strictly binary output (“0” = exclude, “1” = include). This design follows evidence from Yu et al. (2024), who showed that in classification tasks additional generated tokens are often redundant and do not improve decision accuracy. The constraint ensured concise and consistent classifications, minimized variability in output format, and prevented extraneous text.

- Top-p Value: The top-p value was set to 1.00 so that the model samples from the full probability distribution of possible outputs. In line with standard practice for binary classification tasks, leaving top-p unconstrained means that no candidate tokens are truncated before sampling, which supports consistent inclusion and exclusion decisions without artificially narrowing the model’s response space.

- Frequency and Presence Penalties: Both the frequency and presence penalties were set to zero. With no penalty applied, token probabilities are not adjusted according to whether or how often a token has already appeared. Because each response is limited to a single token, repetition within the output is not a concern, so the default value of zero keeps the screening decision free of adjustments that are unrelated to the task.

2.2.3. Model Comparison

3. Results and Discussion

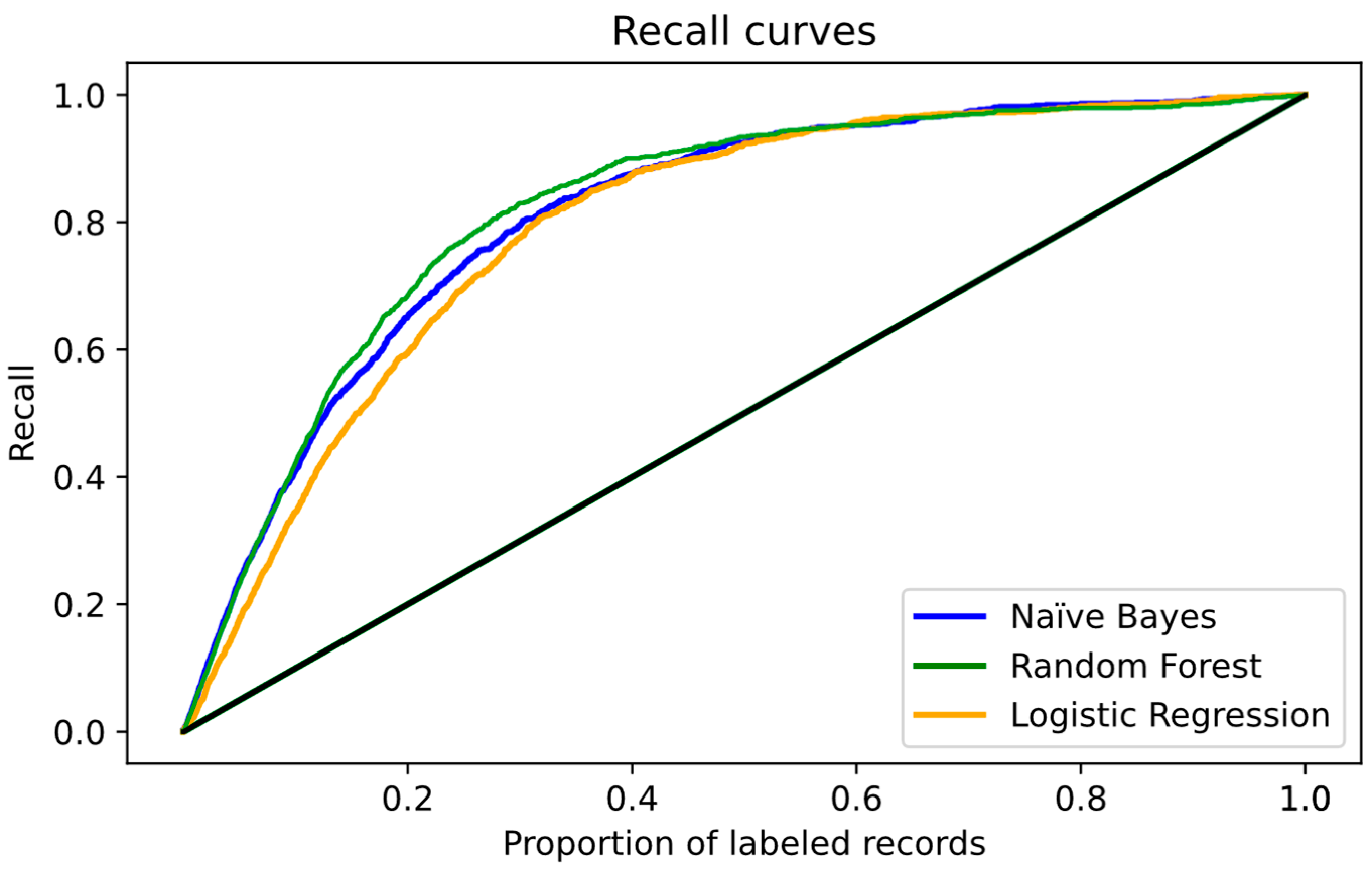

3.1. Traditional Machine Learning

3.2. Large Language Model

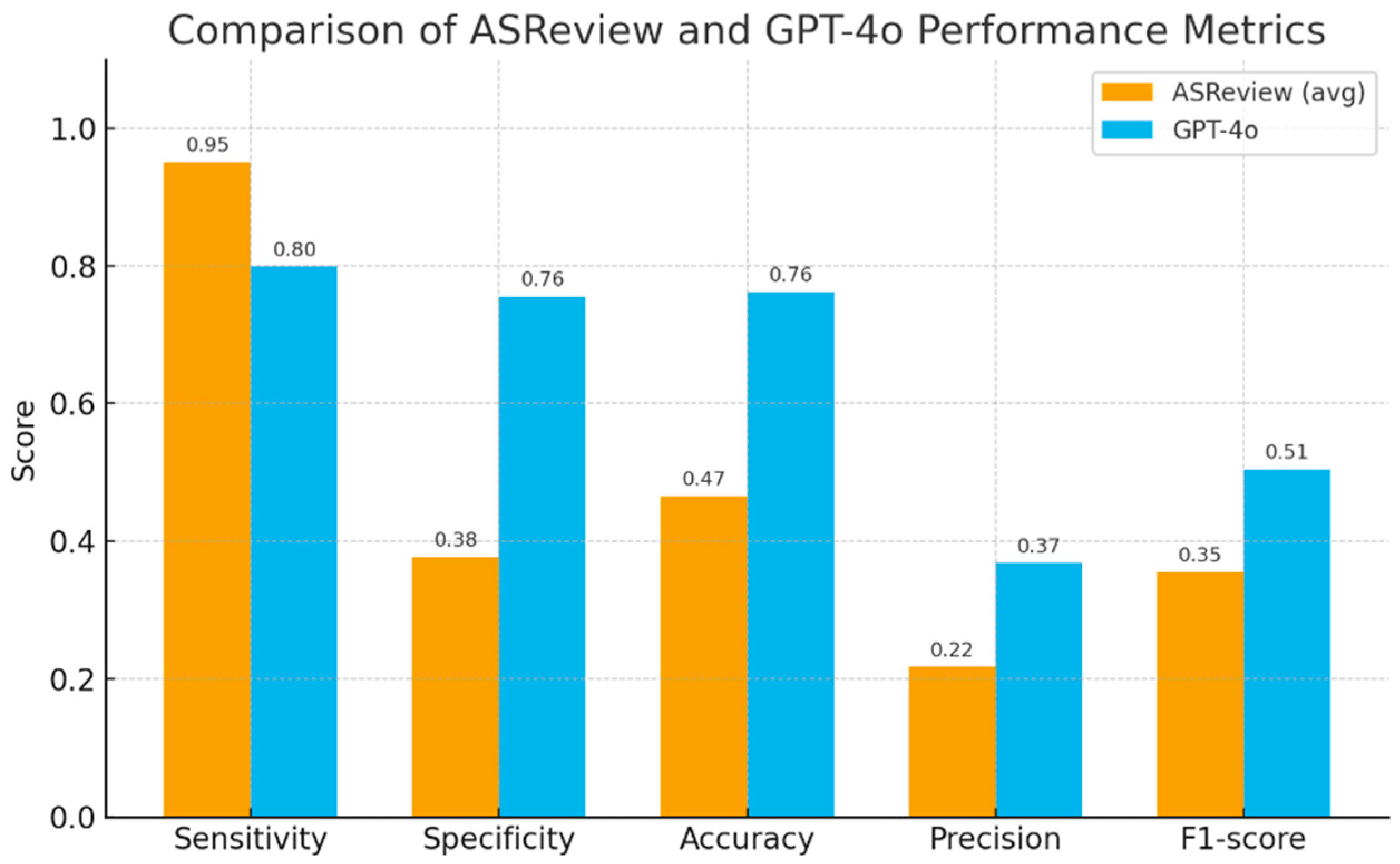

3.3. Model Comparison

3.3.1. Sensitivity, Specificity, and Precision

3.3.2. Accuracy, F1-Score and Overall Performance

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| LA | Learning Analytics |
| ML | Machine Learning |
| TP | True Positives |
| FP | False Positives |
| TN | True Negatives |
| FN | False Negatives |
| WSS | Work Saved over Sampling |

Appendix A. Prompt Used to Evaluate Each Article Using the OpenAI API

- “Learning Analytics (LA) in Higher Education”.

- If in doubt or information is missing, INCLUDE (1).

- Only EXCLUDE (0) if the abstract/title clearly and explicitly meets an exclusion criterion.

- 0 = Exclude

- 1 = Include

- No explanations. No extra characters.

- Title: {title}

- Abstract: {abstract}

- Year: {year}

- I1. Focus on Learning Analytics (e.g., LMS logs, interaction/behavior data, at-risk prediction, study behaviors linked to outcomes).

- I2. Evaluates students’ academic performance or related outcomes (grades, GPA, course completion, exam scores).

- I3. Higher education setting (undergraduate/graduate, for-credit). MOOCs are NOT higher ed.

- I4. Empirical study using learner-level primary data (institutional logs, course records). If unclear, assume primary.

- I5. Participants are formal learners (enrolled in credit-bearing programs).

- I6. Publication is peer-reviewed journal, peer-reviewed conference, or dissertation. (Exclude book chapters, reports.)

- I7. English language.

- I8. Published Jan 2011–Jan 2023.

- E1. Not Learning Analytics.

- E2. No focus on academic performance/outcomes.

- E3. No learning impact or outcome evaluation.

- E4. No empirical evidence (e.g., reviews without data, purely secondary/aggregate data).

- E5. Not higher education (K-12, workforce training, MOOCs).

- E6. Method development only, no application to outcomes.

- E7. Literature review, meta-analysis, commentary, editorial.

- E8. Not English.

- E9. Published outside Jan 2011–Jan 2023.

- If an exclusion criterion is explicitly and unambiguously met → 0.

- Otherwise (unclear, missing, or ambiguous) → 1.
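
The `{title}`, `{abstract}`, and `{year}` placeholders in the prompt suggest that each record is substituted into a fixed template before being sent to the model. A minimal Python sketch of that substitution step is given below. The function names, the whitespace normalization, and the `(missing)` marker for empty fields are assumptions made for illustration; only the three field lines come from the prompt itself.

```python
from typing import Any, Mapping

# The three per-article lines of the prompt in Appendix A.
ARTICLE_TEMPLATE = "Title: {title}\nAbstract: {abstract}\nYear: {year}"

MISSING = "(missing)"  # hypothetical marker; the prompt tells the model to include when information is missing


def clean_field(value: Any) -> str:
    """Collapse runs of whitespace and mark empty or absent values."""
    if value is None:
        return MISSING
    text = " ".join(str(value).split())
    return text or MISSING


def fill_article_fields(record: Mapping[str, Any], template: str = ARTICLE_TEMPLATE) -> str:
    """Substitute one bibliographic record into the article section of the prompt."""
    return template.format(
        title=clean_field(record.get("title")),
        abstract=clean_field(record.get("abstract")),
        year=clean_field(record.get("year")),
    )


example = fill_article_fields({"title": "A  study of LMS logs", "abstract": None, "year": 2020})
print(example)
```

Because `str.format` parses only the template and not the substituted values, curly braces inside a title or abstract do not break the substitution.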

Appendix B. Fine-Tune GPT Model

References

- Adamse, I., Eichelsheim, V., Blokland, A., & Schoonmade, L. (2024). The risk and protective factors for entering organized crime groups and their association with different entering mechanisms: A systematic review using ASReview. European Journal of Criminology, 21(6), 859–886.

- Alshami, A., Elsayed, M., Ali, E., Eltoukhy, A. E., & Zayed, T. (2023). Harnessing the power of ChatGPT for automating systematic review process: Methodology, case study, limitations, and future directions. Systems, 11(7), 351.

- Bannach-Brown, A., Przybyła, P., Thomas, J., Rice, A. S., Ananiadou, S., Liao, J., & Macleod, M. R. (2019). Machine learning algorithms for systematic review: Reducing workload in a preclinical review of animal studies and reducing human screening error. Systematic Reviews, 8, 23.

- Chai, K. E., Lines, R. L., Gucciardi, D. F., & Ng, L. (2021). Research screener: A machine learning tool to semi-automate abstract screening for systematic reviews. Systematic Reviews, 10, 93.

- Dennstädt, F., Zink, J., Putora, P. M., Hastings, J., & Cihoric, N. (2024). Title and abstract screening for literature reviews using large language models: An exploratory study in the biomedical domain. Systematic Reviews, 13(1), 158.

- Gates, A., Guitard, S., Pillay, J., Elliott, S. A., Dyson, M. P., Newton, A. S., & Hartling, L. (2019). Performance and usability of machine learning for screening in systematic reviews: A comparative evaluation of three tools. Systematic Reviews, 8, 278.

- Gates, A., Johnson, C., & Hartling, L. (2018). Technology-assisted title and abstract screening for systematic reviews: A retrospective evaluation of the abstrackr machine learning tool. Systematic Reviews, 7, 45.

- Guo, E., Gupta, M., Deng, J., Park, Y. J., Paget, M., & Naugler, C. (2024). Automated paper screening for clinical reviews using large language models: Data analysis study. Journal of Medical Internet Research, 26, e48996.

- Hanna, M. G., Pantanowitz, L., Jackson, B., Palmer, O., Visweswaran, S., Pantanowitz, J., Deebajah, M., & Rashidi, H. H. (2025). Ethical and bias considerations in artificial intelligence/machine learning. Modern Pathology, 38(3), 100686.

- Issaiy, M., Ghanaati, H., Kolahi, S., Shakiba, M., Jalali, A. H., Zarei, D., Kazemian, S., Avanaki, M. A., & Firouznia, K. (2024). Methodological insights into ChatGPT’s screening performance in systematic reviews. BMC Medical Research Methodology, 24(1), 78.

- Kempeneer, S., Pirannejad, A., & Wolswinkel, J. (2023). Open government data from a legal perspective: An AI-driven systematic literature review. Government Information Quarterly, 40(3), 101823.

- Li, M., Sun, J., & Tan, X. (2024). Evaluating the effectiveness of large language models in abstract screening: A comparative analysis. Systematic Reviews, 13(1), 219.

- Motzfeldt Jensen, M., Brix Danielsen, M., Riis, J., Assifuah Kristjansen, K., Andersen, S., Okubo, Y., & Jørgensen, M. G. (2025). ChatGPT-4o can serve as the second rater for data extraction in systematic reviews. PLoS ONE, 20(1), e0313401.

- Muthu, S. (2023). The efficiency of machine learning-assisted platform for article screening in systematic reviews in orthopaedics. International Orthopaedics, 47(2), 551–556.

- Ofori-Boateng, R., Aceves-Martins, M., Wiratunga, N., & Moreno-Garcia, C. F. (2024). Towards the automation of systematic reviews using natural language processing, machine learning, and deep learning: A comprehensive review. Artificial Intelligence Review, 57(8), 200.

- Pijls, B. G. (2023). Machine Learning assisted systematic reviewing in orthopaedics. Journal of Orthopaedics, 48, 103–106.

- Pranckevičius, T., & Marcinkevičius, V. (2017). Comparison of naive bayes, random forest, decision tree, support vector machines, and logistic regression classifiers for text reviews classification. Baltic Journal of Modern Computing, 5(2), 221.

- Quan, Y., Tytko, T., & Hui, B. (2024). Utilizing ASReview in screening primary studies for meta-research in SLA: A step-by-step tutorial. Research Methods in Applied Linguistics, 3(1), 100101.

- Rainio, O., Teuho, J., & Klén, R. (2024). Evaluation metrics and statistical tests for machine learning. Scientific Reports, 14(1), 6086.

- Scherbakov, D., Hubig, N., Jansari, V., Bakumenko, A., & Lenert, L. A. (2024). The emergence of large language models (llm) as a tool in literature reviews: An llm automated systematic review. arXiv, arXiv:2409.04600.

- Spedener, L. (2023). Towards performance comparability: An implementation of new metrics into the ASReview active learning screening prioritization software for systematic literature reviews [Unpublished Master’s Thesis, Utrecht University]. Available online: https://studenttheses.uu.nl/handle/20.500.12932/45187 (accessed on 1 November 2025).

- Syriani, E., David, I., & Kumar, G. (2024). Screening articles for systematic reviews with ChatGPT. Journal of Computer Languages, 80, 101287.

- Van De Schoot, R., De Bruin, J., Schram, R., Zahedi, P., De Boer, J., Weijdema, F., Kramer, B., Huijts, M., Hoogerwerf, M., Ferdinands, G., Harkema, A., Willemsen, J., Ma, Y., Fang, Q., Hindriks, S., Tummers, L., & Oberski, D. L. (2021). An open source machine learning framework for efficient and transparent systematic reviews. Nature Machine Intelligence, 3(2), 125–133.

- Xiong, Z., Liu, T., Tse, G., Gong, M., Gladding, P. A., Smaill, B. H., Stiles, M. K., Gillis, A. M., & Zhao, J. (2018). A machine learning aided systematic review and meta-analysis of the relative risk of atrial fibrillation in patients with diabetes mellitus. Frontiers in Physiology, 9, 835.

- Yao, X., Kumar, M. V., Su, E., Miranda, A. F., Saha, A., & Sussman, J. (2024). Evaluating the efficacy of artificial intelligence tools for the automation of systematic reviews in cancer research: A systematic review. Cancer Epidemiology, 88, 102511.

- Yu, Y. C., Kuo, C. C., Ye, Z., Chang, Y. C., & Li, Y. S. (2024). Breaking the ceiling of the llm community by treating token generation as a classification for ensembling. arXiv, arXiv:2406.12585.

- Zeng, G. (2020). On the confusion matrix in credit scoring and its analytical properties. Communications in Statistics-Theory and Methods, 49(9), 2080–2093.

- Zhu, W., Zeng, N., & Wang, N. (2010, November 14–17). Sensitivity, specificity, accuracy, associated confidence interval and ROC analysis with practical SAS implementations. NESUG Proceeding: Health Care and Life Sciences (Vol. 19, p. 67), Baltimore, MD, USA.

- Zimmerman, J., Soler, R. E., Lavinder, J., Murphy, S., Atkins, C., Hulbert, L., Lusk, R., & Ng, B. P. (2021). Iterative guided machine learning-assisted systematic literature reviews: A diabetes case study. Systematic Reviews, 10(1), 97.

| Author | Tool |
|---|---|
| Gates et al. (2018, 2019) | Abstrackr |
| Gates et al. (2019) | DistillerSR and RobotAnalyst |
| Chai et al. (2021) | Research Screener |
| Muthu (2023); Pijls (2023); Quan et al. (2024) | ASReview |
| Alshami et al. (2023); Issaiy et al. (2024) | ChatGPT |

| Metric | Naïve Bayes | Random Forest | Logistic Regression |
|---|---|---|---|
| Records for 95% Recall | 5103 (4044–6648) | 5585 (4409–6691) | 4833 (4000–6702) |
| WSS@95 (%) | 27.26 (13.94–40.59) | 20.40 (9.12–31.68) | 31.12 (18.33–43.91) |
| RRF@10 (%) | 27.09 (10.04–39.31) | 19.15 (9.47–30.39) | 31.02 (9.38–40.81) |

| Metric | Naïve Bayes | Random Forest | Logistic Regression |
|---|---|---|---|
| True Positives | 1032 (1020–1045) | 1032 (1019–1044) | 1032 (1019–1044) |
| False Positives | 3661 (3400–3910) | 3727 (3460–3980) | 3703 (3440–3950) |
| True Negatives | 2273 (2120–2410) | 2207 (2070–2340) | 2231 (2100–2360) |
| False Negatives | 54 (49–59) | 54 (49–59) | 54 (49–59) |
| Sensitivity | 0.950 (0.946–0.954) | 0.950 (0.946–0.954) | 0.950 (0.946–0.954) |
| Specificity | 0.383 (0.357–0.409) | 0.372 (0.346–0.398) | 0.376 (0.350–0.402) |
| Accuracy | 0.471 (0.454–0.488) | 0.461 (0.444–0.478) | 0.465 (0.448–0.482) |
| Precision | 0.220 (0.207–0.233) | 0.217 (0.204–0.230) | 0.218 (0.205–0.231) |
| F1-score | 0.357 (0.341–0.373) | 0.353 (0.337–0.369) | 0.355 (0.339–0.371) |
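
To make the link between the confusion-matrix counts and the derived metrics explicit, the Python sketch below applies the standard definitions of sensitivity, specificity, accuracy, precision, and F1-score to the mean Naïve Bayes counts from the table above. The class and attribute names are illustrative. Because the table reports means across runs, metrics recomputed from mean counts need not match the tabulated means in general, although for the Naïve Bayes column they agree to three decimals.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ConfusionCounts:
    """Confusion-matrix counts for a binary include/exclude screening decision."""
    tp: float  # relevant records correctly included
    fp: float  # irrelevant records incorrectly included
    tn: float  # irrelevant records correctly excluded
    fn: float  # relevant records incorrectly excluded

    @property
    def sensitivity(self) -> float:
        return self.tp / (self.tp + self.fn)

    @property
    def specificity(self) -> float:
        return self.tn / (self.tn + self.fp)

    @property
    def accuracy(self) -> float:
        return (self.tp + self.tn) / (self.tp + self.fp + self.tn + self.fn)

    @property
    def precision(self) -> float:
        return self.tp / (self.tp + self.fp)

    @property
    def f1(self) -> float:
        # Harmonic mean of precision and sensitivity, written in terms of counts.
        return 2 * self.tp / (2 * self.tp + self.fp + self.fn)


# Mean Naïve Bayes counts taken from the table above.
naive_bayes = ConfusionCounts(tp=1032, fp=3661, tn=2273, fn=54)

for name in ("sensitivity", "specificity", "accuracy", "precision", "f1"):
    print(f"{name}: {getattr(naive_bayes, name):.3f}")
```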

| Confusion Matrix Counts | Mean | 95% CI |
|---|---|---|
| True Positive (TP) | 847 | (842–852) |
| False Positive (FP) | 1870 | (1845–1895) |
| True Negative (TN) | 4080 | (4055–4105) |
| False Negative (FN) | 219 | (214–224) |

| Evaluation Metrics | Mean | 95% CI |
|---|---|---|
| Sensitivity | 0.795 | (0.790–0.800) |
| Specificity | 0.688 | (0.684–0.692) |
| Accuracy | 0.704 | (0.701–0.707) |
| Precision | 0.314 | (0.311–0.317) |
| F1-score | 0.449 | (0.445–0.453) |
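
The table above summarizes repeated runs as a mean with a 95% confidence interval. The text available here does not state how the intervals were computed, so the following Python sketch shows only one common choice: a two-sided interval based on Student's t distribution. The function name is illustrative, and the default critical value (2.262, for 9 degrees of freedom) is appropriate only when exactly ten runs are summarized.

```python
from math import sqrt
from statistics import mean, stdev
from typing import Sequence

# Two-sided 95% critical value of Student's t with 9 degrees of freedom (ten runs).
T_CRIT_95_DF9 = 2.262


def mean_with_ci(values: Sequence[float], t_crit: float = T_CRIT_95_DF9) -> tuple[float, float, float]:
    """Return (mean, lower, upper) for a t-based confidence interval.

    Assumption: the runs are treated as independent draws. Pass a different
    `t_crit` if the number of runs is not ten.
    """
    n = len(values)
    if n < 2:
        raise ValueError("at least two runs are needed to estimate variability")
    centre = mean(values)
    half_width = t_crit * stdev(values) / sqrt(n)  # stdev is the sample standard deviation
    return centre, centre - half_width, centre + half_width
```

Calling `mean_with_ci` on the ten per-run values of a metric, such as sensitivity, yields a summary in the same mean and interval form used in the table.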


© 2025 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Xu, Z.; Zhuang, X.; Ma, S. Machine Learning-Assisted Systematic Review: A Case Study in Learning Analytics. Educ. Sci. 2025, 15, 1488. https://doi.org/10.3390/educsci15111488
